Abstract

We recorded the time series of location data from stationary, single-frequency (L1) GPS positioning systems at a variety of geographic locations. The empirical autocorrelation function of these data shows significant temporal correlations. The Gaussian white noise model, widely used in sensor-fusion algorithms, does not account for the observed autocorrelations and has an artificially large variance. Noise-model analysis—using Akaike’s Information Criterion—favours alternative models, such as an Ornstein–Uhlenbeck or an autoregressive process. We suggest that incorporating a suitable enhanced noise model into applications (e.g., Kalman Filters) that rely on GPS position estimates will improve performance. This provides an alternative to explicitly modelling possible sources of correlation (e.g., multipath, shadowing, or other second-order physical phenomena).

Dataset License: BY-NC-ND

1. Introduction

Numerous applications are built using location provided by Global Positioning System (GPS) receivers. While centimetre precision has been demonstrated for dual-frequency [1] and differential GPS systems, a very large number of systems rely on single-frequency non-differential units, which report positions with typical precision of many metres [2]. We use the term “noise” to refer to the errors in a sequence of reported positions. The sources of the noise in the positions include atmospheric and ionospheric phenomena [3], multipath effects [4,5], canopy trees [6], and a wide variety of physical phenomena that may be impractical to model. However, other types of errors are also inadvertently added to the signal, e.g., errors present in the modelling of satellite orbits [7], finite precision of the digital components of receivers, variations in the built-in phased-locked loop [8], or the signal processing techniques [9], etc. The common structure and frequent formulations of GPS algorithms are discussed in Xu and Xu [10], including some aspects of the mathematical formulation that carry non-trivial correlation in readings between epochs. In summary, however, it is sufficient for this work to realise that there are noise sources that can be adequately modelled by uncorrelated processes, e.g., white noise, and there are sources that inherently carry temporal correlations.

All GPS signals are encumbered with noise, whose sources are hard to distinguish [11,12]. The noise could be attributed to satellite clock and orbit errors, to the propagation of the GPS signal through media (ionosphere, troposphere, etc.), and to the receiver units. Many of these noise components are time-correlated and caused by stochastic processes external to the useful signal. It would be ambitious to model and distinguish between all these processes; alternatively, one can consider their cumulative effect on the time series [13,14]. Irrespective of the source, a robust signal-processing algorithm needs some model of noise processes. Such a model unavoidably contains assumptions on the nature of the noise, e.g., amplitude and correlation in the time domain, which must be experimentally validated. Often, the measurement errors are modelled by independent, identically distributed random Gaussian variables (Gaussian white noise). While this model is sufficient for many applications, it is generally accepted that a linear combination of coloured noise (whose power spectrum follows a law with a characteristic exponent ) is a more suitable approximation [15]. With the assumption of white noise, the time correlation in GPS datastreams leads to biased and inconsistent parameter estimates [16], especially for sampling rates above 5 Hz. Moreover, it is unrealistic that measurements from different satellites would have the same accuracy; therefore, stochastic models assuming independent and constant variance result in unreliable statistics, and stochastic modelling is needed. Directly estimating statistical parameters of the GPS time series by explicitly taking into account the heteroscedastic space- and time-correlated error structure of the observations could result in improved accuracy at minimal computational cost.

Noise in long GPS time series has been analysed for geodetic purposes by [17,18], who found in the relevant frequency band. Their results are, however, not conclusive, as many spectra would fit this range, e.g., white noise () or flicker noise (). Many processes that these GPS observations are expected to capture, e.g., earthquakes or the wobble of the Earth around its axis, have similar spectra [19]. Consequently, the physical process being observed by GPS and the inherent noise of a GPS signal are present simultaneously. The noise amplitude changes with time and, for older data, can be a considerable fraction of the signal [20].

In this study, we analyse time series of GPS measurements with duration between a day and a week at 1 Hz sampling frequency. Observations were made in the Southern Hemisphere with stationary units. The recordings show significant autocorrelation incompatible with a Gaussian white noise model. The observed presence of correlations implies that there are some stochastic equations governing the dynamics of the signal. There are two major sources that plausibly introduce correlations: (a) the movement of GPS satellites and, thus, their constellation, and (b) the algorithms coded in the GPS emitters and receivers. Both sources are, to a high degree, deterministic; thus, in a perfect environment, they would produce a strongly correlated signal. However, there are many other phenomena, e.g., weather and surface reflectance, that introduce effects into the signal that can be most effectively modelled by noise, rather than searching for their respective governing equations. We develop mathematical models for the observed noise and suggest how these models can be used for improved positioning and uncertainty quantification in future devices.

2. Description of the Data Collection Process

Data were collected from civilian L1-band GPS units at multiple locations. At each location, data were collect from four units with a Telit Jupiter-F2 integrated GPS module, as well as a single standard USB-connected GPS receiver—GlobalSat BU-353S4. The first- and second-order statistics of the location data showed no significant difference between the two devices. For brevity, we present our findings using the data obtained only with the GlobalSat BU-353S4.

The location of satellites is a major factor of GPS accuracy degradation. Devices function well when the satellites are widely distributed [21]; hence, the altitude errors in measurements are typically much larger than those in latitude or longitude. The GPS satellites operate at an inclination of around 55; consequently, for devices at higher latitudes, the GPS satellite positions are biased strongly towards the equator.

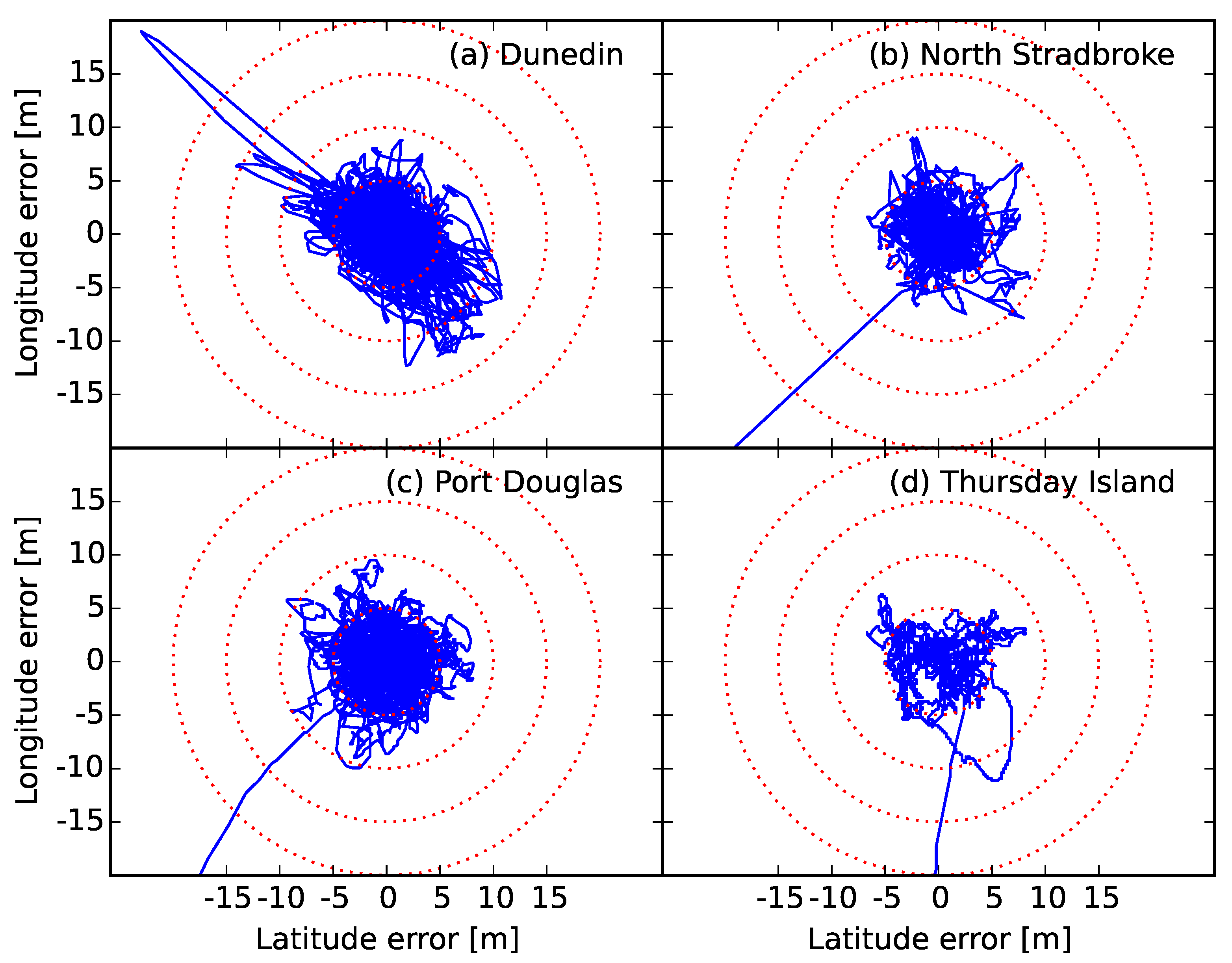

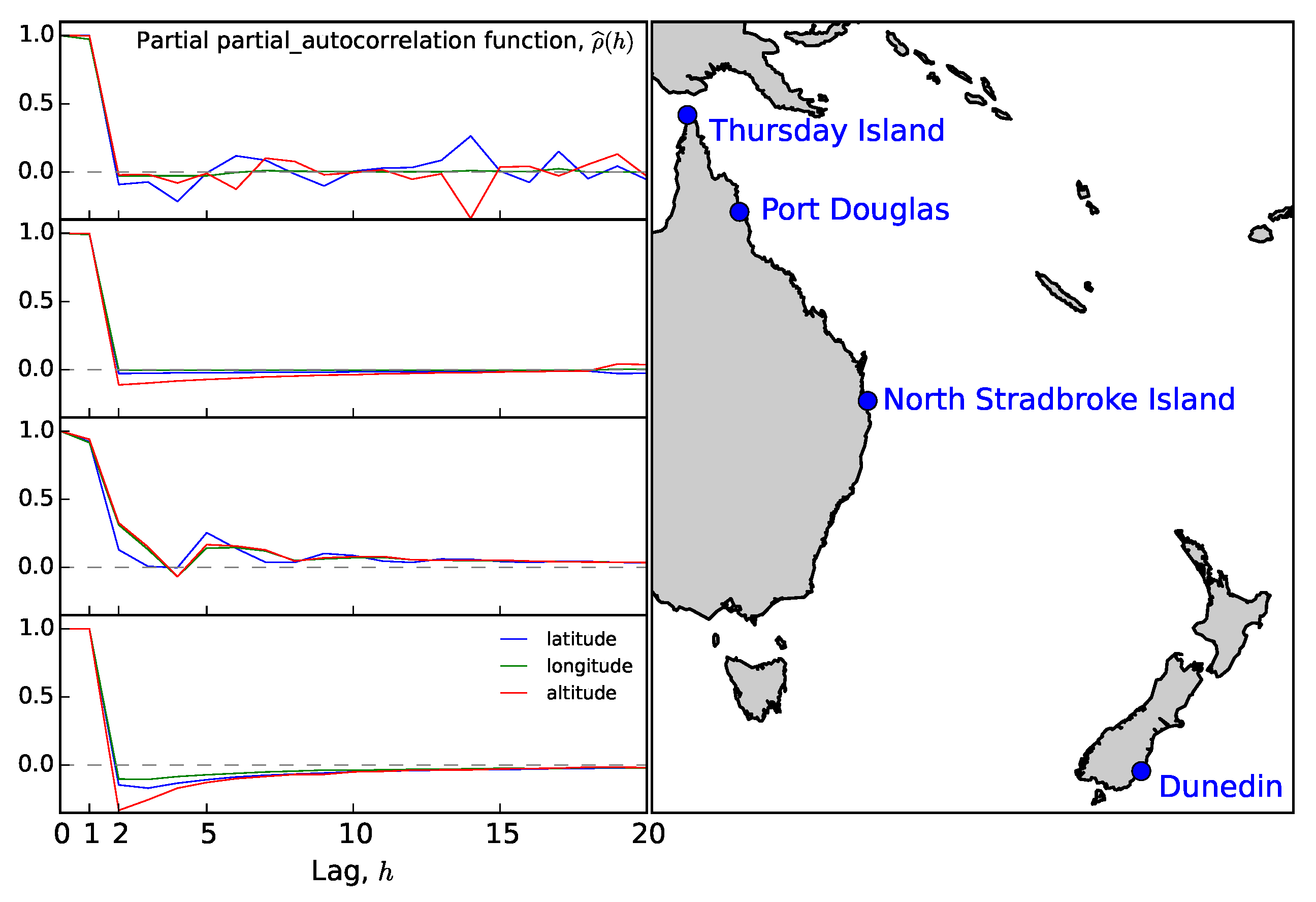

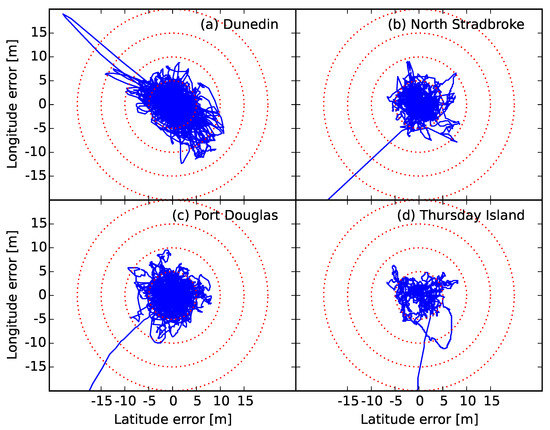

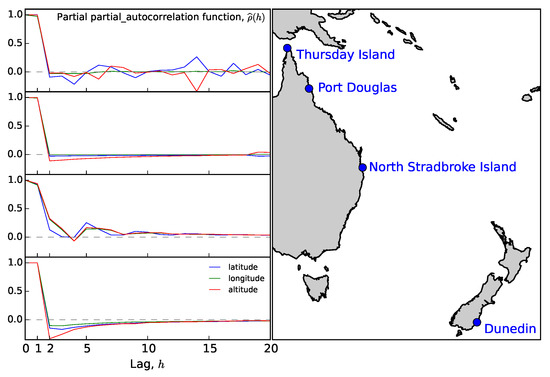

To ensure that our analysis was not biased due to specifics of the GPS satellite constellation geometry, we carried out measurements at four locations with latitudes between 45 and 10 South (see Table 1). Data were recorded at each site for approximately 48 h. The decimated latitude and longitude errors (deviations from time average) are shown in Figure 1. Disregarding the initialisation period (typically ∼1 min), the data resemble a random walk.

Table 1.

Measurement sites and dates of observations.

Figure 1.

The longitude and latitude errors at each location. Due to the large number of observations, the data have been decimated.

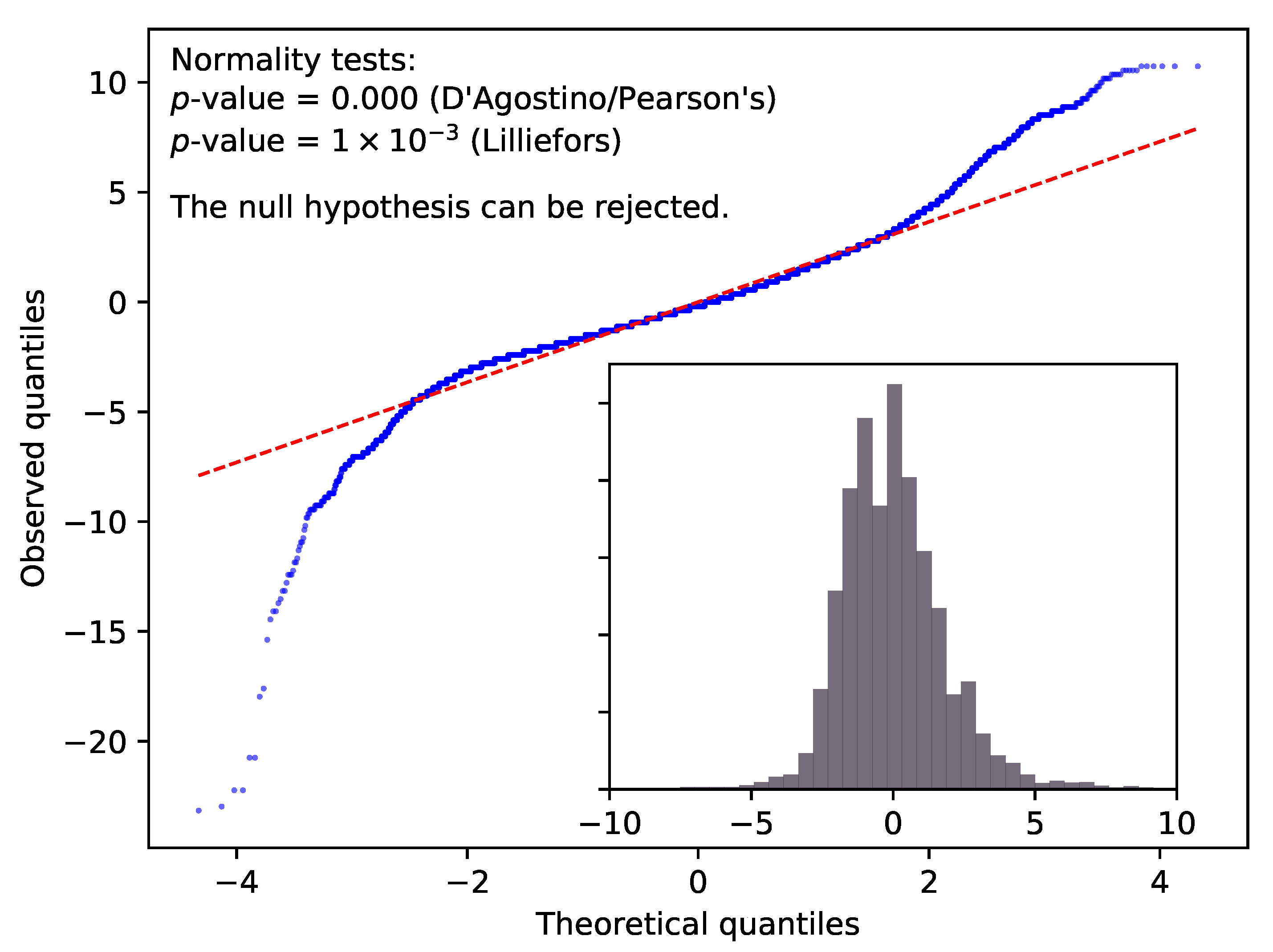

While this cursory look seems suggestive, calculating the residuals defined as (here, denotes the long-time average, or the ‘true’ position) and creating a QQ-plot [22] reveals the non-Gaussian nature of (for technical details see Appendix A).

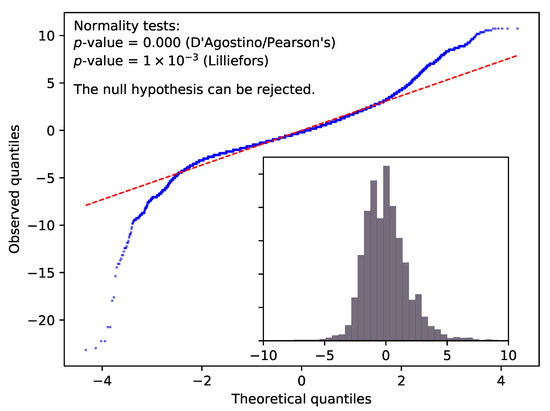

In Figure 2 the QQ-plot of the latitude data collected in Dunedin is depicted. In order to support the graphical findings, we have also calculated two more normality tests [23], one based on d’Agostino and Pearson’s work [24,25], and another one suggested by Lilliefors [26]. The latter is the most suitable for our use, as it does not assume the knowledge of mean and standard deviation of the normal distribution, but estimates these from the sample. The null hypothesis of both tests is that the data come from a normal distribution. Since both tests return p-values much smaller than 0.01, we can thus conclude at a 99% confidence level that latitude data do not support the assumption of normal distribution. Similar conclusions can be drawn for longitude and altitude data at all locations.

Figure 2.

The main figure shows the QQ-plot of the residual of latitude data (measured in metres) collected in Dunedin. The inset depicts the histogram of the same data. The p-values of two normality tests are also given in the top left corner. Based on this evidence, the data do not support the assumption that the residual follows a Gaussian distribution.

3. Analysis of Observations

One may think of the collection of positions—emitted by the GPS chip—as a stochastic process, a sequence of random variables, labelled by time In our study, may represent the latitude, longitude, or altitude returned by a GPS unit. We denote random variables with capital letters, e.g., , and its particular realisation with lower case letter, e.g., . Any estimator of a statistic, e.g., the expectation value , is denoted by the same letter but decorated with a symbol, e.g., .

Stochastic Processes

The simplest non-trivial stochastic process can be formulated as the sum of the true position, , and an additive noise term:

The measurement error, , is often considered to be a Gaussian white noise with zero mean and variance . The problems of fitting Gaussian noise models to GPS measurements have been recognised by [27,28], and two models were proposed to describe the measurements more accurately: a moving average process (MA) and an autoregressive model (AR).

We check whether adjacent recordings can be considered independent random variables using the empirical autocorrelation function [29,30,31,32,33], defined as

where and are the expectation value and variance of the random process at step r, respectively. The process is stationary if and are both time-independent; in such a case, the autocorrelation function depends only on the time difference (lag) between its arguments and not explicitly on the arguments themselves. Henceforth, we assume stationarity; thus, while and both become constants, which are calculated by time-averaging. Furthermore, we consider the empirical partial autocorrelation function calculated using the Yule–Walker method [32,34] and defined as

which provides the amount of correlation between a variable and a lag of itself that is not explained by correlations at all lower-order lags.

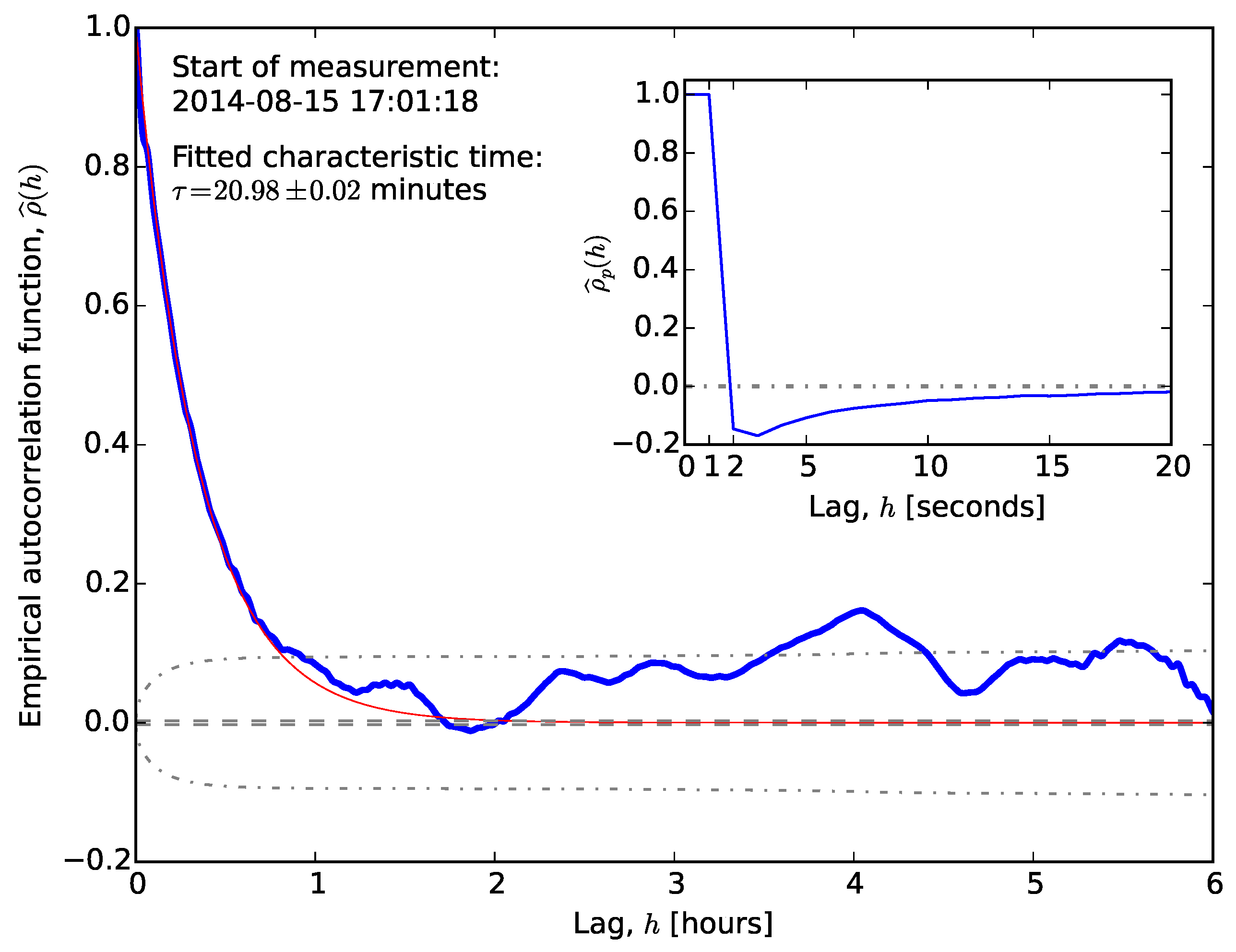

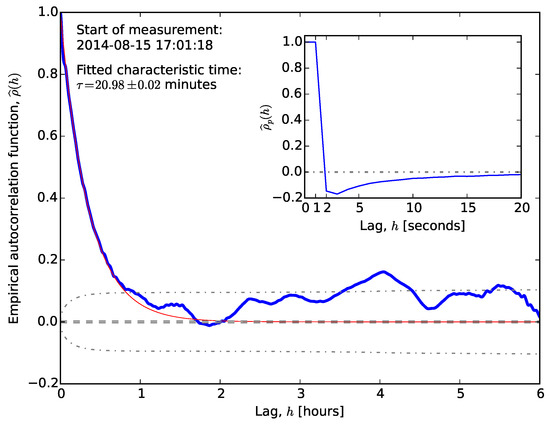

As an example, Figure 3 shows the autocorrelation of a typical time series. The autocorrelation function decays slowly. Fitting an exponential decay to the first half-hour dataset provides a characteristic time estimate of minutes, i.e., it takes approximately an hour () to reach statistical independence. Using the reported position for periods much shorter than would lead to a biased estimate. We wish to draw the reader’s attention to the fact that long-term correlations usually imply a power-law decay of correlation, while our fit assumes an exponential correlation. While exponential decay of correlation is relatively fast, compared to power-law decay, it seems to have an observable effect on location time series, and its inclusion in modelling may provide better positioning algorithms in the future.

Figure 3.

Correlogram of latitude errors of a time series from Dunedin of ∼250 h. The dashed lines correspond to the classical 95% confidence interval, while the dash-dotted curve corresponds to the Box–Jenkins standard error. The thin solid line represents the exponentially decaying curve fitted to the first half-hour section. The inset depicts the empirical partial autocorrelation function.

The theoretical autocorrelation function of Gaussian white noise is the Dirac-delta, . Assuming this model, one may test for statistical independence of and by checking whether the autocorrelation function is different from zero, i.e., whether it falls within the confidence interval around [35,36].

where is the cumulative distribution function of the standard normal probability distribution, is the required level of significance, denotes the sample standard deviation, and n is the number of measurements. This confidence interval at the level is plotted with dashed lines in Figure 3. The majority of falls outside this interval; therefore, our observations and the assumption of Gaussian white noise are inconsistent with each other. One may take into account that the empirical autocorrelation function estimated from a finite-length time series also carries error [37] and could derive a modified confidence interval. At significance level , this is given by the Box–Jenkins standard error [38,39,40]:

where . Figure 3 also depicts the Box–Jenkins standard error with dash-dotted lines, and it is apparent that the two confidence intervals differ from each other substantially, thus confirming the statistical dependence in the measured location readings. It is worth noting here that the exponential fit and the first crossing of with the Box–Jenkins confidence interval agree with each other. The inset in Figure 3 shows for s with its standard error at a 0.95 significance level. This graph may suggest the application of a high-order autoregressive noise model (see next section), since vanishes only for lags larger than approximately 30 s.

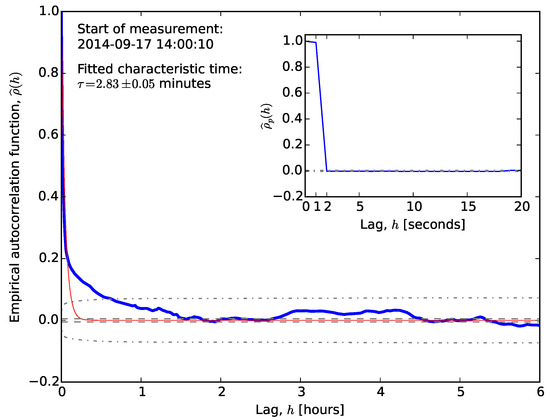

However, we find that other datasets exhibit much shorter ‘memory’, so lower-order processes might also be sufficient.

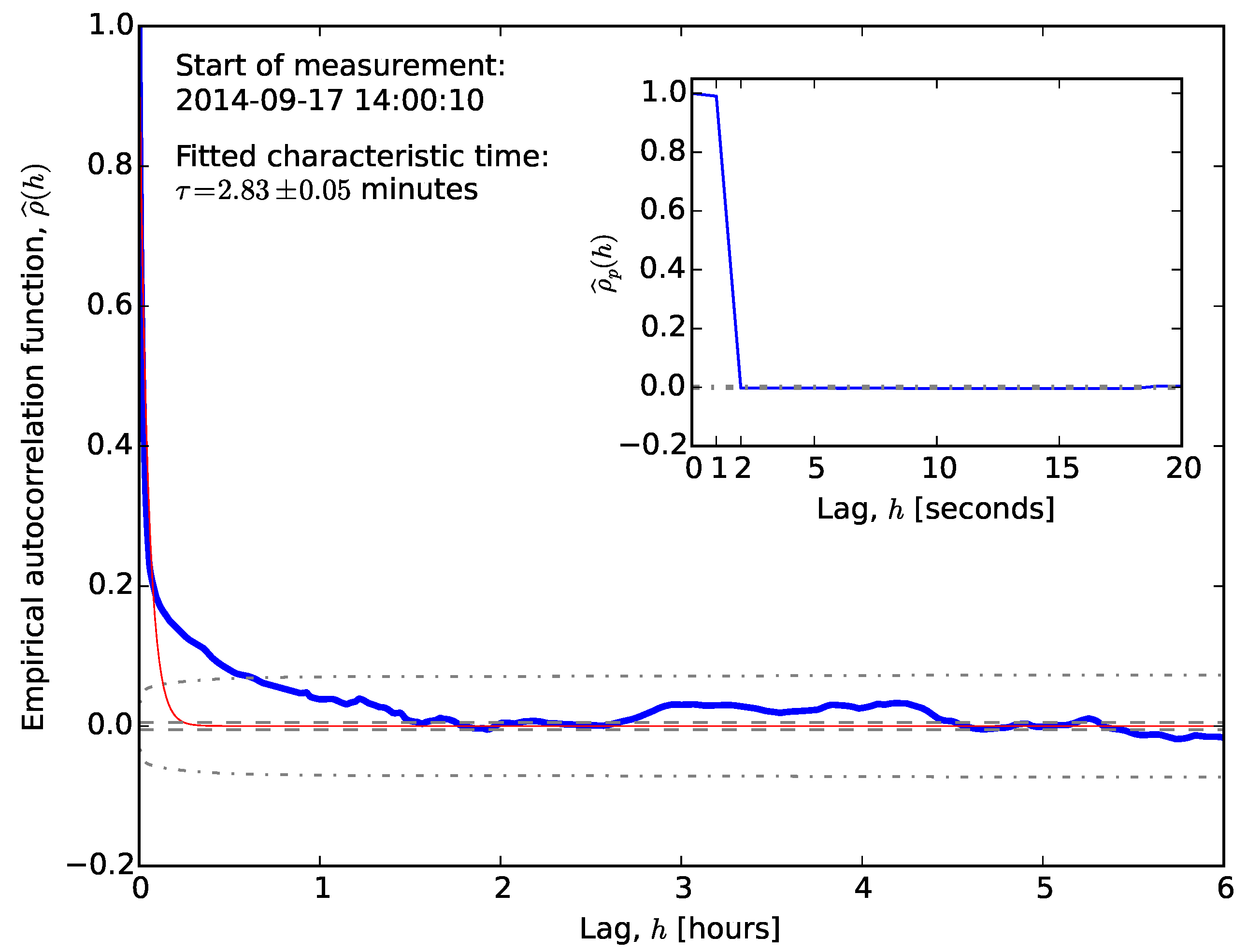

Datasets from other locations exhibited different behaviour (see Figure 4). The autocorrelation still takes less than an hour to reach the Box–Jenkins standard error, but the initial drop is much sharper. This drop may explain the different behaviour of the partial autocorrelation function, which vanishes for lags larger than unity. Figure 3 and Figure 4 illustrate the extreme cases.

Figure 4.

Correlogram of longitude errors for the GPS data taken in Port Douglas. The line styles are identical to those in Figure 3.

The autocorrelation of the Thursday Island dataset, see Figure 5, displays persistent oscillations not observed at other locations. This could be because of a temporary loss of power experienced on Thursday Island, which forced the data to be split into two sets, each approximately 24 h long, or an artefact of the local geography contributing to long-term multipath effects.

Figure 5.

The partial autocorrelation function, , for longitude, latitude, and altitude at all geographic locations visited. The lag is measured in seconds.

4. Modelling the Noise

In this section, we develop some statistical models for the observed noise. A model is a relationship between random variables, , in the following form:

where and are sets of integers, h and g are fixed functions, and . Here, the quartet (h, g, , ) constitutes the model to be inferred. To simplify the task, we presume that h and g are linear in all their variables (so-called linear models). The stochastic model is then

where and are all constants with (convenience), and causality restricts summations to the current or past values of or [41]. This model requires an infinite number of coefficients, which cannot be estimated using a finite number of observations. Therefore, we focus on finite-parameter linear models and estimate the unknowns via the maximum likelihood method, in which the likelihood function below is constructed for Gaussian processes.

The likelihood function, L, is the joint probability density of obtaining the actually observed data , , …, , according to the model. It is a function of the unknown parameters , , …, , , , and . Under general assumptions, the log-likelihood function of a finite parameter linear model is:

where the -th component of the covariance matrix is calculated from

Although the likelihood function, L, is derived under Gaussian processes, if the time series is causal and invertible, then maximising this likelihood leads to asymptotically consistent estimators [42,43]. This approximation is convenient, as the asymptotic likelihood function has a unique stationary point, the global maximum [44].

Below, we introduce and analyse finite-parameter linear noise models and compare their performance, including the Gaussian white noise model for a benchmark.

4.1. Gaussian White Noise Model

The simplest noise model treats measurements as statistically independent, normally distributed random variables: for fixed . Thus, , with yet unknown parameter values and . Using the maximum likelihood approach, these parameters are estimated from the observations as

4.2. Autoregressive Models

An autoregressive process of order p, abbreviated as AR(p), is a stochastic process where h is a linear combination of p terms:

where are constants. The process has parameters: and . The estimators and are found by linear algebra [37]. Alternatively, the estimators can be found by least squares regression [45]. The parameter estimation procedure costs, at most, operations, where n is the number of observations.

As the Laplace transformation of suggests the autocorrelation function of an AR(p) process is formally the sum of p exponentially decaying terms, thus, exhibits exponential decay or oscillatory behaviour [32], while vanishes for lags .

4.3. Moving Average Model

Rather than using the past values of the random variable, a moving average model of order q, abbreviated as MA(q), regresses on past errors, for , and is defined as

The process has parameters. One may cast any stationary AR(p) process as an MA(∞) model and an invertible MA(q) model as an AR(∞) process. Nevertheless, MA(q) models are useful, as one cannot fit an AR(∞) model on a finite number of observations.

Unlike autoregressive processes, for MA processes, any estimator based on a finite amount of observations inherently carries some loss of information. Resultantly, parameter estimation is harder than for AR processes of comparable order.

The qualitative behaviours of the autocorrelation and partial autocorrelation functions, and , are similar to those in autoregressive processes, but with roles interchanged. The autocorrelation function vanishes for , while the partial autocorrelation function is dominated by damped exponential and/or periodic functions. In the examples shown in Figure 3 and Figure 4, the autocorrelation function vanishes too slowly to be described by a low-order MA process.

4.4. Autoregressive Moving Average Model

The combination of AR(p) and MA(q) processes can be formulated as

The combination inherits most of its properties from these simpler processes. Neither the autocorrelation nor the partial autocorrelation function vanishes after a threshold lag. The autocorrelation function exhibits damped exponential and/or oscillatory behaviour for lags , while is dominated by exponential and periodic terms for lag values . The variance of the sample autocorrelation function can exceed the Box–Jenkins error (see Figure 3). As a result, it is often difficult to determine the model orders; model verification and iterative parameter estimation are needed.

For normally and independently distributed with zero mean and constant variance, and for stationary , Melard [46] provided an efficient algorithm for calculating the exact likelihood function of an ARMA(p, q) model. This algorithm requires operations for n observations, which—in practice—can be reduced by considering the observations in a finite window.

4.5. Ornstein–Uhlenbeck Model

In this section, we consider a stochastic differential equation (SDE) rather than a discrete stochastic process. Stochastic partial differential equations are a natural choice to model dynamical systems subject to random fluctuation. For an introduction to SDEs, we point to Evans’ [47] and Øksendal’s [48] excellent monographs.

The scatter-plots in Figure 1 resemble a random walk on a phenomenological level. However, in a Brownian motion, the variance of the position increases with time, which we do not observe here. On the contrary, we observe (and expect) that the GPS reading will return to the true location even if it deviates from it momentarily, i.e., it is mean-reverting. Furthermore, Figure 3 shows that the correlation function decreases exponentially. These properties suggest an Ornstein–Uhlenbeck (OU) process [49], defined by the SDE

where , , and are real parameters, with . For historical and physical reasons, the parameters and are called the drift and diffusion coefficients, respectively. The fluctuation part, , is a Wiener process. The same process can also be represented as

The first term corresponds to the long-term but diminishing influence of the initial observation. The second reaches the steady value exponentially fast, while the third describes a scaled and time-transformed random fluctuation. The expectation value at time t is .

One may simplify (6) by eliminating with the transformation, but we keep explicit, as it is the ‘true’ position of the GPS device. The parameter sets the scale of the autocorrelation function , while determines the noise between measurements. The OU process is the continuous-time generalisation of an AR(1) process. It has several desirable properties: (a) It is mean-reverting for , (b) it has finite variance for all contrary to Brownian motion, (c) its analytic covariance permits a long autocorrelation time, (d) the autocorrelation function is insensitive to the sampling rate, and (e) it approaches Gaussian white noise as . More precisely, the OU process is the only non-trivial, mean-reverting, Gauss–Markov process, i.e., whose transitions are memory-less, and it thus allows predictions of the future state based on the current state [50]. This does not prohibit more sophisticated models for GPS time series, but these models will lack at least one of the properties listed above.

To model our observations as an Ornstein–Uhlenbeck process, we must estimate , , and using maximum likelihood estimation [51]. Tang and Chen [52] provided a detailed derivation of estimators for these parameters, and Dacunha-Castelle and Florens-Zmirou [53] showed that for time-equidistant observations, the maximum likelihood estimators are consistent and asymptotically normal, irrespective of the sampling time-step, .

The estimators for , , and require operations each. Therefore, the overall parameter estimation takes operations. However, Tang also gives explicit estimates for the asymptotic form of these estimators, e.g.,

Thus, one needs only a moderate number of observations, n, or short sampling time to estimate the parameter, especially compared with the estimator of the mean for an uncorrelated process, which would have (much slower) convergence to the true mean.

Parameter estimation for SDEs is a rapidly developing field, especially the sub-field of online estimators that update the parameter estimates as newer observations become available. Such estimators are usually associated with constant computational cost, as their performance is independent of n. A method of online estimators for a sampled, continuous-time Ornstein–Uhlenbeck is the subject of future publication.

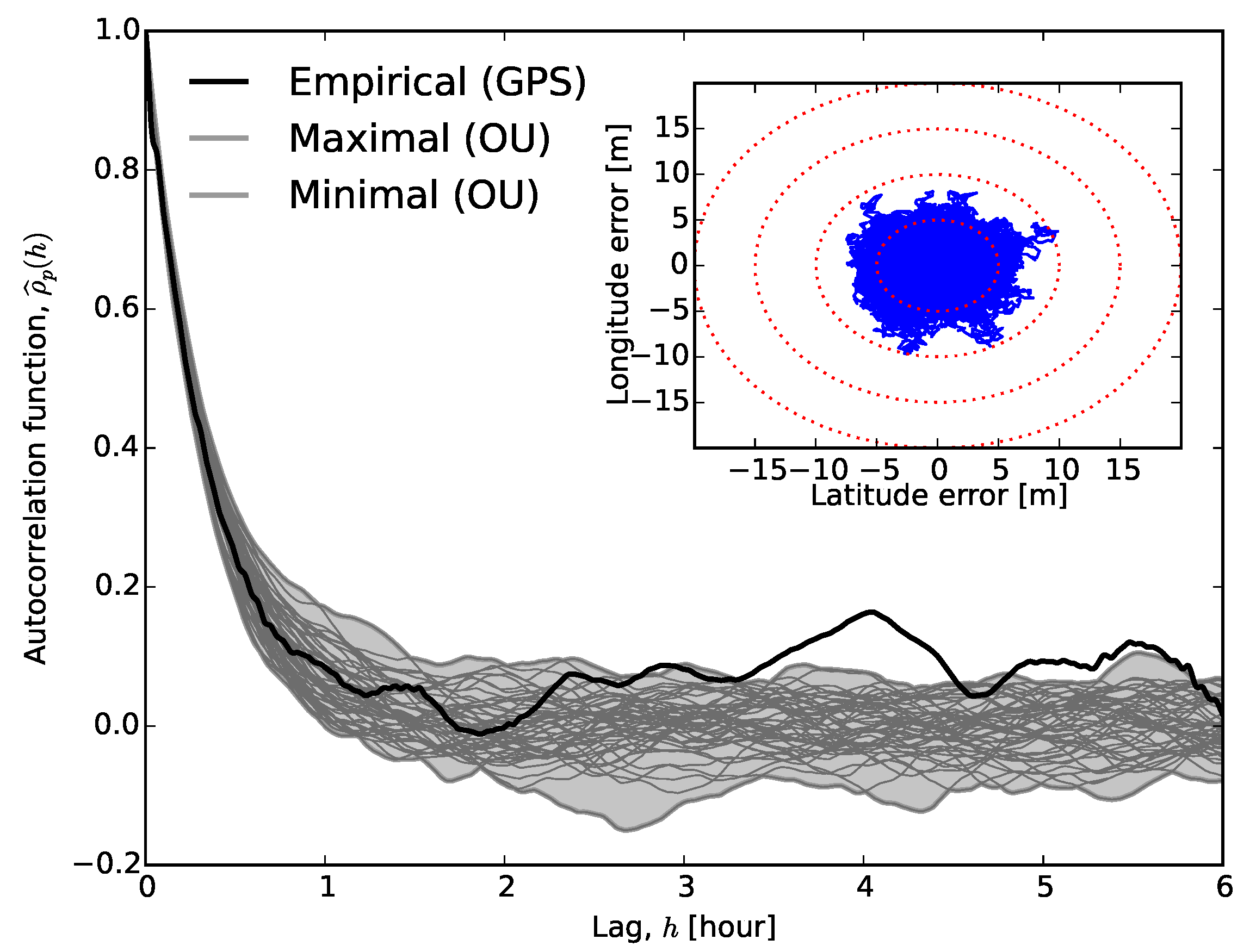

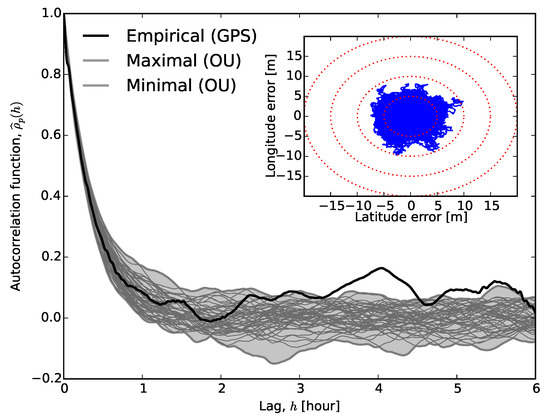

After estimating , , and , we simulated an ensemble of Ornstein–Uhlenbeck processes using the Euler–Maruyama method [54]. We calculated and plotted the autocorrelation function for each run (see Figure 6) as well as the range covered by these functions. Clearly, the OU noise model represents the autocorrelation in the data up to at least two hours. However, it is unable to capture the undulation of . Therefore, an Ornstein–Uhlenbeck process should be complemented with some other process for longer-term predictability. We believe that a more complicated noise model is unwarranted unless the sampling period exceeds an hour.

Figure 6.

The thick black solid line depicts for the GPS recordings on 15 August, 2014 in Dunedin, as in Figure 3. All grey lines represent the autocorrelation function of a simulated Ornstein–Uhlenbeck process with input parameters , , and . The lines labelled as ‘maximal’ and ‘minimal’ are connecting the extremal values of simulated autocorrelation functions at all lags.

5. Quantitative Analysis of Noise Models

In this section, we present and discuss the quantitative performance of the previously described noise models. For each model, the parameters were chosen to best fit the location data. The process of selecting which of these models best describes the data, called model selection, is a large and complex field in statistics; thus, we leave detailed explanation to the technical literature, e.g., [55] and references therein.

There are three properties of model selection criteria: consistency, efficiency, and parsimony. Consistency means that the criterion selects the true model with probability approaching unity with increasing sample size provided the true model is among those considered. However, nothing guarantees that the true model is simple and tractable; thus, it is also necessary to measure how much error one might make by accepting a model knowing that it is not the true model. Efficiency is a measure of this property; as the sample size becomes large, the error in predictions using the selected model becomes indistinguishable from the error obtained using the best model among the candidates. Parsimony dictates that if all measures are equal for two competing statistical models, the preferred one should be the simpler model.

The most common model selection criteria use the likelihood function, L, and introduce some penalty proportional to the model complexity. Akaike’s Information Criterion (AIC) is defined as , where k is the number of fit parameters in the model [56,57]. The best model has the smallest AIC value—it encodes the most information using the least number of fitted parameters. The AIC has a definite minimum value with respect to k, and the appropriate order of the model is determined by the value of k at this minimum. The AIC is biased for small samples, and a bias-corrected version (AICc) is gaining popularity [43]. Here, we analyse time series with and , so we can ignore this correction. Other criteria have been developed, e.g., Bayesian Information Criterion (BIC), which more severely penalises the introduction of extra model parameters by replacing the term with .

Regarding the model selection criteria, AIC and AICc are both inconsistent, while BIC is only asymptotically consistent. Meanwhile, AIC is efficient, while BIC is not. Therefore there is no clear choice between AIC and BIC. The latter is asymptotically consistent as the sample size approaches infinity, while the former selects models that have acceptable predictive power [58].

We use AIC, as it is almost universally accepted. While it does not validate a statistical model, it determines relative probabilities between alternative models. We compare AIC values for a selection of models. Table 2 shows AIC scores for model fits of latitude, longitude, and altitude at four geographic locations. For each location, we individually fitted different noise models using the same observation datasets. For the AR(p), MA(q), and the ARMA(p, q), we increased the number of parameters involved. Occasionally the statistics routine was unable to converge for the MA(q) processes. In these cases, the corresponding entry in Table 2 is left empty.

Table 2.

AIC values (rounded) of noise models for GPS time series.

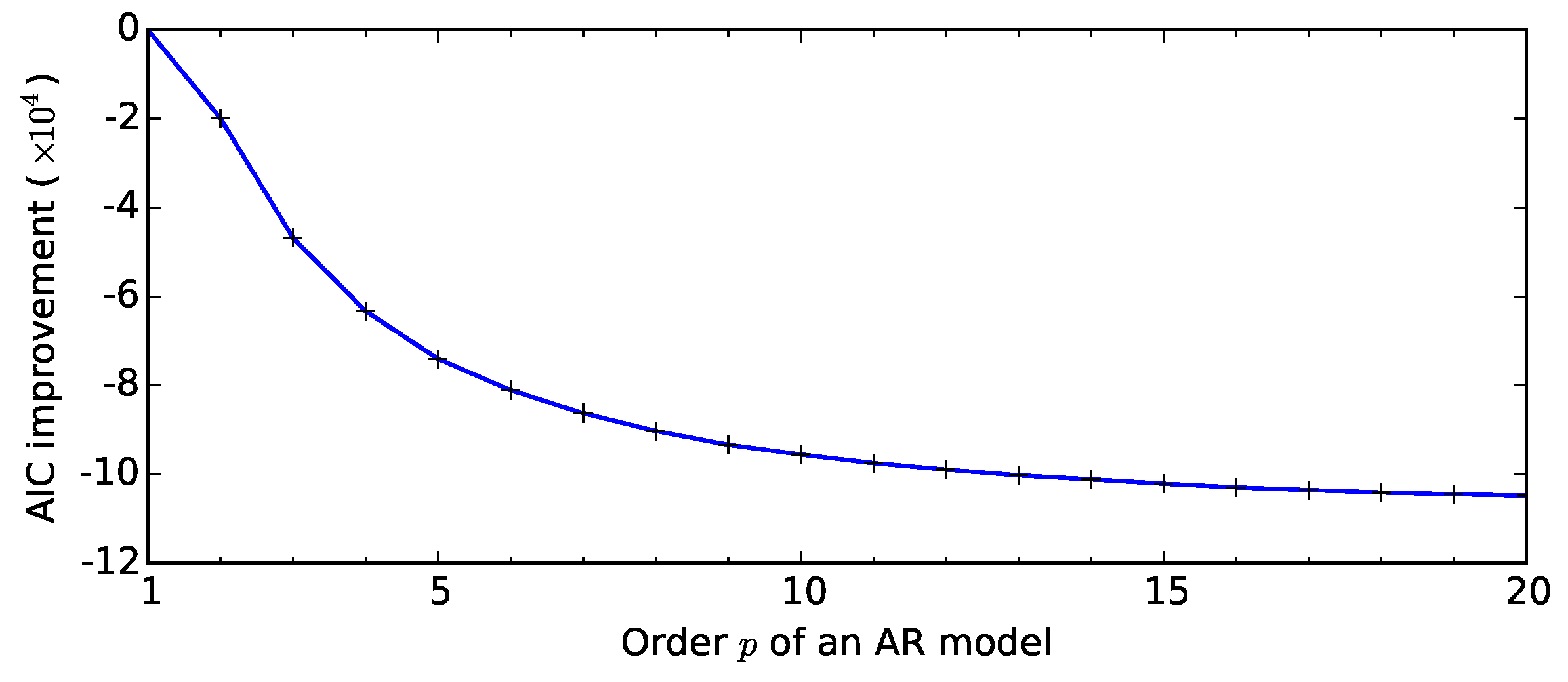

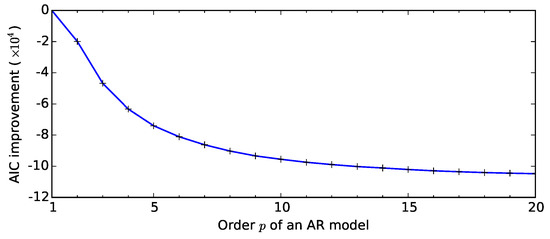

Table 2 clearly shows that the Gaussian white noise model, irrespective of the geographic location or the coordinate, is the worst-performing noise model. All of its AIC values are positive, indicating a very low likelihood that the observations are described by the model. The MA models also seem a poor fit to the data, although one may increase the order of the model, q, to achieve lower AIC scores. Our standard numerical routine, based on the Yule–Walker method [37], could not converge. In contrast, an AR model with increasing order fit best among the noise models examined. Although both the ARMA(p, q) and Ornstein–Uhlenbeck models could achieve comparable AIC values (with the exception of the North Stradbroke Island dataset), the quantitative comparison of AIC values always favoured the high-order AR model. We note here that slightly lower AIC values could be achieved for autoregressive models by increasing their order, but the gain is becoming negligible around (see Figure 7).

Figure 7.

The Akaike’s Information Criterion (AIC) score for autoregressive (AR) models of increasing order using the Dunedin GPS altitude dataset recorded on 15 August 2014, scaled relative to the AIC score for .

Figure 7 also captures two typical phenomena: For low values of p, the AR(p) models do improve significantly with increasing values of p, but there is a ‘kink’ in the AIC curve around approximately , where some improvement can be still achieved by increasing p, but these may not be worthwhile considering the increasing computational workload. In many GPS applications, the sampling period is often shorter than a few minutes or an hour, so the aim of developing a noise model is to focus on the short-term behaviour of the time series. For this reason, the choice of seems a good compromise between modelling performance and computational load (cf. Table 2).

In summary, the Gaussian white noise model is the worst fit to the observation. The next worst model, according to the AIC, is a low-order MA process, followed by the Ornstein–Uhlenbeck model. A higher-order AR scores better, with the higher-order AR processes scoring best. The AR process has the further advantage of robust parameter estimation.

6. Discussion

The use of Gaussian white noise models is very common in sensor fusion algorithms that incorporate GPS data (Hamilton 1994). We have shown here that the assumption of uncorrelated noise does not capture important features of real-world GPS data. Fitting a Gaussian white noise model results in an artificially large variance and does not account for the observed autocorrelations. Analysis of location data shows that the error between the position measurement and the ‘true’ location shows strong autocorrelation up to approximately an hour. We proposed stochastic noise models that take into account such autocorrelation. Estimating the model parameters for the Ornstein–Uhlenbeck and AR models based on likelihood maximisation is straightforward where either the analytic solution is known or the numerical algorithm is well known and can be performed efficiently. Conversely, estimating the parameters for the MA or ARMA models is more involved and computationally expensive. If the stochastic process is known to be non-stationary, then the MA model is non-invertible, and further measures are required to estimate the model parameters. Here, we mention an alternative approach for supporting the suitability of novel noise models. One can calculate the second moment of the residuals, , using single-path or multipath ensemble averaging for the observed time series and the one generated by the model. However, this approach is left for future analysis.

Autoregressive processes of moderate order provide a sufficient noise model for the signal returned by the commercial GPS device, and the extra computational cost compared to that of a naive Gaussian white noise model is negligible. Increasing the order of the autoregressive process results in lower AIC values, indicating that, from the purely statistical perspective, the likelihood maximisation outweighs the increase in complexity. However, for the less accurate GPS units, the higher-order AR processes are only slightly more likely than the first-order AR(1) process. For an AR(1) process, we would advise a discretised Ornstein–Uhlenbeck process, which brings several advantages. First, the sampling period of the device is explicit in its formulation; thus, variable sampling periods can easily be fit into this description. Second, the other parameters of an Ornstein–Uhlenbeck process give clear insight into the noise characteristics: describes how the rate measurements revert towards the mean , while indicates the amount of noise present between each measurement.

Wendel and Trommer [59] analysed the effect of time-correlated noise on the applicability of classical Kalman Filtering, and proposed new filter equations. The noise models we proposed here can thus be incorporated into a Kalman Filter or Bayesian inference algorithms and provide both an estimate for the location along with quantified uncertainty. In addition, recent work [60] has shown that there are practical algorithms for sensor fusion in multi-dimensional systems that allow arbitrary noise distributions and approach the computational efficiency of Kalman Filtering.

Author Contributions

Conceptualisation: A.W.R.S., T.M., P.B., and D.S.; Methodology: A.W.R.S., A.M., and D.S.; Software and validation: A.W.R.S., A.M., and T.M.; Formal analysis: A.W.R.S., A.M., and D.S.; Resources, B.J.P., P.B., and T.C.A.M.; Writing: A.W.R.S., A.M., and D.S.; Review and editing: A.M., T.M., and D.S.; Visualisation: D.S.; Supervision: D.S. and T.C.A.M.; All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the New Zealand Ministry of Business, Innovation, and Employment under grant number UOOX-1208. D. Schumayer is grateful for the financial support from the Dodd-Walls Centre for Photonic and Quantum Technologies.

Acknowledgments

The authors are grateful for the reviewers for their recommendations, which helped to clarify some of our earlier discussions. We are also indebted for one of their suggestions, which will provide an alternative measure of goodness for the noise models presented in this study.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Appendix A. Short Description of the QQ-Plot Method for Testing Normality

Before constructing non-Gaussian noise models, it is desirable to show that the distribution of noise is significantly different from a Gaussian distribution [23]. We thus carried out three normality tests for each coordinate (latitude, longitude, and altitude): the d’Agostino–Pearson test [25], the Lilliefors test [26], and the graphical QQ-plot method [61]. In Section 2, we demonstrated the deviation of the residuals from a Gaussian distribution. Here, a short description of the QQ-plot method is provided.

The central concept of the QQ-plot method is that of the quantiles, a measurable functional of the empirical distribution, such as the sample mean and sample median. The empirical distribution function, , of a sample of size n, is defined as , or, in words, the ratio of elements of the sample that are less than x. The symbol * means that the probability is estimated by the observed frequency. Assuming that converges in probability to a distribution function as , we can define the quantile, , of order p as . Here, the usage of sup guarantees that this definition remains meaningful even if has points of discontinuity. As a function of p, the quantile is the inverse function of . A widely used example of a quantile is the median, which is simply , i.e., the value of the sample for which half of the observations are smaller than , and half of them are higher than . Similarly, we may divide up the entire range of the random variable into segments in a way that the number of samples belonging into each segment are the same. In the sample quantile , where , is equal to the k-th smallest value of the sample.

The sample quantiles provide us a graphical approach to check whether the sample comes from a given probability distribution, . Let us assume that , , …are independent, identically distributed random variables, with and . Even if the observed values do not satisfy these mild conditions, after normalisation, the two conditions can be fulfilled. Let G denote the distribution function of the standard normal distribution, while stands for the empirical distribution function of , where

One measure of whether the sample comes from a standard normal distribution is the ‘closeness’ of and G. The closeness is visually represented in a two-dimensional plot, where the abscissa is the t-th quantile, , while the ordinate is the t-th sample quantile, . The closer they are, the closer the points are to the diagonal. The plot of points for is called the quantile–quantile plot, or, in short, the QQ-plot. References

References

- Zumberge, J.F.; Heflin, M.B.; Jefferson, D.C.; Watkins, M.M.; Webb, F.H. Precise point positioning for the efficient and robust analysis of GPS data from large networks. J. Geophys. Res. 1997, 102, 5005–5017. [Google Scholar] [CrossRef]

- Zandbergen, P.A.; Barbeau, S.J. Positional accuracy of assisted GPS data from high-sensitivity GPS-enabled mobile phones. J. Navig. 2011, 64, 381–399. [Google Scholar] [CrossRef]

- Olynik, M.C. Temporal Characteristics of GPS Error Sources and Their Impact on Relative Positioning; University of Calgary: Calgary, AB, Canada, 2002. [Google Scholar]

- Van Nee, R.D. Multipath effects on GPS code phase measurements. Navigation 1992, 39, 177–190. [Google Scholar] [CrossRef]

- Georgiadou, Y.; Kleusberg, A. On carrier signal multipath effects in relative GPS positioning. Manuscripta Geod. 1988, 13, 172–179. [Google Scholar]

- Sigrist, P.; Coppin, P.; Hermy, M. Impact of forest canopy on quality and accuracy of GPS measurements. Int. J. Remote Sens. 1999, 20, 3595–3610. [Google Scholar] [CrossRef]

- Griffiths, J.; Ray, J.R. On the precision and accuracy of IGS orbits. J. Geod. 2009, 83, 277–287. [Google Scholar] [CrossRef]

- Forte, B. Analysis of the PLL phase error in presence of simulated ionospheric scintillation events. Radio Sci. 2012, 47, 1–21. [Google Scholar] [CrossRef]

- Tiberius, C.; Jonkman, N.; Kenselaar, F. The stochastics of GPS observables. GPS World 1999, 10, 49–54. [Google Scholar]

- Xu, G.; Xu, Y. GPS: Theory, Algorithms and Applications; Springer: Berlin/Heidelberg, Germany, 2016. [Google Scholar]

- Santamaría-Gómez, A.; Bouin, M.N.; Collilieux, X.; Wöppelmann, G. Correlated errors in GPS position time series: Implications for velocity estimates. J. Geophys. Res. Solid Earth 2011, 116. [Google Scholar] [CrossRef]

- Zhang, J.; Bock, Y.; Johnson, H.; Fang, P.; Williams, S.; Genrich, J.; Wdowinski, S.; Behr, J. Southern California permanent GPS geodetic array: Error analysis of daily position estimates and site velocities. J. Geophys. Res. Solid Earth 1997, 102, 18035–18055. [Google Scholar] [CrossRef]

- Bos, M.; Fernandes, R.; Williams, S.; Bastos, L. Fast error analysis of continuous GPS observations. J. Geod. 2008, 82, 157–166. [Google Scholar] [CrossRef]

- Olivares, G.; Teferle, F. A Bayesian Monte Carlo Markov Chain Method for Parameter Estimation of Fractional Differenced Gaussian Processes. IEEE Trans. Signal Process. 2013, 61, 2405–2412. [Google Scholar] [CrossRef]

- Jin, S.; Wang, J.; Park, P.H. An improvement of GPS height estimations: Stochastic modeling. Earth Planets Space 2005, 57, 253–259. [Google Scholar] [CrossRef]

- Borre, K.; Tiberius, C. Time Series Analysis of GPS Observables. In Proceedings of the 13th International Technical Meeting of the Satellite Division of the Institute of Navigation (ION GPS 2000), Salt Lake City, UT, USA, 19–22 September 2000; pp. 1885–1894. [Google Scholar]

- Segall, P.; Davis, J.L. GPS applications for geodynamics and earthquake studies. Annu. Rev. Earth Planet. Sci. 1997, 25, 301–336. [Google Scholar] [CrossRef]

- Amiri-Simkooei, A.R. Noise in multivariate GPS position time-series. J. Geod. 2009, 83, 175–187. [Google Scholar] [CrossRef]

- Williams, S.D.P.; Bock, Y.; Fang, P.; Jamason, P.; Nikolaidis, R.M.; Prawirodirdjo, L.; Miller, M.; Johnson, D.J. Error analysis of continuous GPS position time series. J. Geophys. Res. Solid Earth 2004, 109, B03412. [Google Scholar] [CrossRef]

- Santamaría-Gómez, A.; Bouin, M.N.; Collilieux, X.; Wöppelmann, G. Chapter Time-Correlated GPS Noise Dependency on Data Time Period. In Reference Frames for Applications in Geosciences; Springer: Berlin/Heidelberg, Germany, 2013; pp. 119–124. [Google Scholar] [CrossRef]

- Kaplan, E.D.; Hegarty, C.J. Understanding GPS Principles and Applications, 2nd ed.; Artech House: Norwood, MA, USA, 2006. [Google Scholar]

- Thode, H. Testing for Normality; Taylor & Francis Group: Abingdon, UK, 2019. [Google Scholar]

- Yap, B.W.; Sim, C.H. Comparisons of various types of normality tests. J. Stat. Comput. Simul. 2011, 81, 2141–2155. [Google Scholar] [CrossRef]

- Pearson, E.S.I. Note on Tests for Normality. Biometrika 1931, 22, 423–424. [Google Scholar] [CrossRef]

- D’Agostino, R.B.; Belanger, A. A Suggestion for Using Powerful and Informative Tests of Normality. Am. Stat. 1990, 44, 316–321. [Google Scholar]

- Lilliefors, H.W. On the Kolmogorov-Smirnov Test for Normality with Mean and Variance Unknown. J. Am. Stat. Assoc. 1967, 62, 399–402. [Google Scholar] [CrossRef]

- Abdel-Hafez, M.F. The autocovariance least-squares technique for GPS measurement noise estimation. IEEE Trans. Veh. Technol. 2010, 59, 574–588. [Google Scholar] [CrossRef]

- Fallahi, K.; Cheng, C.T.; Fattouche, M. Robust positioning systems in the presence of outliers under weak GPS signal conditions. IEEE Syst. J. 2012, 6, 401–413. [Google Scholar] [CrossRef]

- Durbin, J. The Fitting of Time-Series Models. Rev. Int. Stat. Inst. 1960, 28, 233–244. [Google Scholar] [CrossRef]

- Durbin, J. Efficient Fitting of Linear Models for Continuous Stationary Time Series from Discrete Data; Technical Report; North Carolina State University, Department of Statistics: Raleigh, NC, USA, 1960. [Google Scholar]

- Durbin, J. Efficient fitting of linear models for continuous stationary time series from discrete data. Bull. Int. Stat. Inst. 1961, 38, 273–281. [Google Scholar]

- Cryer, J.D.; Chan, K.S. Time Series Analysis: With Applications in R; Springer: New York, NY, USA, 2008. [Google Scholar]

- Box, G.E.P.; Jenkins, G.M.; Reinsel, G.C.; Ljung, G.M. Time Series Analysis: Forecasting and Control, 5th ed.; Wiley Series in Probability and Statistics; John Wiley & Sons; Inc.: Hoboken, NJ, USA, 2016. [Google Scholar]

- Walker, A.M. Large-Sample Estimation of Parameters for Autoregressive Processes with Moving-Average Residuals. Biometrika 1962, 49, 117–131. [Google Scholar] [CrossRef]

- Box, G.E.P.; Pierce, D.A. Distribution of Residual Autocorrelations in Autoregressive-Integrated Moving Average Time Series Models. J. Am. Stat. Assoc. 1970, 65, 1509–1526. [Google Scholar] [CrossRef]

- Ljung, G.M.; Box, G.E.P. On a measure of lack of fit in time series models. Biometrika 1978, 65, 297–303. [Google Scholar] [CrossRef]

- Box, G.E.P.; Jenkins, G.M.; Reinsel, G.C. Time Series Analysis, Forecasting and Control, 3rd ed.; Prentice Hall: Englewood Clifs, NJ, USA, 1994. [Google Scholar]

- Bartlett, M.S. On the theoretical specification of sampling properties of an autocorrelated process. J. R. Stat. Soc. B 1946, 8, 27–41. [Google Scholar]

- Anderson, O. Time Series Analysis and Forecasting: The Box-Jenkins Approach; Butterworths: Carlisle City, UK, 1976. [Google Scholar]

- Fishman, G. Principles of Discrete Event Simulation; Wiley Series on Systems Engineering and Analysis; John Wiley & Sons: Hoboken, NJ, USA, 1978. [Google Scholar]

- Porat, B. Digital Processing of Random Signals: Theory and Methods; Prentice Hall Information and System Sciences Series; Prentice Hall: Englewood Cliffs, NJ, USA, 1994. [Google Scholar]

- Akaike, H. Maximum likelihood identification of Gaussian autoregressive moving average models. Biometrika 1973, 60, 255–265. [Google Scholar] [CrossRef]

- Brockwell, P.J.; Davis, R.A. Time Series: Theory and Methods; Springer Series in Statistics; Springer: New York, NY, USA, 1991. [Google Scholar]

- Astrom, K.; Soderstrom, T. Uniqueness of the maximum likelihood estimates of the parameters of an ARMA model. IEEE Trans. Autom. Control 1974, 19, 769–773. [Google Scholar] [CrossRef][Green Version]

- Mann, H.B.; Wald, A. On the Statistical Treatment of Linear Stochastic Difference Equations. Econometrica 1943, 11, 173–220. [Google Scholar] [CrossRef]

- Melard, G. Algorithm AS197: A Fast Algorithm for the Exact Likelihood of Autoregressive-Moving Average Models. J. R. Stat. Society. Ser. C Appl. Stat. 1984, 33, 104–114. [Google Scholar] [CrossRef]

- Evans, L.C. An Introduction to Stochastic Differential Equations; American Mathematical Society: Berkeley, Berkeley, CA, 2014. [Google Scholar]

- Øksendal, B. Stochastic Differential Equations: An Introduction with Applications; Universitext; Springer: Berlin/Heidelberg, Germany, 2010. [Google Scholar]

- Uhlenbeck, G.E.; Ornstein, L.S. On the Theory of the Brownian Motion. Phys. Rev. 1930, 36, 823–841. [Google Scholar] [CrossRef]

- Doob, J.L. The Brownian Movement and Stochastic Equations. Ann. Math. 1942, 43, 351–369. [Google Scholar] [CrossRef]

- Pedersen, A.R. A New Approach to Maximum Likelihood Estimation for Stochastic Differential Equations Based on Discrete Observations. Scand. J. Stat. 1995, 22, 55–71. [Google Scholar]

- Tang, C.Y.; Chen, S.X. Parameter estimation and bias correction for diffusion processes. J. Econom. 2009, 149, 65–81. [Google Scholar] [CrossRef]

- Dacunha-Castelle, D.; Florens-Zmirou, D. Estimation of the coefficients of a diffusion from discrete observations. Stochastics 1986, 19, 263–284. [Google Scholar] [CrossRef]

- Kloeden, P.E.; Platen, E. Numerical Solution of Stochastic Differential Equations; Springer: Berlin/Heidelberg, Germany, 1992; Volume 23. [Google Scholar]

- Claeskens, G.; Hjort, N.L. Model Selection and Model Averaging; Cambridge Series in Statistical and Probabilistic Mathematics; Cambridge University Press: Cambridge, UK, 2008; p. 332. [Google Scholar]

- Akaike, H. A new look at the statistical model identification. IEEE Trans. Autom. Control 1974, 19, 716–723. [Google Scholar] [CrossRef]

- Schwarz, G. Estimating the Dimension of a Model. Ann. Stat. 1978, 6, 461–464. [Google Scholar] [CrossRef]

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer Series in Statistics; Springer: Berlin/Heidelberg, Germany, 2003. [Google Scholar]

- Wendel, J.; Trommer, G.F. An Efficient Method for Considering Time Correlated Noise in GPS/INS Integration. In Proceedings of the 2004 National Technical Meeting of The Institute of Navigation, San Diego, CA, USA, 26–28 January 2004; pp. 903–911. [Google Scholar]

- Fox, C.; Norton, R.A.; Morrison, M.E.; Molteno, T.C. Sequential Bayesian Inference for Dynamical Systems Using the Finite Volume Method. In 2017 MATRIX Annals; Springer: Berlin/Heidelberg, Germany, 2019; pp. 13–23. [Google Scholar]

- Thode, H. Testing for Normality; Marcel Dekker: New York, NY, USA, 2002. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).