Abstract

Distribution mismatch caused by various resolutions, backgrounds, etc. can be easily found in multi-sensor systems. Domain adaptation attempts to reduce such domain discrepancy by means of different measurements, e.g., maximum mean discrepancy (MMD). Despite their success, such methods often fail to guarantee the separability of learned representation. To tackle this issue, we put forward a novel approach to jointly learn both domain-shared and discriminative representations. Specifically, we model the feature discrimination explicitly for two domains. Alternating discriminant optimization is proposed to obtain discriminative features with an l2 constraint in labeled source domain and sparse filtering is introduced to capture the intrinsic structures exists in the unlabeled target domain. Finally, they are integrated in a unified framework along with MMD to align domains. Extensive experiments compared with state-of-the-art methods verify the effectiveness of our method on cross-domain tasks.

1. Introduction

A basic assumption of many machine learning algorithms is that the training and testing data share the same distribution. Now, data sources are more diverse due to the lower costs of data acquisition. For visual images, it may cause inconsistent distribution with some small changes such as lighting conditions, acquisitions, or backgrounds. It is expensive to label each source’s data. Domain adaptation aims to train a robust classifier based on a labeled source domain to predict on an unlabeled target domain [], which achieved significant progress in image classification [,], speech recognition [,], person re-identification [], and many other areas.

An intuitive idea for domain adaptation is to re-weight the training samples and reduce the distance between the source and target domains at the instance level []. Another popular way is to reduce the discrepancy between domains at the feature level, which attempts to learn domain-shared representations. Ben et al. pointed out that the transferable features can be obtained by minimizing the distance of domains and maximizing the source margin simultaneously []. Based on this theory, many feature-driven domain adaptation methods have been proposed. Pan et al. mapped the data from both domains to high-dimensional Hilbert space and then minimized the domain discrepancy []. The measurement employed in [] is maximum mean discrepancy (MMD) [], which is capable of characterizing the distance between two sets of samples. Long et al. adapted the marginal probability and conditional probability of the two domains simultaneously by assigning pseudo-labels to the target domain, and achieved more accurate results in an iterative manner []. Gong et al. integrated an infinite number of subspaces and characterized changes in consideration of geometric and statistical properties, then proposed a subspace disagreement measure (SDM) to determine the optimal subspace dimension []. The combination of distribution matching measures and deep neural networks also achieves remarkable performance. Yosinski et al. studied the transferability of layers in deep neural networks and pointed out that for a particular deep neural network, the first few layers learn general features such as lines or points, and the latter layers learn more specific features []. Inspired by this, a series of works have been proposed. Ghifary et al. proposed a domain adaptive neural network for domain adaptation. Compared with a classical neural network, it modeled domain discrepancy explicitly []. Tzeng et al. added an MMD adaptation layer to the classic Alexnet [] for distribution alignment []. Long et al. obtained better results by adapting more layers of network and using multi-kernel MMD (MK-MMD) []. Inspired by the success of residual structure in image classification, Long et al. proposed residual transfer networks (RTN) to learn cross-layer transferable features []. Another interesting idea is adversarial training, which establishes a domain classifier to judge whether a sample comes from the source or target domain. Suppose that a well-trained classifier cannot distinguish samples in two domains; we can say that there are little differences between domains. It differs from other methods in that adversarial training does not measure domain discrepancy with hand-crafted indicators but uses a dynamic classifier. Ganin et al. put forward a gradient reversal layer (GRL) to learn domain-invariant features []. Long et al. combined MMD and adversarial training to form a more powerful joint maximum mean discrepancy []. Pei et al. considered the multi-mode structures of data and used multiple adversarial networks (each for a class) to align domains []. Zhang et al. simultaneously learned both domain-informative and domain-uninformative features through domain collaborative and domain adversarial learning [].

Many studies focus on dimensionality reduction to facilitate calculation and visualization []. Similarly, domain adaptation can also be considered as a feature extraction problem to extract discriminative and shared features. For features with low dimension, it is generally considered that the good features may hold more information, which has been proven to be effective in auto-encoder [] and reconstruction independent component analysis []. The reconstruction error guarantees the completeness of features. However, for the problem of domain adaptation, feature completeness is not necessary because of the inconsistent distribution between the two domains. The knowledge or features are not completely shared between the two domains []. Another criterion for extracting features is sparsity, which is generally used as a regular item []. Sparse filtering proposed in [] avoids explicitly modeling raw data, and obtains ideal features by constraining the sparsity of features. Sparse representation-based methods have also made dramatic progress in visual recognition [].

Most existing methods attempt to shorten the distance between two domains in different feature spaces while maintaining certain statistical characteristics (e.g., variance []). Despite the great success achieved, they do not model feature distinctiveness explicitly. In order to handle this problem, we propose a novel dimensionality reduction method for unsupervised domain adaptation in this paper. Apart from reducing the distance between domains, we employ different measurements for source and target domain to obtain discriminative features. Our contributions can be summarized as follows.

- We propose a novel unsupervised domain adaptation solution to reduce domain discrepancy and extract discriminative features simultaneously. Compared to existing works, the proposed method models feature distinctiveness with explicitly constraint. Comparisons with state-of-the-art methods show that our method works well in accuracy and efficiency.

- Alternating discriminant optimization is proposed to obtain discriminative features in the labeled source domain, which utilizes an l2 objective to measure feature distinctiveness. We use a toy example to demonstrate how it works.

- We combine sparse filtering and maximum mean discrepancy into an integrated framework, and propose an unified optimization method with full-batch and mini-batch gradient descent.

The rest of the paper is organized as follows. Section 2 details the domain adaptation problem and related works, then introduces sparse filtering and maximum mean discrepancy. Our method is introduced in Section 3 and experimental evaluation is presented in Section 4. At last, we summarize this paper and discuss future work in Section 5.

2. Related Works

In this section, we give a definition of transfer leaning and explain its relationship with domain adaptation. According to whether the labeled samples in the target domain are available, the problem can be divided into semi-supervised and unsupervised domain adaptation. In this paper, we focus on unsupervised domain adaptation, which means that the target domain does not have any labeled samples. Following that, we introduce sparse filtering and maximum mean discrepancy.

2.1. Transfer Learning and Domain Adaptation

There are two important concepts in transfer learning, domain and task. A domain, , can be thought of as a set of data, which has a feature space X and a marginal probability distribution . The task also has two components, , Y is the label space and is the mapping function. Traditional machine learning methods have the same domains and tasks between training and testing. When domains or tasks are different, we call it transfer learning (TL). According to the similarity of domain and task, TL can be divided into inductive TL and transductive TL. In this paper, we focus on transductive TL, where the domains are different but related and the tasks are the same.

Domain adaptation can be seen as a kind of transductive TL. Given source data and label (,) and target data (), where data in two domains have different distributions, domain adaptation (DA) aims to find the label of target data (). When the test set is completely unlabeled, it is called unsupervised domain adaptation, which is also the focus of this paper. The mathematical form is defined as follows [].

In this paper, we focus on unsupervised domain adaptation which means that target domain has no labels at all. Existing methods try to align features by means of varieties of transformations (e.g., kernel [], deep neural networks []). One crucial thing is how to measure the discrepancy between domains. There are two widely used methods: (a) alignment with moments, whether the first-order moment (maximum mean discrepancy []) or the second-order (CORAL []); and (b) adversarial training. The main idea is to establish a feature extractor and a domain discriminator simultaneously and train them as generative adversarial nets [].

2.2. Sparse Filtering

Sparse filtering is an effective and simple unsupervised feature extraction method proposed in []. It only requires one input parameter: the number of features. Unlike other feature extraction methods, it does not attempt to model the raw data. Instead, it starts with what are good features and directly constrains the extracted features. As a major contribution, the authors gave three principles of the so-called good features.

- Population sparsity: Each example should be represented by only a few active features. Specifically, non-zero elements represent the activation of features, so each sample has few non-zero elements.

- Lifetime sparsity: Good features should be distinguishable. Therefore, a feature is only allowed to be activated in few samples. For example, if we want to classify cats and dogs, the feature of having a tail is activated for all samples, then it is not a good feature.

- High dispersal: It requires each feature to have similar statistical properties across all samples. No one feature should have significantly more activity than the others. This avoids the extraction of features that are only activated on very few samples, and prevents the extraction of similar features.

Furthermore, the authors pointed out that we can obtain ideal representations by jointly optimizing population sparsity and high dispersal, so there is no need to optimize lifetime sparsity explicitly; interested readers can refer to the original paper for more details. Suppose now we have n samples, each with m-dimensional features that can be written as . The optimization of sparse filtering is as follows:

(1) Linear feature extraction. Let represent the jth feature of the ith sample. . Then we can use some activation functions to make it more expressive, such as the soft absolute function.

(2) Solving high dispersal. Each feature is divided by the -norm of the feature on all samples.

Remember that the requirement of high dispersal is that the statistical properties of each feature are similar. This step forces the sum of the squares of all features to be 1 roughly.

(3) Solving population sparsity. Each sample is divided by its own -norm. Then, we can get the objective function.

An advantage of using normalization is to introduce the competition mechanism—that is to say, while some components become larger, some components will become smaller. The result of competition is that the representation becomes sparse.

2.3. Maximum Mean Discrepancy

Maximum mean discrepancy (MMD) is widely used to measure the difference between distributions []. For domain adaptation problems, researchers pointed out that marginal distribution adaptation can be achieved by minimizing MMD which computes the distance between sample means in the dimensional embeddings [,].

where is the MMD matrix that can be computed as:

Intuitively, the source and target data are integrated together as where m denotes the feature dimension of original data and denotes the number of source/target samples. The first columns are instances from the source domain and followed columns from the target domain. is the adaptation matrix which maps the original and to dimensional. As shown on the left of Equation (5), MMD computes the mean vectors for the source and target domains first, then takes the norm of the difference between the two vectors.

3. Methodology

In this section, we describe the proposed method in detail. First the framework of our approach is introduced. Then, it is followed by a detailed description of the proposed alternating discriminant optimization (ADO) and how the MMD is used in our method. Finally, we summarize the specific optimization problem.

3.1. Framework of Discriminative Sparse Filtering

In this paper, we try to learn both discriminative and domain-shared features. Our model consists of two parts: feature transformation and loss function construction. Using the notations defined in Table 1, feature transformation can be described as:

Table 1.

Notations and descriptions used in this paper.

Step 1. Linear feature extraction. Let denote the selected activation function. We use the soft absolute function as in this paper

where denotes a small number, such as 1e-5.

Step 2. Solving high dispersal. Observing the form of , each row represents a sample, and each column represents a feature. So this step is actually doing a column normalization. It is worth noting that we do within-domain normalization instead of cross-domain (which means that using all the samples from two domains to normalize). The idea is to force each feature has similar statistical properties in two domains by setting their norm to 1 rudely. As a consequence, a given feature should (a) have similar statistical properties in different domains and (b) be distinguishable over samples in the same domain.

Here, the symbol ∘ represents Hadamard product.

Step 3. Solving population sparsity. Just like the previous step, this step does an row normalization.

Notice that steps 2 and 3 do not change the dimension of samples; we can regard them as a specific activation. Based on the descriptions, the transformation from the initial data X to is summarized as . The loss function can be described as:

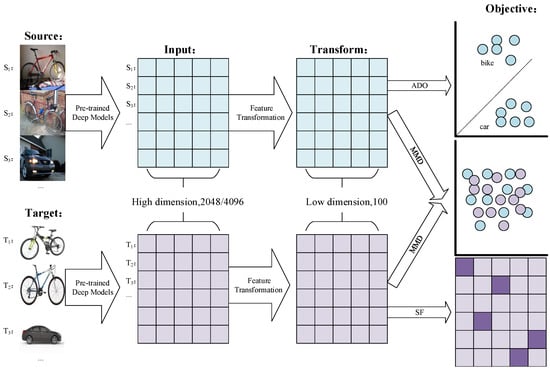

where represents the sparse loss on the target domain, represents the discriminative loss of the source domain, and denotes the MMD loss between the source and target features. are the parameters that balance the three objectives. Obviously, and correspond to the two goals presented in []. Furthermore, we require the target domain features to be discriminant. A graphical illustration of the framework is shown in Figure 1. Given raw pictures from source and target domains, we first extract their vectorized features with pre-trained deep models, e.g., Alexnet and Resnet. It is worth noting that we do not employ any fine-tuning. Then, they are further reduced in dimension by a linear transformation matrix W, after steps 1–3. The objective constructing on the learned representation can be divided into three parts: (1) source domain—objects from different category should far away from each other; (2) target domain—the learned representation should be sparse; and (3) cross-domain—there should not be clear gap among two domains’ samples.

Figure 1.

Graphical illustration of the proposed framework.

3.2. Target Domain Sparsity: Sparse Filtering

In order to obtain discriminative features, we first need an indicator to evaluate the impact of current features on the classification. According to the theory in [], classification error is the most effective evaluation criterion for feature selection. The specific process is to establish a classifier using the existing features and labels, and then take the classifier error as the discriminant index of the current features. However, there are not any labels in the target domain for unsupervised domain adaptation, which brings difficulties to extract discriminative structures. Since sparse filtering has made remarkable achievements in many areas, in this paper, we introduce sparse filtering for the target domain.

3.3. Source Domain Discriminability: Alternating Discriminant Optimization

For the labeled source domain, we can establish a classifier using the transformed features and labels directly. Different from heuristic feature selection, we hope to solve the optimal transformation matrix by combining the sparsity of the target domain data, which requires that this indicator can be optimized using gradient information. So the classification model whose parameters are solved in an iterative manner (e.g., neural network and SVM) is no longer applicable. In this paper, we use mean square error, then obtain discriminative features by alternately optimizing two parameters.

Suppose that we have source features and labels . To measure the discriminability of the features, we need to map features to label space; here, we use linear mapping function because it can be easily solved without multiple iterations. The objective can be described in mathematical form as:

A linear regressor maps original data to label space by means of a transformation matrix (). Obviously, we actually perform linear regression in the feature space. As mentioned earlier, classification error is the most effective index for feature selection, but it is an constraint and makes trouble for optimizing with gradient information. Here, we relax the constraint to which is equivalent to linear regression. In general, we hope to measure the discriminability of features by constraint and optimize it with gradient descent.

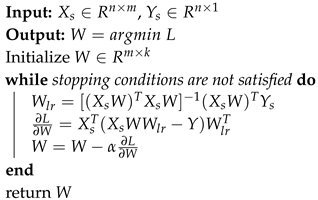

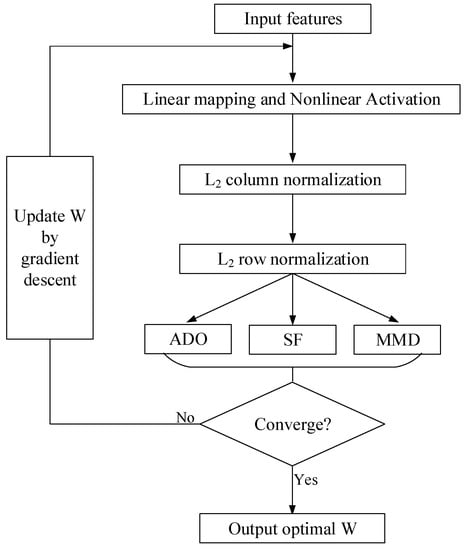

For this two-variable (W and ) optimization problem, it is hard to optimize two parameters simultaneously. So we borrow the ideas of alternating direction method of multipliers (ADMM) []. W transforms original data to features space where we construct a linear regressor by means of . At each iteration, we first solve the linear regressor by normal equation, then update W by the chain rule and gradient descent. The specific process is showed in Algorithm 1.

| Algorithm 1: Alternating Discriminant Optimization. |

|

The main idea of ADO is to find the optimal classifier parameters for each generation of input features, and then optimize the mapping function W based on it. With the iterations, the mapping features will have a smaller regression error with the optimal . In Figure 2. we show how ADO solves XOR problem. Specifically, we set four examples, i.e., class zero (denoted by blue diamonds): [0,1], [1,0] and class one (denoted by red circular): [0,0], [1,1], which cannot be divided by a single line. ADO computes the optimal decision boundary, then learns a nonlinear mapping to minimize classification error. As the figure shows, samples are mapped into another two-dimensional feature space where they are linearly separable.

Figure 2.

A toy experiment of using ADO for XOR problems.

Correspondingly, we can formulate as follows.

3.4. Domain Discrepancy: MMD

We have described how MMD works with linear transformation, but there is a small change in our method. In the previous presentation, we mapped the data to the feature space by multiplying matrix (). The case is more complicated here (), but the idea is similar.

where denotes the merged data sets.

3.5. Optimization

In this section, we give the detailed process to solve three objectives.

3.5.1. Optimization of

It is the same as applying sparse filtering on the target domain data.

At each iteration, update and then use the updated parameters to calculate the gradient. Notice that we do not give the specific derivation of the select activation functions (soft absolute function); a more general form of the problem is given here, and more activation functions can be used, such as sigmoid and tanh.

3.5.2. Optimization of

Based on the derivation in the previous section and the chain rule, we have:

where represents the analytic solution of linear regression applied on source features.

3.5.3. Optimization of

We give the derivation of ; the rest is the same as .

where X consists of and , so Equation (17) provides the gradients of and . It is worth noting that are different for the two domains, so we should compute the gradients separately.

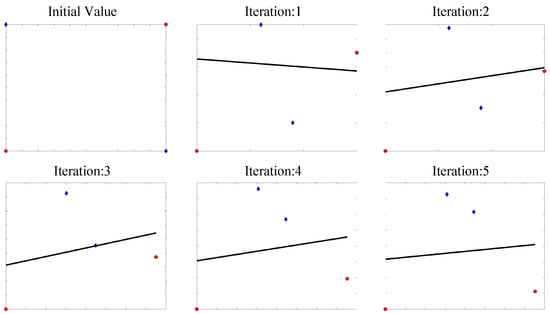

Given these, we can update W with , and the flowchart can be found in Figure 3.

Figure 3.

Flowchart of the proposed method.

4. Experiments

In this section, we introduce two data sets for domain adaptation and the experimental settings, then give the results. In addition, we provide an empirical analysis to show the robustness of the proposed method.

4.1. Data Set

4.1.1. Office-Caltech10

Office-Caltech10 data set is proposed in [], which consists of four domains—AMAZON (A), CALTECH (C), DSLR (D), and WEBCAM (W). It comes from the e-commerce website (AMAZON), data set caltech-256 (CALTECH), high-resolution digital camera photo (DSLR), and low-resolution photo (WEBCAM). Each domain has 10 types of objects, including laptop, monitor, and so on. Figure 4. shows the laptop in different domains. It can be seen that there are differences between domains, which brings difficulties to image recognition.

Figure 4.

Laptops in different domains.

4.1.2. ImageCLEF

ImageCLEF is an online competition for domain adaptation, which has three domains (Caltech (C), Imagenet (I), and PASCAL (P)) and twelve classes of objects.

4.2. Experimental Setting

The existing methods can be roughly divided into shallow methods and deep methods. Though our method does not have deep architectures, we choose some deep methods to illustrate its effectiveness. Following [], we convert the data to 100 dimensions by our method, then use a 1-nearest neighbor for classification. For our method, we set and .

The selected state-of-the-art methods are:

- Nearest neighbor (NN): NN is served as a baseline model to check whether the learned representations really work for DA problems.

- Joint distribution alignment (JDA): []. JDA [ICCV2013] adopts pseudo labels to align the conditional distributions of two domains.

- Correlation alignment (CORAL): []. CORAL [AAAI2016] obtains transferable representations by aligning the second-order statistics of distributions.

- Confidence-aware pseudo-label selection (CAPLS): [].CAPLS [IJCNN2019] uses a selective pseudo labeling procedure to obtain more reliable labels.

- Modified A-distance sparse filtering (MASF): [].MASF [Pattern Recognit.2020] employs an L2 constraint combining sparse filtering to learn both domain-shared and discriminative representations.

- Selective pseudo-labeling (SPL): [].SPL [AAAI2020] is also a selective pseudo labeling strategy based on structured prediction.

- Generalized softmax (GSMAX): []. GSMAX [Inf. Sci.2020] aims to learn smooth representation with both labeled source domain and unlabeled target domain.

Follow the experimental setting of JDA and MASF, we set the subspace dimension . For JDA, we set the regularization coefficient and the number of iterations . For CAPLS, we set the number of iteration . For MASF, we set the regularization coefficient . For SPL, we set the number of iterations . For GSMAX, we set the regularization factor to . It is worth emphasizing that the input features are extracted by deep networks without fine-tuning and no pre-processing strategy is applied in the experiments.

Following the setting of [,], we report the classification accuracy on target data as the evaluation metric.

where denotes the predicted label and y is the true label, so .

4.3. Implementation Details

(1) Initialization. We find that setting the initial value near 0 can significantly improve the convergence. In this paper, we set it to where denotes Gaussian distribution. We fix the random number seed to 0 (in MATLAB) for the reproducibility of this paper.

(2) Gradient descent. We set the maximum number of iterations to 200 and the step size to 0.1.

(3) Our code will be available at https://github.com/wobuhuiyingyu/DA_DSF.

4.4. Results

In this section, we report the accuracy of the proposed method (abbreviated as DSF for discriminative sparse filtering) and other state-of-the-art works; the results are shown on Table 2 and a detailed comparison can be found on Table 3. From experimental results, we have the following observations:

Table 2.

Performance (accuracy %)on Office-Caltech10 (No.1-12) and ImageCLEF (No.13-18).

Table 3.

An intuitive comparison of average performance (accuracy, %) and average running time (time, s).

- DSF vs. NN. According to the results, DSF is significantly better than NN. NN cannot handle the domain discrepancy, thus results in unsatisfying performance. On the other hand, it indicates that our method is able to learn transferable representations.

- DSF vs. CORAL, JDA. DSF is superior to CORAL and JDA. These two methods are classical distribution matching methods, but they have limited considerations on the discrimination of learned representations.

- DSF vs. MASF. MASF is another framework based on sparse filtering, which adopts a modified distance for domain alignment. Compared to our method, it cannot ensure that the learned representation can be classified easily.

- DSF vs. CAPLS, SPL. Objectively speaking, our method DSF has comparable performance when compared state-of-the-art works, only 0.5% decreasing on average accuracy. It reveals that the proposed discriminative features are applicable for domain adaptation problems.

The difference of sample numbers, also referred as class weight bias, is a fundamental problem for measuring distribution differences. Existing measurements, e.g., MMD and CORAL, employ the first/second/higher order moments to quantify distribution differences, which assume that the source and target data share the same class weights; however, such an assumption does not always hold (like Office-Caltech10). However, our method also yields good classification results. The reason is twofold: (1) the class weight biases are not so severe that they will lead to catastrophic accumulation of errors. (2) There are other regularizations, i.e., the proposed ADO and sparse filtering. The ideal features should be both domain-shared and discriminative, so the negative effects can be further suppressed. Another interesting phenomenon is the different results after changing the order of two domains; this can be explained by the information asymmetry. Imagine that two sets A and B, where A∈B, so if we choose B as training set and A for testing, the model would achieve satisfactory performance. If the order is changed, the model would fail since A cannot provide enough discrimination power.

4.5. Empirical Analysis

4.5.1. Ablation Study

For better understanding of the proposed method, we conduct an ablation study to analyze how different components contribute to the final performance. Since there are too few samples for some domains of the Office-Caltech10, e.g., 157 images in total for DSLR, we use ImageCLEF for ablation study only. Compared to original sparse filtering, we proposed two strategies, i.e., MMD for distribution matching and ADO for source discrimination. Through the arrangement and combination of two elements, we can construct experiments. We use ✓and ✗to denote the status of two components, e.g., MMD (✓) + ADO (✗) indicates that current model is MMD regularized sparse filtering. As Table 4 shows, when the two components are all activated, the method achieves the highest average performance. Adding one component can also improve the final prediction.

Table 4.

Ablation study. Classification accuracy (%) with different measures.

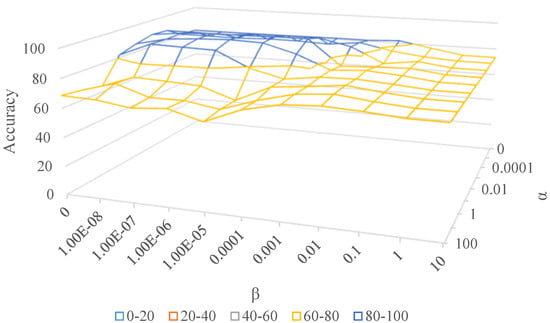

4.5.2. Parameter Sensitivity Analysis

In this paper, we introduce two parameters, and , to balance the three parts of our objective. is the coefficient of target sparsity; we hope to preserve the invisible structure of target samples by constraining its sparsity. Similarly, indicates how much we care about source discriminability.

(1) .

In this situation, we do not care about the discriminability of both domains. All we need is to reduce domain discrepancy by reducing the MMD loss, which is similar to TCA [].

(2) .

Extended from TCA, we hope to obtain discriminative representations while reducing domain discrepancy in some sense. However, if they are too large, we cannot learn transferable knowledge across domains. As Figure 5 shows, becomes larger from front to back and increases from left to right. Obviously, the highest peak occurs in the middle of the surface, which manifests that the proposed two strategies are both necessary. When becomes too large (corresponding to the right and rear of the surface), the accuracy decreases sharply since we pay too much attention to feature discriminability while ignoring the fundamental problem, i.e., distribution matching.

Figure 5.

Accuracy (%) with different and on Office-Caltech10.

4.5.3. Running Time

Using given notations, the computational cost is detailed as follows: for solving , for , and for . In summary, suppose we take T as the number of iterations; the overall computation complexity of algorithm is .

In this section, we record the running time (feature extraction + classification with NN) of previous experiments. All algorithms are implemented via MATLAB 2017a and executed on a Windows PC with Intel Core i7 CPU at 3.6GHz and 8GB RAM. Table 5 shows the results. Intuitively, we can see that the proposed method computes faster than most of other works on average running time, especially CAPLS and SPL.

Table 5.

Running time (S) on Office-Caltech10 (No.1–12) and ImageCLEF (No.13–18).

5. Discussion

In this section, we discuss the influence of different gradient-based optimization methods on the proposed framework.

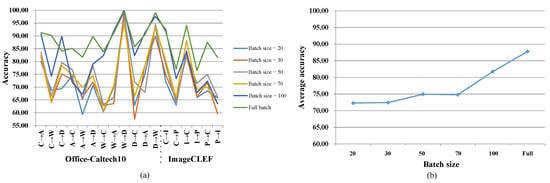

5.1. Mini-Batch versus Full-Batch

In the previous section, we show how to apply gradient descent for optimizing the proposed method, which means that we need all data () for computing. However, real-world applications may have large amount of data so that our computer cannot handle the heavy computation. Consequently, stochastic gradient descent (SGD), which adopts a subset () of data during each iteration, is necessary. In this section, we analyze how mini-batch based optimization may affect our method both theoretically and practically.

5.1.1. Implementation of Mini-Batch-Based Optimization

Mini-batch SGD randomly selects a part of samples to calculate gradients rather than on the whole data set. Similarly, we can solve the proposed framework with mini-batch SGD. Firstly, we should select samples in source and target domain () separately since MMD needs data from both domains. The batch size can be determined by our computation resource. Then by treating the two mini-batch () as and , we can update the parameters using gradient descent (showed in Section 3.5. Optimization).

5.1.2. Influence of Mini-Batch SGD

Here, we analyze how mini-batch SGD affects the proposed method. Since we use random mini-batch instead of the full batch, the sampling error cannot be ignored. For sparse filtering (corresponding to ), as an unsupervised feature extraction method, it requires a diversity of data. In extreme cases, suppose that we have data from the same class. Sparse filtering tries to extract distinguishable features; in other works, it tries to make samples from the same class to be different, which is counterintuitive. For alternating discriminant optimization (corresponding to ), it learns discriminative features with labeled source samples. If the samples belong to the same class in the mini-batch, it outputs meaningless gradients. For MMD (corresponding to ), it measures the domain discrepancy with first-order statistics. The sampling error is reflected in the gap between the mean of mini-batch and the full batch (). To summarize, using mini-batch SGD will lead to performance degradation and the degradation will become larger as the batch size becomes smaller. As Figure 6 shows, the proposed method achieves higher accuracy as the batch size becomes larger, as does the average accuracy. It is worth emphasizing that using mini-batch based optimization is not time-efficient; in fact, it often costs more time. The reason is that we need a small step size and more iterations to train the model, since a min-batch provides a biased estimation of the whole data set. It works when our computer cannot handle the large data set at a time—in other words, it can be seen as a trade-off of time and space.

Figure 6.

Experiments with different batch sizes. (a) Accuracy (%) on every subtask with different batch sizes. (b) Average accuracy (%) with different batch sizes.

6. Conclusions

In this paper, we propose a novel feature extraction method for unsupervised domain adaptation, which consists of three parts: (a) Since the target domain has no labels, sparse filtering is introduced to capture its discriminative structure in nature. (b) For the labeled source domain, we propose alternating discriminant optimization to directly model the relation of learned representation and labels; a toy experiment of XOR problem shows its validity. (c) We integrate MMD into the framework to reduce domain discrepancy and a unified optimization based on gradient descent is raised. Adequate experiments show that the proposed method is comparable or superior to existing methods. Furthermore, we give a mini-batch based optimization framework such the proposed method can be applied in large-scale problems. In the future, we plan to study how different metrics work to measure domain discrepancy.

Author Contributions

Conceptualization, C.H. and Y.X.; methodology, C.H. and Z.Y.; software, C.H. and Z.Y.; validation, C.H., Z.Y., and K.Z.; formal analysis, C.H.; investigation, C.H.; resources, Y.X.; data curation, Y.X.; writing—original draft preparation, C.H.; visualization, C.H.; supervision, D.Z.; project administration, D.Z.; funding acquisition, D.Z.; writing—review and editing, Z.Y. and K.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359. [Google Scholar] [CrossRef]

- Wang, M.; Deng, W. Deep visual domain adaptation: A survey. Neurocomputing 2018, 312, 135–153. [Google Scholar] [CrossRef]

- Busto, P.P.; Iqbal, A.; Gall, J. Open Set Domain Adaptation for Image and Action Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 413–429. [Google Scholar] [CrossRef] [PubMed]

- Cai, R.; Li, J.; Zhang, Z.; Yang, X.; Hao, Z. DACH: Domain Adaptation Without Domain Information. IEEE Trans. Neural Netw. Learn. Syst. 2020, 99, 1–13. [Google Scholar] [CrossRef] [PubMed]

- Zhao, H.; Des Combes, R.T.; Zhang, K.; Gordon, G. On Learning Invariant Representation for Domain Adaptation. arXiv 2019, arXiv:1901.09453. [Google Scholar]

- Song, L.; Wang, C.; Zhang, L.; Du, B.; Zhang, Q.; Huang, C.; Wang, X. Unsupervised domain adaptive re-identification: Theory and practice. Pattern Recognit. 2020, 102, 107173. [Google Scholar] [CrossRef]

- Dai, W.; Yang, Q.; Xue, G.R.; Yu, Y. Boosting for transfer learning. In Proceedings of the 24th International Conference on Machine Learning, Corvalis, OR, USA, 20–24 June 2007; pp. 193–200. [Google Scholar]

- Ben-David, S.; Blitzer, J.; Crammer, K.; Pereira, F. Analysis of representations for domain adaptation. In Proceedings of the Neural Information Processing Systems, Vancouver, BC, Canada, 4–5 December 2006; pp. 137–144. [Google Scholar]

- Pan, S.J.; Tsang, I.W.; Kwok, J.T.; Yang, Q. Domain adaptation via transfer component analysis. IEEE Trans. Neural Netw. Learn. Syst. 2011, 22, 199–210. [Google Scholar] [CrossRef] [PubMed]

- Smola, A.J.; Gretton, A.; Song, L.; Scholkopf, B. A Hilbert Space Embedding for Distributions. Int. Conf. Algorithmic Learn. Theory 2007, 4754, 13–31. [Google Scholar]

- Long, M.; Wang, J.; Ding, G.; Sun, J.; Yu, P.S. Transfer Feature Learning with Joint Distribution Adaptation. In Proceedings of the International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2200–2207. [Google Scholar]

- Gong, B.; Shi, Y.; Sha, F.; Grauman, K. Geodesic flow kernel for unsupervised domain adaptation. In Proceedings of the Computer Vision and Pattern Recognition, Providence, RI, USA, 16-21 June 2012; pp. 2066–2073. [Google Scholar]

- Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? In Proceedings of the Neural Information Processing Systems, Montreal, Canada, 8–13 December 2014; pp. 3320–3328. [Google Scholar]

- Ghifary, M.; Kleijn, W.B.; Zhang, M. Domain adaptive neural networks for object recognition. arXiv 2014, arXiv:1409.6041. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105. [Google Scholar]

- Tzeng, E.; Hoffman, J.; Zhang, N.; Saenko, K.; Darrell, T. Deep domain confusion: Maximizing for domain invariance. arXiv 2014, arXiv:1412.3474. [Google Scholar]

- Long, M.; Cao, Y.; Cao, Z.; Wang, J.; Jordan, M.I. Transferable Representation Learning with Deep Adaptation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 3071–3085. [Google Scholar] [CrossRef] [PubMed]

- Long, M.; Zhu, H.; Wang, J.; Jordan, M.I. Unsupervised domain adaptation with residual transfer networks. In Proceedings of the Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 136–144. [Google Scholar]

- Ganin, Y.; Lempitsky, V.S. Unsupervised Domain Adaptation by Backpropagation. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 1180–1189. [Google Scholar]

- Long, M.; Zhu, H.; Wang, J.; Jordan, M.I. Deep transfer learning with joint adaptation networks. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 2208–2217. [Google Scholar]

- Pei, Z.; Cao, Z.; Long, M.; Wang, J. Multi-Adversarial Domain Adaptation. In Proceedings of the National Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 3934–3941. [Google Scholar]

- Zhang, W.; Ouyang, W.; Li, W.; Xu, D. Collaborative and Adversarial Network for Unsupervised Domain Adaptation. In Proceedings of the Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3801–3809. [Google Scholar]

- Weng, J.; Young, D.S. Some dimension reduction strategies for the analysis of survey data. J. Big Data 2017, 4, 43. [Google Scholar] [CrossRef]

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507. [Google Scholar] [CrossRef] [PubMed]

- Le, Q.V.; Karpenko, A.; Ngiam, J.; Ng, A.Y. ICA with reconstruction cost for efficient overcomplete feature learning. In Proceedings of the Neural Information Processing Systems, Granada, Spain, 12–14 December 2011; pp. 1017–1025. [Google Scholar]

- d’Aspremont, A.; Ghaoui, L.E.; Jordan, M.I.; Lanckriet, G.R. A direct formulation for sparse PCA using semidefinite programming. In Proceedings of the Neural Information Processing Systems, Vancouver, BC, Canada, 5–8 December 2005; pp. 41–48. [Google Scholar]

- Ngiam, J.; Chen, Z.; Bhaskar, S.A.; Koh, P.W.; Ng, A.Y. Sparse filtering. In Proceedings of the Neural Information Processing Systems, Granada, Spain, 12–15 December 2011; pp. 1125–1133. [Google Scholar]

- Zhang, Z.; Xu, Y.; Yang, J.; Li, X.; Zhang, D. A Survey of Sparse Representation: Algorithms and Applications. IEEE Access 2015, 3, 490–530. [Google Scholar] [CrossRef]

- Long, M.; Wang, J.; Sun, J.; Yu, P.S. Domain Invariant Transfer Kernel Learning. IEEE Trans. Knowl. Data Eng. 2015, 27, 1519–1532. [Google Scholar] [CrossRef]

- Sun, B.; Feng, J.; Saenko, K. Return of frustratingly easy domain adaptation. In Proceedings of the National Conference on Artificial Intelligence, Phoenix, AR, USA, 12–17 February 2016; pp. 2058–2065. [Google Scholar]

- Goodfellow, I.; Pougetabadie, J.; Mirza, M.; Xu, B.; Wardefarley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Proceedings of the Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680. [Google Scholar]

- Dash, M.; Liu, H. Feature selection for classification. Intell. Data Anal. 1997, 1, 131–156. [Google Scholar] [CrossRef]

- Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 2011, 3, 1–122. [Google Scholar] [CrossRef]

- Wang, Q.; Bu, P.; Breckon, T.P. Unifying Unsupervised Domain Adaptation and Zero-Shot Visual Recognition. In Proceedings of the International Joint Conference on Neural Network, Budapest, Hungary, 14–19 July 2019; pp. 1–8. [Google Scholar]

- Han, C.; Lei, Y.; Xie, Y.; Zhou, D.; Gong, M. Visual Domain Adaptation Based on Modified A Distance and Sparse Filtering. Pattern Recognit. 2020, 104, 107254. [Google Scholar] [CrossRef]

- Wang, Q.; Breckon, T.P. Unsupervised Domain Adaptation via Structured Prediction Based Selective Pseudo-Labeling. In Proceedings of the National Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 1–10. [Google Scholar]

- Han, C.; Lei, Y.; Xie, Y.; Zhou, D.; Gong, M. Learning Smooth Representations with Generalized Softmax for Unsupervised Domain Adaptation. Inf. Sci. 2020. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).