End-to-End Automated Lane-Change Maneuvering Considering Driving Style Using a Deep Deterministic Policy Gradient Algorithm

Abstract

1. Introduction

2. Related Works

3. Problem Formulation

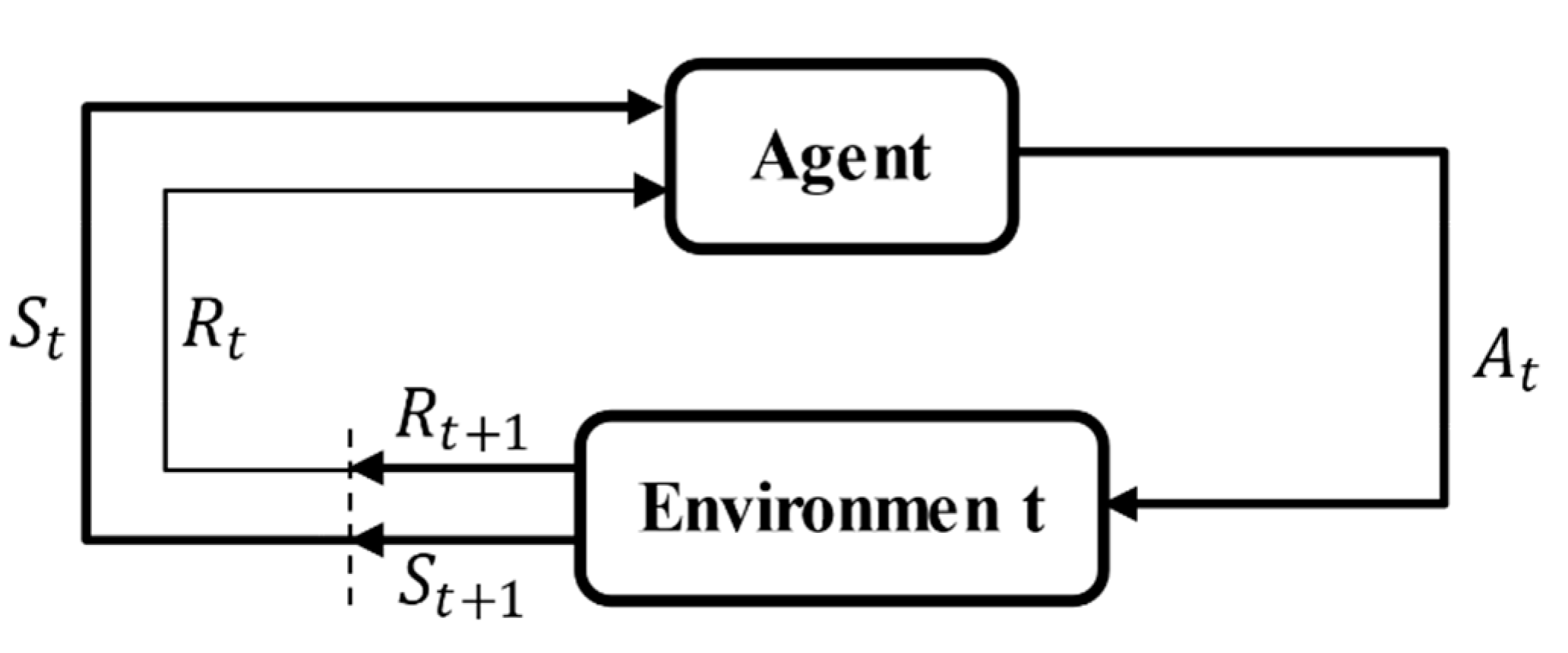

3.1. Reinforcement Learning

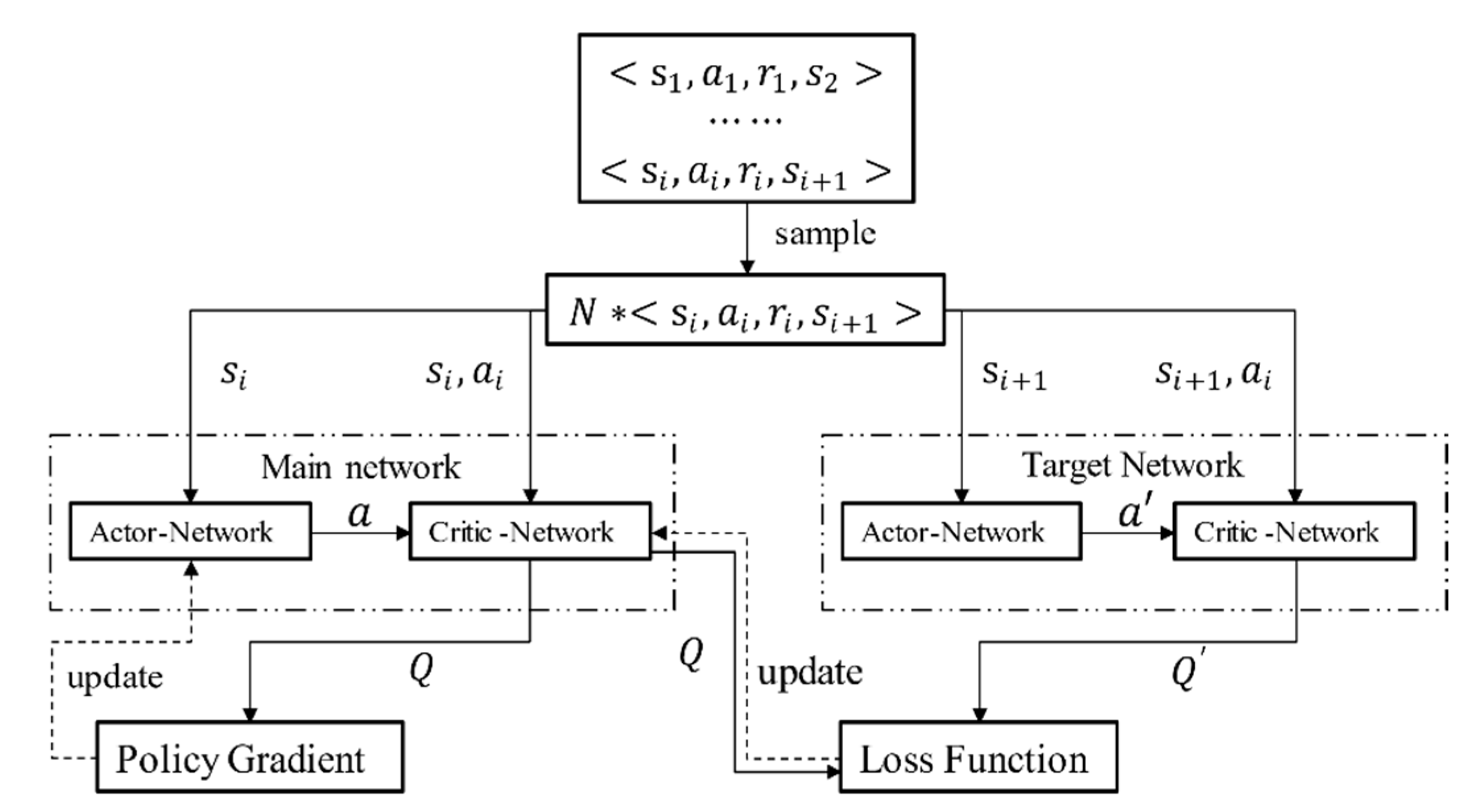

3.2. DDPG Algorithm

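| Algorithm 1 DDPG algorithm |
|---|
| Randomly initialize critic network $Q(s, a \mid \theta^{Q})$ and actor $\mu(s \mid \theta^{\mu})$ with weights $\theta^{Q}$ and $\theta^{\mu}$ |
| Initialize target networks $Q'$ and $\mu'$ with weights $\theta^{Q'} \leftarrow \theta^{Q}$, $\theta^{\mu'} \leftarrow \theta^{\mu}$ |
| Initialize replay buffer $R$ |
| for episode = 1, $M$ do |
| Initialize a random process $\mathcal{N}$ for action exploration |
| Receive initial observation state $s_1$ |
| for $t$ = 1, $T$ do |
| Select action $a_t = \mu(s_t \mid \theta^{\mu}) + \mathcal{N}_t$ according to the current policy and exploration noise |
| Execute action $a_t$, observe reward $r_t$ and new state $s_{t+1}$ |
| Store transition $(s_t, a_t, r_t, s_{t+1})$ in $R$ |
| Sample a random minibatch of $N$ transitions $(s_i, a_i, r_i, s_{i+1})$ from $R$ |
| Set $y_i = r_i + \gamma\, Q'\big(s_{i+1}, \mu'(s_{i+1} \mid \theta^{\mu'}) \mid \theta^{Q'}\big)$ |
| Update critic by minimizing the loss $L = \frac{1}{N} \sum_i \big(y_i - Q(s_i, a_i \mid \theta^{Q})\big)^2$ |
| Update the actor policy using the sampled policy gradient $\nabla_{\theta^{\mu}} J \approx \frac{1}{N} \sum_i \nabla_a Q(s, a \mid \theta^{Q})\big\rvert_{s = s_i,\, a = \mu(s_i)} \nabla_{\theta^{\mu}} \mu(s \mid \theta^{\mu})\big\rvert_{s_i}$ |
| Update the target networks: $\theta^{Q'} \leftarrow \tau \theta^{Q} + (1 - \tau)\theta^{Q'}$, $\theta^{\mu'} \leftarrow \tau \theta^{\mu} + (1 - \tau)\theta^{\mu'}$ |
| end for |
| end for |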
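The loop in Algorithm 1 can be sketched in plain Python. The block below is a minimal, framework-free sketch of the pieces that do not need automatic differentiation: the replay buffer $R$, the targets $y_i$, the critic loss, the soft target update with rate $\tau$, and an Ornstein-Uhlenbeck process for the exploration noise $\mathcal{N}$. The OU parameters ($\theta = 0.15$, $\sigma = 0.2$) follow the original DDPG paper and are an assumption here, since this document does not state which noise process or which $\tau$ it uses. Networks are passed in as plain callables, all helper names are hypothetical, and the actor update is omitted because it requires gradients (TensorFlow 1.14 in this work).

```python
import random
from collections import deque
from typing import Callable, List, Optional, Tuple

# One transition (s_t, a_t, r_t, s_{t+1}) as stored in replay buffer R.
Transition = Tuple[List[float], List[float], float, List[float]]


class ReplayBuffer:
    """Fixed-capacity replay buffer R; once full, the oldest transitions are evicted."""

    def __init__(self, capacity: int) -> None:
        self._data: deque = deque(maxlen=capacity)

    def store(self, transition: Transition) -> None:
        self._data.append(transition)

    def sample(self, batch_size: int) -> List[Transition]:
        # Uniform random minibatch of N transitions, drawn without replacement.
        return random.sample(list(self._data), batch_size)

    def __len__(self) -> int:
        return len(self._data)


def td_targets(batch: List[Transition], gamma: float,
               target_actor: Callable[[List[float]], List[float]],
               target_critic: Callable[[List[float], List[float]], float]) -> List[float]:
    """y_i = r_i + gamma * Q'(s_{i+1}, mu'(s_{i+1})), computed with the target networks."""
    return [r + gamma * target_critic(s_next, target_actor(s_next))
            for (_, _, r, s_next) in batch]


def critic_loss(batch: List[Transition], targets: List[float],
                critic: Callable[[List[float], List[float]], float]) -> float:
    """L = (1/N) * sum_i (y_i - Q(s_i, a_i))^2."""
    sq_errors = [(y - critic(s, a)) ** 2 for (s, a, _, _), y in zip(batch, targets)]
    return sum(sq_errors) / len(sq_errors)


def soft_update(target: List[float], source: List[float], tau: float) -> List[float]:
    """theta' <- tau * theta + (1 - tau) * theta', element-wise over flattened weights."""
    return [tau * w + (1.0 - tau) * w_t for w, w_t in zip(source, target)]


class OUNoise:
    """Ornstein-Uhlenbeck exploration noise (assumed; as in the original DDPG paper)."""

    def __init__(self, dim: int, theta: float = 0.15, sigma: float = 0.2,
                 mu: float = 0.0, seed: Optional[int] = None) -> None:
        self.theta, self.sigma, self.mu = theta, sigma, mu
        self.state = [mu] * dim
        self._rng = random.Random(seed)

    def reset(self) -> None:
        # Called at the start of each episode ("Initialize a random process N").
        self.state = [self.mu] * len(self.state)

    def sample(self) -> List[float]:
        # Discrete OU step with unit time step: x += theta * (mu - x) + sigma * eps.
        self.state = [x + self.theta * (self.mu - x) + self.sigma * self._rng.gauss(0.0, 1.0)
                      for x in self.state]
        return list(self.state)
```

With the values from the parameter tables, a training loop would create `ReplayBuffer(capacity=2000)` and call `sample(64)` once at least 64 transitions are stored. Like Algorithm 1 itself, this sketch does not mask the bootstrap term at terminal states.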

3.3. Actor–Critic Network Structure Design

3.4. Construction of Simulation Environment

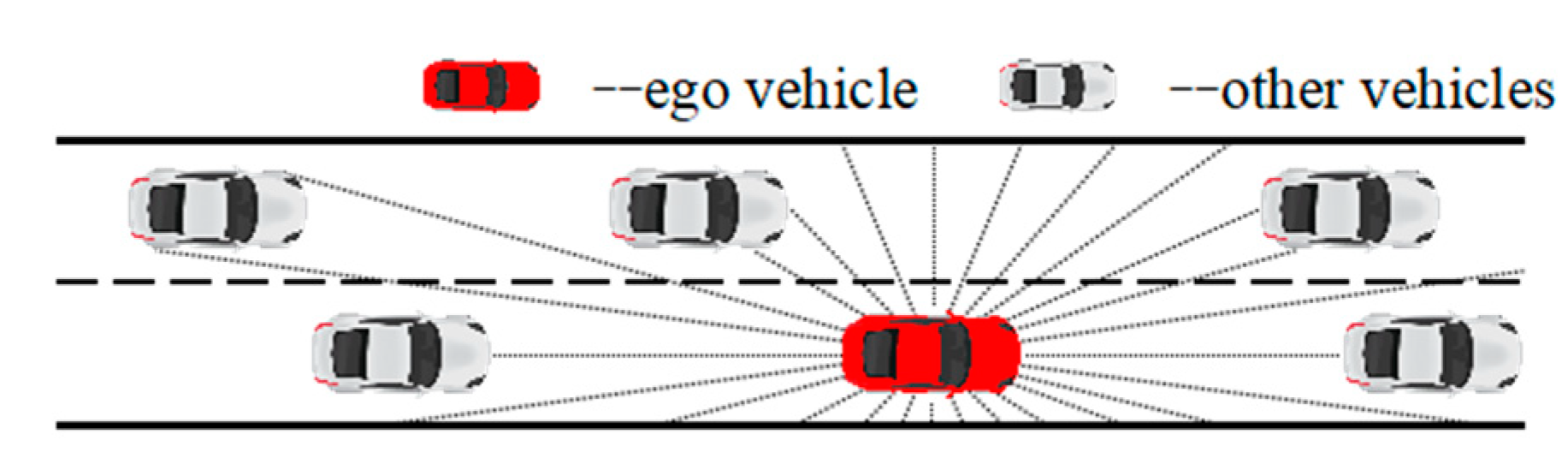

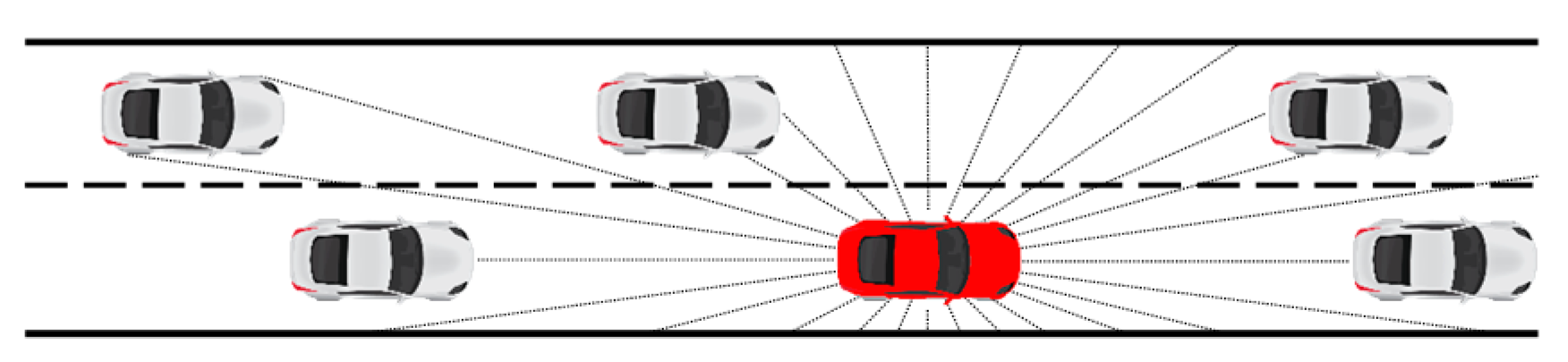

3.5. State Space Design

3.6. Action Space Design

3.7. Reward Function Design

1. Safety

2. Comfort

3. Efficiency
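The reward is organized around three criteria: safety, comfort, and efficiency. The exact terms are not given in this section, so the sketch below is only a hypothetical illustration of how such criteria are often combined into one per-step scalar: a fixed collision penalty plus weighted safety, comfort, and efficiency terms. Every term definition and every weight in the hypothetical `RewardWeights` class is an assumption, not the paper's reward function or its tuned values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardWeights:
    # Hypothetical weights chosen for illustration only; not the paper's values.
    w_safety: float = 1.0
    w_comfort: float = 0.1
    w_efficiency: float = 0.5
    collision_penalty: float = -100.0


def lane_change_reward(gap_m: float, collided: bool, lat_accel: float,
                       speed: float, target_speed: float,
                       w: RewardWeights = RewardWeights()) -> float:
    """Hypothetical per-step reward = safety + comfort + efficiency terms."""
    if collided:
        # Safety: a collision returns a large fixed penalty.
        return w.collision_penalty
    # Safety: penalty grows as the gap to the nearest vehicle shrinks (gap clipped at 1 m).
    r_safety = -w.w_safety / max(gap_m, 1.0)
    # Comfort: penalize squared lateral acceleration.
    r_comfort = -w.w_comfort * lat_accel ** 2
    # Efficiency: penalize relative deviation from the desired speed.
    r_efficiency = -w.w_efficiency * abs(speed - target_speed) / target_speed
    return r_safety + r_comfort + r_efficiency
```

Keeping every non-collision term non-positive means the agent can only raise its return by keeping larger gaps, driving smoothly, and holding the desired speed; the relative sizes of the weights decide which of the three criteria dominates, which is one natural place to encode a driving style.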

4. Materials and Methods

4.1. Simulation Environment Settings

4.2. Parameters Setting in Simplified Traffic Scene

- When the lane-change condition is not reached, the agent remains in the current lane;

- After the lane-change condition is reached, the agent changes lanes automatically;

- After the lane change is completed, the agent remains in the new lane.
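The three expected behaviours above can be read as an ordered sequence of phases that must never run backwards. The sketch below checks a recorded trace of lateral positions against that ordering. The lane width (3.5 m), the tolerance (0.2 m), and the lateral-position representation are hypothetical assumptions, and the check does not model the lane-change condition itself.

```python
from enum import Enum
from typing import Sequence


class Phase(Enum):
    KEEP_CURRENT = 0  # still in the original lane
    CHANGING = 1      # between the two lane centres
    KEEP_NEW = 2      # settled in the target lane


def classify(lateral_m: float, lane_width_m: float = 3.5, tol_m: float = 0.2) -> Phase:
    """Map a lateral position (0 = original lane centre) to a phase."""
    if abs(lateral_m) <= tol_m:
        return Phase.KEEP_CURRENT
    if abs(lateral_m - lane_width_m) <= tol_m:
        return Phase.KEEP_NEW
    return Phase.CHANGING


def follows_expected_sequence(trace: Sequence[float], lane_width_m: float = 3.5,
                              tol_m: float = 0.2) -> bool:
    """True if phases never go backwards and the trace ends in the new lane."""
    phases = [classify(y, lane_width_m, tol_m) for y in trace]
    if not phases:
        return False
    monotone = all(a.value <= b.value for a, b in zip(phases, phases[1:]))
    return monotone and phases[-1] is Phase.KEEP_NEW
```

An aborted manoeuvre (drifting back toward the original lane) or a vehicle that leaves the new lane after arriving both break monotonicity and are rejected.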

4.3. Parameters Setting in Complex Traffic Scene

- Complete the task of changing lanes in the dynamic traffic scene;

- After the lane change is completed, adjust the speed to match the traffic flow in the new lane.

5. Results

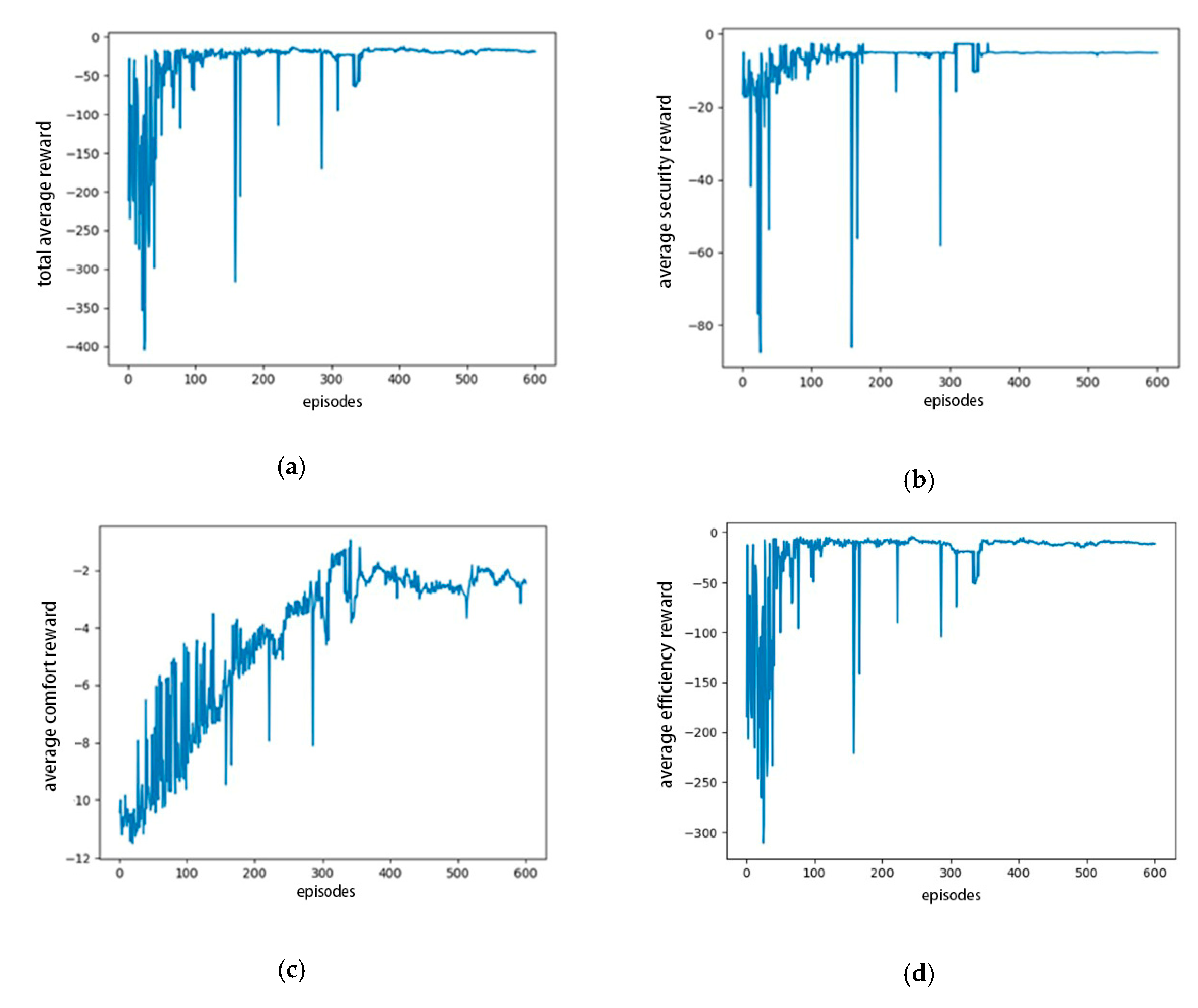

5.1. Training Results in Simplified Traffic Scenes

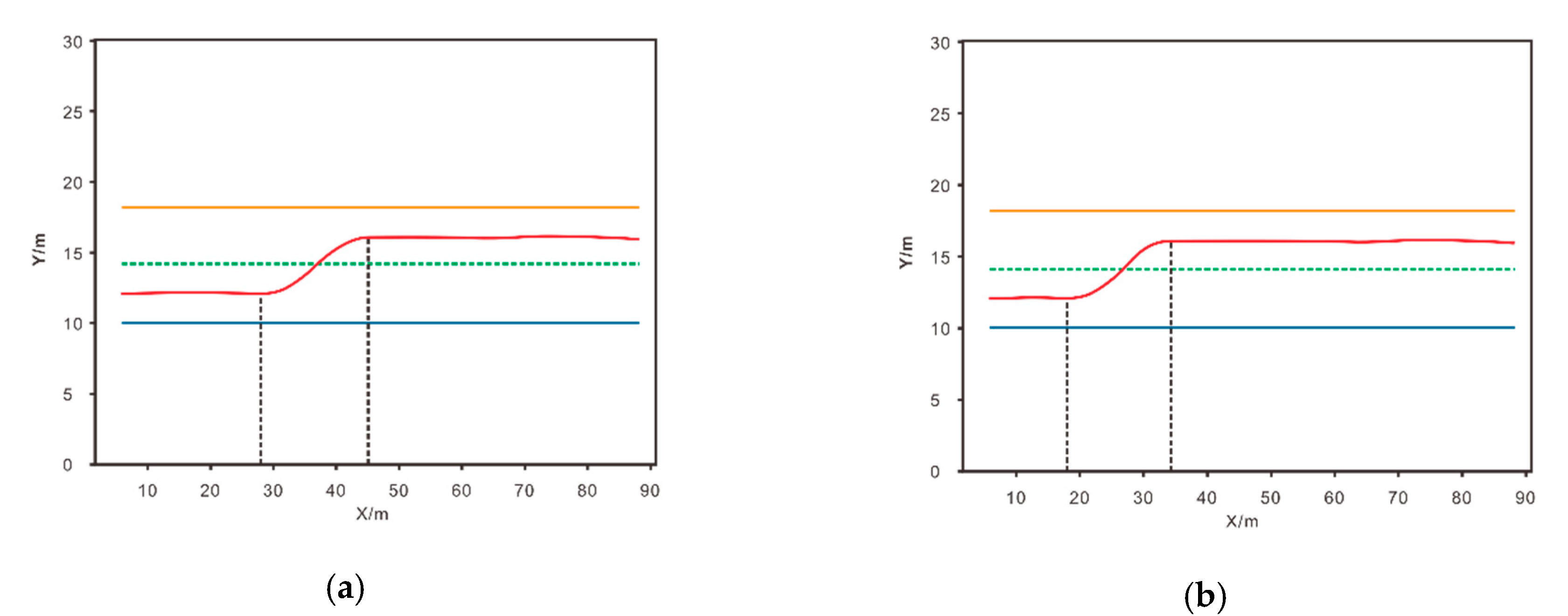

5.2. Verification Results in Simplified Traffic Scenes

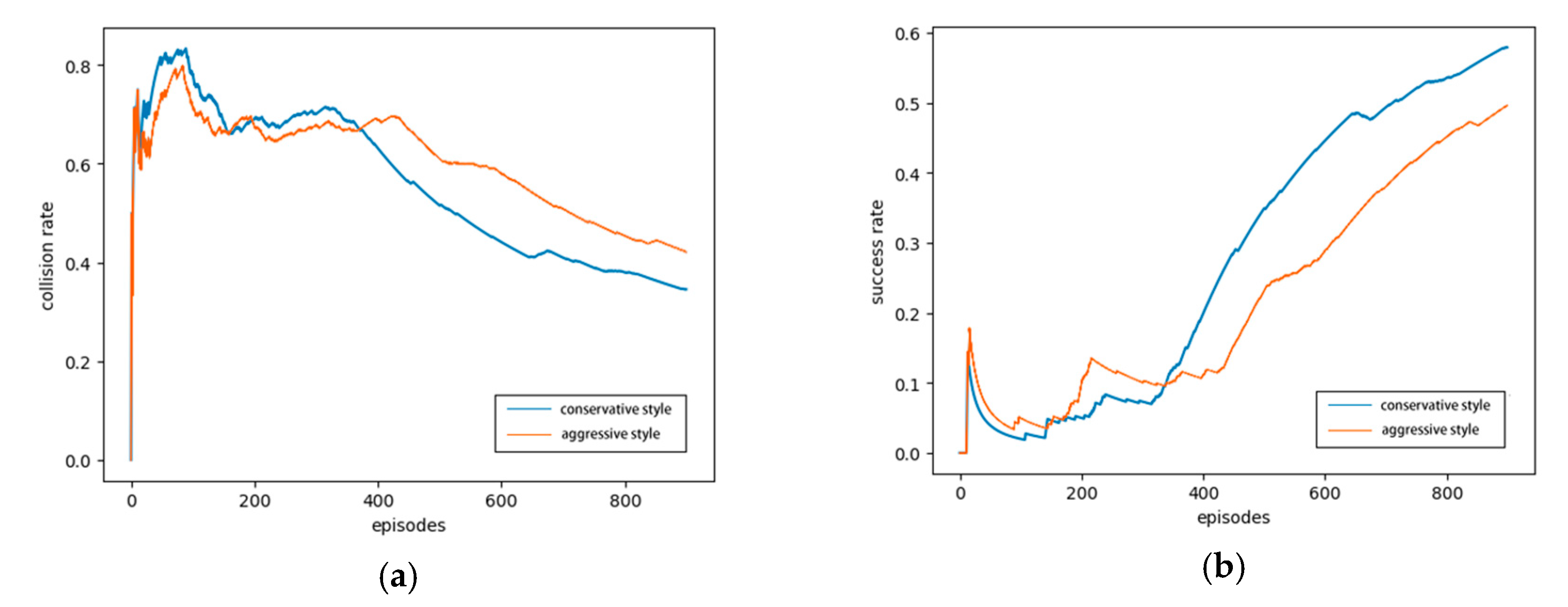

5.3. Training Results in Complex Traffic Scenes

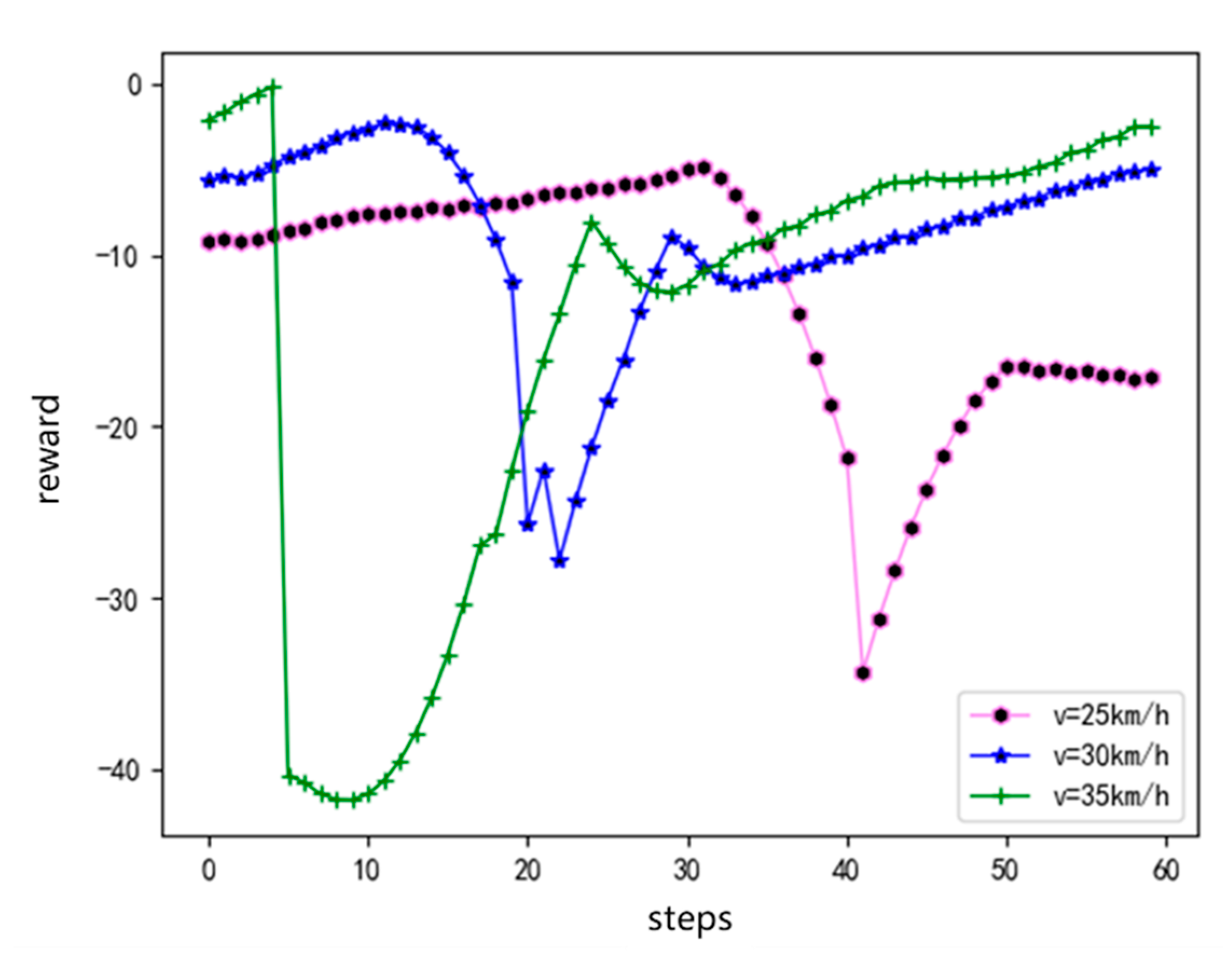

5.4. Verification Results in Complex Traffic Scenes

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| DDPG | Deep Deterministic Policy Gradient |

| MDP | Markov Decision Process |

| DQN | Deep Q Network |

| TORCS | The Open Racing Car Simulator |

| CAN | Controller Area Network |

| GPS | Global Positioning System |

| A3C | Asynchronous Advantage Actor–Critic |

| WRC6 | World Rally Championship 6 |

| DPG | Deterministic Policy Gradient |


| Name | Size | Annotation |
|---|---|---|
| Input layer | n | State space |
| Fully connected layer 1 | 150 × 1 | Number of neurons in layer 1 |
| Fully connected layer 2 | 20 × 1 | Number of neurons in layer 2 |
| Output layer | 2 × 1 | Action |

| Name | Size | Annotation |
|---|---|---|
| Input layer | n + 2 | State space + action |
| Fully connected layer 1 | 150 × 1 | Number of neurons in layer 1 |
| Fully connected layer 2 | 20 × 1 | Number of neurons in layer 2 |
| Output layer | 1 × 1 | Q-value |
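The two tables above fix only the layer widths: the actor maps an n-dimensional state through 150 and 20 units to a 2-dimensional action, and the critic takes the state concatenated with the action (n + 2 inputs) and returns a single Q-value. The sketch below builds both in plain Python and counts their parameters. The ReLU hidden activations, the tanh actor output, the uniform initialization, and the state dimension n = 10 are assumptions for illustration; none of them is stated in the tables.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weight matrix [n_out][n_in], bias [n_out])


def dense_param_count(sizes: Sequence[int]) -> int:
    """Number of weights plus biases in a stack of fully connected layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


def init_layers(sizes: Sequence[int], seed: int = 0) -> List[Layer]:
    # Small uniform initialization (assumed; the paper's initializer is not stated).
    rng = random.Random(seed)
    return [([[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)],
             [0.0] * n_out)
            for n_in, n_out in zip(sizes, sizes[1:])]


def forward(layers: List[Layer], x: List[float],
            out_act: Callable[[float], float]) -> List[float]:
    """ReLU on hidden layers (assumed), out_act on the output layer."""
    for i, (w, b) in enumerate(layers):
        z = [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(w, b)]
        is_output = i == len(layers) - 1
        x = [out_act(v) for v in z] if is_output else [max(0.0, v) for v in z]
    return x


n = 10  # hypothetical state dimension
actor = init_layers([n, 150, 20, 2])       # state -> 2-D action
critic = init_layers([n + 2, 150, 20, 1])  # state + action -> Q-value

state = [0.5] * n
action = forward(actor, state, math.tanh)                  # tanh bounds each action to (-1, 1)
q_value = forward(critic, state + action, lambda v: v)[0]  # linear output
```

For n = 10 the actor has 4712 trainable parameters and the critic 4991, so both networks are small enough to train on the CPU listed in the hardware table.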

| Hardware and Software | Model/Version |
|---|---|
| CPU | Intel Core i7-4770 |
| RAM | 16.0 GB |
| Operating system | Windows Server 2019 (64-bit) |
| Python | 3.7 |
| Deep learning framework | TensorFlow 1.14 |
| Visualization tool | Pyglet 1.2.4 |

| Parameter Name | Parameter Value | Parameter Name | Parameter Value |
|---|---|---|---|
| MAX_EPISODES | 600 | MEMORY_CAPACITY | 2000 |
| MAX_EP_STEPS | 100 | BATCH_SIZE | 64 |
| Actor-network initial learning rate | 0.001 | Critic-network initial learning rate | 0.001 |
| γ | 0.9 | | −1 |
| | −0.1 | | 0.1 |
| | −0.4 | | −200 |
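The discount factor γ = 0.9 sets how far ahead the agent effectively plans. A common rule of thumb puts the effective horizon at 1/(1 − γ), about 10 steps here, short compared with MAX_EP_STEPS = 100; a reward 10 steps ahead is weighted by only about 0.35. The helper below is a minimal sketch of the discounted return; its name is hypothetical.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """G_0 = sum_k gamma^k * r_k, accumulated backwards from the last step."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


GAMMA = 0.9                               # value from the table
effective_horizon = 1.0 / (1.0 - GAMMA)   # about 10 steps
weight_at_step_10 = GAMMA ** 10           # about 0.35
```

A constant reward of 1 over a full 100-step episode yields a return just under 10, essentially the same as an infinitely long episode, which illustrates that late-episode rewards barely influence early decisions at this γ.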

| Parameter Name | Parameter Value | Parameter Name | Parameter Value |
|---|---|---|---|
| MAX_EPISODES | 900 | MEMORY_CAPACITY | 2000 |
| MAX_EP_STEPS | 60 | BATCH_SIZE | 64 |
| Actor-network initial learning rate | 0.001 | Critic-network initial learning rate | 0.001 |
| γ | 0.9 | | −0.4 |
| | −10 | | 4.17 m/s |
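The two parameter tables can be compared by computing a few derived quantities. The sketch below encodes the shared hyperparameters of both scenes (the class and field names are hypothetical) and derives the upper bound on environment steps and how many maximum-length episodes it takes to fill the replay buffer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    max_episodes: int
    max_ep_steps: int
    memory_capacity: int
    batch_size: int
    gamma: float

    @property
    def max_env_steps(self) -> int:
        # Upper bound only: fewer steps are taken if episodes terminate early.
        return self.max_episodes * self.max_ep_steps

    @property
    def full_episodes_to_fill_buffer(self) -> float:
        # Maximum-length episodes needed before the replay buffer is full.
        return self.memory_capacity / self.max_ep_steps


SIMPLIFIED = TrainingConfig(600, 100, 2000, 64, 0.9)
COMPLEX = TrainingConfig(900, 60, 2000, 64, 0.9)


def mps_to_kmh(v_mps: float) -> float:
    return v_mps * 3.6
```

The complex scene therefore trains on more but shorter episodes, with a slightly smaller upper bound on environment steps (54,000 versus 60,000), and its buffer fills after about 33 full episodes instead of 20. The 4.17 m/s entry corresponds to roughly 15 km/h.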

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hu, H.; Lu, Z.; Wang, Q.; Zheng, C. End-to-End Automated Lane-Change Maneuvering Considering Driving Style Using a Deep Deterministic Policy Gradient Algorithm. Sensors 2020, 20, 5443. https://doi.org/10.3390/s20185443
