SIDE—A Unified Framework for Simultaneously Dehazing and Enhancement of Nighttime Hazy Images

Abstract

1. Introduction

2. Related Work

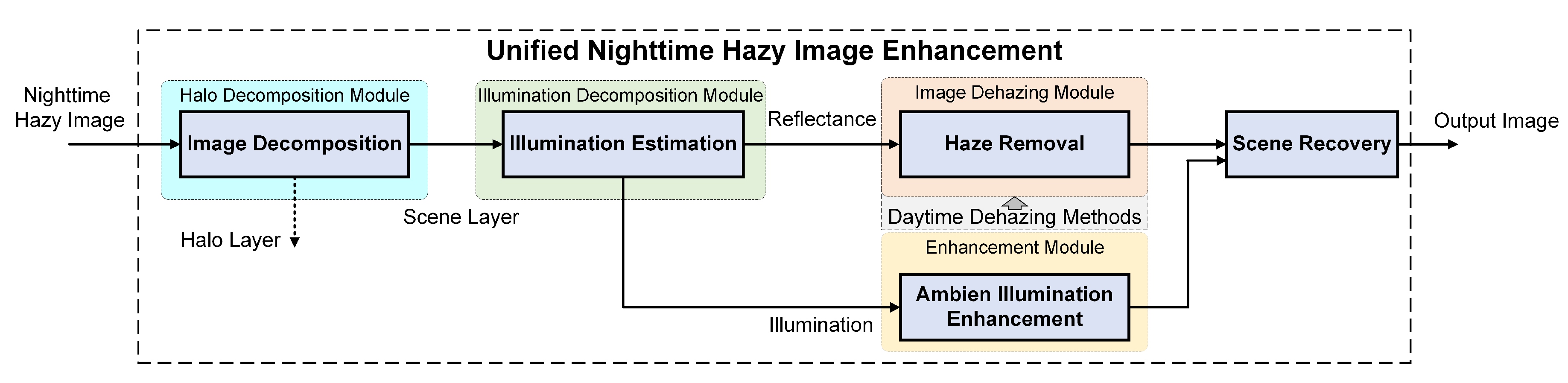

3. Methodology of the Proposed SIDE

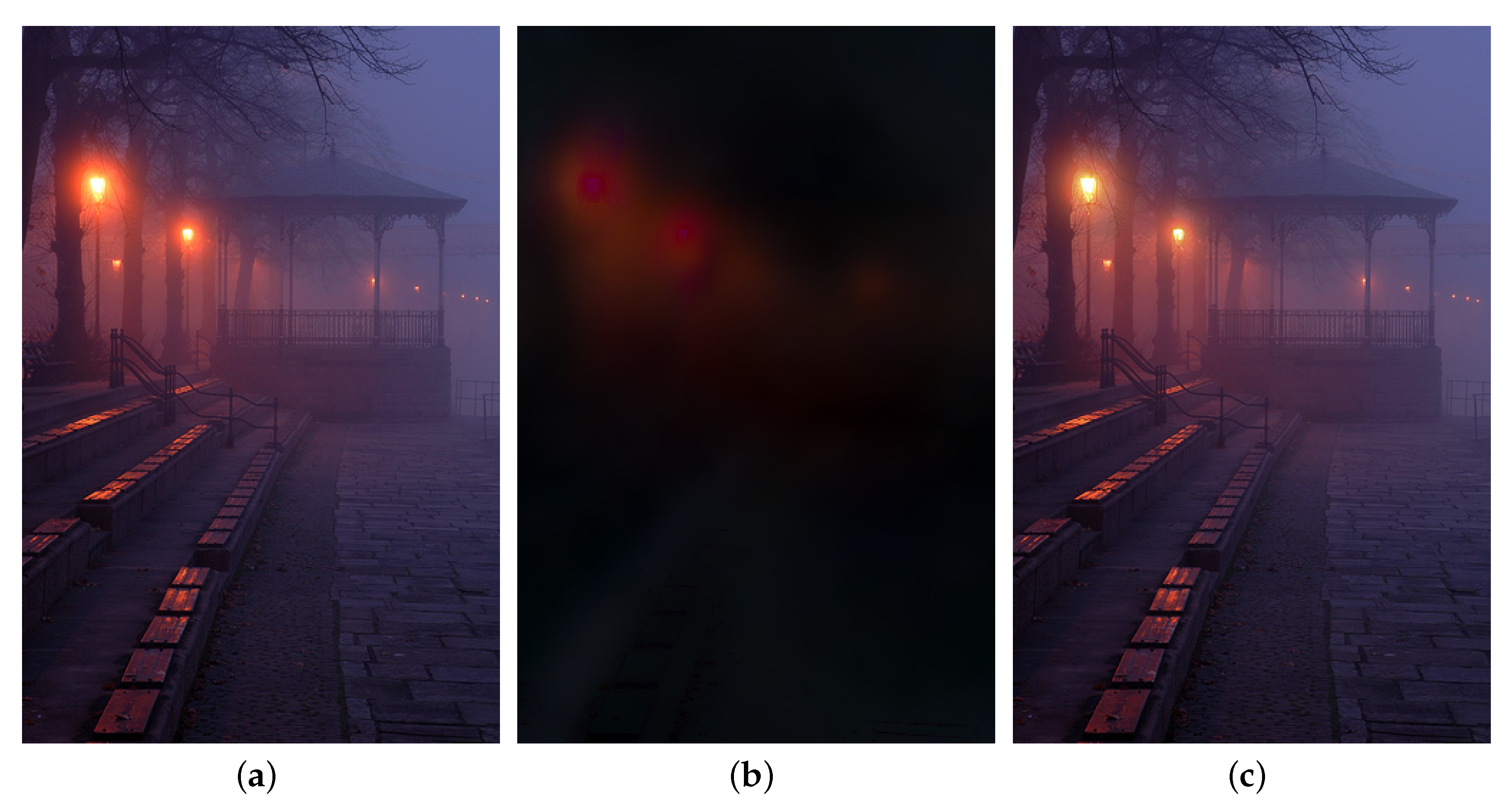

3.1. Halo Decomposition Module

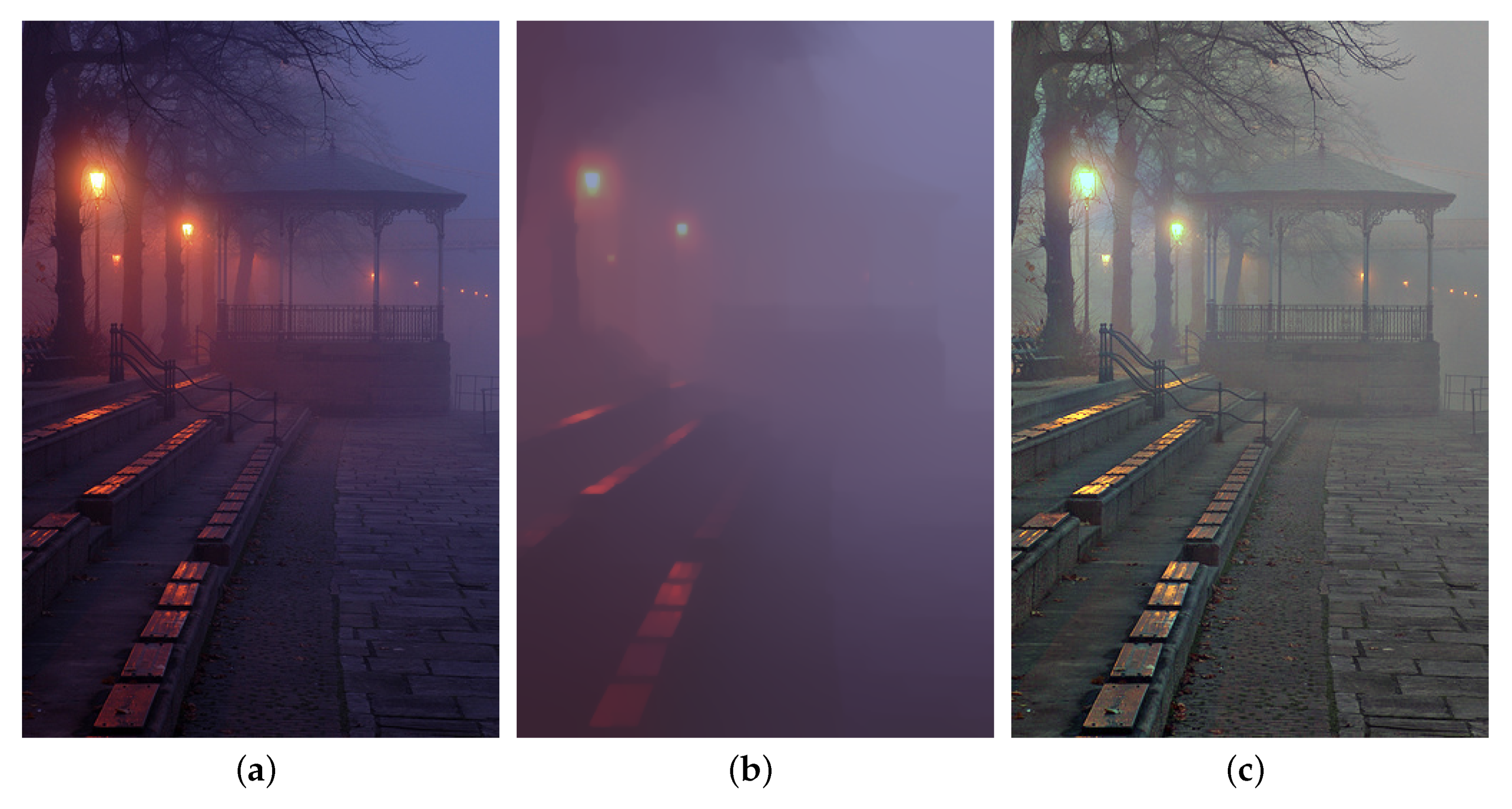

3.2. Illumination Decomposition Module

3.3. Scene Recovery Module

4. Experimental Results and Analysis

4.1. Results on Hazy Scene Estimation

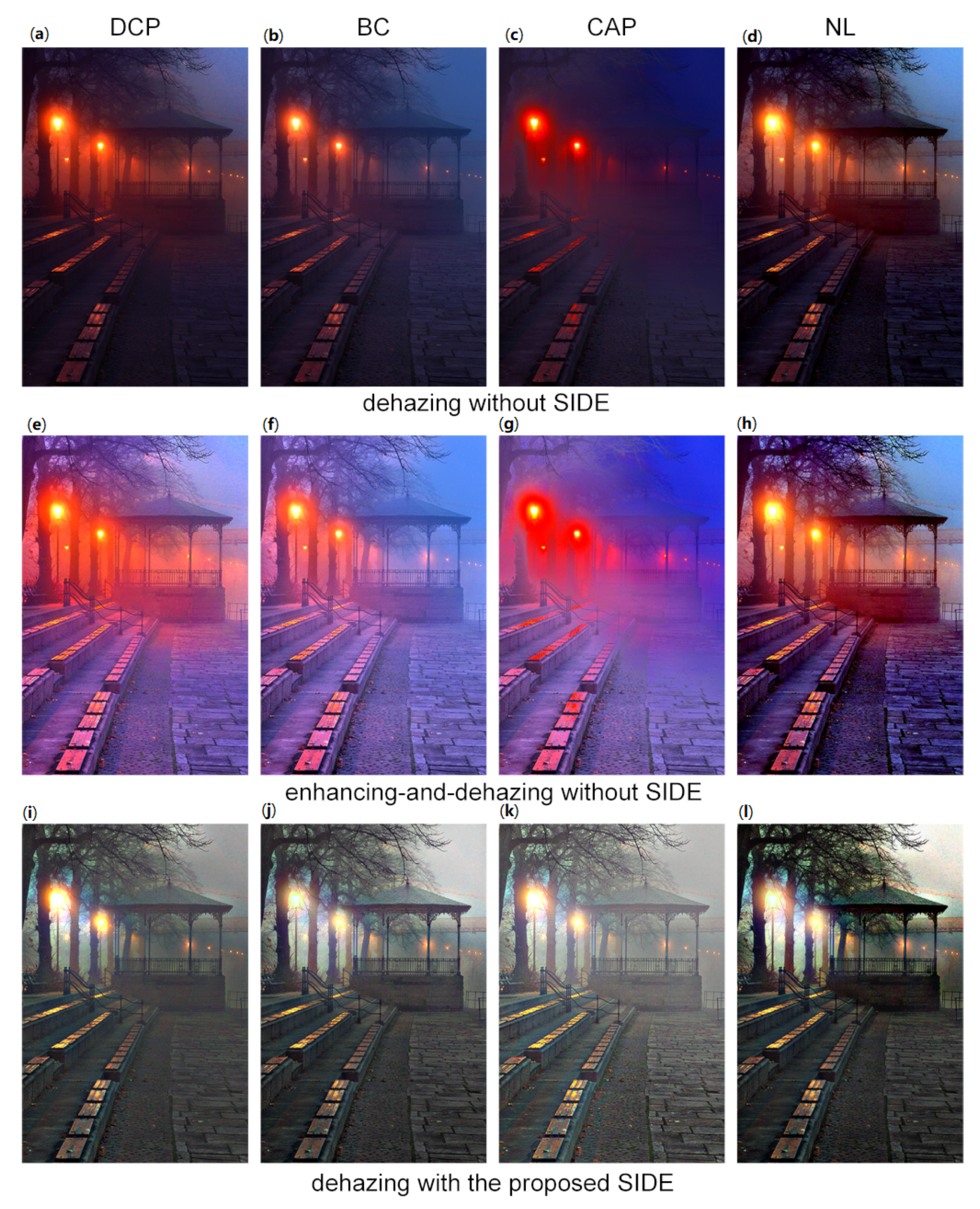

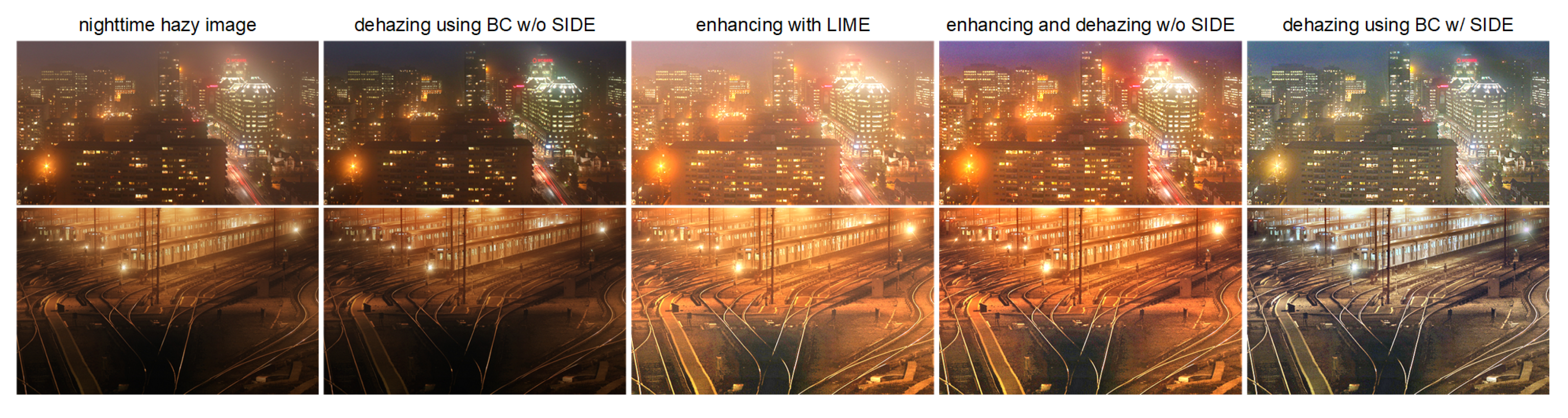

4.2. Verification on Daytime Dehazing Methods

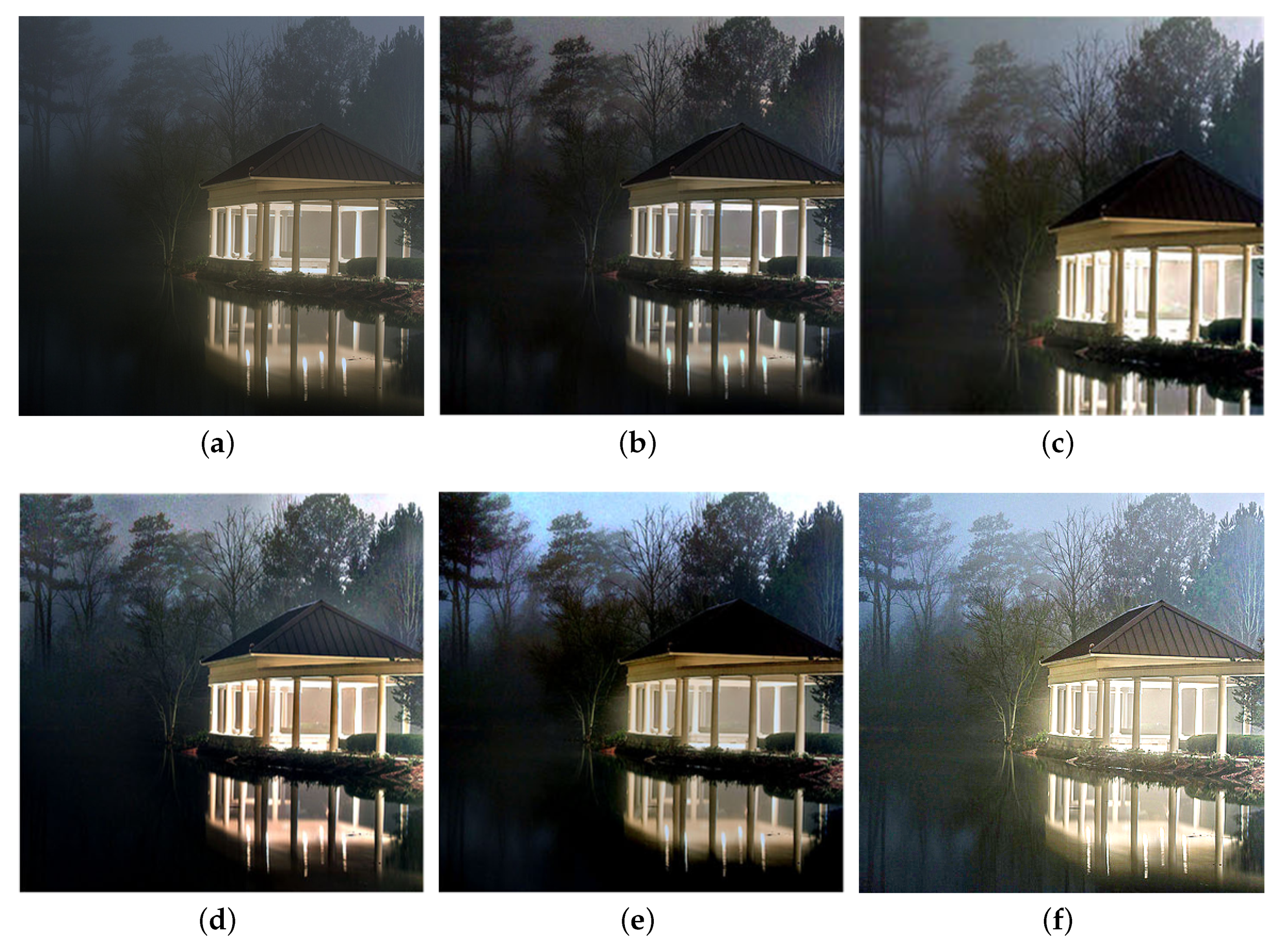

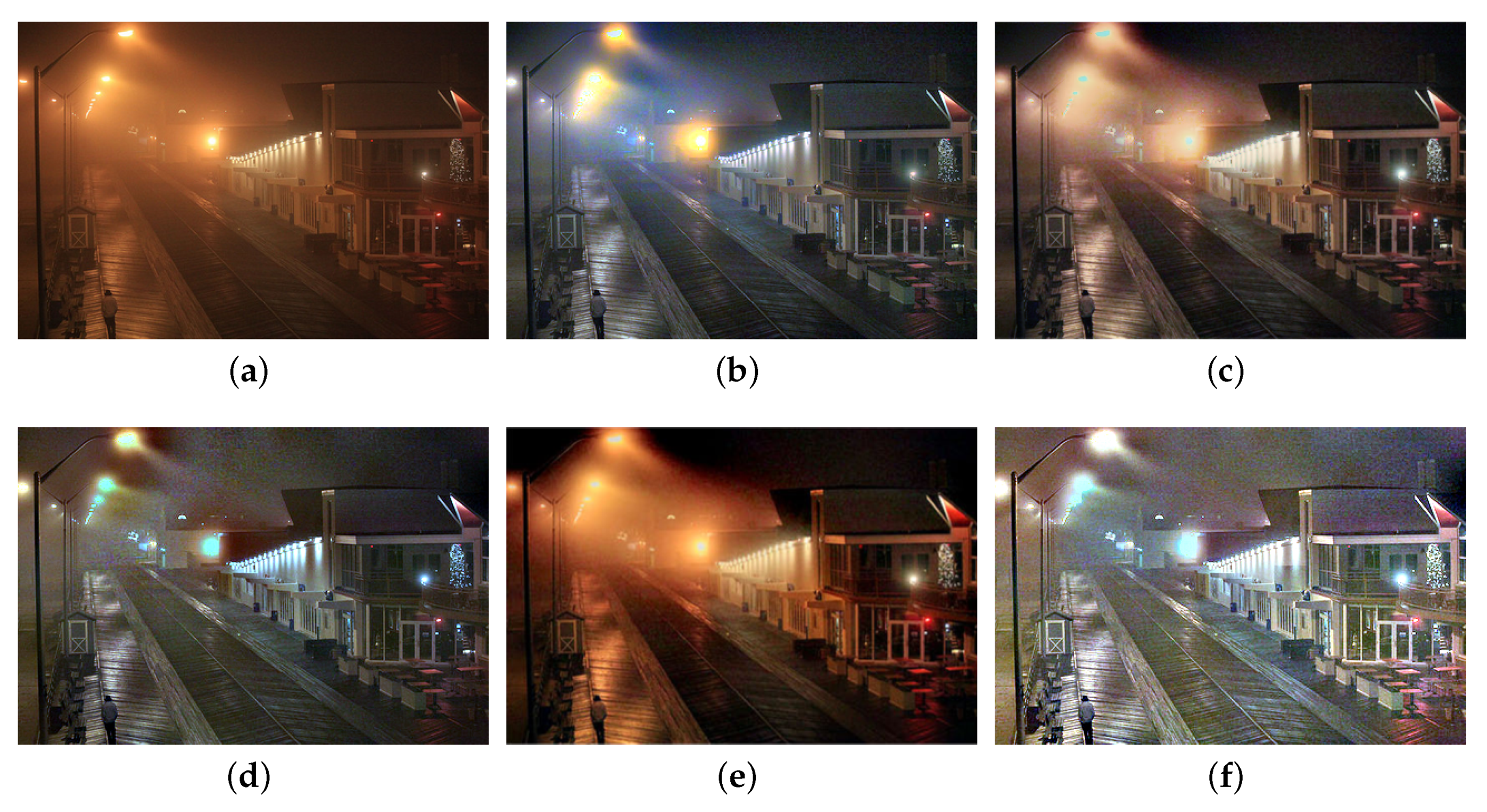

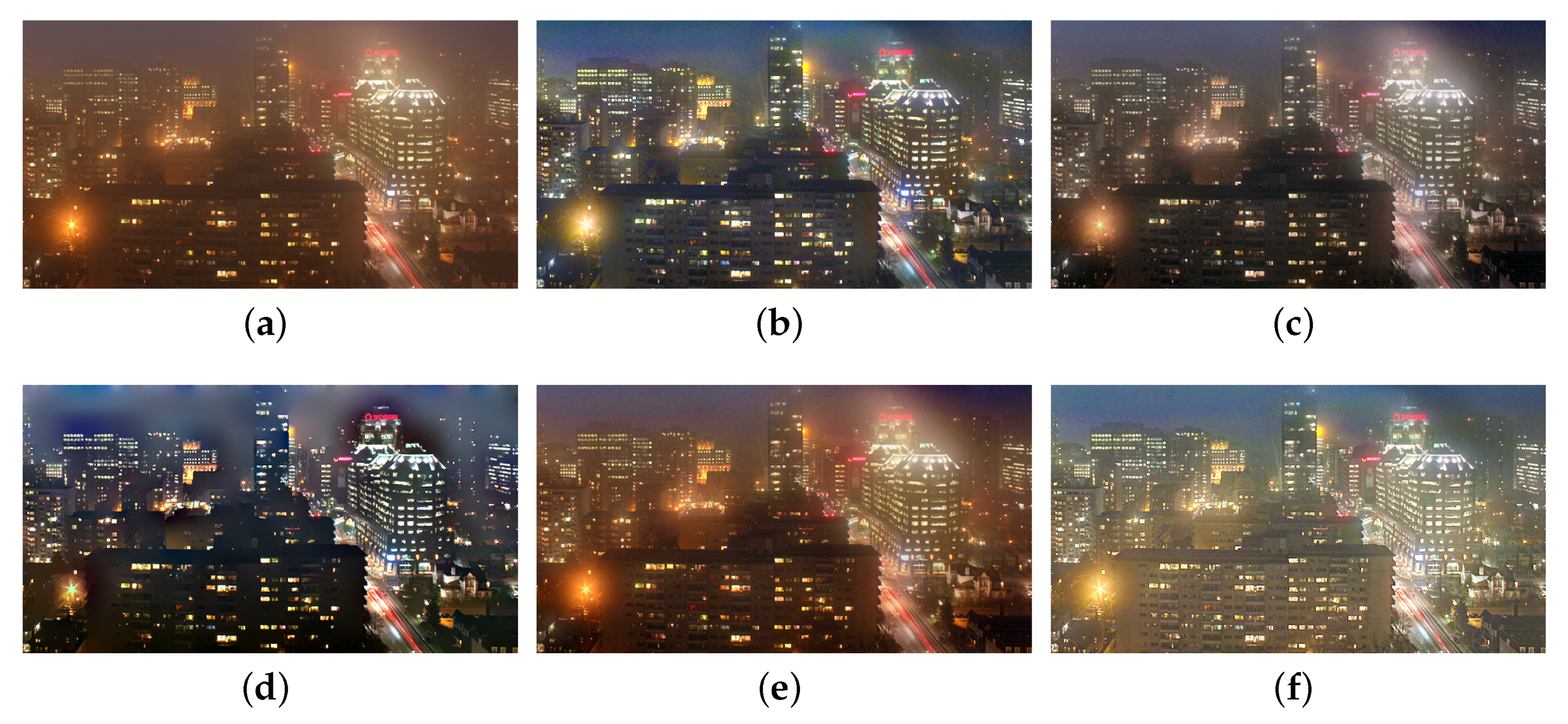

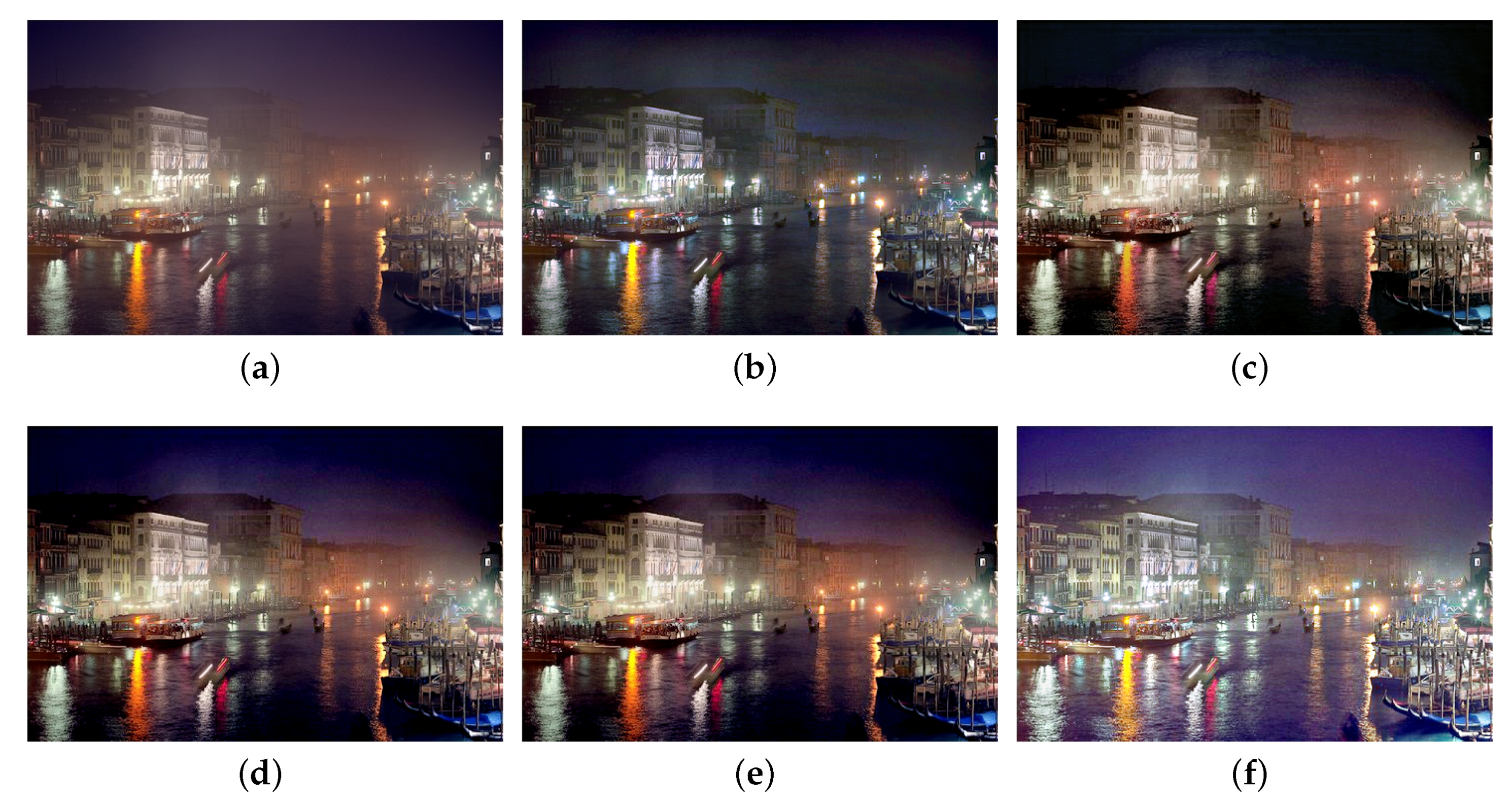

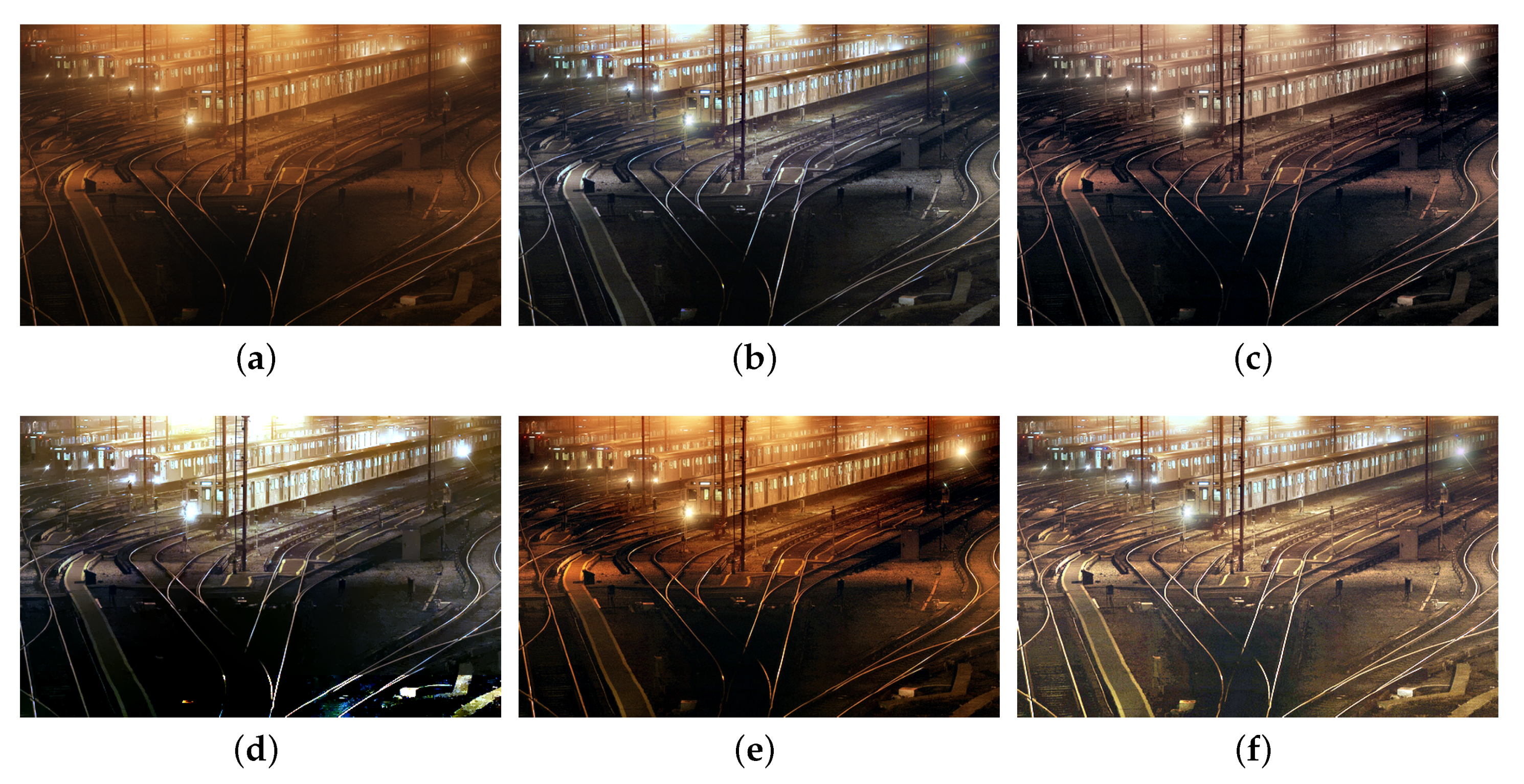

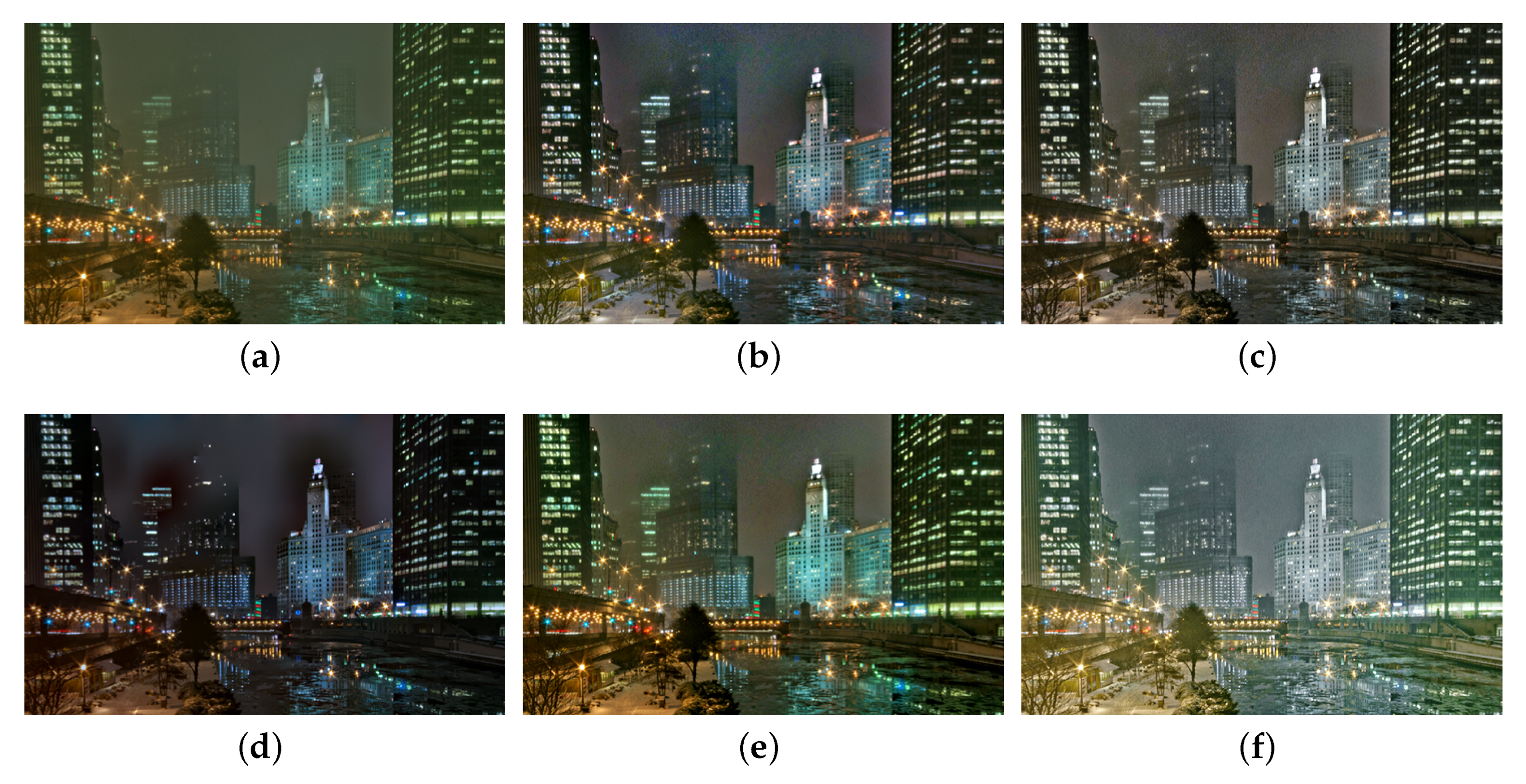

4.3. Qualitative Comparisons on Real Nighttime Hazy Images

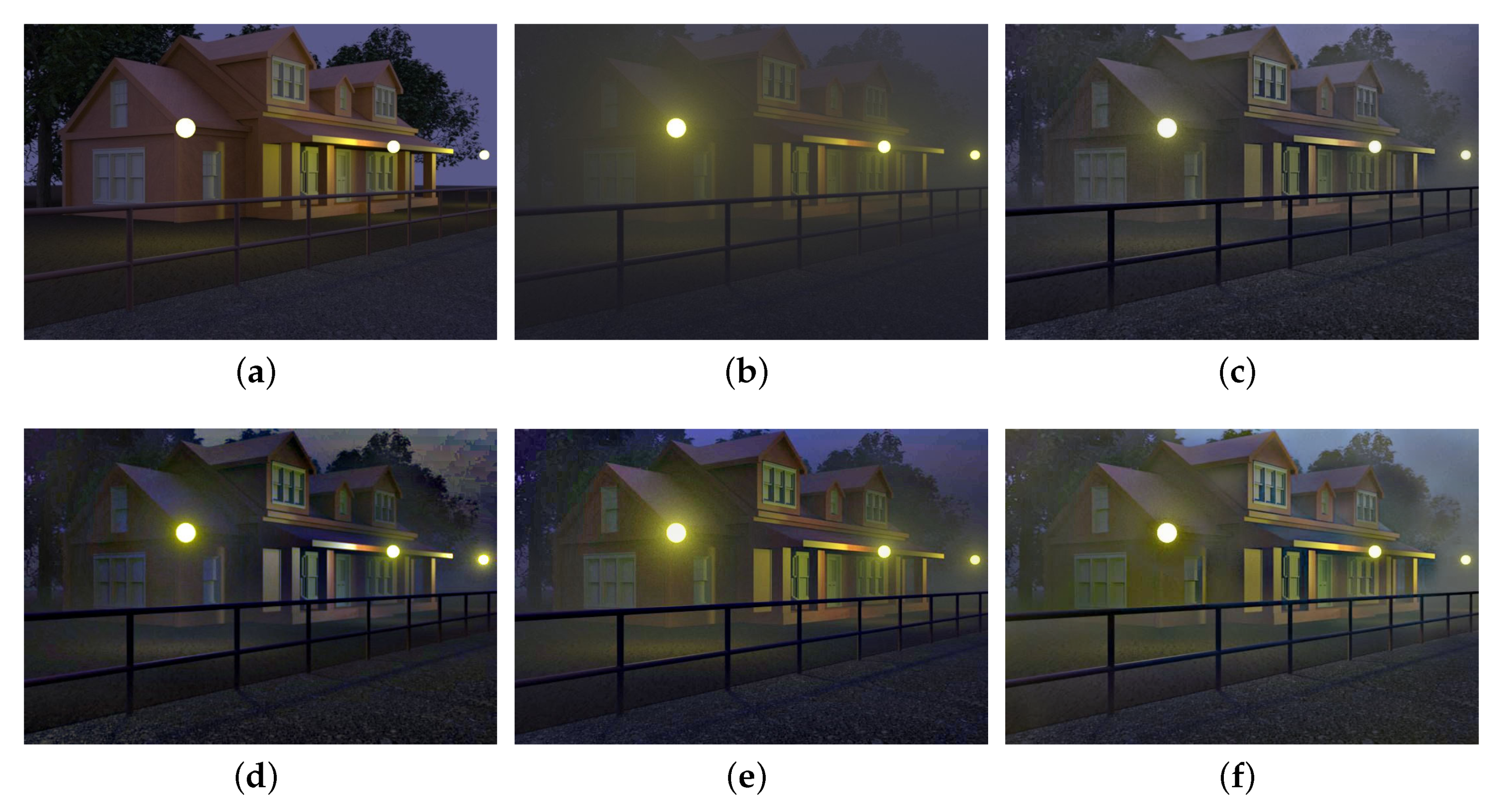

4.4. Comparisons on Synthesized Nighttime Hazy Images

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- [1] Fattal, R. Single image dehazing. ACM Trans. Graph. 2008, 27, 1–9.

- [2] He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1956–1963.

- [3] Ancuti, C.; Ancuti, C.; Hermans, C.; Bekaert, P. A Fast Semi-inverse Approach to Detect and Remove the Haze from a Single Image. In Proceedings of the Asian Conference on Computer Vision, Queenstown, New Zealand, 8–12 November 2011; pp. 501–514.

- [4] Meng, G.; Wang, Y.; Duan, J.; Xiang, S.; Pan, C. Efficient Image Dehazing with Boundary Constraint and Contextual Regularization. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 3–6 December 2013; pp. 617–624.

- [5] Fattal, R. Dehazing Using Color-Lines. ACM Trans. Graph. 2014, 34, 1–14.

- [6] Zhu, Q.; Mai, J.; Shao, L. A Fast Single Image Haze Removal Algorithm Using Color Attenuation Prior. IEEE Trans. Image Process. 2015, 24, 3522–3533.

- [7] Berman, D.; Treibitz, T.; Avidan, S. Non-local Image Dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1674–1682.

- [8] He, R.; Huang, X. Single Image Dehazing Using Non-local Total Generalized Variation. In Proceedings of the IEEE International Conference on Industrial Engineering and Applications, Xi’an, China, 19–21 June 2019; pp. 19–24.

- [9] Yu, T.; Song, K.; Miao, P.; Yang, G.; Yang, H.; Chen, C. Nighttime Single Image Dehazing via Pixel-Wise Alpha Blending. IEEE Access 2019, 7, 114619–114630.

- [10] Pei, S.; Lee, T. Nighttime haze removal using color transfer pre-processing and dark channel prior. In Proceedings of the IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012; pp. 957–960.

- [11] Zhang, J.; Cao, Y.; Fang, S.; Kang, Y.; Chen, C.W. Fast haze removal for nighttime image using maximum reflectance prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7418–7426.

- [12] Zhang, J.; Cao, Y.; Wang, Z. Nighttime haze removal based on a new imaging model. In Proceedings of the IEEE International Conference on Image Processing, Paris, France, 27–30 October 2014; pp. 4557–4561.

- [13] Li, Y.; Tan, R.T.; Brown, M.S. Nighttime haze removal with glow and multiple light colors. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 226–234.

- [14] Ancuti, C.; Ancuti, C.; De Vleeschouwer, C.; Bovik, A. Night-time dehazing by fusion. In Proceedings of the IEEE International Conference on Image Processing, Phoenix, AZ, USA, 25–28 September 2016; pp. 2256–2260.

- [15] Ancuti, C.; Ancuti, C.O.; De Vleeschouwer, C.; Bovik, A.C. Day and night-time dehazing by local airlight estimation. IEEE Trans. Image Process. 2020, 29, 6264–6275.

- [16] Park, S.; Yu, S.; Moon, B.; Ko, S.; Paik, J. Low-Light Image Enhancement using Variational Optimization-based Retinex Model. IEEE Trans. Consum. Electron. 2017, 63, 178–184.

- [17] Guo, X.; Li, Y.; Ling, H. LIME: Low-Light Image Enhancement via Illumination Map Estimation. IEEE Trans. Image Process. 2017, 26, 982–993.

- [18] Li, M.; Liu, J.; Yang, W.; Sun, X.; Guo, Z. Structure-Revealing Low-Light Image Enhancement via Robust Retinex Model. IEEE Trans. Image Process. 2018, 27, 2828–2841.

- [19] He, R.; Guan, M.; Wen, C. SCENS: Simultaneous Contrast Enhancement and Noise Suppression for Low-light Images. IEEE Trans. Ind. Electron. 2020, in press.

- [20] Tan, R. Visibility in bad weather from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- [21] Joshi, N.; Cohen, M. Seeing Mt. Rainier: Lucky imaging for multi-image denoising, sharpening, and haze removal. In Proceedings of the IEEE International Conference on Computational Photography, Cambridge, MA, USA, 29–30 March 2010; pp. 1–8.

- [22] Matlin, E.; Milanfar, P. Removal of haze and noise from a single image. In Proceedings of the IS&T/SPIE Electronic Imaging, Burlingame, CA, USA, 22–26 January 2012; pp. 177–188.

- [23] Huang, S.; Chen, B.; Wang, W. Visibility restoration of single hazy images captured in real-world weather conditions. IEEE Trans. Circuits Syst. Video Technol. 2014, 24, 1814–1824.

- [24] Li, Z.; Zheng, J. Edge-preserving decomposition-based single image haze removal. IEEE Trans. Image Process. 2015, 24, 5432–5441.

- [25] Li, Z.; Zheng, J.; Zhu, Z.; Yao, W.; Wu, S. Weighted guided image filtering. IEEE Trans. Image Process. 2015, 24, 120–129.

- [26] Li, Z.; Zheng, J. Single image de-hazing using globally guided image filtering. IEEE Trans. Image Process. 2018, 27, 442–450.

- [27] Liu, Y.; Shang, J.; Pan, L.; Wang, A.; Wang, M. A Unified Variational Model for Single Image Dehazing. IEEE Access 2019, 7, 15722–15736.

- [28] Tang, K.; Yang, J.; Wang, J. Investigating Haze-Relevant Features in a Learning Framework for Image Dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2995–3002.

- [29] Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. DehazeNet: An End-to-End System for Single Image Haze Removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

- [30] Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M. Single image dehazing via multi-scale convolutional neural networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 154–169.

- [31] Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. AOD-Net: All-in-one dehazing network. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4770–4778.

- [32] Li, R.; Pan, J.; Li, Z.; Tang, J. Single image dehazing via conditional generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8202–8211.

- [33] Li, J.; Li, G.; Fan, H. Image dehazing using residual-based deep CNN. IEEE Access 2018, 6, 26831–26842.

- [34] Zhang, H.; Patel, V. Densely connected pyramid dehazing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3194–3203.

- [35] Ren, W.; Pan, J.; Zhang, H.; Cao, X.; Yang, M.H. Single image dehazing via multi-scale convolutional neural networks with holistic edges. Int. J. Comput. Vis. 2020, 128, 240–259.

- [36] Zhang, X.; Wang, T.; Wang, J.; Tang, G.; Zhao, L. Pyramid Channel-based Feature Attention Network for image dehazing. Comput. Vis. Image Underst. 2020, 197, 103003.

- [37] Zhu, H.; Peng, X.; Chandrasekhar, V.; Li, L.; Lim, J.H. DehazeGAN: When Image Dehazing Meets Differential Programming. In Proceedings of the International Joint Conferences on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 1234–1240.

- [38] Qu, Y.; Chen, Y.; Huang, J.; Xie, Y. Enhanced pix2pix dehazing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8160–8168.

- [39] Dudhane, A.; Singh Aulakh, H.; Murala, S. RI-GAN: An end-to-end network for single image haze removal. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019; pp. 2014–2023.

- [40] Dong, Y.; Liu, Y.; Zhang, H.; Chen, S.; Qiao, Y. FD-GAN: Generative Adversarial Networks with Fusion-Discriminator for Single Image Dehazing. In AAAI; AAAI Press: Palo Alto, CA, USA, 2020; pp. 10729–10736.

- [41] Lou, W.; Li, Y.; Yang, G.; Chen, C.; Yang, H.; Yu, T. Integrating Haze Density Features for Fast Nighttime Image Dehazing. IEEE Access 2020, 8, 3318–3330.

- [42] Kuanar, S.; Rao, K.; Mahapatra, D.; Bilas, M. Night time haze and glow removal using deep dilated convolutional network. arXiv 2019, arXiv:1902.00855.

- [43] Rahman, Z.; Jobson, D.; Woodell, G. Retinex Processing for Automatic Image Enhancement. J. Electron. Imaging 2004, 13, 100–111.

- [44] Jobson, D.; Rahman, Z.; Woodell, G. Properties and Performance of a Center/Surround Retinex. IEEE Trans. Image Process. 1997, 6, 451–462.

- [45] Jobson, D.J.; Rahman, Z.; Woodell, G. A Multiscale Retinex for Bridging the Gap Between Color Images and the Human Observation of Scenes. IEEE Trans. Image Process. 1997, 6, 965–976.

- [46] Kimmel, R.; Elad, M.; Shaked, D.; Keshet, R.; Sobel, I. A Variational Framework for Retinex. Int. J. Comput. Vis. 2003, 52, 7–23.

- [47] Ng, M.; Wang, W. A Total Variation Model for Retinex. SIAM J. Imaging Sci. 2011, 4, 345–365.

- [48] Ma, W.; Morel, J.M.; Osher, S.; Chien, A. An ℓ1-based Variational Model for Retinex Theory and Its Application to Medical Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 20–25 June 2011; pp. 153–160.

- [49] Wang, S.; Zheng, J.; Hu, H.M.; Li, B. Naturalness Preserved Enhancement Algorithm for Non-Uniform Illumination Images. IEEE Trans. Image Process. 2013, 22, 3538–3548.

- [50] Wang, W.; He, C. A Variational Model with Barrier Functionals for Retinex. SIAM J. Imaging Sci. 2015, 8, 1955–1980.

- [51] Fu, X.; Liao, Y.; Zeng, D.; Huang, Y.; Zhang, X.; Ding, X. A Probabilistic Method for Image Enhancement With Simultaneous Illumination and Reflectance Estimation. IEEE Trans. Image Process. 2015, 24, 4965–4977.

- [52] Fu, X.; Zeng, D.; Huang, Y.; Zhang, X.; Ding, X. A Weighted Variational Model for Simultaneous Reflectance and Illumination Estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2782–2790.

- [53] Lore, K.G.; Akintayo, A.; Sarkar, S. LLNet: A Deep Autoencoder Approach to Natural Low-Light Image Enhancement. Pattern Recognit. 2017, 61, 650–662.

- [54] Chen, C.; Chen, Q.; Xu, J.; Koltun, V. Learning to See in the Dark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3291–3300.

- [55] Park, S.; Yu, S.; Kim, M.; Park, K.; Paik, J. Dual Autoencoder Network for Retinex-based Low-Light Image Enhancement. IEEE Access 2018, 6, 22084–22093.

- [56] Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep Retinex Decomposition for Low-Light Enhancement. In Proceedings of the British Machine Vision Conference, Newcastle, UK, 3–6 September 2018; pp. 1–12.

- [57] Cai, J.; Gu, S.; Zhang, L. Learning a Deep Single Image Contrast Enhancer from Multi-Exposure Images. IEEE Trans. Image Process. 2018, 27, 2049–2062.

- [58] Zhang, Y.; Zhang, J.; Guo, X. Kindling the Darkness: A Practical Low-light Image Enhancer. In Proceedings of the ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 1632–1640.

- [59] Goldstein, T.; Osher, S. The Split Bregman Method for ℓ1-Regularized Problems. SIAM J. Imaging Sci. 2009, 2, 323–343.

- [60] Wang, Y.; Yin, W.; Zeng, J. Global Convergence of ADMM in Nonconvex Nonsmooth Optimization. J. Sci. Comput. 2019, 78, 29–63.

- [61] Land, E. The Retinex Theory of Color Vision. Sci. Am. 1977, 237, 108–129.

- [62] Li, Y.; You, S.; Brown, M.S.; Tan, R.T. Haze visibility enhancement: A survey and quantitative benchmarking. Comput. Vis. Image Underst. 2017, 165, 1–16.

- [63] Jenatton, R.; Mairal, J.; Obozinski, G.; Bach, F. Proximal Methods for Sparse Hierarchical Dictionary Learning. In Proceedings of the International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010; pp. 487–494.

- [64] Gu, K.; Lin, W.; Zhai, G.; Yang, X.; Zhang, W.; Chen, C.W. No-Reference Quality Metric of Contrast-Distorted Images Based on Information Maximization. IEEE Trans. Cybern. 2016, 47, 4559–4565.

| Image | Metric | MRP [11] | HDF [41] | GMLC [13] | PAB [9] | SIDE |
|---|---|---|---|---|---|---|
| Pavilion | e↑ | 0.08 | 0.13 | 0.15 | 0.09 | 0.17 |
| | ↓ | 0.01 | 0.01 | 0 | 0.03 | 0 |
| | ↑ | 4.35 | 2.97 | 5.01 | 4.67 | 5.08 |
| | NIQMC↑ | 4.67 | 4.82 | 4.93 | 5.01 | 5.16 |
| Lake | e↑ | 0.03 | 0.08 | 0.13 | 0.19 | 0.23 |
| | ↓ | 0.01 | 0.02 | 0 | 0 | 0 |
| | ↑ | 2.33 | 3.54 | 5.28 | 5.66 | 7.21 |
| | NIQMC↑ | 4.91 | 4.88 | 5.14 | 4.99 | 5.37 |
| Street | e↑ | 0.20 | 0.17 | 0.11 | 0.09 | 0.22 |
| | ↓ | 0.03 | 0.18 | 0.05 | 0.24 | 0.01 |
| | ↑ | 4.55 | 3.82 | 4.39 | 1.61 | 4.74 |
| | NIQMC↑ | 4.89 | 4.28 | 5.32 | 4.47 | 5.15 |
| Cityscape | e↑ | 0.11 | 0.13 | 0.02 | 0.08 | 0.19 |
| | ↓ | 0.03 | 0.44 | 0.25 | 0.19 | 0.05 |
| | ↑ | 3.69 | 3.17 | 2.01 | 1.87 | 3.96 |
| | NIQMC↑ | 3.98 | 4.96 | 5.11 | 4.20 | 5.03 |
| Church | e↑ | 0.11 | 0.10 | 0.12 | 0.18 | 0.29 |
| | ↓ | 0.07 | 0.06 | 0.08 | 0.03 | 0.04 |
| | ↑ | 4.77 | 3.83 | 2.98 | 3.95 | 5.10 |
| | NIQMC↑ | 3.97 | 4.98 | 5.05 | 4.19 | 5.61 |
| Riverside | e↑ | 0.06 | 0.09 | 0.10 | 0.14 | 0.25 |
| | ↓ | 0.09 | 0.10 | 0.04 | 0.05 | 0.03 |
| | ↑ | 2.85 | 3.68 | 4.17 | 4.23 | 6.51 |
| | NIQMC↑ | 4.71 | 4.39 | 4.97 | 4.85 | 5.43 |
| Railway | e↑ | 0.18 | 0.13 | 0.15 | 0.07 | 0.27 |
| | ↓ | 0.08 | 0.12 | 0.04 | 0.16 | 0.02 |
| | ↑ | 2.68 | 2.74 | 2.30 | 2.87 | 4.98 |
| | NIQMC↑ | 4.25 | 4.19 | 5.03 | 5.24 | 5.48 |
| Tomb | e↑ | 0.26 | 0.18 | 0.09 | 0.31 | 0.45 |
| | ↓ | 0.03 | 0.07 | 0.16 | 0.02 | 0 |
| | ↑ | 5.13 | 4.25 | 3.97 | 5.72 | 7.11 |
| | NIQMC↑ | 5.04 | 4.61 | 4.02 | 5.26 | 6.07 |
| Building | e↑ | 0.11 | 0.18 | 0.09 | 0.21 | 0.30 |
| | ↓ | 0.04 | 0.06 | 0.29 | 0.03 | 0.01 |
| | ↑ | 3.79 | 4.05 | 2.88 | 3.81 | 4.74 |
| | NIQMC↑ | 4.56 | 4.80 | 2.73 | 5.76 | 6.20 |
| Average | e↑ | 0.12 | 0.16 | 0.11 | 0.05 | 0.29 |
| | ↓ | 0.09 | 0.13 | 0.08 | 0.19 | 0.04 |
| | ↑ | 3.29 | 3.45 | 2.16 | 2.37 | 5.62 |
| | NIQMC↑ | 4.80 | 4.93 | 5.15 | 5.04 | 5.86 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

He, R.; Guo, X.; Shi, Z. SIDE—A Unified Framework for Simultaneously Dehazing and Enhancement of Nighttime Hazy Images. Sensors 2020, 20, 5300. https://doi.org/10.3390/s20185300
