1. Introduction

Vision-based positioning systems [1,2,3,4,5,6] have been explored as an alternative for the autonomous navigation of spacecraft and in other robotics applications. The Smartphone Video Guidance Sensor (SVGS) is a photogrammetric embedded sensor developed at NASA Marshall Space Flight Center using an Android-based smartphone [7,8] to support proximity operations and formation flight maneuvers in small satellites. SVGS estimates the position and orientation of a moving target relative to a coordinate system attached to the camera by capturing an image of a set of retro-reflective or illuminated targets mounted on the target in a known geometric pattern. The image is processed using a modification of algorithms originally developed for the Advanced Video Guidance Sensor (AVGS) [9,10,11], which successfully flew on the Demonstration for Autonomous Rendezvous Technology (DART) and Orbital Express missions [12,13]. SVGS can be deployed on a variety of robotic platforms using a camera and CPU already available on the platform, and it is part of a development effort at NASA Marshall Space Flight Center toward a low-cost, low-mass system that enables navigation at proximity distances between small satellites, supporting formation flight and autonomous rendezvous and capture (AR&C) maneuvers. AVGS is capable of estimating the full six-degrees-of-freedom relative position and attitude vector in the near range, and further developments are being investigated to expand its capabilities to long-range proximity operations [9,14]. SVGS is similar to AVGS in that both use photogrammetric techniques to calculate the 6 × 1 position and attitude vector of the target. However, the hardware and deployment scenarios of AVGS differ significantly from those of SVGS [7,8,9,10,11].

SVGS has not yet been deployed in space missions, but NASA foresees a significant role for SVGS as a leading technology in future rendezvous, docking and proximity operations; a demonstration mission for SVGS on board the International Space Station is currently ongoing. Furthermore, SVGS could be used in a variety of robotic applications that require proximity operations, landing, the coordination of agents or docking, and it can therefore be of use to a larger community. "Near range" in SVGS can be anywhere from a few meters up to 200 m depending on the application: the target dimensions need to be adjusted to fit the range required by a given application. This paper presents a performance assessment of SVGS as a position and attitude sensor for proximity operations. The error statistics of SVGS enable its incorporation (by synthesis of a Kalman estimator) into advanced motion control systems for navigation and guidance.
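The link between range and target size can be sketched with an ideal pinhole model: two targets a distance b apart, viewed face-on at range R by a camera whose focal length is f_px pixels, appear roughly f_px·b/R pixels apart in the image. The sketch below assumes this pinhole model, a hypothetical focal length of 2500 px and a hypothetical minimum blob spacing of 20 px; none of these values comes from the SVGS tests.

```python
def pixel_separation(baseline_m: float, range_m: float, focal_px: float) -> float:
    """Image separation (pixels) of two targets `baseline_m` apart, seen face-on
    at `range_m` by an ideal pinhole camera (assumed model)."""
    return focal_px * baseline_m / range_m


def required_baseline(min_sep_px: float, range_m: float, focal_px: float) -> float:
    """Smallest target spacing (m) that still gives `min_sep_px` pixels at `range_m`."""
    return min_sep_px * range_m / focal_px


if __name__ == "__main__":
    FOCAL_PX = 2500.0    # hypothetical focal length in pixels
    MIN_SEP_PX = 20.0    # hypothetical spacing needed to keep blobs distinct
    for r in (5.0, 50.0, 200.0):
        b = required_baseline(MIN_SEP_PX, r, FOCAL_PX)
        print(f"range {r:6.1f} m -> target spacing >= {b:.3f} m")
```

Because the required spacing grows linearly with range, a pattern sized for a few meters has to be scaled up by roughly the same factor as the range to remain resolvable near 200 m.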

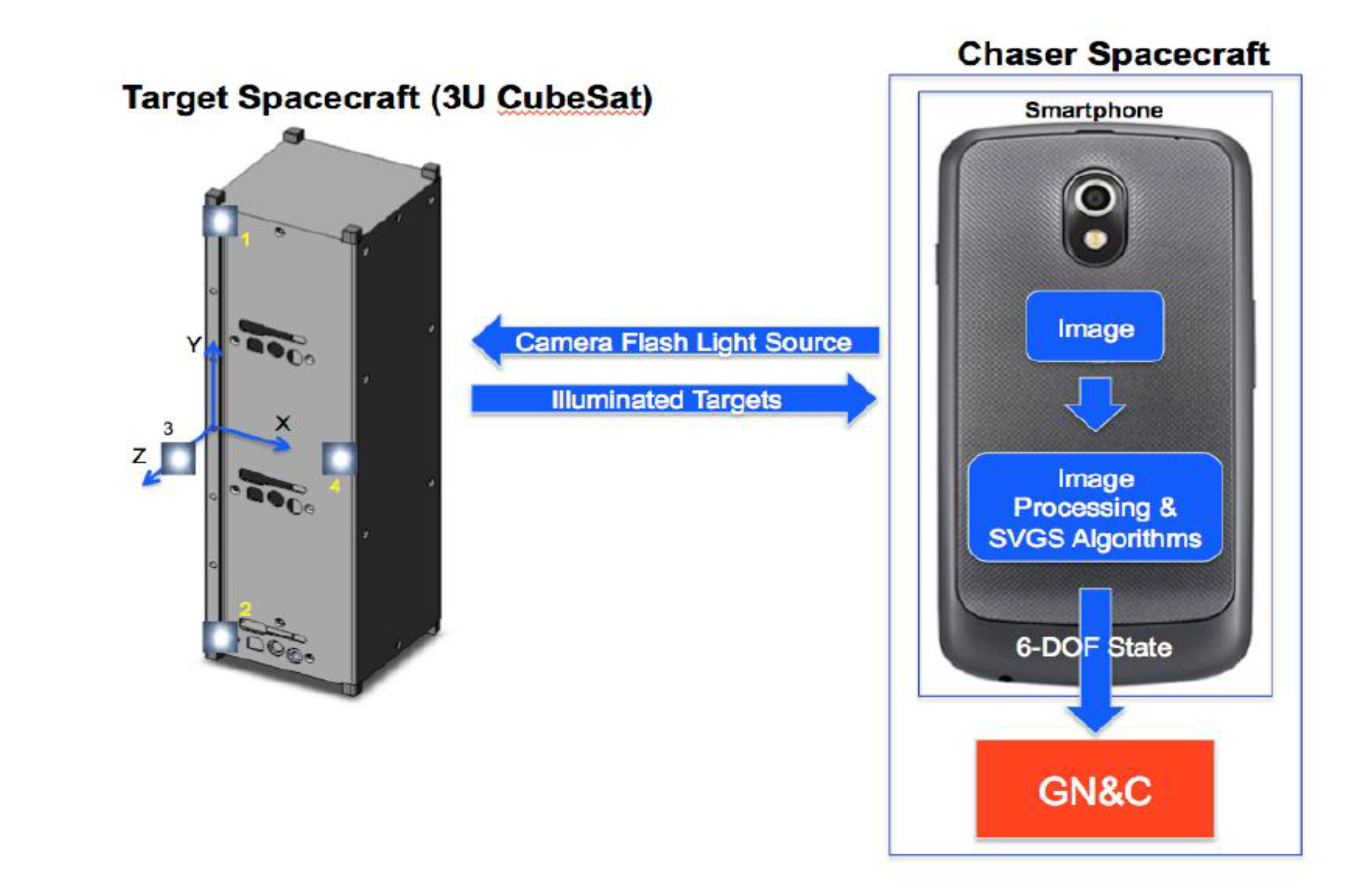

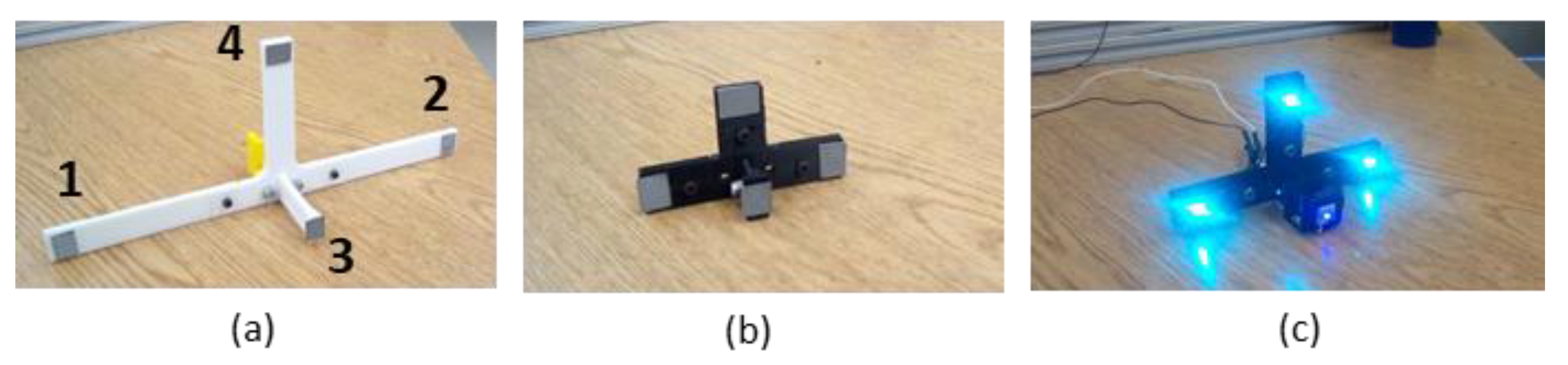

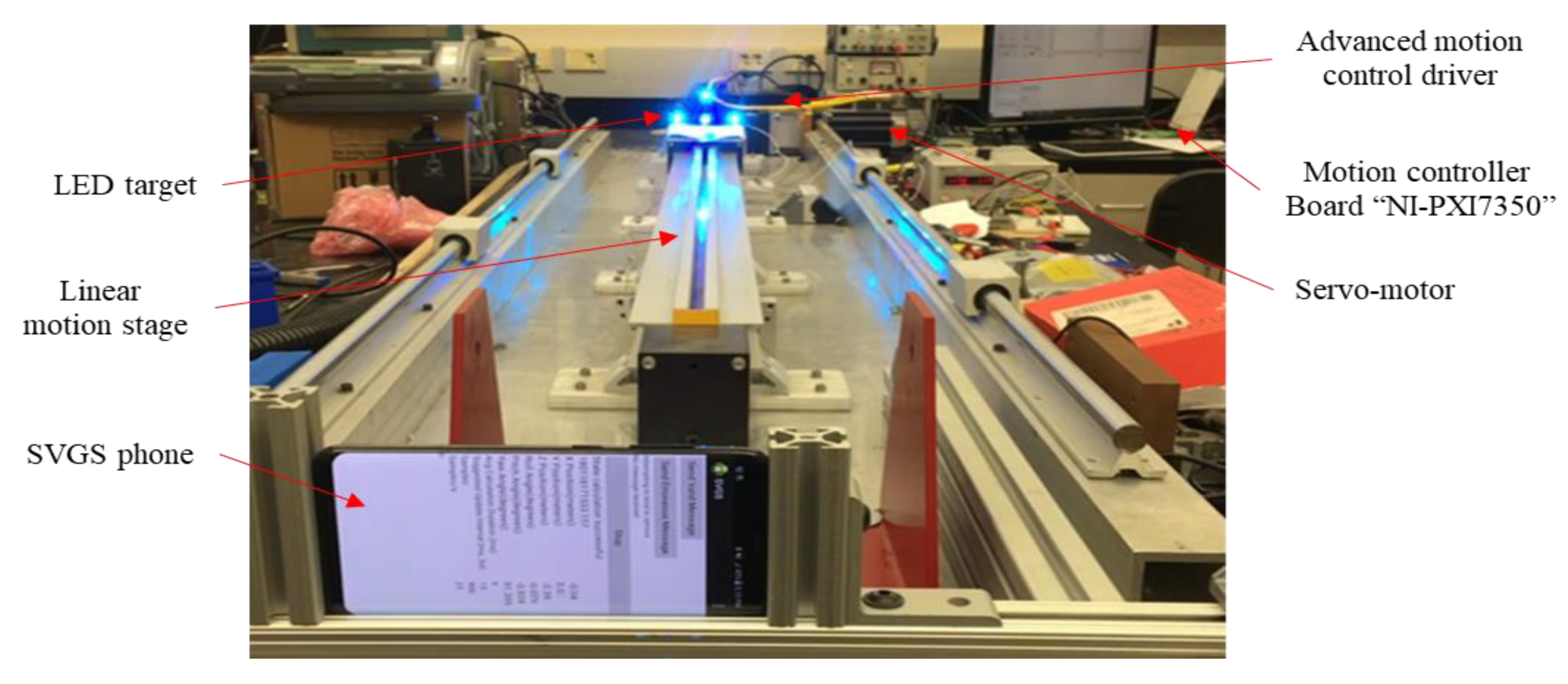

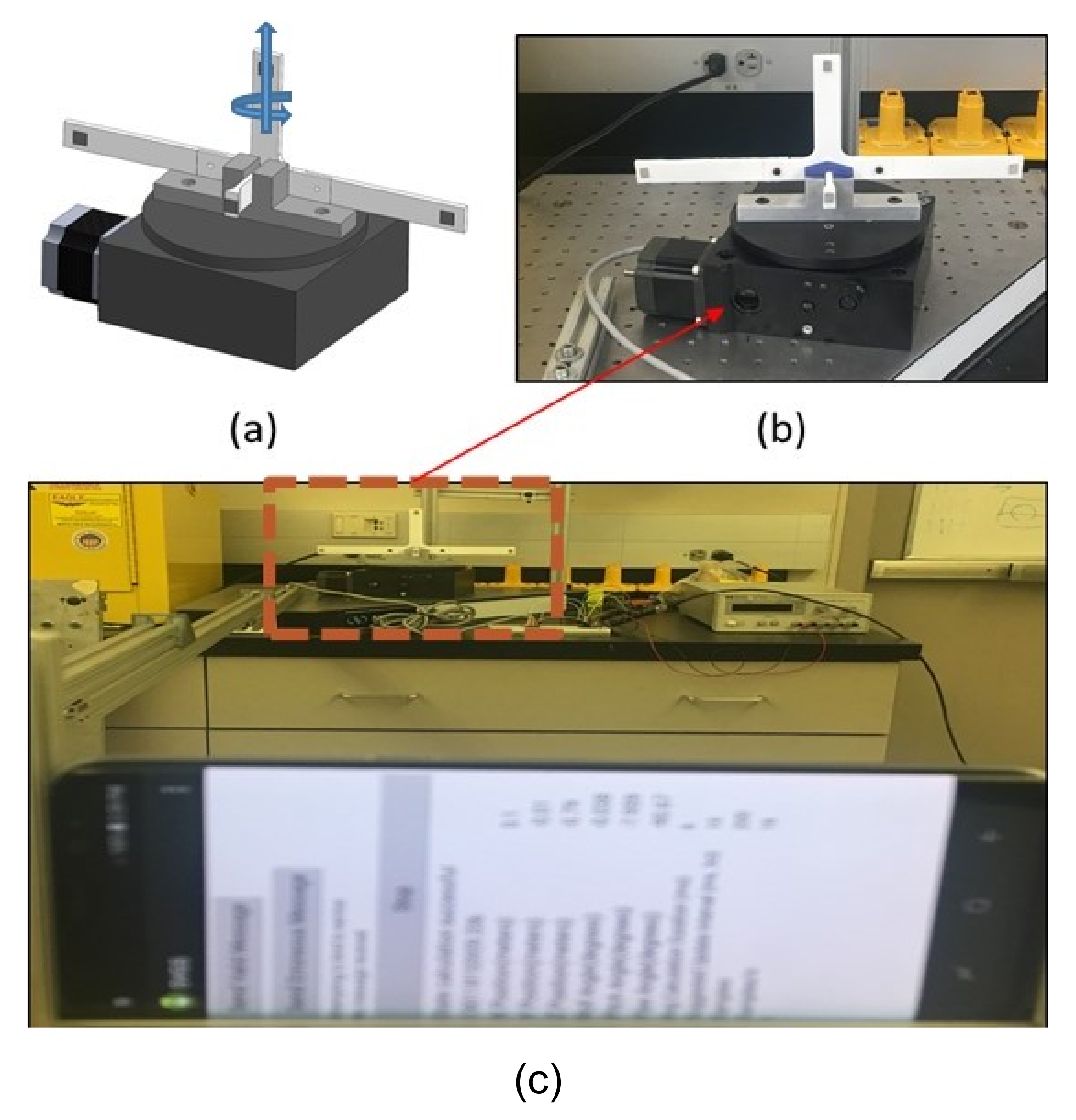

Figure 1 shows the operational concept of SVGS. Estimating the target's position and attitude relative to the camera's coordinate system starts with capturing an image of a set of illuminated targets. The six-degrees-of-freedom position and attitude vector is estimated using geometric photogrammetry techniques [7,8,15], and all image processing and state estimation are performed onboard the smartphone, alleviating the computational load on the motion control computer.

The basic rendition of SVGS uses a smartphone camera (Figure 1) to identify a known pattern of retro-reflective or illuminated targets placed on a target spacecraft. An image of the illuminated targets is captured, and the six-degrees-of-freedom (DOF) position and attitude state is extracted from the two-dimensional image using simple geometric techniques. While AVGS used a laser as its illumination source and a high-quality CMOS sensor to capture the images, SVGS [7,8] uses the camera and flash of a generic Android smartphone. SVGS is a low-mass, low-volume, low-cost implementation of AVGS, designed for CubeSats and other small satellites to enable autonomous rendezvous and capture (AR&C) and formation flying.

In SVGS, the complete state calculation, including image capture, image processing and relative state derivation, is performed on the Android device, which outputs the 6-DOF relative state. The computed state can then be used by other applications or passed from the phone to other avionics onboard a small satellite as input data for the guidance, navigation and control functions.

Target Pattern and Coordinate System. The target pattern used for SVGS is a modified version of the AVGS pattern. A target example is shown on a 3U CubeSat mockup in

Figure 1. Three illuminated targets are mounted coplanar (at the edges of the long face of the CubeSat), while a fourth is mounted on a boom. By placing the fourth illuminated target out of plane relative to the others, the accuracy of the relative attitude calculations is increased. The SVGS target spacecraft coordinate system is defined as follows: (i) the origin of the coordinate system is at the base of the boom, (ii) the

y-axis points along the direction from target 2 to target 1, (iii) the

z-axis points from the origin towards target 3, and (iv) the

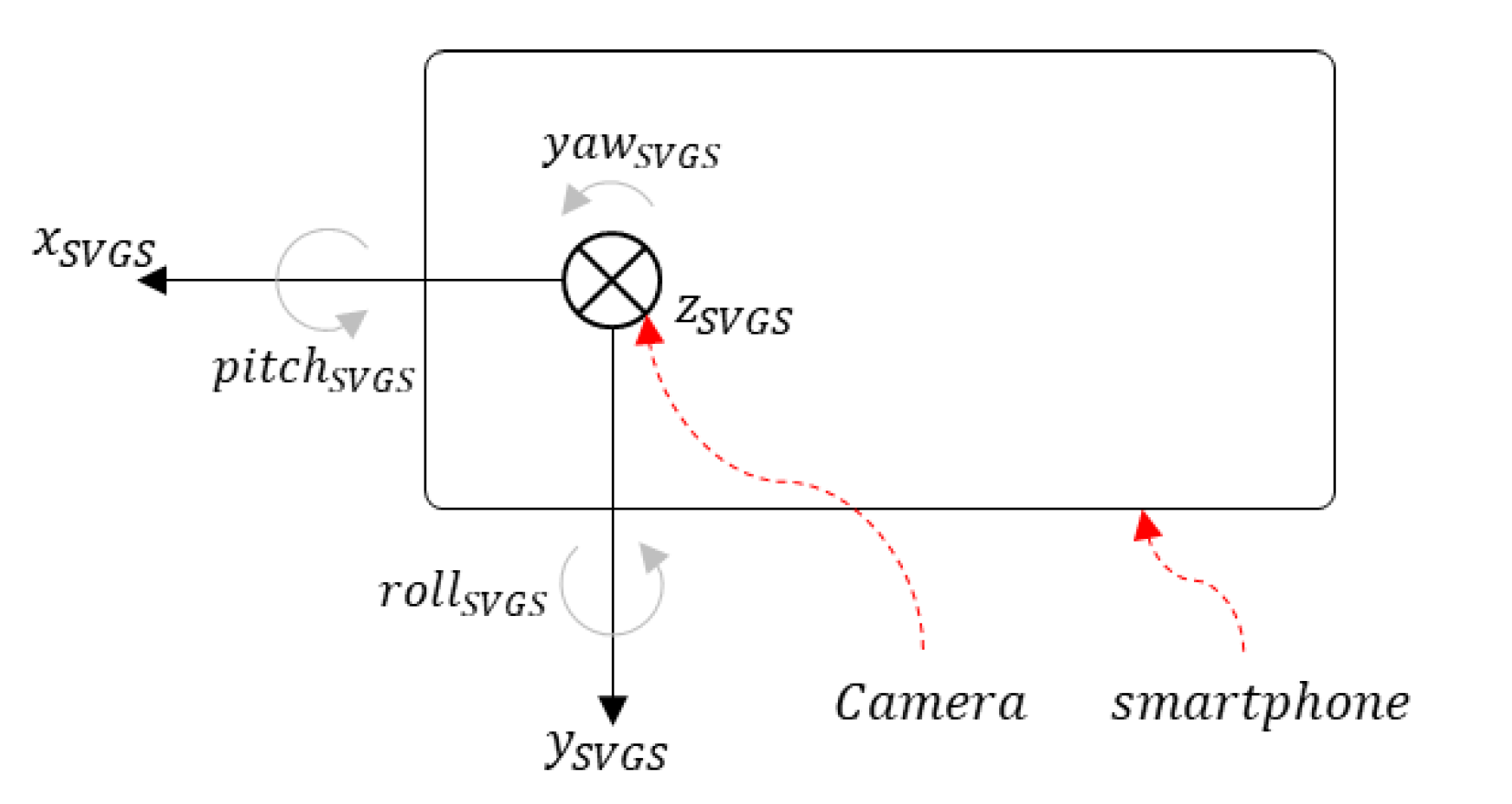

x-axis completes the right-handed triad. The 6-DOF position/attitude vector calculated by the SVGS algorithm is defined in a coordinate system with the same orientation as above but with the origin located at the center of the image plane formed by the smartphone camera.
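Rules (i) to (iv) can be turned into unit axes directly. The sketch below assumes the positions of targets 1, 2 and 3 and of the boom base are known in some common reference frame (for example, from a CAD model; the coordinates used here are hypothetical), and adds a Gram-Schmidt step, which is an assumption rather than part of the stated definition, so that the triad stays orthonormal if the mounting is slightly off.

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def scale(a, k):
    return (a[0] * k, a[1] * k, a[2] * k)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


def target_frame(t1, t2, t3, boom_base):
    """Unit axes (x, y, z) of the target frame following rules (i)-(iv).

    All inputs are 3-D points expressed in one common (hypothetical) frame.
    """
    y_hat = unit(sub(t1, t2))                 # (ii) y: from target 2 toward target 1
    z_raw = sub(t3, boom_base)                # (iii) z: from the origin toward target 3
    # Assumed Gram-Schmidt step: remove any component of z_raw along y.
    z_hat = unit(sub(z_raw, scale(y_hat, dot(z_raw, y_hat))))
    x_hat = cross(y_hat, z_hat)               # (iv) right-handed triad: x = y × z
    return x_hat, y_hat, z_hat


if __name__ == "__main__":
    # Hypothetical coordinates (m) with the boom base at the reference origin.
    axes = target_frame((0.0, 0.05, 0.0), (0.0, -0.05, 0.0),
                        (0.0, 0.0, 0.15), (0.0, 0.0, 0.0))
    print(axes)
```

Computing x as y × z (rather than z × y) is what makes the triad right-handed, since for orthonormal right-handed axes x × y = z implies y × z = x.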

AVGS uses the Inverse Perspective algorithm [

10] to calculate the 6-DOF relative state between the target and chase vehicles. SVGS, on the other hand, uses photogrammetry techniques [

13,

14] and an adaption of the collinearity equations developed by Rakoczy [

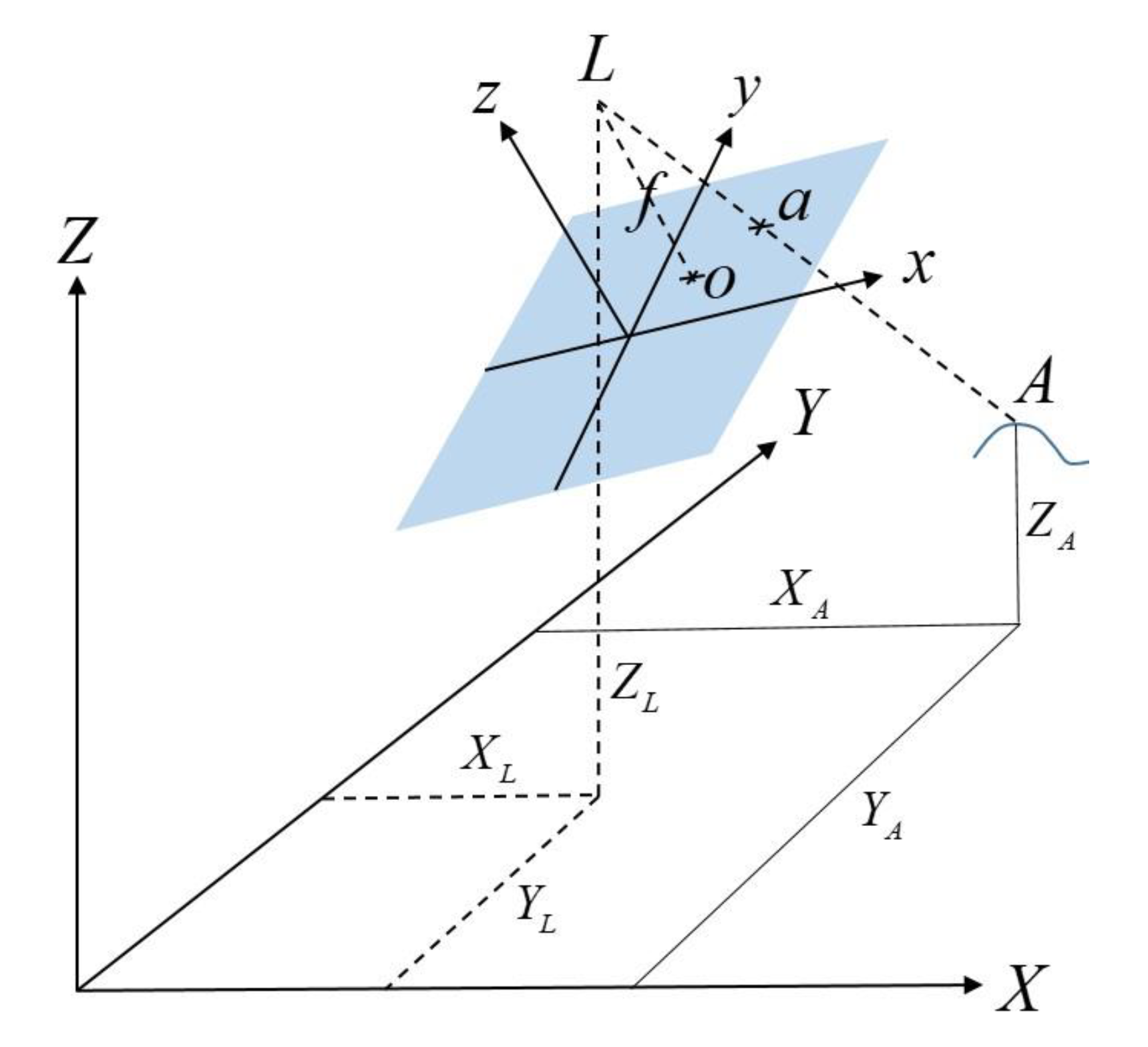

8] to solve for the desired state vector. If a thin lens camera system captures point A as shown in

Figure 2 [

8], all light rays leaving point A and entering the camera are focused at point L (called the perspective center), at the location of the camera lens. An image of point A on the image plane is represented as point “a”; the image plane is located a distance

f from the perspective center, where

f is the focal length of the lens.

Figure 2 shows two coordinate frames: the object (or target) frame <X, Y, Z> and the image (or chase) frame <x, y, z>. A vector from the perspective center to point "A" can be defined in the object frame as v_A, while a vector from the perspective center to point "a" is v_a:

These two vectors are related by Equation (2), where k is a scaling factor and M is a rotation matrix representing an x, y, z rotation sequence transforming the object frame to the image frame:
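For reference, the relation just described can be written in the standard textbook form of the collinearity condition. This sketch assumes that the perspective center L has object-frame coordinates (X_L, Y_L, Z_L), that the principal point of the image is at (x_0, y_0), and that the image plane lies at z = -f; these symbols are introduced here for illustration and may not match the notation of [8].

```latex
v_A = \begin{bmatrix} X_A - X_L \\ Y_A - Y_L \\ Z_A - Z_L \end{bmatrix},
\qquad
v_a = \begin{bmatrix} x_a - x_0 \\ y_a - y_0 \\ -f \end{bmatrix},
\qquad
v_a = k\,M\,v_A,
\qquad
M = \begin{bmatrix} m_{11} & m_{12} & m_{13} \\ m_{21} & m_{22} & m_{23} \\ m_{31} & m_{32} & m_{33} \end{bmatrix}
```

In this form the scale factor k can be removed by dividing the first two rows of v_a = k M v_A by the third.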

Dropping the "a" and "A" subscripts and solving for the image-frame coordinates x, y and z of point "a", followed by dividing by z to eliminate the scaling factor k, yields the following two equations for F_x and F_y, where the m_ij values are elements of the direction cosine matrix M:
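A minimal numerical sketch of these two equations follows. It assumes that the perspective center L has object-frame coordinates (X_L, Y_L, Z_L), that the principal point is at (x_0, y_0), and that the image plane lies at z = -f; writing (r, s, q) for the components of M·v_A, dividing by the third component gives F_x = x_0 - f·r/q and F_y = y_0 - f·s/q. It further assumes that the x, y, z rotation sequence composes as M = R_z(ψ)·R_y(θ)·R_x(ϕ) with frame-rotation elementary matrices, a common photogrammetric convention that the source does not confirm.

```python
import math


def matmul3(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation_xyz(phi, theta, psi):
    """M = Rz(psi) @ Ry(theta) @ Rx(phi): rotate about x, then y, then z (assumed)."""
    cf, sf = math.cos(phi), math.sin(phi)
    ct, st = math.cos(theta), math.sin(theta)
    cp, sp = math.cos(psi), math.sin(psi)
    rx = [[1.0, 0.0, 0.0], [0.0, cf, sf], [0.0, -sf, cf]]
    ry = [[ct, 0.0, -st], [0.0, 1.0, 0.0], [st, 0.0, ct]]
    rz = [[cp, sp, 0.0], [-sp, cp, 0.0], [0.0, 0.0, 1.0]]
    return matmul3(rz, matmul3(ry, rx))


def collinearity(M, f, point, center, x0=0.0, y0=0.0):
    """Model image coordinates (F_x, F_y) of an object point.

    point, center: object-frame coordinates of target A and of the perspective center L.
    """
    d = [point[i] - center[i] for i in range(3)]                         # v_A
    r, s, q = [sum(M[i][j] * d[j] for j in range(3)) for i in range(3)]  # M v_A
    return x0 - f * r / q, y0 - f * s / q                                # k = -f / q removed


if __name__ == "__main__":
    M = rotation_xyz(0.0, 0.0, 0.0)
    print(collinearity(M, 1.0, (1.0, 2.0, -10.0), (0.0, 0.0, 0.0)))
```

With this sign convention the camera looks along its own -z axis, so visible targets have q < 0.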

The relative 6-DOF state vector V to be solved for is defined below, where ϕ, θ and ψ represent the x, y and z rotation angles, respectively:

Linearizing F_x and F_y using a Taylor series expansion truncated after the first-order (linear) term yields:

where V_0 is an initial guess for the state vector, and ΔV is the difference between this guess and the actual state vector:

ε_x and ε_y are the x and y errors due to the Taylor series approximation. Each of the four targets in the SVGS target pattern has a corresponding pair of these equations; the resulting eight equations can be represented in matrix form using the following notation:
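In generic form, assuming F_x and F_y denote the model-predicted image coordinates of a target for a given state and (x_i, y_i) the measured coordinates of target i, the linearization and the stacked eight-equation system take the shape below. The symbols J (8 × 6 Jacobian) and b (8 × 1 vector of measured-minus-predicted coordinates) are introduced here for illustration; the least-squares correction follows from the normal equations.

```latex
\begin{aligned}
x_i &= F_{x,i}(V_0) + \left.\frac{\partial F_{x,i}}{\partial V}\right|_{V_0} \Delta V + \varepsilon_{x,i},\\
y_i &= F_{y,i}(V_0) + \left.\frac{\partial F_{y,i}}{\partial V}\right|_{V_0} \Delta V + \varepsilon_{y,i},
\qquad i = 1,\dots,4,\\[4pt]
b &= J\,\Delta V + \varepsilon,
\qquad
b = \begin{bmatrix} x_1 - F_{x,1}(V_0) \\ y_1 - F_{y,1}(V_0) \\ \vdots \\ y_4 - F_{y,4}(V_0) \end{bmatrix},
\qquad
J = \begin{bmatrix} \partial F_{x,1}/\partial V \\ \partial F_{y,1}/\partial V \\ \vdots \\ \partial F_{y,4}/\partial V \end{bmatrix},\\[4pt]
\Delta V &= \left(J^{\mathsf T} J\right)^{-1} J^{\mathsf T} b,
\qquad V \leftarrow V_0 + \Delta V .
\end{aligned}
```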

This equation is solved for the correction ΔV that minimizes the sum of the squared residuals ε. The correction is then added to the initial estimate V_0 to obtain the updated state vector. The process is iterated, with each updated state serving as the next initial estimate, until the residuals are sufficiently small, yielding the final estimate of the 6-DOF state vector V.
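The iteration described above is a Gauss-Newton least-squares procedure. The sketch below illustrates it on synthetic, noise-free data under several assumptions that do not come from the source: the state is V = (X_L, Y_L, Z_L, ϕ, θ, ψ), i.e., the perspective-center position in the target frame plus the x, y, z rotation angles; M = R_z(ψ)·R_y(θ)·R_x(ϕ); the principal point is at the image origin; the Jacobian is approximated by forward differences instead of being derived analytically; and the target coordinates, focal length and initial guess are hypothetical.

```python
import math


def matmul3(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation_xyz(phi, theta, psi):
    """Assumed convention: M = Rz(psi) @ Ry(theta) @ Rx(phi)."""
    cf, sf, ct, st = math.cos(phi), math.sin(phi), math.cos(theta), math.sin(theta)
    cp, sp = math.cos(psi), math.sin(psi)
    rx = [[1.0, 0.0, 0.0], [0.0, cf, sf], [0.0, -sf, cf]]
    ry = [[ct, 0.0, -st], [0.0, 1.0, 0.0], [st, 0.0, ct]]
    rz = [[cp, sp, 0.0], [-sp, cp, 0.0], [0.0, 0.0, 1.0]]
    return matmul3(rz, matmul3(ry, rx))


def predict(V, targets, f):
    """Stacked model coordinates [F_x1, F_y1, ..., F_x4, F_y4] for state V."""
    XL, YL, ZL, phi, theta, psi = V
    M = rotation_xyz(phi, theta, psi)
    out = []
    for X, Y, Z in targets:
        d = (X - XL, Y - YL, Z - ZL)
        r, s, q = [sum(M[i][j] * d[j] for j in range(3)) for i in range(3)]
        out += [-f * r / q, -f * s / q]
    return out


def solve_linear(A, b):
    """Solve the square system A x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        for r in range(c + 1, n):
            factor = m[r][c] / m[c][c]
            for k in range(c, n + 1):
                m[r][k] -= factor * m[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def estimate_pose(measured, targets, f, V0, tol=1e-10, max_iter=50, h=1e-7):
    """Iterate V <- V + dV, where dV is the least-squares solution of J dV = b."""
    V = list(V0)
    for _ in range(max_iter):
        F0 = predict(V, targets, f)
        b = [z - p for z, p in zip(measured, F0)]        # measured - predicted (8 x 1)
        J = [[0.0] * 6 for _ in b]                       # Jacobian (8 x 6)
        for j in range(6):
            Vh = V[:]
            Vh[j] += h
            Fh = predict(Vh, targets, f)
            for i in range(len(b)):
                J[i][j] = (Fh[i] - F0[i]) / h            # forward difference
        JtJ = [[sum(row[i] * row[j] for row in J) for j in range(6)] for i in range(6)]
        Jtb = [sum(row[i] * bk for row, bk in zip(J, b)) for i in range(6)]
        dV = solve_linear(JtJ, Jtb)                      # normal equations
        V = [v + d for v, d in zip(V, dV)]
        if max(abs(d) for d in dV) < tol:
            break
    return V


if __name__ == "__main__":
    # Hypothetical pattern (m): three coplanar targets plus one on a 0.1 m boom.
    targets = [(-0.1, 0.15, 0.0), (-0.1, -0.15, 0.0), (0.15, 0.0, 0.0), (0.0, 0.0, 0.1)]
    f = 1000.0                                           # focal length in pixels (hypothetical)
    V_true = [0.02, -0.01, 2.0, 0.02, -0.03, 0.05]
    measured = predict(V_true, targets, f)               # synthetic, noise-free centroids
    V_est = estimate_pose(measured, targets, f, V0=[0.0, 0.0, 1.8, 0.0, 0.0, 0.0])
    print([round(v, 6) for v in V_est])
```

As in the text, four targets give eight equations for six unknowns, so the system is overdetermined and the normal equations return the least-squares correction at each step.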

SVGS Collinearity Formulation. In SVGS, the general form of the collinearity equations described above is narrowed down to reflect the state vector formulation used by AVGS. AVGS sensor measurements used angle pairs, azimuth and elevation, measured in the image frame to define the location of each retro-reflective target in the image. Azimuth and elevation describe the direction of the vector from the perspective center to each target location in the captured image. Azimuth, Az (Equation (11)), and elevation, El (Equation (12)), replace Equations (3) and (4) to yield:
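As a hedged illustration, one common way to express azimuth and elevation for an image point (x, y), given the focal length f and a principal point (x_0, y_0), is sketched below. The convention used here (azimuth measured in the x-z plane from the optical axis, elevation measured out of that plane toward +y, line of sight (x - x_0, y - y_0, -f)) is an assumption; the exact definitions in Equations (11) and (12) may differ.

```python
import math


def azimuth_elevation(x, y, f, x0=0.0, y0=0.0):
    """Azimuth and elevation (radians) of the line of sight through image point (x, y).

    Assumed convention: the line of sight from the perspective center is
    (x - x0, y - y0, -f); azimuth is its angle from the optical axis in the
    x-z plane, and elevation is its angle out of that plane toward +y.
    """
    dx, dy = x - x0, y - y0
    az = math.atan2(dx, f)
    el = math.atan2(dy, math.hypot(dx, f))
    return az, el


if __name__ == "__main__":
    # Hypothetical centroid 120 px right of and 45 px above the principal point, f = 2500 px.
    az, el = azimuth_elevation(120.0, 45.0, 2500.0)
    print(math.degrees(az), math.degrees(el))
```

Expressing the measurements as angles makes them independent of the pixel scale, provided f and the image coordinates share the same units.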

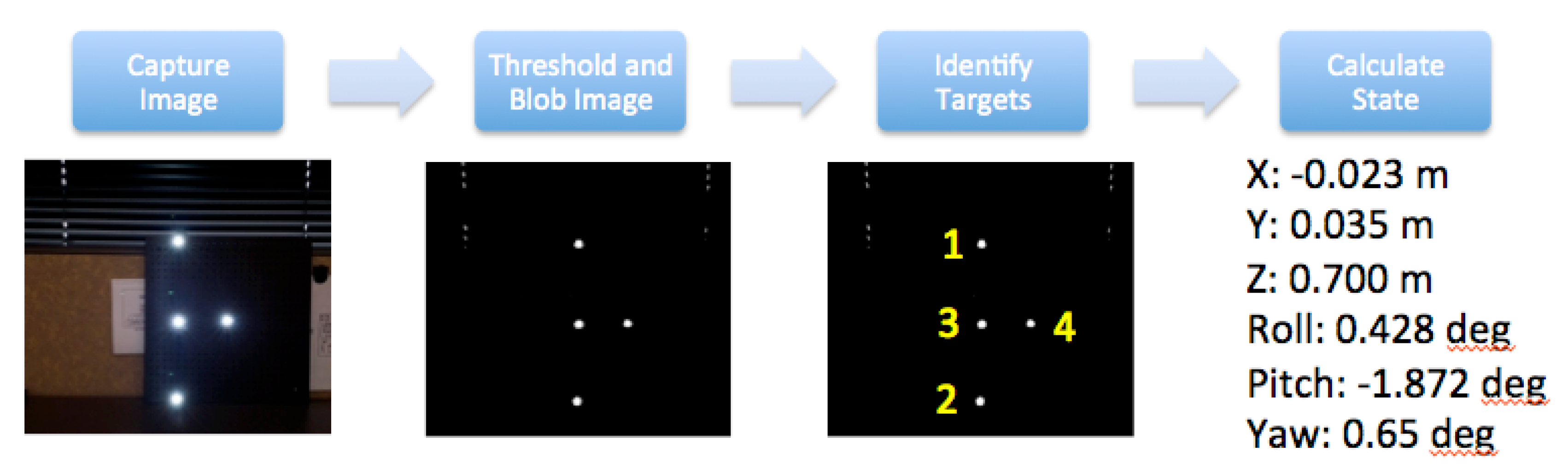

Implementation of the SVGS Algorithm. The SVGS calculation begins when the smartphone camera captures an image of the illuminated pattern on the target spacecraft. The image is then processed: it is first converted to a binary image using a specified brightness threshold. Blob extraction is performed on the binary image to find all bright spots, and the location, size and shape characteristics of each blob are recorded. If other objects in the field of view generate bright background-noise spots, the number of blobs may exceed the number of targets. To reject such noise and to identify each target correctly, a subset of four blobs is selected from all those identified, and basic geometric alignment checks derived from the known orientation of the targets are applied. This process is iterated until the four targets have been identified and properly labeled. The target centroids are then fed into the state determination algorithm, which uses the collinearity equation formulation and a least-squares procedure to determine the relative state. The SVGS algorithm flow is shown in Figure 3 [7].
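The front end of this pipeline (thresholding, blob extraction and the selection of a four-blob subset) can be sketched in plain Python. Assumptions not stated in the source: the image is a 2-D list of grayscale intensities, blobs are 4-connected regions, centroids are unweighted pixel means, and `is_valid` is a hypothetical placeholder for the geometric alignment checks, whose actual form is not specified here.

```python
from collections import deque
from itertools import combinations


def threshold(image, level):
    """Binary image: 1 where intensity >= level, else 0."""
    return [[1 if px >= level else 0 for px in row] for row in image]


def extract_blobs(binary):
    """4-connected bright regions, each reported as {'centroid': (row, col), 'size': n}."""
    h, w = len(binary), len(binary[0])
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for r0 in range(h):
        for c0 in range(w):
            if not binary[r0][c0] or seen[r0][c0]:
                continue
            seen[r0][c0] = True
            queue, pixels = deque([(r0, c0)]), []
            while queue:                                  # breadth-first flood fill
                r, c = queue.popleft()
                pixels.append((r, c))
                for rr, cc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if 0 <= rr < h and 0 <= cc < w and binary[rr][cc] and not seen[rr][cc]:
                        seen[rr][cc] = True
                        queue.append((rr, cc))
            n = len(pixels)
            centroid = (sum(p[0] for p in pixels) / n, sum(p[1] for p in pixels) / n)
            blobs.append({"centroid": centroid, "size": n})
    return blobs


def select_targets(blobs, is_valid):
    """Try 4-blob subsets in turn; return the first that passes is_valid, else None."""
    for subset in combinations(blobs, 4):
        if is_valid(subset):
            return subset
    return None


if __name__ == "__main__":
    img = [[0] * 10 for _ in range(10)]
    for r0, c0 in ((1, 1), (1, 7), (7, 1), (7, 7)):     # four 2x2 "targets"
        for dr in (0, 1):
            for dc in (0, 1):
                img[r0 + dr][c0 + dc] = 255
    img[5][5] = 255                                      # single-pixel background noise
    blobs = extract_blobs(threshold(img, 128))
    # Hypothetical check: reject any subset that contains a blob smaller than 2 pixels.
    chosen = select_targets(blobs, lambda s: all(b["size"] >= 2 for b in s))
    print(len(blobs), [b["centroid"] for b in chosen])
```

Exhaustive subset search grows as C(N, 4) with the number of blobs N, so in practice cheap checks (such as the blob size and shape already recorded) are worth applying before any geometric test.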

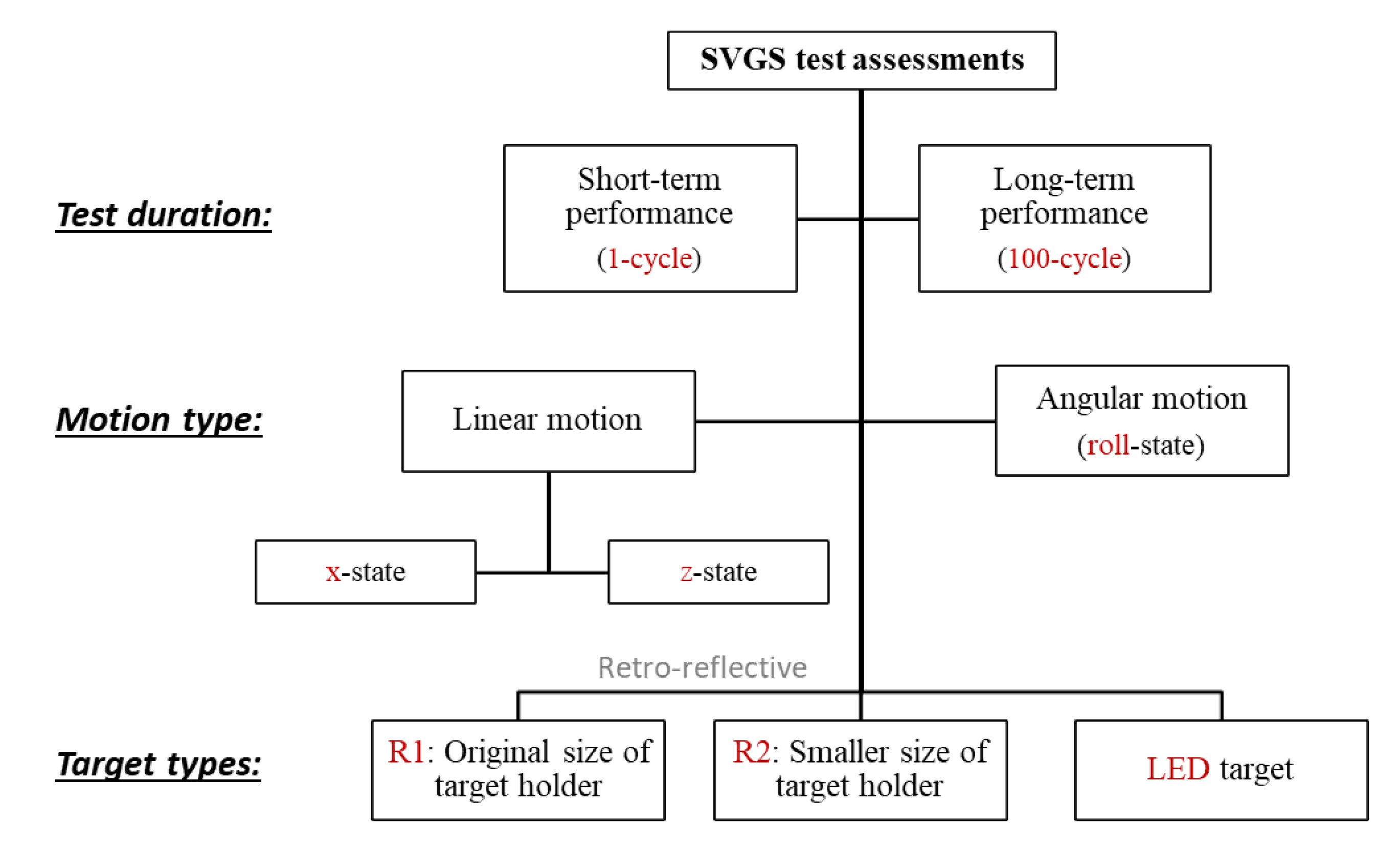

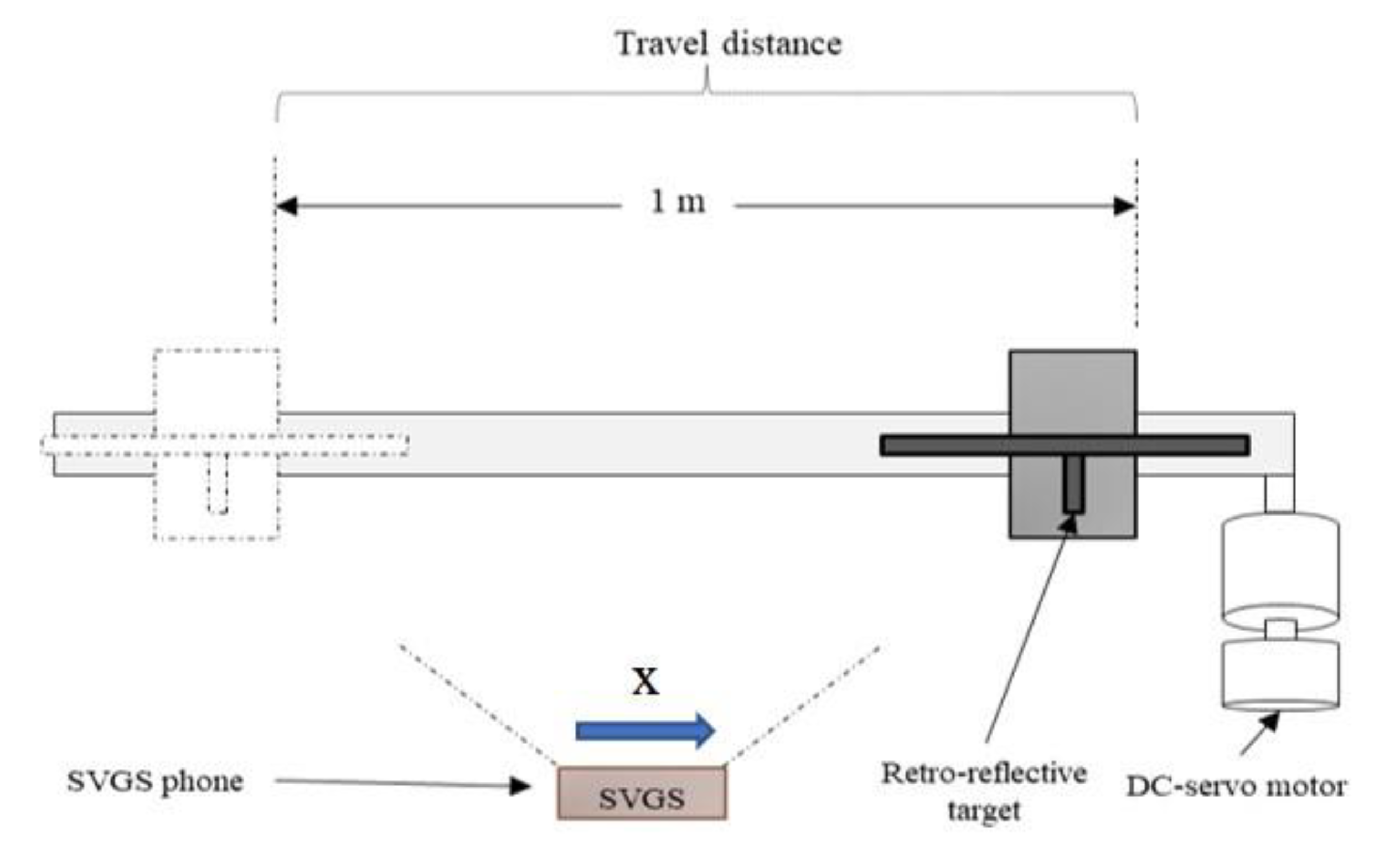

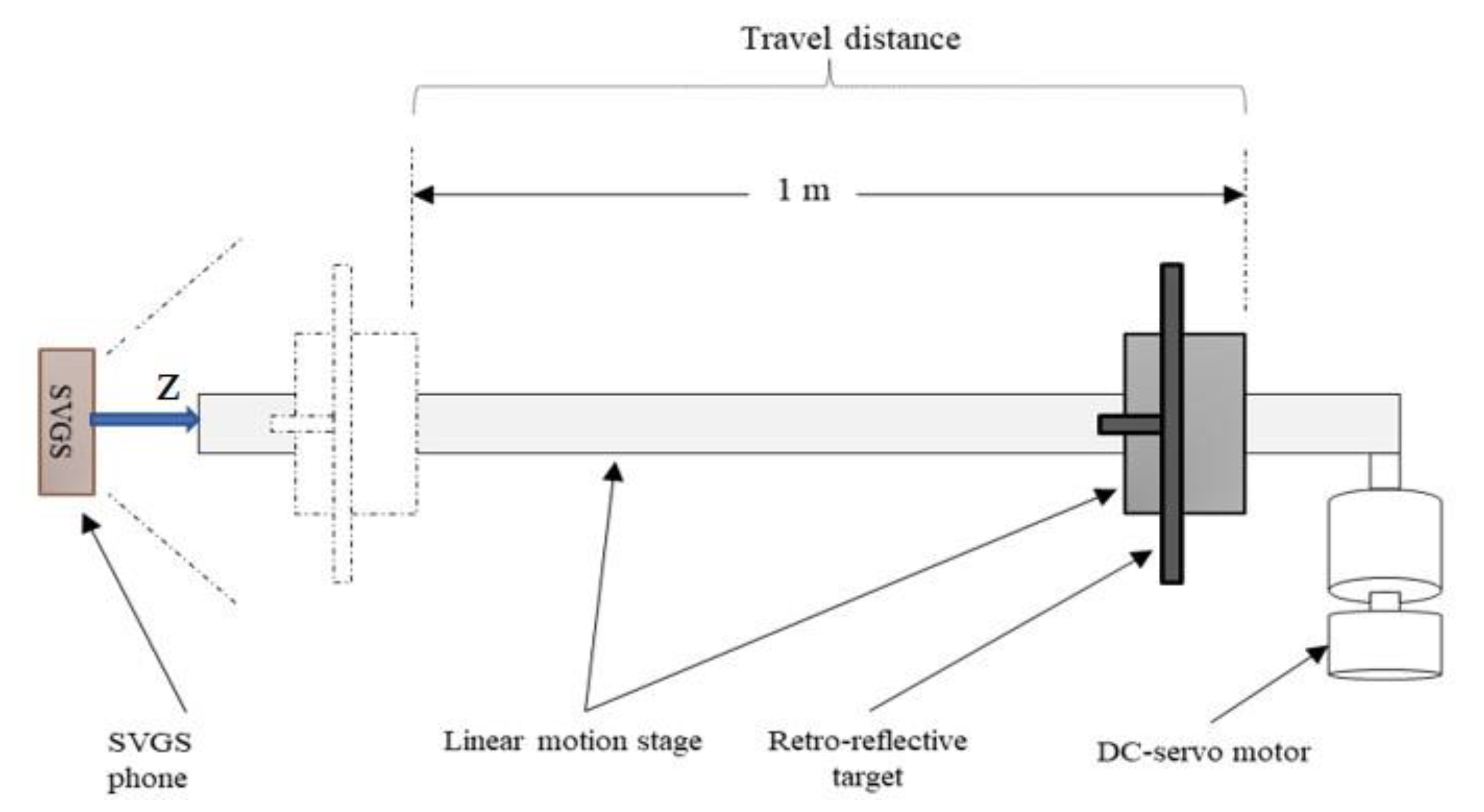

4. Discussion

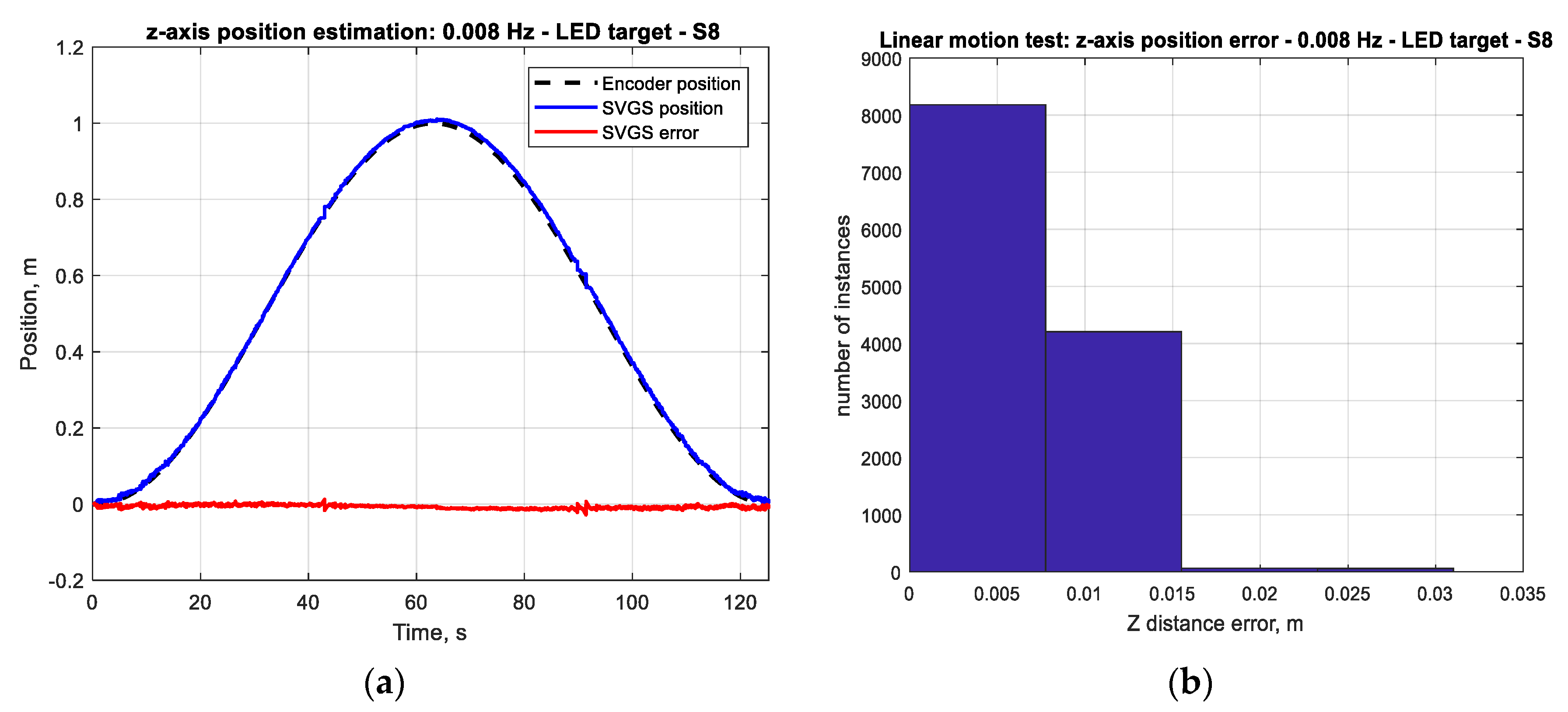

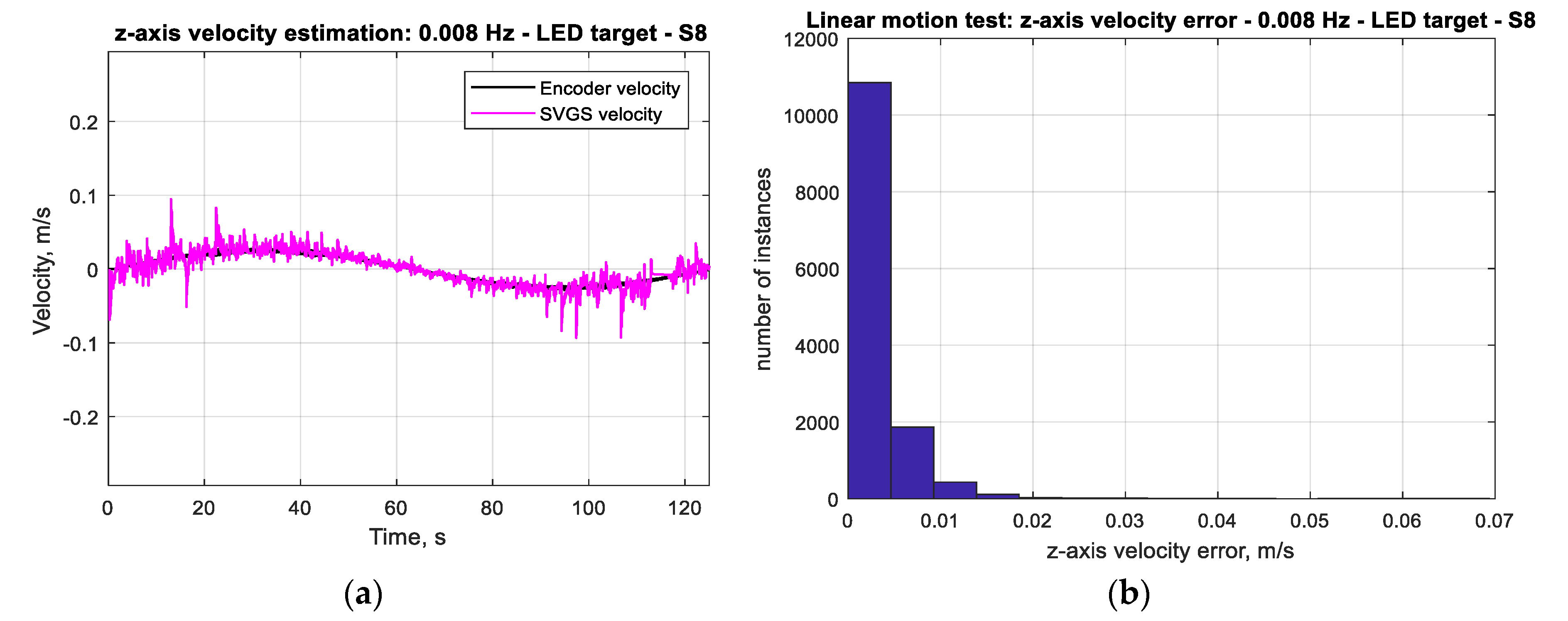

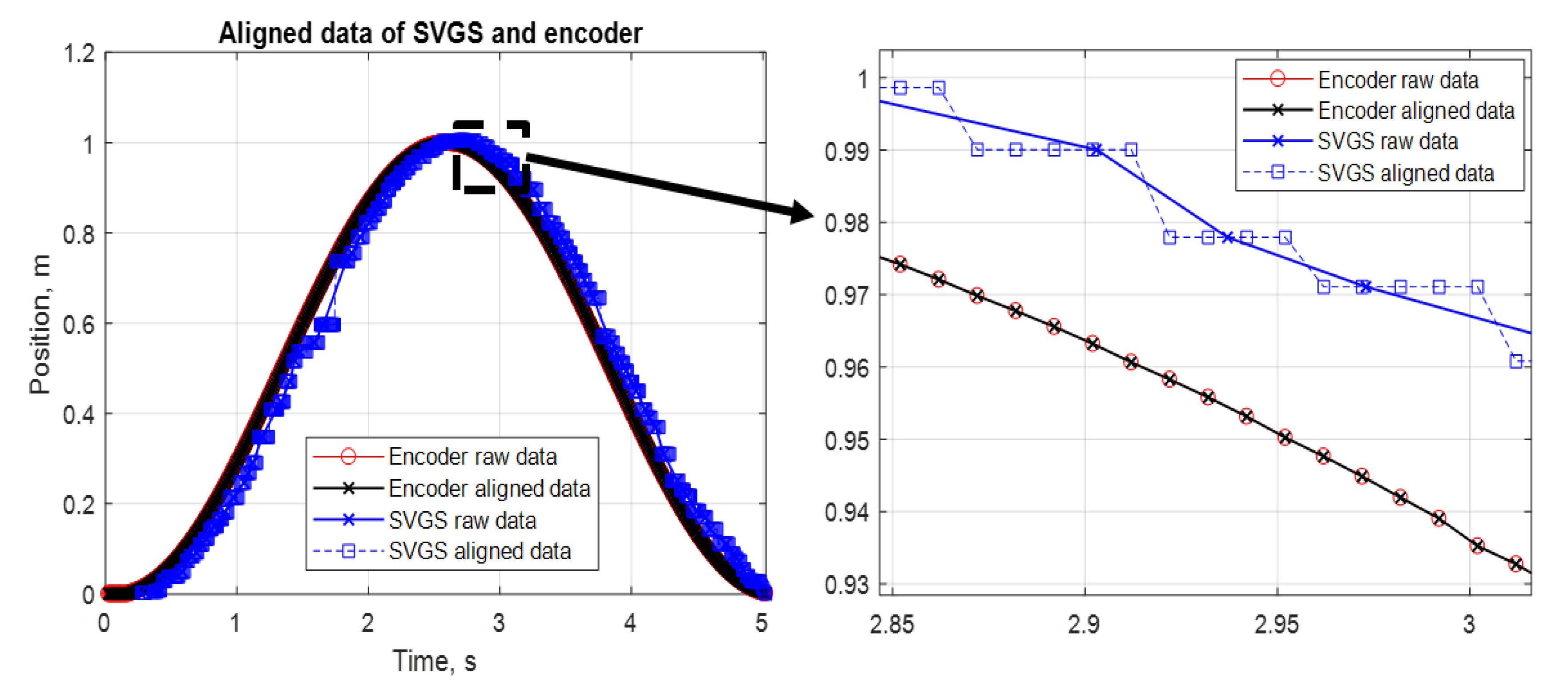

The assessment of SVGS presented in this paper focuses on its use as a feedback sensor in real-time motion control applications, which operate on evenly spaced time samples. In such applications, SVGS data are used at equally spaced sampling instants, even though the native SVGS update rate is uneven when SVGS is implemented on an Android platform.
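One simple, causal way to map unevenly timed SVGS outputs onto a controller's fixed sampling instants is a zero-order hold: at each control tick, the most recent SVGS sample is used. The sketch below is an illustrative assumption, not necessarily the scheme used in the tests reported here; the timestamps and values are hypothetical.

```python
from bisect import bisect_right


def zero_order_hold(times, values, ticks):
    """Latest sample at or before each tick (None before the first sample).

    `times` must be sorted in ascending order.
    """
    held = []
    for t in ticks:
        i = bisect_right(times, t) - 1
        held.append(values[i] if i >= 0 else None)
    return held


if __name__ == "__main__":
    svgs_times = [0.000, 0.031, 0.072, 0.101, 0.149]   # uneven arrival times (s)
    svgs_x = [0.50, 0.49, 0.47, 0.46, 0.44]            # e.g., x position (m)
    control_ticks = [0.00, 0.05, 0.10, 0.15]           # fixed 50 ms control period
    print(zero_order_hold(svgs_times, svgs_x, control_ticks))
```

Unlike linear interpolation, the hold never needs a future sample, so it adds no look-ahead; the cost is up to one SVGS interval of extra latency.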

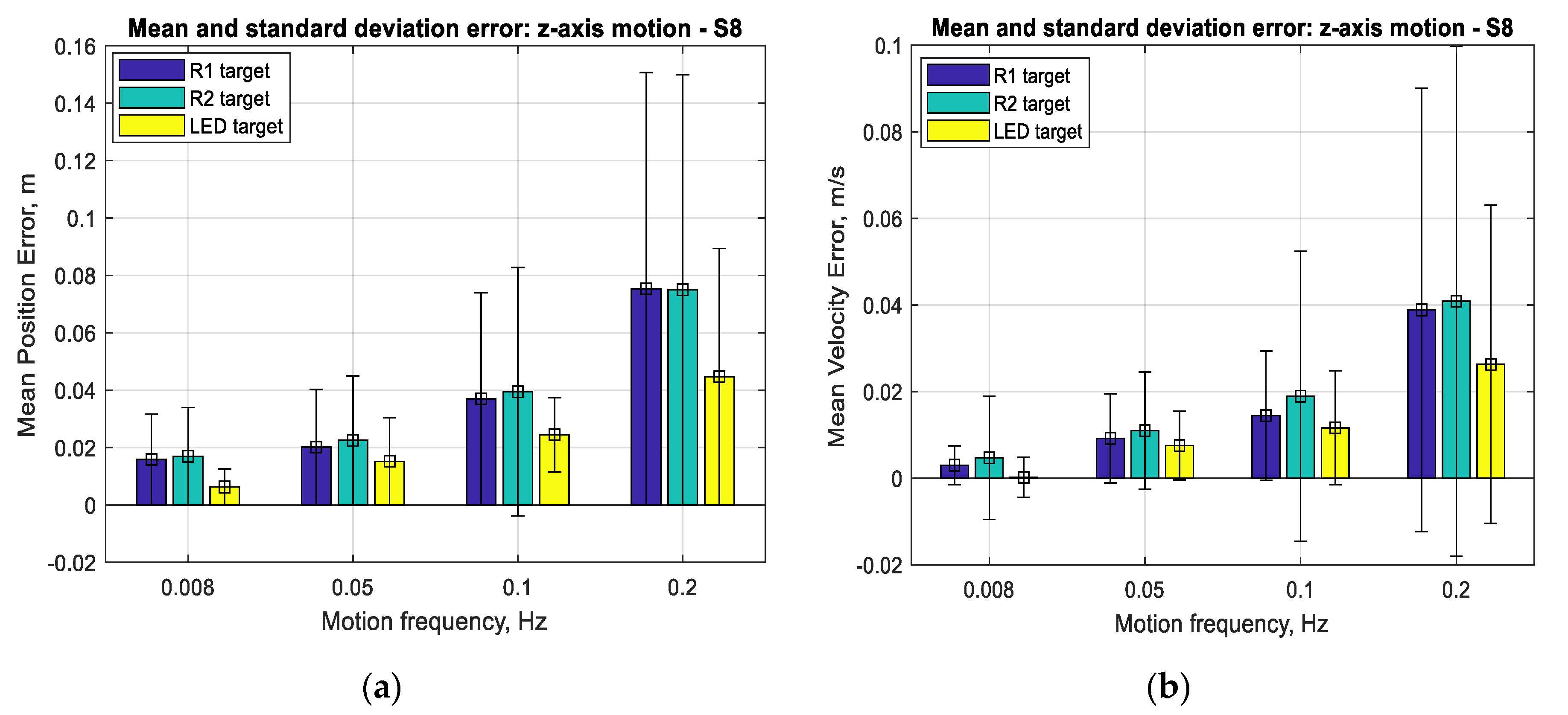

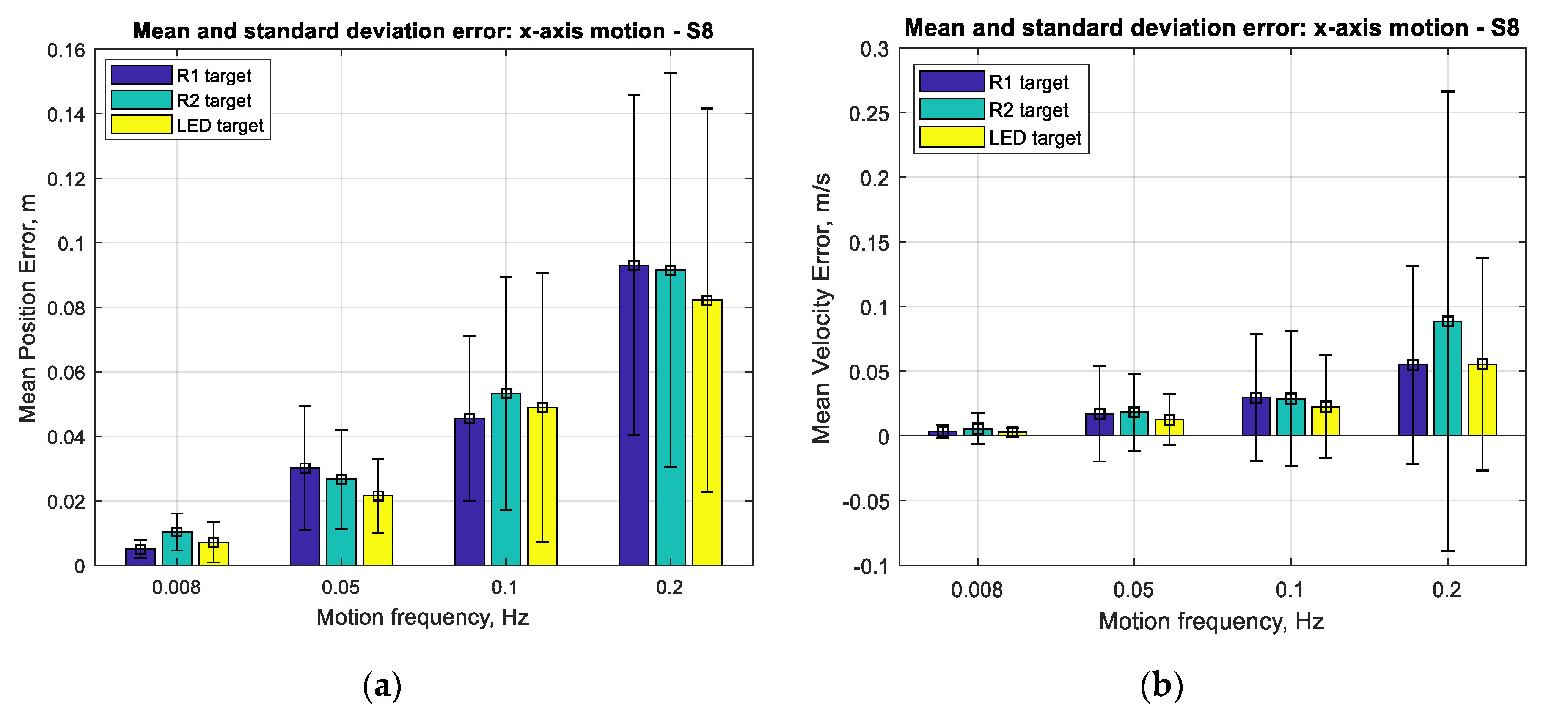

Figure 17 and Figure 18 show that, as expected, the mean positioning error for all types of SVGS targets increases with target velocity. LED targets consistently show smaller positioning errors than retro-reflective targets, and the same holds for the corresponding errors in the linear velocity estimates.

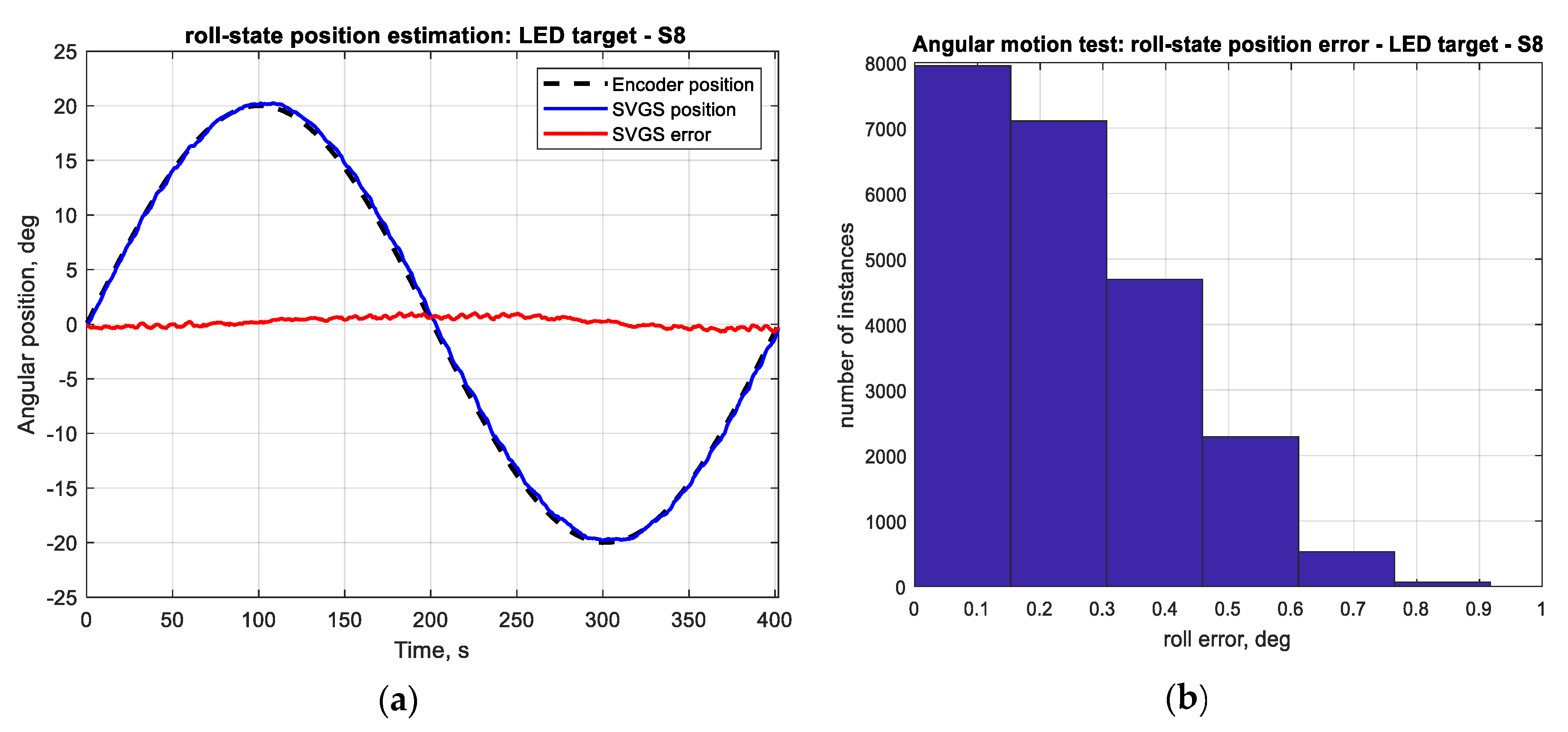

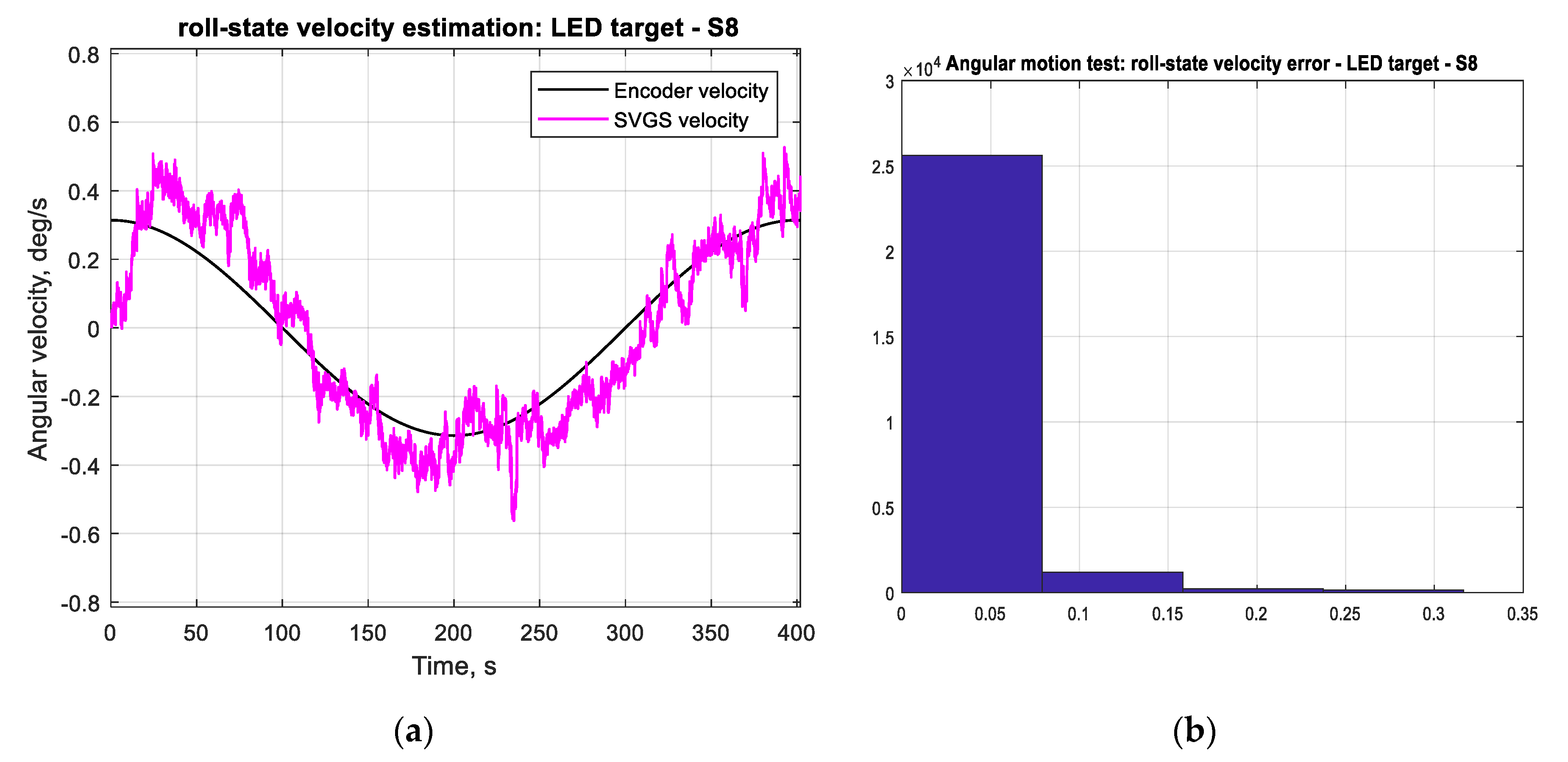

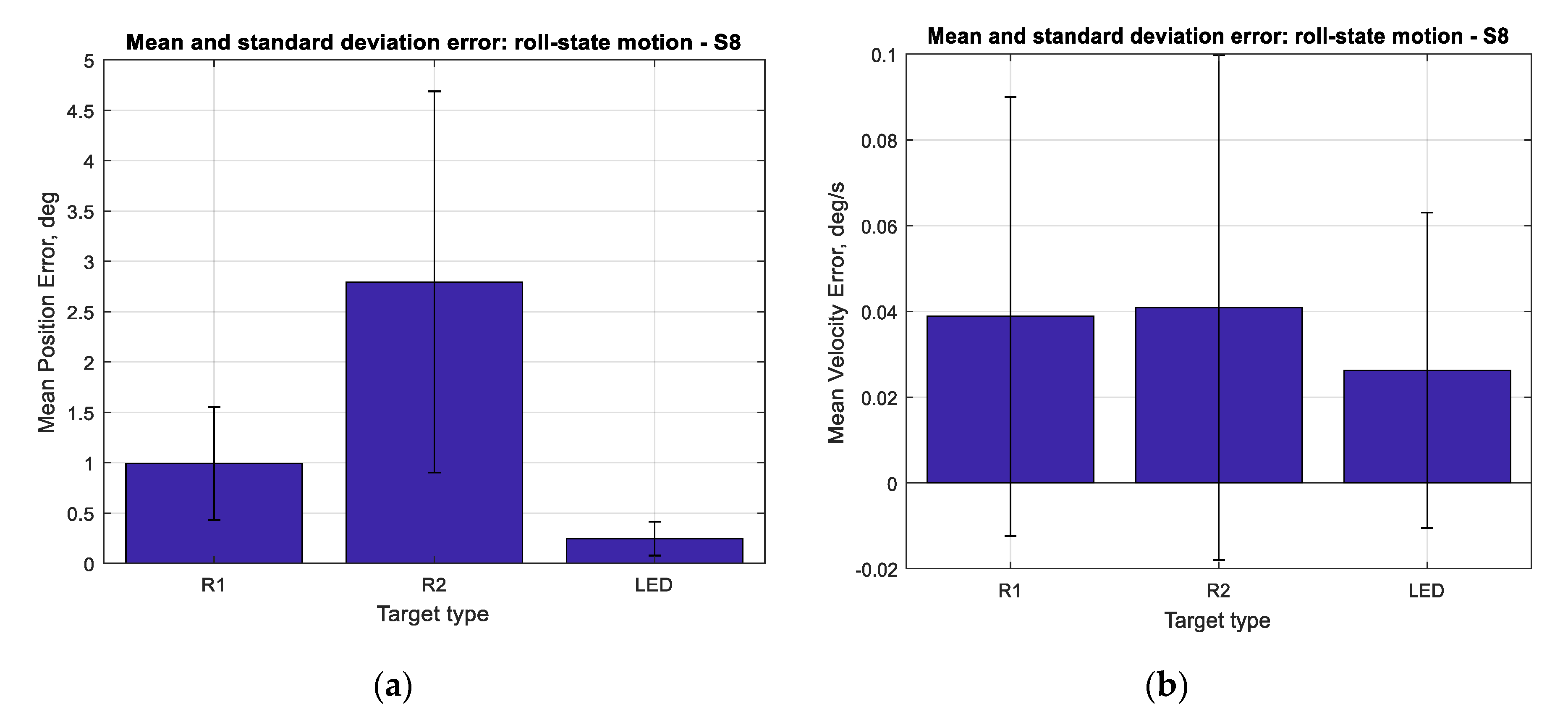

Figure 19 shows the mean errors and standard deviations of the angular position measurements and angular velocity estimates. Angular motion tests also show substantially better performance when using LED targets (a mean error of less than 0.25°) compared to retroreflective targets (a mean error of 1° for the R1 target).

With respect to target size, the main effect on error comes from the overall size of the target holder: the R2 target has a consistently larger mean error in angular position estimates than the R1 target. The mean errors in Figure 17 and Figure 18 seem large when expressed in meters but are more meaningful (and much smaller) when expressed as percentages of the actual measurements (see Figure 11a and Figure 13a, for example). However, an SVGS-based Kalman estimator requires the error statistics in meters, not as percentages. For this reason, the error results are presented in meters.
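The difference between the two ways of reporting error can be illustrated with a small computation. The sketch assumes that the error is the signed difference between the SVGS value and the ground-truth value; it reports the mean (bias) and sample standard deviation of that error in meters and, separately, the mean absolute error as a percentage of the true value. The numbers are hypothetical.

```python
import statistics


def error_statistics(svgs, truth):
    """Return (mean error [m], std of error [m], mean |error| as % of |truth|)."""
    errors = [s - t for s, t in zip(svgs, truth)]
    mean_err = statistics.fmean(errors)
    std_err = statistics.stdev(errors)                 # sample standard deviation
    pct = statistics.fmean([100.0 * abs(e) / abs(t)
                            for e, t in zip(errors, truth) if t != 0])
    return mean_err, std_err, pct


if __name__ == "__main__":
    truth = [1.0, 2.0, 4.0]                            # ground-truth positions (m)
    svgs = [1.02, 1.97, 4.04]                          # SVGS estimates (m)
    print(error_statistics(svgs, truth))
```

The standard deviation in meters, squared, is the quantity that enters a measurement-noise covariance directly, which is why the absolute units are the ones a Kalman estimator needs.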

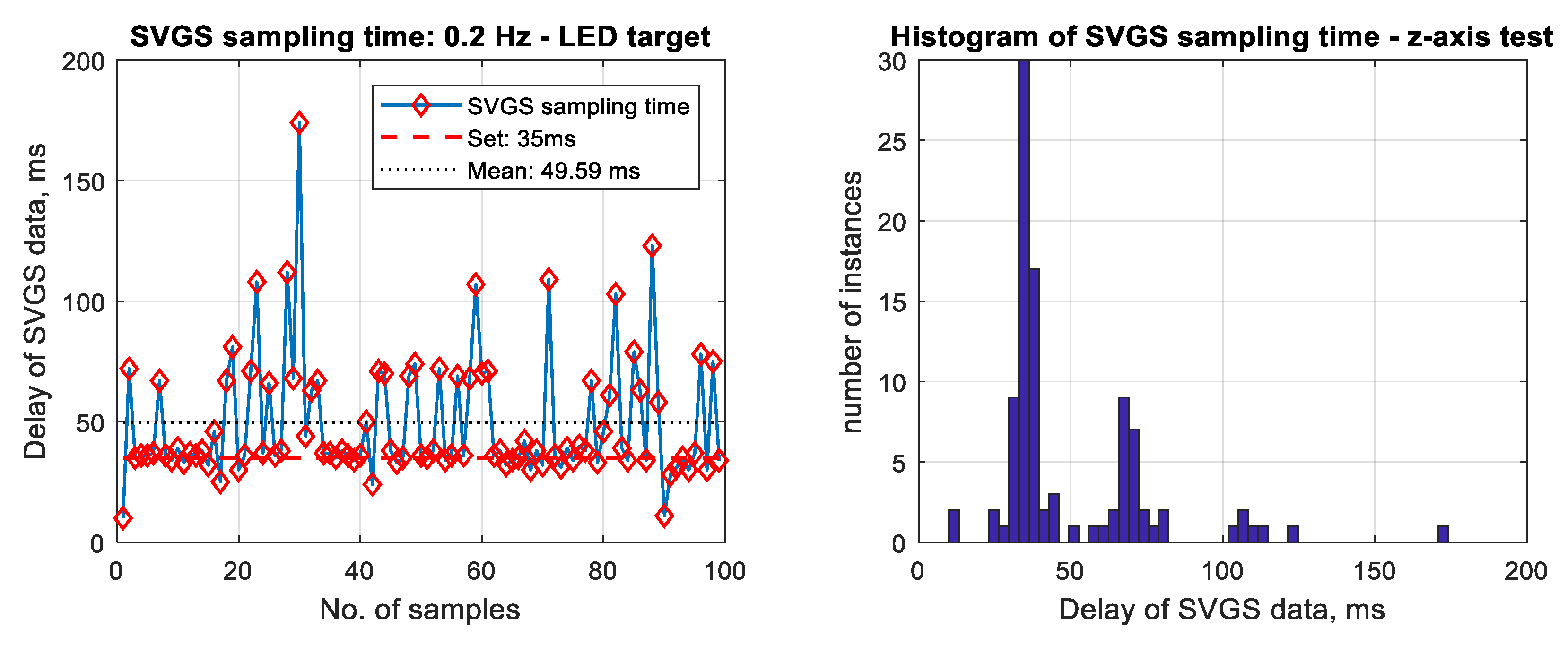

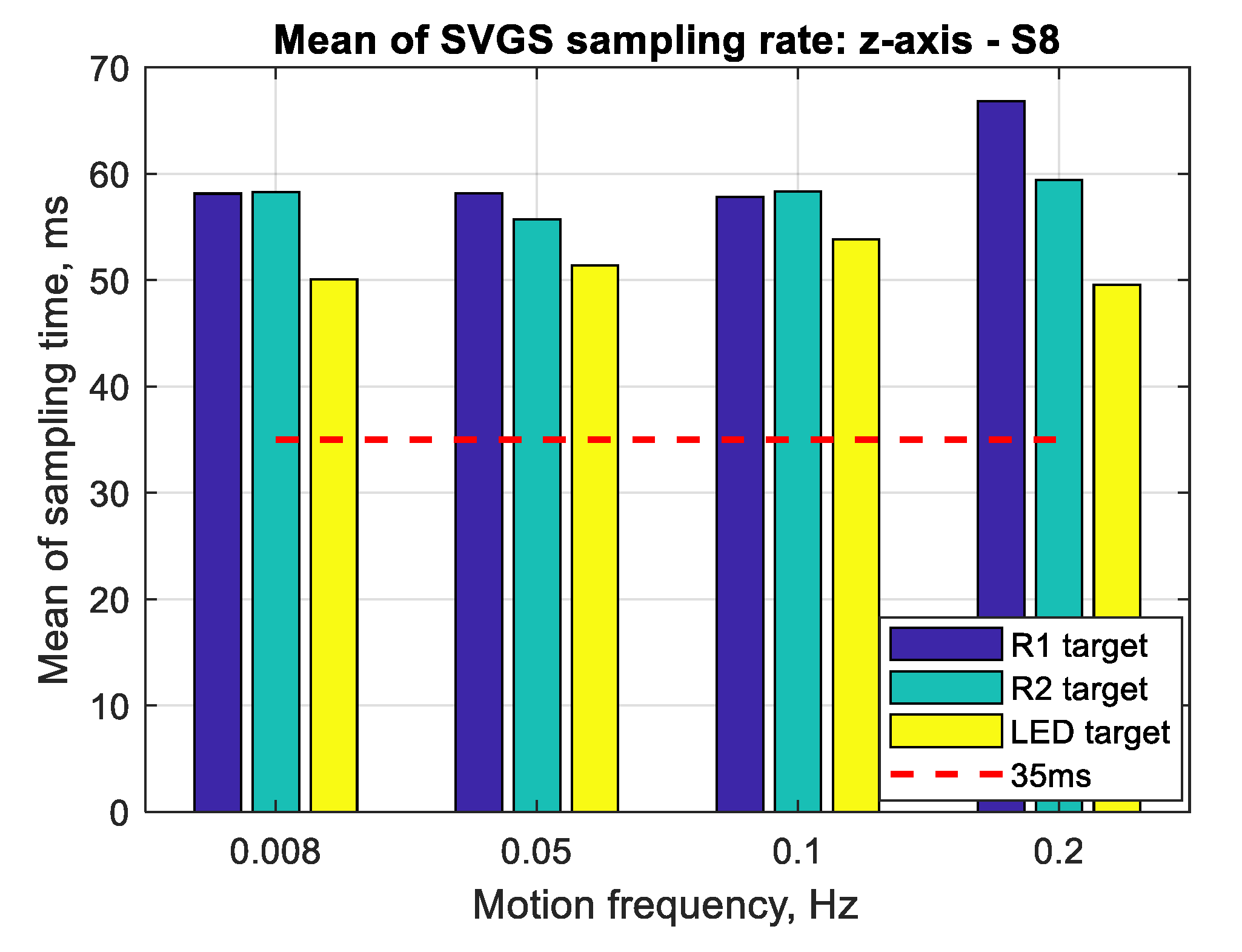

Figure 20 shows the mean SVGS sampling time for different motion velocities and SVGS targets. The test was conducted with a 35 ms update rate set in the SVGS software; the intention was to determine whether the convergence and average sampling rate of SVGS depend on target velocity and target type. No significant dependency was found between the sampling time and the motion profiles or target types; however, LED targets consistently converge faster and therefore provide a faster sampling rate than retro-reflective targets (R1 and R2).
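A mean sampling time of this kind can be obtained from the timestamps of successive SVGS outputs, as sketched below; the timestamps are hypothetical, and the sketch simply averages the intervals between consecutive outputs.

```python
import statistics


def sampling_statistics(timestamps):
    """Mean and sample std of intervals between consecutive outputs, and mean rate (Hz)."""
    intervals = [b - a for a, b in zip(timestamps, timestamps[1:])]
    mean_dt = statistics.fmean(intervals)
    return mean_dt, statistics.stdev(intervals), 1.0 / mean_dt


if __name__ == "__main__":
    t = [0.000, 0.035, 0.072, 0.106, 0.141, 0.177]     # seconds (hypothetical)
    mean_dt, std_dt, rate = sampling_statistics(t)
    print(f"mean interval {1000 * mean_dt:.1f} ms, std {1000 * std_dt:.1f} ms, rate {rate:.1f} Hz")
```

The mean interval reduces to (t_last - t_first)/(n - 1), so it is insensitive to how the jitter is distributed; the standard deviation is what captures the unevenness.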

5. Conclusions

The purpose of this paper is twofold. First, it introduces SVGS to the technical community as a relative position and attitude sensor that can be used for a variety of robotic proximity operations (docking, landing and rendezvous), not just for space guidance and control, as has been the case for AVGS. Second, it describes in detail the error statistics of SVGS, which are necessary building blocks for estimating the noise and covariance matrices of SVGS. These are fundamental tools for incorporating SVGS into motion control systems via Kalman filter and LQG control design methods.

SVGS is a stand-alone relative position and attitude sensor that holds great promise for proximity operations in small satellite applications, such as relative navigation, rendezvous and docking. When deployed on a Samsung S8 smartphone, SVGS provides estimates of the 6-DOF position and attitude vector of a target relative to the camera coordinate frame with sampling intervals as short as 35 ms (about 28.6 Hz). Since SVGS is a vision-based technique, its error depends on range and camera resolution. To scale the results of this paper to other geometries and camera resolutions, the blob size (the light markers identified in the images) must be scaled with the total resolution (number of pixels) of the image.

This paper provides a quantitative assessment of SVGS performance in terms of accuracy (mean error) and precision (standard deviation). It illustrates factors that affect precision and accuracy, such as the target's motion profile and the size and type of SVGS targets, and it assesses the effect of these factors on the sampling time (speed of convergence) of SVGS. Exact control of the sampling time is not possible for a sensor running on a platform that does not provide strict timing guarantees (as is the case for most operating systems), but this may not be critical in satellite proximity operations, because target motion between consecutive samples is usually small at sensor update rates on the order of 10 Hz or more. The performance of SVGS as a real-time sensor for motion control and the relative navigation of small satellites can be improved based on the specifics of a given application (range, target speed and target size). Future work includes the deployment, testing and assessment of SVGS as a motion-control and navigation sensor on other platforms, such as single-board computers and other Android-based systems, eliminating the need to use smartphones to implement SVGS. SVGS is envisioned to be deployable either on inexpensive, compact platforms (such as the Raspberry Pi 4 or BeagleBone boards) or as a software-based sensor on the user's platform, such as the guest scientist platform (HLP) on NASA's Astrobee free-flying robot onboard the ISS, where SVGS can use both the camera and the processor board that are part of the HLP.

The analysis of the error statistics of SVGS presented in this paper enables the incorporation of SVGS as part of a multi-DOF motion-control system by using SVGS error statistics to synthesize a Kalman estimator, which can be part of an advanced control system for navigation and guidance.
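As an illustration of that last step, the sketch below runs a one-axis constant-velocity Kalman filter whose measurement-noise variance `r_var` would be set from an SVGS position-error standard deviation (r_var = σ²). The constant-velocity model, the white-acceleration process-noise variance `q_var`, the fixed sample period and all numerical values are assumptions for illustration, not the estimator design used by the authors.

```python
import random


def kalman_cv(measurements, dt, r_var, q_var, p0=1.0):
    """One-axis constant-velocity Kalman filter; returns (position, velocity) estimates.

    State s = [p, v]; model s_k = F s_{k-1} + w with F = [[1, dt], [0, 1]];
    measurement z_k = p_k + noise with variance r_var (e.g., sigma_SVGS**2).
    """
    x = [measurements[0], 0.0]                         # initialize at the first fix
    P = [[p0, 0.0], [0.0, p0]]
    q11, q12, q22 = q_var * dt**4 / 4, q_var * dt**3 / 2, q_var * dt**2
    estimates = []
    for z in measurements:
        # Predict: x = F x, P = F P F^T + Q
        x = [x[0] + dt * x[1], x[1]]
        P = [[P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1] + q11,
              P[0][1] + dt * P[1][1] + q12],
             [P[1][0] + dt * P[1][1] + q12, P[1][1] + q22]]
        # Update with H = [1, 0]
        S = P[0][0] + r_var
        K = [P[0][0] / S, P[1][0] / S]
        y = z - x[0]
        x = [x[0] + K[0] * y, x[1] + K[1] * y]
        P = [[(1 - K[0]) * P[0][0], (1 - K[0]) * P[0][1]],
             [P[1][0] - K[1] * P[0][0], P[1][1] - K[1] * P[0][1]]]
        estimates.append((x[0], x[1]))
    return estimates


if __name__ == "__main__":
    rng = random.Random(0)
    dt, sigma = 0.05, 0.01                             # 20 Hz, 1 cm position std (hypothetical)
    truth = [0.2 * dt * k for k in range(200)]         # target moving at 0.2 m/s
    z = [p + rng.gauss(0.0, sigma) for p in truth]
    est = kalman_cv(z, dt, r_var=sigma**2, q_var=1e-4)
    print(f"final velocity estimate: {est[-1][1]:.3f} m/s")
```

A full 6-DOF design would use a vector state and a covariance matrix built from the per-axis error statistics, and could pass the actual (uneven) interval between SVGS samples as `dt` at each step.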