1. Introduction

Hyperspectral images (HSIs) provide detailed spectral information about the imaged objects in hundreds of narrow bands, thereby allowing differentiation between materials that are often visually indistinguishable. This has led to numerous applications of hyperspectral imaging in various domains, including geosciences [1,2], agriculture [3,4,5], defense and security [6,7] and environment monitoring [8,9,10].

Supervised classification plays a vital role in analyzing HSIs by assigning image pixels to distinct categories or classes of interest available in a scene, based on a relatively small number of annotated examples. Recent comprehensive surveys on HSI classification in remote sensing include [11,12,13,14,15]. It is generally agreed that incorporating spatial context together with spectral information leads to better classification results than using spectral information alone [16,17,18,19,20]. Further improvements in classification accuracy can be obtained by combining multiple data sources, e.g., by augmenting HSI data with Light Detection and Ranging (LiDAR) data [21,22,23], Synthetic Aperture Radar (SAR) data [24,25] and/or high-resolution colour images [26,27,28]. Fusion of these multiple data sources is typically accomplished at the feature level [29,30,31] or at the decision level [26,32,33]. The concept of multiple classifier systems has been widely studied as a method for designing high performance classification systems at the decision level [34,35,36]. Among these, the Dynamic Classifier Selection (DCS) [37,38] approach selects, for each sample, the classifier with the highest estimated probability of classifying that sample correctly. By design, the combined classifier outperforms all the classifiers it is based on [33,39,40]. The key idea of DCS is to identify the best classifier dynamically for each sample from a set of classifiers. This classifier is usually selected based on a local region of the feature space in which the query sample is located. Most works use the K-Nearest Neighbors technique (grouping samples with similar features) to define this local region [41,42,43]. Then, for a given unseen sample, the best classifier is estimated based on some selection criteria [44,45]. In this work, we group samples differently, by incorporating the concept of robustness into the model specification.

While current machine learning systems have shown excellent performance in various applications [46,47,48], they are not yet sufficiently robust to perturbations in the data and to model errors to reliably support high-stakes applications [49,50,51]. Therefore, increasing attention is being devoted to various robustness aspects of the models and inference procedures [52,53,54]. The work in [55,56] proposed robust methods by adopting empirical Bayesian learning strategies to parameterize the prior and used this Bayesian perspective for learning autoregressive graphical models and Kronecker graphical models. Earlier work by one of us [57] analyzed the global sensitivity of a maximum a posteriori (MAP) configuration of a discrete probabilistic graphical model (PGM) with respect to perturbations of its parameters, and provided an algorithm for evaluating the robustness of the MAP configuration with respect to those perturbations. For a family of PGMs, obtained by perturbation, the critical perturbation threshold was defined as the maximum perturbation level that does not alter the MAP solution. In classification problems, these thresholds determine the level to which the classifier parameters can be altered without changing its prediction. The experiments in [57] empirically showed that instances with higher perturbation thresholds tend to have a higher chance of being classified correctly (when evaluated on instances with similar perturbation thresholds). We combined this property with DCS and applied it to classification in our earlier work [58], but only as a proof of concept for toy cases with binary classes and two classifiers. In a follow-up work [59], we presented an abstract concept of how the robustness measures can be employed to improve the classification performance of DCS in HSI classification.

Here we develop a novel robust DCS (R-DCS) model in a general setting with multiple classes and multiple classifiers, and use it to take into account the imprecision of the model that is caused by errors in the sample labels. The main novelty lies in interpreting erroneous labels as model imprecision and addressing this problem from the point of view of the robustness of PGMs to model perturbations; this also sets this work apart from our previous, more theoretical work on robustness of PGMs [57,58,59], which did not consider the problem of erroneous labels. The main issue with erroneous labels, also referred to as noisy labels [60,61], is that they mislead the model training and severely decrease the classification performance [62,63,64]. Recent works that address this problem usually focus on noisy label detection and cleansing [65,66,67]. However, detection of erroneous labels is never entirely reliable, and their correction even less so, especially when the sample labels are relatively scarce or spatially scattered across the image. Thus, it is imperative to study the robustness of classifier models under different levels of label noise and to understand how the performance of different classifiers deteriorates with label noise.

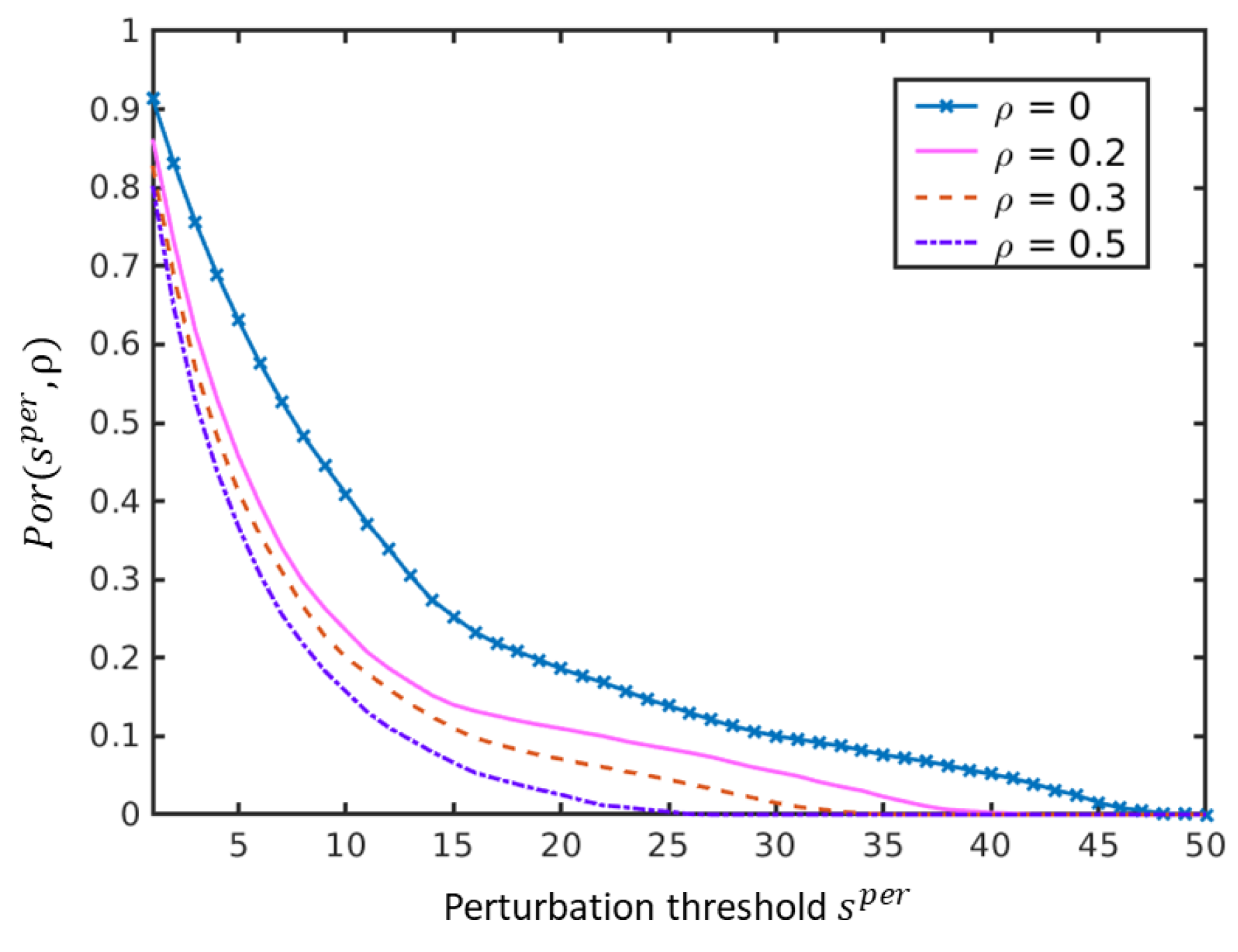

We hypothesize that the framework of imprecise probabilities can offer a viable approach to improve a classifier’s robustness to low-to-moderate amounts of label noise. Therefore, we build our robust DCS (R-DCS) model based on an imprecise probabilistic extension of a classical PGM. Particularly, we build on Naive Bayes Classifiers (NBCs), but it is possible to extend the proposed framework to other classification models. We use an adapted version of the Imprecise Dirichlet Model (IDM) [68] to perturb the local probability mass functions in the model to corresponding probability sets. This imprecise probabilistic extension of an NBC is called a Naive Credal Classifier (NCC) [69]. The amount of perturbation of such an NCC is determined by a hyperparameter that specifies the degree of imprecision of the IDM. The maximum value of the hyperparameter under which the NCC still remains determinate (that is, still yields a single prediction) is the perturbation threshold of the NCC. Such perturbation thresholds essentially show how much we can vary the local models of the NBC without changing the prediction result, thereby providing us with a framework for dealing with model uncertainty.
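To make the notion of a perturbation threshold concrete, the following minimal sketch computes such a threshold for a single sample of a discrete naive Bayes model. It is only an illustration under stated assumptions and not the adapted IDM or the NCC dominance test used in this work: each local probability is replaced by a standard IDM-style interval with hyperparameter `s`, and the prediction is called determinate when the lower score of one class exceeds the upper scores of all other classes (interval dominance, a conservative simplification). The function names, the bisection search and the cap `s_max` are hypothetical choices made for the example.

```python
import numpy as np


def interval_scores(class_counts, feature_counts, x, s):
    """Bounds on P(c) * prod_i P(x_i | c) when every count-based estimate is
    widened to an IDM-style interval with hyperparameter s (assumption).

    class_counts:   (C,) training counts n(c), all assumed positive
    feature_counts: (F, V, C) counts n(x_i = v, c)
    x:              (F,) discretized feature values of one sample
    """
    class_counts = np.asarray(class_counts, dtype=float)
    n_total = class_counts.sum()
    # Counts of the observed value of each feature per class, shape (F, C).
    n_xc = np.asarray(feature_counts, dtype=float)[np.arange(len(x)), x, :]
    lower = class_counts / (n_total + s) * np.prod(n_xc / (class_counts + s), axis=0)
    upper = (class_counts + s) / (n_total + s) * np.prod((n_xc + s) / (class_counts + s), axis=0)
    return lower, upper


def is_determinate(class_counts, feature_counts, x, s):
    """True if one class interval-dominates all others at imprecision level s."""
    lower, upper = interval_scores(class_counts, feature_counts, x, s)
    best = int(np.argmax(lower))
    return bool(np.all(lower[best] > np.delete(upper, best)))


def perturbation_threshold(class_counts, feature_counts, x, s_max=100.0, tol=1e-6):
    """Largest s (up to s_max) for which the prediction stays determinate.

    Bisection is valid here because the lower scores decrease and the upper
    scores increase monotonically in s.
    """
    if not is_determinate(class_counts, feature_counts, x, 0.0):
        return 0.0
    if is_determinate(class_counts, feature_counts, x, s_max):
        return s_max
    lo, hi = 0.0, s_max
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if is_determinate(class_counts, feature_counts, x, mid):
            lo = mid
        else:
            hi = mid
    return lo
```

In this sketch, a sample whose observed feature values are strongly associated with one class tolerates a larger `s` before the intervals of two classes overlap, which matches the intuition that higher thresholds indicate more robust predictions.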

The influence of label noise on the classification performance was studied earlier from a different perspective in [70,71]. The work in [70] showed experimentally that NBCs yield favourable performance in the presence of label noise compared to classifiers based on KNN, support vector machine (SVM) and decision trees [72]. These conclusions were based on empirical classification results on thirteen synthetic datasets, designed for several selected problems from the UCI repository [73]. The work in [71] empirically analyzed the effect of class noise on supervised learning on eight medical datasets. Only the end classification results were analysed in both works.

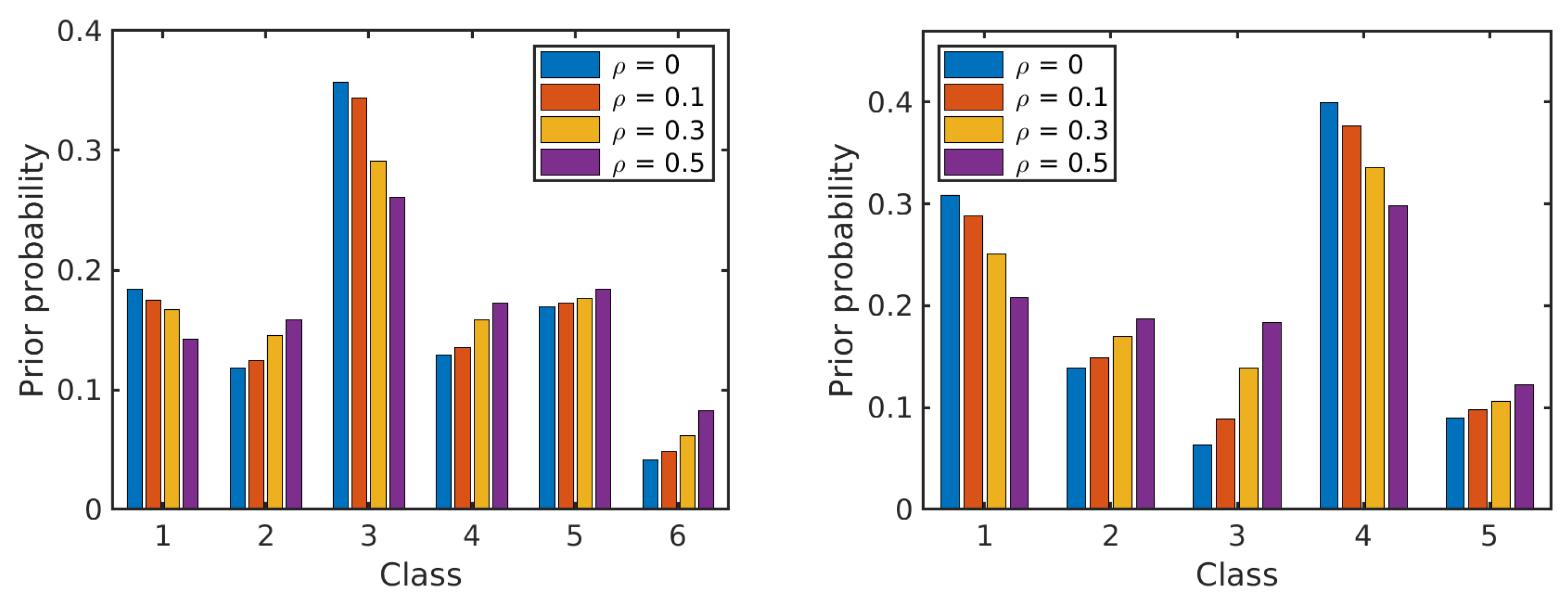

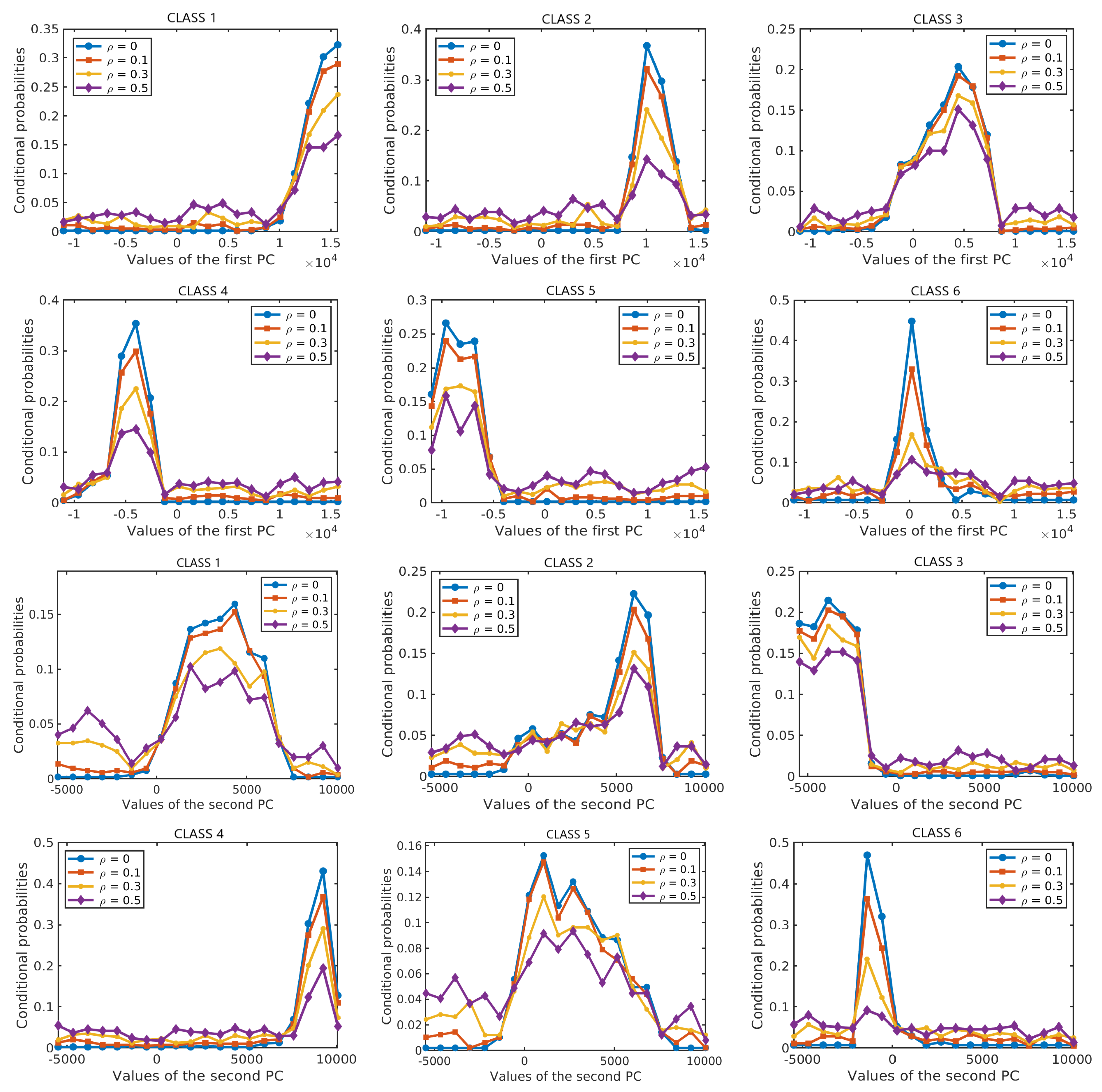

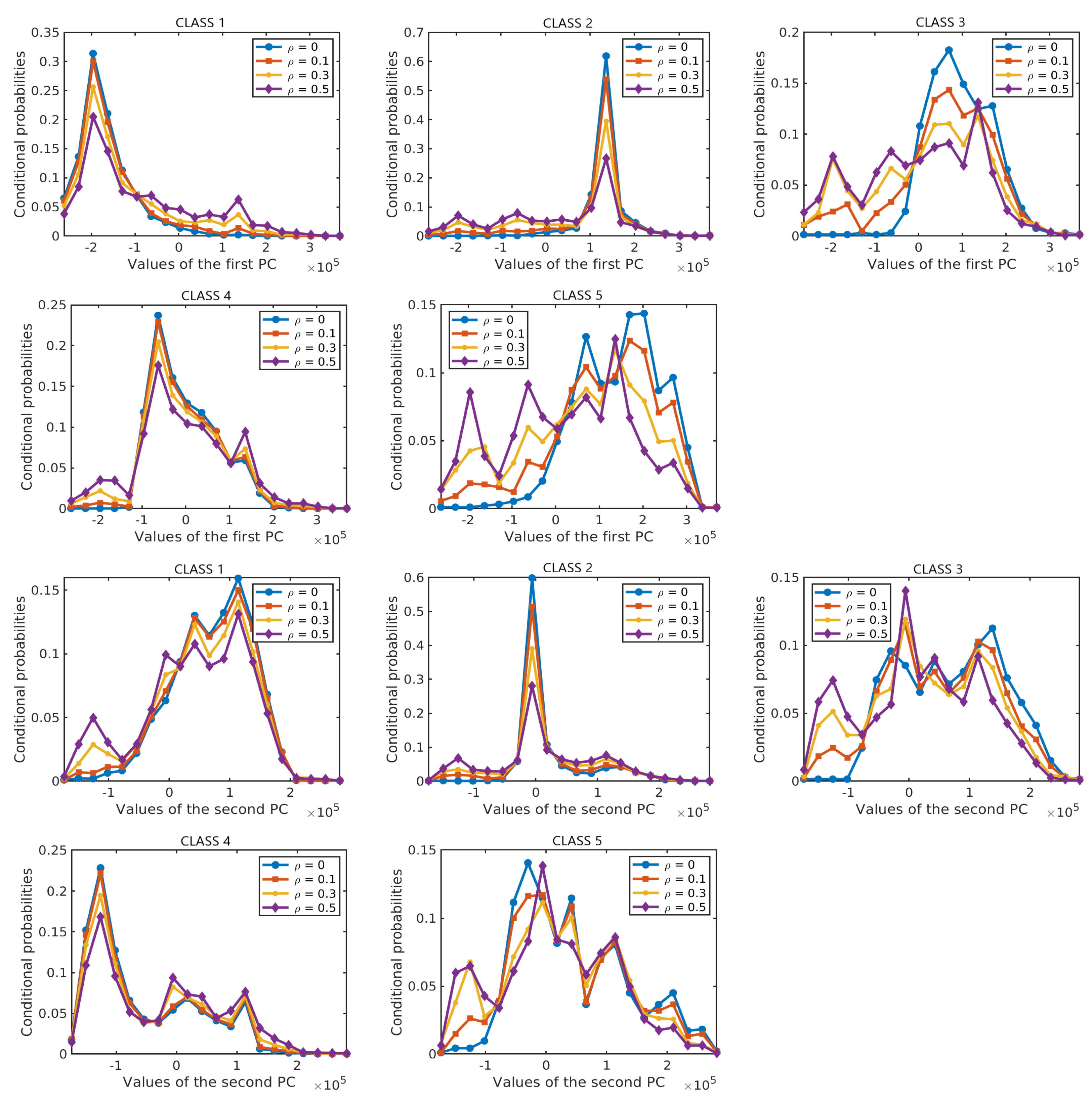

Here we take a different approach: we statistically characterise the effect of erroneous labels on the statistical distributions of features and on the estimated spectral signatures of landcover classes. From this perspective, the empirical results clearly explain the considerable robustness of NBCs to label noise; at the same time, they show how erroneous labels affect the actual conditional distributions of features given the class labels, introducing inaccuracies in the classification process. This motivates us to employ the framework of imprecise probabilities to develop a classifier that is more robust to model uncertainties. As a first step, we use this framework to analyze the effect of noisy labels on the robustness of the predictions of NBCs.

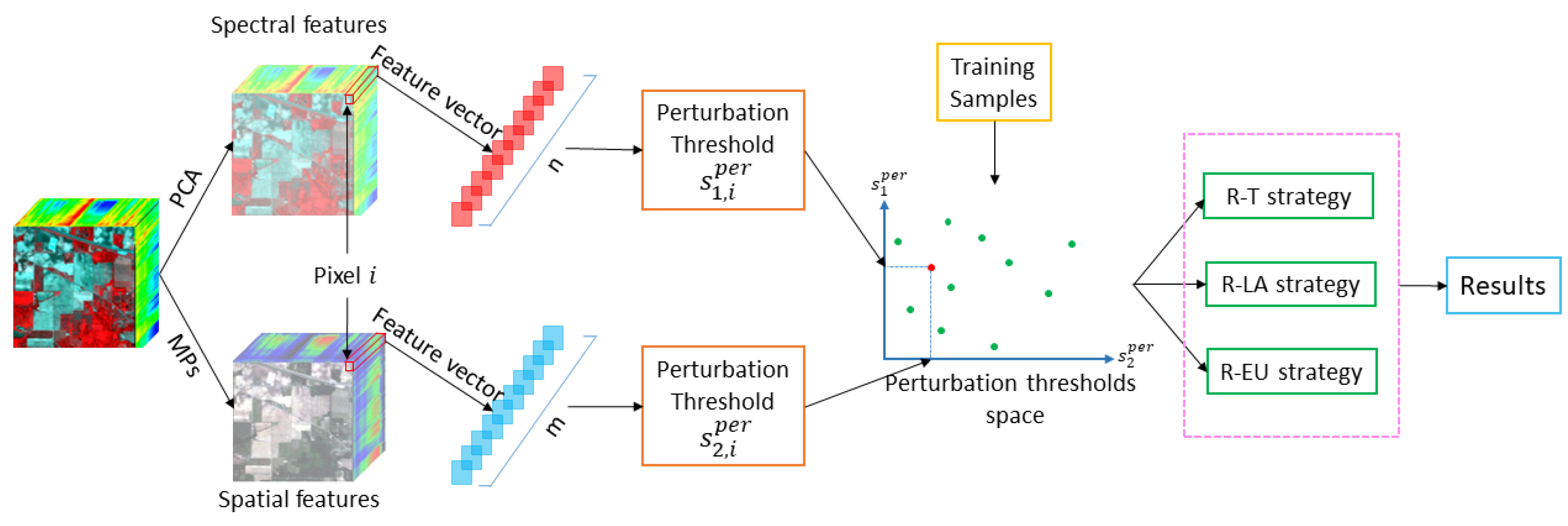

Next, we put forward our robust DCS (R-DCS) model and first apply it to HSI classification in the presence of noisy labels. We perform dynamic selection among two classifiers: one based on spectral features and the other based on spatial features. Both of these are formulated as an NBC. Specifically, we apply a principal component analysis (PCA) to extract spectral features from HSIs, where the decorrelating property of PCA justifies the conditional independence assumption that NBC relies on. The spatial features are generated by applying morphological operators on the first five principal components (PCs). We also apply our R-DCS model to a multi-source data set that includes HSI and LiDAR data. For this data set, we perform dynamic selection among three classifiers: the two classifiers corresponding to HSI and a third classifier based on the elevation information in the LiDAR data.

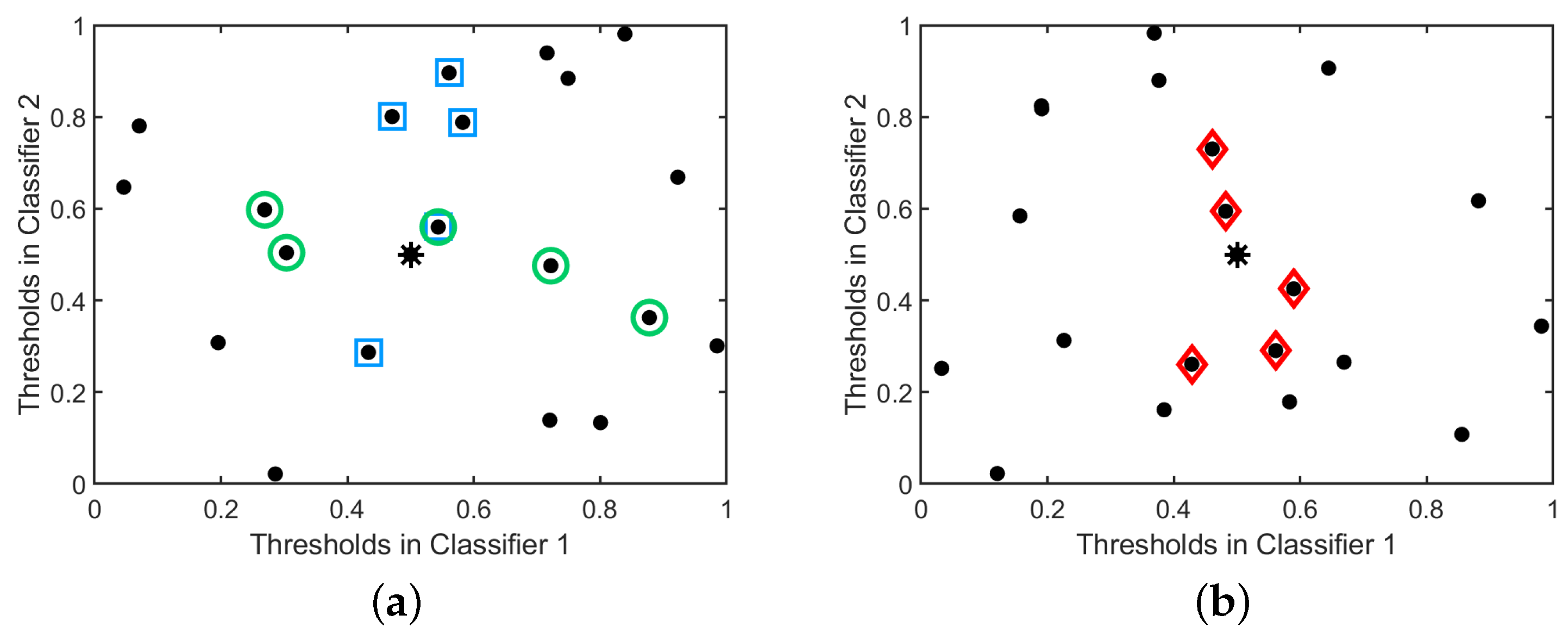

As selection criteria for our R-DCS, we define three selection strategies (R-T, R-LA and R-EU) that differ in computational complexity. R-T simply selects the classifier with the highest perturbation threshold. While computationally efficient, this approach does not always perform well because the exact relation between perturbation thresholds and performance differs from one classifier to another. The two other strategies improve upon this by determining empirical relations between the perturbation thresholds of different classifiers and their probabilities of correctly classifying the considered instance. Particularly, the empirical probabilities of correctly classifying the test sample are estimated based on the training samples that are closest to the test sample in terms of a given perturbation distance. In R-LA, the perturbation distance between two data samples is defined by the absolute value of the difference in their perturbation thresholds for a given classifier. R-EU defines this distance as the Euclidean distance in a space spanned by the perturbation thresholds of all the considered classifiers. R-EU is computationally more complex but outperforms the others in most cases of practical importance. Experimental results on three real data sets demonstrate the efficacy of the proposed model for HSI classification in the presence of noisy labels. In the two HSI data sets, the R-EU strategy performs best among the three selection strategies when the label noise is relatively low, while R-T and R-LA offer better performance when the label noise is rather high. In the multi-source data set, the R-EU strategy outperforms the other methods in all cases.
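The three selection rules described above can be sketched as follows. This is one possible reading of the verbal description and not the exact implementation used in the experiments: the function names, the array layout (one row per sample, one column per classifier) and the tie-breaking through `argmax` are assumptions made for illustration. The perturbation thresholds and the correctness indicators of the training samples are taken as given inputs.

```python
import numpy as np


def select_r_t(test_thr):
    """R-T: pick the classifier with the highest perturbation threshold."""
    return int(np.argmax(test_thr))


def select_r_la(test_thr, train_thr, train_correct, n_neighbors):
    """R-LA: for each classifier separately, estimate the probability of a
    correct decision from the training samples whose threshold for that
    classifier is closest (absolute difference) to that of the test sample."""
    n_classifiers = train_thr.shape[1]
    local_acc = np.empty(n_classifiers)
    for k in range(n_classifiers):
        dist = np.abs(train_thr[:, k] - test_thr[k])
        nearest = np.argsort(dist, kind="stable")[:n_neighbors]
        local_acc[k] = train_correct[nearest, k].mean()
    return int(np.argmax(local_acc))


def select_r_eu(test_thr, train_thr, train_correct, n_neighbors):
    """R-EU: find neighbors by Euclidean distance in the space spanned by the
    thresholds of all classifiers, then compare the empirical accuracies of
    the classifiers on those shared neighbors."""
    dist = np.linalg.norm(train_thr - test_thr, axis=1)
    nearest = np.argsort(dist, kind="stable")[:n_neighbors]
    return int(np.argmax(train_correct[nearest].mean(axis=0)))


# Toy data: 6 training samples, 2 classifiers (hypothetical values).
train_thr = np.array([[0.1, 2.0], [0.2, 2.1], [0.3, 1.9],
                      [2.0, 0.1], [2.1, 0.2], [1.9, 0.3]])
# train_correct[m, k] = 1 if classifier k labels training sample m correctly.
train_correct = np.array([[0, 1], [0, 1], [1, 1],
                          [1, 0], [1, 0], [1, 1]])
sample_a = np.array([0.2, 2.0])
sample_b = np.array([2.0, 0.3])
```

R-T needs only the thresholds of the test sample, whereas R-LA and R-EU each require a neighbor search over the training samples, which is where the difference in computational complexity comes from in this sketch.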

The main contributions of the paper can be summarized as follows:

- (1)

We characterise statistically the effect of label noise on the estimated spectral signatures of different classes in HSIs and on the resulting probability distributions (both prior probabilities and conditional probabilities given the class label). This analysis provides insights into the robustness of NBC models to erroneous labels, and at the same time it shows in which way errors in data labels introduce uncertainty in the involved statistical distributions of the classifier features.

- (2)

We further analyze the effect of noisy labels on the robustness of NBCs and, in particular, on perturbation thresholds of the corresponding NCCs. The results show that this robustness decreases as the amount of label noise that is applied increases.

- (3)

In order to cope with this decrease in robustness due to label noise, we propose a robust DCS model, dubbed R-DCS, which selects classifiers that are more robust, using different selection strategies that are based on the critical perturbation thresholds of the involved classifiers. In particular, we provide three possible selection strategies: R-T, R-LA and R-EU. Two of these (R-T and R-LA) already appeared in a preliminary version of this work [59], but in a more abstract set-up, without exploring their performance in the presence of label noise. The third selection strategy, R-EU, proposed here, is computationally more complex, but performs better than the other two in cases with low to moderate levels of label noise (up to 30%), which are of most interest in practice.

- (4)

The proposed R-DCS models are validated on three real data sets. Compared to our conference paper [59], we account for label noise in HSI classification and conduct more experiments to evaluate the proposed model. The results reveal that the proposed model outperforms each of the individual classifiers it selects from and is more robust to errors in labels compared to some common methods using SVM and graph-based feature fusion.

The rest of this paper is organized as follows. Section 2 contains preliminaries, briefly reviewing the basic concept behind NBC, its imprecise-probabilistic extension NCC and the notion of perturbation thresholds that we build upon. Section 3 describes three representative (hyperspectral and hybrid) real data sets that we use in our experiments. The following two sections contain the main contributions of this work: Section 4 is devoted to the statistical characterisation of the influence of noisy labels on spectral signatures, on statistical distributions of the classifier features and on perturbation thresholds. In Section 5, the proposed model R-DCS is described and three possible selection strategies based on imprecise-probabilistic measures are defined. The overall proposed framework for robust dynamic classifier selection for hyperspectral images is presented and discussed. Experimental results on the three real data sets are reported in Section 6. The results demonstrate that the proposed model outperforms each classifier it selects from. Compared with competing approaches such as SVM and graph-based feature fusion, the proposed model proves to be more robust to label errors, inheriting this robustness from the NBCs that are at its core. A detailed discussion of the main results and findings is presented in Section 7, and Section 8 concludes the work.

3. Datasets

We conduct our experiments on two real HSI data sets, Salinas Scene and HYDICE Urban, and on a multi-source data set, GRSS2013.

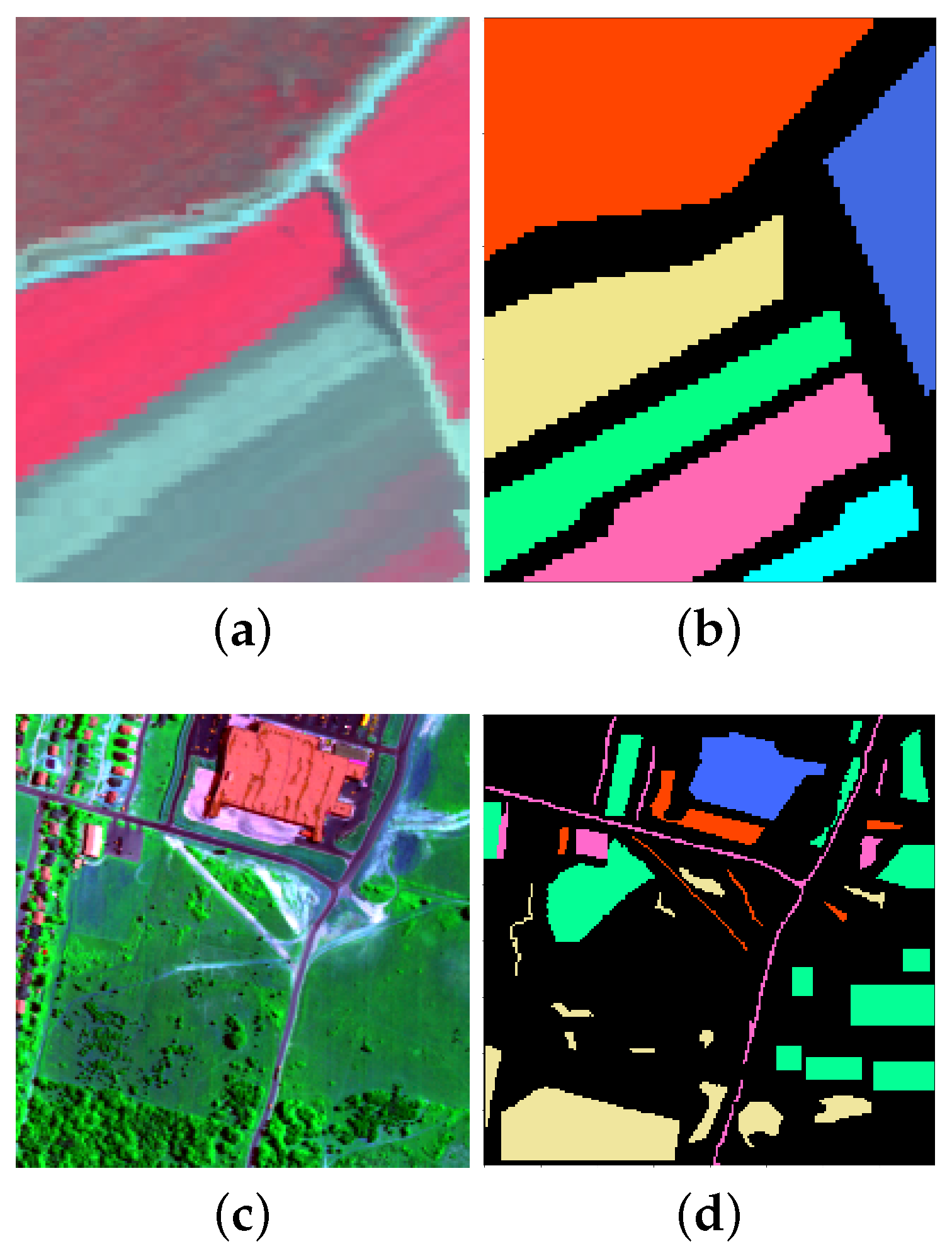

The

Salinas Scene dataset was gathered by the AVIRIS sensor with 224 bands in 1998 over Salinas Valley, California. The original data set consists of

pixels with a spatial resolution of 3.7 m per pixel. It includes 16 classes in total. For our experiments, we select a typical region of size

shown with a false color image in

Figure 1a. There are six classes in this region, as listed in

Table 1, which also shows the number of labeled samples per class.

Figure 1b shows the ground truth spatial distribution of these classes.

The

HYDICE Urban dataset was captured by the HYDICE sensor. The original data contains

pixels, each of which corresponds to a

m

area. There are 210 wavelengths ranging from 400 nm to 2500 nm, resulting in a spectral resolution of 10 nm. In our experiments, we use a part of this image with size

shown in

Figure 1c. The number of bands was reduced from 210 to 188 by removing the bands 104–108, 139–151 and 207–210, which were seriously polluted by the atmosphere and water absorption. Detailed information on the available classes and the number of samples per class is given in

Table 2. The ground truth classification is shown in

Figure 1d.

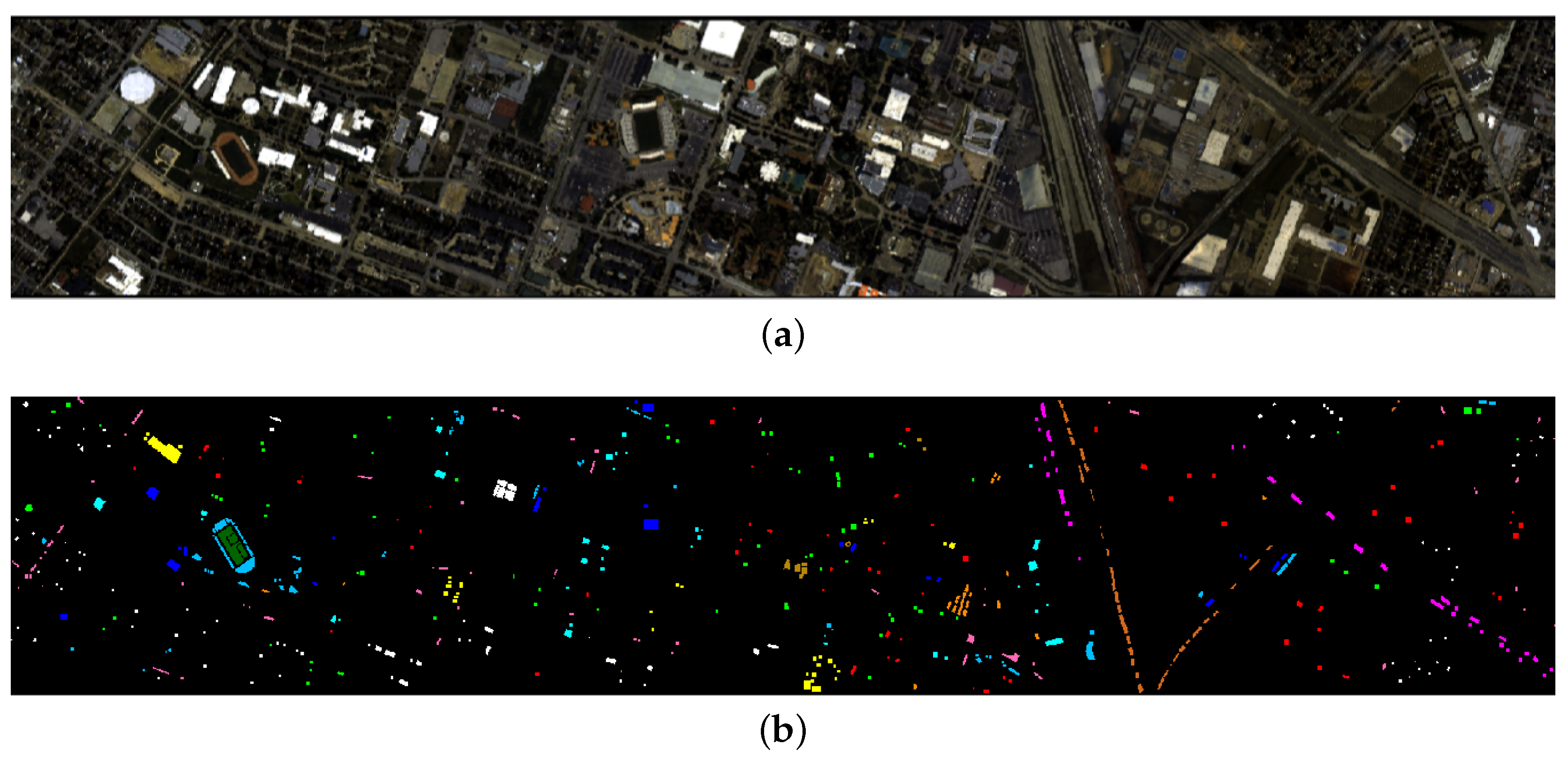

Our third data set, GRSS2013, was a benchmark data set for the 2013 IEEE GRSS data fusion contest [77]. This data set, consisting of HSI and LiDAR data, was acquired over the University of Houston campus and the neighboring urban area in June 2012. The HSI has 144 spectral bands and 349 × 1905 pixels, containing in total 15 classes as shown in Table 3. The false color image is shown in Figure 2a and the ground truth classification is shown in Figure 2b.

6. Experimental Results in HSI Classification

We evaluate the performance of our methods on three real data sets: two HSI data sets (Salinas Scene and HYDICE Urban) and a multi-source data set (GRSS2013), which contains HSI and LiDAR data. The details of the three data sets were described in Section 3.

In the following experiments, we extract the first 50 PCs as the spectral features for all the methods, as a compromise between performance and complexity. The morphological profiles for spatial features are generated from the first 5 principal components (representing more than 99% of the cumulative variance) of the HSI data with 5 openings and closings, using disk-shaped structuring elements (SEs) with sizes ranging from 2 to 10 in steps of 2. The morphological profiles for elevation features are generated from the LiDAR data with 25 openings and closings, using disk-shaped SEs with sizes ranging from 2 to 50 in steps of 2. The values of each PC and morphological profile are uniformly discretized into 10 intervals.
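The spectral part of this feature pipeline (PCA followed by uniform discretization) can be sketched as below. This is a minimal illustration and not the code used for the experiments: the function names are hypothetical, PCA is computed through a plain singular value decomposition, and the bin edges are derived from the data passed in, whereas in practice they would presumably be fixed on the training data. The morphological profiles are omitted because they require an image-processing library.

```python
import numpy as np


def pca_features(pixels, n_components):
    """Project (n_pixels, n_bands) spectra onto the leading principal components."""
    centered = pixels - pixels.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


def discretize_uniform(features, n_bins=10):
    """Map every column to integer bins 0..n_bins-1 of equal width."""
    lo = features.min(axis=0)
    hi = features.max(axis=0)
    # Guard against constant columns, which would give a zero bin width.
    width = np.where(hi > lo, (hi - lo) / n_bins, 1.0)
    bins = np.floor((features - lo) / width).astype(int)
    # The column maximum falls on the upper edge and is clipped into the last bin.
    return np.clip(bins, 0, n_bins - 1)


# Synthetic stand-in for a hyperspectral cube flattened to (pixels, bands).
rng = np.random.default_rng(0)
spectra = rng.normal(size=(200, 20)) @ rng.normal(size=(20, 20))
pcs = pca_features(spectra, n_components=5)
binned = discretize_uniform(pcs, n_bins=10)
```

The resulting integer matrix `binned` is the kind of input a discrete naive Bayes classifier expects, with one categorical variable per principal component.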

We compare the proposed R-DCS model under different selection strategies with the following schemes:

- (1)

NBC implemented with spectral features alone (NBC-Spe), with spatial features alone (NBC-Spa) and with elevation features alone (NBC-LiDAR).

- (2)

K-nearest neighbors (KNN) classifier with spectral features of HSIs. The number of neighbors is obtained by five-fold cross validation over the training samples.

- (3)

Support vector machine (SVM) classifier with polynomial kernel, applied on spectral features.

- (4)

Generalized graph-based fusion (GGF) method of [29], which makes use of all types of features.

Observe that GGF and the proposed R-DCS combine different types of features, while other methods use one or the other type of features. The only parameter of the proposed model is the number of neighbors N in the R-LA and R-EU methods. We estimate this parameter by five-fold cross validation over the training samples, as we do for the KNN method. Three widely used performance measures are used for quantitative assessment: overall accuracy (OA), average accuracy (AA) and the Kappa coefficient ($\kappa$). Overall accuracy is the ratio between correctly classified testing samples and the total number of testing samples. Average accuracy is obtained by first computing the accuracy for each class and then considering the average of these accuracies. The Kappa coefficient, finally, measures the level of agreement between the ground truth and the classification result of the classifier [80]: $\kappa = 1$ corresponds to complete agreement and hence a perfect classifier, whereas $\kappa = 0$ corresponds to a classifier that ignores the feature vector and classifies purely at random. Let $n_{ij}$ be the number of testing samples in class i that are labeled as class j by the classifier. Then OA, AA and $\kappa$ are computed as:

$$\mathrm{OA} = \frac{1}{n}\sum_{i=1}^{C} n_{ii}, \qquad \mathrm{AA} = \frac{1}{C}\sum_{i=1}^{C}\frac{n_{ii}}{n_{i+}}, \qquad \kappa = \frac{\mathrm{OA}-\mathrm{EA}}{1-\mathrm{EA}},$$

where $n = \sum_{i,j} n_{ij}$ is the total number of testing samples, $C$ is the number of classes, $n_{i+} = \sum_{j} n_{ij}$ is the number of testing samples in class i, EA = $\sum_{i=1}^{C} \frac{n_{i+}}{n}\,\frac{n_{+i}}{n}$ is the expected accuracy of a classifier that ignores the feature vector and $n_{+i} = \sum_{j} n_{ji}$ is the number of testing samples that are classified in class i. In the following experiments, 10 percent of the labeled samples are used for training and the rest are for testing. The reported experimental results are averages over 10 runs with different training samples.
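As a small worked example of the three performance measures described above, the sketch below computes them from a confusion matrix whose entry in row i and column j counts the testing samples of class i that the classifier labels as class j. The function name and the toy matrix are hypothetical; the formulas follow the verbal definitions of OA, AA, the expected accuracy EA and the Kappa coefficient.

```python
import numpy as np


def classification_scores(confusion):
    """Return (OA, AA, kappa) for a square confusion matrix.

    confusion[i, j] = number of testing samples of true class i that are
    assigned to class j. Every class is assumed to have at least one sample.
    """
    confusion = np.asarray(confusion, dtype=float)
    n_total = confusion.sum()
    per_true_class = confusion.sum(axis=1)   # testing samples in each class
    per_predicted = confusion.sum(axis=0)    # samples classified in each class
    oa = np.trace(confusion) / n_total
    aa = np.mean(np.diag(confusion) / per_true_class)
    # Expected accuracy of a classifier that ignores the feature vector.
    ea = np.sum(per_true_class * per_predicted) / n_total**2
    kappa = (oa - ea) / (1.0 - ea)
    return oa, aa, kappa


# Toy two-class example: 50 testing samples per class.
oa, aa, kappa = classification_scores([[40, 10], [5, 45]])
```

For this toy matrix, 85 of the 100 samples are classified correctly and the expected accuracy is 0.5, so the Kappa coefficient equals (0.85 - 0.5) / (1 - 0.5) = 0.7.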

6.1. Experiments on the HYDICE Urban Data Set

The first experiment is conducted on the HYDICE Urban data set. The reference classes and their corresponding number of labeled training and testing samples are shown in Table 4. The false color image and the ground truth are shown in Figure 1c,d.

The classification results for the HYDICE Urban data set with different levels of label noise are listed in Table 5, where the best result is marked in bold. All three proposed strategies outperform the basic classifiers they select from at the different levels of label noise. The proposed R-DCS model with the R-EU strategy outperforms the others in most cases in this data set. When the level of label noise is 0, the GGF method performs the best and the two NBC models, NBC-Spe and NBC-Spa, are inferior to all other methods. However, all three proposed strategies improve the performance of NBC, with about 3% improvement over NBC-Spe and 5% improvement over NBC-Spa for the R-EU strategy. With increasing levels of label noise, the accuracy of the GGF method drops sharply from 94% to 64%, and similarly for SVM from 91% to 58%. Remarkably, all of our methods show only a slight decrease in OA, within 5%. The more complex strategy R-EU outperforms the simpler ones, R-T and R-LA, especially in the cases with fewer errors in labels. Table 5 also demonstrates that the proposed methods mostly outperform (and the R-EU strategy always outperforms) all the reference methods in terms of all the performance measures (OA, AA and $\kappa$) in all cases where label noise exists.

Table 6 shows the classification accuracy per class for the

HYDICE Urban data set with different classifiers. We compare

and

to study the change in accuracy for each class in the presence of label noise. The results show that the class-specific accuracies mostly drop significantly due to the effect of label noise. Class 2 is an exception: label noise there increases the accuracies for NBC-Spe, NBC-Spa, KNN, SVM and R-T. We can also see from

Table 6 that when

, the GGF method yields the highest accuracy in each class. Our proposed methods R-LA and R-EU outperform NBC-Spe and NBC-Spa in Class 2 with about 20% improvement. When

, all the methods perform badly in Class 3 due to the limited number of training samples. Our proposed methods show competing accuracies in the first three classes and the R-LA method performs the best in Class 4 and Class 5.

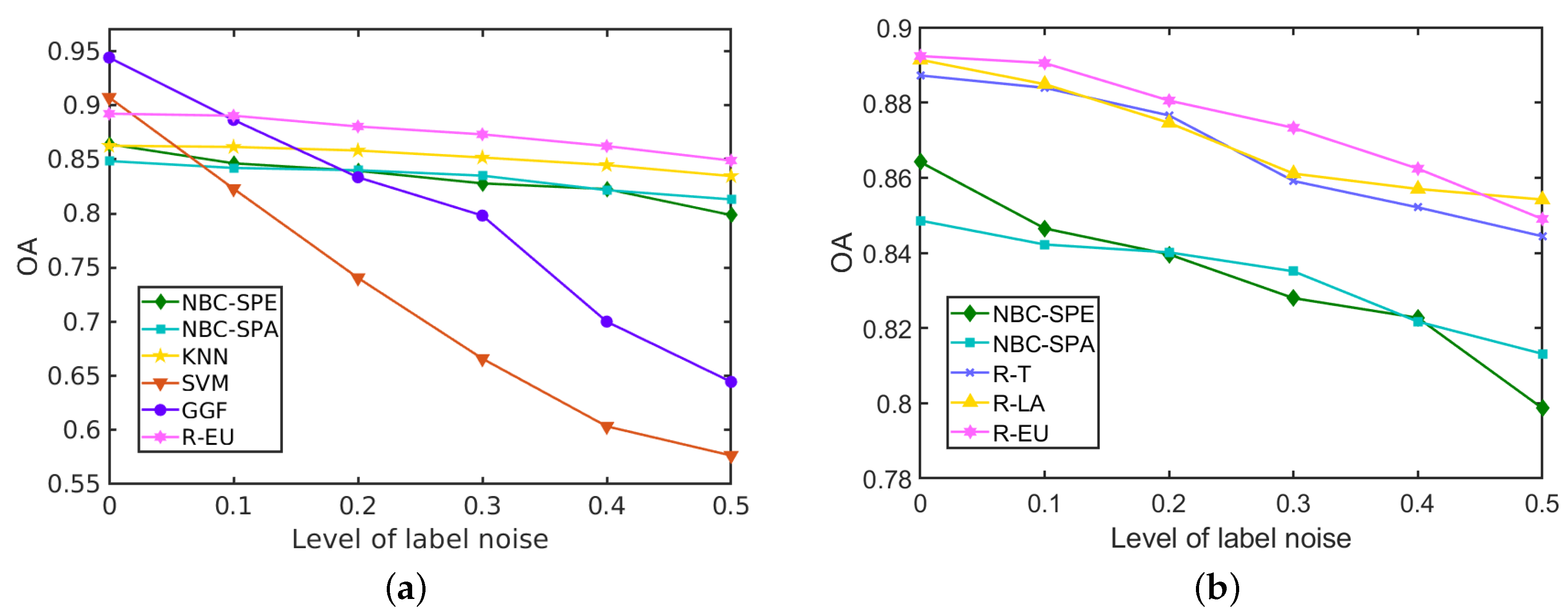

In

Figure 13a we compare the performance of our best strategy for the

HYDICE Urban data set, R-EU, with the reference methods. With increasing levels of label noise, the performance of the GGF and SVM deteriorates significantly, while NBCs, KNN and the proposed methods are more stable in terms of OA. The classification accuracy of all the three analysed strategies is depicted in

Figure 13b for different levels of label noise. All the strategies outperform the individual NBCs (NBC-Spe and NBC-Spa) that they select from. When the level of label noise is less than 0.5, the R-EU method performs better, while the R-T and R-LA methods achieve higher accuracy at

. Compared to NBC with spatial features, accuracy improvement is above 3% at different levels of label noise, which is significant in the task of HSI classification. Compared to some of the most competitive methods SVM and GGF, an important improvement is obtained in the presence of label noise.

6.2. Experiments on the Salinas Scene Data Set

The second experiment is conducted on the Salinas Scene data set. Information about the classes and the number of samples used for training and testing is listed in Table 7. The false color image and ground truth are shown in Figure 1.

The classification results for the Salinas Scene data set with different levels of label noise are depicted in Table 8 and Figure 14. The three strategies within the proposed method perform similarly due to the better performance of NBCs on this data set. The proposed R-DCS model under any of the three presented strategies outperforms all the other methods at every level of label noise, even when the level of label noise is 0. When the level of label noise increases, the accuracy of SVM drops sharply from 98% to 69%, and similarly for GGF the accuracy drops from 99% to 87%. The accuracy of KNN drops only a little in this data set, from 94% to 92%. For the proposed model, with any of the three selection strategies, the decrease in accuracy is also only about 2%. It is interesting to notice that with lower levels of label noise, the R-EU strategy performs better than R-T and R-LA, while the opposite is true at larger levels of label noise.

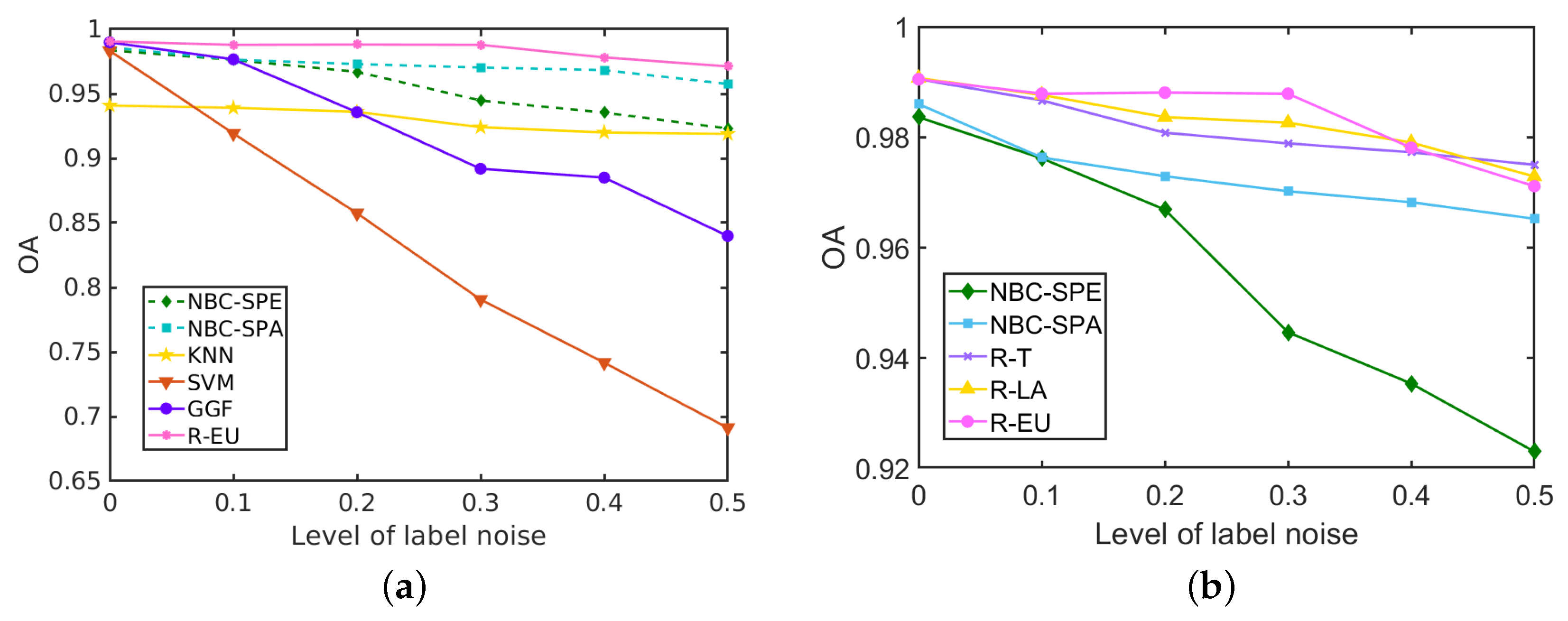

6.3. Experiments on GRSS2013 Data Set

The third experiment is conducted on the GRSS2013 data set. Information about the classes and the number of samples used for training and testing is listed in Table 9. The false color image and ground truth are shown in Figure 2.

The classification results for the GRSS2013 data set with different levels of label noise are depicted in Table 10 and Figure 15. Our proposed methods and the representative GGF method combine the spectral features, spatial features and elevation features in these experiments. The results show that our R-EU method yields the best performance in terms of OA, AA and $\kappa$ at each level of label noise. R-LA outperforms the three NBCs, i.e., NBC-Spe, NBC-Spa and NBC-LiDAR, at low levels of label noise (

), but performs worse than NBC-Spa when

. When label noise rises from 0 to 0.5, the OA of SVM drops heavily from 85% (

) to 54% (

) and similarly GGF has an OA decrease of 39%. KNN proves to be much more robust to the label noise—its OA decreases only by 3% in the same range of

values. Among our methods, R-T does not yield good results on this data set. Compared with R-LA, R-EU performs consistently better, demonstrating the effectiveness of the R-EU strategy.

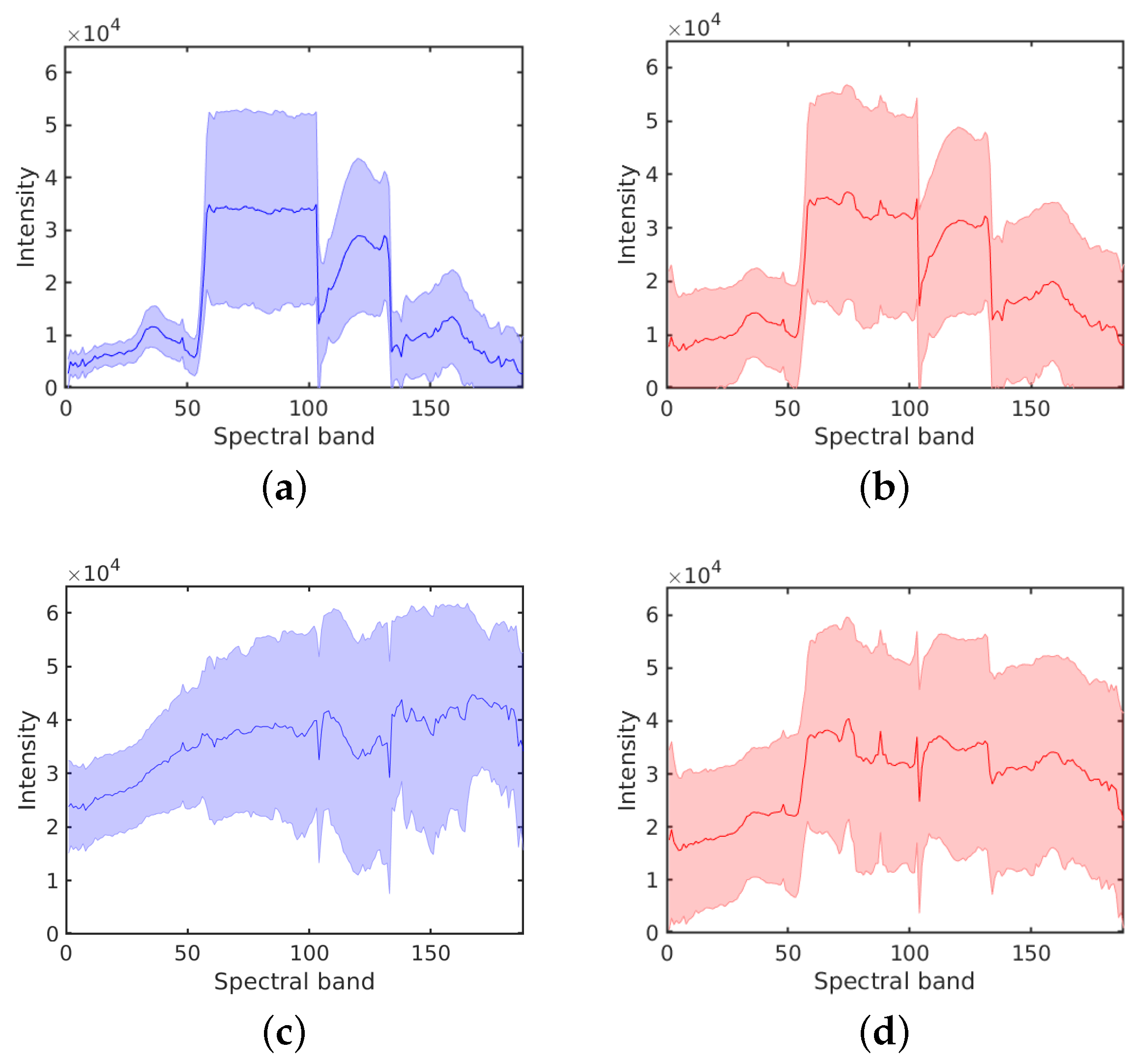

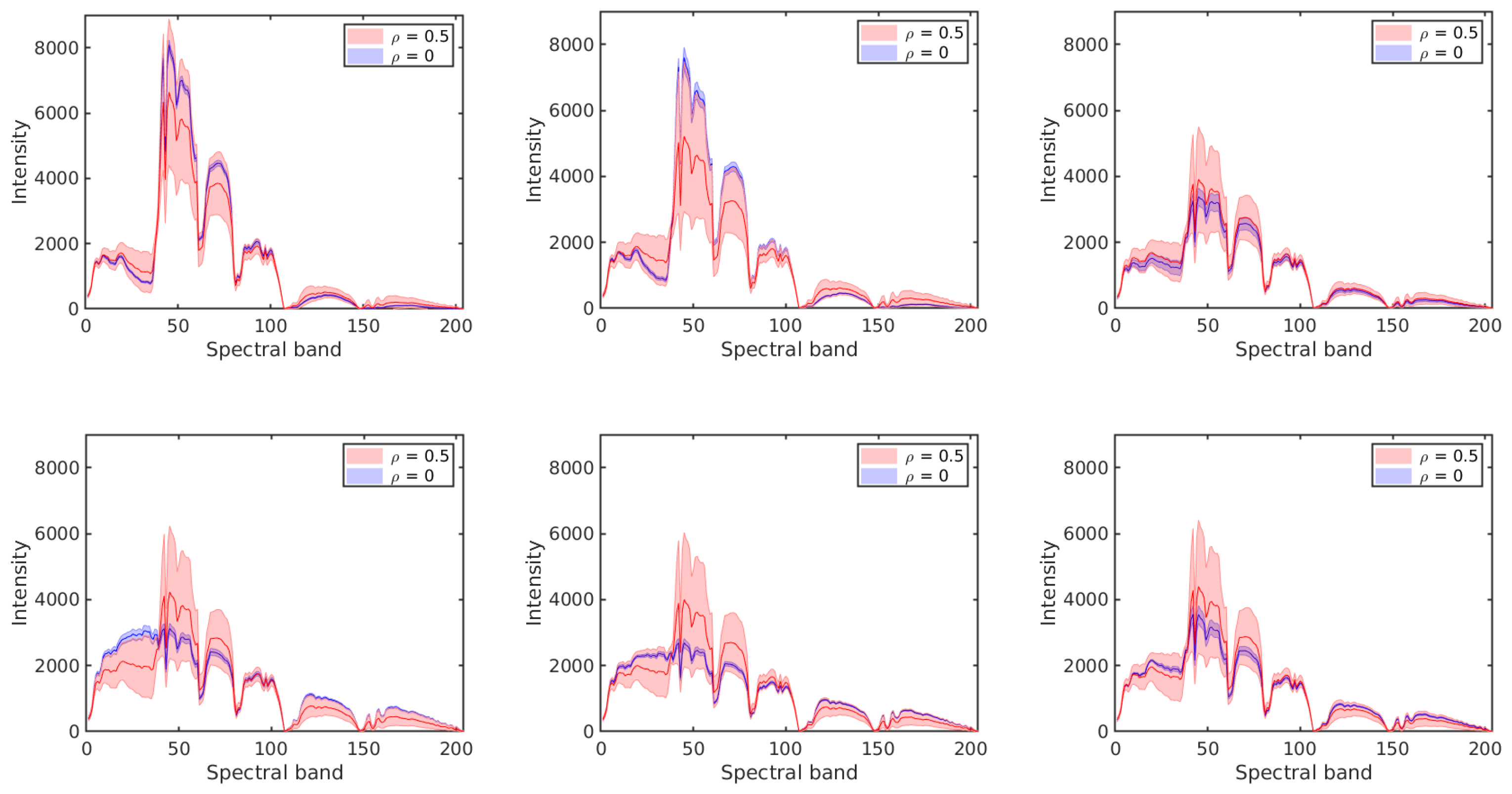

6.4. Performance at Extremely Large Levels of Label Noise

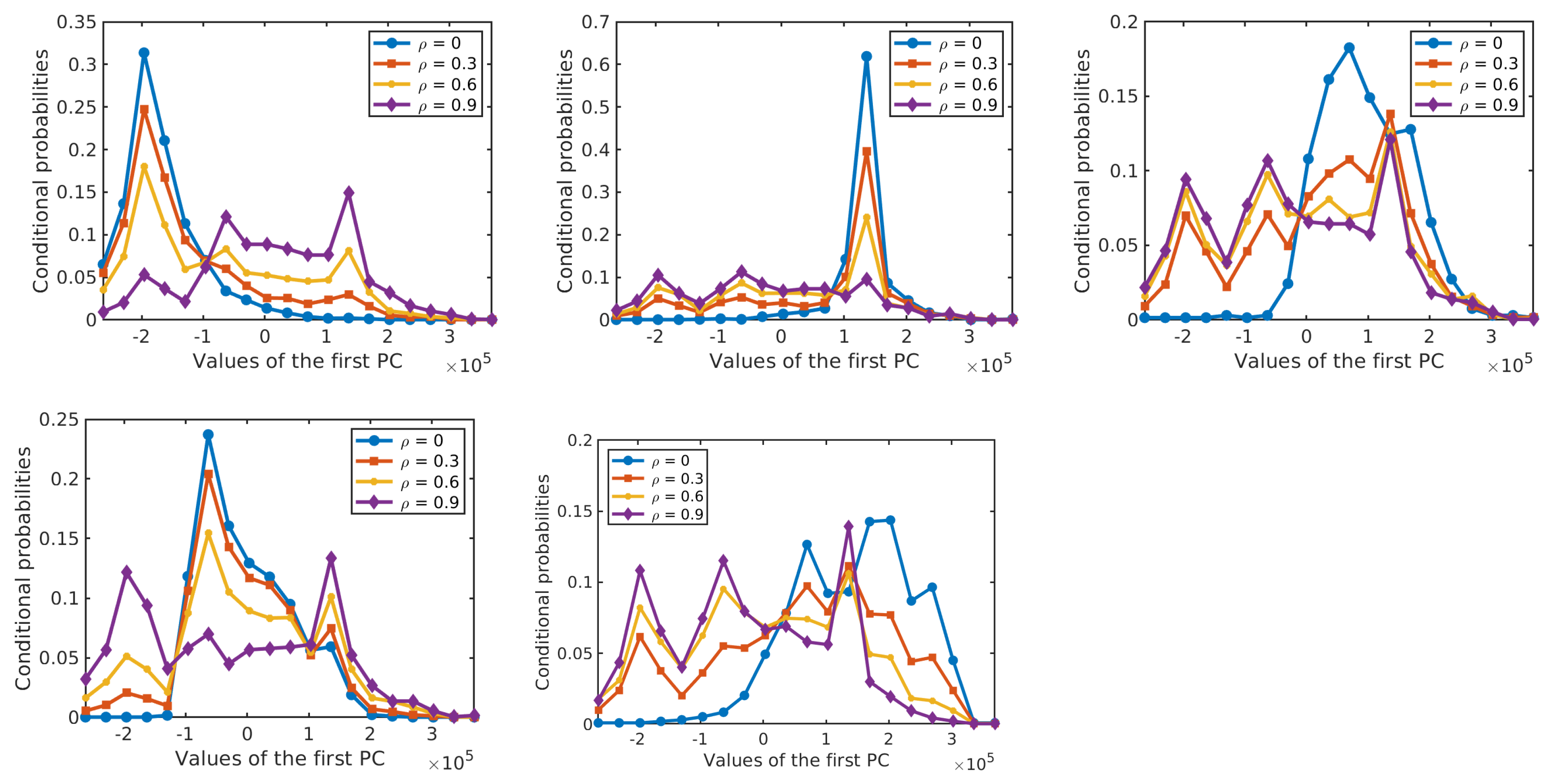

The previous analysis showed that the performance of NBC-based classifiers and our approach that is built upon NBC remains remarkably stable even at large levels of label noise (). To explain this behaviour and to explore at which levels of label noise this performance starts to drop, we also perform experiments with extremely large levels of label noise (). For these experiments, we choose the selection strategy that most often yields the best performance (R-T for the two HSI data sets and R-EU for the GRSS2013 data set), and compare it with other methods.

Figure 16 shows the performance of the resulting classifiers under different levels of label noise on the three analysed data sets. In the three data sets, the overall accuracy of NBC-Spe decreases gradually with increasing label noise in the range

, and it drops abruptly afterwards reaching a value near zero when

. The reason for this sharp decrease can be attributed to the flattening of the conditional densities for larger

as shown in

Figure 17 and

Figure 18 for the first PC in the two HSI data sets. (Note that the statistical distributions in

Figure 17 and

Figure 18 as well as the statistical distributions in

Section 4 were obtained with 50% of the labeled samples per class in order to allow for a more reliable empirical estimation of the corresponding distributions.) NBC-Spa and our method R-T in the two HSI data sets show a similar evolution in both data sets and have a sudden drop in OA around

. KNN and GGF suffer from a significant performance drop at

, while SVM shows approximately linear decrease in both datasets. The trend exhibited by NBCs and our proposed method is much better than a linear decrease in the accuracy, because when the accuracy drops below a certain level the exact values do not matter anymore as all methods are useless in that range. In the

GRSS2013 data set, NBCs with different types of features and our method R-EU show a similar evolution and have a sudden drop in OA around

. KNN shows behaviour that is similar to NBC-Spe, while SVM and GGF almost decrease linearly in this data set.

7. Discussion

The experimental results presented in the previous section, as well as the statistical characterization in Section 4, provide new insights into the effects of label noise on supervised classification of hyperspectral remote sensing images. Empirical conditional probability distributions of HSI features conditioned on class labels, as well as prior distributions of class labels, exhibited graceful flattening with increasing amounts of label noise. Their evolution clarifies why Bayesian classifiers such as the relatively simple NBCs are much more robust to label noise than some other, more complex methods. These results are consistent with previous findings from [70,71], where it was experimentally established that NBCs yield better classification accuracy in the presence of label noise compared to classifiers based on KNN, SVM, and decision trees.
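The flattening effect can be reproduced on synthetic data with the following sketch. It rests on assumptions that are not taken from the experiments: labels are corrupted by symmetric flipping (each label is replaced, with probability `rho`, by a different class chosen uniformly at random), the classes are balanced, and a single discretized feature with 10 bins is used. All names and parameter values are hypothetical.

```python
import numpy as np

N_CLASSES = 3
N_BINS = 10


def flip_labels(labels, rho, n_classes, rng):
    """Replace each label, with probability rho, by a different class chosen
    uniformly at random (symmetric label noise, an assumption of this sketch)."""
    noisy = labels.copy()
    flip = rng.random(labels.shape[0]) < rho
    # An offset in 1..n_classes-1 guarantees that a flipped label changes.
    offsets = rng.integers(1, n_classes, size=int(flip.sum()))
    noisy[flip] = (labels[flip] + offsets) % n_classes
    return noisy


def class_conditional_hist(bins, labels, n_classes, n_bins):
    """Empirical P(bin | class), one row per class."""
    counts = np.zeros((n_classes, n_bins))
    np.add.at(counts, (labels, bins), 1)
    return counts / counts.sum(axis=1, keepdims=True)


def mean_tv_between_classes(rho, n_samples=6000, seed=0):
    """Average total variation distance between the class-conditional
    histograms estimated from labels corrupted at level rho."""
    rng = np.random.default_rng(seed)
    labels = rng.integers(0, N_CLASSES, size=n_samples)
    # Class c occupies bins 3c..3c+3, so neighboring classes overlap slightly.
    bins = 3 * labels + rng.integers(0, 4, size=n_samples)
    noisy = flip_labels(labels, rho, N_CLASSES, rng)
    hist = class_conditional_hist(bins, noisy, N_CLASSES, N_BINS)
    tv = [0.5 * np.abs(hist[a] - hist[b]).sum()
          for a in range(N_CLASSES) for b in range(a + 1, N_CLASSES)]
    return float(np.mean(tv))


tv_clean = mean_tv_between_classes(rho=0.0)
tv_noisy = mean_tv_between_classes(rho=0.4)
```

Under these assumptions, each noisy class-conditional distribution is a mixture dominated by the true class as long as `rho` stays below (C - 1)/C for C classes, so the histograms move closer together while each typically keeps its mass concentrated in the bins of its own class. This is in line with the slow degradation of NBCs observed at moderate noise levels, but the sketch is only a qualitative illustration.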

Our experimental analysis also clearly shows that incorporating spatial features into the classification process not only improves the classification accuracy but increases robustness to label noise as well. It is well established that using spatial information typically improves the classification accuracy, and some recent works that addressed HSI classification in the presence of noisy labels [66,81] also incorporate spatial features.

We addressed the effect of label noise from the point of view of the robustness of probabilistic graphical models to model perturbations. We built a robust dynamic classifier selection method upon this reasoning. The proposed approach enjoys remarkable robustness to label noise, inherited from the naive Bayesian classifiers that lie at its core. We instantiated our general approach with three particular selection strategies that have different levels of complexity. The proposed approach improves upon the NBCs that it combines and lends itself to easily incorporating multiple data sources and multiple types of features. Both NBC and the proposed robust dynamic classifier exhibit a characteristic trend in the presence of label noise: the classification accuracy decreases very slowly until the level of label noise becomes excessively high (60% or more erroneous labels) and then it drops abruptly. The evolution of the probability distributions of HSI features and estimated priors for class labels explains this behaviour, as was pointed out in the previous section. Interestingly, classifiers based on SVM and on an advanced graph fusion method show a faster decrease of the classification accuracy with increasing levels of label noise. While these more sophisticated classifiers outperform the other analysed ones in the case of ideally correct labels, they appear to be more vulnerable to label noise and become inferior to NBCs, KNN and the proposed approach already when a small percentage of labels are erroneous.

Based on these results and findings, we believe that the following research directions are interesting to explore:

- (1)

Analyzing the performance of more advanced Bayesian classifiers, e.g., based on Markov Random Fields, in the presence of label noise.

- (2)

Exploring which levels of label noise are acceptable for a given tolerance in the classification accuracy and how robust different learning models are in this respect. This can significantly help in practice for optimizing the resources and ensuring the prescribed tolerance levels.

- (3)

Deep learning methods are becoming the dominant technology for supervised classification. It is already well known that these models tend to be extremely susceptible to various degradations in the data, such as noise, and to different adversarial attacks. It would be of interest to study thoroughly their behaviour in the presence of label noise. Motivated by the excellent robustness of Bayesian models to erroneous labels, a natural idea to explore is how Bayesian approaches can be incorporated to improve the robustness of deep learning methods to label noise.