Parallel Three-Branch Correlation Filters for Complex Marine Environmental Object Tracking Based on a Confidence Mechanism

Abstract

1. Introduction

2. Related Work

3. Proposed Approach

3.1. Parallel Three-Branch Correlation Filters

3.2. Confidence Mechanism

3.3. Algorithm of the Proposed Tracker

Algorithm 1: Parallel Three-Branch Correlation Filters Tracking Algorithm Based on a Confidence Mechanism

Input:

1. Image .
2. Detected target position and scale .

Output:

1. Detected target position and scale .

Loop:

1. Initialize , , and , in the first frame by Equations (14), (15), (16) and (12), (13).
2. for do
3. Position detection:
4. Extract position features from at and by a search region.
5. Compute three parallel correlation scores , , .
6. Merge the three correlation scores to by Equation (17).
7. Set to the target position by Newton's iterative method.
8. Scale detection:
9. Extract scale feature from at and by a search region.
10. Compute correlation scores by Equation (19).
11. Set to the target scale that maximizes .
12. Model update:
13. Extract new sample features and from and .
14. Generate new training set by the method.
15. Compute response scores by Equation (21).
16. If > threshold, return to step 2; else continue.
17. Update the model , , by the learning rate , , .
18. Update the scale model , by the learning rate .
19. Return , .
20. end for
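The control flow of Algorithm 1 can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the three feature channels in `branch_features`, the fixed-weight fusion in `merge` (standing in for Equation (17)), the MOSSE-style closed-form filter, the APCE confidence score, and the values of `lr` and `conf_threshold` are all hypothetical choices. The quantity compared against the threshold in step 16 is not recoverable from the listing, so the sketch simply updates the models only when the merged response looks reliable. Scale detection (steps 8 to 11) is omitted, and circular shifts stand in for cropping a search region.

```python
import numpy as np

def gaussian_label(shape, sigma=2.0):
    """Desired correlation output: a Gaussian peak at the patch centre."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    return np.exp(-((ys - h // 2) ** 2 + (xs - w // 2) ** 2) / (2.0 * sigma ** 2))

def branch_features(patch):
    """Three hypothetical feature channels, one per branch (assumption)."""
    p = patch - patch.mean()
    gx = np.roll(p, -1, axis=1) - np.roll(p, 1, axis=1)  # circular horizontal difference
    gy = np.roll(p, -1, axis=0) - np.roll(p, 1, axis=0)  # circular vertical difference
    return [p, gx, gy]

def train(feature, label, lam=1e-2):
    """MOSSE-style filter stored as (numerator, denominator) in the Fourier domain."""
    F, G = np.fft.fft2(feature), np.fft.fft2(label)
    return G * np.conj(F), (F * np.conj(F)).real + lam

def response(model, feature):
    """Correlation response of one branch on a new feature map."""
    num, den = model
    return np.real(np.fft.ifft2(num / den * np.fft.fft2(feature)))

def merge(responses, weights=(0.5, 0.25, 0.25)):
    """Assumed fusion rule: fixed weighted sum of the three branch responses."""
    return sum(w * r for w, r in zip(weights, responses))

def apce(resp):
    """Average peak-to-correlation energy, used here as a stand-in confidence score."""
    lo = resp.min()
    return float((resp.max() - lo) ** 2 / np.mean((resp - lo) ** 2))

def track_step(models, patch, label, lr=0.02, conf_threshold=20.0):
    """One frame: detect the shift, score confidence, update only if confident."""
    feats = branch_features(patch)
    merged = merge([response(m, f) for m, f in zip(models, feats)])
    peak = np.unravel_index(np.argmax(merged), merged.shape)
    shift = (int(peak[0]) - patch.shape[0] // 2, int(peak[1]) - patch.shape[1] // 2)
    conf = apce(merged)
    updated = bool(conf > conf_threshold)
    if updated:
        # Simulate re-cropping the sample at the detected position.
        centred = np.roll(patch, (-shift[0], -shift[1]), axis=(0, 1))
        fresh = [train(f, label) for f in branch_features(centred)]
        # Linear interpolation of numerator and denominator with learning rate lr.
        models = [((1 - lr) * n0 + lr * n1, (1 - lr) * d0 + lr * d1)
                  for (n0, d0), (n1, d1) in zip(models, fresh)]
    return models, shift, conf, updated
```

As a usage example, one can train the three branches on a random 32×32 patch with `models = [train(f, label) for f in branch_features(target)]` and then pass `np.roll(target, (3, -2), axis=(0, 1))` to `track_step`. The returned shift should match the applied roll, whereas an unrelated random patch should score low and leave `models` untouched.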

4. Results

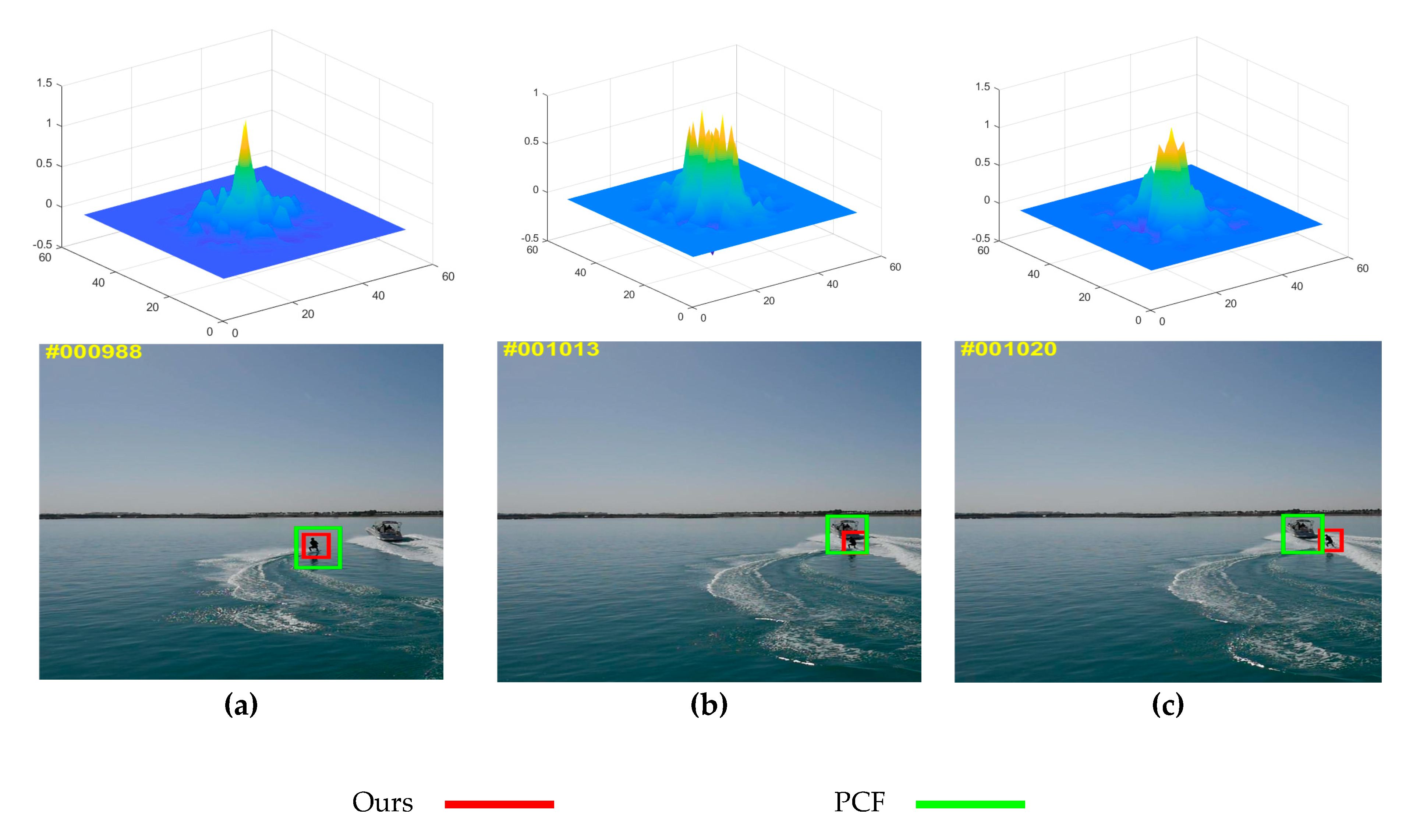

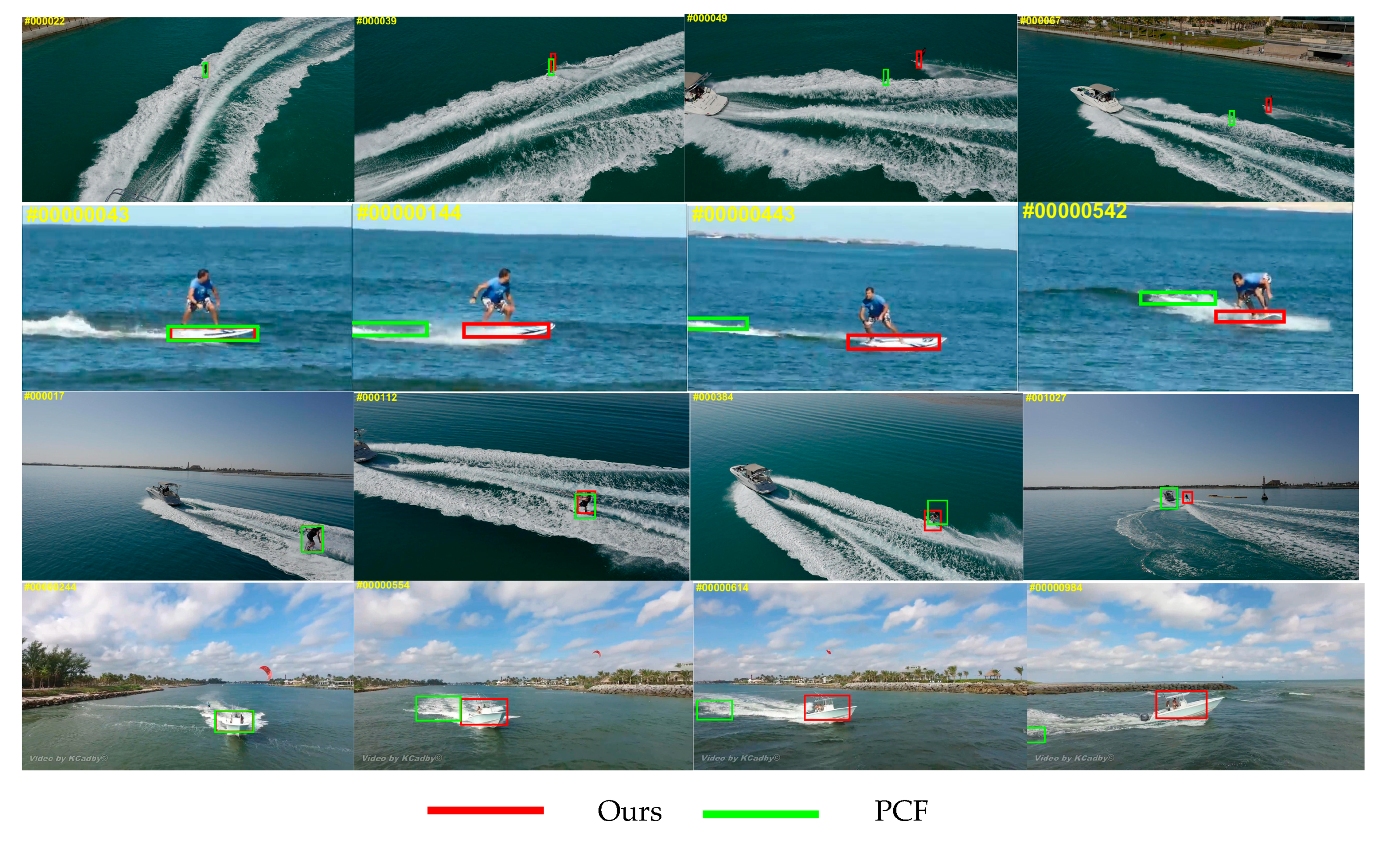

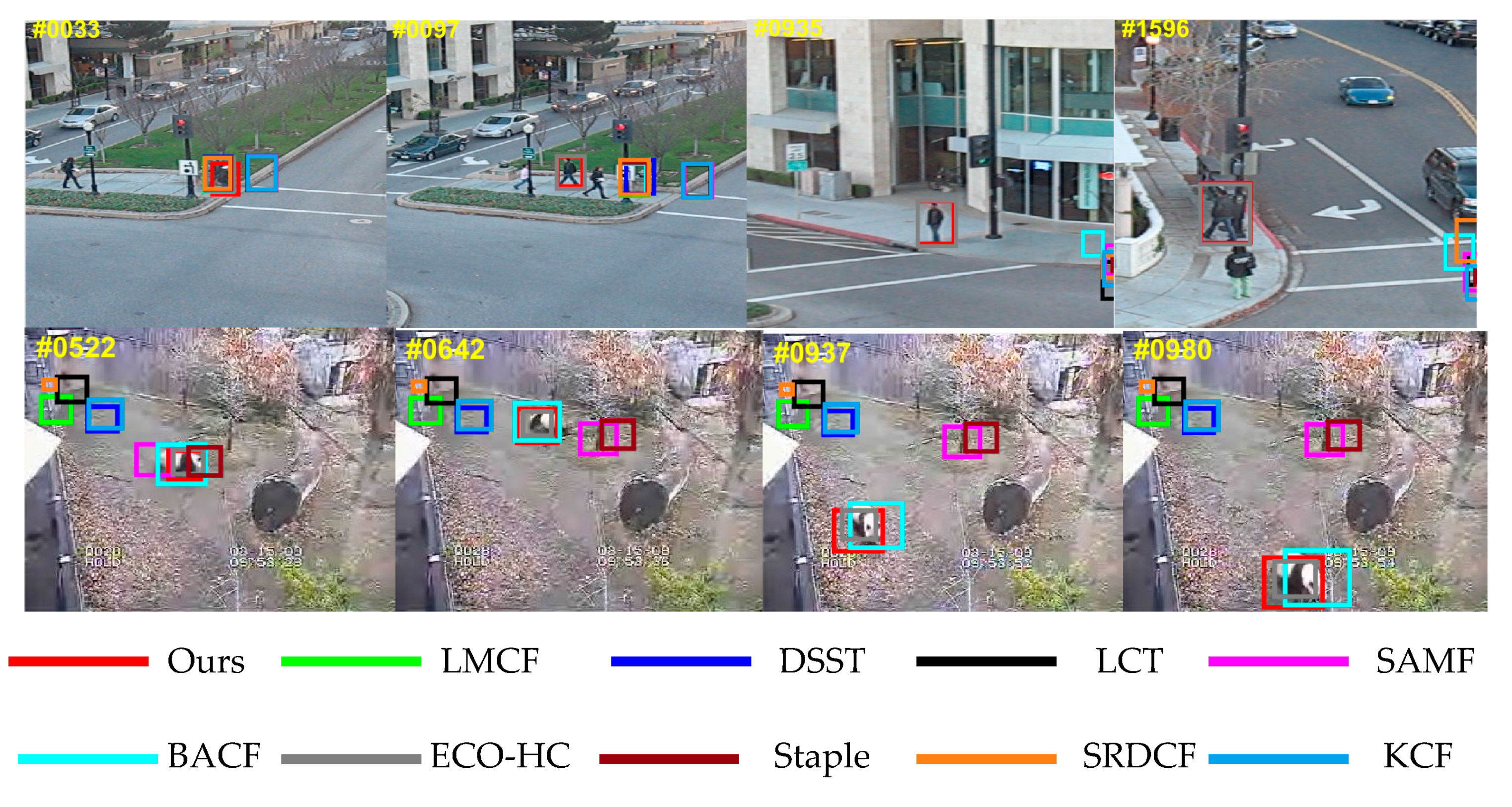

4.1. Experimental Results and Analysis in the Complex Marine Environment

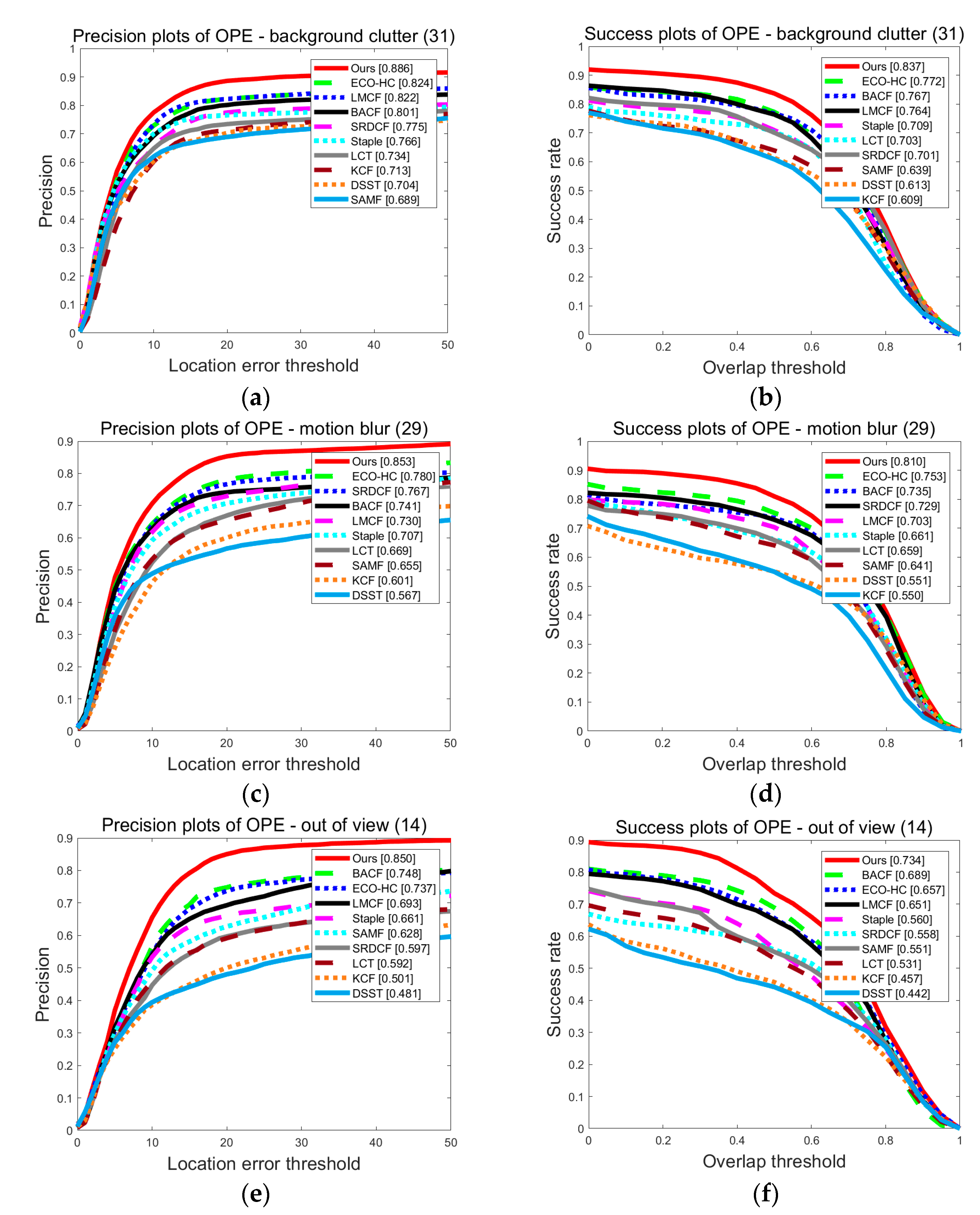

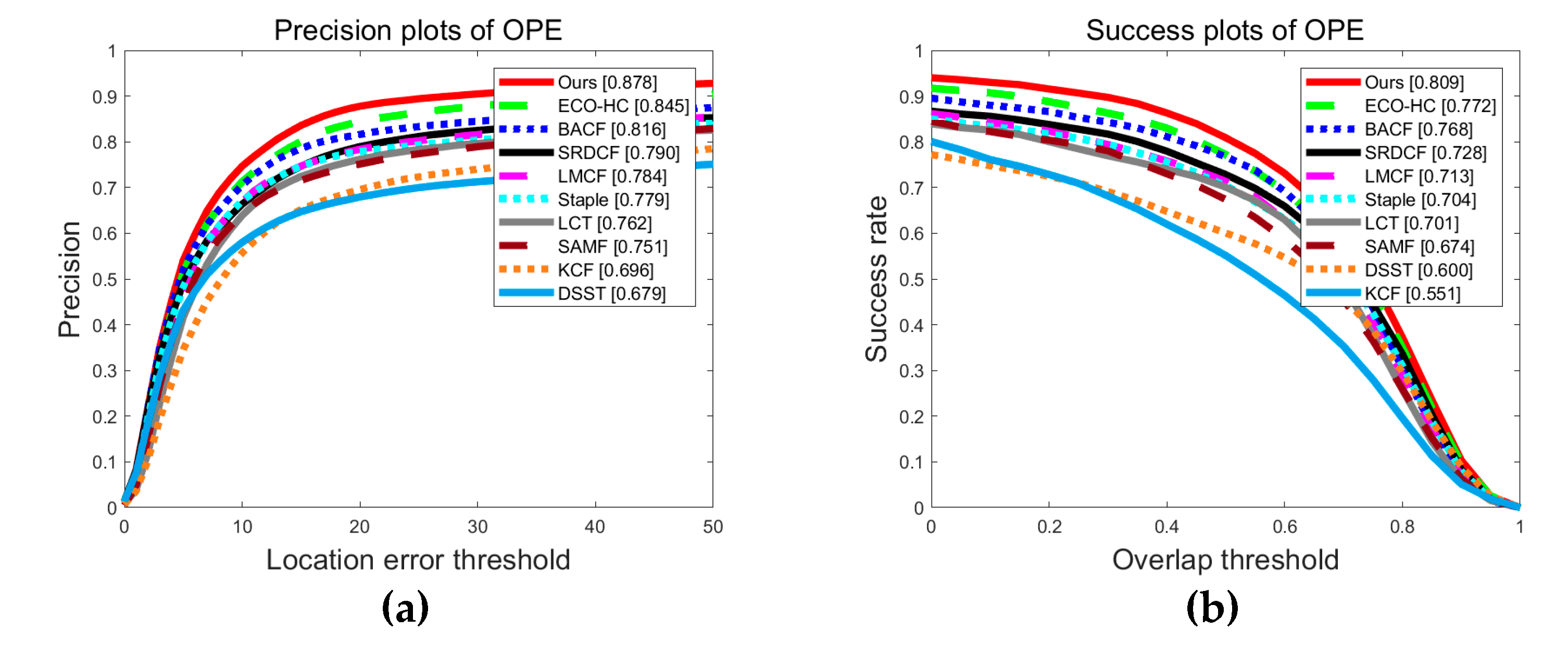

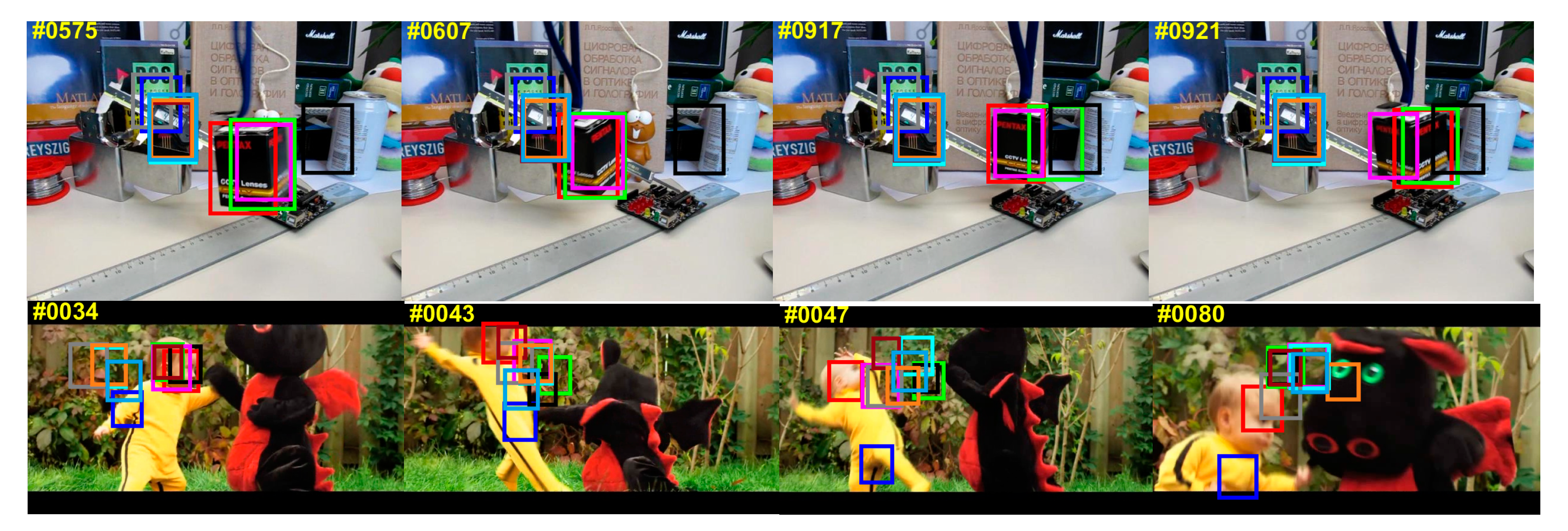

4.2. Comparison of the Proposed Tracker and the Baseline Tracker on OTB-2013

4.3. Results and Analysis on the Dataset OTB-2015

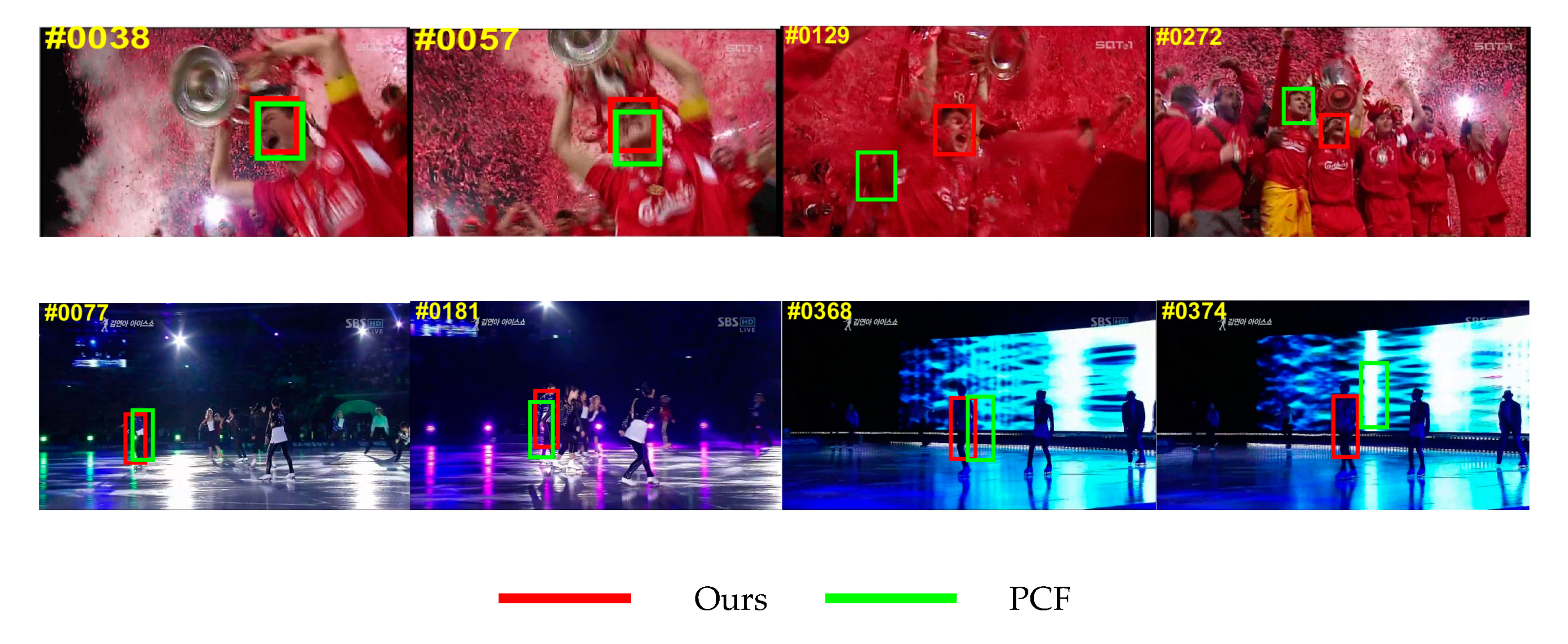

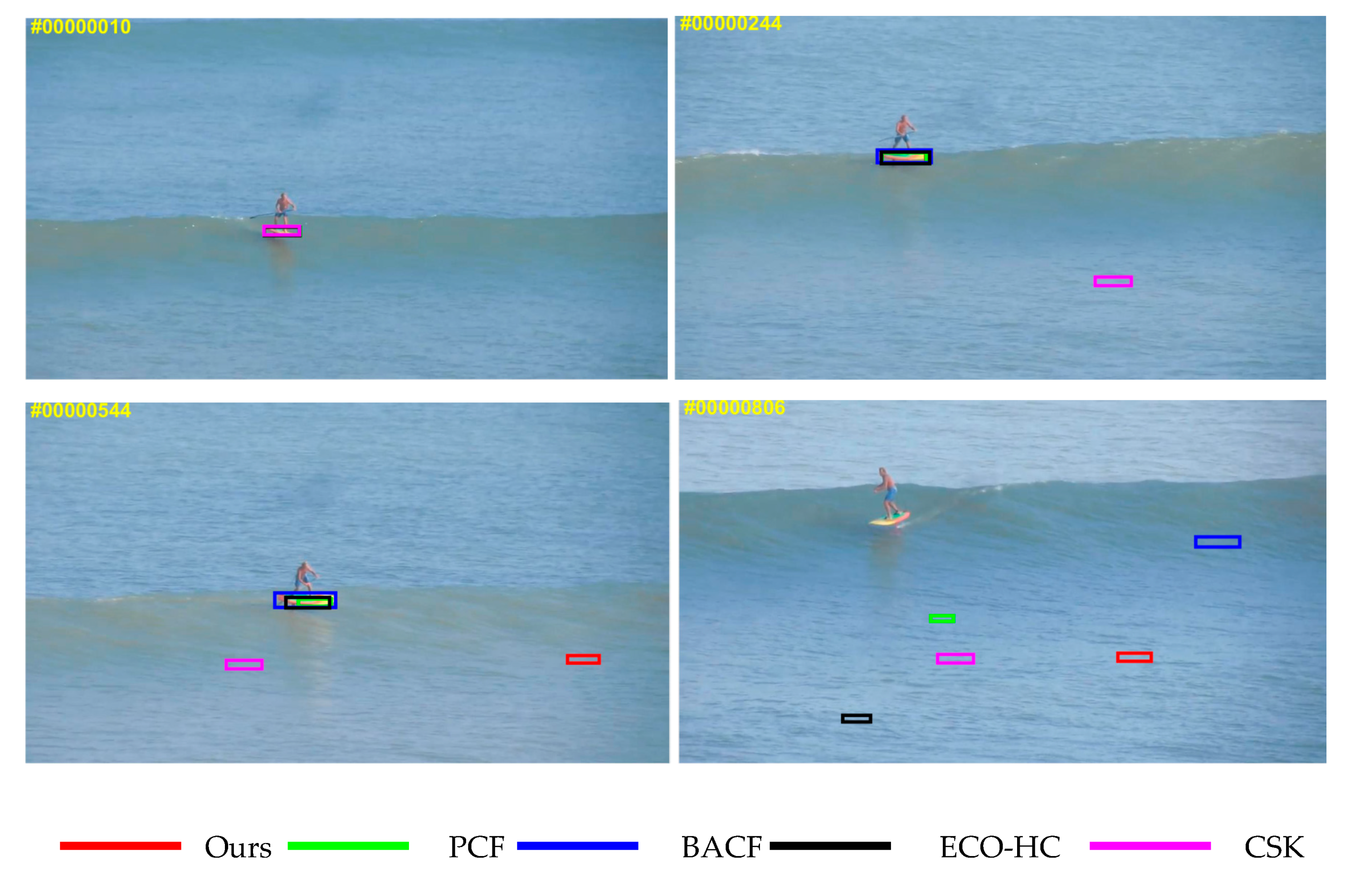

4.4. Failure Case

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Trackers | SV | IV | OPR | OCC | BC | DEF | MB | FM | IPR | OV | LR | AP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Baseline | 83.5 | 82.3 | 87.0 | 90.1 | 84.5 | 91.7 | 76.8 | 77.7 | 81.2 | 81.0 | 53.3 | 88.0 |
| Ours | 85.9 | 84.9 | 88.6 | 92.2 | 87.8 | 91.6 | 79.6 | 81.4 | 83.3 | 80.1 | 53.9 | 89.3 |
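To read the Baseline/Ours comparison above at a glance, the per-attribute differences can be computed directly from the two rows. The snippet below is only a convenience sketch; the names `HEADERS`, `BASELINE`, `OURS`, and `gains` are hypothetical, and the numbers are copied from the table with AP as the last column.

```python
HEADERS = ["SV", "IV", "OPR", "OCC", "BC", "DEF", "MB", "FM", "IPR", "OV", "LR", "AP"]
BASELINE = [83.5, 82.3, 87.0, 90.1, 84.5, 91.7, 76.8, 77.7, 81.2, 81.0, 53.3, 88.0]
OURS = [85.9, 84.9, 88.6, 92.2, 87.8, 91.6, 79.6, 81.4, 83.3, 80.1, 53.9, 89.3]

def gains(baseline, ours):
    """Difference (ours minus baseline) per column, rounded to one decimal."""
    return {h: round(o - b, 1) for h, b, o in zip(HEADERS, baseline, ours)}

if __name__ == "__main__":
    delta = gains(BASELINE, OURS)
    for name, value in delta.items():
        print(f"{name:>3}: {value:+.1f}")
    # Attributes where the proposed tracker scores below the baseline.
    print("regressions:", [h for h, v in delta.items() if v < 0])
```

Sorting the resulting dictionary by value makes it easy to see which attributes benefit most and which, if any, fall slightly below the baseline.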

| Trackers | SV | IV | OPR | OCC | BC | DEF | MB | FM | IPR | OV | LR | AUC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Baseline | 77.1 | 78.5 | 79.6 | 84.7 | 80.5 | 91.0 | 76.8 | 75.3 | 72.8 | 82.8 | 53.9 | 82.5 |
| Ours | 77.7 | 79.4 | 79.9 | 85.3 | 81.6 | 90.7 | 76.8 | 75.6 | 73.2 | 81.9 | 55.3 | 82.9 |

| Trackers | SV | IV | OPR | OCC | BC | DEF | MB | FM | IPR | OV | LR | AP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Ours | 84.3 | 84.0 | 86.5 | 84.8 | 88.6 | 84.3 | 85.3 | 82.5 | 82.3 | 85.0 | 79.0 | 87.8 |
| ECO-HC | 80.5 | 79.2 | 81.1 | 80.6 | 82.4 | 81.8 | 78.0 | 79.2 | 78.3 | 73.7 | 79.8 | 84.5 |
| BACF | 76.7 | 80.3 | 77.9 | 73.0 | 80.1 | 76.4 | 74.1 | 78.7 | 79.2 | 74.8 | 74.1 | 81.6 |
| SRDCF | 74.1 | 78.6 | 74.2 | 73.0 | 77.5 | 72.8 | 76.7 | 76.9 | 74.5 | 59.7 | 65.5 | 79.0 |
| LMCF | 72.3 | 79.5 | 76.0 | 73.6 | 82.2 | 72.9 | 73.0 | 73.0 | 75.5 | 69.3 | 67.9 | 78.4 |
| Staple | 71.5 | 78.7 | 73.0 | 72.1 | 76.6 | 74.3 | 70.7 | 69.7 | 77.0 | 66.1 | 63.1 | 77.9 |
| LCT | 67.8 | 74.3 | 74.6 | 67.9 | 73.4 | 68.5 | 66.9 | 68.1 | 78.2 | 59.2 | 53.7 | 76.2 |
| SAMF | 70.1 | 70.8 | 73.9 | 72.2 | 68.9 | 68.0 | 65.5 | 65.4 | 72.1 | 62.8 | 68.5 | 75.1 |
| KCF | 63.5 | 72.4 | 67.7 | 63.2 | 71.3 | 61.9 | 60.1 | 62.1 | 70.1 | 50.1 | 56.0 | 69.6 |
| DSST | 63.3 | 71.5 | 64.4 | 58.9 | 70.4 | 53.3 | 56.7 | 55.2 | 69.1 | 48.1 | 56.7 | 67.9 |

| Trackers | SV | IV | OPR | OCC | BC | DEF | MB | FM | IPR | OV | LR | AUC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Ours | 75.4 | 81.0 | 77.8 | 78.3 | 83.7 | 77.6 | 81.0 | 76.0 | 72.2 | 73.4 | 65.4 | 80.9 |
| ECO-HC | 71.2 | 75.4 | 72.3 | 74.4 | 77.2 | 73.4 | 75.3 | 74.0 | 67.7 | 65.7 | 69.4 | 77.2 |
| BACF | 69.8 | 78.0 | 70.9 | 69.2 | 76.7 | 69.1 | 73.5 | 75.6 | 71.1 | 68.9 | 66.3 | 76.8 |
| SRDCF | 66.2 | 74.0 | 66.4 | 67.8 | 70.1 | 65.9 | 72.9 | 71.7 | 66.2 | 55.8 | 62.6 | 72.8 |
| LMCF | 62.2 | 74.5 | 67.7 | 68.7 | 76.4 | 65.9 | 70.3 | 67.4 | 65.6 | 65.1 | 54.6 | 71.3 |
| Staple | 61.0 | 72.1 | 64.6 | 67.2 | 70.9 | 67.2 | 66.1 | 63.8 | 67.3 | 56.0 | 49.1 | 70.4 |
| LCT | 58.3 | 71.5 | 67.6 | 63.1 | 70.3 | 61.6 | 65.9 | 65.5 | 69.4 | 53.1 | 43.6 | 70.1 |
| SAMF | 58.4 | 64.0 | 66.0 | 66.4 | 63.9 | 60.6 | 64.1 | 59.5 | 64.1 | 55.1 | 51.5 | 67.4 |
| DSST | 52.5 | 64.9 | 55.1 | 53.1 | 61.3 | 47.9 | 55.1 | 51.7 | 58.9 | 45.7 | 44.2 | 60.0 |
| KCF | 41.5 | 55.0 | 52.7 | 51.2 | 60.9 | 50.3 | 55.0 | 52.6 | 55.3 | 44.2 | 29.5 | 55.1 |
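The AP and AUC columns in the tables above are not defined in the surviving text. Assuming they follow the usual OTB one-pass evaluation (precision as the fraction of frames whose centre location error is within 20 pixels, success as the area under the curve of overlap success rate against thresholds from 0 to 1), the two scores could be computed roughly as follows. The function names, the `(x, y, w, h)` box layout, and the 21 evenly spaced thresholds are assumptions made for this sketch.

```python
import numpy as np

def center_error(pred, gt):
    """Euclidean distance between box centres; boxes are rows of (x, y, w, h)."""
    pc = pred[:, :2] + pred[:, 2:] / 2.0
    gc = gt[:, :2] + gt[:, 2:] / 2.0
    return np.linalg.norm(pc - gc, axis=1)

def overlap(pred, gt):
    """Intersection over union per frame for (x, y, w, h) boxes."""
    x1 = np.maximum(pred[:, 0], gt[:, 0])
    y1 = np.maximum(pred[:, 1], gt[:, 1])
    x2 = np.minimum(pred[:, 0] + pred[:, 2], gt[:, 0] + gt[:, 2])
    y2 = np.minimum(pred[:, 1] + pred[:, 3], gt[:, 1] + gt[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = pred[:, 2] * pred[:, 3] + gt[:, 2] * gt[:, 3] - inter
    return inter / union

def precision_at(pred, gt, pixels=20.0):
    """Share of frames whose centre error does not exceed `pixels`."""
    return float(np.mean(center_error(pred, gt) <= pixels))

def success_auc(pred, gt, num_thresholds=21):
    """Mean success rate over evenly spaced overlap thresholds in [0, 1]."""
    iou = overlap(pred, gt)
    thresholds = np.linspace(0.0, 1.0, num_thresholds)
    return float(np.mean([np.mean(iou > t) for t in thresholds]))

if __name__ == "__main__":
    # Four toy frames: exact hit, small shift, complete miss, partial overlap.
    gt = np.array([[10, 10, 20, 20]] * 4, dtype=float)
    pred = np.array([[10, 10, 20, 20], [14, 10, 20, 20],
                     [40, 10, 20, 20], [10, 25, 20, 20]], dtype=float)
    print(precision_at(pred, gt), success_auc(pred, gt))
```

Per-attribute columns such as SV or OCC would then come from averaging these scores over the subset of sequences annotated with that attribute, and multiplying by 100 gives values on the same scale as the tables.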

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, Y.; Li, S.; Li, D.; Zhou, W.; Yang, Y.; Lin, X.; Jiang, S. Parallel Three-Branch Correlation Filters for Complex Marine Environmental Object Tracking Based on a Confidence Mechanism. Sensors 2020, 20, 5210. https://doi.org/10.3390/s20185210