Noise-Aware and Light-Weight VLSI Design of Bilateral Filter for Robust and Fast Image Denoising in Mobile Systems

Abstract

1. Introduction

2. Related Works

2.1. Optimal Parameter Selection of Bilateral Filter

2.2. VLSI Design of Bilateral Filter

3. Proposed Approach

3.1. Noise-Aware Bilateral Filter (NABF)

3.2. Binary Noise-Aware Bilateral Filter (B-NABF)

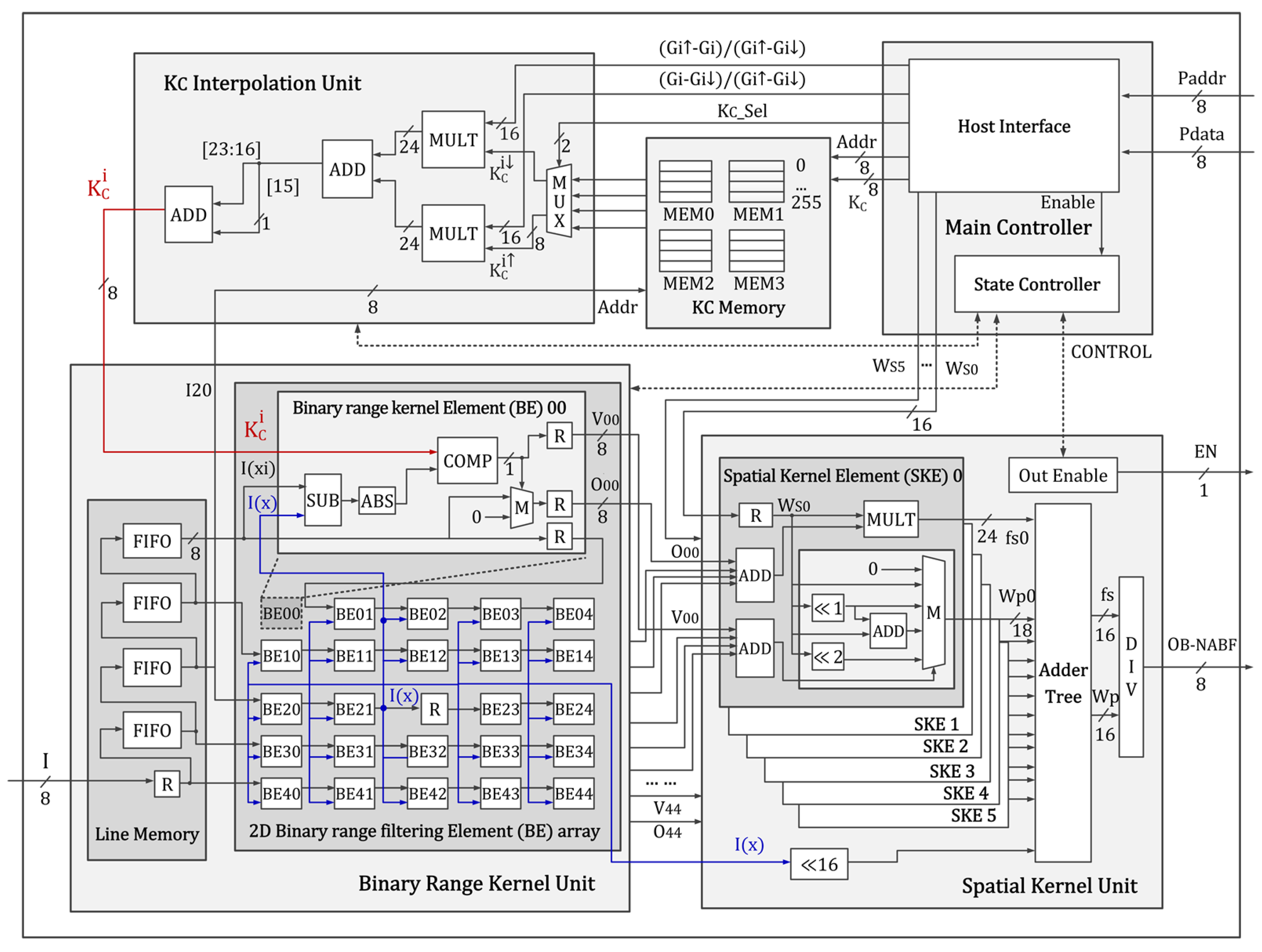

4. VLSI Design

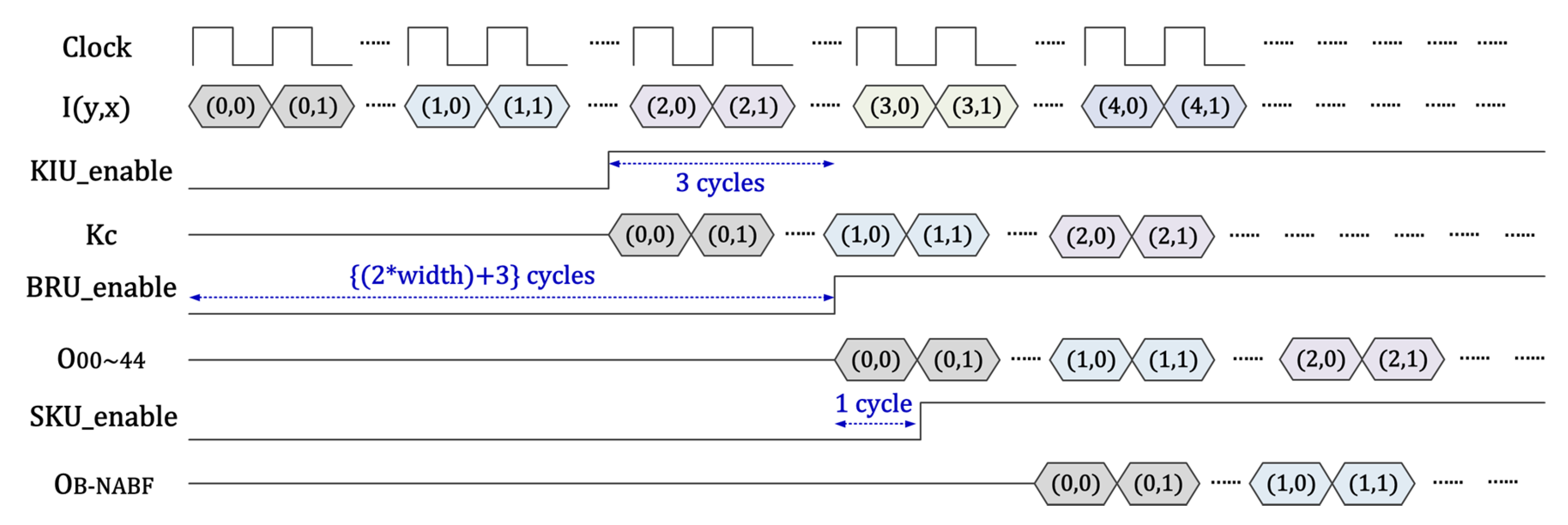

4.1. Main Controller

4.2. Binary Range Kernel Unit

4.3. Memory & Interpolation Unit

4.4. Spatial Kernel Unit

5. Experimental Results

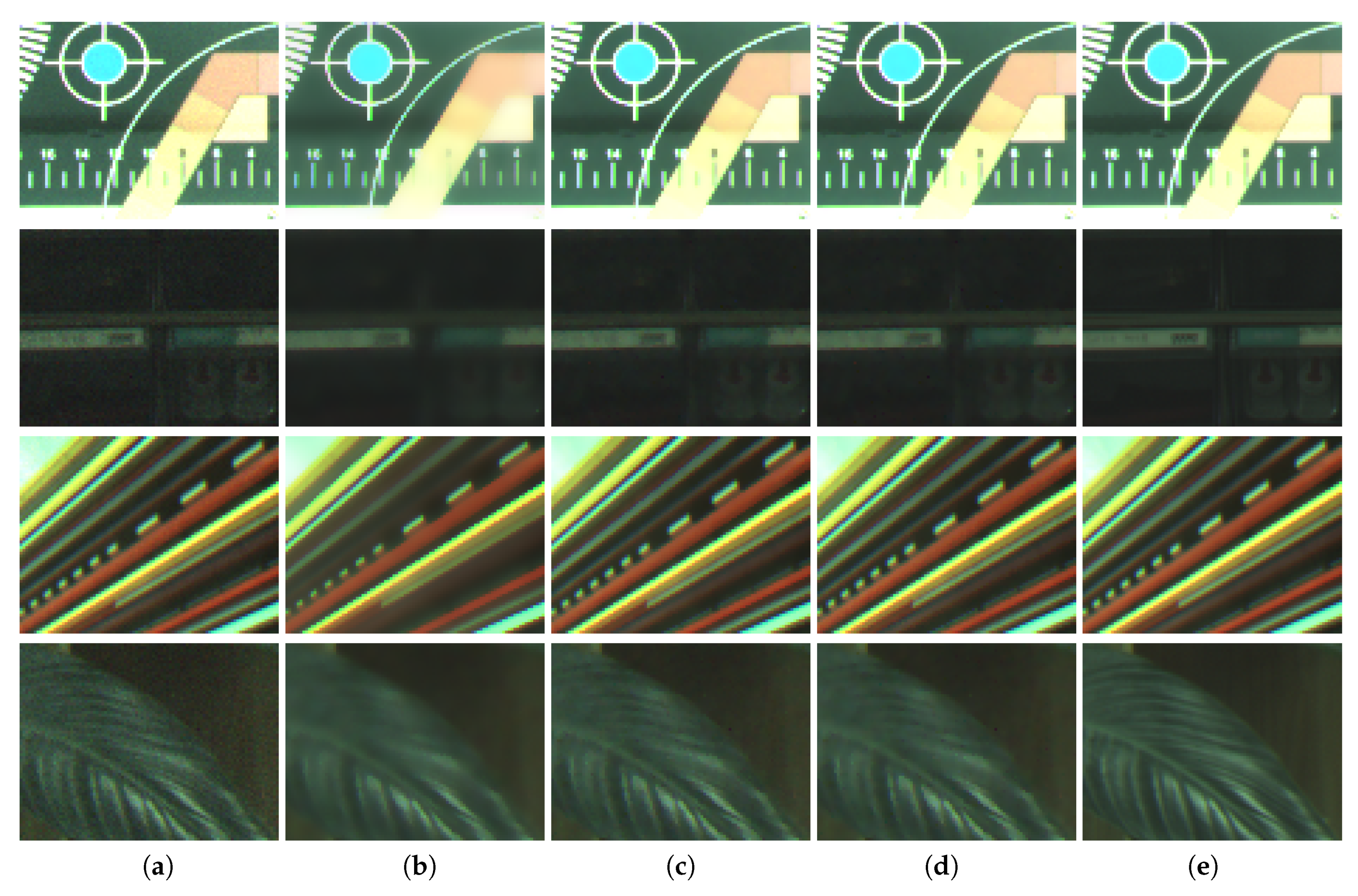

5.1. Image Quality by Denoising

5.2. Implementation Result and Comparison

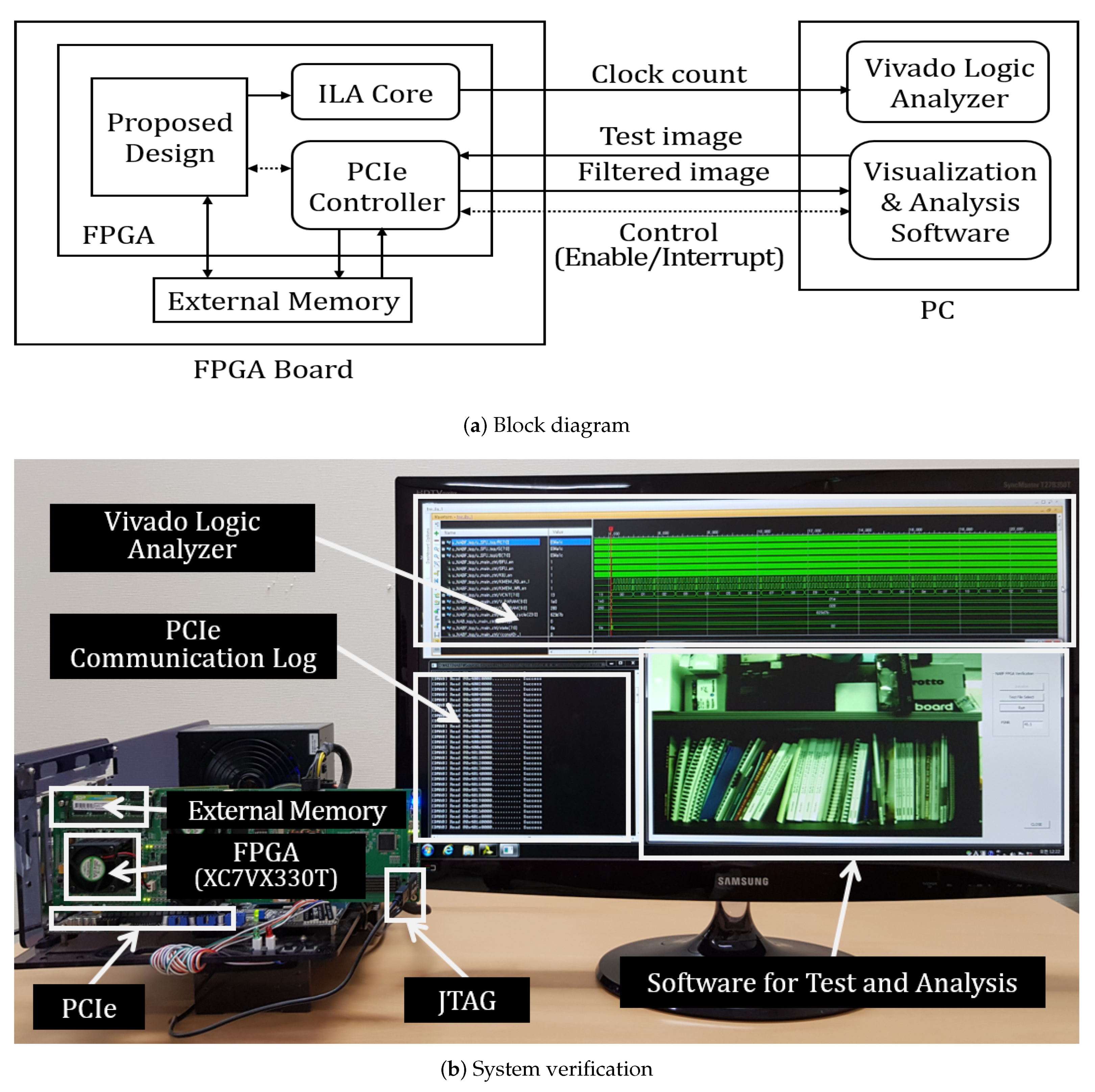

5.2.1. System Configuration for Measurement

5.2.2. Comparison with Recent VLSI Designs of Bilateral Filter

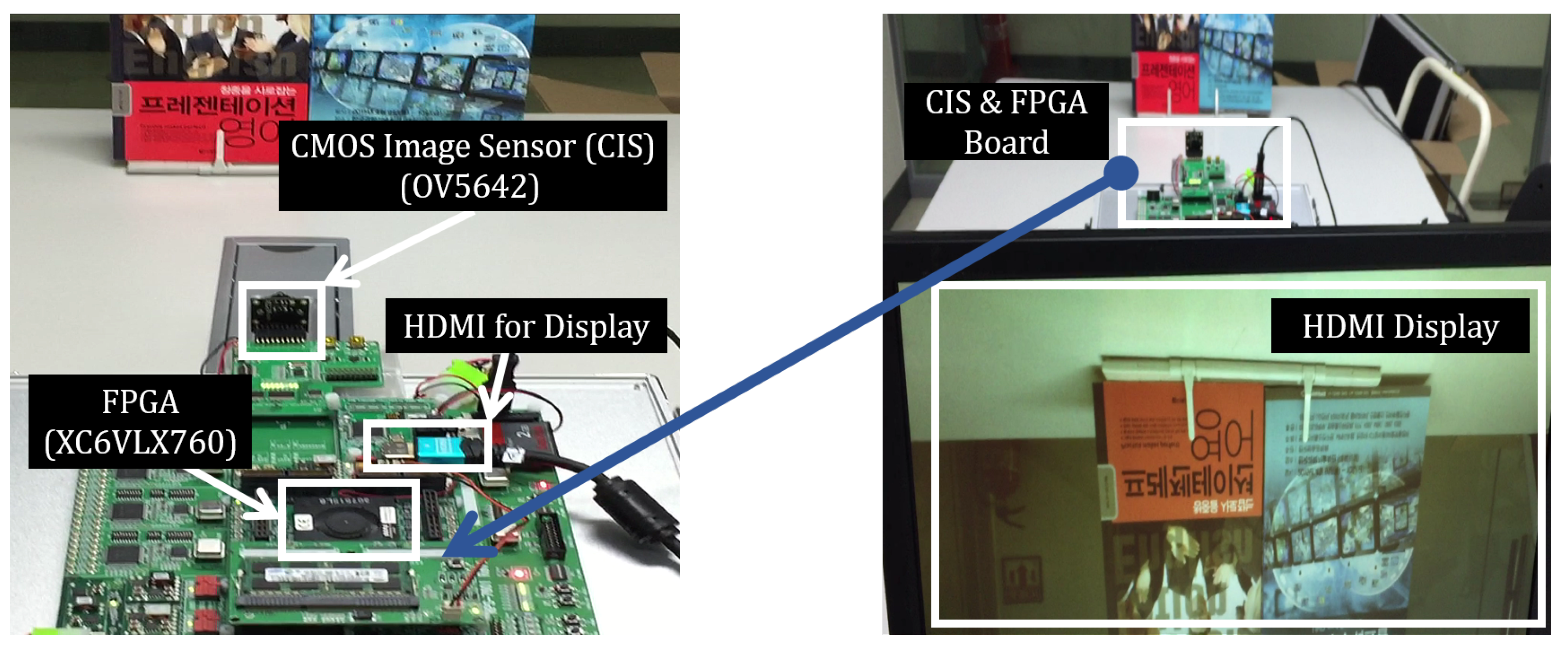

5.2.3. Integration of Implemented VLSI Design and Image Sensor

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Goyal, B.; Dogra, A.; Agrawal, S.; Sohi, B.; Sharma, A. Image denoising review: From classical to state-of-the-art approaches. Inf. Fusion 2020, 55, 220–244.

- Liu, D.; Wen, B.; Jiao, J.; Liu, X.; Wang, Z.; Huang, T.S. Connecting Image Denoising and High-Level Vision Tasks via Deep Learning. IEEE Trans. Image Process. 2020, 29, 3695–3706.

- Milyaev, S.; Laptev, I. Towards reliable object detection in noisy images. Pattern Recognit. Image Anal. 2017, 27, 713–722.

- Hasan, M.; El-Sakka, M. Improved BM3D image denoising using SSIM-optimized Wiener filter. EURASIP J. Image Video Process. 2018, 2018, 25.

- Song, Y.; Zhu, Y.; Du, X. Dynamic Residual Dense Network for Image Denoising. Sensors 2019, 19, 3809.

- Lefkimmiatis, S. Universal denoising networks: A novel CNN architecture for image denoising. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3204–3213.

- Tomasi, C.; Manduchi, R. Bilateral filtering for gray and color images. In Proceedings of the IEEE International Conference on Computer Vision, Bombay, India, 4–7 January 1998; pp. 839–846.

- Choi, S.H.; Cho, J.; Tai, Y.M.; Lee, S.W. A parallel camera image signal processor for SIMD architecture. EURASIP J. Image Video Process. 2016, 2016, 29.

- Petreto, A.; Romera, T.; Lemaitre, F.; Masliah, I.; Gaillard, B.; Bouyer, M.; Meunier, Q.L.; Lacassagne, L. A new real-time embedded video denoising algorithm. In Proceedings of the Conference on Design and Architectures for Signal and Image Processing, Montreal, QC, Canada, 16–18 October 2019; pp. 47–52.

- Tseng, Y.; Hsu, P.; Chang, T. A 124 Mpixels/s VLSI design for histogram-based joint bilateral filtering. IEEE Trans. Image Process. 2011, 20, 3231–3241.

- Dutta, H.; Hannig, F.; Teich, J.; Heigl, B.; Hornegger, H. A design methodology for hardware acceleration of adaptive filter algorithms in image processing. In Proceedings of the IEEE International Conference on Application-specific Systems, Architectures and Processors, Steamboat Springs, CO, USA, 11–13 September 2006; pp. 331–340.

- Gabiger-Rose, A.; Kube, M.; Weigel, R.; Rose, R. An FPGA-based fully synchronized design of a bilateral filter for real-time image denoising. IEEE Trans. Ind. Electron. 2014, 61, 4093–4104.

- Dabhade, S.D.; Rathna, G.N.; Chaudhury, K.N. A Reconfigurable and Scalable FPGA Architecture for Bilateral Filtering. IEEE Trans. Ind. Electron. 2018, 65, 1459–1469.

- Tsin, Y.; Ramesh, V.; Kanade, T. Statistical calibration of CCD imaging process. In Proceedings of the IEEE International Conference on Computer Vision, Vancouver, BC, Canada, 7–14 July 2001; pp. 480–487.

- Hwang, Y.; Kim, J.; Kweon, I.S. Difference-based image noise modeling using Skellam distribution. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1329–1341.

- Greenland, S.; Senn, S.J.; Rothman, K.J.; Carlin, J.B.; Poole, C.; Goodman, S.N.; Altman, D.G. Statistical tests, P values, confidence intervals, and power: A guide to misinterpretations. Eur. J. Epidemiol. 2016, 31, 337–350.

- Peng, H.; Rao, R. Bilateral kernel parameter optimization by risk minimization. In Proceedings of the IEEE International Conference on Image Processing, Hong Kong, China, 26–29 September 2010; pp. 3293–3296.

- Kishan, H.; Seelamantula, C.S. Optimal parameter selection for bilateral filters using Poisson Unbiased Risk Estimate. In Proceedings of the IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012; pp. 121–124.

- Chen, Y.; Shu, Y. Optimization of bilateral filter parameters via chi-square unbiased risk estimate. IEEE Signal Process. Lett. 2014, 21, 97–100.

- Luisier, F.; Wolfe, P.J. Chi-square unbiased risk estimate for denoising magnitude MR images. In Proceedings of the IEEE International Conference on Image Processing, Brussels, Belgium, 11–14 September 2011; pp. 1561–1564.

- Chang, H.H.; Lin, Y.J.; Zhuang, A. An automatic parameter decision system of bilateral filtering with GPU-Based acceleration for brain MR images. J. Digit. Imaging 2018, 32, 148–161.

- Porikli, F. Constant time O(1) bilateral filtering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Chaudhury, K.N.; Dabhade, S.D. Fast and provably accurate bilateral filtering. IEEE Trans. Image Process. 2016, 25, 2519–2528.

Figure panels: (a) Difference-based image noise model; (b) Dependency on pixel intensity and camera setting.

Figure panels: (a) Set 1; (b) Set 2; (c) Set 3; (d) Set 4; (e) Set 5.


| Parameter | | Input | Conventional BF | Conventional BF | Conventional BF | NABF | NABF | B-NABF | B-NABF |
|---|---|---|---|---|---|---|---|---|---|
| | | N/A | 1.0 | 1.0 | 2.0 | 1.0 | 2.0 | 1.0 | 1.0 |
| | | N/A | 0.04 | 0.08 | 0.08 | N/A | N/A | N/A | N/A |
| | | N/A | N/A | N/A | N/A | N/A | N/A | 0.1 | 0.05 |
| Gain [dB] | 0 | 45.1 | 42.3 | 38.9 | 35.9 | 45.7 | 45.5 | 45.5 | 45.3 |
| | 5 | 43.7 | 42.0 | 38.2 | 35.4 | 44.4 | 44.2 | 44.2 | 44.1 |
| | 10 | 42.1 | 41.7 | 38.0 | 35.3 | 43.1 | 42.8 | 42.8 | 42.7 |
| | 15 | 39.3 | 40.0 | 37.4 | 35.0 | 40.5 | 40.2 | 40.2 | 40.1 |
| | 15(I) | N/A | N/A | N/A | N/A | 40.4 | 40.2 | 40.1 | 40.1 |
| | 18 | 36.7 | 37.7 | 36.0 | 34.1 | 37.8 | 37.5 | 37.6 | 37.5 |

| Parameter | | Input | Conventional BF | Conventional BF | Conventional BF | NABF | NABF | B-NABF | B-NABF |
|---|---|---|---|---|---|---|---|---|---|
| | | N/A | 1.0 | 1.0 | 2.0 | 1.0 | 2.0 | 1.0 | 1.0 |
| | | N/A | 0.04 | 0.08 | 0.08 | N/A | N/A | N/A | N/A |
| | | N/A | N/A | N/A | N/A | N/A | N/A | 0.1 | 0.05 |
| Test Set | #1 | 42.4 | 41.9 | 38.5 | 35.7 | 43.5 | 43.2 | 43.2 | 43.1 |
| | #2 | 38.9 | 38.9 | 36.9 | 34.7 | 39.6 | 39.4 | 39.5 | 39.5 |
| | #3 | 42.5 | 41.7 | 37.9 | 35.3 | 43.7 | 43.4 | 43.4 | 43.4 |
| | #4 | 40.6 | 39.7 | 36.7 | 34.5 | 41.2 | 41.0 | 40.9 | 40.8 |
| | #5 | 42.5 | 41.5 | 38.5 | 35.6 | 43.4 | 43.1 | 43.0 | 42.9 |

| | | [12] | [13] | Proposed |
|---|---|---|---|---|
| Device | | Xilinx-5 FPGA (XC5VLX50) | Xilinx-7 FPGA (XC7Z020) | Xilinx-7 FPGA (XC7VX330T) |
| Image Resolution | | 1024×1024 | 256×256 | 1920×1080 |
| Max. Freq. (MHz) | | 320 | 63 | 330 |
| Throughput (Mpixels/s) | | 31.5 | 3.45 | 330 |
| Logic Usage (ea) | Slice | 1060 | † | 476 |
| | LUT | † | 2647 | 1425 |
| | FF | † | 686 | 552 |
| | DSP | 29 | 10 | 8 |
| Memory Usage (KByte) | | 49.5 | 706.5 | 18 |
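
To make the throughput figures in the comparison table easier to relate to each other, the following minimal Python sketch derives two quantities from them: pixels processed per clock cycle (throughput divided by maximum frequency) and an upper bound on frames per second at each design's listed image resolution. The dictionary keys and function names are hypothetical and chosen only for illustration. The sketch assumes each throughput was obtained at the listed maximum frequency and ignores blanking intervals and I/O overhead, so the results are rough bounds rather than measured values.

```python
# Figures copied from the comparison table; keys match its column headers.
# Field names (max_freq_mhz, etc.) are illustrative, not from the paper.
designs = {
    "[12]": {"max_freq_mhz": 320.0, "throughput_mpix_s": 31.5,
             "resolution": (1024, 1024)},
    "[13]": {"max_freq_mhz": 63.0, "throughput_mpix_s": 3.45,
             "resolution": (256, 256)},
    "Proposed": {"max_freq_mhz": 330.0, "throughput_mpix_s": 330.0,
                 "resolution": (1920, 1080)},
}


def pixels_per_clock(design):
    """Throughput (Mpixels/s) divided by clock frequency (MHz)."""
    return design["throughput_mpix_s"] / design["max_freq_mhz"]


def max_frames_per_second(design):
    """Upper bound on frame rate, ignoring blanking and I/O overhead."""
    width, height = design["resolution"]
    return design["throughput_mpix_s"] * 1e6 / (width * height)


if __name__ == "__main__":
    for name, design in designs.items():
        print(f"{name}: {pixels_per_clock(design):.3f} pixels/clock, "
              f"up to {max_frames_per_second(design):.1f} frames/s")
```

Under these assumptions the proposed design's listed throughput equals its listed clock frequency, which corresponds to one pixel per clock cycle.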

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jang, S.-J.; Hwang, Y. Noise-Aware and Light-Weight VLSI Design of Bilateral Filter for Robust and Fast Image Denoising in Mobile Systems. Sensors 2020, 20, 4722. https://doi.org/10.3390/s20174722
