Fully-Actuated Aerial Manipulator for Infrastructure Contact Inspection: Design, Modeling, Localization, and Control

Abstract

1. Introduction

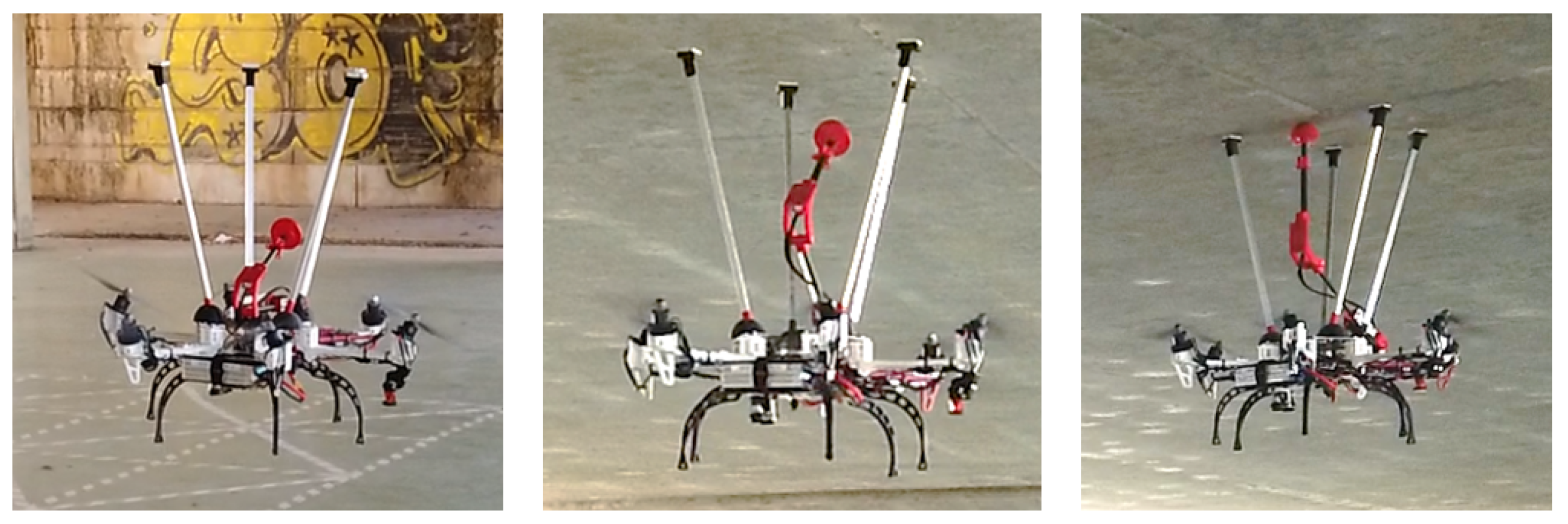

2. System Description

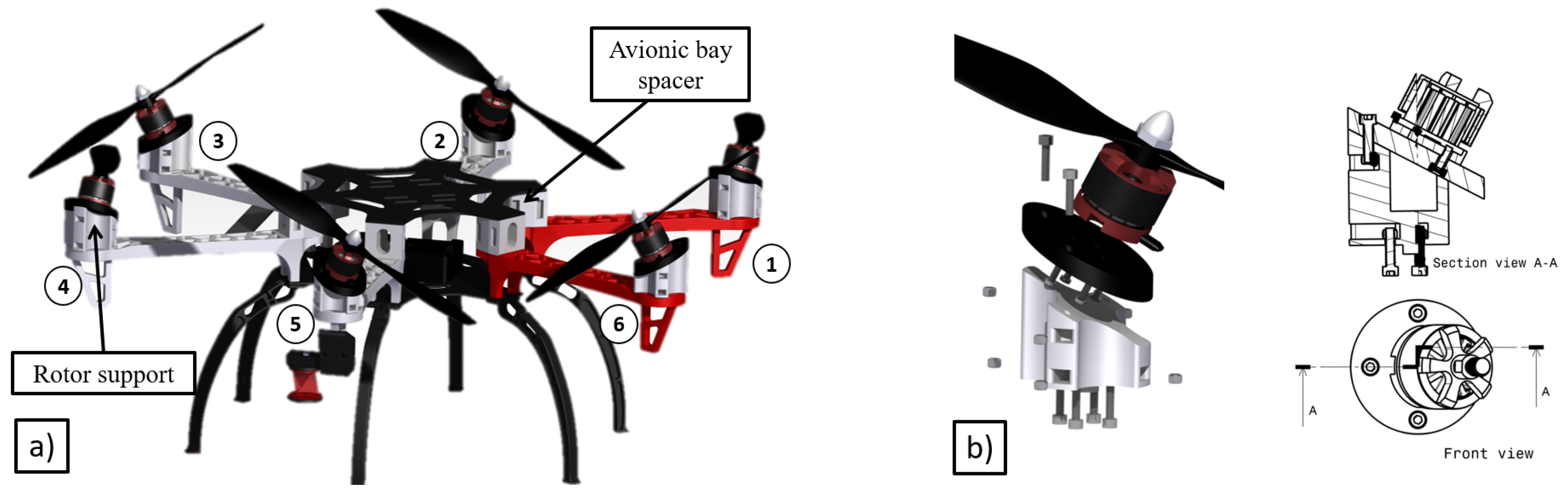

2.1. Tilted Rotor Design

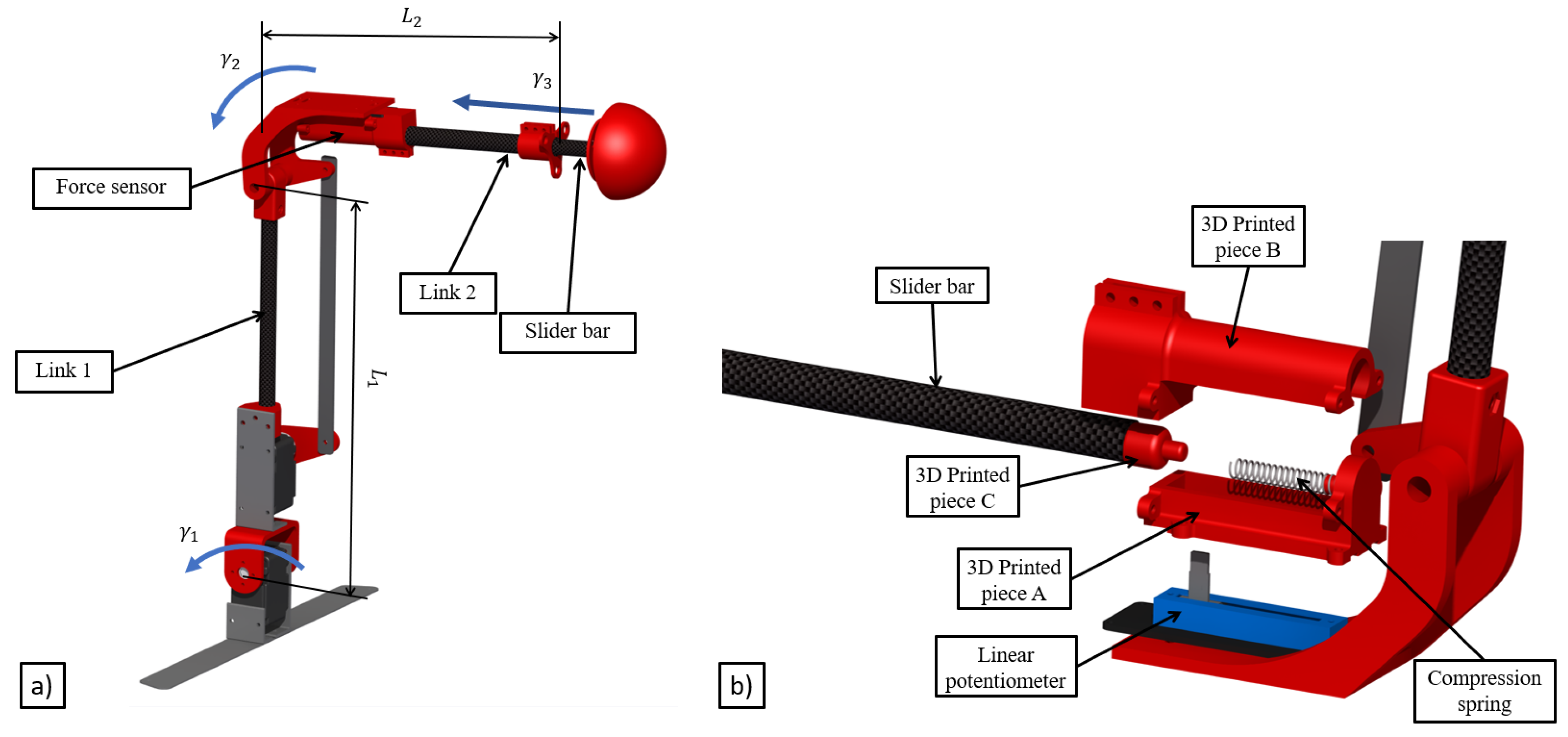

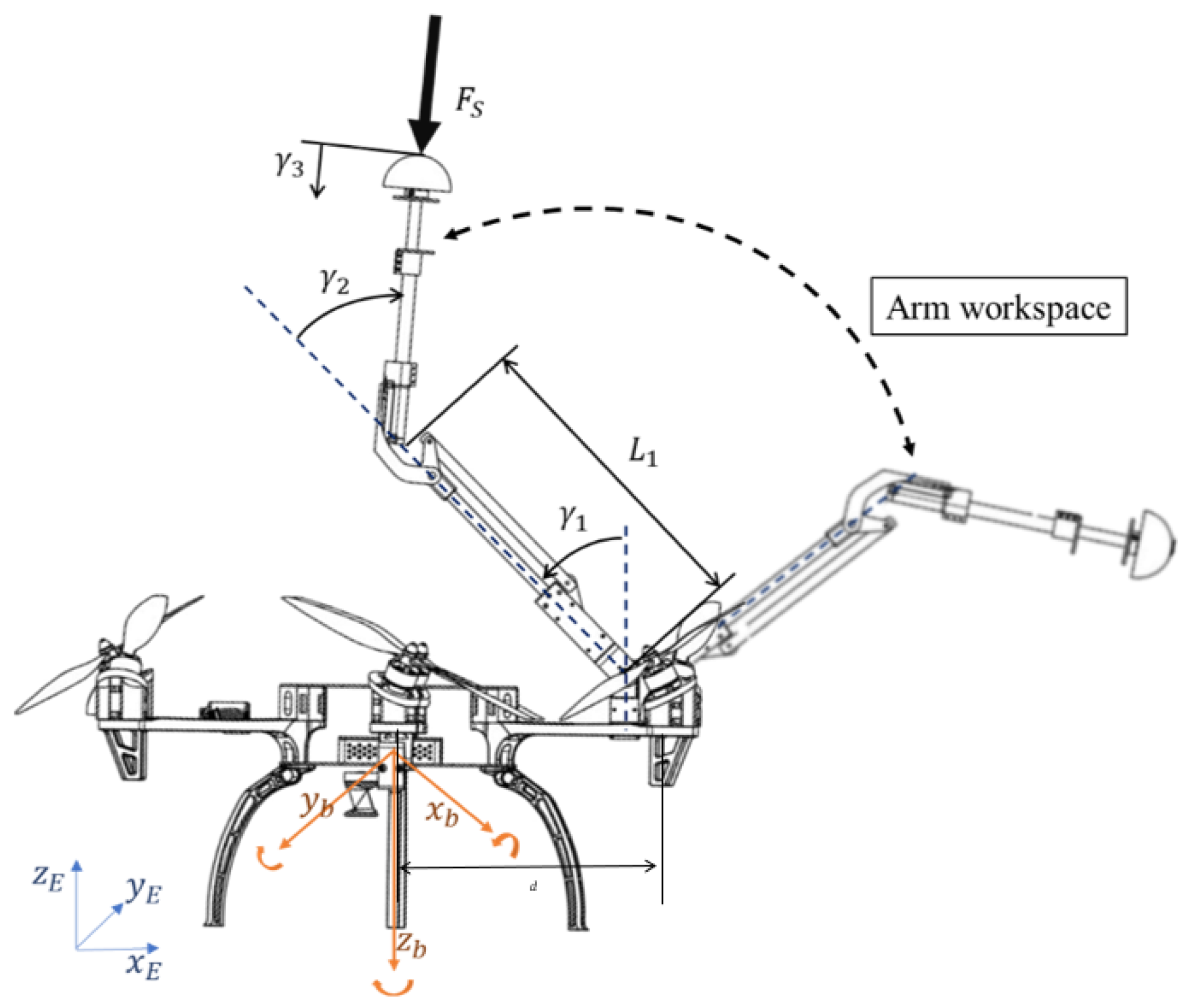

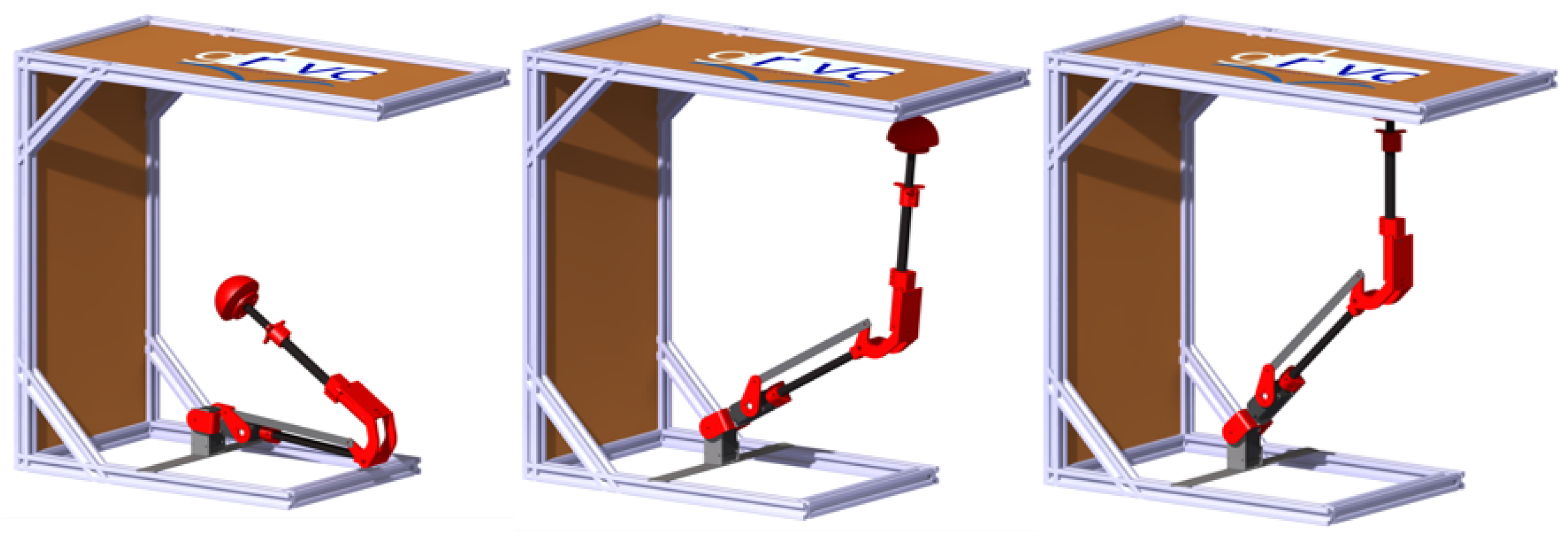

2.2. Arm Design

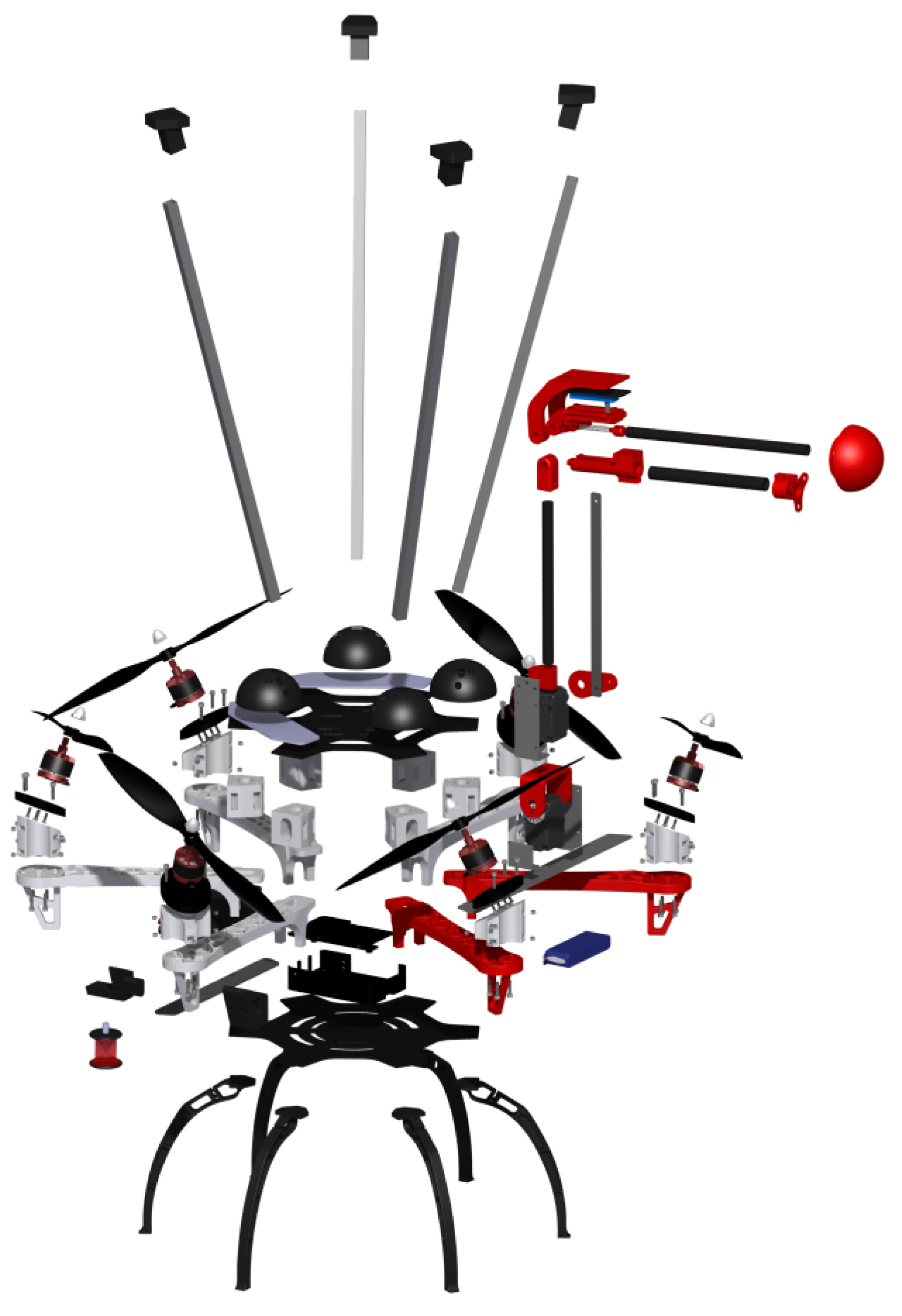

2.3. Final Prototype Integration

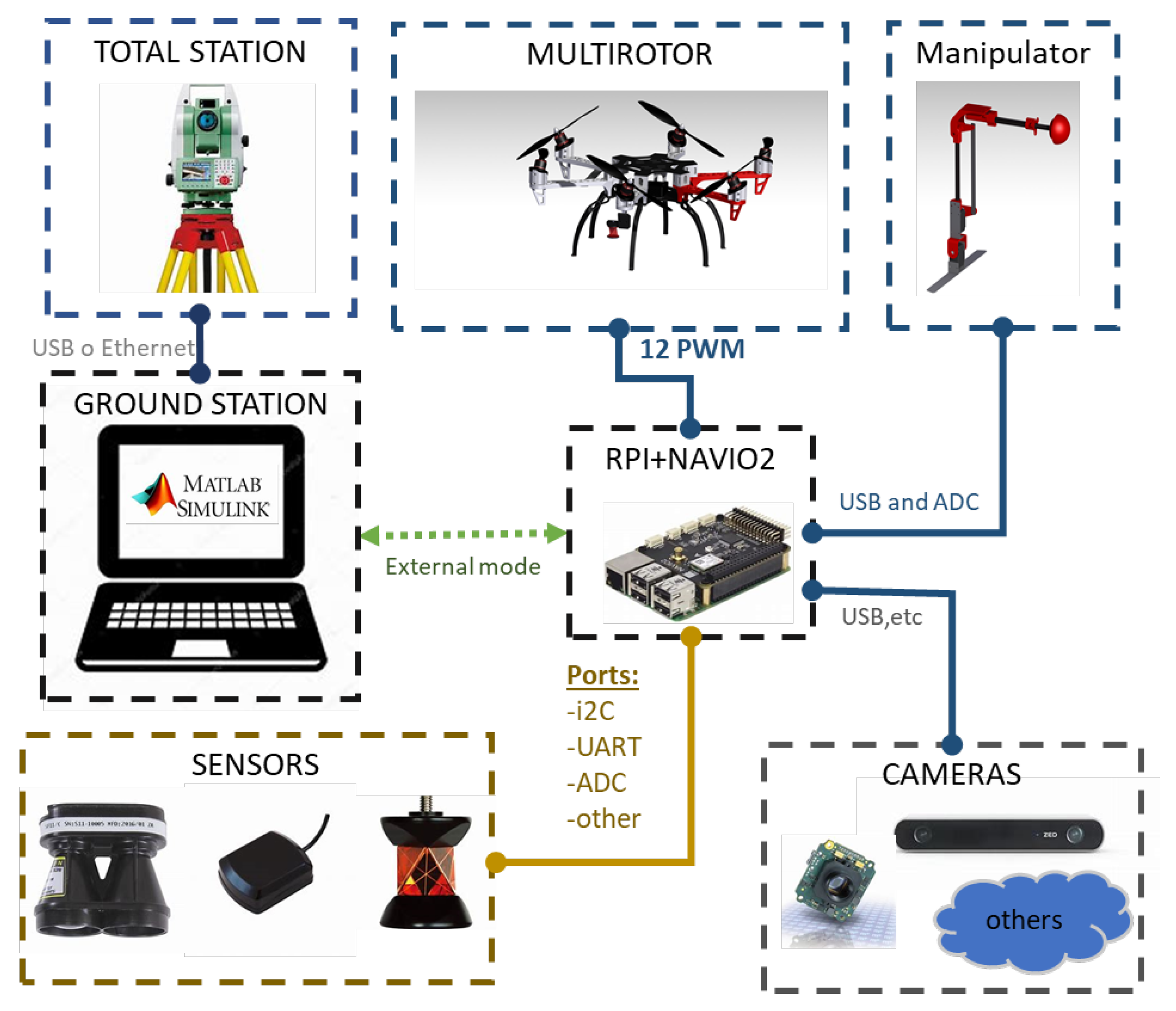

2.4. On-Board Avionics

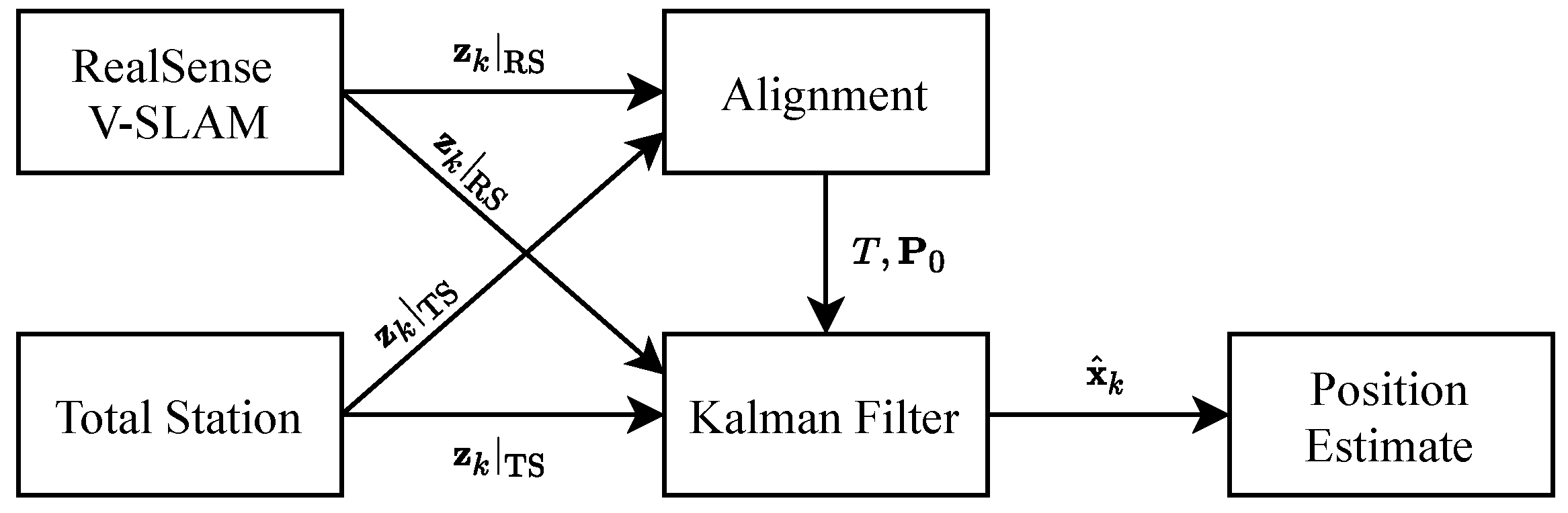

3. Localization System

3.1. Multi-Sensor Localization for Bridge Inspection

- Align the sensors to a common reference frame:
  - (a) Move the aerial platform, either manually or during an initial safe flight, and record the measurements from all sensors.
  - (b) Interpolate the collected sensor data so that all series have the same length.
  - (c) Solve the resulting over-determined equation system with a least-squares fitting algorithm to obtain T and .
- Apply the Kalman filter to fuse the aligned measurements into an accurate estimate of the platform position.
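Steps (b) and (c) of the alignment procedure can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. The function names `resample` and `fit_yaw_translation` are hypothetical. Linear interpolation stands in for whatever interpolation scheme is used in practice (Akima splines are a smoother option). The two frames are assumed to be gravity-aligned, so the least-squares problem reduces to one yaw angle plus a 3-D translation, called `T` in the code.

```python
import bisect
import math


def resample(times, values, query_times):
    """Step (b): linearly interpolate a 1-D series at query_times.

    Assumes `times` is strictly increasing. Queries outside the recorded
    interval are clamped to the first/last value. Linear interpolation is
    a simplifying assumption; a smoother scheme could be substituted.
    """
    out = []
    for t in query_times:
        i = bisect.bisect_left(times, t)
        if i <= 0:
            out.append(values[0])
        elif i >= len(times):
            out.append(values[-1])
        else:
            t0, t1 = times[i - 1], times[i]
            w = (t - t0) / (t1 - t0)
            out.append(values[i - 1] + w * (values[i] - values[i - 1]))
    return out


def fit_yaw_translation(src, dst):
    """Step (c): least-squares fit of dst ~= Rz(yaw) * src + T.

    src, dst: equal-length lists of (x, y, z) points sampled at the same
    timestamps. Hypothetical simplification: both frames share the gravity
    direction, so only a rotation about z and a translation are unknown.
    """
    n = len(src)
    cs = [sum(p[k] for p in src) / n for k in range(3)]  # centroid of src
    cd = [sum(q[k] for q in dst) / n for k in range(3)]  # centroid of dst
    s_dot = s_cross = 0.0
    for p, q in zip(src, dst):
        px, py = p[0] - cs[0], p[1] - cs[1]
        qx, qy = q[0] - cd[0], q[1] - cd[1]
        s_dot += px * qx + py * qy
        s_cross += px * qy - py * qx
    # Closed-form yaw that minimizes the sum of squared xy residuals.
    yaw = math.atan2(s_cross, s_dot)
    c, s = math.cos(yaw), math.sin(yaw)
    # Translation maps the rotated src centroid onto the dst centroid.
    T = (cd[0] - (c * cs[0] - s * cs[1]),
         cd[1] - (s * cs[0] + c * cs[1]),
         cd[2] - cs[2])
    return yaw, T
```

Each sensor's x, y and z series would first be resampled onto a shared time base with `resample` and then paired point by point. With many timestamps the system is over-determined, and the closed-form yaw and translation returned here minimize the sum of squared position residuals.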

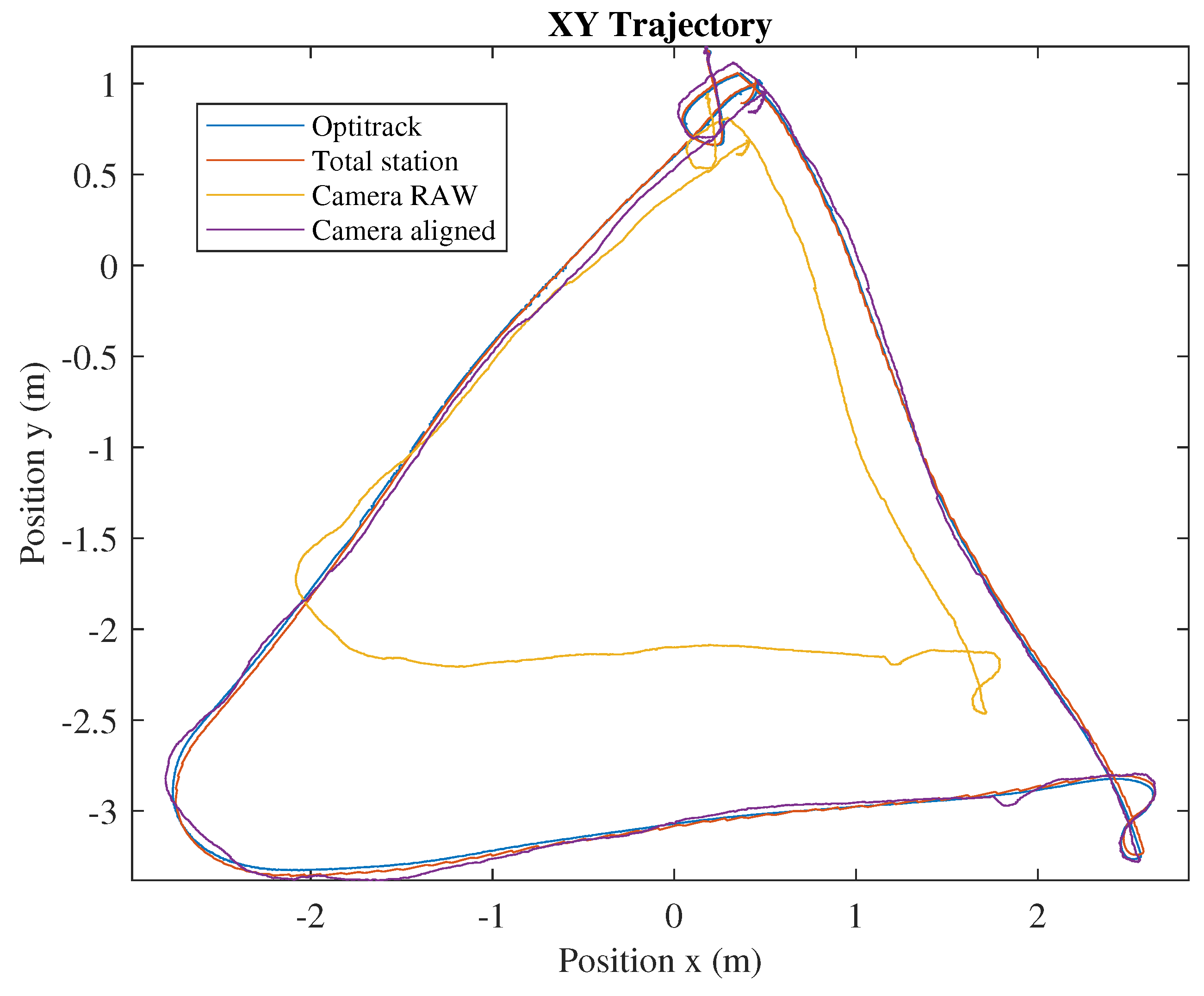
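The final step of the localization procedure, Kalman filtering, can be illustrated with a minimal per-axis filter. As a hedged sketch, it assumes a constant-velocity motion model driven by white-noise acceleration, treats each axis independently, and fuses position readings from several already-aligned sensors by applying one scalar update per reading. The class name `AxisKalman` and its noise parameters are hypothetical, and the filter running on the platform may differ.

```python
class AxisKalman:
    """Constant-velocity Kalman filter for one position axis (hypothetical sketch).

    State x = [position, velocity]; P is the 2x2 covariance (list of lists).
    q is the assumed white-noise acceleration spectral density.
    """

    def __init__(self, pos0=0.0, var0=1.0, q=0.1):
        self.x = [pos0, 0.0]
        self.P = [[var0, 0.0], [0.0, var0]]
        self.q = q

    def predict(self, dt):
        """Propagate state and covariance: x <- F x, P <- F P F^T + Q."""
        p, v = self.x
        self.x = [p + dt * v, v]
        P = self.P
        # F = [[1, dt], [0, 1]]
        p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1]
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1]
        q = self.q
        self.P = [[p00 + q * dt ** 3 / 3, p01 + q * dt ** 2 / 2],
                  [p10 + q * dt ** 2 / 2, p11 + q * dt]]

    def update(self, z, r):
        """Fuse one position measurement z with variance r (H = [1, 0])."""
        P = self.P
        s = P[0][0] + r                      # innovation variance
        k0, k1 = P[0][0] / s, P[1][0] / s    # Kalman gain
        y = z - self.x[0]                    # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
```

Calling `update` once per sensor after each `predict` weights every reading by the inverse of its variance `r`, so a precise sensor pulls the estimate harder than a noisy one.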

3.2. Experimental Results

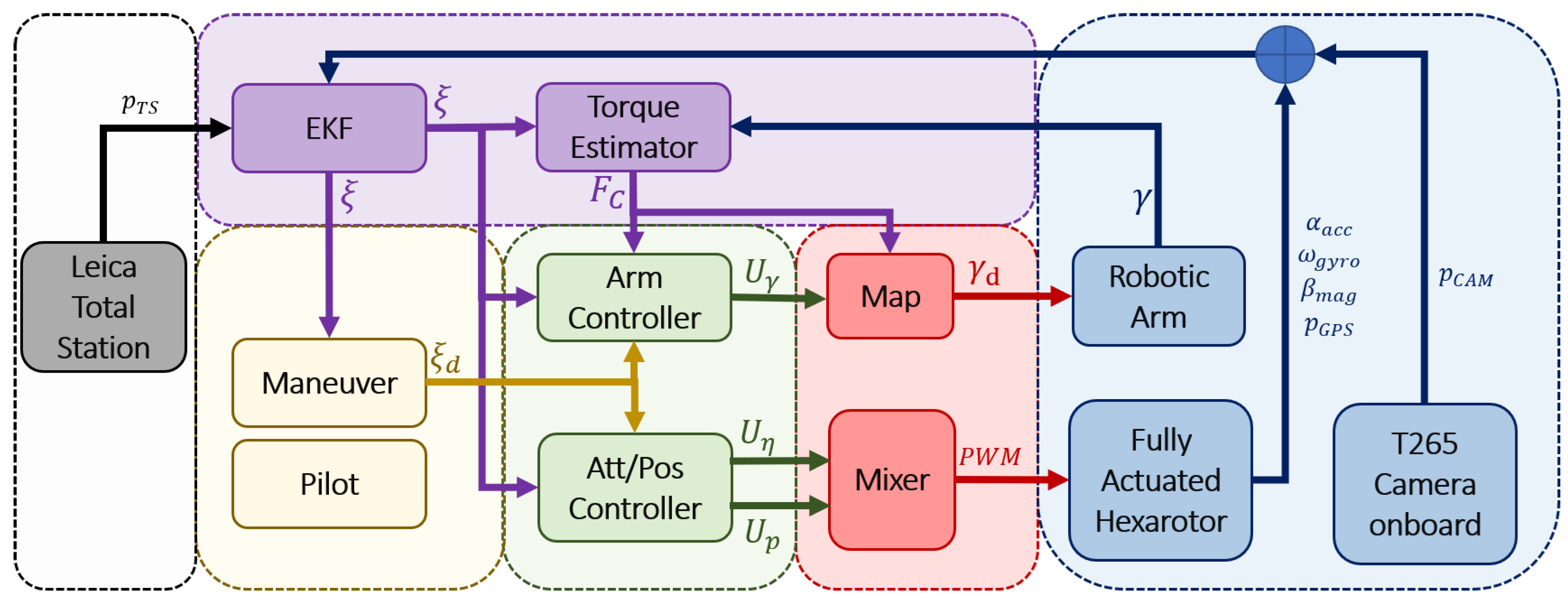

4. Modeling and Control

4.1. Modeling

4.2. Control Scheme

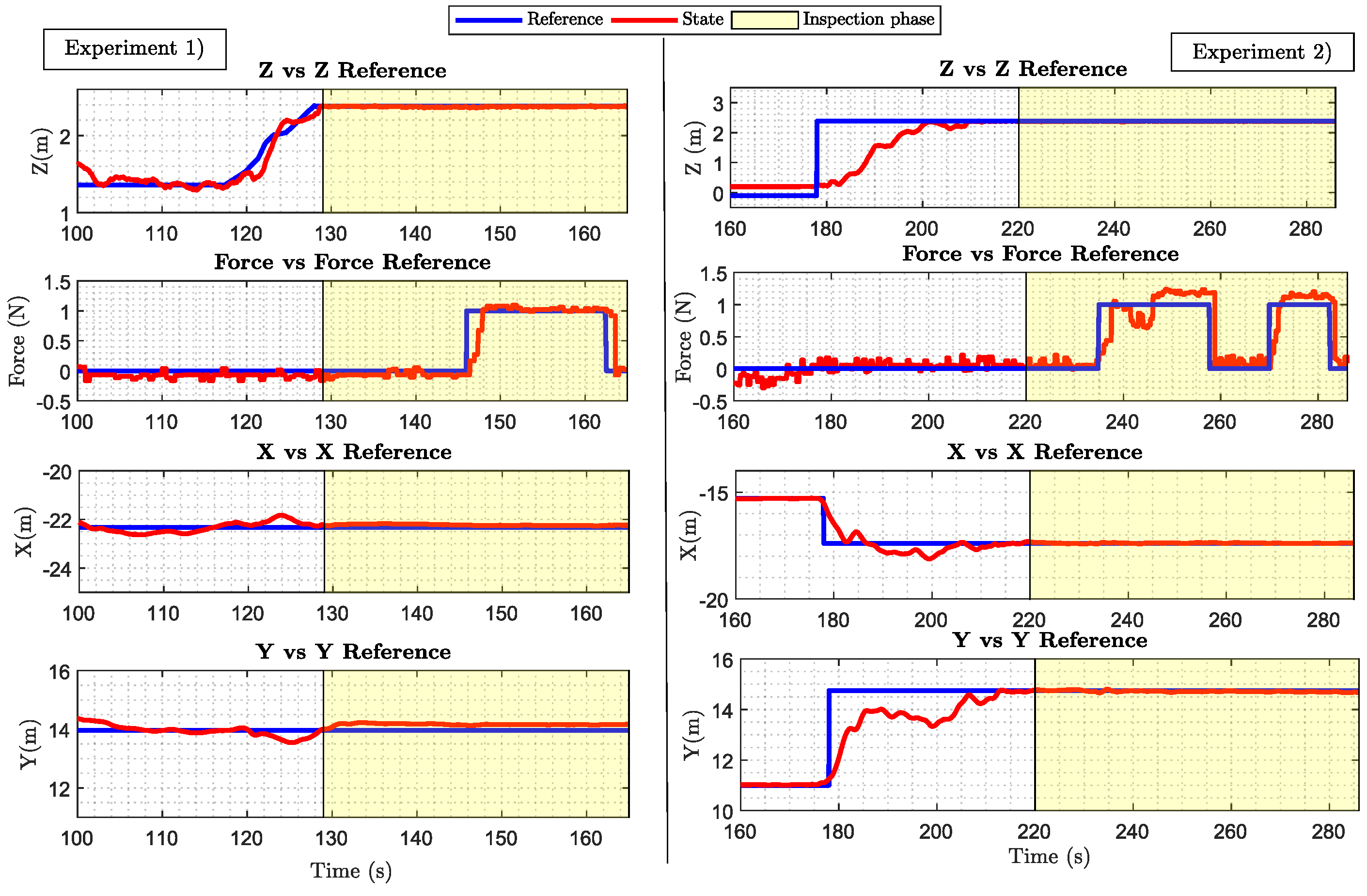

5. Experimental Results

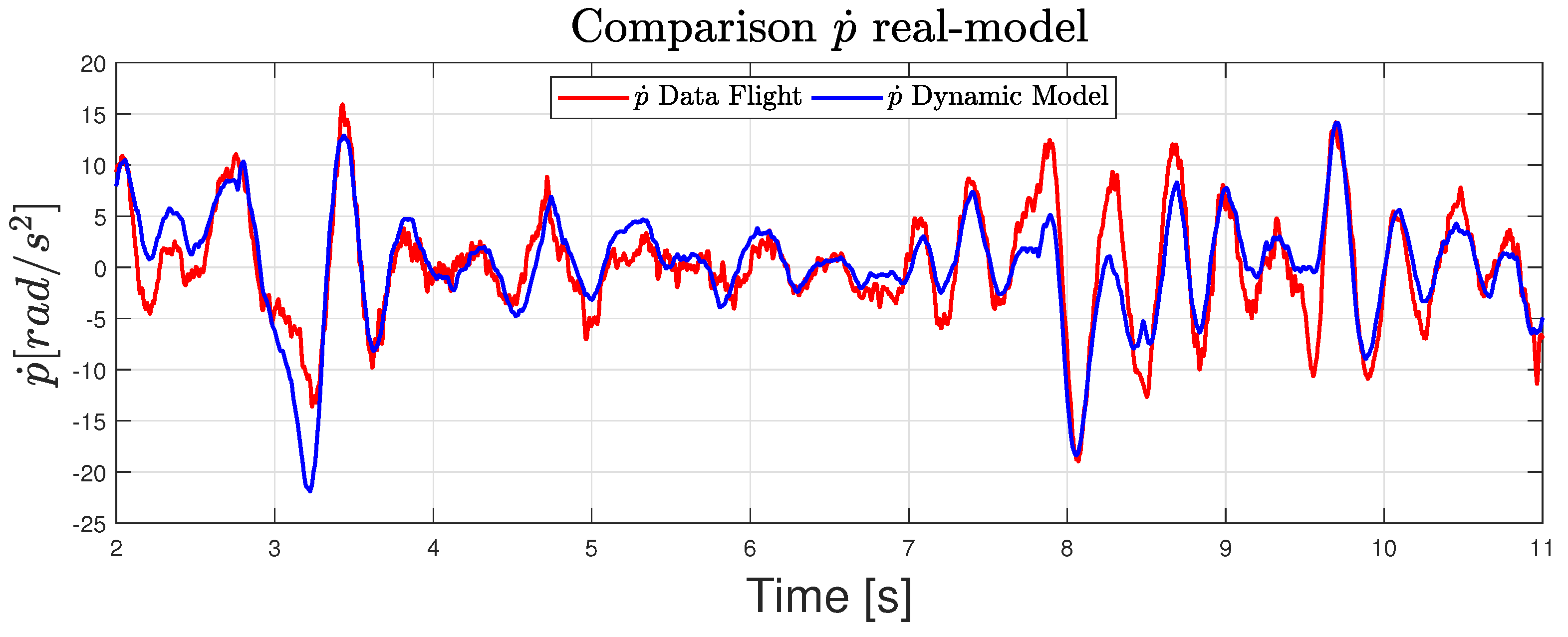

5.1. Model Validation

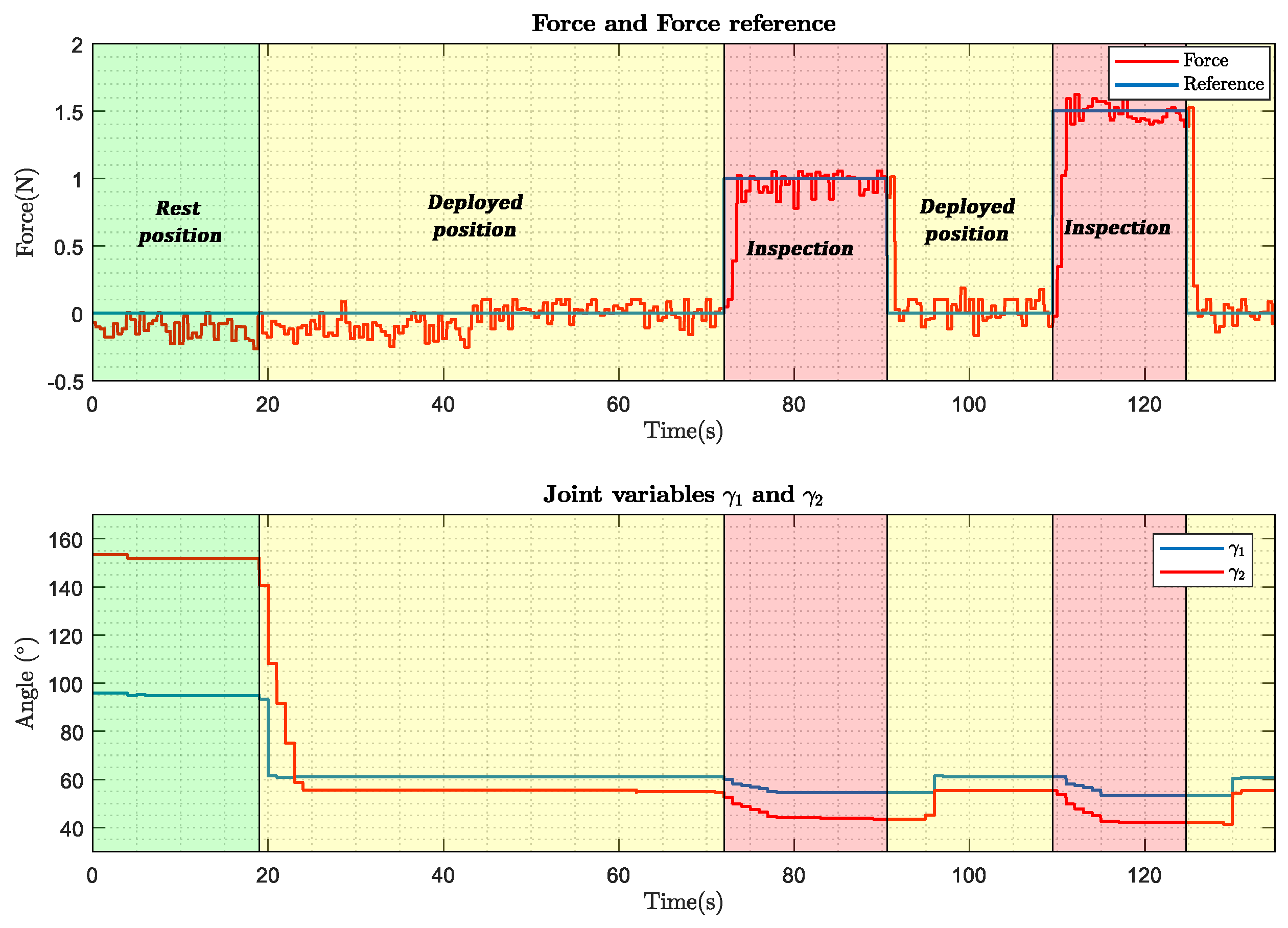

5.2. Inspection in the Test Bench

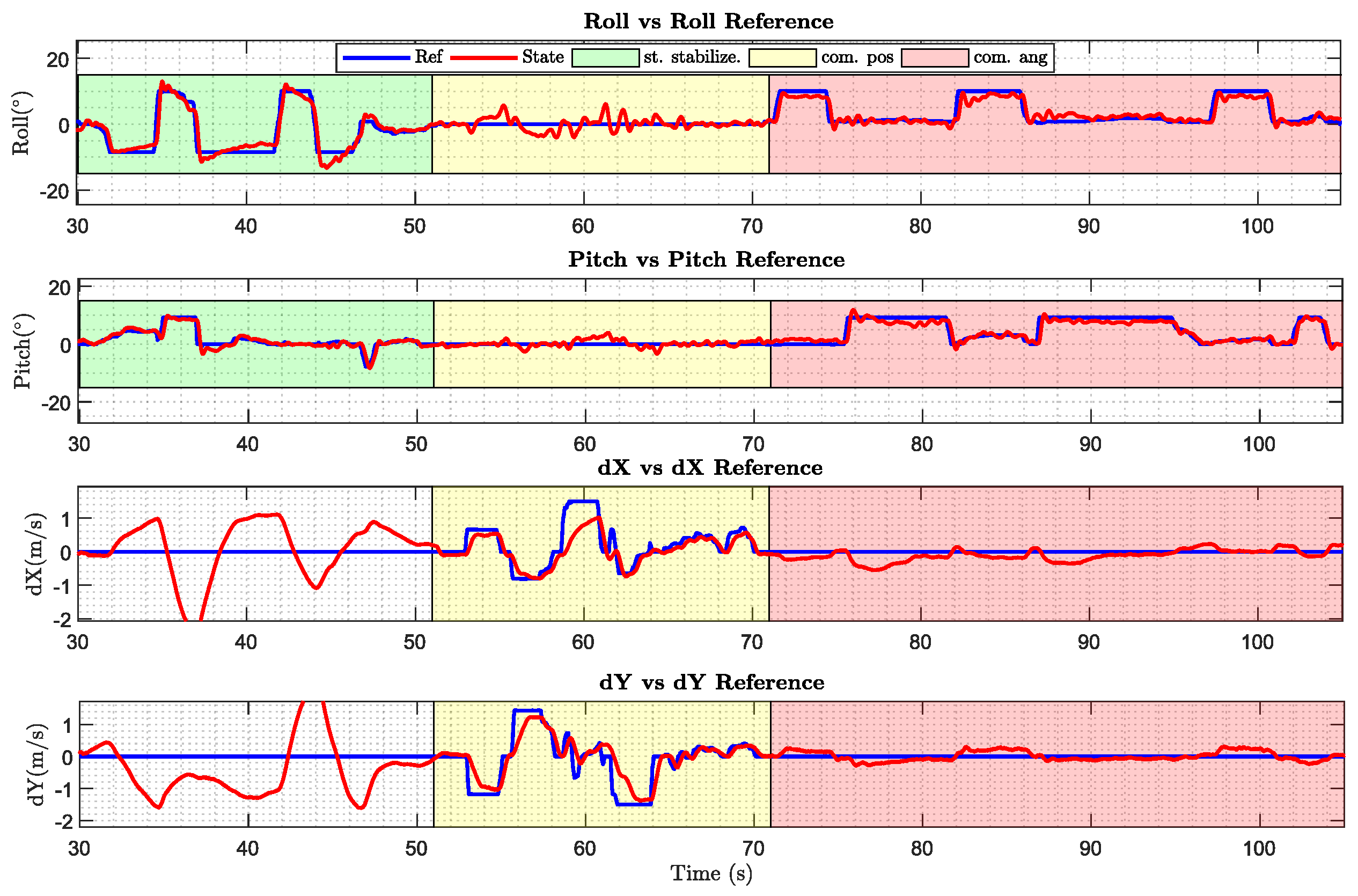

5.3. Tilted Rotor Capabilities

5.4. Inspection Experiments

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| i | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| Rotation angles | | | | | | |

| Parameter | Value |
|---|---|
| Weight | 2.00 kg |
| Payload | ∼600 g |
| Maximum achievable lateral forces | = 415 g; = 555 g |
| Propellers | DJI 9 × 4.5 in |
| Battery | 4S 5300 mAh |

| Parameter | Value |
|---|---|
| Weight | 0.370 kg |
| Dimensions | Link 1 = 25 cm; Link 2 = 20 cm |
| Rotation range | Shoulder pitch = [−100°, 100°]; Elbow pitch = [20°, 160°] |
| Battery | 2S 1300 mAh |
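The arm dimensions and joint ranges listed in the arm table can be turned into a quick workspace check. The sketch below is hypothetical: it assumes a planar two-link arm moving in its pitch plane, with the elbow angle measured relative to link 1 (0° meaning fully extended) and both ranges given in degrees. The function names `arm_tip` and `reach` are illustrative, and the prototype's actual joint conventions may differ.

```python
import math

L1, L2 = 0.25, 0.20                 # link lengths in metres (25 cm, 20 cm)
SHOULDER_RANGE = (-100.0, 100.0)    # degrees, shoulder pitch limits
ELBOW_RANGE = (20.0, 160.0)         # degrees, elbow pitch limits


def arm_tip(shoulder_deg, elbow_deg):
    """Planar forward kinematics of the two-link arm.

    Hypothetical convention: angles lie in the arm's pitch plane and the
    elbow angle is relative to link 1. Returns the tip position (x, z) in
    metres with respect to the shoulder joint.
    """
    if not SHOULDER_RANGE[0] <= shoulder_deg <= SHOULDER_RANGE[1]:
        raise ValueError("shoulder pitch out of range")
    if not ELBOW_RANGE[0] <= elbow_deg <= ELBOW_RANGE[1]:
        raise ValueError("elbow pitch out of range")
    q1 = math.radians(shoulder_deg)
    q12 = q1 + math.radians(elbow_deg)
    x = L1 * math.cos(q1) + L2 * math.cos(q12)
    z = L1 * math.sin(q1) + L2 * math.sin(q12)
    return x, z


def reach(elbow_deg):
    """Shoulder-to-tip distance, which depends only on the elbow angle."""
    e = math.radians(elbow_deg)
    return math.sqrt(L1 ** 2 + L2 ** 2 + 2 * L1 * L2 * math.cos(e))
```

Under these assumptions the shoulder-to-tip distance ranges from about 0.44 m at an elbow angle of 20° down to about 0.09 m at 160°.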

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sanchez-Cuevas, P.J.; Gonzalez-Morgado, A.; Cortes, N.; Gayango, D.B.; Jimenez-Cano, A.E.; Ollero, A.; Heredia, G. Fully-Actuated Aerial Manipulator for Infrastructure Contact Inspection: Design, Modeling, Localization, and Control. Sensors 2020, 20, 4708. https://doi.org/10.3390/s20174708
