1. Introduction

Sonar is an essential technology for underwater sensing. However, its spatial resolution depends on a combination of transducers to (roughly) approximate a sampling beam via interference. A larger number of transducers placed on a larger area accordingly provides a higher resolution. However, the number of transducers in a sonar sensor is limited by many factors, such as sensor size, power consumption, and cost. A popular approach is hence the use of a synthetic aperture, i.e., the sonar with its $N$ transducers is positioned at $k$ places to generate a virtual sensor with $k \cdot N$ transducers [1,2,3].

So, the core idea of Synthetic Aperture Imaging in general is to use a sequence of measurements that are combined to form improved 2D or 3D representations of the sampled environment. The motion of the sensor, or of its carrier platform, generates a synthetic aperture that leads to higher-resolution images. The related methodology of Synthetic Aperture Radar (SAR) has been a standard technique for remote sensing for several decades [4]. A general optical counterpart is camera arrays [5,6], which are used to improve imaging performance. For sonar, the same fundamental principles apply, but there are specific challenges related to the propagation properties of sound, including its slow speed, the medium water, the available transducer technologies, etc. An introduction to Synthetic Aperture Sonar (SAS) and discussions of related research can be found in [2,3].

The state of the art for synthetic aperture sonar (SAS) is strongly coupled to constraints on the way it can be used [1,2,3]. For example, the k sensor poses often have to be placed equidistantly on a virtual line perpendicular to the sensor. This is motivated both by the intention to ease the signal processing and by practical aspects: a vehicle, for example, a surface vessel or an Autonomous Underwater Vehicle (AUV), with a sonar facing down to the seafloor only has to use its navigation sensors to travel at constant speed on a straight line. However, this also significantly limits the scope of the vehicle’s mission.

A core element in conventional SAR/SAS systems is, in general, the precise localization of the sensor poses, which is achieved through navigation sensors. This is a less critical issue for SAR, i.e., for remote sensing satellites, which need extremely precise navigation for control anyway. Nevertheless, SAR autofocus is still of research interest where sensors cannot provide the required accuracy, for example, for ultra-high-resolution imaging [7,8]. In [9], it is shown that extracted phase information can additionally be used for the detection of moving targets. In [10], a novel SAR application for near-field microwave imaging is presented. A treatment of trajectory inaccuracies by a constrained least squares approach is presented in [11].

In conventional SAS systems, two methods exist to alleviate motion errors. One method is the Displaced Phase Center Antenna (DPCA) [12], which exploits the spatial coherence of the seafloor backscatter in a multiple-receiver array configuration. It uses a ping-to-ping correlation of single sensor elements. The second class of methods comprises phase-only autofocus algorithms, of which phase gradient autofocus (PGA) is the most popular one. It extracts the phase error by detecting a common peak in each range cut. In [13], PGA is extended to a generalized phase gradient autofocus, which works for reconstruction methods including the polar format algorithm (PFA), range migration, and backprojection (BPA).

The idea presented in this article is to use scans without navigation data for the extended aperture of a standard antenna. In conventional SAS systems, the area coverage is generally limited by the propagation speed of sound in water [14]; this restriction is usually remedied by employing multi-receiver configurations [2]. Compared to these conventional SAS systems, the main idea here is to improve the resolution and coverage of related structures/details within the dimensions of an imaging device. This bears some similarities to tomographic SAS [15,16] or semi-coherent imaging [17], where multiple versatile spatial views with different 3D angular positions are used to obtain lateral views of the scene or of an object of interest.

Our approach is applied to sonar sensing within the near field. One motivation is that conventional sonar devices are still usually not well suited for robotic applications, for example, for object recognition/detection or the representation of details needed for intervention missions, where optical sensors hence prevail [18]. Restricting the SAS approach proposed here to a limited range has two methodological advantages. First, compared to, for example, seafloor mapping applications of conventional SAS systems, sound speed variations in the water can be neglected for restricted ranges. Second, the approach is independent of the center frequency of the imaging system, since coherent processing of sub-apertures with fixed receiver sensors does not depend on the center frequency of the system. Note that deviations of a few millimeters can already cause defocusing in high-resolution SAS, for example, in seabed mapping.

A robust and precise pixel/voxel-based registration of successive sonar scans and the subsequent exact mapping of the coherent signals are used here for synthetic aperture processing. Concretely, the relative localization is done with voxel-wise spectral registration of the scan data. This is inspired by our previous work on sonar registration for mapping. In [19,20], for example, it is shown that a registration and alignment of sonar scan data is possible even with severe interference and partial overlap between individual scans. While this pairwise registration, which is the basis for the work presented in this article, is already very accurate, positional information over the entire aperture can be improved even further by Simultaneous Localization and Mapping (SLAM) [21].

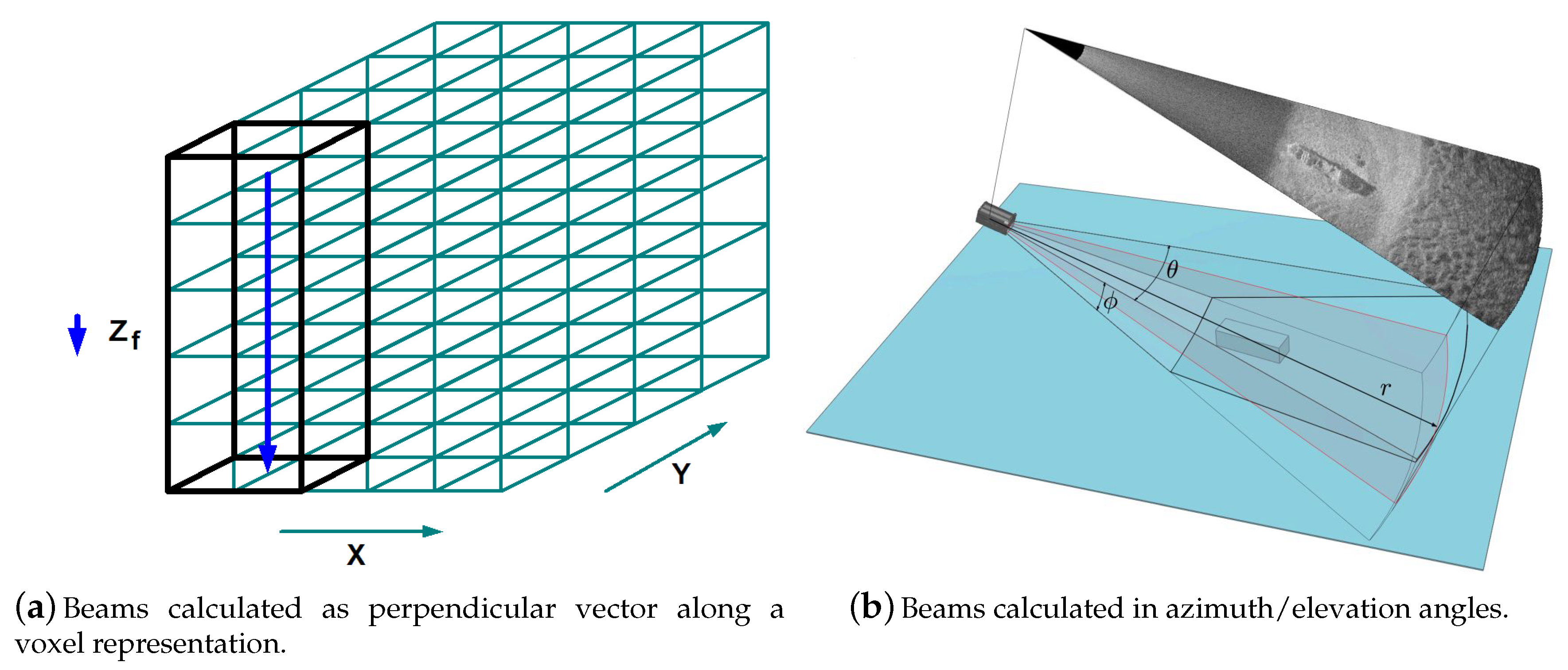

To facilitate the registration, a Delay and Sum (D&S) BF is employed for the reconstruction, which operates directly on a pixel/voxel representation on a Cartesian grid. In [22], a voxel-based format is also used to avoid the scan-conversion operation that is otherwise necessary to convert from polar to Cartesian coordinates. The main difference between our approach and conventional SAS systems is the processing of coherent, already reconstructed images from an array system.

In summary, the following contributions are presented in this article: (a) the use of registration of single raw scans is proposed as a novel basis for Synthetic Aperture Sonar (SAS), (b) a suitable algorithm for the registration is presented in the form of a spectral method based on our previous work, and (c) the concrete implementation of our new approach to SAS is completed with a Delay and Sum (D&S) BF for the reconstruction, operating on pixels/voxels on a Cartesian grid.

The rest of this article is organized as follows. Section 2 introduces the image reconstruction for our SAS approach. Section 3 explains the necessary signal-processing parameter requirements. Section 4 derives the necessary positional requirements for the sensor platform. Registration and the corresponding transformations for the necessary scan alignment are discussed in Section 5. Section 6 demonstrates the prospects and limits of the proposed imaging method with experiments in 2D. Section 7 concludes the article.

3. Coherent Image Superposition

The analog conversion and processing of array signals is usually done in the baseband, which reduces the sampling rate to the range of the pulse bandwidth. The corresponding baseband shift, or direct bandpass sampling, displaces the phase relations of the array data. For the beamforming processing, the stave signals are therefore multiplied by the phase terms according to (3), which consist of the time-delay steps multiplied by the center frequency, to realign the phase relations. As described, Equation (5) expresses the entire reconstruction as a D&S BF. In addition to the desired phase correction, the phase-shift term leads to a modulation of the pulse signal. This implies that the pulse is no longer a baseband signal with steady local phase properties, but rather an oscillation again.

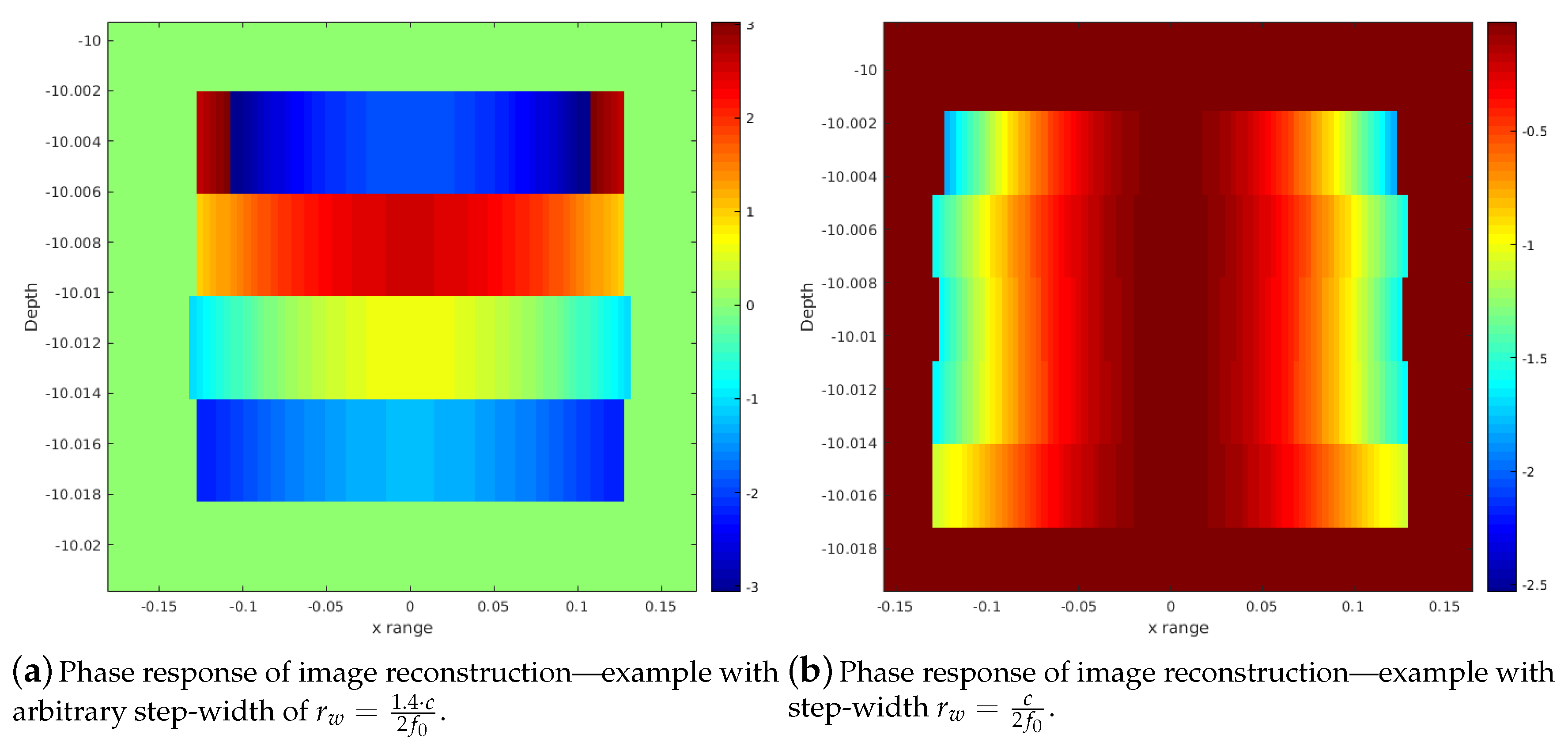

Figure 2 shows the difference between both signal forms. In this example, no further processing like filtering or pulse compression is carried out.
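The phase correction described above can be illustrated with a minimal sketch. It assumes a Gaussian pulse envelope, a single focal point, and a hypothetical carrier frequency and set of channel delays (none of these values come from the article), and it models only the residual carrier phase exp(-j2πf_cτ) that survives demodulation; the article's Equations (3) and (5) are not reproduced here, and the helper names are illustrative. Summing the envelope-aligned baseband samples without the phase term largely cancels, whereas multiplying each channel by exp(+j2πf_cτ) restores a fully coherent sum.

```python
import numpy as np

def baseband_echo(t, tau, f_c, sigma):
    """Complex baseband echo of a Gaussian pulse delayed by tau.

    Demodulation removes the carrier exp(j 2 pi f_c t) but leaves the
    residual phase exp(-j 2 pi f_c tau) of the delayed carrier."""
    return np.exp(-((t - tau) / sigma) ** 2) * np.exp(-2j * np.pi * f_c * tau)

def das_focal_value(taus, f_c, sigma, correct_phase):
    """Normalized delay-and-sum output at the focal point.

    Each channel is read at its own delay (envelope alignment); optionally
    the phase term exp(+j 2 pi f_c tau) restores the carrier phase relation."""
    total = 0j
    for tau in taus:
        v = baseband_echo(tau, tau, f_c, sigma)   # sample at the envelope peak
        if correct_phase:
            v *= np.exp(2j * np.pi * f_c * tau)   # phase correction per channel
        total += v
    return abs(total) / len(taus)

f_c = 100e3                                   # hypothetical carrier [Hz]
taus = 1e-3 + np.linspace(0.0, 50e-6, 32)     # hypothetical channel delays [s]
print(das_focal_value(taus, f_c, 20e-6, correct_phase=False))  # about 0.03
print(das_focal_value(taus, f_c, 20e-6, correct_phase=True))   # about 1.0
```

The delays here span several carrier periods, so without correction the channel phases are spread around the unit circle and almost cancel.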

The beamforming/image reconstruction itself is performed on a certain spatial range, which again samples the pulse signals on the time scale. A reconstruction step-width of $c/(2 f_s)$, where $f_s$ is the sampling frequency and $c$ the sound velocity, maps the pulse shape directly to the resulting image shape, since this is half the wavelength (at the sampling frequency) per pixel/voxel, accounting for the round-trip delay of the pulse. This is in contrast to the Exploding Reflector Model (ERM) [30], where a point diffractor is a source of waves that travel at one-half of the physical velocity. The difference between both models is that the antenna system has different travel times for the transmitting and receiving parts. Depending on the desired resolution, the pulses can hence be either oversampled or subsampled in the time scale when the step-width differs from this value.

In order to keep a baseband signal in the image reconstruction as described, the signal modulated by (3) must be shifted back to the baseband by the factor

. Hence, the product

keeps the phase response in the baseband.

cancels out, which leaves the factor

. Used as the range step-width, this factor interpolates the signal correctly into the baseband when processing the beamforming steps (Equations (1)–(5)). The result is an image reconstruction in which the signal pulse remains in the baseband, as shown in Figure 2b. Consequently, this step-width is the finest possible resolution that meets this requirement. For other, i.e., lower, resolutions, multiples of

must be used.
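A short numerical check shows how the step-width interacts with the center frequency. It assumes (as a simplification, not the article's exact formulation) that the phase-corrected image carries a term exp(j2πf_c·2r/c) under a monostatic round-trip approximation and that the range step is a multiple of c/(2f_s); the frequencies below are hypothetical. The residual phase advance per pixel is then 2πf_c·m/f_s, which wraps to zero for an integer ratio (steady baseband pulse) and to ±π when the pulse alternates sign from pixel to pixel.

```python
import numpy as np

def residual_phase_step(f_c, f_s, step_factor=1.0, c=1500.0):
    """Carrier phase advance between neighboring range cells, wrapped to [-pi, pi).

    Assumes the phase-corrected image carries exp(j 2 pi f_c * 2 r / c)
    and a range step width of step_factor * c / (2 f_s); c cancels out."""
    dr = step_factor * c / (2.0 * f_s)
    dphi = 2.0 * np.pi * f_c * 2.0 * dr / c      # = 2 pi f_c step_factor / f_s
    return (dphi + np.pi) % (2.0 * np.pi) - np.pi

# Hypothetical frequencies, not the values used in the article
print(residual_phase_step(400e3, 100e3))        # ~0: no oscillation across pixels
print(residual_phase_step(450e3, 100e3))        # ~+/-pi: sign flips every pixel
print(residual_phase_step(450e3, 100e3, 2.0))   # ~0: doubled step removes it
```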

In the ideal coherent summation of single scans, the phase relation is irrelevant. However, in the case of sub-pixel or even pixel errors, a rapid change of the pulse phase immediately leads to destructive interference. Hence, oscillating phase structures are undesirable. The goal is to obtain steady phase structures in the single-scan reconstructions, which is achieved with the described ratio of

and

. Achieving an SAS gain despite pixel/voxel errors caused by possible positional deviations is an important requirement for a successful implementation of our method. This principle and its effects on multiple image reconstructions are discussed using a point scatterer in Section 3.
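The following sketch illustrates why a steady phase tolerates misregistration. It coherently sums four copies of a sampled Gaussian pulse with hypothetical pixel shifts, once as a baseband pulse and once with a residual phase advance of 0.9π per pixel; the pulse model, the shifts, and the function name are assumptions chosen for illustration only.

```python
import numpy as np

def coherent_gain(shifts, omega, sigma=4.0, n=64):
    """Peak of the coherent sum of shifted pulse copies, normalized by the
    error-free peak len(shifts).

    omega is the residual phase advance per pixel: 0 for a steady baseband
    pulse, close to pi for a strongly oscillating one."""
    x = np.arange(n) - n // 2
    total = np.zeros(n, dtype=complex)
    for s in shifts:
        xs = x - s                                        # pixel misregistration
        total += np.exp(-(xs / sigma) ** 2) * np.exp(1j * omega * xs)
    return np.abs(total).max() / len(shifts)

shifts = [0, 1, -1, 1]                            # hypothetical pixel errors
print(coherent_gain(shifts, omega=0.0))           # ~0.95: baseband pulse survives
print(coherent_gain(shifts, omega=0.9 * np.pi))   # ~0.44: oscillating pulse cancels
```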

3.1. Ideal SAS Beampatterns

An imaging example with four antenna positions is used to illustrate our method. The number of sensors, i.e., sensor elements, is 128, and the center frequency is kHz with a bandpass sampling frequency of kHz, which is two times the pulse bandwidth. The sensor spacing is .

Figure 3 shows the different beampatterns of a single antenna and of the coherent processing from all antenna positions. The beampatterns are numerically calculated using a point scatterer at a range of 10 m. Beamforming and image reconstruction are then carried out in a lateral range (x) of

m and a depth range (z) of

m, where the response is integrated over the depth range and then plotted along the lateral range.

Figure 3a shows the beampattern for an array of 128 elements directly in front of the point scatterer. In comparison, Figure 3b shows the distinctly improved beampattern of the coherent sum from all antenna positions. In total, four positions with an overlap of

of the array length (L), hence a complete aperture of three times the array length, are used. The beampattern shows a significantly improved resolution with even smaller sidelobes than the single beampattern. Of course, this illustrates the ideal case in which the scans are summed with pixel-exact alignment.
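A narrowband approximation of this beampattern comparison can be sketched as follows. Assumptions, none of which are taken from the article: a single-frequency (CW) model with a hypothetical 200 kHz carrier, c = 1500 m/s, half-wavelength element spacing, a transmitter at the center of each array, and four array positions shifted by half the array length (the L/2 step-width mentioned in Section 6). The image is evaluated along the lateral axis at the scatterer depth of 10 m instead of integrating over depth, and the function names are illustrative.

```python
import numpy as np

C = 1500.0      # assumed sound speed [m/s]
F_C = 200e3     # hypothetical center frequency [Hz]
LAM = C / F_C   # wavelength [m]
N = 128         # elements per array (from the text)
D = LAM / 2     # assumed element spacing
L = N * D       # physical array length

def das_beampattern(offsets, xs, z=10.0, px=0.0):
    """Narrowband D&S response to a point scatterer at (px, z), coherently
    summed over arrays centered at the given lateral offsets (array at z = 0).
    Each array transmits from its center and receives on all N elements."""
    k = 2 * np.pi / LAM
    img = np.zeros(xs.size, dtype=complex)
    for tx in offsets:
        rx = tx + D * (np.arange(N) - (N - 1) / 2)
        # Two-way path lengths to the true scatterer (simulated echo phases)
        true_path = np.hypot(px - tx, z) + np.hypot(px - rx, z)
        # Two-way path lengths to every image pixel (delays applied by D&S)
        pix_path = np.hypot(xs - tx, z)[None, :] + np.hypot(xs[None, :] - rx[:, None], z)
        img += np.exp(1j * k * (pix_path - true_path[:, None])).sum(axis=0)
    bp = np.abs(img)
    return bp / bp.max()

def width_3db(bp, dx):
    """Width of the region where the normalized response exceeds -3 dB."""
    return np.count_nonzero(bp >= 1 / np.sqrt(2)) * dx

xs = np.linspace(-0.5, 0.5, 2001)
dx = xs[1] - xs[0]
single = das_beampattern([0.0], xs)
sas = das_beampattern((np.arange(4) - 1.5) * L / 2, xs)
print(width_3db(single, dx), width_3db(sas, dx))  # SAS mainlobe markedly narrower
```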

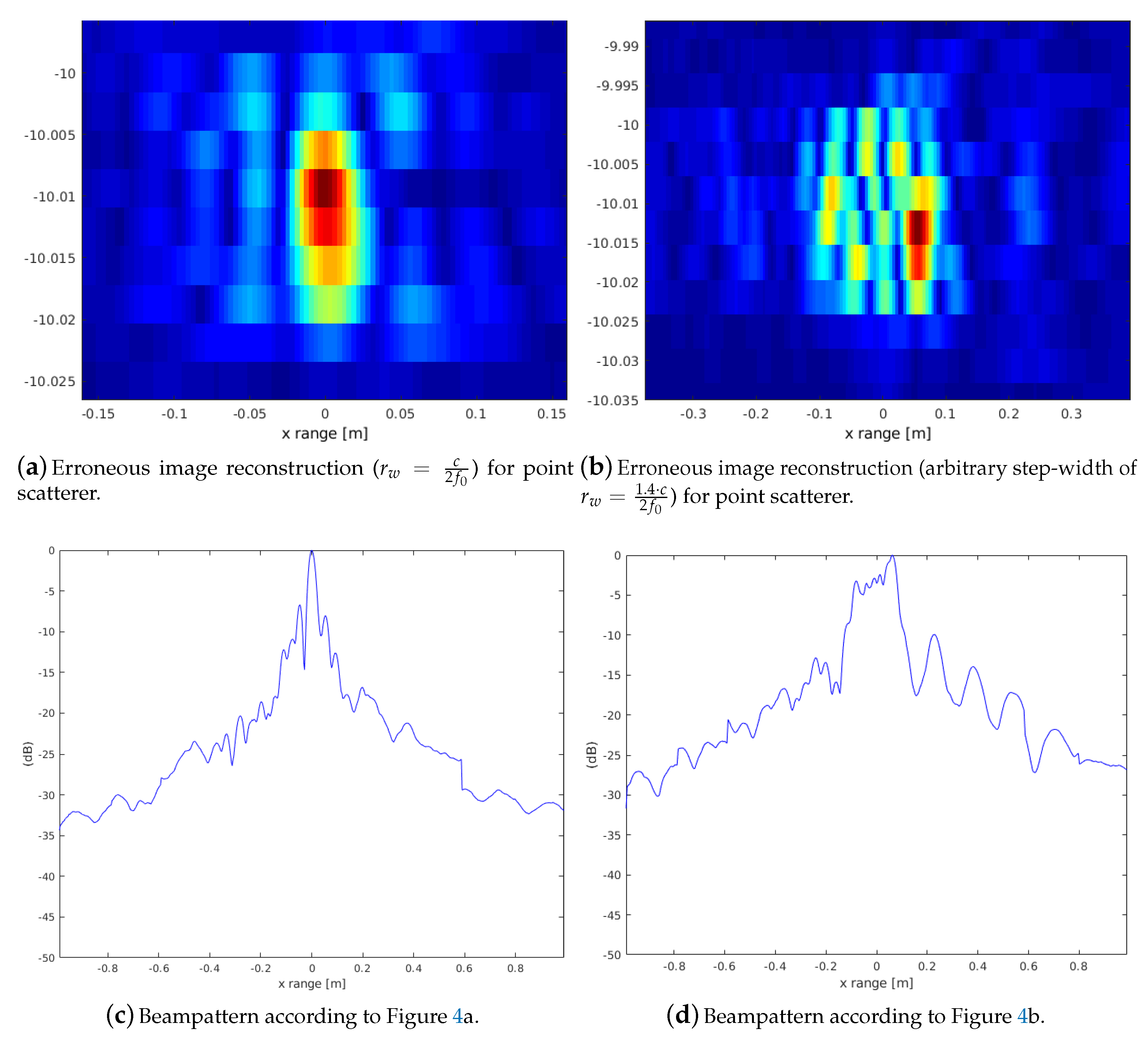

The following illustrates this SAS image reconstruction using two different range step-widths.

Figure 4c shows the case with

. Random pixel errors are introduced according to Table 1. The described baseband processing leads to an extremely low oscillation. As a consequence, the erroneous coherent summation still achieves a gain in resolution within certain limits of voxel/pixel errors. The image reconstruction (Figure 4c) still shows a bounded region around the point scatterer. The counterpart uses an odd step-width

where the image reconstruction (Figure 4d) and its phase structure form an unstructured region.

The general principle demonstrated by the beampattern of the point scatterer suggests that a significant SAS gain from coherent imaging can still be achieved with pixel/voxel errors, which can arise from localization inaccuracies when only registration is used to derive the spatial relations between multiple scans.

4. Sampling the Aperture

A constraint for the processing of sub-apertures is similar to the sampling of any signal: a continuously sampled signal along the processed aperture is necessary to avoid spectral artifacts. In [31], a similar problem of nonuniform synthetic aperture positions for SAR nondestructive evaluation imaging is investigated. There, an approach is introduced that allows sampling from nonuniform positions by using a nonuniform fast Fourier transform (NUFFT) for the Stolt interpolation. Note that a gap within the aperture can be seen as a multiplication of a rectangular window with subsequent sub-apertures (single scan data) in the spatial domain.
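The window interpretation can be checked with a receive-only, far-field array factor. This sketch assumes four sub-arrays of 32 elements at half-wavelength spacing (smaller than the article's arrays, to keep the computation light) and compares contiguous sub-arrays with sub-arrays separated by a hypothetical gap of half an array; it deliberately ignores the transmitter geometry, which the following paragraphs identify as a further source of sidelobes. The function names are illustrative.

```python
import numpy as np

def array_factor(pos_wl, u):
    """Normalized |AF(u)| of uniformly weighted elements at positions pos_wl
    (in wavelengths); u = sin(theta)."""
    return np.abs(np.exp(2j * np.pi * np.outer(u, pos_wl)).sum(axis=1)) / pos_wl.size

def peak_sidelobe_db(pos_wl, u):
    """Highest level outside the mainlobe (beyond the first local minimum)."""
    af = array_factor(pos_wl, u)
    first_min = np.argmax(np.diff(af) > 0)   # first index where |AF| rises again
    return 20 * np.log10(af[first_min:].max())

def sub_aperture_positions(n_sub=4, n_elem=32, gap_elem=0, d=0.5):
    """Element positions of n_sub arrays separated by gap_elem missing elements."""
    step = (n_elem + gap_elem) * d
    return np.concatenate([p * step + d * np.arange(n_elem) for p in range(n_sub)])

u = np.linspace(0.0, 0.25, 5001)
psl0 = peak_sidelobe_db(sub_aperture_positions(gap_elem=0), u)
psl_gap = peak_sidelobe_db(sub_aperture_positions(gap_elem=16), u)
print(psl0, psl_gap)   # about -13.3 dB versus a clearly higher level with gaps
```

The gaps turn the aperture into a product of a sub-array pattern and a sparse four-element pattern, so spurious lobes appear well above the roughly -13 dB sidelobes of a uniform contiguous aperture.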

The example from Section 3 representing a point-spread function from multiple sub-apertures is now repeated, but with an information gap between the four neighboring antenna positions. Compared to the ideal beampattern in Figure 4b, the corresponding beampattern therefore shows considerably higher sidelobes.

Figure 5 compares beampatterns obtained without a continuous phase along the sub-apertures. Figure 5a shows the case in which the arrays are spatially directly adjacent. Nevertheless, the result shows a significant interfering sidelobe.

Figure 5b shows another invalid configuration with a spatial gap of

of the array length between every pair of sub-apertures. The sidelobes in Figure 5b are stronger, which can be explained by the physical interruption between the neighboring arrays.

As shown by the beampattern in Figure 5a, a straightforward linkage of arrays for synthetic aperture processing is not possible. The discontinuities within the received data of all sub-apertures are caused by the different transmitter positions from which each single scan is recorded.

Figure 6 shows a detailed phase response for all four arrays. The phase is displayed for a point-scatterer (

) at a fixed depth and calculated at this depth

,

. The x-axis displays all sensors of all subsequent arrays, whereas the y-axis displays the phase along the lateral range for

. The normalized representation shows four apertures with spacing

and 128 sensors. The lateral range along the y-axis has the same length as the four array apertures on the x-axis. According to Equation (

5), the array manifold vector is summed along all sensors for an image point reconstruction.

Figure 6a shows interruptions along the data of the sub-apertures. In contrast, Figure 6b shows a continuous phase along all sub-apertures. The

overlap between neighboring arrays places one transmitter (center)

and the outer receiver element

from a neighboring array at the same position. As a consequence, both have the same travel time to any spatial position

. The same holds for the second transmitter (center)

and outer receiver element

from the first array

. Hence, an overlap of

guarantees the same phase at the transition from one sub-aperture to the next, which yields an ideal reconstruction, as shown in Figure 3a. This also explains the erroneous sidelobes in the beampattern in Figure 5a, where the arrays are directly adjacent and this condition is not met. In practice, single scans at appropriate positions can be obtained by recording a dense set of scans and selecting the suitable positions after image registration.

4.1. Grating Lobes

The effect of interfering sidelobes due to a discontinuous phase between sub-apertures differs from the effect of grating lobes. If the sensor spacing is larger than

, the peak of a grating lobe already occurs within the region of propagating signals. Here, the problem arises because the transmitter positions, separated by the length of receiver array lying between them, in turn form a pseudo transmitter array. The proposed concept of sub-apertures implies separated transmitter positions with a spacing

which already causes grating lobes even within a smaller visible region. Although most of their energy is suppressed by the receiving beampattern, this effect needs to be considered.

Figure 7a shows the overlay of a transmitter/receiver beam pattern according to the concept of a uniformly weighted linear array (11) (see [28]).

The combined beam pattern is separated into the transmitter pattern having four different positions plus the full receiver pattern (

) of the physical length of all sub-apertures. The combination for two different lengths of overlapping arrays is shown in

Figure 7b. The overlap of

achieves sufficient suppression, since the grating lobes lie within the nulls of the receiver pattern. This follows from the ratio between the positions of grating lobes and zeros of uniform linear arrays. Grating lobes (

) and zeros (

) occur according to (10). Sticking to an array overlap of

, the transmitter sensor distance

can be expressed as

with

as the receiver spacing. This leads to a ratio of

and

, which equals

. Hence, multiples by a factor 2 of grating lobes fall automatically into zeros of the receiver beampattern.
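The coincidence of transmitter grating lobes and receiver nulls can be checked numerically with uniform far-field array factors. The sketch assumes half-wavelength receiver spacing, 128 receivers per array, four positions with transmitters at the array centers, and an overlap of half the array length (our assumption for this sketch; with it, the receivers span 128 + 3·64 = 320 elements). An overlap of a quarter of the array length is included for contrast. Function names are illustrative.

```python
import numpy as np

def ula_pattern(u, n_elem, spacing_wl):
    """Normalized array factor of a uniformly weighted linear array.

    u = sin(theta); spacing_wl = element spacing in wavelengths."""
    k = np.arange(n_elem)
    phase = 2j * np.pi * spacing_wl * np.outer(np.atleast_1d(u), k)
    return np.abs(np.exp(phase).sum(axis=1)) / n_elem

def tx_grating_lobes_vs_rx(overlap, n_rx_per_array=128, n_pos=4, d_r=0.5):
    """Evaluate transmitter and receiver patterns at the transmitter grating lobes.

    Transmitters (array centers) are spaced by the array shift (1 - overlap) * L;
    the receiver pattern spans all sub-apertures."""
    shift = int(round(n_rx_per_array * (1 - overlap)))   # shift in receiver spacings
    d_t = shift * d_r                                    # transmitter spacing [wavelengths]
    n_rx = n_rx_per_array + (n_pos - 1) * shift          # receivers covering the aperture
    m = np.arange(1, int(np.floor(d_t)) + 1)             # grating lobe orders with u <= 1
    u_gl = m / d_t
    return ula_pattern(u_gl, n_pos, d_t), ula_pattern(u_gl, n_rx, d_r)

tx, rx = tx_grating_lobes_vs_rx(overlap=0.5)
print(tx.min(), rx.max())     # full-level grating lobes meet receiver nulls
tx2, rx2 = tx_grating_lobes_vs_rx(overlap=0.25)
print(tx2.min(), rx2.max())   # lobes no longer fall exactly into nulls
```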

As positions of

overlap cannot always be acquired in reality, the concept of null-steering can be applied in a straightforward way.

Figure 8 shows an example with significant grating lobes of up to 20 dB. Null-steering approximates a desired pattern subject to a set of null constraints. The matrix in brackets in (14) is the projection matrix orthogonal to the constraint subspace, which yields the optimal weighting coefficients (see [28]). The matrix C (13) defines the null-steering positions given in

. The coefficients

are applied to the receiver data segments, where the overlapping regions are processed with the same coefficients. The derivation is given in [28], using the same notation u defined in the range from

to

, which corresponds to a coverage of 180°. The plots in Figure 7 and Figure 8 are calculated for a depth of 10 m and a range of 2 m, in accordance with the experiments.
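The projection described above can be sketched in a few lines of NumPy. This is the standard least-squares null-steering projection w = [I - C(C^H C)^{-1} C^H] w_d found in array-processing textbooks, written in our own notation because Equations (13) and (14) are not reproduced here; the array size, the uniform quiescent weights, and the null directions are hypothetical, and the segment-wise application to overlapping receiver data is not modeled.

```python
import numpy as np

def steering_vector(u, n_elem, spacing_wl=0.5):
    """Array manifold vector v(u) of a uniform linear array, u = sin(theta)."""
    return np.exp(2j * np.pi * spacing_wl * np.arange(n_elem) * u)

def null_steering_weights(w_d, null_u, spacing_wl=0.5):
    """Weights closest (least squares) to w_d whose response w^H v(u)
    vanishes at every u in null_u: w = [I - C (C^H C)^-1 C^H] w_d."""
    n = w_d.size
    C = np.column_stack([steering_vector(u, n, spacing_wl) for u in null_u])
    P = np.eye(n) - C @ np.linalg.solve(C.conj().T @ C, C.conj().T)
    return P @ w_d

def beam_response(w, u, spacing_wl=0.5):
    """Beam response w^H v(u)."""
    return np.vdot(w, steering_vector(u, w.size, spacing_wl))

n = 128
w_d = np.ones(n) / n                  # uniform (quiescent) weighting
nulls = [0.2, 0.35]                   # hypothetical grating-lobe directions
w = null_steering_weights(w_d, nulls)
print([abs(beam_response(w, u)) for u in nulls])   # ~0 at the constrained directions
print(abs(beam_response(w, 0.0)))                  # mainlobe nearly unchanged
```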

4.2. Compressed Sensing for Aperture Gap Recovery

As already discussed in Section 4.1, the exact desired sensor positions cannot always be achieved; in such cases, a data extrapolation within a certain range is desirable. The 2D FT of the entire array data represents the fast- and slow-time spectrum, where the slow time originates from the slow movement along the synthetic aperture compared to the time signal [1] (see Figure 9a). It is shown in the following that a spatial gap recovery for small ranges leads to good results.

Compressed sensing (CS) [32] reduces the number of acquired samples so that a subsequent signal compression is avoided; it has been used before in the context of conventional stripmap SAR [33]. A requirement is a sparse signal representation in the parameter domain of the chosen basis functions. This is usually the frequency domain when the Fourier basis is chosen. As the 2D spectral array representation (Figure 9a) shows, the slow-time frequency content is a sparse representation concentrated at low frequencies. In case a successful scan registration in our approach yields sensor-platform positions that reveal a gap in an ideal sequence of neighboring sensors, CS is a feasible way of recovering the signal information. However, the suggested CS method is only feasible for offline processing, since it requires the full array signals from all antenna positions before image reconstruction. For more details on CS theory and applications, we refer to the corresponding literature; note that CS is currently an intensive research topic in many imaging areas far beyond sonar. For example, the work in [34] deals with multiresolution CS, enabling the reconstruction of a low-resolution signal from insufficient CS samples. In many applications, CS is tailored to the specific data representation and its sparsity [35,36,37].

Figure 9 shows the signal representation where the array manifold vector is multiplied with the phase shift matrix

defined in (3). This representation corresponds to Equation (5) before the accumulation of the rectified channel information for the image reconstruction.

Figure 9b shows the data matrix of two neighboring antenna signals, where the gap corresponds to 10 sensor spacings. Hence, the CS signal recovery is applied to an intermediate result of the beamforming reconstruction, which takes the sensor positions (array manifold vector) into account.

So, CS is used to supplement missing samples, which are reconstructed from basis functions whose weighting coefficients are approximated from an underdetermined linear system. The basis functions should fit the specific problem; here, a 2D Fourier basis is chosen. The subsampled signal is defined as a sliding window

grabbing

samples along the time-segment and parts of the sensor channels. Hence, a signal matrix

of size

is the measurement input for CS. The number of missing sensor channels describing the spatial gap is defined as

. Concretely, the vector

defined in (17) specifies the range of sensor indices describing the gap between neighboring sensor-platform positions. The window along the time segment overlaps by one sample on both sides. The corresponding basis functions are defined as a 2D Fourier basis (15). Matrix

(16) contains, as 1D vectors, all possible combinations of the frequency vectors

u and

v with the defined time segments

and

given in (17).

The problem is an underdetermined linear system (22) that, in the ideal case, provides approximated 2D Fourier coefficients of the sliding window. The resulting vector represents the 2D Fourier coefficients

of size

. The number of vertical frequency components is then

and the horizontal components

. Note that the number of sensors is now supplemented by the number

. Once reliable coefficients are found, the signal can be recovered.

The main idea in the seminal publication on CS [32] is to substitute the NP-hard $\ell_0$ norm by the closest convex norm, the $\ell_1$ norm. Accordingly, the coefficients

can be determined by an optimization process (23). Nevertheless, a variety of solutions is still possible (e.g., spikes within the gaps), which are unlikely to occur in this data. The approach here is therefore to restrict the basis to a limited number of frequencies that provide a decent approximation of the slow-time domain. In (21), a range is defined with corresponding restricted symmetric basis functions.

is the number of effective frequencies. Hence, the corresponding matrix

has a rank of

. The required inverse

is determined by the pseudoinverse using the Singular Value Decomposition (SVD) (24). The notation

describes the

-submatrix, i.e., A with the i-th row and j-th column deleted. By deleting the rows/columns of D and U according to (24), respectively, the desired parameters can be determined as in (25). The parameters

and

are used as measurement data and are set as follows:

and

is half the number of sensor channels taken from each side of the neighboring array data. A range of 20% to 30% of

as the range of low frequencies yields a sufficient approximation using the pseudoinverse. The missing array data are finally restored by applying an inverse 2D FT to the estimated coefficients (26).
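The restricted-frequency recovery can be sketched as follows. This is an illustration under stated assumptions rather than the article's implementation: it fits a 2D Fourier basis, whose slow-time frequencies are limited to a symmetric low-frequency band, to the measured channels with NumPy's SVD-based pseudoinverse (instead of an l1 solver), uses the window size as the Fourier period, and is tested on a synthetic band-limited window with a hypothetical 10-channel gap. The function name and all sizes are illustrative.

```python
import numpy as np

def recover_gap(window, gap, k_max):
    """Fill the sensor channels listed in `gap` of a (time x sensor) window.

    Fits a 2D Fourier basis restricted to slow-time frequencies -k_max..k_max
    to the known channels via the SVD-based pseudoinverse, then evaluates
    the fitted model on the missing channels."""
    n_t, n_s = window.shape
    tt, ss = np.meshgrid(np.arange(n_t), np.arange(n_s), indexing="ij")
    ff, gg = np.meshgrid(np.arange(n_t), np.arange(-k_max, k_max + 1), indexing="ij")
    phase = (np.outer(tt.ravel(), ff.ravel()) / n_t
             + np.outer(ss.ravel(), gg.ravel()) / n_s)
    basis = np.exp(2j * np.pi * phase)          # one column per (f, g) frequency pair
    known = ~np.isin(ss.ravel(), gap)           # rows with measured samples
    coef = np.linalg.pinv(basis[known]) @ window.ravel()[known]
    estimate = (basis @ coef).reshape(n_t, n_s)
    out = window.astype(complex)
    out[:, gap] = estimate[:, gap]              # replace only the missing channels
    return out

n_t, n_s = 8, 40
t = np.arange(n_t)[:, None]
s = np.arange(n_s)[None, :]
truth = sum(a * np.exp(2j * np.pi * (f * t / n_t + g * s / n_s))
            for a, f, g in [(1.0, 1, 0), (0.5, 2, 2), (0.3, 5, -3)])
gap = np.arange(15, 25)                          # 10 missing sensor channels
observed = truth.copy()
observed[:, gap] = 0.0
restored = recover_gap(observed, gap, k_max=3)
print(np.abs(restored - truth).max())            # tiny for this band-limited signal
```

Real array data are not exactly band-limited, so in practice the recovery is only approximate, which is consistent with the restriction to small gaps mentioned above.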

5. Pixel Based Scan Registration

As discussed in the previous sections, the coherent SAS processing of the separate array data requires knowledge of the underlying spatial transformations between the reconstructed sonar images. The idea of using registration of multiple scans from different unknown sensor positions is based on our previous work on the registration of noisy data with partial overlap, including in particular sonar data [19,20,21]. This work includes spectral registration methods [38,39], which are capable of matching scans as an entire unit without depending on features within the scan representation.

The registration method used here is a robust 2D Fourier–Mellin implementation, which determines rotation and translation in subsequent dependent steps. The resampling methodology shown in (

27) is the 2D Fourier Mellin Invariant (FMI) [

40]. It decouples transformation parameters (rotation/scale) from translation. Phase correlation of descriptor functions are generated according to (

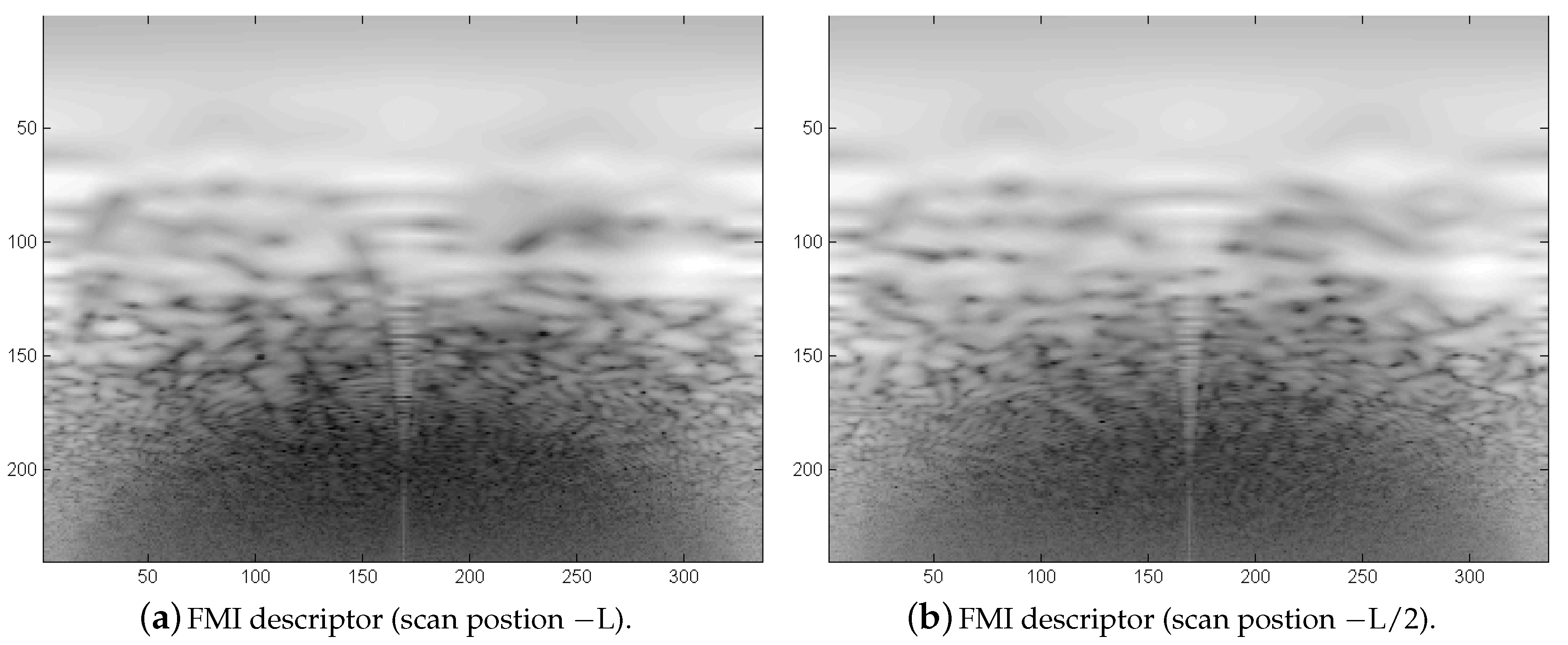

27) and yield unique Dirac peaks indicating all parameters of the underlying transformation. Once this registration is successful, which can be verified by a unique signal/noise ratio of the Dirac maximum, the registration is precise within the rendered pixel/voxel resolution.

Even for typical noisy, fuzzy sonar images, the correct position can be determined, although both peaks (FMI descriptor function and FMI translational registration) appear smeared over a larger area. The experiments in Section 6 demonstrate that sufficiently precise parameters (rotation, translation) can be determined, which allow a pixel alignment for our SAS. Since the scale parameter is not required, the polar-logarithmic resampling of the FMI (27) can be replaced by a simple resampling in polar coordinates.
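The translation step of such a spectral registration can be sketched with phase correlation. This minimal NumPy version is not the authors' FMI implementation: it estimates only an integer translation, omits the rotation step (which would apply the same correlation to polar-resampled magnitude spectra), and uses a hypothetical random test image with added noise. The peak-to-mean ratio returned here is only a crude stand-in for the signal-to-noise check of the Dirac maximum described above.

```python
import numpy as np

def phase_correlation(ref, mov, eps=1e-12):
    """Estimate the integer (row, col) translation of `mov` relative to `ref`.

    Returns the shift and the peak-to-mean ratio of the correlation surface."""
    cross = np.fft.fft2(mov) * np.conj(np.fft.fft2(ref))
    cross /= np.abs(cross) + eps                 # keep only the phase difference
    surface = np.abs(np.fft.ifft2(cross))        # Dirac-like peak at the translation
    peak = np.unravel_index(np.argmax(surface), surface.shape)
    # Peaks in the upper half of an axis are negative shifts (FFT periodicity)
    shift = tuple(int(p) if p <= n // 2 else int(p) - n
                  for p, n in zip(peak, surface.shape))
    return shift, surface.max() / surface.mean()

rng = np.random.default_rng(1)
ref = rng.random((64, 64))
mov = np.roll(ref, shift=(3, -5), axis=(0, 1)) + 0.05 * rng.standard_normal((64, 64))
print(phase_correlation(ref, mov))   # shift (3, -5) with a large peak ratio
```

The periodic nature of the FFT is also why shifts must be unwrapped into the range of plus/minus half the image size, and why side peaks can appear at the opposite image border.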

6. Experiments and Results

The following experiments are based on a high-fidelity sonar simulator [41], which allows the definition of a transmitter/receiver array (number and arrangement of sensors), the pulse form, and the frequency. Note that a realistic simulation of the received signal needs to be based on a multitude of echoes arising from the water volume, from boundary layers, and from the sediment volume, as well as from objects in the water, on the bottom, and even in the sediment. Unlike simple ray-tracing-based sonar simulations with added noise, high-fidelity results hence require the generation of appropriate transmitter and receiver signals in addition to modeling the physical properties of the sediment volume, the targets, and the water/sediment boundary layer.

Targets are specified in the high-fidelity sonar simulator [41] from CAD objects modeled by a collection of facets with parameters depending on the backscattering properties of the object’s surface [42]. The backscattering of each facet is modeled as that of a plane circular element. Orientation, and accordingly backscattering strength, is defined by a vector normal to the object surface at the facet’s grid position. The total backscattered signal is then obtained by coherently superimposing the reflections from all facets. In [22], it is shown that a realistic and reliable emulation of array signals based on dense grids of small discrete scatterers leads to high-fidelity results. For example, a 3D BF is modeled there, which generates realistic images containing all expected elements.

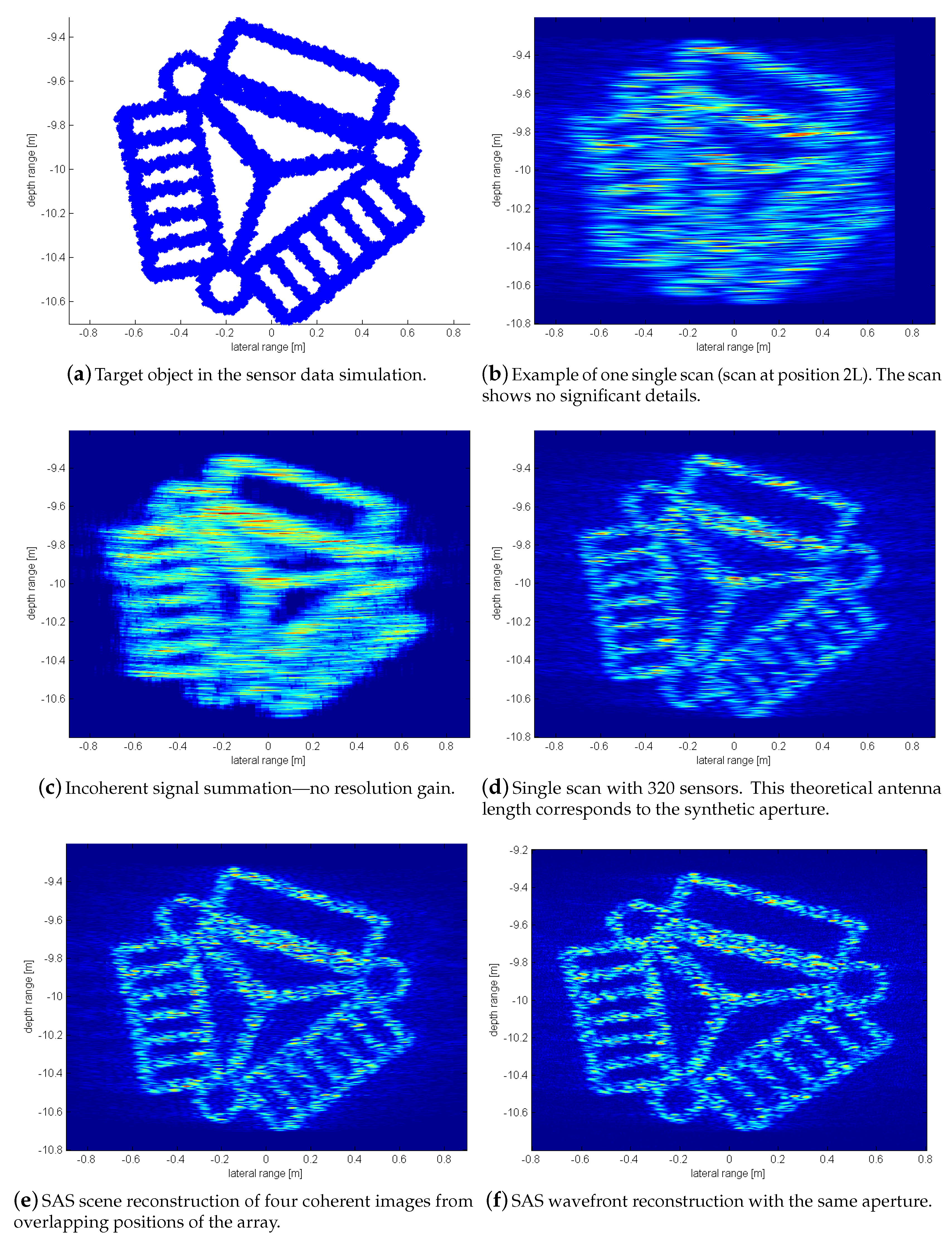

The object used for the experiments here (Figure 10) is a test structure in the form of a mock-up panel for trials in the context of deep-sea oil and gas production (OGP) [18], which was used in the EU project “Effective Dexterous ROV Operations in Presence of Communications Latencies (DexROV)”. In DexROV, the number of robot operators required offshore (Mediterranean Sea, offshore of Marseille, France) was reduced, hence reducing cost and inconvenience, by facilitating offshore OGP operations from an onshore control center (in Brussels, Belgium) via a satellite communication link and by reducing the gap between low-level tele-operation and full autonomy, among others by enabling machine perception on board the Remotely Operated Vehicle (ROV) itself [43,44,45,46]. In the following experiments, the model of the test structure is viewed top-down, which corresponds to the scenario in which the ROV is in the initial approach phase, i.e., when sonar is used to localize the target structure from above.

6.1. 2D Imaging with a 1D Linear Antenna

The following SAS processing is based on four array positions using the same signal processing parameters. The array is a 1D linear array with 128 elements and a pulse center frequency of

kHz. The pulse length is

, the sampling frequency

kHz and the sensor spacing is always

. The resulting SAS reconstruction is shown in Figure 11e.

The significant SAS effect is especially observable when directly comparing it to the quality of the single-scan image shown in Figure 11b. A comparison with the standard SAS wavefront reconstruction (Section 2.2) along the same aperture u is shown in Figure 11f. In this wavefront reconstruction and the corresponding generated data, ideal phase relations are used. Our SAS result and the ideal-phase result are very similar. The original CAD model is shown in Figure 11a.

The incoherent summation in Figure 11c yields no information gain. Another comparison is shown in Figure 11d, where a theoretical 1D antenna of the full SAS length is used. This result is less accurate, which can be explained by the omnidirectional beampattern of the transmitter. It demonstrates that a resolution gain can be achieved by combining transmitter and receiver beampatterns, provided that the grating lobes are well suppressed by null-steering (see Section 4.1).

These clearly visible effects are also supported by a numerical analysis using standard statistical measures for figure/ground separation: for each cell, it is tested whether it indicates the presence of the object (the figure), which is considered a positive response, or not, i.e., whether the cell indicates the (back)ground, which, as usual, is considered the negative response in this test. Based on this standard definition of the figure/ground separation test, the related statistical measures can be calculated: the True Positive Rate (TPR), also known as recall or sensitivity; the True Negative Rate (TNR), also known as specificity or selectivity; the False Positive Rate (FPR), also known as fall-out or the probability of false alarm; and the False Negative Rate (FNR), also known as miss rate.
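These measures follow directly from the cell-wise confusion counts. The sketch below assumes that the reconstructed image has already been thresholded into a binary figure/ground map (the threshold used in the article is not stated in this section) and uses a tiny hypothetical example; the function name is illustrative.

```python
import numpy as np

def figure_ground_rates(pred, truth):
    """TPR, TNR, FPR and FNR of a binary figure/ground map.

    pred, truth: boolean arrays, True = cell belongs to the object (figure)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.sum(pred & truth)
    tn = np.sum(~pred & ~truth)
    fp = np.sum(pred & ~truth)
    fn = np.sum(~pred & truth)
    tpr = tp / (tp + fn)          # recall / sensitivity
    tnr = tn / (tn + fp)          # specificity / selectivity
    return {"TPR": tpr, "TNR": tnr, "FPR": 1 - tnr, "FNR": 1 - tpr}

truth = np.array([[0, 1, 1, 0],
                  [0, 1, 1, 0]], dtype=bool)
pred = np.array([[1, 1, 1, 0],            # one false alarm
                 [0, 1, 0, 0]], dtype=bool)  # one miss
print(figure_ground_rates(pred, truth))  # TPR 0.75, TNR 0.75, FPR 0.25, FNR 0.25
```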

Table 2 shows the corresponding results. The ground truth of the target object (case (a)) obviously leads to perfect results and is only included for the sake of completeness. The single scan (case (b)) has a smeared-out response that overestimates the object. There is hence a high recall due to many “lucky hits”, but the probability of false alarms is very high in particular. The incoherent summation (case (c)) provides a somewhat clearer representation of the object, but the probability of false alarms is still very high. The hypothetical sonar with 320 sensors in one device (case (d)) leads to an improvement with respect to the probability of false alarms, but false alarms are still quite probable and the recall drops significantly. Our proposed method (case (e)) performs significantly better than the alternatives and provides high recall and selectivity with low fall-out and miss rates. Furthermore, it comes close to the theoretical best case of idealized SAS processing (case (f)).

To further illustrate the robustness of our method,

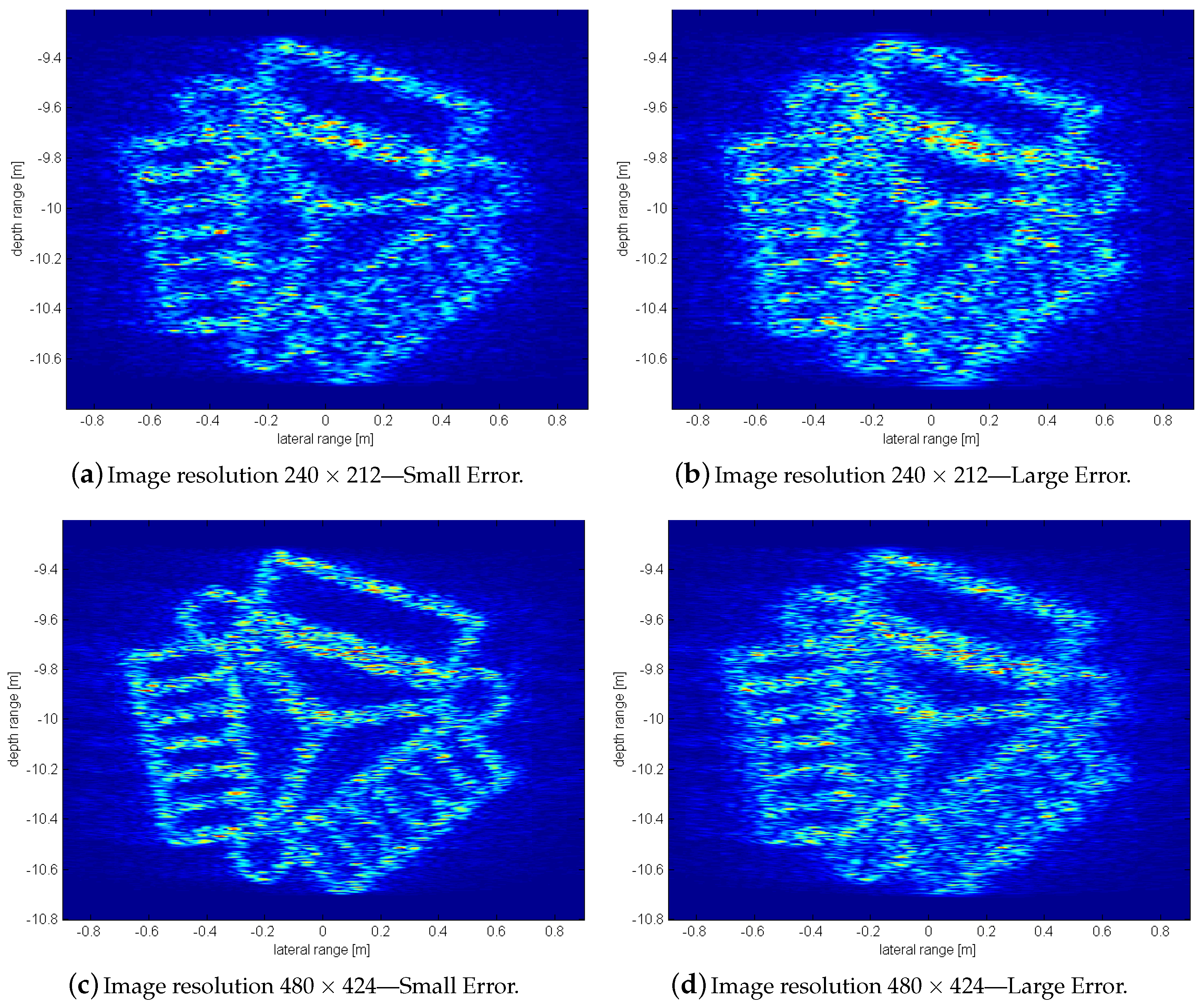

Figure 12 shows a comparison of two different resolutions with a set of two different registration errors on the pixel level.

Figure 12a,b shows an image reconstruction using a step-width

. The second example in Figure 12c,d has twice the resolution, using half the step-width

. Concretely, a range of

on

and

pixels is used. Similar to Section 3, a pixel error is introduced, demonstrating the different behavior for different image resolutions.

For both resolutions, a set of small errors not exceeding a shift of one pixel and a set of larger errors of up to two pixels are applied (see Table 3). As expected, pixel errors have a more significant effect at the lower resolution than at the higher resolution. Figure 12c shows that at the higher resolution, the erroneous superposition of signals still leads to an increase in resolution, while the other example degrades to a level similar to that of a single scan (Figure 11b).

The FMI registration uses signal information from the entire image and not just single prominent features that are hard to find in sonar images.

Figure 11b shows the leftmost scan of the set of sonar images; the other three scans are shifted counterparts with a similar appearance.

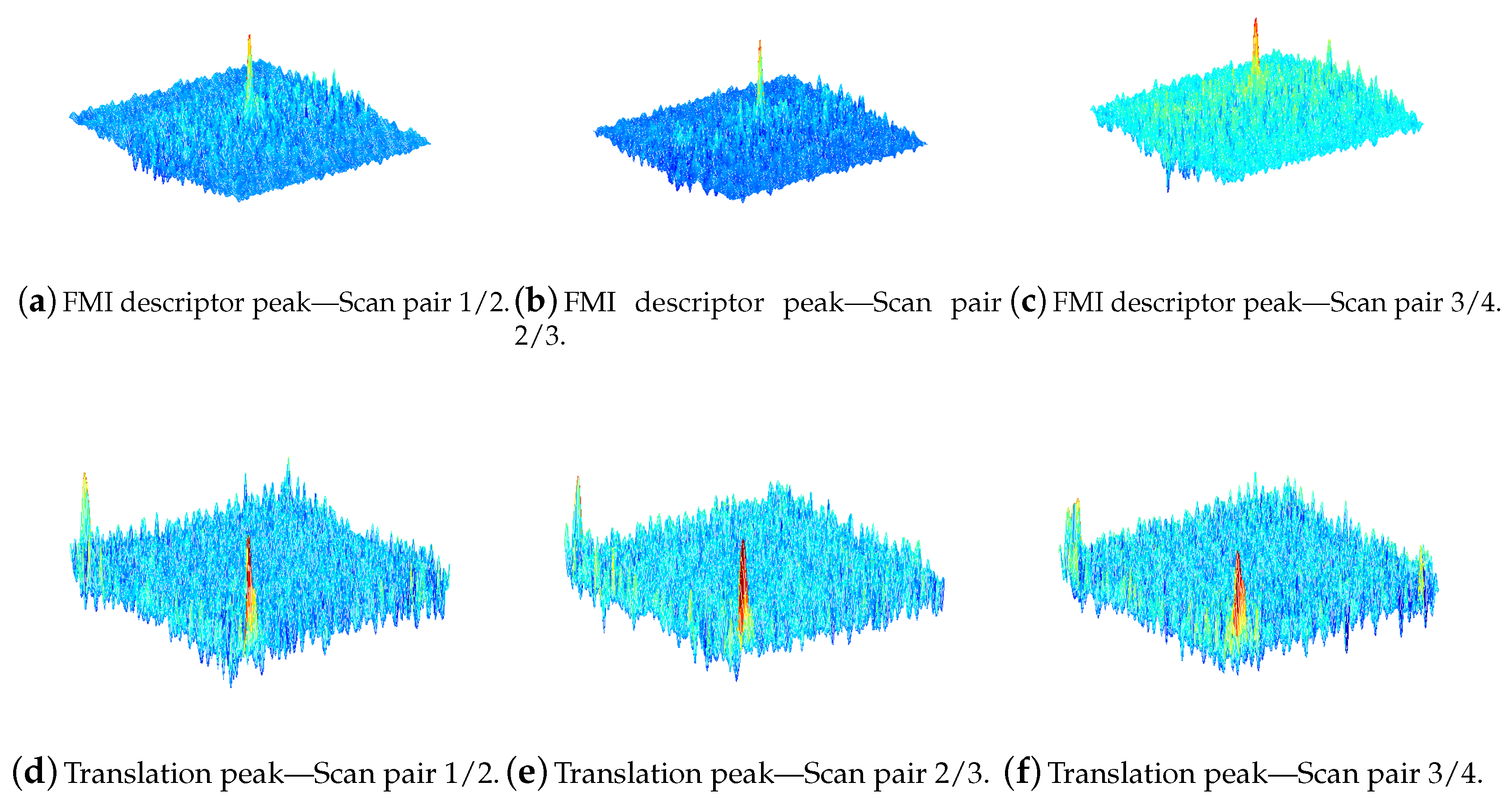

Figure 13 shows the registration results from phase matching of the four sonar images.

An example of an FMI descriptor pair is shown in Figure 14. In the case of no rotation, both functions are nearly identical. Phase matching yields a peak indicating the rotation angle. After rotational alignment, the translation is determined using phase matching again.

Table 4 shows the resulting transformation parameters. After rounding all translation parameters, a nearly exact integer pixel position is found for a coherent alignment. Using the parameters for the lower resolution

, a step-width of L/2 corresponds to a theoretical pixel shift of

between corresponding scan pairs. The registration results all lie well between 15 and 16 pixels and are all 0 for the vertical position. Although the translational registration peaks in Figure 13 are broad due to artifacts and extreme blurring of the single sonar scans, the maxima indicate the correct translation parameters. Side peaks from translation occur at the opposite side due to the periodic nature of the FT.