Future-Frame Prediction for Fast-Moving Objects with Motion Blur

Abstract

1. Introduction

2. Related Work

2.1. Learning Implicit Physics

2.2. Learning Explicit Physics

2.3. Learning for Real Scenes

3. Methods

3.1. Synthetic Data Generation

3.2. Future-Frame Prediction Network

3.3. Training Scheme

3.3.1. Training with Synthetic Data

3.3.2. Fine-Tuning with Real Data

3.4. Implementation Details

3.5. Extension to Multiple Objects

4. Results

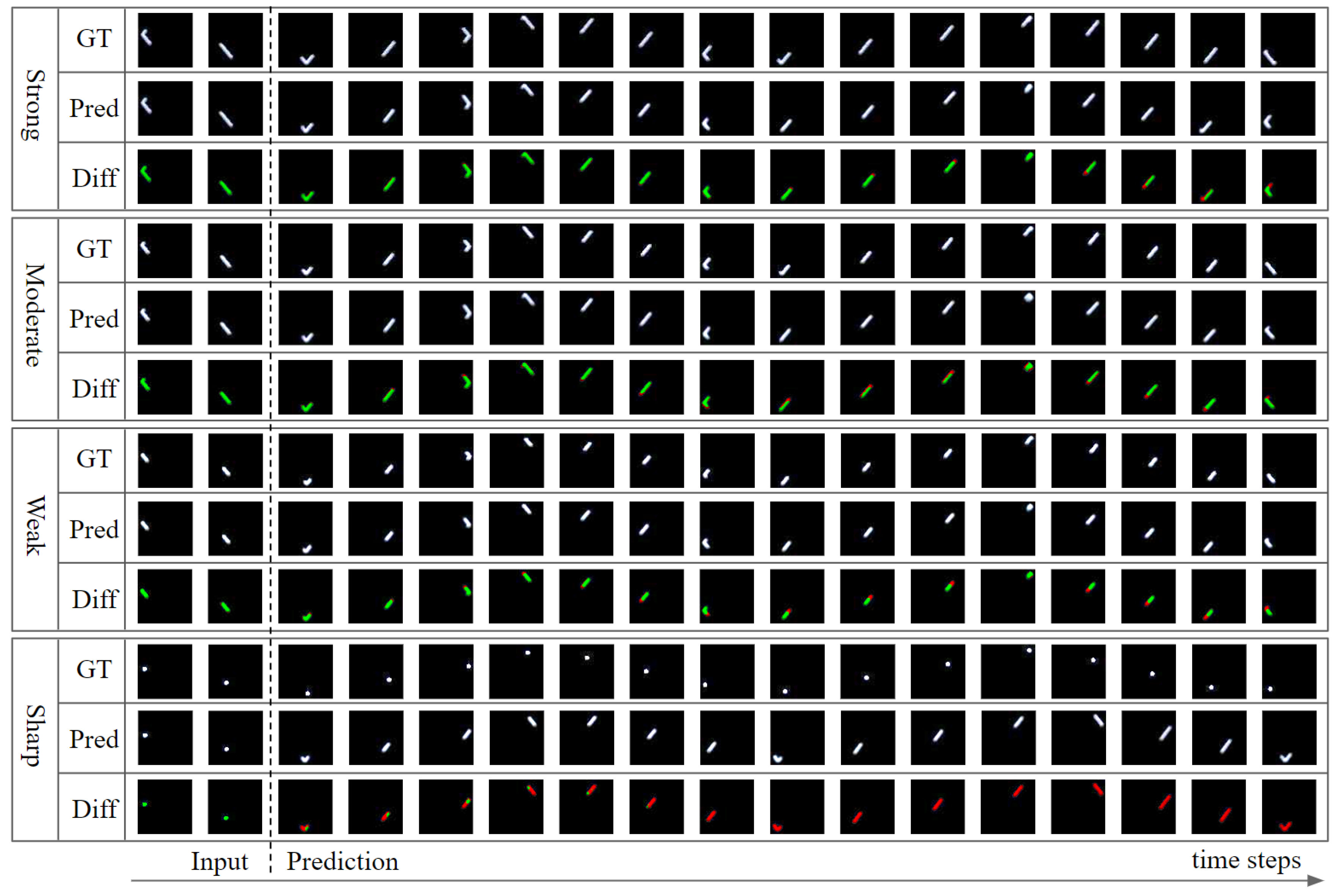

4.1. FDB

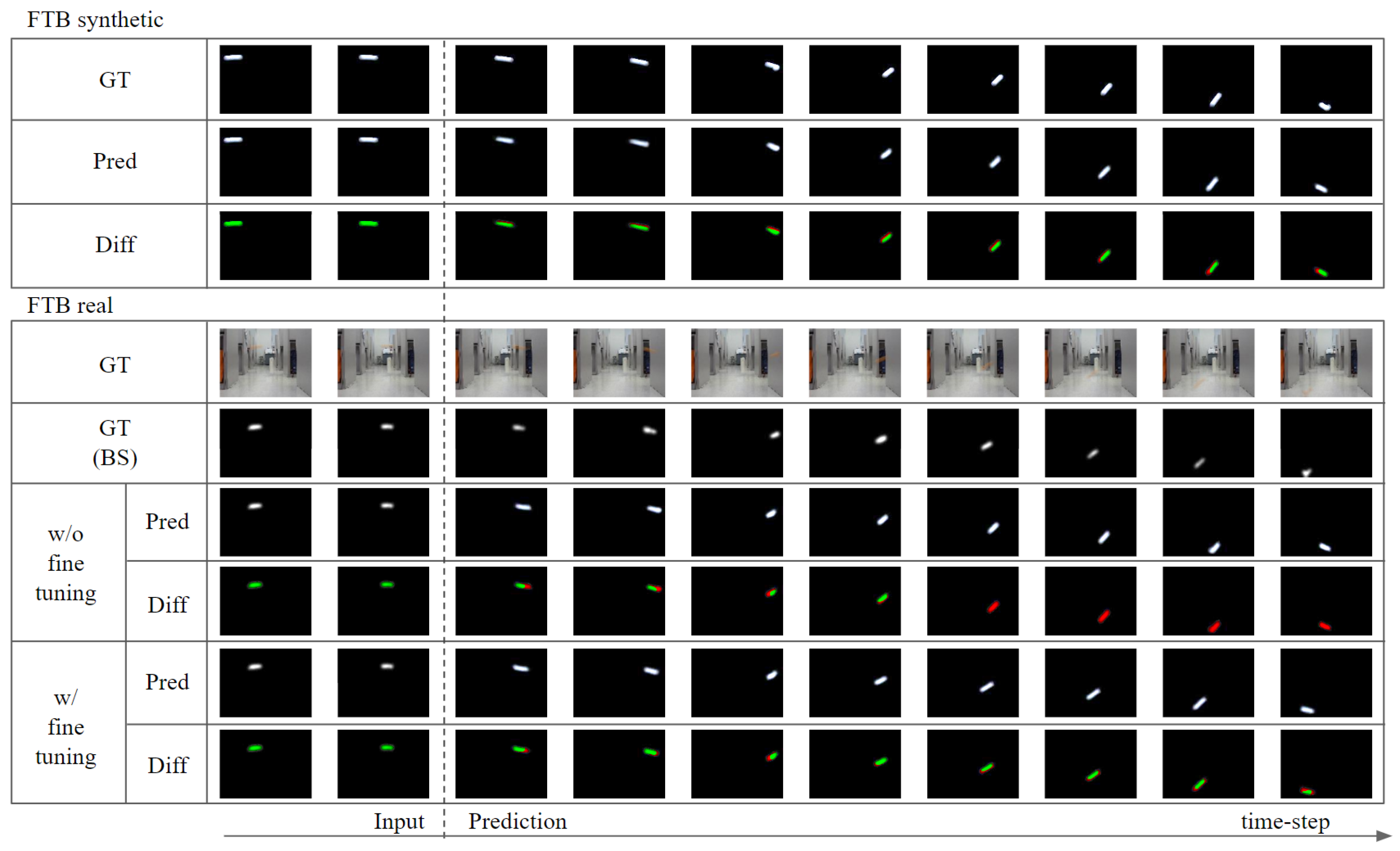

4.2. FTB

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| | FDB1 | FDB2 | FDB3 | FTB Synthetic | FTB Real |
|---|---|---|---|---|---|
| Generating method | synthetic | synthetic | synthetic | synthetic | real |
| Number of objects | 1 | 2 | 3 | 1 | 1 |
| Training set size | 900 | 900 | 2700 | 4700 | 64 |
| Test set size | 100 | 100 | 100 | 100 | 30 |
| Frame size (pixels) | 64 × 64 | 64 × 64 | 64 × 64 | 160 × 120 | 160 × 120 |
| Frames per video | 20 | 20 | 20 | 10 | 10 |
| Object state/shutter speed labels | available | available | available | available | not available |

| Metric | FDB1 | FDB2 | FDB3 |
|---|---|---|---|
| SSE | 4.40 | 10.61 | 13.81 |
| SSIM | 0.948 | 0.855 | 0.809 |
| PE | 107.52 | 178.86 | 211.44 |
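As a rough illustration of how a frame-level error such as the SSE row above could be computed, the sketch below assumes that SSE stands for the sum of squared per-pixel differences between a predicted frame and its ground-truth frame, averaged over the frames of a video. That reading, the function names, and the absence of any pixel-range normalization are all assumptions; the tables do not state the exact definition used.

```python
import numpy as np


def sum_squared_error(pred, target):
    """Sum of squared per-pixel differences between two frames.

    Assumption: this is one plausible reading of "SSE"; the normalization
    behind the reported values is not given in the tables.
    """
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    if pred.shape != target.shape:
        raise ValueError("pred and target must have the same shape")
    return float(np.sum((pred - target) ** 2))


def mean_sse_over_frames(pred_frames, target_frames):
    """Average the per-frame SSE over a sequence of frames (hypothetical helper)."""
    if len(pred_frames) != len(target_frames):
        raise ValueError("sequences must have the same number of frames")
    if len(pred_frames) == 0:
        raise ValueError("sequences must not be empty")
    errors = [sum_squared_error(p, t) for p, t in zip(pred_frames, target_frames)]
    return float(np.mean(errors))


# Toy 64 x 64 frames: the prediction is all zeros, the target differs in two pixels.
predicted = np.zeros((64, 64))
ground_truth = np.zeros((64, 64))
ground_truth[0, 0] = 1.0
ground_truth[1, 1] = 2.0

frame_sse = sum_squared_error(predicted, ground_truth)
video_sse = mean_sse_over_frames([predicted, predicted], [ground_truth, predicted])
```

Under this definition a lower value means the predicted frame is closer to the ground truth, which matches the direction in which SSE and SSIM move together in the table.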

| Method | PE | SSE | SSIM | Execution Time |
|---|---|---|---|---|
| PAIG | 3549.24 | 6.70 | 0.816 | 0.006 |
| Ours | 107.52 | 4.40 | 0.948 | 0.012 |

| Method | PE | SSE | SSIM | Execution Time |
|---|---|---|---|---|
| Eidetic 3D LSTM | - | 12.98 | 0.850 | 0.008 |
| Ours | 178.86 | 10.61 | 0.855 | 0.023 |

| Motion Blur Degree | Strong | Moderate | Weak | Sharp |
|---|---|---|---|---|
| Position Error | 470.52 | 264.27 | 7.65 | 1338.03 |

| Variant | Position Error | Velocity Error |
|---|---|---|
| w/ velocity encoder division | 107.52 | 16.46 |
| w/o velocity encoder division | 3144.60 | 116.36 |

| Model | SSE | SSIM |
|---|---|---|
| No fine tuning | 10.75 | 0.980 |

| Model | SSE | SSIM |
|---|---|---|
| No fine tuning | 13.49 | 0.968 |
| only | 14.82 | 0.963 |
| only | 10.24 | 0.975 |
| only | 9.30 | 0.976 |
| All | 9.15 | 0.977 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, D.; Oh, Y.J.; Lee, I.-K. Future-Frame Prediction for Fast-Moving Objects with Motion Blur. Sensors 2020, 20, 4394. https://doi.org/10.3390/s20164394