1. Introduction

Although industrial robots are flexible platforms and provide high repeatability for the automation of a variety of manufacturing tasks, a low absolute positioning accuracy may limit their applicability [1]. Error sources in robots can be either kinematic or non-kinematic [2,3,4]. Manufacturing and assembly tolerances cause the actual kinematic parameters to deviate from their nominal values, producing kinematic errors [5]. Non-kinematic errors, on the other hand, are usually neglected because they are difficult to model. Nevertheless, non-kinematic errors caused by factors such as temperature variations, joint and link compliance, and gear backlash have a considerable effect on the absolute positioning accuracy. Thus, a method that compensates positioning deviations caused by both kinematic and non-kinematic errors should be devised.

Extensive reports have shown that kinematic errors account for about 90% of the total positioning error [6]. Therefore, kinematic calibration effectively improves the absolute positioning accuracy of robots. This type of calibration comprises four steps: modeling, measurement, identification, and compensation or correction [7]. The kinematic model thus plays a critical role during robot calibration and should meet the following requirements for parameter identification: continuity, completeness, and minimality [8]. The Denavit–Hartenberg convention is a widely used modeling method to describe the robot kinematics [9]. However, this method is not continuous when two adjacent joint axes are parallel or nearly parallel, thus presenting singularities. To overcome the singularity problem, many studies have suggested alternative models. For instance, Hayati added a rotation parameter to the Denavit–Hartenberg convention, establishing the modified Denavit–Hartenberg model [10]. The complete and parametrically continuous model proposed by Meng and Zhuang provides completeness and continuity, but some of its parameters are redundant [11]. The product of exponentials (POE) model provides a complete geometric and parameterized representation of the robot motion, greatly simplifying the kinematic analysis of robotic mechanisms. The POE formula satisfies continuity, completeness, and minimality, making it suitable and widely used for robot kinematic modeling [12].

Robot calibration aims to optimize an objective function and obtain accurate kinematic parameters [13]. The most common calibration algorithms are those based on the least squares method, such as the Gauss–Newton and Levenberg–Marquardt (LM) algorithms, with the latter being robust against interference and enabling global search [14,15]. However, neglecting error sources such as temperature variations and gear backlash limits the effectiveness and accuracy of robot calibration. Various correction methods have been developed to mitigate the influence of non-kinematic errors. Ma et al. demonstrated the importance of non-kinematic errors and generalized them using error matrices containing high-order Chebyshev polynomials that reflect individual error terms [16]. Whitney et al. developed a forward calibration method using joint encoder offset, link length, and consecutive-axis relative orientations as parameters and experimentally evaluated the effects of joint backlash, gear transmission, and compliance errors [17]. Chen et al. parameterized the joint flexibility error for estimation to improve the absolute positioning accuracy. However, the mathematical modeling of these methods is complex and various non-kinematic errors are ignored, limiting their applicability [18].

Given the difficulty of modeling non-kinematic errors, artificial neural networks have been used as an alternative to compensate the absolute positioning error [19]. Neural networks allow one to compensate positioning errors, avoid complex modeling, and comprehensively consider the influence of every error source. Ding et al. proposed a neural network combined with Faugeras vision system calibration technology for accurate calibration of a delta-robot vision system [20]. Likewise, Jang et al. used a radial basis function network to approximate the relationship between the robot joint readings and the corresponding position errors [21]. Wang et al. developed a neural network to extract local features of the error surface and estimated the positioning errors during calibration of a robot manipulator [22].

The performance of neural networks is influenced by their structure and parameters. To optimize neural networks, Rouhani et al. introduced one-pass heuristic rules to determine the center, number, and spread of hidden neurons in the network [23]. Feng et al. used evolutionary particle swarm optimization and developed a neural network with a self-generating radial basis function [24]. González et al. developed a multiobjective evolutionary algorithm to optimize neural networks. Differential evolution algorithms enable global optimization with high identification accuracy and optimal rate [25]. Accordingly, we adopt differential evolution for pre-training of a neural network to optimize the thresholds, initial weights, and the number of hidden neurons for maximizing accuracy and efficiency. We conducted simulations and experiments to verify the performance of the proposed network by employing a six-degree-of-freedom (DOF) industrial robot and a laser tracker as reference.

The main contributions of this work can be summarized as follows. (1) The influence of kinematic and non-kinematic errors on the absolute positioning accuracy is analyzed, and the limitations of kinematic calibration are addressed. (2) A neural network optimized using differential evolution is proposed to enhance the absolute positioning accuracy. Thresholds, initial weights, and the number of hidden neurons in the neural network are optimized using differential evolution to improve the performance of the neural network. The proposed network mitigates the effects of kinematic and non-kinematic errors and thus improves the absolute positioning accuracy of a robot. (3) The experiment and simulation are completed with the six-DOF robot as the research object and the laser tracker as the measuring instrument. The theoretical correctness and effectiveness of the proposed neural network are verified by simulations and experiments.

The remainder of this paper is organized as follows: The influences of kinematic and non-kinematic errors on robot positioning accuracy are analyzed in Section 2. The proposed neural network optimized by differential evolution is detailed in Section 3. In Section 4, simulations and experiments are performed for compensating the absolute positioning error. The discussion and conclusions are presented in Section 5.

2. Kinematic and Non-Kinematic Error Analysis

The accuracy of a robot kinematic model depends on robot calibration. We use the POE model to describe the robot kinematics [

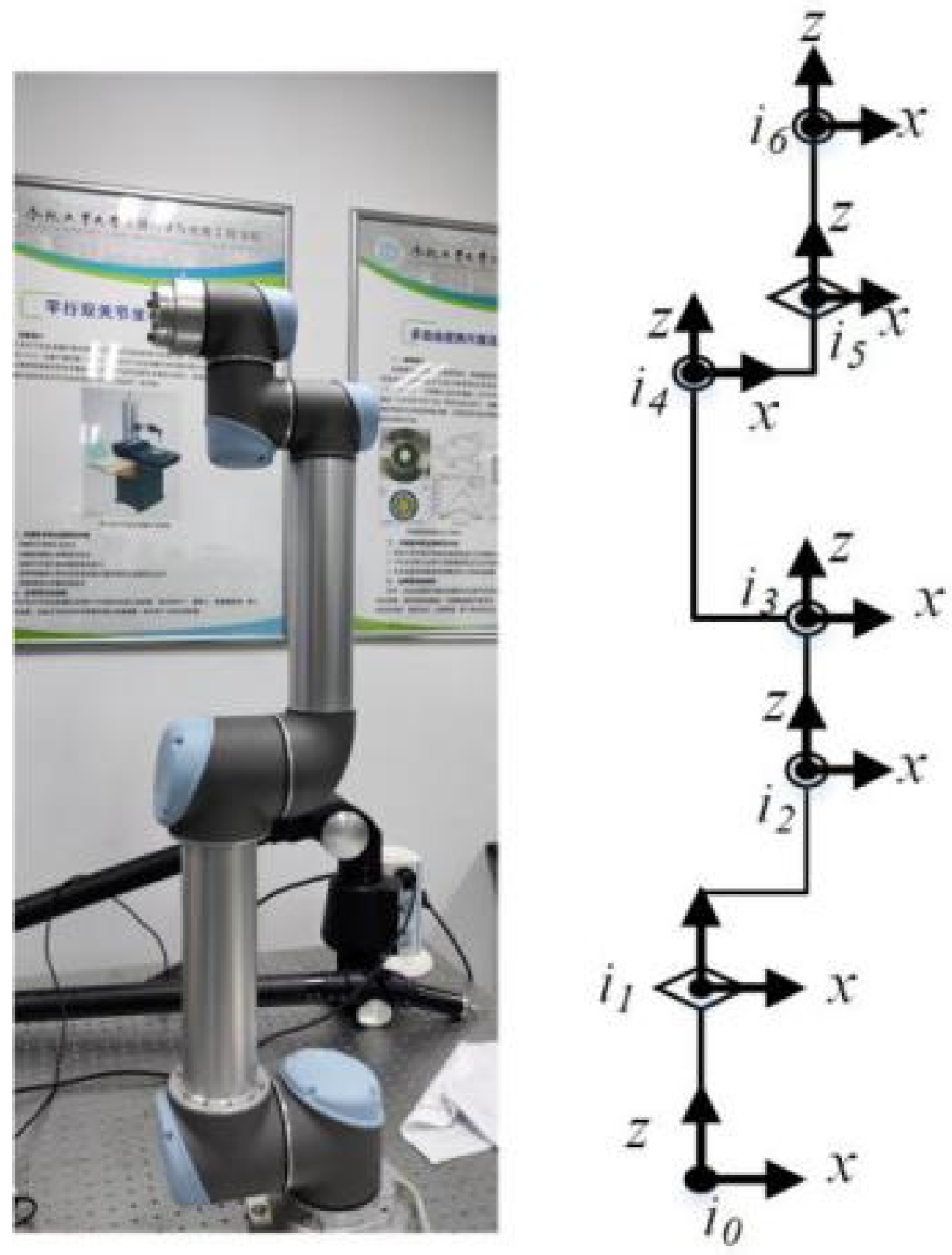

26], considering the coordinate systems of the robot shown in

Figure 1.

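The pose of the end-effector frame $\{T\}$ with respect to the base frame $\{S\}$ is given by

$$g_{st}(q) = e^{\hat{\xi}_1 q_1} e^{\hat{\xi}_2 q_2} \cdots e^{\hat{\xi}_n q_n} e^{\hat{\xi}_{st}} \tag{1}$$

where $q = [q_1, \ldots, q_n]^T$ indicates the robot joint variable and $\xi_{st}$ is the twist of the initial transformation. In Equation (1), $\hat{\xi}$ can be expressed as

$$\hat{\xi} = \begin{bmatrix} \hat{\omega} & v \\ 0 & 0 \end{bmatrix}$$

where $\hat{\omega}$ and $v$ are given by

$$\hat{\omega} = \begin{bmatrix} 0 & -\omega_3 & \omega_2 \\ \omega_3 & 0 & -\omega_1 \\ -\omega_2 & \omega_1 & 0 \end{bmatrix}, \qquad v = -\omega \times r$$

where $r$ represents the coordinate of the origin of each axis in the base coordinate system $\{S\}$; $\omega$ is the direction vector in system $\{S\}$ of each rotation axis.

For a rotational joint, the exponential matrix of the motion screw can be represented as

$$e^{\hat{\xi} q} = \begin{bmatrix} e^{\hat{\omega} q} & (I - e^{\hat{\omega} q})(\omega \times v) + \omega \omega^T v q \\ 0 & 1 \end{bmatrix}, \qquad e^{\hat{\omega} q} = I + \hat{\omega} \sin q + \hat{\omega}^2 (1 - \cos q)$$

The forward kinematics of a six-DOF serial robot is given by

$$g_{st}(q) = e^{\hat{\xi}_1 q_1} e^{\hat{\xi}_2 q_2} e^{\hat{\xi}_3 q_3} e^{\hat{\xi}_4 q_4} e^{\hat{\xi}_5 q_5} e^{\hat{\xi}_6 q_6} e^{\hat{\xi}_{st}}$$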
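As a rough illustration of the product-of-exponentials forward kinematics described above, the following Python sketch composes one exponential per revolute joint and then applies the home pose. The helper names (`mat_mul`, `twist_exp`, `poe_forward`) and the two-joint planar arm in the usage lines are hypothetical and not taken from this work. For simplicity, the home pose is assumed to be passed directly as a 4x4 homogeneous matrix rather than as the exponential of an initial-transformation twist.

```python
import math

def mat_mul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def twist_exp(omega, r, q):
    """Homogeneous transform of a revolute joint.

    omega: unit direction of the joint axis in the base frame
    r:     a point on the joint axis in the base frame
    q:     joint angle in radians
    """
    wx, wy, wz = omega
    s, c = math.sin(q), math.cos(q)
    v = 1.0 - c
    # Rodrigues' rotation formula for a unit axis.
    R = [
        [c + wx * wx * v,      wx * wy * v - wz * s, wx * wz * v + wy * s],
        [wy * wx * v + wz * s, c + wy * wy * v,      wy * wz * v - wx * s],
        [wz * wx * v - wy * s, wz * wy * v + wx * s, c + wz * wz * v],
    ]
    # Rotating about an axis through r maps p to R (p - r) + r, so t = r - R r.
    t = [r[i] - sum(R[i][k] * r[k] for k in range(3)) for i in range(3)]
    return [R[0] + [t[0]], R[1] + [t[1]], R[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]

def poe_forward(axes, points, q, home):
    """Compose the joint exponentials in order, then apply the home pose."""
    g = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for omega, r, qi in zip(axes, points, q):
        g = mat_mul(g, twist_exp(omega, r, qi))
    return mat_mul(g, home)

# Hypothetical planar arm: two unit-length links, both joints about the z axis.
axes = [(0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]
points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
home = [[1, 0, 0, 2], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
pose = poe_forward(axes, points, [math.pi / 2, math.pi / 2], home)
position = [pose[0][3], pose[1][3], pose[2][3]]
```

With both joints at 90 degrees, the first link points along +y and the second along -x, so the end-effector should sit near (-1, 1, 0). A six-DOF robot would use six axis and point pairs in the same way.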

The kinematic parameters of the robot generally deviate from their designed values owing to kinematic error sources such as mechanical deformation and assembly and machining errors, as shown in Figure 2.

When only the kinematic errors are considered, the actual robot position

can be expressed as

where

and

represent the kinematic errors to be corrected. The mathematical expression of kinematic errors can be obtained by kinematic modeling. These errors exist whether the robot is moving or not. Therefore, kinematic errors can be identified by the calibration method so as to effectively improve the positioning accuracy of the robot.

Non-kinematic errors also undermine accuracy, but they are generally neglected because they are difficult and complex to model. All error sources whose contributions to positioning errors cannot be characterized by kinematic parameters are herein described as non-kinematic errors [18]. Non-kinematic errors relate to the pose and dynamic behavior of the robot, so it is difficult to obtain an exact mathematical expression for them. Therefore, kinematic calibration cannot mitigate the effects of non-kinematic errors. Non-kinematic errors with considerable effects, such as those related to strain wave gearing and joint flexibility, occur between the angular encoder and the output shaft at the joint, so the nominal joint variable should be compensated to compute the actual joint rotation.

Considering non-kinematic errors, the actual robot joint variable can be expressed as

where

is the nominal robot joint variable and

represents the compensation factor for non-kinematic errors.

We can use Chebyshev polynomials to calculate

[

16]:

with

where

m denotes the order of the Chebyshev polynomial and

are the polynomial coefficients to be calculated.
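The idea of compensating a nominal joint variable with a Chebyshev series can be sketched as follows. This is only an illustration under stated assumptions: the compensation is assumed to be additive, the joint angle is assumed to be mapped linearly from its working range onto [-1, 1] before evaluating the polynomials, and the function names, joint limits, and coefficient values are hypothetical rather than taken from this work or from [16].

```python
def chebyshev_series(x, coeffs):
    """Evaluate sum_k coeffs[k] * T_k(x) with T_{k+1} = 2 x T_k - T_{k-1}."""
    if not coeffs:
        return 0.0
    t_prev, t_curr = 1.0, x          # T_0(x) and T_1(x)
    total = coeffs[0] * t_prev
    if len(coeffs) > 1:
        total += coeffs[1] * t_curr
    for c in coeffs[2:]:
        t_prev, t_curr = t_curr, 2.0 * x * t_curr - t_prev
        total += c * t_curr
    return total

def compensated_joint(q_nominal, coeffs, q_min, q_max):
    """Return the nominal joint value plus an assumed additive correction."""
    # Assumption: normalize the joint angle to [-1, 1] over its working range.
    x = (2.0 * q_nominal - (q_min + q_max)) / (q_max - q_min)
    return q_nominal + chebyshev_series(x, coeffs)

# Hypothetical coefficients of order m = 2 for a joint with range [-3, 3] rad.
q_actual = compensated_joint(1.5, [0.001, 0.002, 0.003], -3.0, 3.0)
```

In an identification step, the coefficients would be the unknowns fitted to measured position data. The order of the series trades model flexibility against the number of parameters to identify.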

Thus, when nominal joint variables and theoretical kinematic parameters of a robot are known, its actual position is given by

The complexity of non-kinematic errors and the coupling between the compensation of nominal joint variables and robot pose hinder the determination of the actual mathematical representation of the robot position. Therefore, we use a neural network to directly estimate the robot position based on the joint variables. This method avoids complex calibration and modeling, effectively improves the absolute positioning accuracy of the robot and mitigates the influence of kinematic and non-kinematic errors.

4. Simulations and Experiments

We validated the performance of the proposed neural network through simulations and experiments of error compensation on a six-DOF robot manipulator. For comparison, the robot kinematic parameters were calibrated using the POE model [12] and LM algorithm [14,15].

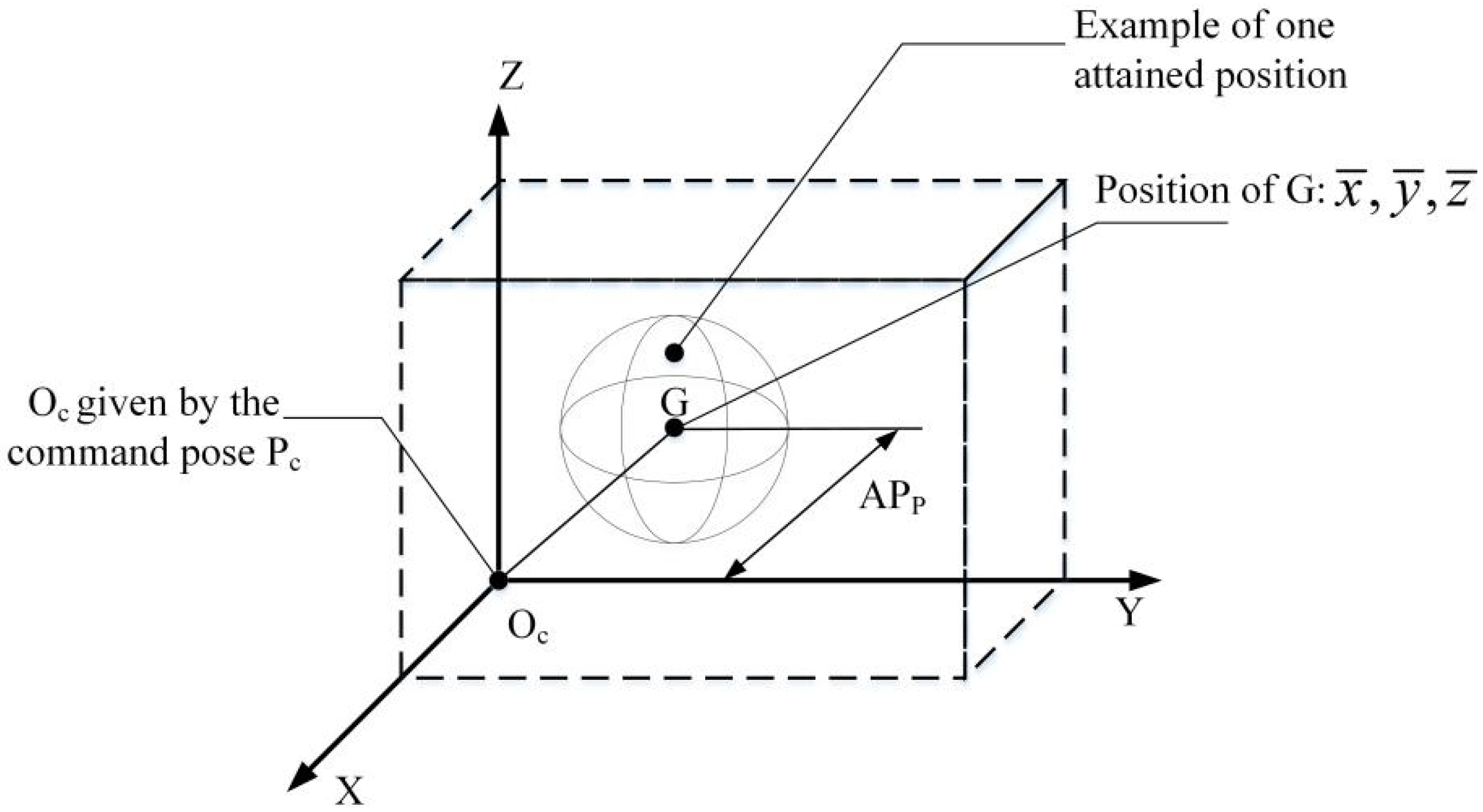

As described in standard ISO 9283 [28], the positioning accuracy is the difference between the position of a command pose and the barycenter of the attained positions, as illustrated in Figure 6.

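Thus, the positioning accuracy can be calculated as

$$AP_P = \sqrt{(\bar{x} - x_c)^2 + (\bar{y} - y_c)^2 + (\bar{z} - z_c)^2}$$

with

$$\bar{x} = \frac{1}{n}\sum_{j=1}^{n} x_j, \qquad \bar{y} = \frac{1}{n}\sum_{j=1}^{n} y_j, \qquad \bar{z} = \frac{1}{n}\sum_{j=1}^{n} z_j$$

where $\bar{x}$, $\bar{y}$, and $\bar{z}$ are the coordinates of the barycenter of the cluster of points obtained after executing the same pose $n$ times, $x_c$, $y_c$, and $z_c$ are the coordinates of the command pose, and $x_j$, $y_j$, and $z_j$ are the coordinates of the $j$-th attained pose along the respective axes.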
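The positioning accuracy defined above, that is, the distance between the command position and the barycenter of the attained positions, can be computed with a few lines of Python. This is a minimal sketch; the function name `positioning_accuracy` and the sample coordinates are hypothetical.

```python
import math

def positioning_accuracy(command, attained):
    """Distance between the command position and the barycenter of attained positions.

    command:  (x, y, z) of the command pose
    attained: list of (x, y, z) positions measured over n repetitions
    """
    n = len(attained)
    barycenter = [sum(p[i] for p in attained) / n for i in range(3)]
    return math.sqrt(sum((barycenter[i] - command[i]) ** 2 for i in range(3)))

# Hypothetical measurements in millimeters for one command pose.
ap = positioning_accuracy((0.0, 0.0, 0.0), [(1.0, 0.0, 0.0), (3.0, 0.0, 0.0)])
```

Because the barycenter averages the repetitions first, random scatter between repetitions largely cancels and the result reflects the systematic offset from the command position.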

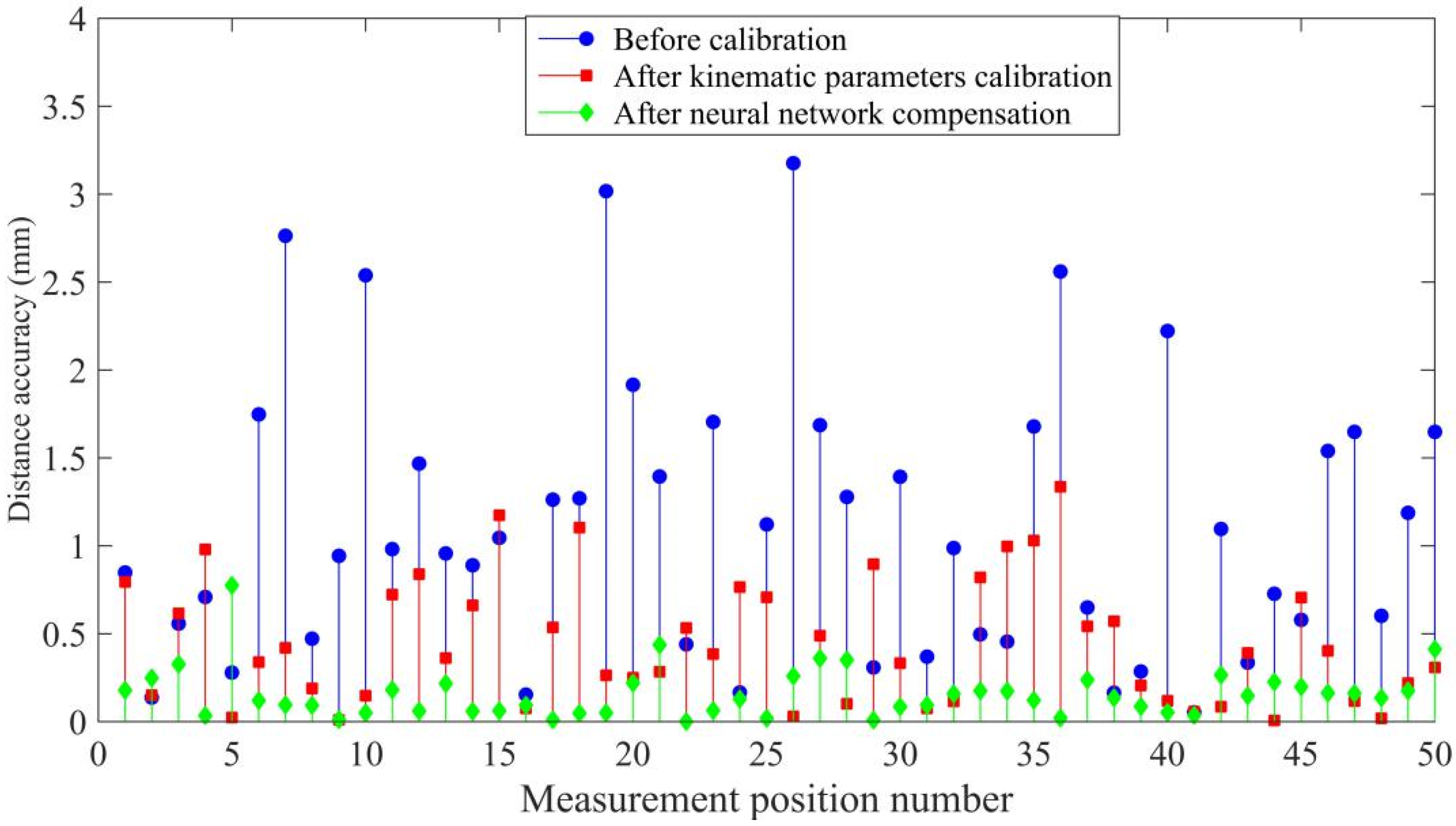

Analogously, the distance accuracy expresses the deviation in positioning and orientation between the command distance and mean of the attained distances, as illustrated in Figure 7.

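The distance accuracy can be calculated as

$$AD_P = \bar{D} - D_c$$

with

$$\bar{D} = \frac{1}{n}\sum_{j=1}^{n} D_j, \qquad D_j = \lVert P_{1j} - P_{2j} \rVert, \qquad D_c = \lVert P_{c1} - P_{c2} \rVert$$

where $P_{c1}$ and $P_{c2}$ are the two command positions, and $P_{1j}$ and $P_{2j}$ are the corresponding positions attained in the $j$-th of $n$ repetitions.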

We use both the distance accuracy and positioning accuracy to quantify error compensation.
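The distance accuracy, taken here as the mean attained distance minus the command distance in the sense of ISO 9283, can be sketched in Python as follows. The function name `distance_accuracy` and the sample points are hypothetical, and the signed form is an assumption; an absolute value may be applied when only the magnitude is reported.

```python
import math

def distance_accuracy(command_pair, attained_pairs):
    """Mean attained distance minus the command distance.

    command_pair:   (P_c1, P_c2), the two command positions
    attained_pairs: list of (P_1j, P_2j) attained positions, one pair per repetition
    """
    d_command = math.dist(command_pair[0], command_pair[1])
    d_mean = sum(math.dist(p1, p2) for p1, p2 in attained_pairs) / len(attained_pairs)
    return d_mean - d_command

# Hypothetical data in millimeters: command distance 1.0, attained 1.1 and 1.3.
ad = distance_accuracy(
    ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0)),
    [((0.0, 0.0, 0.0), (1.1, 0.0, 0.0)), ((0.0, 0.0, 0.0), (1.3, 0.0, 0.0))],
)
```

Unlike the positioning accuracy, this measure depends only on relative positions, so it is insensitive to a constant offset between the measurement and robot base frames.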

4.1. Simulations

To perform simulations, we added random errors to the theoretical kinematic parameters of the robot to obtain the actual parameters, and we introduced non-kinematic errors as compensation terms on the nominal joint variables. The actual and theoretical end-effector position coordinates of the robot were calculated using the POE model.

We applied 1000 pairs of joint variables and position coordinates to optimize the thresholds, initial weights, and number of hidden neurons in the proposed network using differential evolution. The same 1000 samples were applied to train the optimized neural network, and 100 command points were employed for verification of the prediction accuracy of the trained neural network. In addition, the robot kinematic parameters were calibrated using the POE model and LM algorithm for comparison by applying the same samples. The compensation results are shown in Figure 8 and Figure 9.
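For readers unfamiliar with differential evolution, the search step used to pre-train the network can be illustrated with a generic DE/rand/1/bin loop. This is a sketch under stated assumptions and not the configuration used in this work: the function name, population size, scale factor `f`, crossover rate `cr`, and the quadratic stand-in objective are all hypothetical. In the actual pre-training, the objective would be the network's prediction error as a function of the thresholds, initial weights, and hidden-neuron count.

```python
import random

def differential_evolution(objective, bounds, pop_size=20, f=0.6, cr=0.9,
                           generations=200, seed=0):
    """Minimize `objective` over box `bounds` with DE/rand/1/bin."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    cost = [objective(x) for x in pop]
    for _ in range(generations):
        for i in range(pop_size):
            # Mutation: combine three distinct individuals other than i.
            a, b, c = rng.sample([k for k in range(pop_size) if k != i], 3)
            j_rand = rng.randrange(dim)  # guarantees at least one mutated gene
            trial = []
            for j in range(dim):
                if rng.random() < cr or j == j_rand:
                    value = pop[a][j] + f * (pop[b][j] - pop[c][j])
                    lo, hi = bounds[j]
                    value = min(max(value, lo), hi)  # clip to the search box
                else:
                    value = pop[i][j]
                trial.append(value)
            # Greedy selection: keep the trial only if it is not worse.
            trial_cost = objective(trial)
            if trial_cost <= cost[i]:
                pop[i], cost[i] = trial, trial_cost
    best = min(range(pop_size), key=cost.__getitem__)
    return pop[best], cost[best]

# Stand-in objective with its minimum at (0.3, 0.3, 0.3).
best_x, best_cost = differential_evolution(
    lambda x: sum((v - 0.3) ** 2 for v in x), [(-1.0, 1.0)] * 3
)
```

Because selection is greedy and population-based, the method needs no gradient of the objective, which is why it can also handle a discrete quantity such as the number of hidden neurons once that quantity is encoded in the parameter vector.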

Compared to the calibration method, the proposed neural network optimized using differential evolution provides better compensation. The maximum distance accuracy is improved from 3.1760 mm to 0.7743 mm, and the average distance accuracy is improved from 1.1118 mm to 0.1564 mm by using the proposed network. The maximum positioning accuracy is improved from 3.1760 mm to 0.7743 mm and the average positioning accuracy is improved from 0.7411 mm to 0.1007 mm by using the proposed algorithm.

As summarized in Table 1 and Table 2, the proposed method has the best absolute positioning accuracy and distance accuracy.

4.2. Experiment

The calibration system, as shown in Figure 10, consists of a six-DOF serial robot (Universal Robot 5, Universal Robots), a laser tracker (API T3, Automated Precision Inc., Maryland, USA) with an accuracy of 0.005 mm/m, and an accompanying laser reflector. The reflector was fixed at an assigned location on the robot end-effector. The robot moved within the workspace, and the spatial position coordinates of the end-effector were collected using the laser tracker as the output of the neural network. Additionally, the corresponding joint angles of the sampling points were recorded as the input of the neural network.

We measured 600 samples in the workspace of the robot for optimization and training of the proposed network. The same samples were used for calibration of kinematic parameters using the POE model and LM algorithm. The samples were selected such that the corresponding joint variables covered the robot joint working ranges, as shown in Figure 11. In addition, 100 command points in the robot workspace were randomly selected to verify the accuracy of the calibration methods and proposed network.

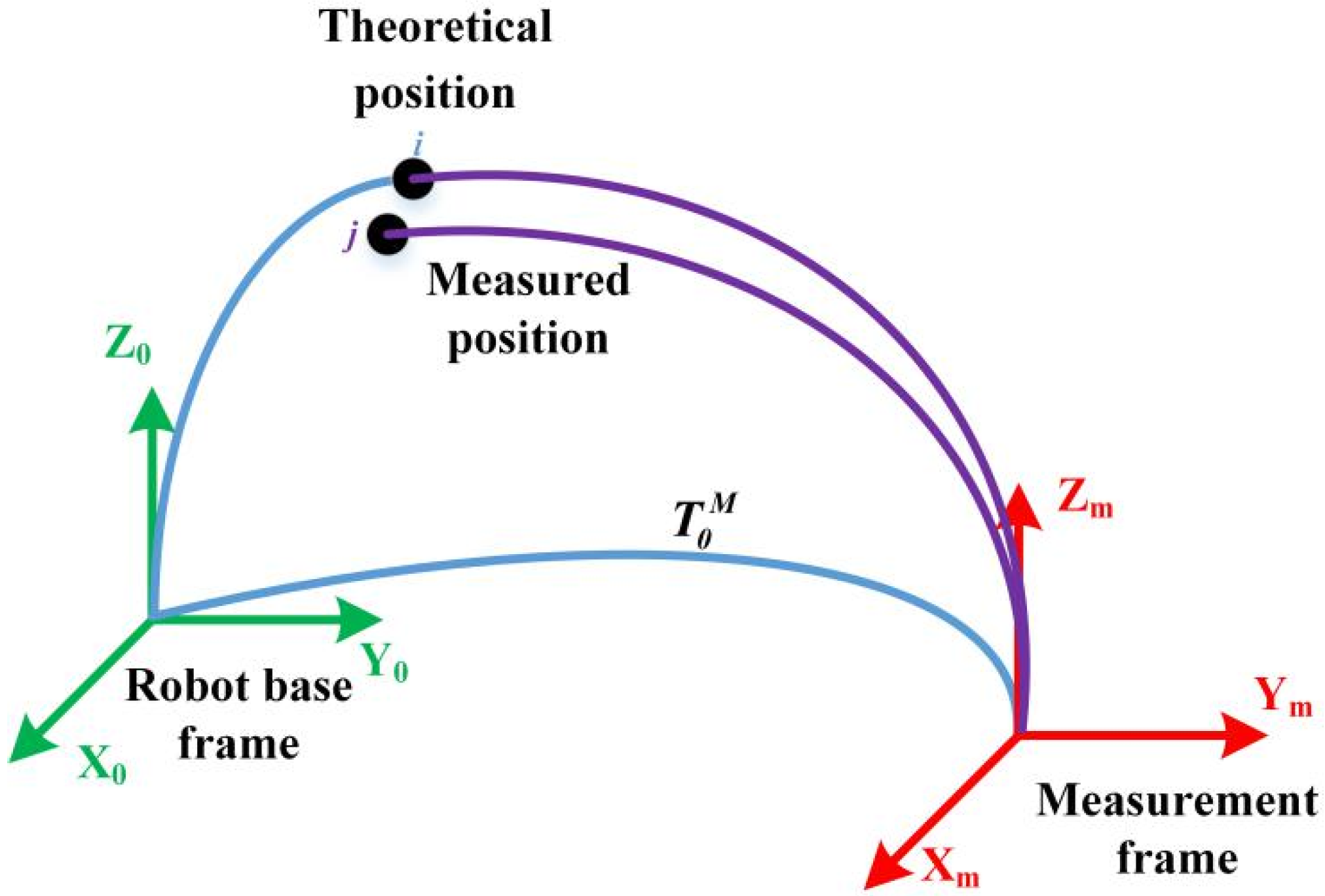

Figure 12 shows the robot base and measurement coordinate systems considered in the experiment. Gan et al. presented a simple but effective calibration of the relative rotation matrix and translation vector for converting the base frames of two coordinate systems using quaternions [29]. We used this method to determine the transformation between the measurement and robot base frames. The position coordinates measured using the laser tracker can then be expressed in the robot base coordinate system. Therefore, we conducted the training and prediction of the proposed network by pairing the robot joint variables with the measured positions expressed with respect to the robot base.
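The details of the method of Gan et al. are not reproduced here. As an assumed stand-in, the sketch below uses Horn's closed-form quaternion solution for registering two point sets, which is a well-known technique of the same family. The function name `register_frames`, the power-iteration eigenvector solver, and the sample points are choices made for this illustration only.

```python
import math

def register_frames(src, dst, iters=500):
    """Estimate R (3x3) and t (length 3) such that dst is close to R @ src + t."""
    n = len(src)
    ca = [sum(p[i] for p in src) / n for i in range(3)]
    cb = [sum(p[i] for p in dst) / n for i in range(3)]
    # Cross-covariance of the centered sets: S[i][j] = sum of a_i * b_j.
    S = [[sum((a[i] - ca[i]) * (b[j] - cb[j]) for a, b in zip(src, dst))
          for j in range(3)] for i in range(3)]
    (sxx, sxy, sxz), (syx, syy, syz), (szx, szy, szz) = S
    # Horn's symmetric matrix; its dominant eigenvector is the unit quaternion.
    N = [
        [sxx + syy + szz, syz - szy, szx - sxz, sxy - syx],
        [syz - szy, sxx - syy - szz, sxy + syx, szx + sxz],
        [szx - sxz, sxy + syx, -sxx + syy - szz, syz + szy],
        [sxy - syx, szx + sxz, syz + szy, -sxx - syy + szz],
    ]
    # Shift the spectrum so power iteration converges to the largest eigenvalue.
    shift = sum(abs(v) for row in N for v in row)
    q = [1.0, 0.3, 0.2, 0.1]  # arbitrary start, assumed not orthogonal to the solution
    for _ in range(iters):
        q = [sum(N[i][k] * q[k] for k in range(4)) + shift * q[i] for i in range(4)]
        norm = math.sqrt(sum(v * v for v in q))
        q = [v / norm for v in q]
    w, x, y, z = q
    R = [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]
    t = [cb[i] - sum(R[i][k] * ca[k] for k in range(3)) for i in range(3)]
    return R, t

# Hypothetical check: points rotated 90 degrees about z and shifted by (1, 2, 3).
tracker_pts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
base_pts = [(1.0, 2.0, 3.0), (1.0, 3.0, 3.0), (0.0, 2.0, 3.0), (1.0, 2.0, 4.0)]
R_est, t_est = register_frames(tracker_pts, base_pts)
```

Once the rotation and translation are known, every laser tracker measurement can be mapped into the robot base frame before it is paired with the recorded joint angles.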

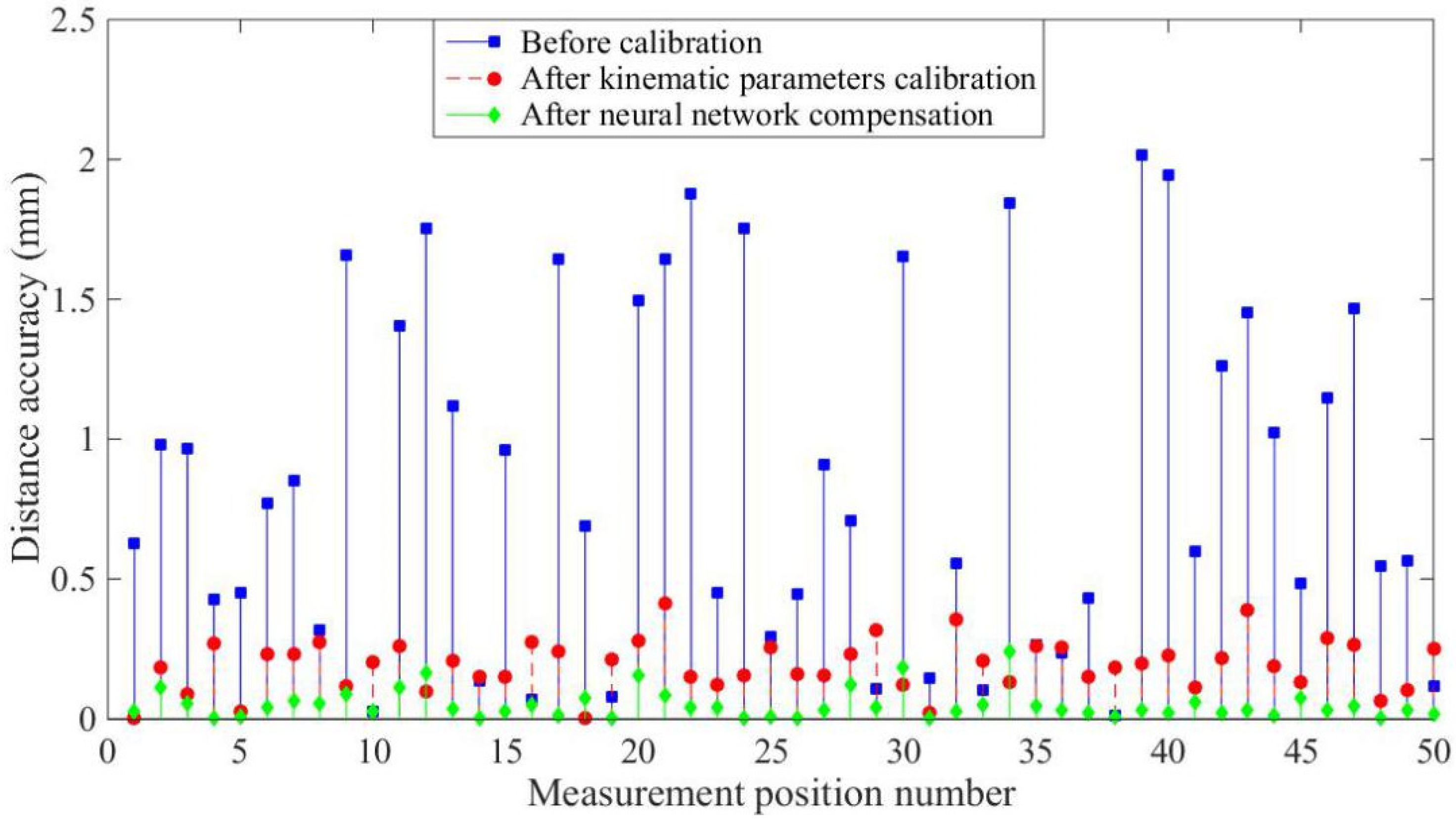

Figure 13, Figure 14, Table 3, and Table 4 show the experimental results, with the optimized network accurately predicting the position coordinates. Before calibration, the maximum positioning accuracy is 3.1760 mm and the maximum distance accuracy is 1.1118 mm, both of which are affected by kinematic and non-kinematic errors. After calibration using the POE model and the LM algorithm, the positioning accuracy and distance accuracy of the robot improved significantly because calibration reduces the kinematic errors. However, neglecting error sources such as temperature variations and gear backlash limits the effectiveness and accuracy of robot calibration. The proposed network therefore provides the best absolute positioning accuracy and distance accuracy, because it mitigates the effects of both kinematic and non-kinematic errors. After error compensation with the proposed network, the maximum positioning accuracy is improved from 3.1760 mm to 0.7743 mm and the average positioning accuracy is improved from 1.1118 mm to 0.1564 mm. The maximum distance accuracy is improved from 2.0140 mm to 0.2392 mm and the average distance accuracy is improved from 0.8497 mm to 0.0493 mm.

5. Discussion and Conclusions

The absolute positioning accuracy of robots has become increasingly important to support their widespread applications, particularly when offline programming is required. Error sources in robots can be classified into kinematic and non-kinematic errors. Conventional calibration can reduce the influence of kinematic errors on positioning accuracy, but the complexity of non-kinematic error sources and modeling hinders the compensation of such errors.

We applied a neural network to compensate both kinematic and non-kinematic errors with the aim of improving the absolute positioning accuracy of robot manipulators. The proposed network can avoid complex modeling while comprehensively considering the influence of all error sources. As the performance of a neural network is influenced by its structure and parameters, we optimized the proposed network using differential evolution. The thresholds, initial weights, and number of hidden neurons in the network are optimized using differential evolution to improve efficiency and performance. Using the proposed network, the effects of kinematic and non-kinematic errors decreased, thus improving the absolute positioning accuracy of an industrial robot.

The theoretical correctness and effectiveness of the proposed method are verified by simulations and experiments on a six-DOF serial robot and compared with calibration using the POE model and LM algorithm. The absolute positioning accuracy improves using the proposed network, and the experimental results verify the correctness and effectiveness of the network. After compensation with the proposed network, the average distance accuracy of the robot improved from 0.8497 mm to 0.0493 mm. Likewise, the maximum positioning accuracy of the robot improved from 3.1760 mm to 0.7743 mm.