Sign Language Recognition Using Wearable Electronics: Implementing k-Nearest Neighbors with Dynamic Time Warping and Convolutional Neural Network Algorithms

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

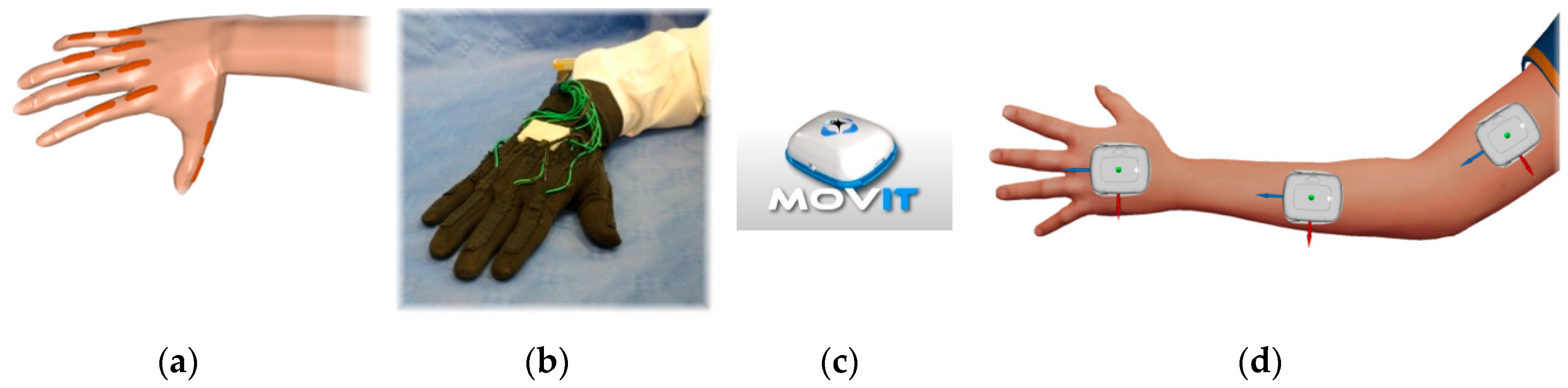

3.1. Sensory Glove

3.2. IMUs

3.3. Calibration and Data Acquisition

3.4. Signers and Signs

4. Classifiers

4.1. k-NN with DTW

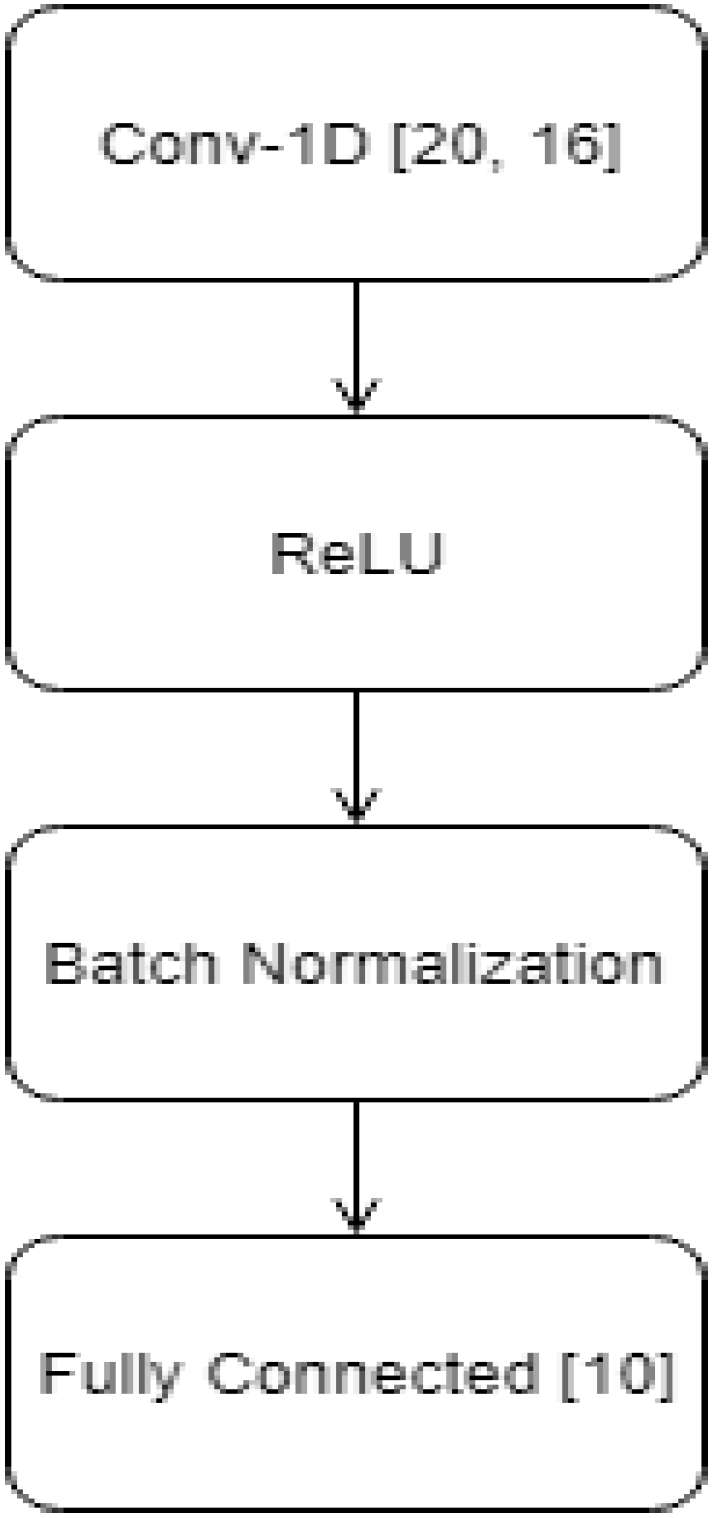

4.2. CNN

5. Results and Discussion

5.1. Results with the k-NN and DTW Algorithm

5.2. Results with the CNN Algorithm

5.3. Comparison of Results with Related Works

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Saggio, G.; Cavrini, F.; Di Paolo, F. Inedited SVM application to automatically tracking and recognizing arm-and-hand visual signals to aircraft. In Proceedings of the 7th International Joint Conference on Computational Intelligence 3, Lisbon, Portugal, 12–14 November 2015; pp. 157–162.

- Saggio, G.; Cavrini, F.; Pinto, C.A. Recognition of arm-and-hand visual signals by means of SVM to increase aircraft security. Stud. Comput. Intell. 2017, 669, 444–461.

- León, M.; Romero, P.; Quevedo, W.; Arteaga, O.; Terán, C.; Benalcázar, M.E.; Andaluz, V.H. Virtual rehabilitation system for fine motor skills using a functional hand orthosis. In Proceedings of the International Conference on Augmented Reality, Virtual Reality and Computer Graphics, Otranto, Italy, 24–27 June 2018; pp. 78–94.

- Ramírez, F.; Segura-Morales, M.; Benalcázar, M.E. Design of a software architecture and practical applications to exploit the capabilities of a human arm gesture recognition system. In Proceedings of the 3rd IEEE Ecuador Technical Chapters Meeting (ETCM), Cuenca, Ecuador, 15–19 October 2018; pp. 1–6.

- Tsironi, E.; Barros, P.; Weber, C.; Wermter, S. An analysis of convolutional long short-term memory recurrent neural networks for gesture recognition. Neurocomputing 2017, 268, 76–86.

- Saggio, G.; Bizzarri, M. Feasibility of teleoperations with multi-fingered robotic hand for safe extravehicular manipulations. Aerosp. Sci. Tech. 2014, 39, 666–674.

- Saggio, G.; Lazzaro, A.; Sbernini, L.; Carrano, F.M.; Passi, D.; Corona, A.; Panetta, V.; Gaspari, A.L.; Di Lorenzo, N. Objective surgical skill assessment: An initial experience by means of a sensory glove paving the way to open surgery simulation? J. Surg. Educ. 2015, 72, 910–917.

- Mohandes, M.; Deriche, M.; Liu, J. Image-Based and sensor-based approaches to Arabic sign language recognition. IEEE Trans. Hum. Mach. Syst. 2014, 44, 551–557.

- Who.int. Available online: http://www.who.int/mediacentre/factsheets/fs300/en/ (accessed on 2 May 2018).

- Valli, C.; Lucas, C. Linguistics of American Sign Language: An Introduction, 4th ed.; University Press: Washington, DC, USA, 2000.

- Yi, B.; Wang, X.; Harris, F.C.; Dascalu, S.M. sEditor: A prototype for a sign language interfacing system. IEEE Trans. Hum. Mach. Syst. 2014, 44, 499–510.

- Saggio, G.; Sbernini, L. New scenarios in human trunk posture measurements for clinical applications. In Proceedings of the IEEE International Symposium on Medical Measurements and Applications (MeMeA 2011), Bari, Italy, 30–31 May 2011.

- Estrada, L.; Benalcázar, M.E.; Sotomayor, N. Gesture recognition and machine learning applied to sign language translation. In Proceedings of the 7th Latin American Congress on Biomedical Engineering CLAIB 2016, Bucaramanga, Colombia, 26–28 October 2016; pp. 233–236.

- Orengo, G.; Lagati, A.; Saggio, G. Modeling wearable bend sensor behavior for human motion capture. IEEE Sens. J. 2014, 14, 2307–2316.

- Saggio, G.; Bocchetti, S.; Pinto, C.A.; Orengo, G.; Giannini, F. A novel application method for wearable bend sensors. In Proceedings of the 2nd IEEE International Symposium on Applied Sciences in Biomedical and Communication Technologies, Bratislava, Slovakia, 24–27 November 2009; pp. 1–39.

- Mohandes, M.; A-Buraiky, S.; Halawani, T.; Al-Baiyat, S. Automation of the Arabic sign language recognition. In Proceedings of the IEEE International Conference on Information and Communication Technologies: From Theory to Applications, Damascus, Syria, 19–23 April 2004; pp. 479–480.

- Kadous, M.W. Machine recognition of Auslan signs using PowerGloves: Towards large-lexicon recognition of sign language. In Proceedings of the Workshop on the Integration of Gesture in Language and Speech, Wilmington, DE, USA, 7–8 October 1996.

- Mohandes, M.; Deriche, M. Arabic sign language recognition by decisions fusion using Dempster-Shafer theory of evidence. In Proceedings of the IEEE Computing, Communications and IT Applications Conference, Hong Kong, China, 2–3 April 2013; pp. 90–94.

- Tubaiz, N.; Shanableh, T.; Assaleh, K. Glove-Based continuous Arabic sign language recognition in user-dependent mode. IEEE Trans. Hum. Mach. Syst. 2015, 45, 526–533.

- Abualola, H.; Al Ghothani, H.; Eddin, A.N.; Almoosa, N.; Poon, K. Flexible gesture recognition using wearable inertial sensors. In Proceedings of the 59th IEEE International Midwest Symposium on Circuits and Systems, Abu Dhabi, UAE, 16–19 October 2016; pp. 1–4.

- Hernandez-Rebollar, J.L.; Kyriakopoulos, N.; Lindeman, R.W. A new instrumented approach for translating American sign language into sound and text. In Proceedings of the 6th IEEE International Conference on Automatic Face and Gesture Recognition, Seoul, Korea, 17–19 May 2004; pp. 547–552.

- Lu, D.; Yu, Y.; Liu, H. Gesture recognition using data glove: An extreme learning machine method. In Proceedings of the IEEE International Conference on Robotics and Biomimetics, Qingdao, China, 3–7 December 2016; pp. 1349–1354.

- Saengsri, S.; Niennattrakul, V.; Ratanamahatana, C.A. TFRS: Thai finger-spelling sign language recognition system. In Proceedings of the 2nd IEEE International Conference on Digital Information and Communication Technology and its Applications, Bangkok, Thailand, 16–18 May 2012; pp. 457–462.

- Silva, B.C.R.; Furriel, G.P.; Pacheco, W.C.; Bulhoes, J.S. Methodology and comparison of devices for recognition of sign language characters. In Proceedings of the 18th IEEE International Scientific Conference on Electric Power Engineering, Kouty nad Desnou, Czech Republic, 17–19 May 2017; pp. 1–6.

- Saggio, G.; Cavallo, P.; Fabrizio, A.; Ibe, S.O. Gesture recognition through HITEG data glove to provide a new way of communication. In Proceedings of the 4th International Symposium on Applied Sciences in Biomedical and Communication Technologies (ISABEL’11), Barcelona, Spain, 26 October 2011.

- Saggio, G.; Orengo, G. Flex sensor characterization against shape and curvature changes. Sens. Actuators A Phys. 2018, 273, 221–223.

- Costantini, G.; Casali, D.; Paolizzo, F.; Alessandrini, M.; Micarelli, A.; Viziano, A.; Saggio, G. Towards the enhancement of body standing balance recovery by means of a wireless audio-biofeedback system. Med. Eng. Phys. 2018, 54, 74–81.

- Ricci, M.; Terribili, M.; Giannini, F.; Errico, V.; Pallotti, A.; Galasso, C.; Tomasello, L.; Sias, S.; Saggio, G. Wearable-Based electronics to objectively support diagnosis of motor impairments in school-aged children. J. Biomech. 2019, 83, 243–252.

- Ricci, M.; Di Lazzaro, G.; Pisani, A.; Mercuri, N.B.; Giannini, F.; Saggio, G. Assessment of Motor Impairments in Early Untreated Parkinson’s Disease Patients: The Wearable Electronics Impact. IEEE J. Biomed. Health Inform. 2020, 24, 120–130.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Oppenheim, A.V.; Schafer, R.W. Digital Filter Design Techniques of Digital Signal Processing, 1st ed.; Prentice Hall: Upper Saddle River, NJ, USA, 1978.

- Benalcázar, M.E.; Jaramillo, A.; Zea, J.; Páez, A.; Andaluz, V.H. Hand gesture recognition using machine learning and the Myo armband. In Proceedings of the 25th European Signal Processing Conference (EUSIPCO), Kos, Greece, 28 August–2 September 2017; pp. 1040–1044.

- Müller, M. Information Retrieval for Music and Motion; Springer: Berlin, Germany, 2007.

- Devroye, L.; Györfi, L.; Lugosi, G. A Probabilistic Theory of Pattern Recognition. Applications of Mathematics. Stochastic Modelling and Applied Probability; Springer: Berlin, Germany, 1991; Volume 31, pp. 61–81.

- Benalcázar, M.E.; Motoche, C.; Zea, J.; Jaramillo, A.; Anchundia, C.; Zambrano, P.; Segura, M.; Benalcázar, F.; Pérez, M. Real-Time hand gesture recognition using the Myo armband and muscle activity detection. In Proceedings of the 2nd IEEE Ecuador Technical Chapters Meeting (ETCM), Salinas, Ecuador, 18–20 October 2017; pp. 1–6.

- Wang, Q. Dynamic Time Warping. MATLAB Central File Exchange. Available online: https://www.mathworks.com/matlabcentral/fileexchange/43156-dynamic-time-warping-dtw (accessed on 22 June 2020).

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015.

| | | Internet | Jogging | Pizza | Television | | Ciao | Cose | Grazie | Maestra | PRECISION |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | 135; 9.6% | 0; 0% | 0; 0% | 0; 0% | 1; 0.1% | 0; 0% | 0; 0% | 13; 0.9% | 2; 0.1% | 0; 0% | 89.4% |
| Internet | 0; 0% | 132; 9.4% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 4; 0.3% | 0; 0% | 0; 0% | 97.1% |
| Jogging | 2; 0.1% | 0; 0% | 140; 10% | 0; 0% | 8; 0.6% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 93.3% |
| Pizza | 0; 0% | 0; 0% | 0; 0% | 140; 10% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 100% |
| Television | 3; 0.2% | 0; 0% | 0; 0% | 0; 0% | 131; 9.4% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 1; 0.1% | 97.0% |
| | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 140; 10% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 100% |
| Ciao | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 139; 9.9% | 0; 0% | 0; 0% | 0; 0% | 100% |
| Cose | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 120; 8.6% | 0; 0% | 0; 0% | 100% |
| Grazie | 0; 0% | 8; 0.6% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 1; 0.1% | 3; 0.2% | 138; 9.9% | 1; 0.1% | 91.4% |
| Maestra | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 138; 9.9% | 100% |
| SENSITIVITY | 96.4% | 94.3% | 100% | 100% | 93.6% | 100% | 99.3% | 85.7% | 98.6% | 98.6% | 96.6% |
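The PRECISION column and SENSITIVITY row of the matrix above can be recomputed from the raw counts. The following is a minimal sketch, assuming that rows are predicted signs and columns are actual signs (inferred from where the two summary margins sit, not stated in the surviving text). The function names are illustrative, and classes are indexed 0 to 9 in table order because two labels are missing in the source.

```python
# Counts copied from the first confusion matrix above.
# Assumption: rows = predicted sign, columns = actual sign, in table order.
CONFUSION = [
    [135, 0, 0, 0, 1, 0, 0, 13, 2, 0],
    [0, 132, 0, 0, 0, 0, 0, 4, 0, 0],
    [2, 0, 140, 0, 8, 0, 0, 0, 0, 0],
    [0, 0, 0, 140, 0, 0, 0, 0, 0, 0],
    [3, 0, 0, 0, 131, 0, 0, 0, 0, 1],
    [0, 0, 0, 0, 0, 140, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 139, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 120, 0, 0],
    [0, 8, 0, 0, 0, 0, 1, 3, 138, 1],
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 138],
]


def precision(matrix, k):
    """Correct predictions of class k over all predictions of class k (row sum)."""
    row_total = sum(matrix[k])
    return matrix[k][k] / row_total if row_total else 0.0


def sensitivity(matrix, k):
    """Correct predictions of class k over all actual samples of class k (column sum)."""
    col_total = sum(row[k] for row in matrix)
    return matrix[k][k] / col_total if col_total else 0.0


def accuracy(matrix):
    """Sum of the diagonal over the total number of classified samples."""
    total = sum(sum(row) for row in matrix)
    return sum(matrix[k][k] for k in range(len(matrix))) / total


if __name__ == "__main__":
    for k in range(len(CONFUSION)):
        print(k, f"{precision(CONFUSION, k):.1%}", f"{sensitivity(CONFUSION, k):.1%}")
    print("accuracy", f"{accuracy(CONFUSION):.1%}")
```

Each cell's percentage in the table is the count divided by the 1400 classified samples (for example, 135/1400 ≈ 9.6%), and the bottom-right value is the overall accuracy.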

| | | Internet | Jogging | Pizza | Television | | Ciao | Cose | Grazie | Maestra | PRECISION |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | 139; 9.9% | 0; 0% | 1; 0.1% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 1; 0.1% | 0; 0% | 98.6% |
| Internet | 0; 0% | 139; 9.9% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 1; 0.1% | 0; 0% | 0; 0% | 1; 0.1% | 98.6% |
| Jogging | 0; 0% | 0; 0% | 135; 9.6% | 1; 0.1% | 0; 0% | 1; 0.1% | 2; 0.1% | 2; 0.1% | 1; 0.1% | 0; 0% | 95.1% |
| Pizza | 0; 0% | 0; 0% | 0; 0% | 138; 9.9% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 100% |
| Television | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 140; 10.0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 100% |
| | 0; 0% | 0; 0% | 0; 0% | 1; 0.1% | 0; 0% | 139; 9.9% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 99.3% |
| Ciao | 0; 0% | 0; 0% | 2; 0.1% | 0; 0% | 0; 0% | 0; 0% | 133; 9.5% | 0; 0% | 0; 0% | 0; 0% | 98.5% |
| Cose | 1; 0.1% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 137; 9.8% | 1; 0.1% | 2; 0.1% | 97.2% |
| Grazie | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 0; 0% | 1; 0.1% | 135; 9.6% | 0; 0% | 99.3% |
| Maestra | 0; 0% | 1; 0.1% | 2; 0.1% | 0; 0% | 0; 0% | 0; 0% | 4; 0.3% | 0; 0% | 2; 0.1% | 137; 9.8% | 93.8% |
| SENSITIVITY | 99.3% | 99.3% | 96.4% | 98.6% | 100% | 99.3% | 95.0% | 97.9% | 96.4% | 97.9% | 98.0% |

| Reference | Sensor(s) | Signers, Signs, Repetitions | Classifier | Accuracy, mean ± SD [%] |
|---|---|---|---|---|
| Mohandes et al., 1996 [16] | PowerGlove | n/a, 10, 20 | SVM | 90 ± 10 |
| Mohandes and Deriche, 2013 [18] | CyberGloves | 1, 100, 20 | LDA + MD | 96.2 ± 0.78 |
| Tubaiz et al., 2015 [19] | DG5 - VHand | 1, 40, 10 | MKNN | 82 ± 4.88 |
| Abualola et al., 2016 [20] | AcceleGlove + skeleton | 17, 1, 30 | CTM | 98 ± n/a |
| Lu et al., 2016 [22] | YoBuGlove | n/a, 10, n/a | ELM-kernel / SVM | 89.59 ± n/a / 83.65 ± n/a |
| Saengsri et al., 2012 [23] | 5DTGlove + tracker | 1, 16, 4 | ENN | 94.44 ± n/a |
| Silva et al., 2017 [24] | Glove + IMU | 1, 26, 100 | ANN | 95.8 ± n/a |
| Our work | HitegGlove + Movit G1 IMU | 7, 10, 100 | kNN + DTW / CNN | 96.6 ± 3.4 / 98 ± 2.0 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Saggio, G.; Cavallo, P.; Ricci, M.; Errico, V.; Zea, J.; Benalcázar, M.E. Sign Language Recognition Using Wearable Electronics: Implementing k-Nearest Neighbors with Dynamic Time Warping and Convolutional Neural Network Algorithms. Sensors 2020, 20, 3879. https://doi.org/10.3390/s20143879