Abstract

We consider measures of nonlinearity (MoNs) of a polynomial curve in two dimensions (2D), as previously studied in our Fusion 2010 and 2019 ICCAIS papers. Our previous work calculated curvature measures of nonlinearity (CMoNs) using (i) extrinsic curvature, (ii) Bates and Watts parameter-effects curvature, and (iii) direct parameter-effects curvature. In this paper, we introduce the computation and analysis of a number of new MoNs, including Beale’s MoN, Linssen’s MoN, Li’s MoN, and the MoN of Straka, Duník, and Šimandl. First, our results show that all of the MoNs studied follow the same type of variation as a function of the independent variable and the power of the polynomial. Second, theoretical analysis and numerical results show that the logarithm of the mean square error (MSE) is an affine function of the logarithm of the MoN for each type of MoN. This implies that the MSE increases as the MoN increases. We also present an up-to-date review of various MoNs in the context of non-linear parameter estimation and non-linear filtering. The MoNs studied here can also be used to quantify the nonlinearity of non-linear filtering problems.

Keywords:

polynomial curve in 2D; measures of nonlinearity (MoNs); extrinsic curvature; Beale’s MoN; Linssen’s MoN; Bates and Watts parameter-effects curvature; direct parameter-effects curvature; Li’s MoN; MoN of Straka, Duník, and Šimandl; maximum likelihood estimator (MLE); Cramér-Rao lower bound (CRLB)

1. Introduction

The Kalman filter (KF) [1,2,3,4] is an optimal estimator (in the minimum mean square error (MMSE) sense) for a filtering problem with linear dynamic and measurement models with additive Gaussian noise. However, many real-world filtering problems are non-linear due to nonlinearity in the dynamic and measurement models. Common real-world non-linear filtering (NLF) problems are bearing-only filtering [5,6,7,8], ground moving target indicator (GMTI) filtering [9], passive angle-only filtering in three-dimensions (3D) using an infrared search and track sensor [10,11,12], etc.

In the early stages of NLF, the extended Kalman filter (EKF) [1,2,3,4] was widely used. In some problems, e.g., a body falling through the Earth’s atmosphere at high velocity [13,14] and bearing-only filtering [5,7,8], the EKF was observed to perform poorly due to linearization. The poor performance was attributed to the high degree of nonlinearity in these problems, but without any quantitative measure of nonlinearity (MoN). To overcome the poor accuracy and convergence problems of the EKF, a number of improved approximate non-linear filters, such as the unscented Kalman filter (UKF) [14,15], cubature KF (CKF) [16], and particle filter (PF) [8,17], have been proposed during the last two decades.

It is important to address the following questions for NLF problems:

- Is it possible to find a quantitative MoN for a nonlinear filtering problem?

- Can we establish a correspondence between the MoN of a NLF problem and the performance of a filtering algorithm?

- Can we show that the UKF, CKF, or PF gives better results than the EKF, when the degree of nonlinearity (DoN) is high?

Remark 1.

In this paper, we consider a parameter estimation problem with polynomial nonlinearity. We hope that the insights and results from this analysis will encourage further study of MoNs in NLF problems. Next, we describe some historical developments in the field of parameter estimation and NLF.

Beale, in his pioneering work [18], proposed four MoNs for the static non-random parameter estimation problem: two empirical and two theoretical. Guttman and Meeter [19] and Linssen [20] observed that Beale’s method underestimates the nonlinearity of highly non-linear problems, and Linssen [20] proposed a modified MoN. Using curvature measures based on differential geometry, Bates and Watts [21,22] and Goldberg et al. [23] extended Beale’s work and developed curvature measures of nonlinearity (CMoN) for the static non-random parameter estimation problem. Bates and Watts formulated two CMoNs: the parameter-effects curvature and the intrinsic curvature [21,24,25,26].

In [27], we first extended the method of Bates and Watts to calculate CMoN for the non-linear filtering problem with unattended ground sensors (UGS). Next, we computed the parameter-effects curvature and intrinsic curvature for the bearing-only filtering (BOF) problem [28,29,30,31], GMTI filtering problem [30,32,33], video tracking problem [34], and polynomial nonlinearity [35].

In our previous work [35], we considered a polynomial curve in two-dimensions (2D) and calculated CMoN using differential geometry (e.g., extrinsic curvature) [36,37,38], Bates and Watts parameter-effects curvature [21,25,26], and direct parameter-effects curvature [29]. The computation of these curvatures requires the Jacobian and Hessian of the measurement function [2] evaluated at the true or estimated parameter. The extrinsic curvature uses the true parameter, whereas the other two CMoN use the estimated parameter.

In [35], we obtained the maximum likelihood (ML) estimate [2,39] of the parameter x from a vector measurement by numerical minimization. In [40], we derived analytic expressions for the ML estimator (MLE) [2,39] and the associated variance using a vector measurement. This approach is simple and efficient, since it does not require numerical minimization. We also showed through Monte Carlo simulations in [40] that the variance of the MLE and the Cramér-Rao lower bound (CRLB) [2,41] are nearly the same for different powers of x. We also found that the bias error was small and that the mean square error (MSE) [2] was close to the CRLB and to the variance of the MLE. Our numerical results showed that the average normalized estimation error squared (ANEES) [42] was within the 99% confidence interval most of the time. Hence, the variance of the MLE was consistent with the estimation error.

Li constructed a combined non-linear function from the non-linear time evolution function and the measurement function of a discrete-time non-linear filtering problem, and proposed a global MoN at each measurement time [43]. This MoN is based on the minimum mean square distance between the combined non-linear function and the set of all affine functions of the same dimension at each measurement time. An un-normalized MoN and a normalized MoN were proposed in [43], and each can be either unconditional or conditional. The normalized MoN lies in the interval [0, 1]. A journal version of the paper with enhancements was published in [44].

The normalized MoN proposed in [43] was calculated for several non-linear filtering problems: one with nearly constant turn motion and a non-linear measurement model [45], a video tracking problem using a PF [46], and a hypersonic entry vehicle state estimation problem [47]. In these cases, the normalized MoNs were rather low. In [33], we compared the normalized MoN for the BOF and GMTI filtering problems. Contrary to our expectation, we found that the GMTI filtering problem had a higher conditional normalized MoN than the BOF problem in the examples that we investigated.

Using the current mean (e.g., predicted mean) and associated covariance, Duník et al. [48] generate a number of sample points (e.g., sigma points using unscented transform [14]) and transform these points using a non-linear function (e.g., non-linear measurement function or time evolution function). Subsequently, they try to predict the transformed points using a linear transformation and estimate the parameter of the transformation using linear weighted least squares (WLS) [39]. They use the cost function of the WLS evaluated at the estimated parameter as a local MoN.

In [35], we showed analytically and through Monte Carlo simulations that affine mappings with positive slopes exist among the logarithms of the extrinsic curvature, Bates and Watts parameter-effects curvature, direct parameter-effects curvature, MSE, and CRLB. For completeness, we have included these key results from [35] in Section 4. New contributions of this paper include the computation and analysis of the following MoNs:

- Beale’s MoN [18],

- Least squares based Beale’s MoN,

- Linssen’s MoN [20],

- Least squares based Linssen’s MoN,

- Li’s MoN [43,44], and

- MoN of Straka, Duník, and Šimandl [48,49].

It is not possible to derive analytically a mapping between the logarithm of the MSE and the logarithm of Beale’s MoN, Linssen’s MoN, Li’s MoN, or the MoN of Straka, Duník, and Šimandl. However, numerical results from Monte Carlo simulations show that affine mappings with positive slopes exist between the logarithm of the MSE and the logarithm of each of these MoNs.

The paper is organized as follows. Section 2 describes the measurement model for polynomial nonlinearity (Section 2.1) and presents the MLE for parameter estimation and the CRLB using polynomial nonlinearity and a vector measurement. Section 3 presents different types of MoN: the extrinsic curvature based on differential geometry, Beale’s MoN, Linssen’s MoN, the Bates and Watts parameter-effects curvature, the direct parameter-effects curvature, Li’s MoN, and the MoN of Straka et al. Section 4 discusses mappings among the logarithms of the extrinsic curvature, parameter-effects curvatures, CRLB, and MSE. Section 5 presents the numerical simulations and results. Finally, Section 6 summarizes our contributions and concludes with future work.

Notation Convention: For clarity, we use italics to denote scalar quantities and boldface for vectors and matrices. A lower or upper case Roman letter represents a name (e.g., “s” for “sensor”, “RMS” for “root mean square”, etc.). We use “:=” to define a quantity and denotes the transpose of the vector or matrix . The dimensional identity matrix, dimensional null matrix, and null matrix are denoted by , , and , respectively.

2. MLE Parameter Estimation and CRLB

2.1. Measurement Model

We studied CMoN of a polynomial smooth scalar function h of a non-random variable x in [35], where

and a is a non-zero scalar. In scenarios considered, and .

Remark 2.

MoNs for other forms of nonlinearity, such as the bearing-only [27], GMTI [32], and video filtering [34] problems encountered in the radar community, are discussed at the end of Section 3.

The measurement model for the polynomial function is given by

where is a zero-mean white Gaussian measurement noise with variance ,

We assume that the measurement noises are independent.

The measurement model can be written in the vector form

where

2.2. ML Estimate of Parameter

The likelihood function of x is [2,50,51]

The maximization of the likelihood in (10) is equivalent to the minimization of the cost function [2,51]

The maximum likelihood (ML) estimate of x is obtained by setting the derivative of the cost function to zero [2,51],

Because the derivative of the measurement function h with respect to x is not zero, we obtain

Hence, the ML estimate satisfies,

Left-multiplying both sides of (15) by we obtain

We note that

Remark 3.

In general, the MLE for a non-linear measurement model is biased [51]. Under the small-error assumption, we can calculate the variance of the MLE using a linearization approximation. This variance is meaningful only if the bias is small, so the bias of the MLE must also be calculated. It can be computed numerically using Monte Carlo simulation.

The bias in the MLE is defined by [2,51]
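The closed-form ML estimate and the Monte Carlo bias check can be illustrated with a minimal Python sketch. The sketch makes three assumptions. First, it takes the polynomial in (1) to be h(x) = a x^n; this is consistent with the text's non-zero scalar a and "power of x", but (1) itself is not reproduced here. Second, it assumes the scalar measurement noises are independent Gaussian with known standard deviations. Third, for even n, it assumes the true x is non-negative, so the non-negative root is taken. The function names `mle_power` and `mc_bias` are hypothetical, and reporting the bias as estimate minus truth is also an assumption.

```python
import numpy as np


def mle_power(z, sigmas, a, n):
    """Closed-form ML estimate of x for z_i = a * x**n + w_i (sketch).

    With independent Gaussian noises and h'(x) != 0, setting the derivative of
    the weighted LS cost to zero gives h(x_hat) = inverse-variance-weighted
    mean of z; x_hat is then obtained by inverting h(x) = a * x**n.
    """
    z = np.asarray(z, dtype=float)
    w = 1.0 / np.asarray(sigmas, dtype=float) ** 2
    h_hat = np.sum(w * z) / np.sum(w)  # ML estimate of h(x)
    ratio = h_hat / a
    if n % 2 == 1:
        # Odd power: unique real root, sign preserved.
        return np.sign(ratio) * abs(ratio) ** (1.0 / n)
    # Even power (assumption: x >= 0). A negative ratio is clipped to 0,
    # which is where the cost is minimized in that case.
    return max(ratio, 0.0) ** (1.0 / n)


def mc_bias(x_true, a, n, sigma, n_meas, n_runs, rng):
    """Sample bias (estimate minus truth) of the MLE over Monte Carlo runs."""
    est = np.empty(n_runs)
    for m in range(n_runs):
        z = a * x_true ** n + sigma * rng.standard_normal(n_meas)
        est[m] = mle_power(z, np.full(n_meas, sigma), a, n)
    return np.mean(est - x_true)


rng = np.random.default_rng(0)
print(mc_bias(2.0, 1.0, 3, 0.5, 10, 1000, rng))
```

The values in the usage line (x = 2, h(x) = x^3, ten measurements, σ = 0.5) are illustrative and are not the paper's simulation scenario.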

2.3. Variance of the MLE

The variance of the MLE is given by [51]

where

From (27), we obtain

2.4. Cramér-Rao Lower Bound

The CRLB [2,41] for the MSE in the current problem is given by

Remark 5.

The variance of the MLE and the CRLB are calculated in a similar way. For the variance, we use the estimate when calculating the Jacobian of the measurement function, whereas for the CRLB, we use the true x.

Using a similar procedure, we obtain
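Remark 5 can be made concrete with a short sketch under the same assumptions: h(x) = a x^n, and independent Gaussian noises with known standard deviations σ_i. For this additive scalar model, the Fisher information about x is h'(x)^2 Σ_i 1/σ_i^2. The CRLB and the linearized MLE variance therefore share one expression and differ only in where the Jacobian h'(x) is evaluated. The function names are hypothetical.

```python
import numpy as np


def fisher_info(x, a, n, sigmas):
    """Fisher information about x for z_i = a*x**n + w_i, w_i ~ N(0, sigma_i^2)."""
    dh = n * a * x ** (n - 1)  # Jacobian h'(x) of h(x) = a*x**n
    return dh ** 2 * np.sum(1.0 / np.asarray(sigmas, dtype=float) ** 2)


def crlb(x_true, a, n, sigmas):
    """CRLB on the MSE: Jacobian evaluated at the true x."""
    return 1.0 / fisher_info(x_true, a, n, sigmas)


def mle_variance(x_hat, a, n, sigmas):
    """Linearized MLE variance: same expression, Jacobian at the estimate."""
    return 1.0 / fisher_info(x_hat, a, n, sigmas)


sig = np.full(10, 0.5)
print(crlb(2.0, 1.0, 3, sig), mle_variance(2.01, 1.0, 3, sig))
```

When the estimate is close to the true x, the two values are close, as the text reports. Where h'(x) = 0 (for example, x = 0 with n ≥ 2), the information vanishes and NumPy returns an infinite bound with a warning.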

3. Measures of Nonlinearity

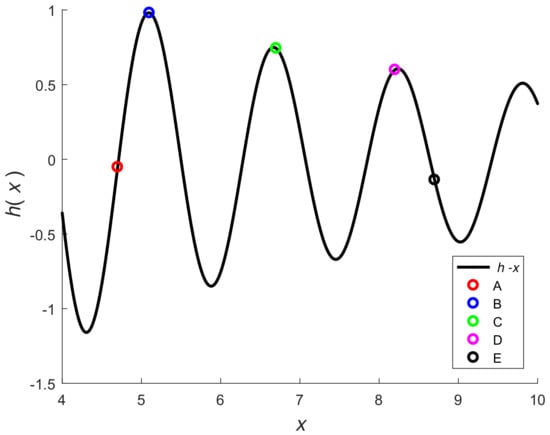

To explain the key concepts of nonlinearity, consider the scalar function shown in Figure 1. We observe in Figure 1 that the function is nearly linear at A and E: if we draw a tangent to the curve at A or E, the curve stays close to the tangent in the neighborhood of that point. However, the tangents to the curve at points B, C, and D deviate substantially from the curve in the neighborhood of these points. The tangent represents an affine approximation to the curve at a point. Among points B, C, and D, the curve bends the most at B and the least at D. If we draw a circle (called the osculating circle) at each of these points, then the radius of the circle can be used to judge nonlinearity: the smaller the radius, the sharper the bending. In differential geometry [37,38], the curvature is the inverse of the radius of the osculating circle and, hence, curvature can be viewed as a measure of nonlinearity. The radii of the osculating circles at A and E are nearly infinite and, hence, the curvatures there are nearly zero. Figure 1 also shows that, in general, the nonlinearity of a function varies with x; hence, nonlinearity is a local property. If the second derivative of a function is non-zero, then the function is non-linear.

Figure 1.

versus x.

In [35,40], we analyzed the CMoN of a polynomial scalar function h of a non-random variable x, as described in Section 2.1. The CMoN were based on the extrinsic curvature using differential geometry, Bates and Watts parameter-effects curvature, and direct parameter-effects curvature. In this paper, we study the following MoNs:

- extrinsic curvature using differential geometry [36,37,38],

- Beale’s MoN [18],

- least squares based Beale’s MoN,

- Linssen’s MoN [20],

- Least squares based Linssen’s MoN,

- parameter-effects curvatures [21,25,29],

- Li’s MoN [43,44], and

- MoN of Straka, Duník, and Šimandl [48,49].

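If a MoN has a high value, then the nonlinearity is high, and if it has a low value, then the nonlinearity is low. However, the MoNs are defined differently and have different scales. Therefore, it is not possible to compare them on the basis of their numerical values; we can only compare how they vary.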

Consider an m-dimensional non-linear vector function of a non-random n-dimensional parameter, and let a known estimate of the parameter be available. Expanding the function in a Taylor series about the estimate and keeping the first-order term gives

where the first-order expansion represents the tangent plane approximation (an affine mapping) to the function and

If m > n, then the function defines an n-dimensional manifold embedded in an m-dimensional space [37,38]. The tangent plane is tangent to this surface at the estimate. The concept of the tangent plane is used in Beale’s MoN, Linssen’s MoN, Bates and Watts parameter-effects curvatures [21,25], and direct parameter-effects curvature [29].

For polynomial nonlinearity, the CMoN using differential geometry is calculated at the true value x and, hence, it is non-random. The Bates and Watts parameter-effects curvature, direct parameter-effects curvature, Beale’s MoN, Li’s MoN, and the MoN of Straka et al. are calculated while using an estimate of x. The estimate is obtained from a measurement model involving the measurement function h. Since x is a scalar, we need one or more scalar measurements to estimate x. Table 1 summarizes features of various MoNs.

Table 1.

Features of Various MoNs

Next, we describe each MoN in detail.

3.1. Extrinsic Curvature Using Differential Geometry

The curvature of a circle at every point on the circumference is equal to the inverse of the radius of the circle. Thus, the curvature of a circle is a constant. A circle with a smaller radius bends more sharply and, therefore, has a higher curvature.

We assume that the first and second derivatives of the nonlinear smooth scalar function h exist. The curvature of the curve at a point x is equal to the curvature of the osculating circle at that point. The extrinsic curvature at the point x is defined by [36,37,38],

The first derivative of h at a point x is given in (26). The second derivative of h with respect to x is given by
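For a plane curve y = h(x), the extrinsic curvature has the standard closed form κ(x) = |h''(x)| / (1 + h'(x)^2)^{3/2}. The sketch below evaluates this formula at the true x. It assumes h(x) = a x^n with integer n ≥ 2, which is an assumption about the exact form of (1). The function name is hypothetical.

```python
import numpy as np


def extrinsic_curvature(x, a, n):
    """Curvature |h''| / (1 + h'^2)^(3/2) of y = a*x**n at x (integer n >= 2)."""
    x = np.asarray(x, dtype=float)
    d1 = n * a * x ** (n - 1)            # first derivative h'(x)
    d2 = n * (n - 1) * a * x ** (n - 2)  # second derivative h''(x)
    return np.abs(d2) / (1.0 + d1 ** 2) ** 1.5


xs = np.linspace(-2.0, 2.0, 9)
print(np.log(extrinsic_curvature(xs, 1.0, 2)))
```

For h(x) = x^2, the curvature at x = 0 is 2, which corresponds to an osculating circle of radius 1/2. The curvature decreases as |x| grows. This matches the intuition of Figure 1 that a curve looks locally straighter where its osculating circle is larger.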

3.2. Beale’s MoN

Consider the nonlinear measurement model for the non-random n-dimensional parameter

where the three terms are the measurement, the non-linear measurement function, and the measurement noise, respectively. Let an estimate of the parameter be available. A first-order Taylor series expansion of the measurement function about this estimate is then given by (34). Suppose we choose m vectors in the neighborhood of the estimate. Then Beale’s first empirical MoN [18] is given by

where the standard radius is defined by

Guttman and Meeter [19] observed that the empirical MoN underestimates severe nonlinearity. When m approaches infinity, the empirical MoN approaches the theoretical MoN
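Beale’s empirical MoN can be sketched for a scalar function of a scalar parameter. The paper’s expression is not reproduced here, so the sketch assumes the form commonly quoted in the nonlinear-regression literature: ρ² Σ_j (h(x_j) − T(x_j))² / Σ_j (T(x_j) − h(x̂))⁴. In this form, T is the tangent-line approximation about the estimate x̂, the x_j are the m chosen points, and ρ² is the squared standard radius, supplied here by the caller. All names are hypothetical, and the example values are illustrative only.

```python
import numpy as np


def tangent_line(h, dh, x_hat):
    """First-order Taylor (tangent) approximation of h about x_hat."""
    return lambda x: h(x_hat) + dh(x_hat) * (np.asarray(x, dtype=float) - x_hat)


def beale_mon(h, approx, x_hat, x_pts, rho2):
    """Beale-type empirical MoN for a scalar h of a scalar parameter (sketch)."""
    x_pts = np.asarray(x_pts, dtype=float)
    num = np.sum((h(x_pts) - approx(x_pts)) ** 2)  # curve vs. affine approximation
    den = np.sum((approx(x_pts) - h(x_hat)) ** 4)  # displacement along the approximation
    return rho2 * num / den


h = lambda x: x ** 3
T = tangent_line(h, lambda x: 3 * x ** 2, 1.0)
print(beale_mon(h, T, 1.0, [0.9, 1.1], rho2=0.01))
```

For an affine h, the tangent line coincides with h and the measure is zero up to rounding.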

3.3. Least Squares Based Beale’s MoN

Consider the scalar function h for polynomial nonlinearity, as described in (1). As in Beale’s MoN, we choose m points in the neighborhood of the estimate. Let

An affine approximation to h is given by

We compute A and B by minimizing the cost function

by the method of least squares (LS) [2,39]. The LS minimization of the cost function yields [52]

where

We can then use this affine mapping, with the LS estimates of A and B, in Beale’s MoN in place of the tangent approximation.
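Fitting A and B by least squares, as described above, is ordinary linear regression of h(x_j) on x_j. The sketch below uses the closed-form LS solution: the slope comes from centered sums, and the intercept comes from the means. The function name and the example points are illustrative.

```python
import numpy as np


def ls_affine(h, x_pts):
    """Least-squares affine fit h(x) ~ A*x + B over the points x_pts."""
    x = np.asarray(x_pts, dtype=float)
    y = h(x)
    xc, yc = x - x.mean(), y - y.mean()
    A = np.sum(xc * yc) / np.sum(xc ** 2)  # LS slope
    B = y.mean() - A * x.mean()            # LS intercept
    return A, B


print(ls_affine(lambda x: x ** 2, [0.9, 1.0, 1.1]))
```

For h(x) = x^2 on the points 0.9, 1.0, and 1.1, the LS slope equals the tangent slope 2 at x̂ = 1. The intercept, however, moves from −1 to about −0.9933. This happens because the LS line passes through the mean of the curve values rather than through h(x̂).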

3.4. Linssen’s MoN

To correct the deficiency in Beale’s MoN, Linssen proposed a modification that yields the following MoN [20]

3.5. Least Squares Based Linssen’s MoN

Using the same procedure as in Section 3.3, we can use an affine mapping with the LS estimates of A and B as an approximation to h when computing Linssen’s MoN.
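Linssen’s MoN with an LS affine approximation can be sketched in the same scalar setting. The sketch assumes the commonly quoted form of Linssen’s measure, which keeps Beale’s numerator but measures the denominator distances on the curve itself, Σ_j (h(x_j) − h(x̂))⁴, rather than on the affine approximation. The expression in Section 3.4 is not reproduced here. The LS line is obtained with `numpy.polyfit` of degree 1. Names are hypothetical.

```python
import numpy as np


def ls_line(h, x_pts):
    """LS affine approximation A*x + B of h over x_pts, via np.polyfit."""
    x = np.asarray(x_pts, dtype=float)
    A, B = np.polyfit(x, h(x), 1)  # coefficients, highest degree first
    return lambda t: A * np.asarray(t, dtype=float) + B


def linssen_mon(h, approx, x_hat, x_pts, rho2):
    """Linssen-type empirical MoN (sketch): denominator measured on the curve."""
    x_pts = np.asarray(x_pts, dtype=float)
    num = np.sum((h(x_pts) - approx(x_pts)) ** 2)
    den = np.sum((h(x_pts) - h(x_hat)) ** 4)
    return rho2 * num / den


h = lambda x: x ** 2
pts = [0.9, 1.0, 1.1]
print(linssen_mon(h, ls_line(h, pts), 1.0, pts, rho2=1.0))
```

With only two points, the LS line interpolates them and the numerator vanishes. At least three points are therefore needed for the LS variant to register any curvature.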

3.6. Parameter-Effects Curvatures

The parameter-effects curvature and intrinsic curvature defined by Bates and Watts [21,25,26] are associated with a non-linear parameter estimation problem and are defined at the estimated parameter. We note that h in (1) is a scalar function of the scalar x. Since h is a scalar function, the intrinsic curvature of Bates and Watts [21] and the direct intrinsic curvature [29] are zero. Thus, only the parameter-effects curvature of Bates and Watts and the direct parameter-effects curvature are non-zero. Since the intrinsic curvature is zero, for notational simplicity we drop the superscript “T” from the parameter-effects curvatures, which are given by

where

From (26), we get

We note that the extrinsic curvature in (36) is evaluated at the true x, while the parameter-effects curvatures in (50) and (51) are evaluated at the estimate of x. Because the estimate is a random variable, both parameter-effects curvatures are random variables. When we perform Monte Carlo simulations and estimate x from measurements, the estimate varies among Monte Carlo runs; therefore, the parameter-effects curvatures also vary among Monte Carlo runs.

3.7. Li’s MoN

For a scalar random variable x, the un-normalized MoN proposed by Li [43,44] represents the square root of the minimum mean square distance between the nonlinear measurement function h and the set of all affine functions L,

where each function in L has the form Ax + B, and the scalar parameters A and B are determined in the minimization process. For the current problem, where x is non-random, the un-normalized MoN J and the normalized MoN are given, respectively, by

Given and (30), the unscented transformation (UT) [14,15], cubature transformation (CT) [16], or Monte Carlo method [8] can be used to compute and . We find that the UT gives good results in calculating the two MoNs. Next, we describe the computation of J and the normalized MoN using the UT. We use [14]. The three weights and sigma points are given, respectively, by

The measurement transformed points are

Then the mean and variance of h are given by

The cross-covariance is computed by
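The UT-based computation of Li’s MoN can be sketched for scalar x as follows. Given a mean x̂ and variance P, the UT propagates three sigma points through h: x̂ and x̂ ± sqrt((1 + κ)P), with weights κ/(1 + κ) and 1/(2(1 + κ)). The best affine fit then leaves the residual variance Var(h) − Cov(x, h)²/Var(x), and J is its square root. Two choices here are assumptions. First, κ = 2 is used, which is a common choice for a scalar Gaussian; the value used in the paper is not shown. Second, J is normalized by the standard deviation of h, which keeps the normalized value in [0, 1]. Function names are hypothetical.

```python
import numpy as np


def ut_sigma_points(mean, var, kappa=2.0):
    """Three sigma points and weights of the scalar (n = 1) unscented transform."""
    spread = np.sqrt((1.0 + kappa) * var)
    points = np.array([mean, mean + spread, mean - spread])
    weights = np.array([kappa, 0.5, 0.5]) / (1.0 + kappa)
    return points, weights


def li_mon(h, x_hat, var_x, kappa=2.0):
    """Li's un-normalized MoN J and normalized MoN nu, approximated with the UT."""
    xs, w = ut_sigma_points(x_hat, var_x, kappa)
    hs = h(xs)                                    # measurement-transformed points
    h_mean = w @ hs                               # mean of h
    var_h = w @ (hs - h_mean) ** 2                # variance of h
    var_xs = w @ (xs - x_hat) ** 2                # variance of x (reproduced by the UT)
    cov_xh = w @ ((xs - x_hat) * (hs - h_mean))   # cross-covariance of x and h
    # The best affine fit A*x + B removes cov_xh**2 / var_xs of the variance of h.
    J = np.sqrt(max(var_h - cov_xh ** 2 / var_xs, 0.0))
    nu = J / np.sqrt(var_h) if var_h > 0 else 0.0
    return J, nu


print(li_mon(lambda x: x ** 3, 1.0, 0.01))
```

Consider h(x) = x^2 with x̂ = 0 and P = 1. The cross-covariance vanishes, so no affine function captures any of the variation of h, and the normalized MoN equals 1. For an affine h, both values are zero up to rounding.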

3.8. MoN of Straka, Duník, and Šimandl

Straka, Duník, and Šimandl presented two local MoNs in [48,49]. Given the estimate and its variance, these MoNs use a number of points in the neighborhood of the estimate. We analyze the first MoN proposed by the authors. The points transformed by the non-linear function h are given by

Define

A linear approximation to is , where is a scalar parameter to be estimated. The cost function that is proposed in [48,49] to determine is given by

where the weight-matrix is given by

The LS estimate [39] that minimizes the cost function is given by

For this problem, the LS estimate in (74) reduces to

The cost function evaluated at the LS estimate is treated as a local MoN, given by
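The WLS-based MoN of Straka, Duník, and Šimandl can be sketched in the scalar case. The exact point set, centering, and weight matrix of [48,49] are not reproduced here, so the sketch makes the following assumptions:

- deviations are taken about x̂ and h(x̂);
- the weight matrix is diagonal, built from user-supplied weights (for example, UT weights);
- the scalar parameter is fitted by the WLS solution θ̂ = Σ w_i Δx_i Δh_i / Σ w_i Δx_i².

The returned value is the WLS cost at θ̂. The function name is hypothetical.

```python
import numpy as np


def straka_mon(h, x_hat, x_pts, weights):
    """WLS-based local MoN (sketch): weighted residual of the best linear fit.

    Assumptions: deviations are taken about x_hat and h(x_hat), and the
    weight matrix is diagonal with the supplied weights.
    """
    x_pts = np.asarray(x_pts, dtype=float)
    w = np.asarray(weights, dtype=float)
    dx = x_pts - x_hat                 # centered sample points
    dh = h(x_pts) - h(x_hat)           # centered transformed points
    theta = np.sum(w * dx * dh) / np.sum(w * dx ** 2)  # scalar WLS estimate
    return np.sum(w * (dh - theta * dx) ** 2)          # WLS cost at the estimate


s = np.sqrt(3.0 * 0.01)  # UT-style points for x_hat = 1, variance 0.01, kappa = 2
print(straka_mon(lambda x: x ** 3, 1.0, [1.0, 1.0 + s, 1.0 - s], [2 / 3, 1 / 6, 1 / 6]))
```

If h is linear, the fitted line reproduces the transformed points exactly and the cost is zero. The cost grows with the part of h that no line through h(x̂) can explain.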

Remark 6.

We have calculated the average MoN for the bearing-only filtering [27], GMTI [32], and video filtering [34] problems; the values are presented in Table 2. From this table, we find that the degree of nonlinearity of the bearing-only filtering problem is about two orders of magnitude higher than that of the GMTI or video filtering problem. This implies that a simple filter, such as the EKF or UKF, is sufficient for the GMTI or video filtering problem, whereas an advanced filter, such as the PF, is needed for the BOF problem [17].

Table 2.

MoNs for the bearing-only, GMTI, and video filtering problems.

4. Mapping between CMoN and MSE in Polynomial Nonlinearity

The nonlinearity of the problem poses challenges in parameter estimation. We analyze the CMoN and MSE of the non-linear estimation problem to discover relationships among them. For the current problem, CMoN are measured by the parameter-effects curvature in (57) and the direct parameter-effects curvature in (58). In general, CMoN depend on the first and second derivatives of the non-linear function evaluated at the parameter estimate and, for the direct parameter-effects curvature, on the norm of the estimation error. Therefore, the CMoN depend on the type of estimator (e.g., ML) used to obtain the parameter estimate. The extrinsic curvature (38) depends on the first and second derivatives of the non-linear function evaluated at the true x.

4.1. MSE and Sample MSE

We estimate the x coordinate using noisy measurements at a discrete set of values. Let denote the estimate of in the mth Monte Carlo run. Subsequently, the error in is defined by

where M is the number of Monte Carlo runs. The MSE at is given by

The sample MSE (SMSE) at is defined by

Let denote the logarithm of the CRLB,

Taking the log of in (32) we get

4.2. MSE and Parameter-Effects Curvature

Let denote the log of the expected value of in (57). Then

In order to compute , we first approximate the expectation in (82) by assuming , which holds for the case investigated in our paper,

The last step of the above equation follows from an assumption that the estimator is nearly unbiased. Now, taking the logarithm, we have

Now, from Equations (84) and (81), we can see that there is an affine mapping between and . That is,

where

We observe that is positive and, hence, and have the same sign of the non-zero slopes. As a result, and CRLB have the same sign of the non-zero slopes.

4.3. MSE and Direct Parameter-Effects Curvature

Similar to the previous section, we define

Now, taking the expected value of , we have

The RHS of (88) can be simplified by assuming that the estimate is unbiased and that it achieves the CRLB. Additionally, we approximate the estimation error as Gaussian, with the variance given in (29). Then,

Thus,

We also observe that is positive and, hence, and have the same sign of the non-zero slopes. As a result, and CRLB have the same sign of the non-zero slopes.

4.4. Extrinsic Curvature

The expression for extrinsic curvature for our problem is given in (38). Similar to previous sections, we define

Taking the log of (94), we have

Note that the second last expression is a valid approximation for . From (95) and (84) it is easy to establish the affine mapping

where

Using arguments similar to those in the previous sections, we infer that the extrinsic curvature and the parameter-effects curvature have non-zero slopes of the same sign. Similarly, the extrinsic curvature and the direct parameter-effects curvature have non-zero slopes of the same sign.

4.5. Estimation of CMoN and SMSE by Monte-Carlo Simulations

Let and denote the sample means of the Bates and Watts and direct parameter-effects curvatures calculated from M Monte Carlo runs. Subsequently,

Correspondingly, we define

Define

Suppose that an affine mapping exists between and . Subsequently,

where the additive term is random noise. We can then write (108) in matrix-vector form as

where

Given and , we can estimate using the linear least squares (LLS).
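The LLS step can be sketched as follows. Stack a column of ones and the logarithms of the sample-mean MoN values into a design matrix, then solve for the intercept and slope with `numpy.linalg.lstsq`. The use of natural logarithms is an assumption; the log base changes only the intercept, not the slope. The function name and the synthetic data are illustrative.

```python
import numpy as np


def fit_log_affine(mon, mse):
    """LLS fit of log(mse) = c0 + c1 * log(mon); returns (c0, c1)."""
    u = np.log(np.asarray(mon, dtype=float))
    y = np.log(np.asarray(mse, dtype=float))
    H = np.column_stack([np.ones_like(u), u])  # design matrix [1, log MoN]
    coef, *_ = np.linalg.lstsq(H, y, rcond=None)
    return coef[0], coef[1]


mon = np.array([0.01, 0.1, 1.0, 10.0])
mse = 0.5 * mon ** 1.8  # synthetic data for illustration only
print(fit_log_affine(mon, mse))
```

A positive fitted slope c1 corresponds to the paper’s observation that the MSE grows as the MoN grows.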

We can similarly define the affine mapping between other variable pairs. Altogether, we consider the following four:

5. Numerical Simulation and Results

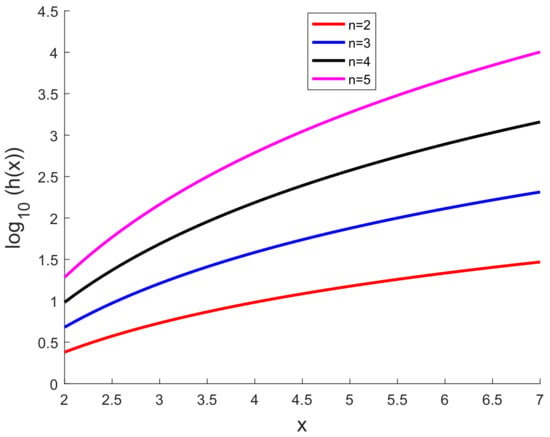

We follow the same simulation scenario as used in our previous work [35]. We use and and a number of uniformly spaced x coordinates with the spacing of in the interval . The measurement noise standard deviation () is . The dimension of the measurement vector is 10 or 20. The results are based on 1000 Monte Carlo runs. Figure 2 shows versus x.

Figure 2.

versus x.

To assess the accuracy of the MLE, we compute the sample bias, sample MSE, ANEES [42], and CRLB [2,41,51]. Let , , and denote the true parameter, ML estimate, and associated variance, respectively, at the kth point in the ith Monte Carlo run. The sample bias in the estimate at the kth point is defined by [9]

where M is the number of Monte Carlo runs. The sample root MSE (RMSE) [9] and ANEES [2,9,42] at the kth point are defined, respectively, by
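The Monte Carlo metrics at one x point can be sketched as below. For a scalar parameter, the NEES of a run is the squared error divided by the variance reported by the estimator, and the ANEES is the average of the NEES over the M runs. The error sign convention (estimate minus true value) and the function name are assumptions.

```python
import numpy as np


def mc_metrics(x_true, x_hat, p_hat):
    """Sample bias, RMSE, and ANEES at one x point from M Monte Carlo runs.

    x_hat and p_hat hold the M estimates and their reported variances.
    """
    x_hat = np.asarray(x_hat, dtype=float)
    p_hat = np.asarray(p_hat, dtype=float)
    err = x_hat - x_true               # sign convention is an assumption
    bias = np.mean(err)
    rmse = np.sqrt(np.mean(err ** 2))
    anees = np.mean(err ** 2 / p_hat)  # scalar NEES = err^2 / variance
    return bias, rmse, anees


rng = np.random.default_rng(1)
est = 2.0 + 0.1 * rng.standard_normal(1000)
print(mc_metrics(2.0, est, np.full(1000, 0.01)))
```

Under the consistency hypothesis, M times the ANEES follows a chi-square distribution with M degrees of freedom. Two-sided 99% bounds can therefore be obtained by dividing the 0.005 and 0.995 chi-square quantiles by M, for example with `scipy.stats.chi2.ppf`.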

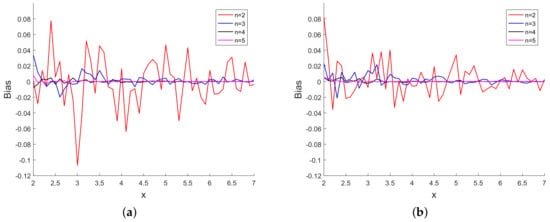

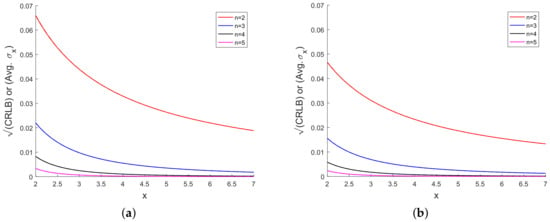

Figure 3 presents the sample bias for different powers of x. We observe from Figure 3 that the bias is small compared with the true value of x and that it decreases as the power of x increases. In Figure 4, we have plotted the and the average of over Monte Carlo runs. Figure 4 shows that, for each power of x, the and the average of are on top of each other, and it is not possible to distinguish them in the figure.

Figure 3.

(a) Sample bias vs. x using 10 scalar measurements and (b) sample bias vs. x using 20 scalar measurements.

Figure 4.

(a) or (Avg. ) vs. x using 10 scalar measurements and (b) or (Avg. ) vs. x using 20 scalar measurements.

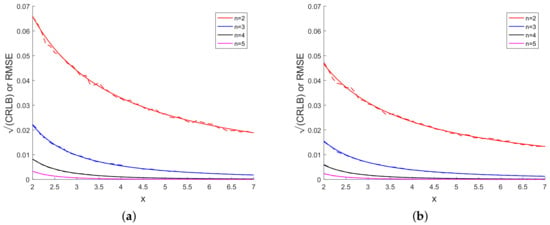

Figure 5 presents and RMSE for each power of x. Solid and dashed lines in Figure 5 represent the and RMSE, respectively, for each power of x. We see from Figure 5 that corresponding values of and RMSE are close to each other for each power of x. In Figure 3, Figure 4 and Figure 5, the bias, , and RMSE for 20 measurements are smaller than the corresponding values for 10 measurements.

Figure 5.

(a) or RMSE vs. x using 10 scalar measurements and (b) or RMSE vs. x using 20 scalar measurements.

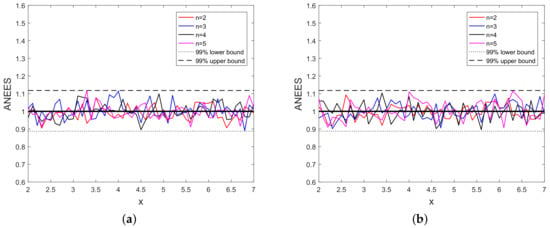

We present the ANEES [42] in Figure 6 for different powers of x with 99% confidence bounds.

We see from Figure 6 that the ANEES lies within the 99% confidence bounds. This shows that the variance calculated using the MLE is consistent with the estimation error.

Figure 6.

(a) ANEES vs. x using 10 scalar measurements and (b) ANEES vs. x using 20 scalar measurements.

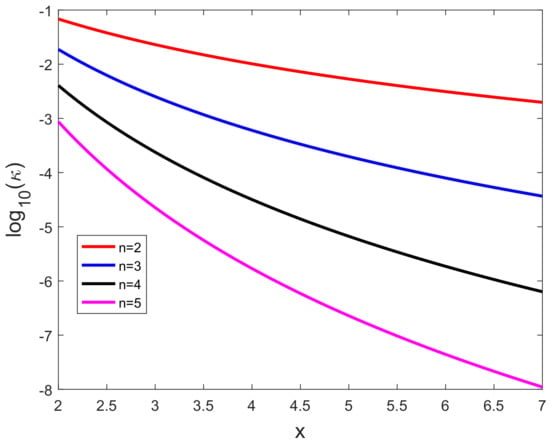

Figure 7 presents the logarithm of the extrinsic curvature versus x. The extrinsic curvature is completely determined by the first and second derivatives of the non-linear function h, and it is evaluated at the true x.

Figure 7.

Logarithm of the extrinsic curvature (k(x)) versus x.

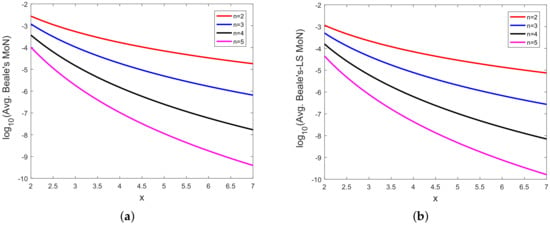

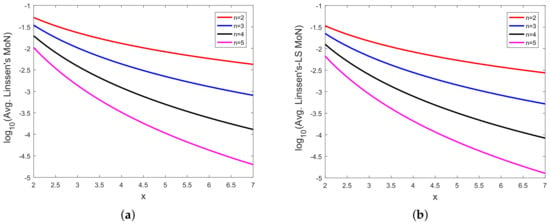

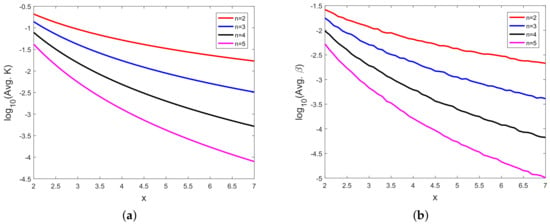

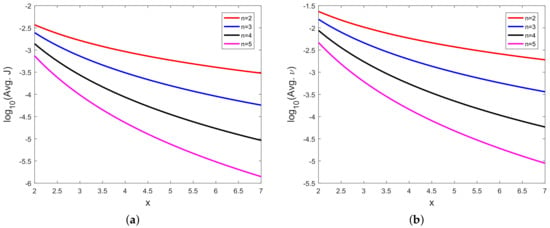

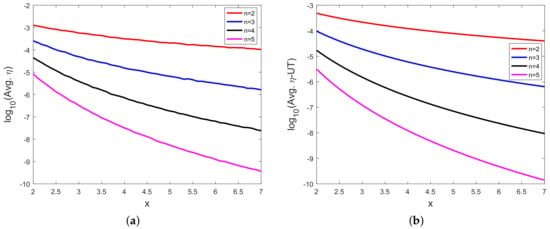

In Figure 8, Figure 9, Figure 10, Figure 11, Figure 12, Figure 13, Figure 14, Figure 15, Figure 16, Figure 17 and Figure 18, we present results using 10 scalar measurements. We have also generated results using 20 scalar measurements but, to limit the number of figures, we do not present them. With 20 measurements, the CRLB, variance of the estimation error, all MoNs, and MSE follow the same trends, but their values are smaller because of the improved estimation accuracy.

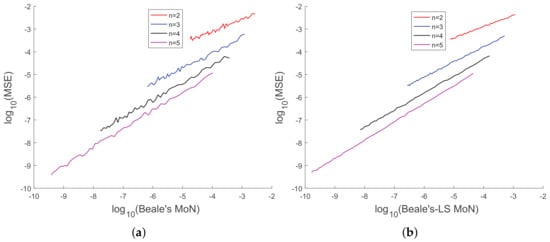

Figure 8.

(a) Logarithm of Beale’s MoN (log(Avg. Beale’s MoN)) vs. x and (b) logarithm of Beale’s MoN using LS (log(Avg. Beale’s-LS MoN)) vs. x with 10 scalar measurements.

Figure 9.

(a) Logarithm of Linssen’s MoN (log(Avg. Linssen’s MoN)) vs. x and (b) logarithm of Linssen’s MoN using LS (log(Avg. Linssen’s-LS MoN)) vs. x with 10 scalar measurements.

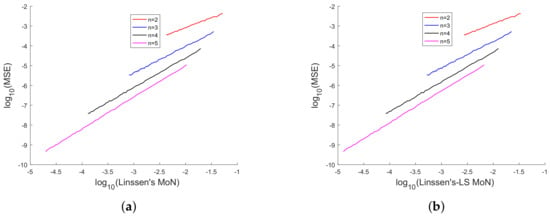

Figure 10.

(a) Logarithm of Bates and Watts parameter-effects curvature (log(Avg. K)) vs. x and (b) logarithm of direct parameter-effects curvature (log(Avg. β)) vs. x using 10 scalar measurements.

Figure 11.

(a) Logarithm of Li’s un-normalized MoN (log(Avg. J)) vs. x and (b) logarithm of the average of Li’s normalized MoN vs. x with 10 scalar measurements.

Figure 12.

(a) Logarithm of the average MoN of Straka et al. vs. x and (b) logarithm of the average MoN of Straka et al. computed with the UT vs. x using 10 scalar measurements.

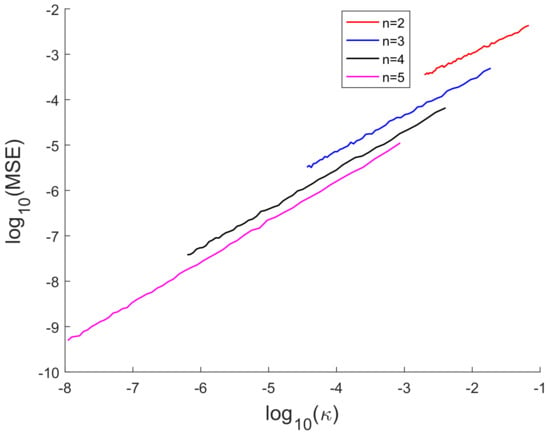

Figure 13.

log(MSE) vs. logarithm of extrinsic curvature using 10 scalar measurements.

Figure 14.

(a) log(MSE) vs. log(Avg. Beale’s MoN) and (b) log(MSE) vs. log(Avg. Beale’s MoN using LS) using 10 scalar measurements.

Figure 15.

(a) log(MSE) vs. log(Linssen’s MoN) and (b) log(MSE) vs. log(Linssen’s-LS) using 10 scalar measurements.

Figure 16.

log(MSE) vs. logarithm of parameter-effects curvatures. (a) log(MSE) vs. log(Avg. K) and (b) log(MSE) vs. log(Avg. β) using 10 scalar measurements.

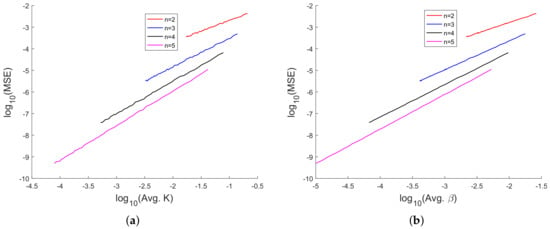

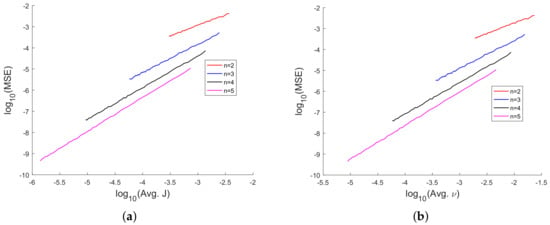

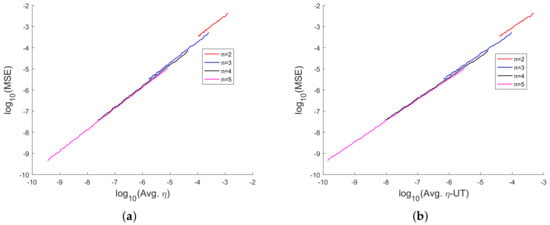

Figure 17.

log(MSE) vs. logarithm of Li’s MoN. (a) log(MSE) vs. log(Avg. J) and (b) log(MSE) vs. logarithm of the average normalized MoN using 10 scalar measurements.

Figure 18.

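log(MSE) vs. logarithm of MoN of Straka et al. (a) log(MSE) vs. logarithm of the average MoN of Straka et al. and (b) log(MSE) vs. logarithm of the average MoN of Straka et al. computed with the UT, using 10 scalar measurements.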

In [35], we showed analytically, and through Monte Carlo simulation, that affine mappings exist among log(MSE), the logarithm of the extrinsic curvature, log(Avg. K), and log(Avg. β). In Figure 13, Figure 14, Figure 15, Figure 16, Figure 17 and Figure 18, we have plotted log(MSE) versus the logarithm of the various MoNs using 10 scalar measurements. These figures show that log(MSE) varies with log(MoN) according to an affine mapping with a positive slope. This implies that the MSE increases as a MoN increases. We obtain similar results for the case of 20 scalar measurements.

The above results demonstrate that, for the polynomial nonlinearity problem analyzed, each of the seven MoNs is a suitable metric for quantifying the MSE, which represents the complexity of a parameter estimation problem. Further research is needed to study the applicability of these MoNs in real-world non-linear filtering problems.

6. Conclusions

We considered a polynomial curve in 2D and derived analytic expressions for the ML estimate and associated variance of the independent variable x using a vector measurement. The ML estimate is used to evaluate the Jacobian and Hessian of the measurement function appearing in the computation of Bates and Watts and direct parameter-effects curvatures, Beale’s MoN, and Linssen’s MoN. Our numerical results show that the variance of the estimated parameter and the Cramér-Rao lower bound (CRLB) are nearly the same for different powers of x. The average normalized estimation error squared (ANEES) lies within the 99% confidence interval, which indicates that the ML based variance is consistent with the estimation error.

We used seven MoNs: the extrinsic curvature using differential geometry, Beale’s MoN (and its least squares variant), Linssen’s MoN (and its least squares variant), the Bates and Watts parameter-effects curvature, the direct parameter-effects curvature, Li’s MoN, and the MoN of Straka, Duník, and Šimandl. If a MoN has a high value, then the nonlinearity is high. First, all of the MoNs show the same type of variation with x and the power of the polynomial. Second, as the logarithm of a MoN increases, the logarithm of the MSE increases linearly for each MoN. This implies that the MSE increases as a MoN increases. These results are quite surprising, given that these MoNs are derived from completely different theoretical considerations. The second feature of our analysis is useful in establishing that each MoN in our study can be considered a candidate metric for quantifying the MSE, which represents the complexity of a parameter estimation problem. Our future work will study other practical parameter estimation and non-linear filtering problems.

Author Contributions

Formal analysis, M.M. and X.T.; methodology, M.M.; software, M.M. and X.T.; writing, M.M. and X.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

The authors thank Sanjeev Arulampalam of the Defence Science and Technology Organisation, Edinburgh SA, Australia, for useful discussions and insightful comments that helped improve the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Anderson, B.D.O.; Moore, J.B. Optimal Filtering; Prentice-Hall: Englewood Cliffs, NJ, USA, 1979.

- Bar-Shalom, Y.; Li, X.; Kirubarajan, T. Estimation with Applications to Tracking and Navigation; Wiley: New York, NY, USA, 2001.

- Gelb, A. (Ed.) Applied Optimal Estimation; MIT Press: Cambridge, MA, USA, 1974.

- Jazwinski, A.H. Stochastic Processes and Filtering Theory; Academic Press: New York, NY, USA, 1970.

- Aidala, V.J. Kalman Filter Behavior in Bearings-Only Tracking Applications. IEEE Trans. Aerosp. Electron. Syst. 1979, AES-15, 29–39.

- Aidala, V.J.; Hammel, S.E. Utilization of modified polar coordinates for bearings-only tracking. IEEE Trans. Autom. Control 1983, 28, 283–294.

- Arulampalam, S.; Ristic, B. Comparison of the particle filter with range-parameterised and modified polar EKFs for angle-only tracking. In Proceedings of the SPIE Conference on Signal and Data Processing of Small Targets, Orlando, FL, USA, 24–28 April 2000; Volume 4048, pp. 288–299.

- Ristic, B.; Arulampalam, S.; Gordon, N. Beyond the Kalman Filter; Artech House: Boston, MA, USA, 2004.

- Mallick, M.; Arulampalam, S. Comparison of Nonlinear Filtering Algorithms in Ground Moving Target Indicator (GMTI) Target Tracking. In Proceedings of the SPIE Conference on Signal and Data Processing of Small Targets, San Diego, CA, USA, 5 January 2004; Volume 5204, pp. 630–647.

- Blackman, S.S.; White, T.; Blyth, B.; Durand, C. Integration of Passive Ranging with Multiple Hypothesis Tracking (MHT) for Application with Angle-Only Measurements. In Proceedings of the SPIE Conference on Signal and Data Processing of Small Targets, Orlando, FL, USA, 15 April 2010; Volume 7698, pp. 769815-1–769815-11.

- Mallick, M.; Morelande, M.; Mihaylova, L.; Arulampalam, S.; Yan, Y. Comparison of Angle-only Filtering Algorithms in 3D using Cartesian and Modified Spherical Coordinates. In Proceedings of the 15th International Conference on Information Fusion, Singapore, 9–12 July 2012.

- Mallick, M.; Morelande, M.; Mihaylova, L.; Arulampalam, S.; Yan, Y. Angle-only Filtering in Three Dimensions, Chapter 1. In Integrated Tracking, Classification, and Sensor Management: Theory and Applications; Mallick, M., Krishnamurthy, V., Vo, B.-N., Eds.; Wiley/IEEE: Hoboken, NJ, USA, 2013.

- Athans, M.; Wishner, R.P.; Bertolini, A. Suboptimal state estimation for continuous-time nonlinear systems from discrete noisy measurements. IEEE Trans. Autom. Control 1968, 13, 504–518.

- Julier, S.; Uhlmann, J.; Durrant-Whyte, H.F. A new method for the nonlinear transformation of means and covariances in filters and estimators. IEEE Trans. Autom. Control 2000, 45, 477–482.

- Julier, S.J.; Uhlmann, J.K. Unscented filtering and nonlinear estimation. Proc. IEEE 2004, 92, 401–422.

- Arasaratnam, I.; Haykin, S. Cubature Kalman filters. IEEE Trans. Autom. Control 2009, 54, 1254–1269.

- Arulampalam, S.; Maskell, S.; Gordon, N.; Clapp, T. A tutorial on particle filters for on-line non-linear/non-Gaussian Bayesian tracking. IEEE Trans. Signal Process. 2002, 50, 174–188.

- Beale, E.M.L. Confidence regions in non-linear estimation. J. R. Stat. Soc. Ser. B 1960, 22, 41–76.

- Guttman, I.; Meeter, D.A. On Beale’s measures of non-linearity. Technometrics 1965, 7, 623–637.

- Linssen, H.N. Nonlinearity measures: A case study. Stat. Neerl. 1975, 29, 93–99.

- Bates, D.M.; Watts, D.G. Relative curvature measures of nonlinearity. J. R. Stat. Soc. Ser. B (Methodol.) 1980, 42, 1–25.

- Bates, D.M.; Watts, D.G. Parameter transformations for improved approximate confidence regions in nonlinear least squares. Ann. Stat. 1981, 9, 1152–1167.

- Goldberg, M.L.; Bates, D.M.; Watts, D.G. Simplified Methods of Assessing Nonlinearity. Am. Stat. Assoc. Proc. Bus. Eco. Statistics Section 1983, 67–74.

- Bates, D.M.; Hamilton, D.C.; Watts, D.G. Calculation of intrinsic and parameter-effects curvatures for nonlinear regression models. Commun. Stat. Simul. Comput. 1983, 12, 469–477.

- Bates, D.M.; Watts, D.G. Nonlinear Regression Analysis and its Applications; John Wiley: New York, NY, USA, 1988.

- Seber, G.A.; Wild, C.J. Nonlinear Regression; John Wiley: New York, NY, USA, 1989.

- Mallick, M. Differential geometry measures of nonlinearity with application to ground target tracking. In Proceedings of the 7th International Conference on Information Fusion, Stockholm, Sweden, 28 June–1 July 2004.

- La Scala, B.F.; Mallick, M.; Arulampalam, S. Differential Geometry Measures of Nonlinearity for Filtering with Nonlinear Dynamic and Linear Measurement Models. In Proceedings of the SPIE Conference on Signal and Data Processing of Small Targets, San Diego, CA, USA, 28–30 August 2007.

- Mallick, M.; La Scala, B.F.; Arulampalam, M.S. Differential geometry measures of nonlinearity for the bearing-only tracking problem. In Proceedings of the SPIE Conference on Signal Processing, Sensor Fusion, and Target Recognition XIV, Orlando, FL, USA, 25 May 2005; Volume 5809, pp. 288–300.

- Mallick, M.; La Scala, B.F.; Arulampalam, S. Differential geometry measures of nonlinearity with applications to target tracking. In Proceedings of the Fred Daum Tribute Conference, Monterey, CA, USA, 24 May 2007.

- Duník, J.; Straka, O.; Mallick, M.; Blasch, E. Survey of nonlinearity and non-Gaussianity measures for state estimation. In Proceedings of the 19th International Conference on Information Fusion, Heidelberg, Germany, 5 July 2016; pp. 1845–1852.

- Mallick, M.; La Scala, B.F. Differential geometry measures of nonlinearity for ground moving target indicator (GMTI) filtering. In Proceedings of the 2005 8th International Conference on Information Fusion, Philadelphia, PA, USA, 25–28 July 2005; pp. 219–226.

- Mallick, M.; Ristic, B. Comparison of Measures of Nonlinearity for Bearing-only and GMTI Filtering. In Proceedings of the 20th International Conference on Information Fusion, Xi’an, China, 10–13 July 2017.

- Mallick, M.; La Scala, B.F. Differential geometry measures of nonlinearity for the video tracking problem. In Proceedings of the SPIE Conference on Signal Processing, Sensor Fusion, and Target Recognition XV, Orlando (Kissimmee), FL, USA, 17 May 2006; Volume 6235, pp. 246–258.

- Mallick, M.; Yan, Y.; Arulampalam, S.; Mallick, A. Connection between Differential Geometry and Estimation Theory for Polynomial Nonlinearity in 2D. In Proceedings of the 13th International Conference on Information Fusion, Edinburgh, UK, 26–29 July 2010.

- Available online: http://en.wikipedia.org/wiki/curvature (accessed on 5 March 2020).

- do Carmo, M.P. Differential Geometry of Curves and Surfaces; Prentice-Hall: Englewood Cliffs, NJ, USA, 1976.

- Struik, D.J. Lectures on Classical Differential Geometry, 2nd ed.; Dover Publications: Mineola, NY, USA, 1988.

- Mendel, J.M. Lessons in Estimation Theory for Signal Processing, Communications, and Control; Prentice-Hall: Englewood Cliffs, NJ, USA, 1995.

- Mallick, M. Improvements to Measures of Nonlinearity Computation for Polynomial Nonlinearity. In Proceedings of the 8th International Conference on Control, Automation and Information Sciences (ICCAIS 2019), Chengdu, China, 23–26 October 2019.

- Van Trees, H.L.; Bell, K.L.; Tian, Z. Detection, Estimation, and Modulation Theory, Part I: Detection, Estimation, and Filtering Theory, 2nd ed.; Wiley: New York, NY, USA, 2013.

- Li, X.R.; Zhao, Z.; Jilkov, V.P. Estimator’s credibility and its measures. In Proceedings of the IFAC 15th World Congress, Barcelona, Spain, 21–26 July 2002.

- Li, X.R. Measure of Nonlinearity for Stochastic Systems. In Proceedings of the 15th International Conference on Information Fusion, Singapore, 9–12 July 2012; pp. 1073–1080.

- Liu, Y.; Li, X.R. Measure of Nonlinearity for Estimation. IEEE Trans. Signal Process. 2015, 63, 2377–2388.

- Sun, T.; Xin, M.; Jia, B. Nonlinearity-based adaptive sparse-grid quadrature filter. In Proceedings of the American Control Conference (ACC), Chicago, IL, USA, 1–3 July 2015; pp. 2499–2504.

- Wang, P.; Blasch, E.; Li, X.R.; Jones, E.; Hanak, R.; Yin, W.; Beach, A.; Brewer, P. Degree of nonlinearity (DoN) measure for target tracking in videos. In Proceedings of the 19th International Conference on Information Fusion, Heidelberg, Germany, 5 July 2016; pp. 1390–1397.

- Sun, T.; Xin, M. Hypersonic entry vehicle state estimation using nonlinearity-based adaptive cubature Kalman filters. Acta Astronaut. 2017, 134, 221–230.

- Duník, J.; Straka, O.; Šimandl, M. Nonlinearity and non-Gaussianity measures for stochastic dynamic systems. In Proceedings of the 16th International Conference on Information Fusion, Istanbul, Turkey, 9 July 2013; pp. 204–211.

- Straka, O.; Duník, J.; Šimandl, M. Unscented Kalman filter with controlled adaptation. In Proceedings of the 16th IFAC Symposium on System Identification, Brussels, Belgium, 11–13 July 2012; pp. 906–911.

- Lu, Q.; Bar-Shalom, Y.; Willett, P.; Zhou, S. Nonlinear observation models with additive Gaussian noises and efficient MLEs. IEEE Signal Process. Lett. 2017, 24, 545–549.

- Schweppe, F.C. Uncertain Dynamic Systems; Prentice Hall: Englewood Cliffs, NJ, USA, 1973.

- Walpole, R.E.; Myers, R.H.; Myers, S.L.; Ye, K. Probability and Statistics for Engineers and Scientists, 9th ed.; Prentice Hall: Englewood Cliffs, NJ, USA, 2011.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).