A Robust Method for Automatic Panoramic UAV Image Mosaic

Abstract

1. Introduction

2. Related Work

2.1. Nonrigid Image Matching

2.2. Local Transformation

2.3. Bundle Adjustment

2.4. Aerial Image Mosaic

3. Methodology

3.1. Nonrigid Feature Matching Using Vector Field Consensus

3.2. Local Warp and Bundle Adjustment

4. Experimental Results

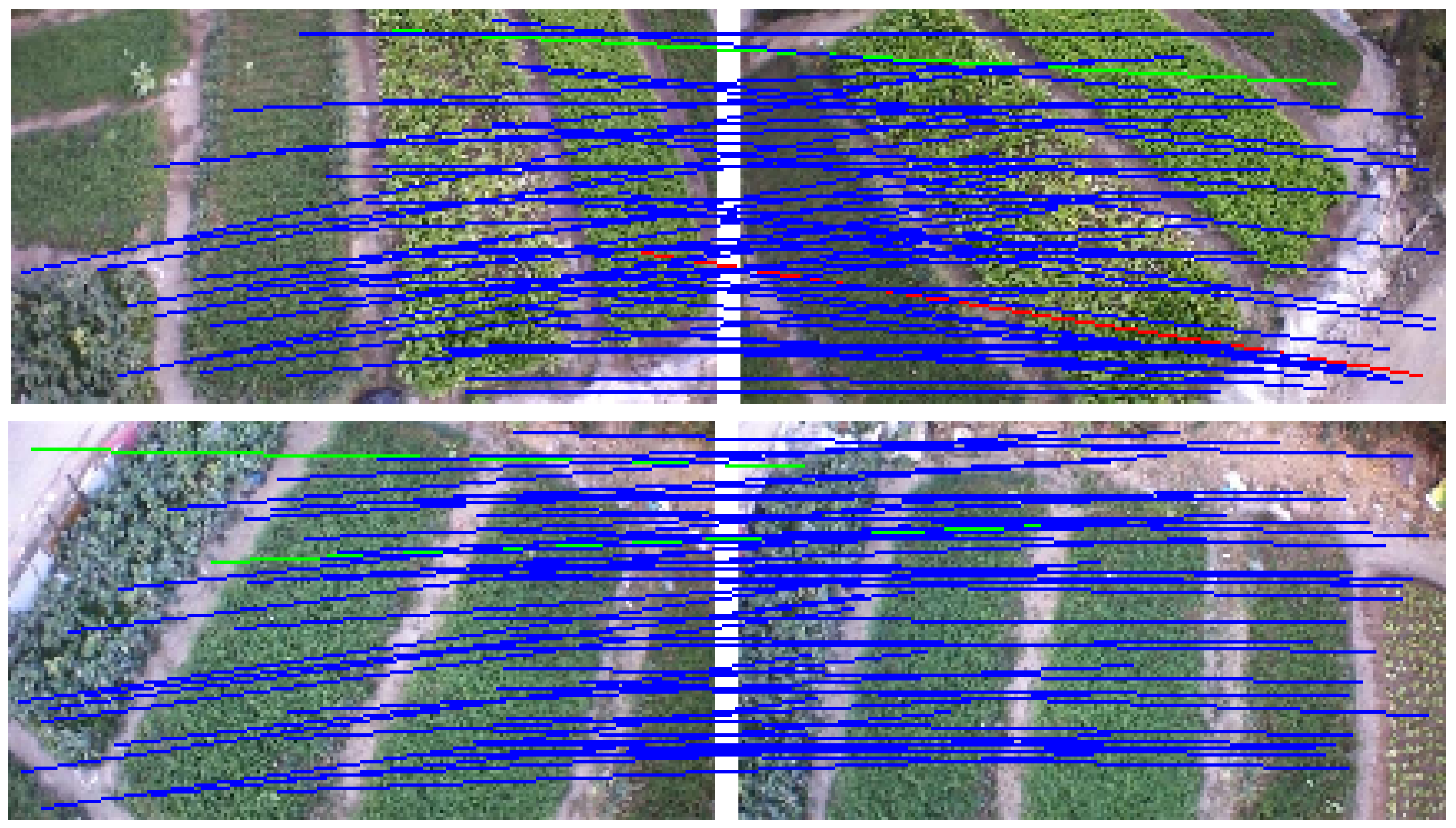

4.1. Remove Mismatches on Nonrigid Image Pairs

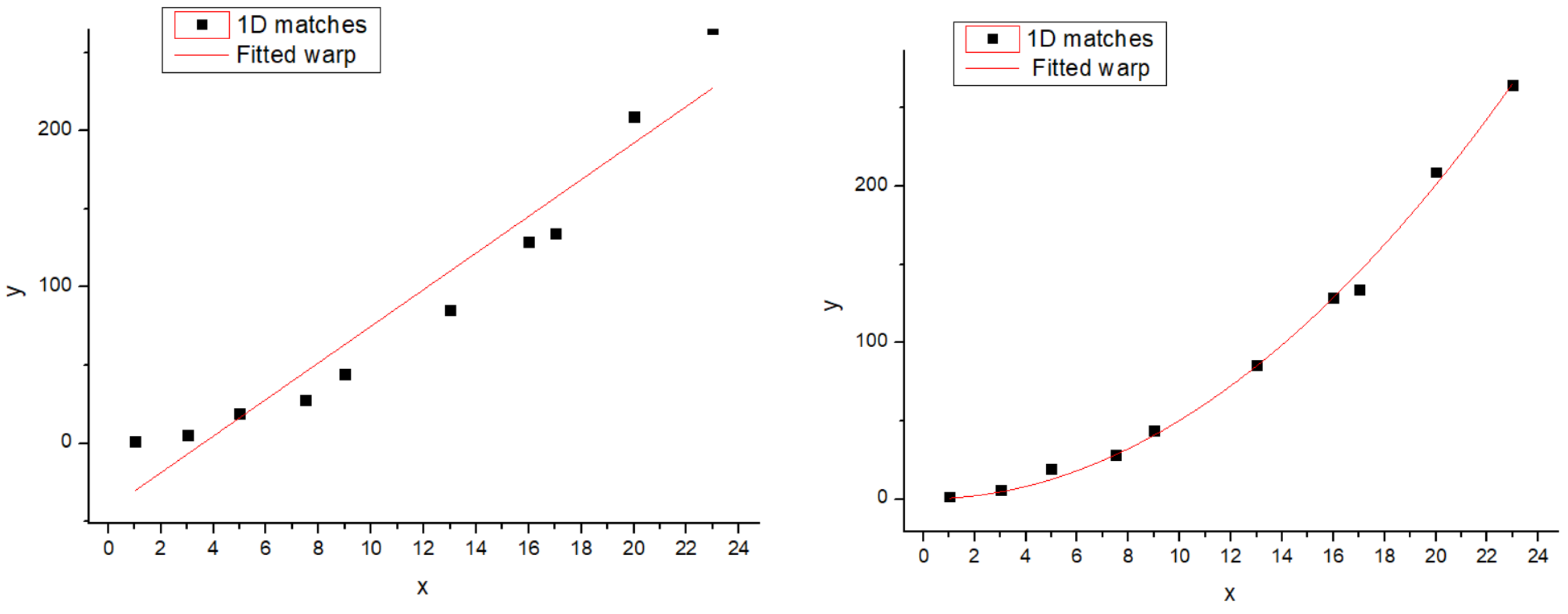

4.2. Overall Differentiation and Local Warp

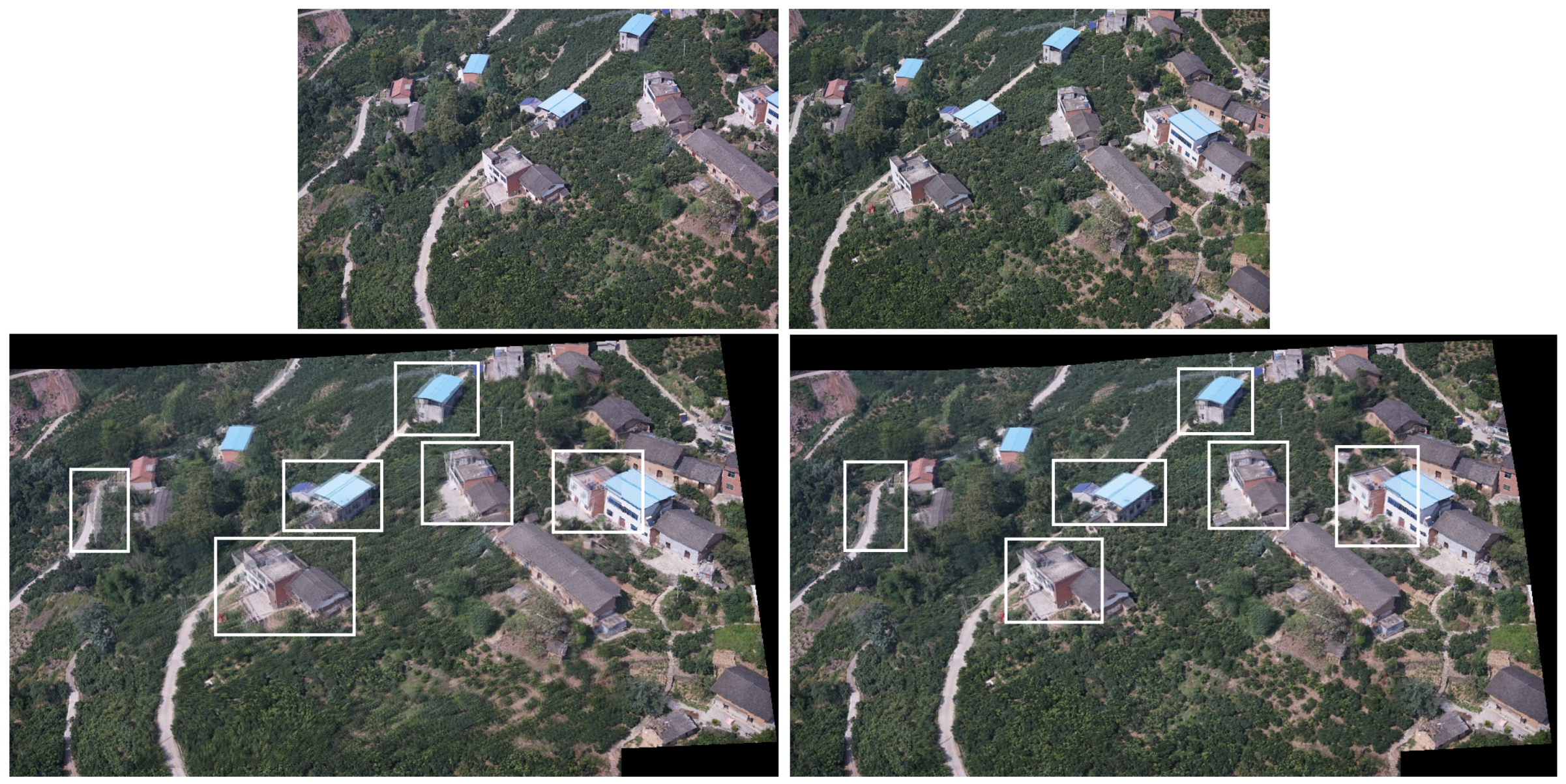

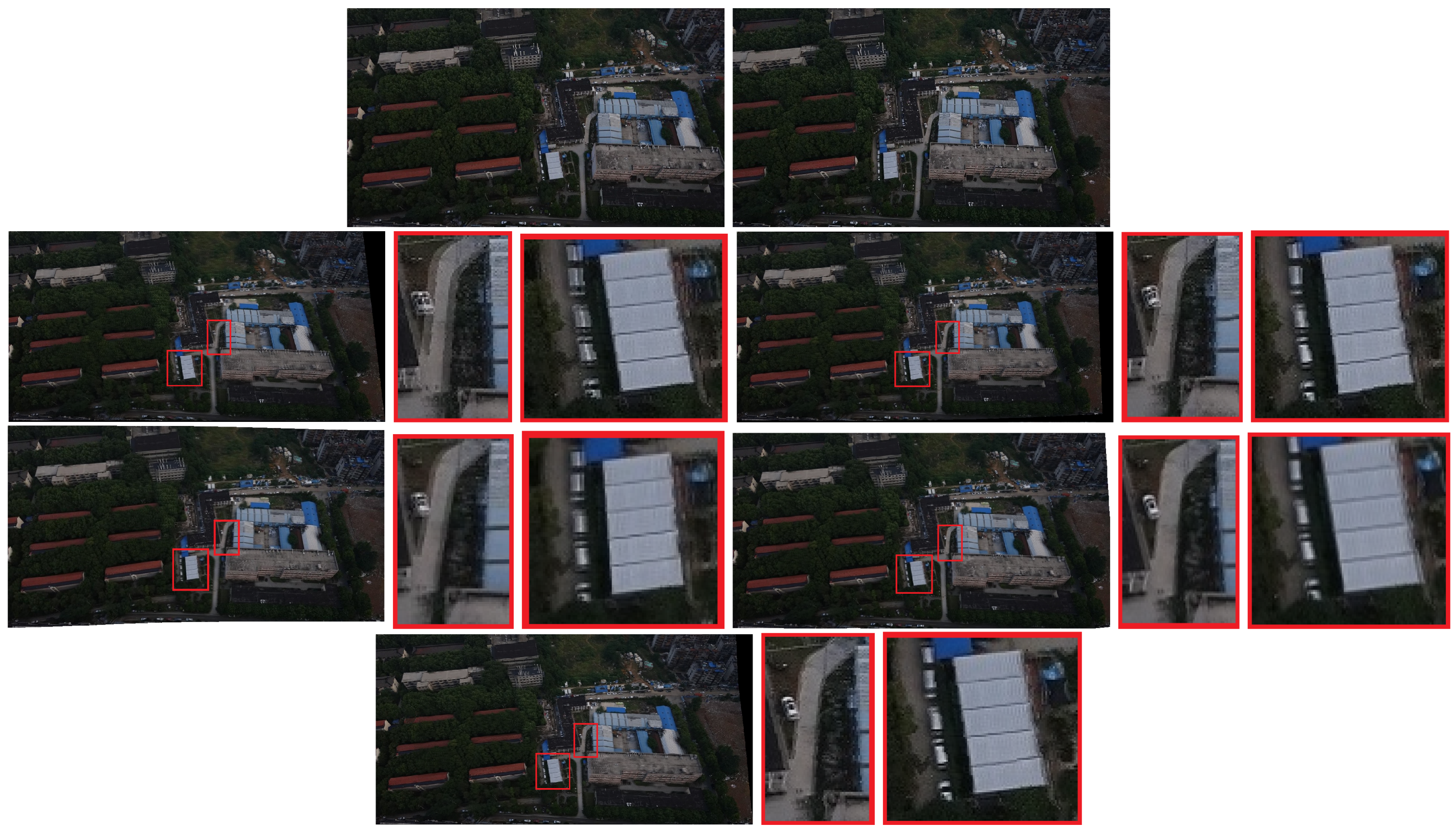

4.3. The Effect of Nonrigid Changes and Parallax

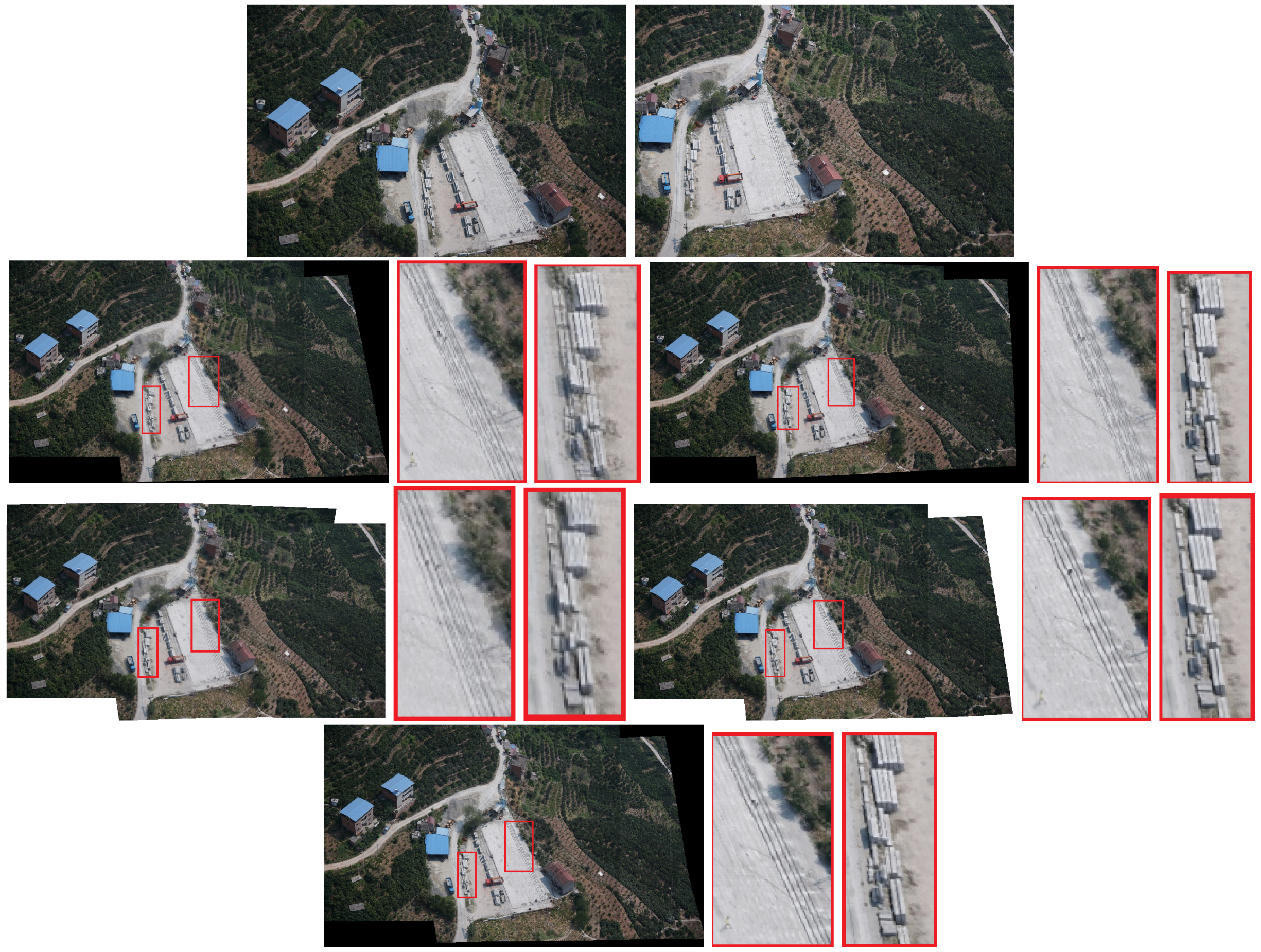

4.4. The Effect of Noise and Blurriness

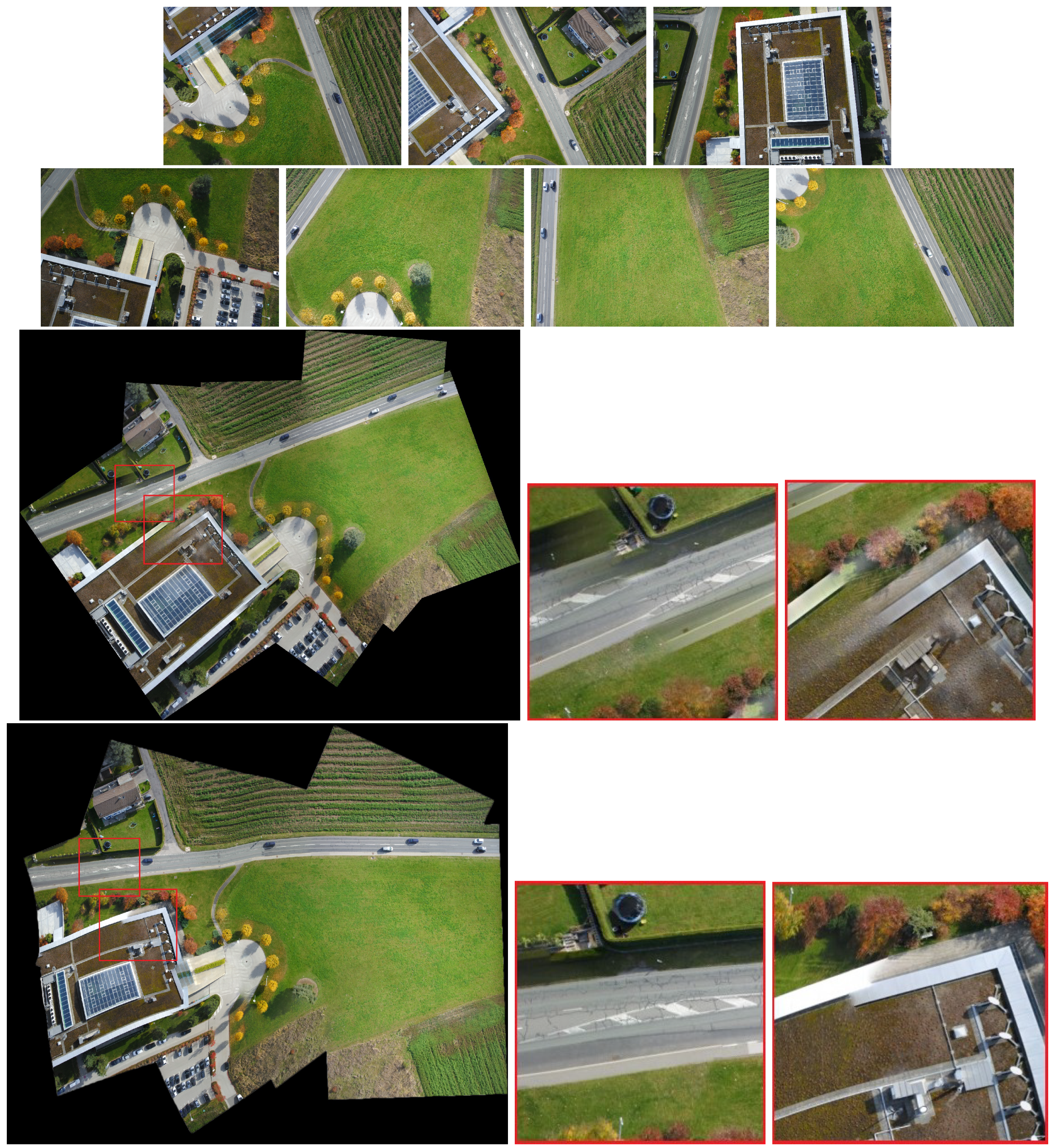

4.5. Bundle Adjustment and Panoramic Stitching

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Adel, E.; Elmogy, M.; Elbakry, H. Image stitching based on feature extraction techniques: A survey. Int. J. Comput. Appl. 2014, 99, 1–8.

2. Zhang, W.; Guo, B.; Li, M.; Liao, X.; Li, W. Improved Seam-Line Searching Algorithm for UAV Image Mosaic with Optical Flow. Sensors 2018, 18, 1214.

3. Ma, J.; Zhou, H.; Zhao, J.; Gao, Y.; Jiang, J.; Tian, J. Robust feature matching for remote sensing image registration via locally linear transforming. IEEE Trans. Geosci. Remote Sens. 2015, 53, 6469–6481.

4. Brown, M.; Lowe, D.G. Automatic panoramic image stitching using invariant features. Int. J. Comput. Vis. 2007, 74, 59–73.

5. Burt, P.J.; Adelson, E.H. A multiresolution spline with application to image mosaics. ACM Trans. Graph. 1983, 2, 217–236.

6. Li, M.; Chen, R.; Zhang, W.; Li, D.; Liao, X.; Wang, L.; Pan, Y.; Zhang, P. A Stereo Dual-Channel Dynamic Programming Algorithm for UAV Image Stitching. Sensors 2017, 17, 2060.

7. Agarwala, A.; Dontcheva, M.; Agrawala, M.; Drucker, S.; Colburn, A.; Curless, B.; Salesin, D.; Cohen, M. Interactive digital photomontage. ACM Trans. Graph. 2004, 23, 294–302.

8. Eden, A.; Uyttendaele, M.; Szeliski, R. Seamless image stitching of scenes with large motions and exposure differences. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; Volume 2, pp. 2498–2505.

9. Pérez, P.; Gangnet, M.; Blake, A. Poisson image editing. ACM Trans. Graph. 2003, 22, 313–318.

10. Ma, J.; Zhao, J.; Tian, J.; Bai, X.; Tu, Z. Regularized vector field learning with sparse approximation for mismatch removal. Pattern Recognit. 2013, 46, 3519–3532.

11. Wang, D.; Liu, H.; Cheng, X. A Miniature Binocular Endoscope with Local Feature Matching and Stereo Matching for 3D Measurement and 3D Reconstruction. Sensors 2018, 18, 2243.

12. Yu, M.; Deng, K.; Yang, H.; Qin, C. Improved WαSH Feature Matching Based on 2D-DWT for Stereo Remote Sensing Images. Sensors 2018, 18, 3494.

13. Ma, J.; Jiang, X.; Jiang, J.; Zhao, J.; Guo, X. LMR: Learning A Two-class Classifier for Mismatch Removal. IEEE Trans. Image Process. 2019.

14. Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003.

15. Zaragoza, J.; Chin, T.J.; Brown, M.S.; Suter, D. As-projective-as-possible image stitching with moving DLT. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2339–2346.

16. Ma, J.; Zhao, J.; Tian, J.; Yuille, A.L.; Tu, Z. Robust Point Matching via Vector Field Consensus. IEEE Trans. Image Process. 2014, 23, 1706–1721.

17. Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle adjustment-a modern synthesis. In Proceedings of the International Workshop on Vision Algorithms, Corfu, Greece, 21–22 September 1999; pp. 298–372.

18. Ma, J.; Ma, Y.; Li, C. Infrared and visible image fusion methods and applications: A survey. Inf. Fusion 2019, 45, 153–178.

19. Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A generative adversarial network for infrared and visible image fusion. Inf. Fusion 2019, 48, 11–26.

20. Wang, Z.; Yi, P.; Jiang, K.; Jiang, J.; Han, Z.; Lu, T.; Ma, J. Multi-memory convolutional neural network for video super-resolution. IEEE Trans. Image Process. 2019, 28, 2530–2544.

21. Lu, T.; Xiong, Z.; Zhang, Y.; Wang, B.; Lu, T. Robust face super-resolution via locality-constrained low-rank representation. IEEE Access 2017, 5, 13103–13117.

22. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

23. Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

24. Torr, P.H.; Zisserman, A. MLESAC: A new robust estimator with application to estimating image geometry. Comput. Vis. Image Underst. 2000, 78, 138–156.

25. Chum, O.; Matas, J.; Kittler, J. Locally optimized RANSAC. Joint Pattern Recognition Symposium; Springer: Berlin/Heidelberg, Germany, 2003; pp. 236–243.

26. Chum, O.; Matas, J. Matching with PROSAC-progressive sample consensus. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 220–226.

27. Li, X.; Hu, Z. Rejecting mismatches by correspondence function. Int. J. Comput. Vis. 2010, 89, 1–17.

28. Besl, P.J.; McKay, N.D. Method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

29. Myronenko, A.; Song, X. Point set registration: Coherent point drift. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 2262–2275.

30. Ma, J.; Zhao, J.; Yuille, A.L. Non-rigid point set registration by preserving global and local structures. IEEE Trans. Image Process. 2016, 25, 53–64.

31. Ma, J.; Zhao, J.; Jiang, J.; Zhou, H.; Guo, X. Locality preserving matching. Int. J. Comput. Vis. 2019, 127, 512–531.

32. Ma, J.; Jiang, J.; Zhou, H.; Zhao, J.; Guo, X. Guided locality preserving feature matching for remote sensing image registration. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4435–4447.

33. Yan, J.; Li, C.; Li, Y.; Cao, G. Adaptive discrete hypergraph matching. IEEE Trans. Cybern. 2018, 48, 765–779.

34. Torresani, L.; Kolmogorov, V.; Rother, C. Feature correspondence via graph matching: Models and global optimization. In Proceedings of the European Conference on Computer Vision, Marseille, France, 12–18 October 2008; pp. 596–609.

35. Leordeanu, M.; Hebert, M. A spectral technique for correspondence problems using pairwise constraints. In Proceedings of the IEEE International Conference on Computer Vision, San Diego, CA, USA, 20–25 June 2005; Volume 2, pp. 1482–1489.

36. Liu, H.; Yan, S. Common visual pattern discovery via spatially coherent correspondences. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 1609–1616.

37. Liu, F.; Gleicher, M.; Jin, H.; Agarwala, A. Content-preserving warps for 3D video stabilization. ACM Trans. Graph. 2009, 28, 44.

38. Gao, J.; Kim, S.J.; Brown, M.S. Constructing image panoramas using dual-homography warping. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 49–56.

39. Lin, W.Y.; Liu, S.; Matsushita, Y.; Ng, T.T.; Cheong, L.F. Smoothly varying affine stitching. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 345–352.

40. Chang, C.H.; Sato, Y.; Chuang, Y.Y. Shape-preserving half-projective warps for image stitching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3254–3261.

41. Lin, C.C.; Pankanti, S.U.; Natesan Ramamurthy, K.; Aravkin, A.Y. Adaptive as-natural-as-possible image stitching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1155–1163.

42. Shum, H.Y.; Szeliski, R. Systems and experiment paper: Construction of panoramic image mosaics with global and local alignment. Int. J. Comput. Vis. 2000, 36, 101–130.

43. Lourakis, M.; Argyros, A.A. Is Levenberg-Marquardt the most efficient optimization algorithm for implementing bundle adjustment? In Proceedings of the IEEE International Conference on Computer Vision, Beijing, China, 17–21 October 2005; Volume 2, pp. 1526–1531.

44. Park, S.; Ghosh, D.; Kaabouch, N.; Fevig, R.A.; Semke, W. Hierarchical multi-level image mosaicing for autonomous navigation of UAV. In Proceedings of the Intelligent Robots and Computer Vision XXIX: Algorithms and Techniques, International Society for Optics and Photonics, Burlingame, CA, USA, 23 January 2012; Volume 8301, p. 830116.

45. Ghosh, D.; Kaabouch, N.; Fevig, R.A. Robust spatial-domain based super-resolution mosaicing of CubeSat video frames: Algorithm and evaluation. Comput. Inf. Sci. 2014, 7, 68.

46. Ghosh, D.; Park, S.; Kaabouch, N.; Semke, W. Quantitative evaluation of image mosaicing in multiple scene categories. In Proceedings of the 2012 IEEE International Conference on Electro/Information Technology, Indianapolis, IN, USA, 6–8 May 2012; pp. 1–6.

47. Aronszajn, N. Theory of reproducing kernels. Trans. Am. Math. Soc. 1950, 68, 337–404.

48. Bishop, C.M. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006.

49. Szeliski, R. Image alignment and stitching: A tutorial. Found. Trends Comput. Graph. Vis. 2007, 2, 1–104.

50. Kang, E.Y.; Cohen, I.; Medioni, G. A graph-based global registration for 2d mosaics. In Proceedings of the International Conference on Pattern Recognition, Barcelona, Spain, 3–7 September 2000; pp. 257–260.

51. Marzotto, R.; Fusiello, A.; Murino, V. High resolution video mosaicing with global alignment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004.

52. Zhang, Z. Parameter estimation techniques: A tutorial with application to conic fitting. Image Vis. Comput. 1997, 15, 59–76.

53. Ma, J.; Qiu, W.; Zhao, J.; Ma, Y.; Yuille, A.L.; Tu, Z. Robust L2E Estimation of Transformation for Non-Rigid Registration. IEEE Trans. Signal Process. 2015, 63, 1115–1129.

54. Li, J.; Wang, Z.; Lai, S.; Zhai, Y.; Zhang, M. Parallax-tolerant image stitching based on robust elastic warping. IEEE Trans. Multimed. 2018, 20, 1672–1687.

55. Sensefly. Available online: https://www.sensefly.com/drones/example-datasets.html (accessed on 28 March 2019).

| | RANSAC [23] | ICF [27] | GS [36] | VFC |
|---|---|---|---|---|
| (P, R) | (99.66%, 97.67%) | (98.98%, 91.76%) | (99.59%, 81.51%) | (99.67%, 98.02%) |
| (P, R) | (100.0%, 97.07%) | (99.32%, 92.86%) | (100.0%, 94.06%) | (100.0%, 97.62%) |
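The (P, R) pairs in the table are read here as precision and recall of the matches each method preserves, which is the usual convention in mismatch-removal evaluations; the table itself carries no legend, so this reading is an assumption. Under that assumption, a minimal sketch of how such a pair could be computed from a set of preserved matches and a ground-truth set of correct matches follows. The function name `precision_recall` and the toy index sets are hypothetical and do not come from the article.

```python
def precision_recall(predicted_inliers, true_inliers):
    """Return (precision, recall) for a mismatch-removal result.

    Both arguments are collections of match indices: the matches a method
    kept, and the matches that are correct according to ground truth.
    """
    predicted = set(predicted_inliers)
    truth = set(true_inliers)
    kept_correct = len(predicted & truth)  # correct matches that were kept
    # Precision: share of kept matches that are correct.
    precision = kept_correct / len(predicted) if predicted else 0.0
    # Recall: share of correct matches that were kept.
    recall = kept_correct / len(truth) if truth else 0.0
    return precision, recall


# Hypothetical toy data: matches 0..5 are correct; the method keeps
# five of them plus one wrong match (index 9).
true_inliers = {0, 1, 2, 3, 4, 5}
predicted_inliers = {0, 1, 2, 3, 4, 9}
p, r = precision_recall(predicted_inliers, true_inliers)
print(f"(P, R) = ({p:.2%}, {r:.2%})")
```

A method that keeps few but reliable matches scores high on precision and low on recall, which is the pattern the GS column shows in the first row.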

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, J.; Xu, Q.; Luo, L.; Wang, Y.; Wang, S. A Robust Method for Automatic Panoramic UAV Image Mosaic. Sensors 2019, 19, 1898. https://doi.org/10.3390/s19081898
