A Game-Theoretic Framework to Preserve Location Information Privacy in Location-Based Service Applications

Abstract

1. Introduction

- We formally analyze privacy protection scenarios using game theory and formulate the interaction between a privacy protector (i.e., an LBS user) and an outside observer (i.e., a requester of the user’s private information, or simply a visitor) as a prisoner’s dilemma in a static game.

- We analyze the existence of Nash equilibrium solutions for privacy protection methods and propose a strategy selection scheme that helps users and outsiders obtain their corresponding benefits.

- We describe the procedures of the game model, the gameplay scenario, and the framework design of the game-theoretic model.

- We run experiments on both the game-theoretic model and existing technique-oriented solutions, and validate the effectiveness of the former through comparative analysis.

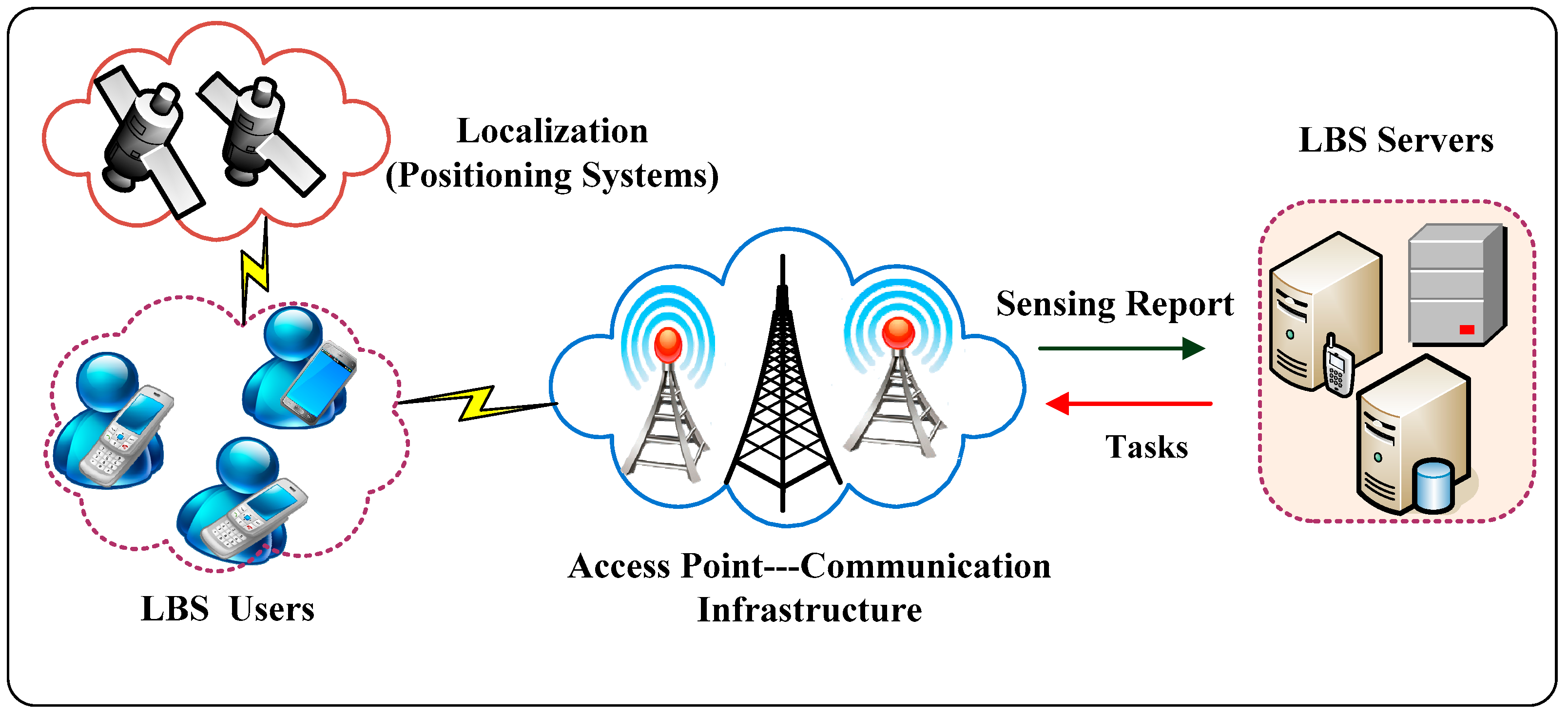

2. System Model and Assumptions for LBSs

2.1. Privacy and Adversarial Consideration in LBS

2.2. Game Analysis in the Traditional Privacy Protection Model

2.3. Reward Matrix for the Traditional Game Theory Model

3. Privacy Game Analysis Using the Game Theory Model

3.1. Game Theory-Based General Privacy Protection Model

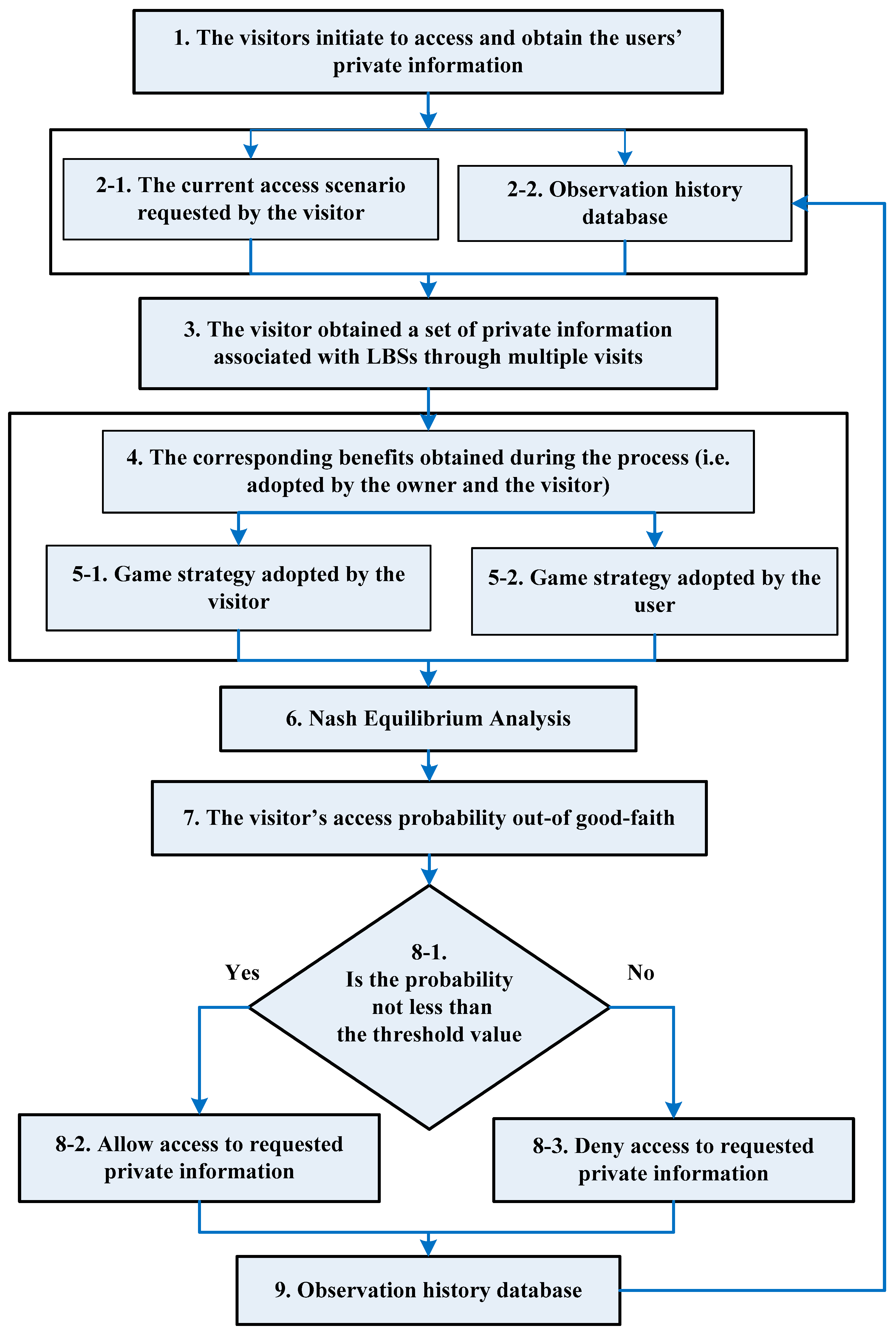

3.2. The Gameplay Process between the Privacy Protector and the Visitor

3.3. Design Framework for the Game-Theoretic Protection Model

4. Experimental Analysis and Discussion

4.1. The Scenario Settings of the Evaluation System

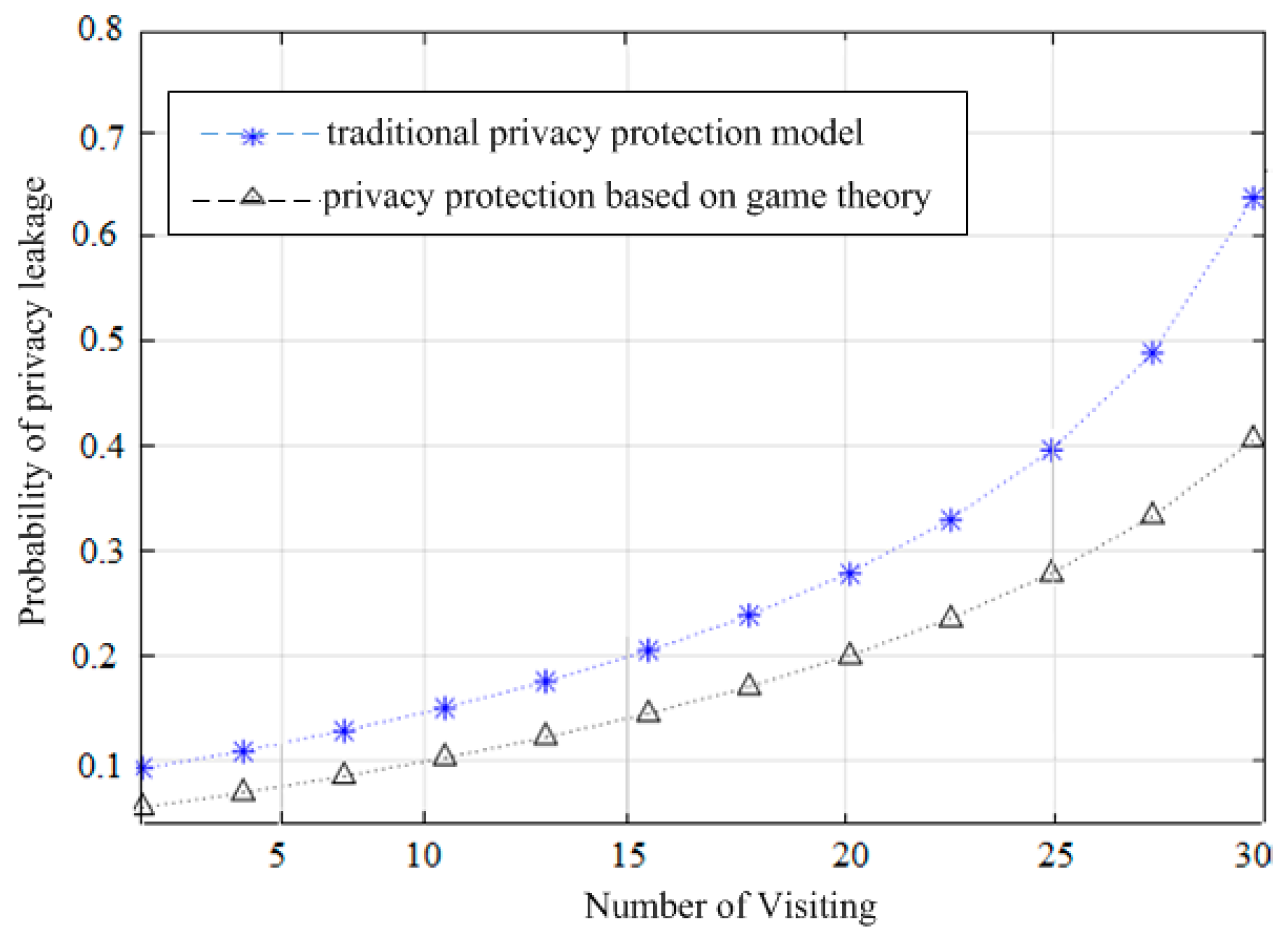

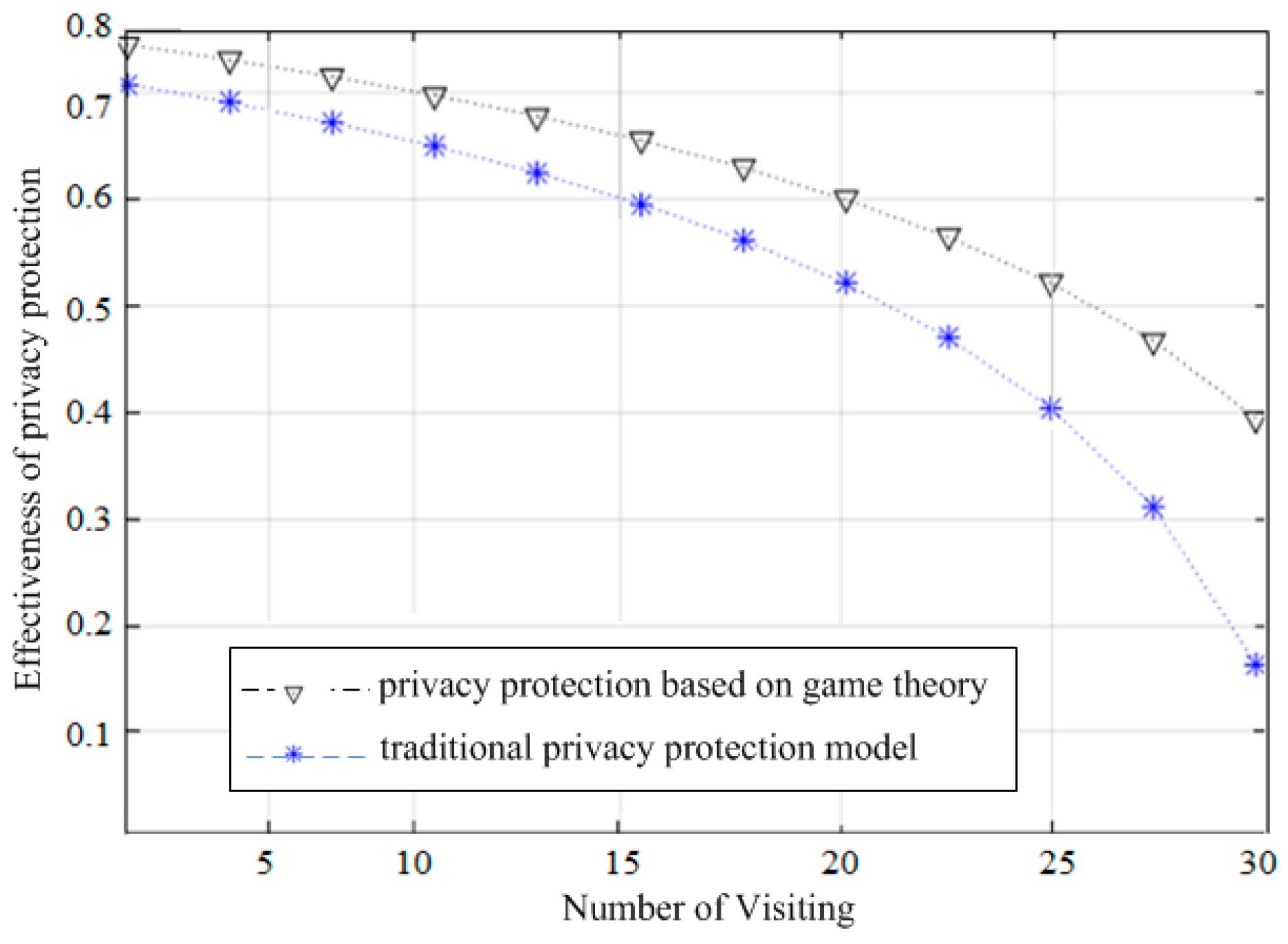

4.2. Data Set and Evaluation Results

5. Related Works

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Owners (Users) | Third-Party Visitors: Good-Faith | Third-Party Visitors: Malicious |
|---|---|---|
| Allow | , | |
| Refuse | , 0 | , 0 |
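As a minimal sketch of how a 2×2 reward matrix of this shape can be checked for pure-strategy Nash equilibria, the following Python snippet enumerates every strategy pair and keeps those from which neither player gains by deviating alone. The payoff numbers, the dictionary `PAYOFFS`, and the function name `pure_nash_equilibria` are hypothetical and chosen only for illustration; they are not taken from the paper. The only property carried over from the table is that the visitor's payoff is 0 whenever the user refuses.

```python
from itertools import product

USER_ACTIONS = ("Allow", "Refuse")
VISITOR_TYPES = ("Good-Faith", "Malicious")

# Hypothetical payoffs as (user, visitor); illustrative values only,
# NOT the paper's reward matrix.
PAYOFFS = {
    ("Allow", "Good-Faith"): (3, 3),
    ("Allow", "Malicious"): (-4, 5),
    ("Refuse", "Good-Faith"): (-1, 0),
    ("Refuse", "Malicious"): (-1, 0),
}


def pure_nash_equilibria(payoffs, row_actions, col_actions):
    """Return all (row, col) pairs where neither player can gain by deviating alone."""
    equilibria = []
    for r, c in product(row_actions, col_actions):
        u_row, u_col = payoffs[(r, c)]
        # The row player (user) cannot do better against column choice c.
        row_best = all(payoffs[(r2, c)][0] <= u_row for r2 in row_actions)
        # The column player (visitor) cannot do better against row choice r.
        col_best = all(payoffs[(r, c2)][1] <= u_col for c2 in col_actions)
        if row_best and col_best:
            equilibria.append((r, c))
    return equilibria


if __name__ == "__main__":
    print(pure_nash_equilibria(PAYOFFS, USER_ACTIONS, VISITOR_TYPES))
```

With these assumed numbers the only pure-strategy equilibrium is (Refuse, Malicious), even though (Allow, Good-Faith) would leave both players better off, which is the prisoner's dilemma pattern the static game is meant to capture.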

| Tolerance | Threshold |
|---|---|
| Very High | [0, 0.2] |
| High | (0.2, 0.4] |
| Medium | (0.4, 0.6] |
| Low | (0.6, 0.8] |
| Very Low | (0.8, 1] |
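The interval boundaries in the table above can be turned into a simple lookup. The sketch below maps a threshold value in [0, 1] to its tolerance level using the same half-open intervals; the function name `tolerance_level` and the choice to raise an error on out-of-range input are assumptions made for this example.

```python
from bisect import bisect_left

# Upper bounds of the intervals [0, 0.2], (0.2, 0.4], ..., (0.8, 1].
_UPPER_BOUNDS = (0.2, 0.4, 0.6, 0.8, 1.0)
_LEVELS = ("Very High", "High", "Medium", "Low", "Very Low")


def tolerance_level(threshold: float) -> str:
    """Map a threshold in [0, 1] to the tolerance level of the table."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must lie in [0, 1]")
    # bisect_left places a value equal to an upper bound in the lower
    # interval, which matches the right-closed intervals of the table.
    return _LEVELS[bisect_left(_UPPER_BOUNDS, threshold)]


if __name__ == "__main__":
    for value in (0.0, 0.2, 0.35, 0.6, 0.95):
        print(value, tolerance_level(value))
```

Using `bisect_left` rather than a chain of comparisons keeps the boundary handling in one place: a threshold of exactly 0.2 falls in "Very High", while anything just above it falls in "High".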

| Owners (Users) | Third-Party Visitors: Good-Faith | Third-Party Visitors: Malicious |
|---|---|---|
| Allow | , | |
| Refuse | , 0 | , |

| No. of Visits | Private Information Leakage Probability (Traditional Model) | Private Information Leakage Probability (Game-Theoretic Model) |
|---|---|---|
| 1 | 0.05 | 0.01 |
| 2 | 0.05 | 0.03 |
| 3 | 0.05 | 0.04 |
| 4 | 0.08 | 0.06 |
| 5 | 0.14 | 0.08 |
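To make the comparison in the table above easier to read, the following sketch computes the per-visit gap between the two models and the mean leakage probability of each. The values are copied from the table; the names `LEAKAGE`, `leakage_gap`, and `mean_leakage` are illustrative and not part of the paper.

```python
from statistics import mean

# Leakage probabilities from the table: visits -> (traditional, game-theoretic).
LEAKAGE = {
    1: (0.05, 0.01),
    2: (0.05, 0.03),
    3: (0.05, 0.04),
    4: (0.08, 0.06),
    5: (0.14, 0.08),
}


def leakage_gap(table):
    """Per-visit difference: traditional minus game-theoretic probability."""
    return {n: round(trad - game, 2) for n, (trad, game) in table.items()}


def mean_leakage(table):
    """Mean leakage probability of each model over all listed visits."""
    trad = mean(t for t, _ in table.values())
    game = mean(g for _, g in table.values())
    return round(trad, 3), round(game, 3)


if __name__ == "__main__":
    print(leakage_gap(LEAKAGE))
    print(mean_leakage(LEAKAGE))
```

Over the five listed visits the mean leakage probability is 0.074 for the traditional model and 0.044 for the game-theoretic model, and the game-theoretic value is lower at every visit count.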

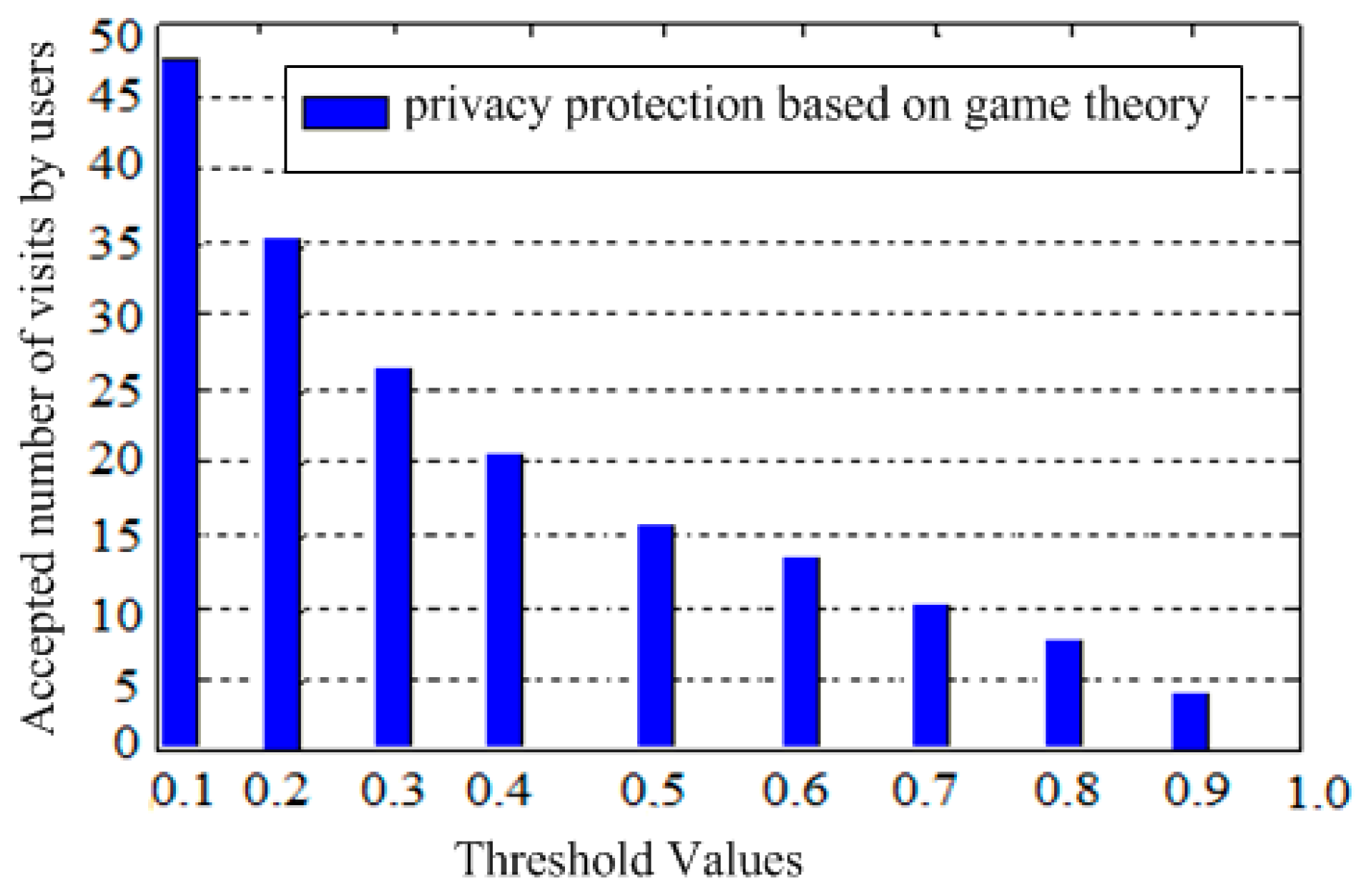

| Threshold | Number of Visits |
|---|---|
| 0.1 | 52 |
| 0.2 | 40 |
| 0.3 | 33 |
| 0.4 | 28 |
| 0.5 | 0.5 |
| 0.6 | 15 |
| 0.7 | 10 |
| 0.8 | 6 |
| 0.9 | 3 |
| 1.0 | 0 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Tefera, M.K.; Yang, X. A Game-Theoretic Framework to Preserve Location Information Privacy in Location-Based Service Applications. Sensors 2019, 19, 1581. https://doi.org/10.3390/s19071581