A Multi-Feature Search Window Method for Road Boundary Detection Based on LIDAR Data

Abstract

1. Introduction

2. Data Acquisition and Preprocessing

2.1. Data Structure Analysis

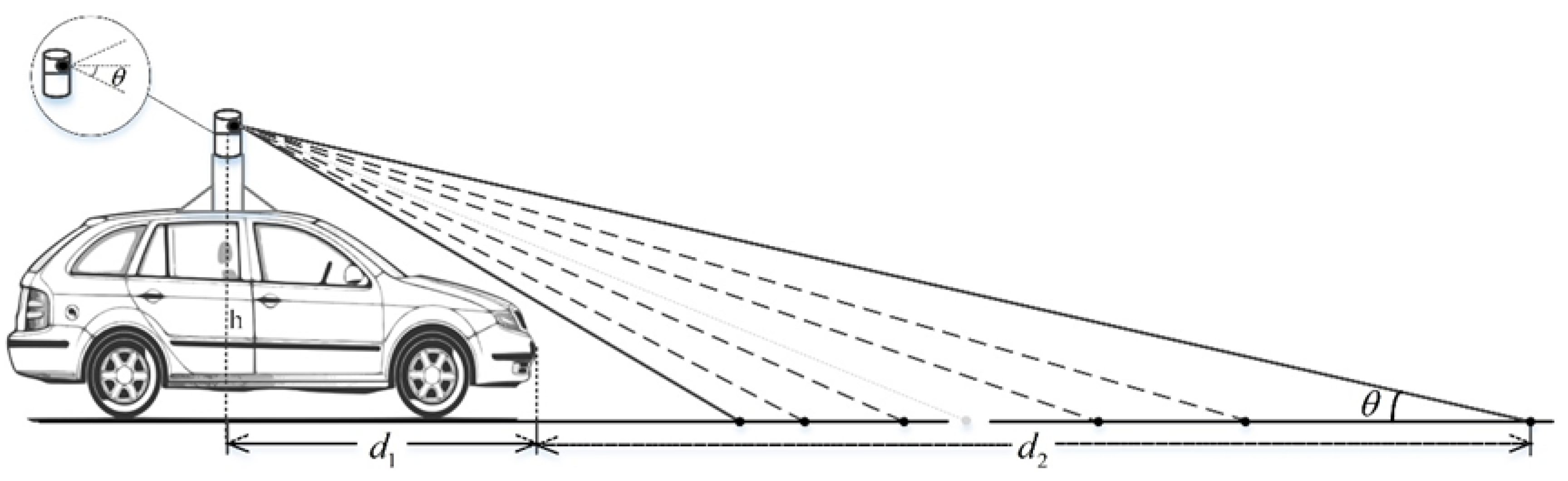

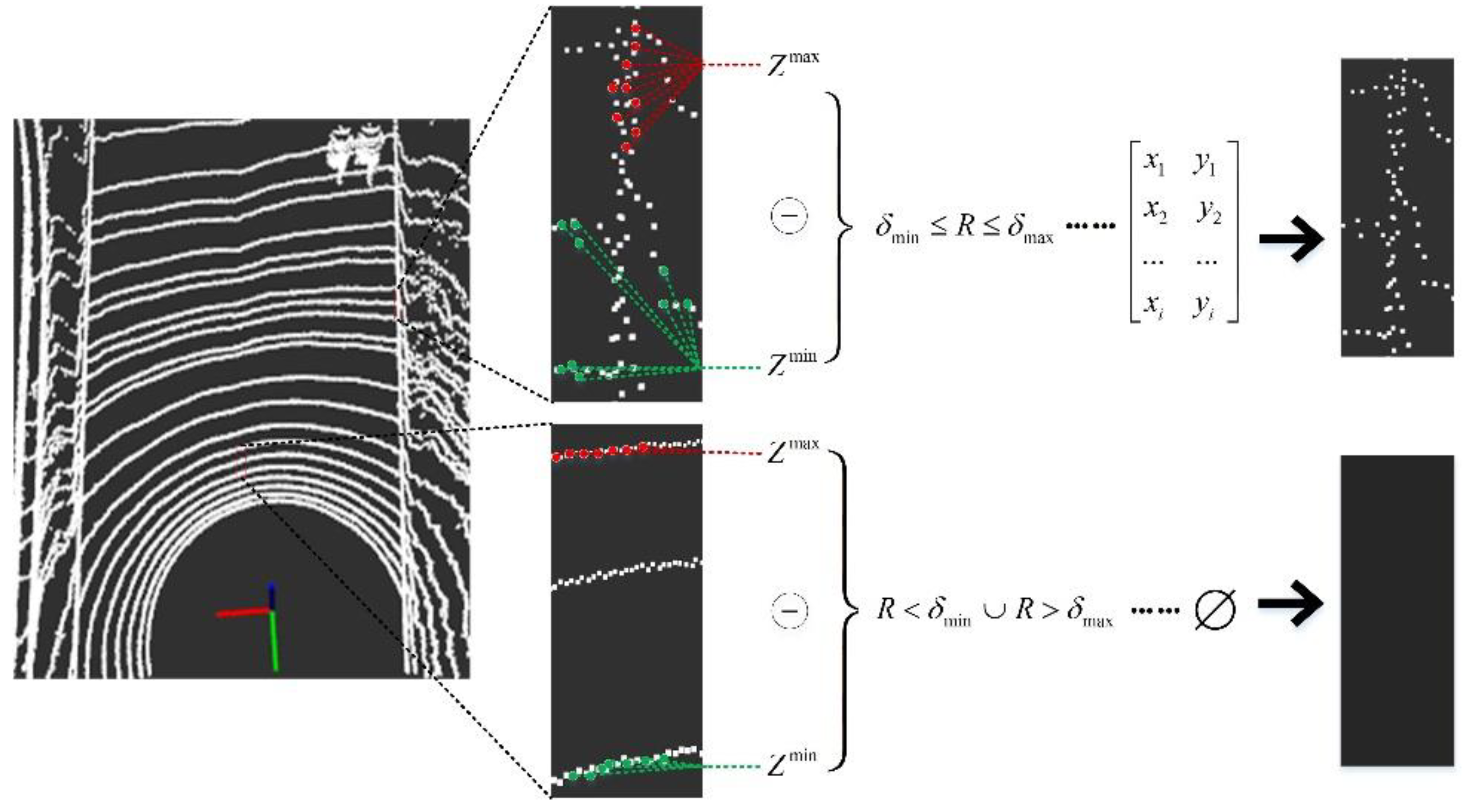

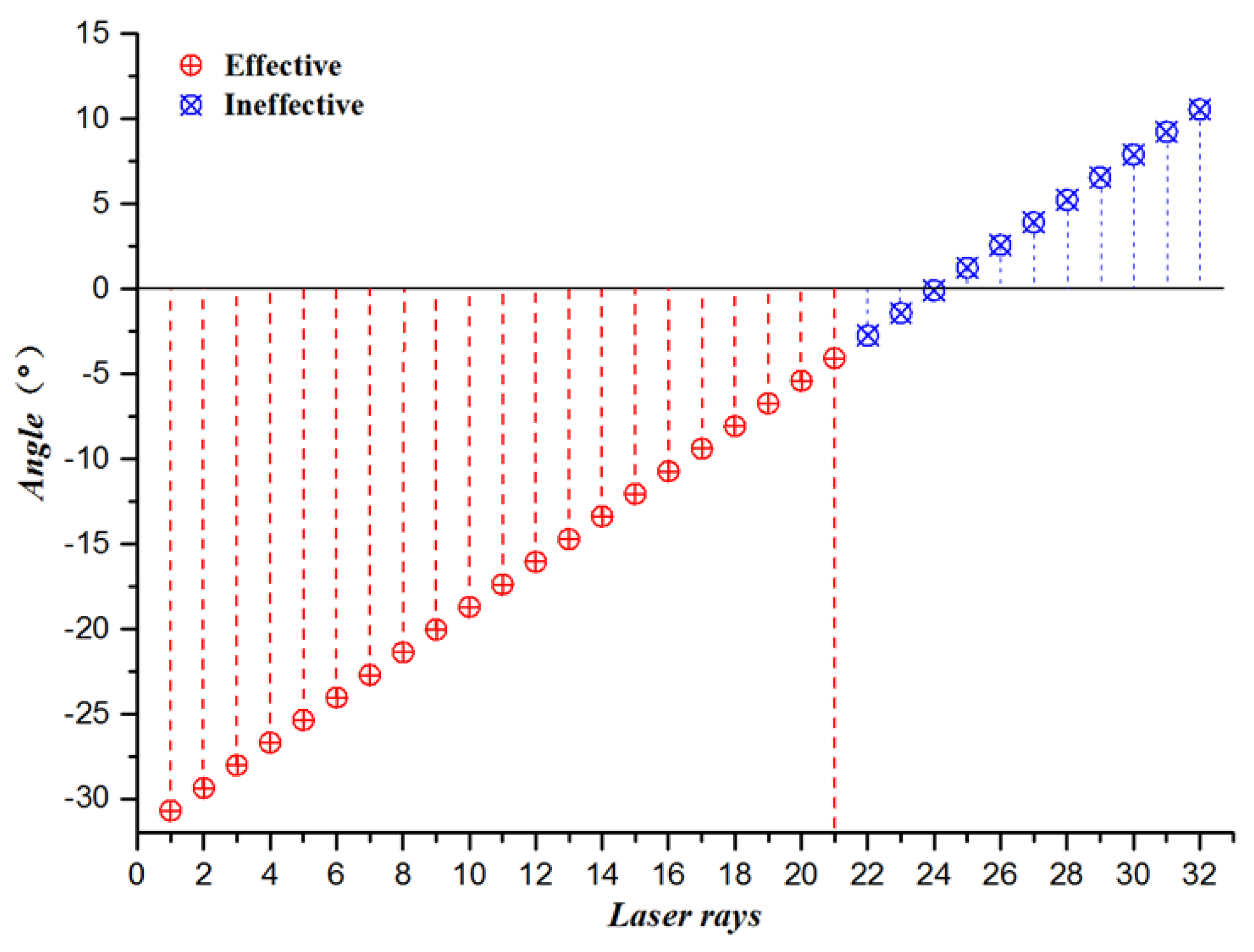

2.2. Sensor Sensing Area Selection

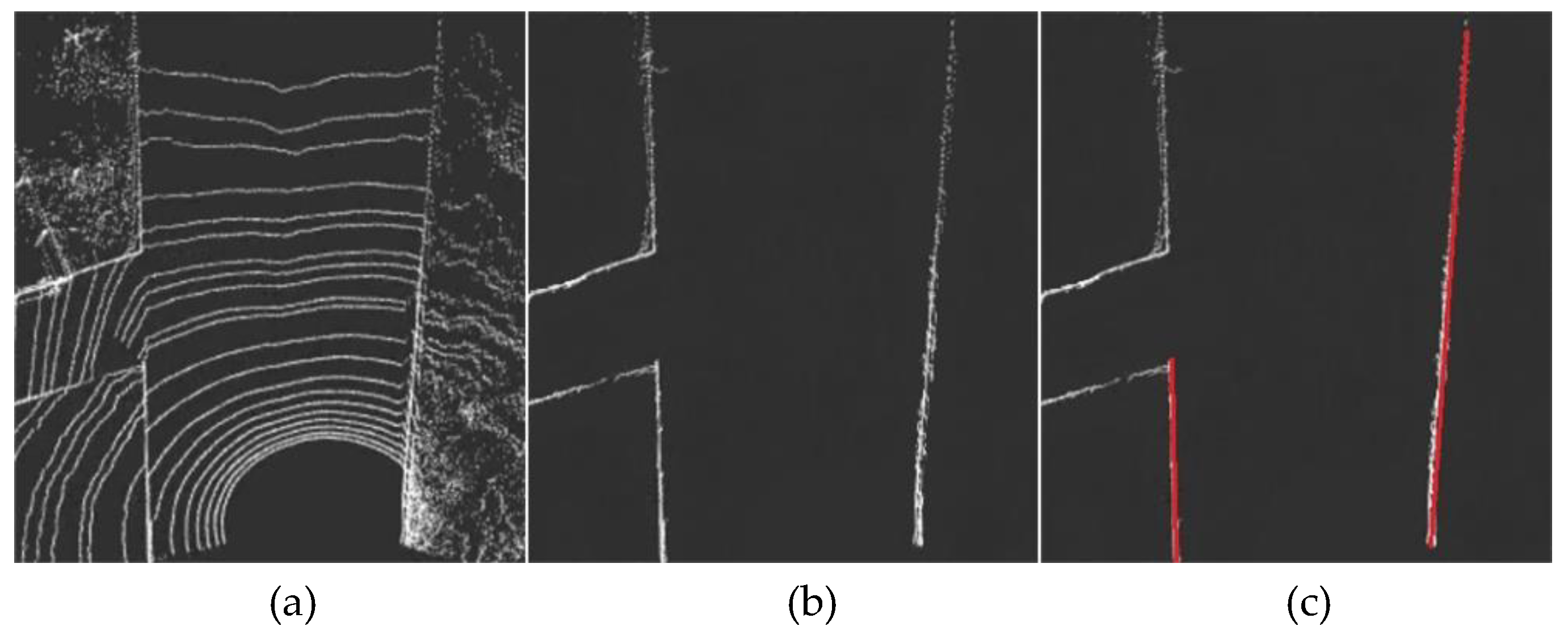

2.3. Foreground Point Cloud Area Extraction

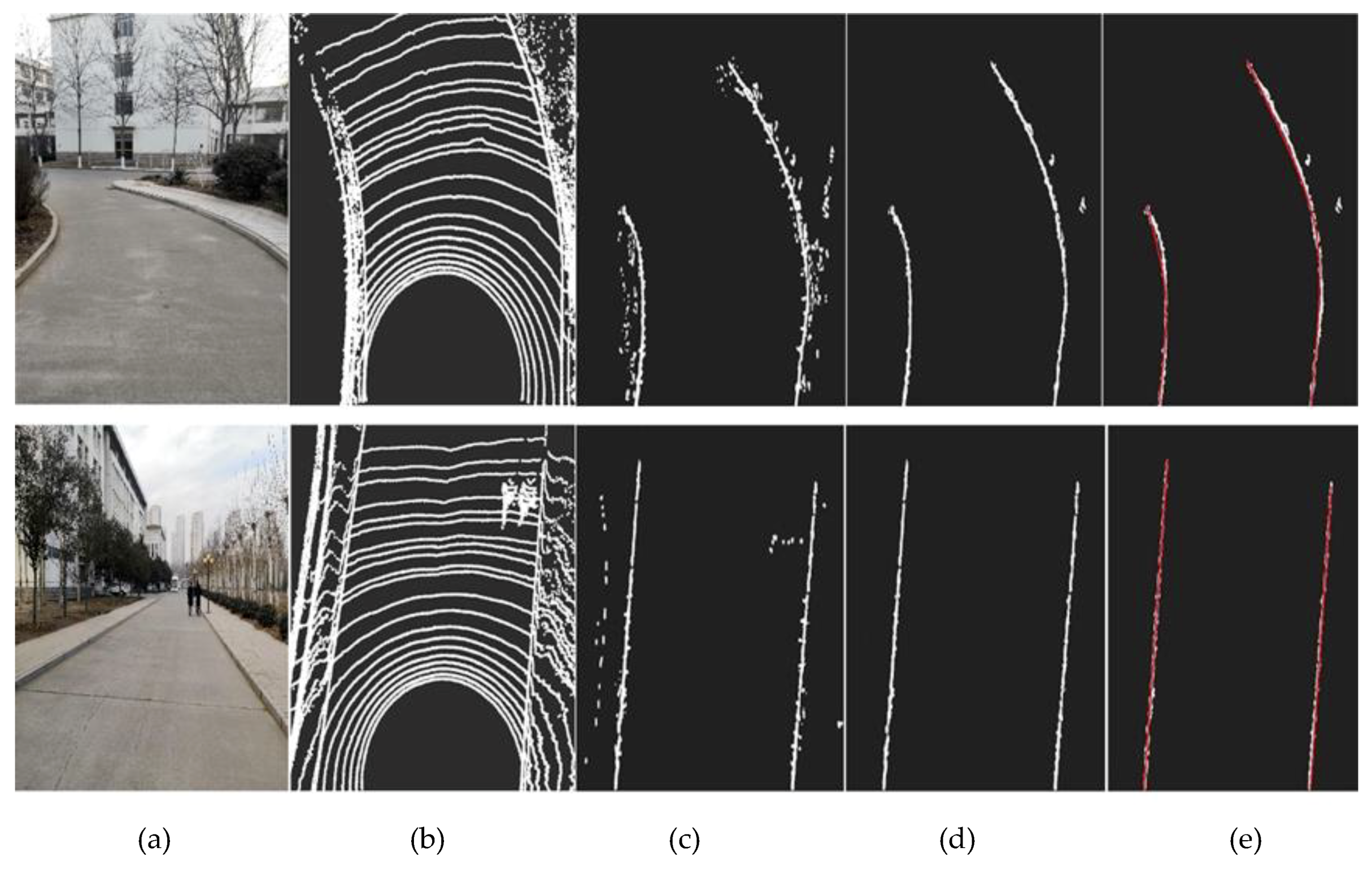

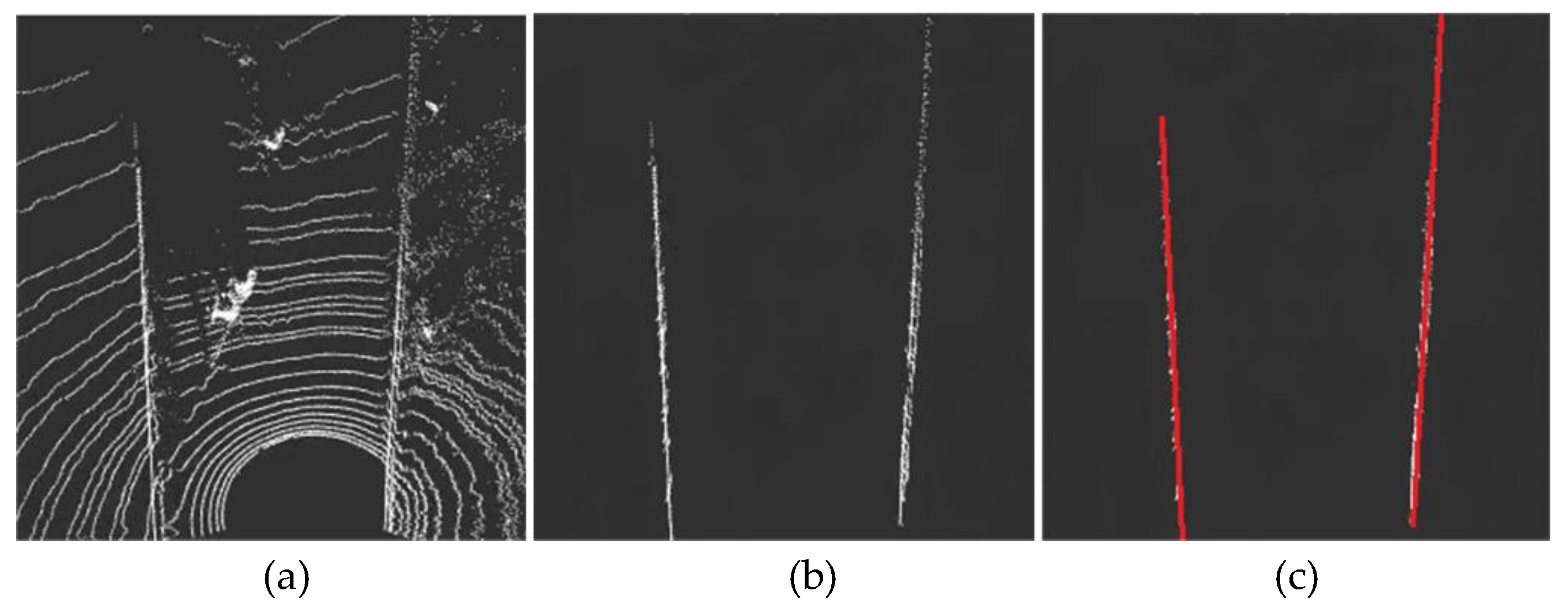

3. Extraction and Fitting of Road Boundary Points

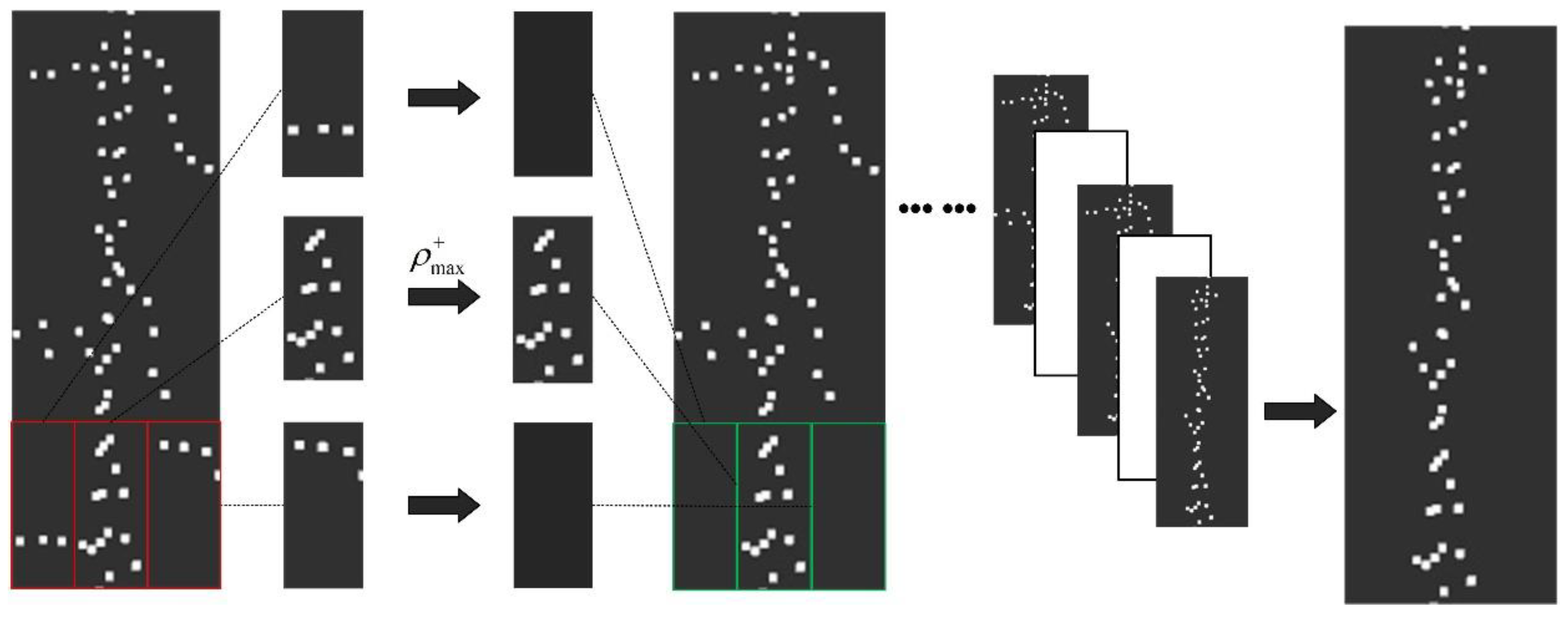

3.1. Geometric Feature Extraction

3.2. Spatial Distribution Feature Extraction

4. Experiments and Results

4.1. Experimental Design

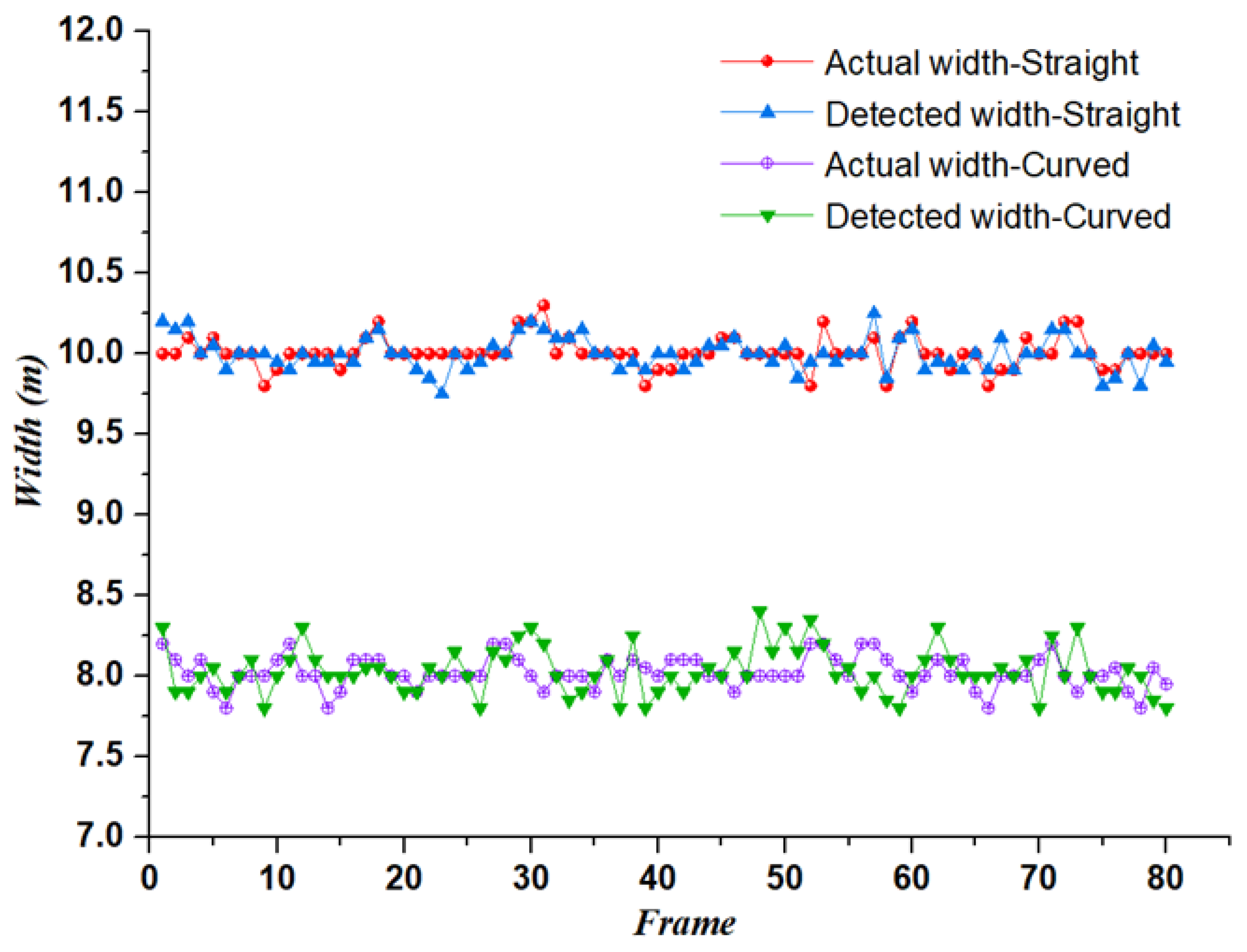

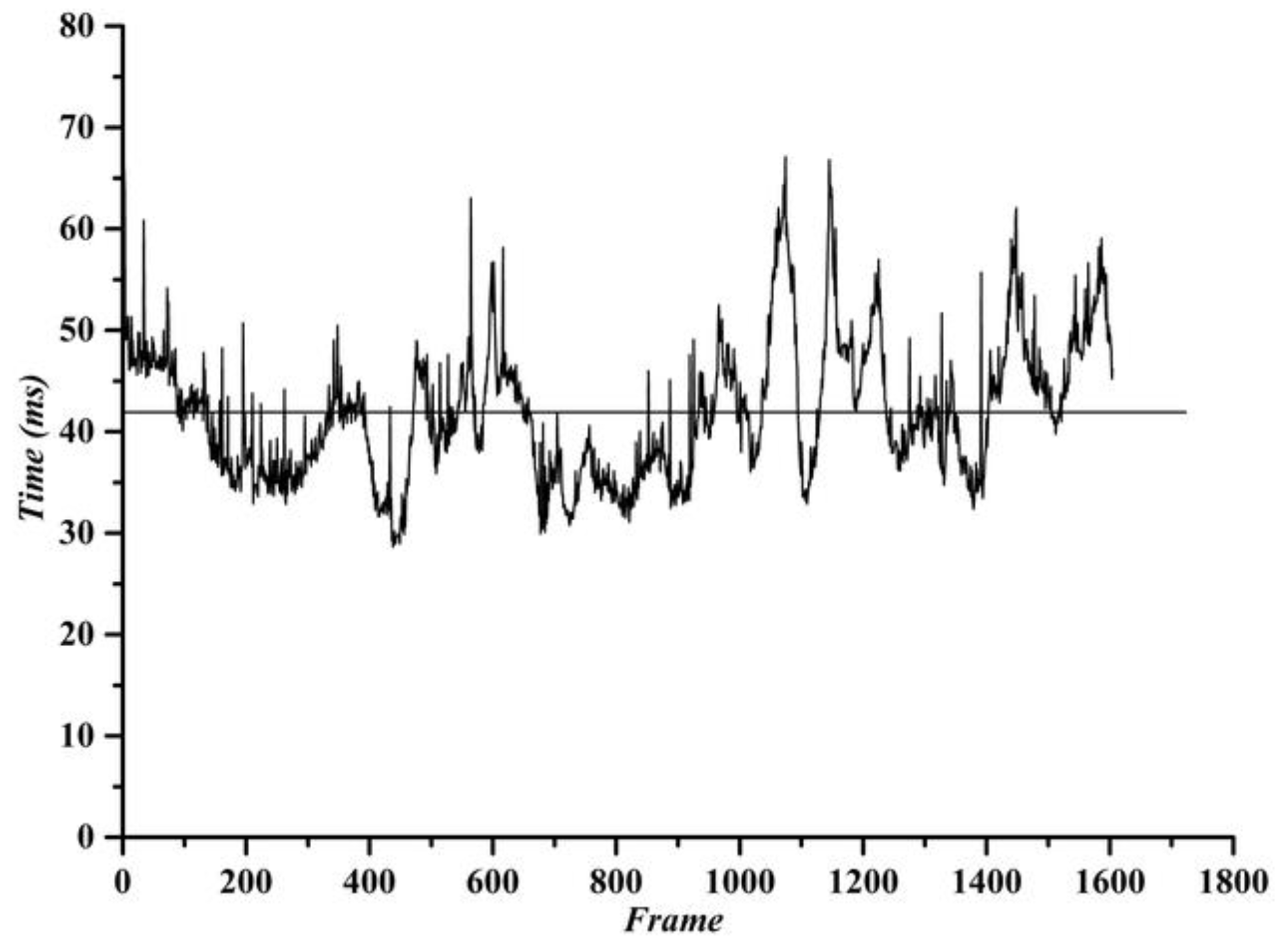

4.2. Results Evaluation

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Experimental Object | Parameter Index | Parameter Value |
|---|---|---|
| Intelligent vehicle | h | 2.2 m |
| Intelligent vehicle | d1 | 2.4 m |
| LIDAR | Horizontal field of view | 360° |
| LIDAR | Vertical field of view | −30.67° to 10.67° |
| LIDAR | Vertical resolution | 1.33° |
| LIDAR | Scanning frequency | 10 Hz |
| LIDAR | Maximum detection distance | 70 m |
| Camera | Maximum resolution | 1280 × 960 |
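
The LIDAR rows of the parameter table imply a few derived quantities that help when reading the point cloud layout. The following is a minimal sketch that estimates the number of scan layers and the duration of one full rotation from those values. It assumes the layers are spaced uniformly at the stated vertical resolution, which the table does not say explicitly. The constant and function names are illustrative and do not come from the article.

```python
# Values copied from the parameter table; the names are illustrative only.
VERTICAL_FOV_MIN_DEG = -30.67
VERTICAL_FOV_MAX_DEG = 10.67
VERTICAL_RESOLUTION_DEG = 1.33
SCANNING_FREQUENCY_HZ = 10.0


def estimate_layer_count(fov_min_deg, fov_max_deg, resolution_deg):
    """Estimate the number of scan layers, assuming uniform angular spacing."""
    # Number of angular gaps between adjacent layers, rounded to the
    # nearest integer because the tabulated values are themselves rounded.
    intervals = round((fov_max_deg - fov_min_deg) / resolution_deg)
    return intervals + 1


def nominal_elevations(fov_min_deg, resolution_deg, count):
    """Nominal elevation angle of each layer, from lowest to highest."""
    return [fov_min_deg + i * resolution_deg for i in range(count)]


layer_count = estimate_layer_count(
    VERTICAL_FOV_MIN_DEG, VERTICAL_FOV_MAX_DEG, VERTICAL_RESOLUTION_DEG
)
elevations_deg = nominal_elevations(
    VERTICAL_FOV_MIN_DEG, VERTICAL_RESOLUTION_DEG, layer_count
)
# One full 360° sweep takes the reciprocal of the scanning frequency.
frame_period_s = 1.0 / SCANNING_FREQUENCY_HZ

print(f"estimated layers: {layer_count}")
print(f"frame period: {frame_period_s:.2f} s")
```

Under the uniform-spacing assumption the estimate comes to 32 layers and a 0.1 s frame period. The highest nominal elevation lands slightly below 10.67° because the tabulated resolution is rounded to two decimals.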

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, K.; Shao, J.; Guo, D. A Multi-Feature Search Window Method for Road Boundary Detection Based on LIDAR Data. Sensors 2019, 19, 1551. https://doi.org/10.3390/s19071551