A Posture Recognition Method Based on Indoor Positioning Technology

Abstract

1. Introduction

2. Posture Estimation Method

2.1. Design of Positioning System

2.2. Position Arrangement of Receiving Tags

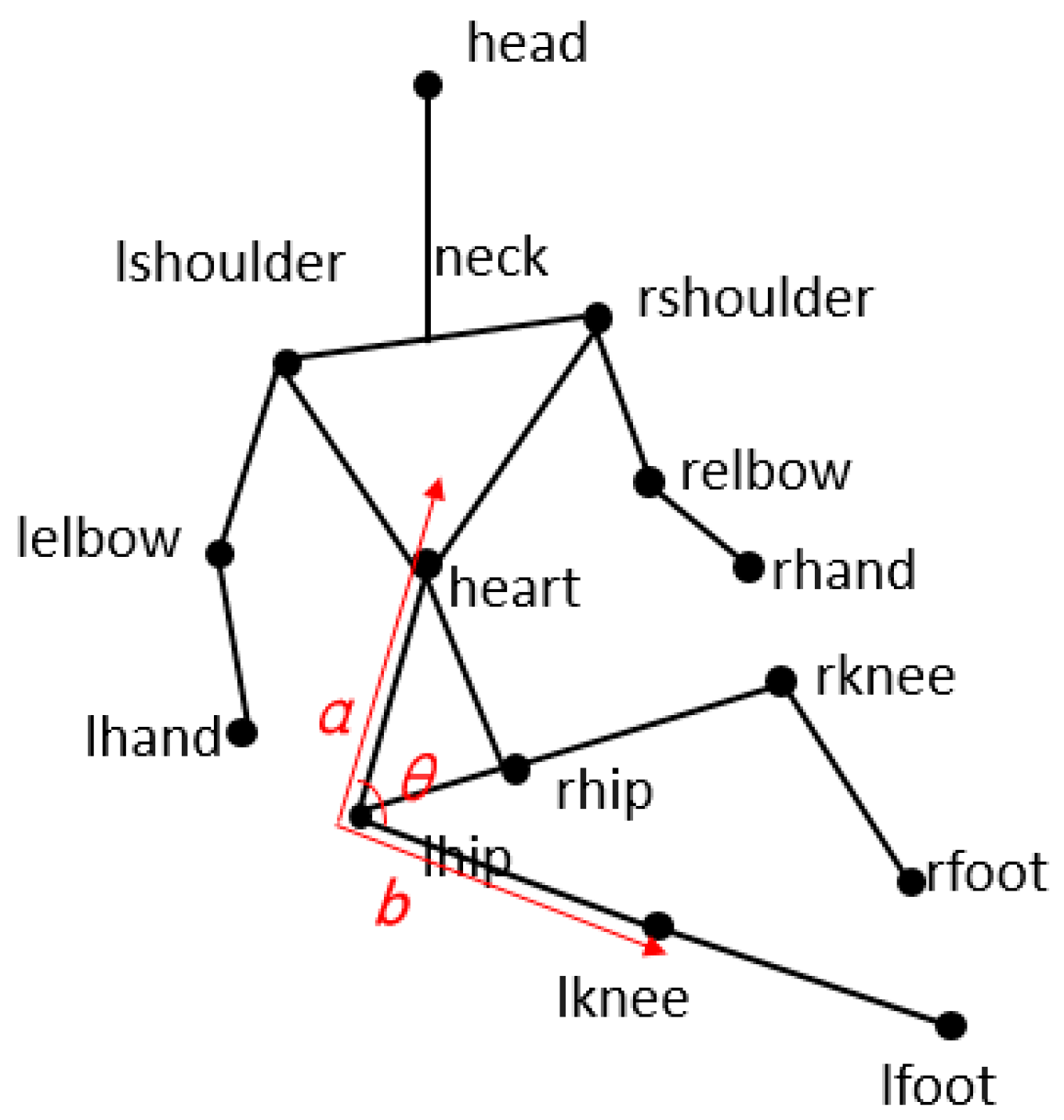

2.3. Structure Vector of Human Body

2.4. Vector Angle Setting

3. Positioning Algorithm

3.1. Positioning Principles

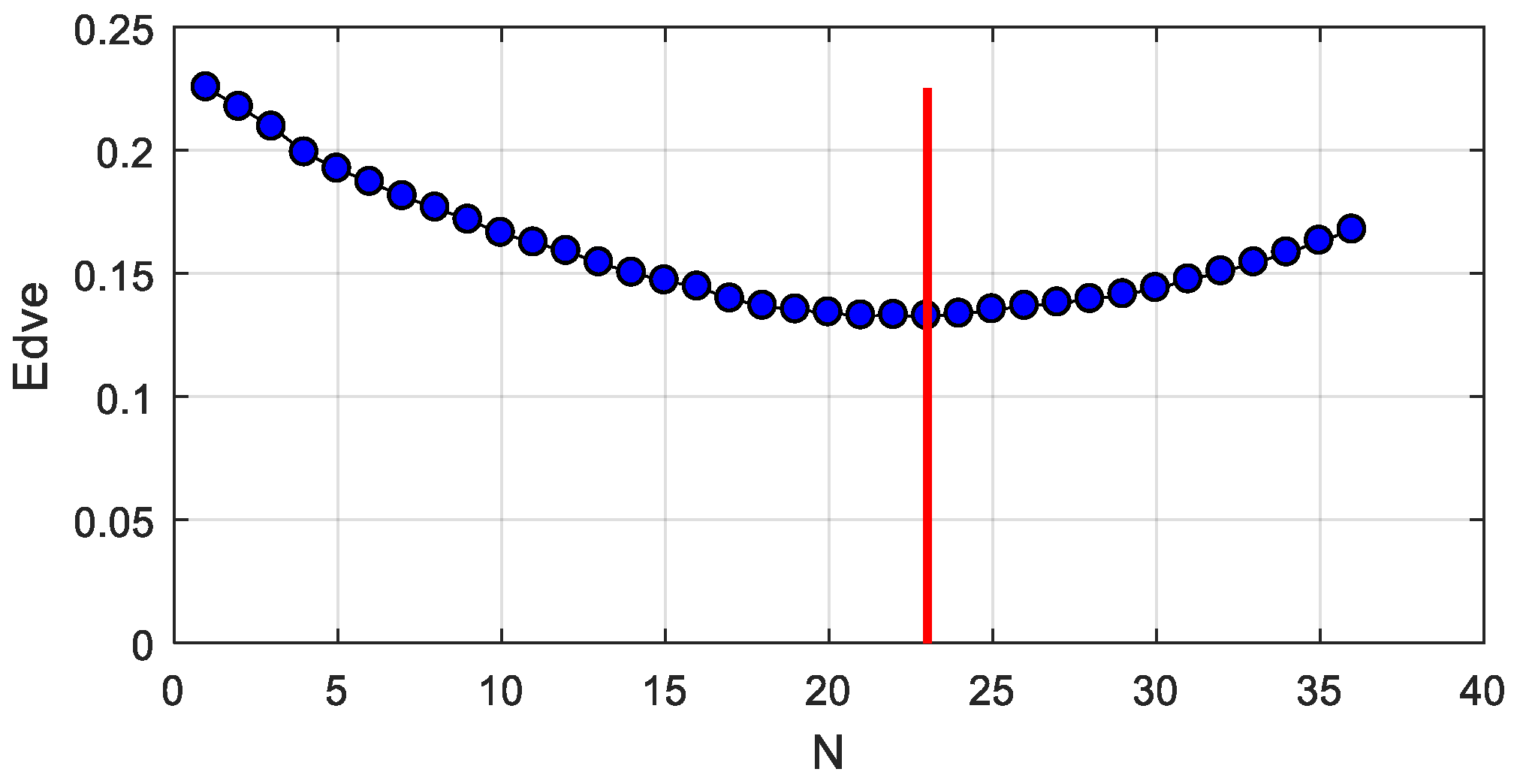
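
The results table uses least-squares estimation (LSE) as the baseline for tag positions. As an illustration only, the sketch below assumes that each receiving tag measures its distance to at least four fixed, non-coplanar anchors (a time-of-arrival style setup; the system's actual measurement principle may differ) and solves the resulting sphere equations by linear least squares. The anchor layout, the tag position, and the helper names `solve3` and `lse_position` are hypothetical.

```python
import math


def solve3(M, v):
    """Solve the 3x3 system M x = v by Gaussian elimination with partial pivoting."""
    A = [list(row) + [v[i]] for i, row in enumerate(M)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(A[r][col]))
        if abs(A[piv][col]) < 1e-12:
            raise ValueError("singular system: anchors may be coplanar")
        A[col], A[piv] = A[piv], A[col]
        for r in range(col + 1, 3):
            f = A[r][col] / A[col][col]
            for c in range(col, 4):
                A[r][c] -= f * A[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        x[r] = (A[r][3] - sum(A[r][c] * x[c] for c in range(r + 1, 3))) / A[r][r]
    return x


def lse_position(anchors, ranges):
    """Least-squares tag position from ranges to four or more non-coplanar anchors."""
    a0, d0 = anchors[0], ranges[0]
    rows, rhs = [], []
    for a, d in zip(anchors[1:], ranges[1:]):
        # Subtracting the first sphere equation |x - a0|^2 = d0^2 from
        # |x - a|^2 = d^2 cancels |x|^2 and leaves one linear equation in x.
        rows.append([2.0 * (a[k] - a0[k]) for k in range(3)])
        rhs.append(sum(c * c for c in a) - sum(c * c for c in a0) - d * d + d0 * d0)
    # Solve the overdetermined system through the normal equations (A^T A) x = A^T b.
    AtA = [[sum(row[i] * row[j] for row in rows) for j in range(3)] for i in range(3)]
    Atb = [sum(row[i] * b for row, b in zip(rows, rhs)) for i in range(3)]
    return solve3(AtA, Atb)


# Hypothetical anchor layout (metres) and noise-free ranges to a known point.
anchors = [(0, 0, 0), (5, 0, 0), (0, 5, 0), (0, 0, 3), (5, 5, 3)]
true_pos = (1.2, 2.3, 1.5)
ranges = [math.dist(a, true_pos) for a in anchors]
est = lse_position(anchors, ranges)
print([round(c, 3) for c in est])
```

Differencing against the first anchor makes every linear equation share that anchor's range error, so noisy ranges degrade this estimate; a filtering stage such as an EKF is one way to reduce that error over time.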

3.2. Improved and Extended Kalman Filtering Algorithm
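
To show the general idea of extended Kalman filtering applied to tag positioning, the sketch below refines one tag's position over time from anchor ranges. It is a standard textbook EKF, not the improved variant (iEKF) evaluated in the experiments. The random-walk motion model, the noise variances `q` and `r`, the anchor coordinates, and the function name `ekf_step` are all assumptions for illustration.

```python
import math
import random


def ekf_step(x, P, anchors, ranges, q=1e-4, r=0.05 ** 2):
    """One predict/update cycle of an EKF tracking one tag from anchor ranges.

    x: [px, py, pz] estimate in metres; P: 3x3 covariance (list of lists).
    q: process-noise variance per axis for an assumed random-walk motion model.
    r: range-noise variance, assumed equal and independent for every anchor.
    """
    # Predict: the random-walk model keeps the position and inflates P by q.
    x = list(x)
    P = [[P[i][j] + (q if i == j else 0.0) for j in range(3)] for i in range(3)]
    # Update: with independent noise, each range can be fused as a scalar
    # measurement z = |x - a| + noise, relinearizing after every update.
    for a, z in zip(anchors, ranges):
        diff = [x[k] - a[k] for k in range(3)]
        pred = math.sqrt(sum(d * d for d in diff))
        if pred < 1e-9:
            continue  # Jacobian is undefined when the estimate sits on an anchor
        H = [d / pred for d in diff]  # Jacobian of |x - a| with respect to x
        PH = [sum(P[i][k] * H[k] for k in range(3)) for i in range(3)]
        S = sum(H[i] * PH[i] for i in range(3)) + r  # innovation variance
        K = [PH[i] / S for i in range(3)]  # Kalman gain
        innovation = z - pred
        x = [x[i] + K[i] * innovation for i in range(3)]
        # P <- (I - K H) P, using the symmetry of P so that H P = (P H^T)^T.
        P = [[P[i][j] - K[i] * PH[j] for j in range(3)] for i in range(3)]
    return x, P


# Simulated stationary tag with hypothetical anchors and 5 cm range noise.
random.seed(0)
anchors = [(0, 0, 0), (5, 0, 0), (0, 5, 0), (0, 0, 3), (5, 5, 3)]
true_pos = (1.2, 2.3, 1.5)
x = [2.5, 2.5, 1.0]
P = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
for _ in range(50):
    ranges = [math.dist(a, true_pos) + random.gauss(0.0, 0.05) for a in anchors]
    x, P = ekf_step(x, P, anchors, ranges)
err = math.dist(x, true_pos)
print(f"position error after 50 steps: {err:.3f} m")
```

Fusing ranges one at a time avoids a matrix inversion and matches a batch update when the noises are independent and the model is linear; for the nonlinear range model it also relinearizes about the latest estimate, which can help when the initial guess is poor.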

4. Experiment

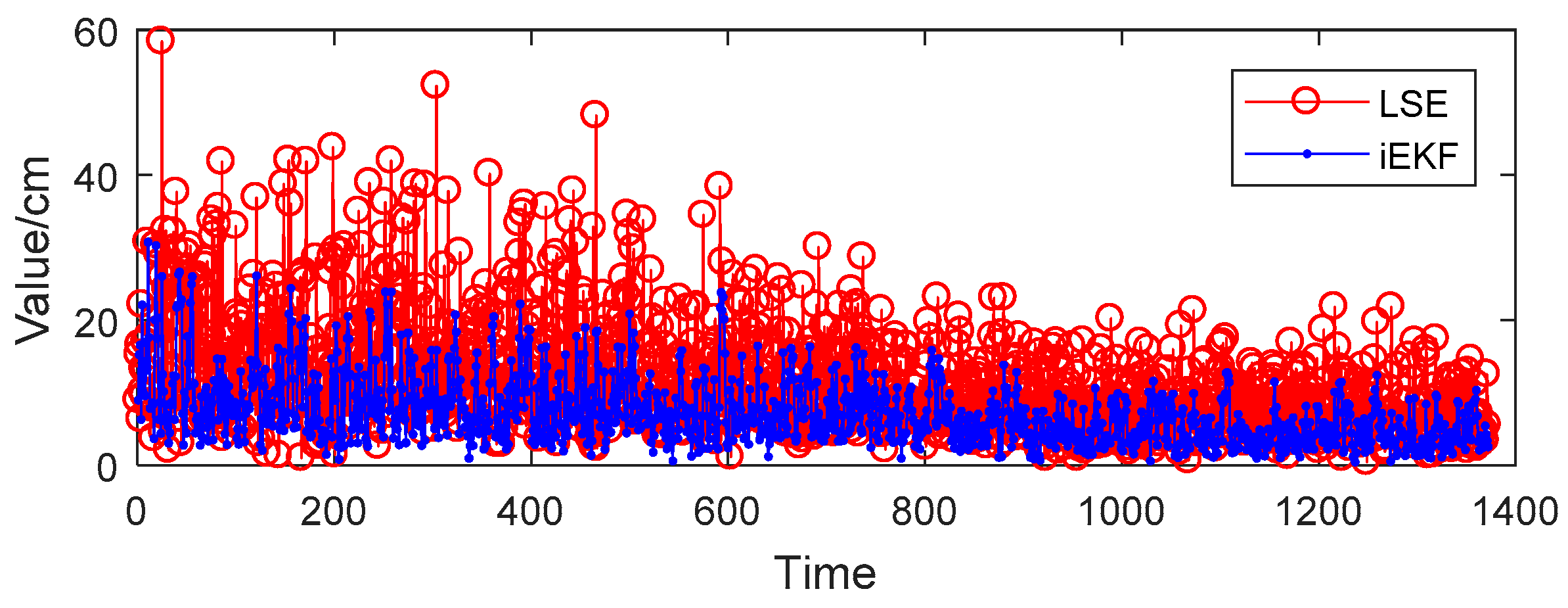

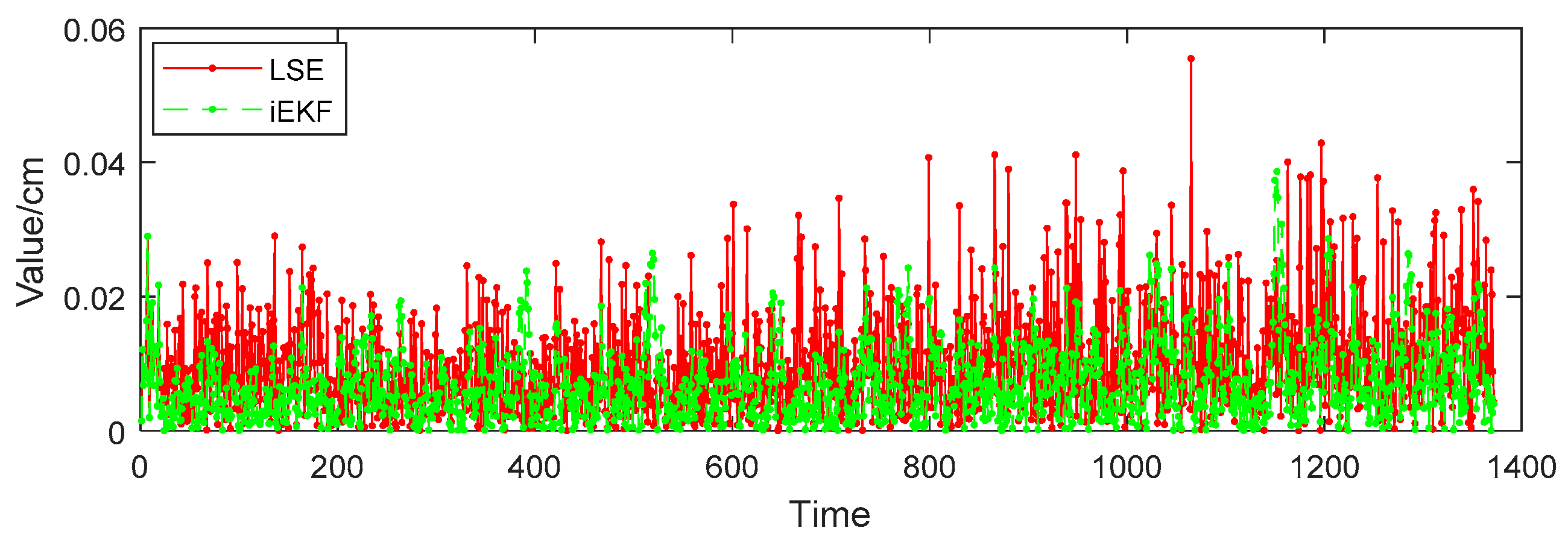

5. Results Analysis and Discussion

- (1) So far, we have performed only basic posture recognition for a single person. To improve the robustness of the algorithm, postures involving multiple people need to be tested.

- (2) The experiment is still at the algorithm-simulation stage. However, the system hardware has been completed, and in the next stage the algorithms will be ported to the hardware and tested.

- (3) The ultimate goal is to deliver this wearable device to elderly people. More postures will be tested, such as walking, sitting, sleeping, crawling, calling, and falling down. Identifying the weaknesses of the entire system and optimizing it will require repeated testing.

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Position | Tag Name | Position | Tag Name |
|---|---|---|---|
| Head | head | Central | heart |
| Left Shoulder | lshoulder | Right Shoulder | rshoulder |
| Left Elbow | lelbow | Right Elbow | relbow |
| Left Hand | lhand | Right Hand | rhand |
| Left Hip | lhip | Right Hip | rhip |
| Left Knee | lknee | Right Knee | rknee |
| Left Foot | lfoot | Right Foot | rfoot |

| Vector Name | Position | Vector Name | Position |
|---|---|---|---|
| I lelbow-to-lhand | Left Hand | I relbow-to-rhand | Right Hand |
| I lshoulder-to-lelbow | Left Arm | I rshoulder-to-relbow | Right Arm |
| I lhip-to-lknee | Left Leg | I rhip-to-rknee | Right Leg |
| I lknee-to-lfoot | Left Foot | I rknee-to-rfoot | Right Foot |
| I head-to-lshoulder | Head-Left Shoulder | I head-to-rshoulder | Head-Right Shoulder |
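
Each structure vector in the table joins two receiving tags, so once tag positions are available it can be formed by subtracting coordinates. The sketch below assumes that "X-to-Y" denotes the vector from tag X to tag Y and uses hypothetical left-arm coordinates; `structure_vector` and `all_vectors` are illustrative names, not part of the described system.

```python
import math

# Structure vectors from the vector table, as (start tag, end tag) pairs.
# Interpreting "X-to-Y" as the vector from tag X to tag Y is an assumption.
STRUCTURE_VECTORS = [
    ("lelbow", "lhand"), ("relbow", "rhand"),
    ("lshoulder", "lelbow"), ("rshoulder", "relbow"),
    ("lhip", "lknee"), ("rhip", "rknee"),
    ("lknee", "lfoot"), ("rknee", "rfoot"),
    ("head", "lshoulder"), ("head", "rshoulder"),
]


def structure_vector(tags, start, end):
    """Return the 3-D vector from tag `start` to tag `end` (end minus start)."""
    return tuple(e - s for s, e in zip(tags[start], tags[end]))


def all_vectors(tags):
    """Compute every listed structure vector whose two tags have a position fix."""
    return {
        f"{s}-to-{e}": structure_vector(tags, s, e)
        for s, e in STRUCTURE_VECTORS
        if s in tags and e in tags
    }


# Hypothetical tag positions in metres (x, y, z) for the head and left arm.
tags = {
    "head": (0.0, 0.0, 1.65),
    "lshoulder": (0.20, 0.0, 1.45),
    "lelbow": (0.25, 0.0, 1.15),
    "lhand": (0.27, 0.10, 0.90),
}
vectors = all_vectors(tags)
for name, vec in vectors.items():
    print(name, [round(c, 3) for c in vec], "length", round(math.hypot(*vec), 3))
```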

| Posture Angle | Position | Posture Angle | Position |
|---|---|---|---|
| θ head-heart-lshoulder | Head-Heart-Left Shoulder | θ head-heart-rshoulder | Head-Heart-Right Shoulder |
| θ heart-lshoulder-lelbow | Heart-Left Shoulder-Elbow | θ heart-rshoulder-relbow | Heart-Right Shoulder-Elbow |
| θ lshoulder-lelbow-lhand | Left Shoulder-Elbow-Hand | θ rshoulder-relbow-rhand | Right Shoulder-Elbow-Hand |
| θ lhip-lknee-lfoot | Left Hip-Knee-Foot | θ rhip-rknee-rfoot | Right Hip-Knee-Foot |
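
Each posture angle names three tags. A minimal sketch, assuming the middle tag is the vertex (so θ lshoulder-lelbow-lhand would be the elbow angle), computes the angle from the dot product of the two vectors leaving that vertex. The coordinates and the function name `posture_angle` are hypothetical.

```python
import math


def posture_angle(tags, a, b, c):
    """Angle in radians at tag `b` between the vectors b->a and b->c.

    Treating the middle tag of a name such as head-heart-lshoulder as the
    vertex is an assumption about the notation.
    """
    u = [pa - pb for pa, pb in zip(tags[a], tags[b])]
    v = [pc - pb for pc, pb in zip(tags[c], tags[b])]
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    if nu == 0.0 or nv == 0.0:
        raise ValueError("two tags coincide; angle is undefined")
    cos_t = sum(x * y for x, y in zip(u, v)) / (nu * nv)
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    return math.acos(max(-1.0, min(1.0, cos_t)))


# Hypothetical tag positions (metres): a straight arm and an arm bent at the elbow.
straight = {"lshoulder": (0.2, 0.0, 1.45), "lelbow": (0.2, 0.0, 1.15),
            "lhand": (0.2, 0.0, 0.85)}
bent = dict(straight, lhand=(0.2, 0.3, 1.15))
theta_straight = posture_angle(straight, "lshoulder", "lelbow", "lhand")
theta_bent = posture_angle(bent, "lshoulder", "lelbow", "lhand")
print(math.degrees(theta_straight), math.degrees(theta_bent))
```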

| Name | LSE | iEKF |
|---|---|---|
| head | 11.70 cm | 7.16 cm |
| left shoulder | 11.83 cm | 7.24 cm |
| I heart-to-lshoulder | 0.32 cm | 0.20 cm |
| I lshoulder-to-lelbow | 0.22 cm | 0.14 cm |
| θ head-heart-lshoulder | 0.0075 rad | 0.0046 rad |
| θ heart-lshoulder-lelbow | 0.0104 rad | 0.0073 rad |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Huang, X.; Wang, F.; Zhang, J.; Hu, Z.; Jin, J. A Posture Recognition Method Based on Indoor Positioning Technology. Sensors 2019, 19, 1464. https://doi.org/10.3390/s19061464
