A Survey of Vision-Based Human Action Evaluation Methods

Abstract

:1. Introduction

- (1)

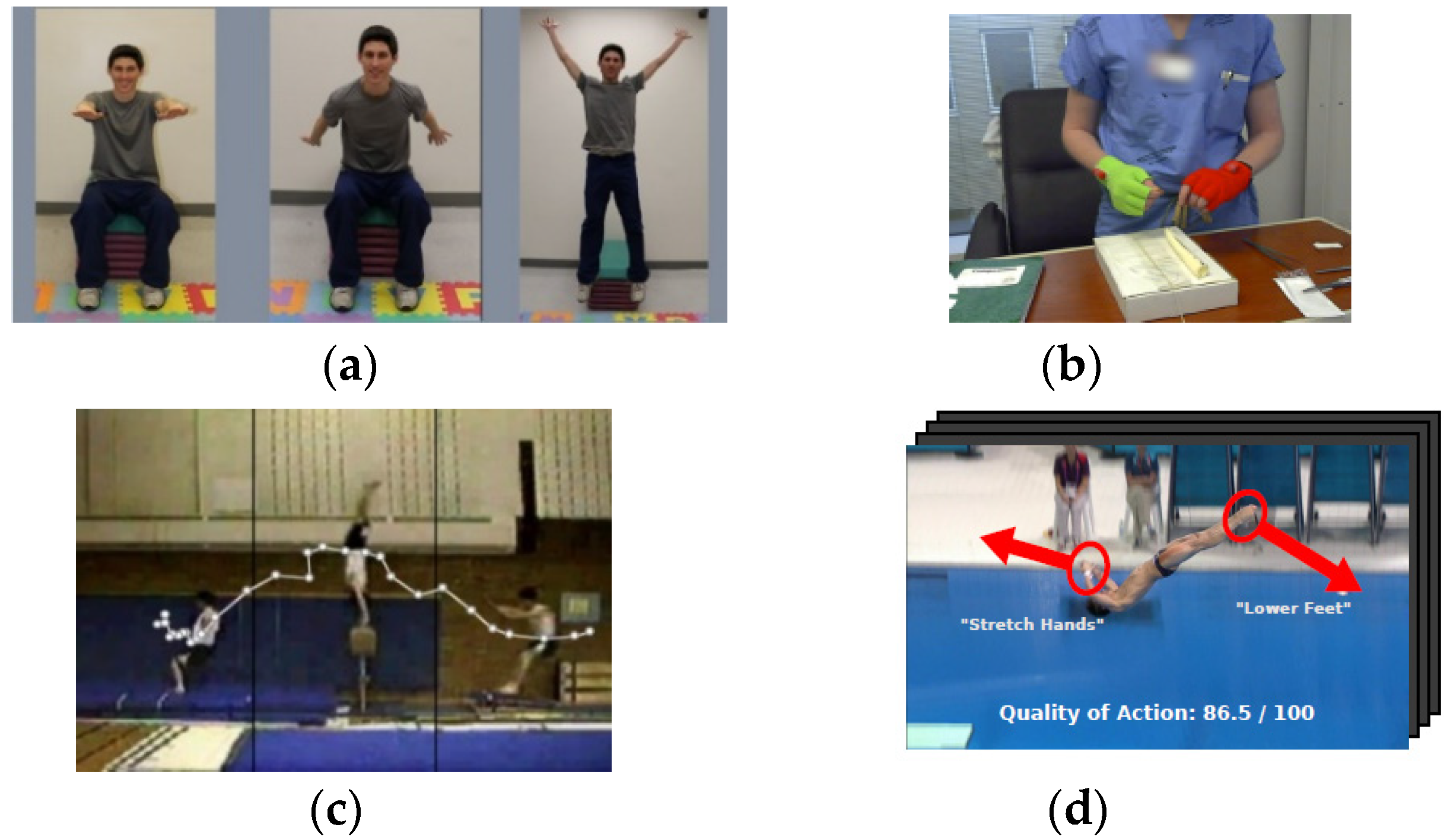

- Healthcare and rehabilitation. Physical therapy is essential for the recovery training of stroke survivors and sports injury patients. As supervision by a professional provided by a hospital or medical agency is expensive, home training with a vision-based rehabilitation system is preferred since it allows people to practice movement training privately and economically. In computer-aided therapy or rehabilitation, human action evaluation can be applied to assist patients training at home, guide them to perform actions properly, and prevent them from further injuries.

- (2)

- Skill training. Human action evaluation plays an important role in assessing the skill level of expert learners on self-learning platforms. For example, simulation-based surgical training platforms have been developed for surgical education. The technique of action quality assessment makes it possible to develop computational approaches that automatically evaluate the surgical students’ performance. Accordingly, meaningful feedback information can be provided to individuals and guide them to improve their skill levels. Another application field is the assembly line operations in industrial production. Human action evaluation can help to construct standardized action models related to different operation steps, as well as to evaluate the performance of trained workers. Automatically assessing the action quality of workers can be beneficial by improving working performance, promoting productive efficiency, and, more importantly, discovering dangerous actions before damage occurs.

- (3)

- Sports activity scoring. In recent years, the video assistant referee (VAR) system has been introduced to some international sports competition events. The VAR serves as a match official who reviews the referee staffing’s decisions on the basis of video footage. Thus, decision making can be affected by contacting the referee on the field of play. Human action evaluation makes it possible to analyze the motion quality of athletes, judge the normalization of postures or body movements, and score the action performances automatically. The VAR system facilitates detailed assessments of sports activities and ensures the fairness of competition.

- (1)

- A clear problem definition is given of human action evaluation and clarify the differences among the three tasks of human activity understanding: Action recognition, action prediction, and action evaluation. To the best of the authors’ knowledge, it is the first review of action evaluation research that involves the diversity of real-world applications, such as healthcare, physical rehabilitation, skill training, and sports activity scoring.

- (2)

- A thorough review of human action evaluation methods, including motion detection and preprocessing methods, handcrafted feature representation methods, and deep feature representation methods, are presented in this paper.

- (3)

- Some benchmark datasets are introduced and compared with the existing research works on these datasets to provide useful information for those interested in this field of research.

- (4)

- Moreover, some suggestions for future research are presented.

2. Problem Definition

- (1)

- From the perspective of the data to be processed, the temporal series of RGB video or depth sequences are a common data type in both action prediction and action evaluation. Still images are barely used for these two tasks. However, action recognition can usually be directly inferred from a still image or key frame of a video sequence. Furthermore, action prediction infers the class label with the condition that only parts of the action occurrence have been observed (the first part or middle part of the complete video). In contrast, accurate action evaluation estimation can only be achieved if complete human body movements have been observed and considered.

- (2)

- Of the different targets among the three research tasks, human action prediction focuses on discovering abnormal events by forecasting unseen human actions and distinguishing anomalous actions from normal ones. Action recognition tries to classify a given still image or video sequence into a predefined action category or to find the start frame and the end frame of an action occurrence from a video sequence. Action evaluation has been presented to make a computer automatically give a good or bad assessment for action quality and provide interpretable feedback.

- (3)

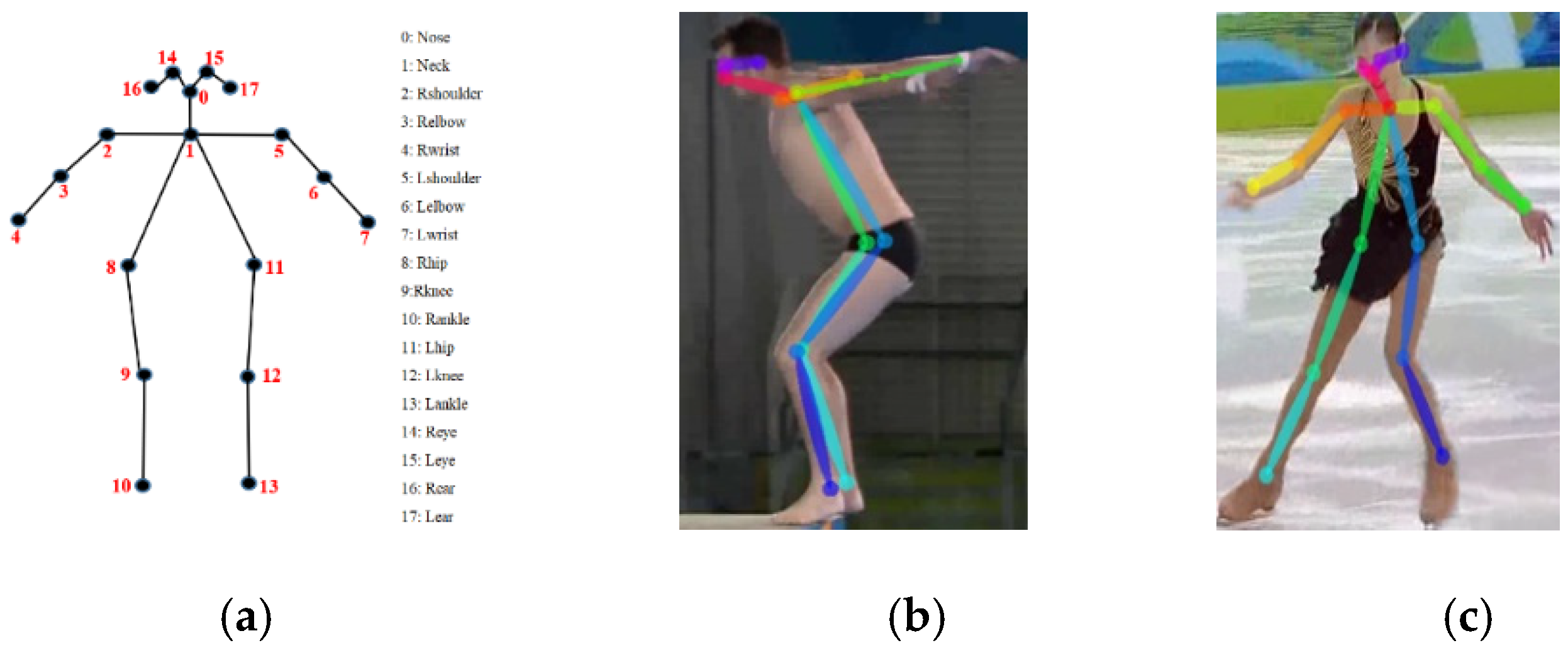

- Both human motion and environmental backgrounds provide important spatial–temporal information for action recognition and prediction. In contrast, the quality of action performance is directly determined by the dynamic changes in the human body or limbs, while it is less affected by environmental aspects. In particular, in the task of recognizing human–object interactions, such as people using telephones or playing instruments, the interacting objects provide an important clue for inferring the action category or intention. However, in a diving scoring application, the quality score of a diving performance involves the whole movement of the body and limbs of an athlete between taking off and entering the water. It matters whether the athletes’ feet are together and whether their toes are pointed straight throughout the whole diving process. The splash upon entering the water has a relatively minor impact only on the final stage assessment.

- (4)

- For facilitating action recognition research, large-scale datasets have been collected and provided to researchers in this field, such as ImageNet, UCF-101, HMDB51, and Sports-1M. Efficient action recognition methods, especially recent deep feature approaches, including 3D convolutional networks, recurrent neural networks, and graph convolutional neural networks, have been trained from these large-scale annotated datasets. On the contrary, only a few small-scale datasets oriented to some specific applications, such as physical therapy, healthcare, sports activity scoring, and skill training, have been available for the research on human action evaluation. Small-scale annotated training samples, limited action categories, a stationary camera position, and the same backgrounds are common shortcomings among the published action evaluation datasets.

3. Overview of Human Action Evaluation Methods

3.1. Detection and Preprocessing of Skeleton Data

3.1.1. Skeleton Data Detection

3.1.2. Skeleton Data Preprocessing

3.2. Handcrafted Feature Methods for Human Action Evaluation

3.2.1. Handcrafted Features for Physical Rehabilitation

3.2.2. Handcrafted Feature for Sports Activity Scoring

3.2.3. Handcrafted Feature for Skill Training

3.3. Deep Feature Methods for Human Action Evaluation

3.3.1. Deep Features for Physical Rehabilitation

3.3.2. Deep Features for Sports Activity Scoring

3.3.3. Deep Features for Skill Training

4. Benchmark Datasets and Evaluation Criteria

4.1. Datasets

4.1.1. Physical Rehabilitation Datasets

4.1.2. Sports Activity Scoring Datasets

4.1.3. Skill Training-Related Datasets

4.2. Performance Evaluation Criteria

5. Conclusions and Discussion

- (1)

- Most existing research works have directly employed traditional machine learning or state-of-the-art deep learning methods in the field of action recognition to tackle the problem of action evaluation. Thus, it is difficult to develop reliable action quality assessment algorithms without distinguishing the intrinsic difference between these two problems.

- (2)

- In the application of sports activity scoring, deep feature representation methods have been proved superior than handcrafted feature methods in their performance on benchmark datasets. Specifically, most of deep-learning methods significantly improve the scoring estimation results on MIT Olympic Scoring dataset than handcrafted approaches. The best correlation coefficient of dive scoring has been improved to 0.86, and the figure skating is 0.59 as introduced in Table 5. The reason is probably because that it is difficult to design one mechanical feature engine to extract various kinds of patterns for multiple-class action evaluation. Therefore, handcrafted feature representation is unsuitable for indicating characteristics of complex activities in long-duration video. On the other hand, most of deep learning methods have employed mechanical equal-sized division on long-duration video for the sake of reducing the parameter scale of learning networks. The important temporal information might be lost as a result of over-segmentation or false segmentation.

- (3)

- In skill training, both the handcrafted and deep learning feature methods achieved high classification accuracy on the JIGSAWS dataset as presented in Table 6. However, a three-category of (E, I, N) classification evaluation is rather simple to evaluate the different methods. More appropriate evaluation criteria, such as rank accuracy and score prediction, deserves further investigation. In physical rehabilitation, a few datasets have been publicly available due to private activities and property rights. The reviewed studies employed diverse evaluation criteria, such as an abnormal events detection rate, equal error rate, and a detection rate with a false acceptance rate, on their own datasets. It is difficult to compare them in a unified criterion. The pro and cons of different methods remains to be further observation.

- (4)

- The deep feature representation methods have significantly improved the performance on several benchmark datasets. However, their accuracy and efficiency are far from satisfactory and below the current application requirements.

- (5)

- There is still a lack of large-scale annotated datasets with a diversity of action categories and application fields. This is mainly because of the great labor cost of the domain experts’ professional annotation. There is also a lack of unified evaluation criteria to validate the effectiveness of the proposed methods.

- (1)

- It is reasonably assumed that the quality of human actions is directly determined by the dynamic change in human body movement rather than environmental factors. Thus, accurate skeleton data detection and deep feature representation methods based on skeleton data are the key issues in the development of reliable quality assessment algorithms for human action evaluation.

- (2)

- The segmentation of long-duration video on the basis of primitive action semantics and the representation of temporal relationships between action segments are important topics of future deep architecture research for human action evaluation. Most previously published deep learning methods have employed an equal-sized division of video to reduce the parameter scale of learning networks. The important temporal information might be lost as a result of over-segmentation or false segmentation.

- (3)

- The semantic granularity of the evaluation models needs to be further studied. Most existing studies have adopted a unified regression function to assess all action categories. Thus, the evaluation accuracy has significantly decreased under circumstances of unequally distributed training samples. Furthermore, an all-action regression model is not capable of assessing the quality of unseen actions. Whether a specific-action model or an all-action model is more suitable for evaluating the quality of actions and whether knowledge transfer can be adapted to train a unified evaluating model across action categories are promising directions that deserve study.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Pirsiavash, H.; Vondrick, C.; Torralba, A. Assessing the Quality of Actions. In Proceedings of the European Conference on Computer Vision 2014; Springer: Cham, Switzerland, 2014; pp. 556–571. [Google Scholar] [Green Version]

- Patrona, F.; Chatzitofis, A.; Zarpalas, D.; Daras, P. Motion analysis: Action detection, recognition and evaluation based on motion capture data. Pattern Recognit. 2018, 76, 612–622. [Google Scholar] [CrossRef]

- Venkataraman, V.; Vlachos, I.; Turaga, P. Dynamical Regularity for Action Analysis. In 26th British Machine Vision Conference; British Machine Vision Association: Swansea, Wales, 2015; pp. 67.1–67.12. [Google Scholar]

- Weeratunga, K.; Dharmaratne, A.; How, K.B. Application of Computer Vision and Vector Space Model for Tactical Movement Classification in Badminton. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 132–138. [Google Scholar]

- Morel, M.; Kulpa, R.; Sorel, A. Automatic and Generic Evaluation of Spatial and Temporal Errors in Sport Motions. In Proceedings of the International Conference on Computer Vision Theory and Applications, Rome, Italy, 27–29 February 2016; pp. 542–551. [Google Scholar]

- Paiement, A.; Tao, L.; Hannuna, S. Online quality assessment of human movement from skeleton data. In Proceedings of the British Machine Vision Conference (BMVC 2014), Nottingham, UK, 1–5 September 2014; pp. 153–166. [Google Scholar]

- Antunes, M.; Baptista, R.; Demisse, G.; Aouada, D.; Ottersten, B. Visual and Human-Interpretable Feedback for Assisting Physical Activity. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 115–129. [Google Scholar]

- Baptista, R.; Antunes, M.; Aouada, D. Video-Based Feedback for Assisting Physical Activity. In Proceedings of the International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISAPP), Rome, Italy, 27 February–1 March 2017. [Google Scholar]

- Tao, L.; Paiement, A.; Damen, D. A comparative study of pose representation and dynamics modelling for online motion quality assessment. Comput. Vis. Image Underst. 2016, 148, 136–152. [Google Scholar] [CrossRef] [Green Version]

- Meng, M.; Drira, H.; Boonaert, J. Distances evolution analysis for online and off-line human object interaction recognition. Image Vis. Comput. 2018, 70, 32–45. [Google Scholar] [CrossRef] [Green Version]

- Zhang, W.; Liu, Z.; Zhou, L.; Leung, H.; Chan, A.B. Martial arts, dancing and sports dataset: A challenging stereo and multi-view dataset for 3d human pose estimation. Image Vis. Comput. 2017, 61, 22–39. [Google Scholar] [CrossRef]

- Laraba, S.; Tilmanne, J. Dance performance evaluation using hidden markov models. Comput. Animat. Virtual Worlds 2016, 27, 321–329. [Google Scholar] [CrossRef]

- Barnachon, M.; Boufama, B.; Guillou, E. A real-time system for motion retrieval and interpretation. Pattern Recognit. Lett. 2013, 34, 1789–1798. [Google Scholar] [CrossRef]

- Hu, M.C.; Chen, C.W.; Cheng, W.H.; Chang, C.H.; Lai, J.H.; Wu, J.L. Real-time human movement retrieval and assessment with kinect sensor. IEEE Trans. Cybern. 2014, 45, 742–753. [Google Scholar] [CrossRef]

- Liu, X.; He, G.F.; Peng, S.J.; Cheung, Y.M.; Tang, Y.Y. Efficient human motion retrieval via temporal adjacent bag of words and discriminative neighborhood preserving dictionary learning. IEEE Trans. Hum. Mach. Syst. 2017, 47, 763–776. [Google Scholar] [CrossRef]

- Girdhar, R.; Ramanan, D.; Gupta, A.; Sivic, J.; Russell, B. Actionvlad: Learning spatio-temporal aggregation for action classification. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 971–980. [Google Scholar]

- Wang, H.; Oneata, D.; Verbeek, J.; Schmid, C. A robust and efficient video representation for action recognition. Int. J. Comput. Vis. 2016, 119, 219–238. [Google Scholar] [CrossRef]

- Duarte, K.; Rawat, Y.S.; Shah, M. Videocapsulenet: A simplified network for action detection. Proceedings of Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 7610–7619. [Google Scholar]

- Zolfaghari, M.; Singh, K.; Brox, T. Eco: Efficient convolutional network for online video understanding. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 695–712. [Google Scholar]

- Vondrick, C.; Pirsiavash, H.; Torralba, A. Anticipating visual representations from unlabeled video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Vegas Valley, NV, USA, 26 June–1 July 2016; pp. 98–106. [Google Scholar]

- Becattini, F.; Uricchio, T.; Seidenari, L.; Bimbo, A.D.; Ballan, L. Am I done? Predicting action progress in videos. arXiv 2017, arXiv:1705.01781. [Google Scholar]

- Parmar, P.; Morris, B. Measuring the quality of exercises. In Proceedings of the 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBS), Orlando, FL, USA, 16–20 August 2016; pp. 2241–2244. [Google Scholar]

- Zia, A.; Sharma, Y.; Bettadapura, V.; Sarin, E.L.; Clements, M.A.; Essa, I. Automated assessment of surgical skills using frequency analysis. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 430–438. [Google Scholar]

- Gordon, A.S. Automated video assessment of human performance. In Proceedings of the 7th World Conference on Artificial Intelligence in Education (AI-ED 1995), Washington, DC, USA, 16–19 August 1995; pp. 16–19. [Google Scholar]

- Atiqur Rahman Ahad, M.; Das Antar, A.; Shahid, O. Vision-based Action Understanding for Assistive Healthcare: A Short Review. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops 2019, Long Beach, CA, USA, 15–21 June 2019; pp. 1–11. [Google Scholar]

- Aggarwal, J.K.; Ryoo, M.S. Human activity analysis: A review. ACM Comput. Surv. (CSUR) 2011, 43, 16. [Google Scholar] [CrossRef]

- Yu, K.; Yun, F. Human Action Recognition and Prediction: A Survey. arXiv 2018, arXiv:1806.11230. [Google Scholar]

- Herath, S.; Harandi, M.; Porikli, F. Going deeper into action recognition: A survey. Image Vis. Comput. 2017, 60, 4–21. [Google Scholar] [CrossRef] [Green Version]

- Ziaeefard, M.; Bergevin, R. Semantic human activity recognition: A literature review. Pattern Recognit. 2015, 48, 2329–2345. [Google Scholar] [CrossRef]

- Zhang, H.B.; Zhang, Y.X.; Zhong, B.; Lei, Q.; Yang, L.; Du, J.X.; Chen, D.S. A Comprehensive Survey of Vision-Based Human Action Recognition Methods. Sensors 2019, 19, 1005. [Google Scholar] [CrossRef] [PubMed]

- POPPE, R. A survey on vision-based human action recognition. Image Vis. Comput. 2010, 28, 976–990. [Google Scholar] [CrossRef]

- Zhu, F.; Shao, L.; Xie, J.; Fang, Y. From handcrafted to learned representations for human action recognition: A survey. Image Vis. Comput. 2016, 55, 42–52. [Google Scholar] [CrossRef]

- Guo, G.; Lai, A. A survey on still image based human action recognition. Pattern Recognit. 2014, 47, 3343–3361. [Google Scholar] [CrossRef]

- Alexander, K.; Marszalek, M.; Schmid, C. A Spatio-Temporal Descriptor Based on 3D-Gradients. In Proceedings of the British Machine Vision Conference 2008, Leeds, UK, 1–4 September 2008. [Google Scholar]

- Liu, J.; Kuipers, B.; Sararese, S. Recognizing human actions by attributes. In Proceedings of the 24th IEEE Conference on Computer Vision and Pattern Recognition 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 3337–3344. [Google Scholar]

- Neibles, J.C.; Chen, C.W.; Li, F.F. Modeling temporal structure of decomposable motion segments for activity classification. In Proceedings of the European Conference on Computer Vision 2010, Heraklion, Greece, 5–11 September 2010; pp. 392–405. [Google Scholar]

- Shu, Z.; Yun, K.; Samaras, D. Action Detection with Improved Dense Trajectories and Sliding Window. In Proceedings of ECCV 2014; Springer: Cham, Switzerland; pp. 541–551.

- Oneata, D.; Verbeek, J.J.; Schmid, C. Efficient Action Localization with Approximately Normalized Fisher Vectors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2014, Columbus, OH, USA, 23–28 June 2014; pp. 2545–2552. [Google Scholar]

- Shou, Z.; Wang, D.; Chang, S.F. Temporal action localization in untrimmed videos via multi-stage cnns. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, Vegas Valley, NV, USA, 26 June–1 July 2016; pp. 1049–1058. [Google Scholar]

- Yu, G.; Yuan, J. Fast action proposals for human action detection and search. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2015, Boston, MA, USA, 7–12 June 2015; pp. 1302–1311. [Google Scholar]

- Zhao, Y.; Xiong, Y.; Wang, L.; Wu, Z.; Tang, X.; Lin, D. Temporal action detection with structured segment networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) 2017, Venice, Italy, 22–29 October 2017; pp. 2914–2923. [Google Scholar]

- Kong, Y.; Fu, Y. Max-margin heterogeneous information machine for RGB-D action recognition. Int. J. Comput. Vis. 2017, 123, 350–371. [Google Scholar] [CrossRef]

- Hu, J.F.; Zheng, W.S.; Ma, L.; Wang, G.; Lai, J.H.; Zhang, J. Early action prediction by soft regression. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 1. [Google Scholar] [CrossRef]

- Martinez, J.; Black, M.J.; Romero, J. On human motion prediction using recurrent neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 2891–2900. [Google Scholar]

- Alahi, A.; Goel, K.; Ramanathan, V.; Robicquet, A.; Savarese, S. Social lstm: Human trajectory prediction in crowded spaces. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, Vegas Valley, NV, USA, 26 June–1 July 2016; pp. 961–971. [Google Scholar]

- Xu, H.; Gao, Y.; Yu, F.; Darrell, T. End-to-end learning of driving models from large-scale video datasets. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 2174–2182. [Google Scholar]

- Kuefler, A.; Morton, J.; Wheeler, T.; Kochenderfer, M. Imitating driver behavior with generative adversarial networks. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV 2017), Los Angeles, CA, USA, 11–14 June 2017; pp. 204–211. [Google Scholar]

- Alexiadis, D.S.; Kelly, P.; Daras, P.; OConnor, N.E.; Boubekeur, T.; Moussa, M.B. Evaluating a dancer’s performance using kinect-based skeleton tracking. In Proceedings of the 19th ACM international conference on Multimedia ACM 2011, Scottsdale, AZ, USA, 28 November–1 December 2011; pp. 659–662. [Google Scholar]

- Jug, M.; Pers, J.; Dezman, B.; Kovacic, S. Trajectory based assessment of coordinated human activity. In International Conference on Computer Vision Systems 2003; Springer: Berlin/Heidelberg, Germany, 2003; pp. 534–543. [Google Scholar]

- Reiley, C.E.; Lin, H.C.; Yuh, D.D.; Hager, G.D. Review of methods for objective surgical skill evaluation. Surg. Endosc. 2011, 25, 356–366. [Google Scholar] [CrossRef] [PubMed]

- Ilg, W.; Mezger, J.; Giese, M. Estimation of skill levels in sports based on hierarchical spatio-temporal correspondences. In Joint Pattern Recognition Symposium 2003, Magdeburg, Germany, 10–12 September 2003; Springer: Berlin/Heidelberg, Germany, 2003; pp. 523–531. [Google Scholar]

- Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 221–231. [Google Scholar] [CrossRef] [PubMed]

- Tran, D.; Bourdev, L.D.; Fergus, R.; Torresani, L.; Paluri, M. C3D: Generic features for video analysis. arXiv 2014, arXiv:1412.0767. [Google Scholar]

- Shi, X.J.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. In Proceedings of the Neural Information Processing Systems, Motreal, QC, Canada, 7–12 December 2015; pp. 802–810. [Google Scholar]

- Ng, J.Y.H.; Hausknecht, M.; Vijayanarasimhan, S.; Vinyals, O.; Monga, R.; Toderici, G. Beyond short snippets: Deep networks for video classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 4694–4702. [Google Scholar]

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555. [Google Scholar]

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D. Cascade object detection with deformable part models. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2010), San Francisco, CA, USA, 13–18 June 2010; pp. 2241–2248. [Google Scholar]

- Yang, Y.; Ramanan, D. Articulated Pose Estimation with Flexible Mixtures of Parts. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2011), Colorado Springs, CO, USA, 20–25 June 2011; pp. 1385–1392. [Google Scholar]

- Toshev, A.; Szegedy, C. Deeppose: Human pose estimation via deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1653–1660. [Google Scholar]

- Cao, Z.; Simon, T.; Wei, S.E.; Sheikh, Y. Realtime multi-person 2D pose estimation using part affinity fields. In Proceedings of the 30th IEEE Conference Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1302–1310. [Google Scholar]

- Fang, H.S.; Xie, S.; Tai, Y.W.; Lu, C. Rmpe: Regional multi-person pose estimation. In Proceedings of the IEEE International Conference on Computer Vision 2017, Venice, Italy, 22–29 October 2017; pp. 2334–2343. [Google Scholar]

- Guler, R.A.; Neverova, N.; Kokkinos, I. Densepose: Dense human pose estimation in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7297–7306. [Google Scholar]

- Han, F.; Reily, B.; Hoff, W.; Zhang, H. Space-time representation of people based on 3d skeletal data: A review. Comput. Vis. Image Underst. 2017, 158, 85–105. [Google Scholar] [CrossRef]

- Pazhoumand-Dar, H.; Lam, C.P.; Masek, M. Joint movement similarities for robust 3d action recognition using skeletal data. J. Vis. Commun. Image Represent. 2015, 30, 10–21. [Google Scholar] [CrossRef]

- Ofli, F.; Chaudhry, R.; Kurillo, G.; Vidal, R.; Bajcsy, R. Sequence of the Most Informative Joints (SMIJ): A new representation for human skeletal action recognition. J. Vis. Commun. Image Represent. 2014, 25, 24–38. [Google Scholar] [CrossRef]

- Wang, P.; Li, W.; Li, C.; Hou, Y. Action recognition based on joint trajectory maps with convolutional neural networks. Knowl. Based Syst. 2018, 158, 43–53. [Google Scholar] [CrossRef] [Green Version]

- Amor, B.B.; Su, J.; Srivastava, A. Action recognition using rate-invariant analysis of skeletal shape trajectories. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 1–13. [Google Scholar] [CrossRef]

- Dollar, P.; Rabaud, V.; Cottrell, G. Behaviour recognition via sparse spatio-temporal features. In Proceedings of the 2005 IEEE International Workshop on Visual Surveillance and Performance Evaluation of Tracking and Surveillance, Beijing, China, 15–16 October 2005; pp. 65–72. [Google Scholar]

- Laptev, I.; Lindeberg, T. On Space-time interest points. In Proceedings of the International Conference on Computer Vision 2003, Nice, France, 14–17 October 2003; pp. 432–439. [Google Scholar]

- Laptev, I.; Marszalek, M.; Schmid, C.; Rozenfeld, B. Learning realistic human actions from movies. In Proceedings of the Conference on Computer Vision and Pattern Recognition 2008, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8. [Google Scholar]

- Scovanner, P.; Ali, S.; Shah, M. A 3-dimensional SIFT descriptor and its application to action recognition. In Proceedings of the International Conference on Multimedia 2007, Augsburg, Germany, 24–29 September 2007; pp. 357–360. [Google Scholar]

- Wang, H.; Schmid, C. Action Recognition with Improved Trajectories. In Proceedings of the IEEE International Conference on Computer Vision 2013, Sydney, Australia, 1–8 December 2013; pp. 3551–3558. [Google Scholar]

- Csurka, G.; Dance, C.; Fan, L. Visual Categorization with Bags of Keypoints. In Proceedings of the Workshop on Statistical Learning in Computer Vision (ECCV), Prague, Czech Republic, 11–14 May 2004; pp. 1–22. [Google Scholar]

- Vicente, I.S.; Kyrki, V.; Kragic, D.; Larsson, M. Action recognition and understanding through motor primitives. Adv. Robot. 2007, 21, 1687–1707. [Google Scholar] [CrossRef] [Green Version]

- Chen, Y.; Duff, M.; Lehrer, N.; Sundaram, H.; He, J.; Wolf, S.L.; Rikakis, T. A computational framework for quantitative evaluation of movement during rehabilitation. AIP Conf. Proc. 2011, 1371, 317–326. [Google Scholar]

- Venkataraman, V.; Turaga, P.; Lehrer, N.; Baran, M.; Rikakis, T.; Wolf, S. Attractor-shape for dynamical analysis of human movement: Applications in stroke rehabilitation and action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops 2013, Portland, OR, USA, 23–28 June 2013; pp. 514–520. [Google Scholar]

- Çeliktutan, O.; Akgul, C.B.; Wolf, C.; Sankur, B. Graph-based analysis of physical exercise actions. In Proceedings of the 1st ACM international workshop on Multimedia indexing and information retrieval for healthcare 2013, Barcelona, Spain, 22 October 2013; pp. 23–32. [Google Scholar]

- Elkholy, A.; Hussein, M.; Gomaa, W.; Damen, D.; Saba, E. Efficient and Robust Skeleton-Based Quality Assessment and Abnormality Detection in Human Action Performance. IEEE J. Biomed. Health Inform. 2019. [Google Scholar] [CrossRef] [PubMed]

- Wnuk, K.; Soatto, S. Analyzing diving: A dataset for judging action quality. In Asian Conference on Computer Vision, Queenstown, New Zealand, 8–12 November 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 266–276. [Google Scholar]

- Sharma, Y.; Bettadapura, V.; Plotz, T.; Hammerla, N.; Mellor, S.; McNaney, R.; Olivier, P.; Deshmukh, S.; McCaskie, A.; Essa, I. Video based assessment of OSATs using sequential motion textures. In Proceedings of the International Workshop on Modeling and Monitoring of Computer Assisted Interventions (M2CAI)- Workshop, Boston, MA, USA, 14–18 September 2014. [Google Scholar]

- Zia, A.; Sharma, Y.; Bettadapura, V.; Sarin, E.L.; Ploetz, T.; Clements, M.A.; Essa, I. Automated video-based assessment of surgical skills for training and evaluation in medical schools. Int. J. Comput. Assist. Radiol. Surg. 2016, 11, 1623–1636. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Zia, A. Automated Benchmarking of Surgical Skills Using Machine Learning. Ph.D. Thesis, Georgia Institute of Technology, Atlanta, GA, USA, 2018. [Google Scholar]

- Fard, M.J.; Ameri, S.; Darin Ellis, R.; Chinnam, R.B.; Pandya, A.K.; Klein, M.D. Automated robot-assisted surgical skill evaluation: Predictive analytics approach. Int. J. Med. Robot. Comput. Assist. Surg. 2018, 14, e1850. [Google Scholar] [CrossRef] [PubMed]

- Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3d convolutional networks. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Washington, DC, USA, 7–13 December 2015; pp. 4489–4497. [Google Scholar]

- Sun, L.; Jia, K.; Yeung, D.Y.; Shi, B.E. Human action recognition using factorized spatio-temporal convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision 2015, Santiago, Chile, 7–13 December 2015; pp. 4597–4605. [Google Scholar]

- Donahue, J.; Anne Hendricks, L.; Guadarrama, S.; Rohrbach, M.; Venugopalan, S.; Saenko, K.; Darrell, T. Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2015, Boston, MA, USA, 7–12 June 2015; pp. 2625–2634. [Google Scholar]

- Li, Z.; Gavrilyuk, K.; Gavves, E.; Jain, M.; Snoek, C.G. Videolstm convolves, attends and flows for action recognition. Comput. Vis. Image Underst. 2018, 166, 41–50. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. In Proceedings of the Neural Information Processing Systems, Motreal, QC, Canada, 8–13 December 2014; pp. 568–576. [Google Scholar]

- Feichtenhofer, C.; Pinz, A.; Zisserman, A. Convolutional two-stream network fusion for video action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, Vegas Valley, NV, USA, 26 June–1 July 2016; pp. 1933–1941. [Google Scholar]

- Vakanski, A.; Jun, H.P.; Paul, D.; Baker, R. A data set of human body movements for physical rehabilitation exercises. Data 2018, 3, 2. [Google Scholar] [CrossRef]

- Liao, Y.; Vakanski, A.; Xian, M. A Deep Learning Framework for Assessing Physical Rehabilitation Exercises. arXiv 2019, arXiv:1901.10435. [Google Scholar]

- Antunes, J.; Bernardino, A.; Smailagic, A.; Siewiorek, D.P. AHA-3D: A Labelled Dataset for Senior Fitness Exercise Recognition and Segmentation from 3D Skeletal Data. In Proceedings of the BMVC 2018, Newcastle, UK, 3–6 September 2018; p. 332. [Google Scholar]

- Blanchard, N.; Skinner, K.; Kemp, A.; Scheirer, W.; Flynn, P. “Keep Me in Coach!”: A Computer Vision Perspective on Assessing ACL Injury Risk in Female Athletes. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa Village, HI, USA, 7–11 January 2019; pp. 1366–1374. [Google Scholar]

- Parmar, P.; Morris, B.T. Learning to score olympic events. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 20–28. [Google Scholar]

- Parmar, P.; Morris, B.T. Action Quality Assessment Across Multiple Actions. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa Village, HI, USA, 7–11 January 2019; pp. 1468–1476. [Google Scholar]

- Parmar, P.; Morris, B.T. What and How Well You Performed? A Multitask Learning Approach to Action Quality Assessment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2019, Long Beach, CA, USA, 15–21 June 2019; pp. 304–313. [Google Scholar]

- Xu, C.; Fu, Y.; Zhang, B.; Chen, Z.; Jiang, Y.G.; Xue, X. Learning to Score Figure Skating Sport Videos. IEEE Trans. Circuits Syst. Video Technol. 2019. [Google Scholar] [CrossRef]

- Xiang, X.; Tian, Y.; Reiter, A.; Hager, G.D.; Tran, T.D. S3d: Stacking segmental p3d for action quality assessment. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 928–932. [Google Scholar]

- Lea, C.; Flynn, M.D.; Vidal, R.; Reiter, A.; Hager, G.D. Temporal convolutional networks for action segmentation and detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 156–165. [Google Scholar]

- Qiu, Z.; Yao, T.; Mei, T. Learning spatiotemporal representation with pseudo-3d residual networks. In Proceedings of the IEEE International Conference on Computer Vision 2017, Venice, Italy, 22–29 October 2017; pp. 5533–5541. [Google Scholar]

- Li, Y.; Chai, X.; Chen, X. End-to-end learning for action quality assessment. In Proceedings of the Pacific Rim Conference on Multimedia 2018, Hefei, China, 21–22 September 2018; pp. 125–134. [Google Scholar]

- Li, Y.; Chai, X.; Chen, X. ScoringNet: Learning Key Fragment for Action Quality Assessment with Ranking Loss in Skilled Sports. In Proceedings of the Asian Conference on Computer Vision 2018, Perth, Australia, 2–6 December 2018; pp. 149–164. [Google Scholar]

- McNally, W.; Vats, K.; Pinto, T.; Dulhanty, C.; McPhee, J.; Wong, A. GolfDB: A Video Database for Golf Swing Sequencing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops 2019, Long Beach, CA, USA, 15–21 June 2019. [Google Scholar]

- Yadav, S.K.; Singh, A.; Gupta, A.; Raheja, J.L. Real-time Yoga recognition using deep learning. Neural Comput. Appl. 2019, 1–13. [Google Scholar] [CrossRef]

- Wang, Z.; Fey, A.M. Deep learning with convolutional neural network for objective skill evaluation in robot-assisted surgery. Int. J. Comput. Assist. Radiol. Surg. 2018, 13, 1959–1970. [Google Scholar] [CrossRef] [Green Version]

- Fawaz, H.I.; Forestier, G.; Weber, J.; Idoumghar, L.; Muller, P.A. Evaluating surgical skills from kinematic data using convolutional neural networks. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention 2018, Granada, Spain, 16–20 September 2018; pp. 214–221. [Google Scholar]

- Funke, I.; Mees, S.T.; Weitz, J.; Speidel, S. Video-based surgical skill assessment using 3D convolutional neural networks. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1217–1225. [Google Scholar] [CrossRef] [Green Version]

- Doughty, H.; Damen, D.; Mayol-Cuevas, W. Who’s Better? Who’s Best? Pairwise Deep Ranking for Skill Determination. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6057–6066. [Google Scholar]

- Doughty, H.; Mayol-Cuevas, W.; Damen, D. The Pros and Cons: Rank-aware temporal attention for skill determination in long videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019. [Google Scholar]

- Li, Z.; Huang, Y.; Cai, M.; Sato, Y. Manipulation-skill assessment from videos with spatial attention network. arXiv 2019, arXiv:1901.02579. [Google Scholar]

- SPHERE-Staircase 2014 Dataset. Available online: https://data.bris.ac.uk/data/dataset/bgresiy3olk41nilo7k6xpkqf (accessed on 23 July 2019).

- SPHERE-Walking 2015 Dataset. Available online: http://cs.swansea.ac.uk/~csadeline/datasets/SPHERE-Walking2015_skeletons_only.zip (accessed on 23 July 2019).

- SPHERE-SitStand 2015 Dataset. Available online: http://cs.swansea.ac.uk/~csadeline/datasets/SPHERE-SitStand2015_skeletons_only.zip (accessed on 23 July 2019).

- UI-PRMD Dataset. Available online: https://webpages.uidaho.edu/ui-prmd/ (accessed on 23 July 2019).

- AHA-3D Dataset. Available online: http://vislab.isr.ist.utl.pt/datasets/ (accessed on 23 July 2019).

- Tao, L.; Elhamifar, E.; Khudanpur, S.; Hager, G.D.; Vidal, R. Sparse hidden markov models for surgical gesture classification and skill evaluation. In Proceedings of the International Conference on Information Processing in Computer-Assisted Interventions 2012, Pisa, Italy, 27 June 2012; pp. 167–177. [Google Scholar]

- Forestier, G.; Petitjean, F.; Senin, P.; Despinoy, F.; Jannin, P. Discovering discriminative and interpretable patterns for surgical motion analysis. In Conference on Artificial Intelligence in Medicine in Europe 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 136–145. [Google Scholar]

- Zia, A.; Essa, I. Automated surgical skill assessment in RMIS training. Int. J. Comput. Assist. Radiol. Surg. 2018, 13, 731–739. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- GolfDB Database. Available online: https://github.com/wmcnally/GolfDB (accessed on 23 July 2019).

- Yoga Dataset. Available online: https://archive.org/details/YogaVidCollected (accessed on 23 July 2019).

- JIGSAWS Dataset. Available online: https://cirl.lcsr.jhu.edu/research/hmm/datasets/ (accessed on 23 July 2019).

- Gao, Y.; Vedula, S.S.; Reiley, C.E.; Ahmidi, N.; Varadarajan, B.; Lin, H.C.; Tao, L.; Zappella, L.; Bejar, B.; Yuh, D.D.; et al. The JHU-ISI Gesture and Skill Assessment Working Set (JIGSAWS): A Surgical Activity Dataset for Human Motion Modeling. In Proceedings of the Modeling and Monitoring of Computer Assisted Interventions (M2CAI)—MICCAI Workshop, Boston, MA, USA, 14–18 September 2014; p. 3. [Google Scholar]

- Ahmidi, N.; Tao, L.; Sefati, S.; Gao, Y.; Lea, C.; Haro, B.B.; Zappella, L.; Khudanpur, S.; Vidal, R.; Hager, G.D. A Dataset and Benchmarks for Segmentation and Recognition of Gestures in Robotic Surgery. IEEE Trans. Biomed. Eng. 2017, 64, 2025–2041. [Google Scholar] [CrossRef] [PubMed]

- EPIC-Skills 2018 Dataset. Available online: http://people.cs.bris.ac.uk/~damen/Skill/ (accessed on 23 July 2019).

- BEST 2019 Dataset. Available online: https://github.com/hazeld/rank-awareattention-network (accessed on 23 July 2019).

- The Breakfast Actions Dataset. Available online: http://serre-lab.clps.brown.edu/resource/breakfast-actions-dataset/ (accessed on 23 July 2019).

- ADL Dataset. Available online: https://www.csee.umbc.edu/~hpirsiav/papers/ADLdataset/ (accessed on 23 July 2019).

- Rohrbach, M.; Amin, S.; Andriluka, M.L.; Schiele, B. A Database for Fine Grained Activity Detection of Cooking Activities. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2012, Providence, RI, USA, 16–21 June 2012; pp. 1194–1201. [Google Scholar]

- Sigurdsson, G.A.; Varol, G.; Wang, X.; Farhadi, A.; Laptev, I.; Gupta, A. Hollywood in homes: Crowdsourcing data collection for activity understanding. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 510–526. [Google Scholar]

- Damen, D.; Doughty, H.; Maria Farinella, G. Scaling egocentric vision: The epic-kitchens dataset. In Proceedings of the European Conference on Computer Vision (ECCV 2018), Munich, Germany, 8–14 September 2018; pp. 720–736. [Google Scholar]

| Applications Methods | Physical Therapy | Sports Activity Scoring | Skill Training |

|---|---|---|---|

| Skeleton or kinematic data-based methods | [6,7,8,9,22,90,91,92] | [1,2,3,4,5,48,51,104] | [83,105,106,116,117,118] |

| Handcrafted feature learning methods | [6,7,8,9,75,76,77,78] | [1,2,3,4,5,24,48,49,51,79] | [23,50,80,81,82,83,116,117,118] |

| Deep feature learning methods | [22,90,91,92,93] | [94,95,96,97,98,101,102,103,104] | [105,106,107,108,109,110] |

| Dataset Name | #Action Categories | #Persons | #Samples | Data Modality |

|---|---|---|---|---|

| SPHERE-Staircase2014 dataset [111] | 1 | 12 | 48 | Depth sequences, skeletons |

| SPHERE-Walking2015 dataset [112] | 1 | 10 | 40 | Depth sequences, skeletons |

| SPHERE-SitStand 2015 dataset [113] | 2 | 10 | 109 | Depth sequences, skeletons |

| UI-PRMD dataset [114] | 10 | 10 | 100 | Positions and angles of body joints in the skeletal models |

| AHA-3D dataset [115] | 4 | 21 | 79 | 3D skeletal sequences, RGB images |

| Dataset Name | #Action Categories | #Samples | View of Samples | Background of Samples |

|---|---|---|---|---|

| FINA09 diving dataset [79] | 1 | 68 | Front, side | Same |

| MIT Olympic Scoring dataset [1] | 2 | 309 | Variations | Same |

| UNLV AQA-7 dataset [95] | 7 | 1189 | Severe changes | Different |

| MTL-AQA dataset [96] | 1 | 1412 | Severe changes | Different |

| Fis-V dataset [97] | 1 | 500 | Severe changes | Different |

| GolfDB dataset [119] | 1 | 1400 | Multiple views | Different |

| YogaVidCollected dataset [120] | 6 | 88 | Small changes | Same |

| Dataset Name | #Action Categories | #Samples | Data Modality | View of Samples | Background of Samples |

|---|---|---|---|---|---|

| JIGSAWS dataset [122] | 3 | 103 | Kinematics data, video data | two left and right cameras | Same |

| EPIC-Skills 2018 dataset [124] | 6 | 216 | Video data | single view | Different |

| BEST 2019 dataset [125] | 5 | 500 | Video data | Severe changes | Different |

| Breakfast Actions database [126] | 10 | 1989 | Video data | 3–5 cameras | Different |

| ADL dataset [127] | 18 | 440 | Video data | 170-degree first-person view angle | Different |

| Methods | Year | MIT Olympic Scoring Diving | MIT Olympic Scoring Skating | UNLV AQA-7 Diving | UNLV AQA-7 Vault |

|---|---|---|---|---|---|

| [1] | 2014 | 0.41 | 0.35 | ||

| [3] | 2015 | 0.45 | |||

| [94] D | 2017 | 0.74 | 0.53 | 0.79 | 0.68 |

| [95] D | 2018 | 0.61 | 0.67 | ||

| [101] D | 2018 | 0.57 | 0.80 | 0.70 | |

| [102] D | 2018 | 0.78 | 0.84 | 0.70 | |

| [98] D | 2018 | 0.86 | |||

| [97] D | 2019 | 0.59 |

| Method | Year | Evaluation Criteria | Action Categories | ||

|---|---|---|---|---|---|

| Suturing | Knot Tying | Needle Passing | |||

| [116] | 2012 | Classification accuracy | 97.4% | 96.2% | 94.4% |

| [117] | 2017 | Classification accuracy | 89.7% | 96.3% | 61.1% |

| [118] | 2018 | Classification accuracy | 100% | 99.9% | 100% |

| Score prediction (Correlation Coefficient) | 0.75 | 0.63 | 0.46 | ||

| [83] | 2018 | Classification accuracy | 89.9% | 95.8% | 82.3% |

| [105] D | 2018 | Classification accuracy | 93.4% | 89.8% | 84.9% |

| [107] D | 2019 | Classification accuracy | 100% | - | 96.4% |

| [108] D | 2018 | Rank accuracy | 70.2% | ||

| [110] D | 2019 | Rank accuracy | 73.1% | ||

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lei, Q.; Du, J.-X.; Zhang, H.-B.; Ye, S.; Chen, D.-S. A Survey of Vision-Based Human Action Evaluation Methods. Sensors 2019, 19, 4129. https://doi.org/10.3390/s19194129

Lei Q, Du J-X, Zhang H-B, Ye S, Chen D-S. A Survey of Vision-Based Human Action Evaluation Methods. Sensors. 2019; 19(19):4129. https://doi.org/10.3390/s19194129

Chicago/Turabian StyleLei, Qing, Ji-Xiang Du, Hong-Bo Zhang, Shuang Ye, and Duan-Sheng Chen. 2019. "A Survey of Vision-Based Human Action Evaluation Methods" Sensors 19, no. 19: 4129. https://doi.org/10.3390/s19194129

APA StyleLei, Q., Du, J.-X., Zhang, H.-B., Ye, S., & Chen, D.-S. (2019). A Survey of Vision-Based Human Action Evaluation Methods. Sensors, 19(19), 4129. https://doi.org/10.3390/s19194129