Navigation Systems for the Blind and Visually Impaired: Past Work, Challenges, and Open Problems

Abstract

1. Introduction

2. Background on Guidance and Navigation Systems for the Visually Impaired

2.1. The Beginnings of Electronic Travel Aids

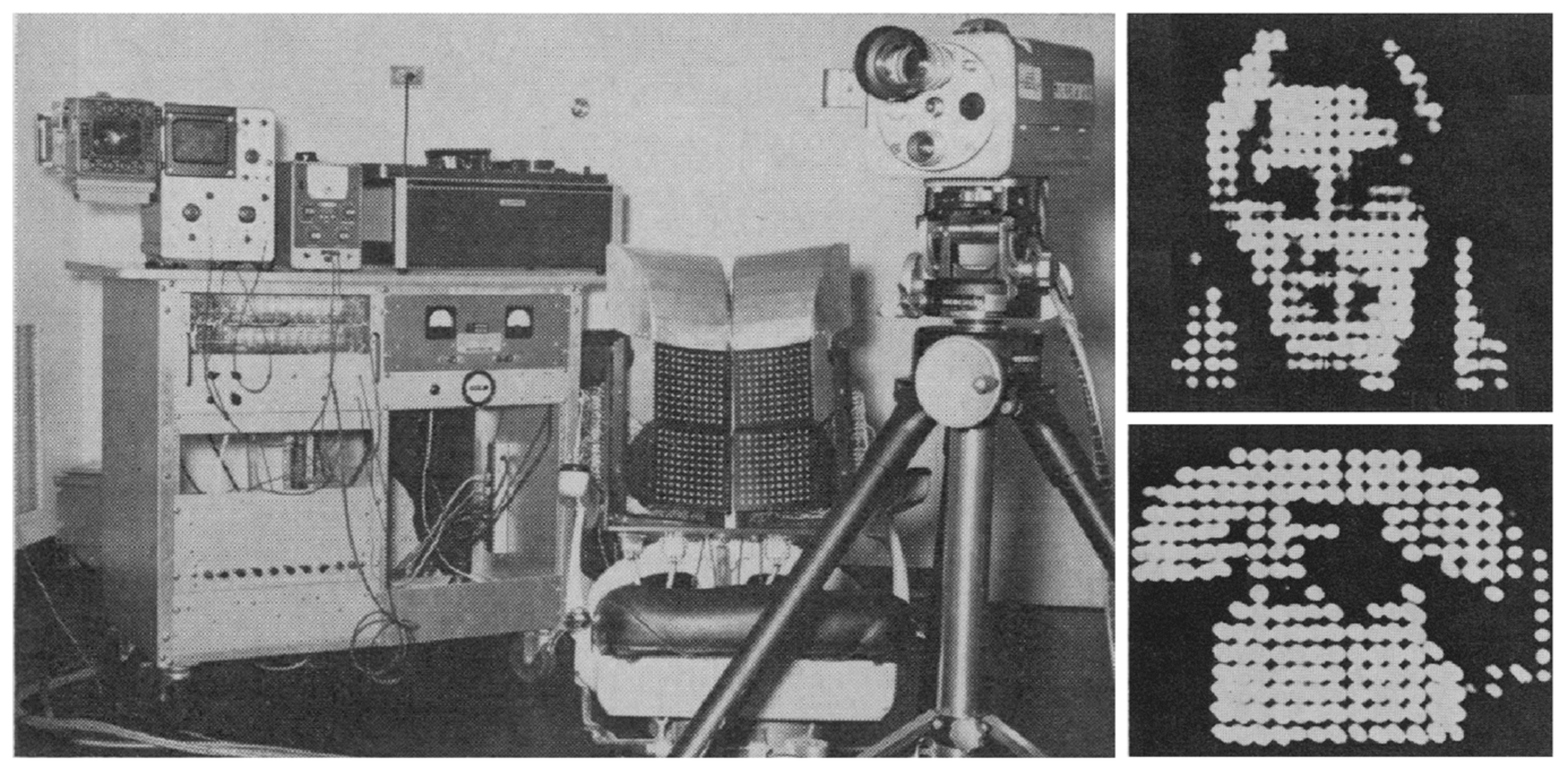

2.2. Sensory Substitution Devices

2.3. Navigation Systems for the Visually Impaired

- Ultrasound transmitters: As an illustrative case, the University of Florida’s Drishti project [38] (2004) applied this kind of technology to the guidance of BVI people, combining differential GPS outdoors with an ultrasound transmitter infrastructure indoors. For the indoor subsystem, a mean error of approximately 10 cm, with a maximum of 22 cm, was observed. However, accuracy can easily degrade due to signal obstruction, reflection, etc.

- Optical transmitters: By 2001, researchers from Tokyo University had developed a BVI guidance system made of optical beacons, which were installed in a hospital [39]. The transmitters were positioned on the ceiling, each one sending an identification code associated with its position. The equipment carried by the users read the code in range and then played back the corresponding recorded message. Another system worth mentioning was developed at the National University of Singapore [40] (~2004). In this case the position was inferred by means of fluorescent lights, each with its own code to identify the illuminated area. As can be seen, this line of work has features similar to those of Li-Fi.

- RFID tags: Many technical solutions for positioning services relied on an infrastructure of beacons, be they radio frequency, infrared, etc. However, their installation and maintenance costs, and their rigidity against changes in the environment (e.g., furniture rearrangement), counted against their deployment. RFID tag networks were proposed to address these problems. Whereas active tags usually cost tens of dollars, passive tags cost only tens of cents. Also, because passive tags need no batteries, network lifetime increases and maintenance costs fall, which makes them attractive for locating systems. Even though their range only covers a few meters, range measuring techniques based on received signal strength (RSS), received signal phase (RSP), or time of arrival (TOA) could be applied [41]. In practice, however, the user’s position is usually estimated as that of the tag in range. As an example of this line of work, Utah State University launched an indoor Robotic Guide project for the visually impaired in 2003 [42]. One year later, its prototype collected positioning data from a passive RFID network with a range of 1.5 m, effectively guiding test users along a 40 m route. By 2005, installing the system in shopping carts was proposed [43]. In line with this, the PERCEPT project [44] provided acoustic guidance messages by means of a deployment of passive RFID tags and an RFID reader embedded in a glove. RFID positioning would be widely adopted in the following years, becoming one of the classic solutions. Its applications are not limited to positioning, though: RFID tags were also found suitable for searching for or identifying distant objects [45].
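To make the RFID case more concrete, the following is a minimal sketch and not the method of any system cited above. It assumes a log-distance path-loss model for RSS-based ranging (the calibration values `rss_at_1m_dbm` and `n` are hypothetical) and illustrates the tag-in-range estimate, where the user's position is simply taken to be that of the strongest tag read. The tag identifiers and coordinates are invented for illustration.

```python
# Hypothetical tag map: tag ID -> (x, y) position in metres on a floor plan.
TAG_POSITIONS = {
    "tag-A": (0.0, 0.0),
    "tag-B": (3.0, 0.0),
    "tag-C": (3.0, 4.0),
}


def rss_to_range(rss_dbm, rss_at_1m_dbm=-40.0, n=2.0):
    """Estimate the range in metres from an RSS reading.

    Assumed model (not from the cited work):
        rss = rss_at_1m - 10 * n * log10(d)
    where n is the path-loss exponent.
    """
    return 10 ** ((rss_at_1m_dbm - rss_dbm) / (10.0 * n))


def position_from_reads(reads):
    """Return the position of the strongest known tag, or None if none is read.

    reads maps tag ID -> RSS in dBm for the tags currently detected.
    """
    known = {tag: rss for tag, rss in reads.items() if tag in TAG_POSITIONS}
    if not known:
        return None
    strongest = max(known, key=known.get)
    return TAG_POSITIONS[strongest]


if __name__ == "__main__":
    reads = {"tag-A": -70.0, "tag-B": -55.0}
    print(position_from_reads(reads))
    print(round(rss_to_range(-55.0), 2), "m to the strongest tag (model estimate)")
```

The tag-in-range estimate needs no calibration, which fits the low-maintenance argument for passive tags. The RSS range is only as good as the assumed propagation model, so it would need per-site calibration in practice.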

3. Related Innovation Fields

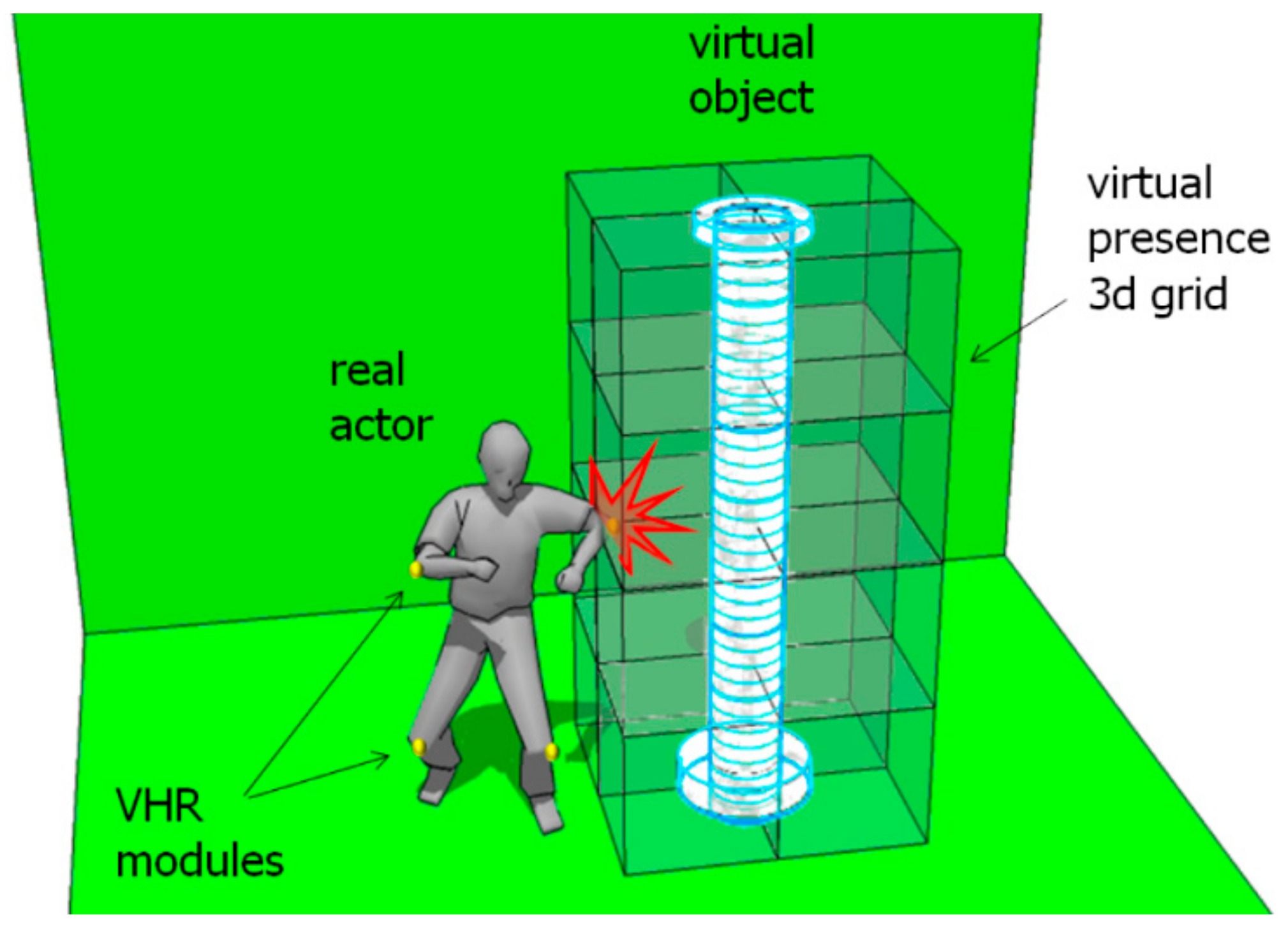

3.1. Mixed Reality

3.2. Smartphones

- Moovit [75]: a free, effective, and easy-to-use tool that offers guidance on the public transport network, managing schedules, notifications, and even warnings in real time. It is one of the assets for mobility tasks recommended by ONCE (National Organization of Spanish Blind People).

- BlindSquare [76]: specifically designed for the BVI, this application conveys the relative location of previously recorded POIs through speech. It makes use of Foursquare’s and OpenStreetMap’s databases.

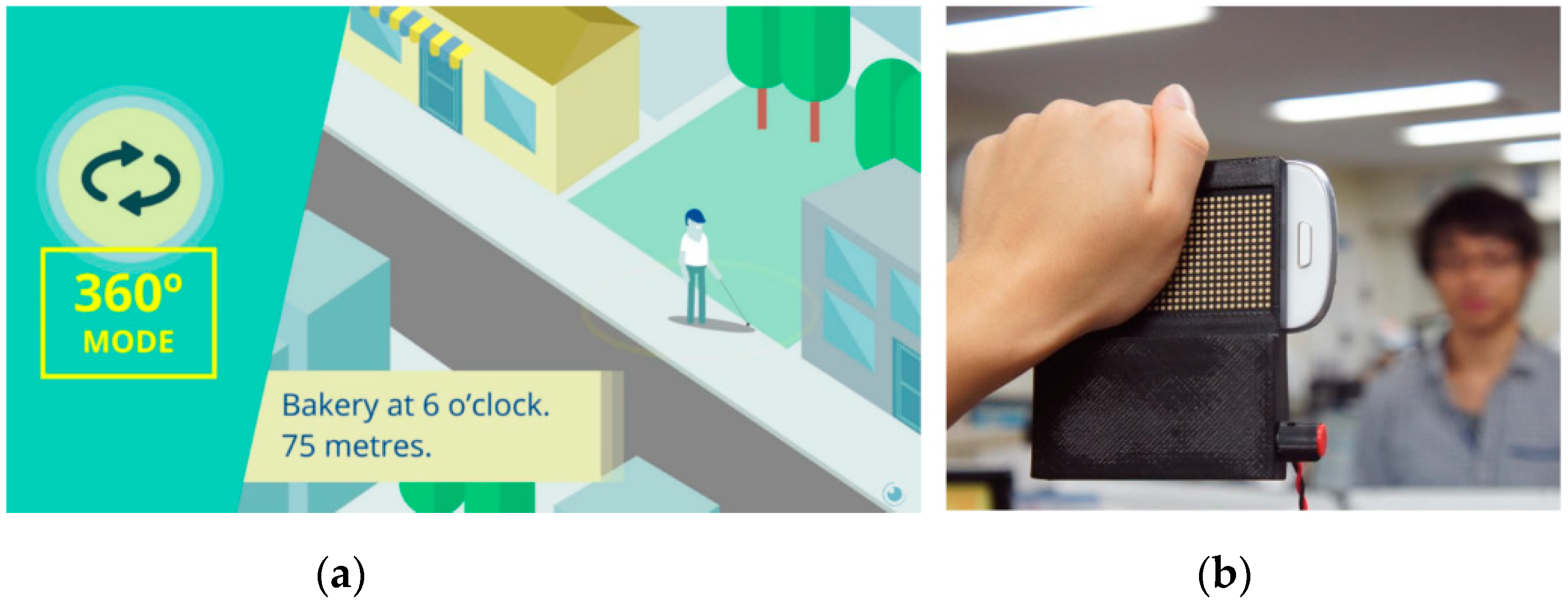

- Lazzus [77]: a paid application, again designed for BVI users, which coordinates GPS and built-in motion capture and orientation sensors to provide users with intuitive cues about the location of diverse POIs in the surrounding area, even including zebra crossings. It offers two modes of operation: the 360° mode verbally informs of the distance and orientation to nearby POIs, whereas the beam mode describes any POI in a virtual field of view in front of the smartphone. Its main sources of data are Google Places and OpenStreetMap.
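The two modes described for Lazzus can be illustrated with a short sketch. This is not Lazzus's implementation, which is not documented here. It assumes that the user position comes from GPS, that the heading comes from the phone's orientation sensors, and that POIs are plain latitude/longitude pairs. The function names, the 60° field of view, and the 100 m radius are hypothetical choices.

```python
import math

EARTH_RADIUS_M = 6371000.0


def distance_and_bearing(lat1, lon1, lat2, lon2):
    """Great-circle distance (m) and initial bearing (degrees clockwise from north)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    # Haversine formula for the distance.
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    dist = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))
    # Initial bearing from point 1 to point 2.
    y = math.sin(dlmb) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return dist, bearing


def relative_angle(bearing, heading):
    """Signed angle in [-180, 180) from the device heading to the POI; positive is to the right."""
    return (bearing - heading + 180.0) % 360.0 - 180.0


def pois_360(user, heading, pois, max_dist_m=100.0):
    """360-degree mode: every POI within max_dist_m as (name, distance, relative angle), nearest first."""
    found = []
    for name, (lat, lon) in pois.items():
        dist, bearing = distance_and_bearing(user[0], user[1], lat, lon)
        if dist <= max_dist_m:
            found.append((name, dist, relative_angle(bearing, heading)))
    return sorted(found, key=lambda item: item[1])


def pois_beam(user, heading, pois, fov_deg=60.0, max_dist_m=100.0):
    """Beam mode: only the POIs inside a virtual field of view centred on the heading."""
    return [p for p in pois_360(user, heading, pois, max_dist_m) if abs(p[2]) <= fov_deg / 2]


if __name__ == "__main__":
    user = (0.0, 0.0)
    pois = {"north": (0.0005, 0.0), "east": (0.0, 0.0005)}  # invented coordinates
    for name, dist, angle in pois_beam(user, heading=0.0, pois=pois):
        print(f"{name}: {dist:.0f} m, {angle:+.0f} degrees")
```

Building the beam mode as a filter over the 360° result keeps both modes consistent with each other. A real application would also have to cope with compass noise and GPS error, which this sketch ignores.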

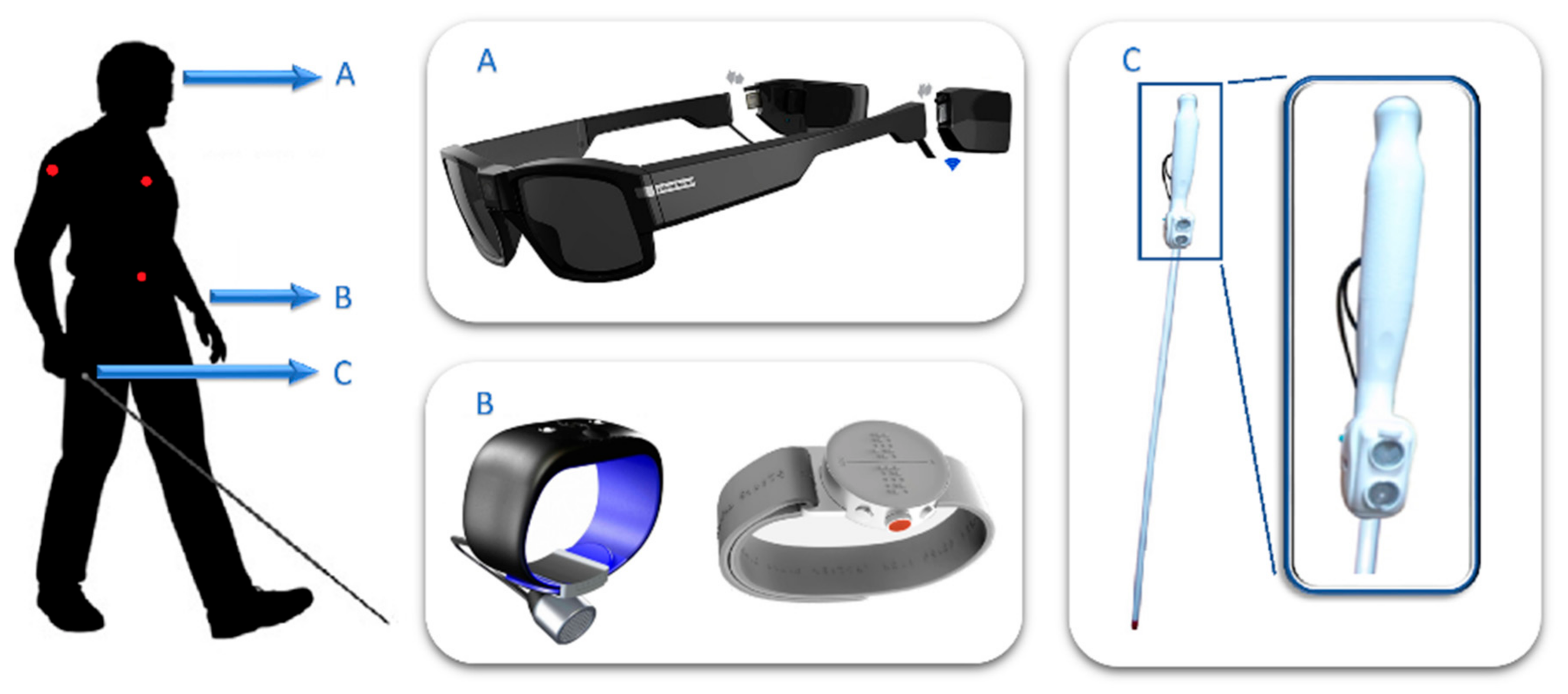

3.3. Wearables

4. Challenges in User-Centered System Design

1. “The presence, location, and preferably the nature of obstacles immediately ahead of the traveller.” This relates to obstacle avoidance support.

2. Data on the “path or surface on which the traveller is walking, such as texture, gradient, upcoming steps,” etc.

3. “The position and nature of objects to the sides of the travel path,” i.e., hedges, fences, doorways, etc.

4. Information that helps users to “maintain a straight course, notably the presence of some type of aiming point in the distance,” e.g., distant traffic sounds.

5. “Landmark location and identification,” including those previously seen, particularly in (3).

6. Information that “allows the traveller to build up a mental map, image, or schema for the chosen route to be followed.” This point involves the study of what is frequently termed “cognitive mapping” in blind individuals [83].

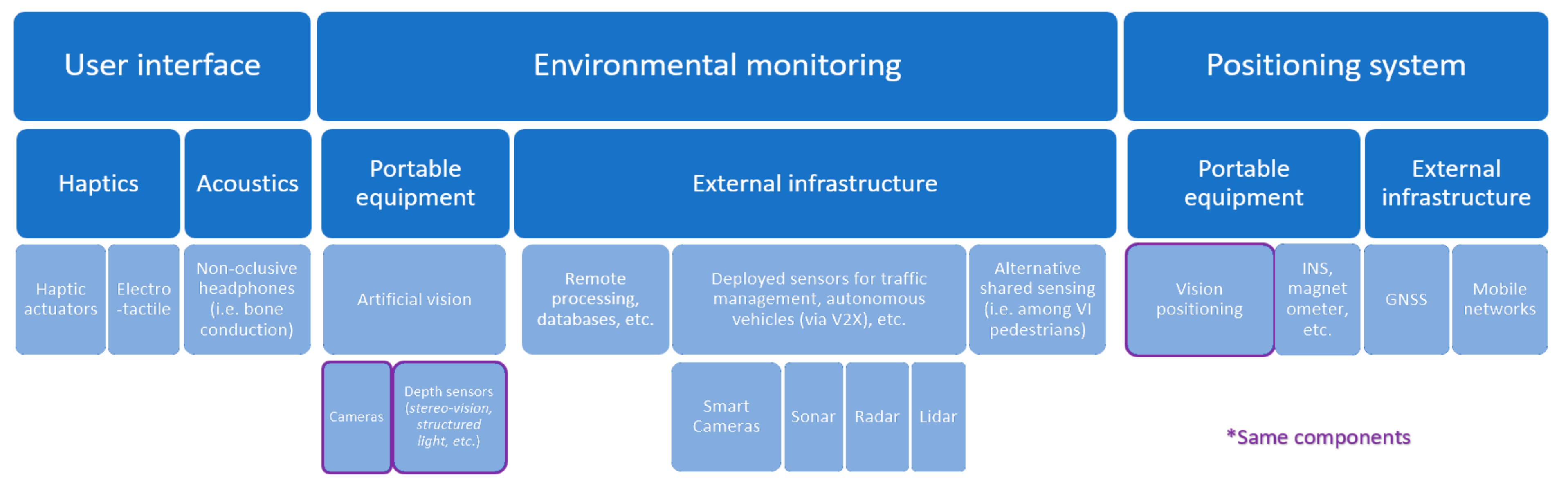

5. Availability of Technical Solutions

5.1. Positioning Systems

5.2. Environmental Monitoring

5.3. User Interface

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Bourne, R.R.A.; Flaxman, S.R.; Braithwaite, T.; Cicinelli, M.V.; Das, A.; Jonas, J.B.; Keeffe, J.; Kempen, J.; Leasher, J.; Limburg, H.; et al. Magnitude, temporal trends, and projections of the global prevalence of blindness and distance and near vision impairment: A systematic review and meta-analysis. Lancet Glob. Health 2017, 5, e888–e897. [Google Scholar] [CrossRef]

- Tversky, B. Cognitive Maps, Cognitive Collages, and Spatial Mental Models; Springer: Berlin/Heidelberg, Germany, 1993; pp. 14–24. [Google Scholar]

- Tapu, R.; Mocanu, B.; Zaharia, T. Wearable assistive devices for visually impaired: A state of the art survey. Pattern Recognit. Lett. 2018. [Google Scholar] [CrossRef]

- Elmannai, W.; Elleithy, K. Sensor-based assistive devices for visually-impaired people: Current status, challenges, and future directions. Sensors 2017, 17, 565. [Google Scholar] [CrossRef] [PubMed]

- Working Group on Mobility Aids for the Visually Impaired and Blind; Committee on Vision. Electronic Travel Aids: New Directions for Research; National Academies Press: Washington, DC, USA, 1986; ISBN 978-0-309-07791-0.

- Benjamin, J.M. The laser cane. Bull. Prosthet. Res. 1974, 443–450. [Google Scholar]

- Russel, L. Travel Path Sounder. In Proceedings of the Rotterdam Mobility Research Conference; American Foundation for the Blind: New York, NY, USA, 1965. [Google Scholar]

- Armstrong, J.D. Summary Report of the Research Programme on Electronic Mobility Aids; University of Nottingham: Nottingham, UK, 1973. [Google Scholar]

- Pressey, N. Mowat sensor. Focus 1977, 11, 35–39. [Google Scholar]

- Heyes, A.D. The Sonic Pathfinder—A new travel aid for the blind. In High Technology Aids for the Disabled; Elsevier: Edinburgh, UK, 1983; pp. 165–171. [Google Scholar]

- Maude, D.R.; Mark, M.U.; Smith, R.W. AFB’s Computerized Travel Aid: Two Years of Research. J. Vis. Impair. Blind. 1983, 77, 71, 74–75. [Google Scholar]

- Collins, C.C. On Mobility Aids for the Blind. In Electronic Spatial Sensing for the Blind; Springer: Dordrecht, The Netherlands, 1985; pp. 35–64. [Google Scholar]

- Collins, C.C. Tactile Television-Mechanical and Electrical Image Projection. IEEE Trans. Man-Mach. Syst. 1970, 11, 65–71. [Google Scholar] [CrossRef]

- Rantala, J. Jussi Rantala Spatial Touch in Presenting Information with Mobile Devices; University of Tampere: Tampere, Finland, 2014. [Google Scholar]

- BrainPort, Wicab. Available online: https://www.wicab.com/brainport-vision-pro (accessed on 29 July 2019).

- Grant, P.; Spencer, L.; Arnoldussen, A.; Hogle, R.; Nau, A.; Szlyk, J.; Nussdorf, J.; Fletcher, D.C.; Gordon, K.; Seiple, W. The Functional Performance of the BrainPort V100 Device in Persons Who Are Profoundly Blind. J. Vis. Impair. Blind. 2016, 110, 77–89. [Google Scholar] [CrossRef]

- Kajimoto, H.; Kanno, Y.; Tachi, S. Forehead electro-tactile display for vision substitution. In Proceedings of the EuroHaptics, Paris, France, 3–6 July 2006. [Google Scholar]

- Kajimoto, H.; Suzuki, M.; Kanno, Y. HamsaTouch: Tactile Vision Substitution with Smartphone and Electro-Tactile Display. In Proceedings of the 32nd Annual ACM Conference on Human Factors in Computing Systems: Extended Abstracts, Toronto, ON, Canada, 26 April–1 May 2014; pp. 1273–1278. [Google Scholar]

- Cassinelli, A.; Reynolds, C.; Ishikawa, M. Augmenting spatial awareness with haptic radar. In Proceedings of the 10th IEEE International Symposium on Wearable Computers (ISWC 2006), Montreux, Switzerland, 11–14 October 2006; pp. 61–64. [Google Scholar]

- Kay, L. An ultrasonic sensing probe as a mobility aid for the blind. Ultrasonics 1964, 2, 53–59. [Google Scholar] [CrossRef]

- Kay, L. A sonar aid to enhance spatial perception of the blind: Engineering design and evaluation. Radio Electron. Eng. 1974, 44, 605. [Google Scholar] [CrossRef]

- Sainarayanan, G.; Nagarajan, R.; Yaacob, S. Fuzzy image processing scheme for autonomous navigation of human blind. Appl. Soft Comput. J. 2007, 7, 257–264. [Google Scholar] [CrossRef]

- Ifukube, T.; Sasaki, T.; Peng, C. A blind mobility aid modeled after echolocation of bats. IEEE Trans. Biomed. Eng. 1991, 38, 461–465. [Google Scholar] [CrossRef] [PubMed]

- Meijer, P.B.L. An Experimental System for Auditory Image Representations. IEEE Trans. Biomed. Eng. 1992, 39, 112–121. [Google Scholar] [CrossRef] [PubMed]

- Haigh, A.; Brown, D.J.; Meijer, P.; Proulx, M.J. How well do you see what you hear? The acuity of visual-to-auditory sensory substitution. Front. Psychol. 2013, 4. [Google Scholar] [CrossRef] [PubMed]

- Ward, J.; Meijer, P. Visual experiences in the blind induced by an auditory sensory substitution device. Conscious. Cognit. 2010, 19, 492–500. [Google Scholar] [CrossRef] [PubMed]

- Gonzalez-Mora, J.L.; Rodriguez-Hernaindez, A.F.; Burunat, E.; Martin, F.; Castellano, M.A. Seeing the world by hearing: Virtual Acoustic Space (VAS) a new space perception system for blind people. In Proceedings of the 2006 2nd International Conference on Information & Communication Technologies, Damascus, Syria, 24–28 April 2006; Volume 1, pp. 837–842. [Google Scholar]

- Hersh, M.A.; Johnson, M.A. Assistive Technology for Visually Impaired and Blind People; Springer: London, UK, 2008; ISBN 9781846288661. [Google Scholar]

- Ultracane. Available online: https://www.ultracane.com/ (accessed on 29 July 2019).

- Tachi, S.; Komoriya, K. Guide dog robot. In Autonomous Mobile Robots: Control, Planning, and Architecture; Mechanical Engineering Laboratory: Ibaraki, Japan, 1985; pp. 360–367. [Google Scholar]

- Borenstein, J. The guidecane—A computerized travel aid for the active guidance of blind pedestrians. In Proceedings of the 1997 International Conference on Robotics and Automation (ICRA 1997), Albuquerque, NM, USA, 20–25 April 1997; IEEE: Piscataway, NJ, USA; Volume 2, pp. 1283–1288.

- Shoval, S.; Borenstein, J.; Koren, Y. Mobile robot obstacle avoidance in a computerized travel aid for the blind. In Proceedings of the 1994 IEEE International Conference on Robotics and Automation, San Diego, CA, USA, 8–13 May 1994; pp. 2023–2028. [Google Scholar]

- Loomis, J.M. Digital Map and Navigation System for the Visually Impaired; Department of Psychology, University of California-Santa Barbara; Unpublished work; 1985. [Google Scholar]

- Loomis, J.M.; Golledge, R.G.; Klatzky, R.L.; Marston, J.R. Assisting wayfinding in visually impaired travelers. In Applied Spatial Cognition: From Research to Cognitive Technology; Lawrence Erlbaum Associates, Inc.: Mahwah, NJ, USA, 2007; pp. 179–203. [Google Scholar]

- Crandall, W.; Bentzen, B.L.; Myers, L.; Brabyn, J. New orientation and accessibility option for persons with visual impairment: Transportation applications for remote infrared audible signage. Clin. Exp. Optom. 2001, 84, 120–131. [Google Scholar] [CrossRef]

- Loomis, J.M.; Klatzky, R.L.; Golledge, R.G. Auditory Distance Perception in Real, Virtual, and Mixed Environments. In Mixed Reality; Springer: Berlin/Heidelberg, Germany, 1999; pp. 201–214. [Google Scholar]

- PERNASVIP—Final Report. 2011. Available online: pernasvip.di.uoa.gr/DELIVERABLES/D14.doc (accessed on 1 August 2019).

- Ran, L.; Helal, S.; Moore, S. Drishti: An Integrated Indoor/Outdoor Blind Navigation System and Service. In Proceedings of the Second IEEE Annual Conference on Pervasive Computing and Communications, Orlando, FL, USA, 17–17 March 2004. [Google Scholar]

- Harada, T.; Kaneko, Y.; Hirahara, Y.; Yanashima, K.; Magatani, K. Development of the navigation system for visually impaired. In Proceedings of the 26th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Francisco, CA, USA, 1–5 September 2004; pp. 4900–4903. [Google Scholar]

- Cheok, A.D.; Li, Y. Ubiquitous interaction with positioning and navigation using a novel light sensor-based information transmission system. Pers. Ubiquitous Comput. 2008, 12, 445–458. [Google Scholar] [CrossRef]

- Bouet, M.; Dos Santos, A.L. RFID tags: Positioning principles and localization techniques. In Proceedings of the 1st IFIP Wireless Days, Dubai, UAE, 24–27 November 2008; pp. 1–5. [Google Scholar]

- Kulyukin, V.A.; Nicholson, J.; Kulyukin, V.; Nicholson, J. RFID in Robot-Assisted Indoor Navigation for the Visually Impaired RFID in Robot-Assisted Indoor Navigation for the Visually Impaired. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Sendai, Japan, 28 September–2 October 2004; Volume 2, pp. 1979–1984. [Google Scholar]

- Kulyukin, V.; Gharpure, C.; Nicholson, J. RoboCart: Toward robot-assisted navigation of grocery stores by the visually impaired. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 2845–2850. [Google Scholar]

- Ganz, A.; Schafer, J.; Gandhi, S.; Puleo, E.; Wilson, C.; Robertson, M. PERCEPT Indoor Navigation System for the Blind and Visually Impaired: Architecture and Experimentation. Int. J. Telemed. Appl. 2012. [Google Scholar] [CrossRef]

- Lanigan, P.; Paulos, A.; Williams, A.; Rossi, D.; Narasimhan, P. Trinetra: Assistive Technologies for Grocery Shopping for the Blind. In Proceedings of the 2006 10th IEEE International Symposium on Wearable Computers, Montreux, Switzerland, 11–14 October 2006; pp. 147–148. [Google Scholar]

- Hub, A.; Diepstraten, J.; Ertl, T. Design and development of an indoor navigation and object identification system for the blind. In Proceedings of the 6th International ACM SIGACCESS Conference on Computers and Accessibility, Atlanta, GA, USA, 18–20 October 2004; pp. 147–152. [Google Scholar]

- Hub, A.; Hartter, T.; Ertl, T. Interactive tracking of movable objects for the blind on the basis of environment models and perception-oriented object recognition methods. In Proceedings of the Eighth International ACM SIGACCESS Conference on Computers and Accessibility, Portland, OR, USA, 23–25 October 2006; pp. 111–118. [Google Scholar]

- Fernandes, H.; Costa, P.; Filipe, V.; Hadjileontiadis, L.; Barroso, J. Stereo vision in blind navigation assistance. In Proceedings of the World Automation Congress, Kobe, Japan, 19–23 September 2010; pp. 1–6. [Google Scholar]

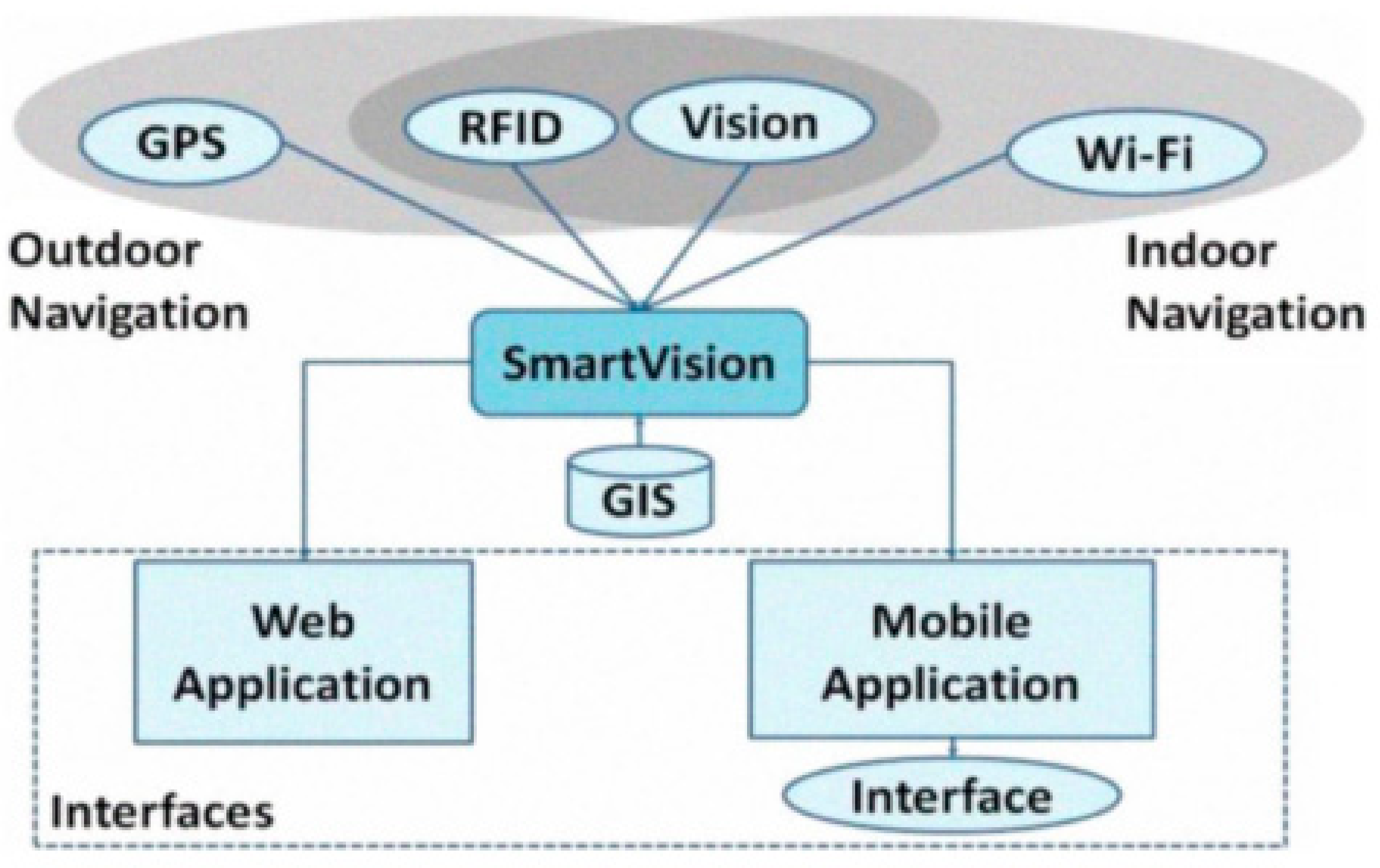

- Fernandes, H.; Costa, P.; Paredes, H.; Filipe, V.; Barroso, J. Integrating Computer Vision Object Recognition with Location Based Services for the Blind; Springer: Cham, Switzerland, 2014; pp. 493–500. [Google Scholar]

- Martinez-Sala, A.S.; Losilla, F.; Sánchez-Aarnoutse, J.C.; García-Haro, J. Design, implementation and evaluation of an indoor navigation system for visually impaired people. Sensors 2015, 15, 32168–32187. [Google Scholar] [CrossRef]

- Riehle, T.H.; Lichter, P.; Giudice, N.A. An indoor navigation system to support the visually impaired. In Proceedings of the 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–25 August 2008; pp. 4435–4438. [Google Scholar]

- Legge, G.E.; Beckmann, P.J.; Tjan, B.S.; Havey, G.; Kramer, K.; Rolkosky, D.; Gage, R.; Chen, M.; Puchakayala, S.; Rangarajan, A. Indoor Navigation by People with Visual Impairment Using a Digital Sign System. PLoS ONE 2013, 8, 14–15. [Google Scholar] [CrossRef]

- Ahmetovic, D.; Gleason, C.; Ruan, C.; Kitani, K. NavCog: A navigational cognitive assistant for the blind. In Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI ’16), Florence, Italy, 6–9 September 2016; pp. 90–99. [Google Scholar]

- Murata, M.; Ahmetovic, D.; Sato, D.; Takagi, H.; Kitani, K.M.; Asakawa, C. Smartphone-Based Indoor Localization for Blind Navigation across Building Complexes. In Proceedings of the 2018 IEEE International Conference on Pervasive Computing and Communications (PerCom), Athens, Greece, 19–23 March 2018; pp. 1–10. [Google Scholar]

- Giudice, N.A.; Whalen, W.E.; Riehle, T.H.; Anderson, S.M.; Doore, S.A. Evaluation of an Accessible, Free Indoor Navigation System by Users Who Are Blind in the Mall of America. J. Vis. Impair. Blind. 2019, 113, 140–155. [Google Scholar] [CrossRef]

- Dakopoulos, D. Tyflos: A Wearable Navigation Prorotype for Blind & Visually Impaired; Design, Modelling and Experimental Results; Wright State University and OhioLINK: Dayton, OH, USA, 2009. [Google Scholar]

- Meers, S.; Ward, K. A vision system for providing 3D perception of the environment via transcutaneous electro-neural stimulation. In Proceedings of the Eighth International Conference on Information Visualisation, London, UK, 16–16 July 2004; pp. 546–552. [Google Scholar]

- Meers, S.; Ward, K. A Substitute Vision System for Providing 3D Perception and GPS Navigation via Electro-Tactile Stimulation. In Proceedings of the International Conference on Sensing Technology, Palmerston North, New Zealand, 21–23 November 2005; pp. 551–556. [Google Scholar]

- Zöllner, M.; Huber, S.; Jetter, H.-C.; Reiterer, H. NAVI—A Proof-of-Concept of a Mobile Navigational Aid for Visually Impaired Based on the Microsoft Kinect; Human-Computer Interaction—INTERACT; Springer: Berlin/Heidelberg, 2011; pp. 584–587. [Google Scholar]

- Zhang, H.; Ye, C. An Indoor Wayfinding System Based on Geometric Features Aided Graph SLAM for the Visually Impaired. IEEE Trans. Neural Syst. Rehabilit. Eng. 2017, 25, 1592–1604. [Google Scholar] [CrossRef] [PubMed]

- Li, B.; Munoz, J.P.; Rong, X.; Chen, Q.; Xiao, J.; Tian, Y.; Arditi, A.; Yousuf, M. Vision-based Mobile Indoor Assistive Navigation Aid for Blind People. IEEE Trans. Mob. Comput. 2019, 18, 702–714. [Google Scholar] [CrossRef] [PubMed]

- Jafri, R.; Campos, R.L.; Ali, S.A.; Arabnia, H.R. Visual and Infrared Sensor Data-Based Obstacle Detection for the Visually Impaired Using the Google Project Tango Tablet Development Kit and the Unity Engine. IEEE Access 2017, 6, 443–454. [Google Scholar] [CrossRef]

- Neto, L.B.; Grijalva, F.; Maike, V.R.M.L.; Martini, L.C.; Florencio, D.; Baranauskas, M.C.C.; Rocha, A.; Goldenstein, S. A Kinect-Based Wearable Face Recognition System to Aid Visually Impaired Users. IEEE Trans. Hum.-Mach. Syst. 2017, 47, 52–64. [Google Scholar] [CrossRef]

- Hicks, S.L.; Wilson, I.; Muhammed, L.; Worsfold, J.; Downes, S.M.; Kennard, C. A Depth-Based Head-Mounted Visual Display to Aid Navigation in Partially Sighted Individuals. PLoS ONE 2013, 8, e67695. [Google Scholar] [CrossRef] [PubMed]

- VA-ST Smart Specs—MIT Technology Review. Available online: https://www.technologyreview.com/s/538491/augmented-reality-glasses-could-help-legally-blind-navigate/ (accessed on 29 July 2019).

- Cassinelli, A.; Sampaio, E.; Joffily, S.B.; Lima, H.R.S.; Gusmo, B.P.G.R. Do blind people move more confidently with the Tactile Radar? Technol. Disabil. 2014, 26, 161–170. [Google Scholar] [CrossRef]

- Zerroug, A.; Cassinelli, A.; Ishikawa, M. Virtual Haptic Radar. In Proceedings of the ACM SIGGRAPH ASIA 2009 Sketches, Yokohama, Japan, 16–19 December 2009. [Google Scholar]

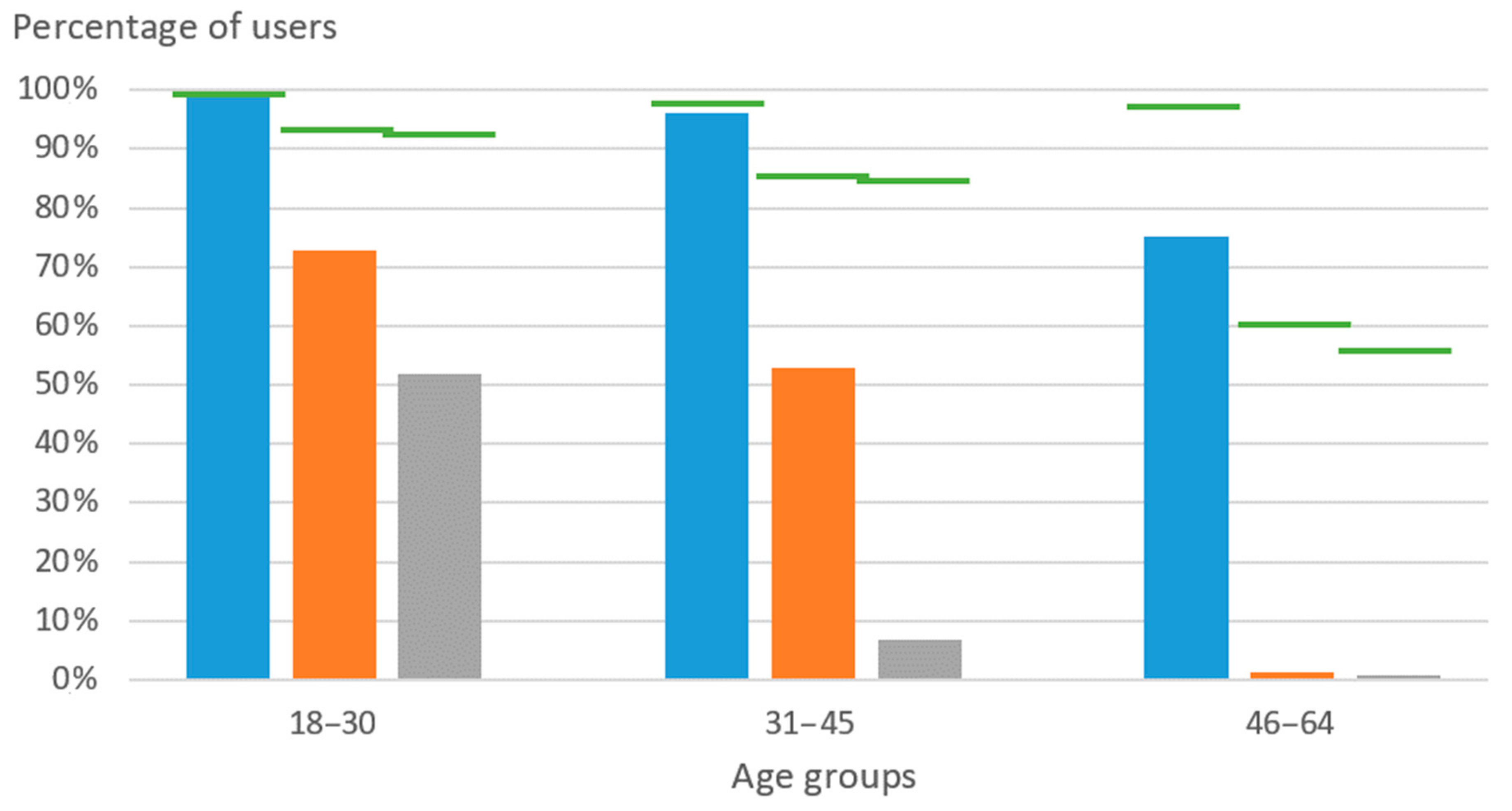

- Fundación Vodafone España. Acceso y uso de las TIC por las personas con discapacidad; Fundación Vodafone España: Madrid, España, 2013; Available online: http://www.fundacionvodafone.es/publicacion/acceso-y-uso-de-las-tic-por-las-personas-con-discapacidad (accessed on 1 August 2019).

- Apostolopoulos, I.; Fallah, N.; Folmer, E.; Bekris, K.E. Integrated online localization and navigation for people with visual impairments using smart phones. In Proceedings of the International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 1322–1329. [Google Scholar]

- BrainVisionRehab. Available online: https://www.brainvisionrehab.com/ (accessed on 29 July 2019).

- EyeMusic. Available online: https://play.google.com/store/apps/details?id=com.quickode.eyemusic&hl=en (accessed on 29 July 2019).

- The vOICe. Available online: https://www.seeingwithsound.com/ (accessed on 29 July 2019).

- Microsoft Seeing AI. Available online: https://www.microsoft.com/en-us/ai/seeing-ai (accessed on 29 July 2019).

- TapTapSee—Smartphone application. Available online: http://taptapseeapp.com/ (accessed on 29 July 2019).

- Moovit. Available online: https://company.moovit.com/ (accessed on 29 July 2019).

- BlindSquare. Available online: http://www.blindsquare.com/about/ (accessed on 29 July 2019).

- Lazzus. Available online: http://www.lazzus.com/en/ (accessed on 29 July 2019).

- Seeing AI GPS. Available online: https://www.senderogroup.com/ (accessed on 29 July 2019).

- Sunu Band. Available online: https://www.sunu.com/en/index.html (accessed on 29 July 2019).

- Orcam MyEye. Available online: https://www.orcam.com/en/myeye2/ (accessed on 29 July 2019).

- Project Blaid. Available online: https://www.toyota.co.uk/world-of-toyota/stories-news-events/toyota-project-blaid (accessed on 29 July 2019).

- Schinazi, V. Representing Space: The Development, Content and Accuracy of Mental Representations by the Blind and Visually Impaired. Ph.D. Thesis, University College, London, UK, 2008. [Google Scholar]

- Ungar, S. Cognitive Mapping without Visual Experience. In Cognitive Mapping: Past Present and Future; Routledge: London, UK, 2000; pp. 221–248. [Google Scholar]

- Loomis, J.M.; Klatzky, R.L.; Giudice, N.A. Sensory substitution of vision: Importance of perceptual and cognitive processing. In Assistive Technology for Blindness and Low Vision; CRC Press: Boca Ratón, FL, USA, 2012; pp. 162–191. [Google Scholar]

- Spence, C. The skin as a medium for sensory substitution. Multisens. Res. 2014, 27, 293–312. [Google Scholar] [CrossRef]

- Maidenbaum, S.; Abboud, S.; Amedi, A. Sensory substitution: Closing the gap between basic research and widespread practical visual rehabilitation. Neurosci. Biobehav. Rev. 2014, 41, 3–15. [Google Scholar] [CrossRef]

- Proulx, M.J.; Brown, D.J.; Pasqualotto, A.; Meijer, P. Multisensory perceptual learning and sensory substitution. Neurosci. Biobehav. Rev. 2014, 41, 16–25. [Google Scholar] [CrossRef]

- Giudice, N.A. Navigating without Vision: Principles of Blind Spatial Cognition; Handbook of Behavioral and Cognitive Geography; Edward Elgar Publishing: Cheltenham, UK; Northampton, MA, USA, 2018; pp. 260–288. [Google Scholar]

- Giudice, N.A.; Legge, G.E. Blind Navigation and the Role of Technology. In Engineering Handbook of Smart Technology for Aging, Disability, and Independence; John Wiley & Sons: Hoboken, NJ, USA, 2008; pp. 479–500. [Google Scholar]

- Wayfindr. Available online: https://www.wayfindr.net/ (accessed on 29 July 2019).

- Kobayashi, S.; Koshizuka, N.; Sakamura, K.; Bessho, M.; Kim, J.-E. Navigating Visually Impaired Travelers in a Large Train Station Using Smartphone and Bluetooth Low Energy. In Proceedings of the 31st Annual ACM Symposium on Applied Computing, Pisa, Italy, 4–8 April 2016; pp. 604–611. [Google Scholar]

- Cheraghi, S.A.; Namboodiri, V.; Walker, L. GuideBeacon: Beacon-based indoor wayfinding for the blind, visually impaired, and disoriented. In Proceedings of the IEEE International Conference on Pervasive Computing and Communications Workshops, Kona, HI, USA, 13–17 March 2017; pp. 121–130. [Google Scholar]

- NaviLens—Smartphone Application. Available online: https://www.navilens.com/ (accessed on 29 July 2019).

- NavCog. Available online: http://www.cs.cmu.edu/~NavCog/navcog.html (accessed on 29 July 2019).

- NGMN Alliance. NGMN Alliance 5G White Paper; Next Generation Mobile Networks White Paper; NGMN Alliance: Frankfurt, Germany, 2015; pp. 1–125. [Google Scholar]

- Giudice, N.A.; Klatzky, R.L.; Bennett, C.R.; Loomis, J.M. Perception of 3-D location based on vision, touch, and extended touch. Exp. Brain Res. 2013, 224, 141–153. [Google Scholar] [CrossRef] [PubMed][Green Version]

- Lin, B.S.; Lee, C.C.; Chiang, P.Y. Simple smartphone-based guiding system for visually impaired people. Sensors 2017, 17, 1371. [Google Scholar] [CrossRef] [PubMed]

- Martino, G.; Marks, L.E. Synesthesia: Strong and Weak. Curr. Dir. Psychol. Sci. 2001, 10, 61–65. [Google Scholar] [CrossRef]

- Delazio, A.; Israr, A.; Klatzky, R.L. Cross—Modal Correspondence between vibrations and colors. In Proceedings of the 2017 IEEE World Haptics Conference (WHC), Munich, Germany, 5–9 Jun 2017; pp. 219–224. [Google Scholar]

- Briscoe, R. Bodily action and distal attribution in sensory substitution. In Sensory Substitution and Augmentation; Macpherson, F., Ed.; Proceedings of the British Academy; Oxford University Press: London, UK, 2015; pp. 1–13. [Google Scholar]

- Golledge, R.G.; Marston, J.R.; Loomis, J.M.; Klatzky, R.L. Stated preferences for components of a personal guidance system for nonvisual navigation. J. Vis. Impair. Blind. 2004, 98, 135–147. [Google Scholar] [CrossRef]

- D’Alonzo, M.; Dosen, S.; Cipriani, C.; Farina, D. HyVE-hybrid vibro-electrotactile stimulation-is an efficient approach to multi-channel sensory feedback. IEEE Trans. Haptics 2014, 7, 181–190. [Google Scholar] [CrossRef] [PubMed]

- Yoshimoto, S.; Kuroda, Y.; Imura, M.; Oshiro, O. Material roughness modulation via electrotactile augmentation. IEEE Trans. Haptics 2015, 8, 199–208. [Google Scholar] [CrossRef] [PubMed]

- Kajimoto, H. Electrotactile display with real-time impedance feedback using pulse width modulation. IEEE Trans. Haptics 2012, 5, 184–188. [Google Scholar] [CrossRef]

- Kitamura, N.; Miki, N. Micro-needle-based electro tactile display to present various tactile sensation. In Proceedings of the 28th IEEE International Conference on Micro Electro Mechanical Systems (MEMS), Estoril, Portugal, 18–22 January 2018; 2015; pp. 649–650. [Google Scholar]

- Tezuka, M.; Kitamura, N.; Tanaka, K.; Miki, N. Presentation of Various Tactile Sensations Using Micro-Needle Electrotactile Display. PLoS ONE 2016, 11, e0148410. [Google Scholar] [CrossRef]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Real, S.; Araujo, A. Navigation Systems for the Blind and Visually Impaired: Past Work, Challenges, and Open Problems. Sensors 2019, 19, 3404. https://doi.org/10.3390/s19153404