The Effect of the Color Filter Array Layout Choice on State-of-the-Art Demosaicing

Abstract

1. Introduction

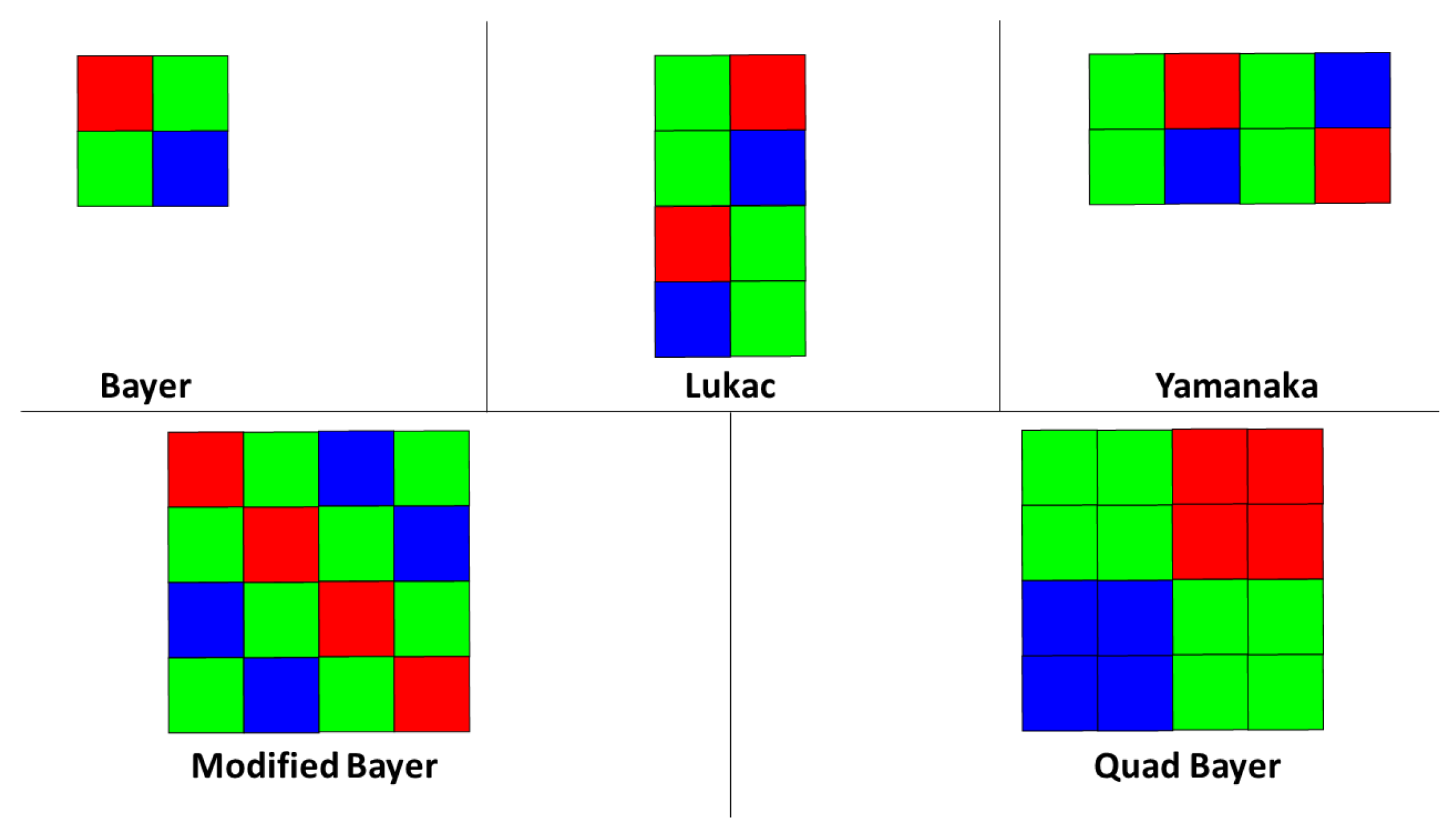

1.1. CFA Pattern Design

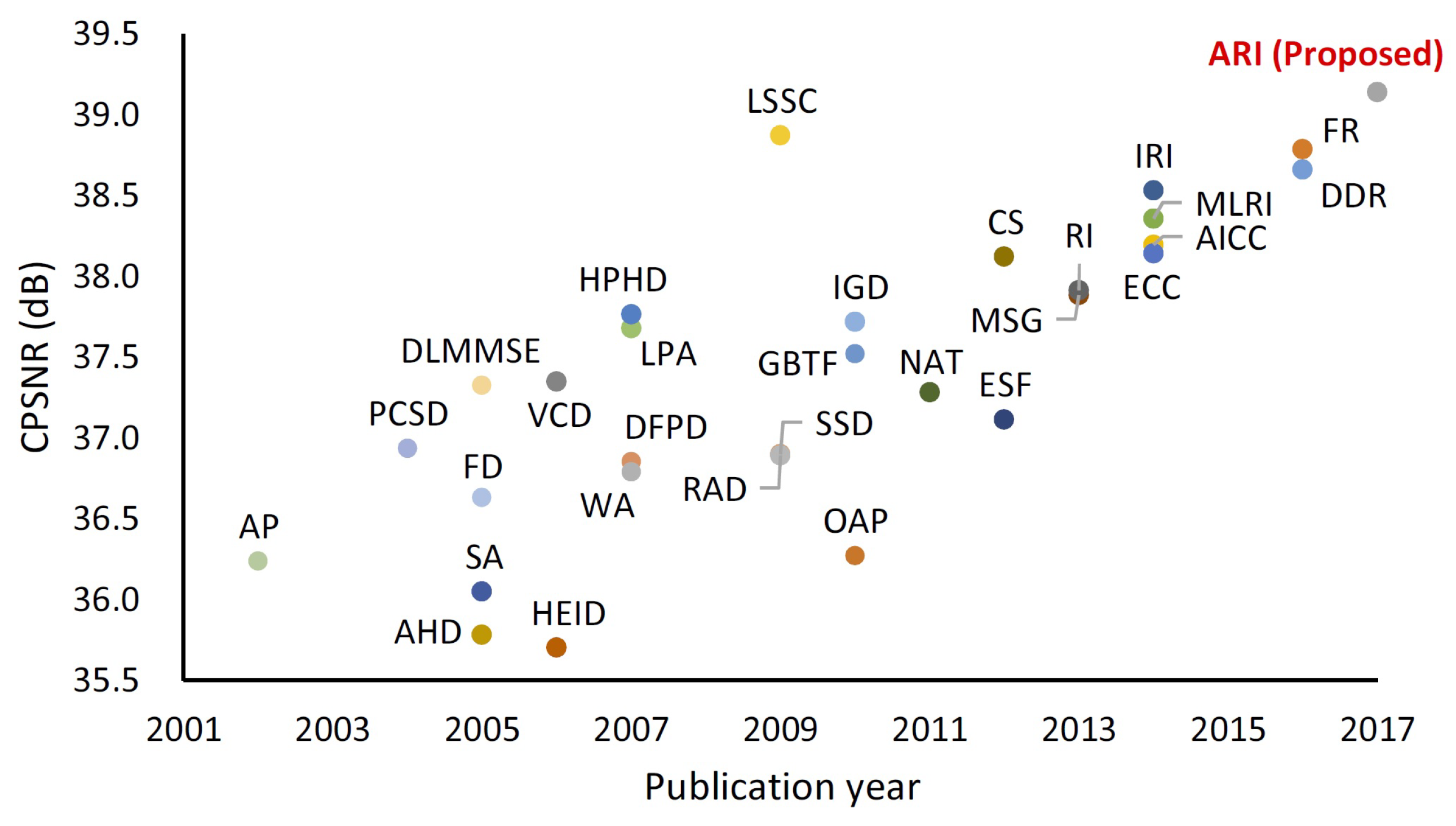

1.2. Demosaicing

1.3. CFA-Demosaicing Co-Design

1.4. Structure

2. State-of-the-Art Demosaicing

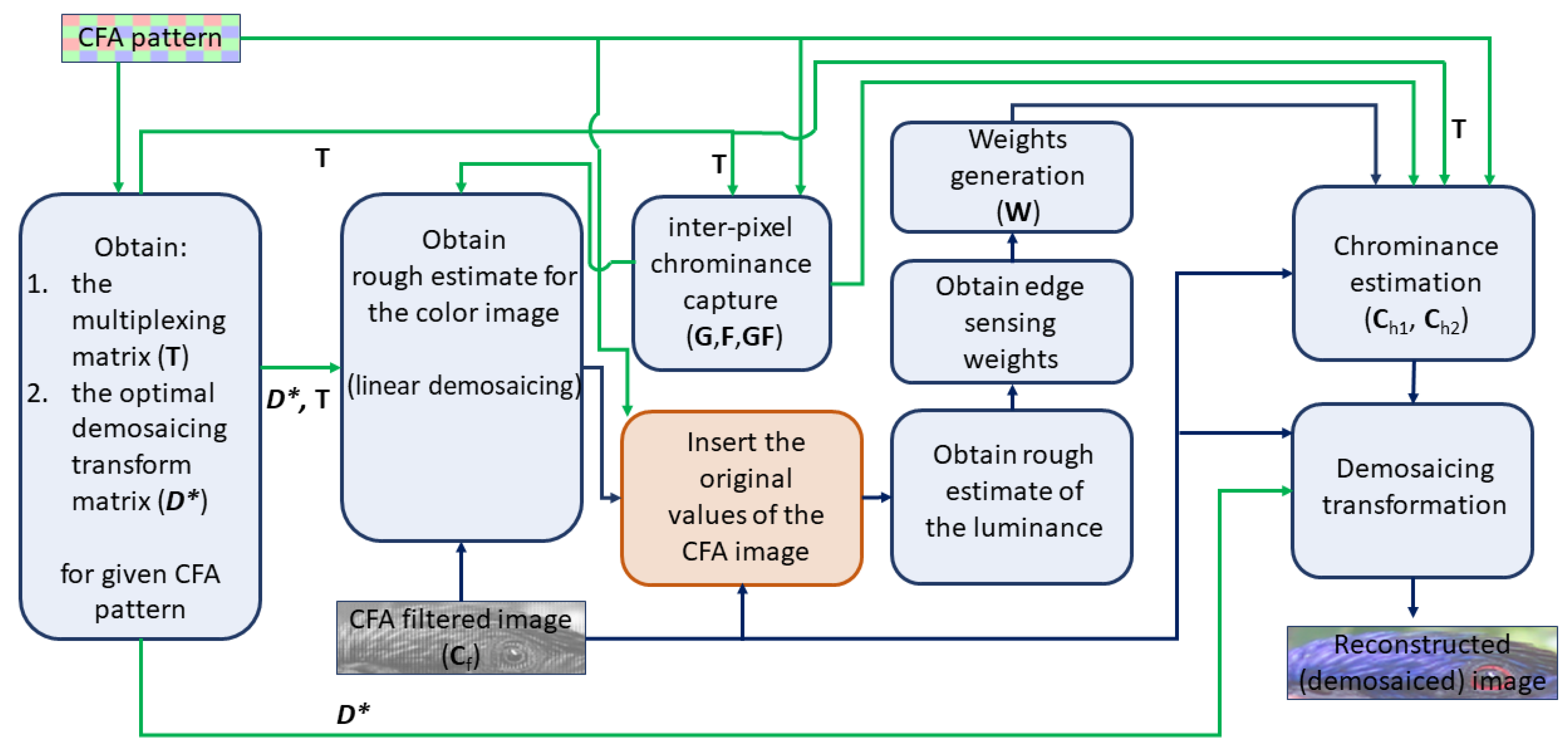

2.1. Universal Demosaicing of CFA (ACUDe)

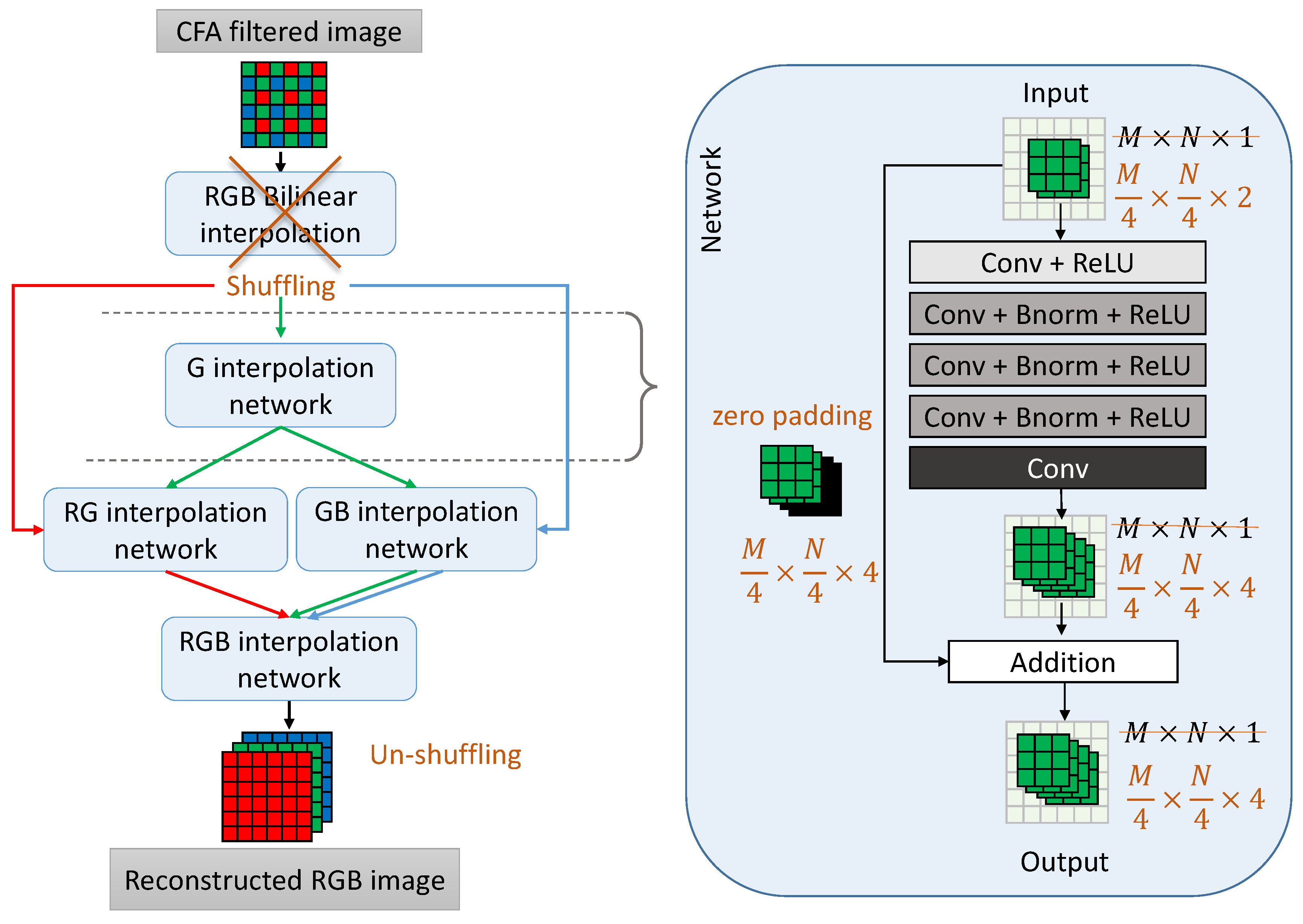

2.2. Demosaicing Using a CNN (CDMNet)

3. Modifying State-of-the-Art Demosaicing Algorithms

3.1. Modifying ACUDe

3.2. Making CDMNet Generic

- We do not rely on an initial estimate obtained by bilinear interpolation. No interpolation technique can guarantee equal reconstruction quality across different CFA patterns. Since our goal is to compare the influence of the patterns on the reconstruction quality, all other conditions must be equal when training the neural networks. Instead of an initial interpolation, the network operates directly on the original, sub-sampled color channels provided at the input. To compensate for the lower spatial resolution of the input and reconstruct a high-resolution output, we apply periodic shuffling, as explained in the next item.

- We integrated periodic shuffling into CDMNet. Periodic shuffling was proposed for image super-resolution [42] as a substitute for the deconvolution layer used for up-sampling. With this operation, the network works at a lower spatial resolution and interpolates the missing values in the feature channels. The number of feature channels grows with the sub-sampling factor (in [42], r² channels per output channel for a factor r). To obtain the final high-resolution output, the elements of the tensors of low spatial resolution and high dimensionality are re-arranged into a high-resolution RGB image.

- All patterns used in these experiments were assumed to have the same size. Smaller patterns (e.g., the Bayer pattern or the Lukac pattern) can be considered as replicated along the appropriate dimension to reach the largest size among the compared patterns. The down-sampling factor r in the periodic shuffling is determined by the size of the pattern, and in this case we fixed it to 4 in both the horizontal and vertical directions.
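The re-arrangement described in the items above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names `periodic_unshuffle` and `periodic_shuffle`, the channel-first layout, and the channel ordering (a common depth-to-space convention) are assumptions made for illustration. Using the inverse shuffle to split the mosaic into sub-sampled channels is likewise one plausible reading of "operates on the original, sub-sampled color channels", not a detail confirmed by the text.

```python
import numpy as np


def periodic_unshuffle(image: np.ndarray, r: int) -> np.ndarray:
    """Space-to-depth: (C, H*r, W*r) -> (C*r*r, H, W).

    Each output channel collects the pixels that share one position
    (i, j) inside every r x r tile (hypothetical helper).
    """
    c, height, width = image.shape
    if height % r or width % r:
        raise ValueError("height and width must be multiples of r")
    h, w = height // r, width // r
    blocks = image.reshape(c, h, r, w, r)      # axes: c, h, i, w, j
    blocks = blocks.transpose(0, 2, 4, 1, 3)   # axes: c, i, j, h, w
    return blocks.reshape(c * r * r, h, w)


def periodic_shuffle(features: np.ndarray, r: int) -> np.ndarray:
    """Depth-to-space: (C*r*r, H, W) -> (C, H*r, W*r).

    Inverse of periodic_unshuffle under the same assumed channel order.
    """
    channels, h, w = features.shape
    if channels % (r * r):
        raise ValueError("channel count must be a multiple of r*r")
    c = channels // (r * r)
    blocks = features.reshape(c, r, r, h, w)   # axes: c, i, j, h, w
    blocks = blocks.transpose(0, 3, 1, 4, 2)   # axes: c, h, i, w, j
    return blocks.reshape(c, h * r, w * r)


r = 4  # factor fixed by the 4 x 4 pattern period stated in the text

# A toy single-channel mosaic of 8 x 8 sensor samples.
mosaic = np.arange(64, dtype=np.float32).reshape(1, 8, 8)

# One low-resolution channel per position inside the r x r period.
packed = periodic_unshuffle(mosaic, r)

# Stand-in for the network output: 3 * r * r feature channels at low resolution.
features = np.random.default_rng(0).random((3 * r * r, 2, 2))

# Re-arranged into a full-resolution three-channel image.
rgb = periodic_shuffle(features, r)

print(packed.shape, rgb.shape)
```

With r = 4, an 8 × 8 mosaic becomes 16 channels of size 2 × 2, and 48 low-resolution feature channels are re-arranged into a 3 × 8 × 8 image. Because every pattern is treated as having the same period, the same layout applies regardless of which CFA is being evaluated.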

4. Experimental Analysis

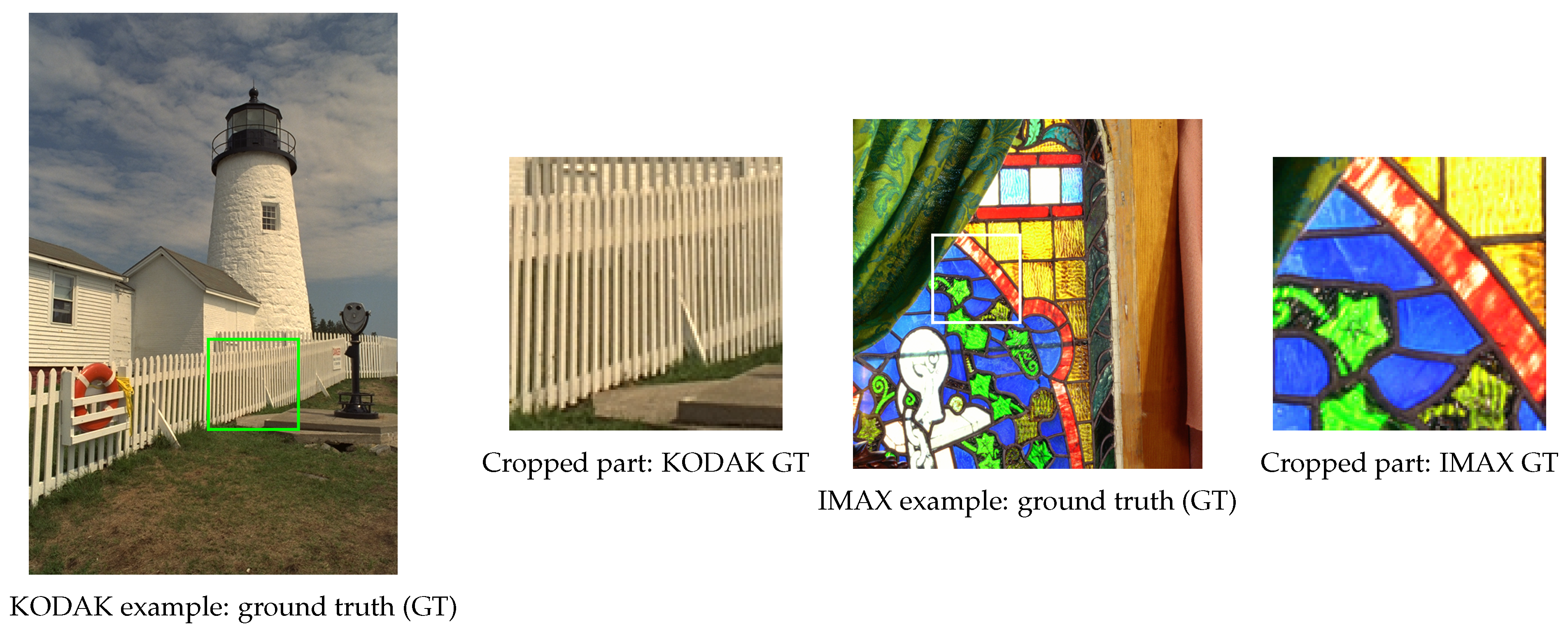

Experiments and Materials

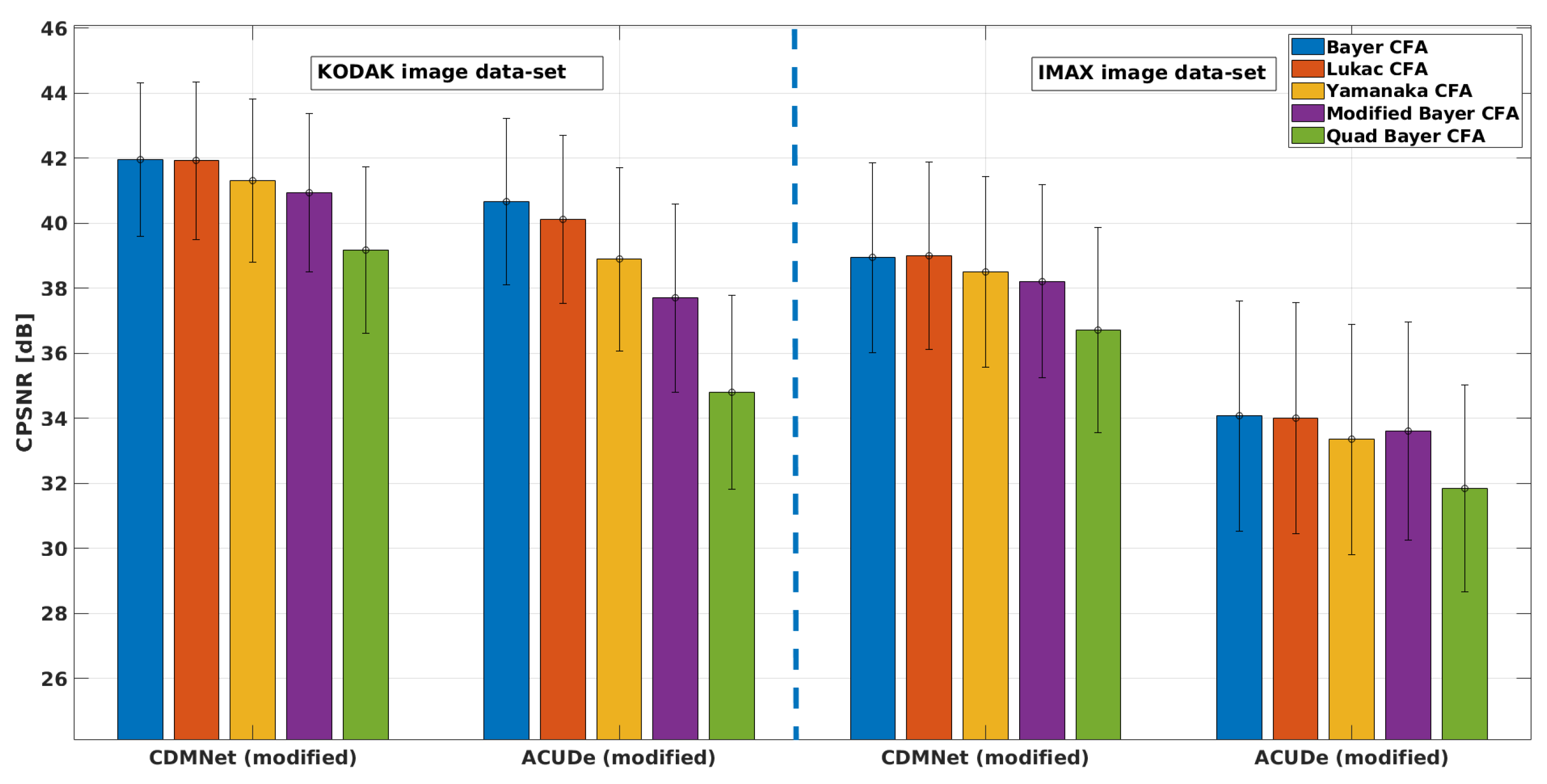

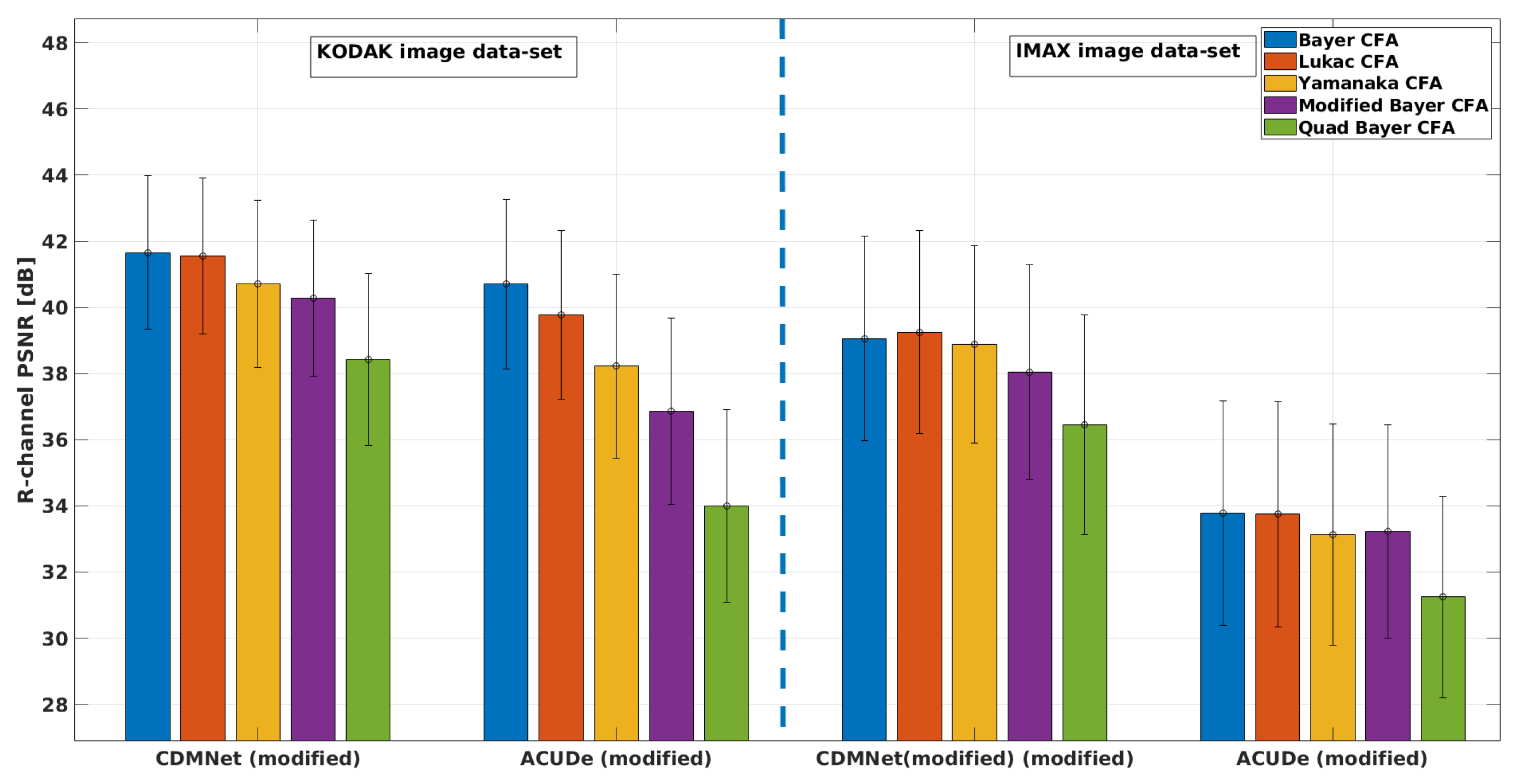

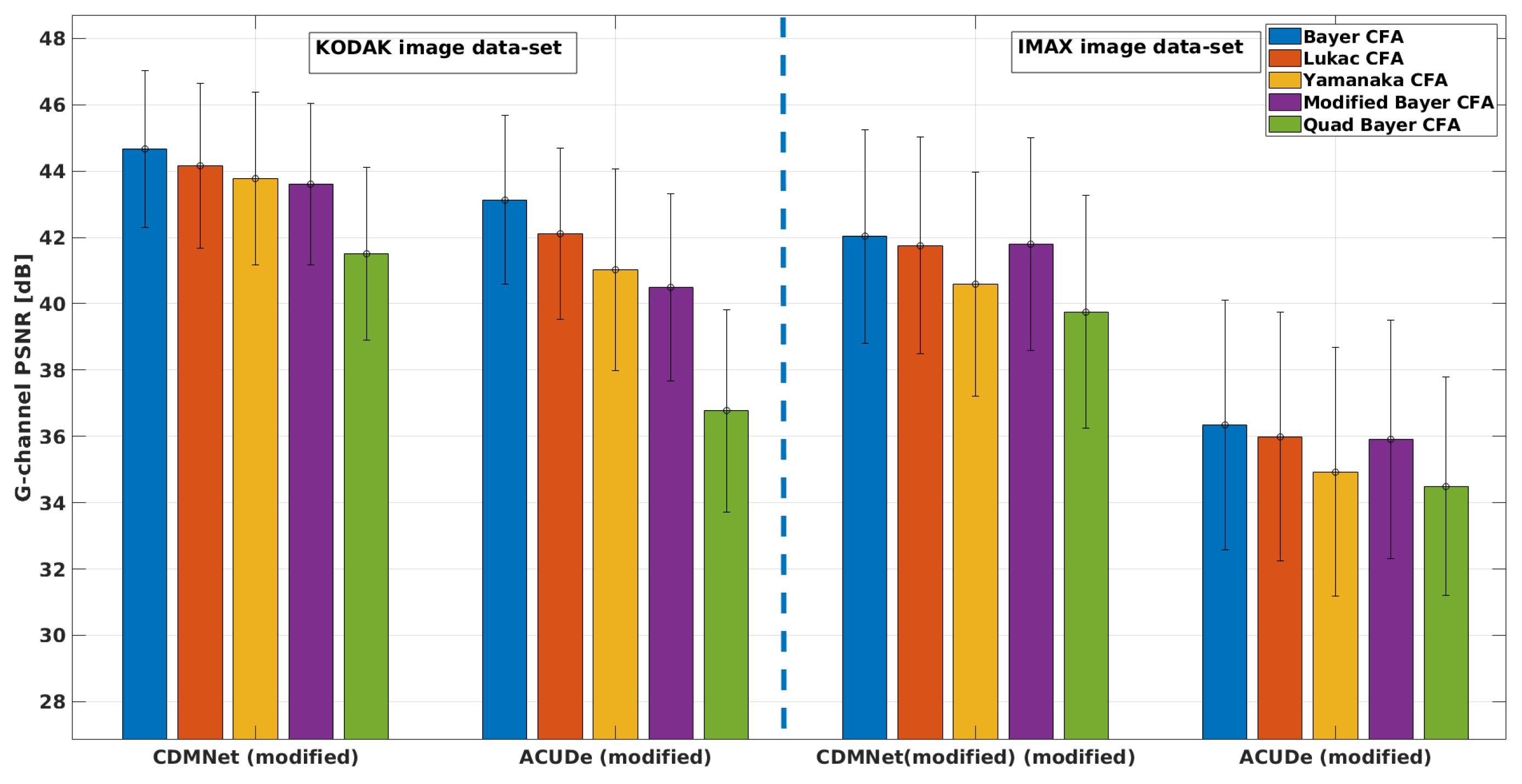

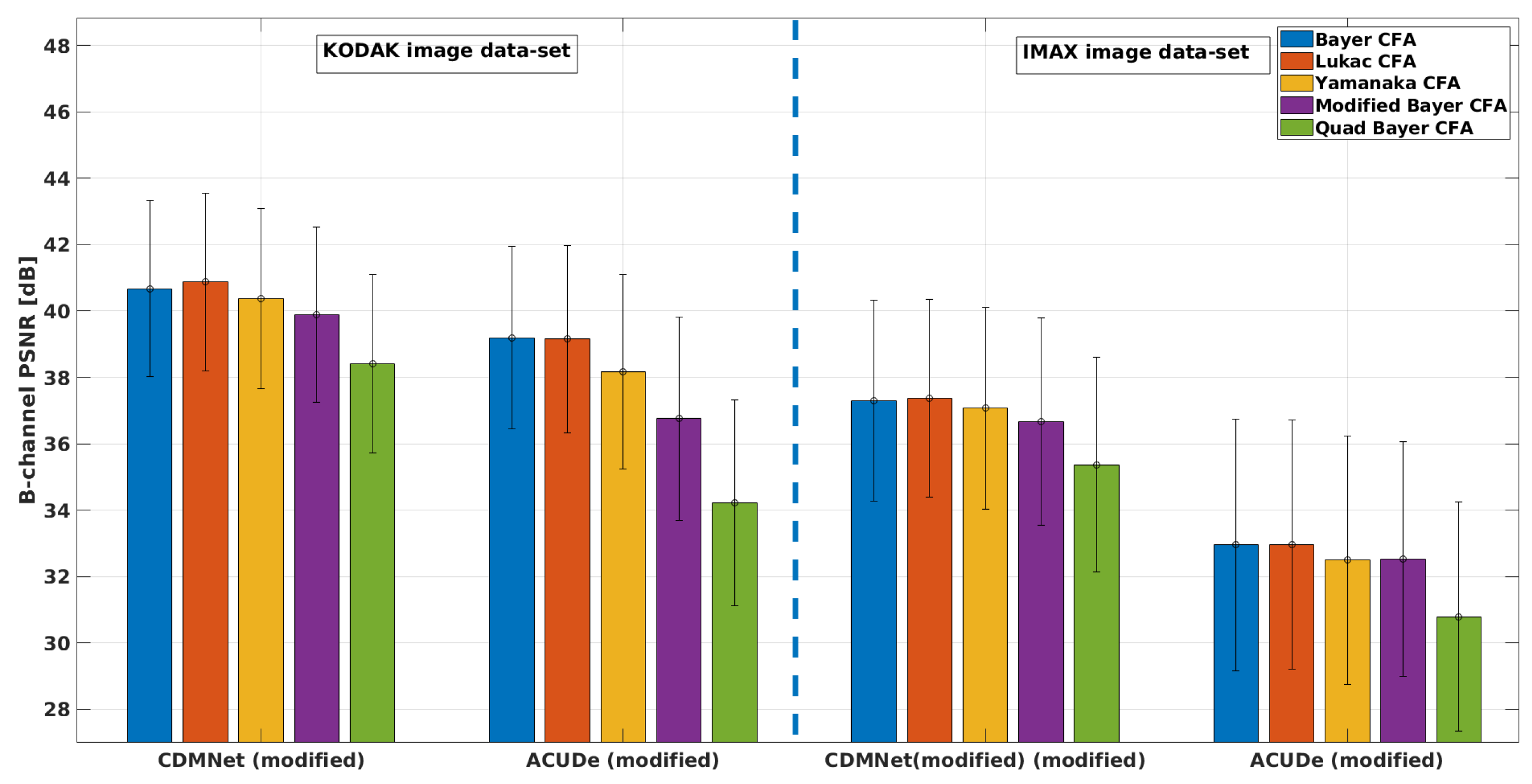

5. Results

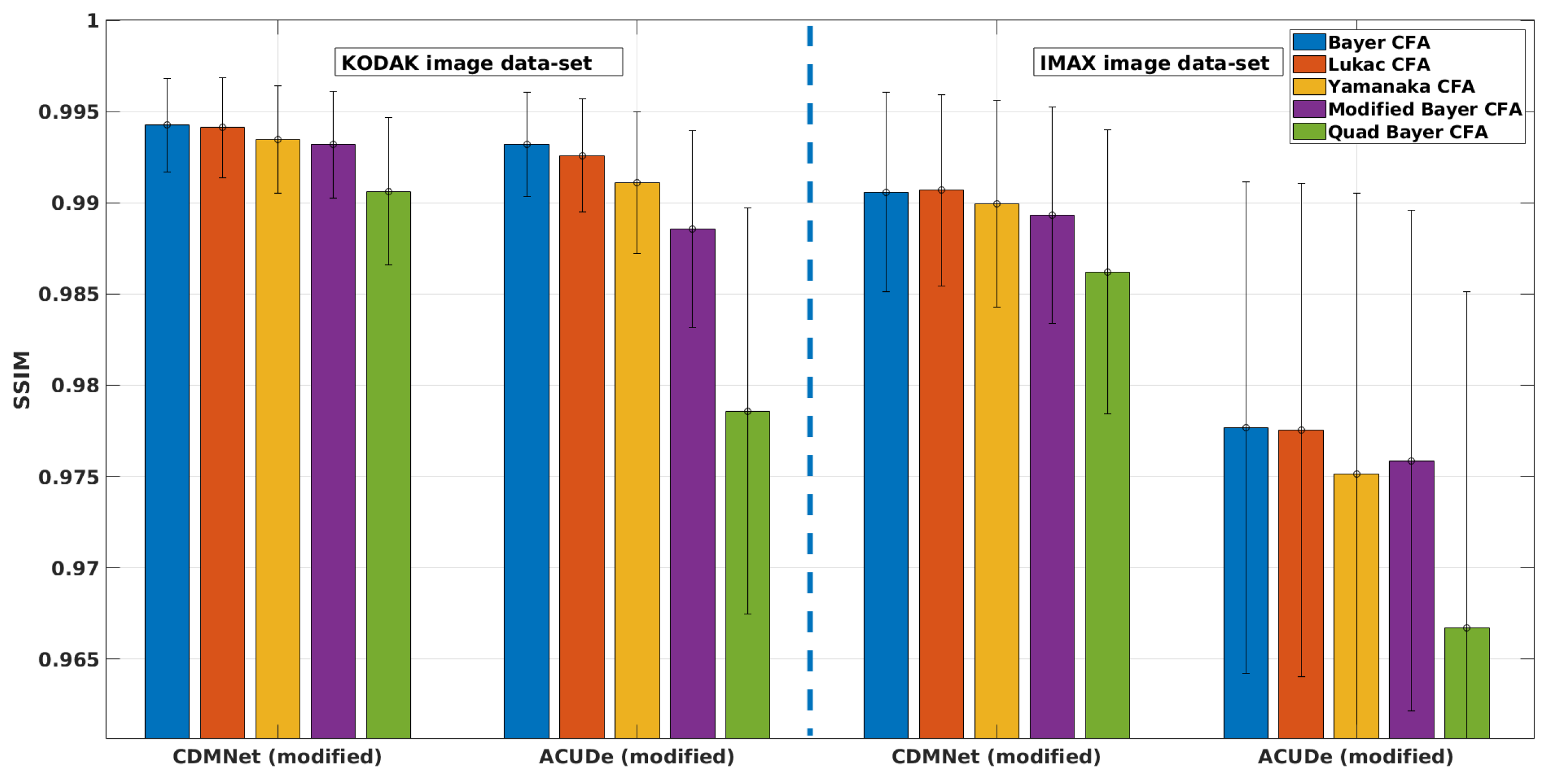

5.1. Results from the Quantitative Analysis

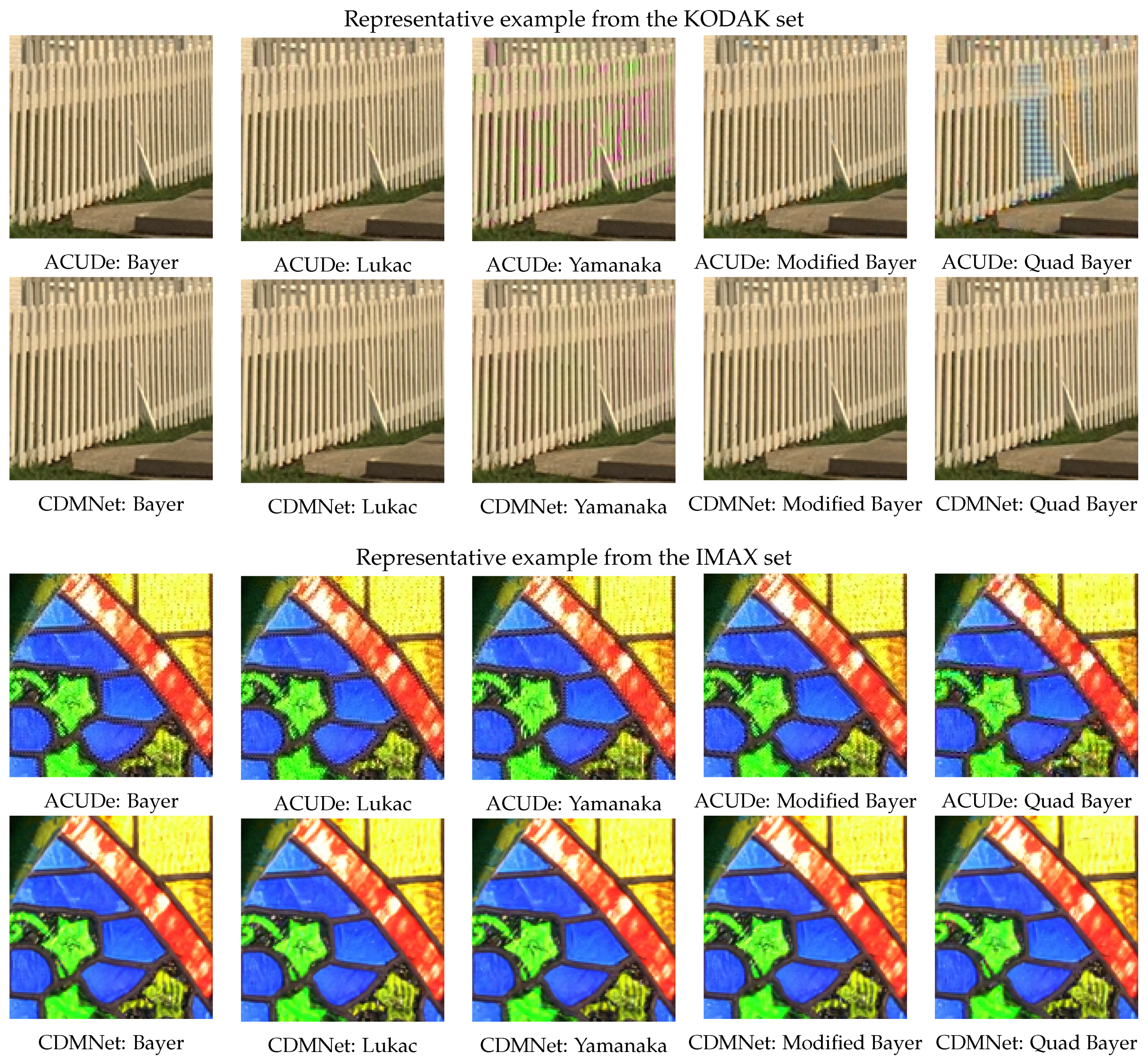

5.2. Results from the Qualitative Analysis

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Bayer, B.E. Color Imaging Array. U.S. Patent 3,971,065, 1976.

- Lukac, R.; Plataniotis, K.N. Color filter arrays: Design and performance analysis. IEEE Trans. Consum. Electron. 2005, 51, 1260–1267.

- Hirakawa, K.; Wolfe, P.J. Spatio-spectral color filter array design for optimal image recovery. IEEE Trans. Image Process. 2008, 17, 1876–1890.

- Yamanaka, S. Solid State Color Camera. U.S. Patent 4,054,906, 1977.

- Available online: https://www.sony.net/SonyInfo/News/Press/201807/18-060E/index.html (accessed on 20 July 2019).

- Available online: https://www.ubergizmo.com/articles/quad-bayer-camera-sensor/ (accessed on 20 July 2019).

- Realization of Natural Color Reproduction in Digital Still Cameras, Closer to the Natural Sight Perception of the Human Eye, Sony Corp. 2003. Available online: https://www.sony.net/SonyInfo/News/Press_Archive/200307/03-029E/ (accessed on 20 July 2019).

- Kijima, T.; Nakamura, H.; Compton, J.; Hamilton, J. Image Sensor with Improved Light Sensitivity. U.S. Patent 20,070,177,236, 2007.

- Lapray, P.J.; Wang, X.; Thomas, J.B.; Gouton, P. Multispectral filter arrays: Recent advances and practical implementation. Sensors 2014, 14, 21626–21659.

- Monno, Y.; Kikuchi, S.; Tanaka, M.; Okutomi, M. A practical one-shot multispectral imaging system using a single image sensor. IEEE Trans. Image Process. 2015, 24, 3048–3059.

- Gunturk, B.K.; Glotzbach, J.; Altunbasak, Y.; Schafer, R.W.; Mersereau, R.M. Demosaicking: Color filter array interpolation. IEEE Signal Process. Mag. 2005, 22, 44–54.

- Li, X.; Gunturk, B.; Zhang, L. Image demosaicing: A systematic survey. Proc. SPIE 2008, 6822, 68221J.

- Menon, D.; Calvagno, G. Color image demosaicking: An overview. Signal Process. Image Commun. 2011, 26, 518–533.

- Monno, Y.; Kiku, D.; Tanaka, M.; Okutomi, M. Adaptive residual interpolation for color and multispectral image demosaicking. Sensors 2017, 17, 2787.

- Alleysson, D.; Susstrunk, S.; Hérault, J. Linear demosaicing inspired by the human visual system. IEEE Trans. Image Process. 2005, 14, 439–449.

- Dubois, E. Frequency-domain methods for demosaicking of Bayer-sampled color images. IEEE Signal Process. Lett. 2005, 12, 847–850.

- Lu, Y.M.; Karzand, M.; Vetterli, M. Demosaicking by alternating projections: Theory and fast one-step implementation. IEEE Trans. Image Process. 2010, 19, 2085–2098.

- Menon, D.; Calvagno, G. Demosaicing based on wavelet analysis of the luminance component. In Proceedings of the 2007 IEEE International Conference on Image Processing, San Antonio, TX, USA, 16–19 September 2007; Volume 2, pp. II-181–II-184.

- Aelterman, J.; Goossens, B.; De Vylder, J.; Pižurica, A.; Philips, W. Computationally efficient locally adaptive demosaicing of color filter array images using the dual-tree complex wavelet packet transform. PLoS ONE 2013, 8, e61846.

- Condat, L. A generic variational approach for demosaicking from an arbitrary color filter array. In Proceedings of the 2009 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 1605–1608.

- Menon, D.; Calvagno, G. Regularization approaches to demosaicking. IEEE Trans. Image Process. 2009, 18, 2209–2220.

- Mairal, J.; Bach, F.R.; Ponce, J.; Sapiro, G.; Zisserman, A. Non-local sparse models for image restoration. ICCV. Citeseer 2009, 29, 54–62.

- Wu, J.; Timofte, R.; Van Gool, L. Demosaicing based on directional difference regression and efficient regression priors. IEEE Trans. Image Process. 2016, 25, 3862–3874.

- Amba, P.; Thomas, J.B.; Alleysson, D. N-LMMSE demosaicing for spectral filter arrays. J. Imaging Sci. Technol. 2017, 61, 40407:1–40407:11.

- Cui, K.; Jin, Z.; Steinbach, E. Color Image Demosaicking Using a 3-Stage Convolutional Neural Network Structure. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 2177–2181.

- Condat, L. A new random color filter array with good spectral properties. In Proceedings of the 2009 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 1613–1616.

- Lukac, R.; Plataniotis, K.N. Universal demosaicking for imaging pipelines with an RGB color filter array. Pattern Recognit. 2005, 38, 2208–2212.

- Zhang, C.; Li, Y.; Wang, J.; Hao, P. Universal demosaicking of color filter arrays. IEEE Trans. Image Process. 2016, 25, 5173–5186.

- Amba, P.; Dias, J.; Alleysson, D. Random Color Filter Arrays are Better than Regular Ones. J. Imaging Sci. Technol. 2016, 60, 50406:1–50406:6.

- Amba, P.; Alleysson, D.; Mermillod, M. Demosaicing using Dual Layer Feedforward Neural Network. In Color and Imaging Conference; No.1; Society for Imaging Science and Technology: Springfield, VA, USA; Volume 2018, pp. 211–218.

- Gharbi, M.; Chaurasia, G.; Paris, S.; Durand, F. Deep joint demosaicking and denoising. ACM Trans. Graph. (TOG) 2016, 35, 191.

- Tan, R.; Zhang, K.; Zuo, W.; Zhang, L. Color image demosaicking via deep residual learning. In Proceedings of the 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017; pp. 793–798.

- Kokkinos, F.; Lefkimmiatis, S. Deep image demosaicking using a cascade of convolutional residual denoising networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 303–319.

- Kokkinos, F.; Lefkimmiatis, S. Iterative Residual Network for Deep Joint Image Demosaicking and Denoising. arXiv 2018, arXiv:1807.06403.

- Syu, N.S.; Chen, Y.S.; Chuang, Y.Y. Learning deep convolutional networks for demosaicing. arXiv Preprint 2018, arXiv:1802.03769.

- Tan, D.S.; Chen, W.Y.; Hua, K.L. DeepDemosaicking: Adaptive image demosaicking via multiple deep fully convolutional networks. IEEE Trans. Image Process. 2018, 27, 2408–2419.

- Chakrabarti, A. Learning sensor multiplexing design through back-propagation. Adv. Neural Inf. Process. Syst. 2016, 3081–3089.

- Henz, B.; Gastal, E.S.; Oliveira, M.M. Deep joint design of color filter arrays and demosaicing. Comput. Graph. Forum 2018, 37, 389–399.

- Available online: http://r0k.us/graphics/kodak/ (accessed on 20 July 2019).

- Available online: https://www4.comp.polyu.edu.hk/~cslzhang/DATA/McM.zip (accessed on 20 July 2019).

- Available online: http://www.eecs.qmul.ac.uk/~phao/CFA/acude/ (accessed on 20 July 2019).

- Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883.

- Ma, K.; Duanmu, Z.; Wu, Q.; Wang, Z.; Yong, H.; Li, H.; Zhang, L. Waterloo exploration database: New challenges for image quality assessment models. IEEE Trans. Image Process. 2016, 26, 1004–1016.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Stojkovic, A.; Shopovska, I.; Luong, H.; Aelterman, J.; Jovanov, L.; Philips, W. The Effect of the Color Filter Array Layout Choice on State-of-the-Art Demosaicing. Sensors 2019, 19, 3215. https://doi.org/10.3390/s19143215
