Transfer Learning Assisted Classification and Detection of Alzheimer’s Disease Stages Using 3D MRI Scans

Abstract

1. Introduction

- We propose and evaluate a transfer-learning-based method to classify Alzheimer’s disease.

- We propose an algorithm for the multi-class classification problem of identifying the stages of Alzheimer’s disease.

- We evaluate the effect of different gray-level 3D MRI views on identifying the stages of Alzheimer’s disease.

2. Related Work

2.1. Binary Classification Techniques

2.2. Multi-Class Classification Techniques

2.3. Deep-Learning-Based Alzheimer’s Detection

3. Proposed Methodology

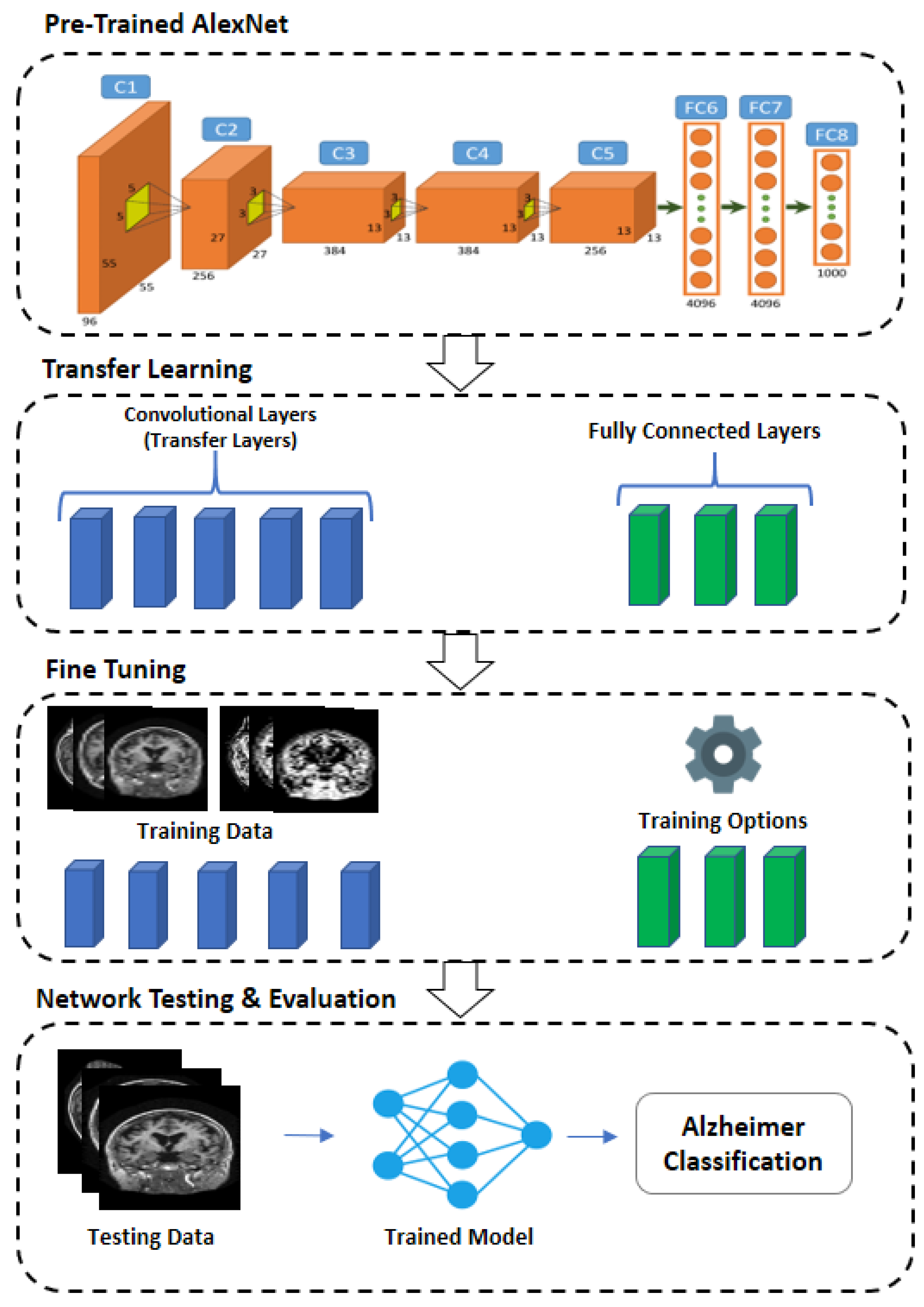

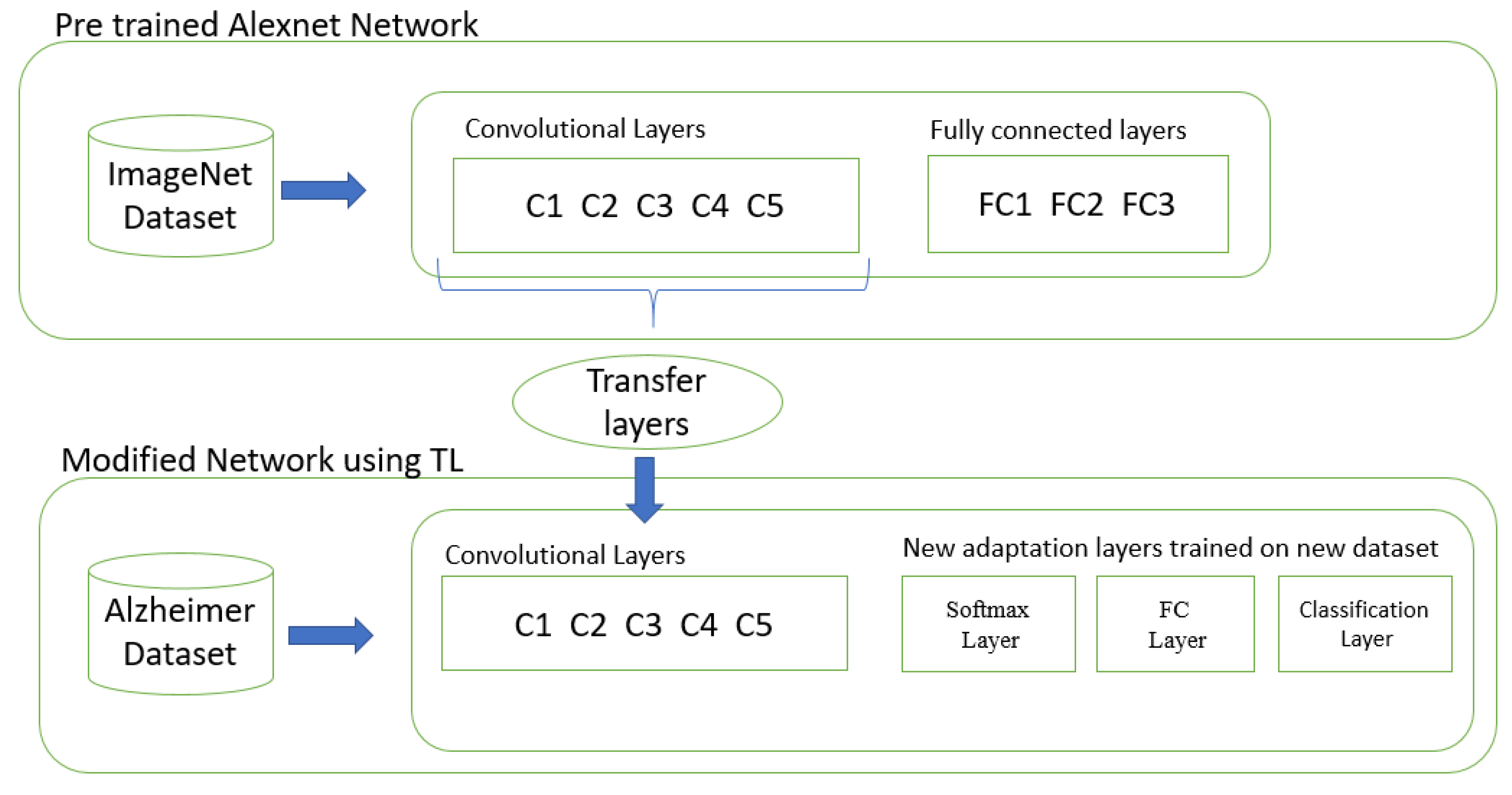

3.1. Pre-trained CNN Architecture: AlexNet

3.2. Transfer Learning Parameters

Modified Network Architecture

3.3. Pre-Processing of Target Dataset

3.4. Training Network and Fine Tuning

3.5. Network Testing—Classification of Alzheimer’s

4. Experimental Setup and Results

4.1. Tools and Software

4.2. Dataset

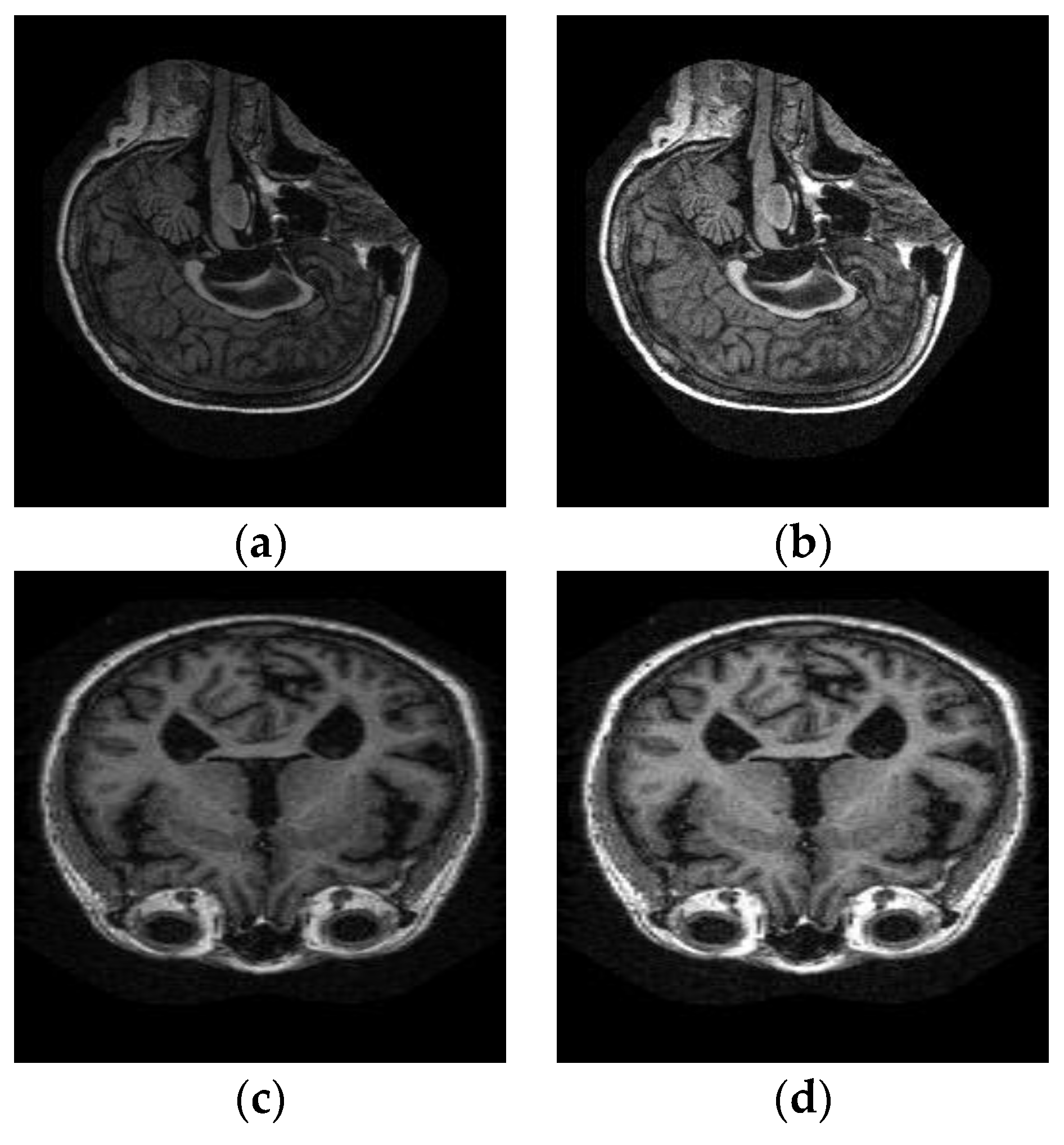

4.3. Image Pre-Processing

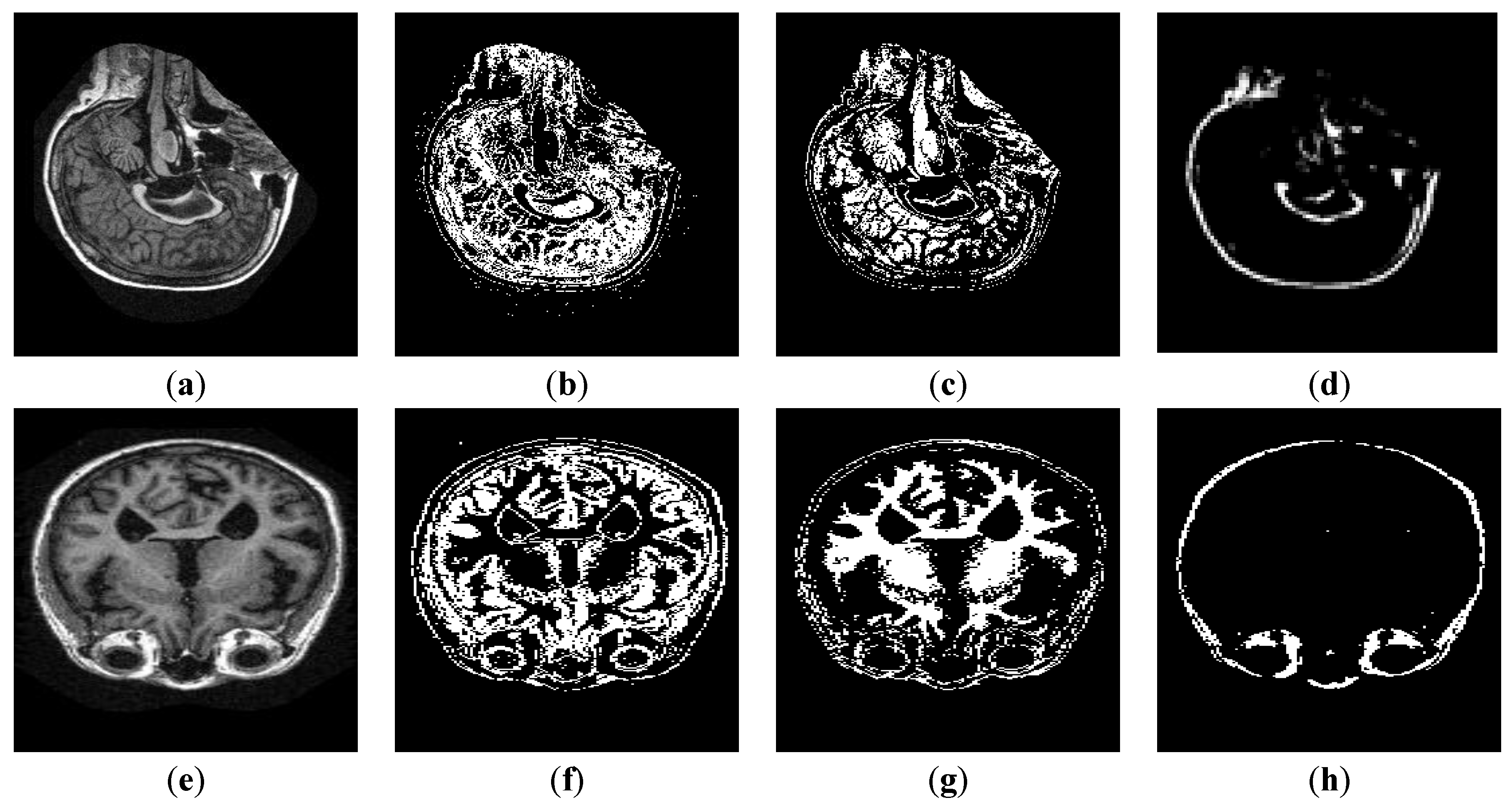

4.4. Image Segmentation

4.5. Evaluation Metrics
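
The metrics named in the subsections that follow have standard confusion-matrix definitions. As a minimal sketch, assuming a binary setting and made-up counts (the `ConfusionCounts` container and every number in it are hypothetical, not taken from the paper), they could be computed as follows. EER is left out because, in its usual definition, it is found by sweeping a decision threshold over classifier scores rather than from a single confusion matrix.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Binary confusion-matrix counts (illustrative container, not from the paper)."""
    tp: int  # true positives
    fp: int  # false positives
    tn: int  # true negatives
    fn: int  # false negatives


def sensitivity(c: ConfusionCounts) -> float:
    """Sensitivity (recall): share of actual positives that are detected."""
    return c.tp / (c.tp + c.fn)


def specificity(c: ConfusionCounts) -> float:
    """Specificity: share of actual negatives that are correctly rejected."""
    return c.tn / (c.tn + c.fp)


def precision(c: ConfusionCounts) -> float:
    """Precision: share of positive predictions that are correct."""
    return c.tp / (c.tp + c.fp)


def false_positive_rate(c: ConfusionCounts) -> float:
    """FPR: share of actual negatives that are wrongly flagged as positive."""
    return c.fp / (c.fp + c.tn)


# Made-up counts, used only to exercise the functions.
counts = ConfusionCounts(tp=40, fp=8, tn=42, fn=10)

print(f"sensitivity = {sensitivity(counts):.3f}")
print(f"specificity = {specificity(counts):.3f}")
print(f"precision   = {precision(counts):.3f}")
print(f"FPR         = {false_positive_rate(counts):.3f}")
```

By construction, FPR and specificity share the same denominator and always sum to 1, so reporting both is redundant but makes tables easier to scan.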

4.5.1. Sensitivity–Recall

4.5.2. Specificity

4.5.3. Precision

4.5.4. False Positive Rate (FPR)

4.5.5. Equal Error Rate (EER)

4.6. Results and Discussion

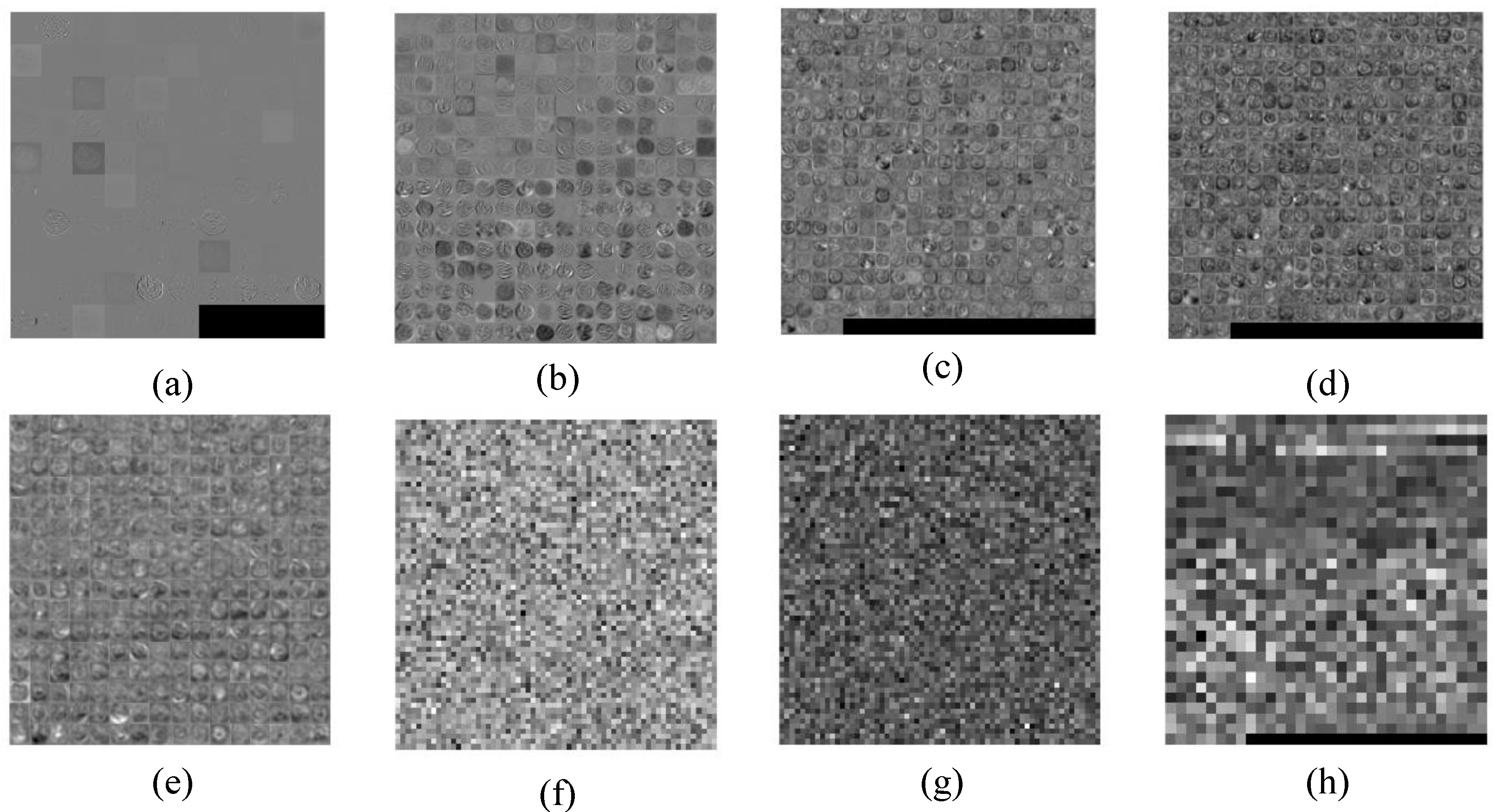

4.6.1. Pre-Processing Results

4.6.2. Segmentation Results

4.6.3. Layer-Wise Results of AlexNet

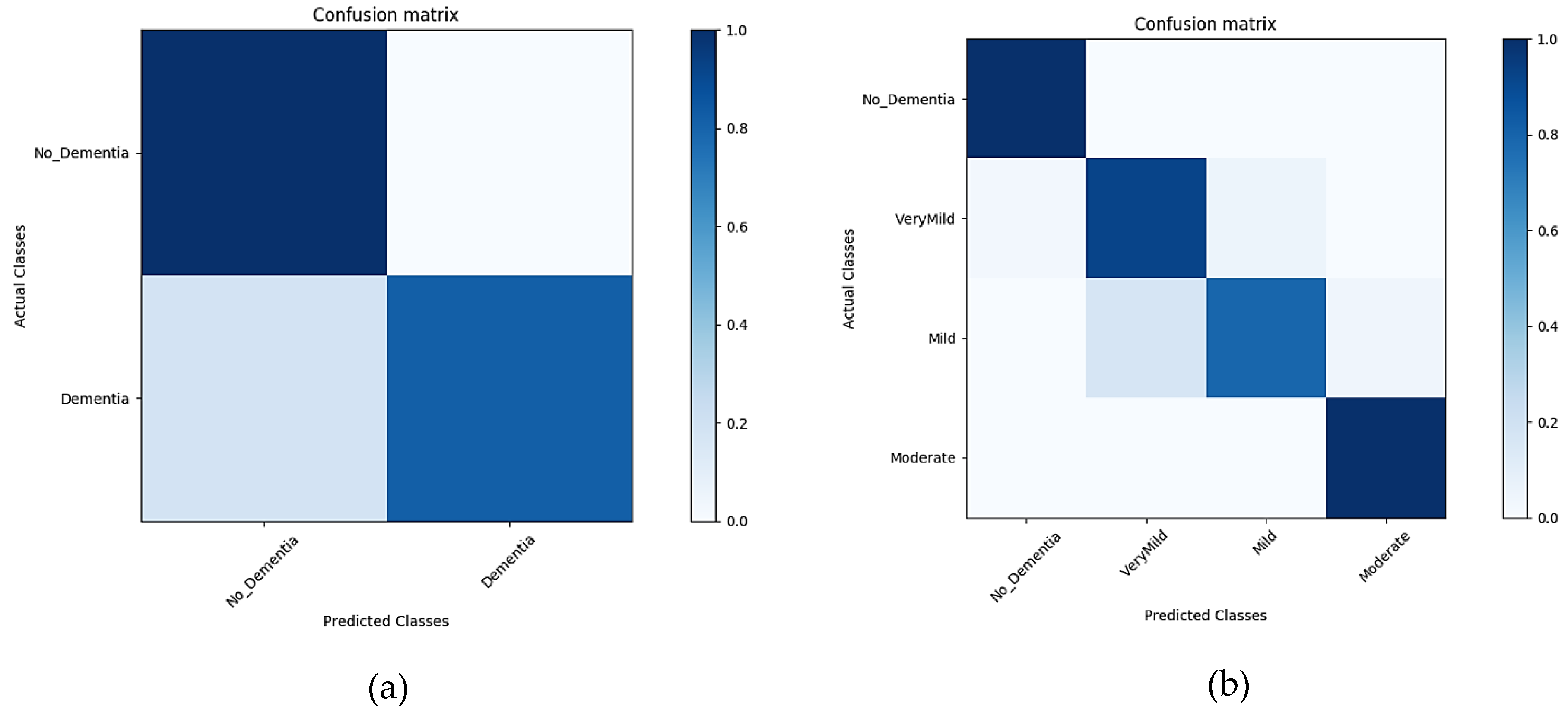

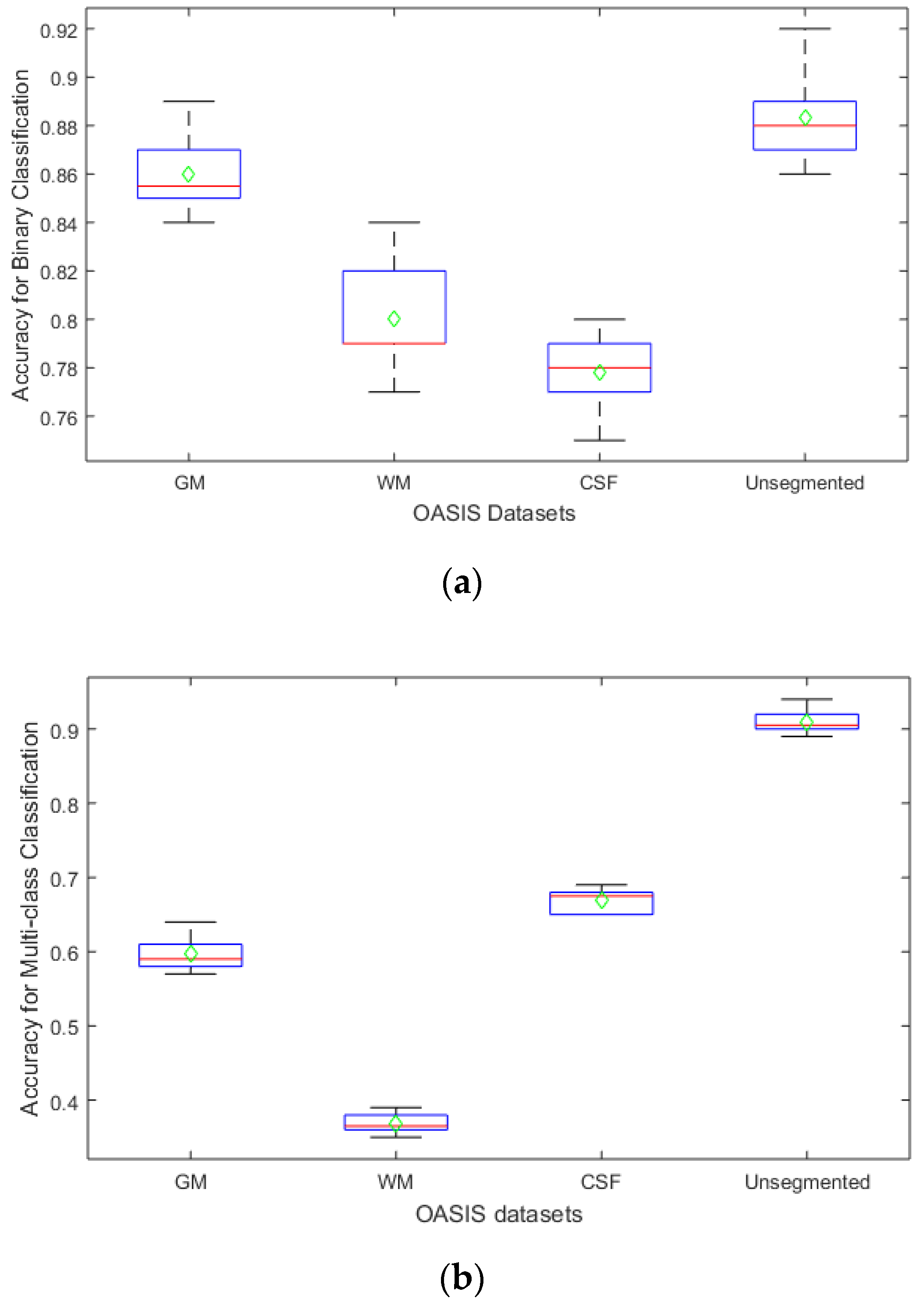

4.6.4. Classification Results

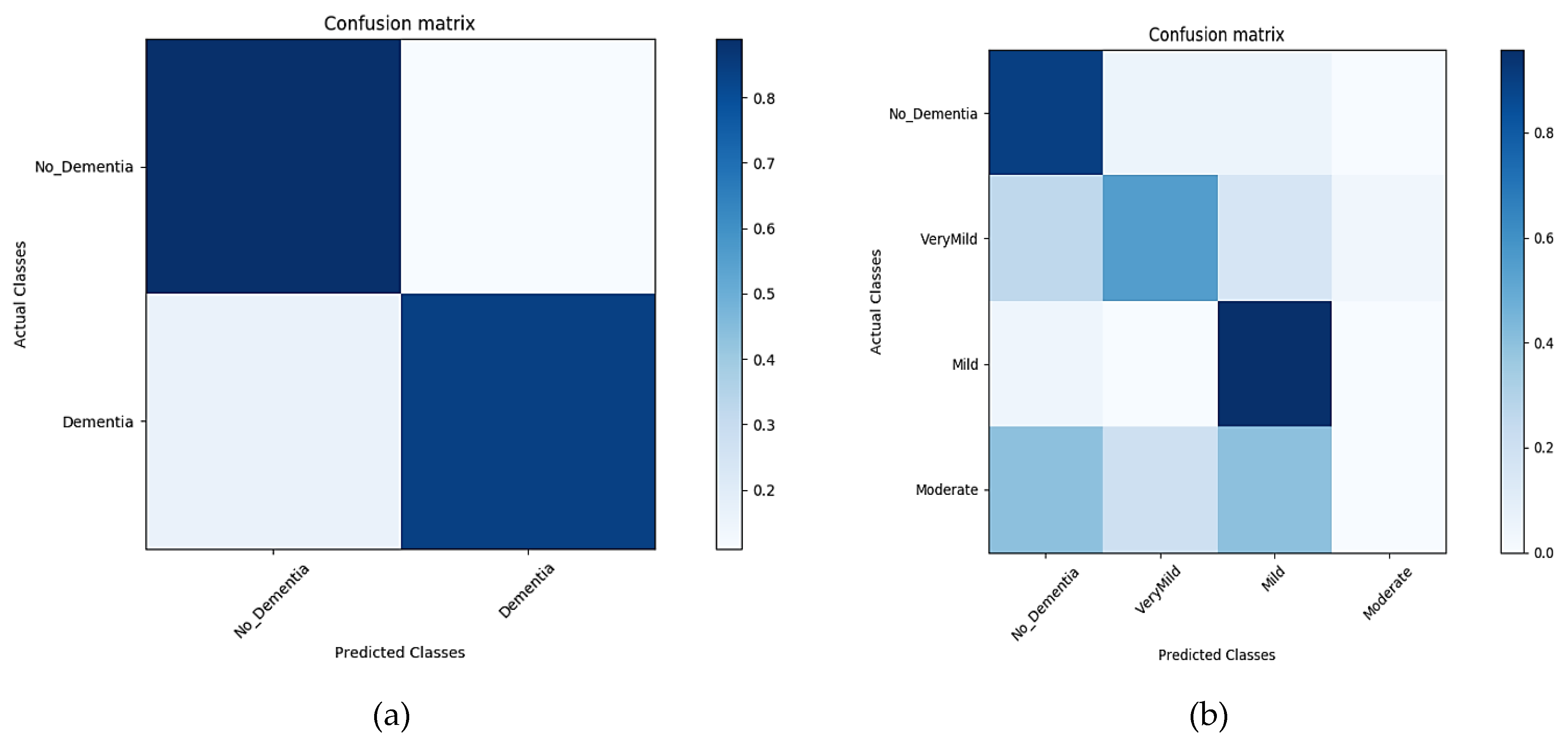

Classification Results for GM—Segment 1

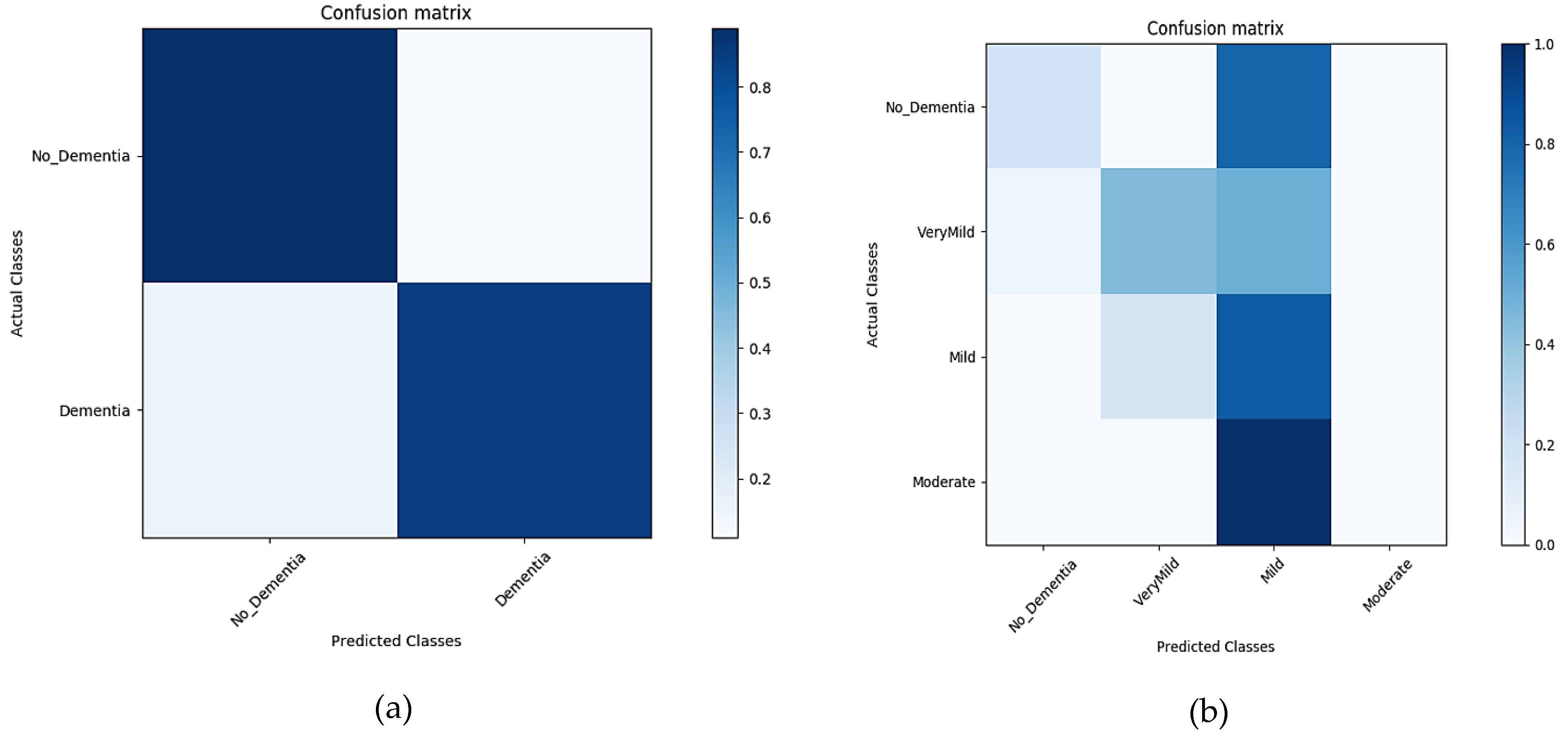

Classification Results for WM—Segment 2

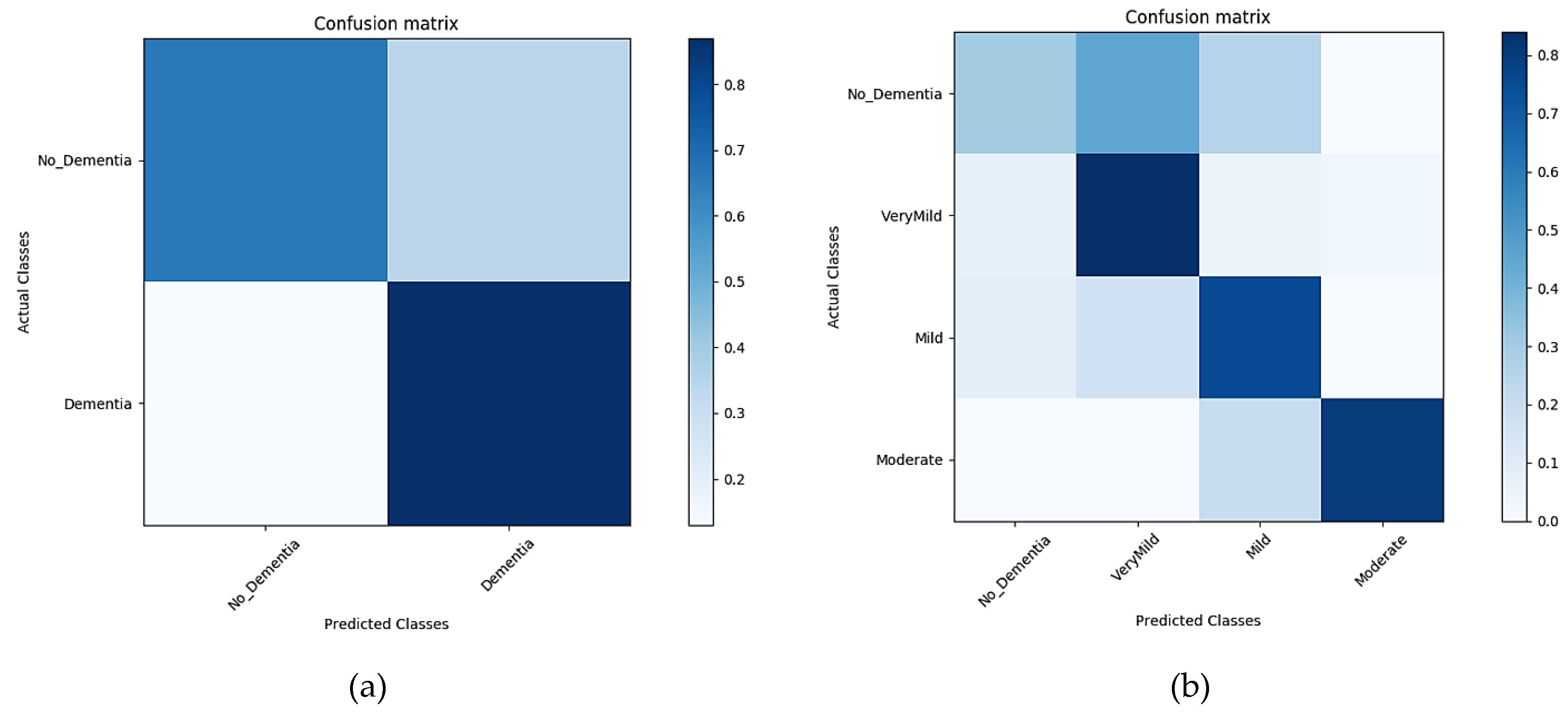

Classification Results for CSF—Segment 3

Classification Results for Un-Segmented MRIs

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Clinical Dementia Rating (CDR) | Corresponding Mental State | No. of Image Samples |
|---|---|---|
| 0 | No Dementia | 167 |
| 0.5 | Very Mild Dementia | 87 |
| 1 | Mild Dementia | 105 |
| 2 | Moderate Dementia | 23 |
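
The sample counts above are uneven across CDR levels, which matters when reading accuracy figures. As a rough sketch that uses only the counts in the table (the variable names are illustrative, and the idea that an evaluation split would follow the same class distribution is an assumption, not something stated here), the class shares and a majority-class baseline can be computed like this:

```python
# Image samples per CDR level, copied from the table above.
cdr_counts = {
    "No Dementia (CDR 0)": 167,
    "Very Mild Dementia (CDR 0.5)": 87,
    "Mild Dementia (CDR 1)": 105,
    "Moderate Dementia (CDR 2)": 23,
}

total = sum(cdr_counts.values())

# Fraction of the dataset that falls into each class.
shares = {label: n / total for label, n in cdr_counts.items()}

# Accuracy of a trivial classifier that always predicts the largest class,
# assuming the evaluation data follows the same distribution (an assumption).
majority_label = max(cdr_counts, key=cdr_counts.get)
majority_baseline = shares[majority_label]

# Ratio between the largest and the smallest class.
imbalance_ratio = max(cdr_counts.values()) / min(cdr_counts.values())

for label, share in shares.items():
    print(f"{label}: {share:.1%}")
print(f"total samples: {total}")
print(f"majority-class baseline: {majority_baseline:.1%} ({majority_label})")
print(f"largest/smallest class ratio: {imbalance_ratio:.2f}")
```

Under that assumption, always predicting the largest class would score roughly 44% accuracy, and the largest class has about seven times as many samples as the smallest.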

| Dataset | Classification | 6 Epochs | 10 Epochs | 15 Epochs | 20 Epochs | 25 Epochs |
|---|---|---|---|---|---|---|
| OASIS (Un-segmented) | Binary | 0.84 | 0.89 | 0.89 | 0.83 | 0.85 |
| OASIS (Un-segmented) | Multi-class | 0.86 | 0.92 | 0.91 | 0.87 | 0.87 |

| Dataset | Classification | Sensitivity | Specificity | Precision | EER | FPR | F1 Score | Accuracy | Learning Time |
|---|---|---|---|---|---|---|---|---|---|
| OASIS (GM) | Binary | 0.89 | 0.84 | 0.84 | 0.13 | 0.16 | - | 0.8621 | 93 min 6 s |
| OASIS (GM) | Multi-class | 0.6028 | 0.4920 | 0.4920 | 0.40 | 0.51 | 0.5418 | 0.6028 | 76 min 14 s |
| OASIS (WM) | Binary | 0.66 | 0.92 | 0.89 | 0.2 | 0.08 | - | 0.8046 | 120 min 4 s |
| OASIS (WM) | Multi-class | 0.3701 | 0.3525 | 0.3525 | 0.63 | 0.65 | 0.3611 | 0.3701 | 113 min 23 s |
| OASIS (CSF) | Binary | 0.66 | 0.88 | 0.85 | 0.22 | 0.12 | - | 0.7816 | 118 min 13 s |
| OASIS (CSF) | Multi-class | 0.6733 | 0.6263 | 0.6263 | 0.33 | 0.38 | 0.6490 | 0.6733 | 110 min 24 s |
| OASIS (Un-segmented) | Binary | 1 | 0.82 | 0.847 | 0.1 | 0.18 | - | 0.8966 | 125 min 20 s |
| OASIS (Un-segmented) | Multi-class | 0.9285 | 0.7427 | 0.7427 | 0.07 | 0.26 | 0.8253 | 0.9285 | 117 min 15 s |
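
Several columns in the table above are related by standard identities, which allows a quick consistency check. The sketch below assumes the usual definitions: F1 as the harmonic mean of precision and sensitivity, and FPR as 1 minus specificity. The formulas the paper itself uses are not shown in this section, so both are assumptions. The values are transcribed from the four multi-class rows (the second row of each dataset), with percentages written as fractions; the helper names are illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (sensitivity)."""
    return 2 * precision * recall / (precision + recall)


# (sensitivity, specificity, precision, reported FPR, reported F1),
# transcribed from the multi-class rows of the table above.
multi_class_rows = {
    "GM": (0.6028, 0.4920, 0.4920, 0.51, 0.5418),
    "WM": (0.3701, 0.3525, 0.3525, 0.65, 0.3611),
    "CSF": (0.6733, 0.6263, 0.6263, 0.38, 0.6490),
    "Un-segmented": (0.9285, 0.7427, 0.7427, 0.26, 0.8253),
}


def check_row(sens, spec, prec, reported_fpr, reported_f1, tol=0.01):
    """Compare reported FPR and F1 against values recomputed from other columns."""
    return {
        "f1_matches": abs(f1_score(prec, sens) - reported_f1) <= tol,
        "fpr_matches": abs((1 - spec) - reported_fpr) <= tol,
    }


results = {name: check_row(*row) for name, row in multi_class_rows.items()}

for name, outcome in results.items():
    print(name, outcome)
```

With a tolerance of 0.01, all four rows satisfy both identities, which suggests the reported F1 and FPR values were derived from the sensitivity, precision and specificity columns in the conventional way.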

| No. | Algorithm | Classifier | Accuracy (%) |
|---|---|---|---|
| 1 | Proposed Algorithm | CNN | 92.8 |
| 2 | MRI + clinical data | SVM | 79.8 |
| 3 | MRI + hybrid feature | LDA | 62.7 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Maqsood, M.; Nazir, F.; Khan, U.; Aadil, F.; Jamal, H.; Mehmood, I.; Song, O.-y. Transfer Learning Assisted Classification and Detection of Alzheimer’s Disease Stages Using 3D MRI Scans. Sensors 2019, 19, 2645. https://doi.org/10.3390/s19112645
