Cerebral Small Vessel Disease Biomarkers Detection on MRI-Sensor-Based Image and Deep Learning

Abstract

1. Introduction

2. Related Works

Convolutional Neural Network

3. The Proposed Method

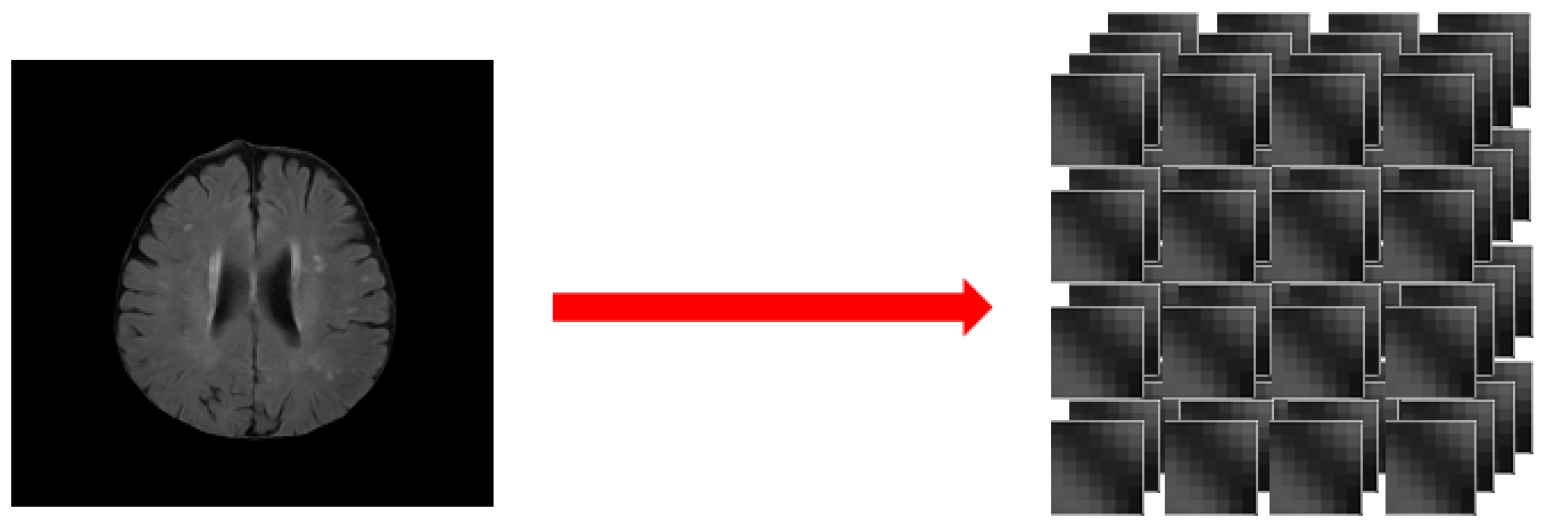

3.1. Data Preprocessing

- Step 1:

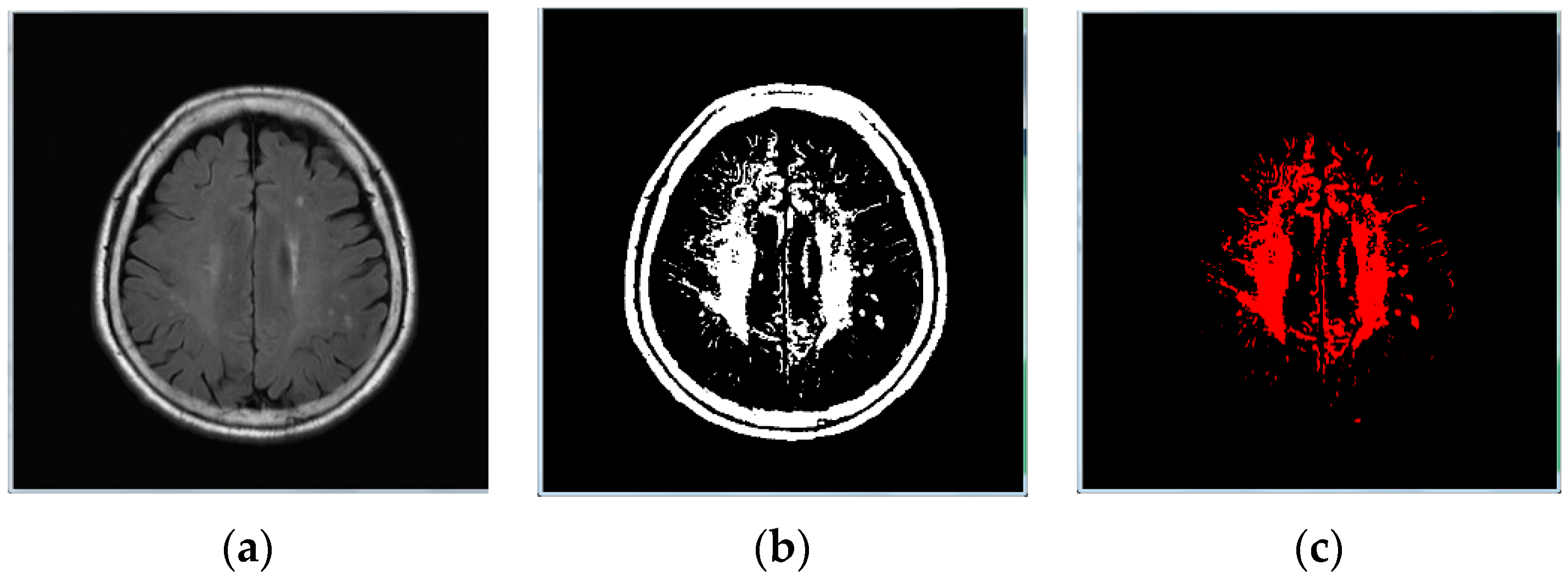

- Image binarization: The image was binarized, and the cranium (skull) was taken to be the largest region in the image (Figure 1b);

- Step 2:

- Step 3:

- Image inverse binarization: Inverse binarization was applied to the image from step 1 to obtain the cerebrum region (Figure 1d,e);

- Step 4:

- Identify the cerebrum region: The region obtained in step 3 was compared with the region obtained in step 2, and the union of the two regions was taken as the cerebrum region (Figure 1f);

- Step 5:

- Perform median filtering to remove noise (Figure 1g); and

- Step 6:

- Calculate the actual size and position of the brain (Figure 1h).
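The steps above can be sketched in a few lines of dependency-free Python. This is only an illustrative sketch, not the authors' implementation: the function names (`locate_cerebrum`, `components`, `median3x3`, `bounding_box`), the global threshold of 128, the 4-connectivity, the 3x3 median window, and the rule "largest dark region that does not touch the image border" are all assumptions. Step 2 is not described in this section, so its region is accepted as an optional, caller-supplied mask (`step2_mask`, a hypothetical parameter).

```python
from collections import deque

def binarize(img, thresh):
    # Step 1: global threshold (the threshold value is an assumption).
    return [[1 if p >= thresh else 0 for p in row] for row in img]

def components(mask):
    # 4-connected foreground components, each a list of (row, col) pixels.
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    found = []
    for r in range(h):
        for c in range(w):
            if not mask[r][c] or seen[r][c]:
                continue
            seen[r][c] = True
            queue, comp = deque([(r, c)]), []
            while queue:
                y, x = queue.popleft()
                comp.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            found.append(comp)
    return found

def to_mask(pixels, h, w):
    # Rasterize a list of (row, col) pixels into an h x w binary mask.
    mask = [[0] * w for _ in range(h)]
    for y, x in pixels:
        mask[y][x] = 1
    return mask

def median3x3(mask):
    # Step 5: 3x3 median filter; border pixels are left unchanged.
    h, w = len(mask), len(mask[0])
    out = [row[:] for row in mask]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            window = [mask[y][x] for y in (r - 1, r, r + 1) for x in (c - 1, c, c + 1)]
            out[r][c] = sorted(window)[4]
    return out

def bounding_box(mask):
    # Step 6: (top, left, height, width) of the foreground, or None if empty.
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    if not rows:
        return None
    return rows[0], cols[0], rows[-1] - rows[0] + 1, cols[-1] - cols[0] + 1

def locate_cerebrum(img, thresh, step2_mask=None):
    h, w = len(img), len(img[0])
    # Step 1: keep only the largest bright region as the cranium.
    cranium = to_mask(max(components(binarize(img, thresh)), key=len, default=[]), h, w)
    # Step 3: invert, then keep the largest dark region that does not touch
    # the image border (an assumed stand-in for "inside the cranium").
    inverse = [[1 - p for p in row] for row in cranium]
    enclosed = [comp for comp in components(inverse)
                if all(0 < y < h - 1 and 0 < x < w - 1 for y, x in comp)]
    region = to_mask(max(enclosed, key=len, default=[]), h, w)
    # Step 4: union with the step 2 region, when the caller supplies one.
    if step2_mask is not None:
        region = [[a | b for a, b in zip(ra, rb)] for ra, rb in zip(region, step2_mask)]
    cleaned = median3x3(region)
    return cleaned, bounding_box(cleaned)

# Synthetic 9x9 "slice": a bright ring (cranium) around a dark 5x5 interior.
img = [[0] * 9 for _ in range(9)]
for r in range(1, 8):
    for c in range(1, 8):
        img[r][c] = 10 if 2 <= r <= 6 and 2 <= c <= 6 else 200

mask, box = locate_cerebrum(img, thresh=128)
print(box)  # (2, 2, 5, 5)
```

On this toy input the median filter rounds off the four corners of the interior square, but the bounding box still covers the full 5x5 area. On real MRI slices a library such as SciPy or scikit-image would normally replace the hand-written component labelling and filtering.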

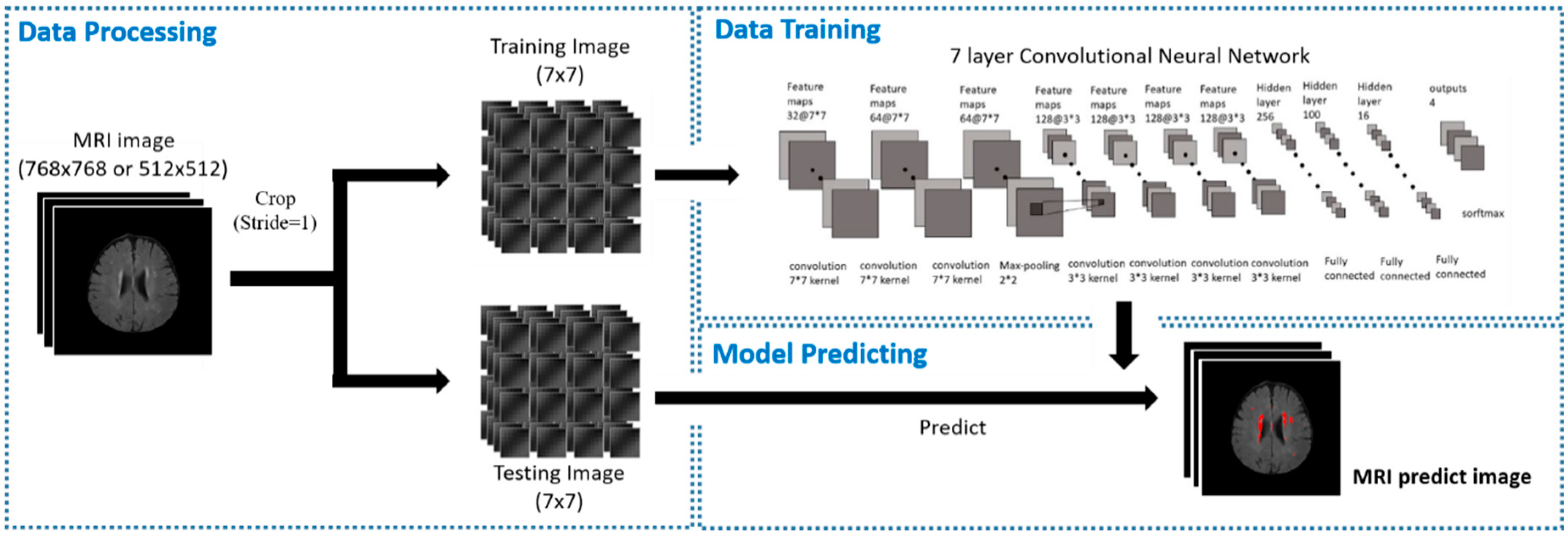

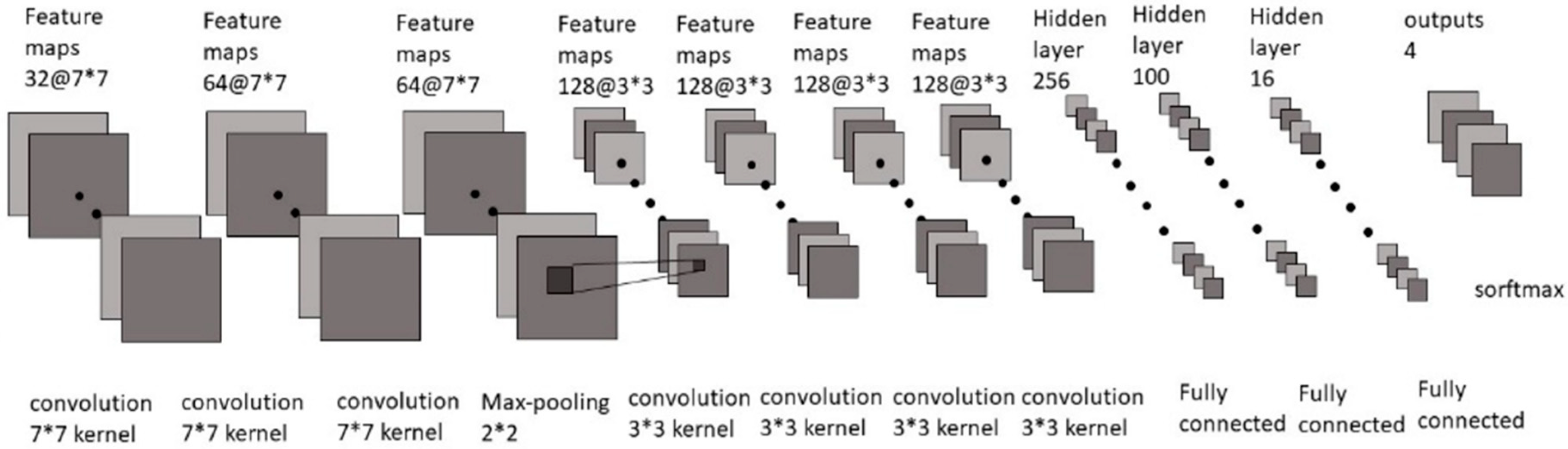

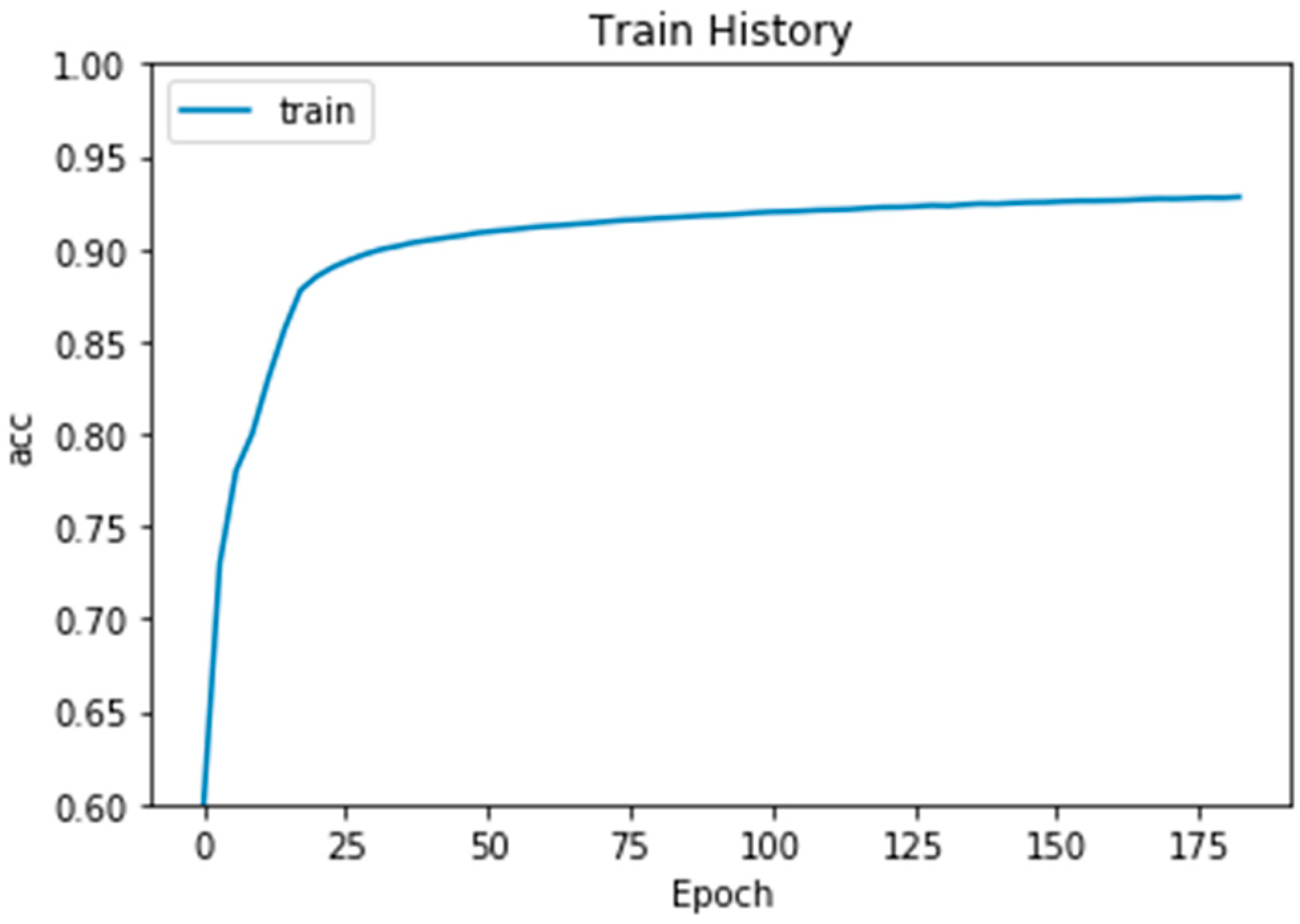

3.2. Training Model

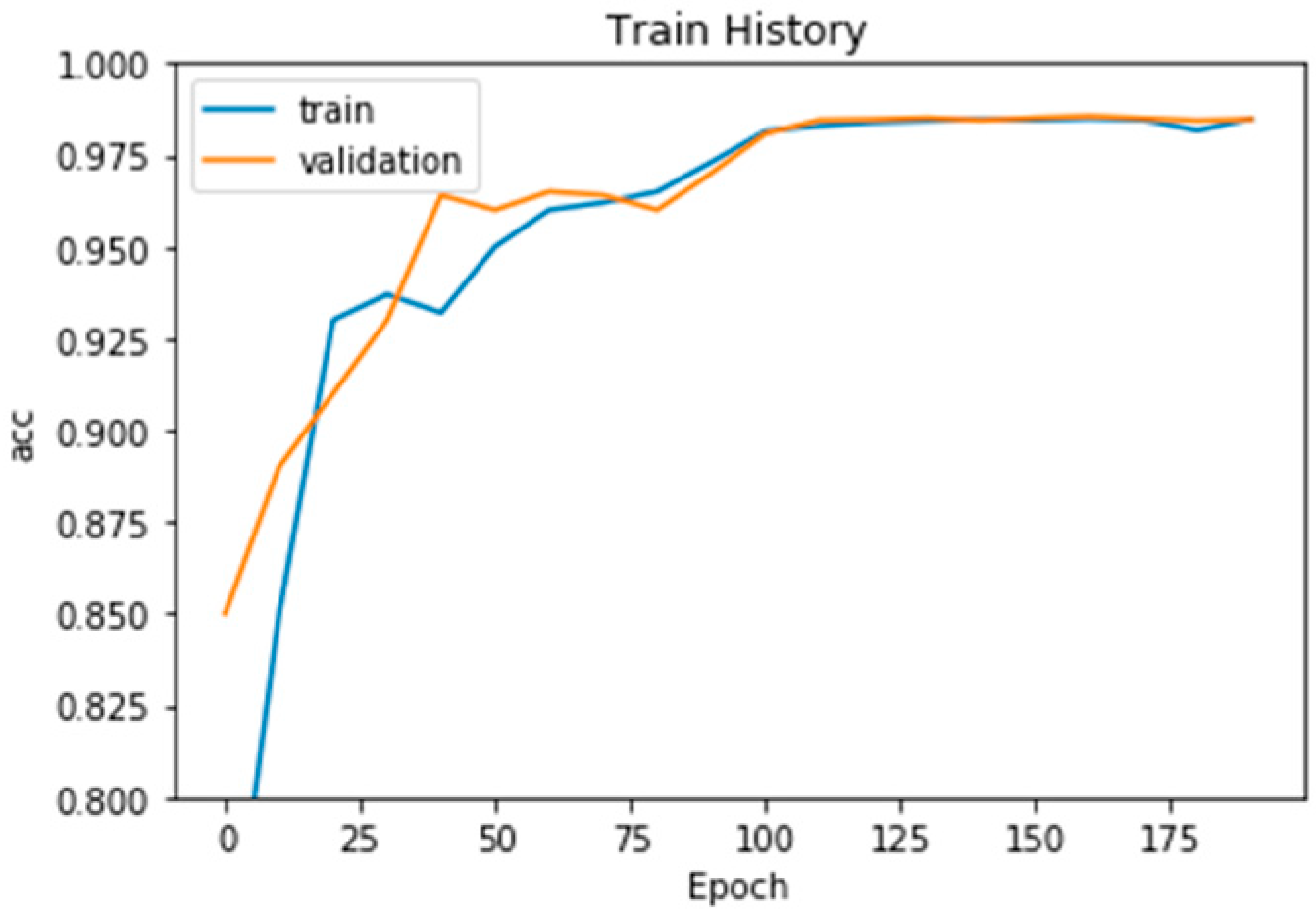

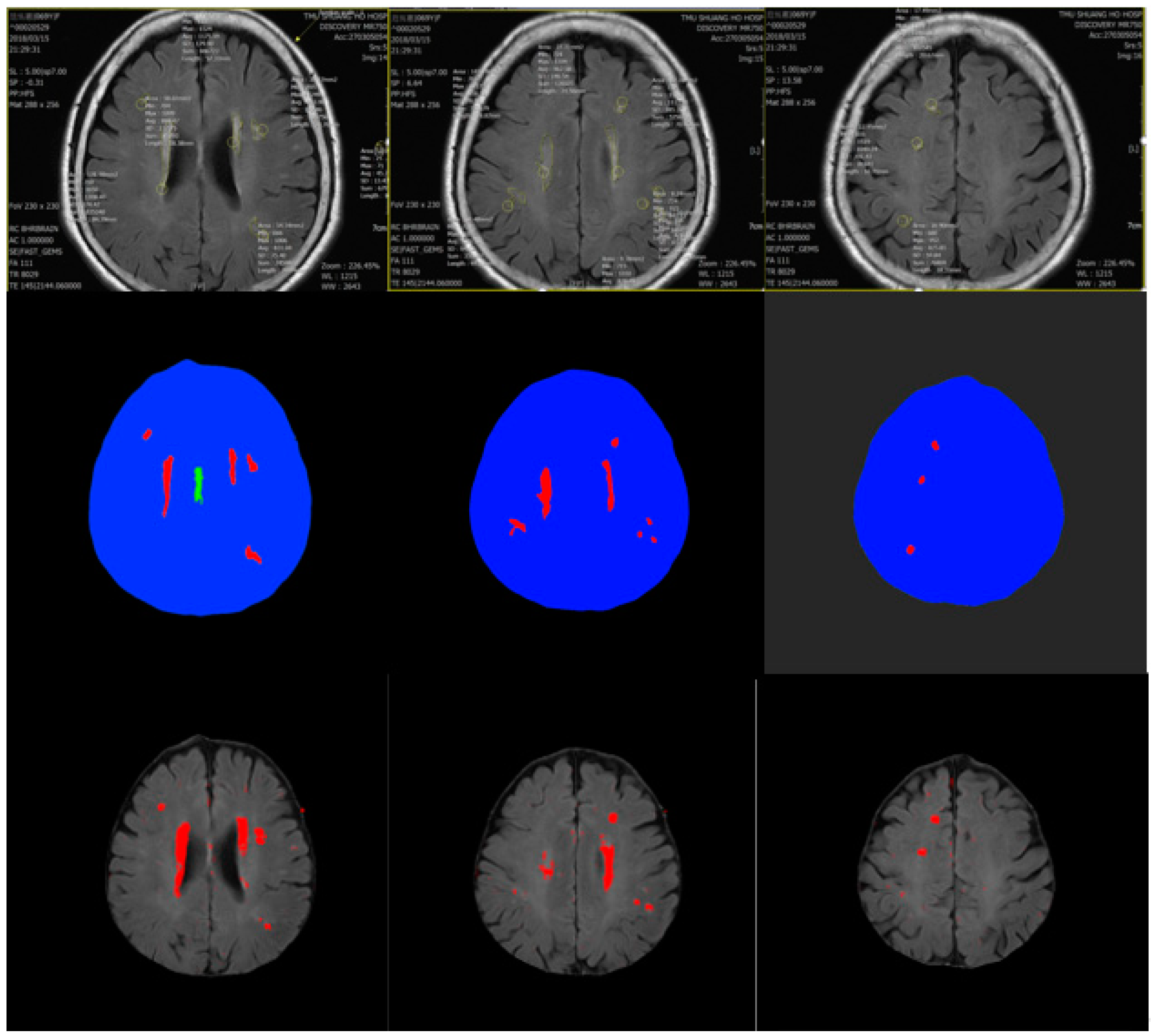

4. Experiment Results

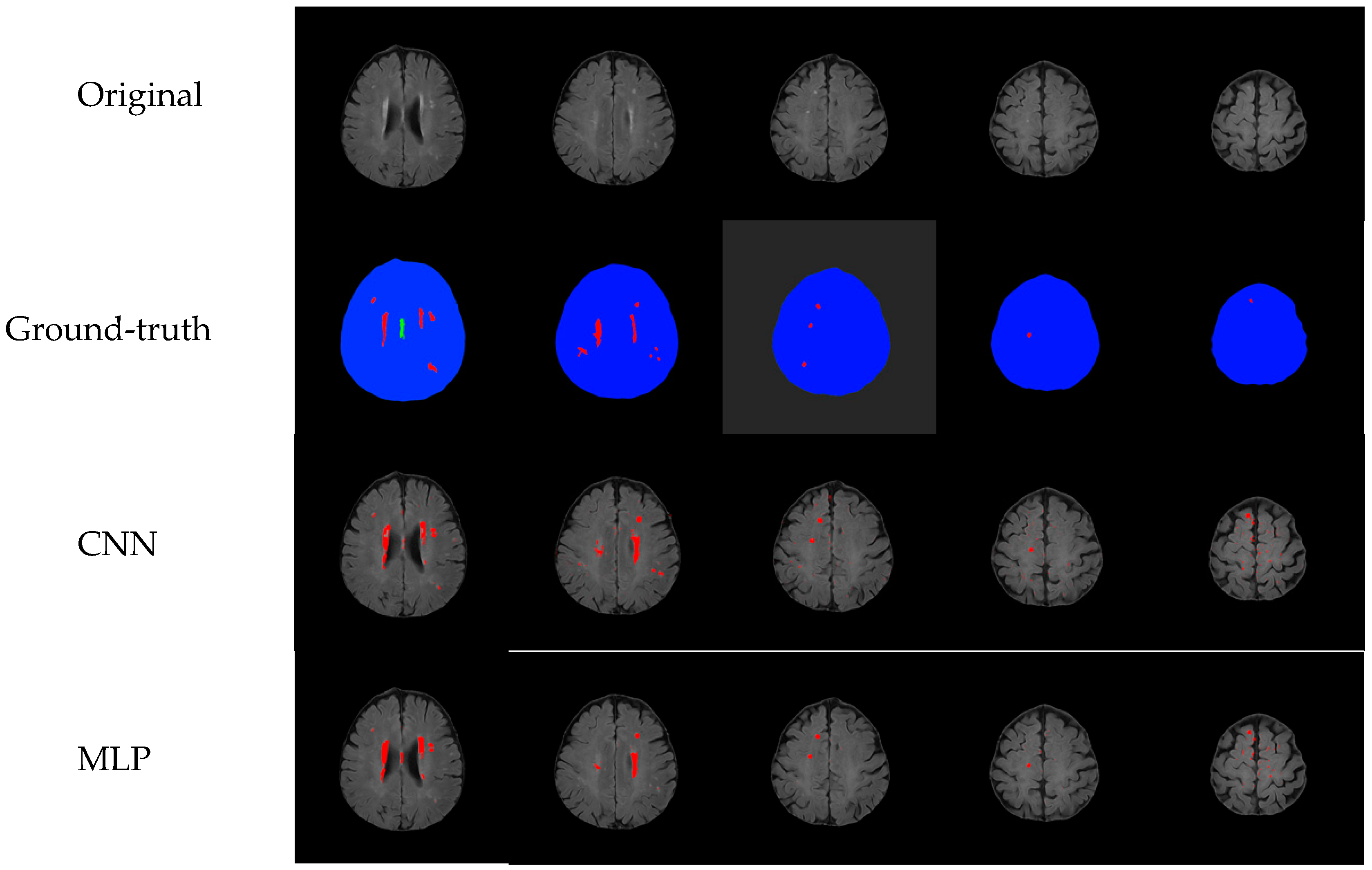

Comparison with Other Training Models

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgements

Conflicts of Interest


| Training mAP | Cerebral Small Vessel | Non Cerebral Small Vessel |
|---|---|---|
| Cerebral small vessel | 94/95 (TP) | 2/110 (FP) |
| Non cerebral small vessel | 1/95 (FN) | 108/110 (TN) |

| Testing mAP | Cerebral Small Vessel | Non Cerebral Small Vessel |
|---|---|---|
| Cerebral small vessel | 84/86 (TP) | 5/120 (FP) |
| Non cerebral small vessel | 2/86 (FN) | 115/120 (TN) |

| Training mAP | Cerebral Small Vessel | Non Cerebral Small Vessel |
|---|---|---|
| Cerebral small vessel | 90/95 (TP) | 6/110 (FP) |
| Non cerebral small vessel | 5/95 (FN) | 104/110 (TN) |

| Testing mAP | Cerebral Small Vessel | Non Cerebral Small Vessel |
|---|---|---|
| Cerebral small vessel | 80/86 (TP) | 8/120 (FP) |
| Non cerebral small vessel | 6/86 (FN) | 112/120 (TN) |

| Training mAP | Cerebral Small Vessel | Non Cerebral Small Vessel |
|---|---|---|
| Cerebral small vessel | 91/95 (TP) | 7/110 (FP) |
| Non cerebral small vessel | 4/95 (FN) | 103/110 (TN) |

| Testing mAP | Cerebral Small Vessel | Non Cerebral Small Vessel |
|---|---|---|
| Cerebral small vessel | 80/86 (TP) | 8/120 (FP) |
| Non cerebral small vessel | 6/86 (FN) | 112/120 (TN) |

| Training mAP | Cerebral Small Vessel | Non Cerebral Small Vessel |
|---|---|---|
| Cerebral small vessel | 89/95 (TP) | 9/110 (FP) |
| Non cerebral small vessel | 6/95 (FN) | 101/110 (TN) |

| Testing mAP | Cerebral Small Vessel | Non Cerebral Small Vessel |
|---|---|---|
| Cerebral small vessel | 79/86 (TP) | 10/120 (FP) |
| Non cerebral small vessel | 7/86 (FN) | 110/120 (TN) |

| | Our Method | YOLO1 | YOLO2 | YOLO3 |
|---|---|---|---|---|
| F1-score | 0.020829346 | 0.080591758 | 0.080591758 | 0.118198648 |
| Precision rate | 0.981956315 | 0.937704918 | 0.937704918 | 0.919680601 |
| Recall rate | 0.010526316 | 0.042105263 | 0.042105263 | 0.063157895 |

| | Our Method | YOLO1 | YOLO2 | YOLO3 |
|---|---|---|---|---|
| F1-score | 0.045410519 | 0.129827978 | 0.129827978 | 0.149516707 |
| Precision rate | 0.959086584 | 0.933125972 | 0.933125972 | 0.916827853 |
| Recall rate | 0.023255814 | 0.069767442 | 0.069767442 | 0.081395349 |
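For readers who want to derive rates from the raw confusion-matrix counts, the following minimal sketch applies the textbook definitions of precision, recall, F1-score, and miss rate to the counts of the first testing matrix above (TP = 84, FP = 5, FN = 2, TN = 115). The function name `classification_rates` is hypothetical, and the textbook formulas are an assumption: this section does not state how the tabulated rates were computed, so the output is not expected to reproduce the table values.

```python
def classification_rates(tp, fp, fn, tn):
    """Textbook rates from confusion-matrix counts (assumed definitions)."""
    precision = tp / (tp + fp)            # share of positive calls that are correct
    recall = tp / (tp + fn)               # share of true cases that are found
    f1 = 2 * precision * recall / (precision + recall)
    miss_rate = fn / (tp + fn)            # 1 - recall
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "miss_rate": miss_rate,
        "accuracy": accuracy,
    }

# Counts taken from the first testing confusion matrix.
rates = classification_rates(tp=84, fp=5, fn=2, tn=115)
for name, value in rates.items():
    print(f"{name}: {value:.4f}")
```

With these counts the F1-score simplifies to 168/175 = 0.96, and the miss rate is 2/86.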

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hsieh, Y.-Z.; Luo, Y.-C.; Pan, C.; Su, M.-C.; Chen, C.-J.; Hsieh, K.L.-C. Cerebral Small Vessel Disease Biomarkers Detection on MRI-Sensor-Based Image and Deep Learning. Sensors 2019, 19, 2573. https://doi.org/10.3390/s19112573