Energy-Level Jumping Algorithm for Global Optimization in Compressive Sensing-Based Target Localization

Abstract

1. Introduction

- To reduce the number of measurements, we transform the CS-based target localization problem into $\ell_p$-norm ($0<p<1$) minimization. Compared with traditional CS-based target localization via $\ell_1$-norm or $\ell_2$-norm minimization, $\ell_p$-norm minimization yields a sparser solution and therefore more precise target localization.

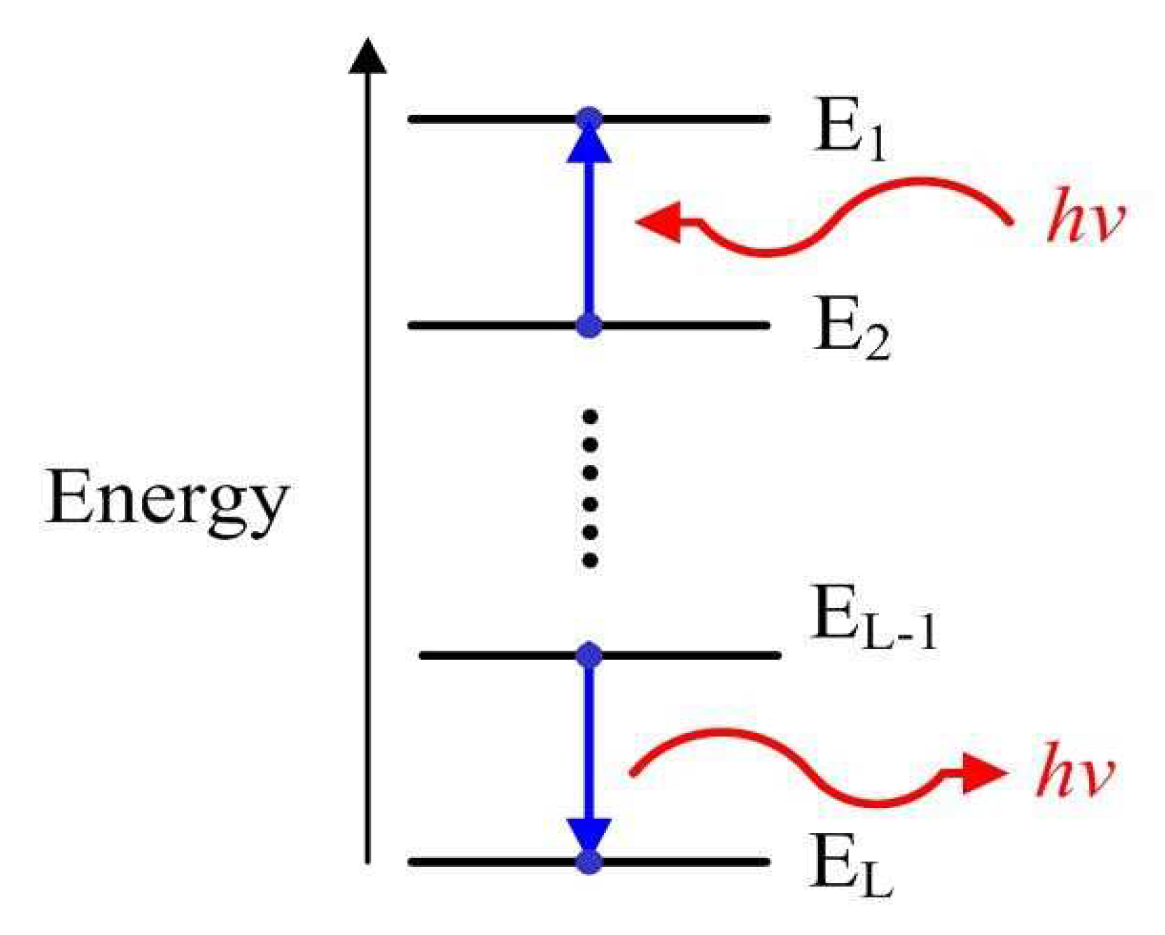

- Inspired by the concept of energy levels, we develop a novel ELJ algorithm that effectively finds the globally optimal sparse solution of $\ell_p$-norm minimization, which corresponds to the most accurate locations of the unknown targets. To the best of our knowledge, this is the first time that $\ell_p$-norm optimization has been solved using the idea of energy-level jumping. Furthermore, we provide a theoretical analysis of the global convergence of the ELJ algorithm, which suggests a new and effective piecewise approach to some practical non-convex optimization problems.

- The simulation results show that our ELJ algorithm can help some target localization algorithms improve their positioning accuracy when they would otherwise have to locate targets using suboptimal sparse solutions.
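The sparsity argument in the first contribution can be illustrated numerically. The following is a minimal sketch, assuming an $\ell_p$ penalty with $0<p<1$; the helper name `lp_energy` and the two toy vectors are hypothetical and chosen only for illustration. It compares a sparse and a spread-out vector that have the same $\ell_1$ norm.

```python
import numpy as np


def lp_energy(x, p):
    """Return sum_i |x_i|^p, i.e. the p-th power of the l_p (quasi-)norm."""
    return float(np.sum(np.abs(x) ** p))


# Two toy vectors with identical l1 norm: one sparse, one spread out.
sparse = np.array([1.0, 0.0, 0.0, 0.0])
dense = np.array([0.25, 0.25, 0.25, 0.25])

for p in (2.0, 1.0, 0.5):
    # Print the exponent and the penalty each vector receives.
    print(p, lp_energy(sparse, p), lp_energy(dense, p))
```

In this toy case the $p=2$ penalty is smaller for the spread-out vector, the $p=1$ penalty cannot tell the two apart, and the $p=0.5$ penalty is smaller for the sparse vector, which is the behaviour the contribution relies on.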

2. Related Work

2.1. Typical Target Localization Algorithm in WSNs

2.2. CS-Based Target Localization Algorithm in WSNs

3. System Model of CS-Based Target Localization

3.1. Compressive Sensing

3.2. System Model and Algorithm Motivation

4. Energy-Level Jumping Algorithm for CS-Based Target Localization

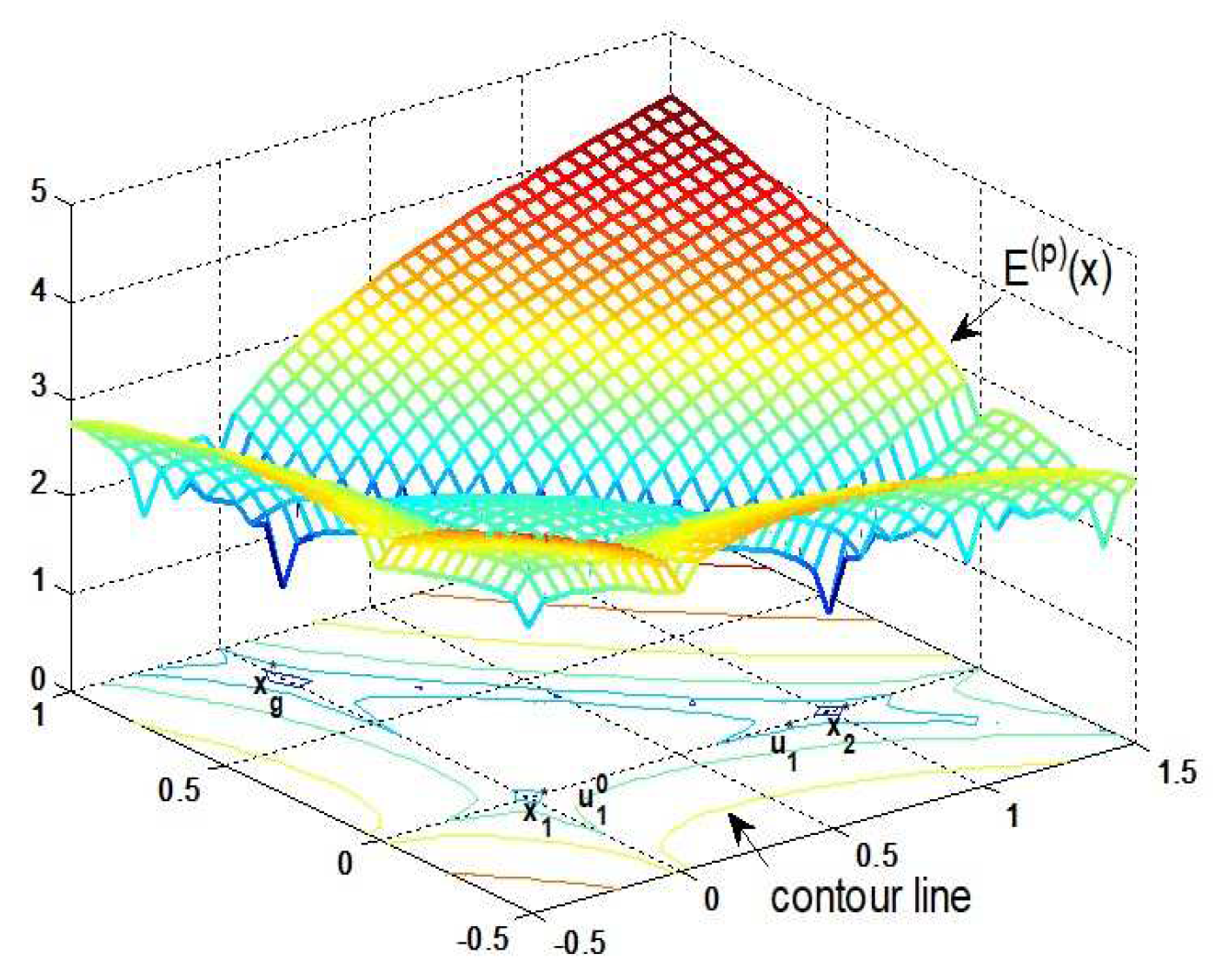

4.1. Preliminary Preparation

- How do we obtain a non-sparse solution?

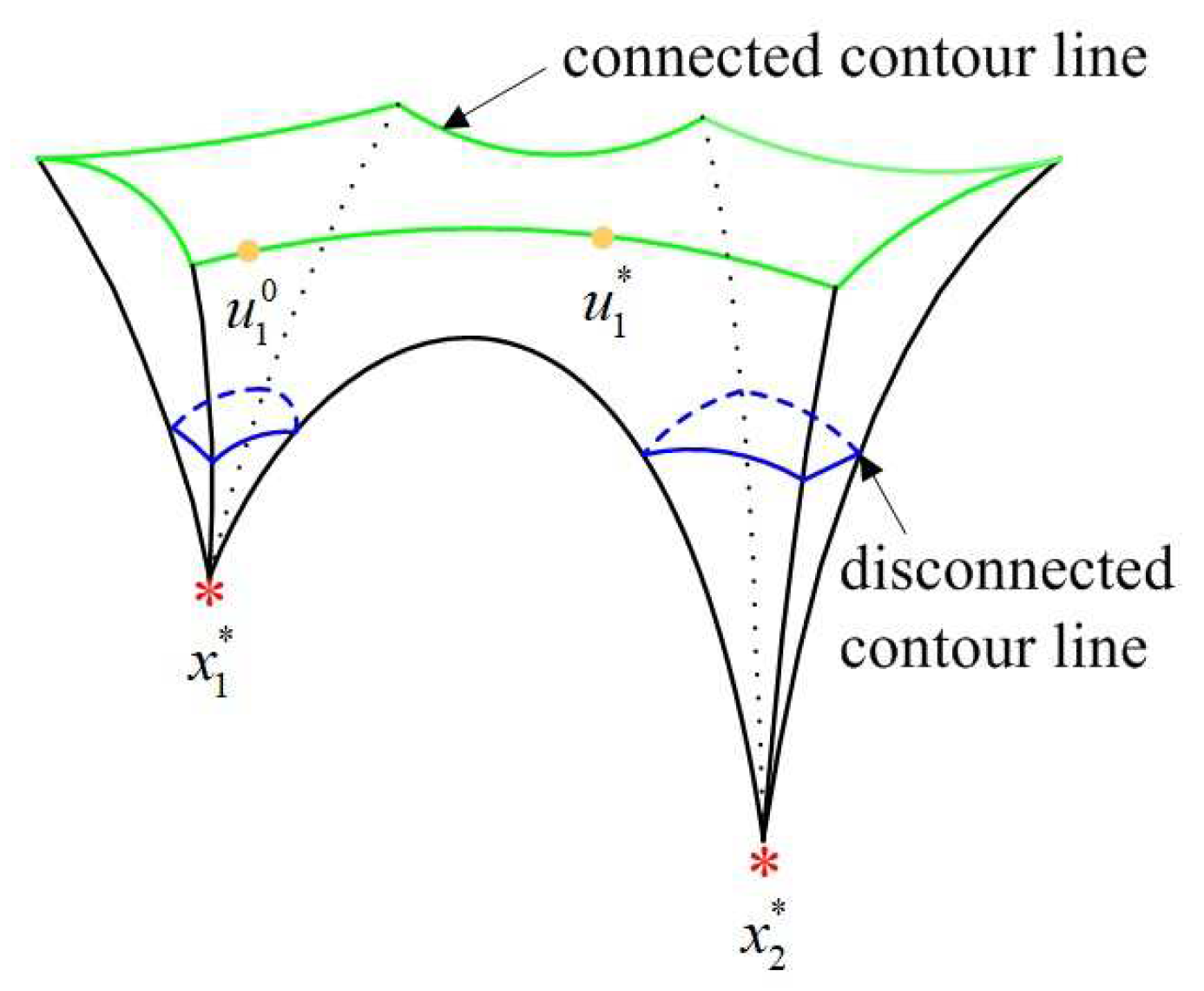

- How do we construct a connected curve between two non-sparse solutions (i.e., and ) located in two attraction basins, respectively?

4.2. Homotopy Curve Construction

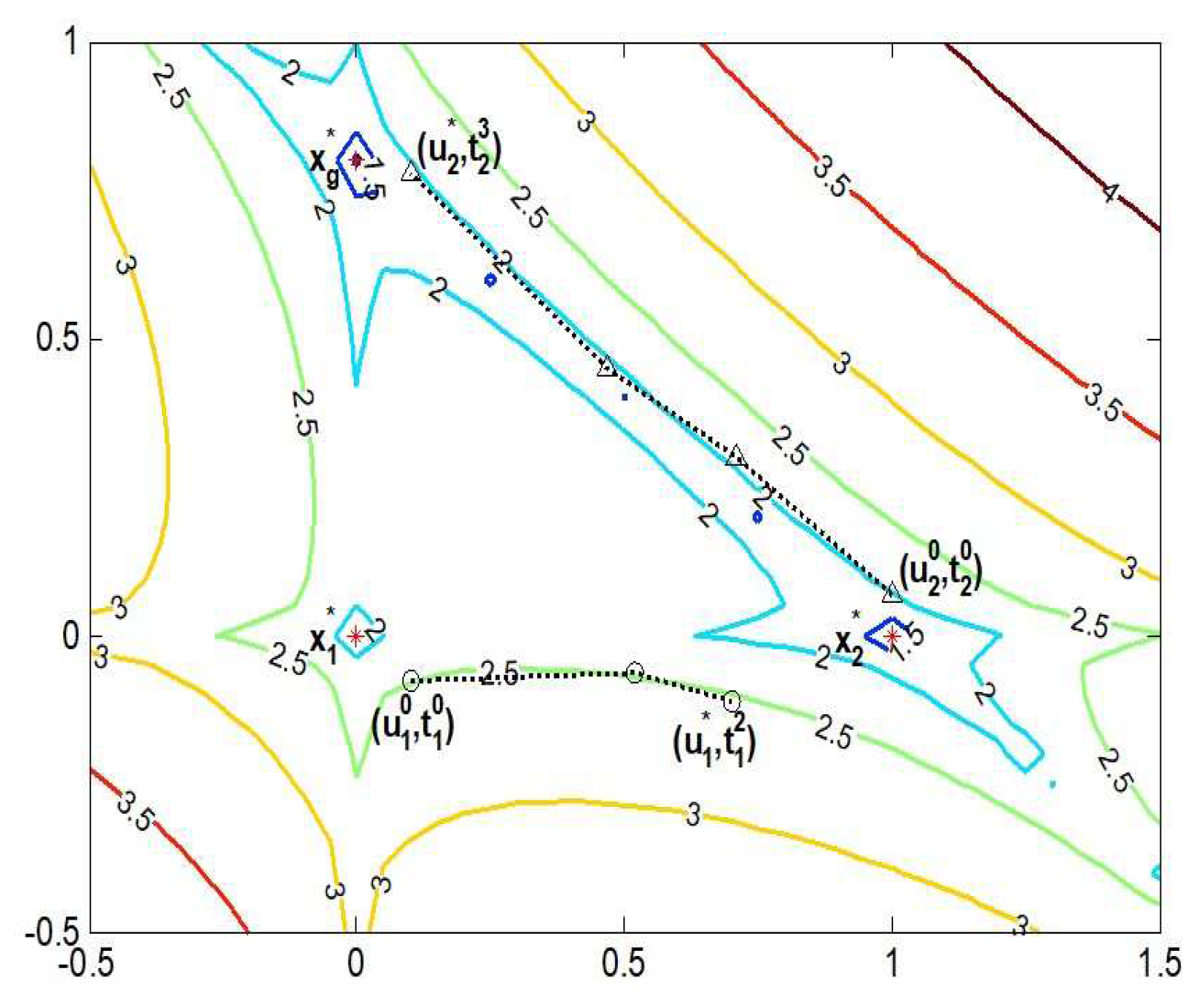
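For readers unfamiliar with predictor-corrector curve tracking, the sketch below shows the textbook Euler predictor and Newton corrector on a scalar Newton homotopy $H(x,t) = f(x) - (1-t)f(x_0)$. It is not the modified method of this paper; the function `track_newton_homotopy`, the test function `f`, and the step counts are assumptions made for illustration.

```python
def track_newton_homotopy(f, df, x0, steps=20, newton_iters=5):
    """Follow H(x, t) = f(x) - (1 - t) * f(x0) = 0 from t = 0 to t = 1.

    At t = 0 the solution is x0; at t = 1 it is a root of f.
    Assumes df(x) is nonzero along the curve.
    """
    fx0 = f(x0)
    x = x0
    dt = 1.0 / steps
    for k in range(steps):
        t_next = (k + 1) * dt
        # Euler predictor: dx/dt = -H_t / H_x = -f(x0) / f'(x).
        x = x + dt * (-fx0 / df(x))
        # Newton corrector: pull the prediction back onto H(x, t_next) = 0.
        for _ in range(newton_iters):
            h = f(x) - (1.0 - t_next) * fx0
            x = x - h / df(x)
    return x


def f(x):
    # Toy function with a single real root at x = 1.
    return x**3 + x - 2.0


def df(x):
    # Derivative of f; strictly positive, so the curve has no turning points.
    return 3.0 * x**2 + 1.0


root = track_newton_homotopy(f, df, x0=0.0)
print(root)
```

The predictor moves along the tangent of the solution curve, and the corrector removes the drift that the predictor introduces, so the iterate stays close to the curve at every step.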

4.3. CS-Based Target Localization via Energy-Level Jumping Algorithm

| Algorithm 1: Compressive sensing-based target localization via energy-level jumping algorithm |
| --- |
| Input: A measurement matrix P, a measurement vector y, an error threshold. |
| Initialize: An initial point, an iterative index l. |
| while Stopping criterion not met do |
| 1: Apply IRLS to reconstruct a locally optimal sparse solution. |
| 2: Let the locally optimal sparse solution jump to a non-sparse solution by absorbing energy. |
| 3: Apply the modified Euler’s forecast-Newton correction homotopy method to construct a homotopy curve between the two non-sparse solutions. |
| 4: Update the initial point and apply IRLS to find a sparser solution. |
| 5: Increase iterative index l. |
| end while |
| Output: |
| 1: The globally optimal sparse solution. |
| 2: The grid locations of targets. |
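The local recovery step (step 1) and the final mapping to grid locations can be sketched as follows. This is a minimal illustration, assuming a standard $\epsilon$-regularized IRLS for equality-constrained $\ell_p$ minimization with $p = 0.5$; it is not the authors' implementation and omits the jumping and homotopy steps. The names `irls_lp`, `A`, `x_true`, the 5 x 10 grid, and all parameter values are hypothetical.

```python
import numpy as np


def irls_lp(A, y, p=0.5, eps=1.0, eps_min=1e-8, max_iter=200):
    """Approximate min sum_i |x_i|^p subject to A x = y by IRLS."""
    x = np.linalg.pinv(A) @ y  # minimum l2-norm (non-sparse) starting point
    while eps > eps_min:
        for _ in range(max_iter):
            # Inverse weights q_i = (x_i^2 + eps)^(1 - p/2).
            q = (x**2 + eps) ** (1.0 - p / 2.0)
            AQ = A * q  # same as A @ diag(q)
            # Weighted least-squares solution that satisfies A x = y.
            x_new = q * (A.T @ np.linalg.solve(AQ @ A.T, y))
            converged = np.linalg.norm(x_new - x) < np.sqrt(eps) / 100.0
            x = x_new
            if converged:
                break
        eps /= 10.0  # tighten the smoothing once the iterate has settled
    return x


# Toy problem: 50 grid cells (5 x 10), 3 targets, 20 random measurements.
rng = np.random.default_rng(0)
A = rng.standard_normal((20, 50))
x_true = np.zeros(50)
x_true[[7, 21, 40]] = [1.5, -2.0, 1.0]
y = A @ x_true

x_hat = irls_lp(A, y)
support = np.flatnonzero(np.abs(x_hat) > 1e-3)
rows, cols = np.unravel_index(support, (5, 10))  # grid cell of each target
print(list(zip(rows.tolist(), cols.tolist())))
```

Each nonzero entry of the recovered vector is read as one occupied grid cell, which is how a sparse solution translates into target locations in this sketch. IRLS alone only guarantees a local minimizer, which is the gap that the jumping and homotopy steps of Algorithm 1 are meant to close.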

5. Convergence Analysis

5.1. Convergence of Our Homotopy Method

5.2. Global Convergence of Energy-Level Jumping Algorithm

- (1) Solution set. A set is obtained by collecting all sparse solutions of Problem (7), i.e., the set (21). The set (21) has a bounded subset consisting of the locally optimal sparse solutions solved by IRLS. The limit of this sequence of solutions exists due to the bounded convergence theorem.

- (2) Descent function. Note that the objective function is a descent function. Corresponding to the sequence of solutions, there exists a monotonically decreasing energy sequence. Hence, ELJ is a descent algorithm and converges globally.

- (3) The rate of piecewise convergence. When we search for the global minimizer, the solving process is divided into two stages: our modified Euler’s forecast-Newton correction homotopy method finds an initial point for local optimization, and IRLS finds a local minimizer. Our homotopy method converges linearly, while Theorem 2 states the order of the global convergence rate of IRLS. Hence, the convergence rate of ELJ is piecewise.
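The descent argument in items (1) and (2) can be written compactly. The notation below is assumed for illustration only: $x^{(l)}$ denotes the locally optimal sparse solution at iteration $l$, and $E$ denotes the objective (energy), which is taken to be nonnegative, as it is for any sum of the form $\sum_i |x_i|^p$.

```latex
E\bigl(x^{(0)}\bigr) > E\bigl(x^{(1)}\bigr) > \dots > E\bigl(x^{(l)}\bigr) > \dots \ge 0
\quad\Longrightarrow\quad
\lim_{l \to \infty} E\bigl(x^{(l)}\bigr) = E^{\ast} \ \text{exists.}
```

A strictly decreasing sequence that is bounded below always has a limit; showing that this limit equals the global minimum requires the additional analysis of this section.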

6. Simulation Results and Analysis

6.1. Numerical Example

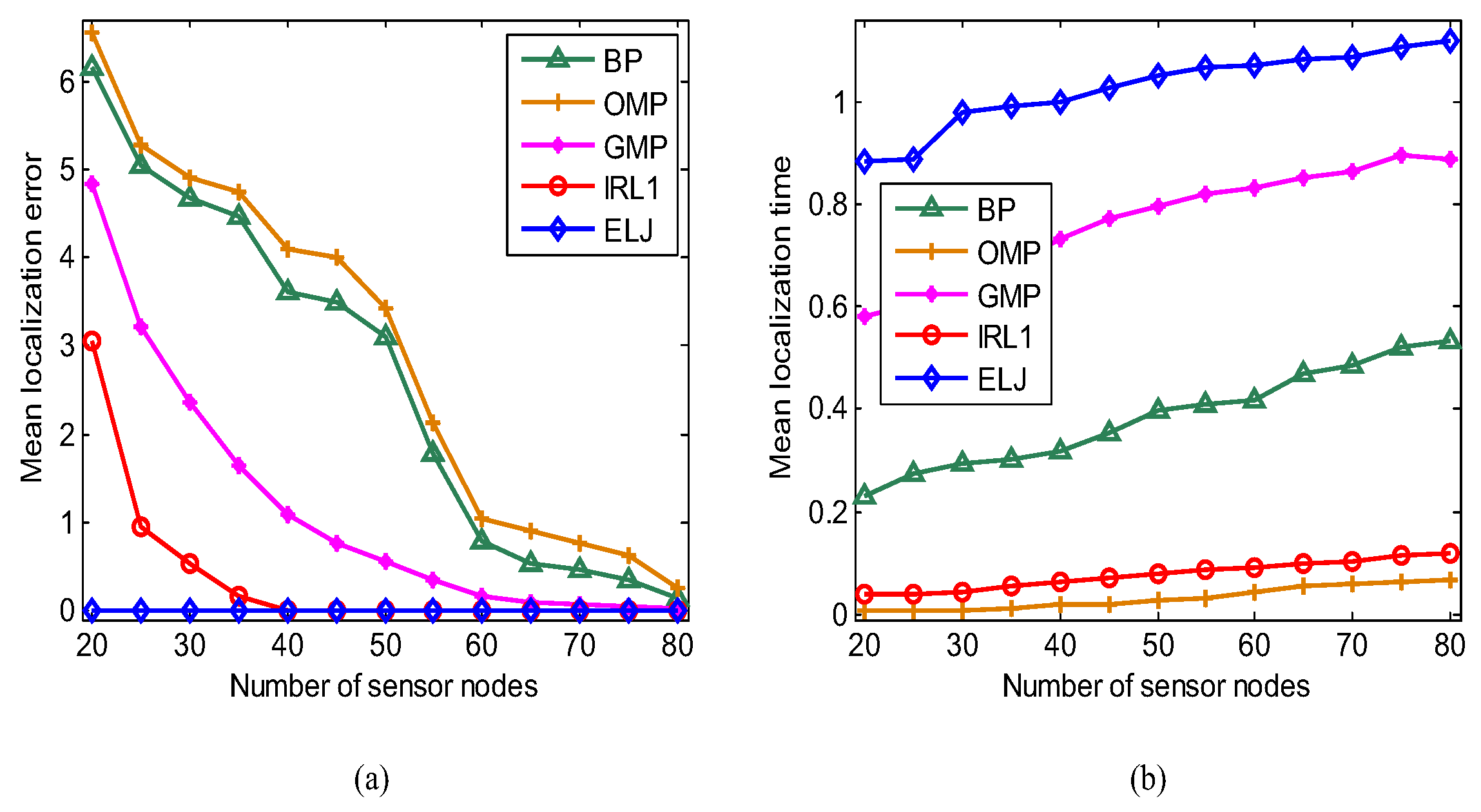

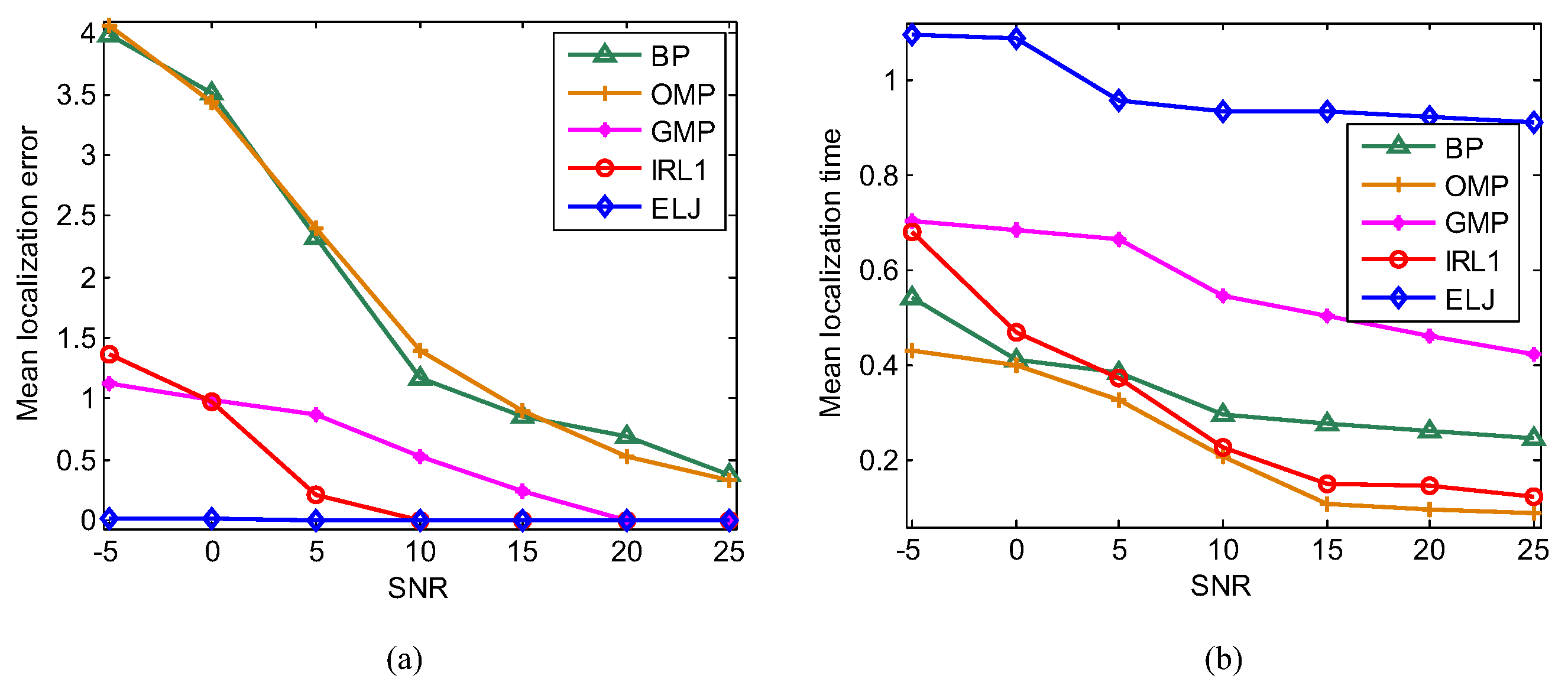

6.2. Accuracy of Target Localization

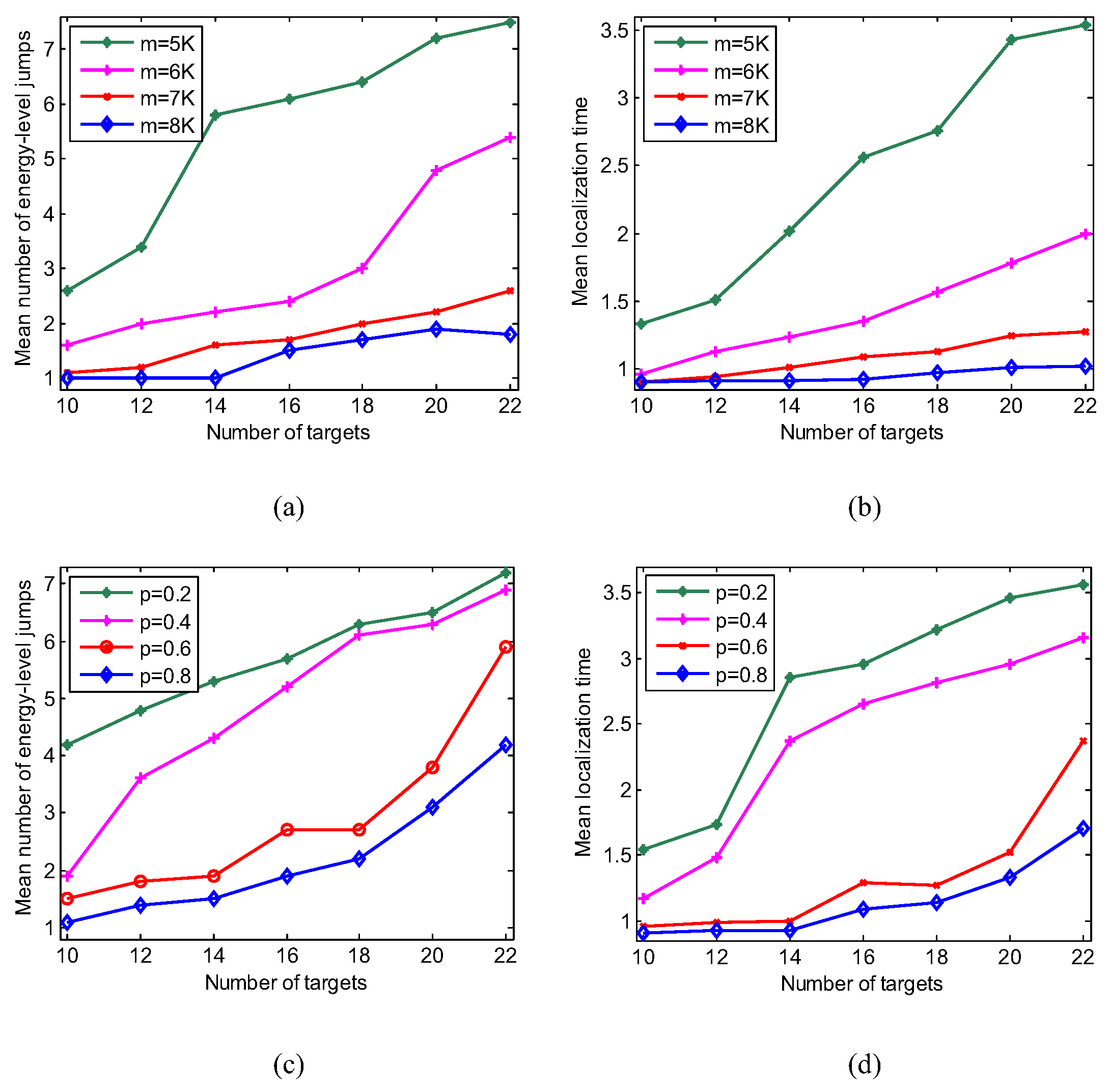

6.3. Influence of the Number of Measurements

6.4. Time Complexity Analysis

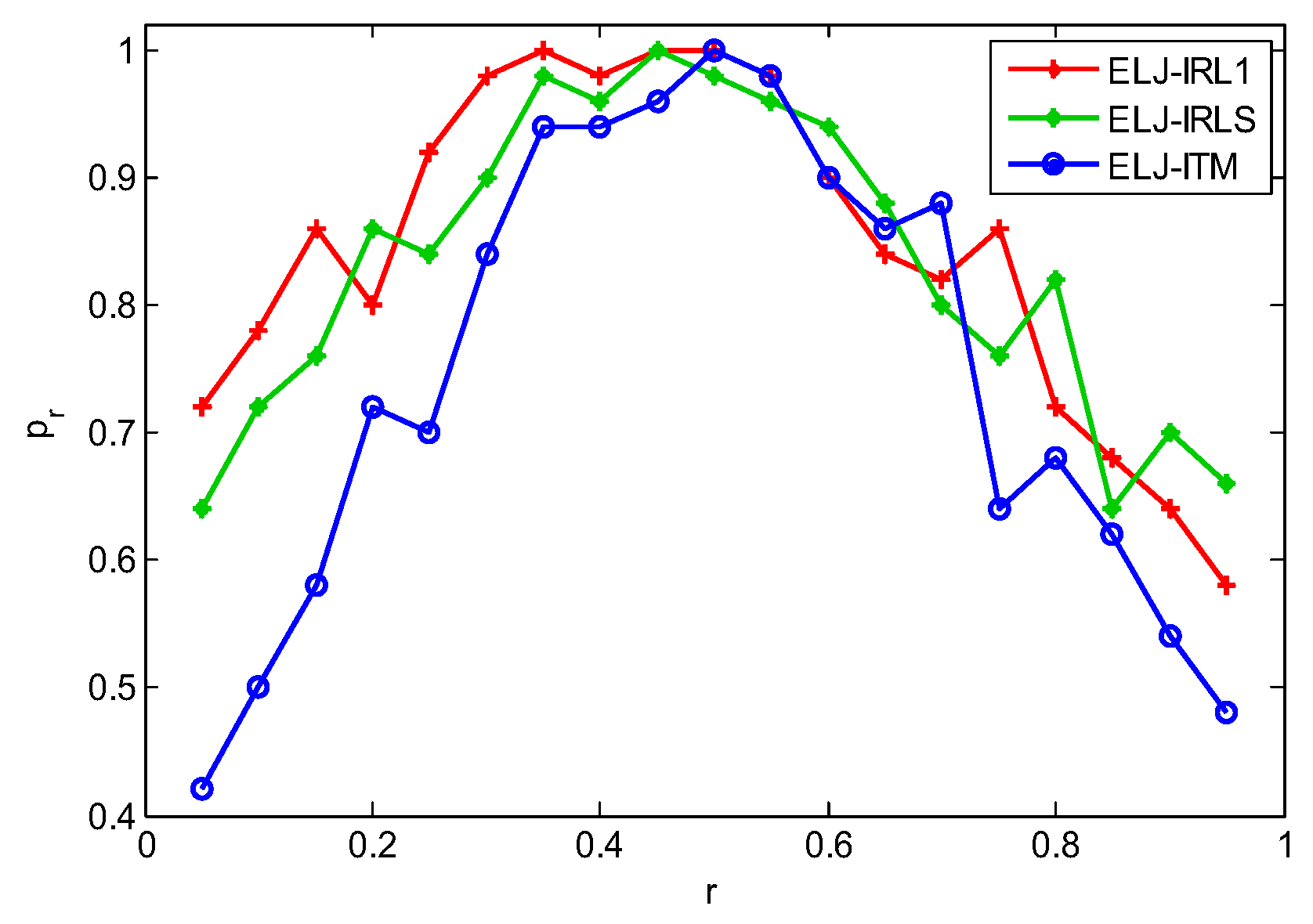

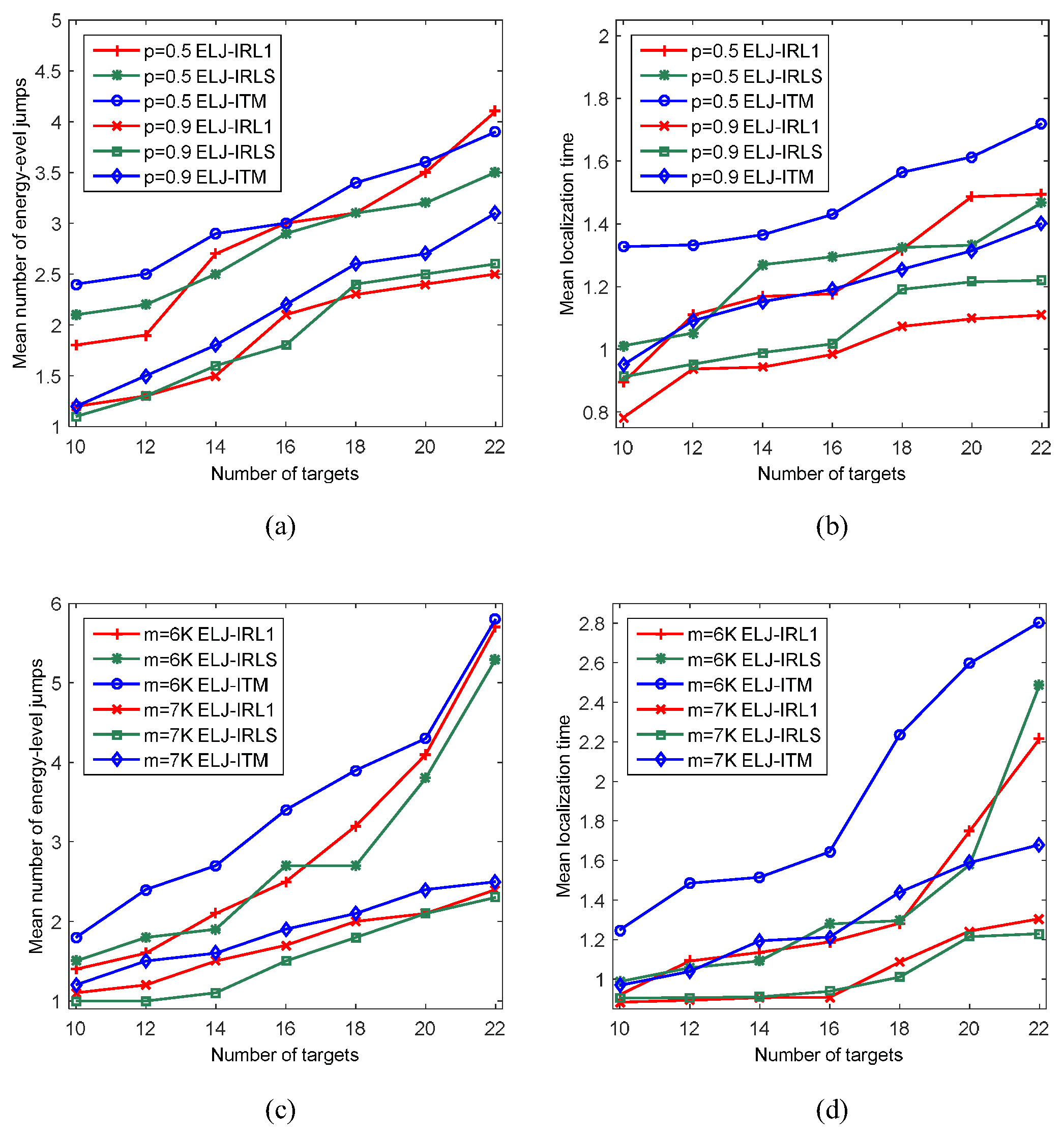

6.5. Influence of Local Recovery Algorithm

6.6. Influence of Measurement Noise

7. Conclusions

- Various types of propagation models replacing the path loss model will be applied to test the real localization performance of the proposed algorithm.

- After installing a WSN in a smart community, we will consider various application scenarios (e.g., CS-based target localization for people and cars) to test the practicability of the proposed algorithm and to set its parameters more reasonably.

- To reduce localization delay, the ELJ algorithm will be improved by accelerating global convergence and reducing computation time.

Author Contributions

Funding

Conflicts of Interest


© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, T.; Guan, X.; Wan, X.; Liu, G.; Shen, H. Energy-Level Jumping Algorithm for Global Optimization in Compressive Sensing-Based Target Localization. Sensors 2019, 19, 2502. https://doi.org/10.3390/s19112502
