Object Tracking Algorithm Based on Dual Color Feature Fusion with Dimension Reduction

Abstract

1. Introduction

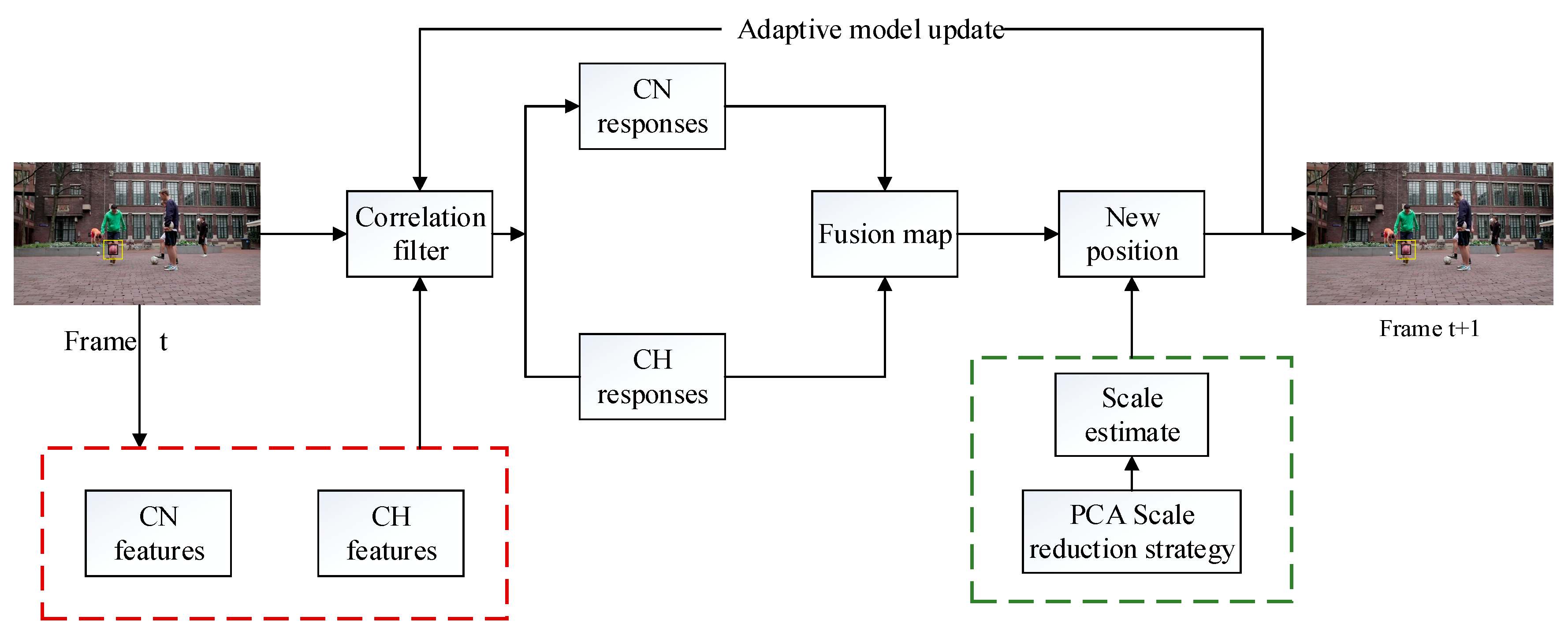

2. Correlation Filter (CF) Tracking

2.1. Classifier Training

2.2. Object Detection

2.3. Parameter Update

3. Proposed Algorithm

3.1. Color Name (CN) Feature

3.2. Color Histogram Feature

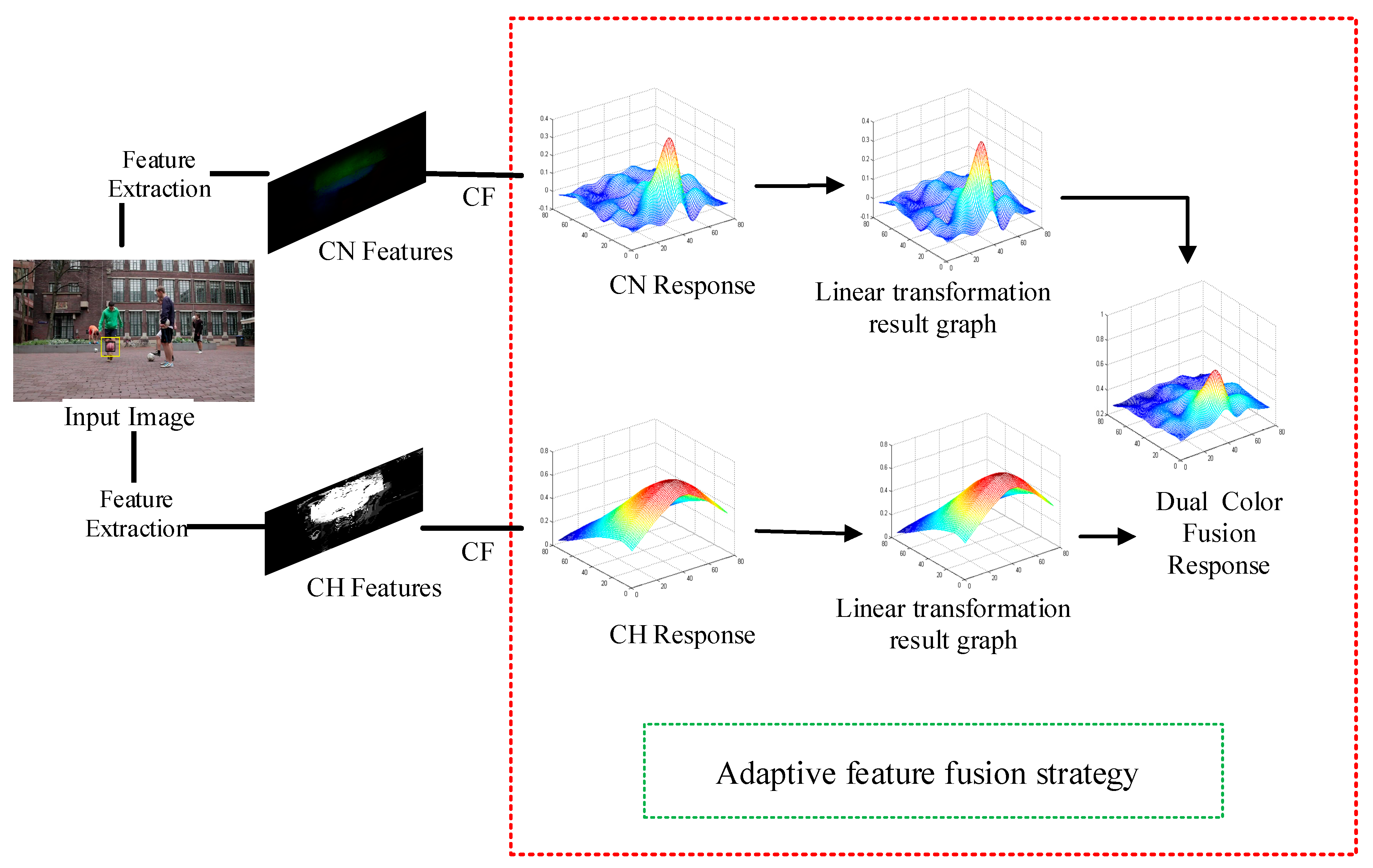

3.3. Dual Color Feature Fusion Strategy

- (1)

- The score function of the CH is recorded as:

- (2)

- The score function is the response score function, so that the CN score function is:

- (3)

- The key step of the feature fusion strategy is adaptively obtaining the weight coefficients γcn and γhist. In our experiments, we found that the color histogram feature received a relatively large weight in every scene. Fusing the two color features directly therefore yields a tracker that is very sensitive to color attributes, which easily leads to tracking failure. To solve this problem, we introduce a suppression term, μ, into the response of the histogram feature to obtain the final color histogram weight coefficient.
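The fusion step described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `fuse_responses`, the use of each response map's peak value as its raw confidence, the normalization of the two weights to sum to one, and the default `mu=0.5` are all assumptions made for illustration. Only the idea of damping the histogram weight with a suppression term μ before fusing comes from the text.

```python
import numpy as np


def fuse_responses(resp_cn, resp_hist, mu=0.5):
    """Fuse a CN response map and a color-histogram response map.

    Assumption (not from the paper): each feature's raw confidence is the
    peak of its response map, and the weights are normalized to sum to 1.
    """
    peak_cn = float(resp_cn.max())
    # The suppression term mu scales down the histogram confidence so that
    # the histogram feature does not dominate the fused response.
    peak_hist = mu * float(resp_hist.max())

    total = peak_cn + peak_hist
    if total <= 0.0:
        # Degenerate case: fall back to equal weights.
        gamma_cn, gamma_hist = 0.5, 0.5
    else:
        gamma_cn = peak_cn / total
        gamma_hist = peak_hist / total

    # Weighted linear combination of the two response maps.
    fused = gamma_cn * resp_cn + gamma_hist * resp_hist
    row, col = np.unravel_index(np.argmax(fused), fused.shape)
    return fused, (gamma_cn, gamma_hist), (int(row), int(col))


# Hypothetical toy response maps with peaks at different positions.
cn_map = np.zeros((5, 5))
cn_map[2, 2] = 0.6
hist_map = np.zeros((5, 5))
hist_map[1, 3] = 0.9

_, weights, position = fuse_responses(cn_map, hist_map, mu=0.2)
print(weights, position)
```

In this toy setup, lowering `mu` shifts weight from the histogram map to the CN map, which is the qualitative effect the suppression term is meant to have.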

3.4. Scale Reduction

3.4.1. Principal Component Analysis

- Any two distinct principal components are uncorrelated;

- The variance of each principal component is not less than the variance of the next one, so the variances decrease progressively;

- From the above, a projection matrix can be obtained:
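The PCA properties listed above can be checked numerically with a short sketch. This is a generic eigendecomposition-based PCA, not the paper's scale-reduction code; the function name `pca_projection`, the synthetic data, and the choice of `k=3` retained components are assumptions for illustration.

```python
import numpy as np


def pca_projection(samples, k):
    """Return a (d, k) projection matrix and all d component variances.

    samples: array of shape (n, d), one observation per row.
    """
    centered = samples - samples.mean(axis=0)
    # Sample covariance matrix of the features, shape (d, d).
    cov = centered.T @ centered / (samples.shape[0] - 1)
    # eigh returns eigenvalues in ascending order for symmetric matrices.
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1]  # reorder to descending variance
    eigvals = eigvals[order]
    eigvecs = eigvecs[:, order]
    # Keep the k directions with the largest variance.
    return eigvecs[:, :k], eigvals


# Synthetic correlated data (hypothetical stand-in for feature vectors).
rng = np.random.default_rng(0)
samples = rng.normal(size=(200, 6)) @ rng.normal(size=(6, 6))

P, variances = pca_projection(samples, k=3)
projected = (samples - samples.mean(axis=0)) @ P

# Covariance of the projected data: off-diagonal entries should be ~0
# (uncorrelated components) and the diagonal should be non-increasing.
print(np.round(np.cov(projected, rowvar=False), 6))
```

The projected components are uncorrelated and ordered by decreasing variance, so truncating to the first `k` columns of the projection matrix discards the directions that carry the least variance.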

3.4.2. Scale Reduction Strategy

4. Experiment

4.1. Implementation Details

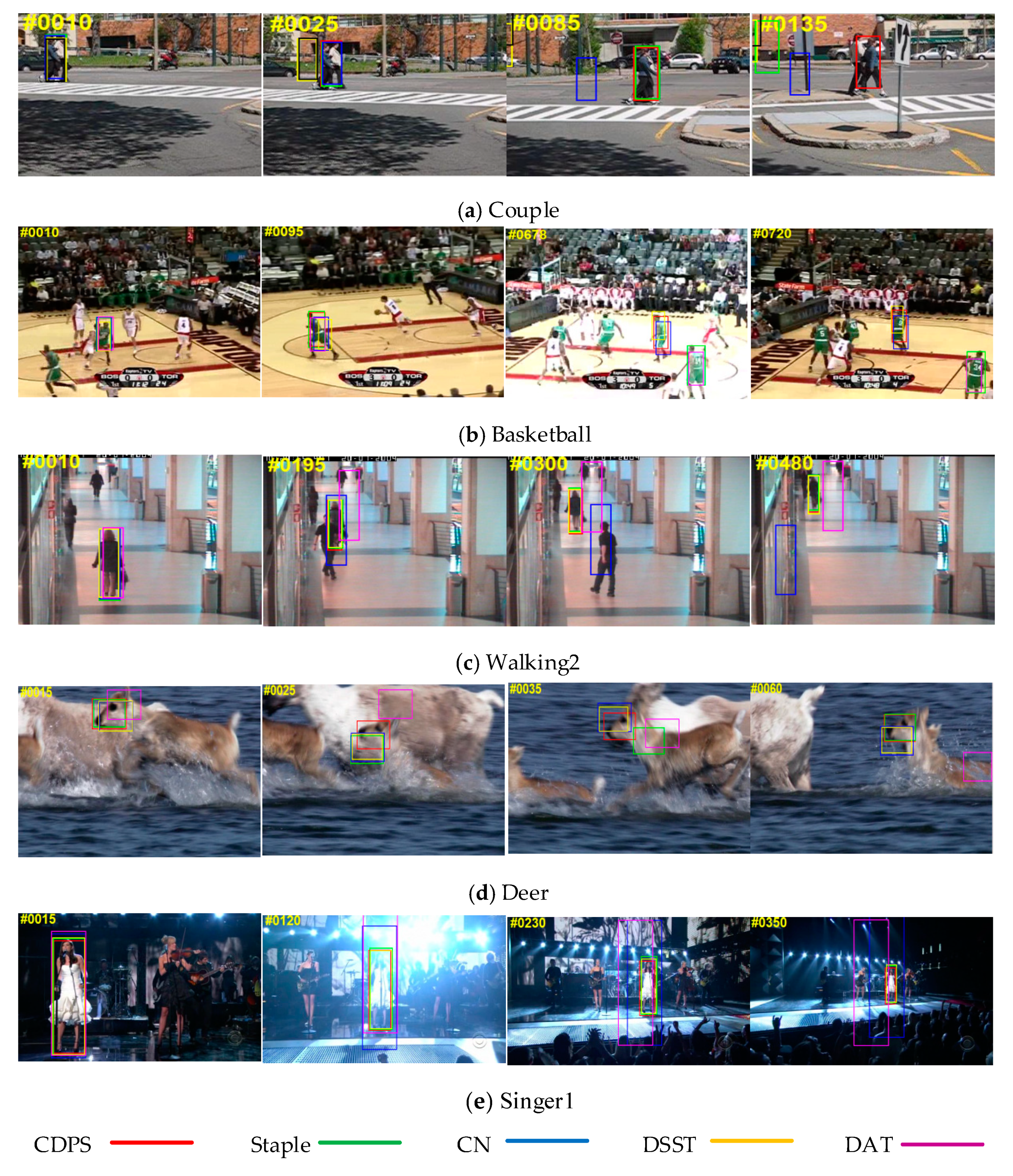

4.2. Qualitative Analysis

- (1) Deformation: Figure 3a. The target in the “Couple” sequence deformed during its motion. In this sequence, CN and DSST were the first trackers to fail, and then DAT and Staple deviated from the target position. The results show that the proposed method had the best tracking performance during target deformation, and Staple was the second-best tracker.

- (2) Occlusion: Figure 3c. At the 195th frame of the “Walking2” sequence, the target was clearly occluded, and DAT showed a significant offset. At the 300th frame, CN also drifted. The proposed algorithm was more robust.

- (3) Fast motion, motion blur: A fast-motion case is shown in the “Deer” sequence (Figure 3d), in which the target moved quickly throughout tracking. At the 15th frame, DAT had already deviated from the target position. At the 25th and 35th frames, the target was moving quickly and appeared blurred, and Staple, CN, DSST, and DAT all showed large offsets. Only the proposed algorithm did not drift, which indicates that it had the best tracking performance under fast motion and motion blur.

- (4) Illumination: Illumination changes are shown in the “Basketball” sequence (Figure 3b) and the “Singer1” sequence (Figure 3e). Obvious illumination changes occurred during tracking, and DAT drifted noticeably. The results indicate that the dual color feature tracker performs considerably better than the single color feature trackers in illumination change scenarios.

- (5) In-plane rotation and out-of-plane rotation: The targets in the “Couple” sequence (Figure 3a) and the “Basketball” sequence (Figure 3b) underwent in-plane and out-of-plane rotation during their motion. In the “Couple” sequence, the DSST tracker could no longer follow the target at the 30th frame, and DAT lost the target by the 80th frame. At the 140th frame, only the proposed algorithm was still tracking the target. In the “Basketball” sequence, Staple and DAT drifted substantially, and CN and DSST also drifted, though to a lesser degree. The comparison shows that the proposed algorithm performs better in in-plane and out-of-plane rotation scenes.

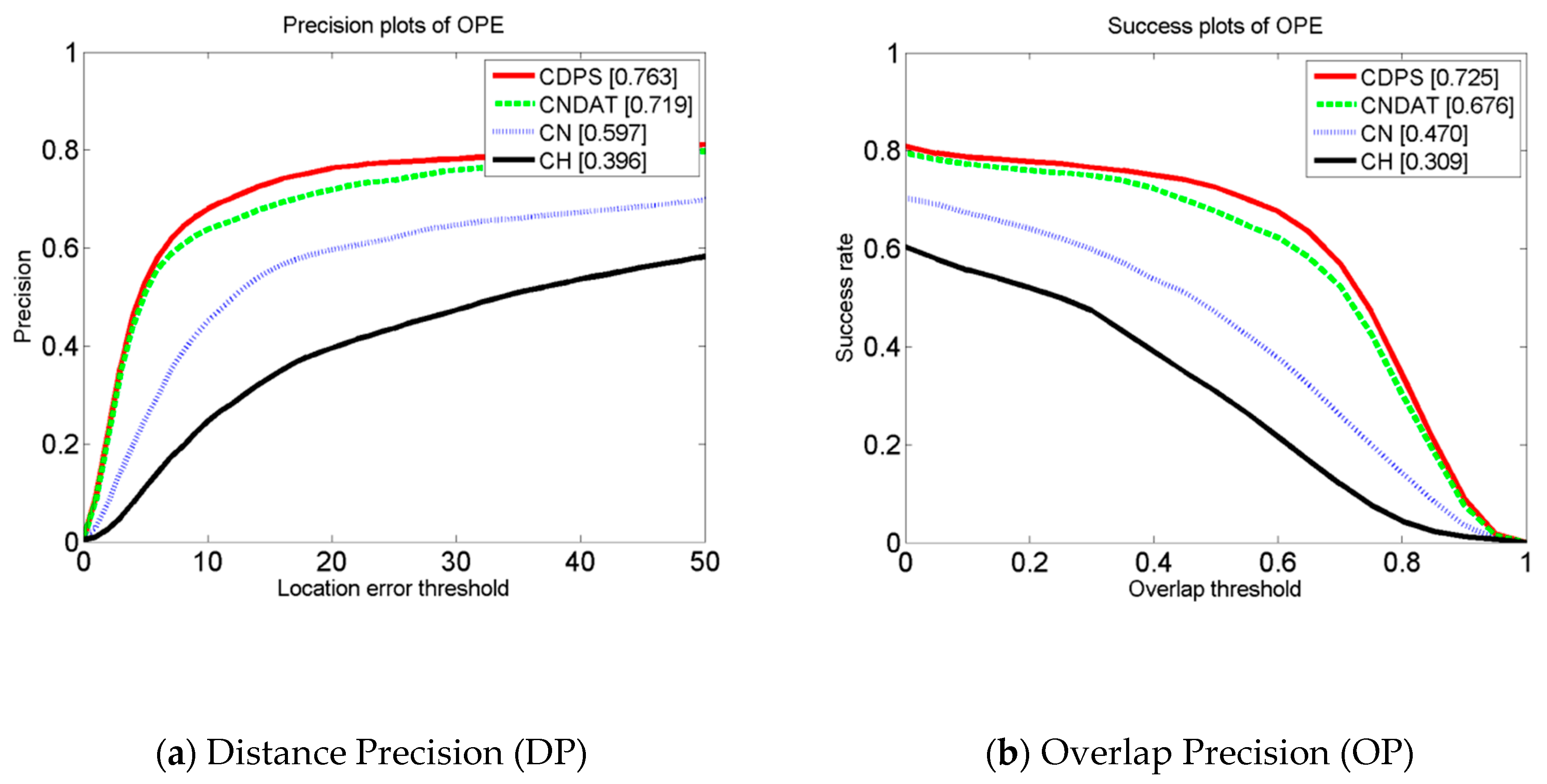

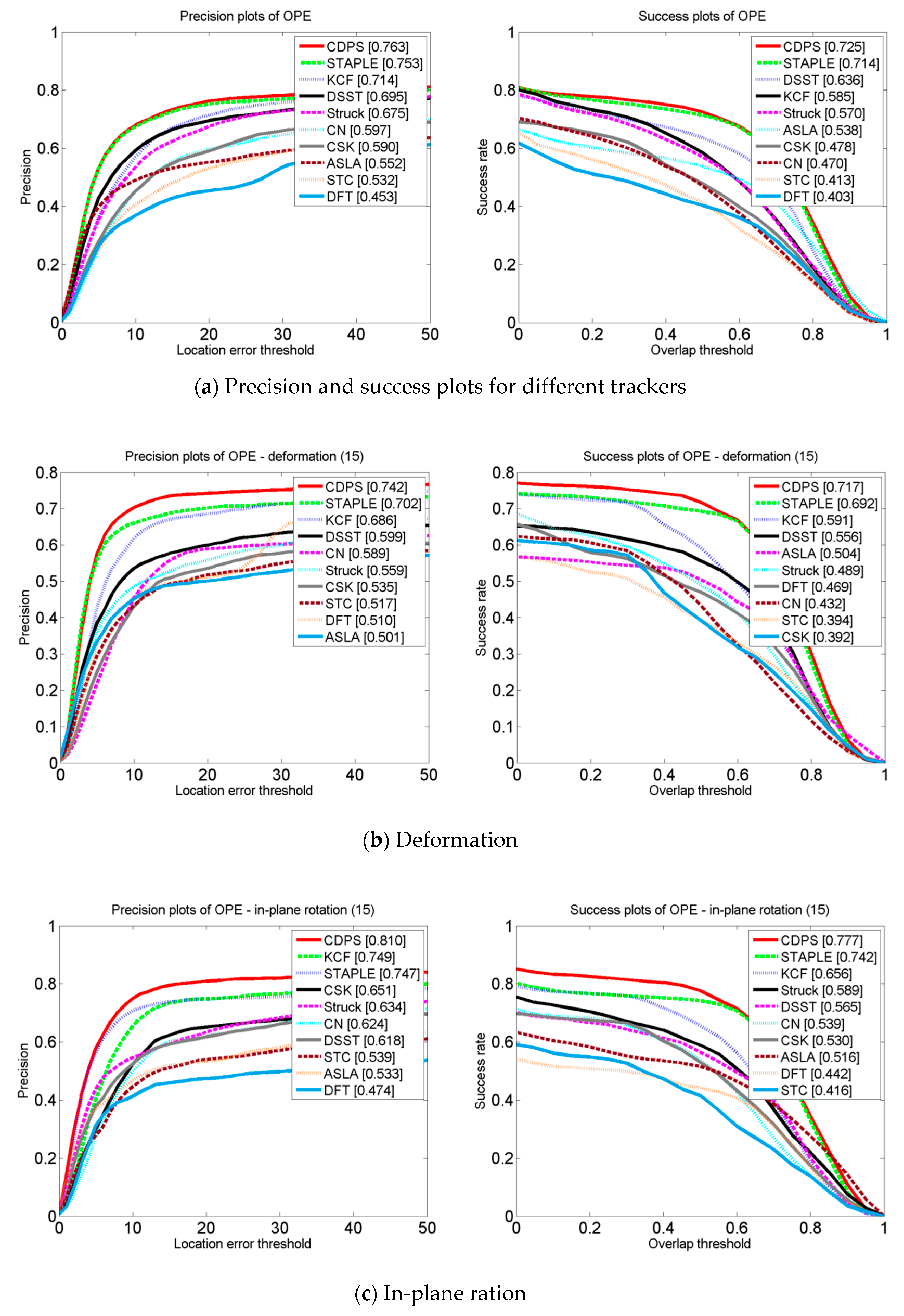

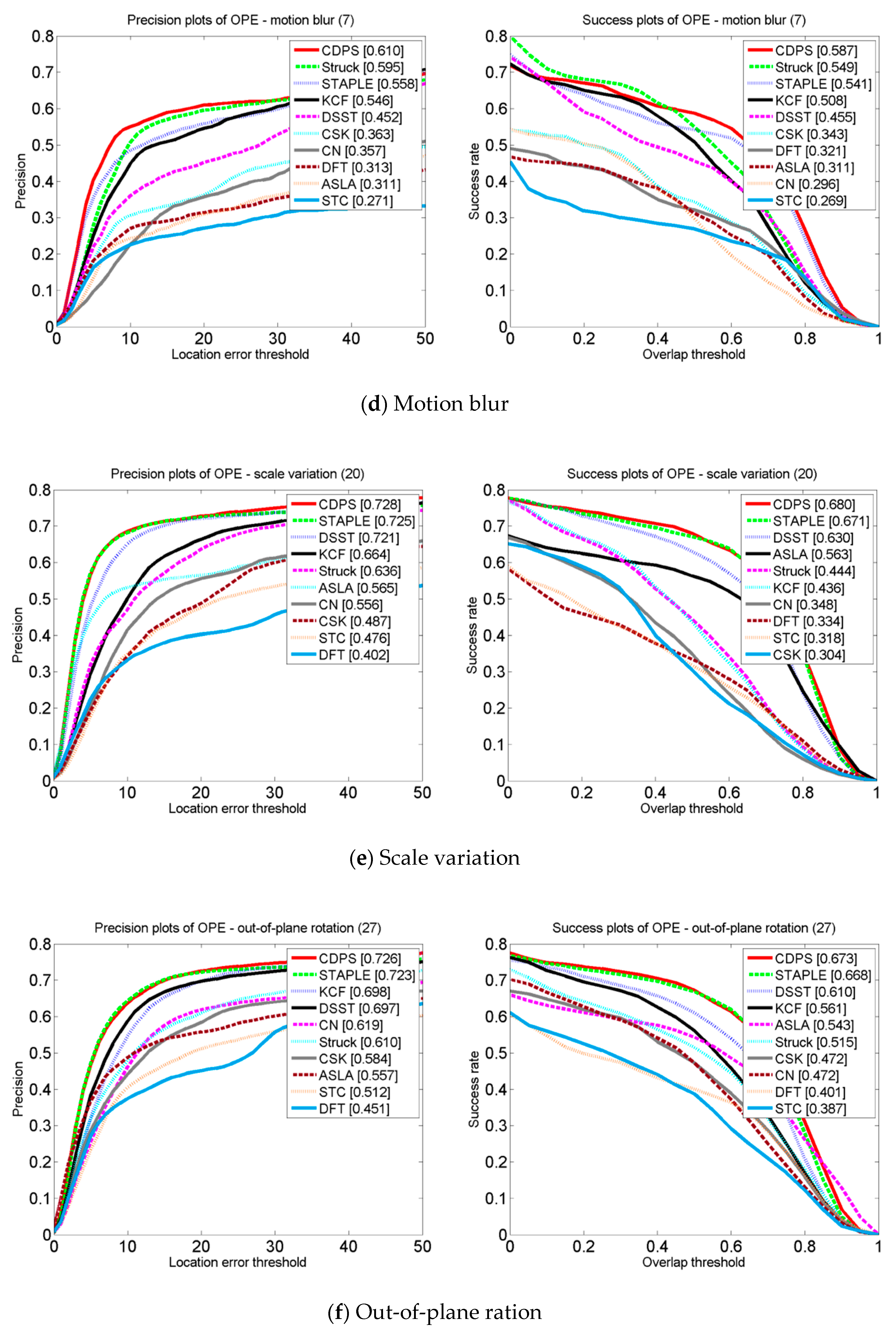

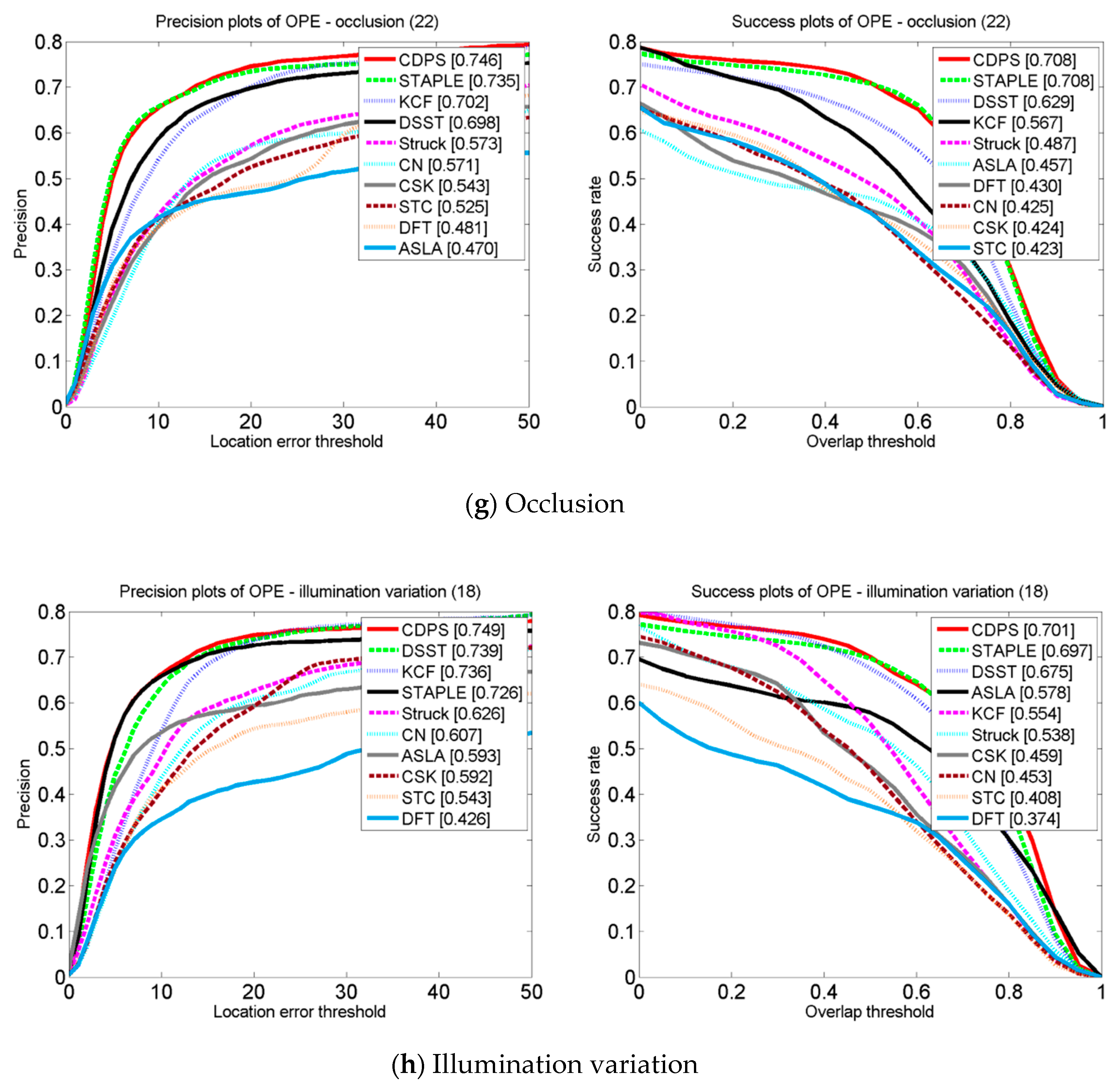

4.3. Quantitative Analysis

4.3.1. Quantitative Analysis of Feature Comparison Experiments

4.3.2. Comparative Analysis of Each Tracking Algorithm

4.3.3. Quantitative Analysis of the Dimensional Reduction of PCA Scale

4.3.4. Overall Tracking Performance

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Smeulders, A.W.; Chu, D.M.; Cucchiara, R.; Calderara, S.; Dehghan, A.; Shah, M. Visual Tracking: An Experimental Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 1442–1468.

- Trucco, E.; Plakas, K. Video Tracking: A Concise Survey. IEEE J. Ocean. Eng. 2006, 31, 520–529.

- Tsagkatakis, G.; Savakis, A. Online Distance Metric Learning for Object Tracking. IEEE Trans. Circuits Syst. Video Technol. 2011, 21, 1810–1821.

- Yilmaz, A. Object tracking: A survey. ACM Comput. Surv. 2006, 38, 13.

- Zhang, X.; Yu, Q.; Yu, H. Physics Inspired Methods for Crowd Video Surveillance and Analysis: A Survey. IEEE Access 2018, 6, 66816–66830.

- Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual object tracking using adaptive Correlation Filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. Exploiting the Circulant Structure of Tracking-by-Detection with Kernels. In Computer Vision – ECCV 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 702–715.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 583–596.

- Danelljan, M.; Häger, G.; Khan, F.S.; Felsberg, M. Accurate Scale Estimation for Robust Visual Tracking. In Proceedings of the British Machine Vision Conference, Nottingham, UK, 1–5 September 2014; pp. 65.1–65.11.

- Khan, F.S.; van de Weijer, J.; Vanrell, M. Modulating shape features by color attention for object recognition. Int. J. Comput. Vis. 2012, 98, 49–64.

- van de Weijer, J.; Schmid, C. Coloring local feature extraction. In European Conference on Computer Vision 2006 May 7; Springer: Berlin/Heidelberg, Germany, 2006; pp. 334–348.

- Khan, F.S.; Anwer, R.M.; van de Weijer, J.; Bagdanov, A.; Lopez, A.; Felsberg, M. Coloring action recognition in still images. Int. J. Comput. Vis. 2013, 105, 205–221.

- Swain, M.J.; Ballard, D.H. Color indexing. Int. J. Comput. Vis. 1991, 7, 11–32.

- Cheng, Y. Mean Shift, Mode Seeking, and Clustering. IEEE Trans. Pattern Anal. Mach. Intell. 1995, 17, 790–799.

- Danelljan, M.; Khan, F.S.; Felsberg, M.; Van de Weijer, J. Adaptive Color Attributes for Real-Time Visual Tracking. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1090–1097.

- Possegger, H.; Mauthner, T.; Bischof, H. In defense of color-based model-free tracking. In IEEE Conference on Computer Vision and Pattern Recognition; IEEE: Piscataway, NJ, USA, 2015; pp. 2113–2120.

- Li, P.; Li, X. Mean shift tracking algorithm based on gradient feature and color feature fusion. Microcomput. Appl. 2011, 30, 35–38.

- Dong, W.; Yu, S.; Liu, S.; Zhang, Z.; Gu, W. Image Retrieval Based on Multi-feature Fusion. In Proceedings of the 2014 Fourth International Conference on Instrumentation and Measurement, Computer, Communication and Control, Harbin, China, 18–20 September 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 240–243.

- Huang, M.; Shu, H.; Ma, Y.; Gong, Q. Content-based image retrieval technology using multi-feature fusion. Optik–Int. J. Light Electron Opt. 2015, 126, 2144–2148.

- Moreno-Noguer, F.; Andrade-Cetto, J.; Sanfeliu, A. Fusion of Color and Shape for Object Tracking under Varying Illumination. Lect. Notes Comput. Sci. 2003, 2652, 580–588.

- Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H. Staple: Complementary Learners for Real-Time Tracking. In IEEE Conference on Computer Vision and Pattern Recognition; IEEE: Piscataway, NJ, USA, 2015; pp. 1401–1409.

- Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ECO: Efficient Convolution Operators for Tracking. In IEEE Conference on Computer Vision and Pattern Recognition; IEEE: Piscataway, NJ, USA, 2016.

- Li, F.; Tian, C.; Zuo, W.; Zhang, L.; Yang, M.H. Learning Spatial-Temporal Regularized Correlation Filters for Visual Tracking. arXiv, 2018; arXiv:1803.08679.

- Danelljan, M.; Häger, G.; Khan, F.S.; Felsberg, M. Discriminative Scale Space Tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1561–1575.

- Urban, J.P.; Buessler, J.L.; Kihl, H. Color histogram footprint technique for visual object tracking. In Proceedings of the 2005 IEEE Conference on Control Applications (CCA 2005), Toronto, ON, Canada, 28–31 August 2005; pp. 761–766.

- Chen, T.M.; Luo, R.C.; Hsiao, T.H. Visual tracking using adaptive color histogram model. In Proceedings of the 25th Annual Conference of the IEEE Industrial Electronics Society (Cat. No.99CH37029), San Jose, CA, USA, 29 November–3 December 1999; pp. 1336–1341.

- Leichter, I.; Lindenbaum, M.; Rivlin, E. Mean Shift tracking with multiple reference color histograms. Comput. Vis. Image Understand. 2010, 114, 400–408.

- Yan, Z.; Zhan, H.B.; Wei, W.; Wang, K. Weighted Color Histogram Based Particle Filter for Visual Target Tracking. Control Decis. 2006, 21, 868.

- Dong, H.; Gao, J.; Hu, L.; Dong, W. Research on the shape feature extraction and recognition based on principal components analysis. J. Hefei Univ. Technol. 2003, 26, 176–179.

- Schölkopf, B.; Smola, A.J.; Müller, K.R. Kernel principal component analysis. In Artificial Neural Networks – ICANN’97; Springer: Berlin/Heidelberg, Germany, 1997; pp. 555–559.

- Zhou, J.; Xing, H.E. Study on the Evaluation on the Core Journals of Management Science Based on Principle Component Analysis. Sci-Tech Inf. Dev. Econ. 2015, 25, 127–130.

- Wu, Y.; Lim, J.; Yang, M.H. Online Object Tracking: A Benchmark. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 2411–2418.

| System | CPU | Frequency | System Type | RAM | Experimental Software |
|---|---|---|---|---|---|
| Windows 10 | Intel i7-7700K | 4.20 GHz | 64-bit | 16.0 GB | Matlab R2014a |

| Video | Number of Frames | Main Challenges |
|---|---|---|
| Couple | 1140 | SV, DEF, OPR, IPR |
| Basketball | 725 | IV, DEF, OPR, IPR |
| Walking2 | 500 | OCC, SV |
| Deer | 71 | FM, MB |
| Singer1 | 351 | IV, SV |

| Characteristic | Staple [21] | DAT [18] | CN [15] | DSST [9] | CDPS |
|---|---|---|---|---|---|
| Illumination variation | 0.726 | 0.357 | 0.607 | 0.739 | 0.763 |
| In-plane rotation | 0.747 | 0.427 | 0.624 | 0.618 | 0.810 |
| Scale variation | 0.725 | 0.403 | 0.556 | 0.721 | 0.728 |
| Occlusion | 0.735 | 0.387 | 0.571 | 0.698 | 0.746 |
| Deformation | 0.704 | 0.589 | 0.589 | 0.599 | 0.742 |
| Out-of-plane rotation | 0.723 | 0.395 | 0.619 | 0.697 | 0.726 |
| Distance precision | 0.753 | 0.396 | 0.597 | 0.695 | 0.763 |

| Characteristic | Staple [21] | DAT [18] | CN [15] | DSST [9] | CDPS |
|---|---|---|---|---|---|
| Illumination variation | 0.697 | 0.300 | 0.453 | 0.675 | 0.701 |
| In-plane rotation | 0.742 | 0.385 | 0.539 | 0.565 | 0.777 |
| Scale variation | 0.671 | 0.282 | 0.348 | 0.630 | 0.680 |
| Occlusion | 0.708 | 0.348 | 0.425 | 0.629 | 0.708 |
| Deformation | 0.692 | 0.448 | 0.432 | 0.556 | 0.717 |
| Out-of-plane rotation | 0.668 | 0.294 | 0.472 | 0.610 | 0.673 |
| Overlap precision | 0.714 | 0.309 | 0.470 | 0.636 | 0.725 |

| Video | Staple [21] | DAT [18] | CN [15] | DSST [9] | CNDAT | CDPS |
|---|---|---|---|---|---|---|
| Trellis | 24.4318 | 33.7101 | 110.1833 | 21.2816 | 20.5840 | 21.4211 |
| Doll | 34.6974 | 31.6614 | 111.1300 | 21.5115 | 26.7379 | 27.6085 |
| Dog1 | 44.2728 | 99.3431 | 220.2279 | 35.3933 | 34.9900 | 35.7940 |
| Lemming | 26.9557 | 27.2720 | 64.6727 | 12.3505 | 18.4105 | 24.0765 |
| Liquor | 22.4907 | 28.6155 | 35.9686 | 7.4456 | 20.9842 | 22.4102 |
| Average | 30.56968 | 44.1204 | 108.4365 | 19.5965 | 24.34132 | 26.13898 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hu, S.; Ge, Y.; Han, J.; Zhang, X. Object Tracking Algorithm Based on Dual Color Feature Fusion with Dimension Reduction. Sensors 2019, 19, 73. https://doi.org/10.3390/s19010073
