Spatio-Temporal Field Estimation Using Kriged Kalman Filter (KKF) with Sparsity-Enforcing Sensor Placement

Abstract

1. Introduction

- The performance metrics for estimating both the stationary and the non-stationary components of the field are expressed in closed form as explicit functions of the sensor location selection vector.

- This analytical formalism addresses two important issues in the sensor placement step. First, it accounts for the fact that, when the non-stationary component of the field is estimated, the stationary component acts as spatially correlated observation noise. Second, it applies to a general class of spatial covariance matrices of the stationary component of the field, even when they are ill-conditioned or close to singular [25].

- The proposed sensor placement problem minimizes a cost function comprising the sum of the mean square errors (MSEs) of the stationary and the non-stationary components of the field and a spatial sparsity-enforcing penalty. The overall optimization problem also satisfies a flexible resource constraint at every time instant.

2. Signal Modelling and Problem Formulation

2.1. Measurement Model

2.2. Modelling of the Spatial Variability

2.3. State Model

2.4. Main Problem Statement

2.5. Simple KKF Estimator and Estimation Error Covariance

2.6. Performance Metrics as a Function of the Selection Vector

3. KKF with Sensor Placement

3.1. Sensor Placement Problem as an SDP

- First, consider the non-convex version of the optimization problem (25) without the sparsity-enforcing penalty. In this case, the MSE cost is minimized, i.e., the best estimation performance is achieved, when the maximum allowed number of candidate locations is selected. The number of selected locations then cannot be reduced below this maximum, and the lower-bound constraint on the number of selected locations becomes redundant.

- Note that dropping the resource constraint (25b) and increasing the sparsity-enforcing regularization parameter reduces the number of selected sensing locations. However, there is no explicit relation between this parameter and the number of selected locations, so it is difficult to control the resource allocation directly through the regularization parameter.

- The proposed formulation (25) is not a direct MSE minimization problem; it attains a specific MSE while enforcing sparsity in the spatial sensor location selection through the second summand of (25a). The sparsity enforcement is lower bounded by the minimum number of sensing locations that must be selected at any t. For an arbitrary choice of the regularization parameter, the number of selected sensing locations therefore never falls below this minimum.

- Lastly, a sparsity-enforcing design of the selection vector can also be achieved by retaining only the second summand of the objective function (25a) and imposing a separate performance constraint that bounds the estimation error by a desired threshold [20,22]. This threshold can be time-varying or independent of t, depending on the application. However, in many practical scenarios, it can be difficult to set the performance threshold a priori for every t.

- The simplest approach is to set the non-zero entries of the relaxed selection vector to 1. However, the magnitudes of two non-zero entries can differ greatly. Since entries with a high magnitude (close to 1) indicate more informative sensing locations, the entries can be sorted in ascending order of magnitude [18] and a selection threshold can be chosen from the sorted magnitudes. Each entry of the Boolean selection vector is then set to 1 if the corresponding relaxed entry reaches the threshold and to 0 otherwise.

- Another approach is stochastic: every entry of the relaxed selection vector is interpreted as the probability that the corresponding sensing location is selected at time t. Multiple random realizations of the Boolean selection vector are generated, in which each entry equals 1 with that probability. The realization that satisfies the constraints and minimizes the estimation error is then selected [20].
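
The two rounding approaches above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the relaxed vector `relaxed`, the threshold `gamma`, the cardinality bounds `k_min` and `k_max`, and the toy cost `posterior_mse` (which treats the candidate locations as independent) are hypothetical and are not taken from the paper.

```python
import random


def posterior_mse(selection, prior_var, noise_var):
    """Toy MSE (assumption): sum of posterior variances of independent locations."""
    return sum(1.0 / (1.0 / p + s / r)
               for s, p, r in zip(selection, prior_var, noise_var))


def threshold_rounding(relaxed, gamma):
    """Set an entry to 1 when the relaxed value reaches the threshold gamma."""
    return [1 if value >= gamma else 0 for value in relaxed]


def randomized_rounding(relaxed, k_min, k_max, cost, draws=200, seed=0):
    """Draw Boolean vectors with P(w_j = 1) = relaxed[j]; keep the best feasible one."""
    rng = random.Random(seed)
    best, best_cost = None, float("inf")
    for _ in range(draws):
        candidate = [1 if rng.random() < p else 0 for p in relaxed]
        if not k_min <= sum(candidate) <= k_max:
            continue  # realization violates the resource constraint
        candidate_cost = cost(candidate)
        if candidate_cost < best_cost:
            best, best_cost = candidate, candidate_cost
    return best, best_cost


# Hypothetical relaxed solution for N = 6 candidate locations.
relaxed = [0.95, 0.02, 0.60, 0.00, 0.88, 0.10]
prior_var = [1.0] * 6
noise_var = [0.1] * 6

w_threshold = threshold_rounding(relaxed, gamma=0.5)  # [1, 0, 1, 0, 1, 0]
w_random, mse_random = randomized_rounding(
    relaxed, k_min=2, k_max=4,
    cost=lambda w: posterior_mse(w, prior_var, noise_var))
```

Thresholding is deterministic and cheap, but the result depends on the chosen threshold. Randomized rounding evaluates the cost for every feasible draw, so it is more expensive, but it only returns realizations that satisfy the resource constraint.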

3.2. Spatial Sensor Placement for Stationary Field Estimation

3.3. Sparsity-Enhancing Iterative Design

- Initialize, weight vector , , and maximum number of iterations I.

- for

- , for every

- end;

- set.
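
As a rough illustration of an iterative sparsity-enhancing design, the sketch below applies a reweighted ℓ1 scheme in the spirit of [29] to a toy problem. Several things here are assumptions rather than the paper's method: the weight update `1 / (eps + w_j)` is the standard rule from the reweighted ℓ1 literature, the SDP is replaced by a separable surrogate with a closed-form solution (independent locations, no resource constraint), and all names and numerical values are hypothetical.

```python
import math


def solve_weighted_l1(prior_var, noise_var, lam, weights):
    """Minimize sum_j 1/(1/p_j + w_j/r_j) + lam * sum_j u_j * w_j over w in [0, 1]^N.

    The toy problem is separable and convex, so each coordinate is the
    stationary point of its own term, clipped to the interval [0, 1].
    """
    selection = []
    for p, r, u in zip(prior_var, noise_var, weights):
        a, b = 1.0 / p, 1.0 / r
        stationary = (math.sqrt(b / (lam * u)) - a) / b
        selection.append(min(1.0, max(0.0, stationary)))
    return selection


def reweighted_l1(prior_var, noise_var, lam, eps=1e-3, max_iter=5):
    """Re-solve the weighted problem, penalizing small entries more each pass."""
    weights = [1.0] * len(prior_var)
    selection = solve_weighted_l1(prior_var, noise_var, lam, weights)
    for _ in range(max_iter):
        weights = [1.0 / (eps + w) for w in selection]
        selection = solve_weighted_l1(prior_var, noise_var, lam, weights)
    return selection


# Hypothetical prior and noise variances for N = 5 candidate locations.
prior_var = [2.0, 1.0, 0.5, 1.0 / 3.0, 0.25]
noise_var = [0.5] * 5

first_pass = solve_weighted_l1(prior_var, noise_var, lam=0.1, weights=[1.0] * 5)
final = reweighted_l1(prior_var, noise_var, lam=0.1)
```

In this toy setting the first pass leaves two fractional entries, and the reweighting drives them to zero while the entries already at 1 stay there, which is the qualitative behavior such a scheme is meant to produce.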

3.4. KKF Algorithm with Sensor Placement

Algorithm 1. Sensor placement followed by a KKF estimator.

4. Simulation Results

4.1. Sensor Placement Followed by Field Estimation Using KKF

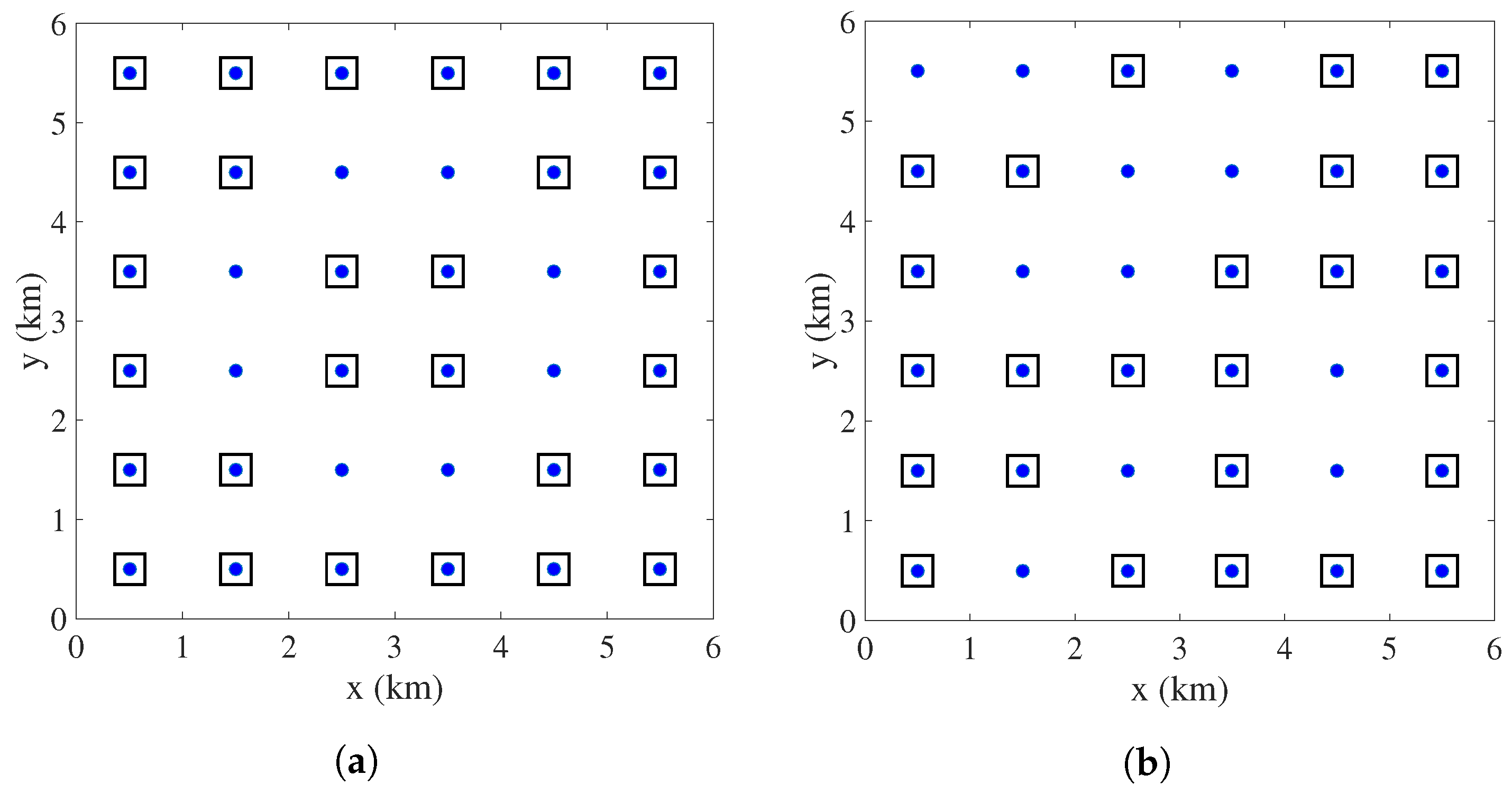

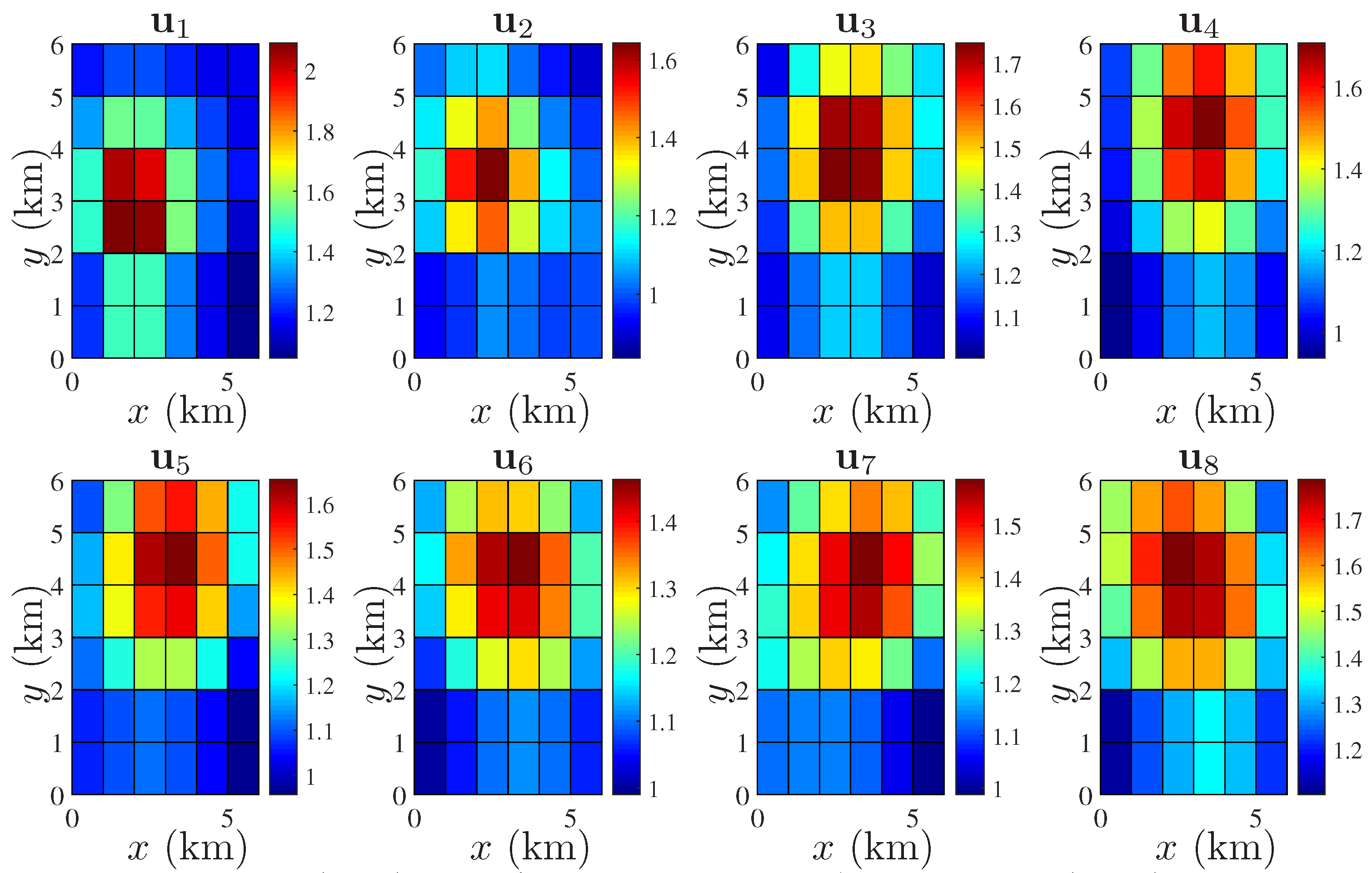

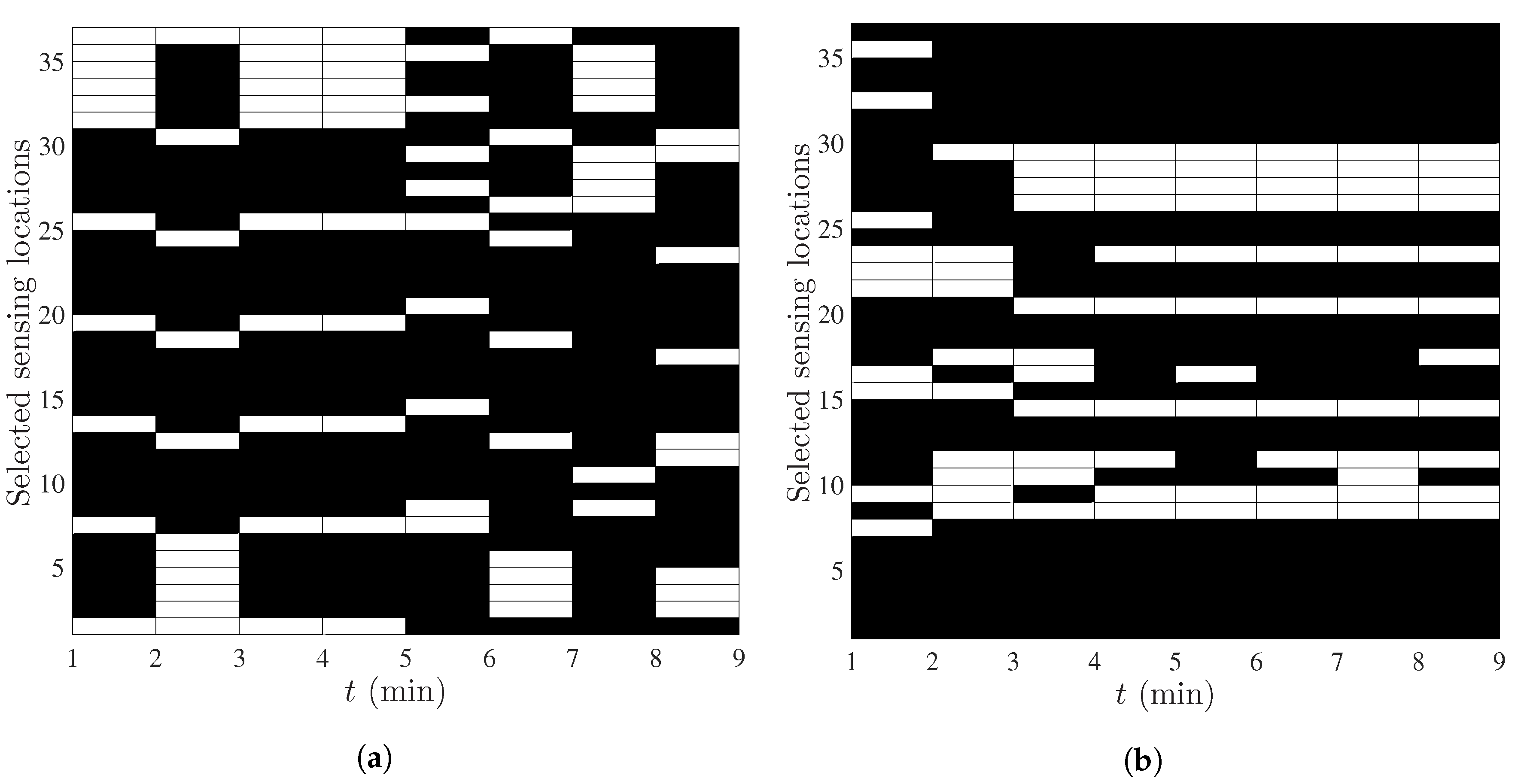

- In the second scenario, we assume a very small, fixed translation for the first 4 snapshots and no translation for the last 4 snapshots (Figure 5). Almost the same set of sensors is selected in the last 4 snapshots of Figure 6b. In general, when the state dynamics do not change with time, the estimation error of the non-stationary component reaches a steady state after a number of snapshots and the same set of sensors is selected at every t.
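
The steady-state behavior can be illustrated with a scalar Kalman filter whose dynamics and measurement setup are held fixed. This is a minimal sketch and not the KKF of the paper: the function name `riccati_trace` and the values of `a`, `q`, `r`, and `p0` are hypothetical.

```python
def riccati_trace(a, q, r, p0, steps):
    """Posterior error variance of a scalar Kalman filter with fixed dynamics."""
    variances = []
    p = p0
    for _ in range(steps):
        p_pred = a * a * p + q        # prediction step
        gain = p_pred / (p_pred + r)  # Kalman gain for a direct measurement
        p = (1.0 - gain) * p_pred     # correction step
        variances.append(p)
    return variances


# Hypothetical values: stable dynamics, fixed process and measurement noise.
trace = riccati_trace(a=0.9, q=0.1, r=0.5, p0=1.0, steps=30)
print(trace[0], trace[-1])
```

Because the recursion depends only on the previous variance and on constant parameters, the error variance settles to a fixed value. Any selection rule driven by that variance then sees the same input at every snapshot, which matches the repeated sensor set observed above.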

4.2. Performance Analysis

4.3. Spatial Sensor Placement for Stationary Field Estimation

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

1. Oliveira, L.; Rodrigues, J. Wireless sensor networks: A survey on environmental monitoring. J. Commun. 2011, 6, 143–151.

2. Hart, J.K.; Martinez, K. Environmental sensor networks: A revolution in the earth system science? Earth-Sci. Rev. 2006, 78, 177–191.

3. Cressie, N.; Wikle, C.K. Statistics for Spatio-Temporal Data; John Wiley & Sons: Hoboken, NJ, USA, 2011.

4. Mardia, K.V.; Goodall, C.; Redfern, E.J.; Alonso, F.J. The kriged Kalman filter. Test 1998, 7, 217–282.

5. Wikle, C.K.; Cressie, N. A dimension-reduced approach to space-time Kalman filtering. Biometrika 1999, 86, 815–829.

6. Kim, S.J.; Dall’Anese, E.; Giannakis, G.B. Cooperative spectrum sensing for cognitive radios using kriged Kalman filtering. IEEE J. Sel. Top. Signal Process. 2011, 5, 24–36.

7. Rajawat, K.; Dall’Anese, E.; Giannakis, G. Dynamic network delay cartography. IEEE Trans. Inf. Theory 2014, 60, 2910–2920.

8. Sahu, S.K.; Mardia, K.V. A Bayesian kriged Kalman model for short term forecasting of air pollution levels. J. R. Stat. Soc. Ser. C (Appl. Stat.) 2005, 54, 223–244.

9. Cortes, J. Distributed Kriged Kalman filter for spatial estimation. IEEE Trans. Autom. Control 2009, 54, 2816–2827.

10. Krause, A.; Singh, A.; Guestrin, C. Near-optimal sensor placements in Gaussian processes: Theory, efficient algorithms and empirical studies. J. Mach. Learn. Res. 2008, 9, 235–284.

11. Ranieri, J.; Chebira, A.; Vetterli, M. Near-optimal sensor placement for linear inverse problems. IEEE Trans. Signal Process. 2014, 62, 1135–1146.

12. Carmi, A. Sensor scheduling via compressed sensing. In Proceedings of the 13th Conference on Information Fusion (FUSION), Edinburgh, UK, 26–29 July 2010.

13. Fu, Y.; Ling, Q.; Tian, Z. Distributed sensor allocation for multi-target tracking in wireless sensor networks. IEEE Trans. Aerosp. Electron. Syst. 2012, 48, 3538–3553.

14. Patan, M. Optimal Sensor Networks Scheduling in Identification of Distributed Parameter Systems; Springer Science & Business Media: Berlin, Germany, 2012; Volume 425.

15. Liu, S.; Fardad, M.; Masazade, E.; Varshney, P.K. Optimal periodic sensor scheduling in networks of dynamical systems. IEEE Trans. Signal Process. 2014, 62, 3055–3068.

16. Mo, Y.; Ambrosino, R.; Sinopoli, B. Sensor selection strategies for state estimation in energy constrained wireless sensor networks. Automatica 2011, 47, 1330–1338.

17. Akyildiz, I.F.; Vuran, M.C.; Akan, O.B. On exploiting spatial and temporal correlation in wireless sensor networks. In Proceedings of the WiOpt, Cambridge, UK, March 2004; Volume 4, pp. 71–80.

18. Joshi, S.; Boyd, S. Sensor selection via convex optimization. IEEE Trans. Signal Process. 2009, 57, 451–462.

19. Jamali-Rad, H.; Simonetto, A.; Ma, X.; Leus, G. Distributed sparsity-aware sensor selection. IEEE Trans. Signal Process. 2015, 63, 5951–5964.

20. Chepuri, S.P.; Leus, G. Sparsity-promoting sensor selection for non-linear measurement models. IEEE Trans. Signal Process. 2015, 63, 684–698.

21. Liu, S.; Cao, N.; Varshney, P.K. Sensor placement for field estimation via Poisson disk sampling. In Proceedings of the 2016 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Washington, DC, USA, 7–9 December 2016; pp. 520–524.

22. Roy, V.; Simonetto, A.; Leus, G. Spatio-temporal sensor management for environmental field estimation. Signal Process. 2016, 128, 369–381.

23. Jindal, A.; Psounis, K. Modeling spatially correlated data in sensor networks. ACM Trans. Sens. Netw. 2006, 2, 466–499.

24. Liu, S.; Chepuri, S.P.; Fardad, M.; Maşazade, E.; Leus, G.; Varshney, P.K. Sensor selection for estimation with correlated measurement noise. IEEE Trans. Signal Process. 2016, 64, 3509–3522.

25. Ababou, R.; Bagtzoglou, A.C.; Wood, E.F. On the condition number of covariance matrices in kriging, estimation, and simulation of random fields. Math. Geol. 1994, 26, 99–133.

26. Sigrist, F.; Künsch, H.R.; Stahel, W.A. A dynamic nonstationary spatio-temporal model for short term prediction of precipitation. Ann. Appl. Stat. 2012, 6, 1452–1477.

27. Kay, S.M. Fundamentals of Statistical Signal Processing: Estimation Theory; PTR Prentice-Hall: Englewood Cliffs, NJ, USA, 1993.

28. Boyd, S.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, UK, 2009.

29. Candes, E.; Wakin, M.; Boyd, S. Enhancing sparsity by reweighted ℓ1 minimization. J. Fourier Anal. Appl. 2008, 14, 877–905.

30. Grant, M.; Boyd, S.; Ye, Y. CVX: Matlab Software for Disciplined Convex Programming; CVX Research, Inc.: Austin, TX, USA, 2008.

31. Sturm, J.F. Using SeDuMi 1.02, a MATLAB toolbox for optimization over symmetric cones. Optim. Methods Softw. 1999, 11, 625–653.

32. Simonetto, A.; Dall’Anese, E. Prediction-correction algorithms for time-varying constrained optimization. IEEE Trans. Signal Process. 2017, 65, 5481–5494.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Roy, V.; Simonetto, A.; Leus, G. Spatio-Temporal Field Estimation Using Kriged Kalman Filter (KKF) with Sparsity-Enforcing Sensor Placement. Sensors 2018, 18, 1778. https://doi.org/10.3390/s18061778
