2.1. Notation and Preliminaries

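Matrices and vectors are shown in bold capital and bold lower-case fonts, respectively. Sets are denoted with calligraphic fonts (e.g., $\mathcal{S}$), and their cardinality is denoted as $|\mathcal{S}|$. For a vector $\mathbf{x}$, $x_i$ is the $i$-th entry, and $\mathbf{x}_{\mathcal{S}}$ is the subvector of $\mathbf{x}$ corresponding to the entries with indices in $\mathcal{S}$. We define the $\ell_2$-norm, $\|\mathbf{x}\|_2 = \sqrt{\sum_i x_i^2}$; the $\ell_1$-norm, $\|\mathbf{x}\|_1 = \sum_i |x_i|$; and the $\ell_0$-norm, $\|\mathbf{x}\|_0 = |\{i : x_i \neq 0\}|$ (i.e., the number of nonzero elements).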

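$\operatorname{sign}(\mathbf{x})$ is a sign vector with entries $\operatorname{sign}(x_i) = 1$ if $x_i > 0$, $\operatorname{sign}(x_i) = -1$ if $x_i < 0$, and zero otherwise. Based on the sign operator, we define the shrinkage operator:

$$\operatorname{shrink}(\mathbf{x}, \tau) = \operatorname{sign}(\mathbf{x}) \odot \max\left(|\mathbf{x}| - \tau,\ 0\right),$$

where $\tau \ge 0$ is a threshold, and $\odot$, $|\cdot|$, and $\max(\cdot, 0)$ act entry-wise.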
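The definitions above can be sketched in a few lines of NumPy; the helper names are illustrative and not taken from the original work. Note that the shrinkage operator is the proximal operator of $\tau\|\cdot\|_1$, i.e., it solves $\min_{\mathbf{a}} \tfrac{1}{2}\|\mathbf{a} - \mathbf{x}\|_2^2 + \tau\|\mathbf{a}\|_1$, which is why it appears inside iterative LASSO solvers.

```python
import numpy as np

# Illustrative helpers mirroring the notation section (names are hypothetical).
def l2_norm(x):
    return float(np.sqrt(np.sum(x ** 2)))

def l1_norm(x):
    return float(np.sum(np.abs(x)))

def l0_norm(x):
    # Number of nonzero entries (not a true norm, but conventionally called one).
    return int(np.count_nonzero(x))

def shrink(x, tau):
    # Entry-wise soft thresholding: sign(x) * max(|x| - tau, 0).
    # np.sign returns 1, -1, or 0, matching the sign operator defined above.
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

x = np.array([3.0, -0.5, 0.0, -2.0])
print(l0_norm(x), l1_norm(x))  # 3 5.5
print(shrink(x, 1.0))          # every entry moved toward zero by 1, small ones clipped to 0
```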

For a matrix $\mathbf{A}$ and an index set $\mathcal{S}$, $\mathbf{A}_{\mathcal{S}}$ is the submatrix containing only the rows of $\mathbf{A}$ with indices in $\mathcal{S}$. $\operatorname{vec}(\mathbf{A})$ denotes the column-wise vectorization of matrix $\mathbf{A}$. Letters d and r in superscript refer to the components related to the depth and reflectance information, respectively. Letters v and h in subscript refer to the components related to the vertical and horizontal directions, respectively.

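Ridge detection for range measurements. We first take a scan from a 2D laser scanner as an example to introduce our ridge-detection operator and then extend the approach to 3D depth profiles. In Figure 1, we plot the depth profile $\mathbf{x} \in \mathbb{R}^n$ of a typical indoor environment, obtained with a standard planar range finder that measures at known, discrete angles. The profile is clearly regular and contains only a few corners. Considering three consecutive points at coordinates $(t_{i-1}, x_{i-1})$, $(t_i, x_i)$, and $(t_{i+1}, x_{i+1})$, there is a corner at $i$ if the slope changes there:

$$\frac{x_{i+1} - x_i}{t_{i+1} - t_i} \neq \frac{x_i - x_{i-1}}{t_i - t_{i-1}}.$$

In the following, we assume that $t_{i+1} - t_i = 1$ for all $i$ (this assumption comes without loss of generality, since the sampling grid can be defined at arbitrary resolution). Therefore, corner detection for the 2D profile amounts to finding the indices $i$ such that $x_{i-1} - 2x_i + x_{i+1} \neq 0$. To make the notation more compact, we introduce the second-order difference operator:

$$\mathbf{D} = \begin{bmatrix} 1 & -2 & 1 & & & \\ & 1 & -2 & 1 & & \\ & & \ddots & \ddots & \ddots & \\ & & & 1 & -2 & 1 \end{bmatrix} \in \mathbb{R}^{(n-2) \times n}. \tag{1}$$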

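Thus, the simple operation $\mathbf{D}\mathbf{x}$ can detect corners: its nonzero entries mark them. For a 3D depth profile, i.e., a depth map $\mathbf{X}^d \in \mathbb{R}^{m \times n}$, we estimate the ridges along the vertical and horizontal directions, $\mathbf{R}^d_v$ and $\mathbf{R}^d_h$, respectively:

$$\mathbf{R}^d_v = \mathbf{D}_v \mathbf{X}^d, \qquad \mathbf{R}^d_h = \mathbf{X}^d \mathbf{D}_h^\top, \tag{2}$$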

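where the matrices $\mathbf{D}_v \in \mathbb{R}^{(m-2) \times m}$ and $\mathbf{D}_h \in \mathbb{R}^{(n-2) \times n}$ are the same as $\mathbf{D}$ in Equation (1), but with suitable dimensions. To combine the two equations of (2), we reformulate them as below:

$$\mathbf{r}^d = \begin{bmatrix} \operatorname{vec}(\mathbf{R}^d_v) \\ \operatorname{vec}(\mathbf{R}^d_h) \end{bmatrix} = \underbrace{\begin{bmatrix} \mathbf{I}_n \otimes \mathbf{D}_v \\ \mathbf{D}_h \otimes \mathbf{I}_m \end{bmatrix}}_{\mathbf{M}} \mathbf{x}^d. \tag{3}$$

Here, $\otimes$ is the Kronecker product, $\mathbf{I}_m$ and $\mathbf{I}_n$ are identity matrices, and $\mathbf{x}^d = \operatorname{vec}(\mathbf{X}^d)$; the two blocks of $\mathbf{M}$ follow from the identity $\operatorname{vec}(\mathbf{U}\mathbf{X}\mathbf{V}) = (\mathbf{V}^\top \otimes \mathbf{U})\operatorname{vec}(\mathbf{X})$.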
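To make Equations (1)–(3) concrete, here is a minimal NumPy sketch, assuming unit sample spacing as above and column-major ordering for $\operatorname{vec}(\cdot)$. The helper names (`second_diff_operator`, `detect_corners`, `ridge_maps`, `stacked_operator`) are hypothetical. The sketch builds $\mathbf{D}$, flags the corner of a 1D "roof" profile through the nonzero entries of $\mathbf{D}\mathbf{x}$, computes both ridge maps of a depth map, and checks that the stacked Kronecker operator $\mathbf{M}$ reproduces them.

```python
import numpy as np

def second_diff_operator(n):
    """(n-2) x n matrix with rows [1, -2, 1] sliding along the diagonal, as in Equation (1)."""
    D = np.zeros((n - 2, n))
    for i in range(n - 2):
        D[i, i:i + 3] = [1.0, -2.0, 1.0]
    return D

def detect_corners(x, tol=1e-9):
    """0-based indices i with x[i-1] - 2*x[i] + x[i+1] != 0 (up to tol)."""
    r = second_diff_operator(len(x)) @ x
    # Row i of D is centred on sample i + 1, hence the shift.
    return np.flatnonzero(np.abs(r) > tol) + 1

def ridge_maps(X):
    """Vertical and horizontal ridge maps of a depth map X, Equation (2)."""
    m, n = X.shape
    return second_diff_operator(m) @ X, X @ second_diff_operator(n).T

def stacked_operator(m, n):
    """M = [I_n kron D_v; D_h kron I_m], Equation (3), for column-major vec()."""
    Dv, Dh = second_diff_operator(m), second_diff_operator(n)
    return np.vstack([np.kron(np.eye(n), Dv), np.kron(Dh, np.eye(m))])

# A "roof" profile: two straight segments meeting at sample 3.
x = np.array([0.0, 1.0, 2.0, 3.0, 2.0, 1.0, 0.0])
print(detect_corners(x))  # [3]

# The Kronecker form reproduces both ridge maps of a random 5 x 6 depth map.
X = np.random.default_rng(0).standard_normal((5, 6))
Rv, Rh = ridge_maps(X)
r = np.concatenate([Rv.ravel(order="F"), Rh.ravel(order="F")])
print(np.allclose(stacked_operator(5, 6) @ X.ravel(order="F"), r))  # True
```

Dense matrices are used only for readability; for real depth maps, $\mathbf{M}$ has $mn$ columns and would normally be stored in a sparse format.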

2.2. Adaptive SC and Its Solutions

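For each patch (in vectorized form) in the depth ridge maps, we formulate the following adaptive SC model:

$$\min_{\boldsymbol{\alpha}_{v,i}} \|\mathbf{y}_{v,i} - \boldsymbol{\Phi}\boldsymbol{\alpha}_{v,i}\|_2^2 \ \ \text{s.t.}\ \ \|\boldsymbol{\alpha}_{v,i}\|_0 \le k_{v,i}, \qquad \min_{\boldsymbol{\alpha}_{h,i}} \|\mathbf{y}_{h,i} - \boldsymbol{\Phi}\boldsymbol{\alpha}_{h,i}\|_2^2 \ \ \text{s.t.}\ \ \|\boldsymbol{\alpha}_{h,i}\|_0 \le k_{h,i}, \tag{4}$$

where $\mathbf{y}_{v,i}$ and $\mathbf{y}_{h,i}$ represent the $i$-th patches from the vertical and horizontal ridge maps, respectively.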

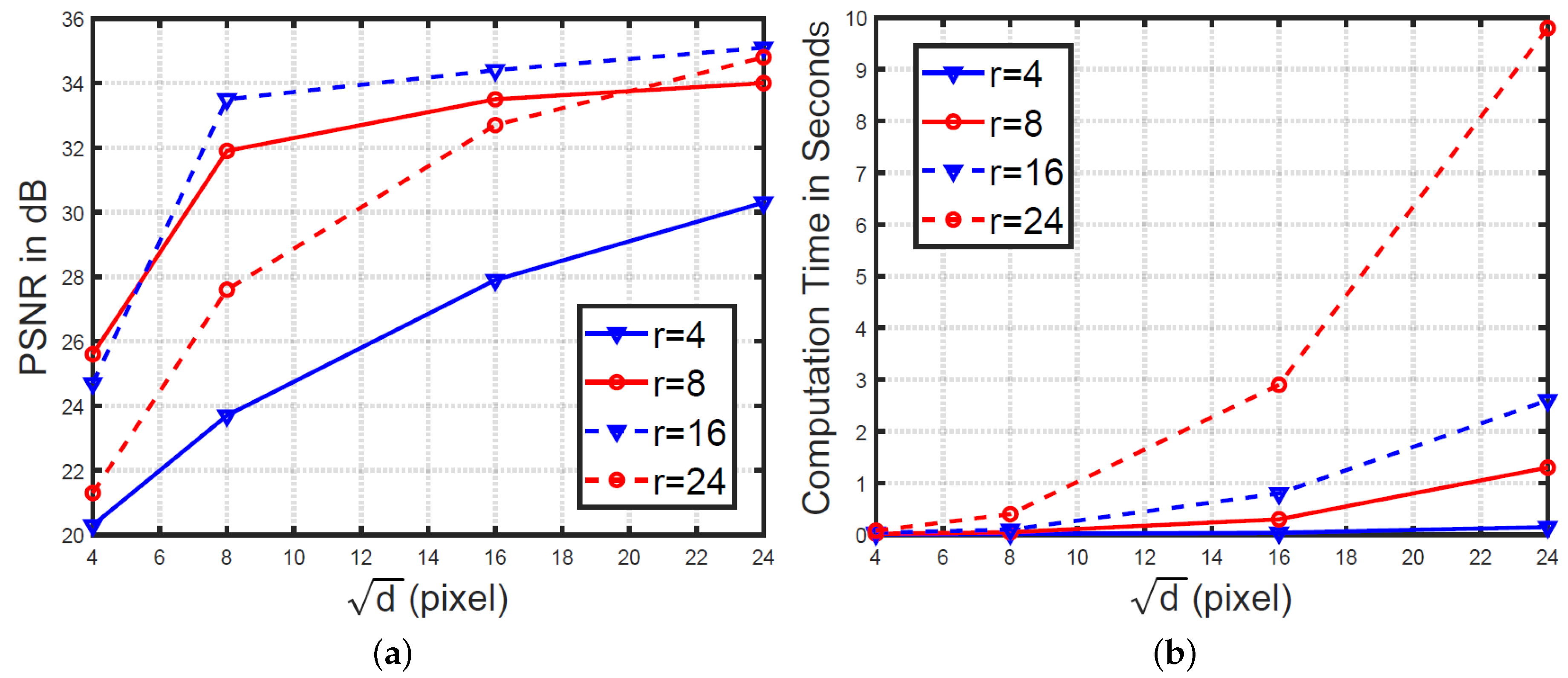

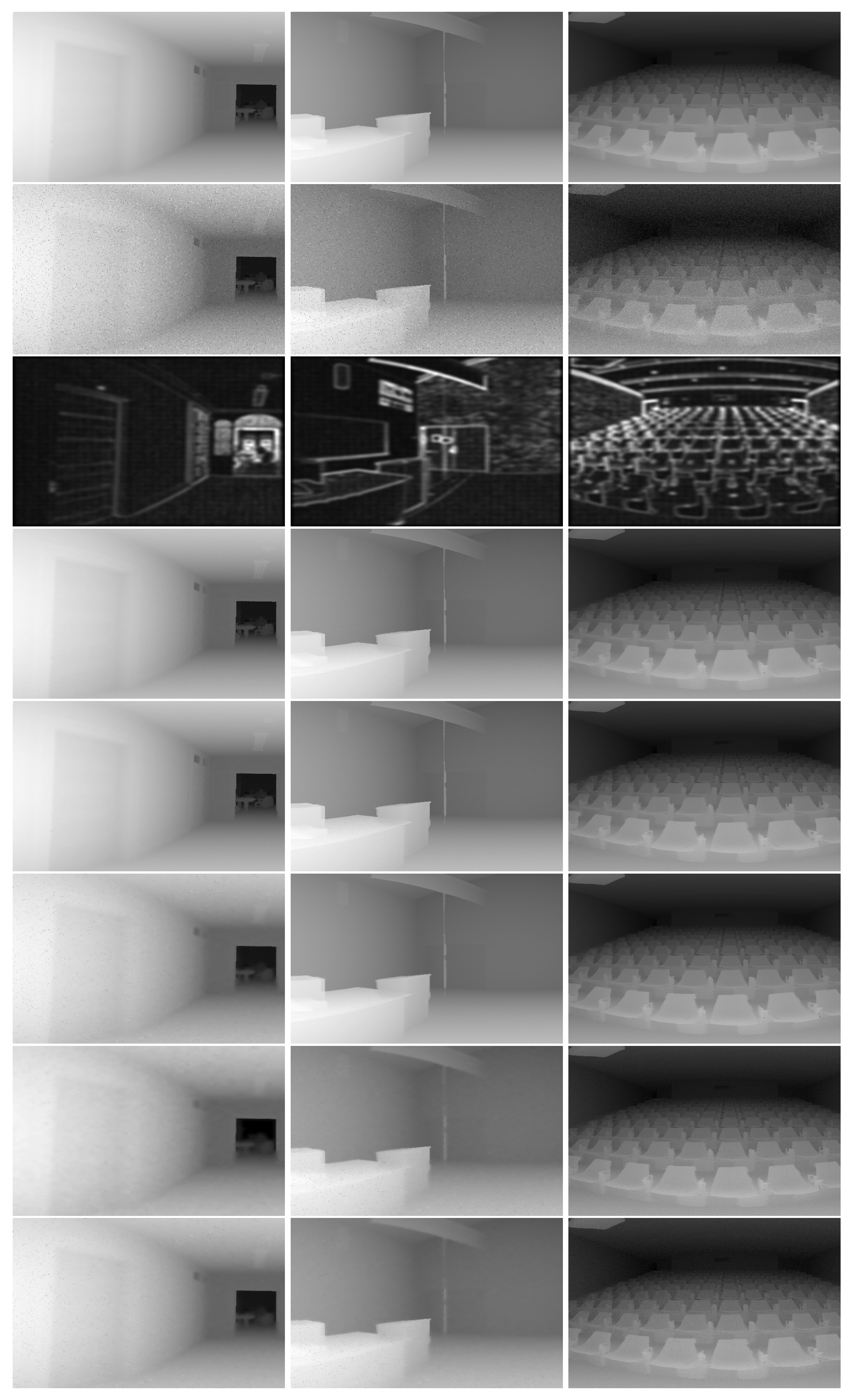

is the fixed pre-learned dictionary, as shown in

Figure 2, which is obtained by applying the basic SC algorithm [

19] on 40 pre-selected ridge maps of man-made scenarios (here, we display our pre-learned dictionary with the optimal setting of parameters as obtained in

Section 3.1.1, namely the number of dictionary atoms is 1024 and the size of each atom is 8 × 8). In [

23], different dictionaries (including off-the-shelf ones such as discrete cosine transform (DCT) basis, wavelets basis, and dictionaries obtained via learning—either pre-learned or learned from the data itself) were tested for image denoising. Although the dictionary learned from the data itself reported the best denoising accuracy, it was also the most computationally demanding. In addition to our pre-learned dictionary, we also displayed the DCT dictionary, Gabor wavelets dictionary in

Figure 2. The parameter tweaking of such dictionaries and their denoising performance are discussed in

Section 3.1.1.

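$\boldsymbol{\alpha}_{v,i}$ and $\boldsymbol{\alpha}_{h,i}$ are the sparse coefficient vectors, and $k_{v,i}$ and $k_{h,i}$ are the given sparsity-controlling parameters. Instead of setting these parameters as constants for all patches, we set them adaptively according to the informative level of each patch (see Section 3.1.1 for details), in a manner similar to that of [20]. Given $k_{v,i}$ and $k_{h,i}$, the popular orthogonal matching pursuit (OMP) algorithm is applied to greedily solve this $\ell_0$-constrained optimization problem, and the batch-OMP (BOMP) technique is exploited to further improve efficiency. As problem (4) is non-convex and NP-hard, we also consider its $\ell_1$ relaxation:

$$\min_{\boldsymbol{\alpha}_{v,i}} \tfrac{1}{2}\|\mathbf{y}_{v,i} - \boldsymbol{\Phi}\boldsymbol{\alpha}_{v,i}\|_2^2 + \lambda_{v,i}\|\boldsymbol{\alpha}_{v,i}\|_1, \qquad \min_{\boldsymbol{\alpha}_{h,i}} \tfrac{1}{2}\|\mathbf{y}_{h,i} - \boldsymbol{\Phi}\boldsymbol{\alpha}_{h,i}\|_2^2 + \lambda_{h,i}\|\boldsymbol{\alpha}_{h,i}\|_1. \tag{5}$$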

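Here, $\lambda_{v,i}$ and $\lambda_{h,i}$, which balance the representation fidelity term and the sparsity penalty term, are again estimated adaptively (see Section 3.1.1 for details). By stacking all $P$ patches, we obtain Equation (6):

$$\min_{\mathbf{C}_v} \sum_{i=1}^{P} \left( \tfrac{1}{2}\|\mathbf{y}_{v,i} - \boldsymbol{\Phi}\boldsymbol{\alpha}_{v,i}\|_2^2 + \lambda_{v,i}\|\boldsymbol{\alpha}_{v,i}\|_1 \right), \qquad \min_{\mathbf{C}_h} \sum_{i=1}^{P} \left( \tfrac{1}{2}\|\mathbf{y}_{h,i} - \boldsymbol{\Phi}\boldsymbol{\alpha}_{h,i}\|_2^2 + \lambda_{h,i}\|\boldsymbol{\alpha}_{h,i}\|_1 \right), \tag{6}$$

in which $\mathbf{C}_v = [\boldsymbol{\alpha}_{v,1}, \ldots, \boldsymbol{\alpha}_{v,P}]$, $\mathbf{C}_h = [\boldsymbol{\alpha}_{h,1}, \ldots, \boldsymbol{\alpha}_{h,P}]$, and neighboring patches are extracted with half-overlapping. Problems (5) and (6) are instances of the least-absolute-shrinkage-and-selection-operator (LASSO) problem; since they are convex, a global minimizer can be reached, and we solve them with the fast iterative shrinkage-thresholding algorithm (FISTA) of Algorithm 3.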
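The half-overlapping patch handling can be sketched as follows, assuming $8 \times 8$ patches (the atom size reported above), a stride of half the patch size, and one extra row or column of patches aligned with the image border so that every pixel is covered. These border rules and the helper names are our assumptions, not details from the original. `extract_patches` produces the columns $\mathbf{y}_{\cdot,i}$ of the stacked problem, and `average_patches` performs the averaging of overlapping reconstructions that yields the refined ridge maps.

```python
import numpy as np

def _starts(size, p, s):
    # Start offsets with stride s; append one aligned with the border if needed.
    starts = list(range(0, size - p + 1, s))
    if starts[-1] != size - p:
        starts.append(size - p)
    return starts

def extract_patches(R, p=8):
    """(p*p) x P matrix of vectorized, half-overlapping p x p patches, plus their positions."""
    s = p // 2  # half-overlapping: stride of half the patch size
    pos = [(r, c) for c in _starts(R.shape[1], p, s) for r in _starts(R.shape[0], p, s)]
    Y = np.stack([R[r:r + p, c:c + p].ravel(order="F") for r, c in pos], axis=1)
    return Y, pos

def average_patches(Y, pos, shape, p=8):
    """Put (reconstructed) patches back and average where they overlap."""
    acc = np.zeros(shape)
    cnt = np.zeros(shape)
    for k, (r, c) in enumerate(pos):
        acc[r:r + p, c:c + p] += Y[:, k].reshape((p, p), order="F")
        cnt[r:r + p, c:c + p] += 1.0
    return acc / cnt

R = np.random.default_rng(0).standard_normal((20, 27))
Y, pos = extract_patches(R)
print(Y.shape)  # (64, 24)
```

In the full pipeline, `Y` would be replaced by the reconstructed patches $\boldsymbol{\Phi}\hat{\mathbf{C}}$ before averaging; with unmodified patches, the round trip returns the input map exactly.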

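Clearly, Equations (4)–(6) differ from previous methods [12,14,19,20,22] in one aspect: the dictionary $\boldsymbol{\Phi}$ is fixed. Thus, $\boldsymbol{\alpha}_{v,i}$ and $\boldsymbol{\alpha}_{h,i}$ can be obtained in a single sparse-coding stage instead of alternately and iteratively refining the coefficients and the dictionary, which greatly improves efficiency. With the estimates $\hat{\boldsymbol{\alpha}}_{v,i}$ and $\hat{\boldsymbol{\alpha}}_{h,i}$ in hand, we reconstruct each patch as $\hat{\mathbf{y}}_{v,i} = \boldsymbol{\Phi}\hat{\boldsymbol{\alpha}}_{v,i}$ and $\hat{\mathbf{y}}_{h,i} = \boldsymbol{\Phi}\hat{\boldsymbol{\alpha}}_{h,i}$. When all patches are processed, the average value is used at locations covered by multiple patches, which yields the refined ridge maps $\hat{\mathbf{R}}^d_v$ and $\hat{\mathbf{R}}^d_h$ (stacked, as in Equation (3), into $\hat{\mathbf{r}}^d$). Next, we can recover $\mathbf{x}^d$ by inverting Equation (3). However, $\mathbf{M}$ is rank-deficient: its rows are linearly dependent and its null space is nontrivial (for example, every planar depth map has zero second-order differences in both directions), so $\mathbf{x}^d$ is not uniquely determined by $\hat{\mathbf{r}}^d$. Therefore, we incorporate boundary conditions to recover $\mathbf{x}^d$ from its refined second-order differences, as shown in Equation (7):

$$\begin{bmatrix} \mathbf{M} \\ \mathbf{B} \end{bmatrix} \mathbf{x}^d = \begin{bmatrix} \hat{\mathbf{r}}^d \\ \mathbf{b} \end{bmatrix}, \tag{7}$$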

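where the selection matrix $\mathbf{B} \in \{0, 1\}^{N \times mn}$ picks the $N$ available boundary points from $\mathbf{x}^d$, and $\mathbf{b} \in \mathbb{R}^N$ holds their depth values; six boundary points are used in our work. A rigorous proof of the minimum number of boundary points required is beyond the scope of this work. Solving (7) in the least-squares sense, we recover $\mathbf{x}^d$ as Equation (8):

$$\hat{\mathbf{x}}^d = \left(\mathbf{M}^\top\mathbf{M} + \mathbf{B}^\top\mathbf{B}\right)^{-1}\left(\mathbf{M}^\top\hat{\mathbf{r}}^d + \mathbf{B}^\top\mathbf{b}\right). \tag{8}$$

Here, the matrices $\mathbf{M}$ and $\mathbf{B}$, their transposes, and the inverse in Equation (8) can be pre-computed to significantly reduce the overall processing time. We now summarize all previous operations as Algorithm 1. Our algorithm comes in two versions: our-BOMP and our-LASSO.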
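The recovery step of Equations (7) and (8) can be sketched in NumPy as follows, with $\mathbf{M}$ built as in Equation (3) and column-major vectorization. The six boundary locations (the four corners plus the middle of the first and last rows) are a hypothetical choice, since their positions are not specified here. The null space of $\mathbf{M}$ consists of the maps $a + b\,i + c\,j + d\,ij$ (every column and every row affine), so the four corners alone already make the system in Equation (8) invertible; with exact ridge maps, the solve returns the original depth map.

```python
import numpy as np

def second_diff_operator(n):
    D = np.zeros((n - 2, n))
    for i in range(n - 2):
        D[i, i:i + 3] = [1.0, -2.0, 1.0]
    return D

def stacked_operator(m, n):
    # M of Equation (3) for column-major vec().
    Dv, Dh = second_diff_operator(m), second_diff_operator(n)
    return np.vstack([np.kron(np.eye(n), Dv), np.kron(Dh, np.eye(m))])

def boundary_indices(m, n):
    # Hypothetical choice of six boundary points: four corners plus the middle of
    # the first and last rows, as column-major indices i + j * m.
    pts = [(0, 0), (m - 1, 0), (0, n - 1), (m - 1, n - 1), (0, n // 2), (m - 1, n // 2)]
    return np.array([i + j * m for i, j in pts])

def recover_depth(r_hat, m, n, idx, b):
    """Least-squares solution of Equation (7), i.e., Equation (8)."""
    M = stacked_operator(m, n)
    B = np.zeros((len(idx), m * n))
    B[np.arange(len(idx)), idx] = 1.0  # selection matrix
    # For fixed m, n and boundary set, A (or its factorization) can be pre-computed once.
    A = M.T @ M + B.T @ B
    x = np.linalg.solve(A, M.T @ r_hat + B.T @ b)
    return x.reshape((m, n), order="F")

m, n = 6, 7
X = np.random.default_rng(1).standard_normal((m, n))
idx = boundary_indices(m, n)
r = stacked_operator(m, n) @ X.ravel(order="F")  # exact ridge maps, stacked
X_rec = recover_depth(r, m, n, idx, X.ravel(order="F")[idx])
print(np.allclose(X_rec, X))  # True
```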

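In Algorithm 2, the stopping criterion is that $k$ atoms have been selected, i.e., $|\mathcal{S}| = k$. In Algorithm 3, the convergence criterion is that the relative update difference of $\boldsymbol{\alpha}$, $\|\boldsymbol{\alpha}^{(t)} - \boldsymbol{\alpha}^{(t-1)}\|_2 / \|\boldsymbol{\alpha}^{(t-1)}\|_2$, is less than a tolerance $\epsilon$.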

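Algorithm 1. Our Sparse Coding for Depth Data Denoising

- Input: Depth map $\mathbf{X}^d$, dictionary $\boldsymbol{\Phi}$, parameters $k_{v,i}$, $k_{h,i}$, $\lambda_{v,i}$, $\lambda_{h,i}$;
- Output: Restored depth $\hat{\mathbf{x}}^d$ of Equation (8);
- 1: Estimate the ridge maps $\mathbf{R}^d_v$ and $\mathbf{R}^d_h$ as in Equation (2);
- 2: Extract and vectorize the ridge map patches $\mathbf{y}_{v,i}$, $\mathbf{y}_{h,i}$, $i = 1, \ldots, P$;
- 3: // Lines 5 and 7 apply different algorithms to estimate $\boldsymbol{\alpha}_{v,i}$, $\boldsymbol{\alpha}_{h,i}$.
- 4: // Line 5 belongs to the our-BOMP version.
- 5: Apply the Batch-OMP algorithm (Algorithm 2) to solve Equation (4);
- 6: // Line 7 belongs to the our-LASSO version.
- 7: Apply a LASSO solver (FISTA, Algorithm 3) to solve Equation (5);
- 8: Obtain the refined ridge maps $\hat{\mathbf{R}}^d_v$ and $\hat{\mathbf{R}}^d_h$ via patch reconstruction and averaging;
- 9: Recover $\hat{\mathbf{x}}^d$ by applying Equation (8).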

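Algorithm 2. Batch orthogonal matching pursuit [24] to solve $\min_{\boldsymbol{\alpha}} \|\mathbf{y} - \boldsymbol{\Phi}\boldsymbol{\alpha}\|_2^2$ s.t. $\|\boldsymbol{\alpha}\|_0 \le k$

- Input: $\boldsymbol{\Phi}$, $\mathbf{y}$, $k$;
- Output: $\boldsymbol{\alpha}$;
- 1: Initialization: $\mathcal{S} = \emptyset$, $\boldsymbol{\alpha} = \mathbf{0}$, $\boldsymbol{\beta}^0 = \boldsymbol{\Phi}^\top\mathbf{y}$, $\boldsymbol{\beta} = \boldsymbol{\beta}^0$, $\mathbf{L}$ empty, with the Gram matrix $\mathbf{G} = \boldsymbol{\Phi}^\top\boldsymbol{\Phi}$ pre-computed;
- 2: while stopping criterion not met do
- 3: $\hat{j} = \arg\max_{j \notin \mathcal{S}} |\beta_j|$;
- 4: $\mathbf{w} = \mathbf{L}^{-1} (\mathbf{g}_{\hat{j}})_{\mathcal{S}}$, where $\mathbf{g}_{\hat{j}}$ is the $\hat{j}$-th column of $\mathbf{G}$;
- 5: $\mathbf{L} \leftarrow \begin{bmatrix} \mathbf{L} & \mathbf{0} \\ \mathbf{w}^\top & \sqrt{G_{\hat{j}\hat{j}} - \mathbf{w}^\top\mathbf{w}} \end{bmatrix}$ (for the first atom, $\mathbf{L} = [\sqrt{G_{\hat{j}\hat{j}}}]$);
- 6: $\mathcal{S} \leftarrow \mathcal{S} \cup \{\hat{j}\}$;
- 7: // Line 8 obtains $\boldsymbol{\alpha}_{\mathcal{S}}$ using the progressively updated Cholesky factor $\mathbf{L}$.
- 8: $\boldsymbol{\alpha}_{\mathcal{S}} = (\mathbf{L}\mathbf{L}^\top)^{-1}\boldsymbol{\beta}^0_{\mathcal{S}}$, computed by two triangular solves;
- 9: $\boldsymbol{\beta} = \boldsymbol{\beta}^0 - (\mathbf{G}_{\mathcal{S}})^\top\boldsymbol{\alpha}_{\mathcal{S}}$;
- 10: end while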
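Below is a minimal NumPy sketch of Algorithm 2 as written above (Gram-matrix form with a progressive Cholesky update). The function name `batch_omp` is hypothetical, general-purpose `np.linalg.solve` stands in for dedicated triangular solvers, and the loop stops once $k$ atoms are selected.

```python
import numpy as np

def batch_omp(G, beta0, k):
    """Batch-OMP sketch: G = Phi^T Phi (Gram matrix), beta0 = Phi^T y, k = target sparsity."""
    support = []
    L = np.zeros((0, 0))            # Cholesky factor of G restricted to the support
    alpha_S = np.zeros(0)
    beta = beta0.copy()
    while len(support) < k:
        scores = np.abs(beta)
        scores[support] = -np.inf   # never re-select an atom (Line 3)
        j = int(np.argmax(scores))
        if support:
            w = np.linalg.solve(L, G[support, j])        # Line 4 (L is lower-triangular)
            d = np.sqrt(G[j, j] - w @ w)
            L = np.block([[L, np.zeros((len(support), 1))],
                          [w[None, :], np.array([[d]])]])  # Line 5
        else:
            L = np.array([[np.sqrt(G[j, j])]])
        support.append(j)                                  # Line 6
        z = np.linalg.solve(L, beta0[support])
        alpha_S = np.linalg.solve(L.T, z)                  # Line 8: (L L^T)^{-1} beta0_S
        beta = beta0 - G[:, support] @ alpha_S             # Line 9
    alpha = np.zeros_like(beta0)
    alpha[support] = alpha_S
    return alpha

rng = np.random.default_rng(0)
Phi = rng.standard_normal((20, 50))
Phi /= np.linalg.norm(Phi, axis=0)  # unit-norm atoms
G = Phi.T @ Phi                     # computed once, shared by all patches
y = rng.standard_normal(20)
alpha = batch_omp(G, Phi.T @ y, k=5)
print(np.count_nonzero(alpha))      # 5
```

Because only $\mathbf{G}$ and $\boldsymbol{\Phi}^\top\mathbf{y}$ enter the loop, $\mathbf{G}$ is computed once and reused for every patch, which is where the batch variant saves time when the dictionary is fixed.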

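Algorithm 3. Fast iterative shrinkage-thresholding algorithm (FISTA) [25] to solve the LASSO problem $\min_{\boldsymbol{\alpha}} \tfrac{1}{2}\|\mathbf{y} - \boldsymbol{\Phi}\boldsymbol{\alpha}\|_2^2 + \lambda\|\boldsymbol{\alpha}\|_1$

- Input: $\boldsymbol{\Phi}$, $\mathbf{y}$, $\lambda$;
- Output: $\boldsymbol{\alpha}$;
- 1: Initialization: $\boldsymbol{\alpha}^{(0)} = \mathbf{0}$, $\mathbf{z}^{(1)} = \mathbf{0}$, $\tau_1 = 1$, $\mu = \|\boldsymbol{\Phi}\|_2^2$ (the largest eigenvalue of $\boldsymbol{\Phi}^\top\boldsymbol{\Phi}$), $t = 1$;
- 2: while not converged do
- 3: $\mathbf{q}^{(t)} = \boldsymbol{\Phi}^\top(\boldsymbol{\Phi}\mathbf{z}^{(t)} - \mathbf{y})$;
- 4: $\mathbf{u}^{(t)} = \mathbf{z}^{(t)} - \mathbf{q}^{(t)}/\mu$;
- 5: $\boldsymbol{\alpha}^{(t)} = \operatorname{shrink}(\mathbf{u}^{(t)}, \lambda/\mu)$;
- 6: $\tau_{t+1} = \left(1 + \sqrt{1 + 4\tau_t^2}\right)/2$;
- 7: $\mathbf{z}^{(t+1)} = \boldsymbol{\alpha}^{(t)} + \frac{\tau_t - 1}{\tau_{t+1}}\left(\boldsymbol{\alpha}^{(t)} - \boldsymbol{\alpha}^{(t-1)}\right)$;
- 8: $t \leftarrow t + 1$;
- 9: end while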
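A NumPy sketch of Algorithm 3 follows, with the relative-update stopping rule described above; `fista_lasso`, `eps`, and `max_iter` are hypothetical names and defaults chosen for illustration. For an orthonormal $\boldsymbol{\Phi}$, the LASSO solution is simply $\operatorname{shrink}(\boldsymbol{\Phi}^\top\mathbf{y}, \lambda)$, which gives a quick sanity check.

```python
import numpy as np

def shrink(x, tau):
    # Entry-wise soft thresholding, as defined in the notation section.
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def fista_lasso(Phi, y, lam, eps=1e-6, max_iter=10000):
    """Sketch of Algorithm 3: min_a 0.5*||y - Phi a||_2^2 + lam*||a||_1."""
    mu = np.linalg.norm(Phi, 2) ** 2     # largest eigenvalue of Phi^T Phi
    alpha_prev = np.zeros(Phi.shape[1])  # alpha^(0)
    z = np.zeros(Phi.shape[1])           # z^(1)
    tau = 1.0                            # tau_1
    for _ in range(max_iter):
        grad = Phi.T @ (Phi @ z - y)     # gradient of the fidelity term at z (Line 3)
        alpha = shrink(z - grad / mu, lam / mu)                       # Lines 4-5
        tau_next = (1.0 + np.sqrt(1.0 + 4.0 * tau ** 2)) / 2.0        # Line 6
        z = alpha + ((tau - 1.0) / tau_next) * (alpha - alpha_prev)   # Line 7
        # Relative update difference of alpha (guarded against division by zero).
        done = np.linalg.norm(alpha - alpha_prev) < eps * max(np.linalg.norm(alpha_prev), 1e-12)
        alpha_prev, tau = alpha, tau_next
        if done:
            break
    return alpha_prev

rng = np.random.default_rng(0)
Phi = rng.standard_normal((30, 60))
y = rng.standard_normal(30)
alpha = fista_lasso(Phi, y, lam=0.5)
print(np.count_nonzero(alpha), "nonzero coefficients out of", alpha.size)
```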