On the Deployment and Noise Filtering of Vehicular Radar Application for Detection Enhancement in Roads and Tunnels

Abstract

1. Introduction

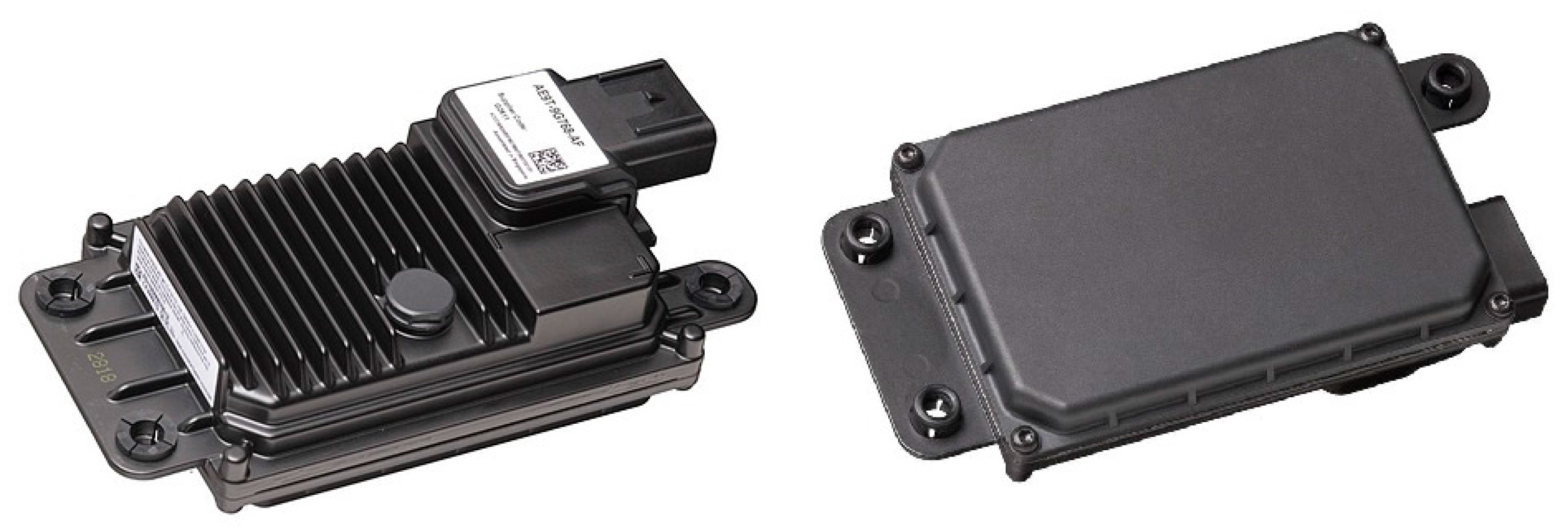

2. Radar Application for Roadside Deployment

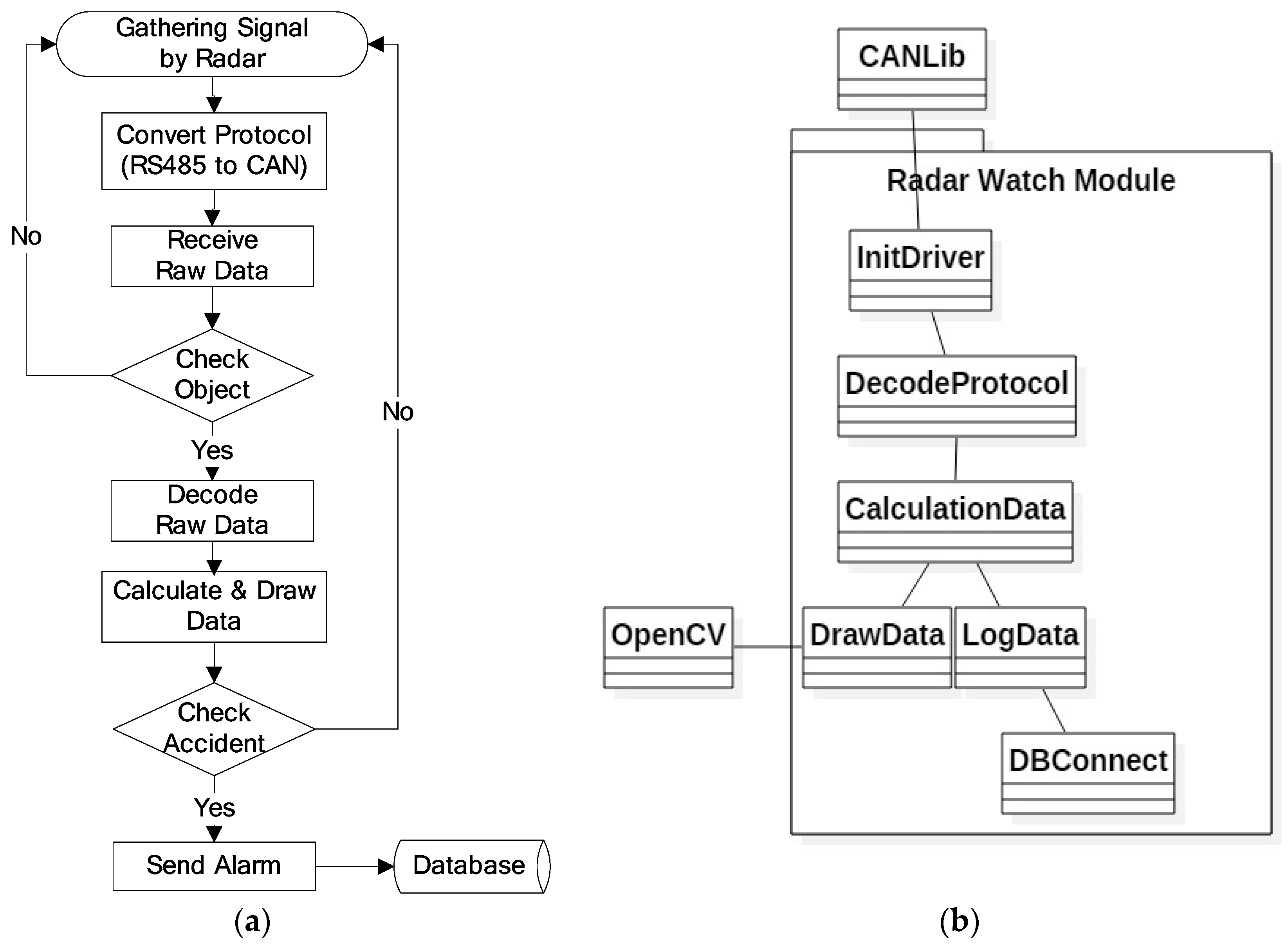

2.1. Design of Road Watch Module

- (1)

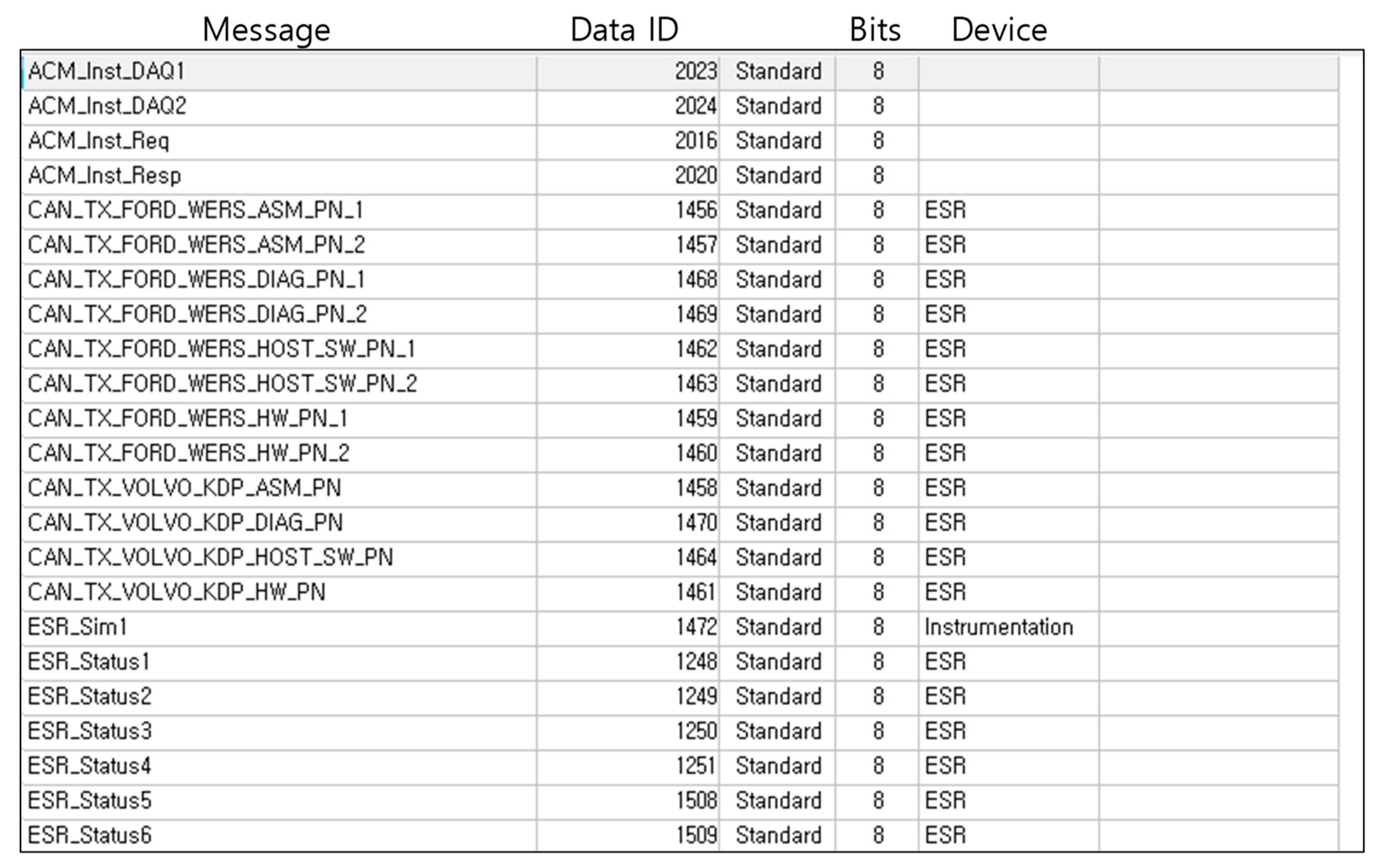

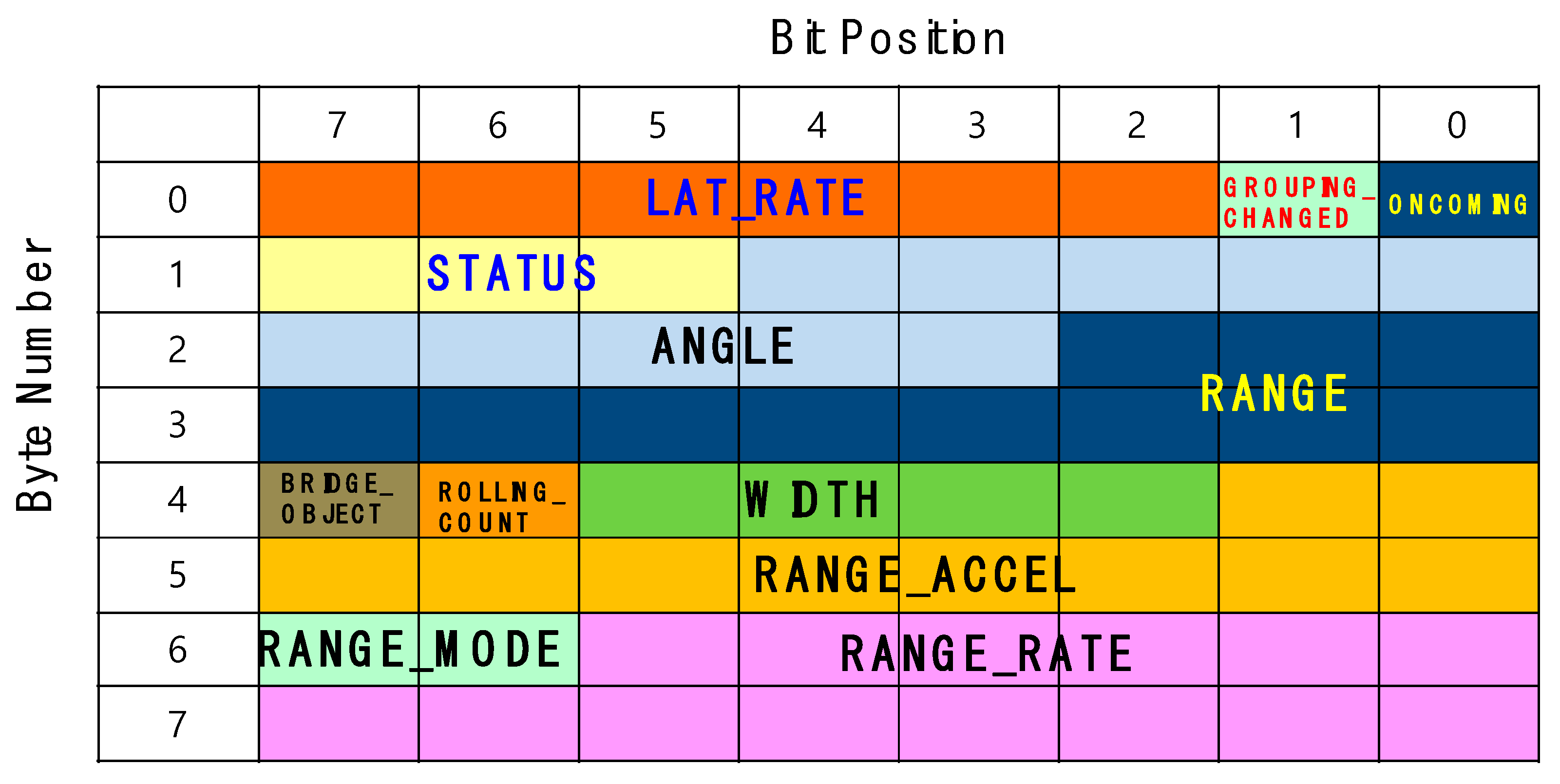

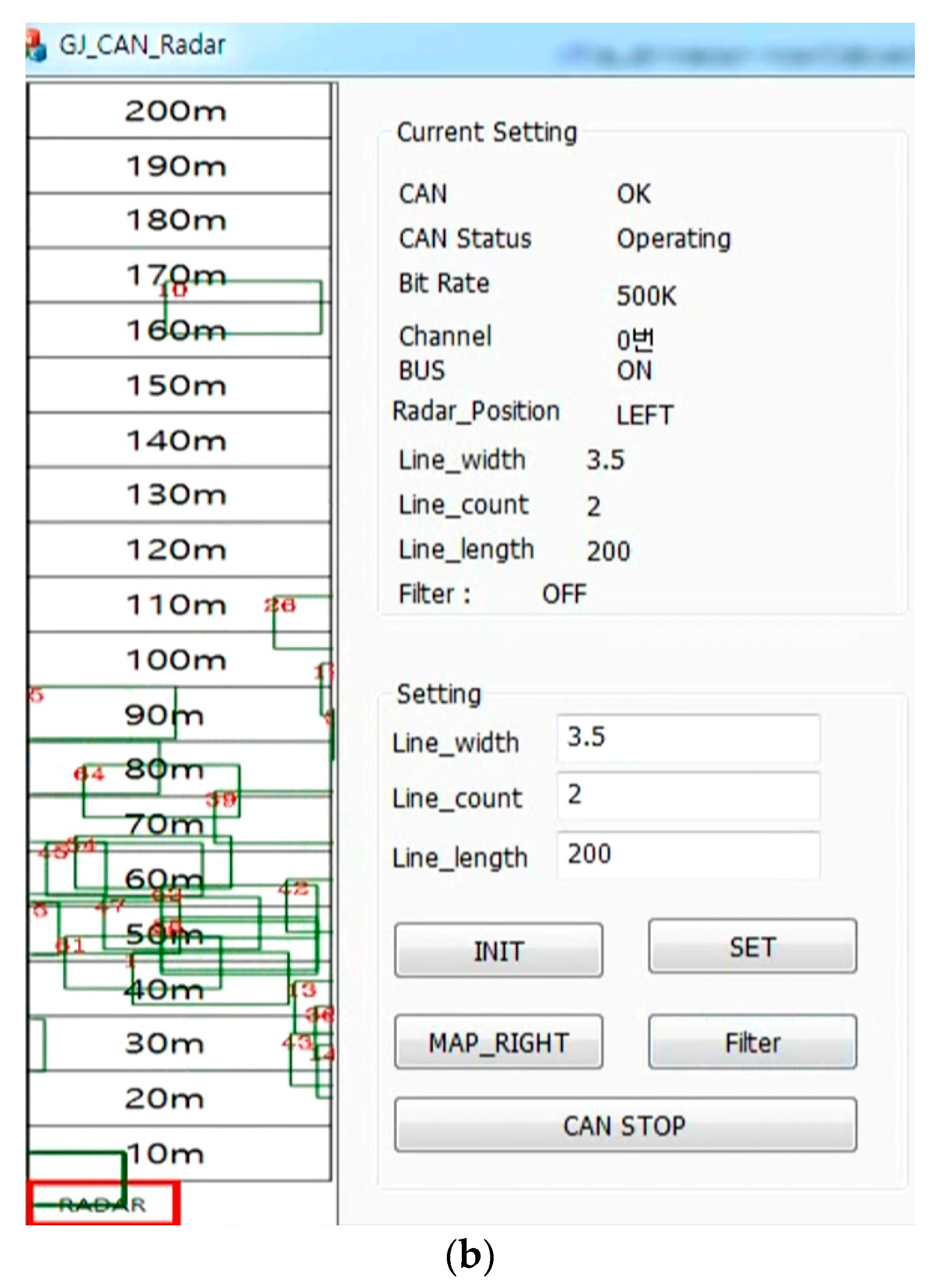

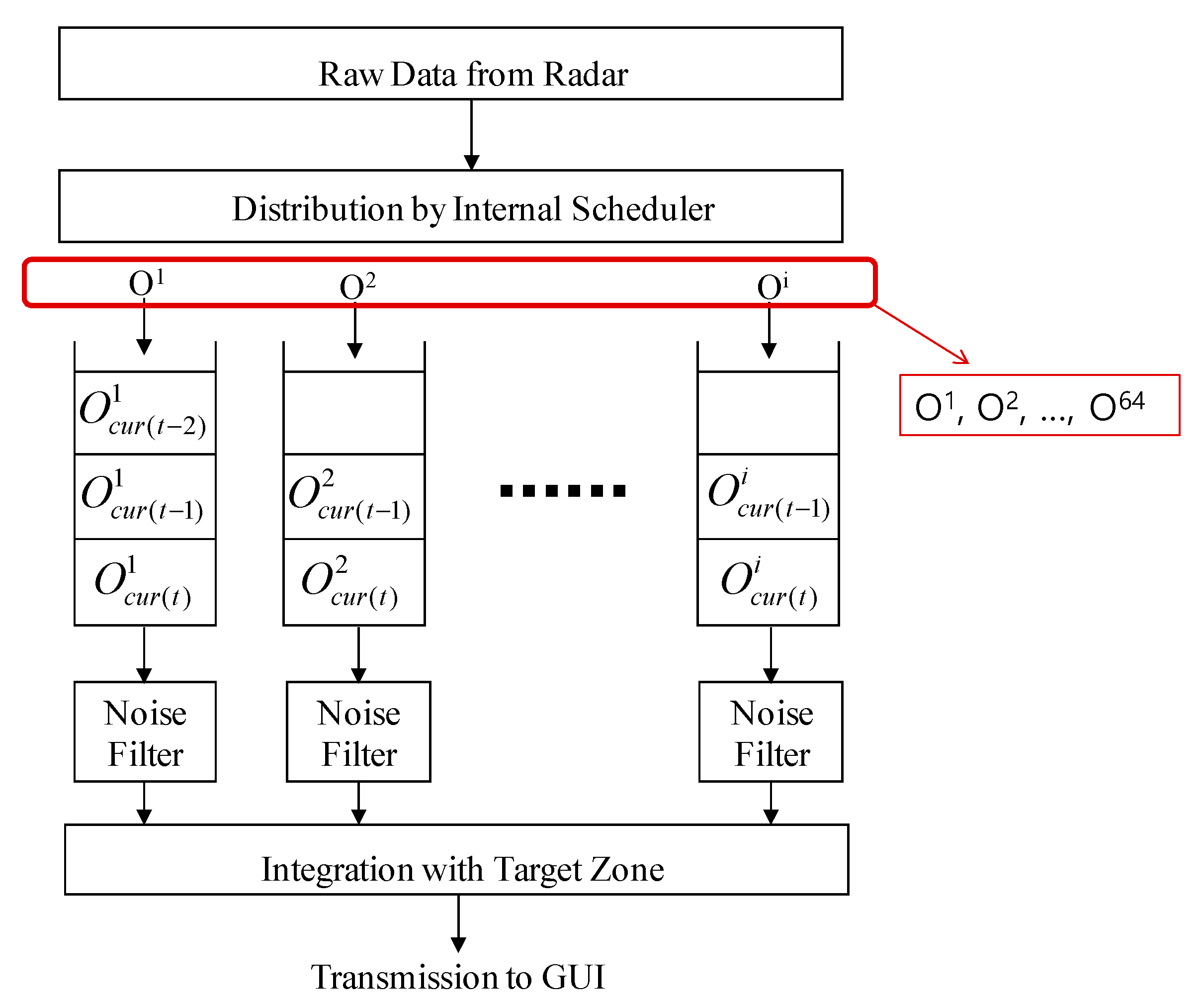

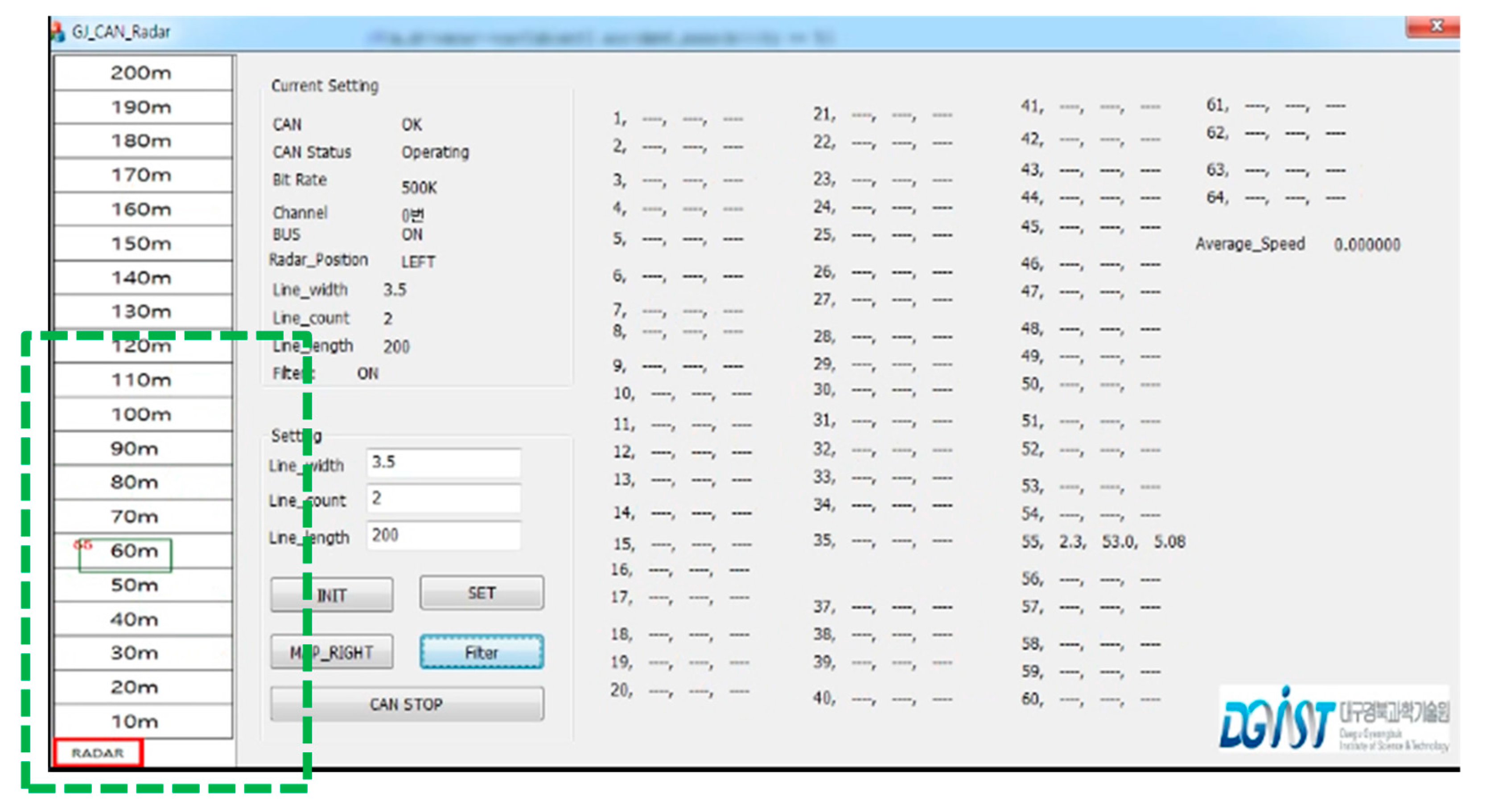

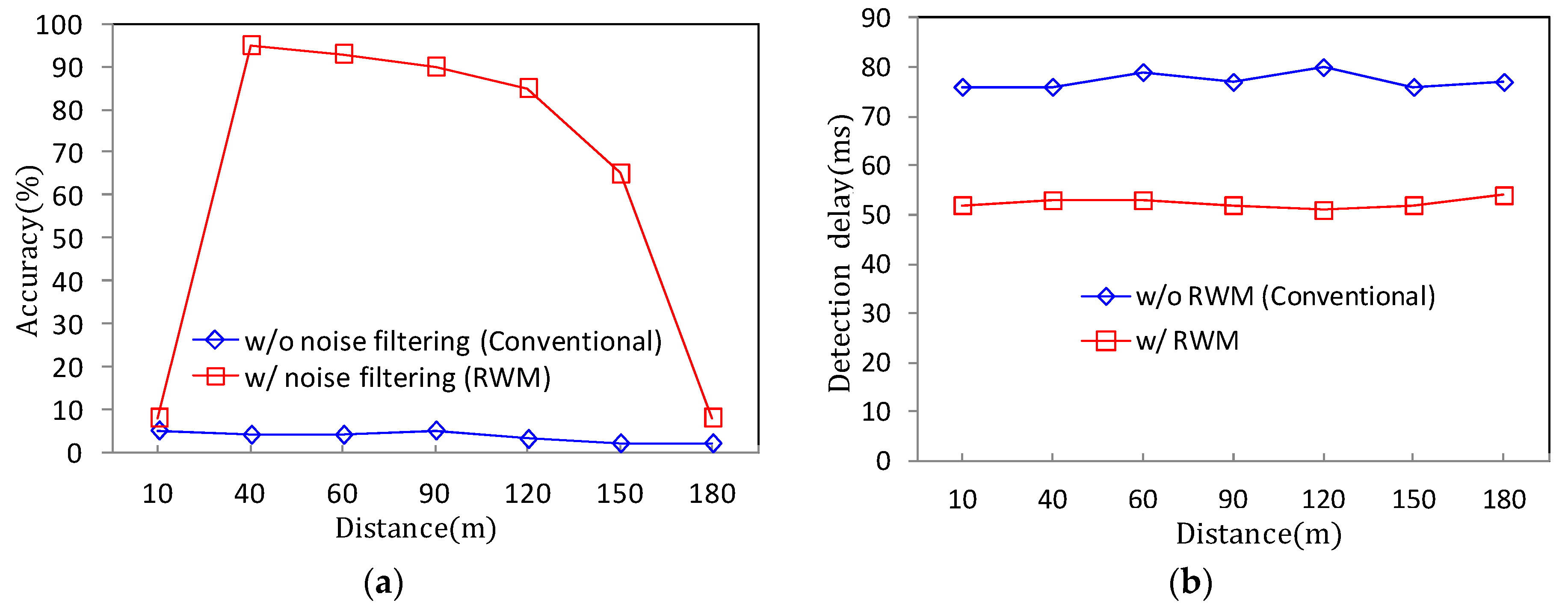

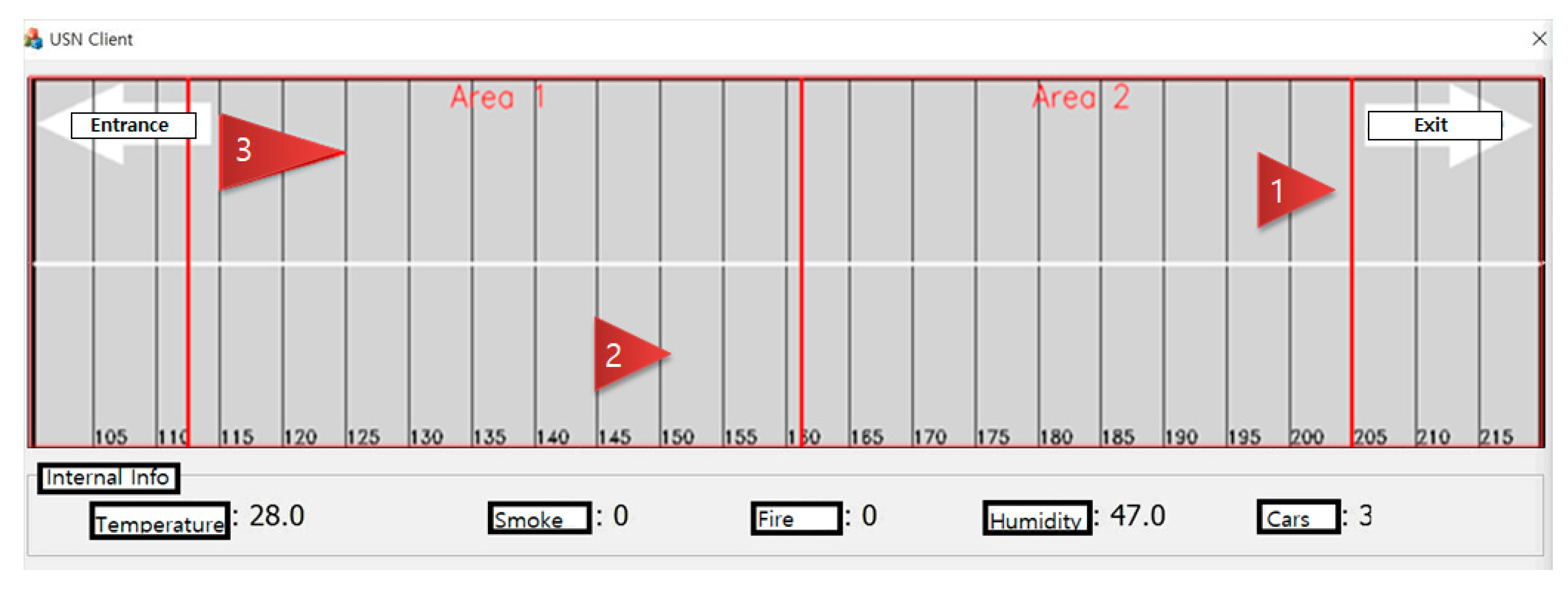

- Generally, the objects’ information that is obtained from the radar is very diverse and the amount of information is also very large. This large amount of data is still a burden to process in real-time on embedded devices connected to the radar. For this purpose, RWM preferentially inputs only those attributes that are relevant to the moving vehicles, minimizing the response latency by filtering meaningless information. In particular, since Delphi ESR radar only provides bitmap information of raw data, as shown in Figure 4, and does not provide data selection, acquisition, or communication methods for specific applications, the RWM provides a framework for the analysis and processing of the raw data from the radar.

- (2)

- With respect to the vehicle information obtained, only the vehicle located in the road is tracked with reference to the width of the road, the number of lanes and the length of the lane, so that the processing delay time can be reduced and detection accuracy can be enhanced.

- (3)

- In addition to accurate vehicle identification on the road, the RWM also provides a noise filtering technique in tunnels by analyzing various false signal patterns such as ghost phenomena and flickering. The proposed scheme provides robust detection accuracy and low calculation latency in tunnels.

- (4)

- By adopting the RWM technique, it is possible to confirm the occurrence of an accident by analyzing the stop pattern and congestion phenomenon of a vehicle.
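Item (2) above, keeping only detections that fall on the road, can be sketched as a simple geometric test. This is a minimal illustration and not the RWM implementation: the `RoadGeometry` type, the `lane_of` function, the sign convention (positive angle toward the far side of the road), and the assumption that the radar boresight points along the road are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class RoadGeometry:
    """Hypothetical description of the monitored road section."""
    lane_count: int
    lane_width_m: float
    length_m: float
    # Lateral position of the road's near edge relative to the radar
    # boresight (assumed parameter; negative means left of boresight).
    near_edge_offset_m: float = 0.0

    @property
    def width_m(self) -> float:
        return self.lane_count * self.lane_width_m


def to_cartesian(range_m: float, angle_deg: float) -> Tuple[float, float]:
    """Convert a polar detection to (lateral, longitudinal) metres."""
    theta = math.radians(angle_deg)
    return range_m * math.sin(theta), range_m * math.cos(theta)


def lane_of(range_m: float, angle_deg: float,
            road: RoadGeometry) -> Optional[int]:
    """Return the 0-based lane index, or None if the detection is off-road."""
    lateral, longitudinal = to_cartesian(range_m, angle_deg)
    if not (0.0 <= longitudinal <= road.length_m):
        return None
    offset = lateral - road.near_edge_offset_m
    if not (0.0 <= offset < road.width_m):
        return None
    return int(offset // road.lane_width_m)
```

For example, with a two-lane road whose near edge lies 3.5 m to one side of the boresight, a detection straight ahead at 50 m falls in the second lane, while a detection at 30° is rejected as off-road. Discarding off-road detections before tracking is what keeps the per-frame workload small.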

- −

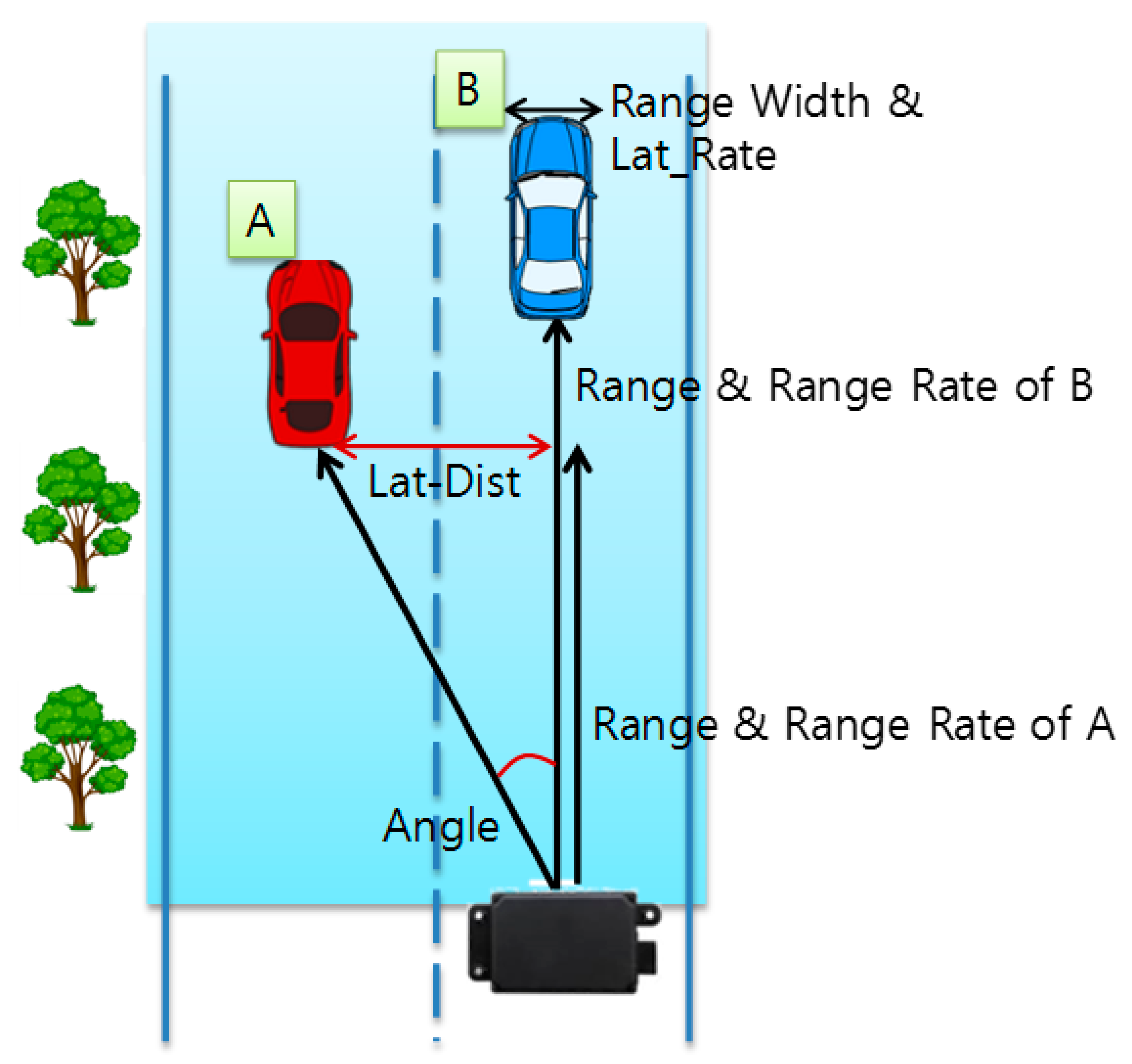

- ANGLE: Angle of the RF signal that is reflected from the target object after the radar transmits it.

- −

- LAT_RATE: Speed of an object moving in the horizontal direction between lanes.

- −

- RANGE: Distance between the radar and the target object.

- −

- RANGE_RATE: Relative speed between the radar and target object.

- −

- RANGE_WIDTH: Width of the target object.
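As a rough illustration of how these five attributes might be carried through a processing pipeline, the sketch below picks them out of a larger decoded message and ignores everything else. The `RadarTrack` type, the `from_decoded` and `is_moving` helpers, the units, and the 0.5 m/s threshold are assumptions made for this example; only the attribute names come from the list above.

```python
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class RadarTrack:
    """Subset of per-target attributes kept for vehicle tracking."""
    angle_deg: float        # ANGLE (degrees assumed)
    lat_rate_mps: float     # LAT_RATE (m/s assumed)
    range_m: float          # RANGE (metres assumed)
    range_rate_mps: float   # RANGE_RATE (m/s assumed)
    range_width_m: float    # RANGE_WIDTH (metres assumed)

    @classmethod
    def from_decoded(cls, msg: Mapping[str, float]) -> "RadarTrack":
        """Keep only the five attributes of interest; other keys are ignored."""
        return cls(
            angle_deg=float(msg["ANGLE"]),
            lat_rate_mps=float(msg["LAT_RATE"]),
            range_m=float(msg["RANGE"]),
            range_rate_mps=float(msg["RANGE_RATE"]),
            range_width_m=float(msg["RANGE_WIDTH"]),
        )


def is_moving(track: RadarTrack, min_speed_mps: float = 0.5) -> bool:
    """Treat a target as moving if either rate exceeds a small threshold.

    The threshold is a hypothetical value, not one taken from the paper.
    """
    return (abs(track.range_rate_mps) >= min_speed_mps
            or abs(track.lat_rate_mps) >= min_speed_mps)
```

Because the roadside radar itself is stationary, a non-zero RANGE_RATE here reflects motion of the target alone, which is why it can serve as a simple moving-target test.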

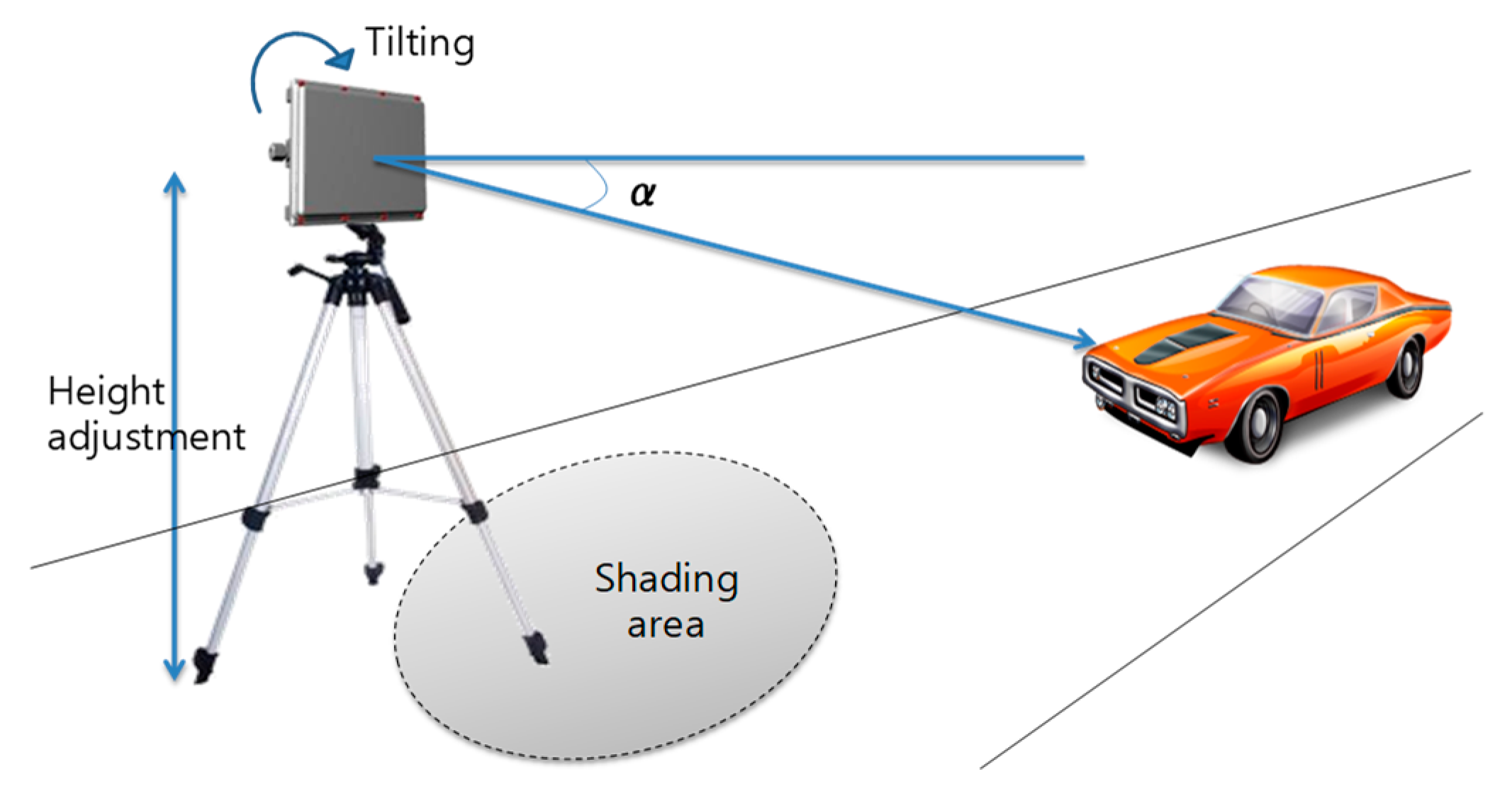

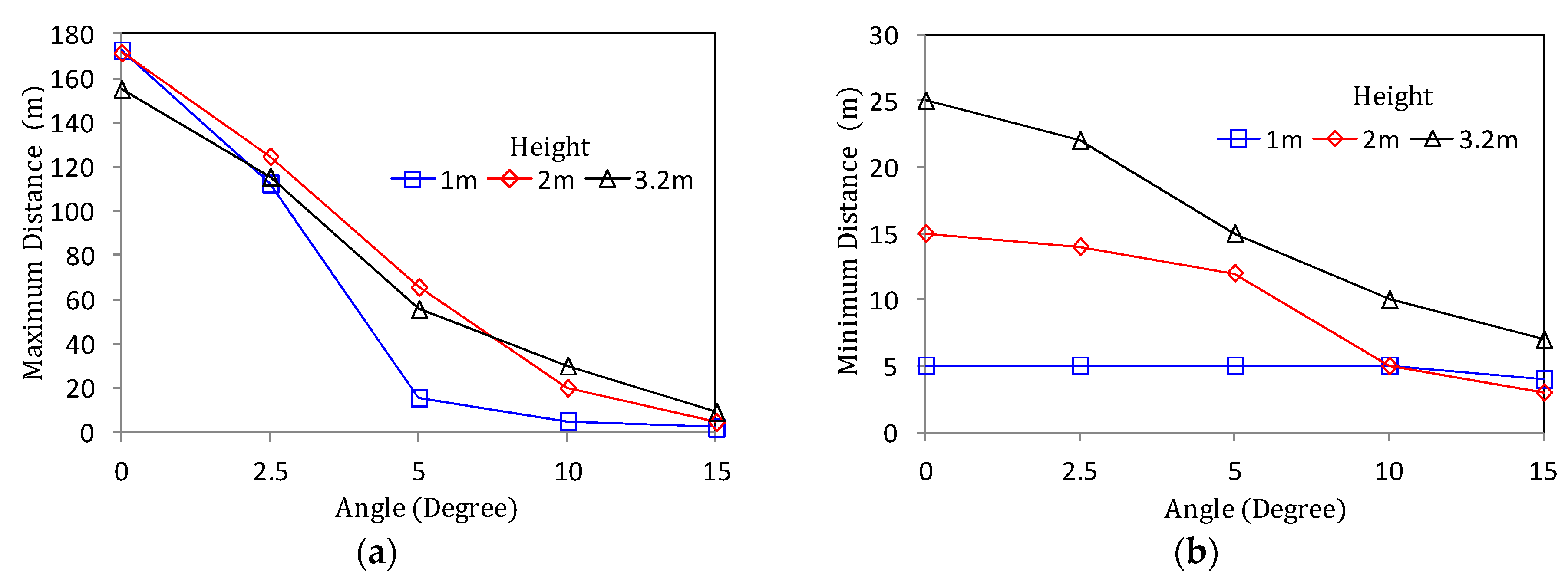

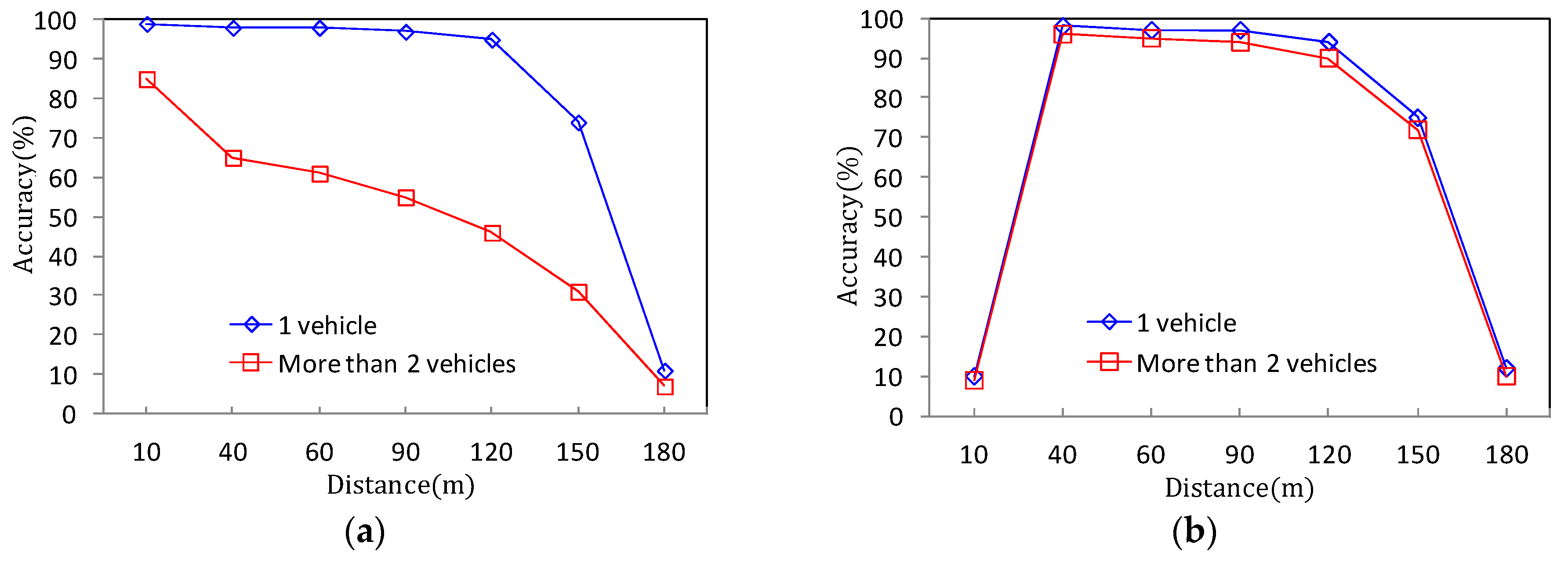

2.2. Derivation of Optimal Performance for Roadside Deployment

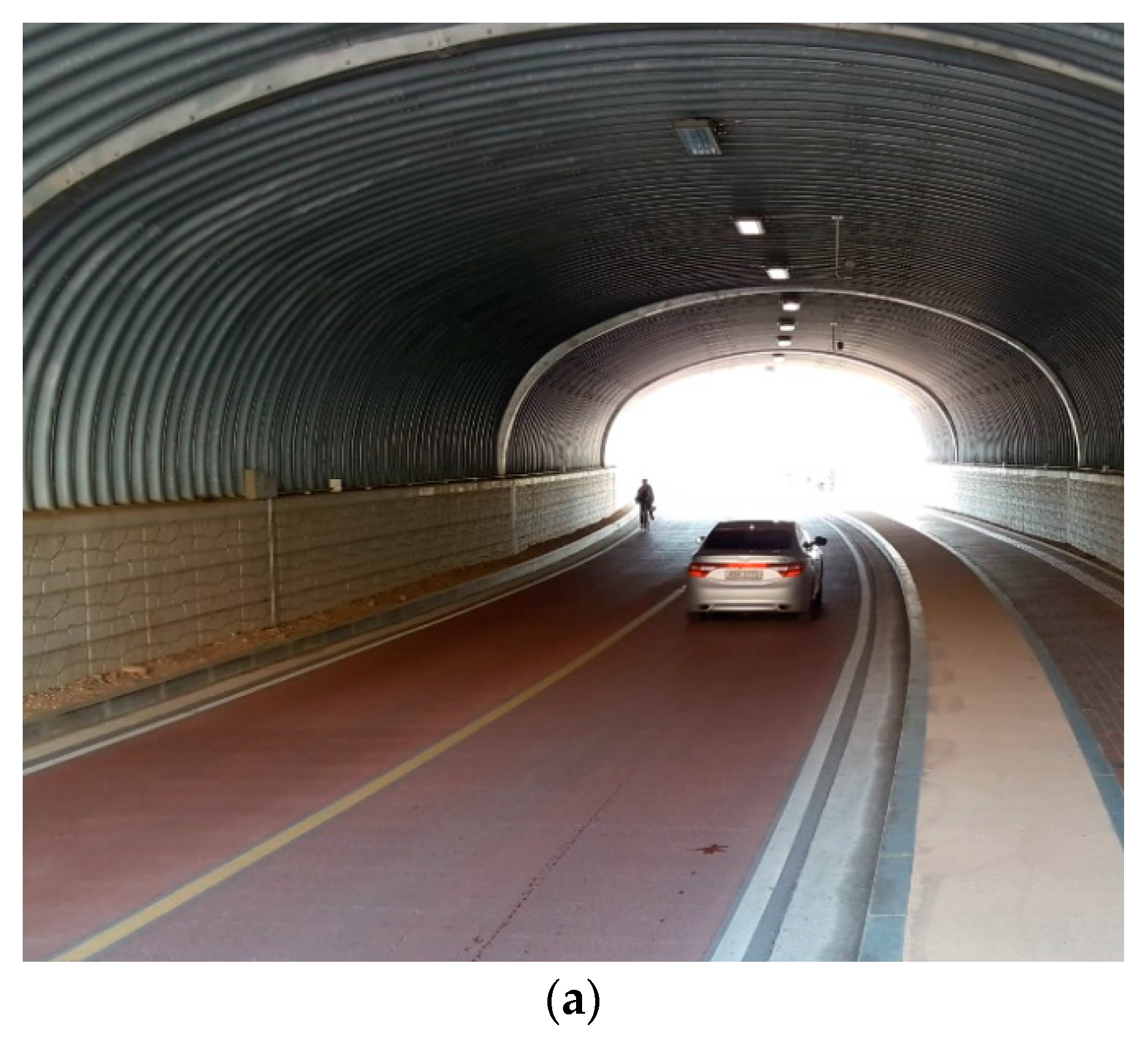

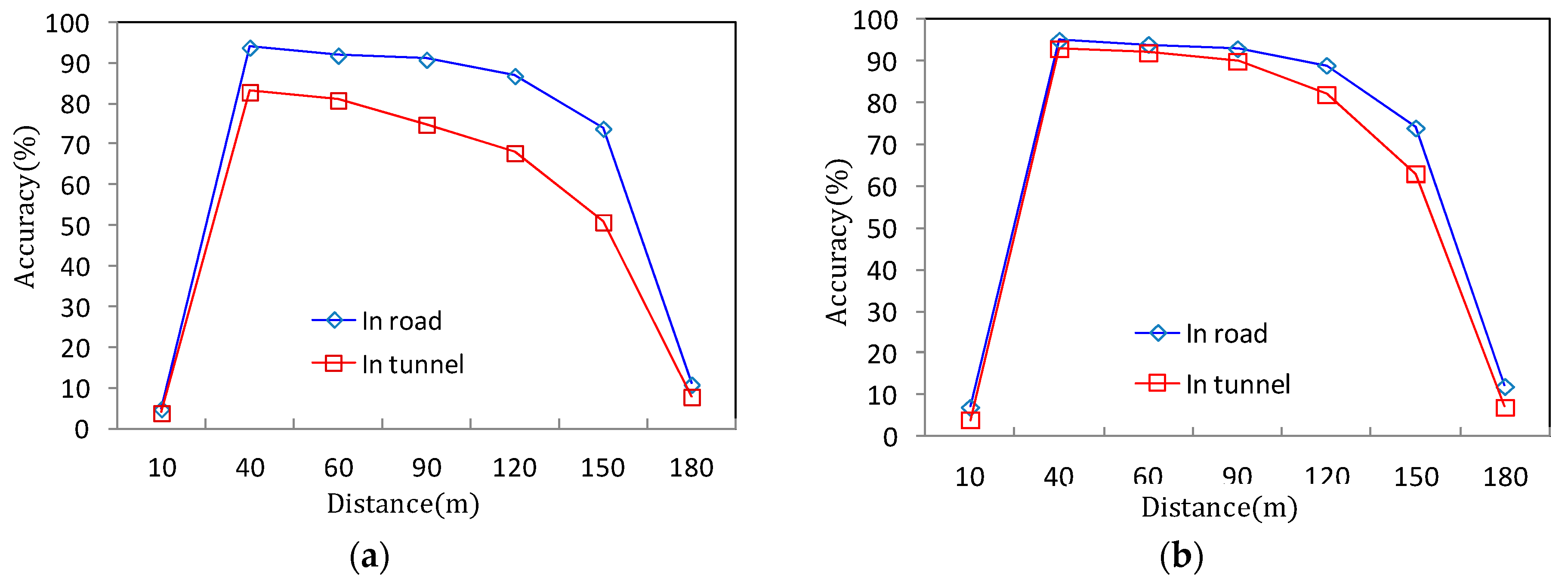

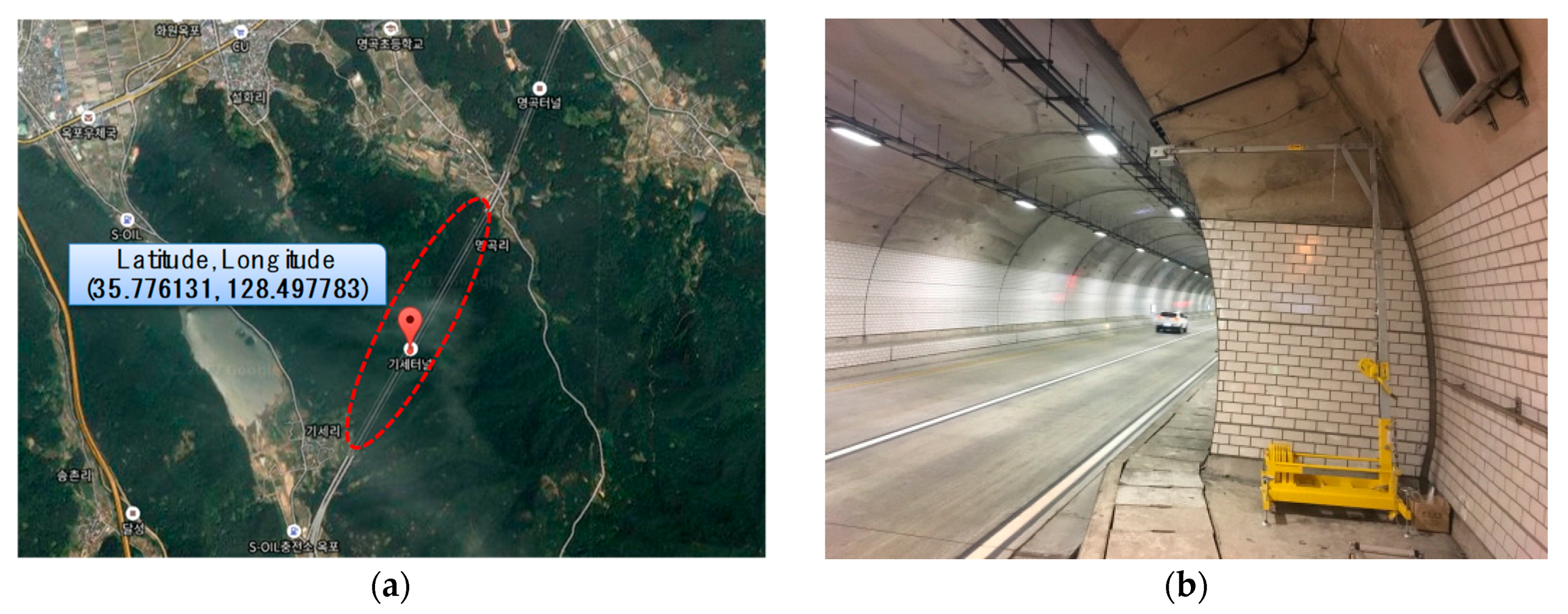

3. Radar Application for Tunnel Deployment

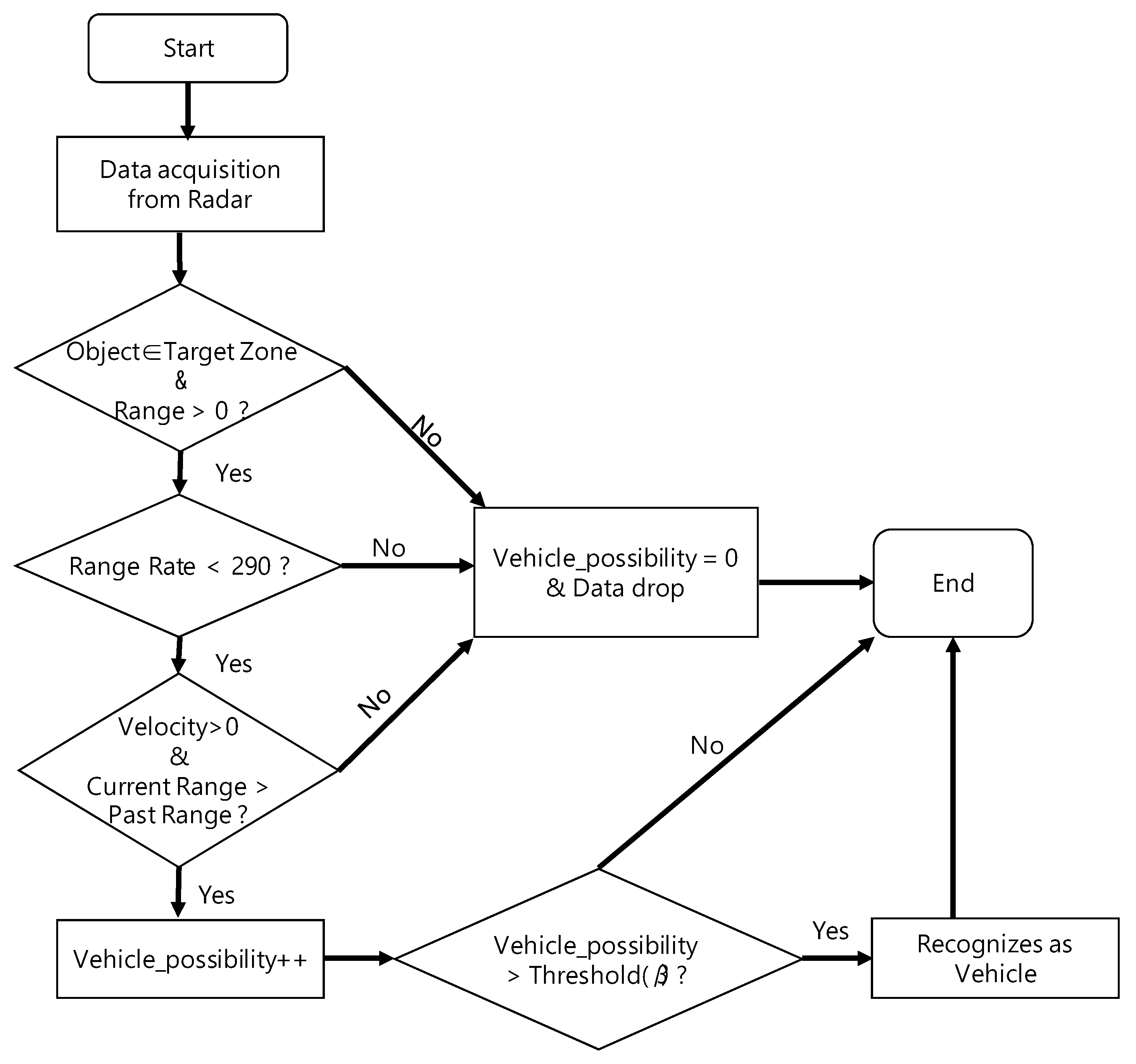

- (1) A vehicle is recognized at an incorrect location beyond the width of the tunnel and the road. This is also called a ghost image.

- (2) A vehicle in the tunnel is recognized as instantly reaching an implausibly high speed (e.g., 290 km/h or faster) or as accelerating abruptly.

- (3) Although the actual vehicle is travelling along the road, the distance between the radar and the vehicle does not increase. Instead, it is recognized as constant or decreasing, so the vehicle appears to be stopped or reversing.

- (4) A vehicle suddenly appears and disappears within one second, and this is repeated several times or continuously, like a flicker.
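The four patterns above can be expressed as simple rule checks over the history of one track. The sketch below is only an illustration under stated assumptions and is not the filtering algorithm of the paper: the `Sample` type, the flag names, the acceleration and moving-speed thresholds, and the assumption that vehicles travel away from the radar are all hypothetical. Only the 290 km/h figure and the one-second lifetime are taken from the descriptions above, and the repetition aspect of flickering is not modelled.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Set


@dataclass(frozen=True)
class Sample:
    """One observation of a track (hypothetical structure)."""
    t_s: float          # timestamp in seconds
    range_m: float      # distance from the radar
    angle_deg: float    # azimuth relative to the tunnel axis
    speed_mps: float    # speed magnitude of the target


def classify_false_signals(
    samples: Sequence[Sample],
    tunnel_half_width_m: float,
    max_speed_mps: float = 290.0 / 3.6,   # 290 km/h, from pattern (2)
    max_accel_mps2: float = 10.0,         # assumed plausibility limit
    min_lifetime_s: float = 1.0,          # one second, from pattern (4)
    min_moving_speed_mps: float = 1.0,    # assumed
) -> Set[str]:
    """Return the set of false-signal patterns a track matches."""
    flags: Set[str] = set()
    if not samples:
        return flags

    # (1) Ghost image: lateral position outside the tunnel walls.
    for s in samples:
        lateral = s.range_m * math.sin(math.radians(s.angle_deg))
        if abs(lateral) > tunnel_half_width_m:
            flags.add("ghost")
            break

    # (2) Implausible speed or abrupt acceleration.
    if any(s.speed_mps >= max_speed_mps for s in samples):
        flags.add("implausible_motion")
    for prev, cur in zip(samples, samples[1:]):
        dt = cur.t_s - prev.t_s
        if dt > 0 and abs(cur.speed_mps - prev.speed_mps) / dt > max_accel_mps2:
            flags.add("implausible_motion")

    # (3) Reported as moving, yet the range does not increase
    #     (assumes traffic drives away from the radar).
    if len(samples) >= 2:
        mean_speed = sum(s.speed_mps for s in samples) / len(samples)
        if (mean_speed > min_moving_speed_mps
                and samples[-1].range_m <= samples[0].range_m):
            flags.add("range_not_increasing")

    # (4) Flicker: the track lives for less than the minimum lifetime.
    if samples[-1].t_s - samples[0].t_s < min_lifetime_s:
        flags.add("flicker")

    return flags
```

A track that returns an empty set passes all four checks. Tracks with any flag would be candidates for suppression, although how the flags are combined or weighted is a design decision this sketch leaves open.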

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Parameter | Specification | Parameter | Specification |
|---|---|---|---|
| Frequency | 76 GHz | Waveform | Pulse Doppler |
| Long Range | 174 m | Mid-Range | 60 m |
| Long Range Field of View (FOV) | ±10° | Mid-Range Field of View (FOV) | ±45° |
| Vertical FOV | 4.2° | Min. Amplitude | <−10 dB |
| Update Rate | 50 ms | Max. Amplitude | >40 dB |
| Range Rate | −100 to +25 m/s | Range Rate Accuracy | <±0.12 m/s |
| Number of Targets | 64 | Min. Update Interval | 20 Hz |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, Y.-D.; Son, G.-J.; Song, C.-H.; Kim, H.-K. On the Deployment and Noise Filtering of Vehicular Radar Application for Detection Enhancement in Roads and Tunnels. Sensors 2018, 18, 837. https://doi.org/10.3390/s18030837
