A Backpack-Mounted Omnidirectional Camera with Off-the-Shelf Navigation Sensors for Mobile Terrestrial Mapping: Development and Forest Application

Abstract

1. Introduction

2. An Omnidirectional Backpack Mobile Image System

2.1. PMTS Setup

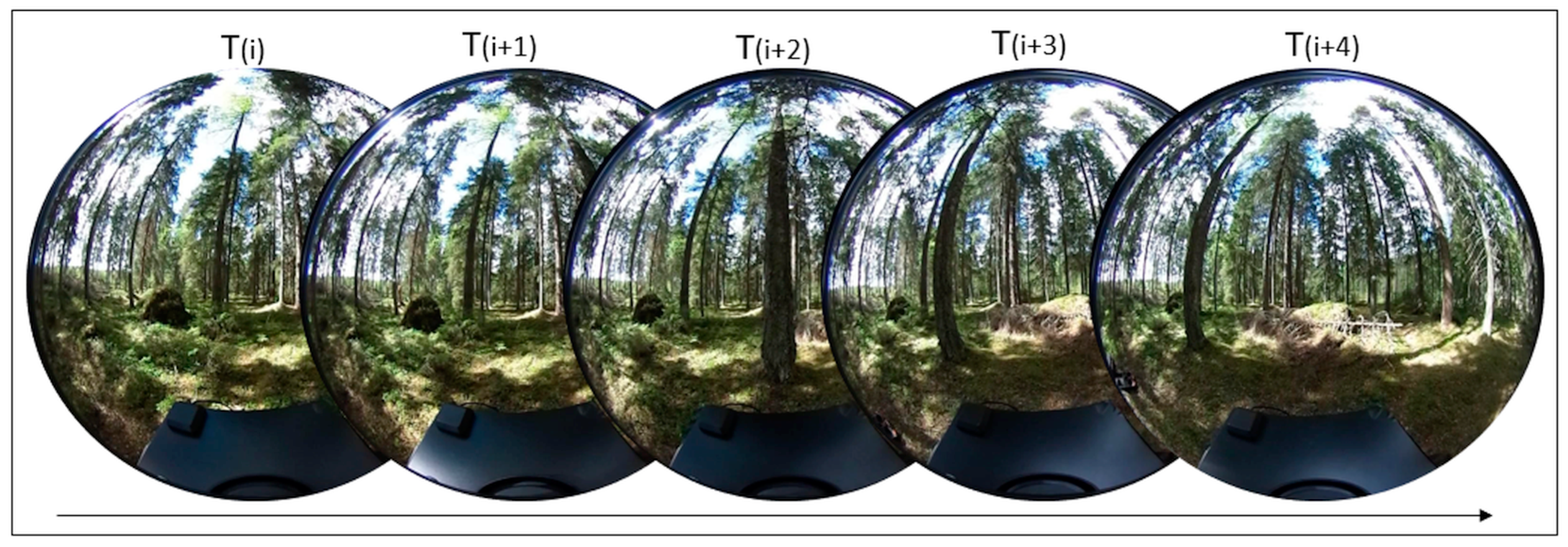

2.1.1. Omnidirectional System

2.1.2. Navigation System

2.2. PMTS Data Integration

3. PMTS Assessments

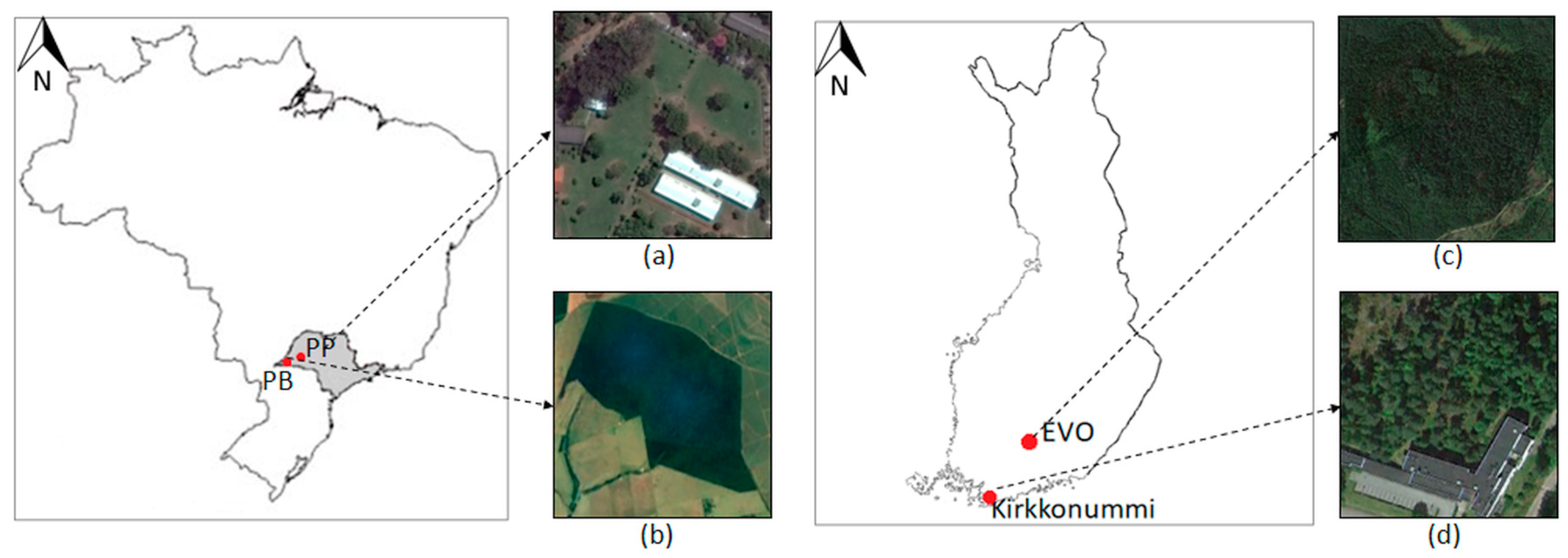

3.1. Test Areas

3.2. Assessment of the Positional and Attitude Trajectory Accuracies

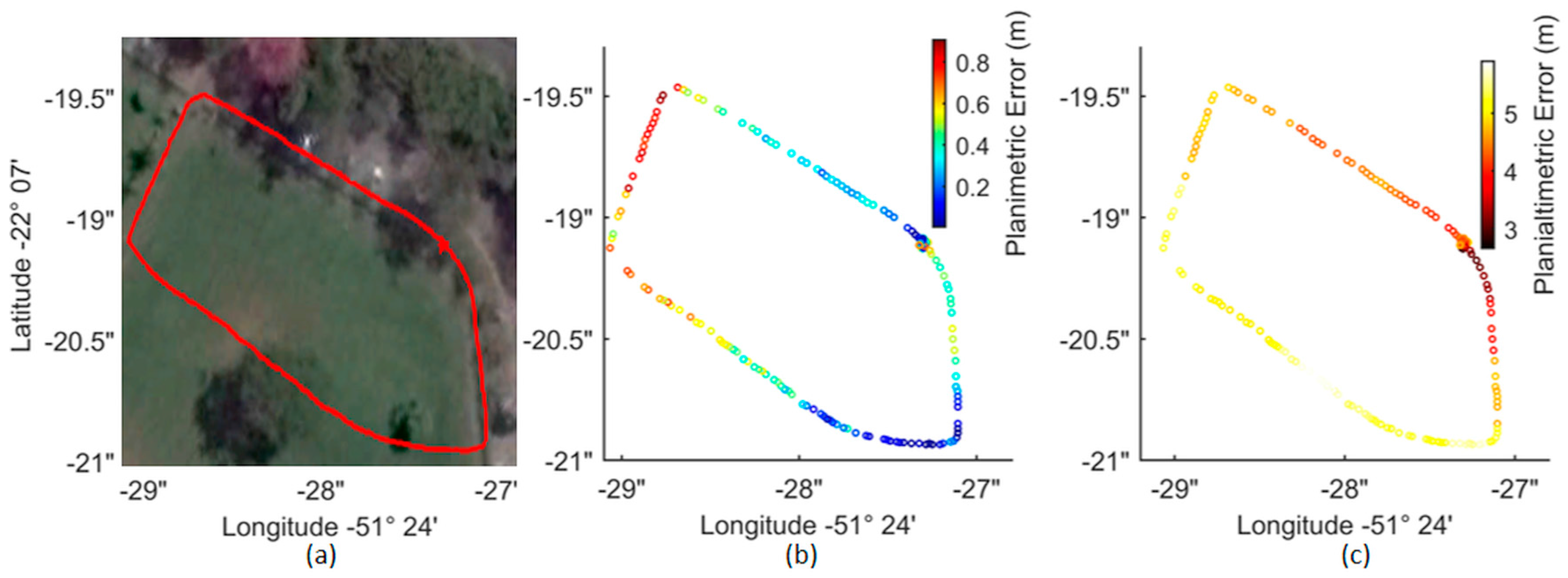

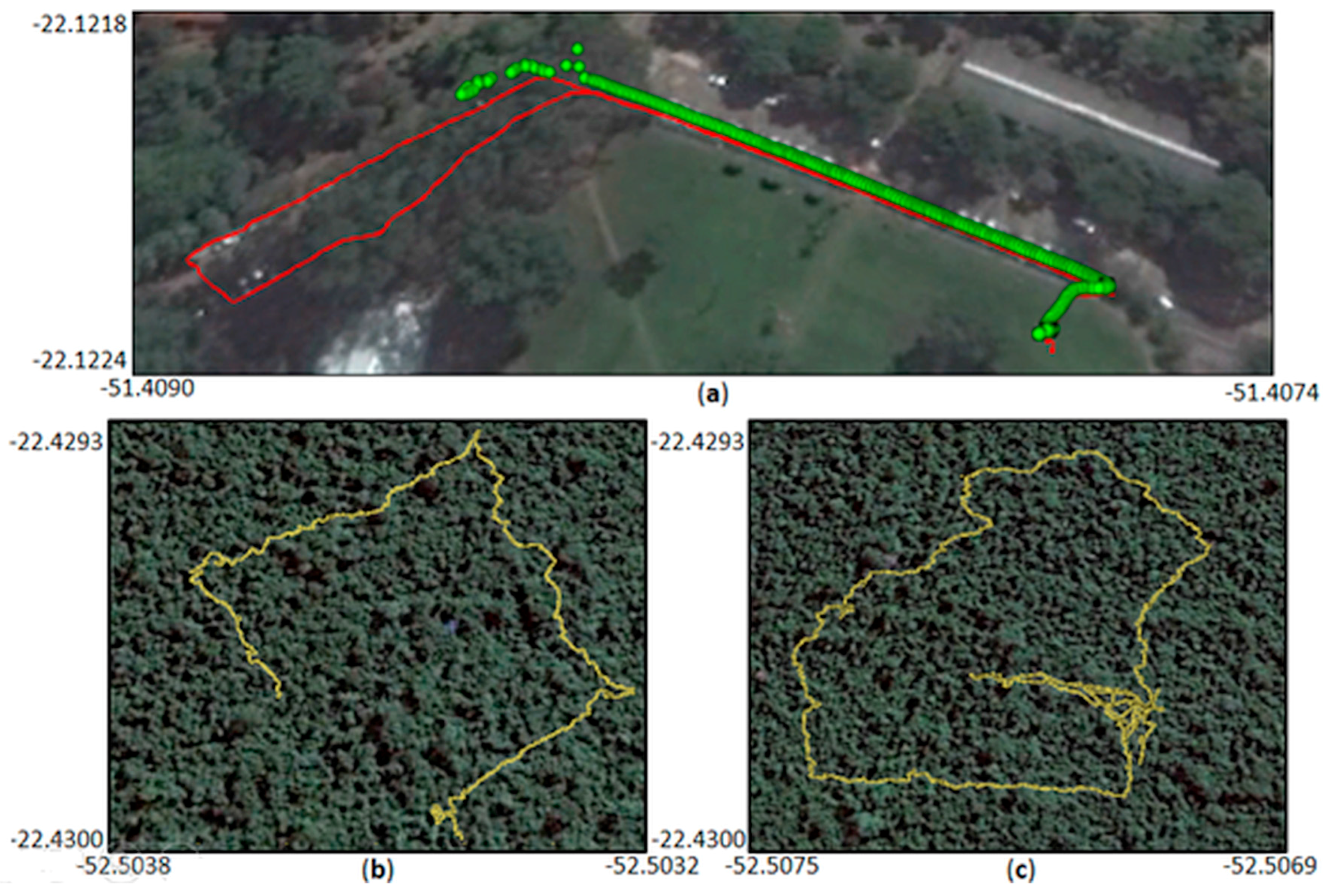

3.2.1. Positional Accuracy Experiments

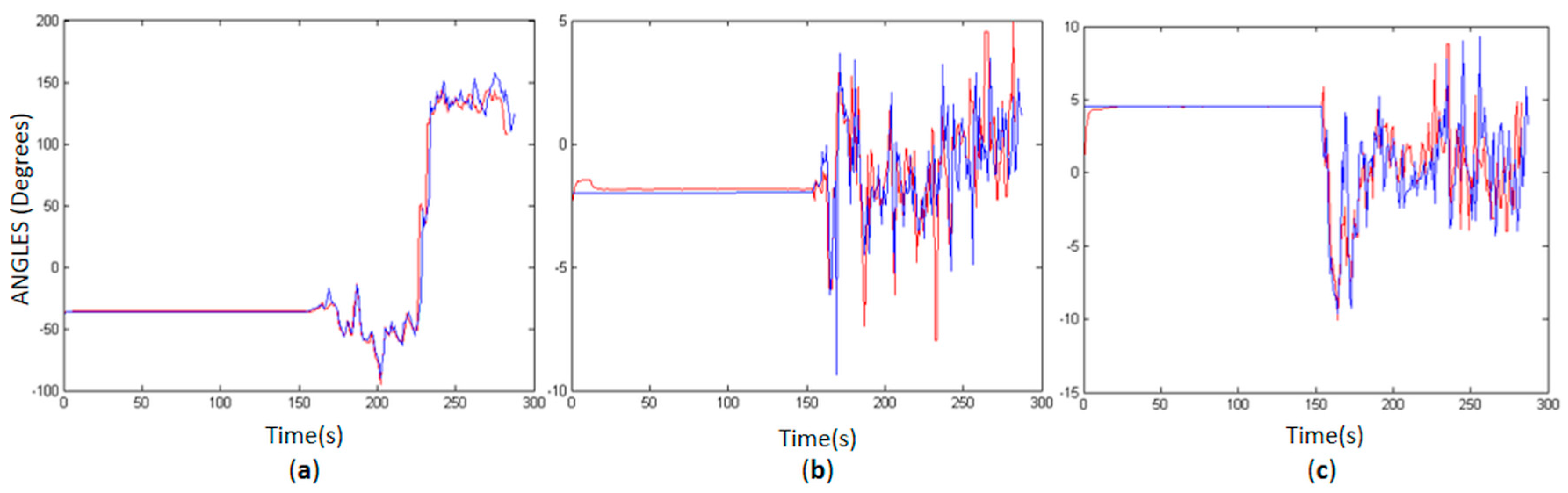

3.2.2. Attitude Accuracy Experiments

3.3. Assessment of Object Reconstruction

3.3.1. Data Set and Processing

- Six targets serving as ground control points (GCPs) were installed on tree stems inside the plot and accurately positioned using a tachymeter (total station). The GCPs were signalized with white spheres (Figure 1d). Only a small number of GCPs could be used, because occlusions and limited accessibility make installing GCPs inside the forest difficult. The spheres were measured manually in the images.

- Tie points were generated using the PhotoScan accuracy option “high”, which uses the full-resolution images in the processing [30]. Outliers were removed by gradual automatic filtering based on the projection accuracy and reconstruction uncertainty criteria. The errors in the image observations were below 1 pixel. Finally, a bundle block adjustment refined the camera positions, camera attitudes and camera calibration parameters, resulting in a final set of oriented images. The final RMSE of the GCPs was 6 cm, which is within the GSD range. Table 4 presents the statistics for the GCPs: mean, standard deviation and RMSE.
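As a minimal sketch of how the statistics in Table 4 can be obtained, the function below computes the mean, standard deviation and RMSE of GCP residuals per axis, plus a total. It assumes residuals are given as (E, N, H) differences in metres between the bundle-adjusted and surveyed coordinates and uses the sample standard deviation (n − 1). Forming the total as the root sum of squares of the three axis values is also an assumption, though it reproduces the tabulated totals (for example √(0.060² + 0.021² + 0.005²) ≈ 0.064 m). The function name `gcp_statistics` is hypothetical.

```python
import math
from statistics import mean, stdev


def gcp_statistics(residuals):
    """Per-axis and total statistics for GCP residuals (hypothetical helper).

    residuals: list of (dE, dN, dH) tuples in metres (assumed: adjusted minus surveyed).
    Returns a dict mapping 'E', 'N', 'H', 'Total' to (mean, sigma, rmse).
    """
    axes = {"E": 0, "N": 1, "H": 2}
    stats = {}
    for name, i in axes.items():
        values = [r[i] for r in residuals]
        m = mean(values)
        s = stdev(values)  # sample standard deviation, n - 1 in the denominator
        rmse = math.sqrt(sum(v * v for v in values) / len(values))
        stats[name] = (m, s, rmse)
    # Assumed total: root sum of squares of the three per-axis values, statistic by statistic.
    stats["Total"] = tuple(
        math.sqrt(sum(stats[a][k] ** 2 for a in axes)) for k in range(3)
    )
    return stats
```

Called as `gcp_statistics(residuals)` with one tuple per GCP; with only six GCPs, as here, the σ estimate itself is fairly uncertain.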

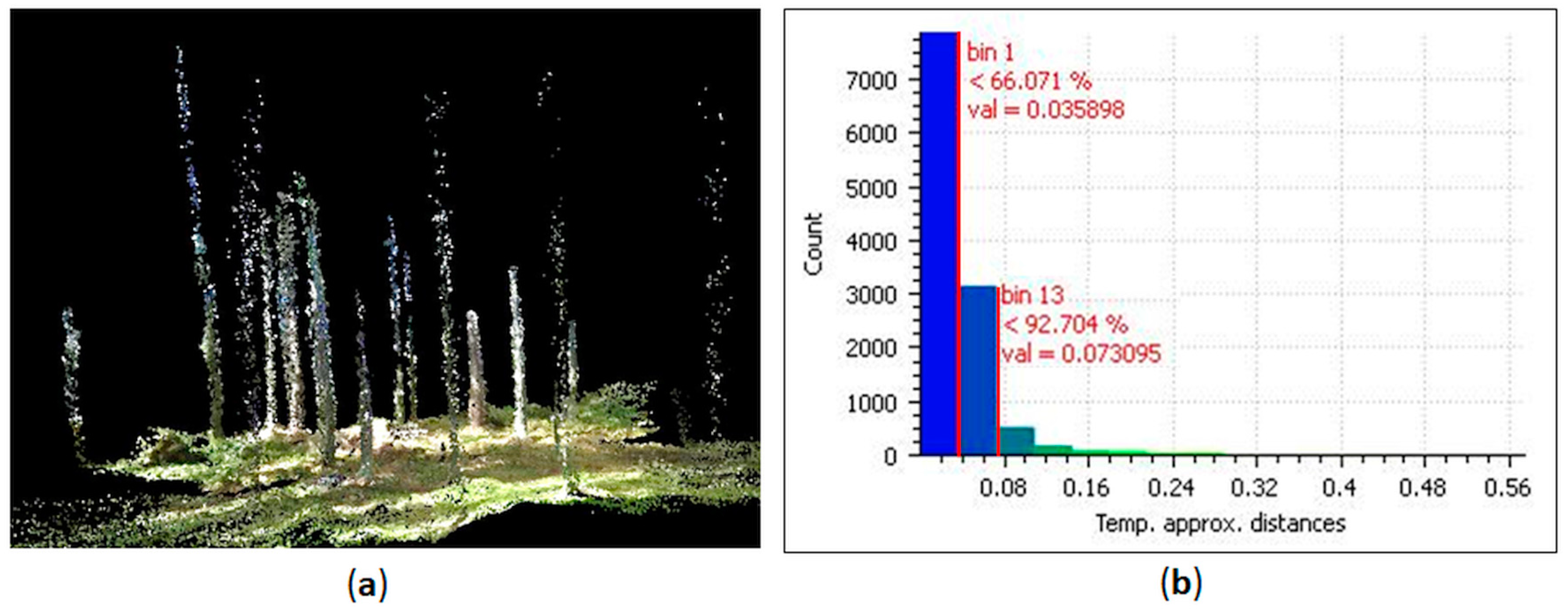

- A dense point cloud was generated from the oriented image set using the multi-view stereo reconstruction method. The ultra-high quality setting was used, producing an average spacing of 1.7 cm between points [30,31]. The aggressive depth filtering mode was selected to reduce outliers, particularly those caused by moving tree leaves and low vegetation.
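The 1.7 cm figure is an average point spacing, and the text does not say how it was measured. One common metric for an exported cloud is the mean nearest-neighbour distance, sketched below with a brute-force search. The function name `mean_point_spacing` is hypothetical, and the O(n²) loop suits only small samples; a spatial index such as a k-d tree would be needed for a full forest plot.

```python
import math


def mean_point_spacing(points):
    """Mean distance from each point to its nearest neighbour (hypothetical helper).

    points: sequence of (x, y, z) tuples in metres.
    Brute-force O(n^2) search, adequate only for small samples.
    """
    if len(points) < 2:
        raise ValueError("need at least two points")
    total = 0.0
    for i, p in enumerate(points):
        # Distance to the closest other point in the sample.
        nearest = min(math.dist(p, q) for j, q in enumerate(points) if j != i)
        total += nearest
    return total / len(points)
```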

3.3.2. PMTS Point Cloud Accuracy Assessment

4. Results

4.1. Positional and Attitude Trajectory Accuracies

4.2. Accuracy of Object Reconstruction

5. Discussion

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Corso, N.; Zakhor, A. Indoor localization algorithms for an ambulatory human operated 3D mobile mapping system. Remote Sens. 2013, 5, 6611–6646.

- Lauterbach, H.A.; Borrmann, D.; Heß, R.; Eck, D.; Schilling, K.; Nüchter, A. Evaluation of a backpack-mounted 3D mobile scanning system. Remote Sens. 2015, 7, 13753–13781.

- Wen, C.; Pan, S.; Wang, C.; Li, J. An Indoor Backpack System for 2-D and 3-D Mapping of Building Interiors. IEEE Geosci. Remote Sens. Lett. 2016, 13, 992–996.

- Leica Geosystems. Leica Pegasus: Backpack Datasheet (Mobile Reality Capture). 2016. Available online: https://leica-geosystems.com/products/mobile-sensor-platforms/capture-platforms/leica-pegasus-backpack (accessed on 6 December 2017).

- Fisher, A. Google’s road map to global domination. N. Y. Times 2013, 11, 1–13. Available online: http://www.nytimes.com/2013/12/15/magazine/googles-plan-for-global-domination-dont-ask-why-ask-where.html (accessed on 6 December 2017).

- West, P.W. Tree and Forest Measurement; Springer: Heidelberg, Germany, 2009.

- Kukko, A.; Kaartinen, H.; Hyyppä, J.; Chen, Y. Multiplatform mobile LASER scanning: Usability and performance. Sensors 2012, 12, 11712–11733.

- Liang, X.; Kukko, A.; Kaartinen, H.; Hyyppä, J.; Yu, X.; Jaakkola, A.; Wang, Y. Possibilities of a personal LASER scanning system for forest mapping and ecosystem services. Sensors 2014, 14, 1228–1248.

- Forsman, M.; Börlin, N.; Holmgren, J. Estimation of Tree Stem Attributes Using Terrestrial Photogrammetry with a Camera Rig. Forests 2016, 7, 61.

- Tseng, Y.H.; Lin, K.Y.; Chen, Y.C. Bundle Adjustment of Spherical Images Acquired with a Portable Panoramic Image Mapping System (PPIMS). Photogramm. Eng. Remote Sens. 2016, 82, 935–943.

- Oveland, I.; Hauglin, M.; Gobakken, T.; Næsset, E.; Maalen-Johansen, I. Automatic Estimation of Tree Position and Stem Diameter Using a Moving Terrestrial LASER Scanner. Remote Sens. 2017, 9, 350.

- Maas, H. Close-range photogrammetry sensors. In 2008 ISPRS Congress Book: Advances in Photogrammetry, Remote Sensing and Spatial Information Science, 1st ed.; Li, Z., Chen, J., Baltsavias, E., Eds.; CRC Press: New York, NY, USA, 2008; pp. 63–72.

- Valiente, D.; Gil, A.; Fernández, L.; Reinoso, Ó. A comparison of EKF and SGD applied to a view-based SLAM approach with omnidirectional images. Robot. Auton. Syst. 2014, 62, 108–119.

- Theta Developers. Proprietary Technical Information: Lens Parameter Information. 2017. Available online: http://theta360.guide/community-document/community.html (accessed on 6 December 2017).

- Aghayari, S.; Saadatseresht, M.; Omidalizarandi, M.; Neumann, I. Geometric calibration of full spherical panoramic Ricoh-Theta camera. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, IV-1/W1, 237–245.

- Campos, M.B.; Tommaselli, A.M.G.; Marcato Junior, J. Geometric Model and Assessment of a Dual Fisheye Imaging System. Photogramm. Rec. 2018, accepted for publication.

- Ray, S.F. Applied Photographic Optics: Lenses and Optical Systems for Photography, Film, Video and Electronic Imaging, 3rd ed.; Focal Press: Oxford, UK, 2002.

- Hughes, C.; Denny, P.; Jones, E.; Glavin, M. Accuracy of fish-eye lens models. Appl. Opt. 2010, 49, 3338–3347.

- Brown, D.C. Close-Range Camera Calibration. Photogramm. Eng. 1971, 37, 855–866.

- Mikhail, E.M.; Ackermann, F. Observations and Least Squares, 1st ed.; IEP: New York, NY, USA, 1976.

- Takasu, T.; Yasuda, A. Development of the low-cost RTK-GPS receiver with an open source program package RTKLIB. In Proceedings of the Annals of International Symposium on GPS/GNSS, International Convention Center Jeju, Korea, 4–6 November 2009; pp. 4–6.

- Hedgecock, W.; Maroti, M.; Sallai, J.; Volgyesi, P.; Ledeczi, A. High-accuracy differential tracking of low-cost GPS receivers. In Proceedings of the 11th Annual International Conference on Mobile Systems, Applications, and Services, Taipei, Taiwan, 25–28 June 2013; Volume 11, pp. 221–234.

- Rodríguez-Pérez, J.R.; Álvarez, M.; Sanz-Ablanedo, E. Assessment of low-cost GPS receiver accuracy and precision in forest environments. J. Surv. Eng. 2007, 133, 159–167.

- Rowberg, J. I2Cdev Device Library Code Is Placed under the MIT License (Open Source Code). 2012. Available online: https://github.com/jrowberg/i2cdevlib/blob/master/dsPIC30F/I2Cdev/I2Cdev.h (accessed on 6 December 2017).

- Alam, F.; Zhaihe, Z.; Jia, H. A Comparative Analysis of Orientation Estimation Filters using MEMS based IMU. In Proceedings of the International Conference on Research in Science, Engineering and Technology, Dubai, UAE, 21–22 March 2014; pp. 21–22.

- Marino, J.; Frey, M.; Gauterin, F.; Sharma, R. Development of a highly accurate and low-cost measurement device for field operational tests. In Proceedings of the IEEE International Symposium on Inertial Sensors and Systems, Laguna Beach, CA, USA, 22–25 February 2016; pp. 74–77.

- Guarnieri, A.; Pirotti, F.; Vettore, A. Low-cost MEMS sensors and vision system for motion and position estimation of a scooter. Sensors 2013, 13, 1510–1522.

- International Organization for Standardization (ISO). ISO 19157:2011 Geographic Information—Data Quality; ISO: Geneva, Switzerland, 2011.

- VectorNav Embedded Navigation Solutions. VN-100 Rugged IMU User Manual. Available online: https://www.vectornav.com/docs/default-source/documentation/vn-100-documentation/vn-100-user-manual-(um001).pdf?sfvrsn=b49fe6b9_18 (accessed on 6 December 2017).

- Agisoft LLC. Agisoft PhotoScan User Manual: Professional Edition, Version 1.2. 2014. Available online: http://www.agisoft.com/pdf/photoscan-pro_1_2_en.pdf (accessed on 6 December 2017).

- Dewez, T.J.B.; Leroux, J.; Morelli, S. Cliff collapse hazard from repeated multicopter uav acquisitions: Return on experience. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 805–811.

- Lehtola, V.V.; Kaartinen, H.; Nüchter, A.; Kaijaluoto, R.; Kukko, A.; Litkey, P.; Kurkela, M. Comparison of the Selected State-Of-The-Art 3D Indoor Scanning and Point Cloud Generation Methods. Remote Sens. 2017, 9, 796.

- Girardeau-Montaut, D. CloudCompare: 3D Point Cloud and Mesh Processing Software: Open-Source Project. Available online: http://www.danielgm.net/cc (accessed on 6 December 2017).

- Blázquez, M.; Colomina, I. Relative INS/GNSS aerial control in integrated sensor orientation: Models and performance. ISPRS J. Photogramm. Remote Sens. 2012, 67, 120–133.

- Naesset, E. Effects of differential single-and dual-frequency GPS and GLONASS observations on point accuracy under forest canopies. Photogramm. Eng. Remote Sens. 2001, 67, 1021–1026.

- Cramer, M. Performance of GPS/inertial solutions in photogrammetry. In Proceedings of the Photogrammetric Week, Stuttgart, Germany, 24–28 September 2001; pp. 49–62.

- Ip, A.; El-Sheimy, N.; Mostafa, M. Performance analysis of integrated sensor orientation. Photogramm. Eng. Remote Sens. 2007, 73, 89–97.

- Freitas, V.L.S.; Reis, B.M.F.; Tommaselli, A.M.G. Automatic shadow detection in aerial and terrestrial images. Bull. Geod. Sci. 2017, 23, 578–590.

| Specification | Ricoh Theta S |
|---|---|
| Sensor Size | Two 1/2.3” CMOS sensors (14 Mpx) |
| Still Image | 2688 × 2688 pixels per sensor |
| Dual Fisheye Video | 960 × 1080 pixels per sensor |
| Principal Distance | 1.43 mm |
| Camera Dimensions | 44 mm × 130 mm × 22.9 mm; weight 125 g |
| Fittings | Remote control, RSWC201 wireless, HDMI and USB |
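The principal distance of 1.43 mm relates the off-axis angle of an incoming ray to its radial distance on the sensor. Assuming the equidistant fisheye model r = c·θ (a standard model of the kind compared by Hughes et al. [18]; whether it is the model adopted for this camera is not stated here), the sketch below maps between the two. The constant and function names are hypothetical.

```python
import math

PRINCIPAL_DISTANCE_MM = 1.43  # c, taken from the Ricoh Theta S table


def equidistant_radius_mm(theta_deg, c_mm=PRINCIPAL_DISTANCE_MM):
    """Radial image distance r = c * theta (theta converted to radians), equidistant model assumed."""
    return c_mm * math.radians(theta_deg)


def off_axis_angle_deg(r_mm, c_mm=PRINCIPAL_DISTANCE_MM):
    """Inverse mapping theta = r / c, returned in degrees."""
    return math.degrees(r_mm / c_mm)
```

Under this assumption a ray 90° off the optical axis would land about 2.25 mm from the principal point, which is why each lens can image a full hemisphere on a small 1/2.3” sensor.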

| MPU6050 Sensor | Full Scale Range | Resolution | Linearity | Sensitivity |
|---|---|---|---|---|
| Accelerometers | ±4 g | 0.001198 m/s² | 0.5% | 8192 LSB/g |
| Gyroscopes | ±250 °/s | 0.0076294 °/s | 0.2% | 131 LSB/(°/s) |

LSB: least significant bit.

| UBLOX NEO 6M Sensor | Time Pulse Frequency Range | Time Pulse Resolution | Velocity Resolution | Heading Resolution |
|---|---|---|---|---|
| GPS L1 frequency | 0.25 Hz to 1 kHz | 30 ns | 0.1 m/s | 0.5° |
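The resolution values in the MPU6050 table follow from dividing the full-scale range by 2^15 = 32768, half of the sensor's signed 16-bit output range, while the sensitivity values convert raw counts into physical units. The sketch below reproduces both, assuming g = 9.81 m/s² (the value that yields the tabulated 0.001198 m/s²). The constant and function names are hypothetical.

```python
FULL_SCALE_ACCEL_G = 4.0     # ±4 g range from the MPU6050 table
FULL_SCALE_GYRO_DPS = 250.0  # ±250 °/s range from the MPU6050 table
G = 9.81                     # assumed gravity value in m/s^2
INT16_HALF_RANGE = 2 ** 15   # signed 16-bit output spans -32768..32767

# Resolution: one count expressed in physical units.
accel_resolution = FULL_SCALE_ACCEL_G * G / INT16_HALF_RANGE  # m/s^2 per count
gyro_resolution = FULL_SCALE_GYRO_DPS / INT16_HALF_RANGE      # °/s per count


def accel_counts_to_mps2(raw: int) -> float:
    """Raw accelerometer counts to m/s^2 using the 8192 LSB/g sensitivity."""
    return raw / 8192 * G


def gyro_counts_to_dps(raw: int) -> float:
    """Raw gyroscope counts to °/s using the 131 LSB/(°/s) sensitivity."""
    return raw / 131.0
```

The gyroscope sensitivity of 131 LSB/(°/s) is a rounding of 32768 / 250 = 131.072, so 1/131 °/s differs slightly from the tabulated 0.0076294 °/s resolution.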

| Offsets | Δx (mm) | Δy (mm) | Δh (mm) | Δroll (°) | Δpitch (°) | Δyaw (°) |
|---|---|---|---|---|---|---|
| CAM1/Platform | 101.4 | 85.05 | 138.0 | −78.75 | 29.45 | 174.57 |
| ROP | −0.79 | 0.41 | 1.904 | 0.0149 | 0.0526 | 179.66 |
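The offsets table gives a translation (mm) and three rotation angles (°) between camera 1 and the platform, and between the two lenses (ROP). Such offsets are typically applied as x_platform = R·x_cam + t. The table does not state the rotation convention or the direction of the transformation, so this minimal sketch assumes the angular offsets are roll, pitch and yaw composed as R = Rz(yaw)·Ry(pitch)·Rx(roll), with the translation expressed in the platform frame. Both are assumptions, and the function names are hypothetical.

```python
import math


def rotation_matrix(roll_deg, pitch_deg, yaw_deg):
    """R = Rz(yaw) @ Ry(pitch) @ Rx(roll); the Z-Y-X order is an assumed convention."""
    r, p, y = (math.radians(a) for a in (roll_deg, pitch_deg, yaw_deg))
    cr, sr = math.cos(r), math.sin(r)
    cp, sp = math.cos(p), math.sin(p)
    cy, sy = math.cos(y), math.sin(y)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def camera_to_platform(point_cam_mm, offsets):
    """Apply x_platform = R @ x_cam + t, with offsets = (dx, dy, dh, roll, pitch, yaw)."""
    dx, dy, dh, roll, pitch, yaw = offsets
    R = rotation_matrix(roll, pitch, yaw)
    t = (dx, dy, dh)
    return tuple(
        sum(R[i][k] * point_cam_mm[k] for k in range(3)) + t[i] for i in range(3)
    )


# CAM1/Platform row of the offsets table (mm, mm, mm, °, °, °).
CAM1_PLATFORM = (101.4, 85.05, 138.0, -78.75, 29.45, 174.57)
```

With this convention the camera origin maps to the lever arm (101.4, 85.05, 138.0) mm; if the original calibration used another angle order, only `rotation_matrix` would need to change.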

| Statistics | E (m) | N (m) | H (m) | Total Error (m) |
|---|---|---|---|---|
| Mean | 0.007 | 0.0006 | −0.001 | 0.007 |
| σ | 0.059 | 0.021 | 0.004 | 0.063 |
| RMSE | 0.060 | 0.021 | 0.005 | 0.064 |

| MPU6050 Euler Angles | Mean (°) | σ (°) | RMSE (°) |
|---|---|---|---|
| Yaw (γ) | −0.0888 | 3.0652 | 3.0588 |
| Pitch (ϕ) | 0.0190 | 0.5474 | 0.5463 |
| Roll (ω) | 0.0999 | 1.0690 | 1.0710 |
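The attitude statistics compare MPU6050 Euler angles with reference attitudes whose acquisition is not shown here. Any such comparison needs angle wrapping: a yaw of 359° against a reference of 1° is a 2° error, not 358°. A minimal sketch, assuming paired angle series in degrees and the sample standard deviation; the function names are hypothetical.

```python
import math


def wrap_deg(angle):
    """Wrap an angle difference into [-180, 180) degrees."""
    return (angle + 180.0) % 360.0 - 180.0


def attitude_error_stats(test_deg, ref_deg):
    """Mean, sample sigma and RMSE of wrapped differences test - reference, in degrees."""
    diffs = [wrap_deg(t - r) for t, r in zip(test_deg, ref_deg)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two paired angles")
    m = sum(diffs) / n
    sigma = math.sqrt(sum((d - m) ** 2 for d in diffs) / (n - 1))
    rmse = math.sqrt(sum(d * d for d in diffs) / n)
    return m, sigma, rmse
```

Wrapping matters mostly for yaw, which can cross ±180° during a walk; roll and pitch of a backpack platform normally stay far from that boundary.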

| UBLOX NEO 6M Accuracy | Mean (m) | σ (m) | RMSE (m) |
|---|---|---|---|
| Absolute (Planimetric) | 0.34 | 0.21 | 0.40 |
| Absolute (Planialtimetric) | 4.34 | 1.0 | 4.45 |
| Relative (Planialtimetric) | 0.026 | 0.078 | 0.082 |
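Treating the first numeric column as the mean error, each row of the UBLOX table satisfies RMSE² ≈ mean² + σ², the identity linking the three statistics. The sketch below checks this with the tabulated values. The identity is exact only when σ uses n in the denominator; with the sample (n − 1) form it is approximate, which may explain why RMSE is slightly below σ for the near-zero-mean MPU6050 angles. The constant and function names are hypothetical.

```python
import math

# (mean, sigma, rmse) in metres, copied from the UBLOX NEO 6M accuracy table.
UBLOX_ROWS = {
    "Absolute (Planimetric)": (0.34, 0.21, 0.40),
    "Absolute (Planialtimetric)": (4.34, 1.0, 4.45),
    "Relative (Planialtimetric)": (0.026, 0.078, 0.082),
}


def implied_rmse(mean, sigma):
    """RMSE implied by the population identity RMSE^2 = mean^2 + sigma^2."""
    return math.hypot(mean, sigma)


for name, (m, s, rmse) in UBLOX_ROWS.items():
    print(f"{name}: tabulated {rmse:.3f} m, implied {implied_rmse(m, s):.3f} m")
```

All three rows agree to the table's precision, and the check also shows that the large absolute planialtimetric RMSE is dominated by a systematic mean offset rather than by random scatter.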

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Campos, M.B.; Tommaselli, A.M.G.; Honkavaara, E.; Prol, F.D.S.; Kaartinen, H.; El Issaoui, A.; Hakala, T. A Backpack-Mounted Omnidirectional Camera with Off-the-Shelf Navigation Sensors for Mobile Terrestrial Mapping: Development and Forest Application. Sensors 2018, 18, 827. https://doi.org/10.3390/s18030827
