An Improved Calibration Method for a Rotating 2D LIDAR System

Abstract

1. Introduction

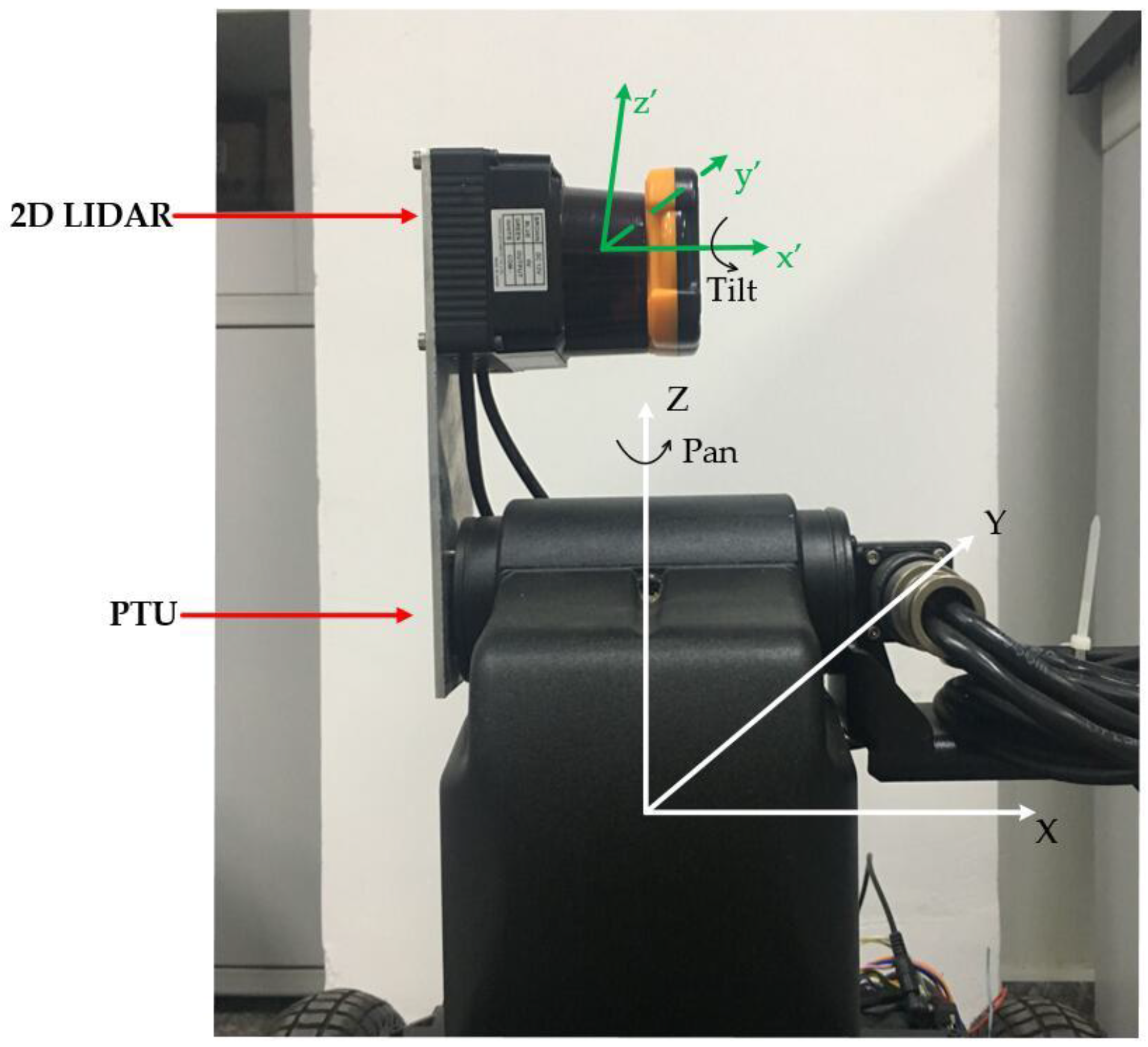

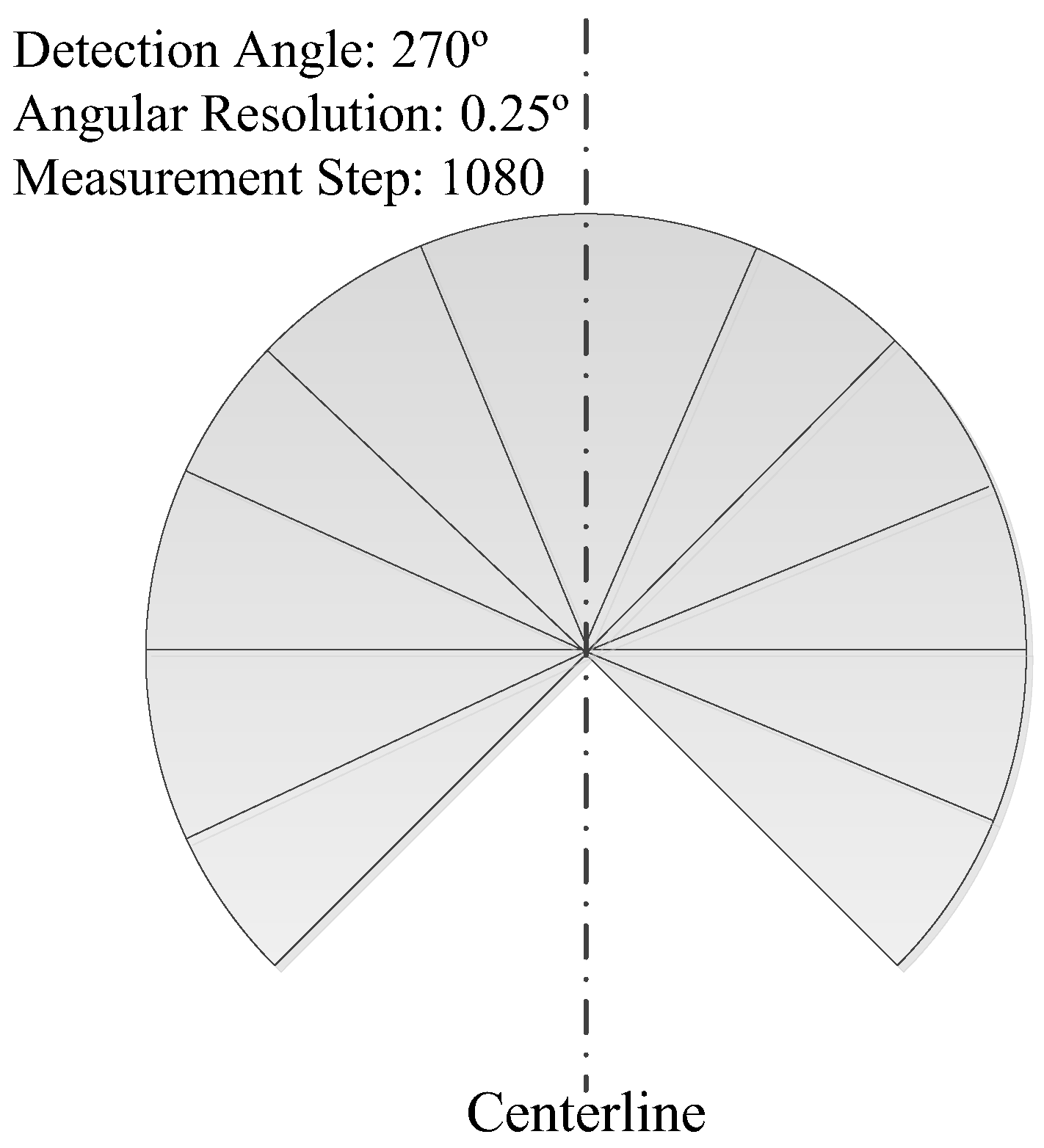

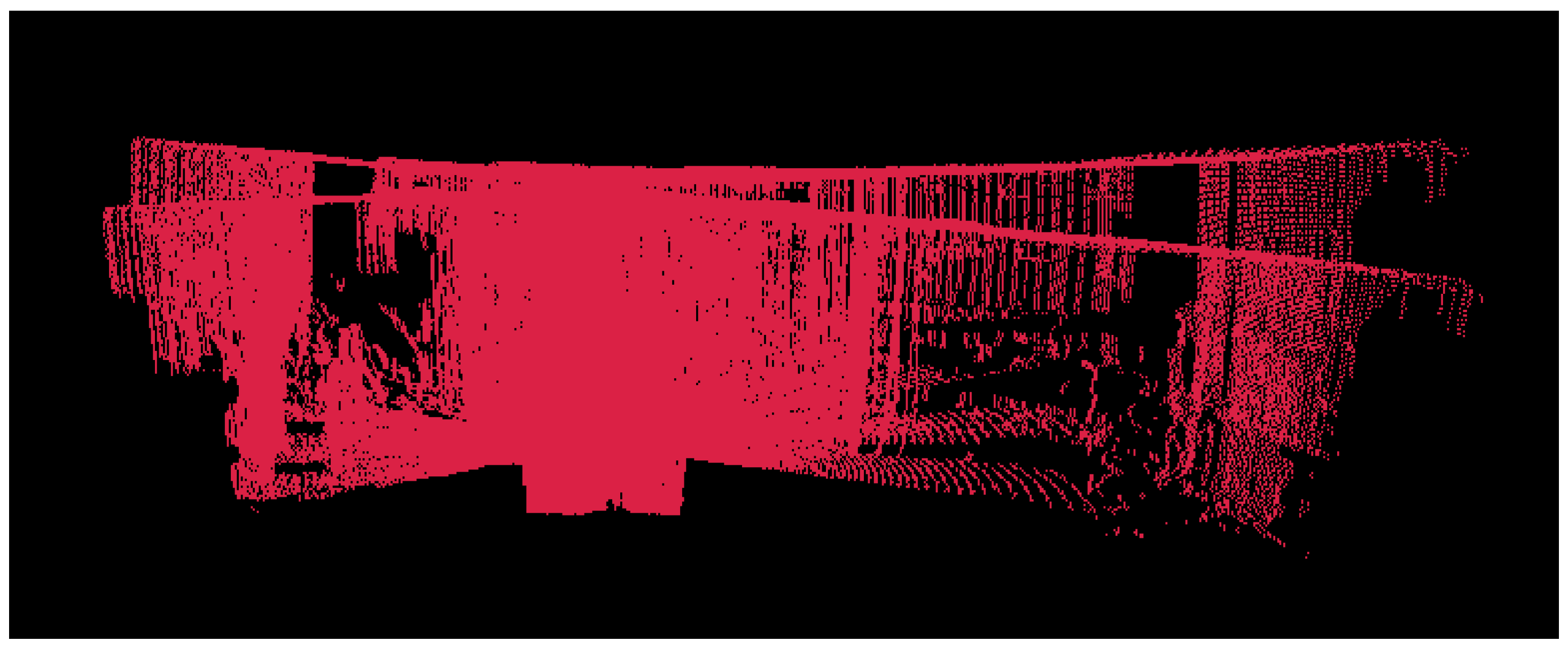

2. R2D-LIDAR system

3. Calibration Model and Strategy

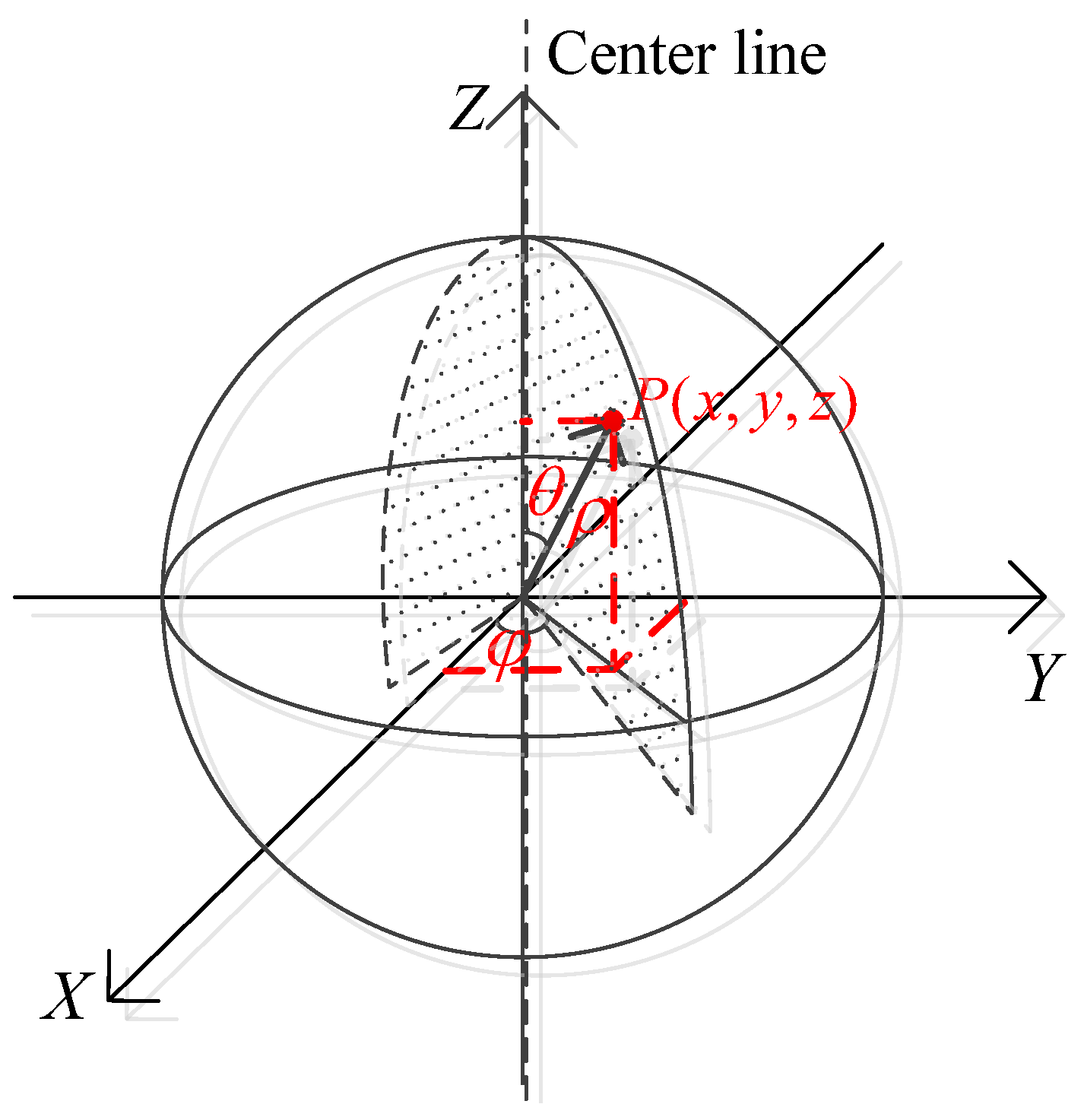

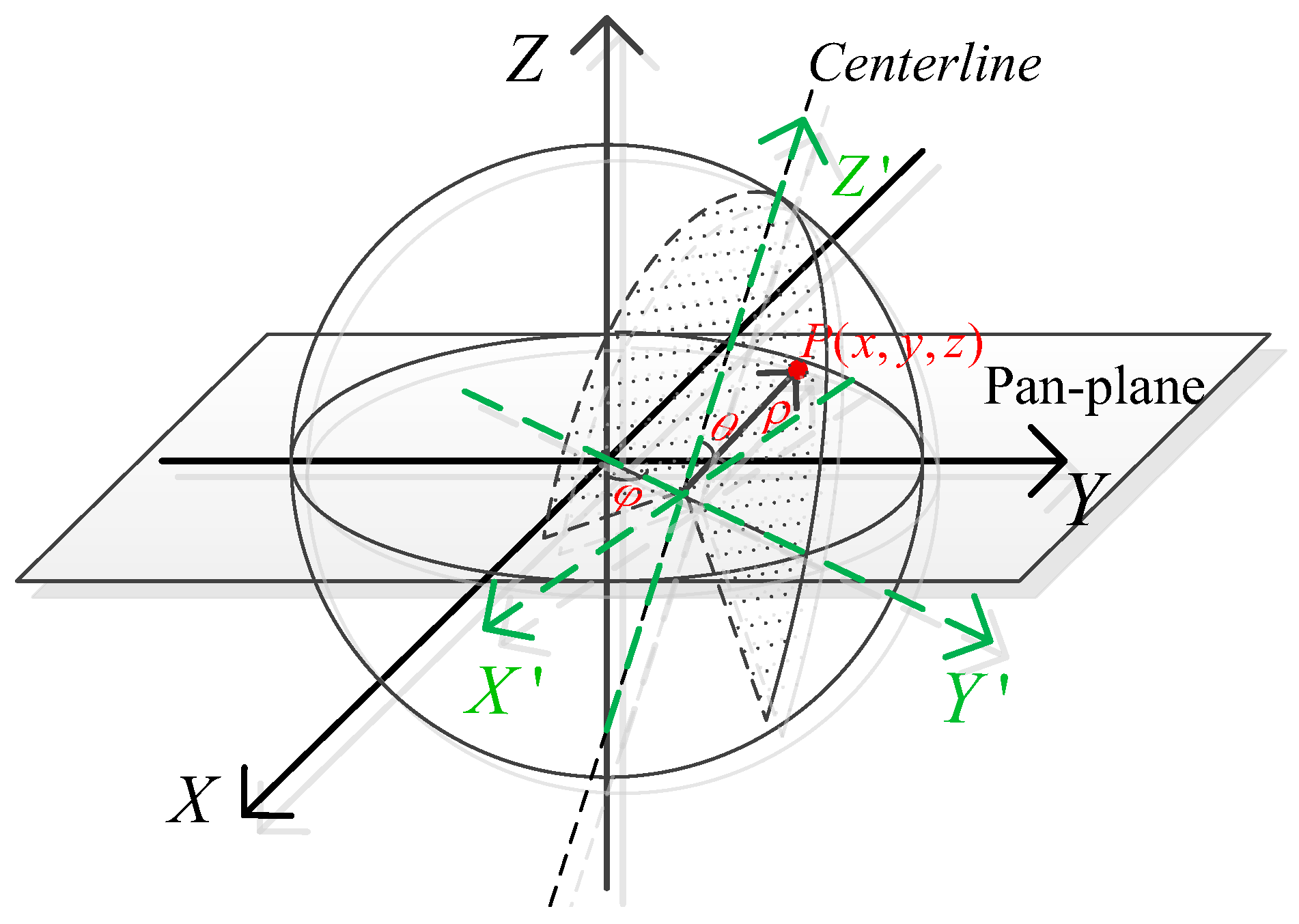

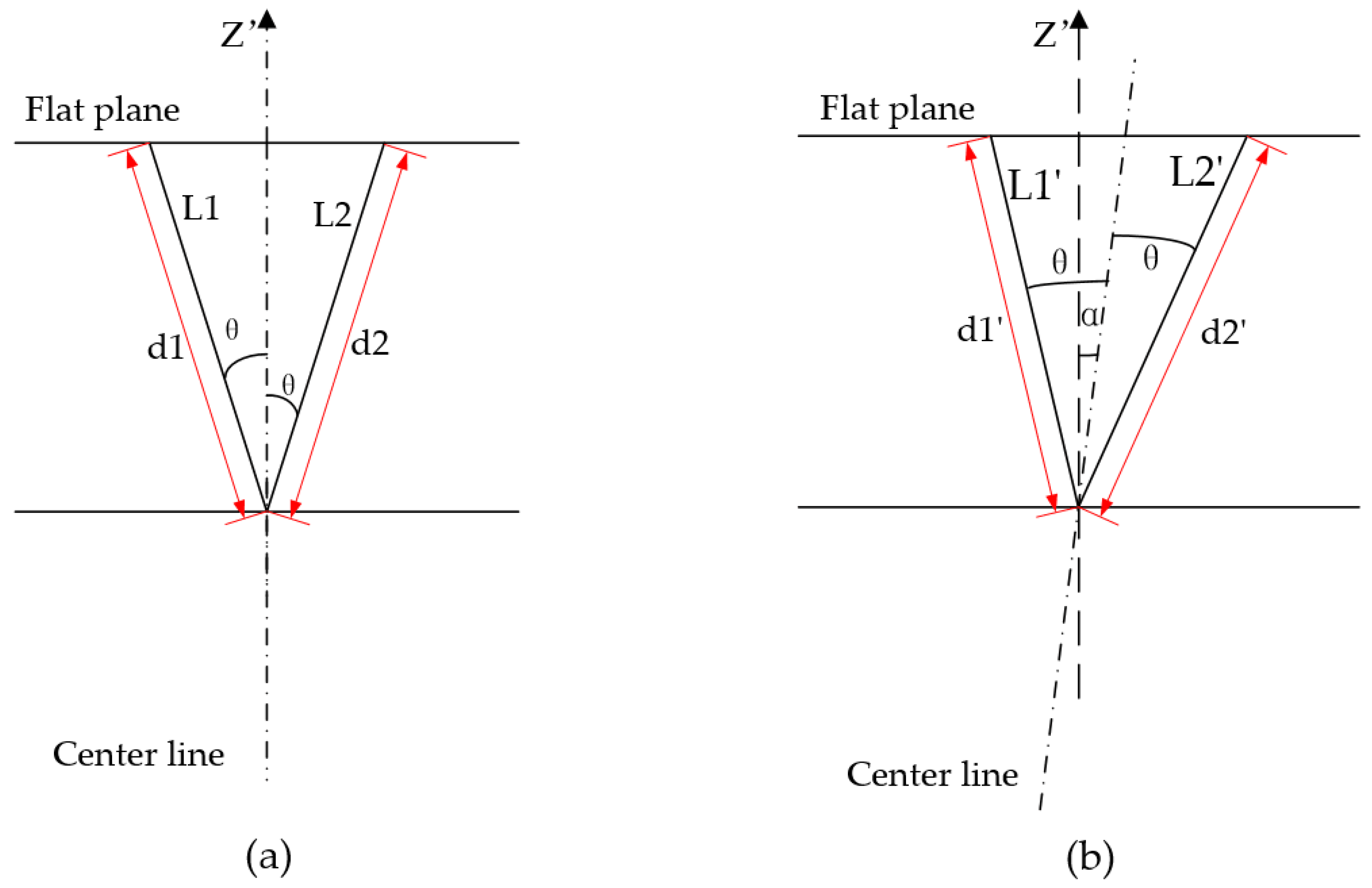

3.1. Measurement Model

3.2. LM Optimized Algorithm

4. Experiments

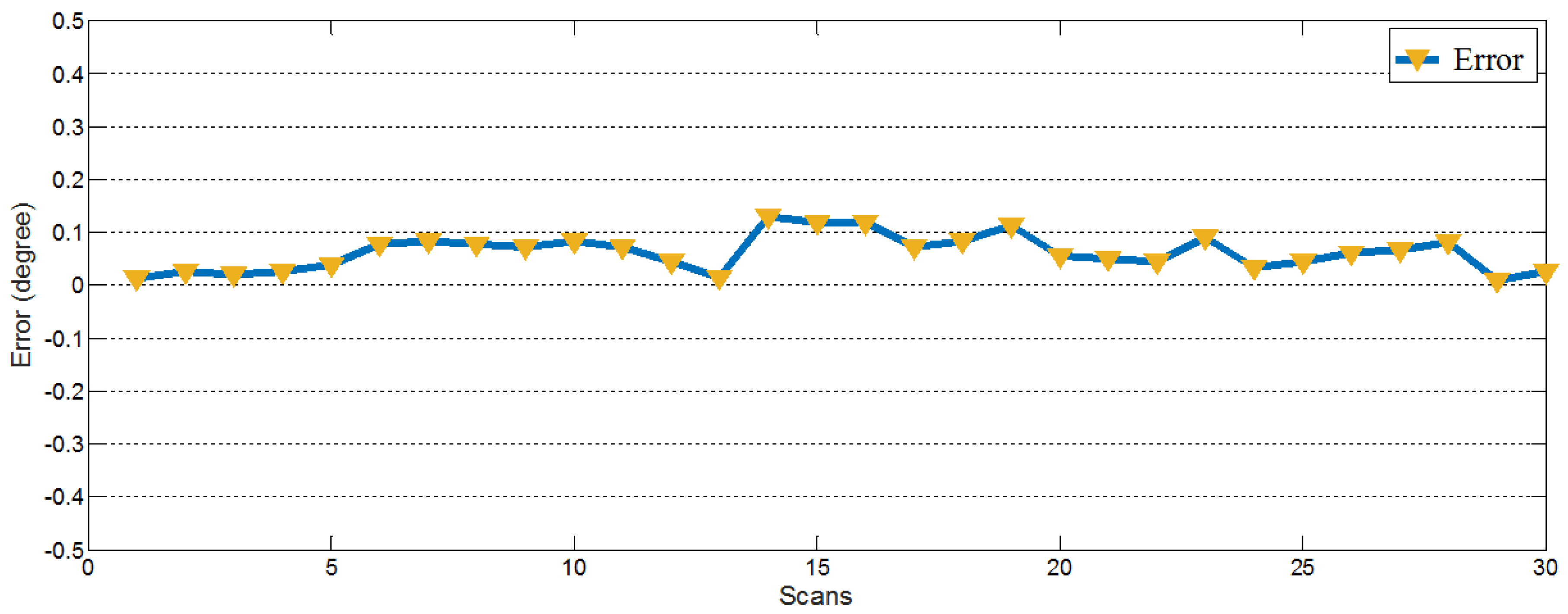

4.1. Simulation

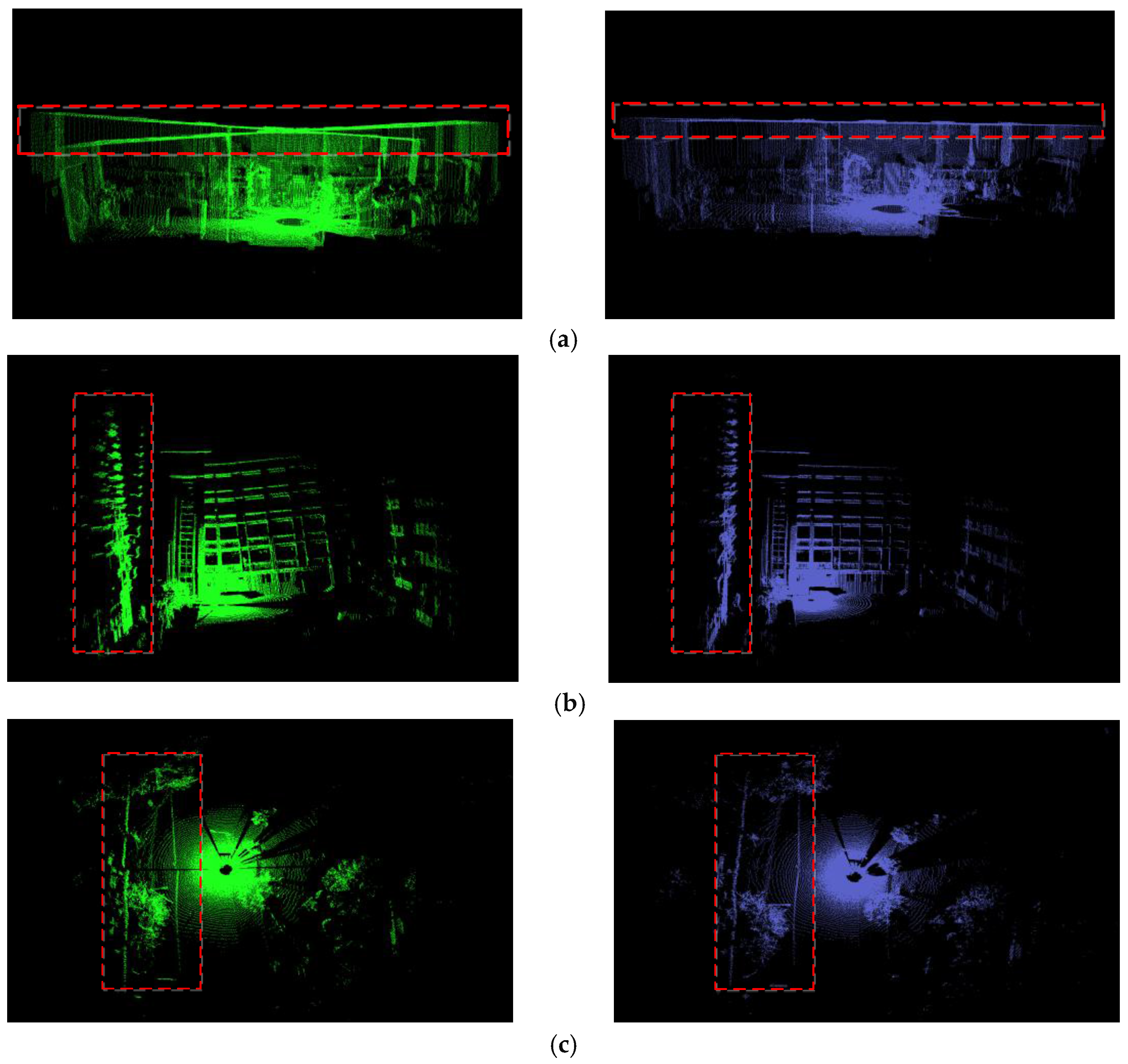

4.2. Calibration in Different Scenarios

4.3. Comparison with Alismail’s Work

4.4. Accuracy of Calibration Result

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Ye, Y.; Fu, L.; Li, B. Object Detection and Tracking Using Multi-layer Laser for Autonomous Urban Driving. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Rio de Janeiro, Brazil, 1–4 November 2016; pp. 259–264.

- Dumitrascu, B.; Filipescu, A.; Petrea, G.; Minca, E.; Filipescu, S.; Voda, A. Laser-based Obstacle Avoidance Algorithm for Four Driving/Steering Wheels Autonomous Vehicle. In Proceedings of the 2013 17th International Conference on System Theory, Control and Computing (ICSTCC), Sinaia, Romania, 11–13 October 2013; pp. 187–192.

- Xu, N.; Zhang, W.; Zhu, L.; Li, C.; Wang, S. Object 3D surface reconstruction approach using portable laser scanner. In Proceedings of the IOP Conference Series: Earth and Environmental Science, Chengdu, China, 26–28 May 2017.

- Chi, S.; Xie, Z.; Chen, W. A Laser Line Auto-Scanning System for Underwater 3D Reconstruction. Sensors 2016, 16, 1534.

- Wen, C.; Pan, S.; Wang, C.; Li, J. An indoor backpack system for 2-D and 3-D mapping of building interiors. IEEE Geosci. Remote Sens. Lett. 2016, 13, 992–996.

- Cole, D.; Newman, P.M. Using laser range data for 3D SLAM in outdoor environments. In Proceedings of the IEEE International Conference on Robotics and Automation, Orlando, FL, USA, 15–19 May 2006.

- Zhang, Y.; Du, F.; Luo, Y.; Xiong, Y. Map-building approach based on laser and depth visual sensor fusion SLAM. Appl. Res. Comput. 2016, 33.

- Lenac, K.; Kitanov, A.; Cupec, R.; Petrovic, I. Fast planar surface 3D SLAM using LIDAR. Robot. Auton. Syst. 2017, 92, 197–220.

- Lin, W.; Hu, J.; Xu, H.; Ye, C.; Ye, X.; Li, Z. Graph-based SLAM in indoor environment using corner feature from laser sensor. In Proceedings of the 2017 32nd Youth Academic Annual Conference of Chinese Association of Automation (YAC), Hefei, China, 19–21 May 2017; pp. 1211–1216.

- Liang, X.; Chen, H.Y.; Li, Y.J.; Liu, Y.H. Visual Laser-SLAM in Large-scale Indoor Environments. In Proceedings of the 2016 IEEE International Conference on Robotics and Biomimetics (ROBIO), Qingdao, China, 3–7 December 2016; pp. 19–24.

- Borrmann, D.; Elseberg, J.; Lingemann, K.; Nüchter, A.; Hertzberg, J. Globally consistent 3D mapping with scan matching. Robot. Auton. Syst. 2008, 56, 130–142.

- Newman, P.; Sibley, G.; Smith, M.; Cummins, M.; Harrison, A.; Mei, C.; Posner, I.; Shade, R.; Schroeter, D.; Murphy, L. Navigating, recognizing and describing urban spaces with vision and lasers. Int. J. Robot. Res. 2009, 28, 1406.

- Droeschel, D.; Schwarz, M.; Behnke, S. Continuous mapping and localization for autonomous navigation in rough terrain using a 3D laser scanner. Robot. Auton. Syst. 2017, 88, 104–115.

- Real-Moreno, O.; Rodriguez-Quinonez, J.C.; Sergiyenko, O.; Basaca-Preciado, L.C.; Hernandez-Balbuena, D.; Rivas-Lopez, M.; Flores-Fuentes, W. Accuracy improvement in 3D laser scanner based on dynamic triangulation for autonomous navigation system. In Proceedings of the 2017 IEEE 26th International Symposium on Industrial Electronics (ISIE), Edinburgh, UK, 19–21 June 2017; pp. 1602–1608.

- Alismail, H.; Browning, B. Automatic Calibration of Spinning Actuated LIDAR Internal Parameters. J. Field Robot. 2014, 32, 723–747.

- User’s Manual and Programming Guide HDL-64E S3; Velodyne: Morgan Hill, CA, USA, 2013; Available online: www.velodynelidar.com (accessed on 30 January 2018).

- User Guide RS-LiDAR-16; Robosense: Shenzhen, China, 2014; Available online: www.robosense.ai (accessed on 30 January 2018).

- Kang, J.; Doh, N.L. Full-DOF Calibration of a Rotating 2-D LIDAR with a Simple Plane Measurement. IEEE Trans. Robot. 2016, 32, 1245–1263.

- Olivka, P.; Krumnikl, M.; Moravec, P.; Seidl, D. Calibration of Short Range 2D Laser Range Finder for 3D SLAM Usage. J. Sens. 2016, 2016, 3715129.

- Yamao, S.; Hidaka, H.; Odashima, S.; Shan, J.; Murase, Y. Calibration of a Rotating 2D LRF in Unprepared Environments by Minimizing Redundant Measurement Errors. In Proceedings of the 2017 IEEE International Conference on Advanced Intelligent Mechatronics (AIM), Munich, Germany, 3–7 July 2017; pp. 172–177.

- Gong, Z.; Wen, C.; Wang, C.; Li, J. A target-free automatic self-calibration approach for multibeam laser scanners. IEEE Trans. Instrum. Meas. 2017, 67, 238–240.

- Levenberg, K. A method for the solution of certain nonlinear problems in least squares. Q. Appl. Math. 1944, 2, 164–168.

- Madsen, K.; Nielsen, H.B.; Tingleff, O. Methods for Non-Linear Least Squares Problems, 2nd ed.; Informatics and Mathematical Modelling; Technical University of Denmark, DTU: Kongens Lyngby, Denmark, 2004; pp. 6–30.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zeng, Y.; Yu, H.; Dai, H.; Song, S.; Lin, M.; Sun, B.; Jiang, W.; Meng, M.Q.-H. An Improved Calibration Method for a Rotating 2D LIDAR System. Sensors 2018, 18, 497. https://doi.org/10.3390/s18020497