Service Migration from Cloud to Multi-tier Fog Nodes for Multimedia Dissemination with QoE Support

Abstract

1. Introduction

2. Shifting the Multimedia Load from the Cloud Computing to the Fog Nodes

3. The Migration of Video Services from the Cloud Computing to Multi-Tier Fog Nodes

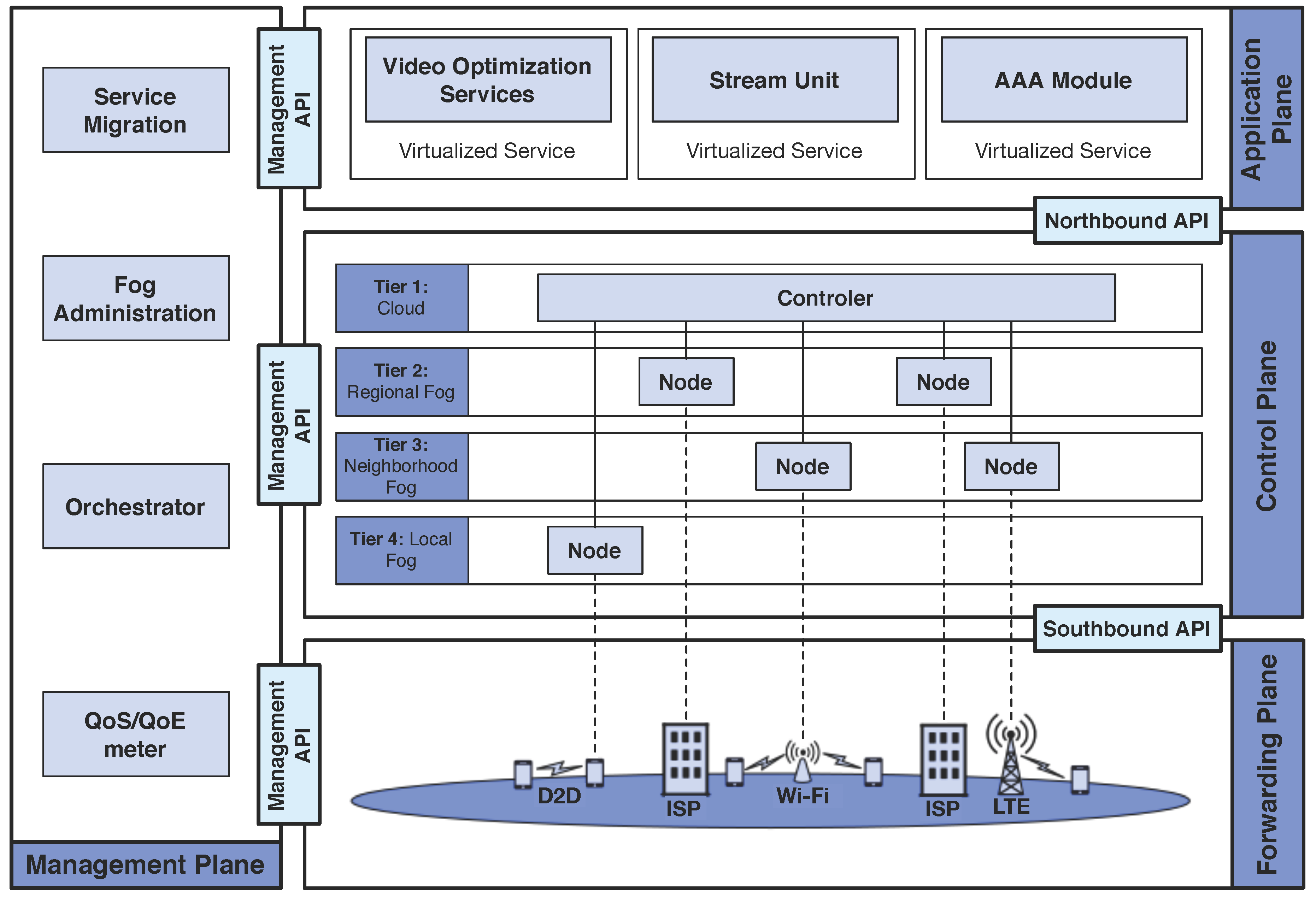

3.1. Multi-Tier Fog Architecture to Provide Service Migration

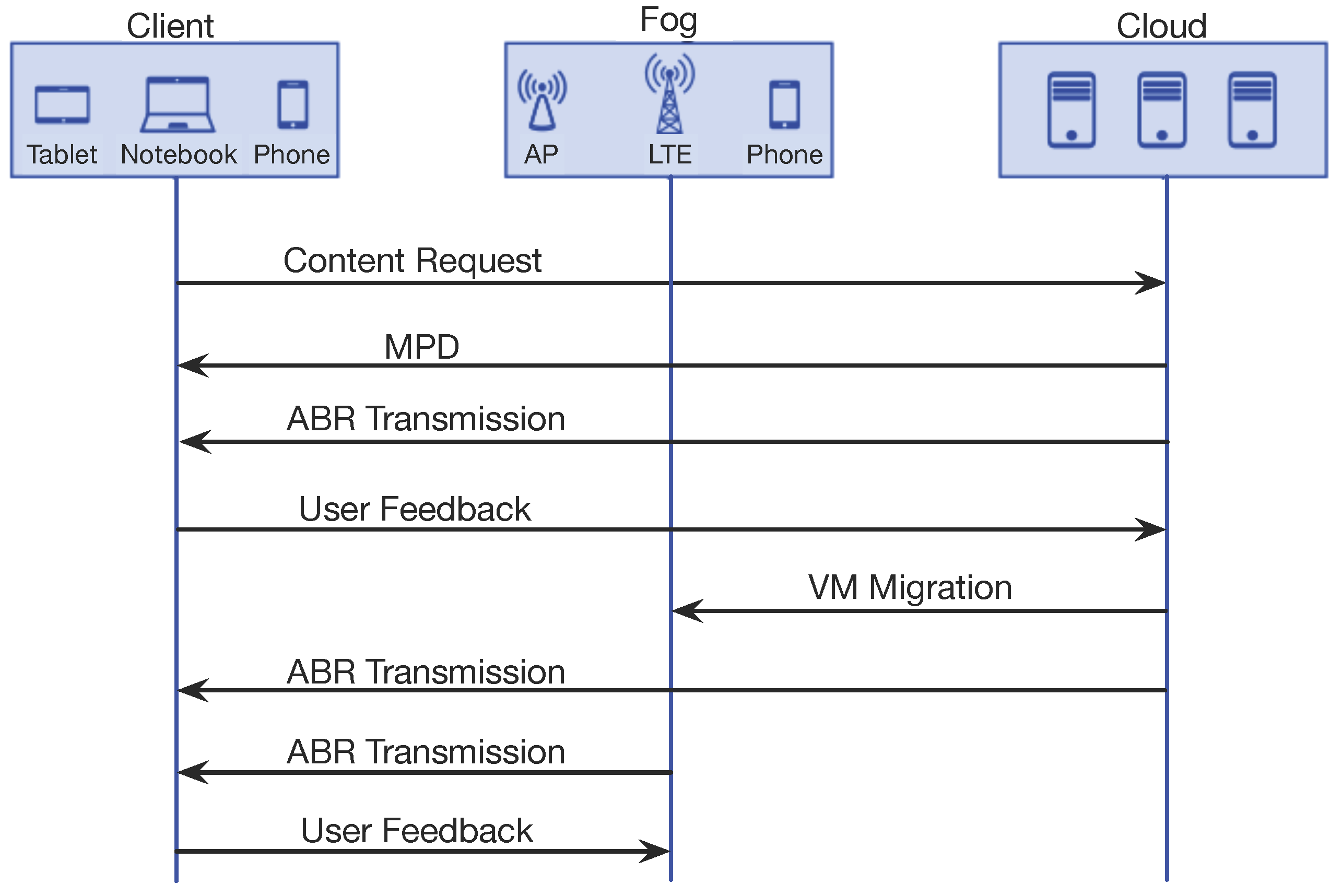

3.2. Video Service Migration

4. Video Optimization Service Migration Evaluation

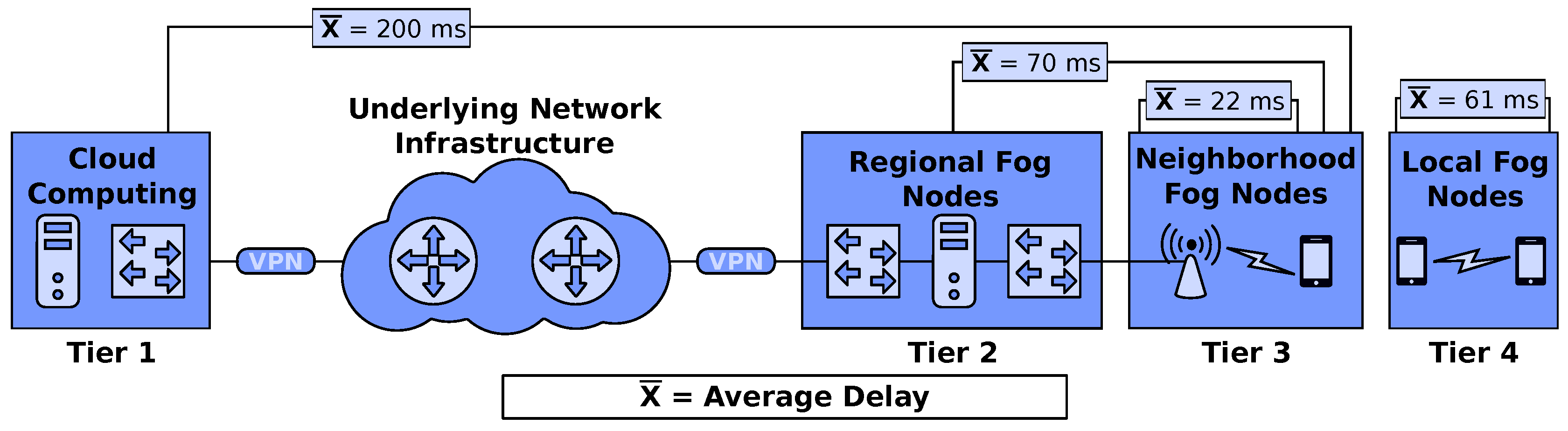

4.1. Scenario Description

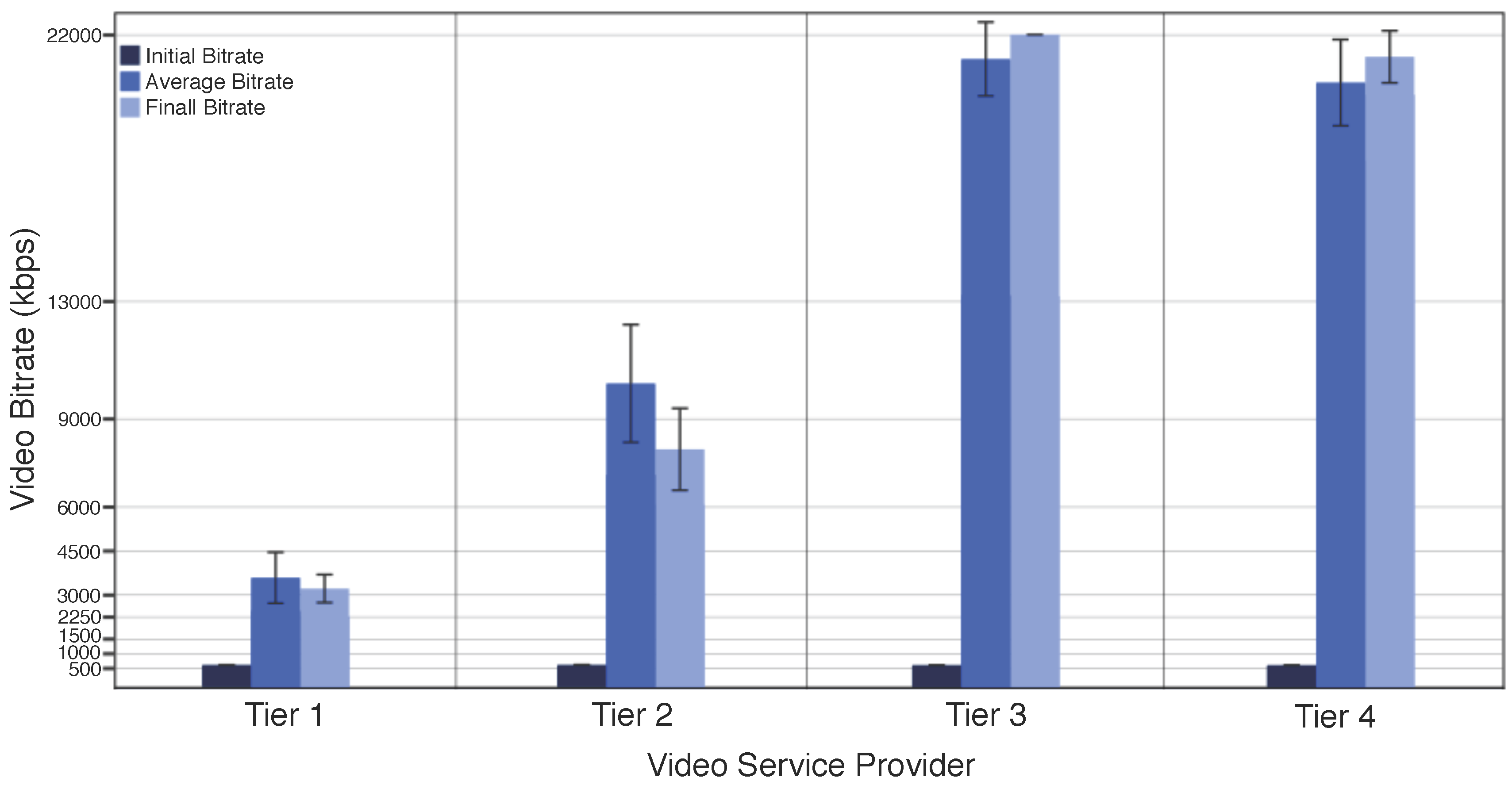

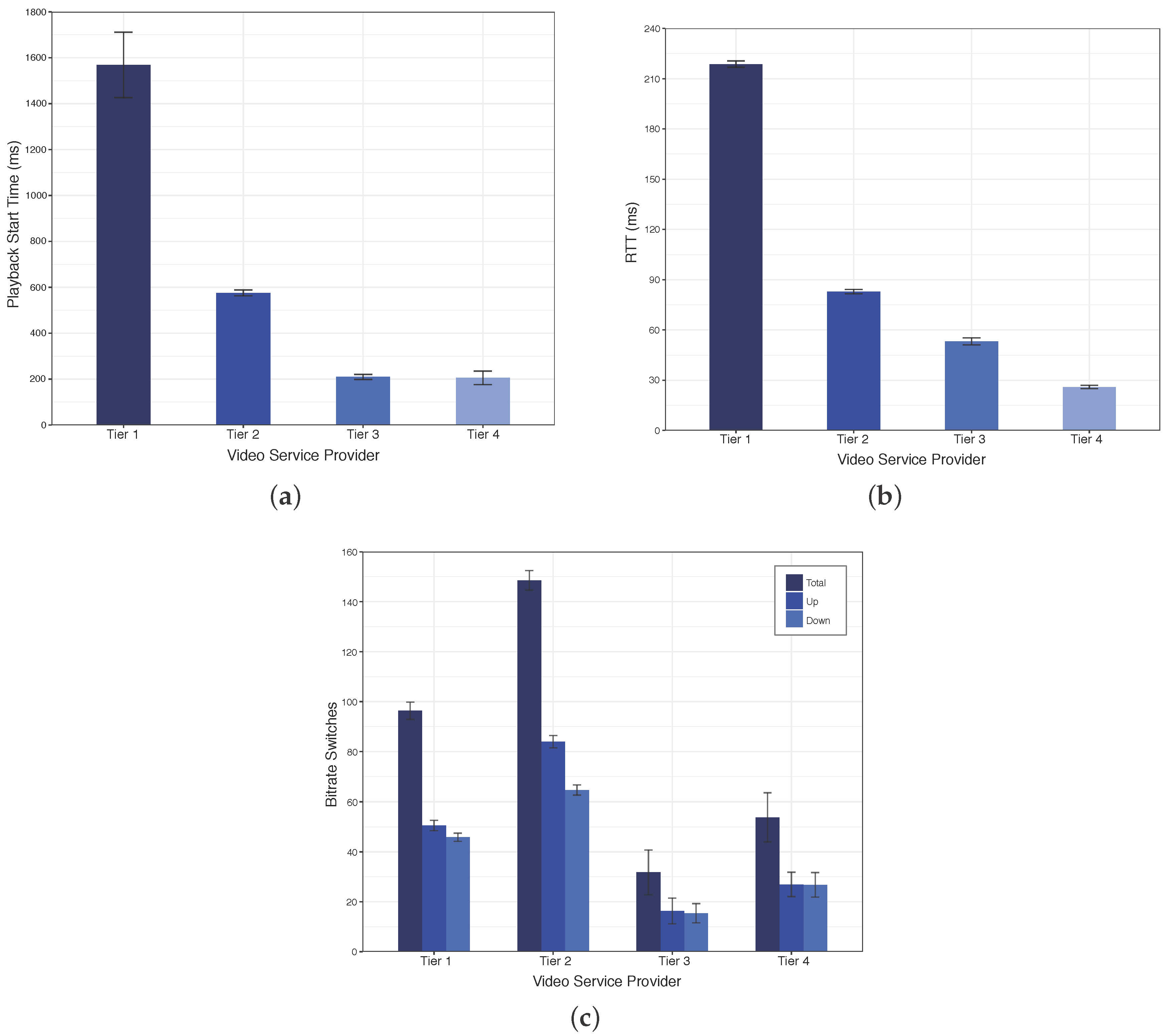

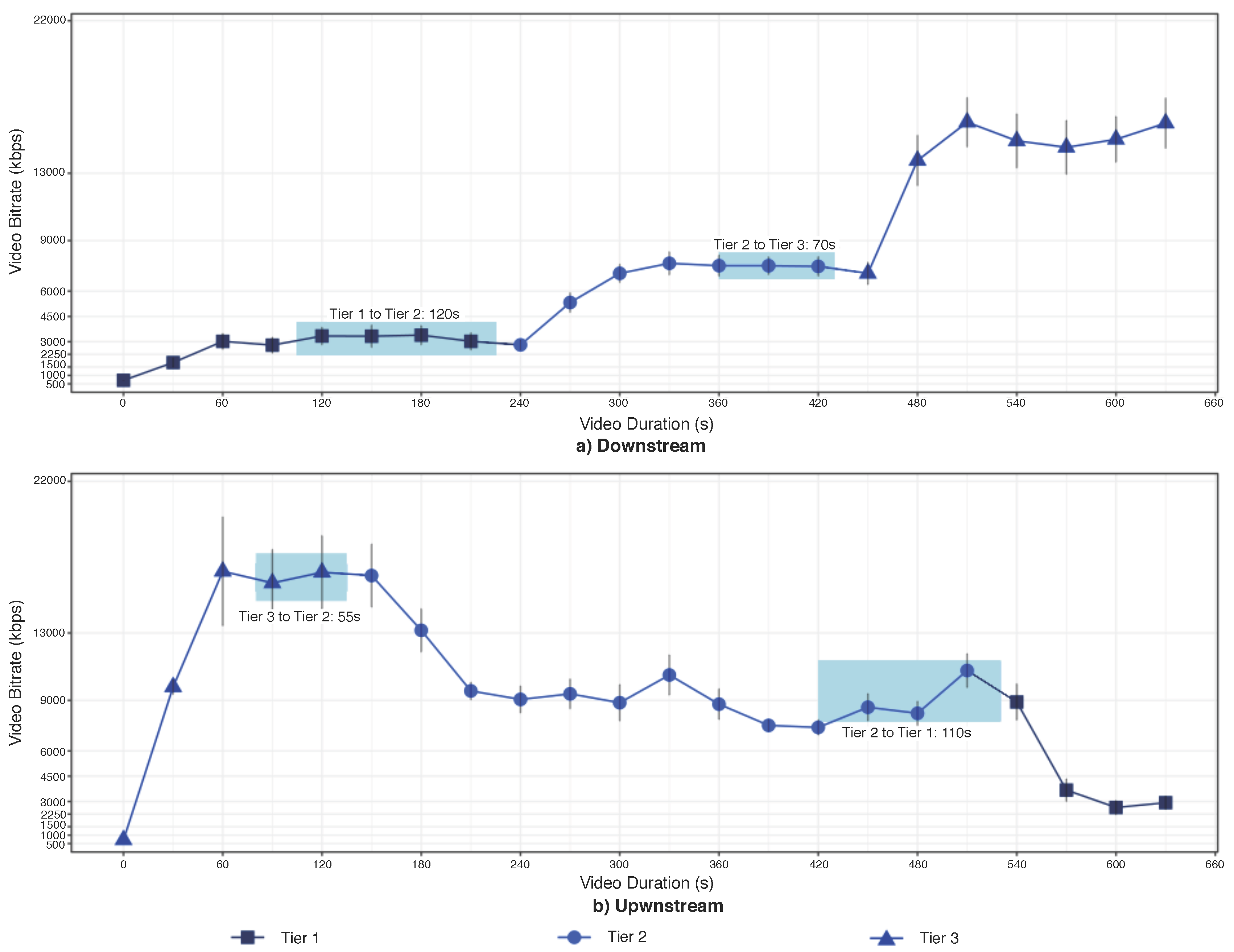

- Bitrate and Bitrate Switch Events: the client player typically starts the video by requesting a low bitrate from the streaming unit and then gradually increases it. The bitrate may later be reduced when degraded network conditions cause the playback rate to exceed the buffering rate. By lowering the bitrate of the video stream, the client player minimizes interruptions during playback. Conversely, as soon as the network conditions improve, the video bitrate can be increased again. However, frequent bitrate switching can degrade the QoE. Therefore, the initial bitrate, the number of bitrate switching events, the average bitrate, and the final bitrate all affect the user experience when consuming video services.

- Playback Start Time: the time that elapses before a video starts to play out, which typically includes the time taken to download the HTML page (or manifest file), load the video player plug-in, and play back the initial part of the video.

- RTT: the time taken for a packet to be sent plus the time taken for the acknowledgment of that packet to be received.
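The bitrate-related indicators listed above can be derived from a per-segment playback trace. The following is a minimal sketch, assuming a hypothetical `Segment` record that stores the requested bitrate and the playback duration of each downloaded segment; neither the record nor the function name comes from the evaluation setup, and the trace values are illustrative only.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Segment:
    """One downloaded video segment (hypothetical trace record)."""
    bitrate_kbps: int   # bitrate of the representation that was requested
    duration_s: float   # playback duration of the segment


def bitrate_metrics(segments: List[Segment]) -> dict:
    """Summarise the bitrate-related QoE indicators of one session."""
    if not segments:
        raise ValueError("trace must contain at least one segment")
    # A switch event is any change of bitrate between consecutive segments.
    switches = sum(
        1 for prev, cur in zip(segments, segments[1:])
        if prev.bitrate_kbps != cur.bitrate_kbps
    )
    total_time = sum(s.duration_s for s in segments)
    # Time-weighted average, so longer segments count proportionally more.
    average = sum(s.bitrate_kbps * s.duration_s for s in segments) / total_time
    return {
        "initial_kbps": segments[0].bitrate_kbps,
        "final_kbps": segments[-1].bitrate_kbps,
        "average_kbps": average,
        "switch_events": switches,
    }


# Illustrative trace: the player ramps up, drops once, then recovers.
trace = [Segment(300, 2.0), Segment(500, 2.0), Segment(1000, 2.0),
         Segment(500, 2.0), Segment(1000, 2.0)]
print(bitrate_metrics(trace))
```

Counting a switch as any change between consecutive segments treats upward and downward switches alike; a study that distinguishes the two would need separate counters.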

4.2. Impact of ABR Schemes Deployed in Different Tiers of the Proposed Architecture

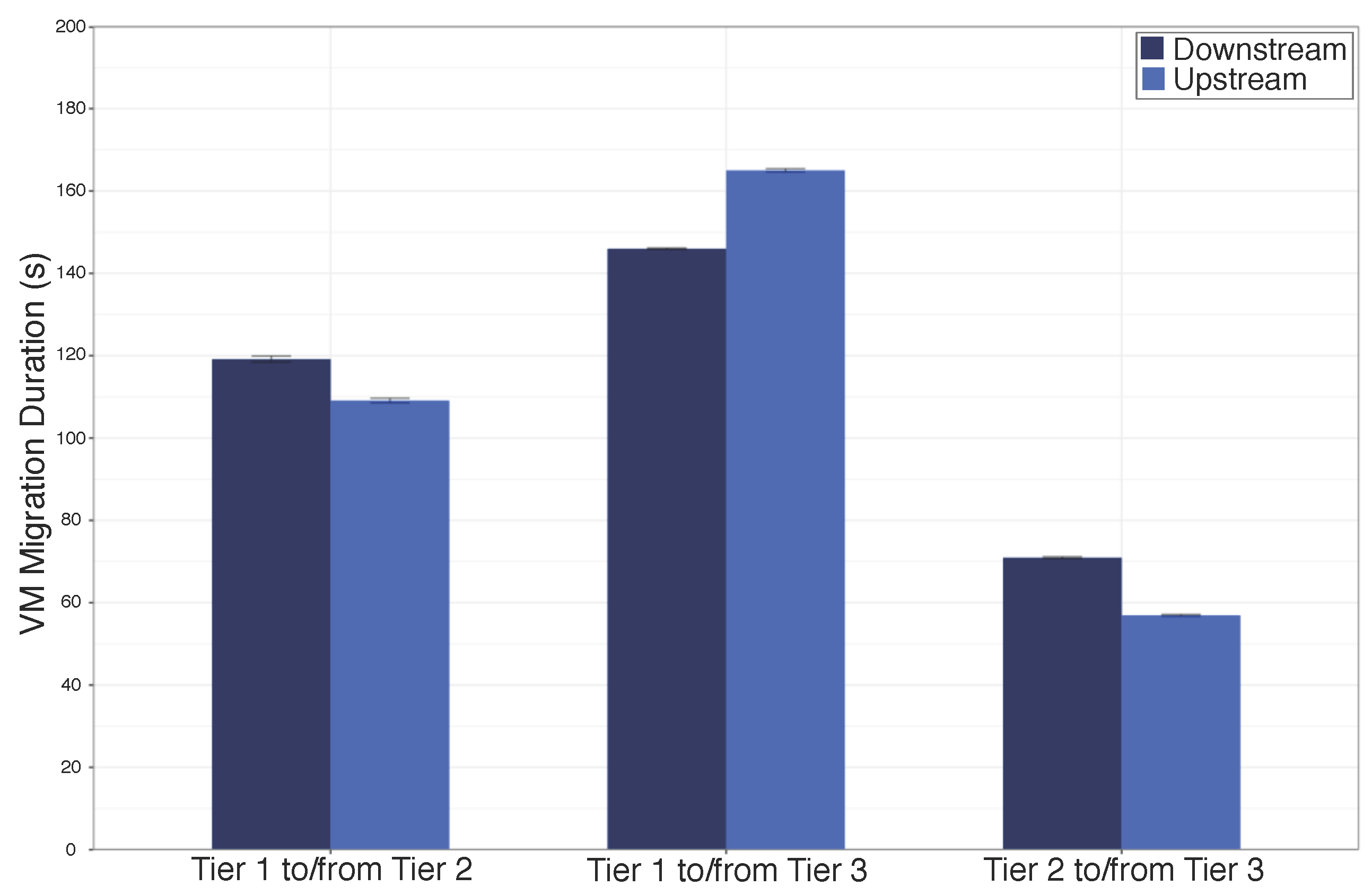

4.3. Impact of Service Migration between Different Tiers of the Proposed Architecture

5. Open Issues about Migration of Video Services from the Cloud Computing to Multi-tier Fog Nodes

- Orchestration: The orchestrator might consider information about QoE, QoS, topology, video content, operator, etc., for service migration, ABR streaming, cache schemes, and other decision-making in such a multi-tier fog architecture. The orchestrator must rely on specific algorithms to decide on service migration in the case of poor QoE, and machine learning could improve its capabilities. Additionally, the orchestrator could combine ABR and cache schemes in each tier to improve the QoE beyond what either ABR or caching can achieve individually. The orchestrator must also deal with users who frequently move between locations in current wireless network scenarios, which hampers the delivery of videos with QoE support. Decision-making schemes based on QoE assessment have a significant advantage in striking a balance between network provisioning and user experience. Therefore, a set of different user preferences, interests, devices, video content, and network issues must be modeled and integrated into the orchestrator to optimize and selectively cache or adapt the video content, and also to migrate services. However, it is challenging to integrate QoE assessment, management, and control schemes into the decision-making schemes. Furthermore, the fog architecture could also consider information from different sources, such as social networks, to find users located in the same area who are sharing similar content.

- Rewarding Methods: It is necessary to design incentive mechanisms that encourage users to participate as fog nodes, since participation consumes computational resources and, consequently, energy. For instance, monetary schemes, coupons, virtual currency, or credit-based incentive mechanisms are the most direct rewarding methods in this case, but factors such as quantization, fairness, and effectiveness must be taken into account. In this context, a model is still lacking to describe the best trade-off among rewarding methods, the overall QoE, and the individual contribution of devices to the multimedia system.

- Security: The authorization of the terminal equipment and the mobile subscriber to become a fog node must respect security goals, such as confidentiality, integrity, availability, and accountability. For a fog node to establish secure communication with a client, some level of pre-provisioning (i.e., bootstrapping) on both nodes is necessary. A minimal set of configuration parameters (e.g., pre-shared keys) must be configured on each relay for each possible peer. This configuration increases the amount of information the relays must keep for security procedures. On the other hand, cloud-assisted security mechanisms could decrease the required local resources, but they could also increase the execution time required by the AAA parties. Consequently, much rests on enabling secure and authenticated multimedia exchanges between fog nodes, which should employ trusted links.

- Copyright: Treating fog nodes as third-party devices has implications for copyright, since they can be viewed as parallel and unprotected communication links and can create threats to the management of digital rights. Therefore, it is also necessary to establish a chain of trust to support this mode of operation in light of copyright enforcement. Alongside the specified security mechanisms, it is necessary to maintain the dependability of the nodes that provide the multimedia content.
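To make the orchestration issue more concrete, the sketch below shows one possible threshold rule for triggering service migration when the QoE is poor. It is not the decision algorithm of the proposed architecture: the tier names, the MOS threshold, the minimum-gain margin, and the function `choose_migration_target` are all assumptions introduced for illustration, and the per-tier MOS predictions are presumed to come from some external QoE model.

```python
from typing import Dict, Optional

MOS_THRESHOLD = 3.0  # assumed: a predicted MOS below this counts as poor QoE
MIN_GAIN = 0.5       # assumed: required improvement, to avoid ping-pong migrations


def choose_migration_target(current_tier: str,
                            predicted_mos: Dict[str, float]) -> Optional[str]:
    """Return the tier to migrate to, or None to keep the service in place."""
    current = predicted_mos[current_tier]
    if current >= MOS_THRESHOLD:
        return None  # QoE is acceptable, so no migration is triggered
    # Candidate is the tier with the highest predicted MOS.
    best_tier = max(predicted_mos, key=predicted_mos.get)
    if best_tier != current_tier and predicted_mos[best_tier] - current >= MIN_GAIN:
        return best_tier
    return None  # no tier is sufficiently better than the current one


# Hypothetical predictions for a service currently running in the cloud.
print(choose_migration_target("cloud", {"cloud": 2.4, "tier-1": 3.1, "tier-2": 3.9}))
```

The minimum-gain margin is one simple way to damp repeated migrations for mobile users; a learned policy, as suggested above, could replace both constants.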

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Video Resolution | Video Bitrate |
|---|---|
| 240p | 300 kbps |
| 360p | 500 kbps |
| HD 480p | 1000 kbps |
| HD 720p | 1500 kbps |
| HD 720p | 2250 kbps |
| Full HD 1080p | 3000 kbps |
| Full HD 1080p | 4500 kbps |
| Quad HD 1440p | 6000 kbps |
| Quad HD 1440p | 9000 kbps |
| 4K UHD 2160p | 13,000 kbps |
| 4K UHD 2160p | 20,000 kbps |
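Assuming the table above lists the representations available to the client player, a simple rate-based ABR rule can be sketched as follows. This is an illustrative throughput-based selection only, not necessarily one of the ABR schemes evaluated in this work; the safety factor and the function name `select_bitrate` are assumptions.

```python
from bisect import bisect_right

# Bitrates (kbps) taken from the table above, in ascending order.
LADDER_KBPS = [300, 500, 1000, 1500, 2250, 3000, 4500, 6000, 9000, 13000, 20000]
SAFETY_FACTOR = 0.8  # assumed headroom below the measured throughput


def select_bitrate(throughput_kbps: float) -> int:
    """Pick the highest ladder bitrate that fits the discounted throughput."""
    budget = throughput_kbps * SAFETY_FACTOR
    # Index of the first rung that is strictly larger than the budget.
    idx = bisect_right(LADDER_KBPS, budget)
    # Fall back to the lowest rung when even that exceeds the budget.
    return LADDER_KBPS[max(idx - 1, 0)]


for measured in (200, 4000, 30000):
    print(measured, "kbps ->", select_bitrate(measured), "kbps")
```

Discounting the measured throughput leaves headroom for short-term fluctuations, which tends to reduce the number of bitrate switch events at the cost of a lower average bitrate.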

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rosário, D.; Schimuneck, M.; Camargo, J.; Nobre, J.; Both, C.; Rochol, J.; Gerla, M. Service Migration from Cloud to Multi-tier Fog Nodes for Multimedia Dissemination with QoE Support. Sensors 2018, 18, 329. https://doi.org/10.3390/s18020329
