1. Introduction

Bearings are critical components of rotating machines, and their health condition has a great impact on production. However, because of non-linear factors such as friction, clearance and stiffness, the vibration signals of bearings acquired in real application scenarios are non-linear and unstable, which makes bearing fault diagnosis difficult [1].

The general fault diagnosis process involves three main steps, namely signal acquisition and processing, fault feature extraction and fault feature classification [2]. Sensors acquire noisy signals, and signal processing techniques are subsequently applied to improve the signal-to-noise ratio [3]. In particular, ideal fault feature extraction expresses the feature information of the filtered signals comprehensively and efficiently, and it is the basis for an accurate fault feature classification. Therefore, reasonable and efficient fault feature extraction plays an important role in fault diagnosis. Current fault feature extraction methods mainly include time domain, frequency domain and time-frequency domain analysis [4].

Time domain analysis is one of the earliest methods to have been studied and applied. It calculates various statistical parameters in the time domain, for instance peak amplitude, kurtosis and skewness [5,6,7], to construct feature vectors. Frequency domain analysis first transforms signals from the time domain into the frequency domain, mainly by means of the Fourier Transform (FT) [8]; the periodic, frequency and distribution features of the signals are then extracted with methods such as cepstrum analysis and envelope spectrum analysis to construct feature vectors [9,10].
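As an illustration of the time domain approach, the sketch below computes the three statistics named above for one signal with NumPy only. The function name and the use of non-excess kurtosis (about 3 for Gaussian noise) are choices made for this example, not taken from the cited works.

```python
import numpy as np

def time_domain_features(x):
    """Return [peak amplitude, kurtosis, skewness] of a 1-D signal."""
    x = np.asarray(x, dtype=float)
    centered = x - x.mean()
    std = centered.std()                          # population standard deviation
    peak = np.max(np.abs(x))                      # peak amplitude
    skewness = np.mean(centered ** 3) / std ** 3
    kurtosis = np.mean(centered ** 4) / std ** 4  # non-excess kurtosis (about 3 for Gaussian noise)
    return np.array([peak, kurtosis, skewness])
```

The returned vector always has three entries whatever the signal looks like, which is the fixed-dimension property criticised later in this paper. A constant signal has zero standard deviation and would need special handling.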

However, time domain or frequency domain analysis extracts information only in the corresponding domain, so the information in the other domain is lost. As research deepened, time-frequency domain fault feature extraction methods were developed. They extract both time and frequency information and have shown superiority in analyzing non-linear and unstable signals.

As a typical time-frequency analysis method, the Short Time Fourier Transform (STFT) [11] improves the analysis capability for unstable signals by introducing a fixed-width time window function. However, a fixed-width window cannot guarantee optimal time and frequency resolution simultaneously. The Wavelet Transform (WT) [12] introduces time and frequency scale coefficients to overcome this drawback of STFT. WT is based on the theory of inner product mapping, and a reasonable basis function is the key to its effectiveness; however, it is difficult to select a proper basis function. Therefore, to improve the adaptive analysis capability for signals, Empirical Mode Decomposition (EMD) [13] and Local Mean Decomposition (LMD) [14] were successively studied and applied. According to the local characteristics of the signals themselves, EMD and LMD adaptively decompose a signal into various components whose statistical characteristics are better suited to later analysis. Compared with each other, EMD is a mature tool with a long history of study and use, while LMD has an improved decomposition process and gives better decomposition results with physical explanations [15].
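The fixed-window trade-off of STFT can be seen in a minimal NumPy sketch. It assumes a Hann window and a magnitude-only output; the function name and parameters (`win_len`, `hop`) are illustrative and not taken from the cited work.

```python
import numpy as np

def stft_magnitude(x, fs, win_len, hop):
    """Magnitude STFT with one fixed-length Hann window for the whole signal.

    Returns (freqs, times, mag) with mag of shape (n_freqs, n_frames).
    """
    x = np.asarray(x, dtype=float)
    window = np.hanning(win_len)
    starts = np.arange(0, len(x) - win_len + 1, hop)
    frames = np.stack([x[s:s + win_len] * window for s in starts])
    mag = np.abs(np.fft.rfft(frames, axis=1)).T    # (n_freqs, n_frames)
    freqs = np.fft.rfftfreq(win_len, d=1.0 / fs)   # bin spacing is fs / win_len
    times = (starts + win_len / 2) / fs            # frame centres in seconds
    return freqs, times, mag
```

Because `win_len` is the same for every frame, doubling it halves the bin spacing `fs / win_len` but also doubles the time span of each frame, so time and frequency resolution cannot both be improved at once.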

In recent years, EMD and LMD have been extensively studied and implemented. Mejia-Barron et al. [16] developed a method based on EMD to decompose signals and extract features, and completed the diagnosis of winding faults. Saidi et al. [17] introduced a combined application of the bi-spectrum and EMD to detect bearing faults. Cheng et al. [18] combined Ensemble Empirical Mode Decomposition (EEMD) and entropy fusion to extract fault features for planetary gearboxes, and then implemented fault diagnosis successfully. Yi et al. [19] also utilized EEMD to pre-process signals for bearing fault diagnosis. Liu and Han et al. [20] applied LMD and multi-scale entropy to extract fault features and analyzed faults successfully. Yang et al. [21] proposed an ensemble local mean decomposition method and applied it to rub-impact fault diagnosis of rotor systems. Han and Pan et al. [22] integrated LMD, sample entropy and energy ratio to process vibration signals and realized fault feature extraction and fault diagnosis of rolling element bearings. Yasir and Koh et al. [23] adopted LMD and multi-scale permutation entropy to realize bearing fault diagnosis. Guo et al. [24] studied an improved fault diagnosis method for gearboxes combining LMD and the synchrosqueezing transform.

Fault feature classification is implemented after fault feature extraction. Nowadays, shallow machine learning methods are extensively used to solve the classification problem. Support Vector Machine (SVM), Artificial Neural Network and Fuzzy Logic System are widely applied in condition monitoring and fault diagnosis [25]. In particular, SVM is based on statistical learning theory and the principle of structural risk minimization, and it performs well on practical problems with a small number of non-linear samples. To solve multi-class classification problems with SVM, Cherkassky [26] proposed a one-against-all (oaa) strategy, transforming an $N$-class classification problem into $N$ binary classification problems. Kressel [27] transformed an $N$-class classification problem into $N(N-1)/2$ binary classification problems, namely the one-against-one (oao) strategy. Wu et al. [28] adopted SVM for diagnosis, extracting fault features by analyzing the full spectrum. Saimurugan et al. [29] improved diagnosis performance by integrating SVM and a decision tree. Santos et al. [30] selected SVM for classification in wind turbine fault diagnosis after several trials with different kernels.
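The one-against-one strategy can be sketched as majority voting over all class pairs. In this sketch `binary_decide` is a hypothetical callable standing in for a trained pairwise SVM; ties are broken by the order of `classes`, which is an arbitrary choice for the example.

```python
from collections import Counter
from itertools import combinations

def one_against_one_predict(x, classes, binary_decide):
    """Predict a class by majority vote over the N(N-1)/2 pairwise classifiers.

    binary_decide(x, a, b) is a hypothetical callable that returns the winner
    (a or b) of the binary classifier trained on classes a and b.
    """
    votes = Counter()
    for a, b in combinations(classes, 2):
        votes[binary_decide(x, a, b)] += 1
    # max() keeps the first class with the highest vote count
    return max(classes, key=lambda c: votes[c])
```

With the four fault modes used later in this paper (normal, ball, inner race and outer race), oao trains 4 × 3 / 2 = 6 binary classifiers, while oaa trains 4.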

Researchers all over the world have carried out extensive studies on bearing fault diagnosis. To the best of our knowledge, although various solutions have been investigated from different aspects, fault diagnosis methods still need further study. The main problems addressed in this paper are summarized as follows:

- (1)

Vibration signals acquired in real application scenarios are non-linear and unstable, and their statistical characteristics are time-varying. Hence, it is difficult to extract effective and comprehensive fault features in the time domain or the frequency domain alone.

- (2)

Conventional fault feature extraction methods take the overall characteristics of signals into account by calculating statistical parameters to construct feature vectors with fixed dimensions; however, local detailed characteristics are neglected. Because the vectors have a fixed dimension, the fault information they contain may be insufficient or redundant under different working conditions, which lowers the reliability and robustness of fault feature extraction. Meanwhile, data-driven classifiers are sensitive to the classification features, and minor changes in these features may degrade their performance [31].

In order to improve fault diagnosis performance, this paper proposes Self-adaptive Spectrum Analysis (SSA) to extract fault features adaptively and to construct feature vectors of unfixed dimension according to the local characteristics of signals. SSA is then implemented within the designed framework. Signals are first decomposed to obtain components with better analyzability; LMD and EEMD are both used, decomposing signals into components from different analysis aspects. SSA then extracts fault features adaptively, and feature vectors with unfixed dimensions are constructed. Finally, SVM is selected to classify the fault features, considering its inherent advantages when only a small number of training samples is available.

2. Methodology

2.1. Self-Adaptive Spectrum Analysis

Self-adaptive Spectrum Analysis (SSA) is proposed to address two problems of conventional feature extraction methods: they neglect the local details of signals, and, because their feature vectors have a fixed dimension, the fault information may be redundant or insufficient. With SSA, feature vectors of unfixed dimension are constructed by adaptively extracting the local characteristics of signals.

First, a number of signals corresponding to different fault categories are selected. To implement SSA efficiently, the Fast Fourier Transform (FFT) is used to transform the signals into the frequency domain, giving spectra that are easier to read. An overall frequency window is then set for all spectra according to the fluctuations in the spectra, and the local feature information inside the frequency window is extracted to construct feature vectors.

To implement the proposed SSA, the following definitions are introduced:

Definition 1. Differential frequency.

The differential frequency $\Delta f$ is the minimum frequency unit in SSA. Normally, feature information is extracted at the frequencies $n\Delta f$ ($n = 1, 2, 3, \ldots$), where $\Delta f$ is calculated as follows:

First, the maximum amplitude in each spectrum and its corresponding frequency are found. These frequencies are denoted $f_1, f_2, f_3, \ldots, f_m$, where $m$ is the number of signals. The selected signals must include more than two fault categories.

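Second, the frequencies are arranged into vectors according to the categories of the samples:

$$F_j = [f_{j,1}, f_{j,2}, \ldots, f_{j,m/k}], \quad j = 1, 2, \ldots, k \tag{1}$$

where $k$ is the number of fault categories and $f_{j,i}$ is the $i$-th peak frequency in category $j$. Here, we assume that all categories contain the same number of signals, $m/k$. Then, the average of all the elements in each vector is calculated and denoted $\bar{f}_1, \bar{f}_2, \ldots, \bar{f}_k$, respectively, and the vector $\bar{F} = [\bar{f}_1, \bar{f}_2, \ldots, \bar{f}_k]$ is constructed.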

Third, the minimum frequency $f_{\min}$ and the maximum frequency $f_{\max}$ are selected in the vector of category-average frequencies $\bar{F}$. Then, among the elements of $\bar{F}$ taken in ascending order, the two neighboring frequencies with the largest difference between them are selected; the lower one is denoted $f_a$ and the higher one $f_b$.

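Finally, the minimum frequency $f_{\min}$, the neighboring pair $f_a$ and $f_b$, and the maximum frequency $f_{\max}$ are arranged in ascending order as $g_1 \le g_2 \le g_3 \le g_4$, and the absolute differences between every two neighboring values are calculated. The minimum non-zero difference is taken as the value of $\Delta f$:

$$\Delta f = \min\left\{\, |g_{i+1} - g_i| \;:\; i = 1, 2, 3,\ |g_{i+1} - g_i| \neq 0 \,\right\} \tag{2}$$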
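The four steps of Definition 1 can be sketched in NumPy as follows. This is an illustrative reading, not the authors' code: it assumes "neighboring" refers to the category averages sorted in ascending order, and the function name is hypothetical.

```python
import numpy as np

def differential_frequency(peak_freqs, labels):
    """Sketch of Definition 1: differential frequency from spectral peak frequencies.

    peak_freqs: frequency of the maximum amplitude in each spectrum (f_1 ... f_m)
    labels:     fault category of each spectrum (more than two categories)
    """
    peak_freqs = np.asarray(peak_freqs, dtype=float)
    labels = np.asarray(labels)
    # Steps 1-2: average peak frequency of each category, sorted ascending
    f_bar = np.sort([peak_freqs[labels == c].mean() for c in np.unique(labels)])
    # Step 3: extreme values and the neighbouring pair with the largest gap
    f_min, f_max = f_bar[0], f_bar[-1]
    i = int(np.argmax(np.diff(f_bar)))
    f_a, f_b = f_bar[i], f_bar[i + 1]
    # Step 4: sort the four values; the smallest non-zero neighbouring gap is df
    gaps = np.abs(np.diff(np.sort([f_min, f_a, f_b, f_max])))
    return float(gaps[gaps > 0].min())
```

For three categories whose average peak frequencies are 100, 160 and 400 Hz, the largest gap lies between 160 and 400 Hz, the sorted values are 100, 160, 400 and 400 Hz, and the smallest non-zero gap gives $\Delta f = 60$ Hz.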

Definition 2. Frequency Window.

The frequency window $[f_L, f_R]$ is a specific frequency section for extracting feature information, where $f_L$ is the left boundary and $f_R$ is the right boundary. The frequency window has fixed boundaries, and feature information is extracted inside the window. The boundaries are calculated according to Equations (3) and (4), in which $\lfloor \cdot \rfloor$ denotes the round-down (floor) function and $\lceil \cdot \rceil$ denotes the round-up (ceiling) function.

Definition 3. Tolerance.

The tolerance $\delta$ defines, for a section centered at $n\Delta f$ (where $\Delta f$ is the differential frequency), the searching section $(n\Delta f - \delta, n\Delta f + \delta]$ with $\delta$ as its radius; the maximum amplitude at any frequency within this section is regarded as the amplitude value at $n\Delta f$. $\delta$ is calculated according to Equation (5).

Definition 4. Peak value ratio coefficient.

The peak value ratio coefficient $\lambda$ describes how prominent a peak amplitude is. It is used to judge whether an amplitude value is normal, and it is also used to construct the fault feature vectors. $\lambda$ is calculated as follows:

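First, the average of all the amplitude values in the frequency window $[f_L, f_R]$ is calculated and denoted $\bar{A}$, and the maximum amplitude in the searching section $(n\Delta f - \delta, n\Delta f + \delta]$ (with $\Delta f$ the differential frequency and $\delta$ the tolerance) is selected and denoted $A_n$. Finally, the peak value ratio coefficient of the $n$-th section is calculated as

$$\lambda_n = \frac{A_n}{\bar{A}} \tag{6}$$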
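Definitions 3 and 4 can be sketched together: each searching section yields its maximum amplitude, and that maximum is divided by the window-average amplitude, taking $\lambda_n = A_n/\bar{A}$ as the peak-to-average ratio described in Definition 4. The boundaries $f_L$, $f_R$ and the tolerance $\delta$ are passed in as inputs (they would come from Equations (3) to (5)). Two further assumptions are made for illustration only: the section centers are the multiples of $\Delta f$ lying inside the window, and a section that contains no spectral line is skipped.

```python
import numpy as np

def section_peak_ratios(freqs, amps, delta_f, f_l, f_r, delta):
    """Sketch of Definitions 3-4 on one amplitude spectrum.

    Returns (centres n*df, peak frequencies f'_n, ratios lambda_n = A_n / A_bar).
    """
    freqs = np.asarray(freqs, dtype=float)
    amps = np.asarray(amps, dtype=float)
    in_window = (freqs >= f_l) & (freqs <= f_r)
    a_bar = amps[in_window].mean()                       # average amplitude in the window
    n_first = int(np.ceil(f_l / delta_f))                # first centre inside the window
    n_last = int(np.floor(f_r / delta_f))                # last centre inside the window
    centres, peak_freqs, ratios = [], [], []
    for n in range(n_first, n_last + 1):
        c = n * delta_f
        sec = (freqs > c - delta) & (freqs <= c + delta)  # half-open (c - delta, c + delta]
        if not sec.any():
            continue                                      # no spectral line in this section
        i = np.flatnonzero(sec)[np.argmax(amps[sec])]     # index of the section maximum A_n
        centres.append(c)
        peak_freqs.append(freqs[i])
        ratios.append(amps[i] / a_bar)
    return np.array(centres), np.array(peak_freqs), np.array(ratios)
```

The length of the returned ratio vector depends on the window width and on $\Delta f$, which is how SSA produces feature vectors of unfixed dimension.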

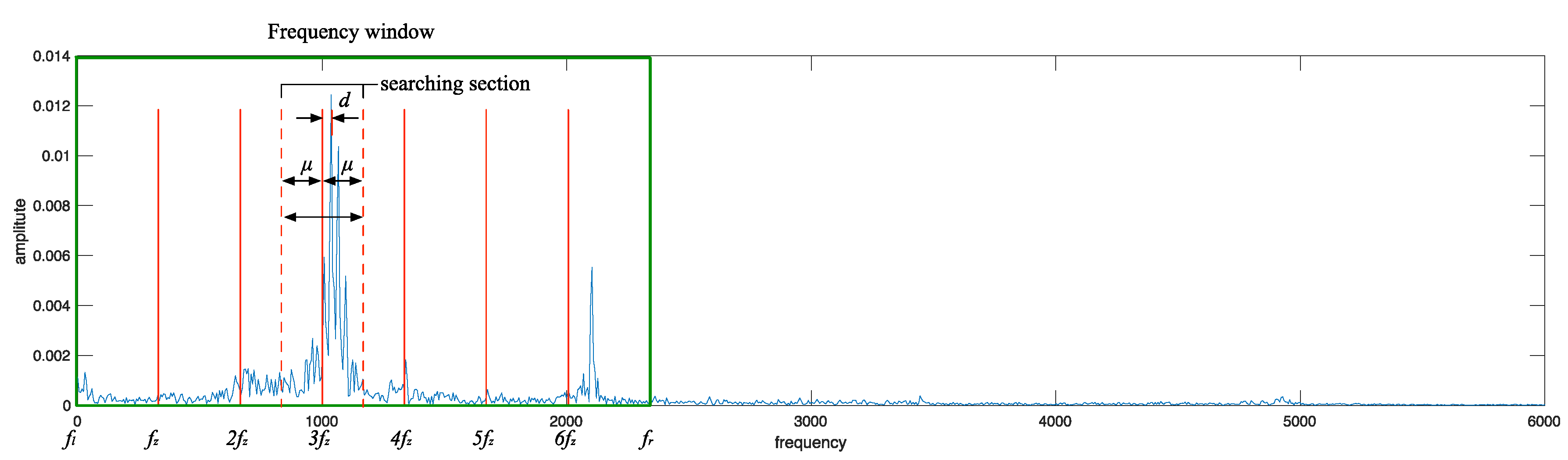

Figure 1 illustrates the definitions given above.

With reference to Figure 1, SSA is implemented on each spectrum as follows:

- (1)

Calculate the differential frequency $\Delta f$ and the boundaries $f_L$ and $f_R$, which determine the frequency window;

- (2)

Calculate all the section centers $n\Delta f$ inside the frequency window and take the tolerance $\delta$ as the interval on each side of every center to determine the searching sections;

- (3)

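Select the maximum amplitude $A_n$ in each searching section and its frequency $f'_n$; calculate the absolute frequency interval $d_n = |f'_n - n\Delta f|$ between this frequency and the section center $n\Delta f$, together with the peak value ratio coefficient $\lambda_n$, to obtain the frequency interval vector $D = [d_1, d_2, \ldots]$ and the peak value ratio coefficient vector $\Lambda = [\lambda_1, \lambda_2, \ldots]$;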

- (4)

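Set a threshold value $\lambda_T$ for the peak value ratio coefficient $\lambda$; $\lambda_T$ can be optimized automatically according to the overall accuracy. Each $\lambda_n$ is compared with $\lambda_T$ to judge whether an anomaly exists in the corresponding section, and a section that fails this check is regarded as an abnormal one;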

- (5)

If an anomaly is found, determine whether the frequencies corresponding to all the abnormal sections lie on the same side of their section centers $n\Delta f$ ($n = 1, 2, 3, \ldots$) along the frequency axis. If they do, select the minimum frequency interval $d_{\min}$, shift the spectrum in the opposite direction by $d_{\min}$, and then repeat steps (1) to (3). If they do not, go directly to step (6);

- (6)

The peak value ratio coefficient vector $\Lambda$ is taken as the fault feature vector extracted from the spectrum.
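Steps (4) and (5) amount to a small decision rule, sketched below. The direction of the comparison with $\lambda_T$ is not fixed by the text, so it is exposed as the hypothetical parameter `abnormal_if_above`. Taking $d_{\min}$ over the abnormal sections only is also an assumption made for this example. The function returns the amount to add to the spectrum's frequency axis, with 0.0 meaning "go to step (6)".

```python
import numpy as np

def spectrum_shift(centres, peak_freqs, ratios, lambda_t, abnormal_if_above=True):
    """Sketch of SSA steps (4)-(5): return the frequency shift for the spectrum.

    A return value of 0.0 means no shift is applied (proceed to step (6)).
    """
    centres = np.asarray(centres, dtype=float)
    ratios = np.asarray(ratios, dtype=float)
    offsets = np.asarray(peak_freqs, dtype=float) - centres  # signed f'_n - n*df
    abnormal = ratios > lambda_t if abnormal_if_above else ratios < lambda_t
    if not abnormal.any():
        return 0.0                                   # no abnormal section
    side = np.sign(offsets[abnormal])
    if not (np.all(side > 0) or np.all(side < 0)):
        return 0.0                                   # peaks not all on the same side
    d_min = np.abs(offsets[abnormal]).min()          # minimum frequency interval
    return -d_min if side[0] > 0 else d_min          # shift in the opposite direction
```

A shifted spectrum could then be obtained as `freqs + spectrum_shift(...)` before repeating steps (1) to (3).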

2.2. Framework Construction of Fault Diagnosis

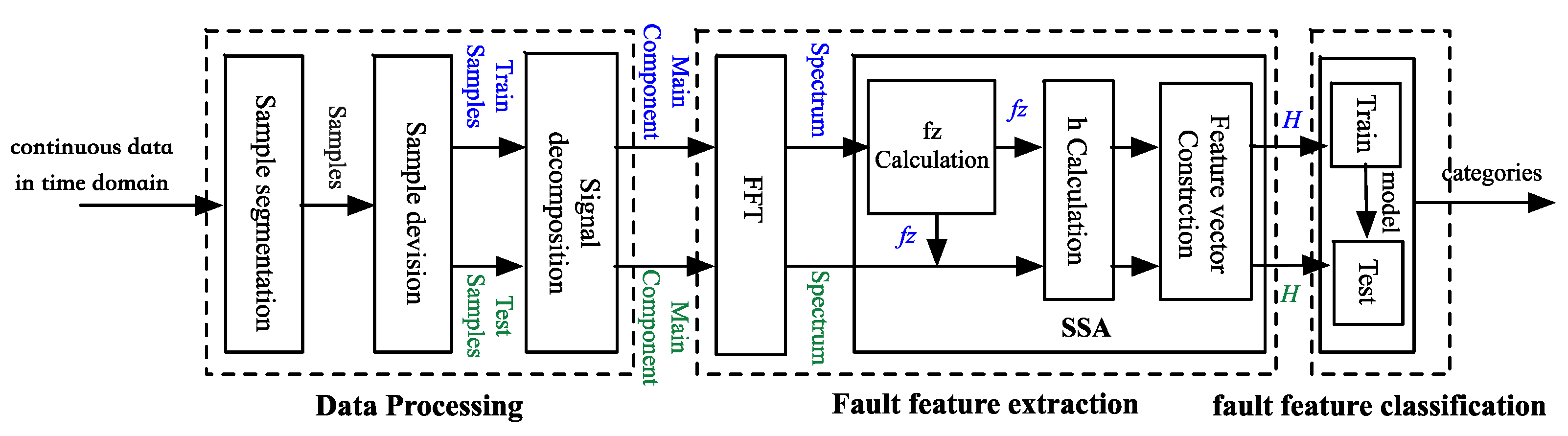

The overall framework of the proposed SSA-based fault diagnosis method is shown in Figure 2.

The proposed fault diagnosis method includes three parts, namely data processing, fault feature extraction and fault feature classification.

2.2.1. Data Processing

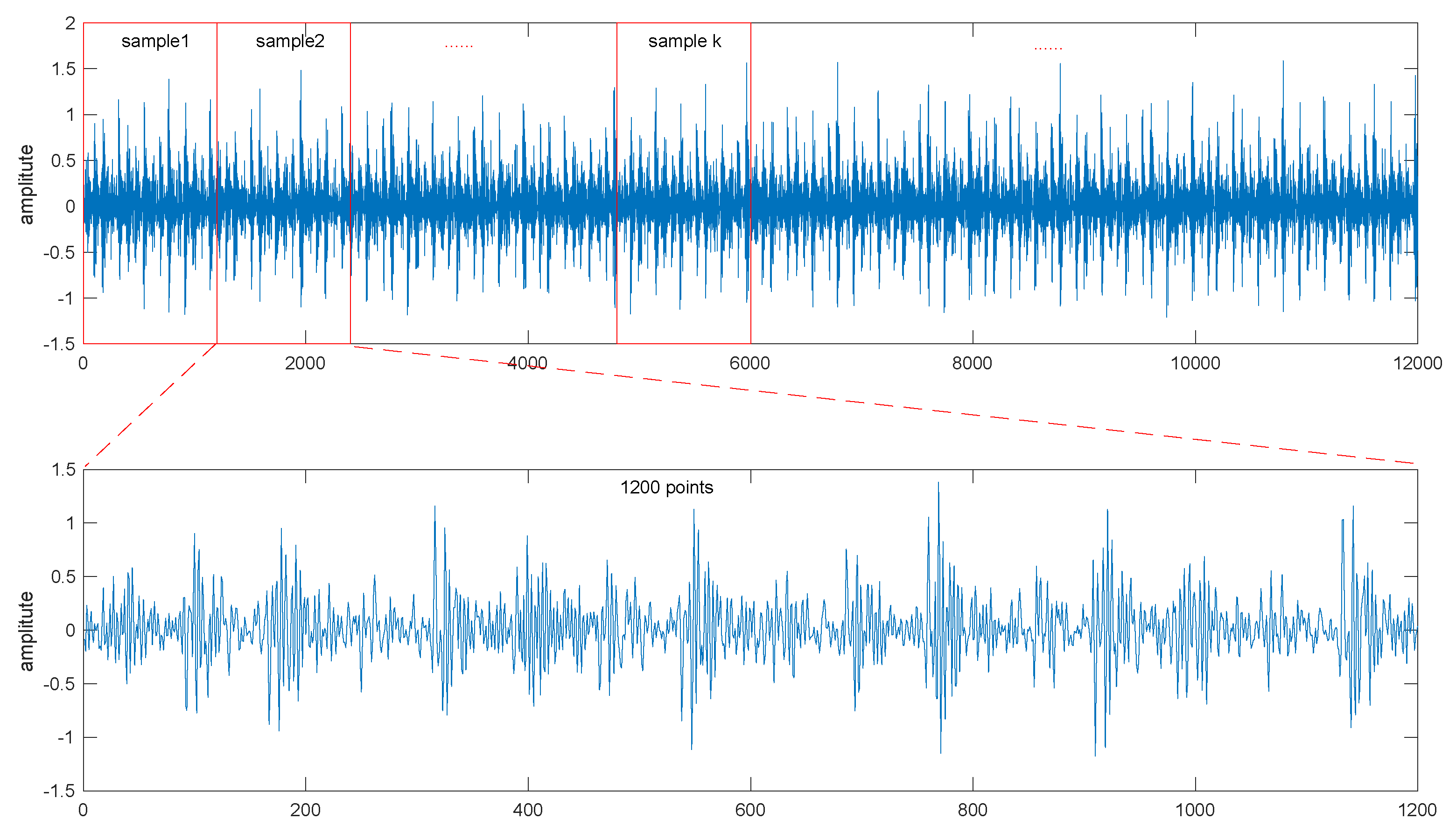

As shown in Figure 3, a signal segment containing 120,000 points is selected and divided into 100 parts of the same length, so that 100 samples of 1,200 points each are extracted from one signal segment. These 100 samples are then separated into a training sample set and a test sample set.
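The segmentation step can be sketched as a reshape followed by a split. The text does not give the training/test ratio or say whether the split is random, so the 50/50 random split (`n_train=50`, `seed`) below is an assumption for illustration.

```python
import numpy as np

def segment_and_split(signal, n_samples=100, n_train=50, seed=0):
    """Cut a signal into equal-length samples and split them into training/test sets.

    n_train and the random split are assumptions, not values from the text.
    """
    signal = np.asarray(signal)
    length = len(signal) // n_samples                 # 120,000 / 100 = 1,200 points
    samples = signal[: length * n_samples].reshape(n_samples, length)
    order = np.random.default_rng(seed).permutation(n_samples)
    return samples[order[:n_train]], samples[order[n_train:]]
```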

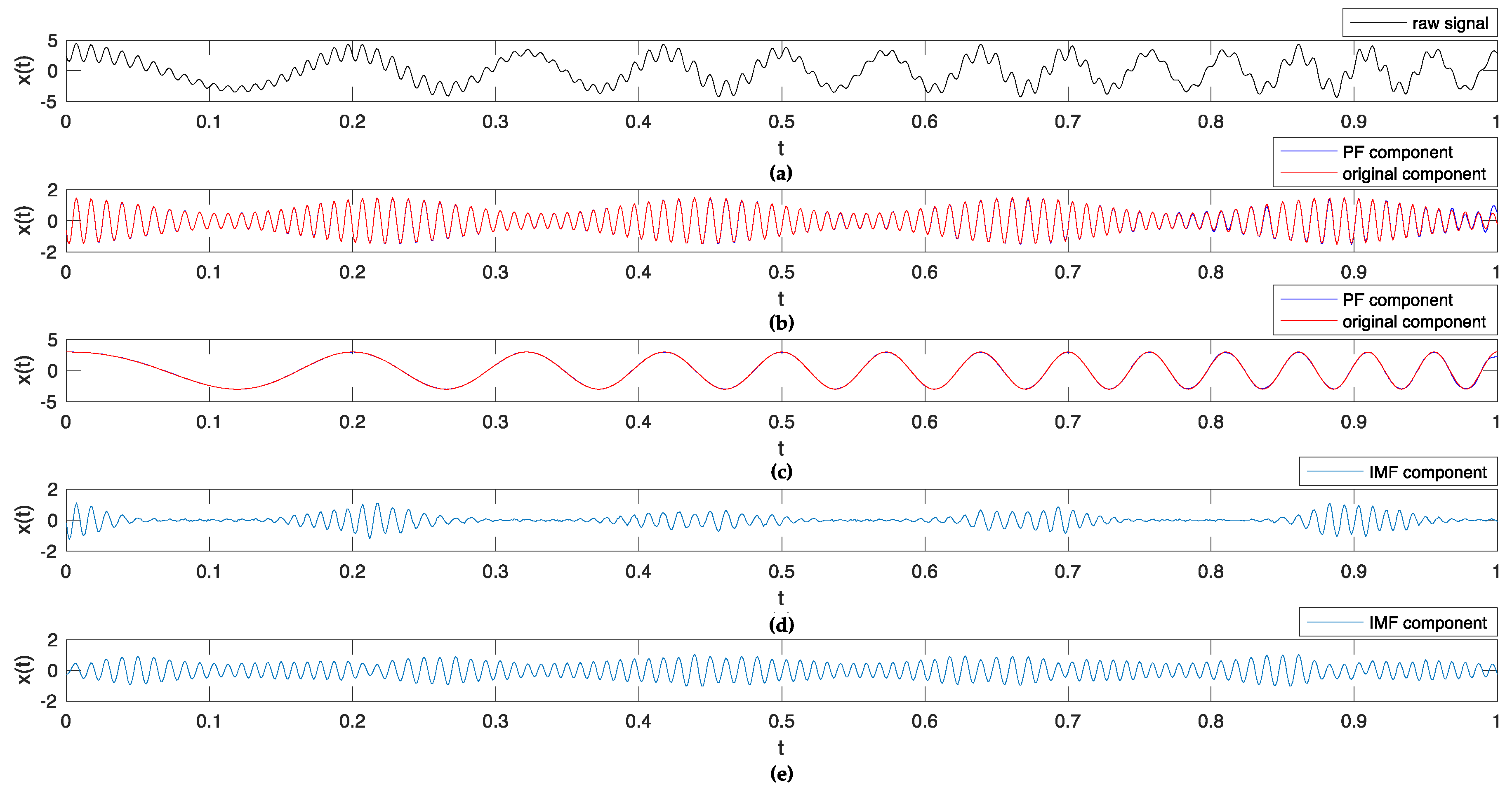

Each sample is decomposed into a set of components with better analyzability by a time-frequency analysis method; LMD and EEMD are two commonly used ones. The first component of each set is chosen for fault feature extraction because it contains the main part of the energy.
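Whether the first component really carries most of the energy can be checked with the energy share of each component. The sketch assumes the components (product functions from LMD or intrinsic mode functions from EEMD) are already available as rows of an array; the decomposition itself is not shown, and the function name is hypothetical.

```python
import numpy as np

def component_energy_ratios(components):
    """Share of the total energy carried by each decomposed component.

    components: array of shape (n_components, n_points).
    """
    comps = np.asarray(components, dtype=float)
    energy = np.sum(comps ** 2, axis=1)   # energy of each component
    return energy / energy.sum()
```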

2.2.2. Fault Feature Extraction

FFT is used to transform the decomposed component into the frequency domain, and SSA is then implemented to extract fault features. The components of the training samples are first used to calculate the differential frequency $\Delta f$, and this $\Delta f$ is then used for both the training samples and the test samples to extract fault features.
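A minimal sketch of the FFT step, producing the one-sided amplitude spectrum on which SSA operates. The 12 kHz default matches the sampling frequency of the data set described in Section 2.3.1; the amplitude scaling (so that a sinusoid of amplitude $a$ appears with height $a$) is a common convention chosen here, not something the text specifies.

```python
import numpy as np

def amplitude_spectrum(x, fs=12_000.0):
    """One-sided amplitude spectrum of a real signal computed with the FFT."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    amps = np.abs(np.fft.rfft(x)) / n
    # Double every bin except DC (and the Nyquist bin when n is even)
    # to fold in the negative-frequency half of the spectrum.
    upper = n // 2 if n % 2 == 0 else None
    amps[1:upper] *= 2
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return freqs, amps
```

For a 1,200-point sample at 12 kHz the bin spacing is 12,000 / 1,200 = 10 Hz, which bounds how finely spectral peaks can be located.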

2.2.3. Fault Feature Classification

The fault feature vectors are classified into different fault patterns. Vectors extracted from the training samples are used to train the classification model, and its parameters are tuned to optimize it. SVM is selected here because of its good classification performance with small sample sizes. Finally, the well-trained model outputs the predicted categories.

2.3. Experiment Preparation

2.3.1. Data Selection and Processing

Vibration signals acquired from bearings are used for validation. In this paper, selected bearing data published by Case Western Reserve University were used [32]. Single-point faults are introduced at different locations of the test bearings (ball, inner race and outer race) to simulate different kinds of faults. Vibration signals of the different fault kinds with different fault severities are collected under different loads to construct the experimental data set.

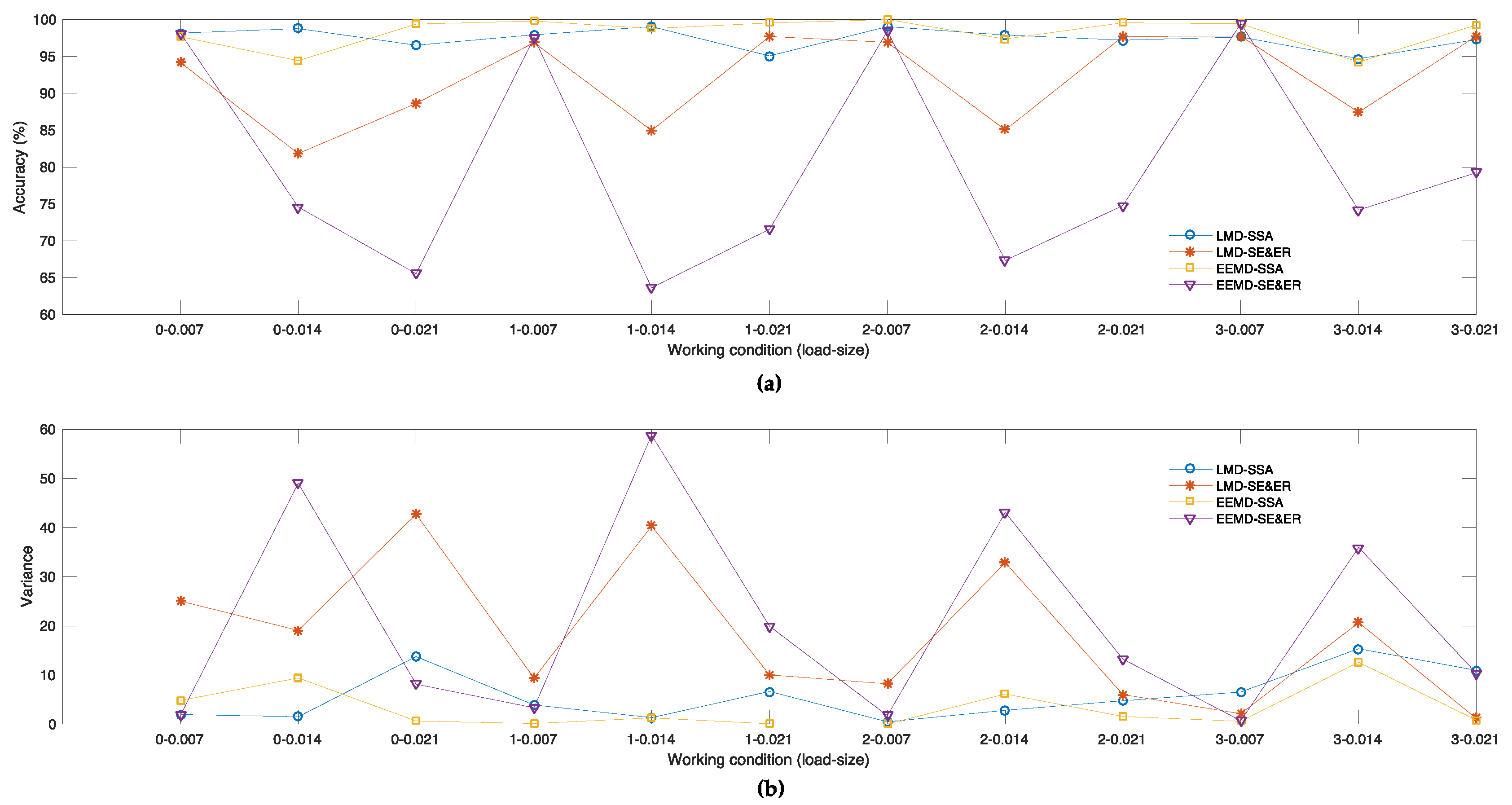

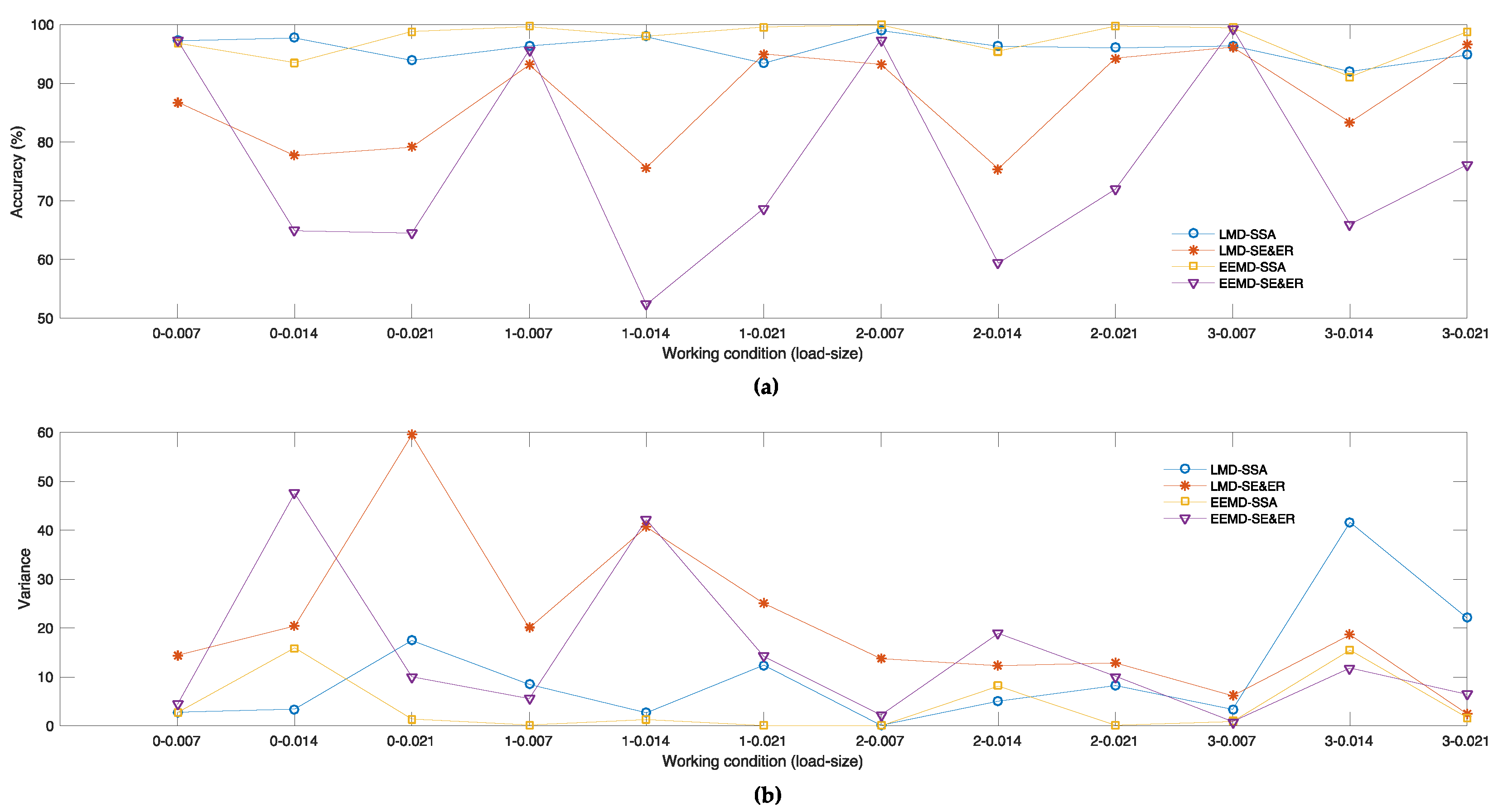

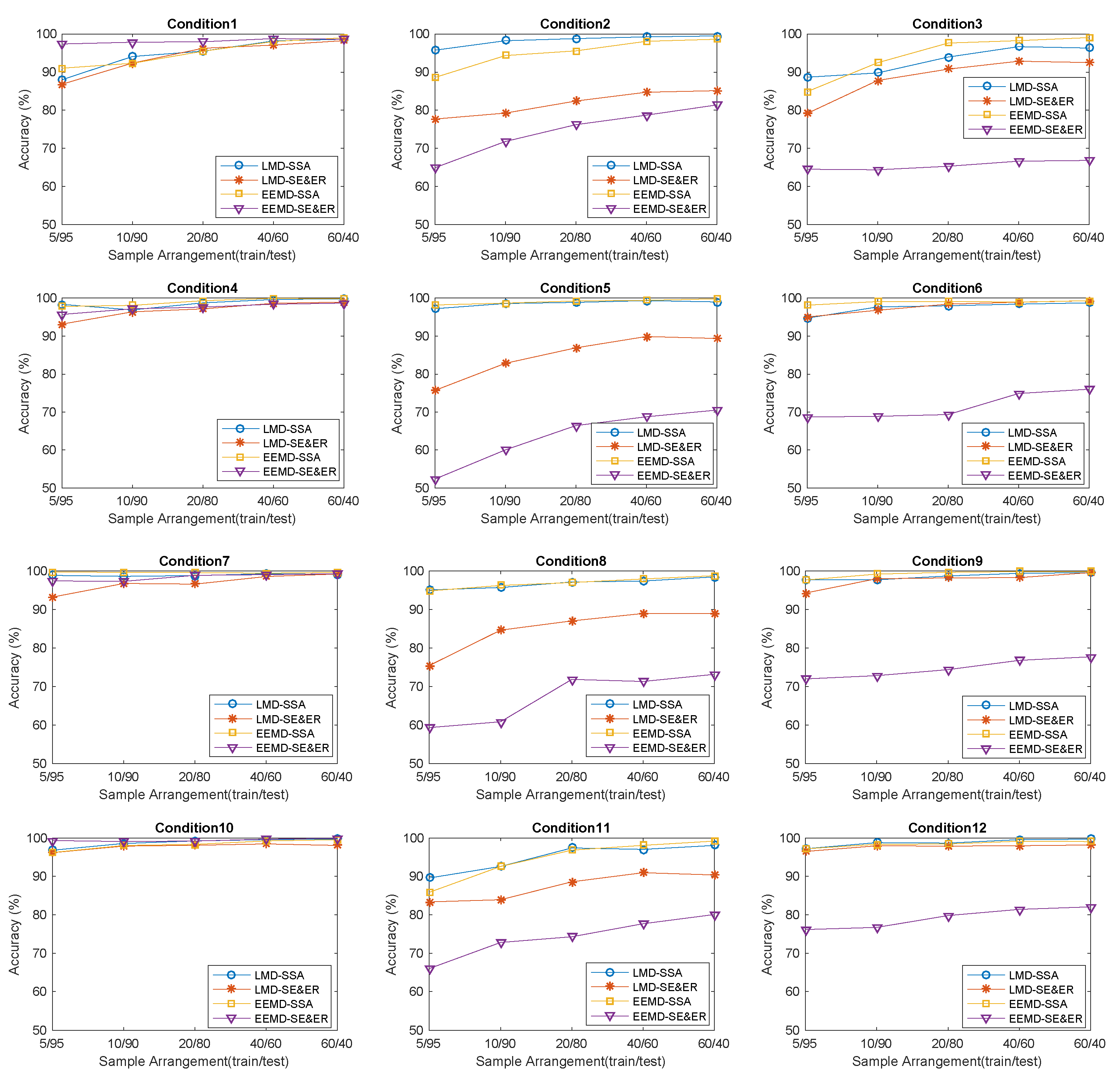

The data set consists of vibration data collected on SKF bearings with a sampling frequency of 12 kHz. Combining four loads (0, 1, 2 and 3 hp) with three fault severities (fault diameters of 0.007, 0.014 and 0.021 inch) gives 12 different working conditions.

Under each working condition, four fault modes (normal, ball fault, inner race fault and outer race fault) are simulated, and the four corresponding time-varying signals are collected. Each signal is processed with the procedure shown in Figure 3 to extract 100 samples, from which 100 feature vectors are subsequently constructed. Eventually, 400 feature vectors are obtained under every working condition.
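The bookkeeping of the experimental design can be written out explicitly. The constant names below are illustrative; the values are the ones stated in the text.

```python
from itertools import product

LOADS_HP = (0, 1, 2, 3)
FAULT_DIAMETERS_IN = (0.007, 0.014, 0.021)
FAULT_MODES = ("normal", "ball fault", "inner race fault", "outer race fault")
SAMPLES_PER_SIGNAL = 100

# Each (load, fault diameter) pair is one working condition
conditions = list(product(LOADS_HP, FAULT_DIAMETERS_IN))
# One signal per fault mode, 100 samples (and feature vectors) per signal
vectors_per_condition = len(FAULT_MODES) * SAMPLES_PER_SIGNAL
```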

2.3.2. Parameter Determination

The parameters of the decomposition methods, the fault feature extraction process and the fault feature classification modeling process are determined as follows:

Parameters to be determined in signal decomposition methods:

- (1)

In LMD, the parameters are determined according to reference [33];

- (2)

In EEMD, the parameters are determined according to reference [34].

Parameters to be determined in the SSA method:

- (1)

$\Delta f$: the differential frequency, calculated according to Equation (2);

- (2)

$f_L$: the left boundary of the frequency window, calculated according to Equation (3);

- (3)

$f_R$: the right boundary of the frequency window, calculated according to Equation (4);

- (4)

$\delta$: the tolerance, calculated according to Equation (5);

- (5)

$\lambda$: the peak value ratio coefficient, calculated according to Equation (6);

- (6)

$\lambda_T$: the threshold of the peak value ratio coefficient, for which the minimum value in the corresponding vector is selected;

Parameters to be determined in the pattern recognition method:

- (1)

In SVM, the cost $c$ is a basic parameter, while $g$ is a parameter specific to the RBF kernel. In this paper, grid search [35] is applied, with the overall accuracy as the criterion, to tune the two parameters.
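The tuning of $c$ and $g$ can be sketched as an exhaustive search over a two-dimensional grid. Here `accuracy(c, g)` is a hypothetical callback that would train an RBF-kernel SVM and return its overall accuracy. The exponentially spaced grids follow the style suggested in the LIBSVM practical guide and are assumed ranges, not the values used in this paper. The RBF kernel is written out to show the role of $g$.

```python
import itertools

import numpy as np

def rbf_kernel(x, y, g):
    """RBF kernel K(x, y) = exp(-g * ||x - y||^2); g is the kernel parameter."""
    diff = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return float(np.exp(-g * np.dot(diff, diff)))

def grid_search(accuracy, c_grid, g_grid):
    """Evaluate every (c, g) pair; return (best_c, best_g, best_accuracy).

    accuracy(c, g) is a hypothetical callback returning overall accuracy.
    Ties keep the first pair encountered.
    """
    best_c, best_g, best_acc = None, None, -np.inf
    for c, g in itertools.product(c_grid, g_grid):
        acc = accuracy(c, g)
        if acc > best_acc:
            best_c, best_g, best_acc = c, g, acc
    return best_c, best_g, best_acc

# Exponentially spaced grids (assumed ranges, LIBSVM-guide style)
C_GRID = [2.0 ** k for k in range(-5, 16, 2)]
G_GRID = [2.0 ** k for k in range(-15, 4, 2)]
```

Searching on a logarithmic grid is a common choice because the useful values of $c$ and $g$ can span several orders of magnitude.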