Abstract

Demand for interactive wearable devices is rapidly increasing with the development of smart devices. To accurately utilize wearable devices for remote robot controls, limited data should be analyzed and utilized efficiently. For example, the motions by a wearable device, called Myo device, can be estimated by measuring its orientation, and calculating a Bayesian probability based on these orientation data. Given that Myo device can measure various types of data, the accuracy of its motion estimation can be increased by utilizing these additional types of data. This paper proposes a motion estimation method based on weighted Bayesian probability and concurrently measured data, orientations and electromyograms (EMG). The most probable motion among estimated is treated as a final estimated motion. Thus, recognition accuracy can be improved when compared to the traditional methods that employ only a single type of data. In our experiments, seven subjects perform five predefined motions. When orientation is measured by the traditional methods, the sum of the motion estimation errors is 37.3%; likewise, when only EMG data are used, the error in motion estimation by the proposed method was also 37.3%. The proposed combined method has an error of 25%. Therefore, the proposed method reduces motion estimation errors by 12%.

Keywords:

motion estimation; Bayesian probability; Myo device; orientation; EMG; genetic algorithm; weight 1. Introduction

Motion controller devices for user interfacing are utilized to recognize user’s motions or to control remote robots. Motion recognition is an approach that enables users to interact with a computer naturally by using wearable devices to capture their movements, positions, and gestures. These devices become increasingly smaller, more intelligent, and more varied in type and configuration. For example, motions are detected by measuring arm angles or muscle activities from a band worn on a arm [1], wearing a ring can allow for finger gestures to be utilized as input signals [2], and gloves can be sued to recognize users’ hand gestures [3]. Myo device, developed by Thalmic Labs, is a band-shaped gesture control device that is worn on one of arms [4].

Researchers have proposed motion estimation methods based on Bayesian probability to reduce the number of Myo device [5], but these methods have several problems. First, Bayesian probability lowers accuracy because it is calculated by dividing the Myo device data into sections without considering its distribution. Second, motion estimation accuracy is reduced when Bayesian probability is calculated without considering the associations of each of the x, y, and z orientations. Therefore, it is necessary to improve the Bayesian probability approach to motion estimation to increase estimation accuracy. In other words, accuracy can be increased by using additional data such as electromyogram (EMG) of Myo device.

This paper proposes a method for calculating Bayesian probabilities and estimating motions using EMG and orientation data from a Myo device. The final motions are determined by comparing the Bayesian probabilities of motions applying the weights that are calculated by the proposed method based on orientations and EMGs. In this paper, the measured raw data of subjects who are not calibrated for predefined motions are used. In this paper, only the orientation and EMG are utilized without using any velocity. Orientation data and EMG signals of a Myo device measured at a rate of 30 frames is utilized.

The remainder of the paper is organized as follows: Section 2 introduces research on wearable devices. Section 3 proposes a method for Bayesian probability calculation and motion estimation based on multiple types of Myo device data. Section 4 describes the experimental method, results, and the analysis of those results. Finally, Section 5 outlines the conclusions and explores directions of future research.

2. Related Works

There are the diverse kinds of contactable wearable devices, such as Myo device and GestTrack3D Hand Tracker of GestTrackTek in USA [6]. Myo device is a built-in armband processor that recognizes motions by sensing the movements of the hands and arms delivered by the muscles through orientation, Inertial Measurement Units (IMU), and eight EMG sensors. Unlike Kinect and Leap Motion, which are widely known as motion recognition devices, Myo device and GestTrack3D Hand Tracker are Bluetooth-connected, so that they can be used remotely at up to 15 m.

When a Myo device is worn, the sensors measure muscle activity to determine the kind of gestures performed expressed as electrical signals. For example, an EMG extracts and amplifies the activity accompanying muscle contraction. The muscle sensor is composed of an amplifier, a high-pass filter (HPF), and a low-pass filter (LPF). EMG signals are amplified because the intensity of signals from muscle contractions is minute. The range of signals measured in the muscles is 10–2000 Hz, whereas the informative signals from the typical human’s muscles usually appears at 20–450 Hz. Therefore, signals of other frequencies can be regarded as noise. Myo device is equipped with an EMG, Bluetooth 4.0 communication, a three-axis accelerator, a three-axis gyroscope, and a three-axis magnetometer. Myo device can measure 25 finger and arm movements that are related to muscle activity, including movements more complex than simple hand gestures. It also measured the degrees of freedom (DOFs) of the hand, and can provide natural language interactions. Harrison et al. [1] studied the differences in recognition accuracy across user group in a system like Myo device, in which 10 sensors were attached to the arm sensor to learn the waveform using machine learning.

The Myo device also has a 9-axis Inertial Measurement Unit (IMU) that includes a 3-axis gyroscope, a 3-axis accelerometer, and a 3-axis magnetometer. The direction and movement of the wearer’s arm are measured by these units and determined by analyzing the spatial data provided. Orientation represents the positions of the band in terms of roll, pitch, and yaw. The angular velocity of Myo device is provided in vector form and the accelerometer represents the acceleration corresponding of Myo device.

Strelow and Singh [7] proposed a motion estimation method using a camera and various sensors. Performance improved when the motion estimation method using the camera, the gyro sensor, and the acceleration sensor, as compared to when the camera was used independently. Gesture recognition through the integration of acceleration and EMG sensors [8] and the fast Fourier transform (FFT) of EMG sensors has been investigated when setting a specific EMG recognition interval [9]. Additional learning methods, such as a decision tree of acceleration sensor data and a k-nearest neighbor algorithm, have been studied for behavior recognition [10]. Further, behavior recognition has been performed using dynamic Bayesian networks of bio-signals and acceleration sensors [11].

A study of cube games using gesture recognition [6] recognized hand gestures through several EMG sensors and three-axis acceleration sensors. In addition, studies on gesture recognition have examined using EMG signals of static hand movements [12], and Wilcher motor control of wearable devices to recognize the EMG signals of neck muscles [13]. However, most of these studies are limited to a static motion, rather than a changing dynamic pattern of motion over time [8,13], or a motion that is dynamic, but clearly distinguishable [9]. On the other hand, there are also other research methods using genetic algorithm optimizations [14,15,16,17].

Since biological signals have very different body characteristics, it is necessary to use very complex algorithms, such as the classification of precise sensors and clear operation, learning, and processing using a large amount of data, and so the research scope is very limited.

To improve motion estimation accuracy, the equipment used must also be improved, which can be costly. Kim et al. [5] proposed a motion estimation method using one Myo device sensor that estimates subordinate motion using data placed in dependency by the Bayesian probability of the data obtained from two Myo devices for motion estimation. To estimate motion, the sensor value section is determined after finding the interval based on all Myo device sensor value ranges, and the Bayesian probability is calculated when considering the number of sensor values measured in each section. However, most sensor values are contained in only a few intervals, and are excluded from others.

Lee [18] proposed an algorithm that redefines a data segment by redefining only the segment with the measured sensor value, and determines the motion to be meaningful data. However, there are limitations in solving this problem by considering only orientation data; we propose a motion estimation method based on weighted with Genetic algorithms and Bayesian probability, which suggests a Bayesian probability for orientation data and EMG data separately, and then selects the final motion by comparing the two in motion estimation. In this study, we define five basic operations and compute the motion probabilities that are estimated by various data characteristics to derive the final probability, enabling more accurate motion estimation under a variety of conditions.

Recently, Myo’s EMG motion estimation study is Kim [19]. They suggested an algorithm to estimate the arm motion using the MYO armband attached to the upper arm. The motion of the lower arm is estimated through the EMG signal of the upper arm. The motion of the upper arm is estimated through the IMU sensor. They used an algorithm with sum, average, and rate based on the data measured on EMG of MYO. According to the results of this study, accurate motion estimation results can be confirmed at 0° and 90°.

Nourhan T. [20] proposed a method to measure the muscle momentum according to the degree of muscle contraction during exercise using EMG data. The characteristics of the EMG obtained from the muscles are analyzed to classify the RMS (Root Mean Square) and the MDF (M-dimensional feature vector), and then the data set is learned using the artificial neural network (ANN). They are using ANN proposed two algorithms to predict muscle fatigue by classifying them as non-fatigue and fatigue.

Mendez, I. [21] proposed a method to solve the problem by decision tree using measured data from Myo’s EMG to determine user’s pose. After learning the measured data of 10 scissors, rocks, and beams, we randomly played the game and showed 78% of the results. Myo’s EMG signal is analyzed to show the related research on classification method for motion estimation as a research method that is applied to game after learning.

However, the Myo device is better suited to determine the relative position of the arm, not the absolute position. Myo device-related approaches can be applied to diverse fields, such as robot control and remote medical support. Since Myo device’s sensor data feature always provides a constant absolute coordinate system, the Myo device orientation starts measuring and is arranged in the current position with the movement of the next position and relative position coordinates.

In this sense, “relative” in this study is the Bayesian probability by taking Myo device on the upper arm and forearm of right arm in the learning stage and then taking into account their correlation. In the recognition phase, Myo device is put on the forearm of the arm, and then the Bayesian probability is determined and the motion is determined by selecting the motion having the Euclidian distance closest to the motion defined in the learning.

Therefore, in order to control remote or remote objects such as robot control, it is possible to perform more accurate and elaborate missions, by calculating the displacement of the relative coordinates from the current position starting point to provide various motion recognition information. The approach of wearing Myo devices on the upper arm and forearm is generalizable to other parts of the body, such as the legs.

In this paper, the methodology for motion estimation is as follows. We used the orientation and EMG raw data measured at 30 frame rates after wearing the upper arm and forearm of the right arm for the five defined stopping operations as input data. The data measured in two Myo devices are defined as independent motion and the Myo device worn at the upper arm is defined as the dependent motion. Learning is defined by defining the correlation in the learning stage. In the recognition phase, the final motion is determined by looking at the motion defined at the learning stage and the motion at the upper arm as the orientation and EMG raw data measured at one Myo device worn at the forearm of the arm for motion estimation. Detailed methodologies are described in detail in the following chapters.

3. The Myo Device Motion Estimation Framework

User’s motions can be estimated more accurately by utilizing multiple currently measured data. The following details the procedure of the proposed motion estimation method.

3.1. Measurement Methodology

The proposed method utilizes a wearable Myo device. To estimate a user’s motions, Myo devices are set on the upper arm and forearm of one arm, and a subordinate relation of the two are set, for example, the upper arm can be defined as a dependency on the forearm. At the end of learning, the subordinate relationship enables motion estimation of the upper arm in subordinate relation to the independent motion of the forearm at the recognition phase. Figure 1 shows Myo devices attached to the forearm. In this paper, signal patterns are analyzed by measuring a user’s wrist movements with eight EMGs.

Figure 1.

(a) Positioning of a Myo device for electromyogram (EMG) measurement, (b) eight EMG signals of one Myo device.

Figure 2 shows the EMG signals acquired after wearing Myo devices on the upper arm and forearm. The eight EMGs have different signals depending on the muscle activity being measured. As shown in Figure 3b, one Myo device has eight EMG sensors built in the band. Therefore, the EMG signals corresponding to the corresponding muscles of the wearable area are input to each of the eight EMG channels. The human arm muscles are composed of several muscles with different strengths depending on the degree of development and the magnitude of the force, and all of the eight EMG signals show different measured values even when measured at the same time, according to the position of the EMG channel measured. However, when worn at the same position, a certain pattern is maintained depending on the motion.

Figure 2.

EMG signal recording.

Figure 3.

Components and structures of the proposed method.

The final motion is determined by selecting the motion corresponding to the signal with the highest Bayesian probability calculated by each signal.

The overall structure of the motion estimation approach is shown in Figure 3. First, Bayesian probability is obtained by using the orientation x, y, and z and EMG obtained from the Myo devices. Weights are calculated and then used to estimate the final motion.

The proposed method consists of data processing, training, and recognition stages. Orientation and EMG data are recorded with Myo devices. The orientation data are transferred to the Bayesian probability calculation step of the training stage. The calculated Bayesian probability is used in a genetic algorithm to obtain weights, which are then transferred to the motion estimation step in the recognition stage.

3.2. Data Measurement

In the data processing phase, the Myo device measures data. Data that can be thus obtained are shown in Table 1.

Table 1.

Myo device dataset format [14].

In the proposed method, only the orientation and EMG are used, but motion estimation accuracy can be improved using additional data of other types by applying the proposed method.

3.3. Traning Stage

Training consists of two steps. The orientation data and EMG input from the data processing phase are processed, as shown in Figure 4, in the Bayesian probability calculation.

Figure 4.

Training Stage.

The motions not worn by Myo device are estimated by the orientation and EMG of the motions worn by Myo device. Set the independent motion , and the dependent motion Set , , and where are the ith possible value of the values collected in advance, , and is the amount of sections.

The weight calculation step is shown in Figure 5. ,) is weight function. Each orientation is weighted and expressed as 15 bits for the genetic algorithm. The fitness function for weight calculation in the genetic algorithm is defined, as follows. The x, y, and z orientations are defined as fitness functions by defining the Euclidean distance as the closest value to the previously defined motion.

Figure 5.

Data structure of the genetic algorithms.

Each weight ranges from −1 to 1 in increments of 0.2. For orientation, three weights,, , and , are utilized for ,, and are expressed as 15 bits for the genetic algorithm. The orientation fitness function is defined as shown in Equation (1).

where . Therefore, each weight is normalized during the genetic algorithm process. For example, , , and are normalized by

The dependent motion are also calculated by EMGs, , …, and , …,, obtaining the weights, for EMGs by the Generic algorithm.

3.4. Recognition Stage

The Bayesian probability calculation from the orientation and EMG data in the motion estimation step reflects the weights determined by the genetic algorithm as shown in Figure 6.

Figure 6.

Recognition stage.

Then, the Bayesian probability updated by the weights is calculated, and final motion is estimated. Of the Bayesian probabilities calculated from the x, y, and z orientations and EMG data, the motion with the highest probability as the final motion is selected as in Equation (3). Handling multiple data types can more accurately estimate motions than traditional methods.

4. Experiments and Analysis

4.1. Overview of Experimental Environments

The experimental environment of this study was implemented with Unity 5.4.2 based on the Win10 operating system and four Myo device devices based on Bluetooth 2.0 were used. Figure 7 shows the system conceptual diagram for constructing the experimental environment. We implemented the proposed algorithm and compared it with the traditional algorithm in an experiment for which we defined five user motions.

Figure 7.

System of Myo device Motion Estimation.

First, the subject calibrates his motions by the developed application connecting with Thalmic Labs’ Myo Connect manager. When the subject moves a lot, he needs to calibrate again from the beginning. In addition, the calibration is deducted by performing four pre-defined gestures, finger spread, wave-in, wave-out, and relaxed state gestures, after wearing a Myo device.

4.2. Motion Definitions

The subjects’ motions were defined by five representative major motions of the arm. To learn these motions, Myo devices were worn on the upper and lower parts of the subjects’ left arm. Figure 8 defines these motions showing the right arm, but they are defined in the same way for the left arm. Flexion refers to the downward motion of the lower arm, the extension of the lower arm. It is a motion that is 90° to the body, rising from the side under the Abduction. Internal is the motion in which the arms are folded inward from the center of the waist. External is a motion that stretches 180° outward from the center-right navel position, as opposed to internal motion. In the learning stage, five subjects performed the predefined movements 10 times with an interval of 10 min after wearing Myo devices on the upper arm and forearm.

Figure 8.

This is a figure, the five defined postures. (a) Screen shoots of Flexion posture; (b) Screen shoots of Extension posture; (c) Screen shoots of Abduction posture; (d) Screen shoots of Internal posture; and, (e) Screen shoots of External posture.

The learned motion is recorded for each user and stored in the user table, and is used to estimate the most accurate motion in the recognition stage. In addition, motion recognition features are recorded for each user, and user-independent motion estimation could be possible using the average value obtained in the experimental data.

4.3. Result of the Proposed Method by Orientation

Figure 9 shows the result of the orientation algorithm experiment, and compares the motion estimation results of the proposed method and the traditional research [17]. Figure 9a,b shows the experimental results of Abduction for orientation and (c,d) are experimental comparison results of Flexion motions. The other figures (e,f) are experimental comparison results of Extension motions, (g,h) are experimental results of External motions, (i,j) are results of Internal motions.

Figure 9.

Differences in orientation-based estimation between the traditional method [17] and the proposed method, and the average of cumulative errors in the x, y, and z orientations. (a,c,e,g) and (i) compare the differences in the estimated motion of the x, y, and z orientations using the previous research method. (b,d,f,h,j) showing the differences in the estimation results of these orientations when they are weighted by the proposed algorithm.

The histogram behind the graph represents the mean value of the total error of the motion estimation. The histogram of the sum of the errors shows that the motion estimation error of the proposed algorithm is small. (f,h,j) show smaller differences than (e,g,i).

As shown in Table 2, the sum of the total errors for the motion estimation result of the previous and newly proposed methods is 257.0418. The total sum of the errors of the motion estimation of the previous method was 95.88, which was an error of 37.3%; the error rate of the proposed method is 65.1, which is an error rate of 25%. The sum of the differences of the motion estimation results from EMG data was 96.03, indicating that its error rate was also 37.3%. With this comparison, we can see that the proposed method reduced estimation errors from orientation data by 12% as compared with the motion estimation result of the previous study.

Table 2.

Difference in the total sum of the difference of the original and estimated orientations.

Figure 10 and Figure 11 show the results of motion estimation based on the original orientation-based method of and the proposed weight-based Bayesian probability method using an avatar.

Figure 10.

The following figure is a comparison of the experimental results of the previous studies on the Abduction motion and the proposed studies. Motion Estimation result Screen of Proposed Method (a) Screenshot of raw orientation x, y, and z (b) Shows the results of the previous research (c) Shows the motion estimation result after applying the weight of the genetic algorithm (d) Shows the Bayesian probability estimation result that reflects the weight.

Figure 11.

The following figure is a comparison of the experimental results of the previous studies on the Internal motion and the proposed studies. Motion Estimation result Screen of Proposed Method (a) Screenshot of raw orientation x, y, and z (b) Shows the results of the previous research (c) Shows the motion estimation result after applying the weight of the genetic algorithm (d) Shows the Bayesian probability estimation result that reflects the weight.

The algorithm comparisons for the main frames in all estimated motions are shown in red and blue circles. As can be seen from the original motions, the proposed algorithm showed improved results.

4.4. Result of the Proposed Method by EMG Data

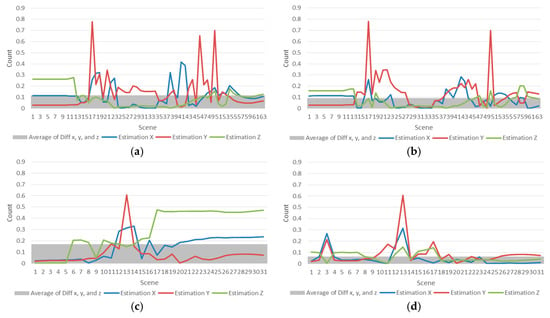

The results of the algorithm experiment on EMG data are shown in Figure 12, which graphs the differences between the previous study and the raw orientation of the proposed method.

Figure 12.

Differences in EMG estimation between the traditional method [17] and the proposed method, and the average of the cumulative x, y, and z error. (a,c,e,g,i) compare the differences in the estimated motion of the x, y, and z EMG using the previous research method. (b,d,f,h,j) show the EMG based motion estimation results using Bayesian probability that reflects the weights calculated by the genetic algorithm.

Figure 12 shows the experimental result of Abduction for EMGs, (a,b) are graphs of experimental results of Abduction motions, and (c,d) are comparison experimental results of Flexion motions. The other figures (e,f) are comparison experimental results of Extension motions, (g,h) are experimental results of External motions, (i,j) are results of Internal motions. Figure 12a,c,e,g,i compares the differences in the estimated motion of the x, y, and z EMG, using the previous research method. Figure 12b,d,f,h,j shows the EMG based motion estimation results using Bayesian probability, which reflects the weights calculated by the genetic algorithm. The graphs show the errors produced with the original data. The closer the orientation was to 0, the lower the estimation error was. The background histogram shows the average of the total errors. The graph also shows that the average errors of the newly proposed algorithm is also lower. Figure 12f,h,j shows a smaller difference when compared to Figure 12e,g,i.

5. Conclusions

In this paper, we proposed a Bayesian probability-based motion estimation algorithm that reflects the weight of Myo device signals by utilizing a genetic algorithm. In the training phase, we showed that upper-arm motions can be estimated by learning Bayesian probability with only one lower-arm Myo device after learning using two Myo devices: one on each of the upper and lower part of one arm. In addition, the performance improved when recalculating the Bayesian probability to reflect the weights calculated by the genetic algorithm. Experimental results showed that the data used for motion estimation are complementary by using two types of data: orientation and EMG.

The proposed algorithm, using a composite of orientation and EMG data, reduced motion estimation errors by 12% as compared with previous methods. Motion estimation errors from EMG data were also 37%, which agrees with traditional studies. In the future, it is necessary to study robot controls by transferring estimated users’ motions using a Myo, as proposed in this paper.

Acknowledgments

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIP) (NRF-2015R1A2A2A01003779).

Author Contributions

Jeongsook Chae wrote the paper, Yong Jin performed experiments, and Yunsick Sung and Kyungeun Cho analyzed the results of the experiments and revise the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Harrison, C.; Tan, D.; Morris, D. Skinput: Appropriating the Body as an Input Surface. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Atlanta, GA, USA, 10–15 April 2010; pp. 453–462. [Google Scholar]

- Khademi, M.; Hondori, H.M.; McKenzie, A.; Dodakian, L.; Lopes, C.V.; Cramer, S.C. Free-Hand Interaction with Leap Motion Controller for Stroke Rehabilitation. In Proceedings of the Extended Abstracts on Human Factors in Computing Systems (CHI 2014), Toronto, ON, Canada, 26 April–1 May 2014; pp. 1663–1668. [Google Scholar]

- Kim, P.Y.; Kim, J.W.; Sung, Y. Bayesian Probability-based Hand Property Control Method. In Proceedings of the 3rd International Conference on Intelligent Technologies and Engineering Systems (ICITES2014); Springer: Berlin/Heidelberg, Germany, 2014; Volume 345, pp. 251–256. [Google Scholar]

- Kim, A.R.; Rhee, S.Y. Mobile Robot Control using Kinect Sensor and Smartphone. Proc. KIIS Spring Conf. 2013, 23, 133–134. [Google Scholar]

- Kim, P.Y.; Sung, Y.; Park, J. Bayesian Probability-based Motion Estimation Method in Ubiquitous Computing Environments. In Proceedings of the 2005 IEEE International Conference on Robotics and Automation, Seattle, WA, USA, 26–30 May 2015; Volume 373, pp. 593–598. [Google Scholar]

- Kim, K.T.; Kim, J.R.; Choi, J.S.; Kim, J.H.; Lee, S.Y. Depth Camera-Based 3D Hand Gesture Controls with Immersive Tactile Feedback for Natural Mid-Air Gesture Interactions. Sensors 2015, 15, 1022–1046. [Google Scholar] [CrossRef] [PubMed]

- Strelow, D.; Singh, S. Optimal Motion Estimation from Visual and Inertial Measurements. In Proceedings of the Sixth IEEE Workshop on Applications of Computer Vision (WACV 2002), Orlando, FL, USA, 4 December 2002; pp. 314–319. [Google Scholar]

- Zhang, X.; Chen, X.; Li, Y.; Lantz, V.; Wang, K.; Yang, J. A Framework for Hand Gesture Recognition Based on Accelerometer and EMG Sensors. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2011, 41, 1064–1076. [Google Scholar] [CrossRef]

- Kim, J.H.; Wagner, J.; Rehm, M.; Andre, E. Bi-channel Sensor Fusion for Automatic Sign Language Recognition. In Proceedings of the IEEE International Conference on Automatic Face & Gesture Recognition (FG’08), Amsterdam, The Netherlands, 17–19 September 2008. [Google Scholar] [CrossRef]

- Maguire, D.; Frisby, R. Comparison of Feature Classification Algorithm for Activity Recognition based on Accelerometer and Heart Rate Data. In Proceedings of the 9th IT&T Conference, Dublin, Ireland, 22–23 October 2009. [Google Scholar]

- Yang, S.I.; Hong, J.H.; Cho, S.B. Activity Recognition based on Multi-Modal Sensors using Dynamic Bayesian Networks. J. KIISE Comput. Syst. Theory 2009, 5, 72–76. [Google Scholar]

- Ahsan, M.R.; Ibn, I.M.; Khalifa, O. Electromygraphy (EMG) Signal based Hand Gesture Recognition using Artificial Neural Network (ANN). In Proceedings of the 4th International Conference on IEEE Mechatronics (ICOM), Kuala Lumpur, Malaysia, 17–19 May 2011. [Google Scholar] [CrossRef]

- Moon, I.; Lee, M.; Chu, J.; Mun, M. Wearable EMG-based HCI for Electric-Powered Wheelchair Users with Motor Disabilities. In Proceedings of the 2005 IEEE International Conference on Robotics and Automation (ICTA 2005), Barcelona, Spain, 18–22 April 2005. [Google Scholar] [CrossRef]

- Abduo, M.; Galster, M. MYO Gesture Control Armband for Medical Applications; Department of Computer Science and Software Engineering University of Canterbury: Christchurch, New Zealand, 2015. [Google Scholar]

- Darani, Z.S.; Kaedi, M. Improving the Interactive Genetic Algorithm for Customer-Centric Product Design by Automatically Scoring the Unfavorable Designs. In Human-Centric Computing and Information Sciences; Springer: Berlin/Heidelberg, Germany, 2017. [Google Scholar]

- Sabharwal, S.; Aggarwal, M. Test Set Generation for Pairwise Testing Using Genetic Algorithms. J. Inf. Process. Syst. 2017, 13, 1103–1125. [Google Scholar]

- Wu, J.H.; Zhang, G.J.; Ren, Y.Z.; Zhang, X.Y.; Yang, Q. Weighted Local Naive Bayes Link Prediction. J. Inf. Process. Syst. 2017, 13, 914–927. [Google Scholar] [CrossRef]

- Lee, S.G.; Sung, Y.; Park, J.H. Motion Estimation Framework and Authoring Tools based on MYOs and Bayesian Probability. Multimedia Tools Appl. 2016, 1–20. [Google Scholar] [CrossRef]

- Kim, H.J.; Lee, S.G.; Kim, D. Arm Motion Estimation Algorithm using MYO armband. In Proceedings of the 2017 First IEEE International Conference on Robot Computing, Taichung, Taiwan, 10–12 April 2017; pp. 376–3817. [Google Scholar]

- Nourhan, T.M.; Piechnick, M.; Falkenberg, J.; Nazmy, T. Detection of Muscle Fatigue Using Wearable (MYO) Surface Electromyography Based Control Device. In Proceedings of the 2017 IEEE 8th International Conference on Information Technology (ICIT), Amman, Jordan, 17–18 May 2017; pp. 44–49. [Google Scholar]

- Mendez, I.; Hansen, B.W.; Grabow, C.M.; Smedegaard, E.J.L.; Skogberg, N.B.; Uth, X.J.; Bruhn, A.; Geng, B. Evaluation of the MYO Armband for the Classification of hand motions. In Proceedings of the 2017 IEEE International Conference on Rehabilitation Robotics (ICRORR), London, UK, 17–20 July 2017. [Google Scholar] [CrossRef]

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).