1. Introduction

A human-computer/machine interface (HCI/HMI) is a technology that links a user with a computer or machine. In particular, HCIs that use voluntarily controlled bio-signals, such as electromyograms (EMGs), electroencephalograms (EEGs), and electro-oculograms (EOGs), have been studied for people with disabilities. Artificial prostheses and communication systems, including virtual keyboards, are typical HCI applications [1,2,3]. HCIs are very important technologies for people with disabilities because they are not just support tools; they can also improve a disabled person’s quality of life.

Amyotrophic lateral sclerosis (ALS), also known as Lou Gehrig’s disease, is a disease that causes the death of motor neurons [4]. In early ALS, the muscles of the hands, fingers, and legs become weak and thin, and it becomes difficult to talk and to swallow food. As time passes, the symptoms worsen until voluntary breathing becomes difficult in the end stage. In many cases, eye movements are spared until the final stage, even though the limbs and tongue are paralyzed. Thus, many patients with ALS use communication support tools based on eye movement [5].

Interface systems that use brain activity, such as EEGs, electrocorticograms, and magnetoencephalograms, are called brain–computer interfaces (BCIs). Many BCI research groups have reported communication support systems based on the steady-state visual evoked potential (SSVEP) or the P300. Valbuena et al. introduced a rhombus-layout SSVEP-based virtual keyboard using five flickering stimuli [6]. They arranged the letters according to their prevalence in the English language. The P300 Speller described by Farwell and Donchin provided a 6 × 6 matrix of characters [7]. They used the P300 component of the event-related potential (ERP) elicited by rarely occurring “attended” stimuli.

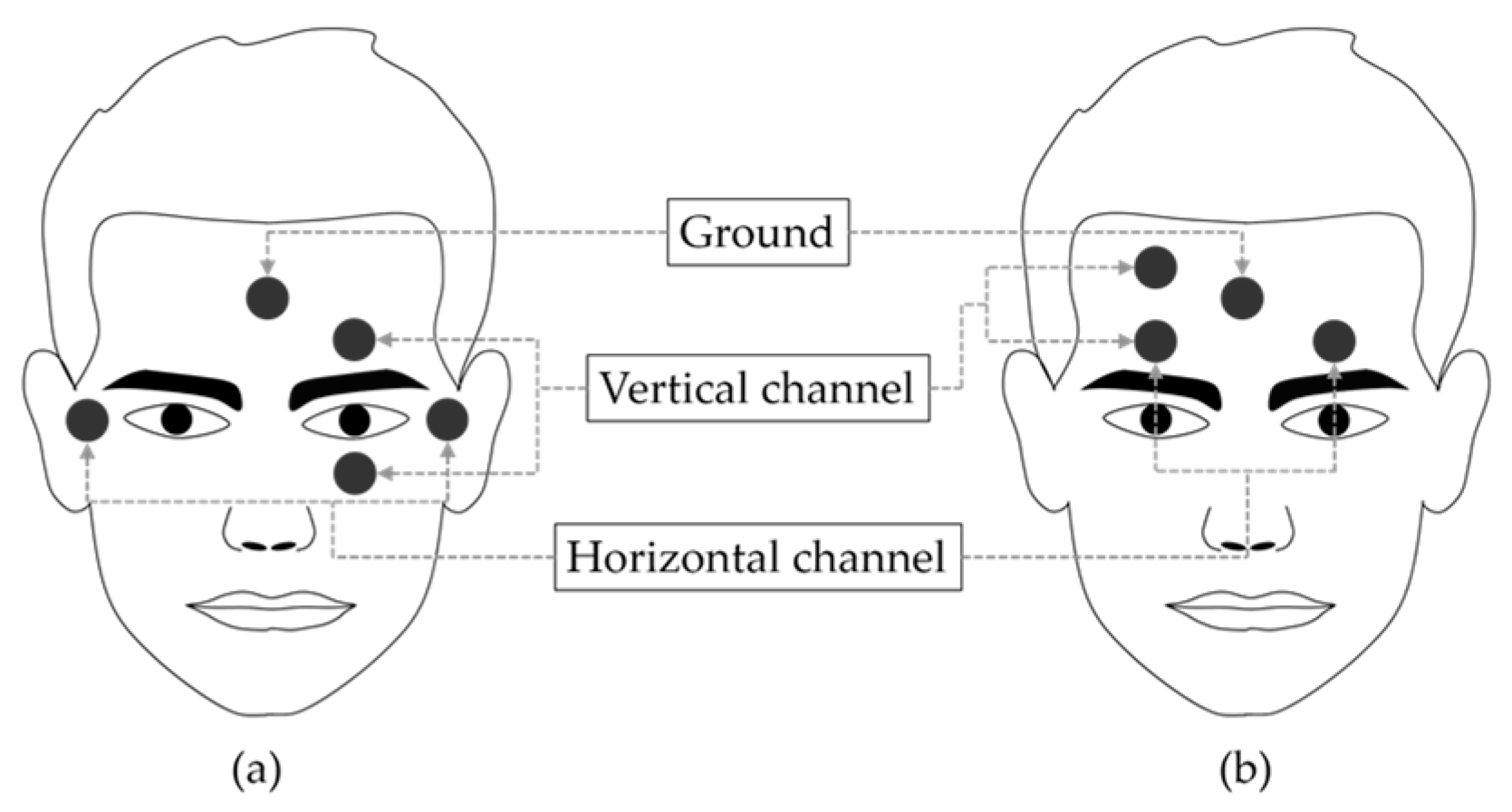

An EOG is a signal that measures the potential difference between the retina and the cornea [8]. The eyeball can be modeled as a dipole with the positive cornea in the front and the negative retina in the back. The primary applications of EOG are ophthalmological diagnosis and eye movement recording. To record eye movements, surface electrodes are typically placed at four positions around the eyes (above, below, left, and right). The pair of electrodes placed above and below the eyes is used to measure the vertical movement of the eyes, and the pair of electrodes placed to the left and right of the eyes is used to measure the horizontal movement. When the gaze is shifted to the left side, the electrical field on the left side of the eyes becomes positive and the opposite side becomes negative.

A virtual keyboard based on EOG was introduced by Tecce et al. in 1998 [2]. Studies of this keyboard showed its usefulness as a communication assistance system for those who are paralyzed or unable to speak. In 2002, Barea et al. developed a wheelchair driving system using EOG to increase the mobility of disabled users who have motor paralysis [9]. In 2010, Usakli et al. reported an EOG measurement system that used an opposite-phase summing approach to remove the direct current (DC) level and reduce power line noise [10]. Using the nearest neighbor algorithm, they classified eye movements in four directions and two types of blinking with 95% accuracy. In addition, several studies developed goggle-type wearable EOG measurement systems to improve the user’s comfort by reducing preparation time [11,12,13]. However, the electrodes around the eyes often cause discomfort to the user, and this discomfort has been the motivation for many studies [14,15,16,17]. To simplify the setup and improve user convenience, many studies have proposed new electrode placements that can replace the conventional EOG electrode placement [14,15,16,17,18].

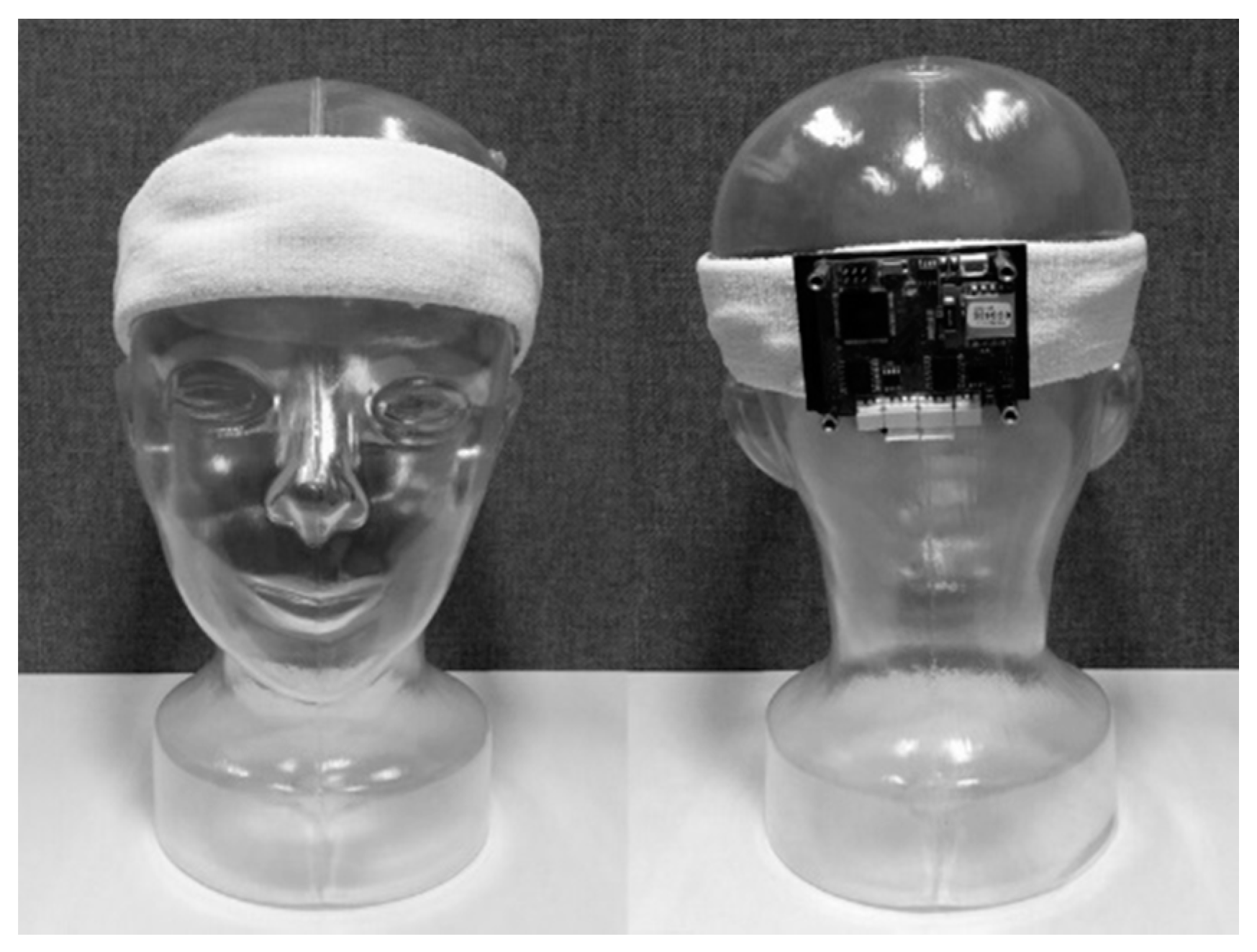

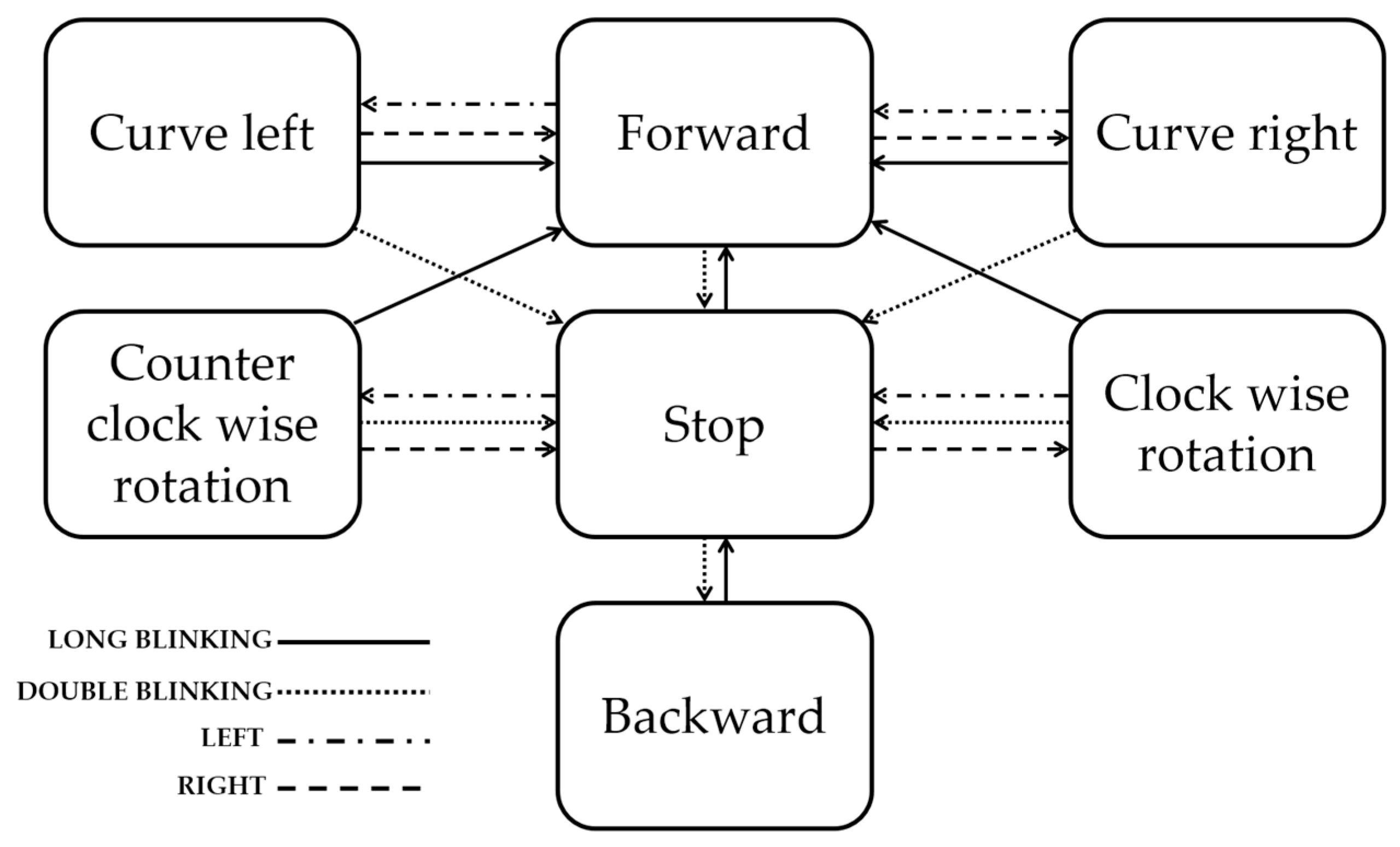

The aim of this study was to measure eye movements by placing a small number of electrodes only on the forehead for a wearable HCI system. Three electrodes were placed on the forehead in the vertical and horizontal directions, sharing a positive electrode, and the ground electrode was placed at the center of the forehead. We developed a headband-type wireless wearable sensor system for the detection of six classes of eye movements and verified it through three applications.

4. Discussion

4.1. Forehead EOG

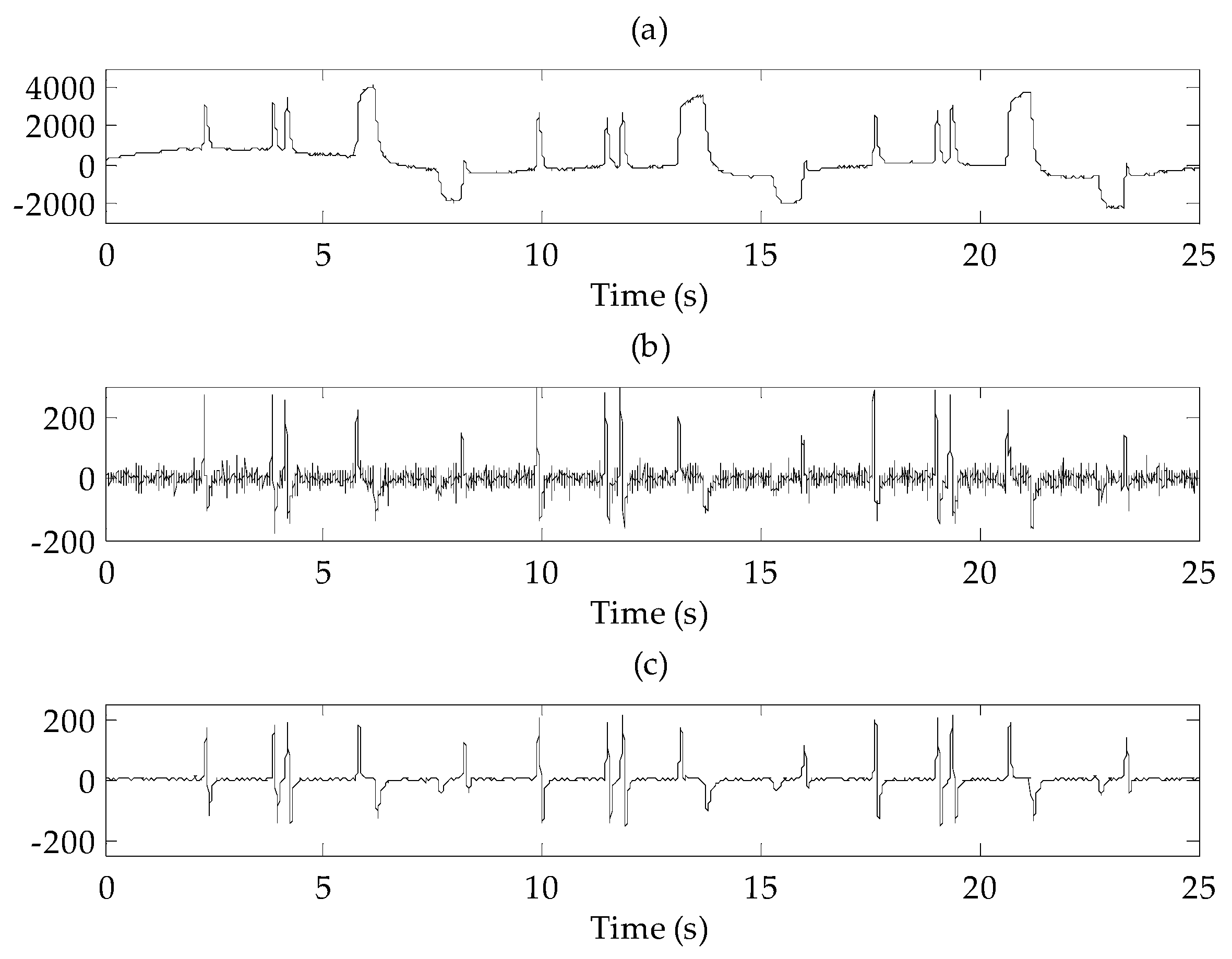

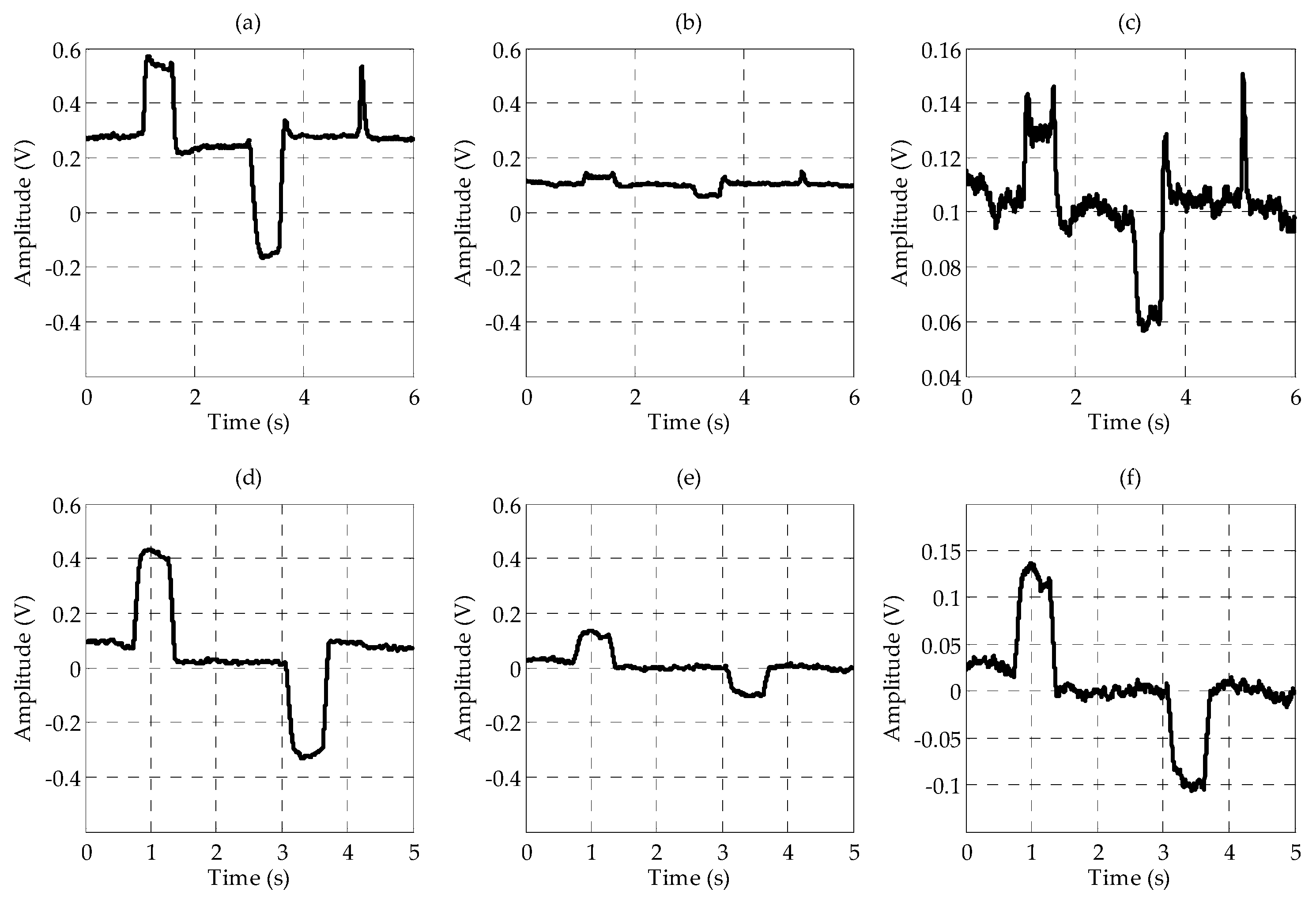

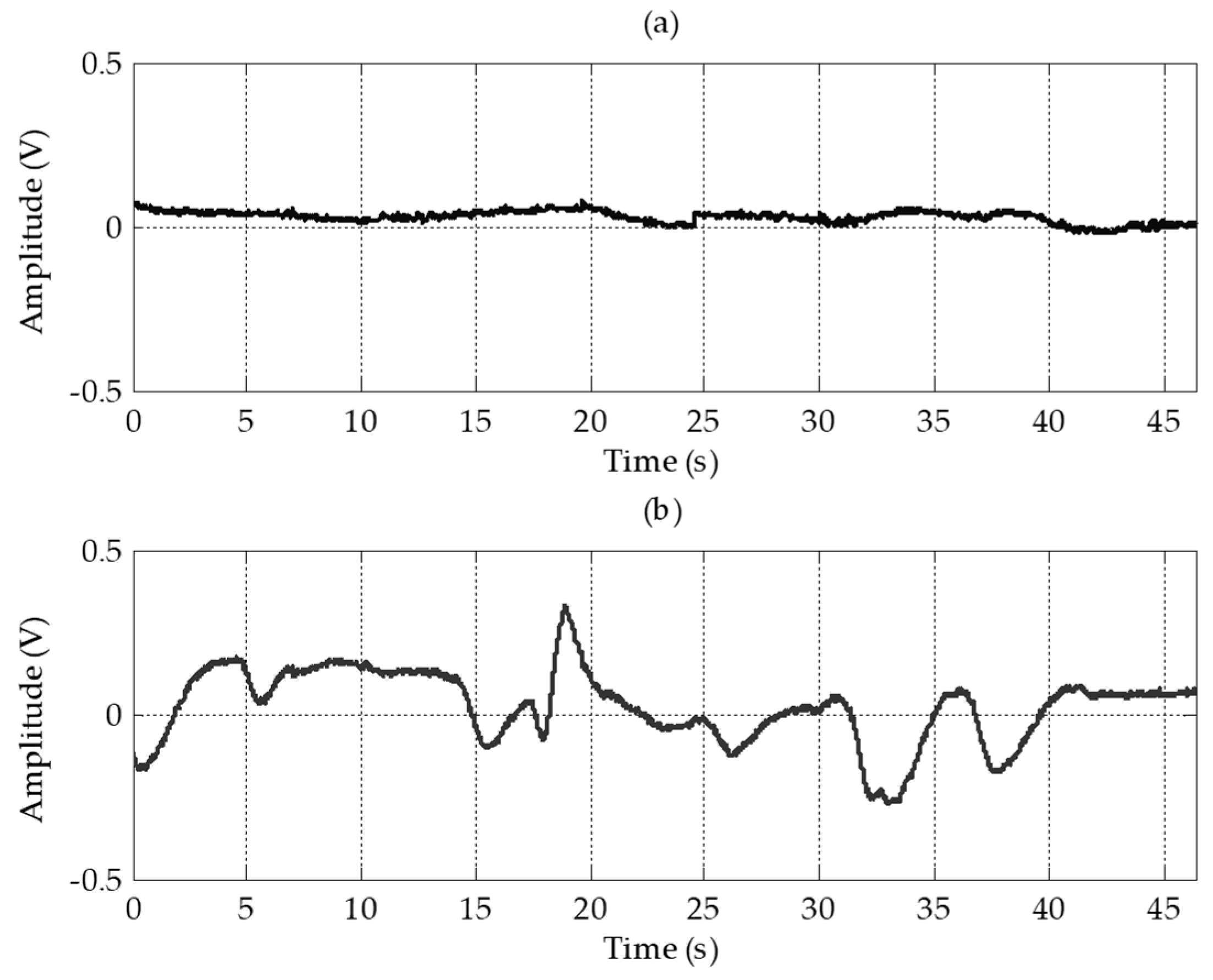

Four electrodes including the ground electrode were placed on the forehead. Electrodes for the vertical channel were arranged vertically and electrodes for the horizontal channel were arranged horizontally to minimize interference between each channel. By sharing a positive electrode for the two channels, the number of electrodes was reduced. Although the amplitudes and signal qualities were lower than in conventional EOG, the EOG waveforms were well measured on the forehead. In the vertical channel, the amplitudes of the forehead EOG and conventional EOG were almost nine times different, and in the horizontal channel, they were almost three times different. For forehead EOG, the baseline drift was more severe than in conventional EOG. Baseline drift indicates the non-periodic DC-level fluctuation of the signal. Because the amplitude of the EOG signal in the time domain contains important information, fluctuations other than amplitude changes caused by eye movements have a negative effect on the EOG analysis. The excess baseline drift could be due to several factors, including lighting conditions, electrode contact, EMG artifacts, changes in skin potential, age, sex, or diurnal variations [

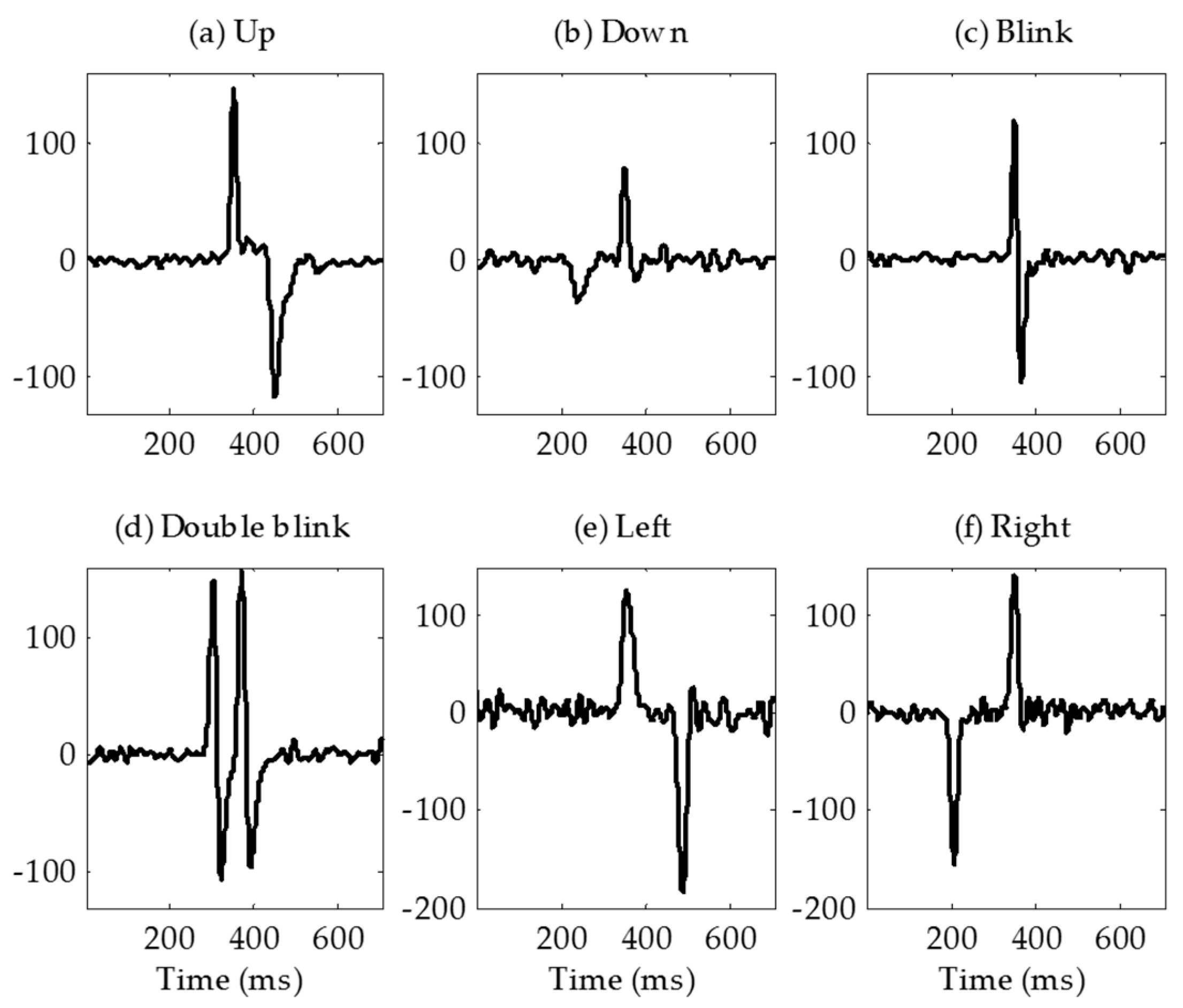

19]. The waveforms similar to the conventional EOG were measured in the forehead, but the correlation coefficient between conventional EOG and forehead EOG was very low due to this baseline drift. The raw signals were differentiated to eliminate this baseline drift and then low-pass filtered to remove high-frequency noise. In this study, we have overcome this problem by differentiating the signal; however, in future research, it is necessary to study why the drift becomes worse in forehead EOG. After the signal processing, the correlation coefficients between conventional EOG and forehead EOG were 0.87 and 0.88 in vertical channel and horizontal channel, respectively. As shown in

Figure 10, the “down” signal amplitude is smaller than that of the other movements. The reason for this is that the distance between the electrode and the source (dipole model described in introduction) is longer than the other movements. In the evaluation, no subject felt that it was difficult to control the downward movement because the threshold was adjusted to the amplitude of the “down”.
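The effect of this preprocessing can be illustrated with a minimal sketch on synthetic data. It assumes a linear ramp as the drift, a moving average as the low-pass filter, and an amplitude scale of 0.2 for the forehead signal; none of these values come from the recordings in this study, and all function names are hypothetical.

```python
import math

def differentiate(signal):
    """First difference: removes a constant offset and flattens slow drift."""
    return [b - a for a, b in zip(signal, signal[1:])]

def moving_average(signal, width):
    """Simple low-pass filter (an assumption; the filter type is not specified here)."""
    half = width // 2
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - half): i + half + 1]
        out.append(sum(window) / len(window))
    return out

def preprocess(signal, width=5):
    """Differentiate first, then low-pass filter."""
    return moving_average(differentiate(signal), width)

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

# Synthetic "conventional" EOG: one step-like gaze shift and return.
n = 400
conventional = [1.0 if 100 <= i < 300 else 0.0 for i in range(n)]
# Synthetic "forehead" EOG: same shape, smaller amplitude, plus a drifting baseline.
forehead = [0.2 * c + 0.005 * i for i, c in enumerate(conventional)]

r_raw = pearson(conventional, forehead)
r_processed = pearson(preprocess(conventional), preprocess(forehead))
print(f"raw: {r_raw:.2f}, processed: {r_processed:.2f}")
```

In this idealized case the drift dominates the raw correlation, while differentiation turns the ramp into a constant that no longer affects the correlation. Real recordings contain noise and non-linear drift, so the improvement is less complete in practice.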

4.2. Comparison with Previous EOG Based HCI Studies

Conventional EOG-based HCI systems detect movements in more directions than the system proposed in this study. Wu et al. classified eight directional eye movements (up, down, left, right, up-left, down-left, up-right, and down-right) with 88.59% average accuracy [20]. Phukpattaranont et al. proposed efficient features for the classification of eye movements [21]. They classified not only the eight directional eye movements but also clockwise and counterclockwise eye movements.

Several studies measured EOG without using conventional electrode positions. Guo et al. developed a single-channel patchable EOG sensor that can be attached to the forehead above the eyebrow [14]. They classified three types of eye movements (upward, downward, and blinking) with 84% classification accuracy. Wu et al. classified four classes of commands that were composed of three types of eye movements (double blink, single blink, and looking up) [15]. They measured single-channel EOG using a MindWave Mobile Headset (NeuroSky, San Jose, CA, USA), and the classification accuracy was 92.3%. An earphone-based eye gesture interface system was developed by Manabe et al. [18]. They classified three classes of eye gestures with three electrodes embedded in a pair of earphones. Belkacem et al. proposed an eye-movement-based communication system using two temporal EEG sensors with four electrodes [16]. They classified six classes of eye movements (left, right, up, down, blink, and center) with 85.2% classification accuracy. Zhang et al. proposed a novel driving fatigue detection system using forehead EOG [17]. Although they did not present classification results for eye movements, their electrode positions were similar to those in this paper. They placed four electrodes on the forehead, the reference electrode on the left mastoid, and the ground electrode on the right mastoid. The advantage of our system over previous studies is that it detected six eye movements with a small number of electrodes located only on the forehead. In addition to the headband, electrodes placed on the forehead can be applied to many wearable devices, such as caps and other headgear. Such wearable systems could not only improve usability but also reduce users’ resistance to the system.

Table 2 summarizes the previous studies measuring EOG without using conventional electrode placement.

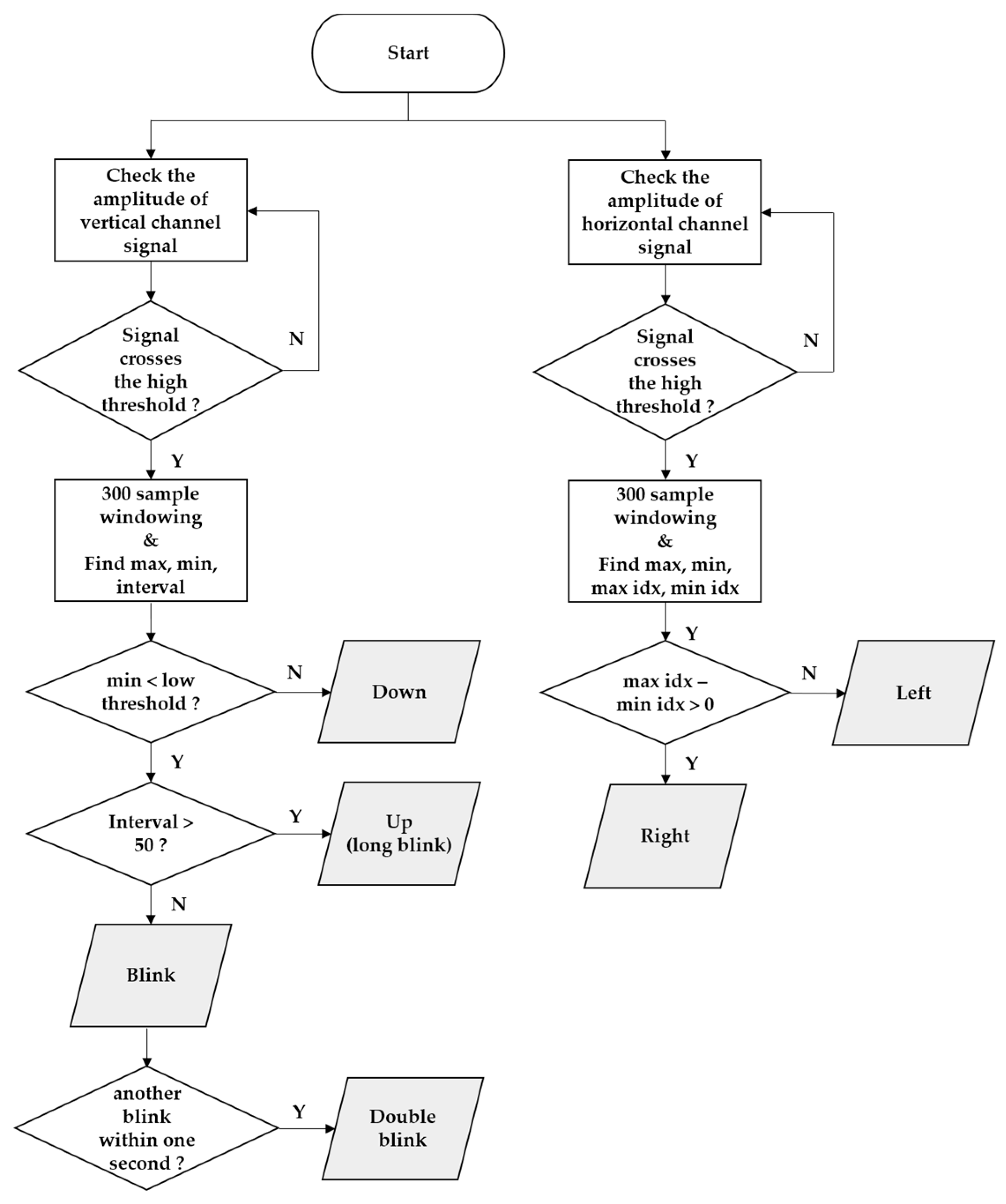

4.3. Classification Algorithm

We used a decision tree to classify eye movements so that the algorithm would require no training. However, because the amplitudes of the EOG signals differed between users, a threshold had to be set for each user. In this study, to prevent unintended signaling due to gaze shifting when searching for the desired letter, the threshold was set to approximately 90% of the amplitude produced when the user moved their eyes intentionally. If the threshold is set too high, errors caused by normal gaze shifting can be reduced, but failures may occur when the amplitude decreases because of fatigue of the ocular muscles. If the threshold is set too low, failures can be reduced, but errors due to normal gaze shifting may increase. In the experiment using the modified Bremen speller, there was one error and five failures. The algorithm classified a “double blink” as “up (long blink)” twice because the interval threshold for distinguishing “blink” from “up (long blink)” was set too low. Thus, it is necessary to increase the interval threshold for users who cannot blink quickly. The five failures occurred because the amplitude threshold was set too high. The failure rate may increase because of eye fatigue in experiments with a long duration. However, if the threshold is set too low, an undesired command can be signaled when the gaze shifts to find a letter. Therefore, it is important to set appropriate thresholds in this algorithm.
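The two thresholds discussed above can be sketched as follows. This is only an illustration of the trade-off, not the decision tree used in this study: the function names, the use of the mean calibration peak, the definition of the peak interval, and all numeric values are assumptions.

```python
def calibrate_threshold(calibration_peaks, ratio=0.9):
    """Per-user amplitude threshold: a fraction of the mean intentional peak.

    ratio=0.9 mirrors the "approximately 90%" setting described in the text.
    """
    return ratio * sum(calibration_peaks) / len(calibration_peaks)

def classify_vertical_event(peak_amplitude, peak_interval_s,
                            amplitude_threshold, interval_threshold_s):
    """Tiny decision tree for one vertical-channel event.

    peak_interval_s is assumed to be the time between the two peaks of the
    differentiated signal, i.e. roughly how long the eyes stayed closed.
    """
    if peak_amplitude < amplitude_threshold:
        return "none"              # too weak: treated as normal gaze shifting
    if peak_interval_s < interval_threshold_s:
        return "blink"             # short closure
    return "up (long blink)"       # long closure

# Hypothetical calibration peaks (arbitrary units) from intentional movements.
threshold = calibrate_threshold([100.0, 120.0, 110.0])

# A slow blink (0.35 s) is misread as a long blink when the interval
# threshold is too low, and read correctly once the threshold is raised.
slow_blink_low = classify_vertical_event(115.0, 0.35, threshold, 0.30)
slow_blink_high = classify_vertical_event(115.0, 0.35, threshold, 0.40)
print(slow_blink_low, "|", slow_blink_high)
```

Raising `ratio` makes the classifier ignore more incidental gaze shifts at the cost of missing fatigued, lower-amplitude movements, which is the error/failure trade-off described above.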

4.4. Applications

The Bremen BCI system, which was developed by Valbuena et al., is a virtual keyboard system using the steady-state visual evoked potential (SSVEP) [6]. The system has five flickering lights of different frequencies that induce SSVEPs and encode four directional cursor movements and selection. Their typing speed was 2.56 LPM (recomputed from their reported results). Although the typing speed in their study was modest, SSVEP-based BCI systems can increase speed by improving the detection algorithm. Volosyak increased the typing speed of a Bremen BCI system to 8.28 LPM (recomputed from the reported results) by applying a signal processing method [22]. SSVEP-based keyboards can further increase the typing speed by increasing the number of stimuli. Hwang et al. developed a QWERTY-style virtual keyboard using 30 LEDs flickering at different frequencies. Their average accuracy and typing speed were 87.58% and 9.39 LPM, respectively [23]. Furthermore, our previous study on a hybrid SSVEP-P300 BCI achieved 93% accuracy and a typing speed of 7.15 LPM (recomputed from the reported results) [24].

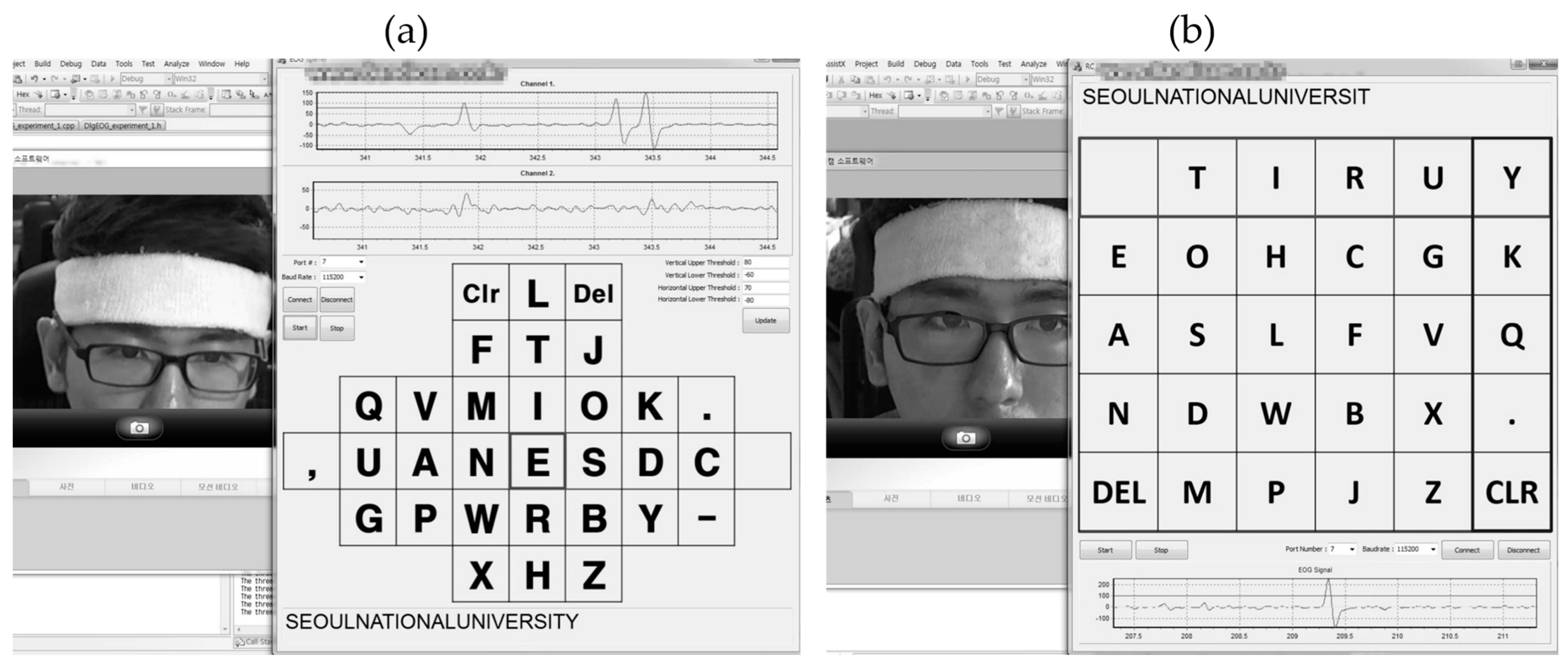

In our system, the five flickering lights were removed and the five commands were encoded by eye movements. In our experiments, a long blink was used to move the cursor upward instead of an upward eye movement because the users found the upward eye movement uncomfortable. Because of Bell’s phenomenon [25], the eyes move upward when they are closed, so the same EOG waveforms were generated for both actions. As described earlier, the typing speed in the modified Bremen keyboard application was 10.81 LPM, which is higher than or equivalent to those of the recently proposed virtual keyboards mentioned above.

The modified Bremen speller cannot be used by a patient who cannot move their eyes in four directions. Thus, we tested our system with a virtual keyboard that uses automatic scanning, which requires only one eye movement signal and is suited to patients who communicate using eye blinks. There are several types of scanning methods: element scanning, row-column scanning, and block scanning [26]. We chose the row-column scanning method because we considered it intuitive and suitable for a large number of targets, as found on a keyboard. In this application, because a double blink encoded the switch that was used to select the row and column, only the vertical channel signal was used. As described in the results, the mean typing speed of row-column scanning was 7.75 LPM. The mean typing speed was lower than for the modified Bremen keyboard application, but the subjects reported that it was easier and more comfortable to use because it required only a double blink.

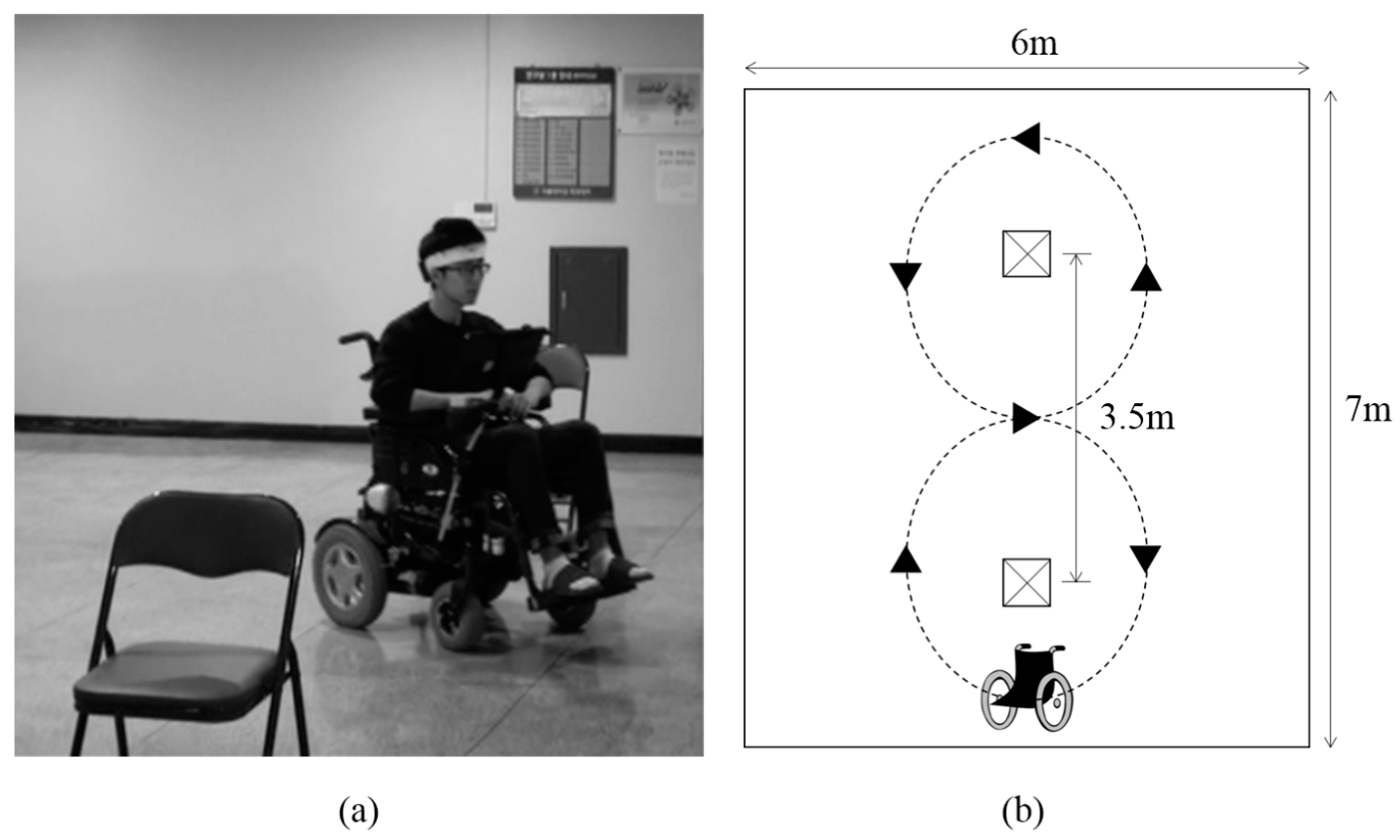

To prevent accidents caused by signaling errors, the power wheelchair driving was tested with the four classes of eye movements that the user felt most comfortable with. This test was conducted to evaluate the feasibility of using forehead EOG for wheelchair driving, and successful results were obtained. However, because the speed and the rotation angle of the wheelchair were fixed in the test environment for safety, more research is needed before real-world driving becomes possible.

4.5. Limitations

There were several limitations in this study. First, the reaction times for the left/right and up/down commands were different. Signals that were differentiated and low-pass filtered consisted of positive and negative peaks. For the “left” and “up” signals, the positive peak occurred before the negative peak. For the “right” and “down” signals, the negative peak occurred before the positive peak. Because the classification algorithm sampled the signal centered on the positive peak that exceeded the threshold, a user could experience a delay when performing the “right” and “down” commands. In the virtual keyboard experiment, the subjects experienced some ambiguity in the training period but were able to adapt quickly; this did not affect the performance. In the wheelchair driving experiment, the subject had to move his eyes in advance to compensate for the delay. In future work, the algorithm must be modified to eliminate the delay because it confuses users and can be a serious problem in wheelchair driving. This could be solved by simultaneously monitoring both positive and negative peaks.

Second, the algorithm we proposed classified only four directional eye movements. As described in Section 4.2, several studies using conventional EOG classified eight or more directional movements. However, unlike those studies, we used forehead EOG, which has lower signal quality than conventional EOG. Thus, further work on the signal processing and the classification algorithm is needed to detect more eye movements when using forehead EOG.

Third, we evaluated the proposed system with four subjects. It is necessary to evaluate the system with more subjects to confirm its generality. However, three subjects who were not familiar with the proposed system produced good results with little training, and this confirmed the feasibility of a wearable forehead EOG system. It is important to note that the way the headband was worn affected the performance of the system. The headband must be worn correctly because subjects may experience interference between the two channels if it is worn incorrectly. A method of eliminating inter-channel interference through signal processing needs to be studied further.
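The remedy suggested for the first limitation, monitoring both peak polarities at once, might look like the following sketch for the horizontal channel. It is an assumption about one possible implementation, not the algorithm used in this study; the function names and sample values are hypothetical. The polarity convention follows the description above: a positive peak first for “left” and a negative peak first for “right”.

```python
def first_peak_polarity(samples, threshold):
    """Return +1 or -1 for the first sample beyond +/- threshold, else 0."""
    for s in samples:
        if s > threshold:
            return 1
        if s < -threshold:
            return -1
    return 0

def classify_horizontal(samples, threshold):
    """Decide on the first peak of either sign, so that neither direction
    has to wait for a later positive peak."""
    polarity = first_peak_polarity(samples, threshold)
    return {1: "left", -1: "right", 0: "none"}[polarity]

# Hypothetical differentiated, low-pass-filtered samples (arbitrary units).
left_like = [0.0, 0.2, 1.1, 0.3, -0.2, -1.0, -0.1]
right_like = [0.0, -0.3, -1.2, -0.2, 0.1, 1.0, 0.2]

print(classify_horizontal(left_like, 0.9), classify_horizontal(right_like, 0.9))
```

With this rule, “right” is decided at its leading negative peak, the same relative point at which “left” is decided, which would remove the asymmetry in reaction time. Whether it remains robust to noise and blinks would need to be checked on real recordings.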