Abstract

Because the availability of night vision equipment directly influences the safety of night-time aerial reconnaissance, maintenance needs to be carried out regularly. Unfortunately, some defects are difficult to observe, or are not detectable by the human eye at all. This study therefore proposes a novel automatic defect detection system for the aviator’s night vision imaging systems AN/AVS-6(V)1 and AN/AVS-6(V)2. An auto-focusing process consisting of a sharpness calculation and a gradient-based variable step search method is applied to achieve an automatic detection system for honeycomb defects. A test platform for sharpness measurement was also developed. The experiments demonstrate that honeycomb defects can be precisely recognized and that the number of defects can be determined automatically during the inspection. Most importantly, the proposed approach significantly reduces both the time consumed and the human assessment error in night vision goggle inspection procedures.

1. Introduction

Night vision goggles (NVGs) are used to enhance the visibility of helicopter crew members in low-light environments [1]. The basic NVG structure comprises a mounting frame to hold all of the components, an objective lens to focus the night image onto the photocathode, a channel-plate proximity-focused image intensifier, and a magnifying eyepiece with focusing adjustments to display the intensified image to the viewer [2]. The electro-optic system of the image intensifier detects and intensifies reflected energy in the visible range (400–700 nm) and the near-infrared range (700–1000 nm) of the electromagnetic spectrum [3]. The image quality of NVGs relies on the intensifier to amplify the detected electromagnetic signals [4]. The function of an image intensifier is to convert weak visible and near-infrared light to electrons, which are then converted into large quantities of secondary electrons via an electron amplifier (creating an electron cloud). These electrons collide with the screen to create visible light. Because the availability of NVGs directly influences mission safety during night-time reconnaissance, regular maintenance is compulsory.

Image peculiarities commonly seen in NVGs include shading, edge glow, bright spots, dark spots, honeycomb, distortion, flicker, and scintillation [5]. Among these peculiarities, the honeycomb defect, also known as fixed pattern noise, has a faint hexagonal form. A honeycomb-like pattern in the image is most often seen in high-light-level conditions [6]. If it is obvious or distracting, the image intensifier should be replaced. Identifying honeycomb defects is more difficult than identifying other types of defects because they lack a set reference and are much harder to see with the naked eye. According to the current standard operating procedure, the aviator’s night vision imaging systems AN/AVS-6(V)1 and AN/AVS-6(V)2 still rely on manual calibration [7]. Prior to the calibration, technicians are asked to stay in a dark room for approximately twenty minutes to increase their sensitivity to faint light. This step prolongs the total calibration time. Moreover, the goggles must be placed on a testing platform, and technicians perform the calibration by looking through the goggles’ eyepiece while manually adjusting the focus [8,9,10]. Observation through the eyepiece and adjustment of the focal length are thus performed simultaneously by hand. Long inspection times readily induce negative physiological effects, such as loss of concentration and bias, which lead to improper calibration results.

To reduce the technical training time and to achieve efficient inspection of the NVGs, a camera was installed on the testing platform to capture the image through the NVGs. An auto-focusing algorithm and custom-designed hardware were integrated to develop an automated defect detection system for NVGs. Automatic detection of honeycomb defects using image pattern recognition is proposed to reduce the difficulty of manual calibration.

Currently, there are two methods for auto-focusing, namely, active and passive auto-focusing. In active auto-focusing, add-on infrared [11] or other measuring tools [12] are used to measure the distance between the camera lens and target object. Passive auto-focusing involves calculating sharpness from single images obtained by the camera. Sharpness curves are then generated after calculating the sharpness of multiple images, where the peak values from the sharpness curve correspond to the optimal focal length [13,14,15]. This study adjusted focal length using the images obtained from the lens of the NVGs. Hence, a passive auto-focusing method was used. However, the key to this method is whether a correct in-focus point can be effectively calculated from the image information. The light source luminosity is also an important factor affecting the passive auto-focus system [16,17,18]. The ANVTP (TS-3895A/UV) used in this study provided a stable low-light environment, minimizing the effects from the light source.

The optimal sharpness calculation methods can be categorized as the typical depth from focus (DFF) or depth from defocus (DFD) methods. DFD is commonly used in depth estimation and scene reconstruction. This method has relatively few calculation samples, which improves servomotor efficiency at the expense of accuracy [19]. Because of noise in the image, stability and precision need to be considered simultaneously. This study follows sharpness calculations similar to those proposed by Pertuz et al. [20].

Based on the preceding introduction, this work is dedicated to efficient and precise automatic defect detection for NVGs. The main features include:

- A novel searching algorithm, which is able to achieve fast and accurate focusing, is proposed. The main advantage of the developed method is also addressed through a comparison study.

- Different sharpness estimation methods for NVGs are also considered for comparison studies.

- A honeycomb defect detection process is proposed to automatically report the number of defects and their corresponding locations. The detection procedure can therefore be carried out efficiently and objectively.

2. Proposed Approach

This study employed an aviator’s night vision testing platform (TS-3895A/UV) to provide the required low-light environment during calibration and inspection [21]. To achieve automatic defect detection, the main procedure consists of two parts. First, fast auto-focusing provides a clear image for honeycomb detection. Second, an image processing algorithm detects the honeycomb defects. Hardware specifications are provided in Section 2.1. The performance of different sharpness measurement methods is given in Section 2.2. The proposed search method and its efficiency are addressed in Section 2.3. The detailed honeycomb defect detection procedure is introduced in Section 2.4. Finally, detailed experimental results are discussed in Section 3.

2.1. Experimental Setup

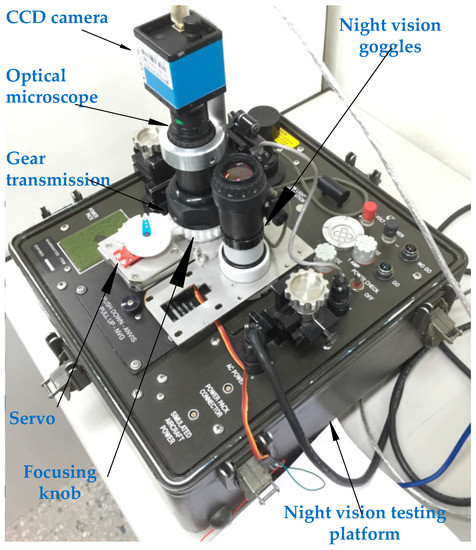

An optical microscope (Computar M0814-MP2, CBC Americas, Cary, NC, USA) with CCD (DFK 41BU02, Imaging Source, Charlotte, NC, USA) detection was used in this study. The CCD camera had a size of 50.6 mm × 50.6 mm × 50 mm and a weight of 265 g. The image dimensions were 1280 × 960 pixels with a pixel size of 18.6 μm and a focal length of 8 mm. A DC servo driver (S03Q3, Grand Wing Servo-Tech Co., Ltd., Upland, NY, USA) was used to rotate the focusing knob of the NVGs. The servo controller was implemented using an Arduino Mega 2560 board (Smart Projects, Olivetti, Italy), which generates pulse width modulation (PWM) signals of 0.9–2.1 ms for the ±60° movement range of the servomotor. The resulting system is shown in Figure 1, where a fixture holds the camera on the NVGs and a servo transmission mechanism adjusts the focal length of the NVGs.

Figure 1. System installation.
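As a minimal sketch of the servo command described above, the following maps a target angle within ±60° to a pulse width within 0.9–2.1 ms. The linear relation between angle and pulse width is an assumption (the text gives only the two ranges), and `angle_to_pulse_ms` is a hypothetical helper name.

```python
def angle_to_pulse_ms(angle_deg, min_ms=0.9, max_ms=2.1, max_angle=60.0):
    """Map a servo angle in [-max_angle, +max_angle] degrees to a pulse width in ms.

    Assumes the pulse width varies linearly with angle across the whole range.
    """
    if not -max_angle <= angle_deg <= max_angle:
        raise ValueError("angle outside the servo movement range")
    fraction = (angle_deg + max_angle) / (2.0 * max_angle)  # 0 at -60, 1 at +60
    return min_ms + fraction * (max_ms - min_ms)


for angle in (-60.0, 0.0, 60.0):
    # Roughly 0.9, 1.5 and 2.1 ms under the linear assumption.
    print(angle, round(angle_to_pulse_ms(angle), 3))
```

Under this assumption each degree of rotation corresponds to about 0.01 ms of pulse width, which is the resolution the controller would need to command small focus steps.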

2.2. Process for Passive Auto-Focusing

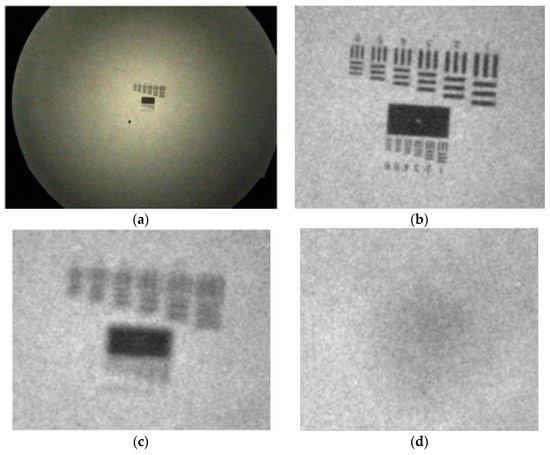

Passive auto-focusing relies on a sharpness measure computed from images obtained by the camera. The sharpness curve, generated from the image sharpness at different focal lengths, is a good indicator of the in-focus status [13,14]. Before establishing a comprehensive process for passive auto-focusing in this study, the classic sharpness measures reviewed by Pertuz et al. were studied [20]. For the image sharpness calculation, twenty-eight methods were considered, which can be divided into six categories: gradient-based operators, Laplacian-based operators, wavelet-based operators, statistics-based operators, DCT-based operators, and miscellaneous operators. To verify feasibility, the experiments were conducted with the same image capturing device, test target, and light source. As shown in Figure 2, the target images in Figure 2b–d are 200 × 250 pixel images obtained from the color image in Figure 2a, of size 960 × 1280 × 3 pixels, captured through the NVGs. The sharpness curves under the 28 methods were then estimated for the target images.

Figure 2. Image preprocessing. (a) Initial image; (b) Sharp image (correctly focused); (c) Blurry image (slightly out of focus); (d) Blurry image (severely out of focus).

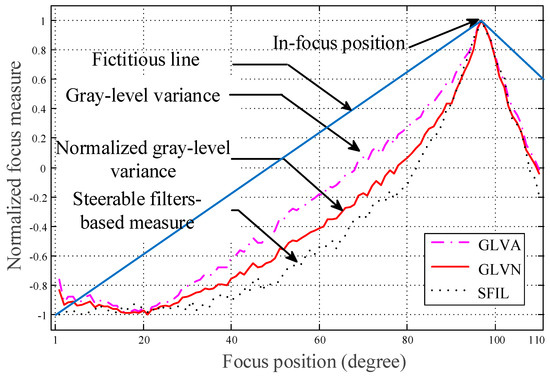

Considering accuracy, computation time, correlation coefficient with respect to the optimal value, and entropy, as shown in Table 1, the normalized gray-level variance sharpness measure is the best candidate and is thus applied in this study. In Figure 3, a fictitious line is assumed as a sharpness reference. In terms of entropy alone, the gray-level variance performs worse, whereas the steerable filters-based measure performs better. However, referring to Table 1, the normalized gray-level variance measure achieves the best average elapsed time, correlation coefficient, and entropy. The normalized gray-level variance measure [20] is described as follows:

$$F = \frac{1}{M N \mu} \sum_{x=1}^{M} \sum_{y=1}^{N} \left( I(x,y) - \mu \right)^{2} \tag{1}$$

where $I(x,y)$ denotes the pixel intensity at the position $(x,y)$ and $\mu$ is the average intensity of the image, which is of size $M \times N$.
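The normalized gray-level variance can be sketched in a few lines. This is an illustrative implementation on plain Python lists, not the code used in the study; the checkerboard target and the wrap-around box blur that stands in for defocus are assumptions made only to have something to compare.

```python
def normalized_variance(gray):
    """Normalized gray-level variance of a 2-D grayscale image (list of rows)."""
    pixels = [float(p) for row in gray for p in row]
    mu = sum(pixels) / len(pixels)
    if mu == 0.0:
        return 0.0  # an all-black image carries no focus information
    return sum((p - mu) ** 2 for p in pixels) / (len(pixels) * mu)


def checkerboard(height, width, block):
    """Synthetic high-contrast target with square blocks of 0 and 255."""
    return [[255.0 * (((x // block) + (y // block)) % 2) for x in range(width)]
            for y in range(height)]


def box_blur_rows(gray, radius):
    """Horizontal moving average with wrap-around, a crude stand-in for defocus."""
    width, window = len(gray[0]), 2 * radius + 1
    return [[sum(row[(x + d) % width] for d in range(-radius, radius + 1)) / window
             for x in range(width)] for row in gray]


sharp = checkerboard(40, 50, 5)
blurred = box_blur_rows(sharp, 2)
print(normalized_variance(sharp) > normalized_variance(blurred))  # True
```

Dividing by the mean intensity makes the score less dependent on overall brightness, which is why the blur (which preserves the mean here) lowers the score purely through loss of contrast. For full 200 × 250 regions an array library would be the practical choice.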

Table 1. Statistical sharpness estimation comparison.

Figure 3. Sharpness to servomotor rotation angle.

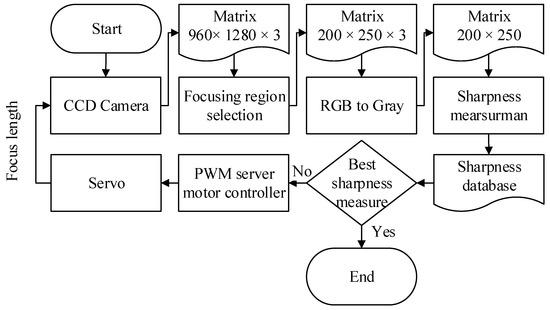

The focal length of the NVGs on the testing platform is adjusted by a servomotor driven by an Arduino Mega 2560 microcontroller board. The focal length can then be tuned until the best sharpness is obtained. The complete passive auto-focusing process is described in Figure 4. First, a single image is captured from the CCD camera, and a region of interest of size 200 × 250 × 3 pixels is selected. The region of interest is converted to grayscale and the corresponding sharpness is calculated. Once the best sharpness is attained, the procedure is finished. Otherwise, the focal length is further modified via the servomotor for the next iteration.

Figure 4. Passive auto-focusing process for the NVGs.
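The capture, crop, grayscale, and score loop of Figure 4 can be sketched as follows. Everything hardware-related is simulated: `fake_capture` is a hypothetical stand-in for moving the servo and grabbing a frame, the BT.601 luma weights are an assumed grayscale conversion, and plain variance is used as a placeholder sharpness measure. The loop simply visits every candidate angle; smarter search strategies only change which angles are visited.

```python
def crop_roi(image, top, left, height, width):
    """Return the height x width region whose upper-left corner is (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]


def to_gray(rgb):
    """Convert rows of (R, G, B) tuples to luma using BT.601 weights (assumed)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for r, g, b in row] for row in rgb]


def variance(gray):
    """Plain gray-level variance, used here as a stand-in sharpness measure."""
    pixels = [p for row in gray for p in row]
    mu = sum(pixels) / len(pixels)
    return sum((p - mu) ** 2 for p in pixels) / len(pixels)


def focus_sweep(capture, angles, roi, sharpness=variance):
    """Visit every angle, score the region of interest, return (best_angle, best_score)."""
    best_angle, best_score = None, float("-inf")
    for angle in angles:
        frame = capture(angle)  # hypothetical: rotate the servo, then grab a frame
        score = sharpness(to_gray(crop_roi(frame, *roi)))
        if score > best_score:
            best_angle, best_score = angle, score
    return best_angle, best_score


def fake_capture(angle, peak=12.0):
    """Simulated camera: checkerboard contrast falls off linearly away from `peak`."""
    contrast = max(0.0, 1.0 - abs(angle - peak) / 60.0)
    dark, bright = 128 - 100 * contrast, 128 + 100 * contrast
    return [[(bright,) * 3 if (x + y) % 2 else (dark,) * 3 for x in range(8)]
            for y in range(8)]


best_angle, best_score = focus_sweep(fake_capture, range(-60, 61, 4), roi=(2, 2, 4, 4))
print(best_angle)  # 12
```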

2.3. Search Approaches

Since the peak point is the optimal focal point, the aim of the search is to find an effective approach to estimate the optimal solution. Because the global search strategy is time-consuming and inefficient, peak search strategies have been successively proposed [22,23]. The hill-climbing search (HCS) [24] and the rule-based algorithm are both popular methods that have been widely applied to peak point searching [25].

Moreover, this paper proposed a gradient-based variable step search method. The best progression step size is estimated by evaluating the variation of the normalized gray-level, which can be formulated as follows:

where denotes as the best angle position, is the progression steps of servo motor, and is a scaling factor. The normalized gray-level variance under the is returned by .
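A minimal sketch of a gradient-based variable step search follows, under stated assumptions: the next step is taken to be a scaling factor times the finite-difference slope of the focus measure between the two most recent servo angles, and the search stops once the step falls below a minimum step size. This particular update, the parameter names, the threshold, and the quadratic test curve are illustrative choices and may differ from Equation (2).

```python
def gradient_variable_step_search(focus, start, first_step, alpha, min_step,
                                  lower=-60.0, upper=60.0, max_iter=50):
    """Climb `focus` with steps proportional to its finite-difference slope.

    Returns (best_angle, number_of_focus_evaluations).
    """
    prev_angle, prev_score = start, focus(start)
    angle = min(upper, max(lower, start + first_step))
    if angle == prev_angle:
        raise ValueError("first step must move the servo inside its range")
    score = focus(angle)
    history = [(prev_angle, prev_score), (angle, score)]
    for _ in range(max_iter):
        slope = (score - prev_score) / (angle - prev_angle)
        step = alpha * slope  # large far from the peak, small near it
        if abs(step) < min_step:
            break  # below the assumed minimum step: stop
        new_angle = min(upper, max(lower, angle + step))
        if new_angle == angle:
            break  # pinned at a travel limit
        prev_angle, prev_score = angle, score
        angle, score = new_angle, focus(new_angle)
        history.append((angle, score))
    best_angle, _ = max(history, key=lambda sample: sample[1])
    return best_angle, len(history)


def synthetic_focus(angle):
    """Assumed smooth sharpness curve with a single peak at 12 degrees."""
    return 100.0 - 0.1 * (angle - 12.0) ** 2


best, evaluations = gradient_variable_step_search(
    synthetic_focus, start=60.0, first_step=-5.0, alpha=2.5, min_step=0.28)
print(round(best, 1), evaluations)
```

On this synthetic curve the search ends close to the 12° peak after far fewer focus evaluations than a degree-by-degree sweep of the ±60° range would need. A real sharpness curve is noisier, so the scaling factor would have to be tuned to avoid overshooting.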

To improve the efficiency of auto-focusing, different search methods were compared. First, the focus measure at different rotation angles was collected, where the focus measure is evaluated by Equation (1). This step constructs a ground-truth database for the subsequent comparisons. Second, five search algorithms (global, hill-climbing, binary, rule-based, and gradient-based variable step search) were applied to determine their number of iterations and estimation accuracy. The results are summarized in Table 2. The hill-climbing search method is not able to find the optimal solution. The accuracy of the binary search method is lower than that of the gradient-based variable step search method. For the rule-based search method, the number of iterations is higher than for the other search methods. Considering the trade-off between iterations and accuracy, it can be concluded that the gradient-based variable step search method is the best candidate.

Table 2. Comparison of studied search algorithms.
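The contrast between the global search and a basic hill-climbing search can be illustrated on a synthetic sharpness curve. The curve below, with a small false bump ahead of the true peak, is an assumption chosen to show why hill climbing is sensitive to the curve shape; it is not the measured data behind Table 2, and the iteration counts it prints are not those of the study.

```python
import math


def global_search(focus, angles):
    """Evaluate every candidate angle and return (best_angle, evaluations)."""
    scores = [focus(a) for a in angles]
    best = max(range(len(angles)), key=scores.__getitem__)
    return angles[best], len(angles)


def hill_climb(focus, angles, start_index=0):
    """Step forward while sharpness keeps rising and stop at the first drop."""
    i = start_index
    best_score = focus(angles[i])
    evaluations = 1
    while i + 1 < len(angles):
        candidate = focus(angles[i + 1])
        evaluations += 1
        if candidate <= best_score:
            break  # first decrease is taken as the peak
        i, best_score = i + 1, candidate
    return angles[i], evaluations


def bumpy_focus(angle):
    """Assumed curve: true peak at 12 degrees plus a small false bump at -40 degrees."""
    main = 100.0 * math.exp(-((angle - 12.0) / 15.0) ** 2)
    bump = 8.0 * math.exp(-((angle + 40.0) / 4.0) ** 2)
    return main + bump


angles = list(range(-60, 61))
print(global_search(bumpy_focus, angles))  # (12, 121)
print(hill_climb(bumpy_focus, angles))     # (-40, 22)
```

The global search pays for its reliability with one evaluation per candidate angle, while hill climbing stops early on the false bump. This is the trade-off between iteration count and accuracy that the comparison addresses.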

2.4. Honeycomb Defect Detection Procedure

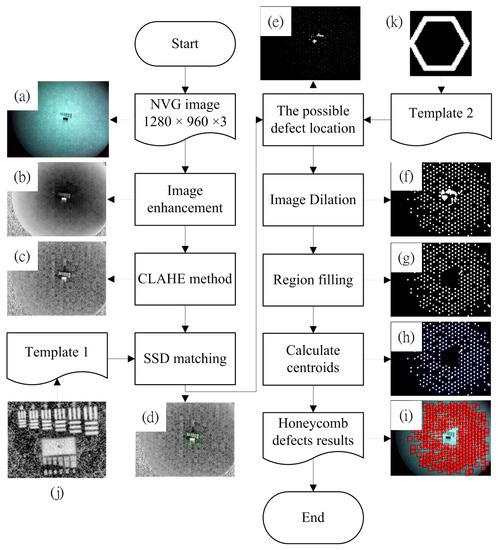

After completing the auto-focusing process given in Section 2.2, automatic honeycomb defect detection can be performed following the schematic diagram shown in Figure 5. The step-by-step procedure is as follows:

Figure 5. (a–k) Automatic honeycomb defect detection process.

1. A color image of dimensions 960 × 1280 × 3 pixels is obtained via the CCD camera, as shown in Figure 5a.

2. Since the green color array component of the RGB color system shows the most obvious honeycomb defects and the blue color array component the least, the two arrays are extracted from the color image and the green array is subtracted from the blue array, which also reduces the processing dimensions. This suppresses the non-honeycomb defect region components and accentuates the honeycomb defect region information. The obtained gray-level image is shown in Figure 5b.

3. The contrast limited adaptive histogram equalization (CLAHE) method [26] is introduced to process the correlation between each pixel location and the other gray-level pixels. An increased contrast ratio is achieved and the defect regions are accentuated, as shown in Figure 5c.

4. The sum square difference (SSD) method [27] is used to detect the position of the mark that is used in the focusing process, using the template image shown in Figure 5j. The mark area is highlighted by the rectangular region shown in Figure 5d. The matching measure SSD is defined as follows:

   $$\mathrm{SSD}(u,v) = \sum_{x=1}^{M} \sum_{y=1}^{N} \left( I(u+x, v+y) - T(x,y) \right)^{2} \tag{3}$$

   where $I$ is the search region (the so-called primary image) at the position $(u+x, v+y)$ and $T$ is the template image at the position $(x,y)$, which is of size $M \times N$.

5. To find the location of the honeycomb defects, the binary template in Figure 5k, of size 61 × 58 pixels, is shifted across the image obtained in step 3. During this operation, each sub-image is binarized using its average intensity as the threshold. If the number of pixels having the same value as the corresponding pixels of the binary template (“template 2”) is greater than 55% of the total, the sub-image is set to white; otherwise, it is set to black. The white area indicates a possible location of a honeycomb defect, as shown in Figure 5e. Hence, this step produces a binary image in which the white points mark locations that are similar to honeycomb defects. The process of calculating the possible defect location image is summarized in Table 3.

Table 3. The process for defect location detection.

6. White color expansion (dilation) is performed on the binarized image resulting from step 5 to group neighboring honeycomb defects, as shown in Figure 5f.

7. The mark region identified in step 4 is removed before further processing. After the removal, the image becomes Figure 5g.

8. For honeycomb defect positioning, the centroid of each white-colored dot indicates the upper left corner of a honeycomb defect. The result is shown in Figure 5h.
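The channel difference, template comparison, and centroid steps above can be sketched on toy data as follows. This is an illustrative reduction, not the implementation used in the study: it omits the CLAHE, mark removal, and dilation stages, uses a 3 × 3 ring as a hypothetical stand-in for the 61 × 58 binary template, and takes the absolute green/blue difference (an assumed sign convention).

```python
def channel_difference(rgb):
    """Step 2: gray image from the green/blue difference (absolute value is assumed)."""
    return [[abs(g - b) for _, g, b in row] for row in rgb]


def template_vote(gray, template, ratio=0.55):
    """Step 5: mark window origins whose mean-thresholded pixels agree with the template."""
    th, tw = len(template), len(template[0])
    rows, cols = len(gray) - th + 1, len(gray[0]) - tw + 1
    votes = [[0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            window = [gray[y + i][x:x + tw] for i in range(th)]
            mean = sum(map(sum, window)) / (th * tw)
            agree = sum((window[i][j] > mean) == bool(template[i][j])
                        for i in range(th) for j in range(tw))
            if agree > ratio * th * tw:
                votes[y][x] = 1  # "white": window resembles the template
    return votes


def blob_centroids(binary):
    """Step 8: (row, col) centroid of every 4-connected white blob."""
    seen, centroids = set(), []
    height, width = len(binary), len(binary[0])
    for y in range(height):
        for x in range(width):
            if not binary[y][x] or (y, x) in seen:
                continue
            stack, pixels = [(y, x)], []
            seen.add((y, x))
            while stack:
                cy, cx = stack.pop()
                pixels.append((cy, cx))
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and binary[ny][nx] and (ny, nx) not in seen):
                        seen.add((ny, nx))
                        stack.append((ny, nx))
            centroids.append((sum(p[0] for p in pixels) / len(pixels),
                              sum(p[1] for p in pixels) / len(pixels)))
    return centroids


# Toy data: a 3 x 3 bright ring with a dark centre stands in for one honeycomb cell.
TEMPLATE = [[1, 1, 1], [1, 0, 1], [1, 1, 1]]
image = [[(30, 60, 10) for _ in range(12)] for _ in range(10)]  # flat background
for top, left in ((1, 1), (6, 8)):
    for i in range(3):
        for j in range(3):
            if TEMPLATE[i][j]:
                image[top + i][left + j] = (30, 210, 10)  # pattern lives in green

defects = blob_centroids(template_vote(channel_difference(image), TEMPLATE))
print(len(defects), defects)  # 2 [(1.0, 1.0), (6.0, 8.0)]
```

Thresholding each window at its own mean makes the comparison insensitive to local brightness, so a flat background never exceeds the 55% agreement level. Each reported centroid is the upper-left corner of a matching window, consistent with the positioning convention of step 8.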

Notes for Table 2: Improvement percentage: ; Rule-based search parameters (Initial = 12, Coarse = 3, Mid = 2, Fine = 1); Gradient-based variable step search parameter (, Initial , Initial ); The iteration stop condition is: , where is the minimal step size of the servo.

3. Results and Discussion

The results in Table 2 show that the global search is able to find the correct peak point. However, it requires 110 iterations and is therefore time-consuming. The hill-climbing search is sensitive to the shape of the sharpness curve, so its robustness is insufficient. The binary search converges successfully, but only to a sub-optimal solution. For the rule-based search, the number of iterations is lower than that of the global search but higher than that of the binary search. This method finds the correct solution when the initial parameter is 12, the coarse parameter is 3, the mid parameter is 2, and the fine parameter is 1. However, the choice of parameters depends heavily on the technicians’ experience. For detailed parameter definitions, please refer to [25].

In this study we developed a gradient-based variable step search method. By setting and an initial position to be 0.28° and 60°, respectively, the correct peak point can be located. Moreover, the number of iterations is lower than with the rule-based search. Taking all of the experiments together, Table 2 shows that the best estimate obtained by the proposed gradient-based search is better than the best one obtained by the rule-based approach, and a high accuracy (i.e., L1) can be guaranteed as well.

The defect detection experiment was carried out using 30 NVG samples that had been pre-identified by well-trained technicians. Among the test samples, fifteen have honeycomb defects and the others are defect-free. The gradient-based peak search method (2) is applied in this approach. The normalized gray-level variance sharpness measure was computed on an Intel Core i7 3770 CPU with 8 GB of memory running 64-bit Windows 8.1. The system run-time for auto-focusing is less than 15 s, which includes the time required for image capture, sharpness calculation, and servomotor control.

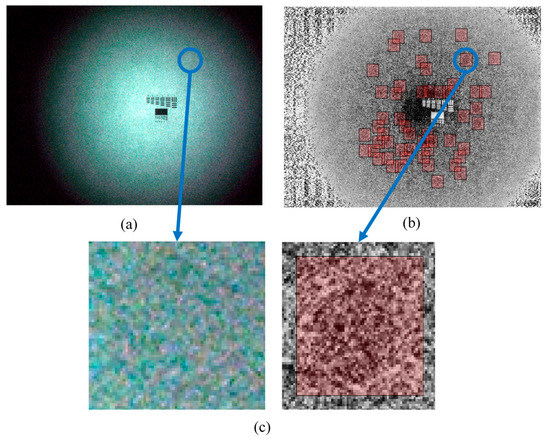

The detailed inspection results of the 30 NVG samples obtained with the proposed approach are listed in Table 4. The results show that the honeycomb defect detection system is able to distinguish defective from non-defective NVGs. A blurred honeycomb defect such as the one shown in Figure 6a–c is very difficult to identify, even for professional technicians. However, Figure 6a–c shows that this issue can be solved with the aid of the proposed method. Using the same hardware configuration as for auto-focusing, the entire image processing time for defect identification was within 45 s using the parallel computing toolbox of MATLAB® Version 2016a (The Mathworks, Natick, MA, USA). The algorithm can be further implemented in C++ to reduce the processing time to 5 s. The current manual procedure takes at least 30 min. The experiments therefore verify that the developed hardware configuration and algorithms can achieve precise and efficient inspections.
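As a rough worked estimate of the time saving, assume that the auto-focusing and defect identification stages run one after the other (an assumption; the text reports the two stages separately) and take the upper bounds quoted above:

$$t_{\text{auto}} \le 15\,\text{s} + 45\,\text{s} = 60\,\text{s}, \qquad \frac{t_{\text{manual}}}{t_{\text{auto}}} \ge \frac{30 \times 60\,\text{s}}{60\,\text{s}} = 30$$

With the projected 5 s C++ implementation, the same estimate gives $15 + 5 = 20$ s, that is, a ratio of at least 90 relative to the manual procedure.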

Table 4. Experiment results for honeycomb defect detection.

Figure 6. Image processing results for a slight honeycomb defect. (a) The slight honeycomb defect. (b) The slight honeycomb defect shown in the CLAHE image. (c) Zoom-in of the slight honeycomb defect.

4. Conclusions

This paper is concerned with the inspection of the aviator’s night vision imaging systems AN/AVS-6(V)1 and AN/AVS-6(V)2. Twenty-eight types of sharpness measures were investigated, and a gradient-based variable step search was used to achieve a fast auto-focusing system. Experimental results indicate that using the normalized gray-level variance operator together with the gradient-based peak search method ensures both the efficiency and the accuracy of the inspection. Moreover, an automatic honeycomb defect detection algorithm was proposed to assess the defect level of the night vision instrument and was verified by experiments. With the proposed approach, the inspection time relative to manual calibration can be significantly reduced. Meanwhile, the variance due to subjective personal judgment of the in-focus state, as well as other human errors, can be avoided. Therefore, high maintenance quality for NVGs can be ensured in a systematic, scientific, and objective way.

Acknowledgments

The authors would like to thank T.C. Tseng for his assistance on Aviator’s Night Vision Goggles Calibration and C. L. Chen for his valuable suggestions on image processing. This work was partly funded by the Ministry of Science and Technology (MOST) under the grant no. MOST 105-2218-E-006-025 and MOST 105-2218-E-006-015.

Author Contributions

B.-L.J. and C.-C.P. conceived and designed the experiments; B.-L.J. performed the experiments; B.-L.J. and C.-C.P. analyzed the data; C.-C.P. contributed analysis tools; B.-L.J. wrote the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Harrington, L.K.; McIntire, J.P.; Hopper, D.G. Assessing the binocular advantage in aided vision. Aviat. Space Environ. Med. 2014, 85, 930–939.

2. Biberman, L.M.; Alluisi, E.A. Pilot Errors Involving Head-Up Displays (HUDS), Helmet-Mounted Displays (HMDS), and Night Vision Goggles (NVGS); DTIC Document; DTIC: Fort Belvoir, VA, USA, 1992.

3. Sabatini, R.; Richardson, M.; Cantiello, M.; Toscano, M.; Fiorini, P.; Zammit-Mangion, D.; Jia, H. Night vision imaging systems development, integration and verification in military fighter aircraft. J. Aeronaut. Aerosp. Eng. 2013, 2, 1–12.

4. SK, V.; Joshi, V. NVG research, training and operations in field: Bridging the quintessential gap. Ind. J. Aerosp. Med. 2012, 56, 1.

5. Antonio, J.C.; Joralmon, D.Q.; Fiedler, G.M.; Berkley, W.E. Aviator’s Night Vision Imaging System Preflight Adjustment/Assessment Procedures; DTIC Document; DTIC: Fort Belvoir, VA, USA, 1994.

6. Lapin, L. How to Get Anything on Anybody; Intelligence Here, Ltd.: Shasta, CA, USA, 2003.

7. United States Department of the Army. Operator’s Manual: Aviator’s Night Vision Imaging System AN/AVS-6(V)1, (NSN 5855-01-138-4749) and AN/AVS-6(V)2, (NSN 5855-01-138-4748); Department of the Army: Washington, DC, USA, 1989.

8. Macuda, T.; Allison, R.S.; Thomas, P.; Truong, L.; Tang, D.; Craig, G.; Jennings, S. Comparison of three night vision intensification tube technologies on resolution acuity: Results from grating and Hoffman ANV-126 tasks. In Proceedings of the Helmet- and Head-Mounted Displays X: Technologies and Applications, Orlando, FL, USA, 28 March 2005; pp. 32–39.

9. Pinkus, A.; Task, H.L. Night Vision Goggles Objectives Lens Focusing Methodology; DTIC Document; DTIC: Fort Belvoir, VA, USA, 2000.

10. Craig, G.L.; Erdos, R.; Jennings, S.; Brulotte, M.; Ramphal, G.; Sabatini, R.; Dumoulin, J.; Petipas, D.; Leger, A.; Krijn, R.; et al. Flight Testing of Night Vision Systems in Rotorcraft; North Atlantic Treaty Organisation: Washington, DC, USA, 2007.

11. Zeng, D.; Benilov, A.; Bunin, B.; Martini, R. Long-wavelength IR imaging system to scuba diver detection in three dimensions. IEEE Sens. J. 2010, 10, 760–764.

12. Bernal, O.D.; Zabit, U.; Bosch, T.M. Robust method of stabilization of optical feedback regime by using adaptive optics for a self-mixing micro-interferometer laser displacement sensor. IEEE J. Sel. Top. Quantum Electron. 2015, 21, 336–343.

13. Chen, C.-Y.; Hwang, R.-C.; Chen, Y.-J. A passive auto-focus camera control system. Appl. Soft Comput. 2010, 10, 296–303.

14. Gamadia, M.; Kehtarnavaz, N. A filter-switching auto-focus framework for consumer camera imaging systems. IEEE Trans. Consum. Electron. 2012, 58, 228–236.

15. Yousefi, S.; Rahman, M.; Kehtarnavaz, N. A new auto-focus sharpness function for digital and smart-phone cameras. IEEE Trans. Consum. Electron. 2011, 57, 1003–1009.

16. Florea, C.; Florea, L. Parametric logarithmic type image processing for contrast based auto-focus in extreme lighting conditions. Int. J. Appl. Math. Comput. Sci. 2013, 23.

17. Gamadia, M.; Kehtarnavaz, N.; Roberts-Hoffman, K. Low-light auto-focus enhancement for digital and cell-phone camera image pipelines. IEEE Trans. Consum. Electron. 2007, 53, 249–257.

18. Chrzanowski, K. Review of night vision metrology. Opto-Electron. Rev. 2015, 23, 149–164.

19. Zhang, X.; Liu, Z.; Jiang, M.; Chang, M. Fast and accurate auto-focusing algorithm based on the combination of depth from focus and improved depth from defocus. Opt. Express 2014, 22, 31237–31247.

20. Pertuz, S.; Puig, D.; Garcia, M.A. Analysis of focus measure operators for shape-from-focus. Pattern Recognit. 2013, 46, 1415–1432.

21. Estrada, A. Feasibility of Using the AN/PVS-14 Monocular Night Vision Device for Pilotage; DTIC Document; DTIC: Fort Belvoir, VA, USA, 2000.

22. Tsai, D.C.; Chen, H.H. Reciprocal focus profile. IEEE Trans. Image Process. 2012, 21, 459–468.

23. Xu, X.; Zhang, X.; Fu, H.; Chen, L.; Zhang, H.; Fu, X. Robust passive autofocus system for mobile phone camera applications. Comput. Electr. Eng. 2014, 40, 1353–1362.

24. Kang-Sun, C.; Sung-Jea, K. New Autofocusing Technique Using the Frequency Selective Weighted Median Filter for Video Cameras. In Proceedings of the 1999 ICCE International Conference on Consumer Electronics, Los Angeles, CA, USA, 22–24 June 1999; pp. 160–161.

25. Kehtarnavaz, N.; Oh, H.J. Development and real-time implementation of a rule-based auto-focus algorithm. Real-Time Imaging 2003, 9, 197–203.

26. Zuiderveld, K. Contrast Limited Adaptive Histogram Equalization. In Graphics Gems IV; Paul, S.H., Ed.; Academic Press Professional, Inc.: Cambridge, MA, USA, 1994; pp. 474–485.

27. Essannouni, F.; Thami, R.O.H.; Aboutajdine, D.; Salam, A. Simple noncircular correlation method for exhaustive sum square difference matching. Opt. Eng. 2007, 46, 107004.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).