Ground Penetrating Radar as a Contextual Sensor for Multi-Sensor Radiological Characterisation

Abstract

1. Introduction

2. Integration of Radiological and Contextual Sensors

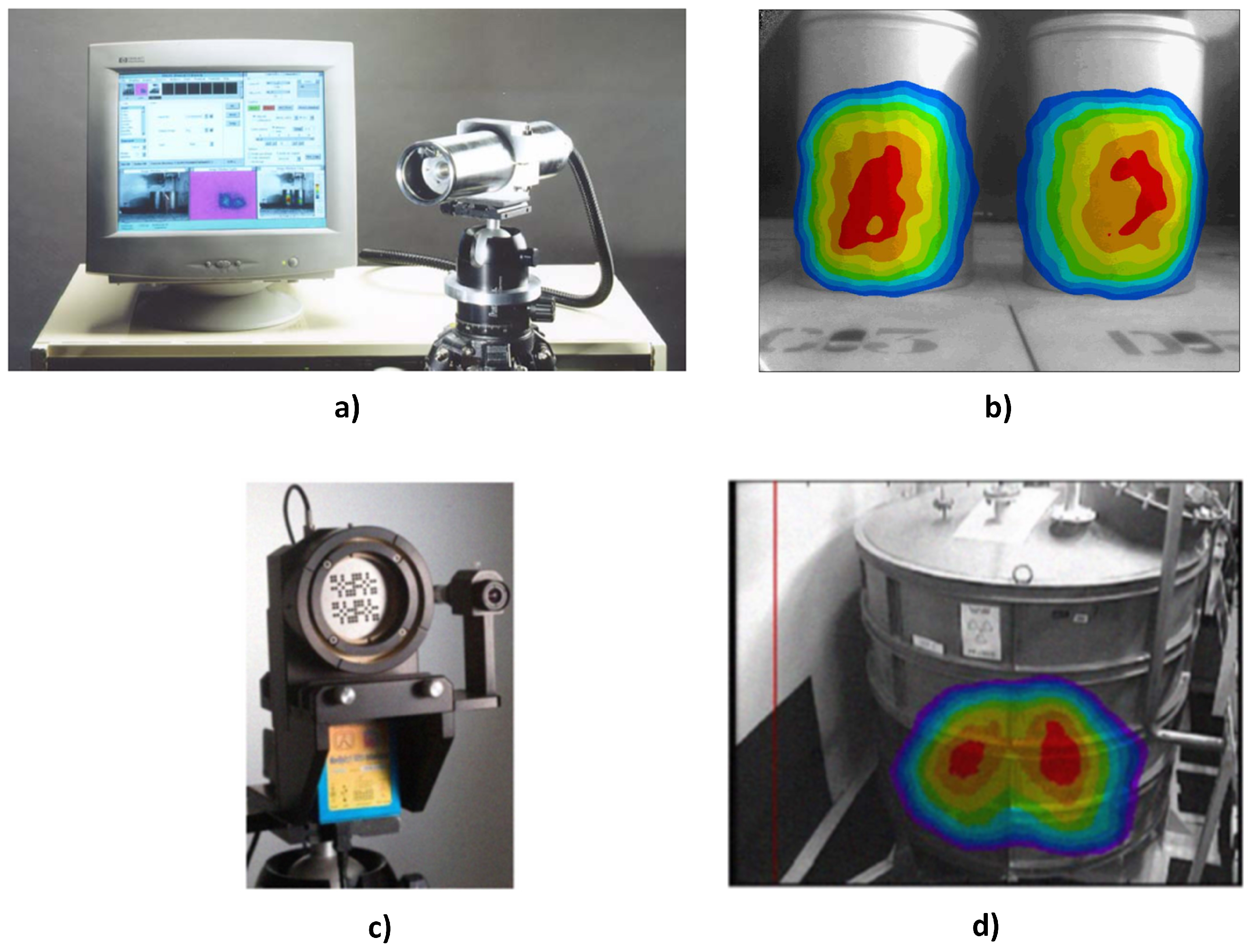

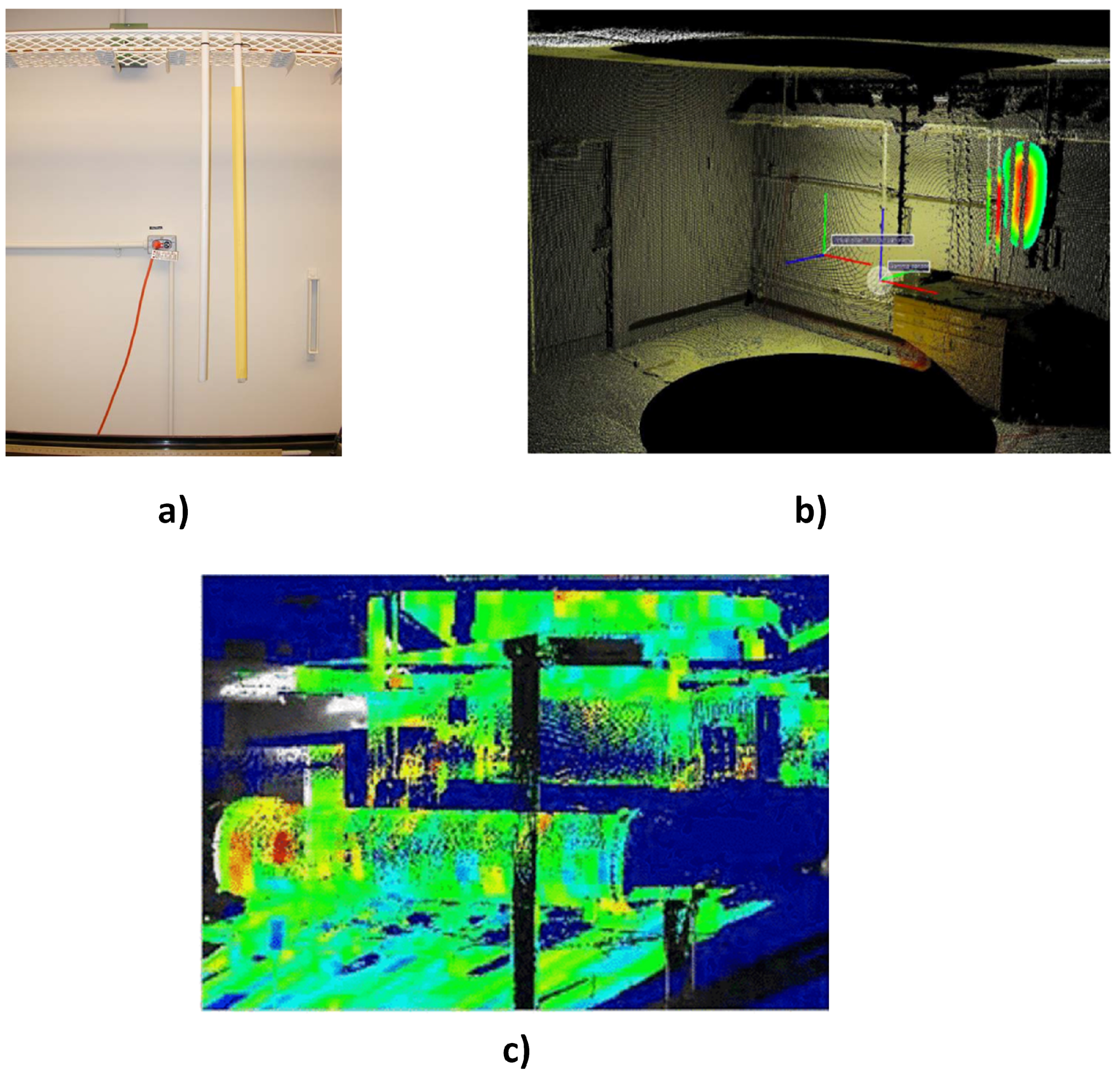

2.1. Passive Integration of Radiological and Contextual Sensors

2.2. Active Integration of Radiological and Contextual Sensors

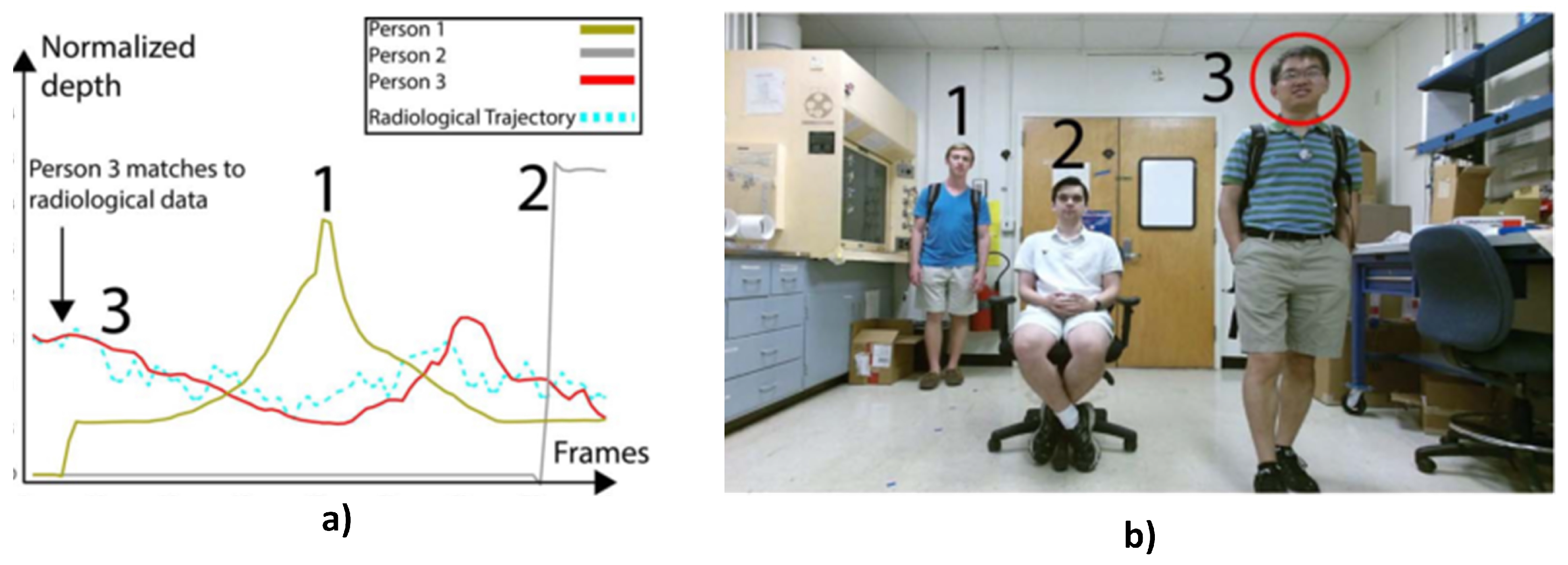

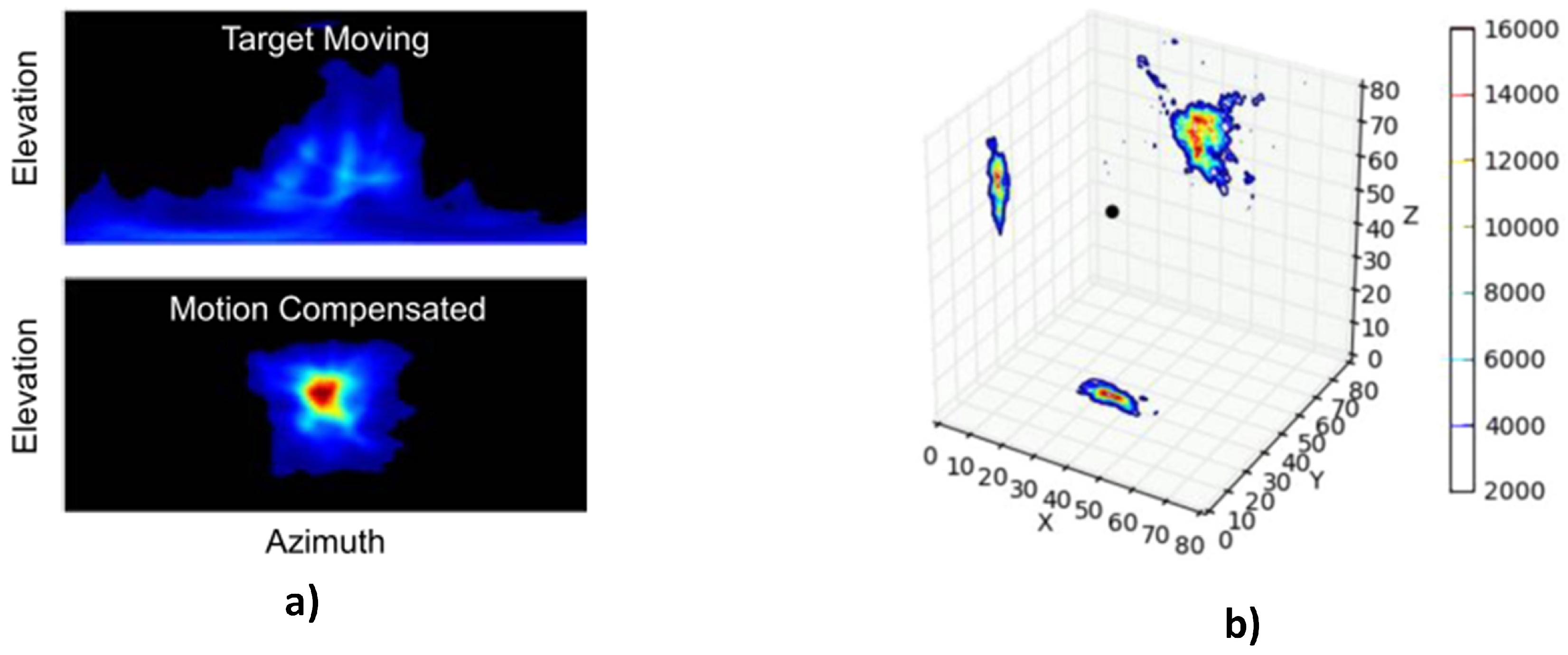

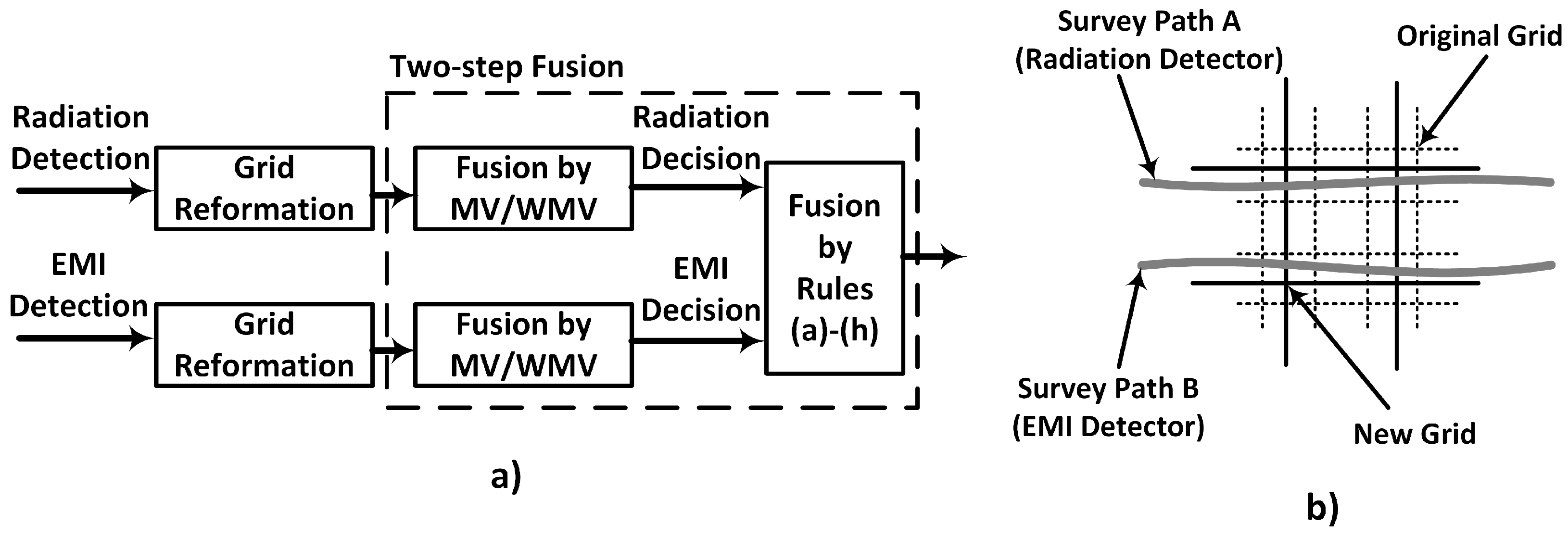

2.2.1. Low Level Fusion of Radiological and Contextual Sensors
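Low-level fusion combines the sensors' data directly, for example pixel by pixel, before any features or decisions are extracted. The sketch below shows one simple rule of this kind: a weighted average of the normalised pixel values of a radiation intensity image and a contextual image. It assumes both images have already been co-registered onto the same pixel grid, which in practice is usually the hardest step. The function names and the weighting parameter `alpha` are hypothetical and are not taken from the article.

```python
from typing import List

Image = List[List[float]]


def normalise(img: Image) -> Image:
    """Scale an image to [0, 1]; a constant image maps to all zeros."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    span = hi - lo
    if span == 0:
        return [[0.0 for _ in row] for row in img]
    return [[(v - lo) / span for v in row] for row in img]


def pixel_level_fusion(context: Image, radiation: Image, alpha: float = 0.5) -> Image:
    """Weighted-average fusion of two co-registered images of equal size.

    alpha (hypothetical parameter) is the weight given to the radiation image;
    1 - alpha is the weight given to the contextual image.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if len(context) != len(radiation) or any(
        len(a) != len(b) for a, b in zip(context, radiation)
    ):
        raise ValueError("images must be co-registered and the same size")
    c, r = normalise(context), normalise(radiation)
    return [
        [(1.0 - alpha) * cv + alpha * rv for cv, rv in zip(crow, rrow)]
        for crow, rrow in zip(c, r)
    ]
```

For example, `pixel_level_fusion([[0, 10], [20, 40]], [[0, 0], [0, 5]])` returns `[[0.0, 0.125], [0.25, 1.0]]`. A weighted average is only the simplest pixel-level rule. Transform-domain rules (for instance, wavelet-based fusion) are common alternatives when the two images differ greatly in resolution.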

2.2.2. Higher Level Fusion of Radiological and Contextual Sensors

3. GPR as a Contextual Sensor for Radiological Characterisation of Nuclear Sites

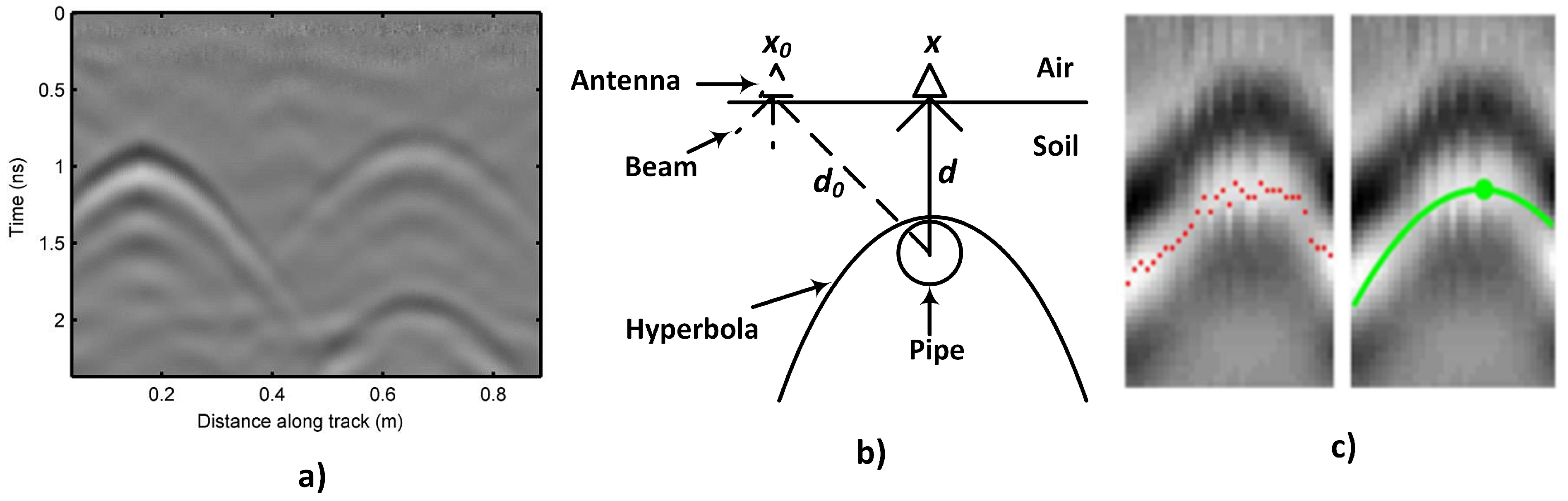

3.1. Non-Destructive Investigation of Underground Pipes

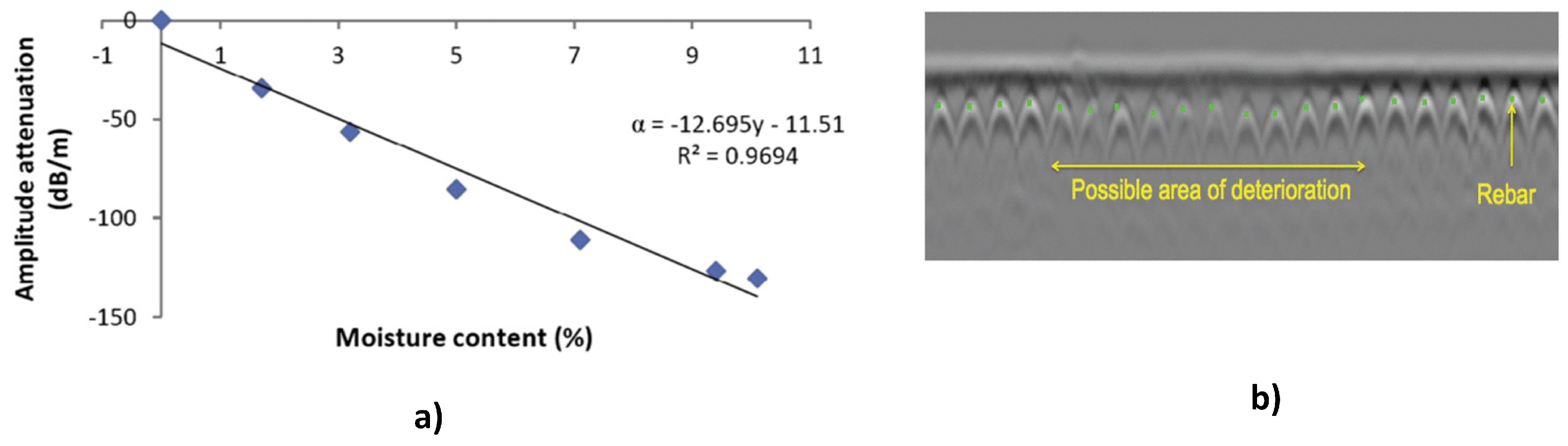
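When the survey line crosses a buried pipe at right angles, the pipe usually appears in a GPR B-scan as a hyperbola. This happens because the two-way travel time to the pipe is shortest directly above it and increases as the antenna moves away. The sketch below models that signature under stated assumptions: a monostatic antenna at the surface, a homogeneous, low-loss, non-magnetic medium of relative permittivity eps_r (so the wave velocity is v = c / sqrt(eps_r)), and a circular pipe whose axis lies at horizontal position x0 and depth z_c. The function names and the numbers in the example are hypothetical and are not taken from the article.

```python
import math

C0 = 299_792_458.0  # speed of light in vacuum, m/s


def wave_velocity(eps_r: float) -> float:
    """Velocity in a low-loss, non-magnetic medium: v = c / sqrt(eps_r)."""
    return C0 / math.sqrt(eps_r)


def two_way_time(x: float, x0: float, z_c: float, radius: float, v: float) -> float:
    """Two-way travel time (s) from a surface antenna at x to the nearest point
    on a pipe of the given radius whose axis is at (x0, depth z_c).

    Sweeping x traces the hyperbola seen in the B-scan.
    """
    return 2.0 * (math.hypot(x - x0, z_c) - radius) / v


def depth_to_top(t_apex: float, v: float) -> float:
    """Depth (m) to the top of the pipe from the travel time at the hyperbola apex."""
    return v * t_apex / 2.0
```

For instance, with eps_r = 9 (v of about 0.1 m/ns), a pipe of radius 0.05 m with its axis at x0 = 1.0 m and z_c = 0.55 m gives an apex travel time of about 10 ns, from which `depth_to_top` recovers 0.5 m. In practice the velocity is unknown, so it is usually estimated by fitting this hyperbola model to the measured reflection.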

3.2. Non-Destructive Investigation of Concrete Structures

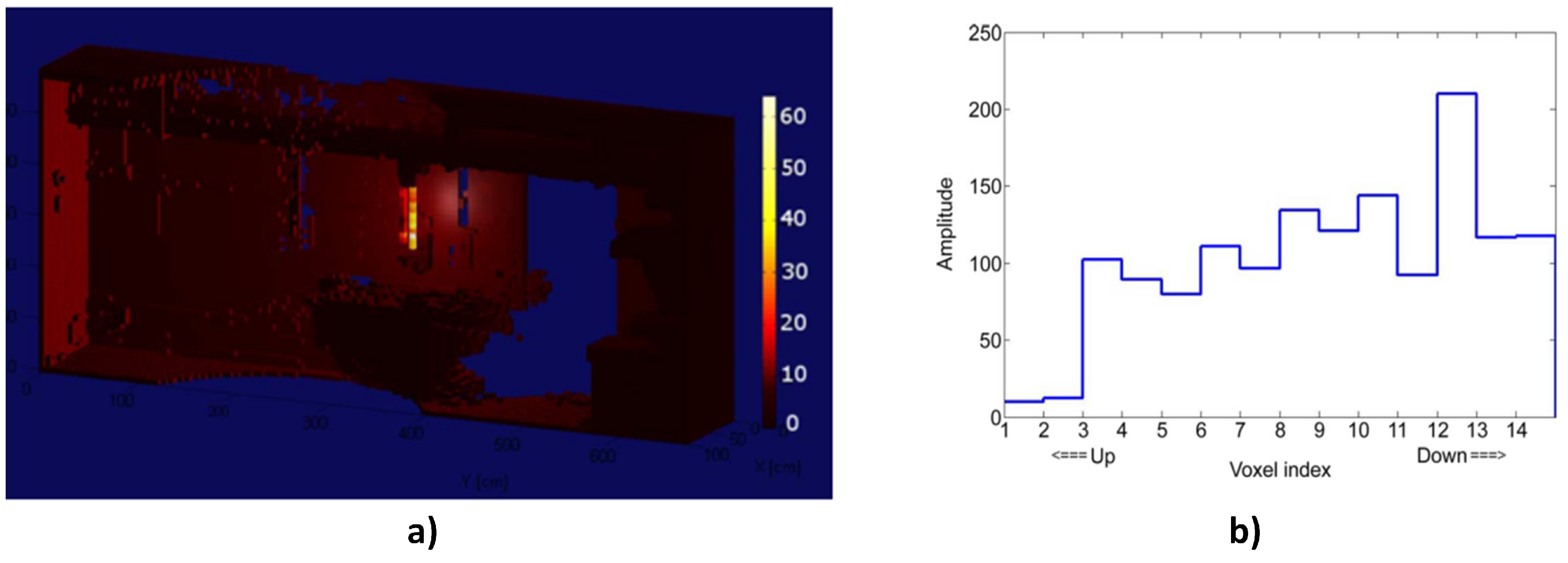

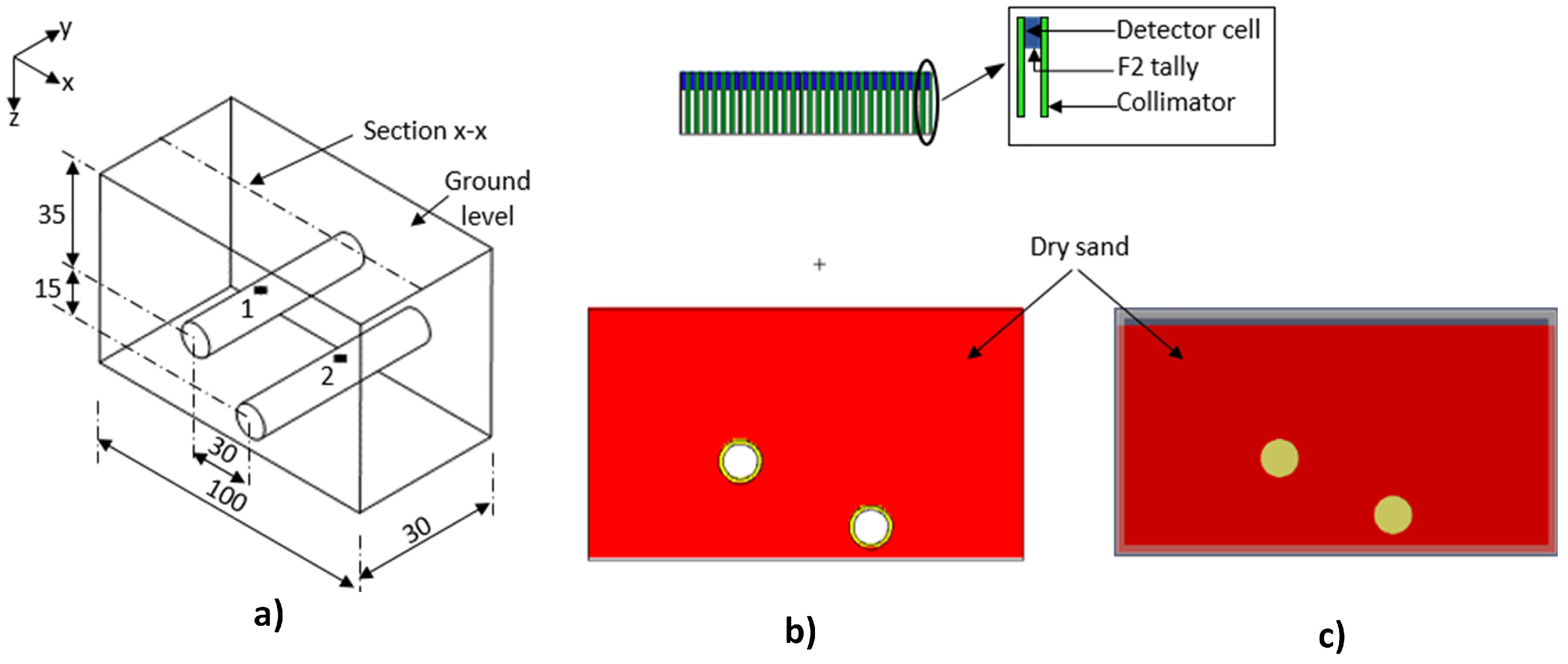

4. Combined GPR and Radiation Imaging for 3D Localisation of Radioactive Contamination in Underground Pipes

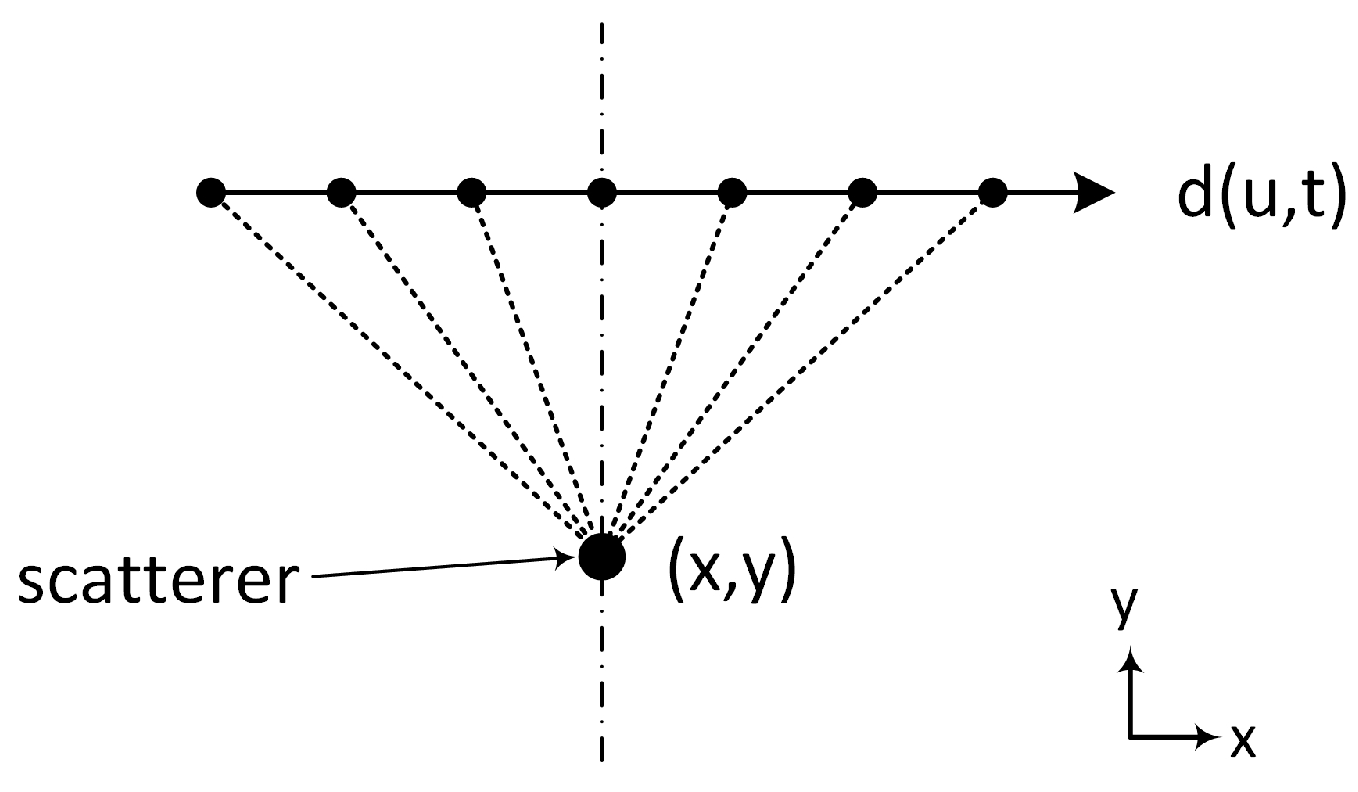

4.1. Methodology

4.1.1. Matched Filter Synthetic Aperture Radar Imaging

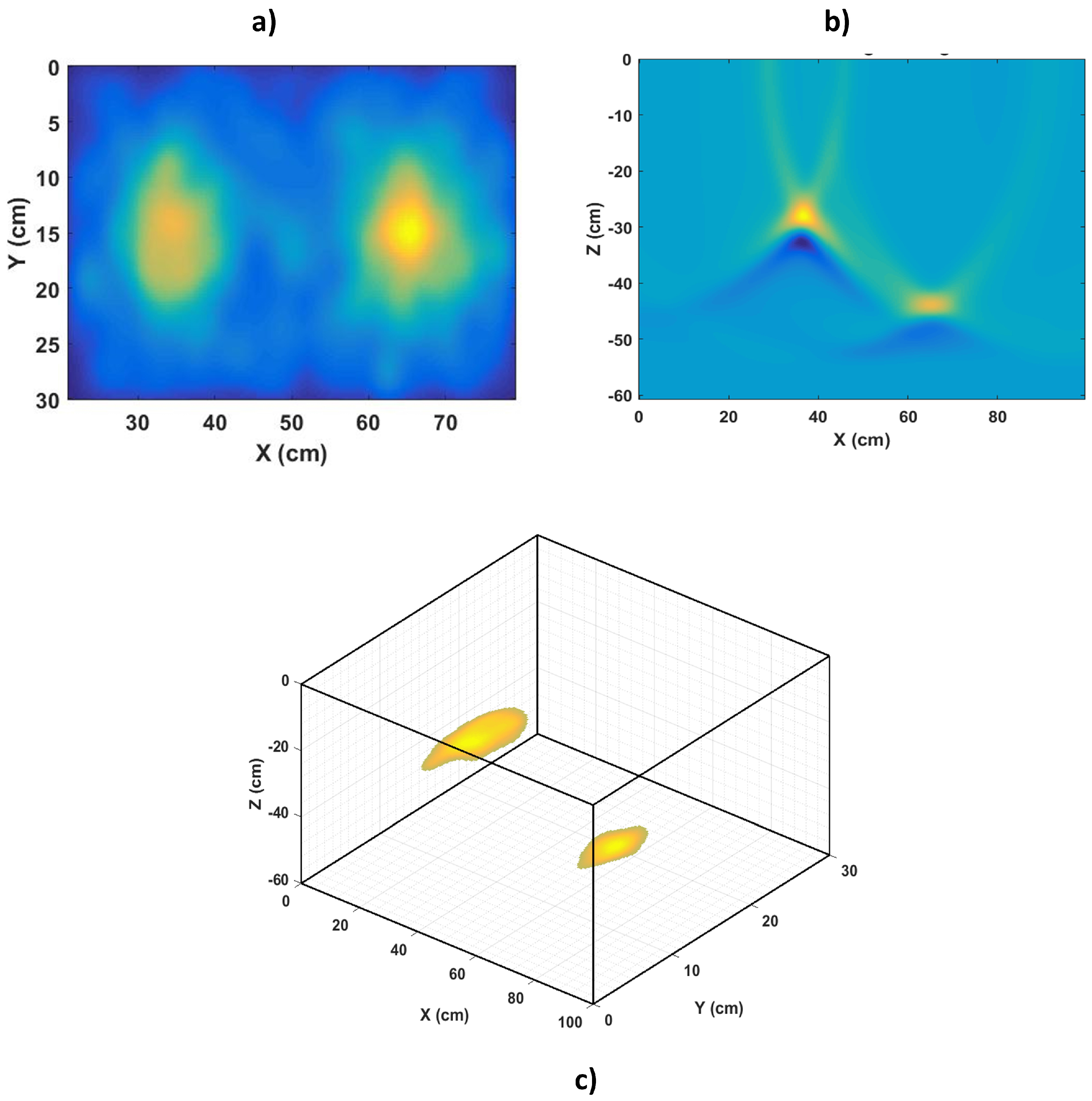
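Matched-filter imaging forms each image pixel by correlating the recorded data with the response that a point scatterer at that pixel would be expected to produce, then summing coherently over antenna positions and frequencies. Only the true scatterer location adds up fully in phase. The following is a minimal sketch of that general principle for a monostatic stepped-frequency GPR. It assumes a homogeneous lossless medium of known velocity, ideal noise-free echoes from a single point scatterer, and hypothetical survey and grid parameters. It is not the authors' implementation, and real data would also require antenna, coupling and attenuation effects to be handled.

```python
import cmath
import math
from typing import List, Sequence, Tuple

Data = List[List[complex]]


def delay(xa: float, x: float, z: float, v: float) -> float:
    """Two-way delay (s) from a surface antenna at xa to a point at (x, z)."""
    return 2.0 * math.hypot(xa - x, z) / v


def simulate_echoes(antenna_x: Sequence[float], freqs: Sequence[float],
                    target: Tuple[float, float], v: float) -> Data:
    """Ideal returns S(x_n, f_k) = exp(-j 2 pi f_k tau_n) from one point scatterer."""
    tx, tz = target
    return [[cmath.exp(-2j * math.pi * f * delay(xa, tx, tz, v)) for f in freqs]
            for xa in antenna_x]


def matched_filter_image(data: Data, antenna_x: Sequence[float], freqs: Sequence[float],
                         grid_x: Sequence[float], grid_z: Sequence[float],
                         v: float) -> List[List[float]]:
    """I(x, z) = |sum_n sum_k S(x_n, f_k) exp(+j 2 pi f_k tau_n(x, z))|.

    Multiplying by exp(+j ...) correlates the data with the conjugate of the
    expected point-scatterer response, i.e. a matched filter at every pixel.
    """
    image = []
    for z in grid_z:
        row = []
        for x in grid_x:
            acc = 0j
            for xa, s_row in zip(antenna_x, data):
                tau = delay(xa, x, z, v)
                for f, s in zip(freqs, s_row):
                    acc += s * cmath.exp(2j * math.pi * f * tau)
            row.append(abs(acc))
        image.append(row)
    return image


if __name__ == "__main__":
    v = 299_792_458.0 / 3.0                        # assumed medium with eps_r = 9
    antenna_x = [i * 0.05 for i in range(21)]      # 0 to 1 m survey line
    freqs = [0.5e9 + k * 50e6 for k in range(31)]  # 0.5 to 2.0 GHz
    grid_x = [i * 0.05 for i in range(21)]
    grid_z = [0.1 + i * 0.05 for i in range(9)]    # 0.1 to 0.5 m depth
    target = (grid_x[10], grid_z[4])               # point scatterer at about (0.5 m, 0.3 m)
    img = matched_filter_image(simulate_echoes(antenna_x, freqs, target, v),
                               antenna_x, freqs, grid_x, grid_z, v)
    peak = max((val, iz, ix) for iz, row in enumerate(img) for ix, val in enumerate(row))
    print(f"peak at x = {grid_x[peak[2]]:.2f} m, z = {grid_z[peak[1]]:.2f} m")
```

Running the script prints `peak at x = 0.50 m, z = 0.30 m`, the simulated scatterer position. At that pixel, all 21 × 31 terms add in phase. This direct form costs one sum over all antenna positions and frequencies per pixel, which is simple to follow but slow for large grids. Faster frequency-domain formulations exist for uniform sampling.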

4.2. Simulation Results

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GPR | Ground-penetrating radar |
| MSDF | Multi-sensor data fusion |


© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ukaegbu, I.K.; Gamage, K.A.A. Ground Penetrating Radar as a Contextual Sensor for Multi-Sensor Radiological Characterisation. Sensors 2017, 17, 790. https://doi.org/10.3390/s17040790
