Automatic Hotspot and Sun Glint Detection in UAV Multispectral Images

Abstract

1. Introduction

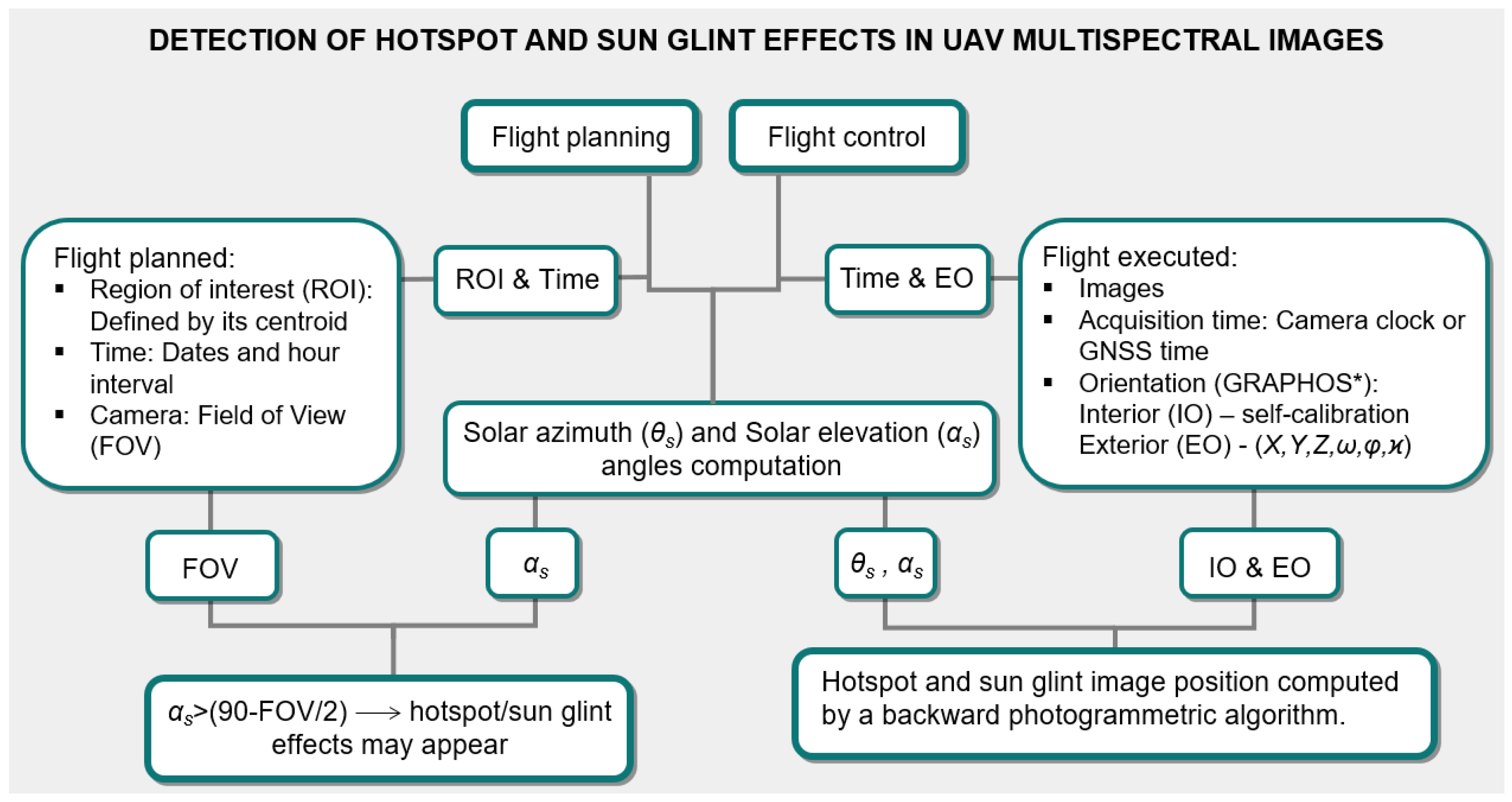

2. Methodology

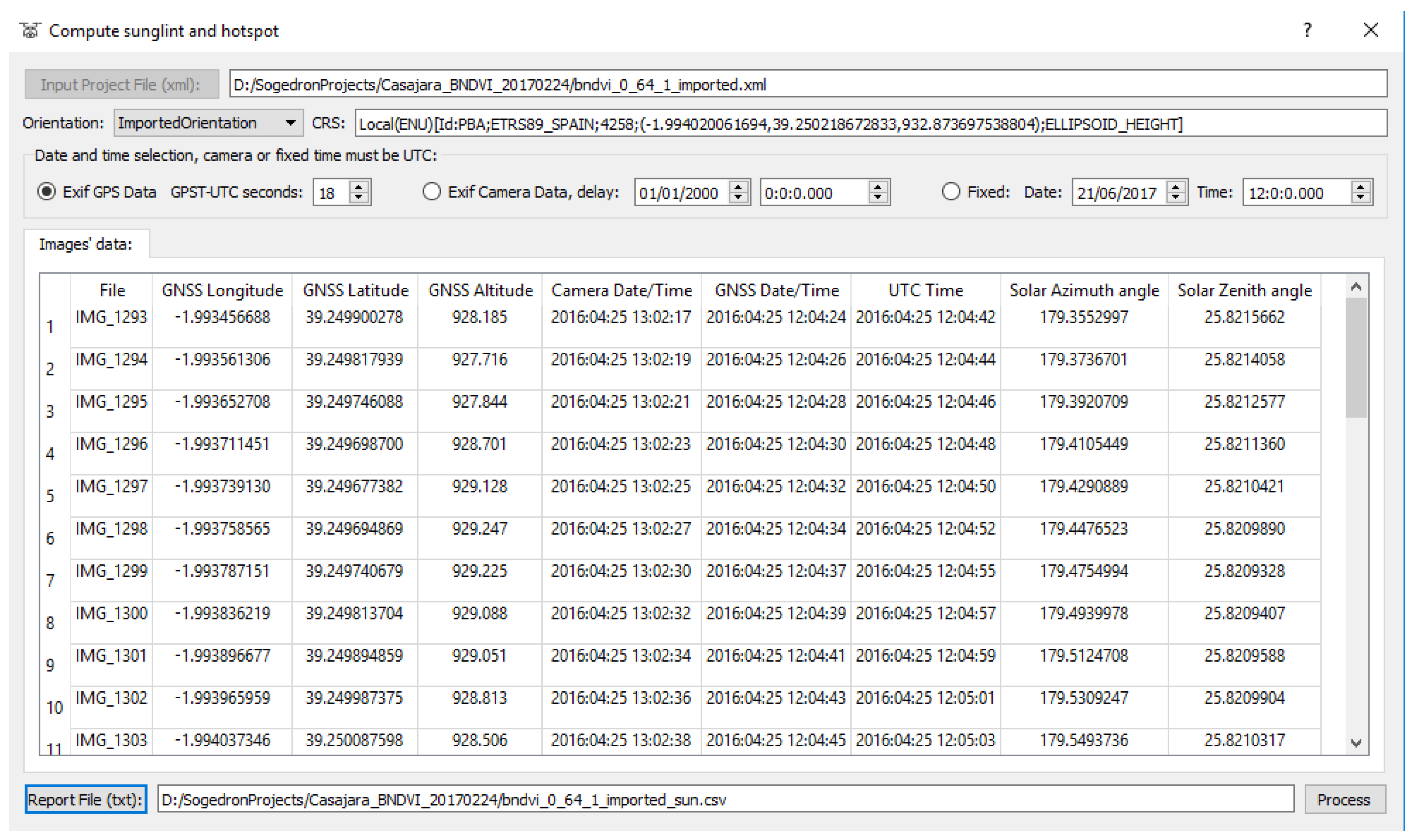

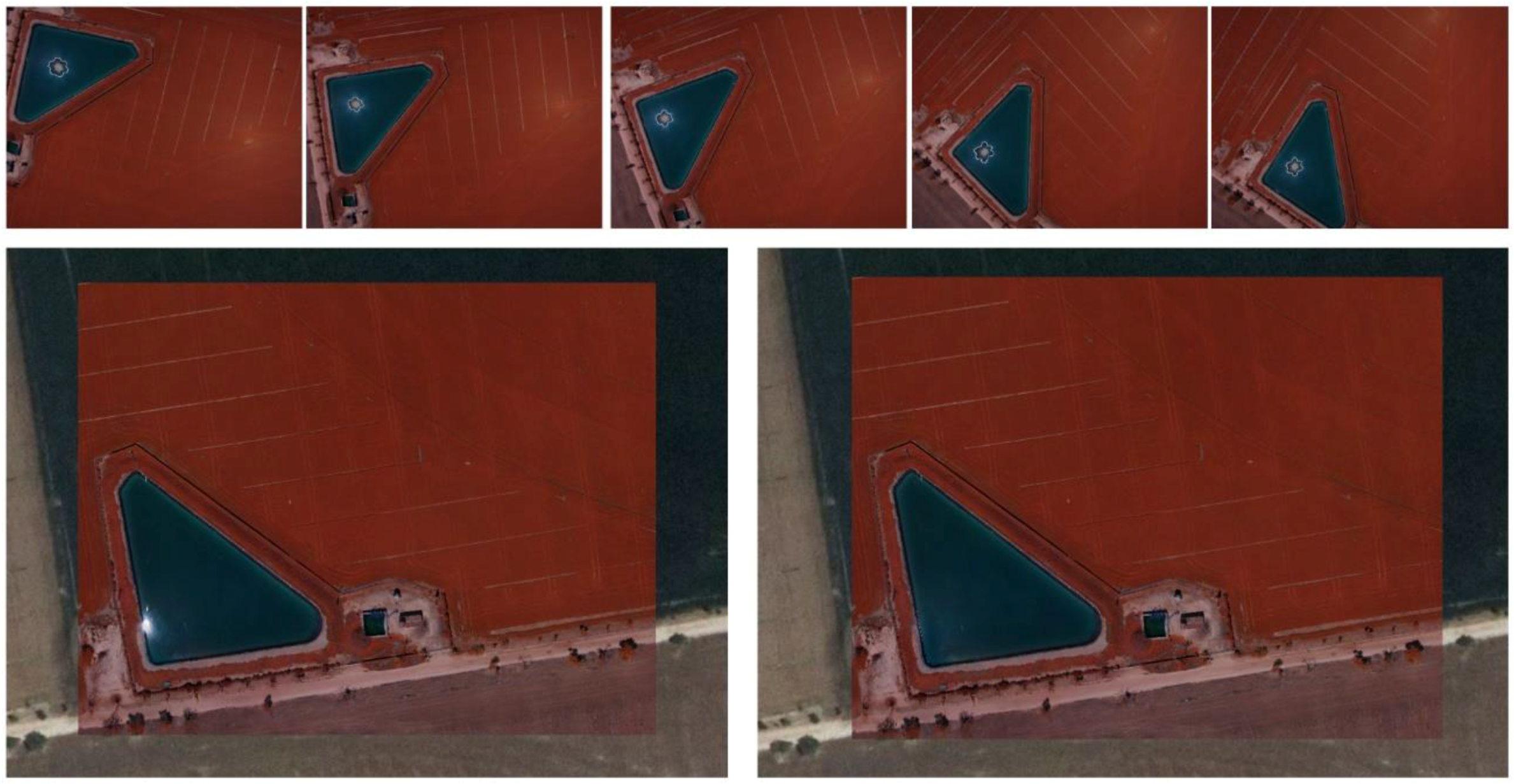

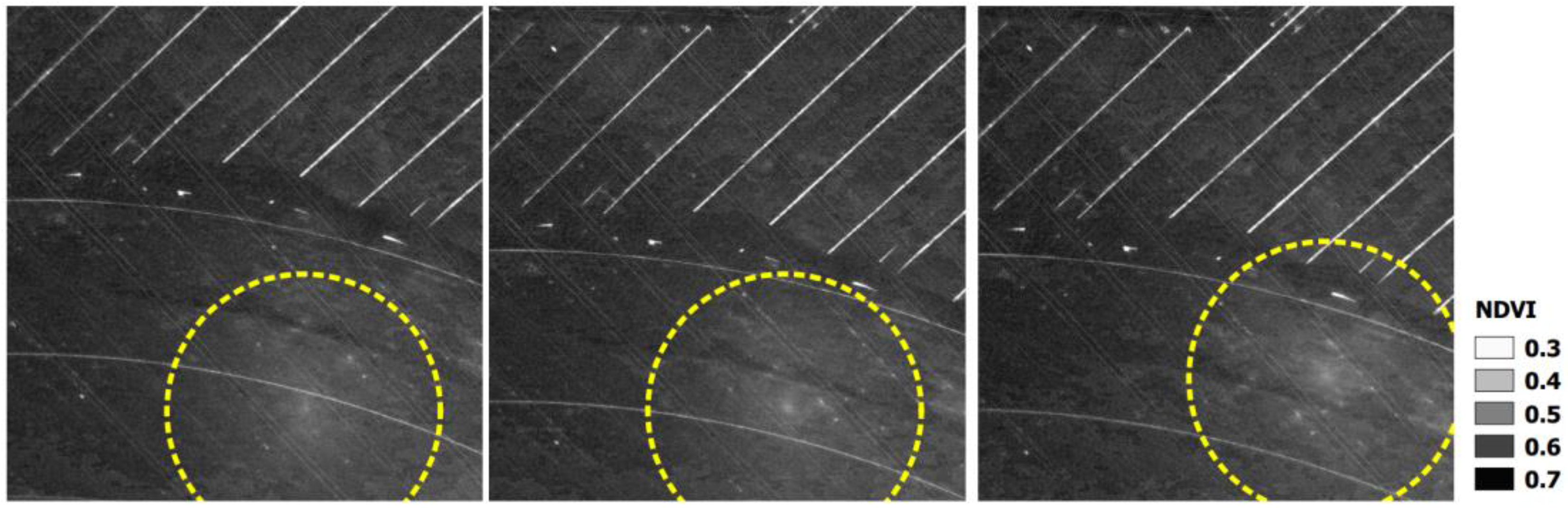

2.1. Analysis of UAV’s Metadata and Data Registration

2.2. Images Orientation

2.3. Solar Positioning

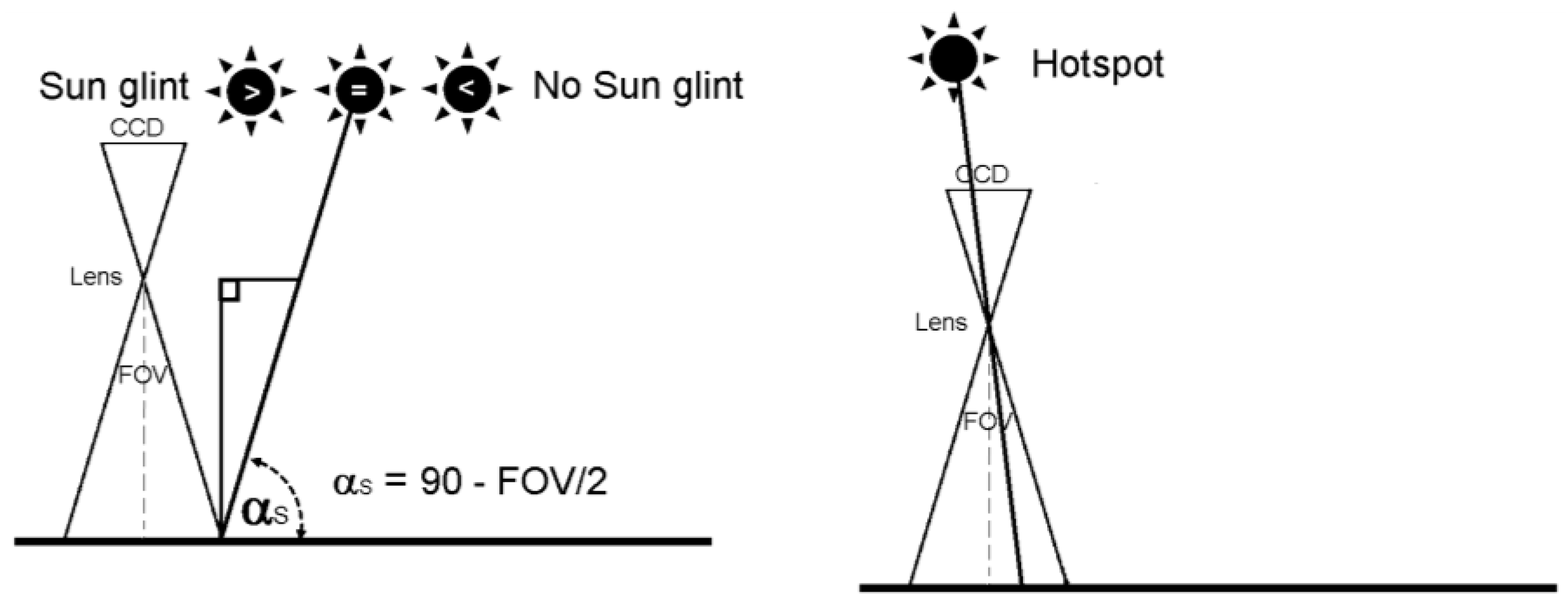

2.4. Hotspot and Sun Glint Detection

- Determination of hotspot/sun glint direction angles (azimuth and elevation). For the hotspot, the azimuth angle (θhs) is computed as the solar azimuth θS ± 180 degrees. For sun glint, the azimuth angle (θsg) is taken directly as the solar azimuth (θS). The elevation of both effects (αhs, αsg) corresponds to the relative sun elevation for each image (αS).

- Transformation between coordinate reference systems. To guarantee better accuracy in the photogrammetric process, the coordinates of each image are transformed from geodetic coordinates (latitude, longitude and ellipsoidal altitude) to LSR coordinates (see Section 2.2).

- Hotspot/sun glint direction vector. Using an arbitrary distance (e.g., 150 m), the sun elevation and azimuth angles (αS, θS) and the image orientation in LSR coordinates, a vector is defined in the internal geodetic LSR system for each image. This distance is the length of a vector whose origin is the projection center of the camera (SX, SY, SZ) and whose direction is that of the optical axis of the camera (rij). The flight height can serve as a reference for choosing this distance. The result is a direction vector for the possible hotspot (θhs, αhs) and sun glint (θsg, αsg) effects. Note that all points along the vector project onto the same image point, so the chosen distance is completely arbitrary.

- Hotspot/sun glint ground coordinates. Using the hotspot/sun glint direction vector, the hotspot/sun glint ground coordinates (X, Y, Zhs/sg) are computed in the internal geodetic LSR system.

- Hotspot/sun glint image coordinates. A backward photogrammetric process is applied to detect both effects in the images, using the external and internal orientation of each image and based on the collinearity condition (Equation (1)). If the computed image coordinates (x, yhs/sg), in pixels, fall within the camera format, the hotspot or sun glint effect will appear in the image.

- Masks definition. Since the whole process accumulates errors (e.g., from the acquisition time to the interior and exterior orientation parameters) and the effects themselves have a finite extent, a buffer area of 50 × 50 pixels is defined around the hotspot and sun glint coordinates to isolate the image regions that can be affected by sun effects. The buffer size is related to the ground sample distance (GSD), or pixel size. In our case, the GSD is 5 cm, so a buffer area of 50 × 50 pixels corresponds to a ground area of 2.5 m × 2.5 m. Since sun glint and hotspot effects can show different sizes and shapes, this value is considered sufficient to enclose the effect while also covering the propagated error of the process. The masks were used in the photogrammetric process as exclusion areas; that is, neither the keypoints used in the orientation of the images nor the image points sampled during orthophoto generation were taken from these areas. Their content was instead completed from other images, taking advantage of the high number of images acquired in UAV flights and the high overlap between images (70%).
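The steps above can be sketched in a few lines of Python. This is only an illustrative outline under stated assumptions, not the authors' implementation: the LSR axes are taken as (East, North, Up), azimuth is measured clockwise from North, the effect ray is assumed to leave the camera downwards at the sun elevation angle, the collinearity condition is written in its standard form with the camera looking along its negative z axis, and lens distortion and principal point offset are ignored. All function names (`effect_angles`, `ground_point`, `to_pixel`, `mask_box`, `detect`) are hypothetical.

```python
import math


def effect_angles(sun_az_deg, sun_el_deg):
    """Direction angles (azimuth, elevation) in degrees for both effects."""
    return {
        "hotspot": ((sun_az_deg + 180.0) % 360.0, sun_el_deg),
        "sun_glint": (sun_az_deg % 360.0, sun_el_deg),
    }


def ground_point(center, az_deg, el_deg, distance=150.0):
    """Point at `distance` from the projection center along the effect ray.

    Assumption: LSR axes are (East, North, Up) and the ray points downwards.
    """
    az, el = math.radians(az_deg), math.radians(el_deg)
    ray = (math.cos(el) * math.sin(az), math.cos(el) * math.cos(az), -math.sin(el))
    return tuple(c + distance * r for c, r in zip(center, ray))


def to_pixel(point, center, R, f_mm, pixel_mm, width, height):
    """Back-project an LSR point to (col, row) with the collinearity condition.

    R rotates LSR offsets into the camera frame. Returns None when the point
    lies behind the camera. Distortion and principal point are ignored.
    """
    d = [p - s for p, s in zip(point, center)]
    u, v, w = (sum(R[i][j] * d[j] for j in range(3)) for i in range(3))
    if w >= 0:
        return None
    x_mm = -f_mm * u / w
    y_mm = -f_mm * v / w
    # Image rows grow downwards, so the y axis is flipped.
    return width / 2 + x_mm / pixel_mm, height / 2 - y_mm / pixel_mm


def mask_box(col, row, width, height, size=50):
    """size x size exclusion box clipped to the format, or None if outside."""
    if not (0 <= col < width and 0 <= row < height):
        return None
    half = size // 2
    c, r = int(round(col)), int(round(row))
    return (max(c - half, 0), max(r - half, 0),
            min(c + half, width), min(r + half, height))


def detect(center, R, sun_az_deg, sun_el_deg, cam, distance=150.0):
    """Pixel position and exclusion mask of the hotspot and sun glint."""
    results = {}
    for name, (az, el) in effect_angles(sun_az_deg, sun_el_deg).items():
        point = ground_point(center, az, el, distance)
        pixel = to_pixel(point, center, R, **cam)
        mask = None if pixel is None else mask_box(*pixel, cam["width"], cam["height"])
        results[name] = {"pixel": pixel, "mask": mask}
    return results


if __name__ == "__main__":
    # Camera values taken from the Canon S110 tables; pixel size = 7.2 mm / 4000.
    cam = {"f_mm": 5.054, "pixel_mm": 7.2 / 4000, "width": 4000, "height": 3000}
    nadir = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]  # hypothetical nadir orientation
    for name, res in detect((0.0, 0.0, 140.0), nadir, 180.0, 70.0, cam).items():
        print(name, res)
```

Because every point along the ray projects onto the same image point, calling `detect` with a different `distance` should return the same pixel coordinates, which is a convenient sanity check for the orientation conventions in use.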

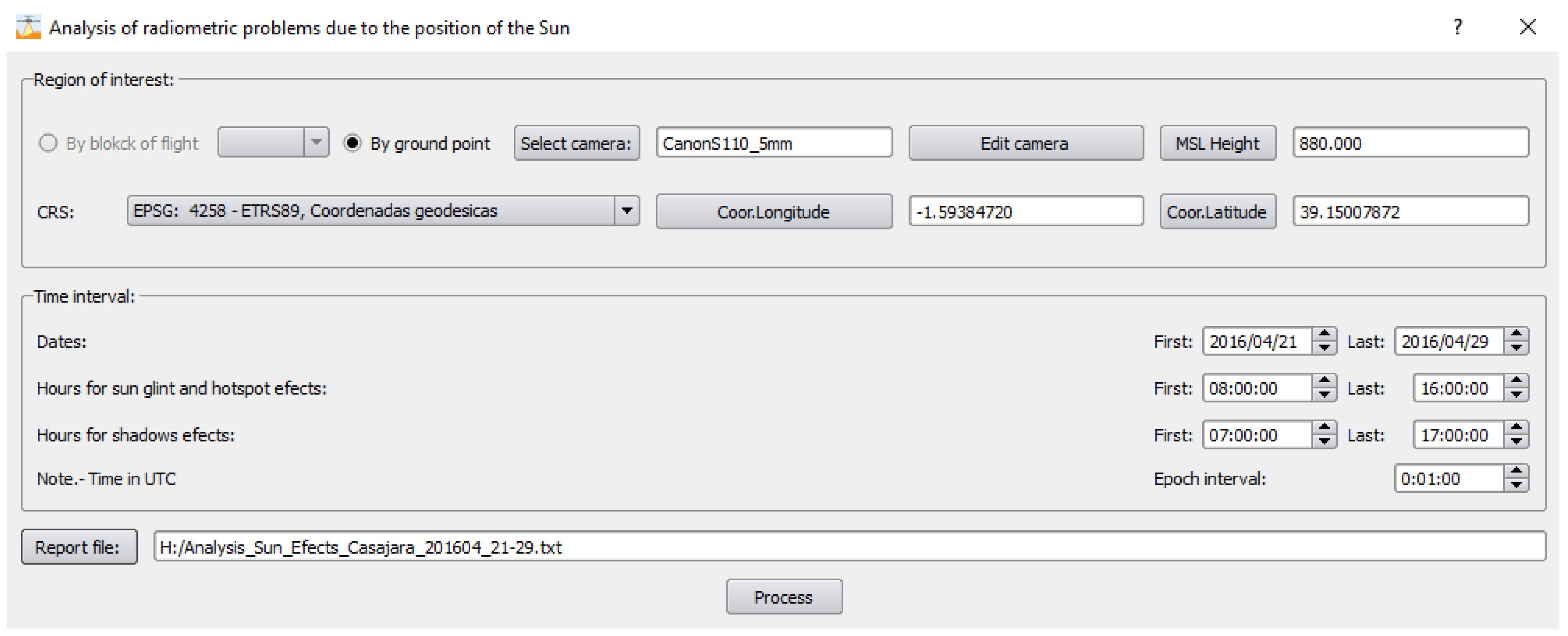

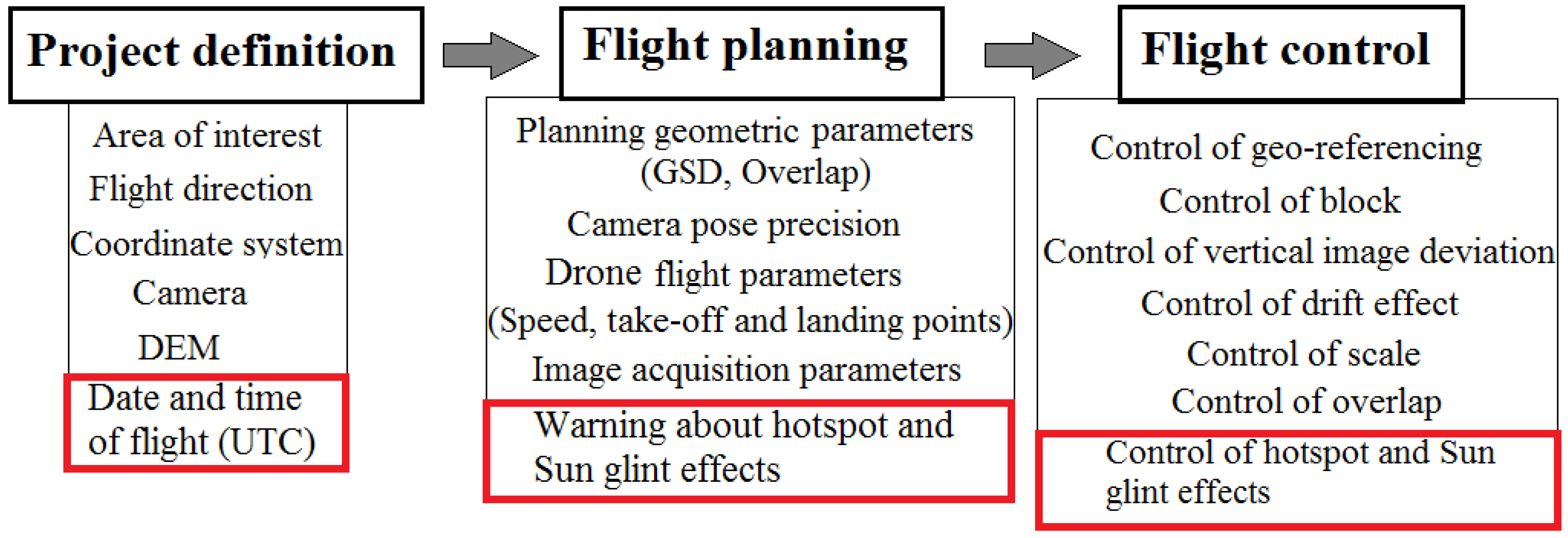

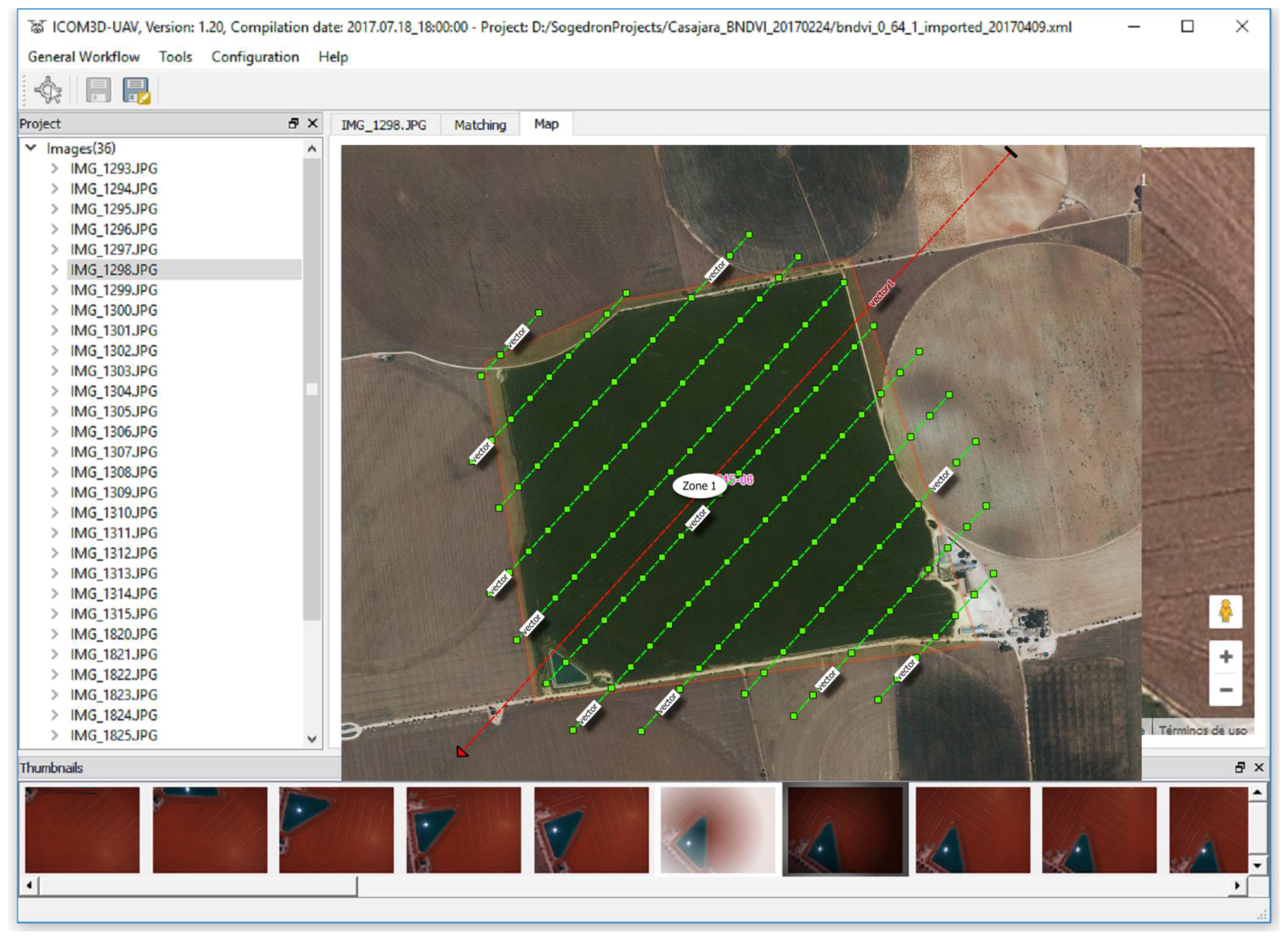

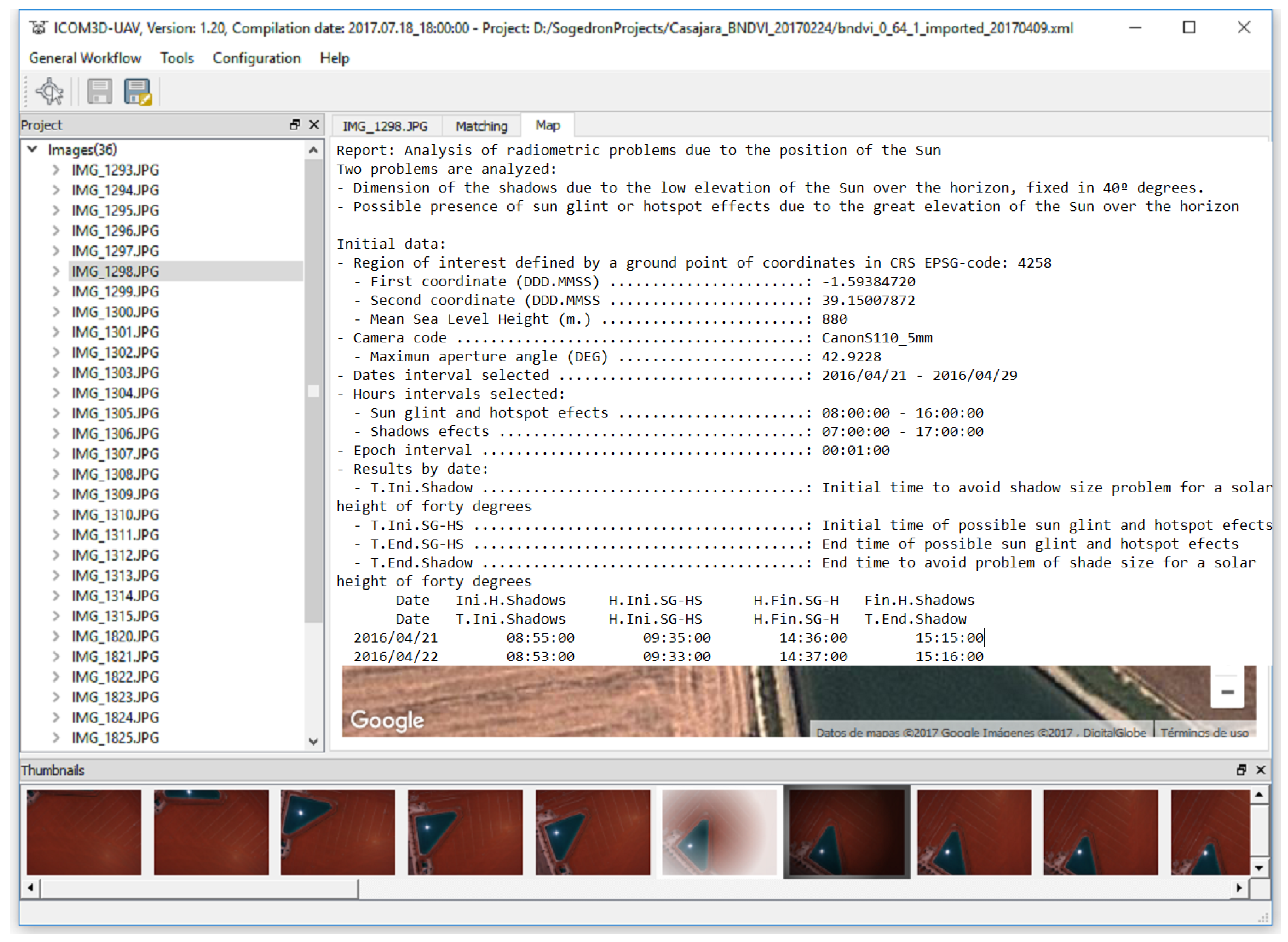

3. UAV Flight Planning and Control Software: MFlip-v2

3.1. Project Definition

3.2. Flight Planning

3.3. Flight Control

4. Experimental Results

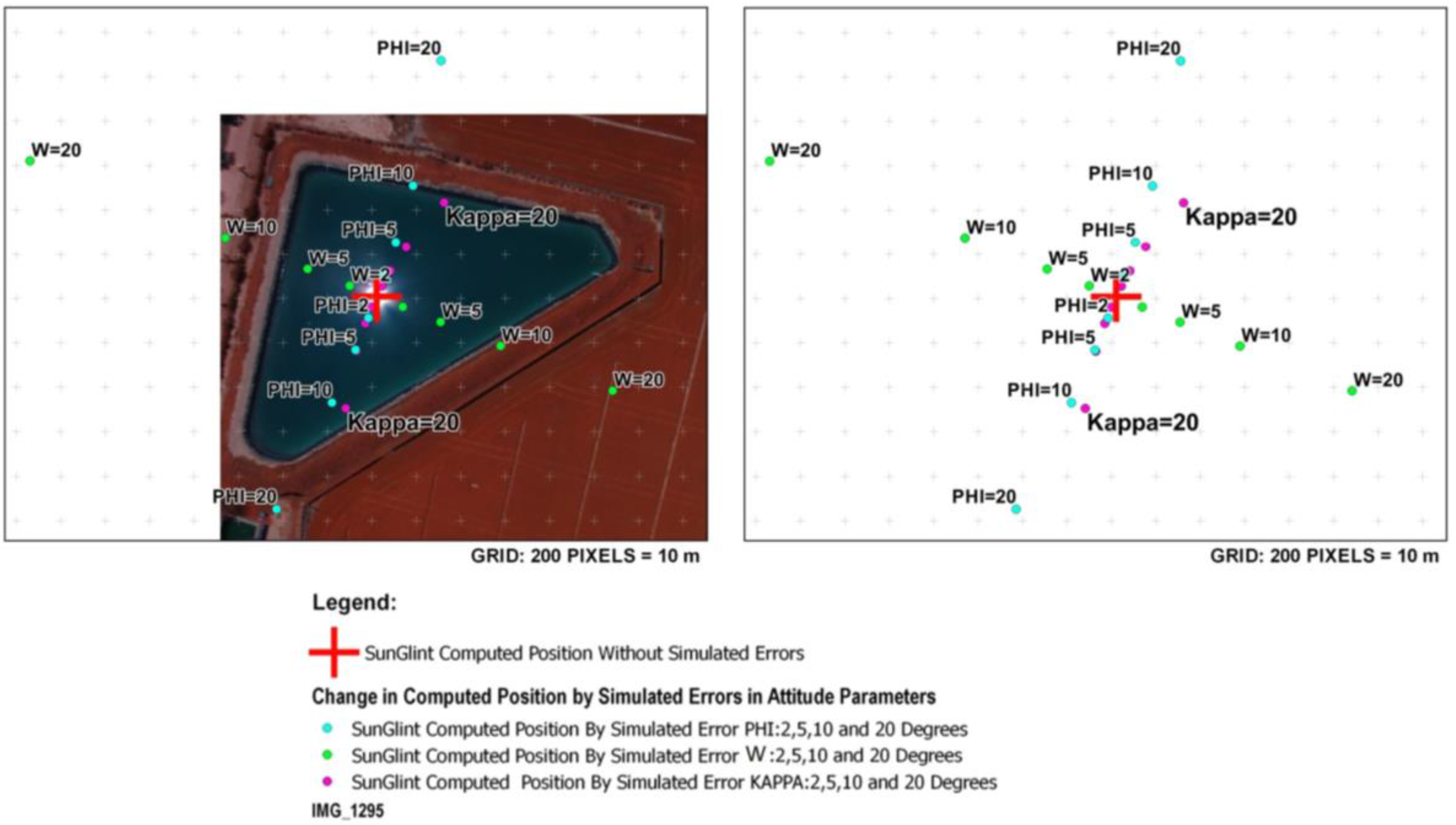

- GNSS positioning errors (meters): 0.1, 0.2, 0.5, 1, 2, 5, 10, 20, 50, 100;

- Time errors (seconds): 0.1, 0.2, 0.5, 1, 2, 5, 10, 20, 50, 100;

- Orientation (degrees): 0.05, 0.1, 0.2, 0.5, 1, 2, 5, 10, 20.

- (a) The main errors in the detection of sun reflections are related to the camera orientation parameters. Therefore, it is crucial to apply a photogrammetric approach to solve this orientation (Section 2.2).

- (b) Simulated spots correspond to errors in orientation which are far from the errors obtained in our photogrammetric approach, even when low-cost UAV sensors are used.
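A rough first-order estimate helps to see why orientation errors dominate. The sketch below is not the simulation used in the experiments. It assumes that an angular error δ displaces a point near the image centre by about f·tan(δ) pixels, with f the calibrated focal length expressed in pixels, and that the sun direction changes by at most about 15° per hour, so a time error maps to a small angular error. The names `F_PX`, `SUN_RATE_DEG_PER_S`, `shift_px` and `time_shift_px` are hypothetical.

```python
import math

# Calibrated focal length (5.054 mm) divided by the pixel size (7.2 mm / 4000).
F_PX = 5.054 / (7.2 / 4000)

# Upper bound on the apparent angular speed of the sun (15 degrees per hour).
SUN_RATE_DEG_PER_S = 15.0 / 3600.0


def shift_px(angle_deg, f_px=F_PX):
    """Approximate image displacement near the centre for an angular error."""
    return f_px * math.tan(math.radians(angle_deg))


def time_shift_px(dt_s, f_px=F_PX):
    """Approximate displacement caused by a timestamp error of dt_s seconds."""
    return shift_px(dt_s * SUN_RATE_DEG_PER_S, f_px)


if __name__ == "__main__":
    for err in [0.05, 0.1, 0.2, 0.5, 1, 2, 5, 10, 20]:
        print(f"orientation error {err:>5} deg -> ~{shift_px(err):8.1f} px")
    for err in [0.1, 0.2, 0.5, 1, 2, 5, 10, 20, 50, 100]:
        print(f"time error        {err:>5} s   -> ~{time_shift_px(err):8.1f} px")
```

Under these assumptions, an orientation error of about one degree already shifts the predicted spot by roughly the width of the 50 × 50 pixel buffer, whereas the largest simulated time error stays well below it.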

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Technical Specifications: UAV Carabo S3 | |
|---|---|
| Climb rate | 2.5 m/s |
| Cruising speed | 6.0 m/s |
| Vehicle mass | 2.6 kg |
| Recommended payload mass | 450 g |
| Maximum payload mass | 900 g |
| Dimensions | 690 mm from rotor shaft to rotor shaft |
| Flight duration | up to 40 min (Full HD smart sport camera) |
| Battery | 6 S/22.2 V |
| Accelerometer/magnetometer | ST Micro LSM303D 14 bit |
| Accelerometer/gyroscope | Invensense MPU 6000 |
| Gyroscope | ST Micro L3GD20H 16 bit |
| Barometer | MEAS MS5611 |
| GPS | uBlox LEA 6H |
| Autopilot | Pixhawk |

| Operational Conditions: UAV Carabo S3 | |
|---|---|
| Temperature | −10 °C to 45 °C |
| Humidity | max. 90% (rain or snow is no problem) |
| Wind tolerance | up to 12 m/s |
| Flight radius | 500 m on RC (Remote Control), with WP (Way Point navigation) up to 2 km |
| Ceiling altitude | up to 2500 m |

| Technical Specifications: Canon Powershot S110 | |
|---|---|
| Sensor Type | 1/1.7” CMOS sensor |
| Sensor size (w, h) | (7.2, 5.4) mm |
| Effective Pixels | 4000 × 3000 pixels |
| Focal length | 5.2 mm |
| Weight | 198 g (inc battery) |

| Self-Calibration Parameters: Canon Powershot S110 | |
|---|---|
| Calibrated focal length | 5.054 mm |
| Principal point (x, y) | (−0.034, −0.126) mm |
| Radial distortion (k1, k2) | (−0.0370017, −0.00429136) |
| Tangential distortion (P1, P2) | (−0.00116555, −0.00518746) |

| Image | Sun Glint Detection (x, y in Pixels) | Hotspot Detection (x, y in Pixels) |
|---|---|---|
| IMG_1293 | n.a. | (2804, 2327) |
| IMG_1294 | (1160, 16) | (2833, 2355) |
| … | … | … |
| IMG_1303 | (1153, 2215) | n.a. |
| IMG_1304 | (1098, 2243) | (2962, 47) |
| IMG_1305 | (1109, 2237) | (2960, 26) |
| … | … | … |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ortega-Terol, D.; Hernandez-Lopez, D.; Ballesteros, R.; Gonzalez-Aguilera, D. Automatic Hotspot and Sun Glint Detection in UAV Multispectral Images. Sensors 2017, 17, 2352. https://doi.org/10.3390/s17102352