Detection of Nuclear Sources by UAV Teleoperation Using a Visuo-Haptic Augmented Reality Interface

Abstract

1. Introduction

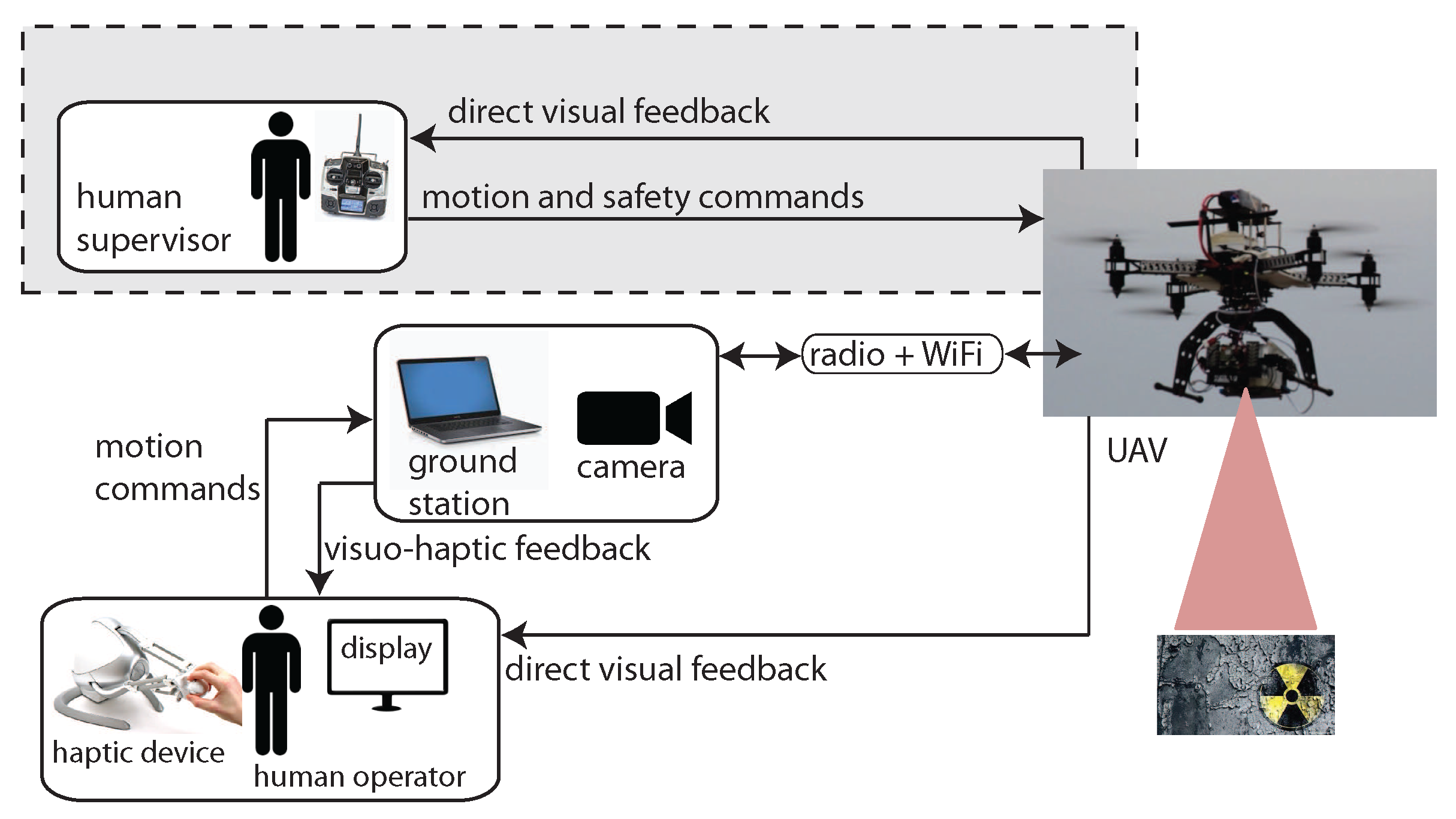

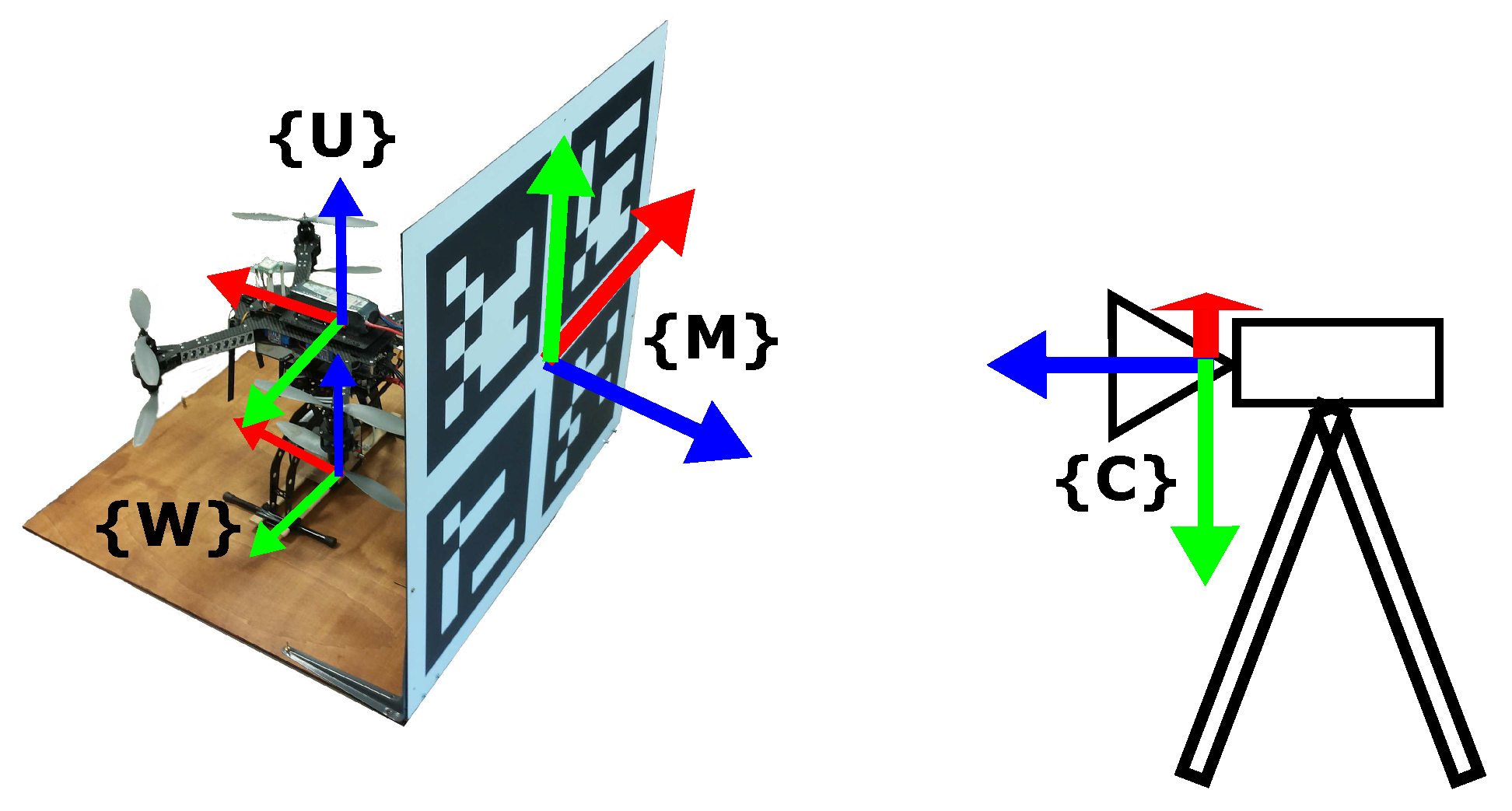

2. Overview of the System

2.1. UAV Platform

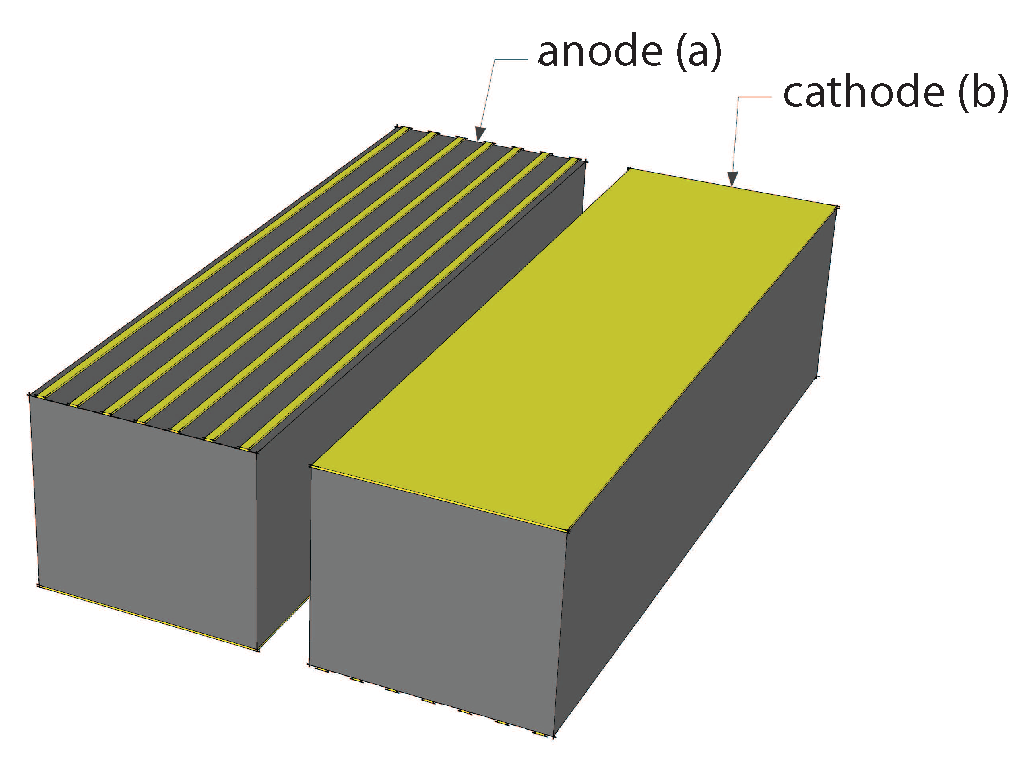

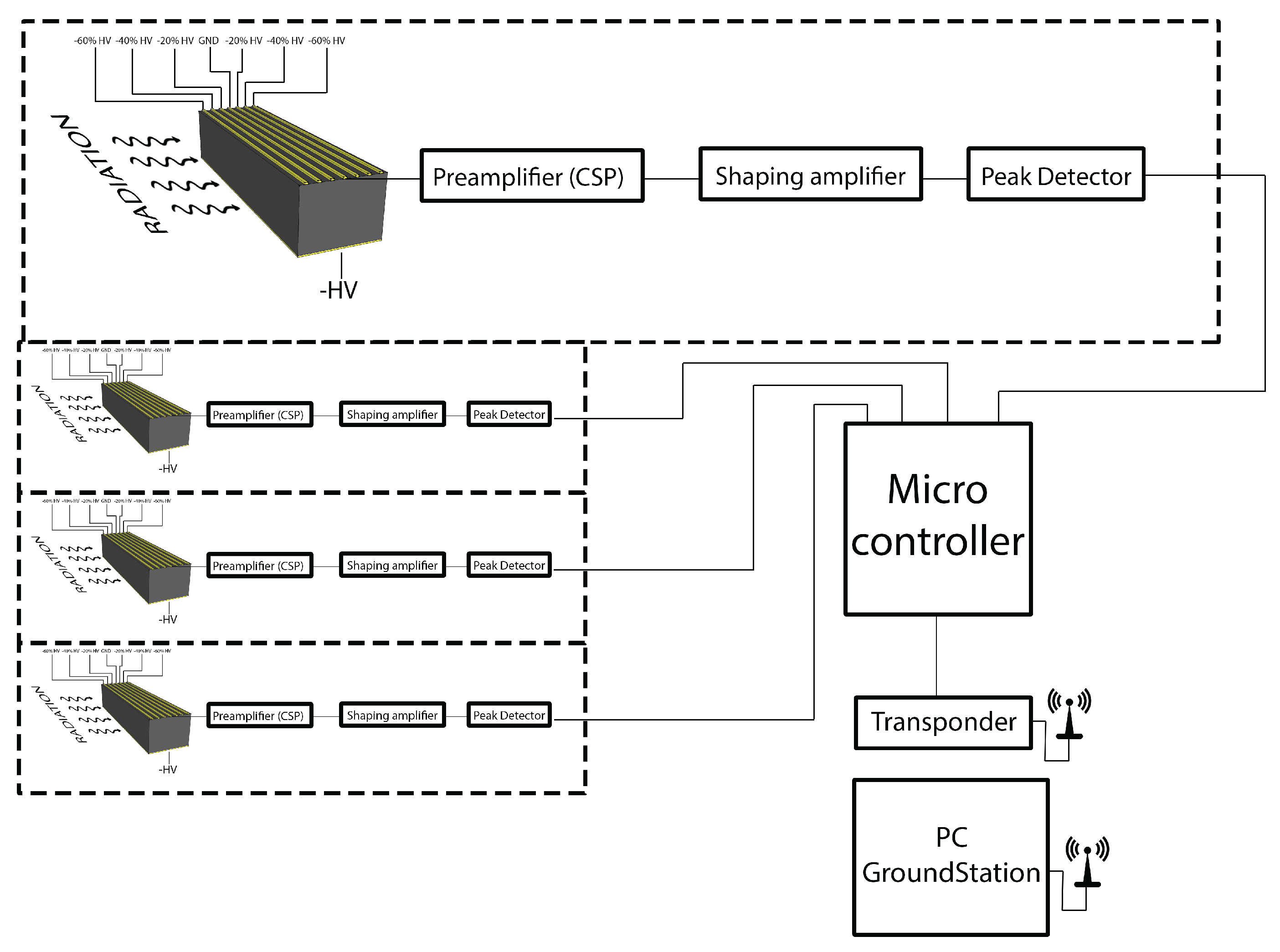

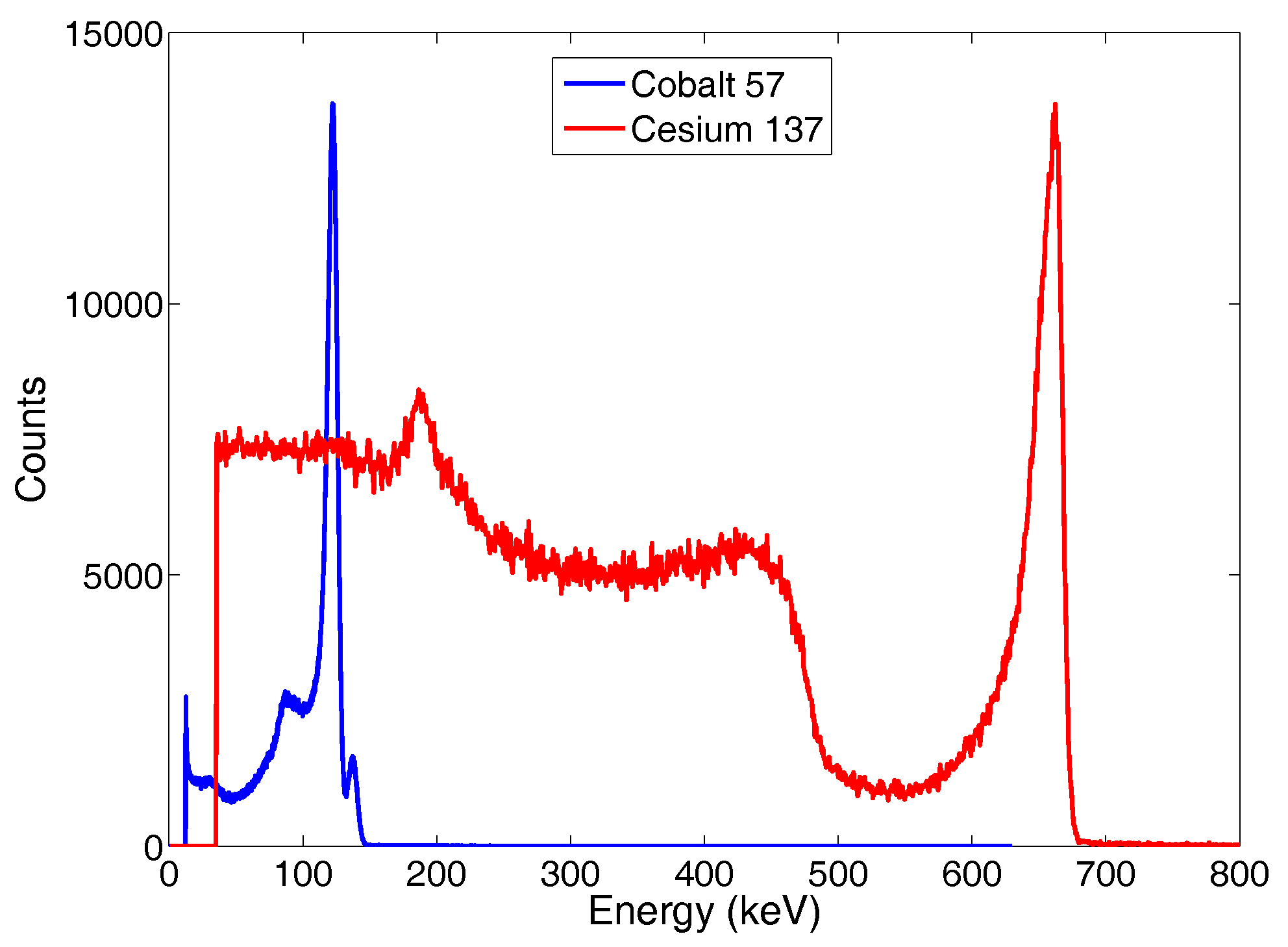

2.2. CdZnTe Gamma-Ray Detector

3. Visuo-Haptic Augmented Reality Interface

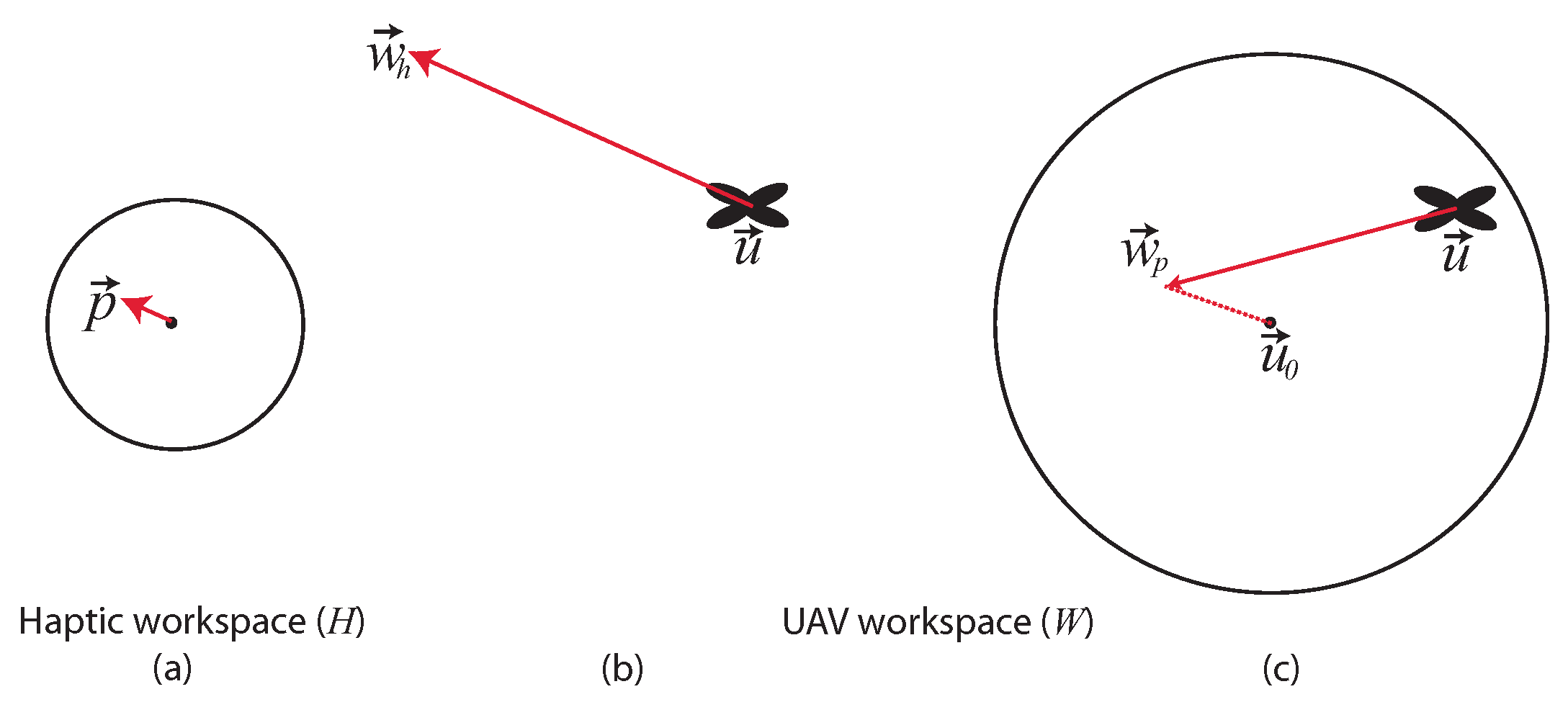

3.1. Haptic Teleoperation

3.2. Vision-Based UAV Detection

| Algorithm 1: Vision-based UAV detection. |
|---|
| Input: Intrinsic camera parameters |
| S: Image at t |
| : UAV position at t from EKF in camera frame |
| : UAV position from EKF at on S |
| : search window (center, size) |
| : displacement threshold between two frames |
| : last UAV predicted state on S |
| Output: : estimated UAV position |
| 1: projection on S |
| 2: Compute foreground image |
| 3: dilation |
| 4: |
| 5: Extract blobs in |
| 6: for do |
| 7: Extract AABB of |
| 8: end for |
| 9: |
| 10: for each do |
| 11: Classify with SVM |
| 12: if then |
| 13: |
| 14: |
| 15: end if |
| 16: end for |
| 17: if then |
| 18: |
| 19: |
| 20: |
| 21: else |
| 22: predicted position on S |
| 23: |
| 24: for each do |
| 25: |
| 26: if then |
| 27: |
| 28: |
| 29: end if |
| 30: end for |
| 31: if then |
| 32: |
| 33: |
| 34: |
| 35: else |
| 36: |
| 37: |
| 38: |
| 39: end if |
| 40: end if |
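
The stages of Algorithm 1 that remain legible in the listing (projection of the EKF position onto the image S, a search window, and a displacement threshold between two frames) can be illustrated with a short Python sketch. This is a minimal sketch under stated assumptions, not the authors' implementation: the names `Intrinsics`, `Box`, `project`, `select_candidate`, and `estimate_position` are hypothetical, a distortion-free pinhole model is assumed, the search window is assumed to be square, and a nearest-candidate rule stands in for the SVM classification step. Foreground extraction, dilation, and blob extraction are assumed to have already produced the list of bounding boxes.

```python
from dataclasses import dataclass
from math import hypot
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera parameters in pixels (hypothetical container)."""
    fx: float
    fy: float
    cx: float
    cy: float


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box (AABB) of a foreground blob, in pixels."""
    x: float
    y: float
    w: float
    h: float

    @property
    def center(self) -> Point:
        return (self.x + self.w / 2.0, self.y + self.h / 2.0)


def project(k: Intrinsics, p_cam: Tuple[float, float, float]) -> Optional[Point]:
    """Project a 3D point given in the camera frame onto the image plane."""
    x, y, z = p_cam
    if z <= 0.0:  # point is behind the camera: no valid projection
        return None
    return (k.fx * x / z + k.cx, k.fy * y / z + k.cy)


def in_window(p: Point, center: Point, size: float) -> bool:
    """True if p lies inside a square window of side `size` around `center`."""
    return abs(p[0] - center[0]) <= size / 2.0 and abs(p[1] - center[1]) <= size / 2.0


def select_candidate(boxes: Sequence[Box], reference: Point,
                     window_size: float, max_disp: float) -> Optional[Box]:
    """Return the box nearest to `reference` that is inside the search window
    and within the displacement threshold (assumed stand-in for the SVM step)."""
    best, best_d = None, max_disp
    for b in boxes:
        c = b.center
        if not in_window(c, reference, window_size):
            continue
        d = hypot(c[0] - reference[0], c[1] - reference[1])
        if d <= best_d:
            best, best_d = b, d
    return best


def estimate_position(k: Intrinsics, p_cam: Tuple[float, float, float],
                      boxes: Sequence[Box], last_state: Optional[Point],
                      window_size: float, max_disp: float) -> Optional[Point]:
    """Estimate the UAV position on the image from the EKF position and blobs."""
    projected = project(k, p_cam)
    # Fall back to the last predicted state when the projection is invalid.
    reference = projected if projected is not None else last_state
    if reference is None:
        return None
    match = select_candidate(boxes, reference, window_size, max_disp)
    # Without a plausible blob, keep the model-based reference position.
    return match.center if match is not None else reference
```

Calling `estimate_position` once per frame with the current EKF position and the blobs extracted from the foreground image returns either the center of the accepted blob or, when no blob passes both checks, the projected (or last predicted) position.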

3.3. Visual Feedback Displayed in Augmented Reality

4. Experiments

4.1. Preliminary Evaluation

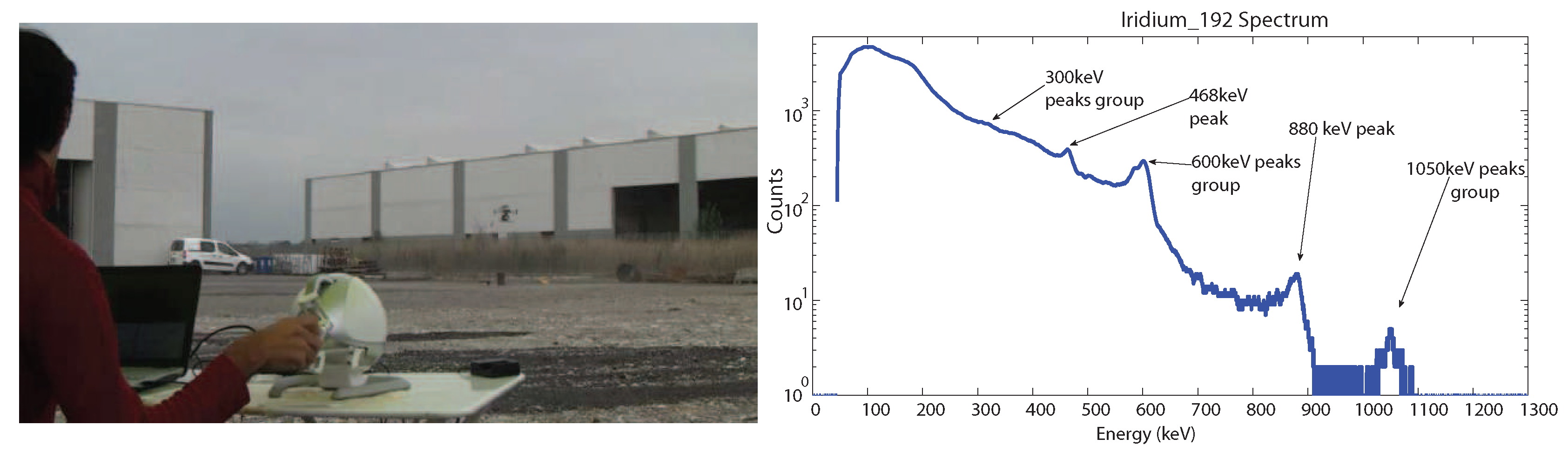

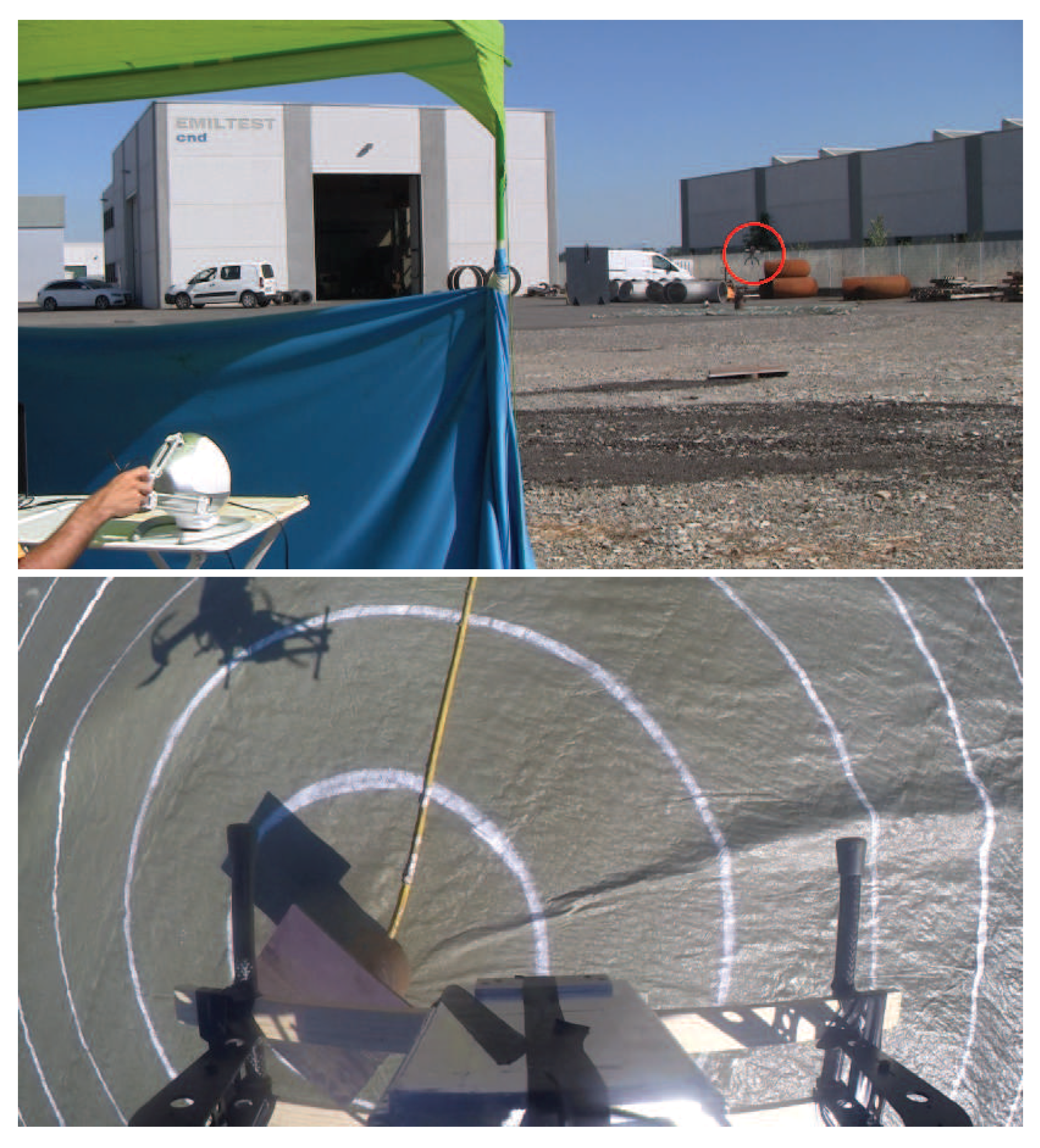

4.2. Evaluation of Nuclear Source Detection Accuracy in Real Environments

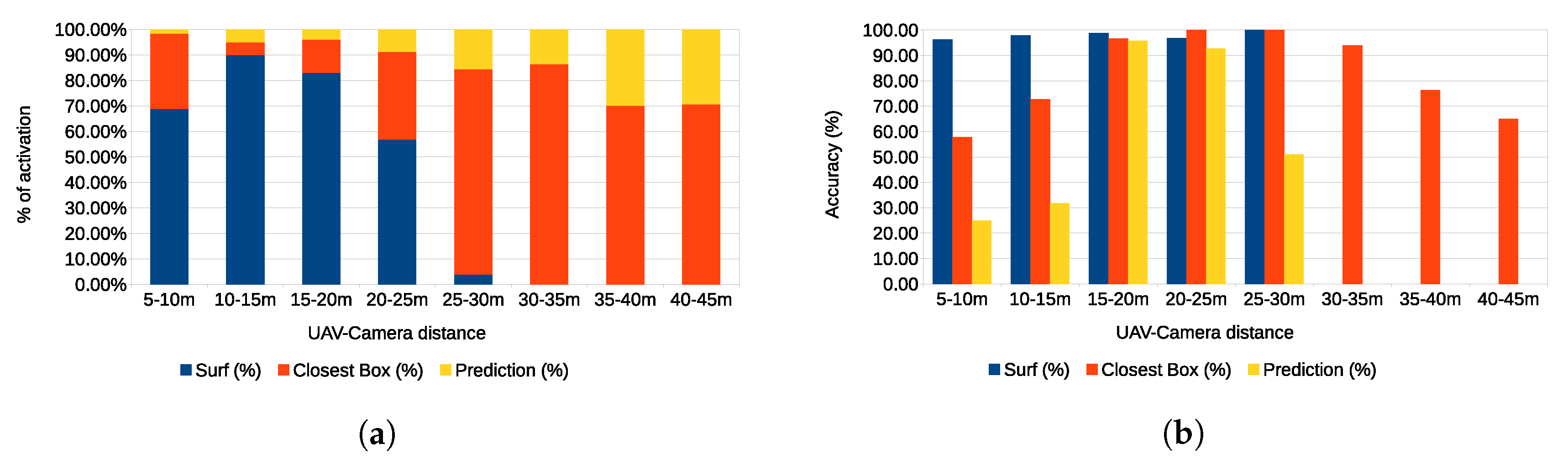

4.3. Evaluation of Visual Feedback

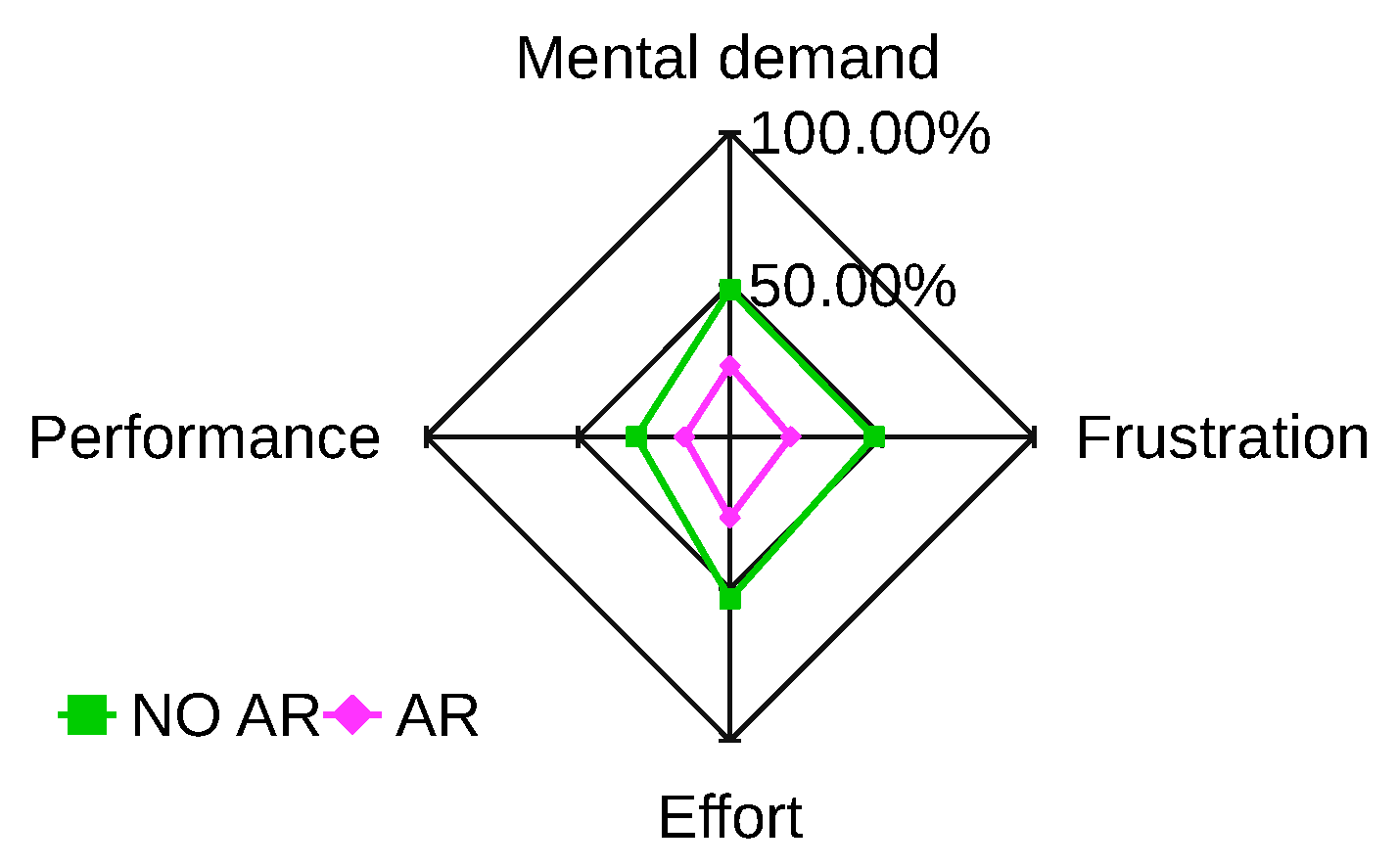

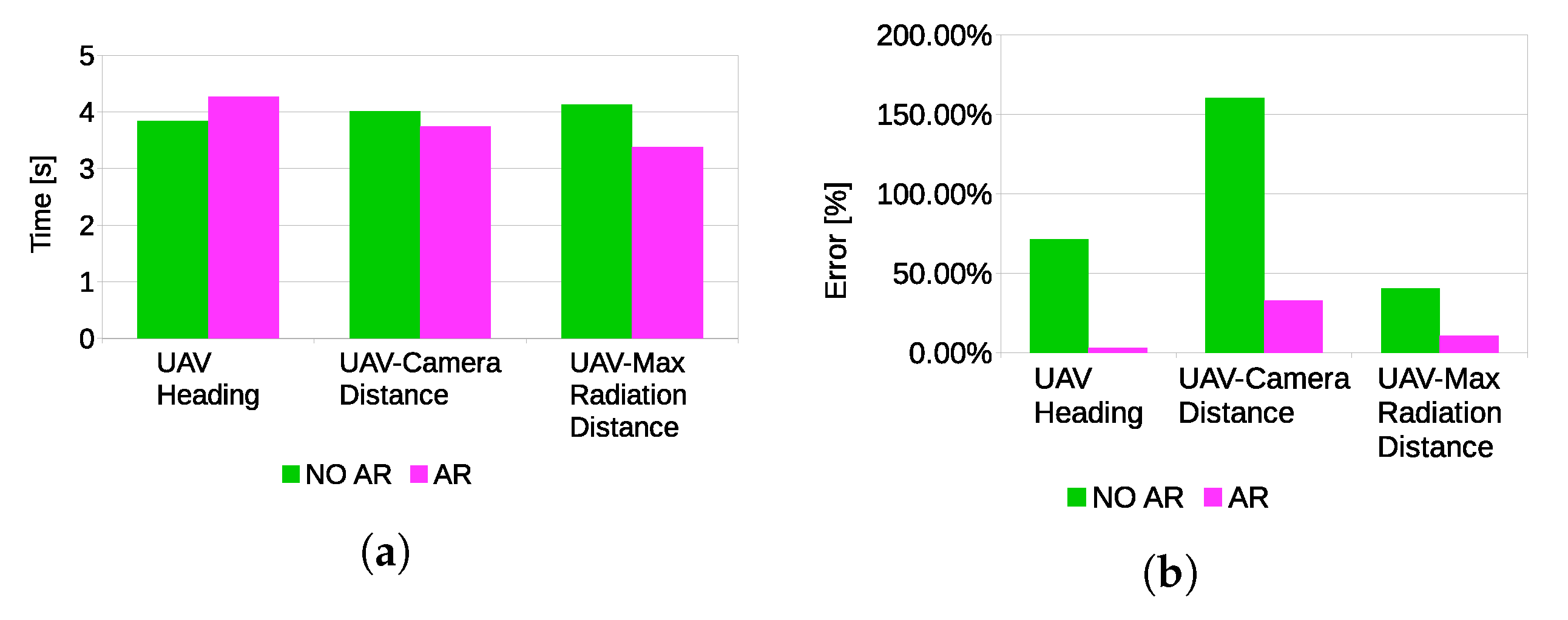

4.4. Usability Experiments

5. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned Aerial Vehicle |
| VHAR | Visuo-Haptic Augmented Reality |
| CdZnTe | Cadmium Zinc Telluride |

| Nuclear Source | Dose (mSv/year) | Source Activity (Bq) | Counts/s |
|---|---|---|---|
| Americium 241 | 1 | 1270 | |
| Cobalt 57 | 1 | 411 | |
| Cesium 137 | 1 | 159 | |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Aleotti, J.; Micconi, G.; Caselli, S.; Benassi, G.; Zambelli, N.; Bettelli, M.; Zappettini, A. Detection of Nuclear Sources by UAV Teleoperation Using a Visuo-Haptic Augmented Reality Interface. Sensors 2017, 17, 2234. https://doi.org/10.3390/s17102234