An Informationally Structured Room for Robotic Assistance †

Abstract

The application of assistive technologies for elderly people is one of the most promising and interesting scenarios for intelligent technologies in the present and near future. Moreover, the improvement of the quality of life for the elderly is one of the first priorities in modern countries and societies. In this work, we present an informationally structured room that is aimed at supporting the daily life activities of elderly people. This room integrates different sensor modalities in a natural and non-invasive way inside the environment. The information gathered by the sensors is processed and sent to a centralized management system, which makes it available to a service robot assisting the people. One important restriction of our intelligent room is reducing as much as possible any interference with daily activities. Finally, this paper presents several experiments and situations using our intelligent environment in cooperation with our service robot.

1. Introduction

Among the many applications related to quality of life technologies, elderly care is one of the most promising ones, both in social and economic terms. Improving the quality of life of the elderly is also one of the first priorities in modern countries and societies, where the percentage of elderly people is rapidly increasing due mainly to great improvements in medicine during the last few decades.

A potential approach to support daily life activities of elderly people consists of creating a living environment supported by different technologies, including different sensor modalities and service robots. A common idea in assistive environments consists of gathering information about people's activities together with their surroundings in a way that an intelligent decision system can support the persons [1–7]. In addition to intelligent environments, service robots can be available to assist people in their daily activities and environments [8–12]. Actually, it is expected that service robots will soon be playing the role of companion for elderly people or as general assistants for humans with special needs at home. However, human environments are very complex and sometimes difficult to monitor only with the sensors mounted on the robot. One solution to this problem consists of supporting the service robot with information about the environment using ambient intelligent technologies [13–17].

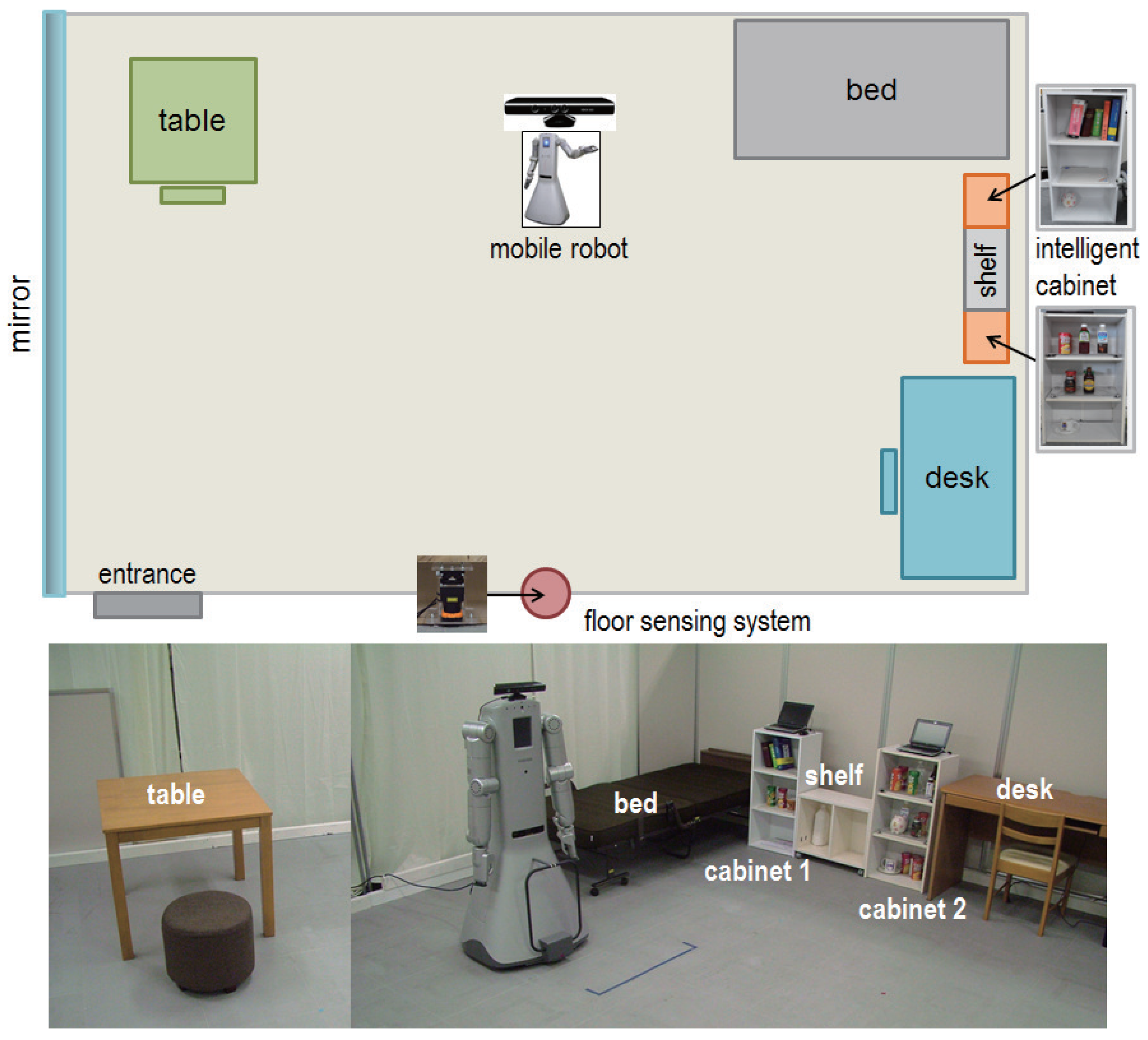

This intelligent room is composed of a network of distributed sensors that are installed at different locations, pieces of furniture and objects inside the room. These sensors monitor people's activity inside the room and send the information to a centralized management system, which processes the data and makes it available to a service robot that assists the inhabitants. In addition, the service robot can send information coming from its own perception system. Figure 1 shows an overview of our intelligent room together with our service robot. One important restriction in our intelligent environment is to avoid interfering with the daily activity of people and to reduce as much as possible the invasion of their privacy. In addition, we constrain the use of the camera on the robot to predetermined situations only. Finally, the sensors of our environment are weak in the sense that they acquire only a small part of the information needed for activity monitoring. Therefore, an important aspect of our informationally structured environment consists of the integration of the information provided by the weak sensors in order to determine changes in the environment.

The first ideas for our structured room appeared in [13,14] within the Robot Town project, which established the main ideas and foundations to create a complete structured city. Our work extends the ideas from [13,14] and makes particular contributions to informationally structured rooms. The work in [15] also proposes an informative space where sensor data are managed using ubiquitous computing. However, the system in [15] only covers localization and navigation of the service robot. By contrast, in our work, we additionally integrate people activity and object detection and manipulation. An alternative paradigm is the physically-embedded intelligent system (PEIS) presented in [16], which combines the robot skills with ambient intelligence. PEIS provides a distributed environment that exchanges information among different functional software components. In our approach, in contrast, we use a centralized management system that provides the extra reasoning needed by the robot to support its tasks. In addition, implementations of the PEIS system usually rely on camera monitoring systems. However, we try to avoid vision systems in our approach to reduce as much as possible the invasion of privacy. Finally, strongly sensory structured micro-rooms are presented in [17]. These micro-rooms also aim to support elderly people, but the intelligent capabilities are limited, since the number of sensors is reduced to keep prices competitive in production. In comparison, our proposed structured room is composed of several different sensor modalities, including laser range finders and radio-frequency identification (RFID) systems. Moreover, the room is supported by a humanoid robot. Therefore, our system currently has a high cost. However, we think costs can be reduced in the future, since companies are lowering the prices of the sensors used. Moreover, the price of service robots is expected to fall as they reach mass production.

This paper extends our previous works in [7,13,18–22] with the following contributions:

Identified tracking of persons and movable furniture using an inertial sensor and a laser range finder.

Improved daily life object tracking by portable RFID tag readers and an RGB-D camera.

Robot service demonstration of the go-and-fetch task.

The rest of the paper is organized as follows. Section 2 describes the different sensor modalities that compose our informationally structured room. In Section 3, we describe our service robot. The centralized information system is described in Section 4. Finally, in Section 5, we present different experiments and scenarios for assistance and information updating in our room.

2. The Informationally Structured Room

In this section, we describe the different sensor modalities that are found in our informationally structured room. Our intelligent room represents a standard room in a house containing typical appliances and pieces of furniture, as shown in Figure 1. In particular, our room contains a bed, chairs, tables, shelves, cabinets and a service trolley. In the room, we can also find some typical commodities and objects, such as cups, books, bottles or slippers. Some pieces of furniture and objects inside the room are equipped with sensors that help monitor the activity inside the room. For example, the cabinets and the service trolley contain sensors to detect the objects that are situated on them. In addition, the service trolley and the wheelchair contain inertial sensors to facilitate their localization and activity monitoring. Finally, the room is equipped with a floor sensing system that tracks and detects people and objects moving inside the room. The sensors are distributed and situated in a way that they do not interfere with the daily activities of people living in the room. Moreover, we try to reduce as much as possible the invasion of people's privacy.

2.1. Intelligent Cabinets

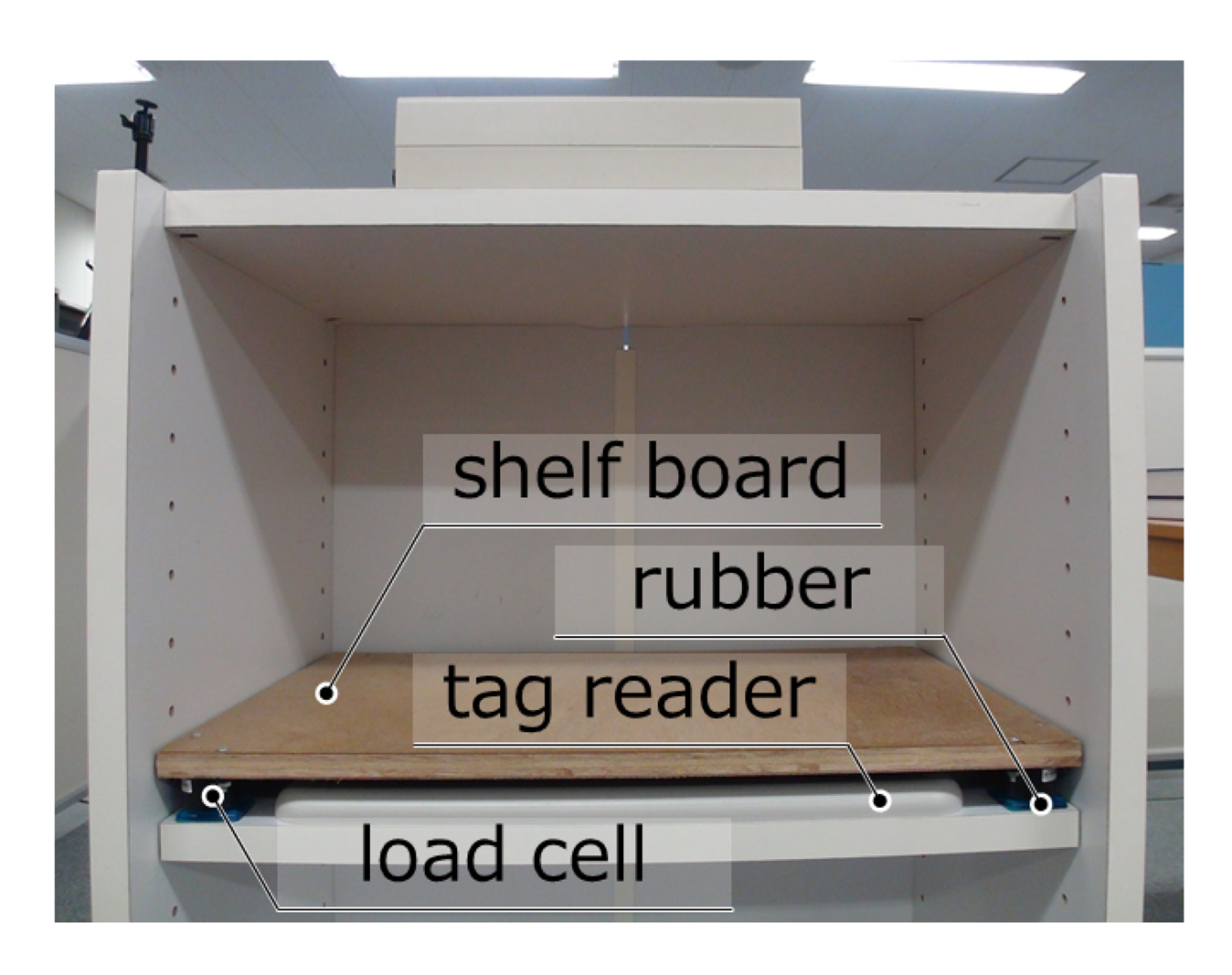

Our informationally structured room contains two intelligent cabinets [19], as shown in Figure 1. Each of these cabinets is equipped with an RFID reader and load cells to detect the type and position of the commodities that are situated inside. Some of the commodities in our environment have an RFID tag attached that contains a unique identifier (ID) assigned to it. This ID is used to retrieve the attributes and characteristics of the commodity from the central database. The RFID readers can detect the presence of different commodities inside the cabinet. In addition, the information from the load cells is used to precisely localize the object inside the cabinet. Figure 2 shows one of the cabinets together with the information that it provides about some commodities that are found inside.

In this particular case, the intelligent cabinet contains nine objects that are detected and shown in our database viewer as in Figure 2. The objects inside the cabinet are difficult to detect with the vision sensor of the robot due to occlusions and difficult viewpoints. Thus, the intelligent cabinet helps the service robot by providing the position and measurements of the objects found inside. Moreover, information about the object ID, its position and weight with a time stamp is sent to our centralized database.
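As an illustration of how load cells can localize an object, the following sketch computes the weight and position of a newly placed object from the change in the readings of four load cells, using the centre of pressure. The four-corner layout, the board dimensions and all names (`CELL_XY`, `locate_added_object`) are assumptions made for this example; the cabinet's actual computation is described in [19], not here.

```python
from typing import Sequence, Tuple

# Hypothetical load-cell positions (metres) at the corners of a 0.8 m x 0.4 m board.
CELL_XY = [(0.0, 0.0), (0.8, 0.0), (0.8, 0.4), (0.0, 0.4)]


def locate_added_object(
    before: Sequence[float],
    after: Sequence[float],
    cell_xy: Sequence[Tuple[float, float]] = CELL_XY,
) -> Tuple[float, float, float]:
    """Return (weight, x, y) of an object placed between two stable readings.

    `before` and `after` hold one load value per cell, in the same order as
    `cell_xy`. The position is the load-weighted mean of the cell positions
    (centre of pressure) computed on the load differences only.
    """
    deltas = [a - b for a, b in zip(after, before)]
    weight = sum(deltas)
    if weight <= 0:
        raise ValueError("no added load detected")
    x = sum(d * cx for d, (cx, _) in zip(deltas, cell_xy)) / weight
    y = sum(d * cy for d, (_, cy) in zip(deltas, cell_xy)) / weight
    return weight, x, y
```

Working on differences between two stable readings means that objects already on the board do not affect the estimate, which is also why readings taken before the board stops vibrating would give inaccurate results.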

The rate at which objects can be placed in or removed from the cabinet is limited by the time the sensor board takes to stop vibrating. When an object is put on the board, the board vibrates; if another object is placed before the vibration settles, the measured position and weight of the objects will be inaccurate. To improve the response time and accuracy, we tested several materials for this system and found that plywood (12 mm thick) as the shelf board and seismic isolation rubber as the legs damp the vibration well (see Figure 3). We compare this new system to our previous one based on acrylic boards [19]. The response time of the new system decreases to less than 400 ms, while the previous system had a response time of about 600 ms. Even though the new position accuracy is slightly worse, the new error is within acceptable ranges, as shown in Table 1.

2.2. Non-Static Intelligent Furniture

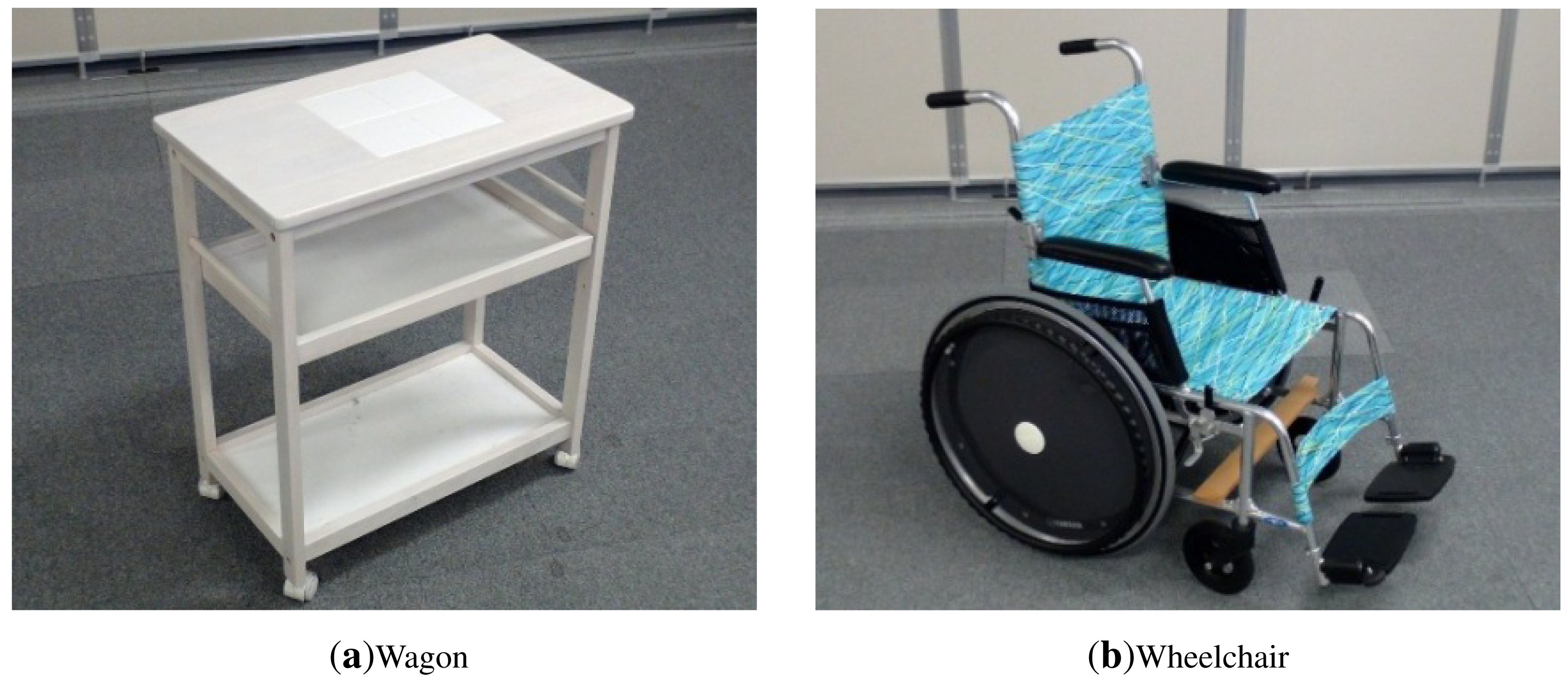

In addition to static pieces of furniture, our intelligent room contains some movable furniture and appliances. In particular, we include an intelligent service trolley and a wheelchair in the room.

The intelligent service trolley is equipped with the same sensor system as the intelligent cabinets, i.e., one RFID reader and a load system composed of four load cells. In this way, the intelligent trolley can provide information about the objects that are on it at any time. The intelligent trolley is additionally equipped with one inertial sensor to help keep track of its position.

The wheelchair is also equipped with one inertial sensor to facilitate its localization inside the room. Figure 4 depicts images of our service trolley and wheelchair.

2.3. Floor Sensing System

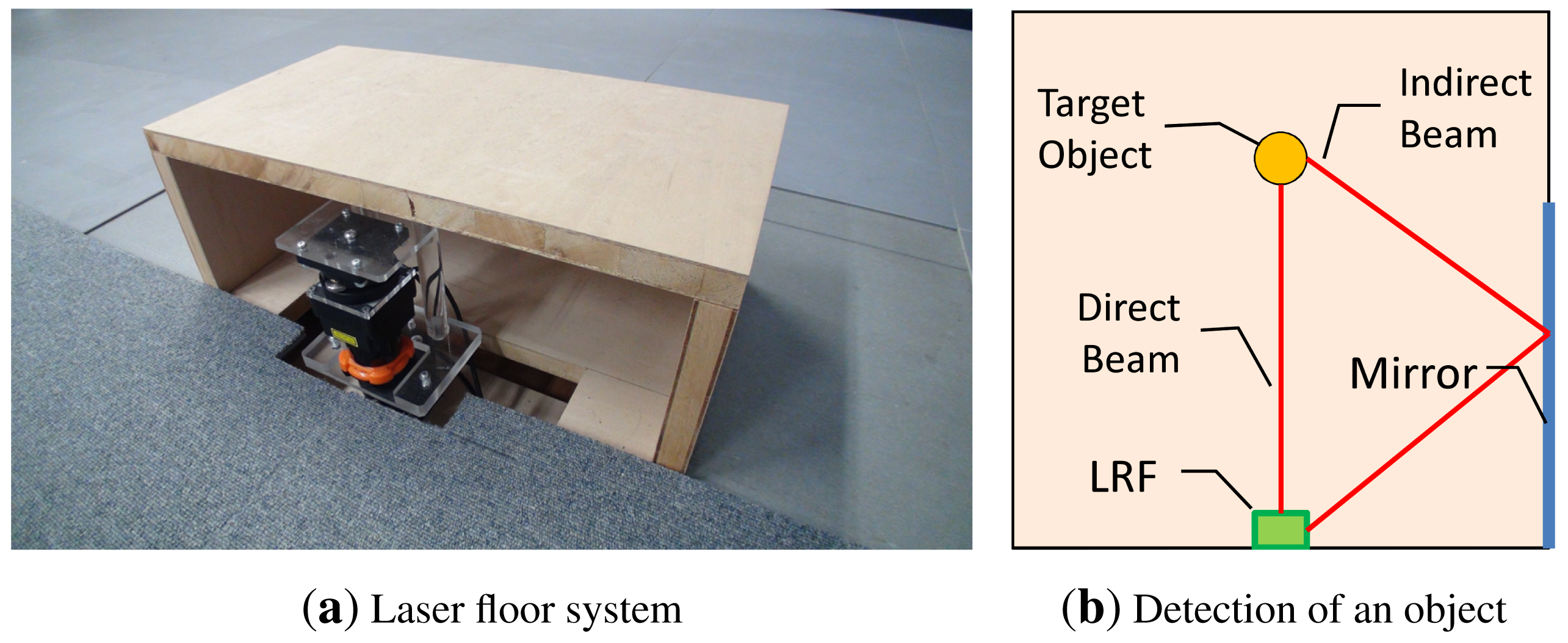

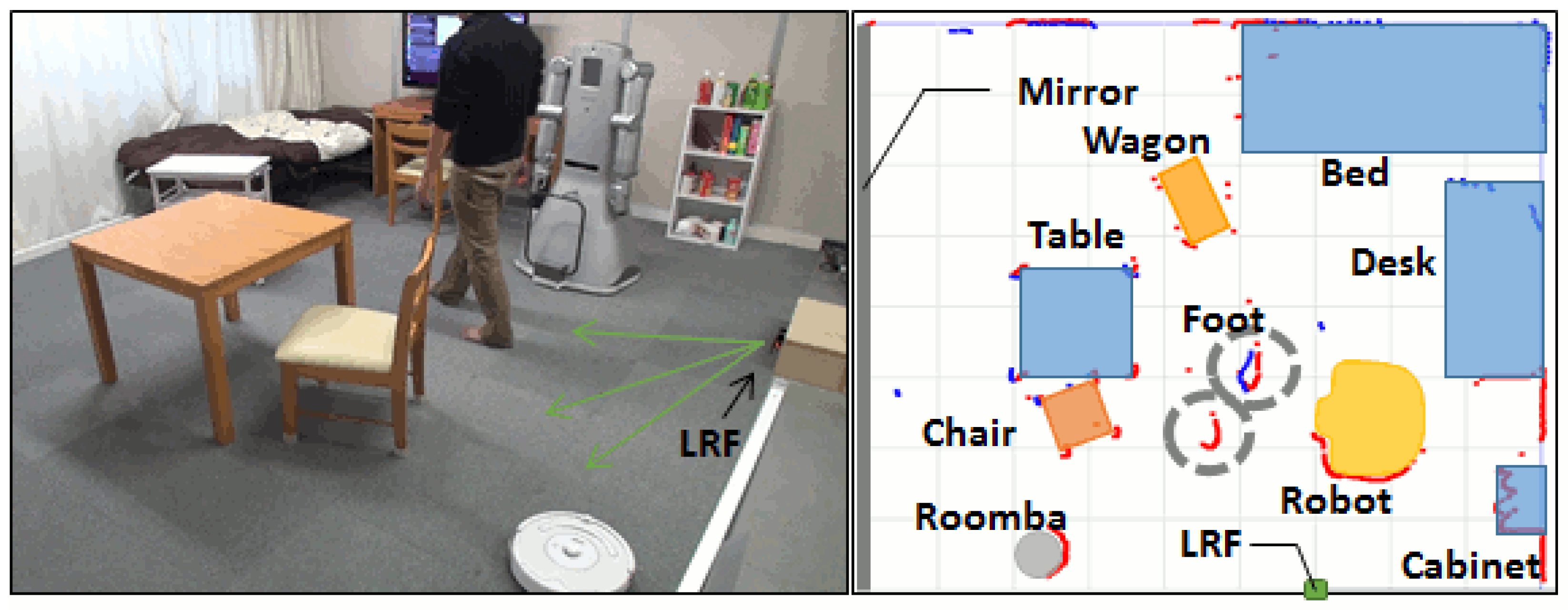

Our informationally structured room is further supported by a floor sensing system, which is used to detect moving objects and people. This system is composed of a laser range finder (LRF), which is situated on the floor on one side of the room, as depicted in Figure 1. The system is extended with a mirror located along one side of the room. This configuration reduces the number of dead angles for the LRF and increases the robustness against occlusions, as shown in Figure 5, thus improving the detection when clutter occurs [23].

The floor sensing system is able to detect the 2D blobs corresponding to different objects, people or robots. The system first applies static background subtraction and then extracts blobs in the rest of the measurements. However, the limited 2D information provided by the laser range finder is not enough to determine the particular entities moving around. Therefore, extra sensor modalities are used to help recognize particular moving objects and persons inside the room. Thus, we attach inertial sensors to the service trolley, the wheelchair and the slippers of the inhabitants. By integrating inertial sensor information together with the 2D laser readings, we can locate and recognize each specific object or inhabitant. Details on tracking are given in the next sections.

2.4. IMU-Based People Identification

Gait identification by attaching an inertial sensor to a person's waist is proposed in [24]. This method, however, requires attaching inertial sensors to persons or accessing their smart phones in their pockets.

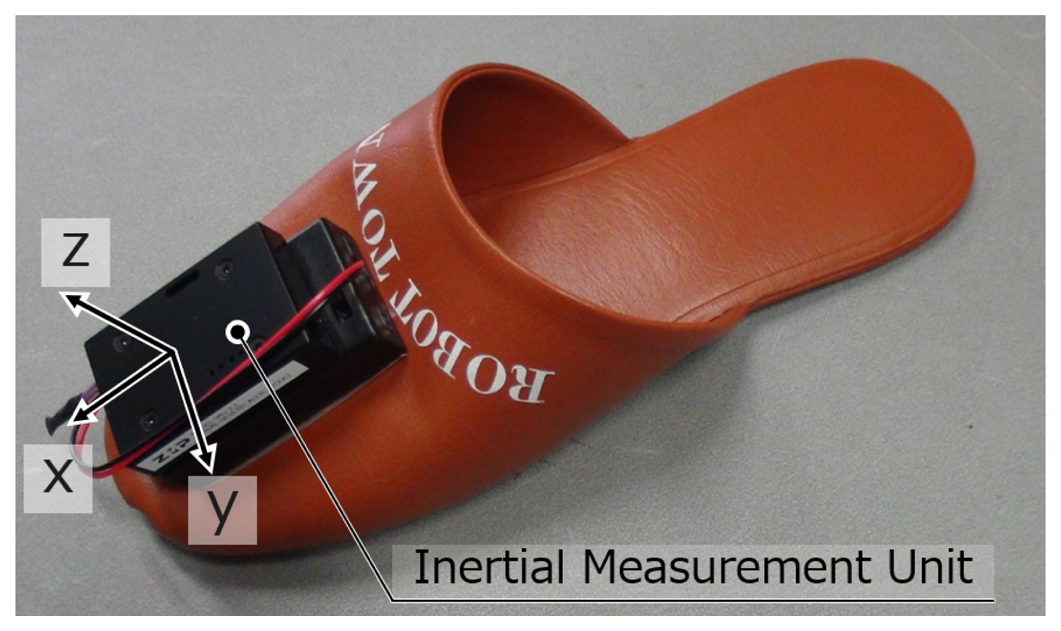

In this paper, we propose a method to identify different persons by attaching an IMU sensor to slippers, as shown in Figure 6. Our method does not demand special settings for persons, and it is a natural way to identify visitors and track all persons in public buildings or at home. This is particularly convenient in Japan, where homes and some public spaces provide slippers for visitors. In this way, the activity of the inhabitant can be easily extracted, which is very useful for robot service and the life log of the inhabitant.

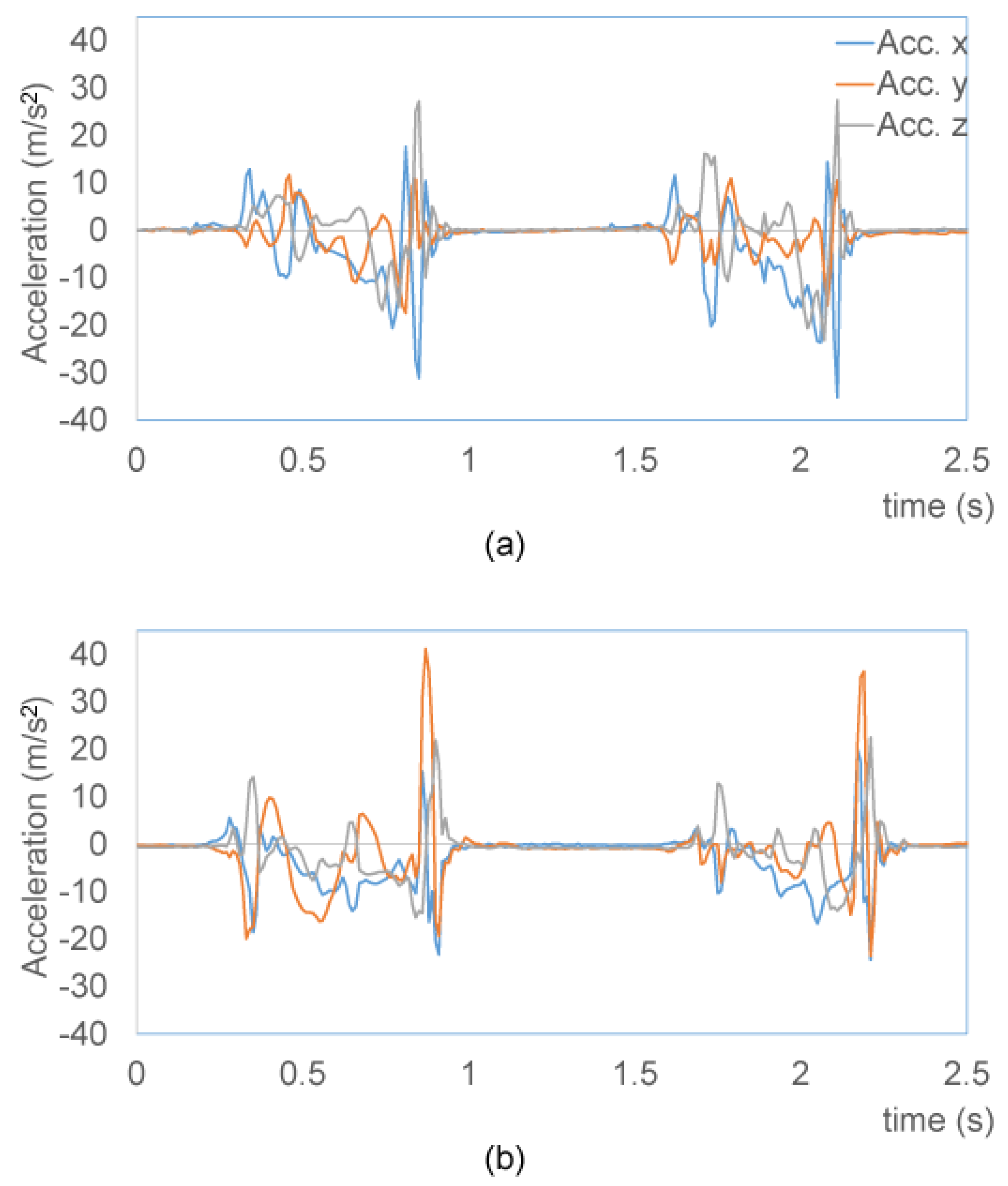

Our system captures the acceleration and angular velocity of gait motion. The foot accelerations of two persons are shown in Figure 7. An autoregressive model [25] is applied to approximate the sequence of waves. The autoregressive model is expressed as:
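For reference, the general form of an autoregressive model of order $p$ is sketched below. The notation is an assumption made here and may differ from that of [25]: $x_t$ is the signal sample at time step $t$, $a_i$ are the model coefficients, and $\varepsilon_t$ is the residual.

$$
x_t = \sum_{i=1}^{p} a_i \, x_{t-i} + \varepsilon_t
$$

Under this form, the coefficients $a_1, \ldots, a_p$ summarize the shape of the waveform and can serve as a compact feature vector for each person.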

2.5. People Tracking

In general, it is difficult to identify and track persons in a room with little invasion of people's privacy. To solve this problem, several methods have been proposed in the past. In [27,28], multiple persons are tracked using cameras. Some other works [29,30] also propose the combination of cameras and other sensors, such as pressure sensors on the floor and acceleration sensors. However, it is still difficult to avoid invading persons' privacy if we use cameras.

Some other works avoid the use of cameras in order to keep privacy. The method presented in [31] uses LRF only and cannot identify specific persons. The tracking system in [32] is based on dead reckoning using an inertial sensor, but the accuracy decreases over time. The work in [33] uses passive RFID tags, but sometimes fails to track persons far from the tag reader. An ultrasonic tagging system [6] has a high cost, both in maintenance and space, since many large directional antennas are required for tracking the tags. The use of received signal strength indication (RSSI) of active RFID tags [34] and WiFi signals [35] is not accurate enough for localizing persons in a room.

In our system, people tracking is performed using the laser-based floor system by first applying static background subtraction and then extracting blobs in the rest of the measurements. An example detection of a person using the floor system is shown in the middle image of Figure 8.
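The two steps above, static background subtraction followed by blob extraction, can be sketched as follows for a single 2D laser scan. This is a minimal illustration, not the authors' implementation; the thresholds `diff_thresh` and `gap_thresh` and the function name `extract_blobs` are assumptions.

```python
import numpy as np


def extract_blobs(scan, background, angles, diff_thresh=0.05, gap_thresh=0.10):
    """Return (x, y) centroids of foreground blobs in one laser scan.

    scan, background: range readings in metres, one per beam.
    angles: beam angles in radians, same length as the scans.
    A beam is foreground when it is at least `diff_thresh` shorter than the
    static background. Consecutive foreground beams whose end points lie
    within `gap_thresh` of each other are grouped into one blob.
    """
    scan = np.asarray(scan, dtype=float)
    background = np.asarray(background, dtype=float)
    angles = np.asarray(angles, dtype=float)

    foreground = (background - scan) > diff_thresh
    xs = scan * np.cos(angles)
    ys = scan * np.sin(angles)

    blobs, current = [], []
    for i in np.flatnonzero(foreground):
        if current:
            prev = current[-1]
            gap = np.hypot(xs[i] - xs[prev], ys[i] - ys[prev])
            if i != prev + 1 or gap > gap_thresh:
                blobs.append(current)
                current = []
        current.append(i)
    if current:
        blobs.append(current)

    return [(float(xs[b].mean()), float(ys[b].mean())) for b in blobs]
```

The background scan would be recorded once with the room empty; anything closer than the background along a beam is then treated as a moving entity.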

For multiple person tracking, we find the correspondence between blobs on the floor and inertial sensors. During walking, each foot alternates between moving in the air and landing on the floor. In the floor sensing data, blobs therefore appear and disappear repeatedly, since the LRF is set only a few centimetres above the floor and cannot measure the foot while it moves in the air. When the foot lands on the floor, a blob appears, and the inertial sensor of the foot detects the stopping of motion. When the foot leaves the floor, the blob disappears, and the inertial sensor detects the starting of motion. A blob and an inertial sensor are matched to each other by comparing the timing of these events (Figure 9).
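One simple way to realize this timing check is sketched below: each blob is paired with the inertial sensor whose stop events lie closest in time to the blob's appearance events. The greedy one-to-one assignment, the `max_offset` threshold and the function names are assumptions for illustration only.

```python
def mean_offset(blob_times, sensor_times):
    """Mean time gap between each blob appearance and the nearest stop event."""
    return sum(min(abs(t - s) for s in sensor_times) for t in blob_times) / len(blob_times)


def match_blobs_to_sensors(blob_landings, sensor_stops, max_offset=0.15):
    """Pair blobs with inertial sensors by comparing event timings.

    blob_landings: {blob_id: [t, ...]} times (s) at which the blob appeared.
    sensor_stops:  {sensor_id: [t, ...]} times (s) at which the sensor stopped moving.
    Pairs are accepted greedily from the smallest mean offset upwards, each
    blob and each sensor being used at most once.
    """
    pairs = sorted(
        (mean_offset(bt, st), blob_id, sensor_id)
        for blob_id, bt in blob_landings.items()
        for sensor_id, st in sensor_stops.items()
        if bt and st
    )
    matches, used_sensors = {}, set()
    for cost, blob_id, sensor_id in pairs:
        if cost > max_offset:
            break  # remaining pairs are even worse
        if blob_id in matches or sensor_id in used_sensors:
            continue
        matches[blob_id] = sensor_id
        used_sensors.add(sensor_id)
    return matches
```

The same comparison could be applied to blob disappearance and motion start events to make the match more reliable.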

2.6. Tracking of Non-Static Furniture

In the case of the service trolley and the wheelchair, we have to check all possible combinations of four candidate blobs that correspond to the wheels and match them with the geometrical restrictions of the specific object. In the case of the service trolley, the four wheels form a specific rectangle; and in the case of the wheelchair, they form a particular polygon.
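The combination check for the trolley can be sketched as below: every set of four blobs is tested against the six pairwise distances of the expected wheel rectangle. The wheel spacing, the tolerance and the name `find_rectangle` are assumptions, and comparing sorted distances is a simple test that may not exclude every non-rectangular arrangement.

```python
import math
from itertools import combinations


def find_rectangle(blobs, width, length, tol=0.03):
    """Return the first four blobs whose pairwise distances match a rectangle.

    blobs: list of (x, y) blob centres in metres.
    width, length: expected wheel spacing of the trolley in metres.
    A rectangle has two sides of each length and two equal diagonals, so the
    sorted pairwise distances of a candidate are compared with those values.
    """
    diagonal = math.hypot(width, length)
    expected = sorted([width, width, length, length, diagonal, diagonal])
    for quad in combinations(blobs, 4):
        distances = sorted(math.dist(p, q) for p, q in combinations(quad, 2))
        if all(abs(d - e) <= tol for d, e in zip(distances, expected)):
            return quad
    return None
```

For the wheelchair, the expected distance list would be replaced by the pairwise distances of its particular wheel polygon.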

The general procedure to characterize the different moving entities is as follows. For each laser observation, we first apply a static background subtraction and extract 2D blobs corresponding to the different moving entities inside the room. Each blob is associated with the nearest blob obtained in the previous measurement, thus obtaining a sequence of positions over time for each blob. Using these sequences, we can calculate the motion velocity for each blob. In addition, we obtain the velocity information of each moving entity provided by its inertial sensor. We then calculate the correlation between the velocity obtained by the inertial sensor and the velocity of each moving blob. A high correlation value indicates that the blob is a candidate for the specific entity. Then, the entity is tracked as the centre of the blobs. To track robustly under occlusion, a standard Kalman filter [36] with the state vector [x, y, θ, ẋ, ẏ]ᵀ is utilized, where (x, y), θ and (ẋ, ẏ) are the position, the direction and the velocity, respectively.
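A linear Kalman filter over this state vector can be sketched as follows. The constant-velocity motion model, the assumption that position and direction are measured directly, and the noise levels `q` and `r` are choices made for this example; the paper only specifies the state vector.

```python
import numpy as np


class FurnitureKalman:
    """Kalman filter with state [x, y, theta, vx, vy] (constant-velocity sketch)."""

    def __init__(self, x0, dt=0.1, q=0.05, r=0.02):
        self.x = np.asarray(x0, dtype=float)
        self.P = np.eye(5)
        # Position integrates velocity; theta is modelled as slowly varying.
        self.F = np.eye(5)
        self.F[0, 3] = dt
        self.F[1, 4] = dt
        # Assumed measurement: (x, y, theta) from the matched blobs.
        self.H = np.zeros((3, 5))
        self.H[0, 0] = self.H[1, 1] = self.H[2, 2] = 1.0
        self.Q = q * np.eye(5)
        self.R = r * np.eye(3)

    def predict(self):
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q

    def update(self, z):
        residual = np.asarray(z, dtype=float) - self.H @ self.x
        # Wrap the angular residual to [-pi, pi).
        residual[2] = (residual[2] + np.pi) % (2 * np.pi) - np.pi
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ residual
        self.P = (np.eye(5) - K @ self.H) @ self.P

    def step(self, z=None):
        """Advance one time step; pass z=None while the entity is occluded."""
        self.predict()
        if z is not None:
            self.update(z)
        return self.x.copy()
```

During an occlusion no measurement is available, so `step(None)` only runs the prediction and the estimate continues along the last estimated velocity until the blobs are seen again.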

3. Service Robot

People inside our intelligent room are assisted by a SmartPal humanoid service robot from Yaskawa Electric Corporation (see Figure 10). This robot is responsible for fetching objects or pointing to them. The robot is composed of a mobile platform, two arms with seven joints and one-joint grippers used as hands. In addition, we equipped the robot with an RGB-D camera, which is activated for object recognition in restricted regions of interest and only under specific requests. In order to maintain the privacy of people, we do not use this camera for general vision purposes. Additional RFID readers are situated on the hands and front of the robot, as shown in Figure 10.

3.1. Visual Memory System

Our service robot is equipped with a visual memory system, which helps in the task of object searching and finding. The visual memory system is used by the robot to detect changes of predefined places where objects usually appear. In our case, we restrict the application of this visual system to the table of our intelligent room (see Figure 1). The reason for that is to retain the privacy of the people as much as possible and to avoid registering images of the user during his daily and private activities. The visual memory system is composed of two main steps. In the first one, changes are detected in the area of interest, the top of the table in our case, which usually corresponds to appearance, disappearance or movement of objects. In the second step, the areas corresponding to the changes are analysed, and new objects are categorized.

The first step of our visual memory is responsible for detecting changes in the area of interest, which is a table in our case. The change detector works as follows. At some point in time t1, the service robot takes a snapshot z1 of a table. Since we use an RGB-D camera, our observation is composed of a 3D point cloud. At some later point in time t2, the robot takes a second snapshot z2 of the same table. The positions p1 and p2 of the robot during each observation are known and determined by our localization system, so that we can situate each observation in a global reference system. In addition, we improve the alignment of the two point clouds using the iterative closest point (ICP) algorithm. This step allows us to correct small errors that can occur in our localization system.

For each independent view, we extract the plane corresponding to the top of the table by applying a method based on RANSAC. The remaining points, which pertain to the possible objects on top of the table, are projected to a 2D grid. The cells in this grid are clustered using connected components, and each resulting cluster is assumed to be the 2D representation of a different object on the table. We then compare the 2D clusters in each view and determine the different clusters between the two views, which correspond to changes on the table. The resulting change detection is shown in Figure 11.
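The grid projection, clustering and comparison steps can be sketched as follows, assuming the table plane has already been removed and the remaining points are expressed as (x, y) coordinates on the table. The cell size, grid size, overlap threshold and function names are assumptions for this example, and the overlap test is only one possible way of comparing clusters between views.

```python
from collections import deque

import numpy as np


def occupancy_grid(points_xy, cell=0.02, size=(50, 50)):
    """Project table-top points (metres) onto a boolean 2D grid."""
    grid = np.zeros(size, dtype=bool)
    pts = np.asarray(points_xy, dtype=float).reshape(-1, 2)
    idx = np.floor(pts / cell).astype(int)
    inside = (idx[:, 0] >= 0) & (idx[:, 0] < size[0]) & (idx[:, 1] >= 0) & (idx[:, 1] < size[1])
    grid[idx[inside, 0], idx[inside, 1]] = True
    return grid


def connected_components(grid):
    """Return the 4-connected clusters of occupied cells as sets of (i, j)."""
    seen = np.zeros_like(grid, dtype=bool)
    clusters = []
    for i0, j0 in zip(*np.nonzero(grid)):
        if seen[i0, j0]:
            continue
        seen[i0, j0] = True
        queue, cells = deque([(int(i0), int(j0))]), set()
        while queue:
            i, j = queue.popleft()
            cells.add((i, j))
            for ni, nj in ((i + 1, j), (i - 1, j), (i, j + 1), (i, j - 1)):
                if 0 <= ni < grid.shape[0] and 0 <= nj < grid.shape[1]:
                    if grid[ni, nj] and not seen[ni, nj]:
                        seen[ni, nj] = True
                        queue.append((ni, nj))
        clusters.append(cells)
    return clusters


def changed_clusters(grid_before, grid_after, min_overlap=0.5):
    """Clusters in the later view that were mostly empty in the earlier view."""
    changes = []
    for cells in connected_components(grid_after):
        overlap = sum(bool(grid_before[c]) for c in cells) / len(cells)
        if overlap < min_overlap:
            changes.append(cells)
    return changes
```

Calling `changed_clusters(grid_before, grid_after)` reports objects that appeared or arrived at a new place; swapping the two arguments reports objects that disappeared or left their old place.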

3.2. Object Categorization

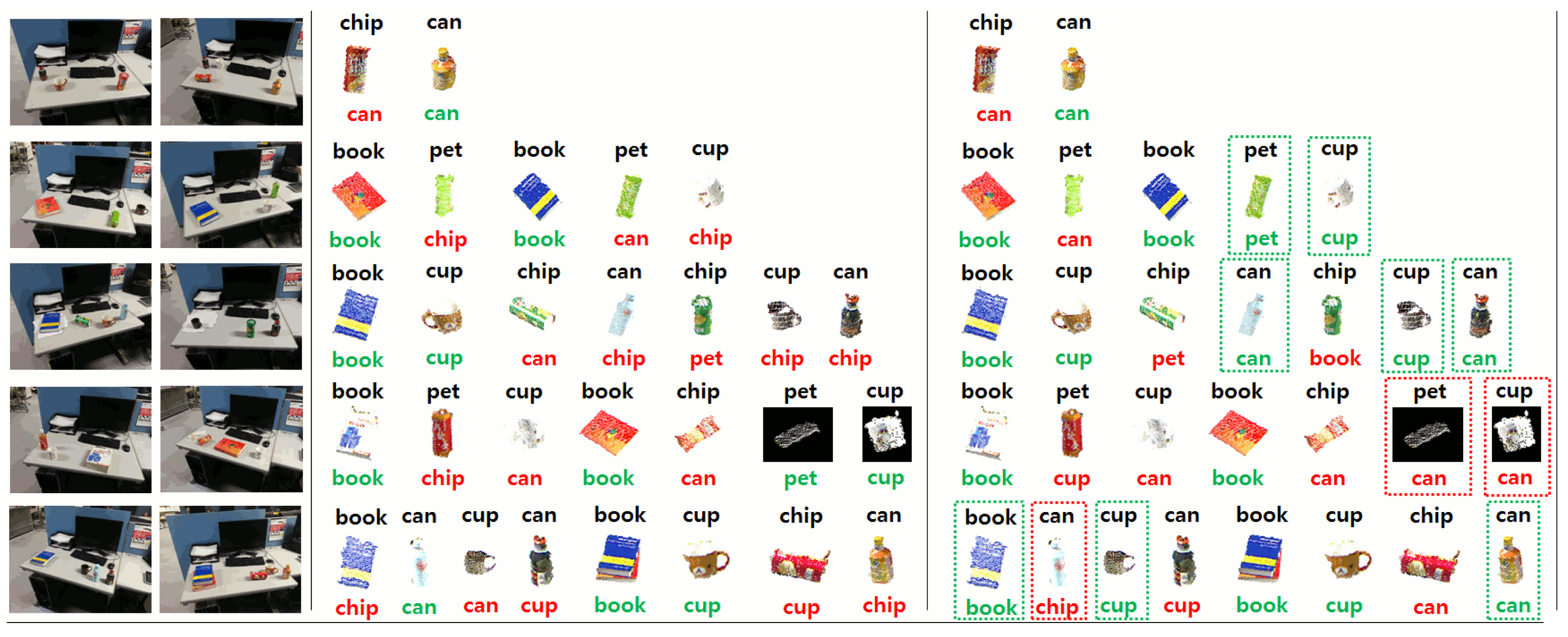

The point clusters corresponding to possible changes on the table are further categorized into a set of predefined object categories contained in our database. This database contains typical commodities, as shown in Figure 12. We extend this dataset with different views of each object, as shown in Figure 13.

Our method finds the best matching between the point clusters representing possible objects and the objects in the database. Our matching method is based on correspondence grouping [37] using the signature of histograms of orientations (SHOT) 3D surface descriptor [38]. In our experiments, we used two different approaches to find the best matching. In the first method, the best matching is obtained as the minimum distance according to:

The second method uses distance based on the centroid and standard deviation of each component in the descriptor for each cluster, as introduced in [39].
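One possible reading of this second distance is sketched below: each cluster is summarized by the per-component mean (centroid) and standard deviation of its descriptors, and two clusters are compared through these summaries. The exact formula of [39] is not given here, so the way the two terms are combined, and all function names, are assumptions.

```python
import numpy as np


def summarize(descriptors):
    """Per-component centroid and standard deviation of a set of descriptors."""
    d = np.asarray(descriptors, dtype=float)
    return d.mean(axis=0), d.std(axis=0)


def summary_distance(desc_a, desc_b):
    """Distance between two clusters based on their descriptor summaries.

    Assumed combination: Euclidean distance between centroids plus
    Euclidean distance between standard deviation vectors.
    """
    (mu_a, sd_a), (mu_b, sd_b) = summarize(desc_a), summarize(desc_b)
    return float(np.linalg.norm(mu_a - mu_b) + np.linalg.norm(sd_a - sd_b))


def categorize(cluster_descriptors, database):
    """Return the database category with the smallest summary distance.

    database: {category_name: array of descriptors, one row per keypoint}.
    """
    return min(database, key=lambda name: summary_distance(cluster_descriptors, database[name]))
```

Because each cluster is reduced to two fixed-length vectors, this comparison does not require point-to-point correspondences between the cluster and the database object.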

A comparison of object categorization results using different methods is presented in Section 5.

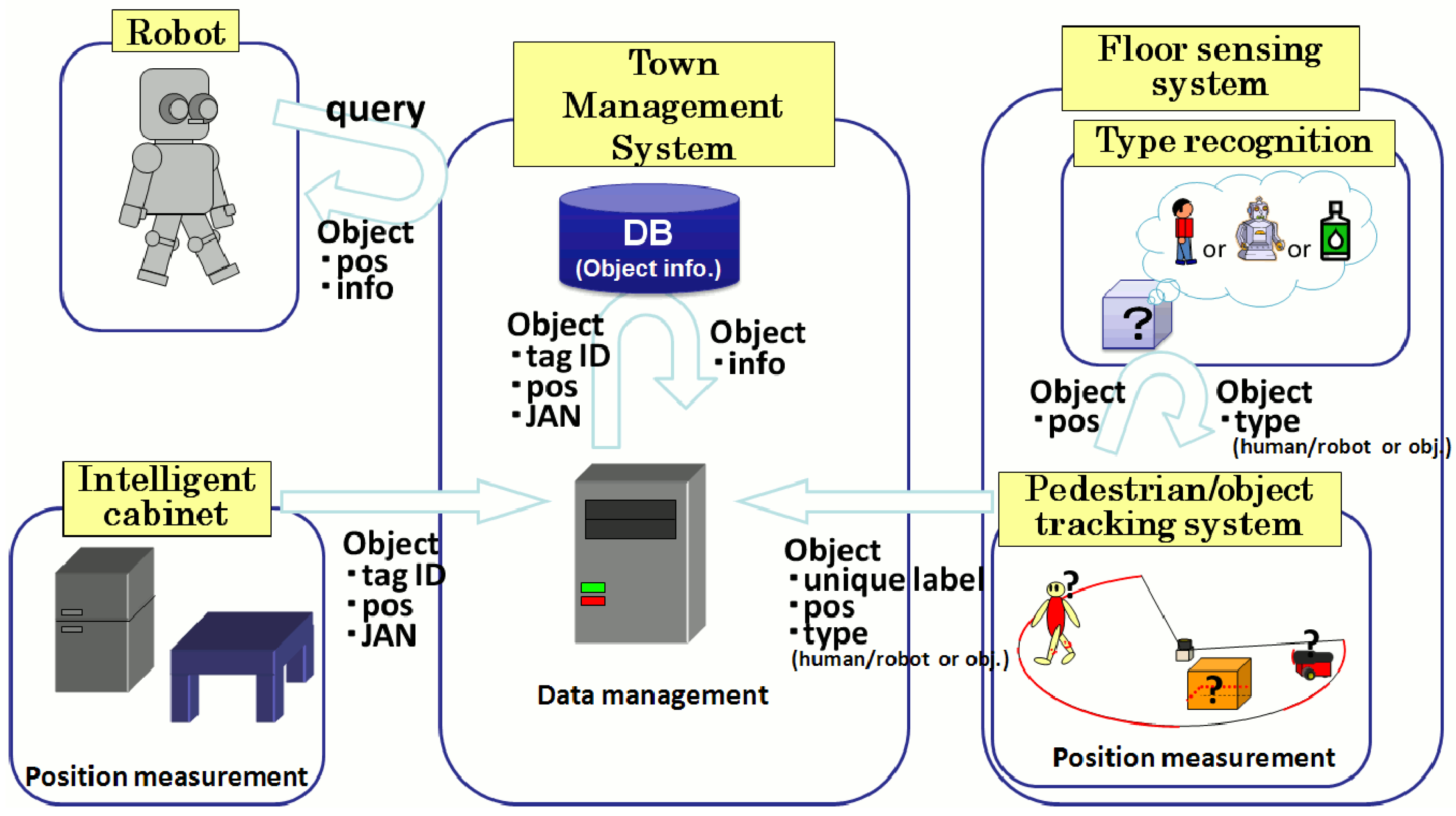

4. Town Management System

All of the sensor modalities that support our intelligent room are connected to our town management system (TMS) [18]. The TMS integrates the data coming from the different sensor modalities into a database that contains the information about the state of the environment. Moreover, the TMS communicates with the service robot and provides it with real-time information on its dynamically changing surroundings. The information flow between our intelligent room and the TMS is shown in Figure 14.

5. Experiments

In this section, we present several quantitative and qualitative experiments showing the capabilities of the informationally structured room and our humanoid service robot to solve different situations. We first show the performance of our tracking system for the service trolley and the wheelchair. Then, we present results showing the performance of our 3D object categorization system. Finally, we present two different scenarios in which the informationally structured room interacts with our service robot.

5.1. Tracking of Movable Furniture

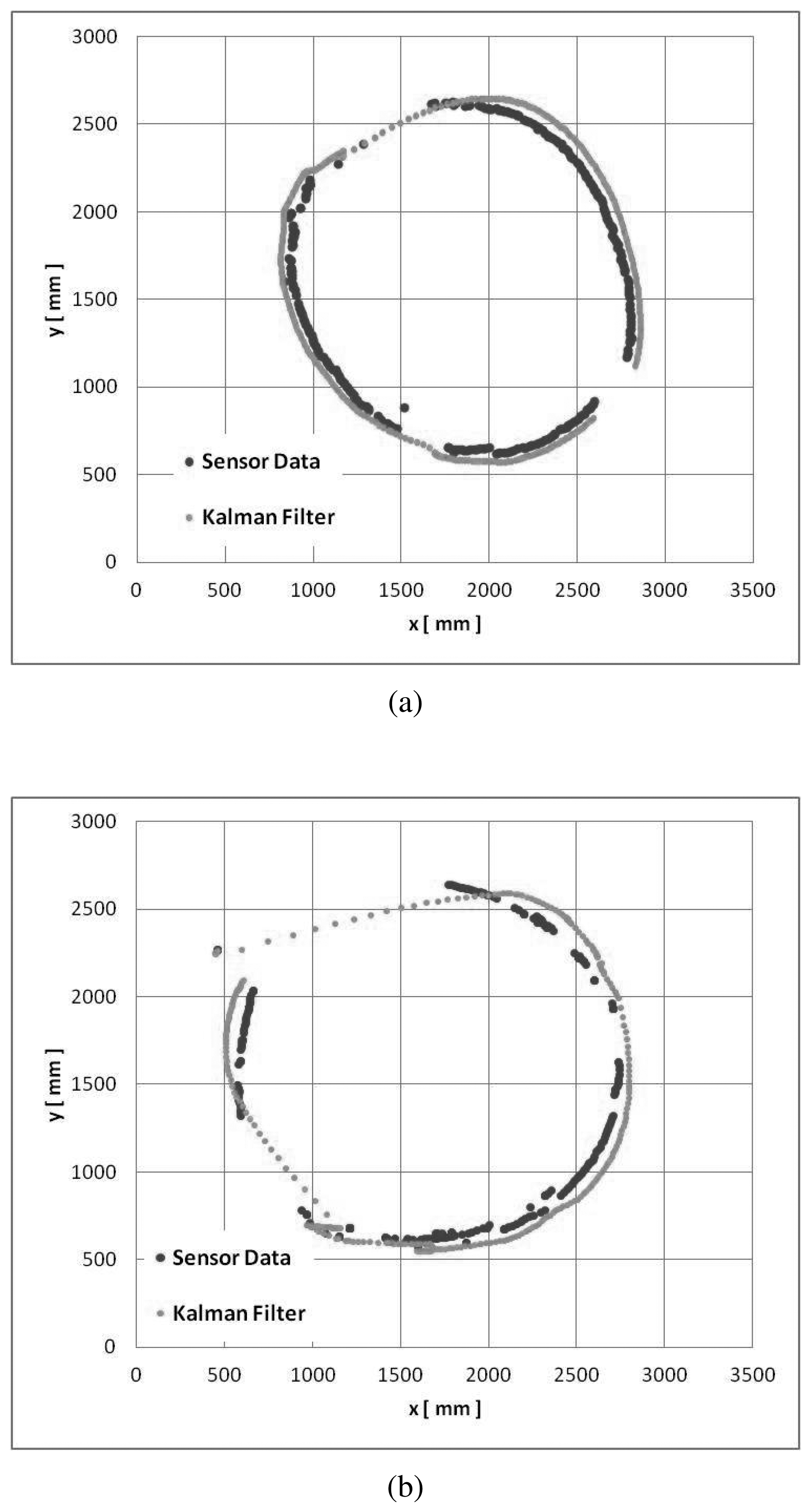

In the following experiment, we show the performance of our tracking system for non-static pieces of furniture. In particular, we show results for the tracking of the service trolley and wheelchair used in our room. These objects are shown in Figure 4. In this experiment, we move the service trolley and the wheelchair in circles in the middle of the room. Both objects were moved simultaneously inside the room, as shown in Figure 15. In this way, we also want to check the robustness of our different object detectors.

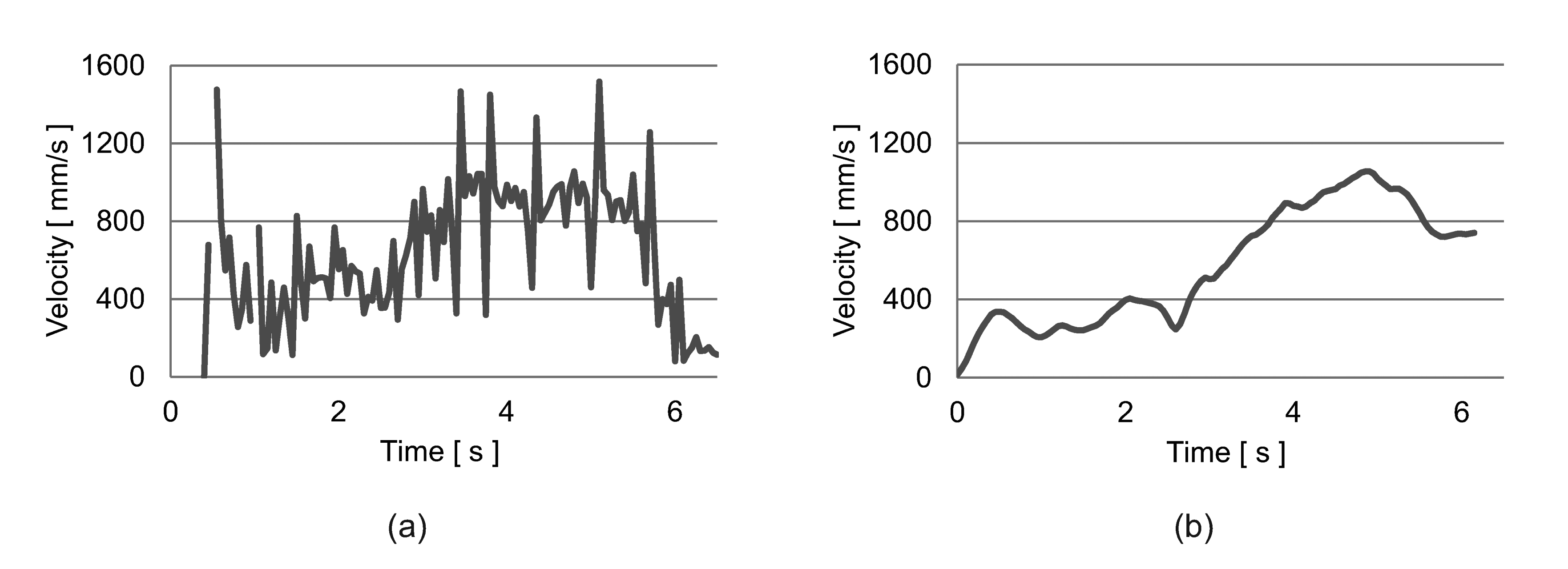

In Figure 16, we can see a comparison between the velocity estimated by the floor sensing system and the velocity estimated by the inertial sensor for the service trolley. These two velocity profiles are correlated and used to detect the service trolley. A similar approach is used for the wheelchair. We further apply a Kalman filter to improve the tracking of the movable furniture. Figure 17 shows the final tracking results for the trolley and the wheelchair.
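The comparison of the two velocity profiles can be sketched as a correlation test between the speed of each blob track and the speed reported by the inertial sensor. The use of the Pearson coefficient, the `min_corr` threshold and the function names are assumptions for this example.

```python
import numpy as np


def speed_from_track(positions, dt):
    """Speed (m/s) between consecutive (x, y) positions sampled every dt seconds."""
    p = np.asarray(positions, dtype=float)
    return np.linalg.norm(np.diff(p, axis=0), axis=1) / dt


def best_matching_blob(blob_tracks, imu_speed, dt, min_corr=0.8):
    """Return (blob_id, correlation) of the track best correlated with the IMU speed.

    blob_tracks: {blob_id: [(x, y), ...]} positions over time.
    imu_speed: speed profile from the inertial sensor on the same time base.
    Returns (None, None) when no track reaches `min_corr`.
    """
    imu_speed = np.asarray(imu_speed, dtype=float)
    best_id, best_corr = None, None
    for blob_id, positions in blob_tracks.items():
        v = speed_from_track(positions, dt)
        n = min(len(v), len(imu_speed))
        if n < 2 or np.std(v[:n]) == 0 or np.std(imu_speed[:n]) == 0:
            continue  # correlation is undefined for constant profiles
        corr = float(np.corrcoef(v[:n], imu_speed[:n])[0, 1])
        if corr >= min_corr and (best_corr is None or corr > best_corr):
            best_id, best_corr = blob_id, corr
    return best_id, best_corr
```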

5.2. Single Person Identification

In this experiment, we verify the identification of persons using our intelligent slippers. For this, we have captured the gait motions of 12 persons. The auto-regressive parameters for our models are calculated using eight steps, and the final feature vector is obtained by normalizing the parameters. To identify a new person, we compare the newly obtained data with the calculated model for each person, and a minimum distance criterion is applied.
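The identification procedure can be sketched as follows: autoregressive coefficients are fitted to a gait signal by least squares, normalized to form a feature vector, and compared with stored per-person models by minimum distance. The model order, the least-squares fit, the unit-norm normalization and the Euclidean distance are assumptions for this example; the paper does not state these details.

```python
import numpy as np


def ar_coefficients(signal, order=4):
    """Least-squares fit of x[t] = a_1 x[t-1] + ... + a_order x[t-order]."""
    x = np.asarray(signal, dtype=float)
    # Column i holds x[t-i] for t = order .. len(x)-1.
    lagged = np.column_stack([x[order - i: len(x) - i] for i in range(1, order + 1)])
    target = x[order:]
    coeffs, *_ = np.linalg.lstsq(lagged, target, rcond=None)
    return coeffs


def feature(signal, order=4):
    """Normalized coefficient vector used as the gait feature (assumed unit norm)."""
    a = ar_coefficients(signal, order)
    return a / np.linalg.norm(a)


def identify(signal, models, order=4):
    """Return the person whose stored feature vector is closest to the new one.

    models: {person_id: feature vector computed from training data}.
    """
    f = feature(signal, order)
    return min(models, key=lambda pid: float(np.linalg.norm(f - models[pid])))
```

In practice, the model for each person would be built from several recorded steps, and the acceleration and angular velocity channels could each contribute their own coefficients to the feature vector.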

To test our system, we performed cross-validation 10,000 times by changing the training and test data randomly. The average success rate was 76.0% with a standard deviation of 4.5%.

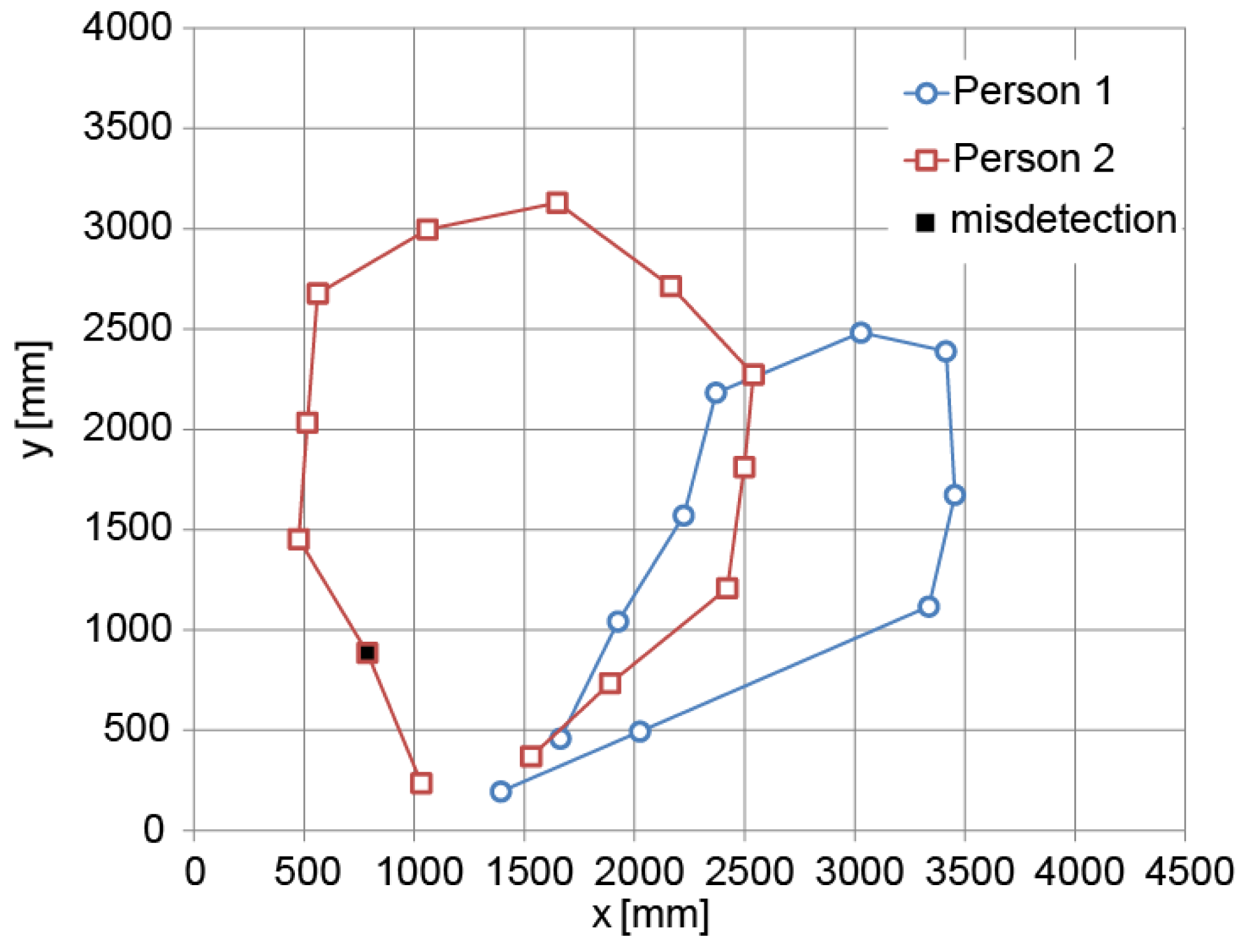

5.3. Tracking of Multiple Persons

To show the performance of our tracking system for multiple persons, we carried out an experiment in which two persons walked simultaneously inside the room, as shown in Figure 18. The detection of each person is depicted in Figure 19. Most of the feet are correctly detected for each person. The detection rate was 96% in this case.

5.4. Categorization of New and Moved Detected Objects

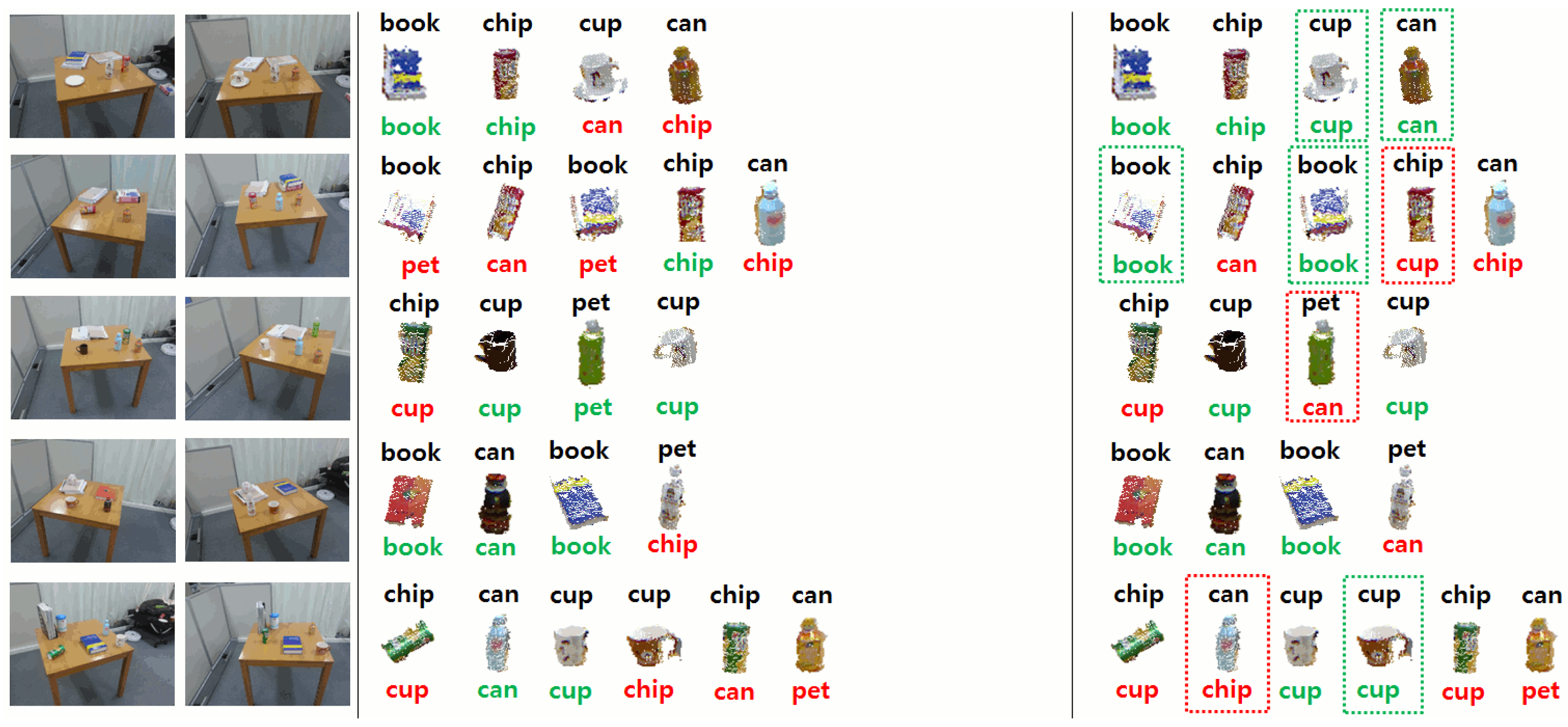

In the following experiments, we present quantitative and qualitative results for the visual memory system used by the service humanoid robot working in the intelligent room. For each individual experiment, the robot took two observations of the same scene from different viewpoints. Then, after applying the change detection approach described in Section 3.1, we obtained the clusters corresponding to new or moved objects in the scene. These objects were then categorized into the different categories in our dataset (see Figure 12). We repeated the experiments in different scenarios by changing the tables and the objects on them.

As explained in Section 3.2, we compared two different methods to calculate the distance used to find the best match. Table 2 shows the results of both methods using our dataset of objects. For this experiment, we divided our dataset of objects (see Section 3.2) into random training and test sets, and we tested the different distance measures.

In addition, we show in Figures 20–22 examples of the detection and categorization results in different real situations inside our intelligent room.

6. Scenarios

In this section, we present different working scenarios in which our service robot interacts with the user inside the intelligent room. These scenarios are aimed at presenting qualitative and quantitative results and to show a complete view of our integrated system.

6.1. Simultaneous People and Object Detection

In our first scenario, our system aims to detect people together with the objects with which they are interacting.

The scenario is presented as follows.

Initially, Object_1 is placed inside one of the intelligent cabinets, and Object_2 is placed on the table.

Person_1 enters the room and sits on the bed.

Person_2 enters the room and goes to the intelligent cabinet.

Person_2 takes Object_1 from the intelligent cabinet and puts it on the table.

Person_2 leaves the room.

Person_1 approaches the table and takes Object_1 and Object_2.

Person_1 puts Object_1 and Object_2 inside the intelligent cabinet.

Person_1 leaves the room.

The different situations in this scenario are shown in Figure 23. Snapshots of our database with the corresponding timestamps are shown in Table 3. Data corresponding to the latest timestamp are shown first. Initially (timestamp = 15:39:06), Object_2 (ID = 54) is located outside of the cabinet (state = 0). Then (timestamp = 15:40:09), Object_1 (ID = 53) is located in the intelligent cabinet (ID = 15, state = 1), and the corresponding position and weight inside the cabinet are recorded. At 15:42:05, Object_1 is removed from the cabinet. The floor sensing system detected that Person_2 stood near the intelligent cabinet from 15:42:04 to 15:42:06. From this, the object tracker estimated that Person_2 moved Object_1. Later, Object_1 is detected again at 15:42:20, and Object_2 is detected at 15:42:21 by the cabinet. The system successfully estimates movements of objects and persons.
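The association between a cabinet event and a tracked person can be sketched as a search for the person who was closest to the cabinet around the event time. The distance radius, the time window, the track format and the name `who_moved` are assumptions for this example, not the object tracker's actual rule.

```python
import math
from datetime import datetime, timedelta


def who_moved(event_time, cabinet_xy, tracks, radius=1.0, window=timedelta(seconds=1)):
    """Return the person nearest to the cabinet around the time of an event.

    event_time: datetime at which the cabinet reported the object change.
    cabinet_xy: (x, y) position of the cabinet in metres.
    tracks: {person_id: [(datetime, x, y), ...]} from the floor sensing system.
    Only samples within `window` of the event and within `radius` of the
    cabinet are considered; None is returned when nobody qualifies.
    """
    best_person, best_distance = None, radius
    for person, samples in tracks.items():
        for t, x, y in samples:
            if abs(t - event_time) <= window:
                distance = math.hypot(x - cabinet_xy[0], y - cabinet_xy[1])
                if distance <= best_distance:
                    best_person, best_distance = person, distance
    return best_person
```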

6.2. Finding Moved Objects

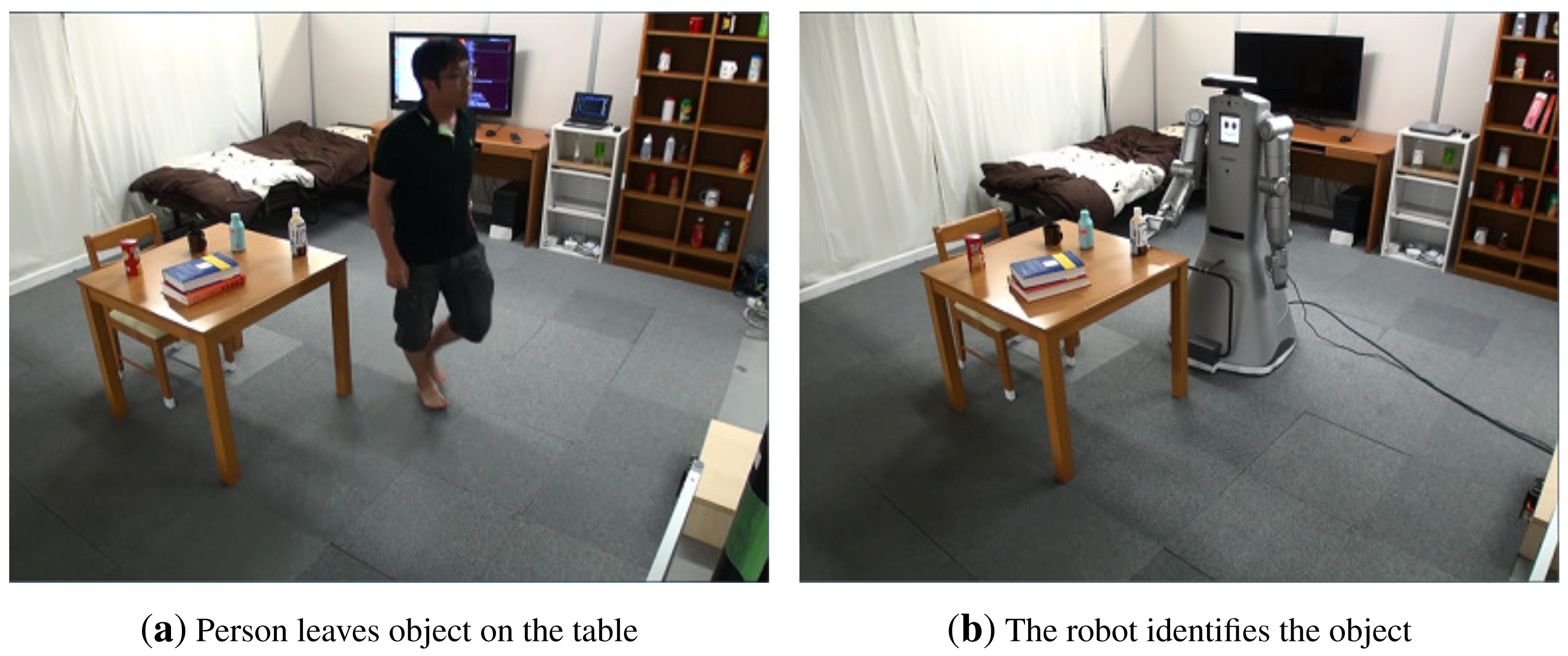

In this experiment, we show qualitative results demonstrating the capability of our system to find the position of an object that was moved by a person. This scenario makes use of several sensor modalities inside the intelligent room, in particular the intelligent cabinets, the floor sensing system and the visual memory of the robot. The idea of this experiment is to show how the room can update the position of objects to make them available in the future to the inhabitant or to the robot.

The sequence of actions carried out by the person inside the room is as follows:

A person enters the room and picks up a beverage from the intelligent cabinet.

This person walks around the room close to the bed, desk and table.

The person drinks the beverage in front of the table and leaves the bottle on the table.

Finally, the person exits the room.

While the person is acting, our intelligent room operates as follows:

The floor system starts tracking the person after the bottle is taken from the intelligent cabinet.

The different pieces of furniture are assigned a “residence time”, which is the time a person spends close to it.

After the person leaves the room, the system orders the different pieces of furniture according to their residence time.

The piece of furniture with the highest residence time is selected (the table in this case).

The robot approaches the table and identifies the cluster corresponding to the change (bottle).

The robot identifies the commodity using the RFID tag and updates its position in the database.

If the object is not found in the current piece of furniture, then we continue with the next one having the highest residence time.
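The residence-time ranking in the steps above can be sketched as follows. The proximity radius, the sampling interval, the furniture coordinates and the function names are assumptions for this example.

```python
import math


def residence_times(track, furniture, dt, radius=0.8):
    """Accumulate the time a tracked person spends close to each piece of furniture.

    track: [(x, y), ...] person positions sampled every `dt` seconds.
    furniture: {name: (x, y)} reference position of each piece of furniture.
    """
    times = {name: 0.0 for name in furniture}
    for x, y in track:
        for name, (fx, fy) in furniture.items():
            if math.hypot(x - fx, y - fy) <= radius:
                times[name] += dt
    return times


def search_order(times):
    """Furniture names ordered from the highest to the lowest residence time."""
    return sorted(times, key=times.get, reverse=True)
```

The robot would then visit the furniture in the returned order and stop at the first place where the visual memory system and the RFID reader confirm the object.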

Some snapshots of the previous scenario are shown in Figure 24. A full video with the experiment is available at [40].

6.3. Go-And-Fetch Task

In this scenario, our service robot serves a beverage from the service trolley to the person on the bed. The different steps in this situation are as follows:

A person enters the room pushing the service trolley.

The floor sensing system identifies and measures the position of the service trolley.

The person on the bed asks the robot to go and fetch the beverage on the service trolley.

The robot approaches the service trolley and grasps the object from it.

The service robot hands over the object to the person on the bed.

Different snapshots of the previous situation are shown in Figure 25. In this scenario, the position of the service trolley is determined using the floor sensing system and inertial sensor, as explained in Section 2.6. Moreover, the object position is determined with the tag reader and force sensor on the service trolley. The robot grasps the object by using our planning method [41]. In this case, we have replaced the Kinect camera with a three-camera stereo system [42]. This demonstration is supported by the NEDO Intelligent Robotic Technology (RT) Software Project. Several modules, such as voice recognition and stereo vision, are connected to our system. A full video of the experiment is available at [40].

7. Conclusions

In this paper, we have introduced our informationally structured room, which is designed to support daily life activities of elderly people. Our room is equipped with different sensor modalities that are naturally integrated in the environment, reducing the invasion of the personal space of the habitant. In addition, the room is supported by a humanoid robot, which uses the information of the environment to assist the habitants of the room.

Privacy is an important concern in our work, and for this reason, our room does not contain sensors that can invade the private life of people. We do not use vision cameras to track people activity; instead, we use the laser range finder of our floor sensing system and the IMUs attached to the slippers. The humanoid robot is equipped with an RGB-D camera, but its use is restricted to object detection and manipulation. Nevertheless, information about people activity is recorded in the TMS, and that can present ethical problems. However, the TMS is local to the room and does not need external communication, thus reducing the risk of propagating personal information.

In this work, we have concentrated on the go-and-fetch task, which we expect to be one of the most demanding tasks for the elderly in their daily life. In that respect, we have presented the different subsystems that are involved in this task and have shown several scenarios to demonstrate the suitability of the different sensor modalities that are used in our room.

In the future, we aim to design and prepare a long-term experiment in which we can test the complete system for longer periods of time and more complex situations.

Acknowledgements

The authors would like to thank the members of the NEDO Intelligent RT Software Project.

Author Contributions

Tokuo Tsuji, Oscar Martinez Mozos, Tsutomu Hasegawa, Ken'ichi Morooka and Ryo Kurazume were responsible for the design of the intelligent subsystems and corresponding experiments presented in this paper. Moreover, they have closely supervised and participated in their implementation. Hyunuk Chae, YoonSeok Pyo and Kazuya Kusaka were responsible for the implementation of the different software and hardware subsystems that appear in this paper and for running the experiments and analysing the corresponding data.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Nugent, C.; Finlay, D.; Fiorini, P.; Tsumaki, Y.; Prassler, E. Home Automation as a Means of Independent Living. IEEE Trans. Autom. Sci. Eng. 2008, 5, 1–9.

- Lee, J.; Hashimoto, H. Intelligent Space–Concept and contents. Adv. Rob. 2002, 16, 265–280.

- Kayama, K.; Yairi, I.; Igi, S. Semi-Autonomous Outdoor Mobility Support System for Elderly and Disabled People. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 27–31 October 2003; pp. 2606–2611.

- Mori, T.; Takada, A.; Noguchi, H.; Harada, T.; Sato, T. Behavior Prediction Based on Daily-Life Record Database in Distributed Sensing Space. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 1833–1839.

- Nakauchi, Y.; Noguchi, K.; Somwong, P.; Matsubara, T.; Namatame, A. Vivid Room: Human Intention Detection and Activity Support Environment for Ubiquitous Autonomy. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 27–31 October 2003; pp. 773–778.

- Nishida, Y.; Aizawa, H.; Hori, T.; Hoffman, N.; Kanade, T.; Kakikura, M. 3D Ultrasonic Tagging System for Observing Human Activity. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 27–31 October 2003; Volume 1, pp. 785–791.

- Murakami, K.; Matsuo, K.; Hasegawa, T.; Kurazume, R. Position Tracking and Recognition of Everyday Objects by using Sensors Embedded in an Environment and Mounted on Mobile Robots. Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Vilamoura, Portugal, 7–12 October 2012; pp. 2210–2216.

- Kawamura, K.; Iskarous, M. Trends in Service Robots for the Disabled and the Elderly. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Munich, Germany, 12–16 September 1994; Volume 3, pp. 1647–1654.

- Roy, N.; Baltus, G.; Fox, D.; Gemperle, F.; Goetz, J.; Hirsch, T.; Margaritis, D.; Montemerlo, M.; Pineau, J.; Schulte, J.; et al. Towards Personal Service Robots for the Elderly. Proceedings of the Workshop on Interactive Robots and Entertainment (WIRE), Pittsburgh, PA, USA, 30 April–1 May 2000.

- Kim, M.; Kim, S.; Park, S.; Choi, M.T.; Kim, M.; Gomaa, H. Service robot for the elderly. IEEE Robot. Autom. Mag. 2009, 16, 34–45.

- McColl, D.; Louie, W.Y.; Nejat, G. Brian 2.1: A socially assistive robot for the elderly and cognitively impaired. IEEE Robot. Autom. Mag. 2013, 20, 74–83.

- Chen, T.; Ciocarlie, M.; Cousins, S.; Grice, P.; Hawkins, K.; Hsiao, K.; Kemp, C.; King, C.H.; Lazewatsky, D.; Leeper, A.; et al. Robots for humanity: Using assistive robotics to empower people with disabilities. IEEE Robot. Autom. Mag. 2013, 20, 30–39.

- Hasegawa, T.; Murakami, K. Robot Town Project: Supporting Robots in an Environment with Its Structured Information. Proceedings of the International Conference on Ubiquitous Robots and Ambient Intelligence, Seoul, Korea, 28–31 October 2006; pp. 119–123.

- Murakami, K.; Hasegawa, T.; Kurazume, R.; Kimuro, Y. A Structured Environment with Sensor Networks for Intelligent Robots. Proceedings of the IEEE International Conference on Sensors, Lecce, Italy, 26–29 October 2008; pp. 705–708.

- Kim, B.; Tomokuni, N.; Ohara, K.; Tanikawa, T.; Ohba, K.; Hirai, S. Ubiquitous Localization and Mapping for Robots with Ambient Intelligence. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 4809–4814.

- Saffiotti, A.; Broxvall, M. PEIS Ecologies: Ambient Intelligence meets Autonomous Robotics. Proceedings of the International Conference on Smart Objects and Ambient Intelligence (sOc-EUSAI), Grenoble, France, 12–14 October 2005; pp. 275–280.

- Linner, T.; Güttler, J.; Bock, T.; Georgoulas, C. Assistive robotic micro-rooms for independent living. Autom. Constr. 2015, 51, 8–22.

- Murakami, K.; Hasegawa, T.; Kurazume, R.; Kimuro, Y. Supporting Robotic Activities in Informationally Structured Environment with Distributed Sensors and RFID Tags. J. Robot. Mechatron. 2009, 21, 453–459.

- Murakami, K.; Hasegawa, T.; Shigematsu, K.; Sueyasu, F.; Nohara, Y.; Ahn, B.W.; Kurazume, R. Position Tracking System of Everyday Objects in an Everyday Environment. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Taipei, Taiwan, 18–22 October 2010; pp. 3712–3718.

- Murakami, K.; Matsuo, K.; Hasegawa, T.; Nohara, Y.; Kurazume, R.; Ahn, B.W. Position Tracking System of Everyday Objects in an Everyday Environment. Proceedings of the IEEE Sensors, Waikoloa, HI, USA, 1–4 November 2010; pp. 1879–1882.

- Mozos, O.M.; Tsuji, T.; Chae, H.; Kuwahata, S.; Pyo, Y.; Hasegawa, T.; Morooka, K.; Kurazume, R. The Intelligent Room for Elderly Care. In Natural and Artificial Models in Computation and Biology; Springer: Berlin/Heidelberg, Germany, 2013; Volume 7930, pp. 103–112.

- Pyo, Y.; Hasegawa, T.; Tsuji, T.; Kurazume, R.; Morooka, K. Floor Sensing System Using Laser Reflectivity for Localizing Everyday Objects and Robot. Sensors 2014, 14, 7524–7540.

- Nohara, Y.; Hasegawa, T.; Murakami, K. Floor Sensing System Using Laser Range Finder and Mirror for Localizing Daily Life Commodities. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Taipei, Taiwan, 18–22 October 2010; pp. 1030–1035.

- Ngo, T.T.; Makihara, Y.; Nagahara, H.; Mukaigawa, Y.; Yagi, Y. The largest inertial sensor-based gait database and performance evaluation of gait-based personal authentication. Patt. Recogn. 2014, 47, 228–237.

- Whittle, P. Hypothesis Testing in Time Series Analysis; Hafner Publishing Company: New York, NY, USA, 1951.

- Walker, G. On Periodicity in Series of Related Terms. Proceedings of the Royal Society of London, Series A, Containing Papers of a Mathematical and Physical Character 1931, 131, 518–532.

- Utsumi, A.; Mori, H.; Ohya, J.; Yachida, M. Multiple-view-based Tracking of Multiple Humans. Proceedings of the Fourteenth International IEEE Conference on Pattern Recognition, Brisbane, Australia, 16–20 August 1998; Volume 1, pp. 597–601.

- Hu, W.; Hu, M.; Zhou, X.; Tan, T.; Lou, J.; Maybank, S. Principal axis-based correspondence between multiple cameras for people tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 663–671.

- Mori, T.; Matsumoto, T.; Shimosaka, M.; Noguchi, H.; Sato, T. Multiple Persons Tracking with Data Fusion of Multiple Cameras and Floor Sensors Using Particle Filters; ECCV Workshop on Multi-camera and Multi-modal Sensor Fusion Algorithms and Applications (M2SFA2): Marseille, France, 18 October 2008. Available online: https://hal.inria.fr/inria-00326752/ (accessed on 9 April 2015).

- Ikeda, T.; Ishiguro, H.; Nishimura, T. People Tracking by Cross Modal Association of Vision Sensors and Acceleration Sensors. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2007), San Diego, CA, USA, 29 October–2 November 2007; pp. 4147–4151.

- Shao, X.; Zhao, H.; Nakamura, K.; Katabira, K.; Shibasaki, R.; Nakagawa, Y. Detection and Tracking of Multiple Pedestrians by Using Laser Range Scanners. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2007), San Diego, CA, USA, 29 October–2 November 2007; pp. 2174–2179.

- Steinhoff, U.; Schiele, B. Dead Reckoning from the Pocket-an Experimental Study. Proceedings of the 2010 IEEE International Conference on Pervasive Computing and Communications (PerCom), Mannheim, Germany, 29 March–2 April 2010; pp. 162–170.

- Mori, T.; Suemasu, Y.; Noguchi, H.; Sato, T. Multiple People Tracking by Integrating Distributed Floor Pressure Sensors and RFID System. Proceedings of the 2004 IEEE International Conference on Systems, Man and Cybernetics, San Diego, CA, USA, 10–13 October 2004; Volume 6, pp. 5271–5278.

- Noguchi, H.; Mori, T.; Sato, T. Object Localization by Cooperation between Human Position Sensing and Active RFID System in Home Environment. SICE J. Control Meas. Syst. Integr. 2011, 4, 191–198.

- Woo, S.; Jeong, S.; Mok, E.; Xia, L.; Choi, C.; Pyeon, M.; Heo, J. Application of WiFi-based indoor positioning system for labor tracking at construction sites: A case study in Guangzhou MTR. Autom. Constr. 2011, 20, 3–13.

- Lauritzen, S.L. Thiele: Pioneer in Statistics; Oxford University Press: Oxford, UK, 2002.

- Tombari, F.; Stefano, L.D. Object Recognition in 3D Scenes with Occlusions and Clutter by Hough Voting. Proceedings of the 4th Pacific-Rim Symposium on Image and Video Technology, Singapore, Singapore, 14–17 November 2010.

- Tombari, F.; Salti, S.; Stefano, L.D. Unique Signatures of Histograms for Local Surface Description. Proceedings of the European Conference on Computer Vision (ECCV), Crete, Greece, 5–11 September 2010.

- Alexandre, L.A. 3D Descriptors for Object and Category Recognition: A Comparative Evaluation. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vilamoura, Portugal, 7–12 October 2012.

- Intelligent Room. Available online: http://fortune.ait.kyushu-u.ac.jp/~tsuji/movies.html (accessed on 15 April 2015).

- Tsuji, T.; Harada, K.; Kaneko, K.; Kanehiro, F.; Maruyama, K. Grasp planning for a multifingered hand with a humanoid robot. J. Robot. Mechatron. 2010, 22, 230.

- Maruyama, K.; Kawai, Y.; Tomita, F. Model-based 3D Object Localization Using Occluding Contours. Proceedings of the Asian Conference on Computer Vision (ACCV), Xi'an, China, 23–27 September 2010.

| Measurement Item | | Proposed System | Previous System [19] |
|---|---|---|---|
| Maximum response time (ms) | | 390 | 610 |
| Position | average error (mm) | 16.4 | 7.2 |
| | maximum error (mm) | 19 | 13 |
| Weight | average error (g) | 28.5 | 31.0 |
| | maximum error (g) | 33 | 55 |
| | measurable minimum value (g) | 54 | 54 |

| Method 1 (%) | Chipstar | Book | Cup | Can | Pet Bottle |
|---|---|---|---|---|---|
| Chipstar | 95.83 | 0.00 | 2.08 | 0.00 | 2.08 |
| Book | 0.00 | 100.00 | 0.00 | 0.00 | 0.00 |
| Cup | 10.42 | 0.00 | 58.33 | 10.42 | 20.83 |
| Can | 6.25 | 0.00 | 12.50 | 72.92 | 8.33 |
| Pet Bottle | 10.42 | 0.00 | 6.25 | 4.17 | 79.17 |

| Method 2 (%) | Chipstar | Book | Cup | Can | Pet Bottle |
|---|---|---|---|---|---|
| Chipstar | 100.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| Book | 0.00 | 100.00 | 0.00 | 0.00 | 0.00 |
| Cup | 14.58 | 0.00 | 66.67 | 6.25 | 12.50 |
| Can | 6.25 | 0.00 | 6.25 | 79.17 | 8.33 |
| Pet Bottle | 10.42 | 2.08 | 16.67 | 0.00 | 70.83 |
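
As a reading aid for the two confusion matrices above, the following minimal sketch averages the diagonal entries of each matrix and looks up the most frequent misclassification for a given object. It assumes that rows are the true class and columns the recognized class, and that every class was tested equally often, in which case the mean of the diagonal equals the overall recognition rate. The function names are illustrative and do not come from the paper.

```python
# Rows: true object class; columns: recognized class (values in %),
# copied from the two confusion matrices above. Row/column roles are an assumption.
CLASSES = ["Chipstar", "Book", "Cup", "Can", "Pet Bottle"]

METHOD_1 = [
    [95.83, 0.00, 2.08, 0.00, 2.08],
    [0.00, 100.00, 0.00, 0.00, 0.00],
    [10.42, 0.00, 58.33, 10.42, 20.83],
    [6.25, 0.00, 12.50, 72.92, 8.33],
    [10.42, 0.00, 6.25, 4.17, 79.17],
]

METHOD_2 = [
    [100.00, 0.00, 0.00, 0.00, 0.00],
    [0.00, 100.00, 0.00, 0.00, 0.00],
    [14.58, 0.00, 66.67, 6.25, 12.50],
    [6.25, 0.00, 6.25, 79.17, 8.33],
    [10.42, 2.08, 16.67, 0.00, 70.83],
]


def mean_recognition_rate(matrix):
    """Average of the diagonal entries (mean per-class recognition rate, in %)."""
    diagonal = [matrix[i][i] for i in range(len(matrix))]
    return sum(diagonal) / len(diagonal)


def most_confused_with(matrix, class_name):
    """Return the wrong class that the given true class is most often mistaken for."""
    row_index = CLASSES.index(class_name)
    errors = [
        (value, CLASSES[j])
        for j, value in enumerate(matrix[row_index])
        if j != row_index
    ]
    return max(errors)[1]


if __name__ == "__main__":
    for name, matrix in (("Method 1", METHOD_1), ("Method 2", METHOD_2)):
        print(name, round(mean_recognition_rate(matrix), 2),
              "Cup is most often confused with", most_confused_with(matrix, "Cup"))
```

Under these assumptions, the averages only summarize the tables; they are not additional results reported by the authors.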

| Time Stamp | id | x | y | z | Weight | State | Place | Event |
|---|---|---|---|---|---|---|---|---|
| 15:42:21.978 | 54 | 298.706 | 124.350 | 620 | 343 | 1 | 15 | object2 detected |
| 15:42:20.032 | 53 | 94.6918 | 122.113 | 620 | 159 | 1 | 15 | object1 detected |
| 15:42:05.778 | 53 | NULL | NULL | NULL | 166 | 0 | 0 | object1 lost |
| 15:40:09.115 | 53 | 199.723 | 116.265 | 620 | 166 | 1 | 15 | object1 detected |
| 15:39:06.223 | 54 | NULL | NULL | NULL | 322 | 0 | 0 | object2 lost |
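
The event log above can be read as a history from which the current state of each object is recovered by taking its most recent entry. The sketch below illustrates that reading in Python. The `ObjectEvent` class, its field names, and the interpretation of State (1 = detected, 0 = lost, inferred from the Event column) are assumptions made for illustration; they do not reflect the actual TMS database schema or API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ObjectEvent:
    time_stamp: str        # "HH:MM:SS.mmm"; all entries assumed to be from the same day
    object_id: int
    x: Optional[float]     # None where the table shows NULL
    y: Optional[float]
    z: Optional[float]
    weight: int
    state: int             # assumed: 1 = detected, 0 = lost
    place: int
    event: str


# Rows copied from the table above, newest first.
LOG = [
    ObjectEvent("15:42:21.978", 54, 298.706, 124.350, 620, 343, 1, 15, "object2 detected"),
    ObjectEvent("15:42:20.032", 53, 94.6918, 122.113, 620, 159, 1, 15, "object1 detected"),
    ObjectEvent("15:42:05.778", 53, None, None, None, 166, 0, 0, "object1 lost"),
    ObjectEvent("15:40:09.115", 53, 199.723, 116.265, 620, 166, 1, 15, "object1 detected"),
    ObjectEvent("15:39:06.223", 54, None, None, None, 322, 0, 0, "object2 lost"),
]


def latest_events(log):
    """Map each object id to its most recent log entry."""
    latest = {}
    # Fixed-width time strings sort chronologically, so later entries overwrite earlier ones.
    for entry in sorted(log, key=lambda e: e.time_stamp):
        latest[entry.object_id] = entry
    return latest


def objects_present(log):
    """Ids of objects whose most recent entry has state 1 (detected)."""
    return sorted(oid for oid, e in latest_events(log).items() if e.state == 1)


if __name__ == "__main__":
    for oid, entry in sorted(latest_events(LOG).items()):
        print(oid, entry.time_stamp, entry.event, (entry.x, entry.y, entry.z))
    print("present:", objects_present(LOG))
```

Replaying only the three oldest rows (`LOG[2:]`) yields no present objects, since both objects were last reported as lost at that point.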

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Tsuji, T.; Mozos, O.M.; Chae, H.; Pyo, Y.; Kusaka, K.; Hasegawa, T.; Morooka, K.; Kurazume, R. An Informationally Structured Room for Robotic Assistance. Sensors 2015, 15, 9438-9465. https://doi.org/10.3390/s150409438
