Human Detection Based on the Generation of a Background Image by Using a Far-Infrared Light Camera

Abstract

1. Introduction

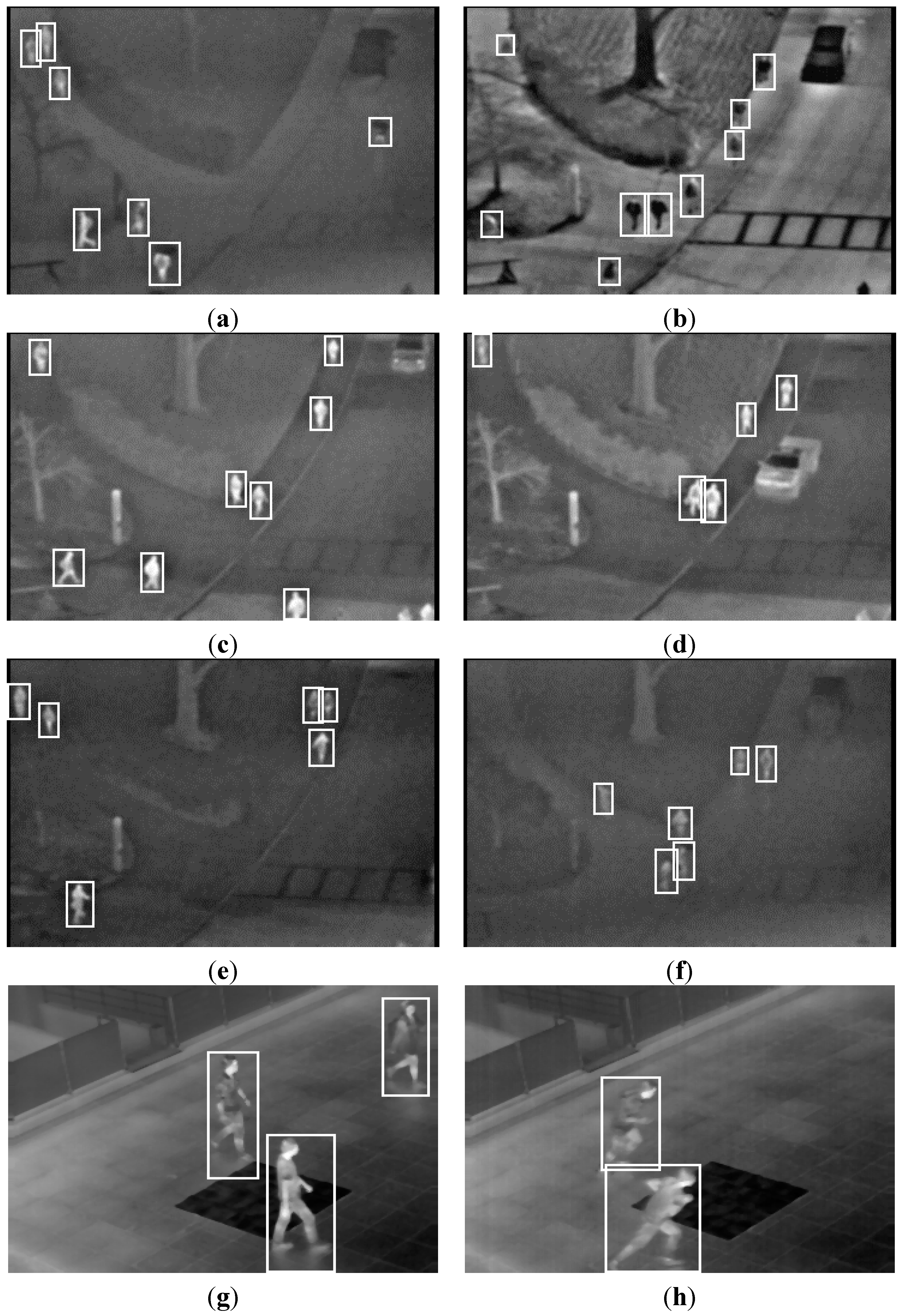

| Category | Without Background Generation [7,8,9,15,16,17,18,19,20] | With Background Generation: Not Adjusting the Parameters for Detection Based on Background Information [21,22,23,24,25,26,27,28,29] | With Background Generation: Adjusting the Parameters for Detection Based on Background Information (Proposed Method) |
|---|---|---|---|
| Examples | | | |
| Advantages | | | |
| Disadvantages | | | |

2. Proposed Method

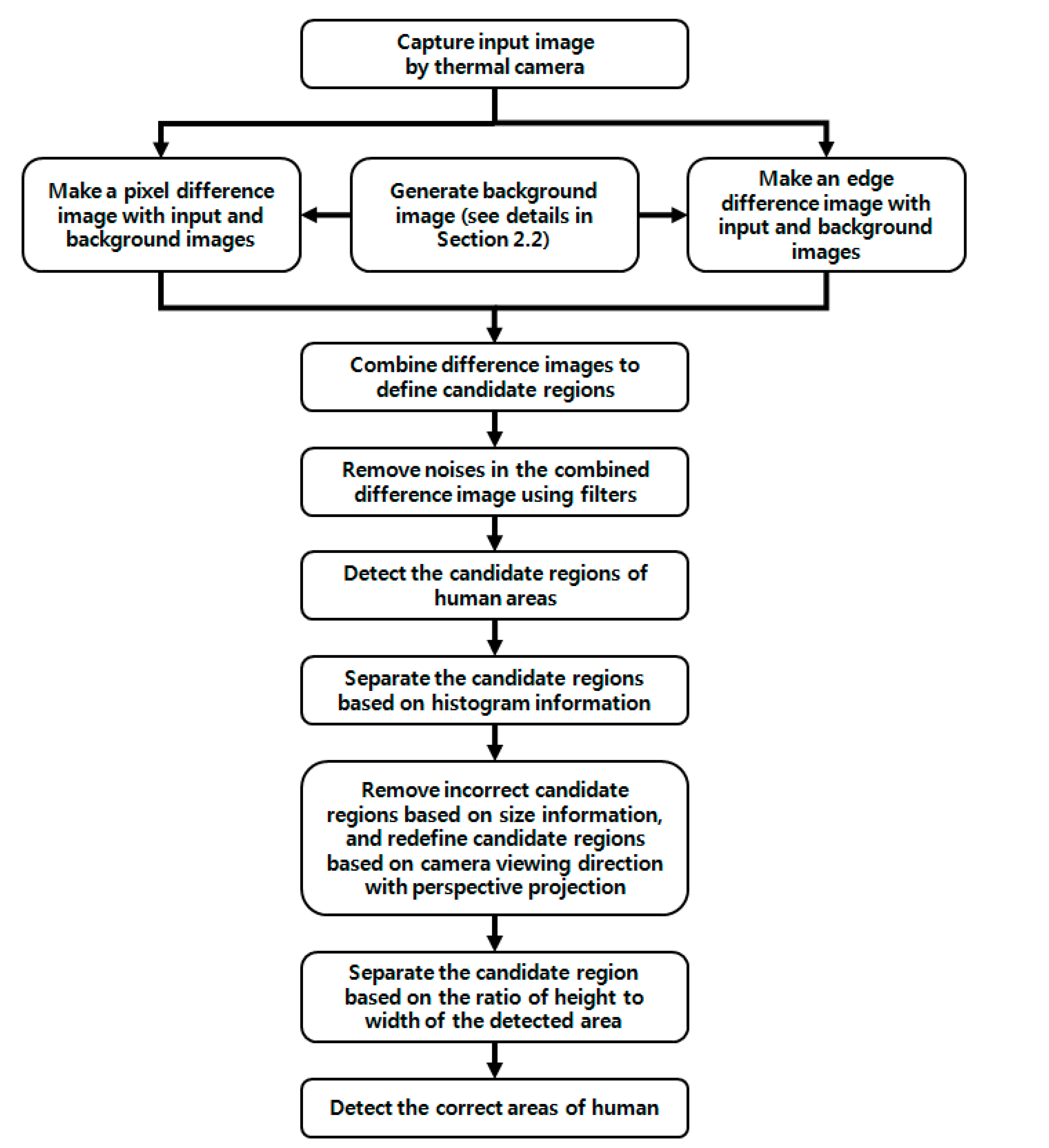

2.1. Proposed Method

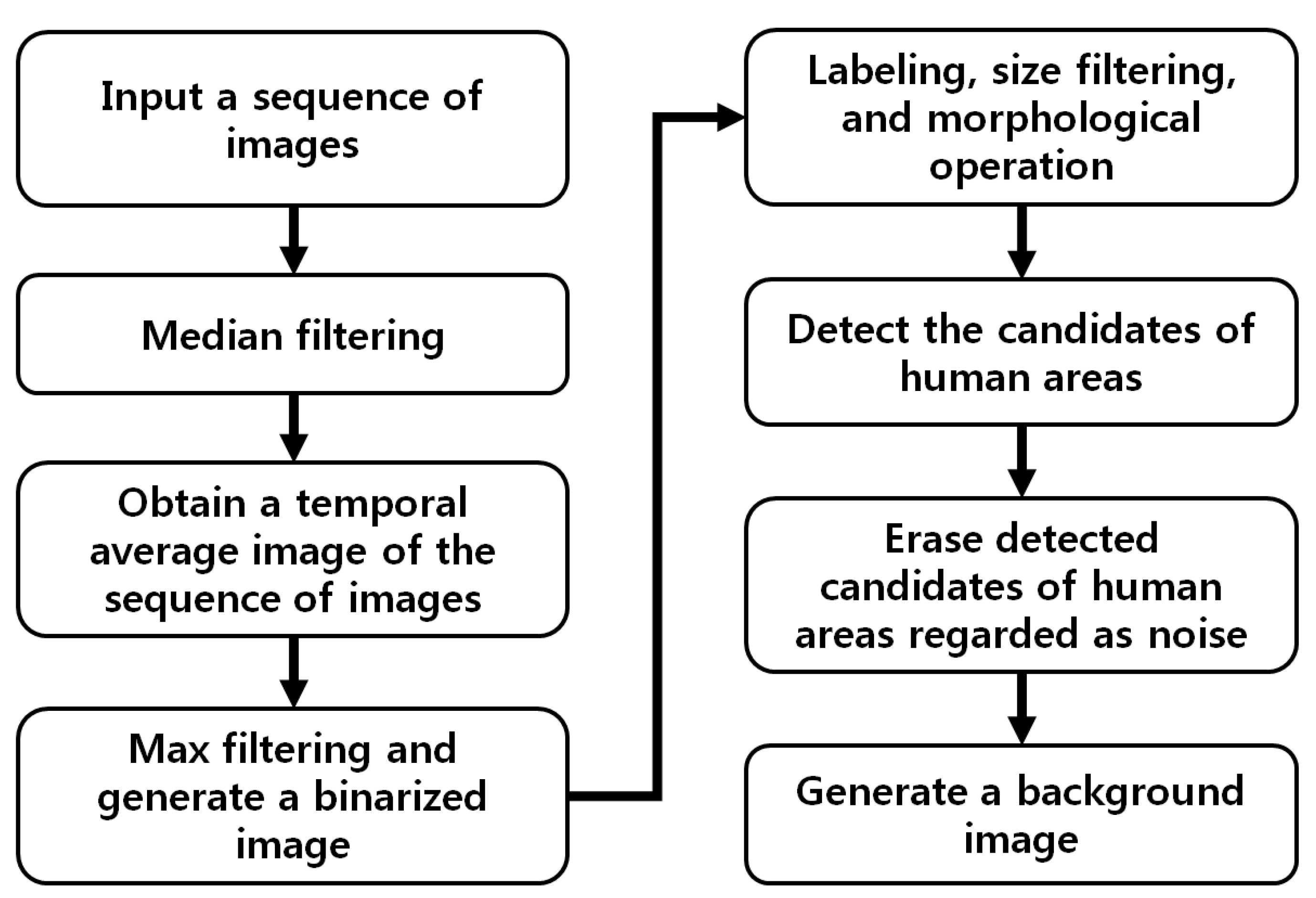

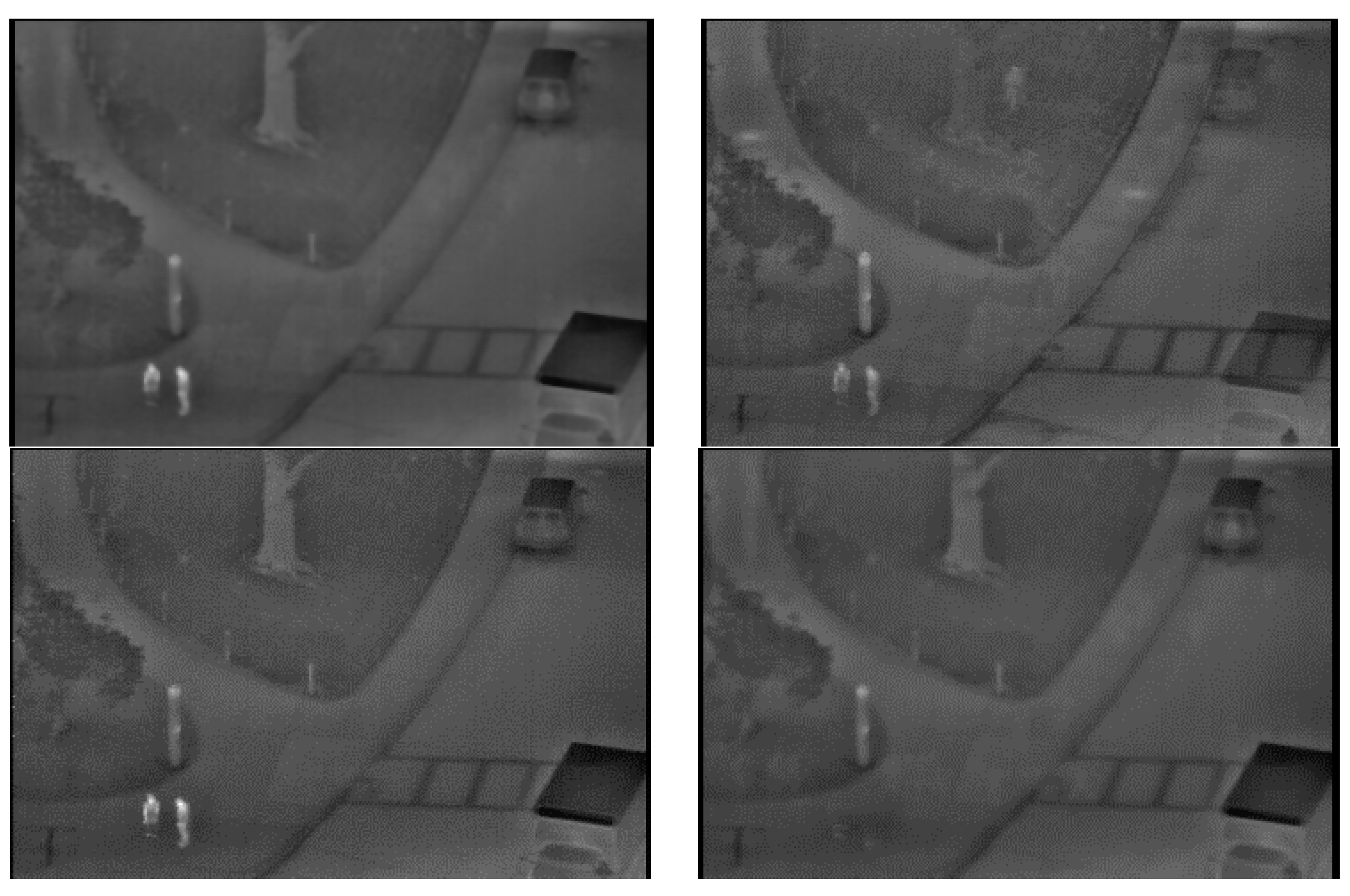

2.2. Generating a Background Image

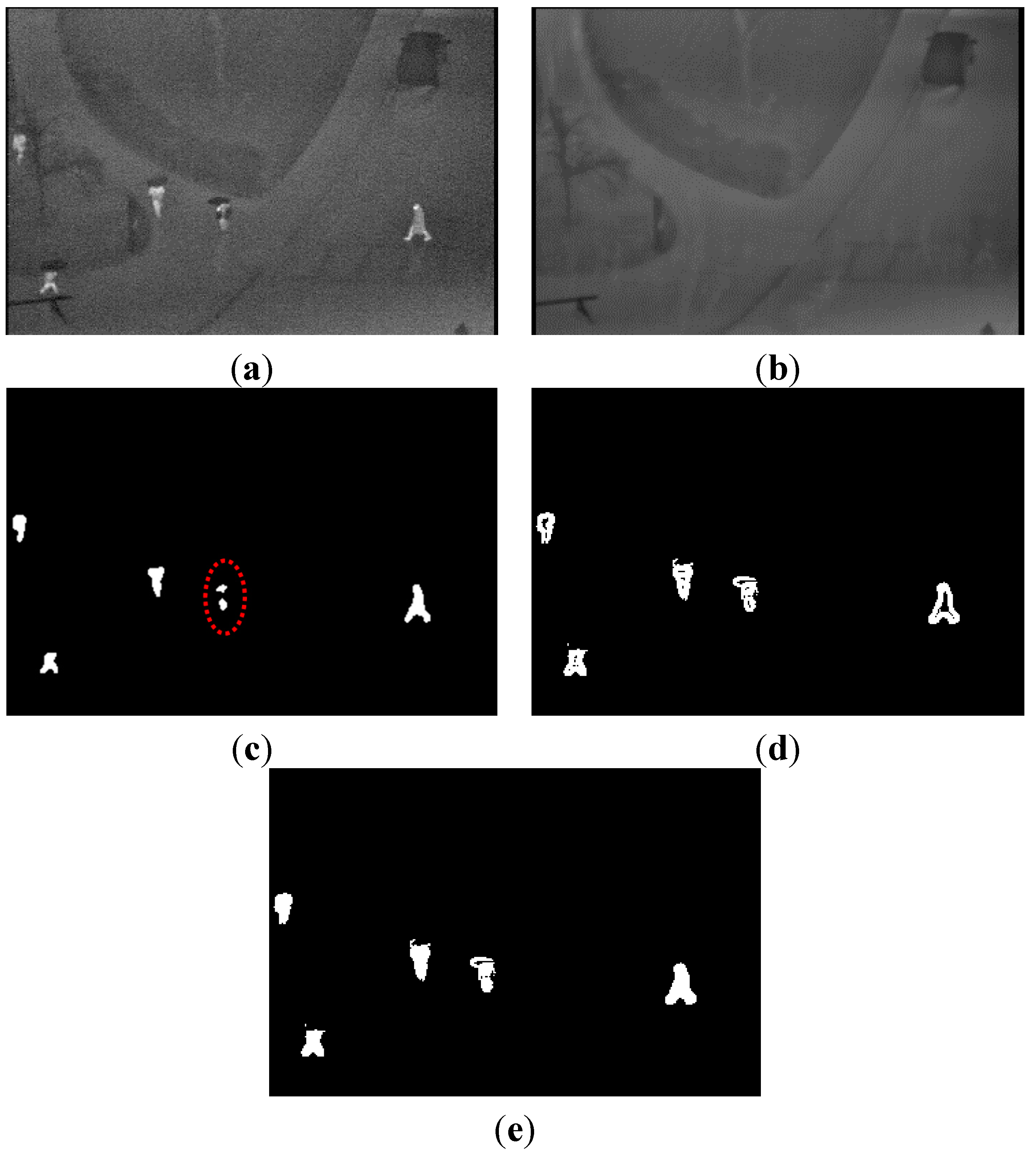

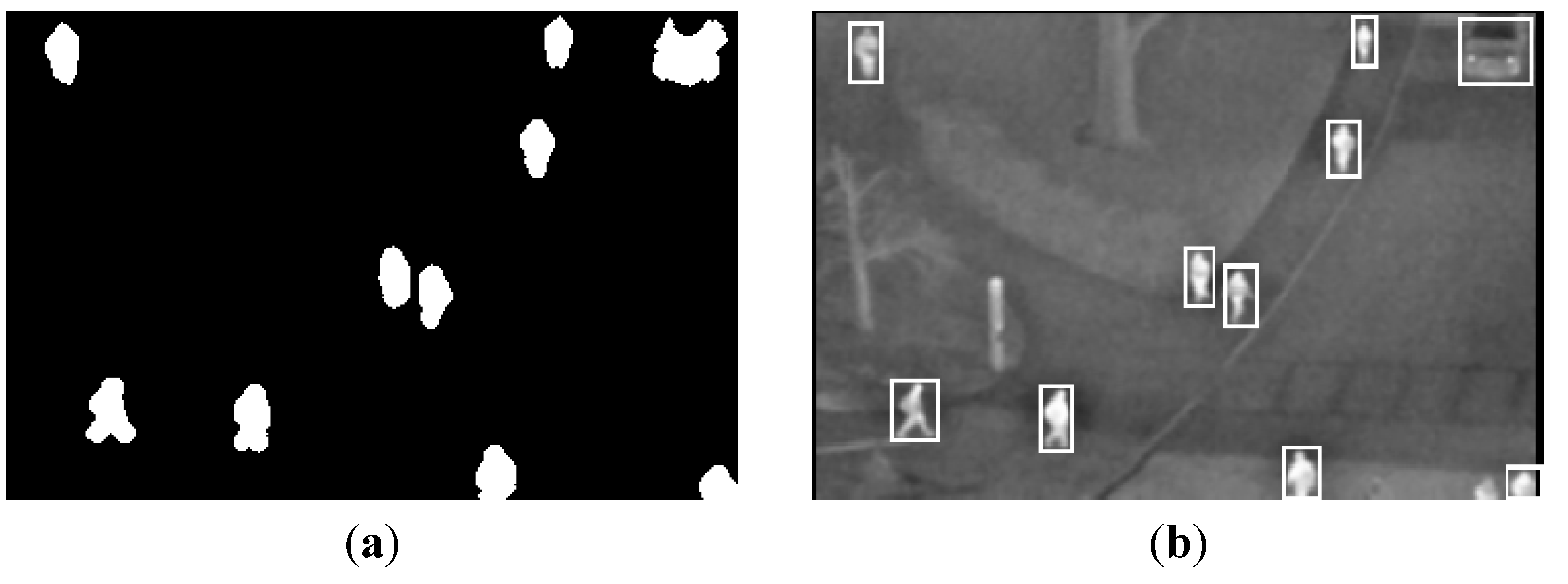

2.3. Generating a Difference Image with the Background and Input Image

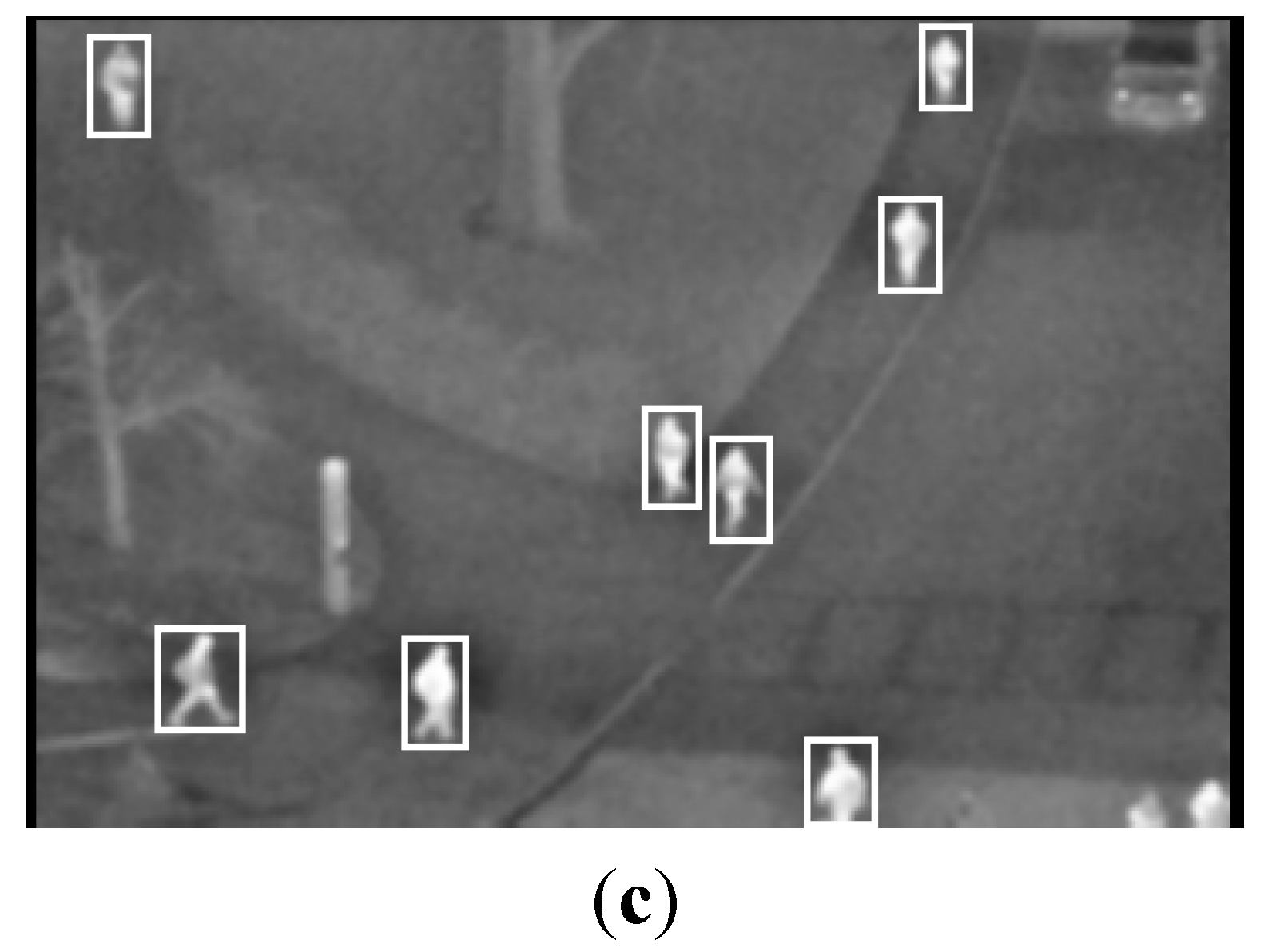

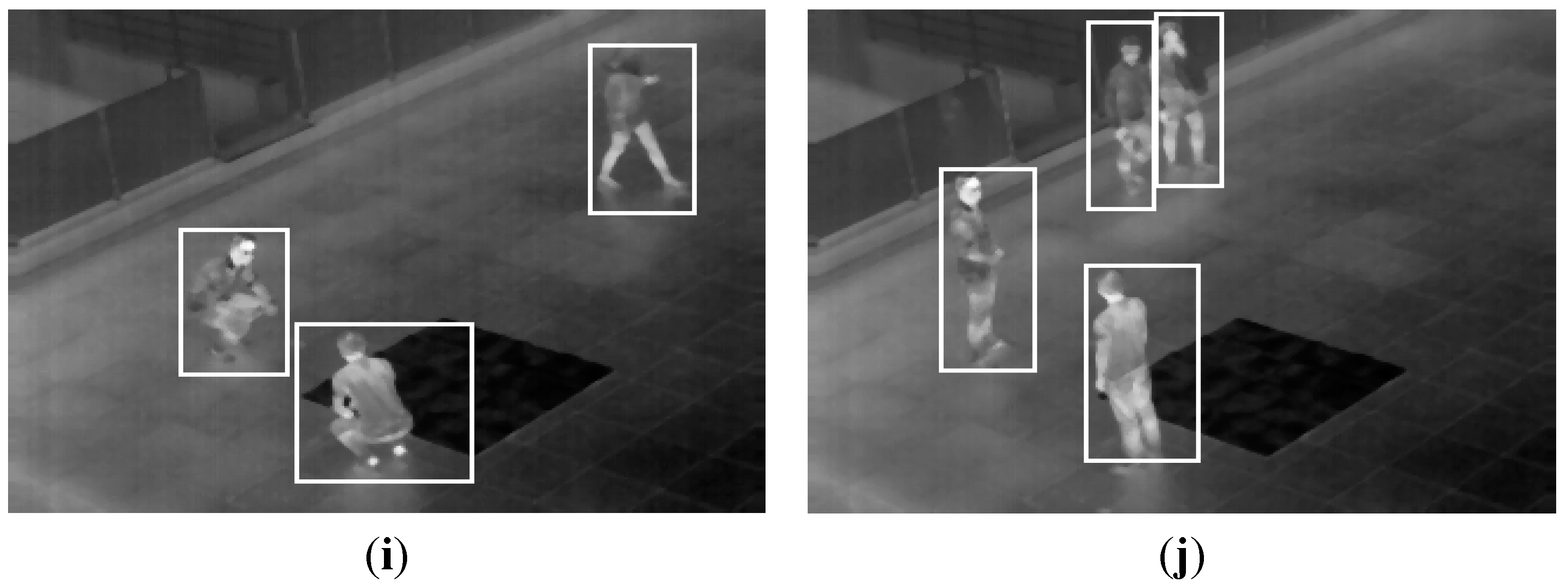

2.4. Human Detection

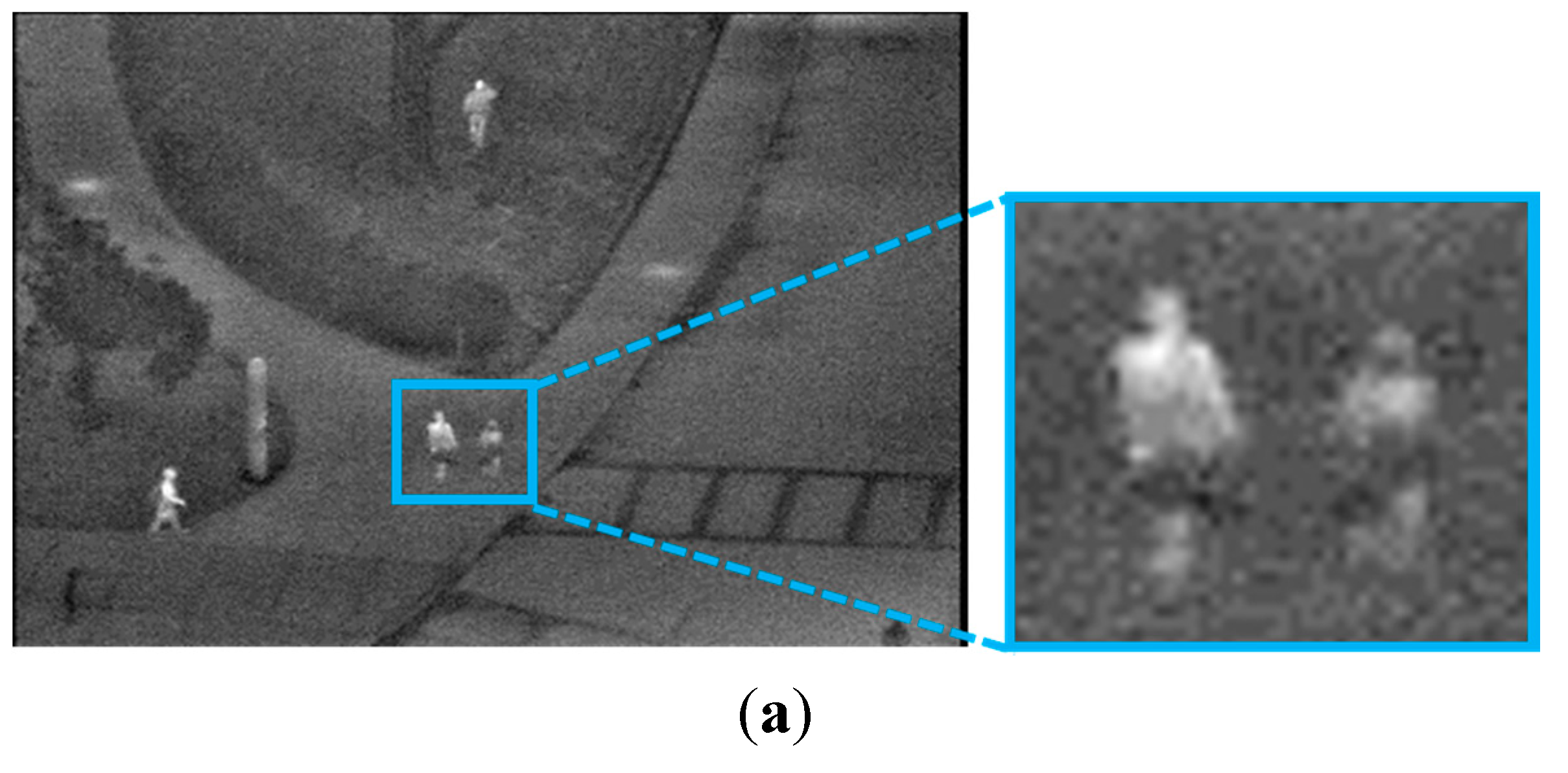

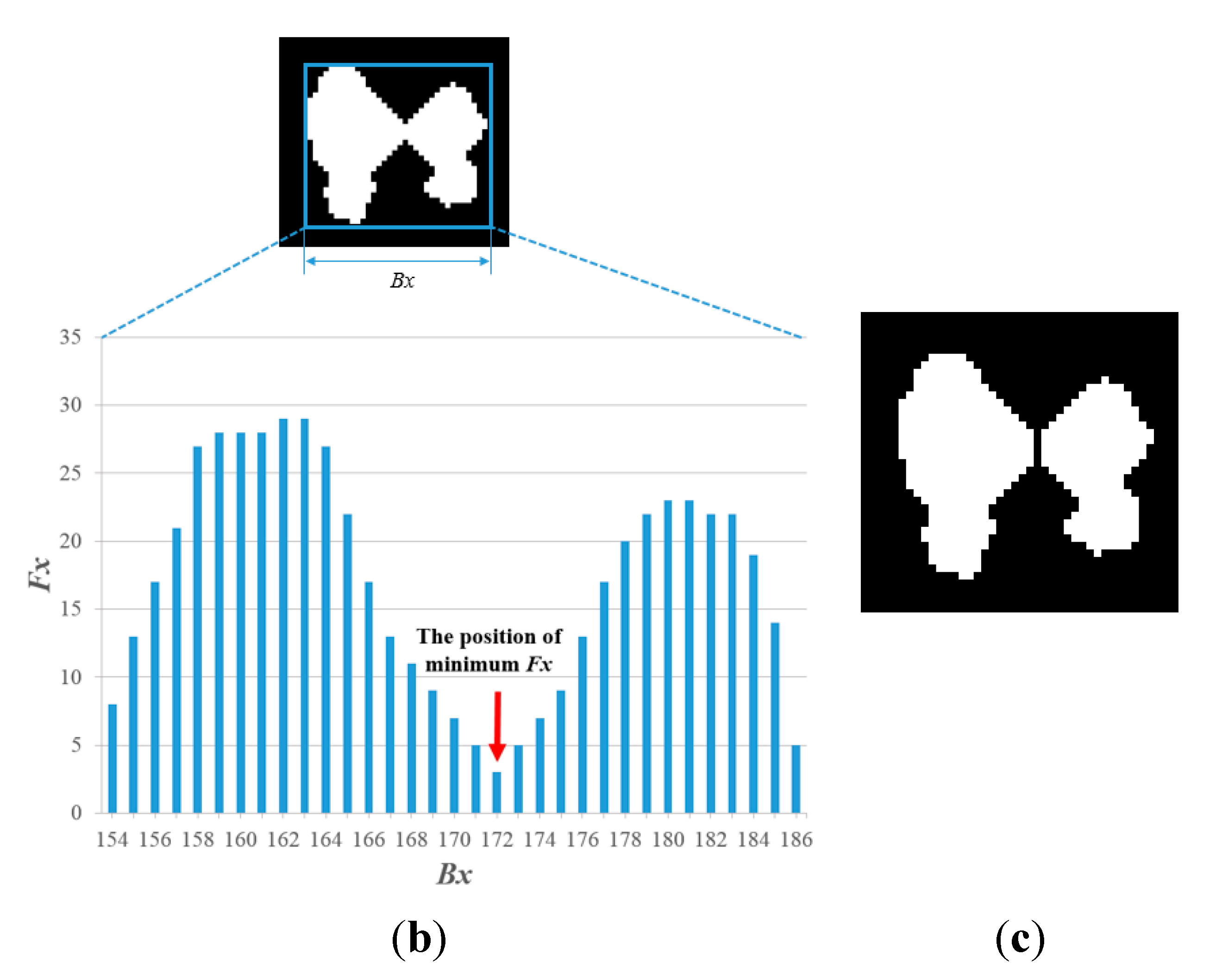

2.4.1. Division of Candidate Region Based on Histogram Information

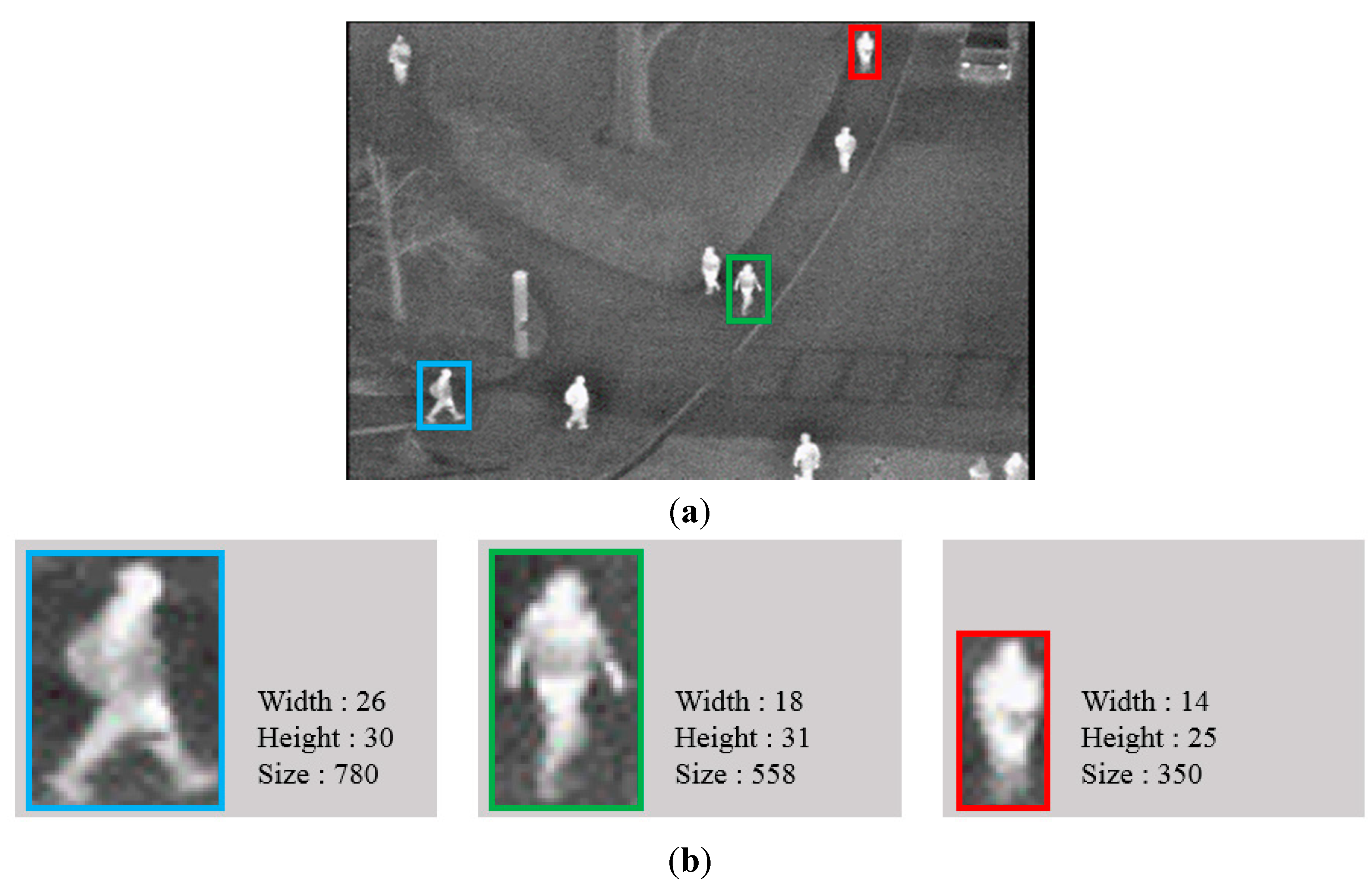

2.4.2. Division of the Candidate Region Based on Camera Viewing Direction with Perspective Projection

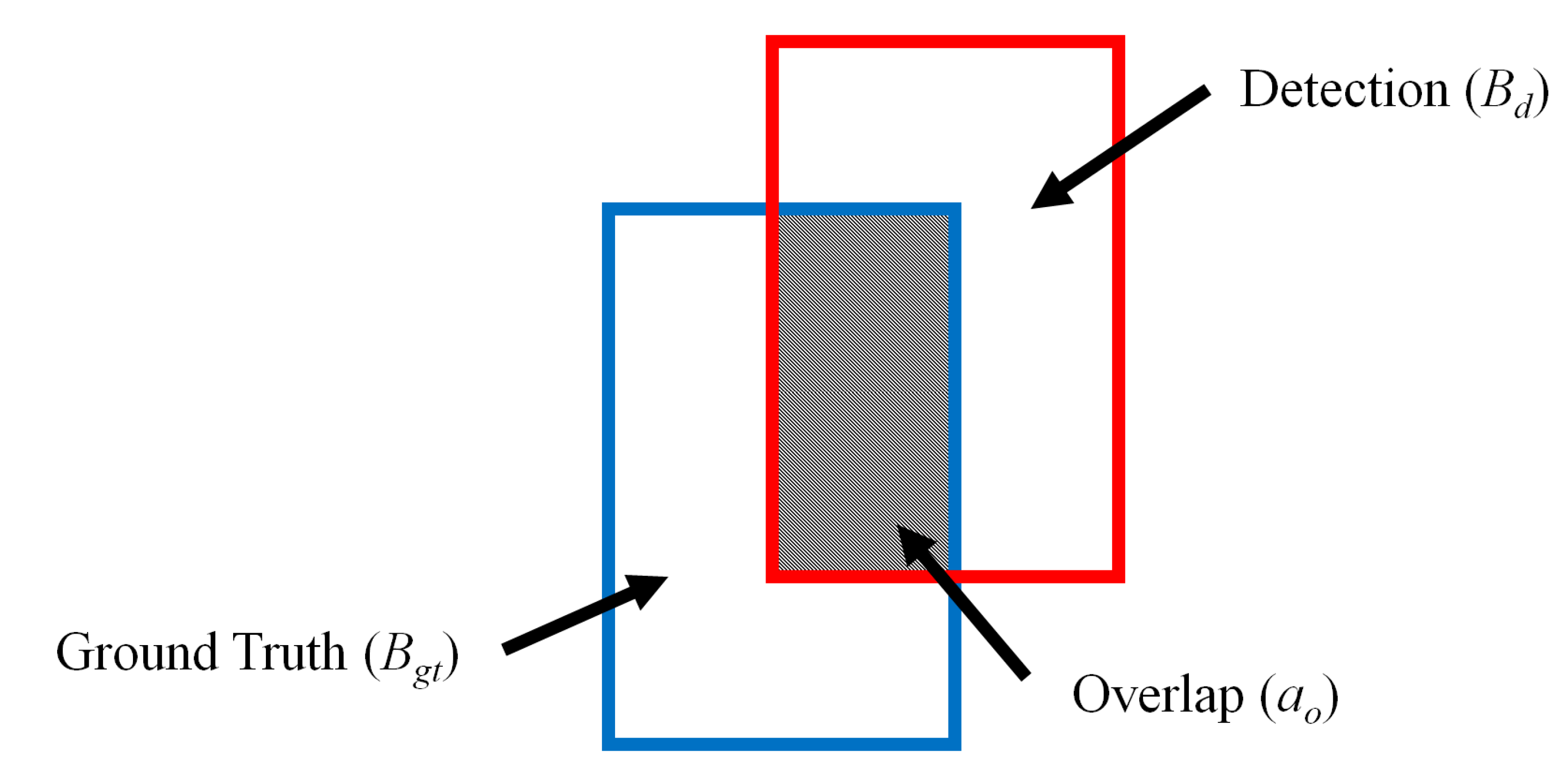

3. Experimental Results

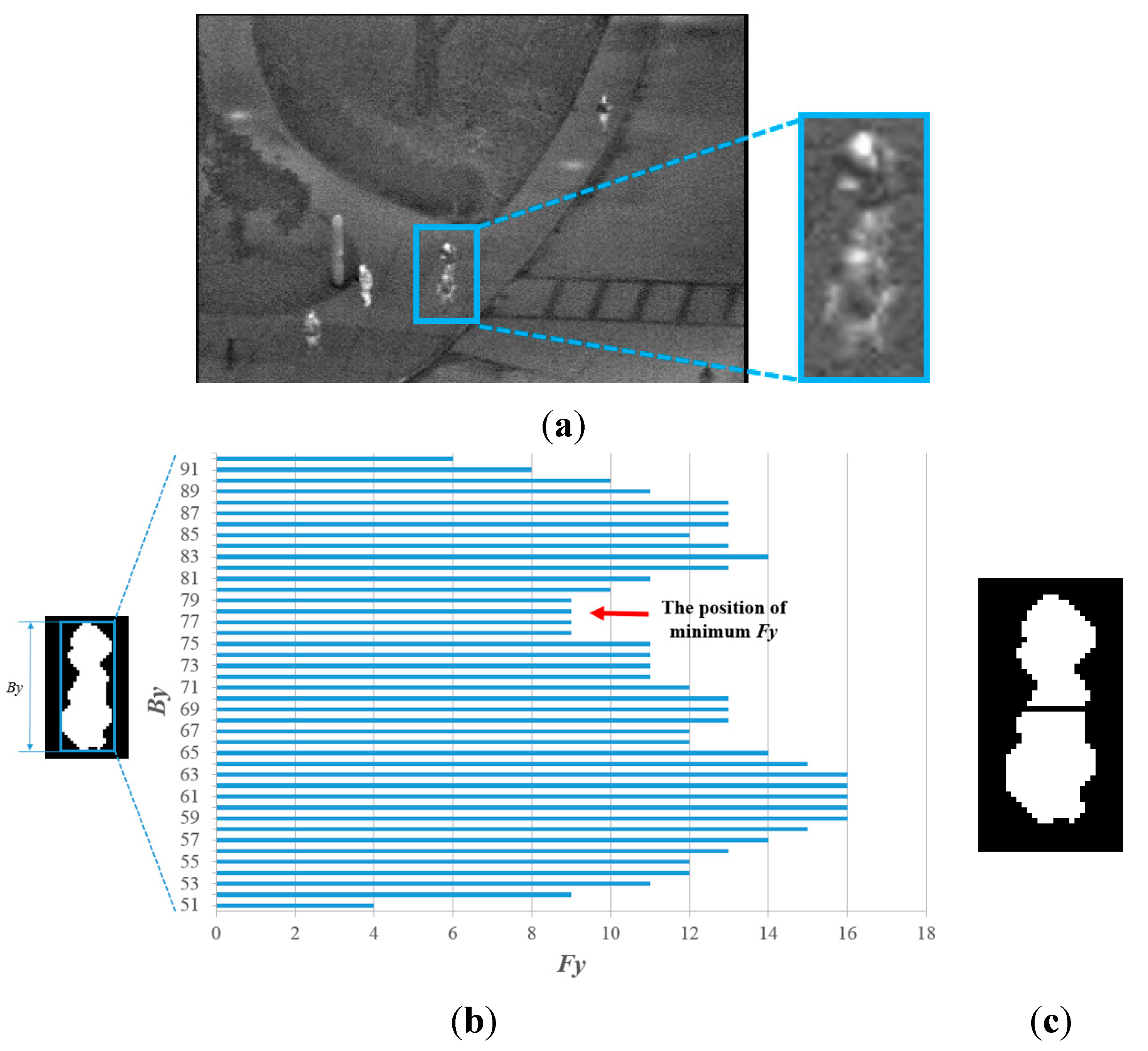

3.1. Dataset Description

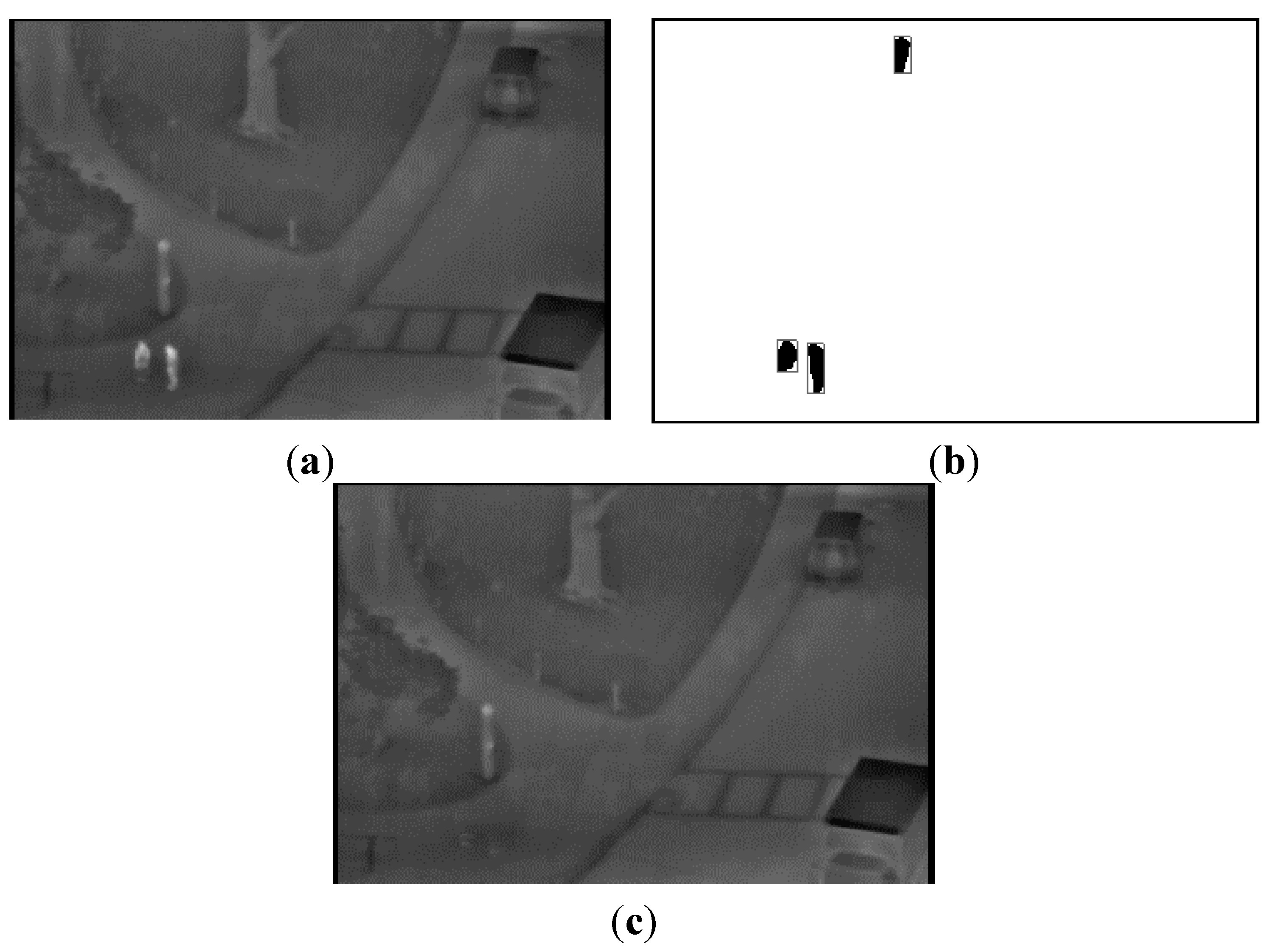

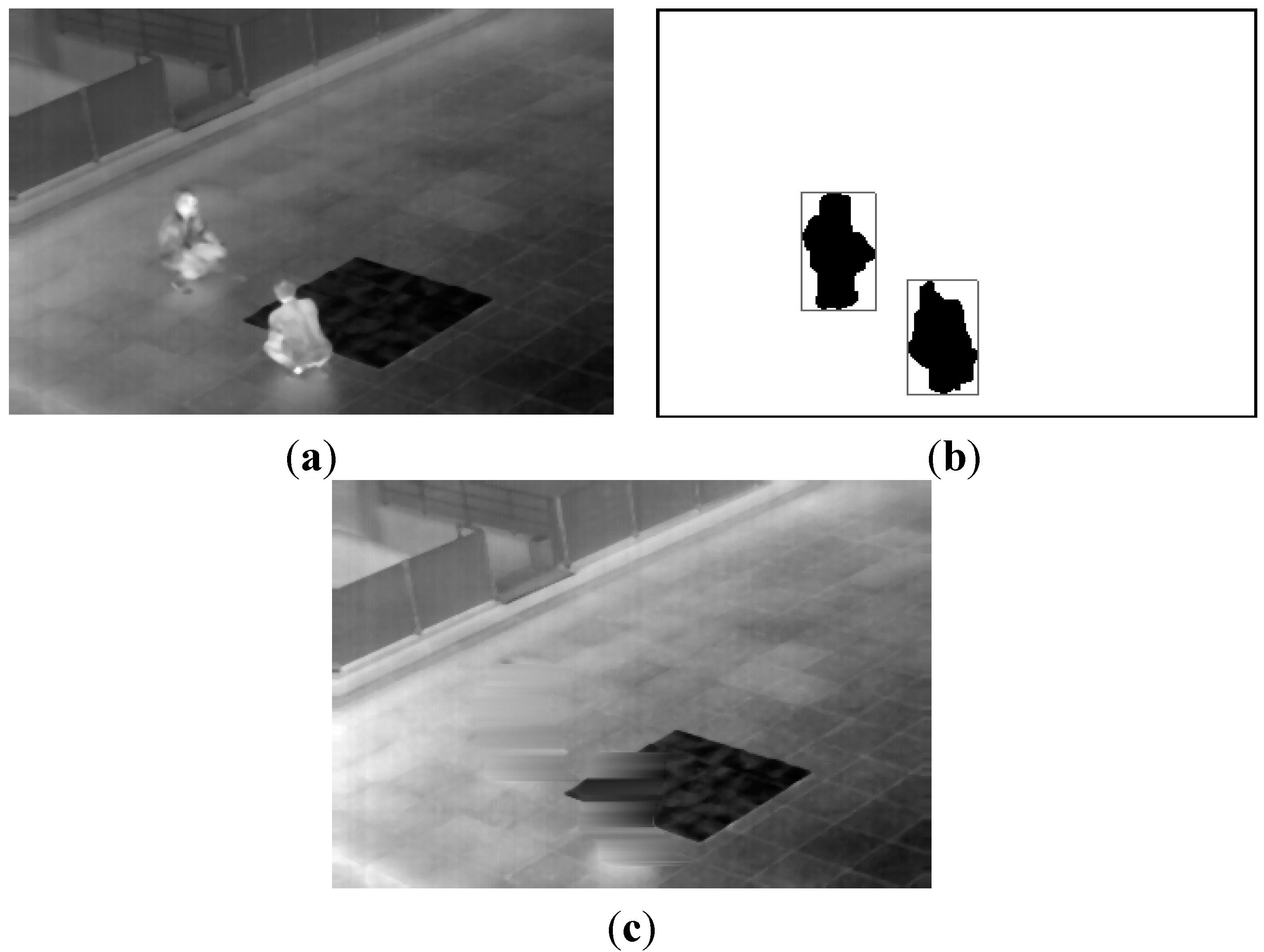

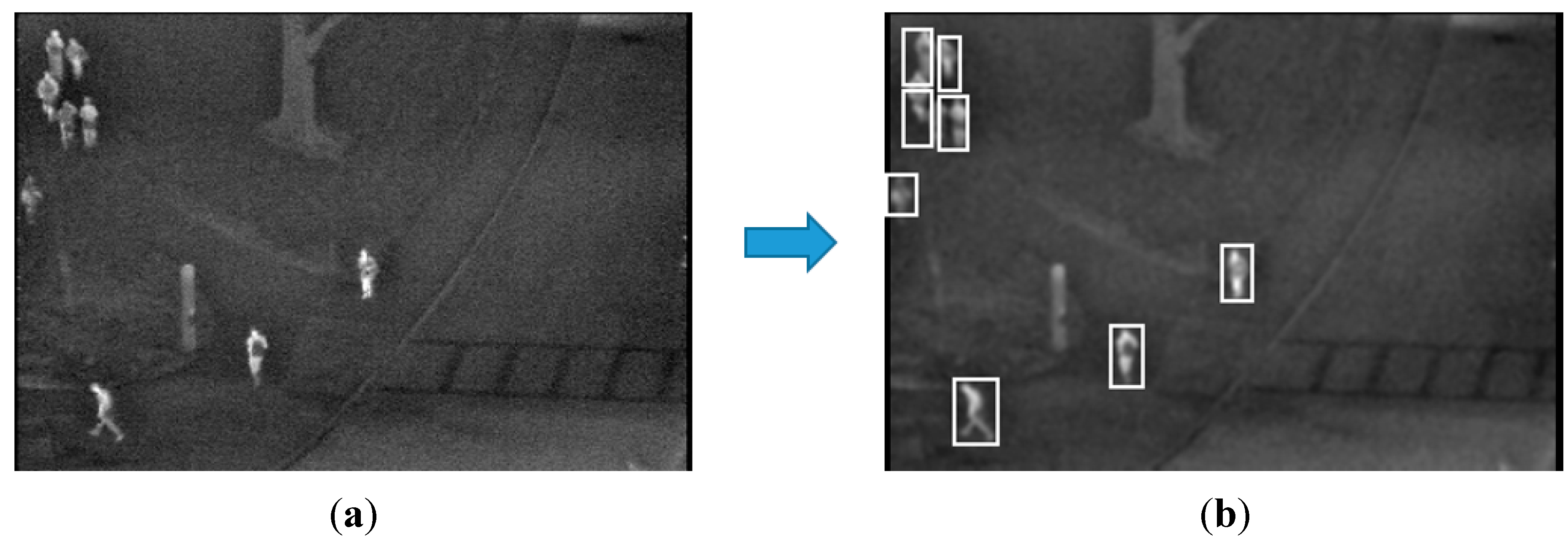

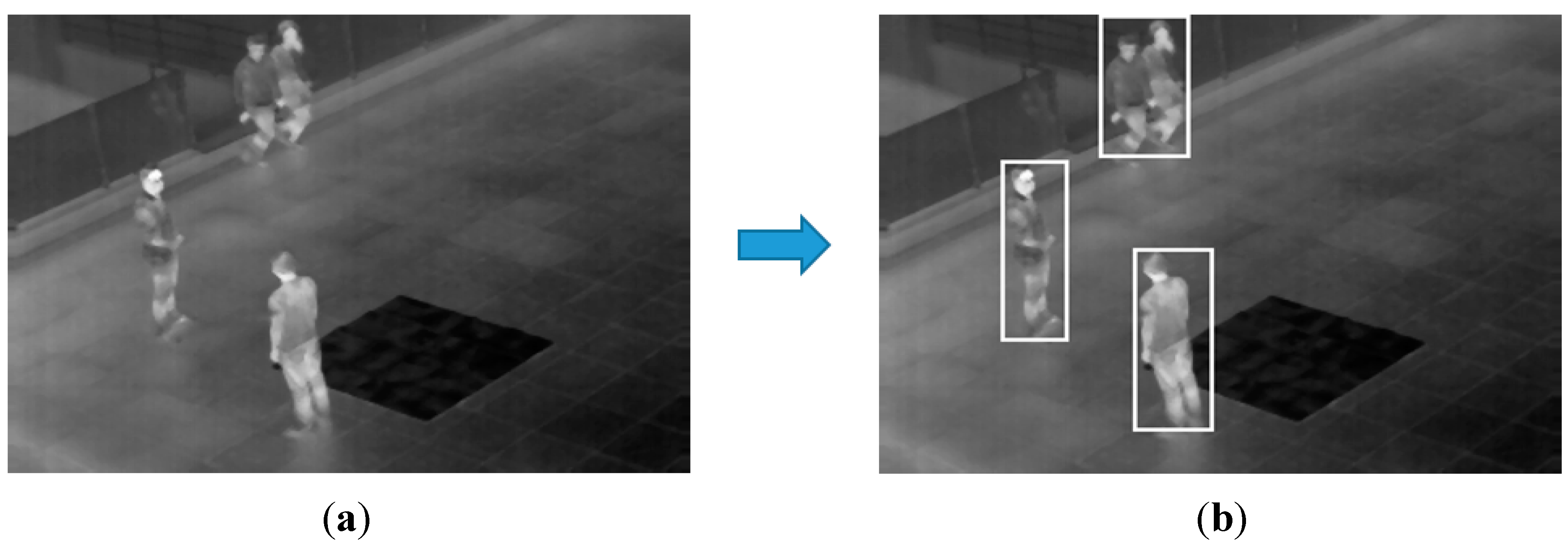

3.2. Results of Generating Background

3.3. Detection Results

| Sequence No. | Method | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| #Frames | | 31 | 28 | 23 | 18 | 23 | 18 | 22 | 24 | 73 | 24 | 284 |
| #People | | 91 | 100 | 101 | 109 | 101 | 97 | 94 | 99 | 95 | 97 | 984 |
| #TP | [15] | 78 | 95 | 70 | 109 | 91 | 88 | 64 | 82 | 91 | 77 | 845 |
| | [22] | 88 | 94 | 101 | 107 | 90 | 93 | 92 | 75 | 95 | 95 | 930 |
| | [26] | 91 | 99 | 100 | 109 | 101 | 97 | 94 | 99 | 95 | 94 | 979 |
| | Proposed method | 91 | 100 | 99 | 109 | 101 | 95 | 94 | 99 | 95 | 97 | 980 |
| #FP | [15] | 2 | 3 | 13 | 10 | 6 | 2 | 2 | 0 | 9 | 0 | 41 |
| | [22] | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 3 | 6 |
| | [26] | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 1 | 0 | 3 | 6 |
| | Proposed method | 0 | 0 | 1 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |
| PPV | [15] | 0.98 | 0.97 | 0.84 | 0.92 | 0.94 | 0.98 | 0.94 | 1 | 0.91 | 1 | 0.95 |
| | [22] | 1 | 1 | 0.99 | 0.99 | 1 | 1 | 1 | 0.99 | 1 | 0.97 | 0.9936 |
| | [26] | 1 | 1 | 0.98 | 1 | 1 | 1 | 1 | 0.99 | 1 | 0.97 | 0.9939 |
| | Proposed method | 1 | 1 | 0.99 | 0.97 | 1 | 1 | 1 | 1 | 1 | 1 | 0.9959 |
| Sensitivity | [15] | 0.86 | 0.95 | 0.69 | 1 | 0.83 | 0.91 | 0.68 | 0.83 | 0.96 | 0.79 | 0.86 |
| | [22] | 0.97 | 0.94 | 1 | 0.98 | 0.89 | 0.96 | 0.98 | 0.76 | 1 | 0.98 | 0.9459 |
| | [26] | 1 | 0.99 | 0.99 | 1 | 1 | 1 | 1 | 1 | 1 | 0.97 | 0.9949 |
| | Proposed method | 1 | 1 | 0.98 | 1 | 1 | 0.98 | 1 | 1 | 1 | 1 | 0.9959 |

| Sequence No. | Method | 1 | 2 | 3 | 4 | 5 | 6 | 7 | Total |
|---|---|---|---|---|---|---|---|---|---|
| #Frames | | 137 | 144 | 64 | 85 | 127 | 127 | 84 | 768 |
| #People | | 203 | 327 | 116 | 238 | 292 | 467 | 168 | 1,811 |
| #TP | [22] | 174 | 285 | 105 | 219 | 289 | 350 | 167 | 1,589 |
| | [26] | 203 | 319 | 98 | 238 | 292 | 412 | 168 | 1,730 |
| | Proposed method | 203 | 314 | 114 | 235 | 292 | 437 | 168 | 1,763 |
| #FP | [22] | 47 | 21 | 21 | 1 | 3 | 20 | 2 | 115 |
| | [26] | 1 | 17 | 0 | 6 | 0 | 16 | 52 | 92 |
| | Proposed method | 0 | 13 | 5 | 6 | 0 | 11 | 0 | 35 |
| PPV | [22] | 0.7873 | 0.9314 | 0.8333 | 0.9955 | 0.9897 | 0.9459 | 0.9882 | 0.9325 |
| | [26] | 0.9951 | 0.9494 | 1 | 0.9754 | 1 | 0.9626 | 0.7636 | 0.9495 |
| | Proposed method | 1 | 0.9602 | 0.9580 | 0.9751 | 1 | 0.9754 | 1 | 0.9805 |
| Sensitivity | [22] | 0.8571 | 0.8716 | 0.9052 | 0.9202 | 0.9897 | 0.7495 | 0.994 | 0.8774 |
| | [26] | 1 | 0.9755 | 0.8448 | 1 | 1 | 0.8822 | 1 | 0.9553 |
| | Proposed method | 1 | 0.9602 | 0.9828 | 0.9874 | 1 | 0.9358 | 1 | 0.9735 |
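The PPV and sensitivity totals reported for the proposed method appear to follow the standard definitions, PPV = TP / (TP + FP) and sensitivity = TP / (number of people actually present). The short sketch below recomputes the proposed-method totals of both tables under that assumption; the function names and the dictionary layout are illustrative and do not come from the paper.

```python
def ppv(tp: int, fp: int) -> float:
    """Positive predictive value: fraction of detections that are real people."""
    return tp / (tp + fp)


def sensitivity(tp: int, n_people: int) -> float:
    """Fraction of the people present that were detected (TP + FN = n_people)."""
    return tp / n_people


# "Total" column of the proposed method, copied from the two tables above.
# The dictionary keys are hypothetical labels, not dataset names from the paper.
proposed_totals = {
    "ten-sequence table": {"tp": 980, "fp": 4, "people": 984},
    "seven-sequence table": {"tp": 1763, "fp": 35, "people": 1811},
}

for name, t in proposed_totals.items():
    p = round(ppv(t["tp"], t["fp"]), 4)
    s = round(sensitivity(t["tp"], t["people"]), 4)
    print(f"{name}: PPV={p}, sensitivity={s}")
```

Rounded to four decimals, these values match the Total column entries for the proposed method (0.9959 and 0.9959; 0.9805 and 0.9735).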

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Arandjelović, O. Contextually Learnt Detection of Unusual Motion-Based Behaviour in Crowded Public Spaces. In Proceedings of the 26th Annual International Symposium on Computer and Information Science, London, UK, 26–28 September 2011; pp. 403–410.

- Martin, R.; Arandjelović, O. Multiple-Object Tracking in Cluttered and Crowded Public Spaces. Lect. Notes Comput. Sci. 2010, 6455, 89–98.

- Khatoon, R.; Saqlain, S.M.; Bibi, S. A Robust and Enhanced Approach for Human Detection in Crowd. In Proceedings of the International Multitopic Conference, Islamabad, Pakistan, 13–15 December 2012; pp. 215–221.

- Rajaei, A.; Shayegh, H.; Charkari, N.M. Human Detection in Semi-Dense Scenes Using HOG descriptor and Mixture of SVMs. In Proceedings of the International Conference on Computer and Knowledge Engineering, Mashhad, Iran, 31 October–1 November 2013; pp. 229–234.

- Mahapatra, A.; Mishra, T.K.; Sa, P.K.; Majhi, B. Background Subtraction and Human Detection in Outdoor Videos Using Fuzzy Logic. In Proceedings of the IEEE International Conference on Fuzzy Systems, Hyderabad, India, 7–10 July 2013; pp. 1–7.

- Ghiass, R.S.; Arandjelović, O.; Bendada, H.; Maldague, X. Infrared Face Recognition: A Literature Review. In Proceedings of the International Joint Conference on Neural Networks, Dallas, TX, USA, 4–9 August 2013; pp. 1–10.

- Bertozzi, M.; Broggi, A.; Rose, M.D.; Felisa, M.; Rakotomamonjy, A.; Suard, F. A Pedestrian Detector Using Histograms of Oriented Gradients and a Support Vector Machine Classifier. In Proceedings of the IEEE Conference on Intelligent Transportation Systems, Seattle, WA, USA, 30 September–3 October 2007; pp. 143–148.

- Li, Z.; Zhang, J.; Wu, Q.; Geers, G. Feature Enhancement Using Gradient Salience on Thermal Image. In Proceedings of the International Conference on Digital Image Computing: Techniques and Applications, Sydney, Australia, 1–3 December 2010; pp. 556–562.

- Chang, S.L.; Yang, F.T.; Wu, W.P.; Cho, Y.A.; Chen, S.W. Nighttime Pedestrian Detection Using Thermal Imaging Based on HOG Feature. In Proceedings of the International Conference on System Science and Engineering, Macao, China, 8–10 June 2011; pp. 694–698.

- Bertozzi, M.; Broggi, A.; Caraffi, C.; Rose, M.D.; Felisa, M.; Vezzoni, G. Pedestrian Detection by Means of Far-Infrared Stereo Vision. Comput. Vis. Image Underst. 2007, 106, 194–204.

- St-Laurent, L.; Prévost, D.; Maldague, X. Thermal Imaging for Enhanced Foreground-Background Segmentation. In Proceedings of the International Conference on Quantitative InfraRed Thermography, Padova, Italy, 27–30 June 2006.

- Lin, C.F.; Lin, S.F.; Hwang, C.H.; Chen, Y.C. Real-Time Pedestrian Detection System with Novel Thermal Features at Night. In Proceedings of the IEEE International Instrumentation and Measurement Technology Conference, Montevideo, Uruguay, 12–15 May 2014; pp. 1329–1333.

- Zhao, J.; Cheung, S.C.S. Human Segmentation by Fusing Visible-light and Thermal Imaginary. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Kyoto, Japan, 27 September–4 October 2009; pp. 1185–1192.

- Chen, Y.; Han, C. Night-Time Pedestrian Detection by Visual-Infrared Video Fusion. In Proceedings of the World Congress on Intelligent Control and Automation, Chongqing, China, 25–27 June 2008; pp. 5079–5084.

- Li, W.; Zheng, D.; Zhao, T.; Yang, M. An Effective Approach to Pedestrian Detection in Thermal Imagery. In Proceedings of the International Conference on Natural Computation, Chongqing, China, 29–31 May 2012; pp. 325–329.

- Neagoe, V.E.; Ciotec, A.D.; Barar, A.P. A Concurrent Neural Network Approach to Pedestrian Detection in Thermal Imagery. In Proceedings of the International Conference on Communications, Bucharest, Romania, 21–23 June 2012; pp. 133–136.

- Zhang, L.; Wu, B.; Nevatia, R. Pedestrian Detection in Infrared Images Based on Local Shape Features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Olmeda, D.; Armingol, J.M.; Escalera, A.D.L. Discrete Features for Rapid Pedestrian Detection in Infrared Images. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, Portugal, 7–12 October 2012; pp. 3067–3072.

- Wang, W.; Zhang, J.; Shen, C. Improved Human Detection and Classification in Thermal Images. In Proceedings of the IEEE International Conference on Image Processing, Hong Kong, China, 26–29 September 2010; pp. 2313–2316.

- Wang, W.; Wang, Y.; Chen, F.; Sowmya, A. A Weakly Supervised Approach for Object Detection Based on Soft-Label Boosting. In Proceedings of the IEEE Workshop on Applications of Computer Vision, Tampa, FL, USA, 15–17 January 2013; pp. 331–338.

- Davis, J.W.; Sharma, V. Robust Detection of People in Thermal Imagery. In Proceedings of the International Conference on Pattern Recognition, Cambridge, UK, 23–26 August 2004; pp. 713–716.

- Davis, J.W.; Keck, M.A. A Two-Stage Template Approach to Person Detection in Thermal Imagery. In Proceedings of the IEEE Workshop on Applications of Computer Vision, Breckenridge, CO, USA, 5–7 January 2005; pp. 364–369.

- Latecki, L.J.; Miezianko, R.; Pokrajac, D. Tracking Motion Objects in Infrared Videos. In Proceedings of the IEEE International Conference on Advanced Video and Signal Based Surveillance, Como, Italy, 15–16 September 2005; pp. 99–104.

- Davis, J.W.; Sharma, V. Background-Subtraction Using Contour-Based Fusion of Thermal and Visible Imagery. Comput. Vis. Image Underst. 2007, 106, 162–182.

- Davis, J.W.; Sharma, V. Fusion-Based Background-Subtraction Using Contour Saliency. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition—Workshops, San Diego, CA, USA, 25 June 2005; pp. 1–9.

- Dai, C.; Zheng, Y.; Li, X. Layered Representation for Pedestrian Detection and Tracking in Infrared Imagery. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition—Workshops, San Diego, CA, USA, 25 June 2005; pp. 1–8.

- Dai, C.; Zheng, Y.; Li, X. Pedestrian Detection and Tracking in Infrared Imagery Using Shape and Appearance. Comput. Vis. Image Underst. 2007, 106, 288–299.

- Calafut, M. Multiple-Object Tracking in the Infrared, Final Project (EE368) of Stanford University; Stanford University: Stanford, CA, USA; pp. 1–6.

- Li, J.; Gong, W. Real Time Pedestrian Tracking Using Thermal Infrared Imagery. J. Comput. 2010, 5, 1606–1613.

- Niblack, W. An Introduction to Digital Image Processing, 1st ed.; Prentice Hall: Englewood Cliffs, NJ, USA, 1986.

- OTCBVS Benchmark Dataset Collection. Available online: http://www.cse.ohio-state.edu/otcbvs-bench/ (accessed on 13 December 2014).

- ICI 7320 Scientific Specifications. Available online: http://www.infraredcamerasinc.com/Thermal-Cameras/Fix-Mounted-Thermal-Cameras/ICI7320_S_fix-mounted_thermal_camera.html (accessed on 13 December 2014).

- Davis, J.W.; Sharma, V. Background-Subtraction in Thermal Imagery Using Contour Saliency. Int. J. Comput. Vis. 2007, 71, 161–181.

- Dagless, E.L.; Ali, A.T.; Cruz, J.B. Visual Road Traffic Monitoring and Data Collection. In Proceedings of the IEEE-IEE Vehicle Navigation and Information Systems Conference, Ottawa, ON, Canada, 12–15 October 1993; pp. 146–149.

- Elhabian, S.Y.; El-Sayed, K.M.; Ahmed, S.H. Moving Object Detection in Spatial Domain Using Background Removal Techniques-State-of-Art. Recent Pat. Comput. Sci. 2008, 1, 32–54.

- Zheng, Y.; Fan, L. Moving Object Detection Based on Running Average Background and Temporal Difference. In Proceedings of the International Conference on Intelligent Systems and Knowledge Engineering, Hangzhou, China, 15–16 November 2010; pp. 270–272.

- Malviya, A.; Bhirud, S.G. Visual Infrared Video Fusion for Night Vision Using Background Estimation. J. Comput. 2010, 2, 66–69.

- Olmeda, D.; Premebida, C.; Nunes, U.; Armingol, J.M.; Escalera, A.D.L. Pedestrian Detection in Far Infrared Images. Integr. Comput. Aided Eng. 2013, 20, 347–360.

- Everingham, M.; Gool, L.V.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Sensitivity and Specificity. Available online: http://en.wikipedia.org/wiki/Sensitivity_and_specificity (accessed on 13 December 2014).

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jeon, E.S.; Choi, J.-S.; Lee, J.H.; Shin, K.Y.; Kim, Y.G.; Le, T.T.; Park, K.R. Human Detection Based on the Generation of a Background Image by Using a Far-Infrared Light Camera. Sensors 2015, 15, 6763-6788. https://doi.org/10.3390/s150306763

Jeon ES, Choi J-S, Lee JH, Shin KY, Kim YG, Le TT, Park KR. Human Detection Based on the Generation of a Background Image by Using a Far-Infrared Light Camera. Sensors. 2015; 15(3):6763-6788. https://doi.org/10.3390/s150306763

Chicago/Turabian Style: Jeon, Eun Som, Jong-Suk Choi, Ji Hoon Lee, Kwang Yong Shin, Yeong Gon Kim, Toan Thanh Le, and Kang Ryoung Park. 2015. "Human Detection Based on the Generation of a Background Image by Using a Far-Infrared Light Camera" Sensors 15, no. 3: 6763-6788. https://doi.org/10.3390/s150306763

APA Style: Jeon, E. S., Choi, J.-S., Lee, J. H., Shin, K. Y., Kim, Y. G., Le, T. T., & Park, K. R. (2015). Human Detection Based on the Generation of a Background Image by Using a Far-Infrared Light Camera. Sensors, 15(3), 6763-6788. https://doi.org/10.3390/s150306763