Vector Graph Assisted Pedestrian Dead Reckoning Using an Unconstrained Smartphone

Abstract

1. Introduction

2. Motivation and Paper Outline

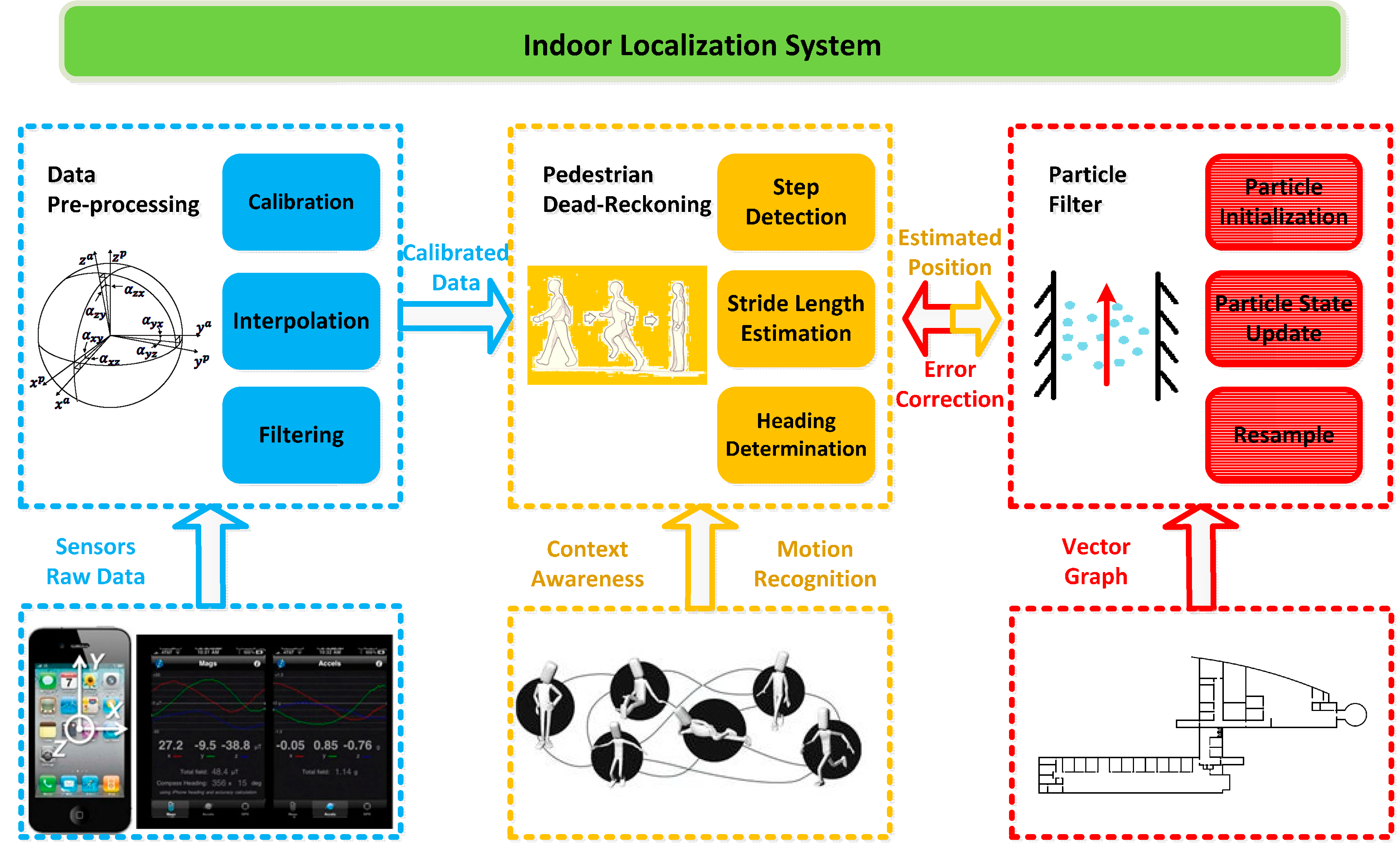

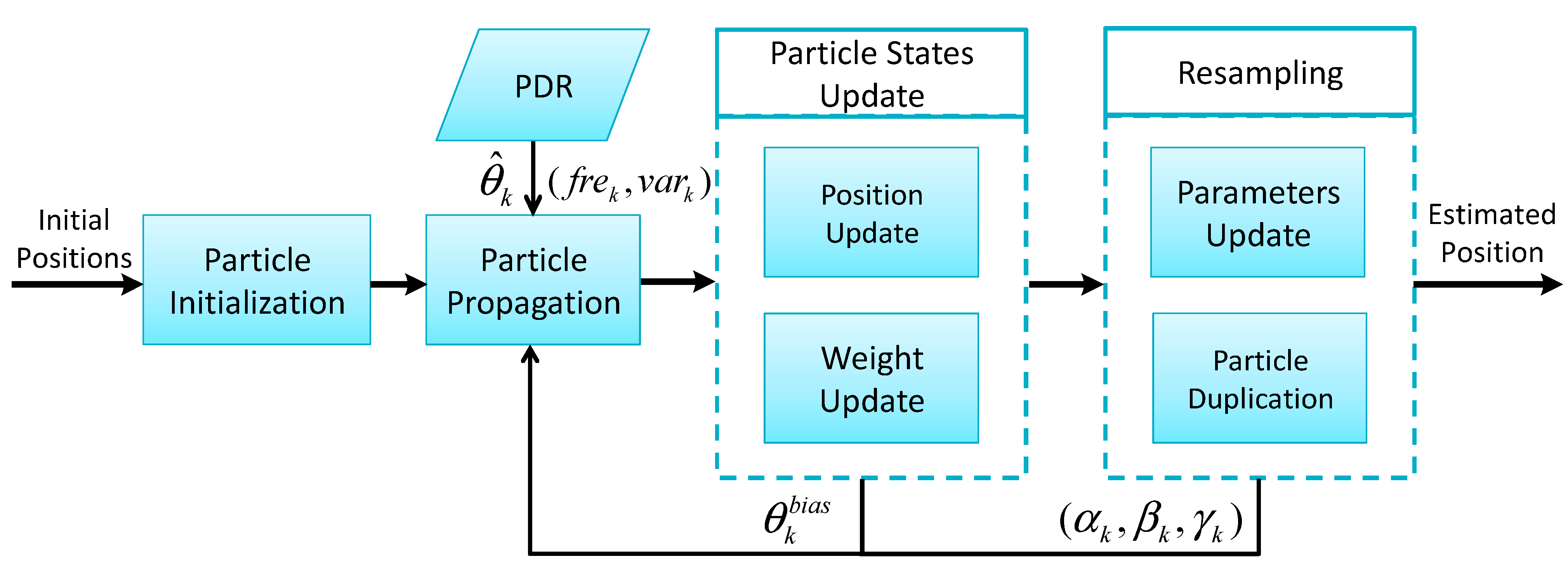

3. Indoor Localization System Architecture

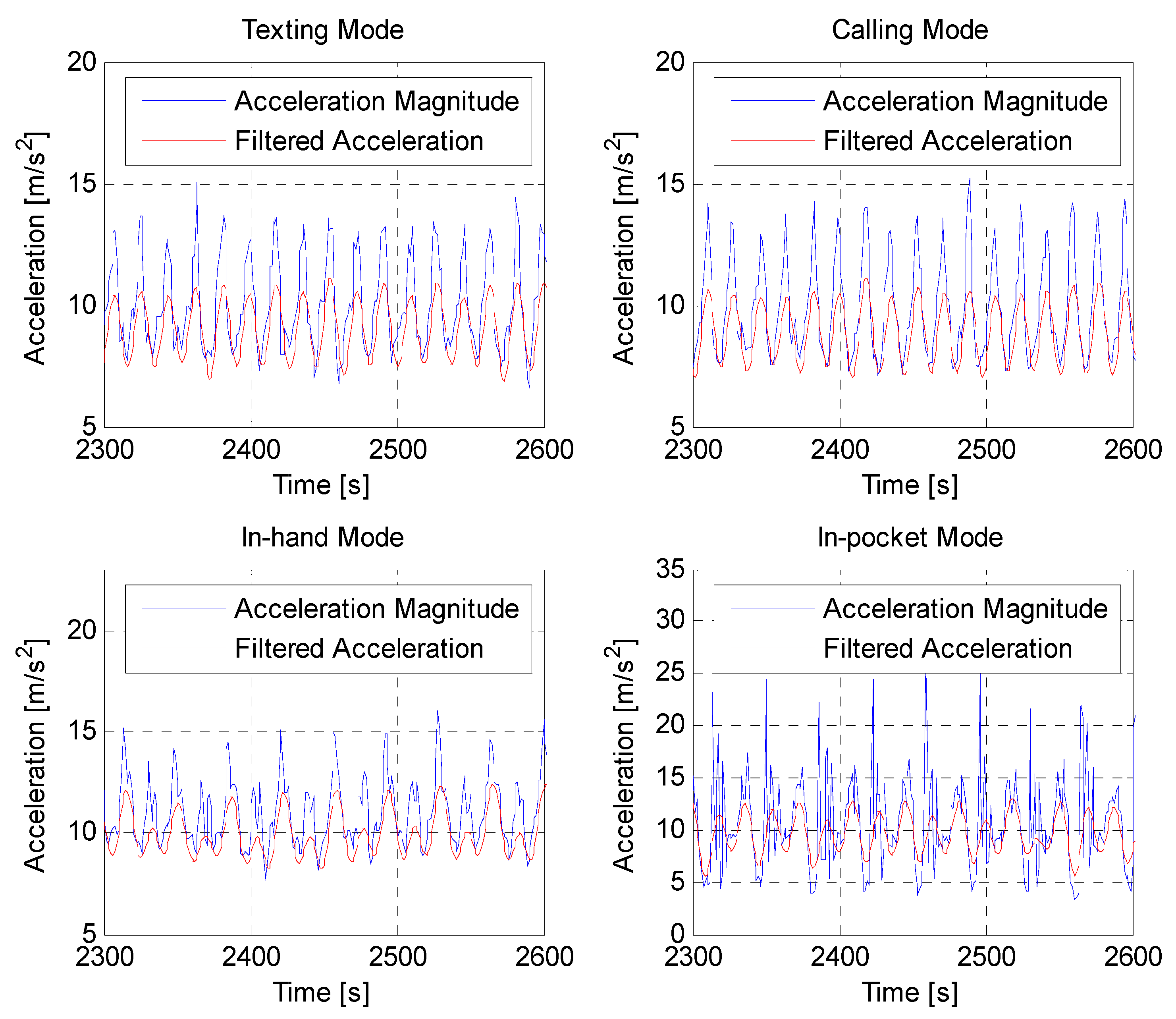

4. Pedestrian Dead Reckoning

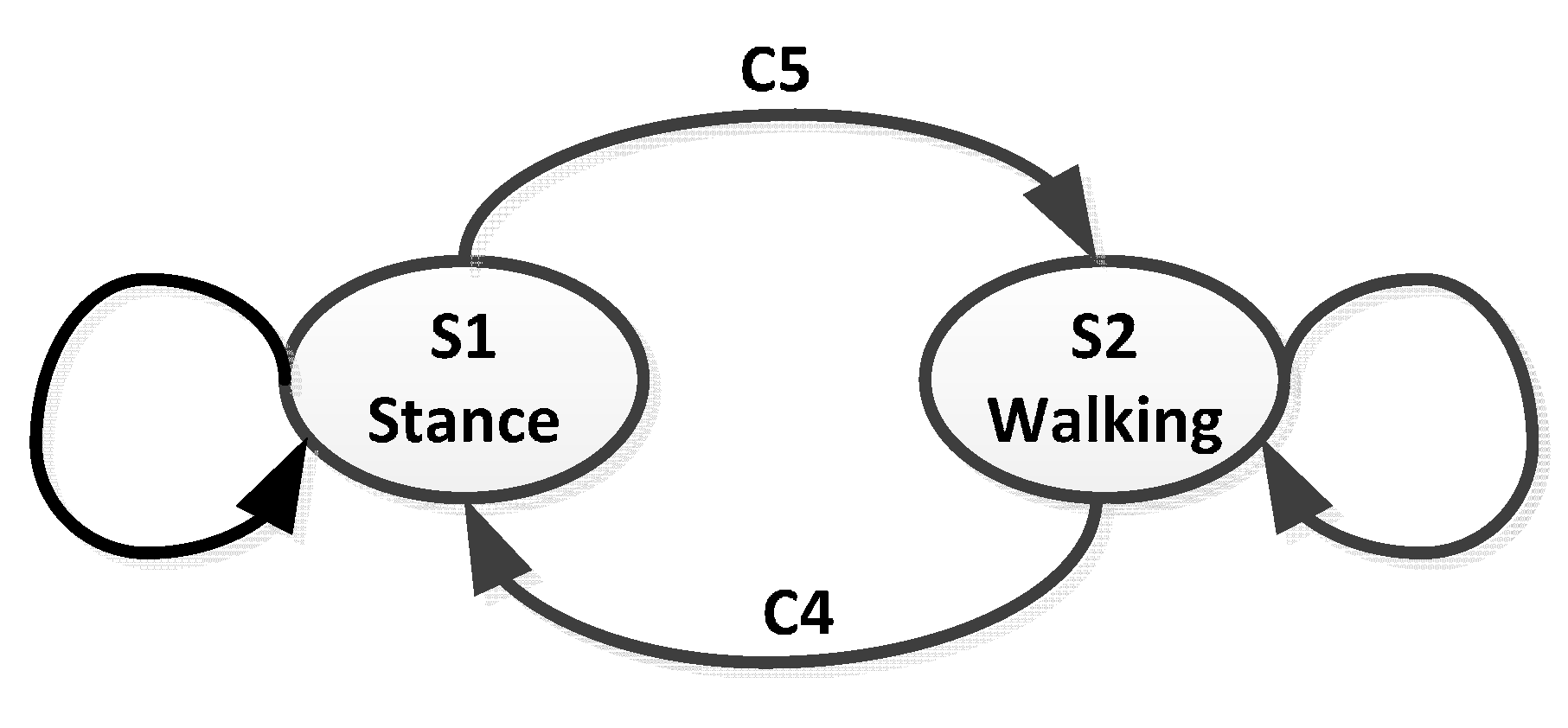

4.1. Step Detection

- C1. The total acceleration magnitude $a_{\mathrm{mag}}$ must cross the threshold $\delta_{\mathrm{th}}$ from negative to positive.

- C2. The time interval $\Delta t$ between two consecutive steps defined by C1 must lie within the interval from $\Delta t_{\min}$ to $\Delta t_{\max}$.

- C3. The difference $a_{\mathrm{dif}}$ between the extreme values of $a_{\mathrm{mag}}$ during a step phase and the threshold $\delta_{\mathrm{th}}$ must lie between $\lambda_{\min}$ and $\lambda_{\max}$; otherwise a perturbation point is recorded.

- C4. Transition from S2 to S1: there is no candidate step, or there are perturbation points in the sliding window.

- C5. Transition from S1 to S2: there is more than one candidate step, there is no perturbation point within the sliding window, and the autocorrelation value $r_{\mathrm{mag}}$ is larger than the threshold $r_{\mathrm{th}}$.
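Conditions C1 to C5 can be sketched in code as follows. This is a minimal illustration, not the authors' implementation: the default thresholds in `StepParams` are placeholder values, the reading of $a_{\mathrm{dif}}$ as the largest excursion from $\delta_{\mathrm{th}}$ between two upward crossings is an interpretation, and the function names `detect_steps` and `next_state` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class StepParams:
    # All defaults are illustrative assumptions, not values from the paper.
    delta_th: float = 0.0  # threshold on the acceleration magnitude
    dt_min: float = 0.3    # shortest accepted step interval (s)
    dt_max: float = 1.0    # longest accepted step interval (s)
    lam_min: float = 0.5   # smallest accepted excursion a_dif
    lam_max: float = 8.0   # largest accepted excursion a_dif


def detect_steps(a_mag: Sequence[float], t: Sequence[float],
                 p: StepParams) -> Tuple[List[float], List[float]]:
    """Return (candidate step times, perturbation times) per C1 to C3."""
    steps: List[float] = []
    perturbations: List[float] = []
    last_cross: Optional[int] = None  # index of the previous upward crossing
    for i in range(1, len(a_mag)):
        # C1: negative-to-positive crossing of the threshold.
        if not (a_mag[i - 1] < p.delta_th <= a_mag[i]):
            continue
        if last_cross is not None:
            phase = a_mag[last_cross:i]
            # Assumed reading of a_dif: largest distance from the threshold.
            a_dif = max(abs(v - p.delta_th) for v in phase)
            dt = t[i] - t[last_cross]
            if not (p.lam_min <= a_dif <= p.lam_max):
                perturbations.append(t[i])  # C3 violated
            elif p.dt_min <= dt <= p.dt_max:
                steps.append(t[i])          # C2 and C3 satisfied
        last_cross = i
    return steps, perturbations


def next_state(state: str, n_candidates: int, n_perturbations: int,
               r_mag: float, r_th: float) -> str:
    """Apply the S1/S2 transitions of C4 and C5 for one sliding window."""
    if state == "S2" and (n_candidates == 0 or n_perturbations > 0):
        return "S1"  # C4
    if (state == "S1" and n_candidates > 1 and n_perturbations == 0
            and r_mag > r_th):
        return "S2"  # C5
    return state


# Synthetic signal: one upward crossing every 0.5 s at 10 Hz sampling.
pattern = [-2.0, -1.0, 1.0, 2.0, 1.0]
signal = pattern * 4
times = [0.1 * i for i in range(len(signal))]
steps, perturbations = detect_steps(signal, times, StepParams())
```

The first upward crossing only starts a step phase, so four crossings yield three candidate steps. Scaling the same signal so that its excursion exceeds `lam_max` turns every candidate into a perturbation point instead.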

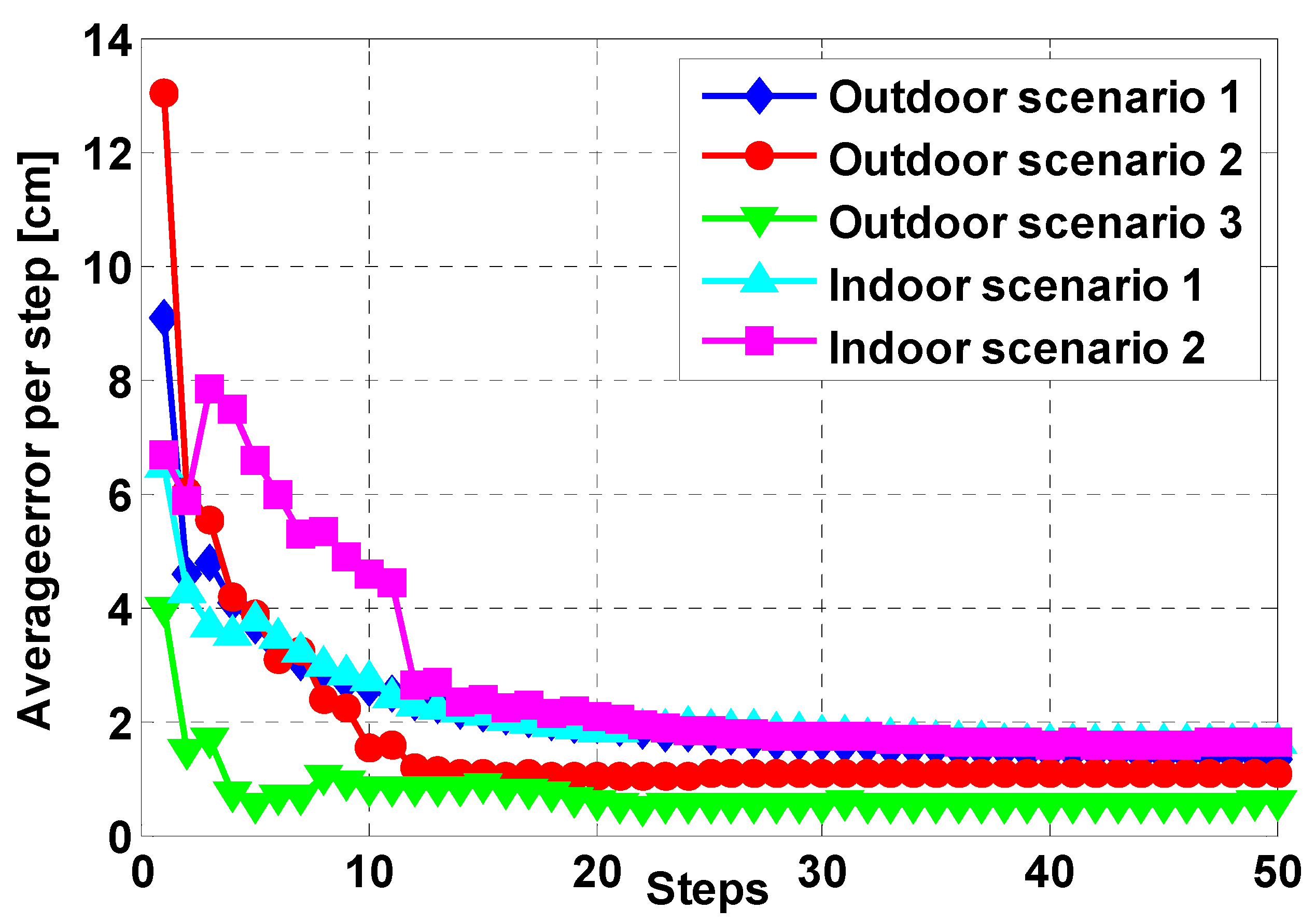

4.2. Step Length Estimation

- Step length is zero when a pedestrian is static;

- Step length at turning points is usually smaller than when a pedestrian is walking straight;

- Step length is a constant equal to the height of sidesteps when a pedestrian is climbing stairs;

- •

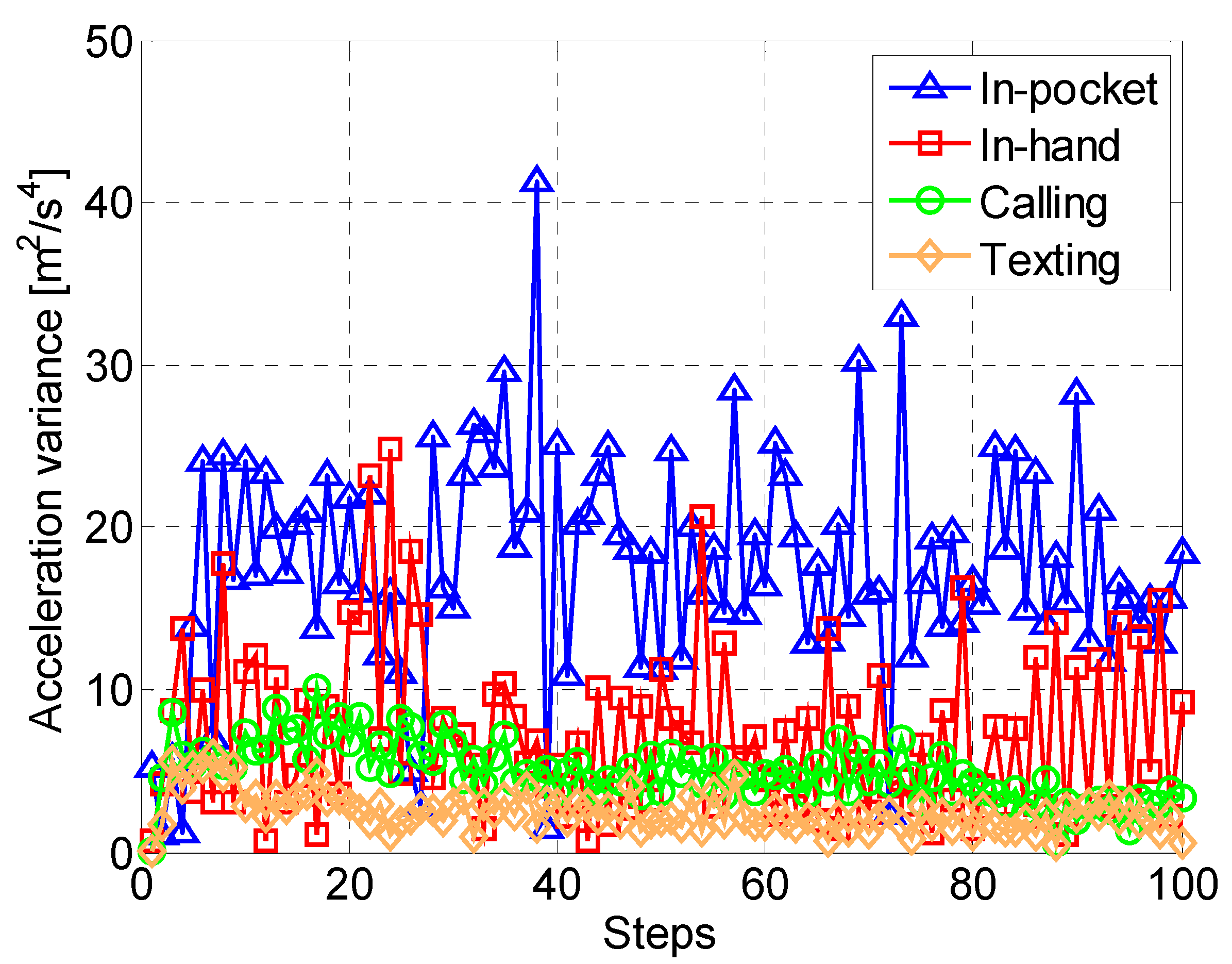

- , i.e., the model parameters βSi,Cj are inversely proportional to the acceleration variances in different carrying contexts. For example, the acceleration variance is the largest in In-pocket context C4 usually, thus the model parameter βSi,C4 is smaller than the parameters in other contexts.
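The constraints listed above can be illustrated with a short sketch. Everything numeric here is assumed for illustration: the proportionality constant `k`, the per-context variances, the `turn_scale` factor, and the labels C1 to C3 (only $C_4$ is identified in the text as the in-pocket context). The underlying step length model itself is not reproduced, so the walking step length is passed in as a plain number.

```python
from enum import Enum
from typing import Dict


class Motion(Enum):
    STATIC = "static"
    WALKING = "walking"
    TURNING = "turning"
    STAIRS = "stairs"


def beta_for_context(k: float, accel_variance: float) -> float:
    """Model parameter inversely proportional to the acceleration variance."""
    if accel_variance <= 0:
        raise ValueError("acceleration variance must be positive")
    return k / accel_variance


def constrained_step_length(motion: Motion, walking_length: float,
                            stair_step: float,
                            turn_scale: float = 0.9) -> float:
    """Apply the static, turning and stair constraints to a step length."""
    if motion is Motion.STATIC:
        return 0.0                          # no displacement when static
    if motion is Motion.STAIRS:
        return stair_step                   # constant on stairs
    if motion is Motion.TURNING:
        return turn_scale * walking_length  # assumed shrink factor < 1
    return walking_length


# Hypothetical variances; the in-pocket context C4 has the largest one.
variances: Dict[str, float] = {"C1": 0.5, "C2": 0.8, "C3": 1.0, "C4": 2.0}
betas = {ctx: beta_for_context(1.0, var) for ctx, var in variances.items()}
smallest_beta_context = min(betas, key=betas.get)
```

With these assumed variances, `smallest_beta_context` is `"C4"`, matching the statement that the in-pocket parameter is the smallest.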

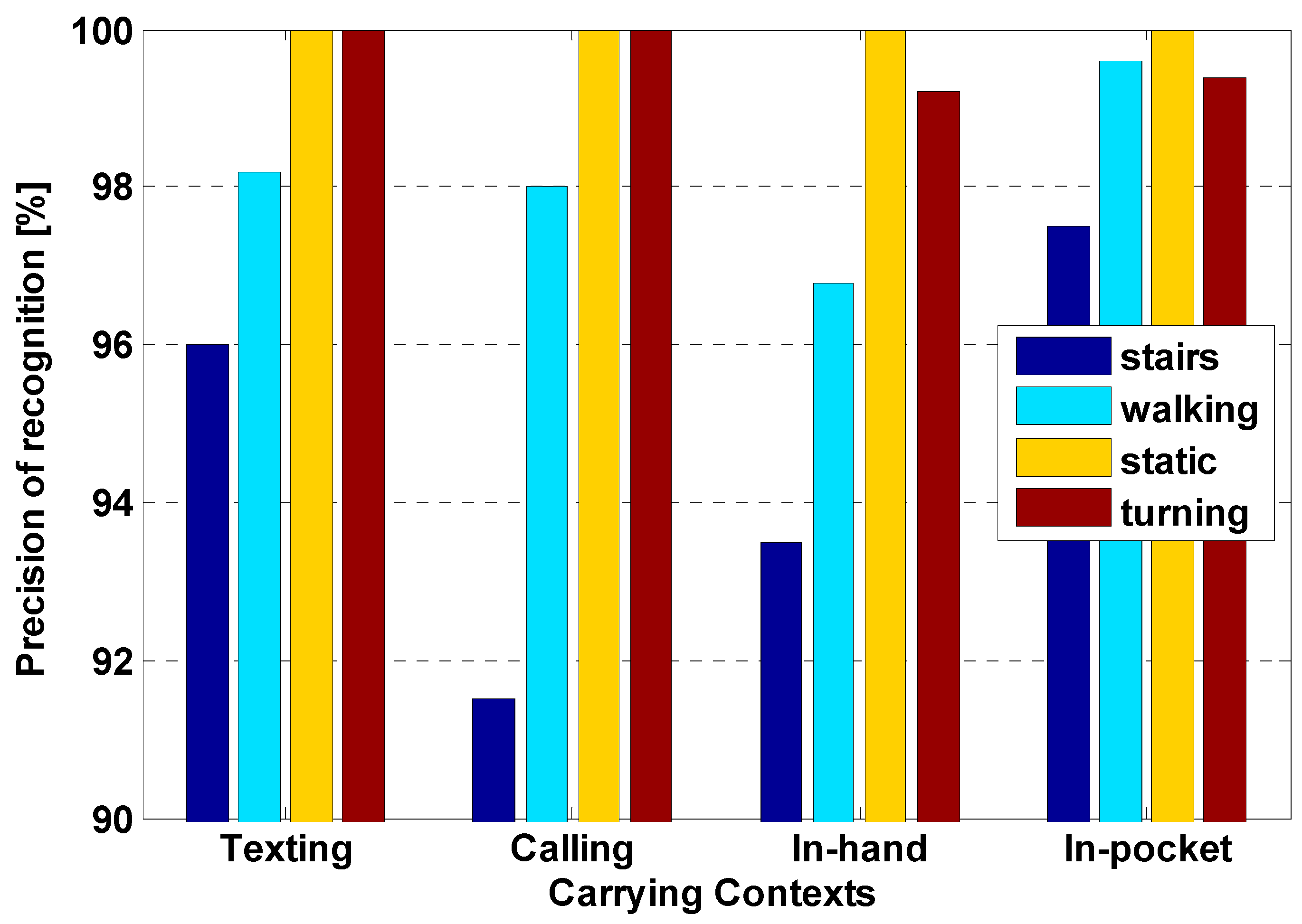

| Carrying Context | Recognized as Texting (%) | Recognized as Calling (%) | Recognized as In-hand (%) | Recognized as In-pocket (%) |
|---|---|---|---|---|
| Texting | 100 | 0 | 0 | 0 |
| Calling | 0 | 98.99 | 0 | 1.01 |
| In-hand | 0 | 0 | 100 | 0 |
| In-pocket | 0 | 0 | 0 | 100 |
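As a worked example, per-class recall and precision can be derived from the confusion matrix above. The matrix values are copied from the table. Because the rows are percentages, the precision and the mean recall below additionally assume that every carrying context contributed the same number of test samples, which the table does not state.

```python
from typing import List

labels = ["Texting", "Calling", "In-hand", "In-pocket"]

# Rows: true carrying context; columns: recognized context (percent).
confusion: List[List[float]] = [
    [100.0, 0.0, 0.0, 0.0],
    [0.0, 98.99, 0.0, 1.01],
    [0.0, 0.0, 100.0, 0.0],
    [0.0, 0.0, 0.0, 100.0],
]


def recall(cm: List[List[float]]) -> List[float]:
    """Fraction of each true class that was recognized correctly."""
    return [cm[i][i] / sum(cm[i]) for i in range(len(cm))]


def precision(cm: List[List[float]]) -> List[float]:
    """Fraction of each recognized class that was truly that class.

    Assumes equal sample counts per true class (rows are percentages).
    """
    n = len(cm)
    col_sums = [sum(cm[r][c] for r in range(n)) for c in range(n)]
    return [cm[c][c] / col_sums[c] for c in range(n)]


recalls = recall(confusion)
precisions = precision(confusion)
mean_recall = sum(recalls) / len(recalls)

for name, r, p in zip(labels, recalls, precisions):
    print(f"{name}: recall={r:.4f}, precision={p:.4f}")
print(f"mean recall: {mean_recall:.4f}")
```

The only off-diagonal entry is the 1.01% of Calling samples recognized as In-pocket, so Calling is the only class with recall below 1 and In-pocket is the only class with precision below 1.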

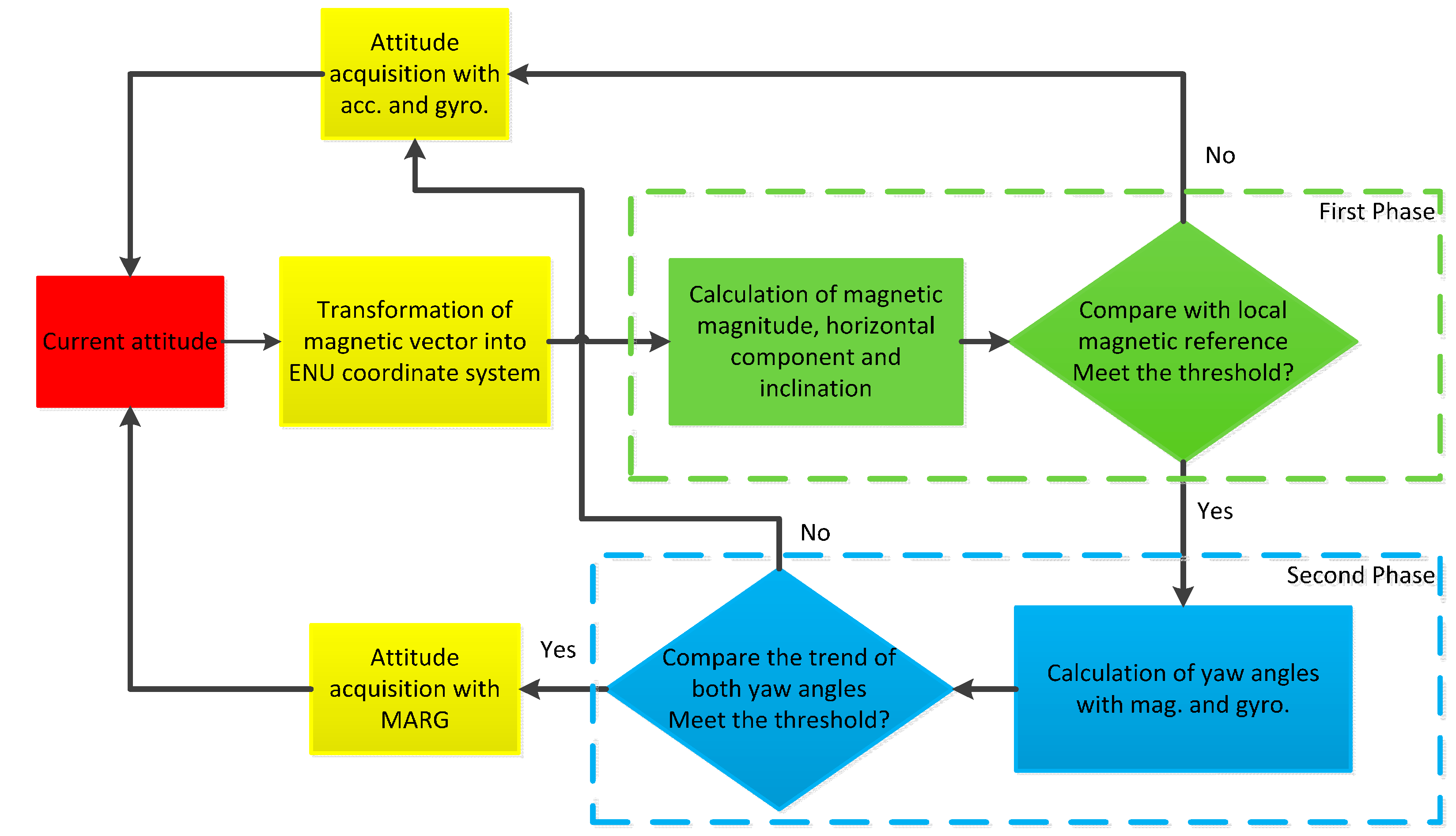

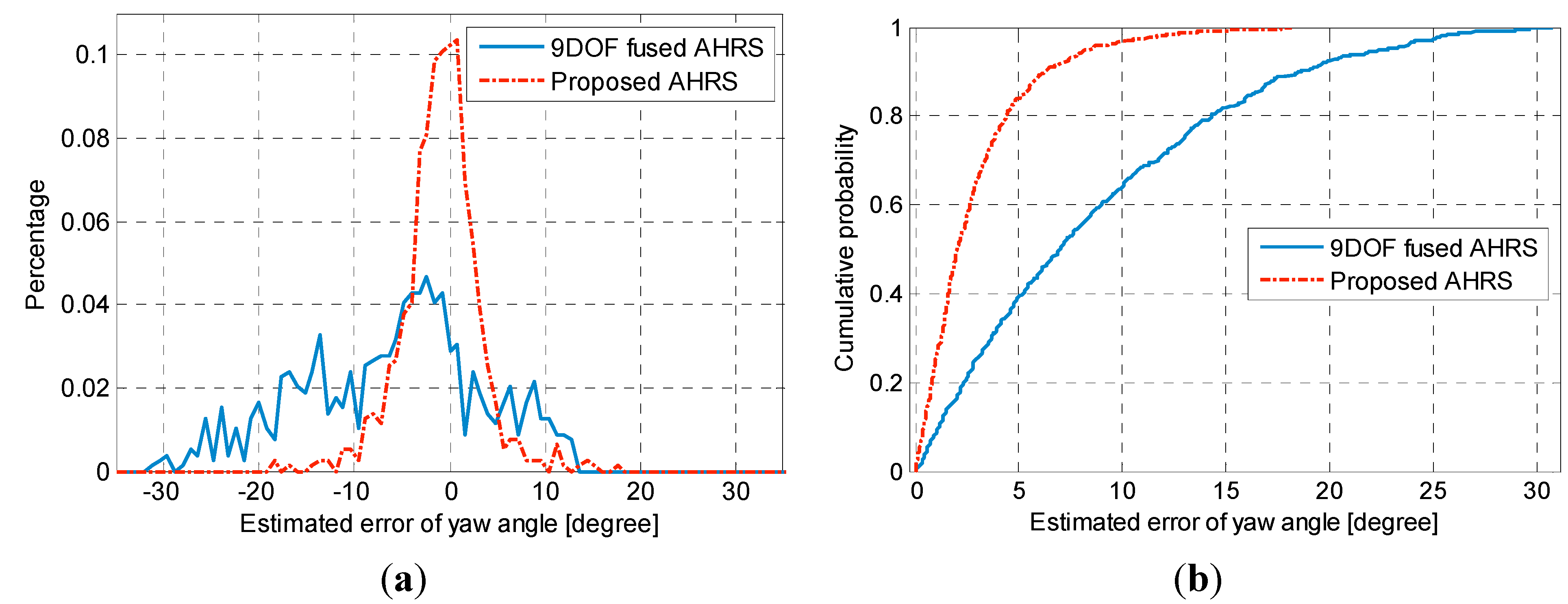

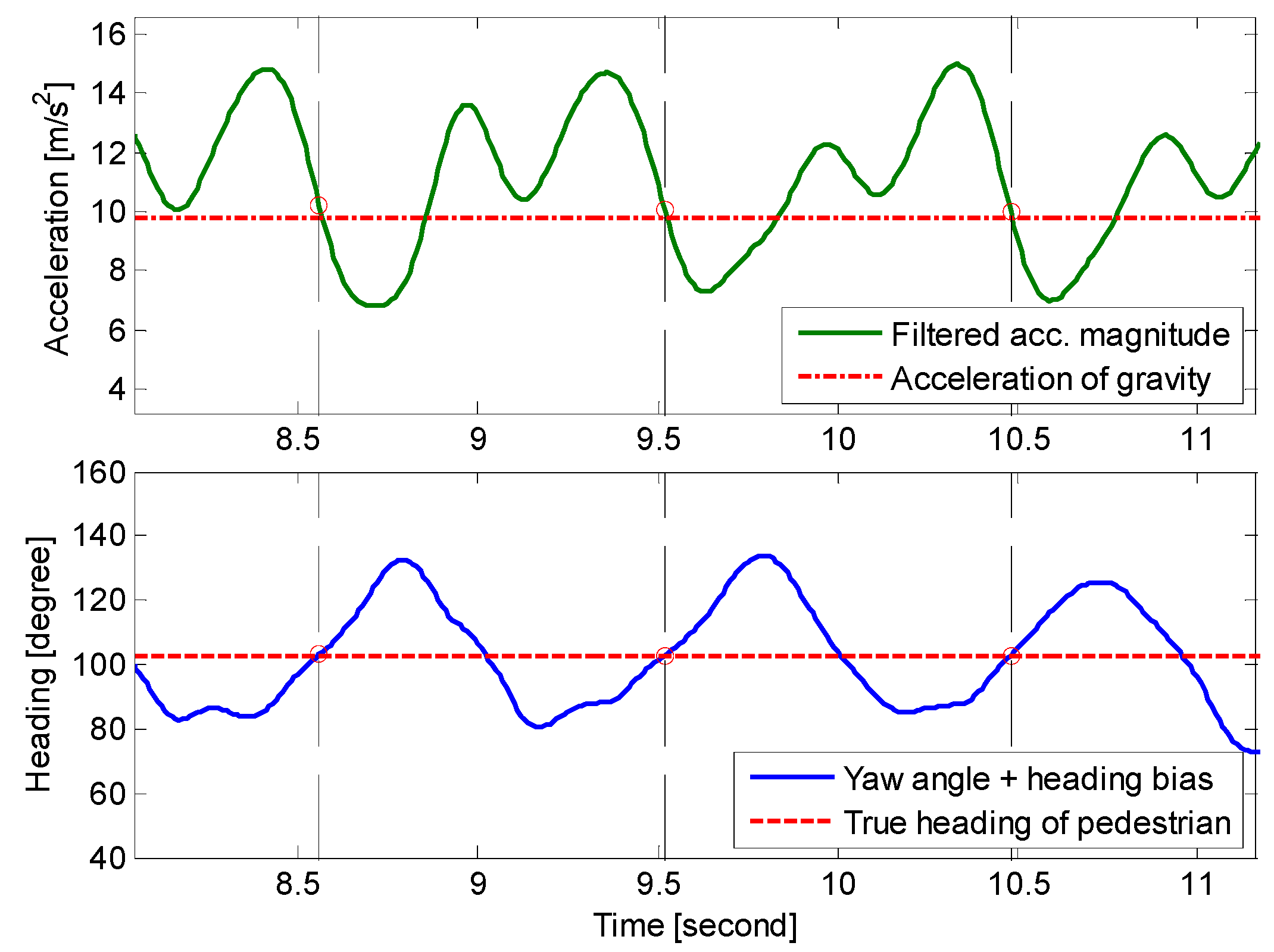

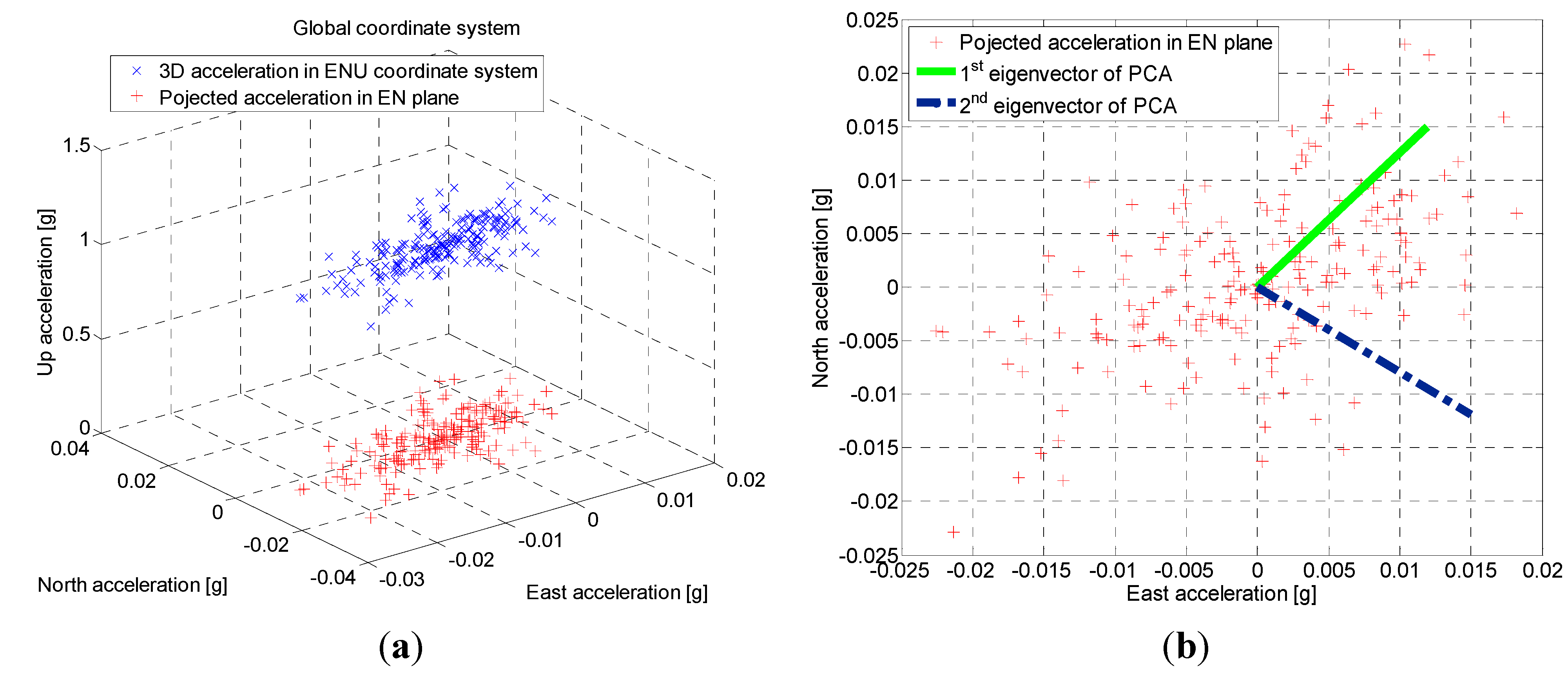

4.3. Heading Determination

5. Particle Filter Algorithm

5.1. Particle Filter Implementation

- If particles hit an obstacle in a non-turning situation (heading difference less than 25°), their step length model parameters are retained and can be inherited by the next generation of particles;

- If particles die in a turning situation (heading difference more than 25°), their model parameters are discarded, and only the model parameters of the surviving particles are retained for generating new particles.
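The two inheritance rules above can be sketched as follows. This is a simplified illustration under stated assumptions: each particle carries a single scalar parameter `beta`, new particles take their position from a randomly chosen survivor, and a heading difference of exactly 25° is treated as turning (the text only covers the cases below and above 25°). The names `Particle`, `inheritable_betas` and `regenerate` are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List

TURN_THRESHOLD_DEG = 25.0  # heading-difference threshold from the text


@dataclass
class Particle:
    x: float
    y: float
    beta: float         # step length model parameter (scalar, assumed)
    alive: bool = True  # False once the particle has hit an obstacle


def inheritable_betas(particles: List[Particle],
                      heading_diff_deg: float) -> List[float]:
    """Parameters the next generation may inherit."""
    turning = abs(heading_diff_deg) >= TURN_THRESHOLD_DEG
    if turning:
        # Turning: parameters of dead particles are discarded.
        return [p.beta for p in particles if p.alive]
    # Non-turning: parameters of dead particles are retained as well.
    return [p.beta for p in particles]


def regenerate(particles: List[Particle], heading_diff_deg: float,
               rng: random.Random) -> List[Particle]:
    """Draw a new generation of the same size as the old one."""
    survivors = [p for p in particles if p.alive]
    if not survivors:
        raise ValueError("no surviving particles to resample from")
    pool = inheritable_betas(particles, heading_diff_deg)
    new_generation = []
    for _ in particles:
        parent = rng.choice(survivors)  # position from a survivor (assumed)
        new_generation.append(Particle(parent.x, parent.y, rng.choice(pool)))
    return new_generation


old = [Particle(0.0, 0.0, 0.40), Particle(1.0, 0.0, 0.50, alive=False)]
straight_pool = inheritable_betas(old, heading_diff_deg=10.0)
turning_pool = inheritable_betas(old, heading_diff_deg=40.0)
after_turn = regenerate(old, 40.0, random.Random(0))
```

In the straight-walking case the dead particle's parameter stays in the pool, whereas after a turn only the survivor's parameter can be inherited.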

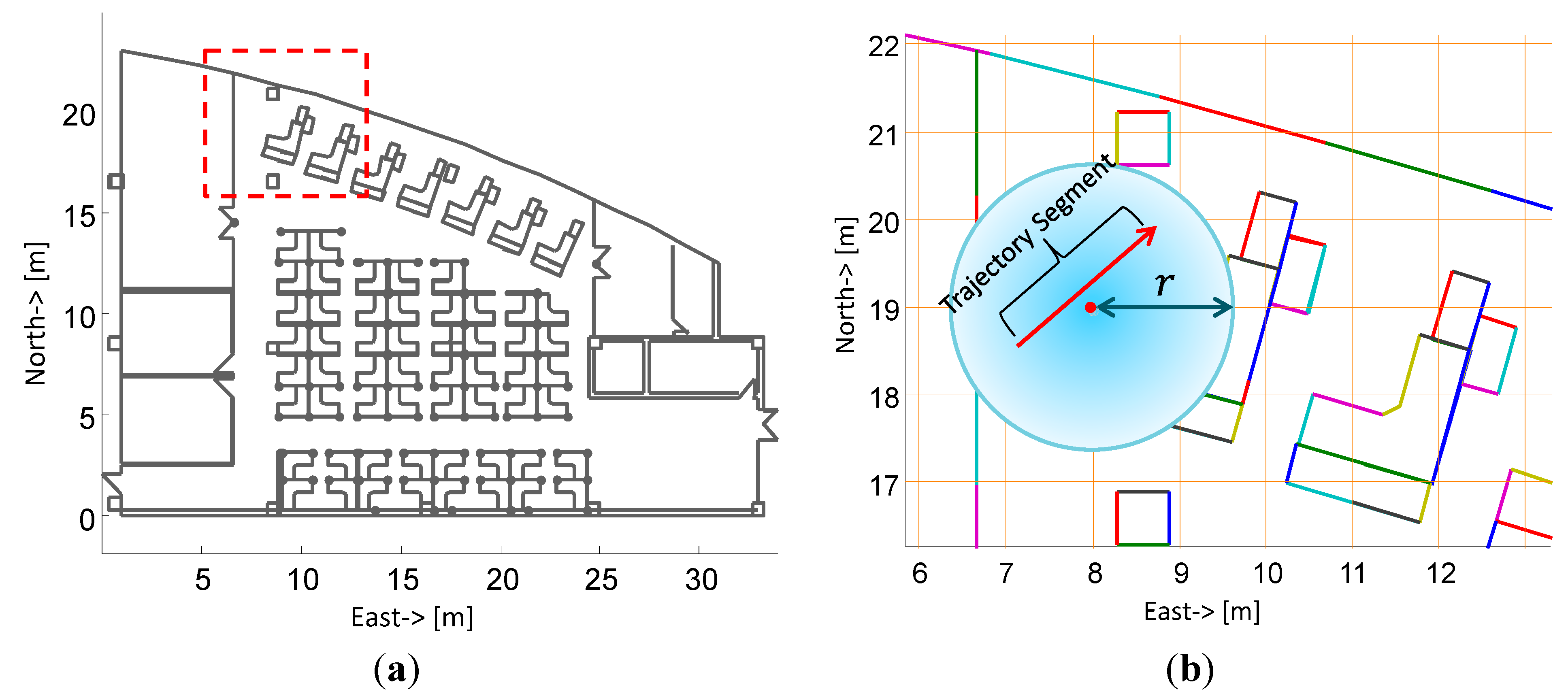

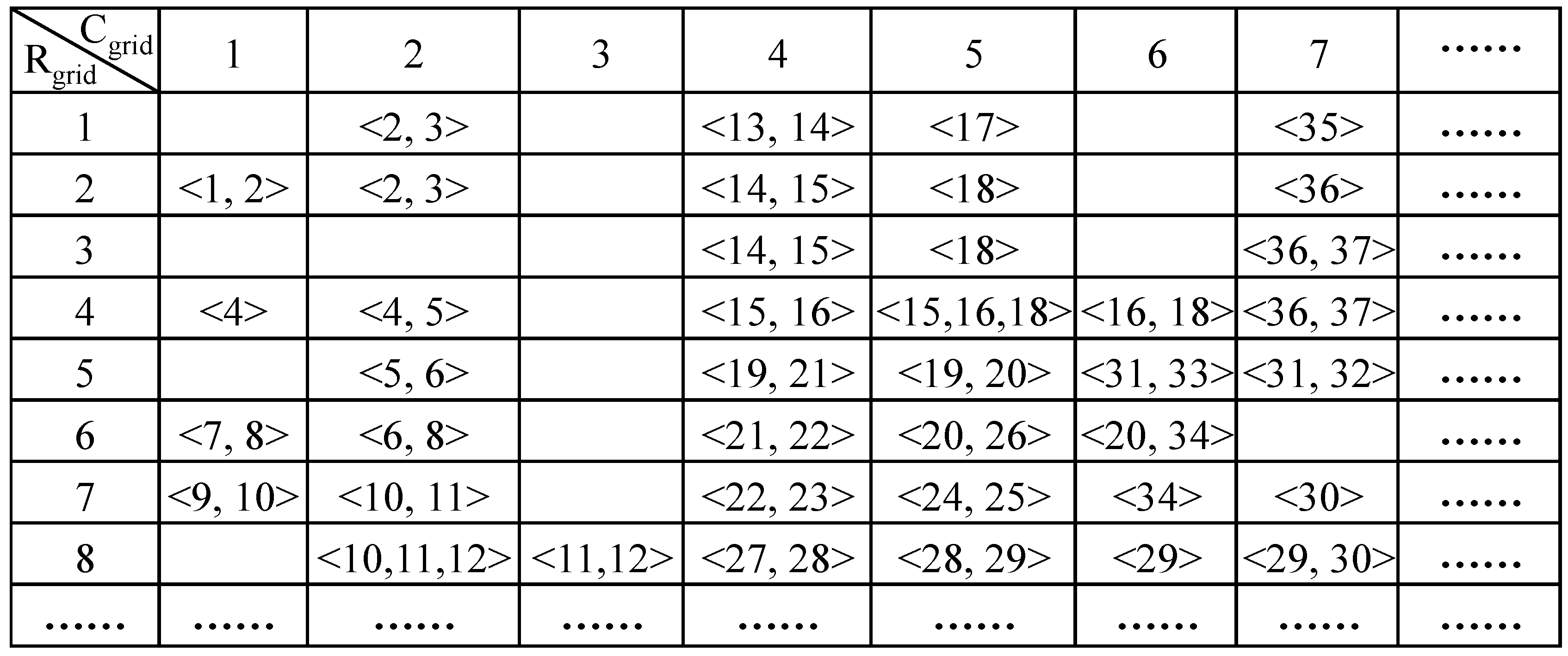

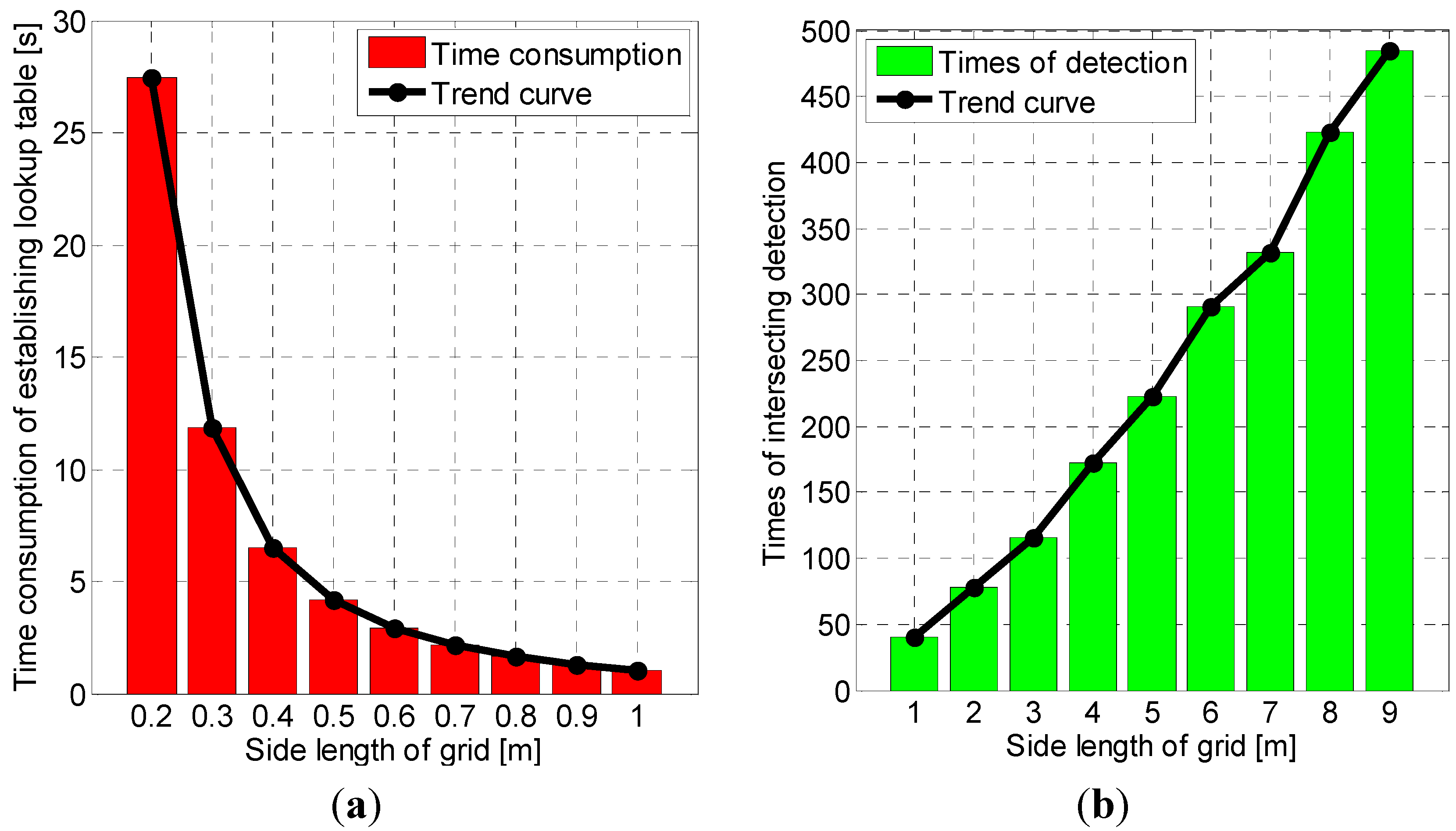

5.2. Map Construction and Optimization

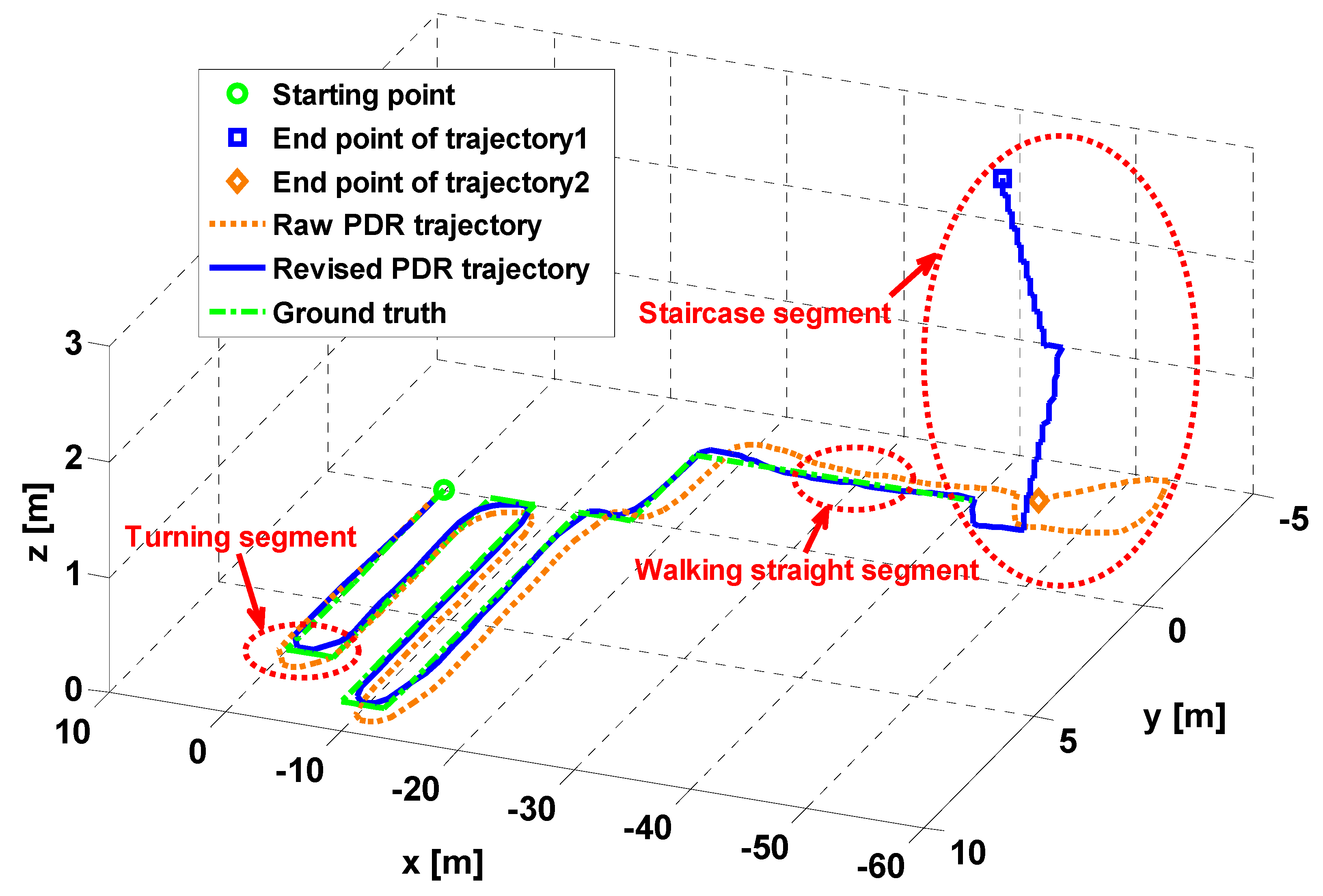

6. Indoor Localization Field Test

6.1. Field Test Setup

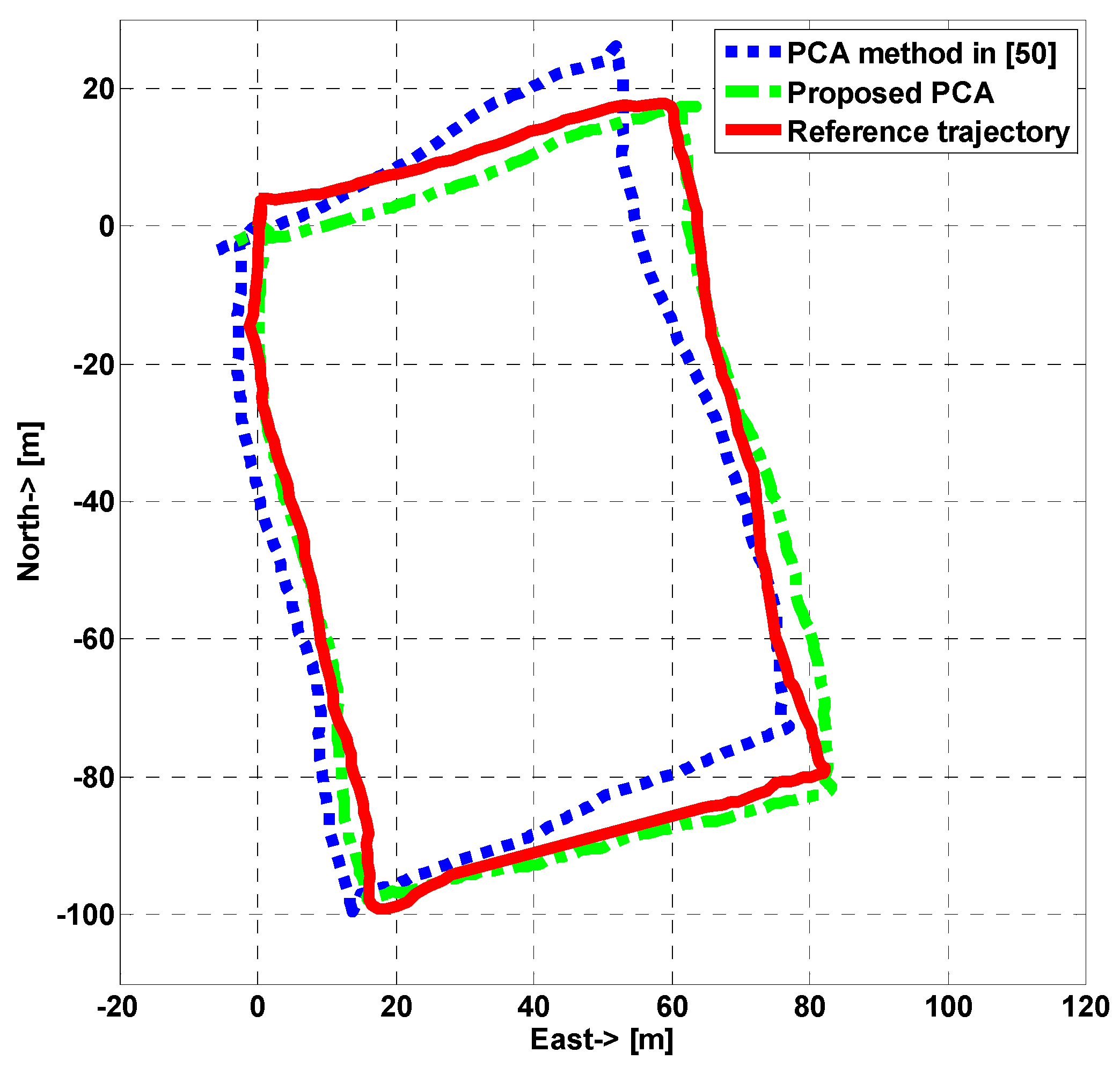

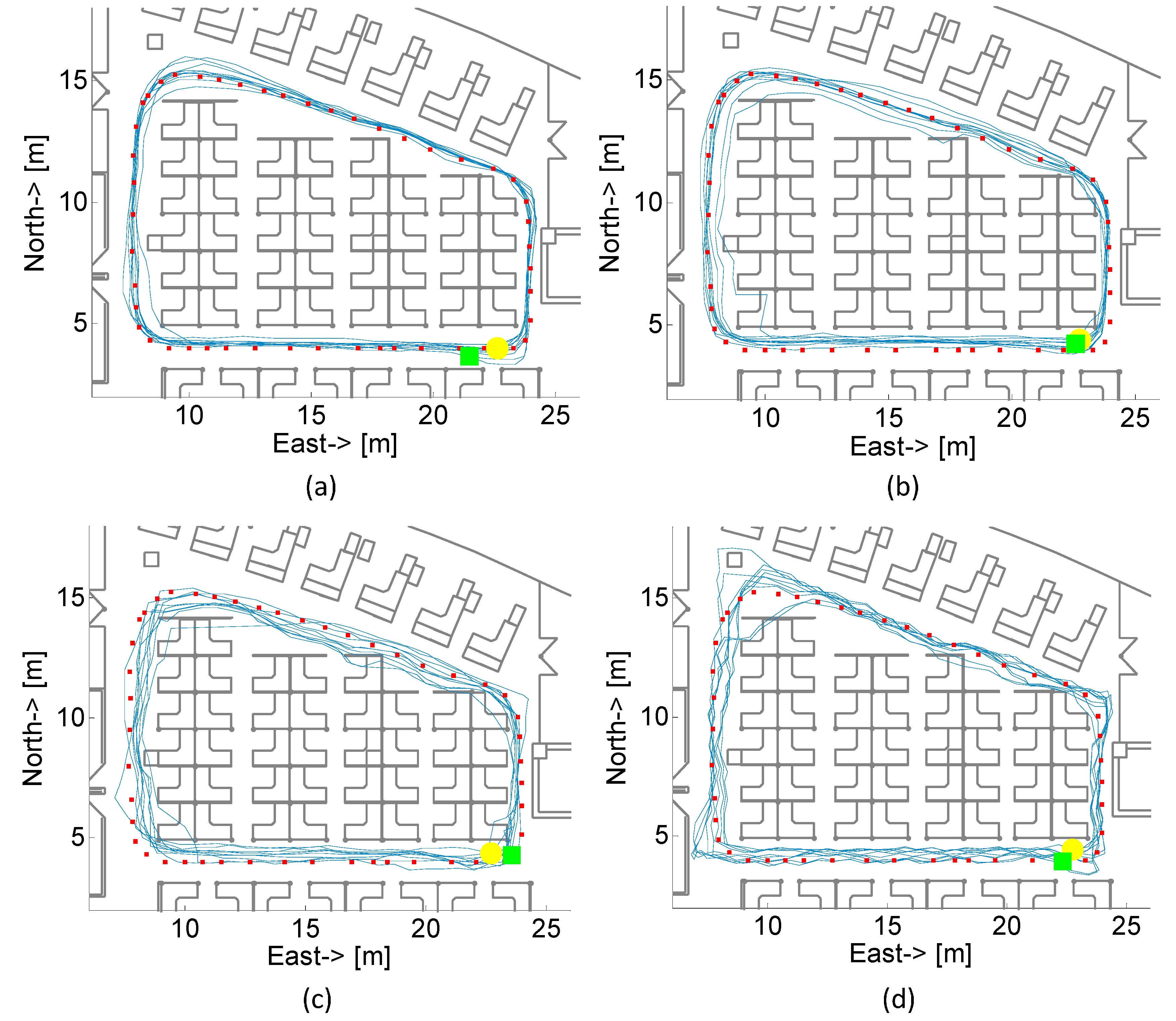

6.2. Field Test Results

| Carrying Mode | 50% Error (Proposed) | 50% Error (w/o Assistance) | 95% Error (Proposed) | 95% Error (w/o Assistance) |
|---|---|---|---|---|
| Texting | 0.51 | 0.93 | 0.80 | 2.24 |
| Calling | 0.45 | 1.14 | 0.88 | 4.54 |
| In-hand | 0.50 | 1.38 | 1.01 | 3.65 |
| In-pocket | 0.74 | 1.40 | 1.71 | 3.74 |
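To compare the two columns directly, the relative error reduction of the proposed method over the unassisted baseline can be computed from the table values. The snippet below is only a small arithmetic aid; the numbers are copied from the table, and the helper name `reduction_percent` is hypothetical. The result is a ratio, so it does not depend on the unit of the errors.

```python
from typing import Dict, Tuple

# (proposed, without assistance) localization errors per carrying mode.
results: Dict[str, Dict[str, Tuple[float, float]]] = {
    "Texting": {"50%": (0.51, 0.93), "95%": (0.80, 2.24)},
    "Calling": {"50%": (0.45, 1.14), "95%": (0.88, 4.54)},
    "In-hand": {"50%": (0.50, 1.38), "95%": (1.01, 3.65)},
    "In-pocket": {"50%": (0.74, 1.40), "95%": (1.71, 3.74)},
}


def reduction_percent(proposed: float, baseline: float) -> float:
    """Relative error reduction of `proposed` with respect to `baseline`."""
    return 100.0 * (baseline - proposed) / baseline


reductions = {
    mode: {level: reduction_percent(*pair) for level, pair in levels.items()}
    for mode, levels in results.items()
}

for mode, levels in reductions.items():
    print(f"{mode}: 50% error -{levels['50%']:.1f}%, "
          f"95% error -{levels['95%']:.1f}%")
```

In every carrying mode the proposed method has the lower error at both percentiles, so all computed reductions are positive.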

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Qian, J.; Pei, L.; Ma, J.; Ying, R.; Liu, P. Vector Graph Assisted Pedestrian Dead Reckoning Using an Unconstrained Smartphone. Sensors 2015, 15, 5032-5057. https://doi.org/10.3390/s150305032