Abstract

Based on wireless multimedia sensor networks (WMSNs) deployed in an underground coal mine, a miner’s lamp video collaborative localization algorithm is proposed to locate miners in underground tunnels with insufficient illumination and bifurcated structures. In a bifurcation area, several camera nodes are deployed along the longitudinal direction of the tunnels and form a wireless collaborative cluster to monitor and locate miners. Cap-lamps are regarded as the distinguishing feature of miners under insufficient illumination, which means that miners can be identified by detecting their cap-lamps. A miner’s lamp projects mapping points onto the imaging planes of the collaborative cameras, and the coordinates of these mapping points are calculated by the cameras. Then, multiple straight lines between the positions of the collaborative cameras and their corresponding mapping points are established. To find the three-dimensional (3D) coordinates of the miner’s lamp, a least squares method is used to obtain the optimal intersection of the multiple straight lines. Tests were carried out both in a corridor and in a realistic underground tunnel, and they show that the proposed algorithm achieves good effectiveness, robustness and localization accuracy under real-world underground tunnel conditions.

1. Introduction

In order to strengthen production safety, underground coal mines have an urgent demand for wireless multimedia communication services [1,2,3,4,5]. Wireless multimedia sensor networks (WMSNs) are a new kind of sensor network that introduces multimedia applications, such as images, audio and video, into wireless sensor networks (WSNs). The multimedia monitoring ability of underground coal mines is expected to improve significantly with WMSNs because of their excellent multimedia perception ability, fast and convenient wireless access and flexible topology [6,7,8,9,10]. In this article, WMSNs for an underground coal mine are structured, and a video collaborative localization algorithm for miners is proposed based on the WMSNs to improve the safety of miners.

In WMSNs, video localization of targets is divided into localization with a single camera [11,12] and localization with collaborative cameras [13,14,15,16,17,18,19,20]. Localization with a single camera usually requires the height of the target to be known in advance [11] or the target to be extracted perfectly [12]. Since the altitude and the plane position of the miner’s lamp need to be determined simultaneously, localization with collaborative cameras is applied in this paper. Video collaborative localization of targets is achieved by fusing the video information collected and processed by multiple cameras. For example, reference [13] proposed a visual collaborative localization algorithm for the passive target positioning problem. According to machine vision theory, the target was projected into several straight lines on the ground plane. The optimum intersection of the straight lines, which could be obtained by using the Hough transform, was taken as the position estimate of the target. However, because of the narrow structure of underground tunnels, cameras can only be deployed along the longitudinal direction of the tunnels. Thus, the projected straight lines may overlap, which may cause localization to fail with this method.

References [14,15] proposed a method to utilize the active cameras to localize the target collaboratively. The perspective projection model of target was established by utilizing the Gaussian error function. Cameras identify the target location by recognizing the target body and calculating the position and the size of target body based on the perspective projection model. Moreover, the problem of active cameras selection was tackled by balancing the tradeoff between the accuracy of target localization and the energy consumption in camera sensor networks.

Reference [16] presented a technique that uses multiple feature points to compute the target location in wireless visual sensor networks. The target edge was determined by the Canny detector and the position of the target was calculated by fusing the edge information of the target. However, since underground coal mines are insufficiently illuminated and a lot of coal dust may adhere to miners’ uniforms [21], a miner’s body and the mine background look very similar, which makes it difficult to distinguish the miner’s profile from the background.

Therefore, considering insufficient illumination and narrow underground tunnels with bifurcated structures, a miner’s lamp video collaborative localization algorithm based on WMSNs is proposed. Miners must carry cap-lamps to provide auxiliary lighting in an underground coal mine, and these lamps contrast sharply with the dim background. The algorithm therefore locates a miner by detecting the miner’s cap-lamp. The miner’s lamp projects mapping points onto the imaging planes of the cameras, and the coordinates of the mapping points are calculated by the cameras. Then, multiple straight lines between the positions of the cameras and their corresponding mapping points are established. Finally, the miner is located by finding the optimal intersection of the multiple straight lines. To verify the effectiveness of the proposed algorithm, tests were carried out both in a corridor and in a realistic underground tunnel. The experimental results show that good localization performance is achieved with the proposed algorithm.

The rest of this article is organized as follows. Section 2 introduces the architecture of WMSNs and the localization scene for an underground coal mine. The video collaborative localization algorithm for the miner’s lamp is discussed in Section 3, and Section 4 presents the experimental results. Section 5 concludes the article.

2. Architecture of WMSNs and Location Scene for Underground Coal Mine

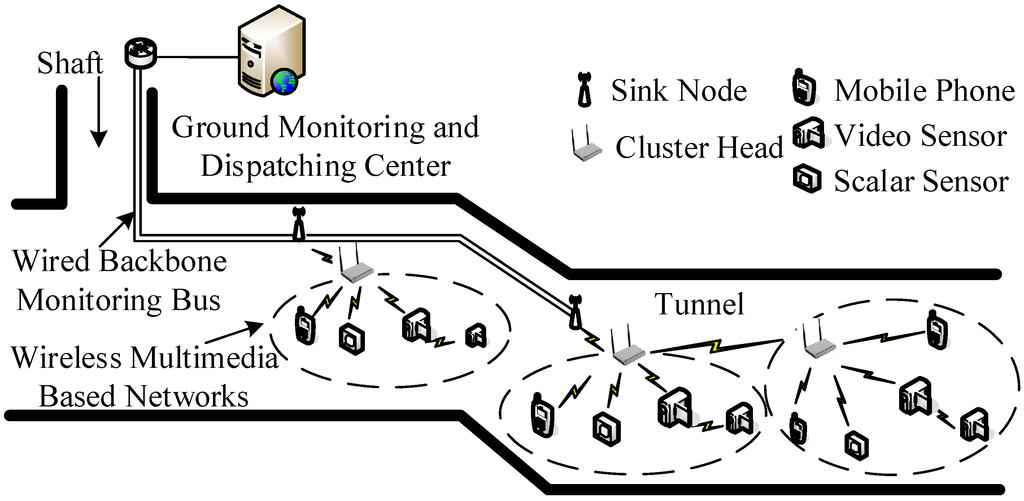

Figure 1 shows the architecture of WMSNs in an underground coal mine. The system consists of three layers: the wireless multimedia based networks, the wired backbone monitoring bus and the Ground Monitoring and Dispatching Center (GMDC). The wireless multimedia based networks consist of cameras, mobile phones, scalar sensors, etc., which are deployed in the underground coal mine to collect multimedia information. The collected multimedia information is processed by cluster head nodes and sent to the sink nodes via multi-hop relaying. Sink nodes, which are connected to the wired backbone monitoring bus, transmit the collected multimedia information to the GMDC over that bus. In this way, the GMDC can monitor the multimedia information of the underground coal mine in real time.

Figure 1. Architecture of wireless multimedia sensor networks (WMSNs) in underground coal mine.

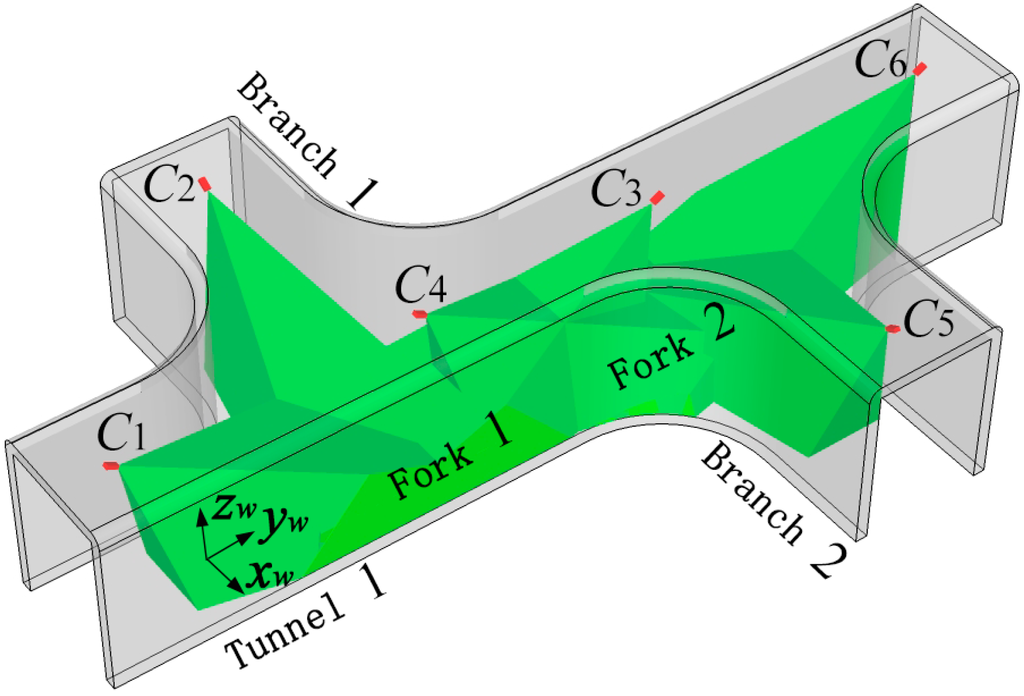

Figure 2 shows the scene of miner localization in an underground coal mine with bifurcated structures. To describe the positions of cameras and miners, a unified World Coordinate System (WCS) owxwywzw is established along the underground tunnels, with the transverse direction of Tunnel 1 as the xw-axis, the longitudinal direction as the yw-axis and the altitude direction as the zw-axis. The tunnel entrance is selected as the origin of the WCS, so that any point in the underground tunnels can be located according to its position along the tunnels. Note that any underground tunnel can be described with the WCS.

In order to locate miners, the WMSN cameras deployed in underground tunnels should be aware of their own positions as well as the positions of adjacent cameras, which are referred to as the geometrical parameters in this paper. Several localization and calibration algorithms can be applied to obtain the geometrical parameters automatically [22,23,24,25,26]. However, in our experiments, the cameras were deployed manually and their geometrical parameters were also measured manually.

The gray part in Figure 2 represents underground tunnels with bifurcated structures. Tunnel 1 and Branch 1, Branch 2 constitute the bifurcation areas of Forks 1 and 2. Ci in Figure 2 represents camera nodes which have the same image processing, digital processing, wireless transmission and reception properties. Cameras C1, C2 and C3 are deployed along the longitudinal direction of tunnels, facing Fork 1 and monitor Fork 1 together. Similarly, cameras C4, C5 and C6 are deployed along the longitudinal direction of tunnels, facing Fork 2 and monitor Fork 2 together. Cameras C3 and C4 are deployed along the longitudinal direction of Tunnel 1, monitoring the connected region of Fork 1 and Fork 2 together. The green shadow part in Figure 2 represents the areas that cameras monitor. The area that one camera can monitor is approximately a pyramid shape.

Figure 2. Scene of miner localization in underground coal mine with bifurcated structures.

3. Video Collaborative Localization of the Miner’s Lamp

3.1. The Miner’s Lamp Detection with the Background Subtraction Method

In underground tunnels, generally it is difficult to distinguish miners because of coal dusts and insufficient illumination. However, miners must carry cap-lamps to offer auxiliary lighting in the underground coal mine, which provide a sharp contrast to the dim background. Thus, the background subtraction method [27] is applied in order to detect the miners’ lamps reliably.

From the collected video sequences of underground tunnels, we select one frame without a miner as the initial background image. The difference image is obtained by subtracting the background image from the current image. In order to reduce the noise interference caused by the beam divergence of the miner’s lamp, a mean filter is applied to smooth the difference image. An appropriate threshold is then selected to turn the filtered difference image into a binary image, which has only two pixel values, 0 and 1.

If all pixel values of the binary image are 0, there is no miner in the monitoring area of the camera, and the background image is updated with the current image of the video sequence collected by the camera at the top of the tunnel. In contrast, if any pixel value of the binary image is 1, a miner has appeared in the monitoring area of the camera. The region of the binary image with pixel value 1 is the mapping range of the miner’s cap-lamp on the imaging plane of the camera. The pixel coordinate of the mapping point of the miner’s lamp on the imaging plane can be obtained by calculating the geometric center of the mapping range.
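The detection steps above can be sketched in Python with NumPy. This is only an illustrative sketch, not the authors’ implementation: the function names, the 3 × 3 filter window and the `min_diff` noise floor are assumptions introduced here. The noise floor is needed because a threshold defined purely as a fraction of the maximum difference value would always mark at least one pixel, even in an empty scene.

```python
import numpy as np

def mean_filter(img, k=3):
    """Smooth an image with a k x k mean (box) filter, using edge padding."""
    pad = k // 2
    padded = np.pad(img.astype(float), pad, mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def detect_lamp(current, background, ratio=0.6, min_diff=30.0):
    """Return the (u, v) pixel centroid of the lamp region, or None.

    ratio    : threshold as a fraction of the maximum filtered difference
               (0.6 mirrors the 60% used in the experiments).
    min_diff : hypothetical noise floor (assumption); below it, the frame
               is treated as containing no miner.
    """
    diff = np.abs(current.astype(float) - background.astype(float))
    smooth = mean_filter(diff)
    peak = smooth.max()
    if peak < min_diff:
        return None                      # binary image would be all zeros
    binary = smooth >= ratio * peak      # binary image with values 0 and 1
    vs, us = np.nonzero(binary)          # rows are v, columns are u
    return float(us.mean()), float(vs.mean())  # geometric center
```

When `detect_lamp` returns `None`, the caller would replace the stored background with the current frame, as described above.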

3.2. Camera Imaging Model

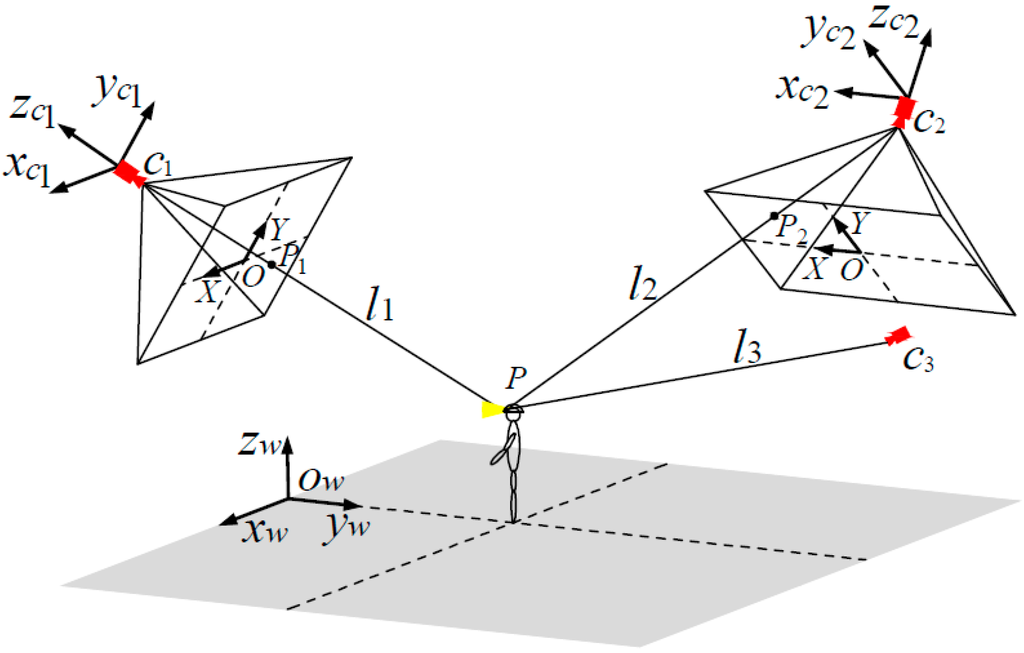

The pixel coordinate of the mapping point needs to be converted to the physical coordinate in the WCS of underground coal mines. Thus, a vision imaging model of cameras is established according to the linear imaging principle of the camera [28]. Figure 3 shows the vision imaging model of cameras C1, C2 and C3 in Figure 2.

The grey shaded area in Figure 3 represents the ground of the tunnels in Figure 2. P is the position of the cap-lamp carried by a miner. Ci is the camera node, whose position coordinate in the WCS of the underground coal mine is assumed to be Ci(xwci,ywci,zwci), where i is 1, 2 or 3. The owxwywzw in Figure 3 represents the WCS of the underground coal mine established in Figure 2. OXY represents the two-dimensional coordinate system of the imaging plane of the camera, where the X axis and Y axis are the horizontal and vertical directions on the imaging plane, respectively. The pyramid formed by the camera and its imaging plane represents the region that the camera covers. The Camera Coordinate System (CCS) ocixciycizci of Ci is established with the position of Ci as its origin. The zci axis points in the direction opposite to the optical axis of the camera, and the xci axis and yci axis are aligned with the X axis and Y axis of the imaging plane, respectively.

Figure 3. Camera vision imaging model.

In Figure 3, we assume that the camera Ci detects a miner’s lamp and calculates the pixel coordinate Pi(ui,vi) of the mapping point on its own imaging plane. By applying the linear relationship between image resolution of the camera Ci and physical size of the photosensitive element Complementary Metal Oxide Semiconductor (CMOS) in the Camera Coordinate System ocixciycizci, the pixel coordinate Pi (ui,vi) of the mapping point is converted to the physical coordinate Pci(xci,yci,zci) in the CCS ocixciycizci as

where f is the focal length of camera Ci, m and n are the physical dimensions of the photosensitive element CMOS in the horizontal and vertical direction. The image resolution is M × N, where M and N are respectively the number of pixels in the X axis and Y axis direction on the imaging plane. Expressions m/M and n/N are respectively the physical size of a single pixel in the X axis and Y axis direction on the imaging plane of camera.
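As an illustration of this pixel-to-physical conversion, a minimal Python sketch is given below. It rests on assumptions that the text does not state explicitly: the principal point is taken to be the image center (M/2, N/2), the image axes are taken to increase in the same direction as xci and yci, and the imaging plane is placed at zci = −f because the zci axis is opposite to the optical axis. The function name and the default values (taken from the camera parameters reported in Table 1) are illustrative only.

```python
def pixel_to_camera(u, v, f=6.0, sensor=(4.8, 3.6), resolution=(1280, 960)):
    """Convert a pixel coordinate (u, v) to a point in the CCS, in mm.

    f          : focal length in mm
    sensor     : (m, n), physical CMOS size in mm (horizontal, vertical)
    resolution : (M, N), image size in pixels (horizontal, vertical)
    """
    m, n = sensor
    M, N = resolution
    x = (u - M / 2.0) * (m / M)   # m/M is the width of one pixel in mm
    y = (v - N / 2.0) * (n / N)   # n/N is the height of one pixel in mm
    z = -f                        # assumed sign: z axis opposes the optical axis
    return (x, y, z)
```

Under these assumptions, the image center maps to (0, 0, −f), and each pixel step shifts the point by the physical pixel size m/M or n/N.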

The physical coordinate Pci(xci,yci,zci) of the mapping point in the CCS ocixciycizci needs to be converted to the physical coordinate in the WCS owxwywzw. We assume that the WCS owxwywzw coincides with the CCS ocixciycizci after the WCS is, in turn, rotated φ degrees counterclockwise around the zw axis, rotated θ degrees counterclockwise around the xw axis and translated by a vector twi. Then, the physical coordinate Pci(xci,yci,zci) in the CCS ocixciycizci is turned into the physical coordinate Pwi(xwi,ywi,zwi) in the WCS owxwywzw as

where the translational vector twi = (xwci,ywci,zwci) is from the origin point to the camera Ci node in the WCS of underground coal mine. Rotation matrix Rwi is decided by the yaw angle φ and the pitch angle θ of the camera Ci as
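The coordinate transformation can be sketched as follows. This is a hedged reconstruction from the verbal description, not the authors’ formula: it assumes that the rotation matrix is the product of a counterclockwise rotation by φ about the z axis and a counterclockwise rotation by θ about the x axis, and that a CCS point is mapped to the WCS by applying this rotation and then adding twi. With the yaw and pitch values reported in Table 2, this convention makes each camera’s optical axis point into the corridor and slightly downward, which is consistent with the described deployment. The function names are illustrative.

```python
import numpy as np

def rotation_matrix(yaw_deg, pitch_deg):
    """Rotation from CCS to WCS: yaw about z, then pitch about x (assumed order)."""
    phi, theta = np.radians([yaw_deg, pitch_deg])
    rz = np.array([[np.cos(phi), -np.sin(phi), 0.0],
                   [np.sin(phi),  np.cos(phi), 0.0],
                   [0.0,          0.0,         1.0]])
    rx = np.array([[1.0, 0.0,            0.0],
                   [0.0, np.cos(theta), -np.sin(theta)],
                   [0.0, np.sin(theta),  np.cos(theta)]])
    return rz @ rx

def camera_to_world(p_c, yaw_deg, pitch_deg, t_w):
    """Map a CCS point to the WCS; p_c and t_w must use the same length unit."""
    r = rotation_matrix(yaw_deg, pitch_deg)
    return r @ np.asarray(p_c, dtype=float) + np.asarray(t_w, dtype=float)
```

Because the mapping point is expressed in millimetres on the sensor while camera positions are measured in metres, the mapping point should be converted to metres before the translation is added.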

3.3. Miner’s Lamp Video Collaborative Localization

When a miner enters the bifurcation area of tunnels where several camera nodes have been deployed, camera Ci detects the miner’s lamp and calculates its pixel coordinate Pi(ui,vi). Assume that the cameras that detect the miner’s lamp form a wireless cluster by themselves and are time-synchronized. Transforming the pixel coordinate Pi(ui,vi) with Equation (1) gives the physical coordinate Pci(xci,yci,zci) of the miner’s lamp mapping point in the CCS ocixciycizci. Applying Equation (2) to Pci(xci,yci,zci) then gives the physical coordinate Pwi(xwi,ywi,zwi) of the mapping point in the WCS of the underground coal mine.

Establish a straight line li between the position coordinate of camera Ci(xwci,ywci,zwci) and the physical coordinate Pwi(xwi,ywi,zwi) as

where (xli,yli,zli) is the coordinate of the point on the straight line li in the WCS and si is the parameter of the straight line li.

The three-dimensional (3D) position coordinate of a miner’s lamp in the WCS can be obtained by calculating the intersection of the straight lines between the positions of the cameras and their corresponding mapping points. However, underground tunnels are complex, and camera measurement errors in the position coordinate, yaw angle and pitch angle cannot be avoided. In addition, the orientation of the miner’s lamp may differ with respect to different cameras, which may lead to identification errors in the mapping points because of the beam divergence of the lamp. As a result, the multiple straight lines li between the positions of the cameras and their corresponding mapping points generally do not intersect at one point.

We solve this problem by finding an optimal intersection P in the WCS that minimizes the sum J of the squared distances to the multiple straight lines li as

The extremum problem of Equation (5) can be solved by taking the partial derivatives of J with respect to x, y, z and si, respectively, and setting them equal to 0 as

In this way, we obtain the least squares estimate of the optimal intersection P, which provides the position coordinate of the miner’s lamp in the WCS of the underground coal mine. From the 3D coordinate of the miner’s lamp, the plane position coordinate (x,y) of the miner in a tunnel and the altitude z of the miner’s lamp above the ground can also be obtained.
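The least squares intersection can be illustrated with a short NumPy sketch. It does not reproduce the authors’ derivation term by term. Instead, it uses a standard equivalent closed form: once the line parameters si are eliminated, minimizing the sum of squared point-to-line distances reduces to a 3 × 3 linear system built from the projectors I − d dᵀ, where d is the unit direction of each line. The function name and argument layout are assumptions made for illustration.

```python
import numpy as np

def closest_point_to_lines(origins, points):
    """Least squares 'intersection' of 3D lines.

    origins : camera positions C_i in the WCS, shape (k, 3)
    points  : mapping points P_wi in the WCS, shape (k, 3)
    Each line passes through origins[i] and points[i].
    """
    origins = np.asarray(origins, dtype=float)
    dirs = np.asarray(points, dtype=float) - origins
    dirs = dirs / np.linalg.norm(dirs, axis=1, keepdims=True)  # unit directions
    a = np.zeros((3, 3))
    b = np.zeros(3)
    for c, d in zip(origins, dirs):
        proj = np.eye(3) - np.outer(d, d)  # projector orthogonal to the line
        a += proj
        b += proj @ c
    # Normal equations of min_p sum_i ||(I - d_i d_i^T)(p - c_i)||^2.
    # The system is singular when all lines are parallel.
    return np.linalg.solve(a, b)
```

For example, with the three camera positions of Table 2 as `origins` and noise-free mapping points lying on the rays toward a common lamp position, the function returns that lamp position. With noisy rays, it returns the point closest to all lines in the least squares sense.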

4. Experiments and Performances

4.1. Experiment in a Corridor

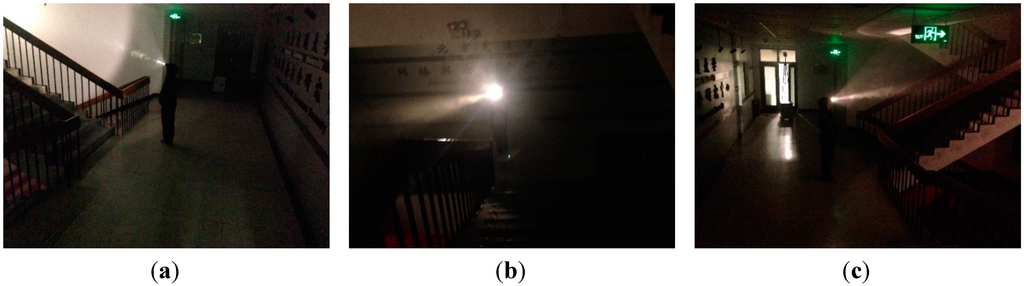

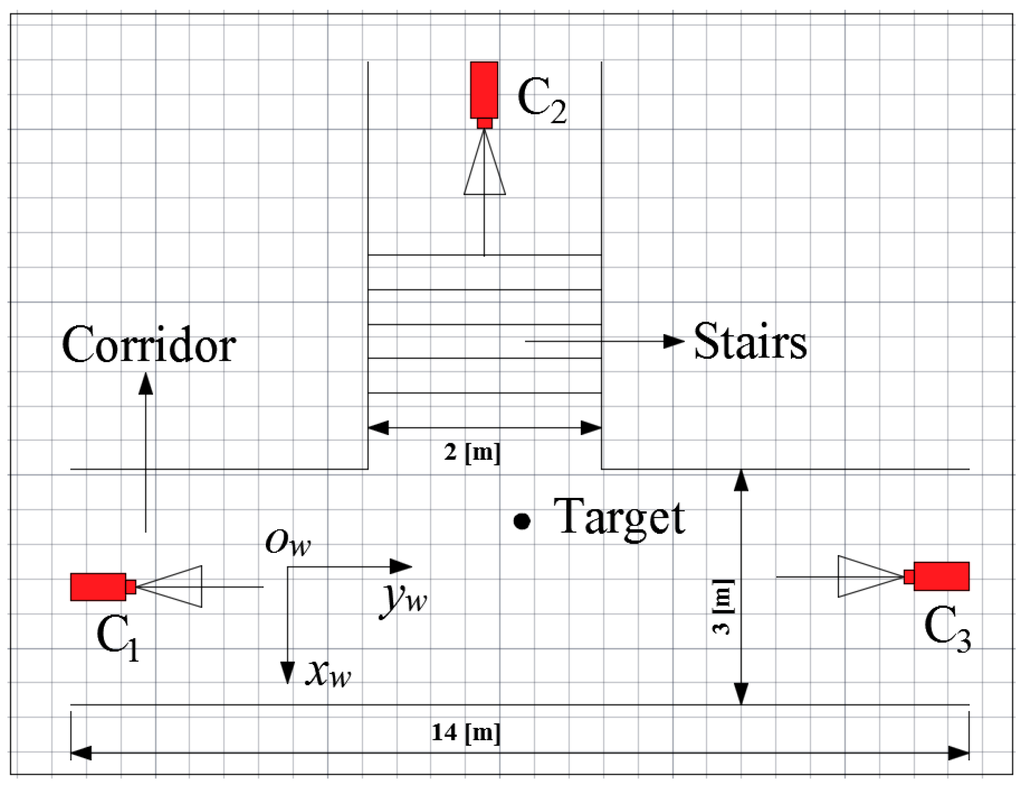

In order to evaluate the performance of the proposed miner’s lamp video collaborative localization algorithm based on underground WMSNs, a test was carried out. A corridor with stairs, shown in Figure 4, was selected to simulate the bifurcation structure of underground tunnels. The lights in the corridor were turned off to simulate insufficiently illuminated tunnels. A smoke machine sprayed smoke in the corridor to simulate tunnels with large amounts of water vapor and dust. The tester wore dark blue overalls and a safety helmet with the miner’s lamp turned on to simulate a miner in an underground tunnel. A World Coordinate System (WCS) was established at the entrance of the corridor, with the transverse direction of the corridor as the xw-axis, the longitudinal direction as the yw-axis and the altitude direction as the zw-axis.

Figure 4. (a) The image of the corridor taken by camera C1; (b) The image of the corridor taken by camera C2; (c) The image of the corridor taken by camera C3.

In the test, three isomorphic TY803-130 cameras were deployed manually on tripods at the top of the corridor. The cameras C1, C2 and C3 were deployed according to Figure 2, and the topology of the experiment is shown in Figure 5. The cameras were wirelessly linked by Wi-Fi with a carrier frequency of 2.4 GHz and the 802.11n transmission protocol. Table 1 lists the intrinsic parameters of the three cameras, including the focal length f, the image resolution M × N and the physical size of the CMOS photosensitive element. Table 2 lists the geometrical parameters of the three cameras, including the world coordinates of the camera locations, the yaw angle φ between the WCS xw-axis and the Camera Coordinate System (CCS) xci-axis in the counterclockwise direction, and the pitch angle θ between the WCS zw-axis and the CCS zci-axis in the counterclockwise direction.

Figure 5. Topology of the experiment.

Table 1. Intrinsic parameters of cameras.

| Parameter | Value |
|---|---|
| f/mm | 6.0 |
| M × N/pixel | 1280 × 960 |
| CMOS/mm | 4.8 × 3.6 |

Table 2. Geometrical parameters of cameras.

| Camera | (xw,yw,zw)/m | φ/° | θ/° |
|---|---|---|---|
| Camera 1 | (0.29, −2.20, 2.32) | 0 | 70 |
| Camera 2 | (−6.33, 2.86, 3.08) | −90 | 64 |
| Camera 3 | (0.15, 8.95, 2.34) | 180 | 70 |

The tester walked into the corridor at a normal pace. Cameras C1, C2 and C3 worked together to monitor the corridor and collected the video information at the same time. At a certain time point, cameras C1, C2 and C3 collected the images of corridor, as is shown in Figure 4a–c, respectively.

Cameras C1, C2 and C3 detected the miner’s lamp from the difference between the current images of their video sequences and the background images. In the experiment, 60% of the maximum grey value of the difference image was selected as the threshold. Cameras C1, C2 and C3 detected the miner’s lamp at intervals of 0.5 s and calculated the pixel coordinates of the mapping points of the miner’s lamp on their own imaging planes. Then, the pixel coordinates were converted to coordinates in the WCS by applying Equations (1) and (2). In this way, three 3D straight lines between cameras C1, C2 and C3 and their corresponding mapping points of the miner’s lamp could be established in the WCS.

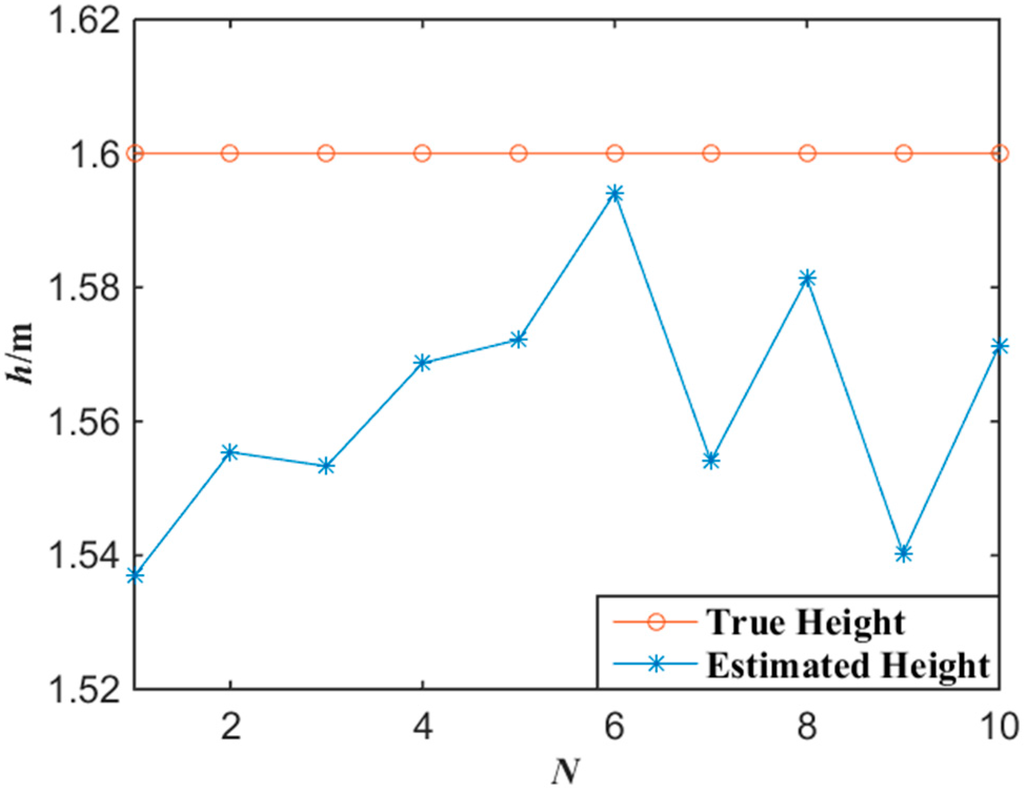

By applying Equations (5) and (6) to calculate the optimum intersection of the three straight lines, the 3D position coordinate of the miner’s lamp in the WCS could be obtained, which also provided both the plane coordinate of the tester in the corridor and the altitude of the miner’s lamp above the corridor ground. Ten gauge points on the ground of the corridor were marked and measured to assess the localization precision of the miner’s lamp. The tester walked past these 10 gauge points and stayed for one second at each of them. By applying the proposed algorithm, cameras C1, C2 and C3 could locate the tester’s lamp automatically at intervals of 0.5 s when the tester passed each gauge point.

In order to compare the estimated localization of the tester’s lamp with the actual location of the tester’s lamp, the error of altitude localization is defined as

where hr(i) is the actual altitude of the tester’s lamp from the tunnel ground and hl(i) is the altitude of the tester’s lamp obtained by the proposed algorithm. Thus, the average error of the altitude localization is defined as

where N is the number of gauge points in the test.

Similarly, the error of plane localization which includes errors both in x-axis and y-axis is defined as

where is the actual plane location of the tester’s lamp and is the estimated plane coordinates with the proposed algorithm. Thus, the average error of the plane localization is defined as

where N is the number of gauge points in the test.
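The error measures described above can be computed as in the following sketch. Since the defining expressions are not reproduced in the text, the sketch assumes the most common forms: the altitude error is taken as the absolute difference between the actual and estimated altitudes, the plane error as the Euclidean distance between the actual and estimated (x, y) positions, and the average errors as arithmetic means over the N gauge points. All function names are illustrative.

```python
import math

def altitude_errors(actual, estimated):
    """Per-gauge-point altitude error, assumed to be |h_r(i) - h_l(i)|."""
    return [abs(hr - hl) for hr, hl in zip(actual, estimated)]

def plane_errors(actual_xy, estimated_xy):
    """Per-gauge-point plane error, assumed to be the Euclidean distance in x-y."""
    return [math.hypot(xr - xl, yr - yl)
            for (xr, yr), (xl, yl) in zip(actual_xy, estimated_xy)]

def average_error(errors):
    """Arithmetic mean of the errors over the N gauge points."""
    return sum(errors) / len(errors)
```

For instance, `average_error(plane_errors(actual_xy, estimated_xy))` gives the average plane localization error over all gauge points, in the same length unit as the inputs.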

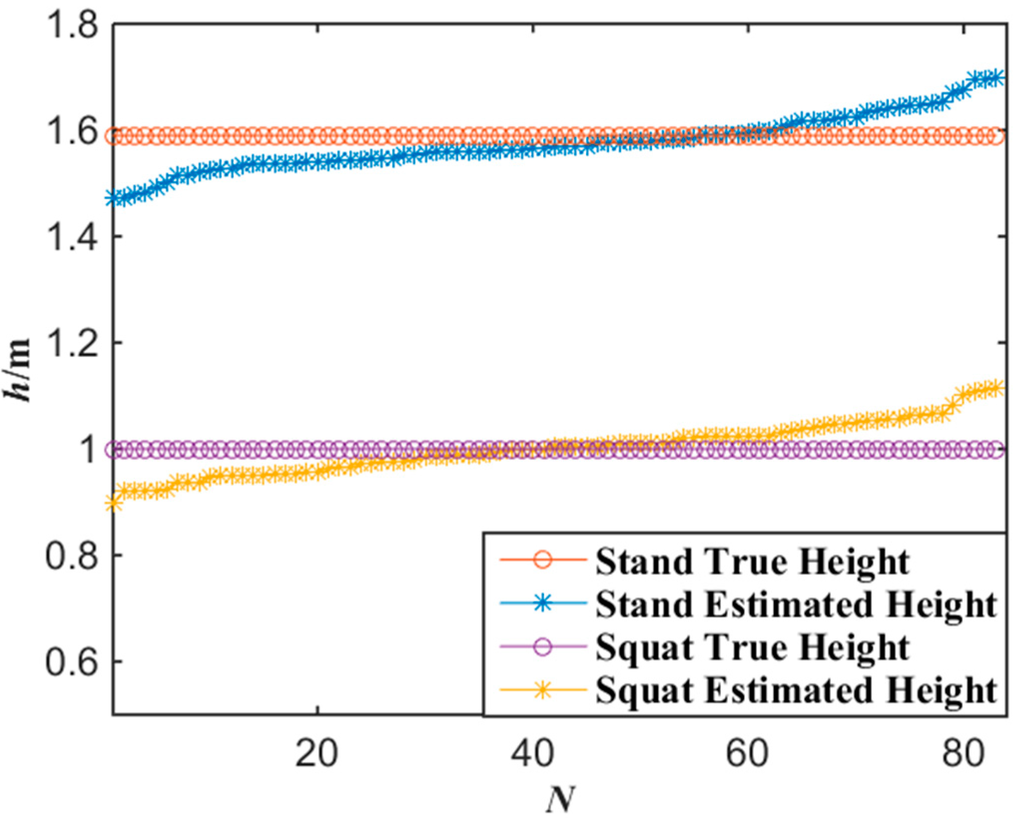

Figure 6 shows the experimental results of the altitude localization of tester’s lamp with the proposed algorithm. According to the experimental data of Figure 6, the average error of the altitude localization of the tester is 3.7 cm by applying Equations (7) and (8).

Figure 6. Experimental data of altitude with the miner’s lamp video collaborative localization.

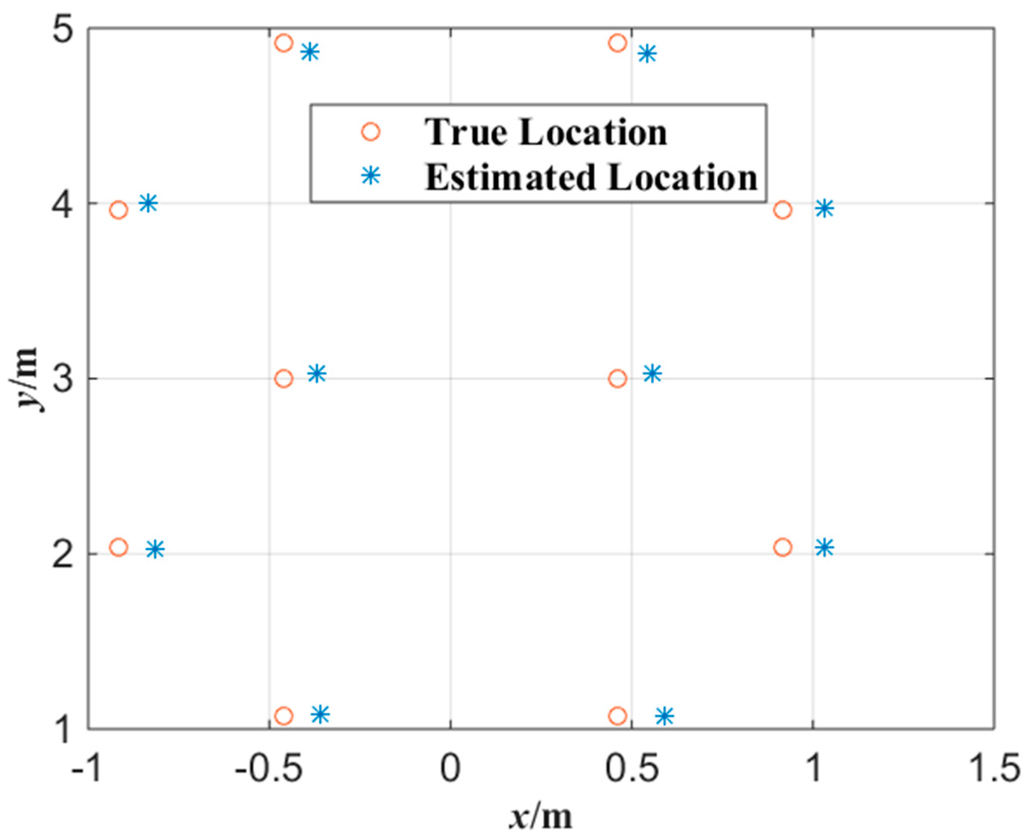

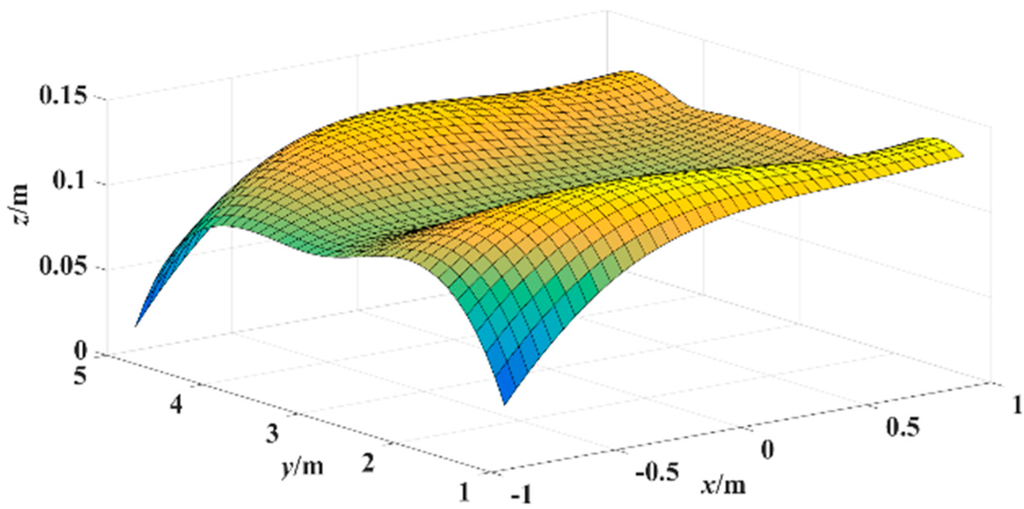

Figure 7 shows the experimental results of the plane localization of tester’s lamp with the proposed algorithm where the tester stood at each gauge point passed. According to the experimental data of Figure 7, the error surface of plane localization is shown in Figure 8 which applies a linear interpolation algorithm to the error of plane localization calculated with Equation (9). In Figure 8, x-y plane is the corridor ground, (x,y) coordinate represents the tester’s actual location, and coordinate z represents the error of plane localization between the locations estimated and the actual locations. As shown in Figure 8, the average error of the plane localization is around 10 cm with the proposed algorithm.

Figure 7. Experimental data of plane localization with the miner’s lamp video collaborative localization.

Figure 8. Error surface of plane localization with the miner’s lamp video collaborative localization.

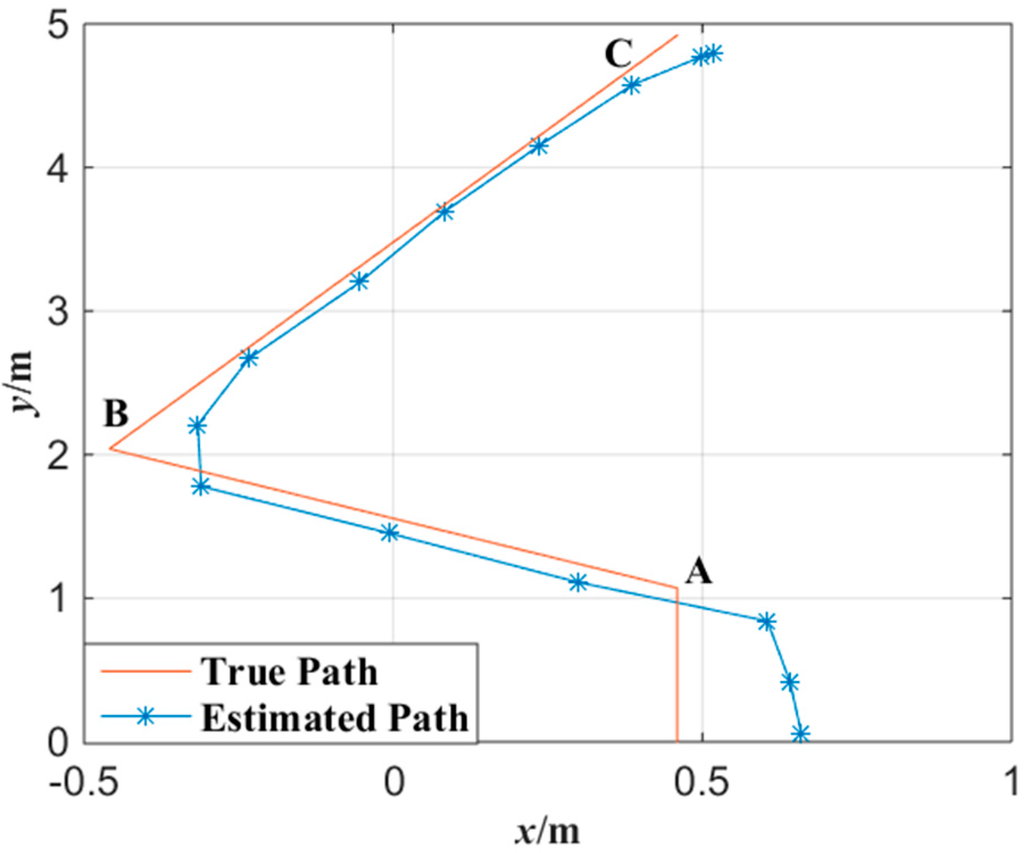

In order to evaluate the performance of the miner’s lamp collaborative localization algorithm under the condition that the miner moves continuously, cameras C1, C2 and C3 were also applied to detect the movement of the tester in the corridor. The tester walked straight into the corridor from the entrance at a normal pace. Then, the tester turned about 45° at point A and walked about 1.5 m to point B. After that, the tester turned again at point B and walked toward point C. Figure 9 shows the tester’s actual motion trajectory as well as the estimated motion trajectory with the proposed algorithm at interval of 0.5 s. In Figure 9, the motion trajectory estimated is obtained by connecting two adjacent tester’s locations with line segments.

Figure 9. Trajectory tracked with the miner’s lamp video collaborative localization.

In the bottom right of Figure 9, it can be observed that the location error is larger when the tester was just entering the corridor. This is because, at that moment, the mapping points of the tester’s cap-lamp were on the edges of the imaging planes of cameras C1, C2 and C3 at the same time. Due to the non-linear relationship between object and image at the edge of the image [29], the mapping points of the tester’s cap-lamp on the imaging planes are distorted to some extent, which leads to a larger location error. However, the maximum error of the trajectory tracking is about 20 cm, which is still acceptable for tracking a miner. In contrast, the location error is smaller when the tester was around the central area of the corridor. This is because the images of cameras C1, C2 and C3 have a good linear relationship when the miner’s lamp is around the central area, which results in smaller errors.

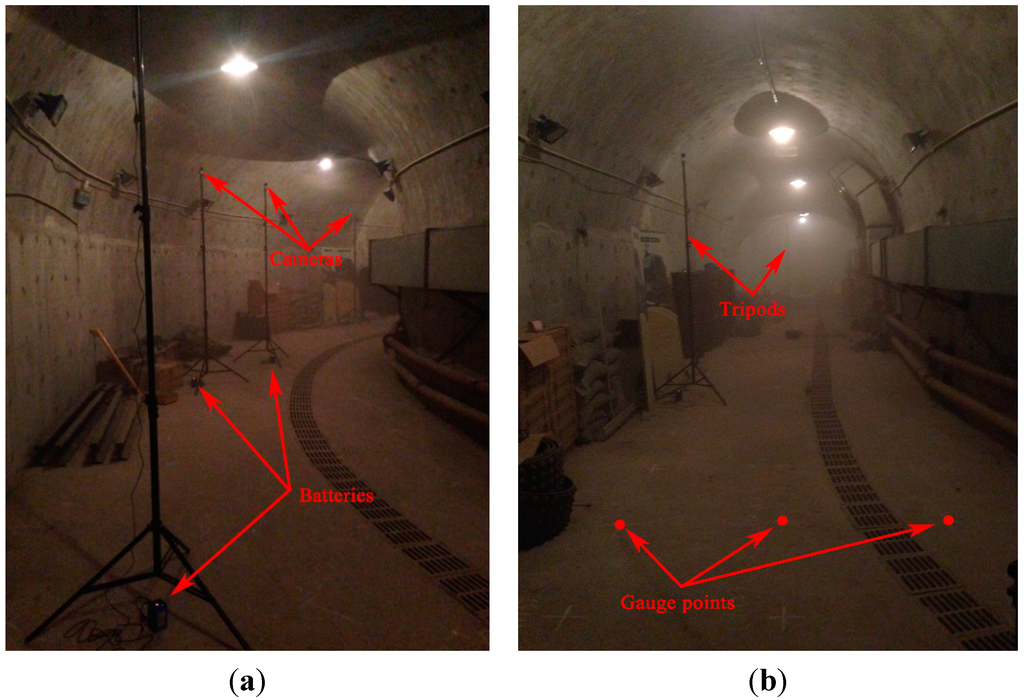

4.2. Experiment in an Underground Tunnel

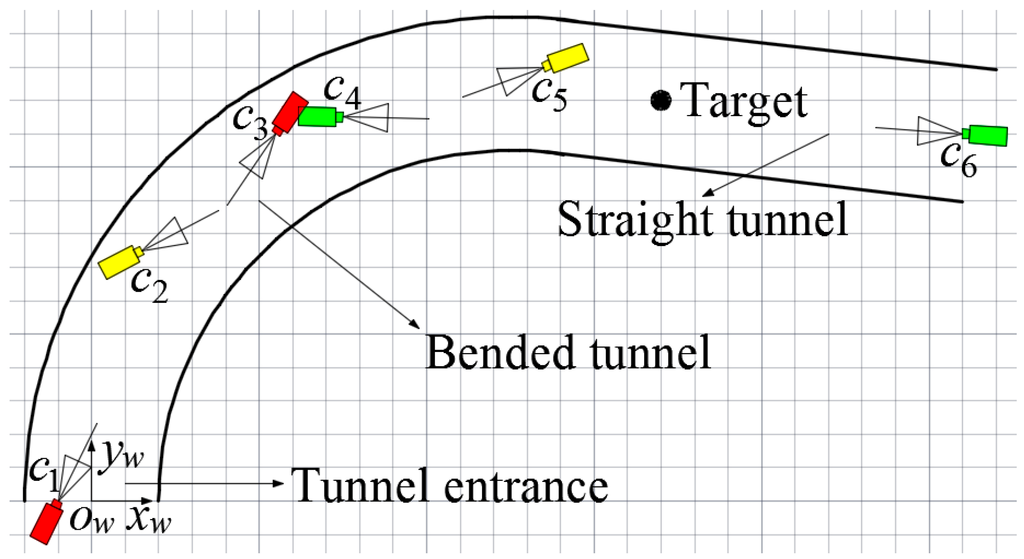

In order to evaluate the performance of the proposed algorithm in a realistic scenario, a test was also carried out in an underground tunnel. The test was performed in the underground tunnel of the Track Vibration Abatement and Control Laboratory at Beijing Jiaotong University, as shown in Figure 10. The tunnel has a horseshoe-shaped cross-section, and both its width and its height are 4 m. In the test, six isomorphic TY803-130 cameras were deployed manually on tripods at the top of the tunnel. The cameras C1, C2, C3, C4, C5 and C6 were powered by lithium batteries.

Figure 10 and Figure 11 show the actual deployment of the cameras and the topology of the experiment, respectively. A World Coordinate System (WCS) was established at the entrance of the tunnel, with the transverse direction of the tunnel as the xw-axis, the longitudinal direction as the yw-axis and the altitude direction as the zw-axis. As shown in Figure 11, the six isomorphic cameras were divided into three groups, and the two cameras in each group were deployed facing each other: cameras C1 and C3 formed the first group, cameras C2 and C5 the second group and cameras C4 and C6 the third group. Cameras C1 and C3 were deployed crosswise along the center line of the bent tunnel to monitor the area of the tunnel entrance together. In the same way, cameras C2 and C5 were deployed crosswise along the center line of the bent tunnel to monitor the bent section together. Similarly, cameras C4 and C6 were deployed crosswise along the longitudinal direction of the straight tunnel to monitor the straight section together.

Figure 10. (a) The actual deployment of cameras; (b) Gauge points on the ground of the tunnel.

Figure 11. Topology of the experiment.

The intrinsic parameters of the six cameras, including the focal length f, the image resolution M × N and the physical size of the CMOS photosensitive element, are listed in Table 1. Table 3 lists the geometrical parameters of the six cameras, including the world coordinates of the camera locations, the yaw angle φ between the WCS xw-axis and the CCS xci-axis in the counterclockwise direction, and the pitch angle θ between the WCS zw-axis and the CCS zci-axis in the counterclockwise direction.

Table 3. Geometrical parameters of cameras.

| Camera | (xw,yw,zw)/m | φ/° | θ/° |
|---|---|---|---|
| Camera 1 | (−1.00, 0, 2.87) | −26.6 | 68.0 |
| Camera 2 | (1.50, 7.50, 2.90) | −62.7 | 67.4 |
| Camera 3 | (5.50, 11.00, 2.88) | 146.1 | 70.6 |
| Camera 4 | (7.50, 11.50, 2.90) | −91.2 | 71.8 |
| Camera 5 | (13.50, 13.00, 2.87) | 110.1 | 71.2 |
| Camera 6 | (26.00, 11.00, 2.85) | 86.0 | 72.1 |

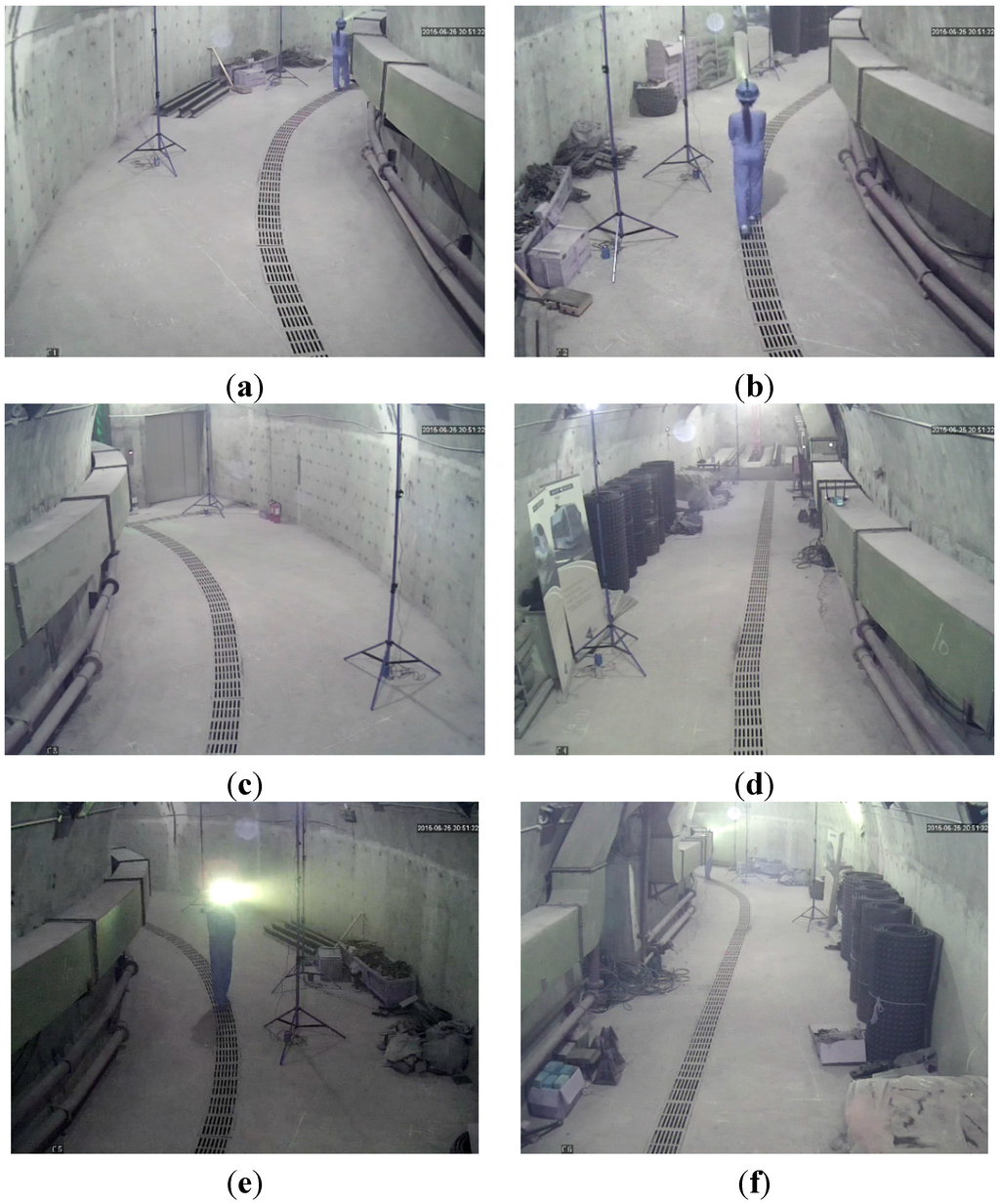

In the test, the tester wore dark blue overalls and a safety helmet with the miner’s lamp turned on to simulate a miner in an underground tunnel. The tester walked into the tunnel at a normal pace. Cameras C1, C2, C3, C4, C5 and C6 worked together to monitor the tunnel and collected video information at the same time. Figure 12a–f show the images of the tunnel collected by cameras C1, C2, C3, C4, C5 and C6 at a certain time point, respectively.

Cameras C1 and C3, cameras C2 and C5, and cameras C4 and C6 each detected the miner’s lamp from the difference between the current images of their video sequences and the background images. In the experiment, 60% of the maximum grey value of the difference image was selected as the threshold. Cameras C1, C2, C3, C4, C5 and C6 detected the miner’s lamp at intervals of 0.5 s and calculated the pixel coordinates of the mapping points of the miner’s lamp on their own imaging planes. Then, the pixel coordinates were converted to coordinates in the WCS by applying Equations (1) and (2).

Therefore, when the two cameras of a group detected the miner’s lamp simultaneously, two 3D straight lines between the two cameras and their corresponding mapping points of the miner’s lamp could be established in the WCS. By applying Equations (5) and (6) to calculate the optimum intersection of the two straight lines, the 3D position coordinate of the miner’s lamp in the WCS could be obtained, which provided both the plane coordinate of the tester along the tunnel and the altitude of the miner’s lamp above the tunnel ground.

Figure 12. (a) The image of the tunnel taken by camera C1; (b) The image of the tunnel taken by camera C2; (c) The image of the tunnel taken by camera C3; (d) The image of the tunnel taken by camera C4; (e) The image of the tunnel taken by camera C5; (f) The image of the tunnel taken by camera C6.

In order to evaluate the localization precision of the proposed algorithm when applied to the realistic scenario of underground tunnel, dozens of gauge points had been marked on the ground of the tunnel, as shown in Figure 10b. Gauge points formed grids with horizontal and vertical spacing of one meter (1 m) on the ground of the tunnel.

In the test, the tester walked and passed these gauge points. The tester would stand and squat about one second (1 s) at each gauge point passed, respectively. The coordinates of gauge points in the WCS and the altitude of the miner’s lamp from the tunnel ground when the tester stood and squatted had been measured manually which provided actual locations of tester’s lamp. When the tester could be located by cameras of two groups simultaneously, the average value of localizations of the two groups was selected as the localization result of the tester’s lamp.

Figure 13 shows the experimental results of the altitude localization of the tester’s lamp with the proposed algorithm, where the tester stood and squatted at each gauge point passed. According to the experimental data of Figure 13, with 83 gauge points, the average errors of the altitude localization for the upright and squatting postures of the tester are 4.7 cm and 3.9 cm, respectively, by applying Equations (7) and (8). Generally, when the error of altitude localization is less than 10 cm, a miner’s standing or squatting posture can be correctly distinguished. Thus, an altitude localization error of 10 cm is acceptable. If the altitude of a miner’s lamp is similar to normal human height, the miner is walking or standing. However, if the altitude of a miner’s lamp is much lower than normal human height, the miner may be in another posture, for example squatting. Whatever posture a miner adopts, the altitude of the miner’s lamp can be obtained with the proposed algorithm, and the posture of the miner can then be analyzed.

Figure 13. Experimental data of altitude with the miner’s lamp video collaborative localization.

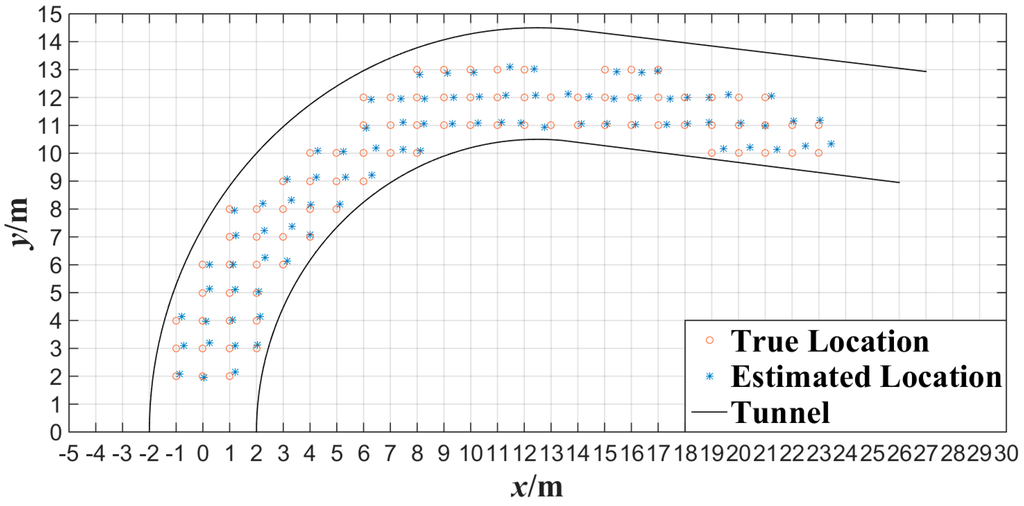

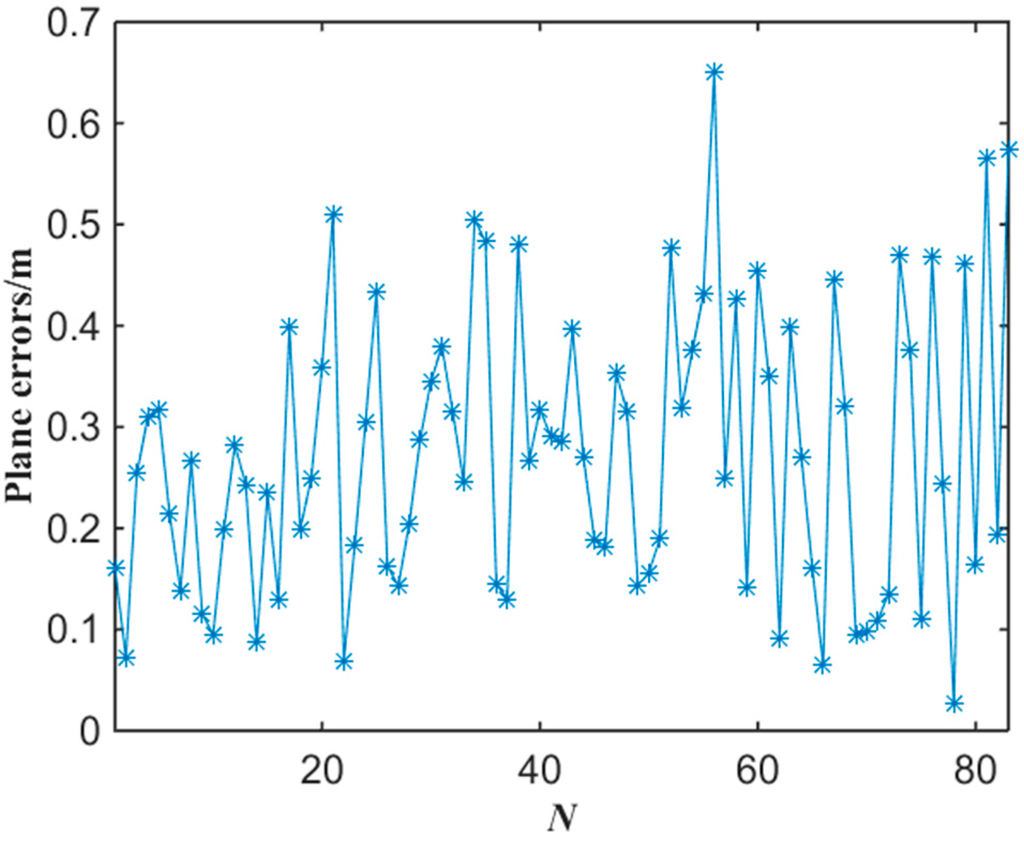

Figure 14 shows the experimental results of the plane localization of the tester’s lamp with the proposed algorithm, where the tester stood at each gauge point passed. Note that the grid intersections in Figure 14 are the gauge points, with 1 m spacing. Most of the estimated location points have errors in the same direction relative to the gauge points in Figure 14. This is mainly because the tester’s lamp always pointed away from the tunnel entrance while the tester walked in the test. According to the experimental data of Figure 14, the error of plane localization for the upright posture of the tester can be obtained by applying Equation (9), as shown in Figure 15. With 83 gauge points, the average error of the plane localization for the upright posture of the tester is 27.4 cm by applying Equation (10). Similarly, when the error of plane localization is less than 50 cm, there is no obvious deviation in the estimated plane location or trajectory of miners along tunnels. The experimental results of Figure 14 and Figure 15 indicate that the plane coordinates of the tester on the ground of a tunnel can be estimated with sufficient precision by the proposed algorithm. It is worth mentioning that reliable recognition of a miner’s lamp can be ensured by adjusting the threshold appropriately. However, since the miner’s lamp is generally much brighter than the background under different background illumination, such as in Figure 4 and Figure 10, 60% of the maximum grey value of the difference image was selected as the threshold in both scenarios, which provided good recognition and localization accuracy.

Figure 14.

Experimental data of plane localization with the miner’s lamp video collaborative localization.

Figure 15.

The error of plane localization with the miner’s lamp video collaborative localization.

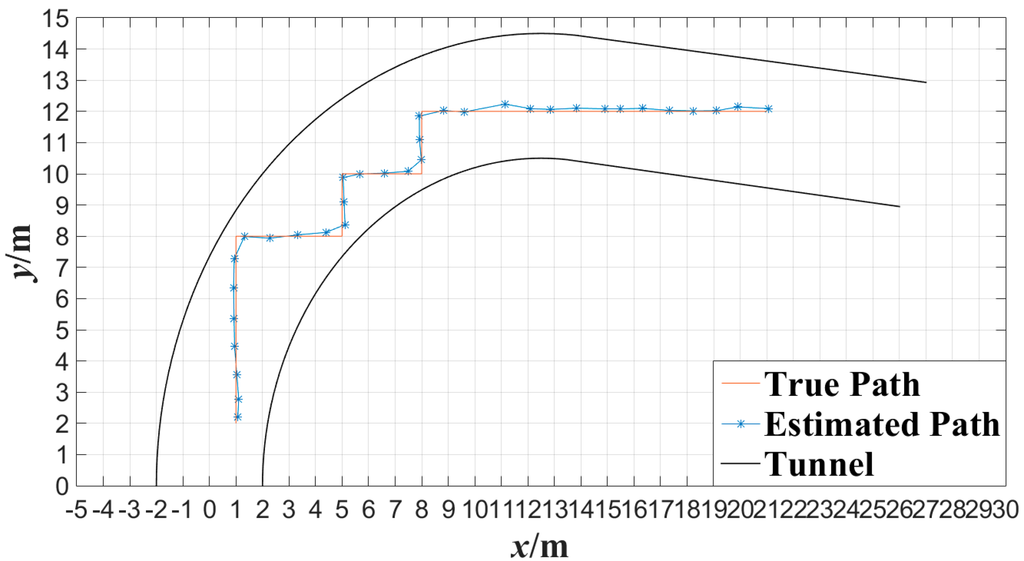

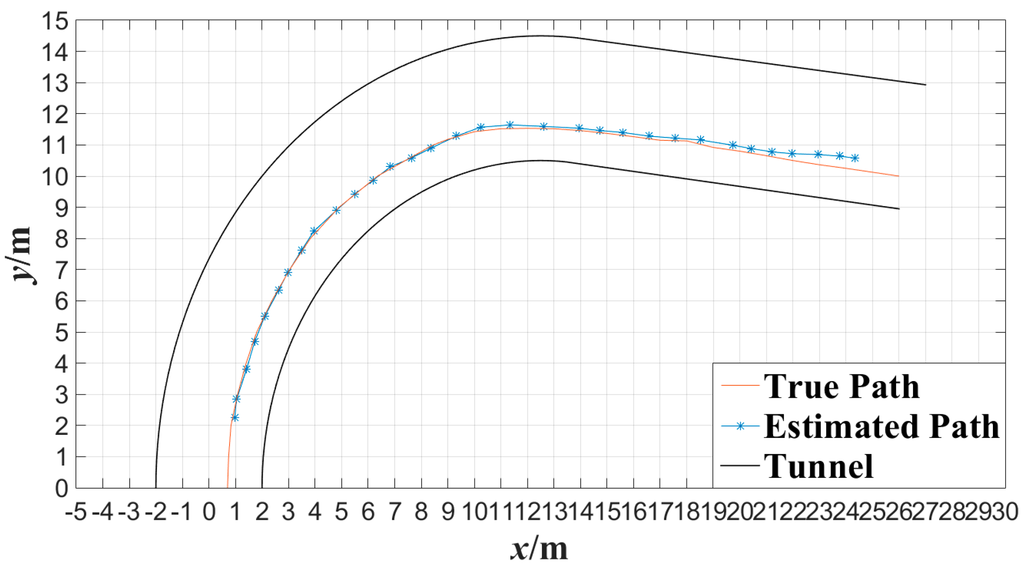
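The plane localization error reported in Figure 15 can be sketched as follows. This is a minimal illustration that assumes Equation (9) is the Euclidean distance between the estimated and the gauge-point plane coordinates and that Equation (10) is its average over all gauge points. The function names and sample coordinates are hypothetical.

```python
import math


def plane_error(estimated_xy, gauge_xy):
    """Euclidean distance in the ground plane (assumed form of Eq. (9))."""
    return math.hypot(estimated_xy[0] - gauge_xy[0],
                      estimated_xy[1] - gauge_xy[1])


def mean_plane_error(estimates, gauges):
    """Average plane error over all gauge points (assumed form of Eq. (10))."""
    if len(estimates) != len(gauges):
        raise ValueError("estimates and gauges must have the same length")
    errors = [plane_error(e, g) for e, g in zip(estimates, gauges)]
    return sum(errors) / len(errors)


# Illustrative coordinates in metres (not measured data).
estimates = [(1.3, 2.4), (2.0, 3.0)]
gauges = [(1.0, 2.0), (2.0, 3.0)]
average_m = mean_plane_error(estimates, gauges)
print(average_m, average_m < 0.5)  # compare against the 50 cm tolerance
```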

To evaluate the performance of the proposed algorithm when a miner moves continuously, cameras C1, C2, C3, C4, C5 and C6 were also used to detect the movement of the tester in the tunnel. The tester walked along a 90° broken line and along a curved line in the tunnel at a normal pace. Figure 16 shows the tester’s actual motion trajectory along the 90° broken line as well as the motion trajectory estimated with the proposed algorithm at intervals of 0.5 s. Figure 17 shows the tester’s actual motion trajectory along the curved line as well as the motion trajectory estimated with the proposed algorithm at intervals of 0.5 s. In Figure 16 and Figure 17, the estimated motion trajectory is obtained by connecting each pair of adjacent tester locations with a line segment. From Figure 16 and Figure 17, it can be observed that the motion trajectory of a miner can be estimated accurately by locating the miner continuously with the proposed miner’s lamp video collaborative localization algorithm. In this way, a miner can be tracked along tunnels.

Figure 16.

Trajectory of broken line tracked with the miner’s lamp video collaborative localization.

Figure 17.

Trajectory of curved line tracked with the miner’s lamp video collaborative localization.
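The way the estimated trajectories in Figure 16 and Figure 17 are built from successive locations can be sketched as follows. This is a minimal illustration: only the 0.5 s sampling interval comes from the text, while the function names, the sample coordinates and the derived mean speed are hypothetical additions.

```python
import math


def trajectory_segments(locations):
    """Connect each pair of adjacent locations with a line segment."""
    return list(zip(locations, locations[1:]))


def path_length(locations):
    """Total length of the polyline through the estimated locations."""
    return sum(math.dist(p, q) for p, q in trajectory_segments(locations))


SAMPLE_INTERVAL_S = 0.5  # localization interval stated in the text

# Illustrative plane coordinates in metres (not measured data):
# a short walk with a 90 degree turn.
locations = [(0.0, 0.0), (0.6, 0.0), (0.6, 0.8)]
segments = trajectory_segments(locations)
duration_s = SAMPLE_INTERVAL_S * len(segments)
print(len(segments), path_length(locations), path_length(locations) / duration_s)
```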

5. Conclusions

Wireless multimedia sensor networks (WMSNs) will improve the monitoring performance of underground coal mines significantly. Based on WMSNs deployed in an underground coal mine, a miner’s lamp video collaborative localization algorithm was proposed to locate miners in the bifurcated structures of underground tunnels.

- (1) To detect a miner’s lamp, the background difference method is applied to obtain the difference between the background image of the underground tunnel and the current image of the collected video sequence.

- (2) A least squares method is proposed to find the optimal intersection, which solves the problem that the multiple straight lines between the positions of the cameras and their corresponding mapping points generally do not intersect at one point.

- (3) The experimental results in a corridor indicate that the average error of the altitude localization is 3.7 cm, and the average error of the plane localization is about 10 cm with the proposed algorithm. The experimental results in an underground tunnel indicate that the average errors of the altitude localization for the upright and squatting postures of the tester are 4.7 cm and 3.9 cm, respectively, and the average error of the plane localization for the upright posture of the tester is 27.4 cm with the proposed algorithm.
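The least squares intersection in conclusion (2) can be sketched as follows. This is a minimal illustration of one common formulation, which finds the point that minimizes the summed squared distance to all lines. It is not necessarily the exact derivation used in the paper, and the function names and the camera geometry are hypothetical.

```python
import math


def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("degenerate geometry: lines are (nearly) parallel")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - tail) / M[r][r]
    return x


def least_squares_intersection(camera_positions, directions):
    """Point minimizing the summed squared distance to all lines.

    Line i passes through camera_positions[i] along directions[i].
    """
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for a, d in zip(camera_positions, directions):
        norm = math.sqrt(sum(c * c for c in d))
        u = [c / norm for c in d]
        for r in range(3):
            for c in range(3):
                # (I - u u^T) projects onto the plane perpendicular to the line.
                m = (1.0 if r == c else 0.0) - u[r] * u[c]
                A[r][c] += m
                b[r] += m * a[c]
    return solve_linear(A, b)


# Illustrative geometry only: three hypothetical cameras looking at one lamp.
lamp = (1.0, 2.0, 1.7)
cameras = [(0.0, 0.0, 3.0), (4.0, 0.0, 3.0), (0.0, 5.0, 3.0)]
rays = [tuple(l - c for l, c in zip(lamp, cam)) for cam in cameras]
estimate = least_squares_intersection(cameras, rays)
print([round(v, 3) for v in estimate])
```

When the lines do meet at one point, this returns that point. When measurement noise leaves them skew, it returns the point closest to all of them in the least squares sense, which is the situation the conclusion describes.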

Acknowledgments

This work was supported by the Natural Science Foundation of China under Grant (51474015) and the National Science & Technology Pillar Program (2013BAK06B03).

Author Contributions

The work was carried out in collaboration between all authors. Kaiming You, Wei Yang and Ruisong Han conceived and designed the experiments. Wei Yang contributed materials. Kaiming You performed the experiments. Kaiming You and Wei Yang analyzed the data. Kaiming You wrote the paper. Each author contributed with expertise in their domain. All authors have contributed to, seen and approved the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Xu, J.; Yang, W.; Zhang, L.; Han, R.; Shao, X. Multi-Sensor Detection with Particle Swarm Optimization for Time-Frequency Coded Cooperative WSNs Based on MC-CDMA for Underground Coal Mines. Sensors 2015, 15, 21134–21152.

- Zhang, Y.; Yang, W.; Han, D.; Kim, Y.I. An Integrated Environment Monitoring System for Underground Coal Mines—Wireless Sensor Network Subsystem with Multi-Parameter Monitoring. Sensors 2014, 14, 13149–13170.

- Pei, Z.; Deng, Z.; Xu, S.; Xu, X. Anchor-Free Localization Method for Mobile Targets in Coal Mine Wireless Sensor Networks. Sensors 2009, 9, 2836–2850.

- Sun, J. Technologies of Monitoring and Communication in the Coal Mine. J. China Coal Soc. 2010, 35, 1925–1929. (In Chinese)

- Yang, W.; Feng, X.; Cheng, S.; Sun, J. The Theories and Key Technologies for the New Generation Mine Wireless Information System. J. China Coal Soc. 2004, 29, 506–509. (In Chinese)

- Almalkawi, I.T.; Zapata, M.G.; Al-Karaki, J.N.; Morollo-Pozo, J. Wireless Multimedia Sensor Networks: Current Trends and Future Directions. Sensors 2010, 10, 6662–6717.

- Akyildiz, I.F.; Melodia, T.; Chowdhury, K.R. A Survey on Wireless Multimedia Sensor Networks. Comput. Netw. 2007, 51, 921–960.

- Akyildiz, I.F.; Melodia, T.; Chowdhury, K.R. Wireless Multimedia Sensor Networks: Applications and Testbeds. Proc. IEEE 2008, 96, 1588–1605.

- Zhao, X.; Wang, Z.; Zhao, K. Research on Distributed Image Compression Algorithm in Coal Mine WMSN. International Journal of Digital Content Technology and Its Applications. Adv. Inst. Converg. Inf. Technol. 2011, 5, 283–291.

- De, D.; Gupta, M.D.; Sen, A. Energy Efficient Target Tracking Mechanism Using Rotational Camera Sensor in WMSN. Proced. Technol. 2012, 6, 674–681.

- Qi, M.; Zhang, R.; Jiang, J.; Li, X. Moving Object Localization with Single Camera Based on Height Model in Video Surveillance. In Proceedings of the 1st International Conference on Bioinformatics and Biomedical Engineering, Wuhan, China, 6–8 July 2007; pp. 490–493.

- Öztarak, H.; Akkaya, K.; Yazici, A. Lightweight Object Localization with a Single Camera in Wireless Multimedia Sensor Networks. In Proceedings of the Global Telecommunications Conference, Honolulu, HI, USA, 30 November–4 December 2009; pp. 1–6.

- Zhang, B.; Luo, H.; Liu, J.; Zhao, F.; Liu, S.; Lin, Y. Passive Target Localization Algorithm for Multimedia Sensor Networks. J. Southeast Univ. Nat. Sci. Edit. 2011, 41, 266–269. (In Chinese)

- Liu, L.; Zhang, X.; Ma, H. Optimal Node Selection for Target Localization in Wireless Camera Sensor Networks. IEEE Trans. Veh. Technol. 2010, 59, 3562–3576.

- Liu, L.; Ma, H.; Zhang, X. Collaborative Target Localization in Camera Sensor Networks. In Proceedings of the IEEE Wireless Communications and Networking Conference, Las Vegas, NV, USA, 31 March–3 April 2008; pp. 2403–2407.

- Li, W.; Portilla, J.; Moreno, F.; Liang, G.; Riesgo, T. Improving Target Localization Accuracy of Wireless Visual Sensor Networks. In Proceedings of the 37th IEEE Industrial Electronics Conference, Melbourne, Australia, 7–10 November 2011; pp. 3814–3819.

- Lin, Q.; Zeng, X.; Jiang, X.; Jin, X. Video Nodes Based Collaborative Object Localization Framework in Wireless Multimedia Sensor Networks. Equip. Manuf. Technol. Autom. 2011, 317, 1078–1083.

- Karakara, M.; Qi, H. Collaborative Localization in Visual Sensor Networks. ACM Trans. Sens. Netw. 2014, 10, 127–146.

- Boulanouar, T.; Lohier, S.; Rachedi, A.; Roussel, G. A Collaborative Tracking Algorithm for Communicating Target in Wireless Multimedia Sensor Networks. In Proceedings of the Wireless and Mobile Networking Conference, Vilamoura, Portugal, 20–22 May 2014; pp. 1–7.

- Chilgunde, A.; Kumar, P.; Ranganath, S.; Huang, W. Multi-Camera Target Tracking in Blind Regions of Cameras with Non-Overlapping Fields of View. In Proceedings of the British Machine Vision Conference (BMVC), London, UK, 7–9 September 2004; pp. 397–406.

- Sun, J.; Jia, N. Human Target Matching and Visual Tracking Method in Coal Mine. J. China Univ. Min. Technol. 2015, 44, 525–533. (In Chinese)

- Pescaru, D.; Curiac, D.I. Anchor Node Localization for Wireless Sensor Networks Using Video and Compass Information Fusion. Sensors 2014, 14, 4211–4224.

- Tron, R.; Terzis, A.; Vidal, R. Distributed Consensus Algorithms for Image-Based Localization in Camera Sensor Networks; Springer London: London, UK, 2011; pp. 289–302.

- Zhang, B.; Liu, J.; Luo, H. Monocular Vision-Based Distributed Node Localization for Wireless Multimedia Sensor Networks. In Proceedings of the 2nd International Congress on Image & Signal, Tianjin, China, 17–19 October 2009; pp. 1–5.

- Elhamifar, E.; Vidal, R. Distributed Calibration of Camera Sensor Networks. In Proceedings of the Third ACM/IEEE International Conference on Distributed Smart Cameras, Como, Italy, 30 August–2 September 2009; pp. 1–7.

- Barton-Sweeney, A.; Lymberopoulos, D.; Savvides, A. Sensor Localization and Camera Calibration in Distributed Camera Sensor Networks. Broadband Communications. In Proceedings of the 3rd International Conference on Networks and Systems, San Jose, CA, USA, 1–5 October 2006; pp. 1–10.

- Piccardi, M. Background Subtraction Techniques: A Review. IEEE Int. Conf. Syst. Man Cybern. 2004, 4, 3099–3104.

- Heikkilä, J.; Silvén, O. A Four-Step Camera Calibration Procedure with Implicit Image Correction. In Proceedings of IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Puerto Rico, Territory, 17–19 January 1997; pp. 1106–1112.

- Douxchamps, D.; Chihara, K. High-Accuracy and Robust Localization of Large Control Markers for Geometric Camera Calibration. IEEE Trans. Pattern Anal. Mach. Intel. 2009, 31, 376–383.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).