In Silico Prediction of Drug-Induced Liver Injury Based on Ensemble Classifier Method

Abstract

1. Introduction

2. Results

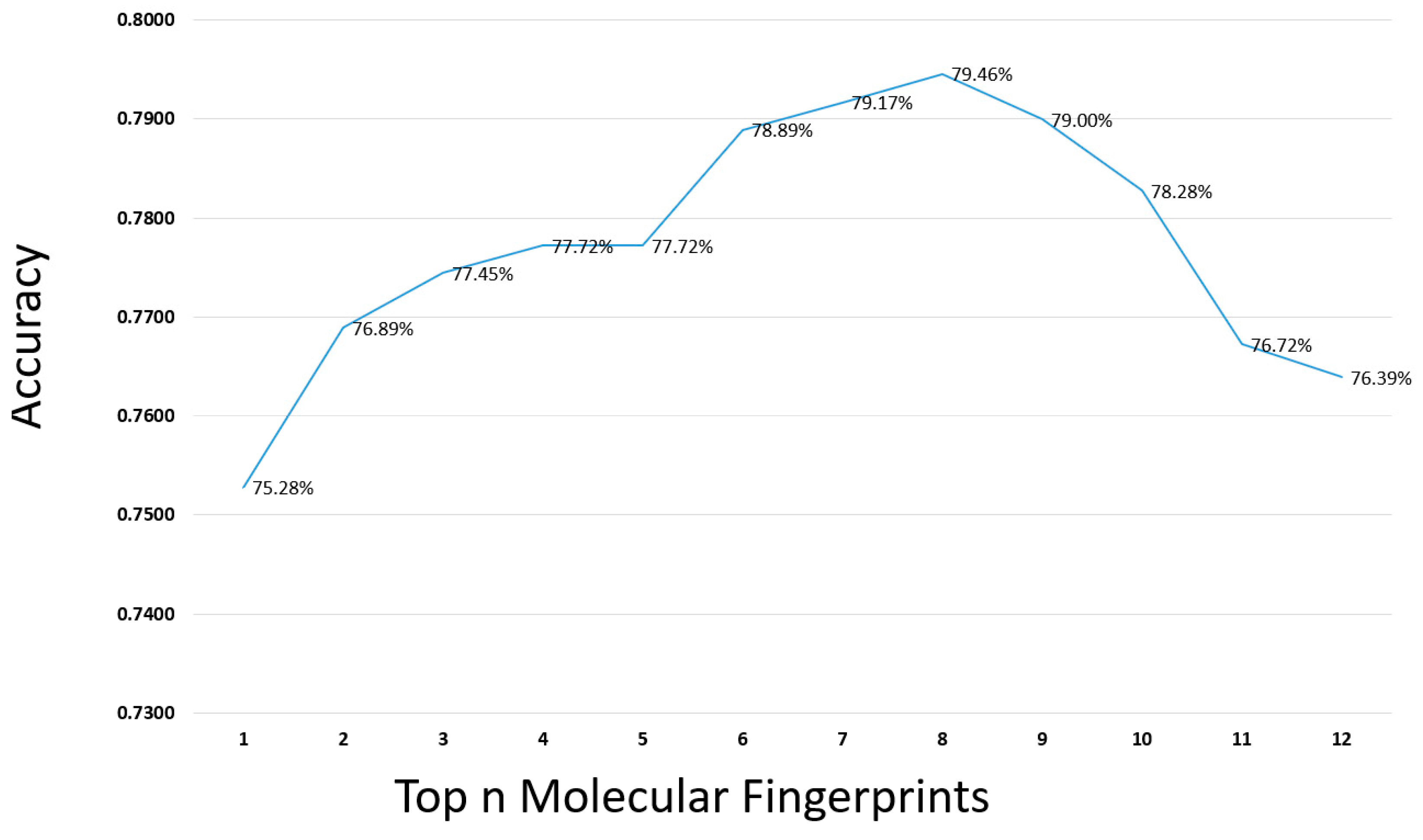

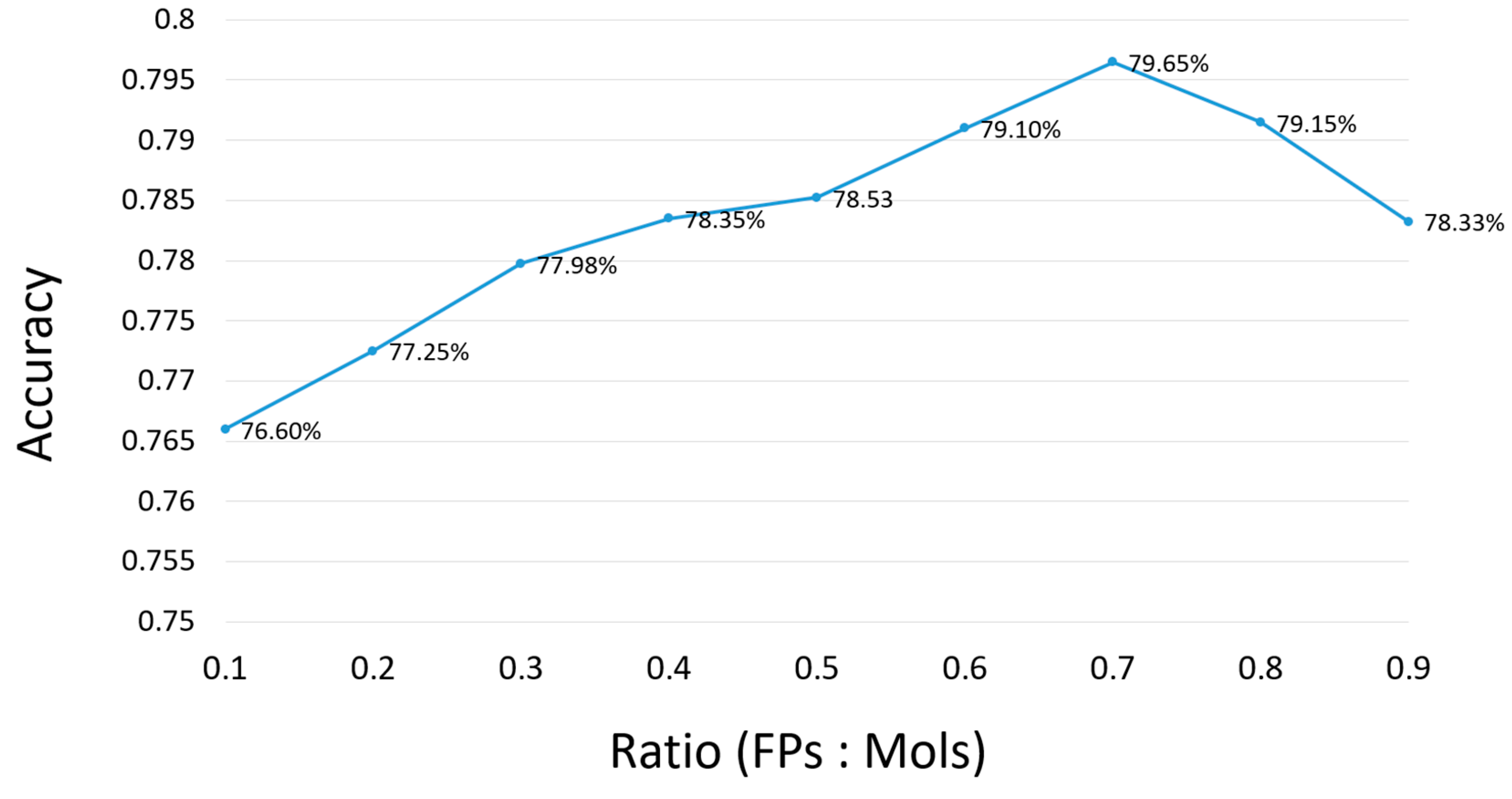

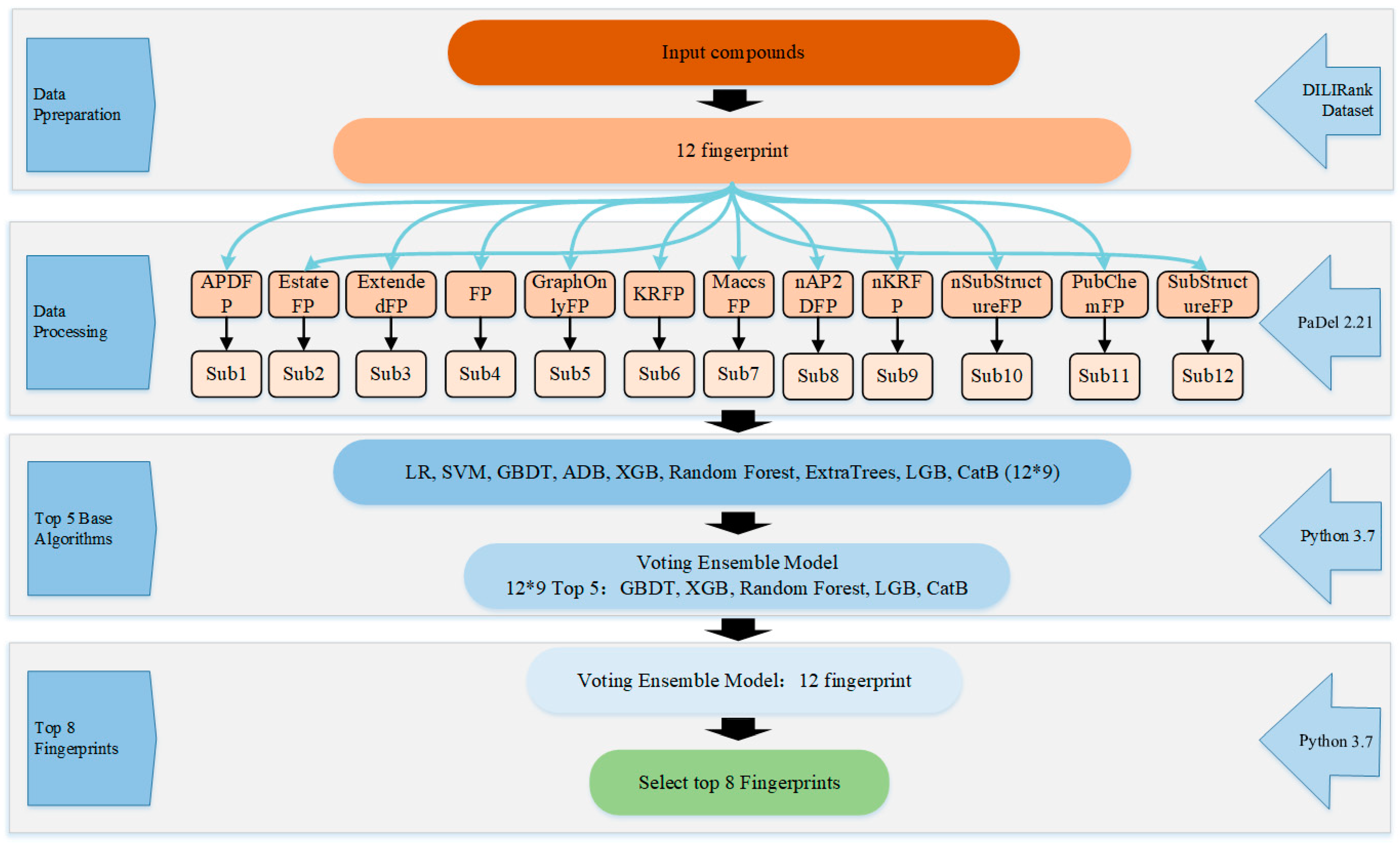

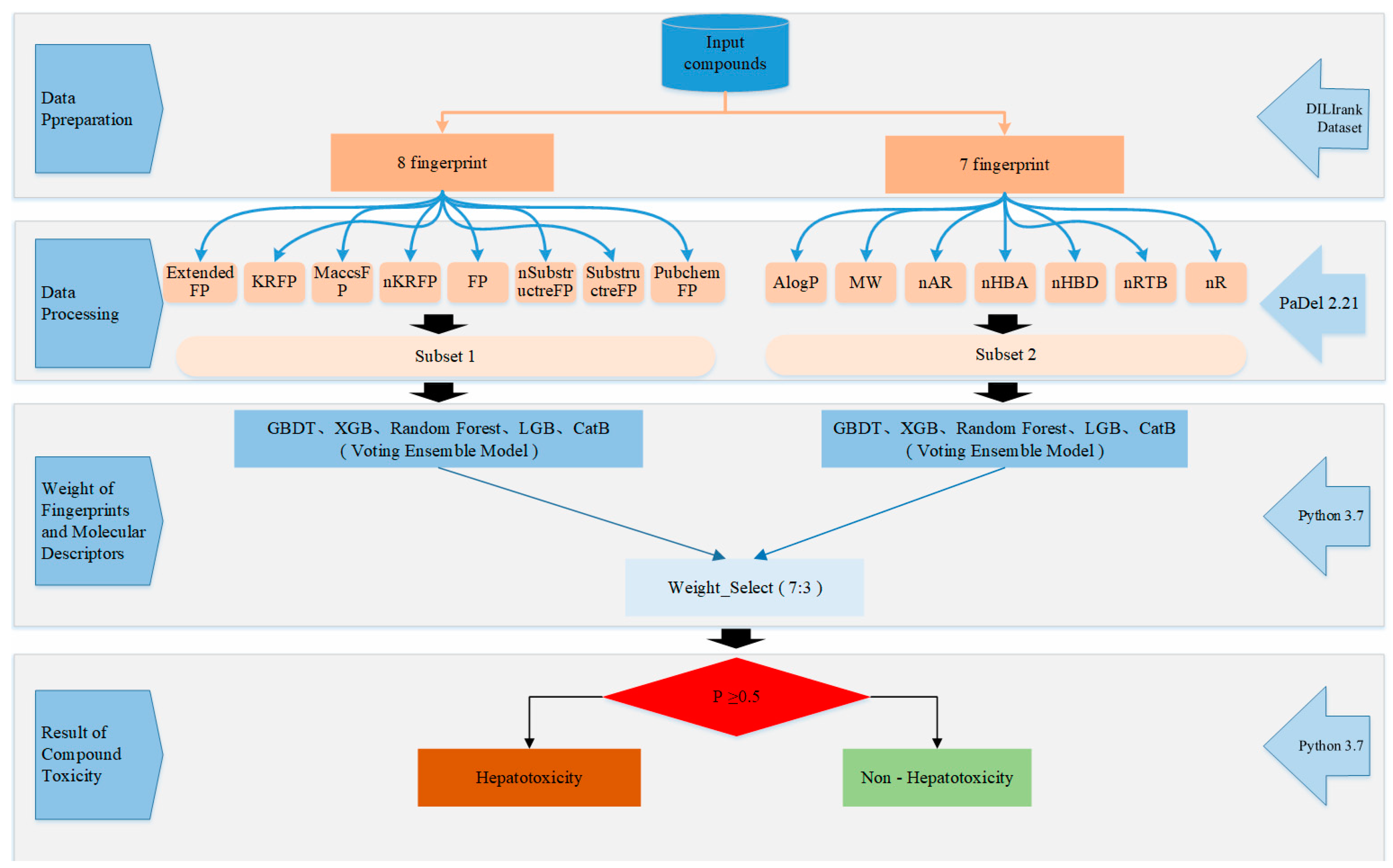

2.1. Parameter Selection for the Proposed Method

2.2. Performance of the Proposed Method

3. Discussion

3.1. Comparison with Previous Methods on Different Datasets

3.2. Comparison with Previous Models on the Same Dataset

3.3. Molecular Descriptors and Fingerprints Related to Hepatotoxicity

3.4. Applicability Domain of the Model

4. Materials and Methods

4.1. Data Preparation

4.2. Calculation of Molecular Fingerprints

4.3. Feature Selection

4.4. Model Building

4.4.1. Base Classifiers

4.4.2. Ensemble Model

4.5. Performance Evaluation

5. Conclusion

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

- Paul, S.M.; Mytelka, D.S.; Dunwiddie, C.T.; Persinger, C.C.; Munos, B.H.; Lindborg, S.R.; Schacht, A.L. How to improve R&D productivity: The pharmaceutical industry’s grand challenge. Nat. Rev. Drug Discov. 2010, 9, 203–214.

- Kola, I.; Landis, J. Can the pharmaceutical industry reduce attrition rates? Nat. Rev. Drug Discov. 2004, 3, 711–715.

- Arrowsmith, J.; Miller, P. Trial watch: Phase II failures: 2008–2010. Nat. Rev. Drug Discov. 2011, 10, 328–329.

- Ballet, F. Hepatotoxicity in drug development: Detection, significance and solutions. J. Hepatol. 1997, 26, 26–36.

- Ivanov, S.; Semin, M.; Lagunin, A.; Filimonov, D.; Poroikov, V. In Silico Identification of Proteins Associated with Drug-induced Liver Injury Based on the Prediction of Drug-target Interactions. Mol. Inform. 2017, 36.

- Liew, C.Y.; Lim, Y.C.; Yap, C.W. Mixed learning algorithms and features ensemble in hepatotoxicity prediction. J. Comput. Aided Mol. Des. 2011, 25, 855.

- Ekins, S. Progress in computational toxicology. J. Pharmacol. Toxicol. Methods 2014, 69, 115–140.

- Przybylak, K.R. In silico models for drug-induced liver injury – Current status. Expert Opin. Drug Metab. Toxicol. 2012, 8, 201–217.

- Chen, M.; Hong, H.; Fang, H.; Kelly, R.; Zhou, G.; Borlak, J.; Tong, W. Quantitative Structure-Activity Relationship Models for Predicting Drug-Induced Liver Injury Based on FDA-Approved Drug Labeling Annotation and Using a Large Collection of Drugs. Toxicol. Sci. 2013, 136, 242–249.

- Marzorati, M. How to get more out of molecular fingerprints: Practical tools for microbial ecology. Environ. Microbiol. 2008, 10, 1571–1581.

- Zhu, X.W. In Silico Prediction of Drug-Induced Liver Injury Based on Adverse Drug Reaction Reports. Toxicol. Sci. 2017, 158, 391–400.

- Ekins, S.; Williams, A.J.; Xu, J.J. A predictive ligand-based Bayesian model for human drug-induced liver injury. Drug Metab. Dispos. 2010, 38, 2302–2308.

- Chen, M.; Suzuki, A.; Thakkar, S.; Yu, K.; Hu, C.; Tong, W. DILIrank: The largest reference drug list ranked by the risk for developing drug-induced liver injury in humans. Drug Discov. Today 2016, 21, 648–653.

- Hong, H.; Thakkar, S.; Chen, M.; Tong, W. Development of Decision Forest Models for Prediction of Drug-Induced Liver Injury in Humans Using A Large Set of FDA-approved Drugs. Sci. Rep. 2017, 7, 17311.

- Arlot, S.; Celisse, A. A survey of cross-validation procedures for model selection. Stat. Surv. 2010, 4, 40–79.

- Zhang, H.; Ding, L.; Zou, Y.; Hu, S.Q.; Huang, H.G.; Kong, W.B.; Zhang, J. Predicting drug-induced liver injury in human with Naïve Bayes classifier approach. J. Comput. Aided Mol. Des. 2016, 30, 889–898.

- Zhang, S.; Golbraikh, A.; Oloff, S.; Kohn, H.; Tropsha, A. A Novel Automated Lazy Learning QSAR (ALL-QSAR) Approach: Method Development, Applications, and Virtual Screening of Chemical Databases Using Validated ALL-QSAR Models. J. Chem. Inf. Model. 2006, 46, 1984–1995.

- Melagraki, G.; Ntougkos, E.; Rinotas, V.; Papaneophytou, C.; Leonis, G.; Mavromoustakos, T.; Kontopidis, G.; Douni, E.; Afantitis, A.; Kollias, G. Cheminformatics-aided discovery of small-molecule Protein-Protein Interaction (PPI) dual inhibitors of Tumor Necrosis Factor (TNF) and Receptor Activator of NF-κB Ligand (RANKL). PLoS Comput. Biol. 2017, 13, e1005372.

- Hou, T.; Wang, J. Structure–ADME relationship: Still a long way to go? Expert Opin. Drug Metab. Toxicol. 2008, 4, 759–770.

- Li, X.; Zhang, Y.; Chen, H.; Li, H.; Zhao, Y. Insights into the Molecular Basis of the Acute Contact Toxicity of Diverse Organic Chemicals in the Honey Bee. J. Chem. Inf. Model. 2017, 57, 2948–2957.

- Zhang, C.; Zhou, Y.; Gu, S.; Wu, Z.; Wu, W.; Liu, C.; Wang, K.; Liu, G.; Li, W.; Lee, P.W. In silico prediction of hERG potassium channel blockage by chemical category approaches. Toxicol. Res. 2016, 5, 570–582.

- Yap, C.W. PaDEL-descriptor: An open source software to calculate molecular descriptors and fingerprints. J. Comput. Chem. 2011, 32, 1466–1474.

- Burges, C.J. A tutorial on support vector machines for pattern recognition. Data Min. Knowl. Discov. 1998, 2, 121–167.

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

- Freund, Y.; Schapire, R.E. A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci. 1997, 55, 119–139.

- Nielsen, D. Tree Boosting with XGBoost – Why Does XGBoost Win “every” Machine Learning Competition? Master’s Thesis, Norwegian University of Science and Technology, Trondheim, Norway, 2016.

- Sheridan, R.P.; Wang, W.M.; Liaw, A.; Ma, J.; Gifford, E.M. Extreme gradient boosting as a method for quantitative structure-activity relationships. J. Chem. Inf. Model. 2016, 56, 2353–2360.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Geurts, P.; Ernst, D.; Wehenkel, L. Extremely randomized trees. Mach. Learn. 2006, 63, 3–42.

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A Highly Efficient Gradient Boosting Decision Tree. In Proceedings of the 31st Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4 December 2017.

- Dorogush, A.V.; Ershov, V.; Gulin, A. CatBoost: Gradient Boosting with Categorical Features Support. 2018. Available online: https://arxiv.org/abs/1810.11363 (accessed on 19 August 2019).

- Roli, F.; Giacinto, G.; Vernazza, G. Methods for Designing Multiple Classifier Systems; Springer: Berlin/Heidelberg, Germany, 2001; pp. 78–87.

- Rokach, L. Ensemble-based classifiers. Artif. Intell. Rev. 2010, 33, 1–39.

- Liu, Q.; Chen, P.; Wang, B.; Zhang, J.; Li, J. Hot Spot prediction in protein-protein interactions by an ensemble learning. BMC Syst. Biol. 2018, 12, 132.

- Hu, S.S.; Chen, P.; Wang, B.; Li, J. Protein binding hot spots prediction from sequence only by a new ensemble learning method. Amino Acids 2017, 49, 1773–1785.

- Jiang, J.; Wang, N.; Chen, P.; Zheng, C.; Wang, B. Prediction of protein hot spots from whole sequences by a random projection ensemble system. Int. J. Mol. Sci. 2017, 18, 1543.

- Varsou, D.-D.; Afantitis, A.; Tsoumanis, A.; Melagraki, G.; Sarimveis, H.; Valsami-Jones, E.; Lynch, I. A safe-by-design tool for functionalised nanomaterials through the Enalos Nanoinformatics Cloud platform. Nanoscale Adv. 2019, 1, 706–718.

- Chen, P.; Wang, B.; Zhang, J.; Gao, X.; Li, J.-Y.; Xia, J.-F. A sequence-based dynamic ensemble learning system for protein ligand-binding site prediction. IEEE/ACM Trans. Comput. Biol. Bioinform. 2016, 13, 901–912.

- Fawcett, T. An introduction to ROC analysis. Pattern Recognit. Lett. 2006, 27, 861–874.

| No | Fingerprint | LR | SVM | GBDT | AdaBoost | XGBoost | RF | ExtraTrees | LightGBM | CatBoost |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | AP2DFP | 0.7222 # | 0.6978 | 0.7322 | 0.7233 | 0.7222 | 0.7067 | 0.6944 | 0.6911 | 0.7278 |
| 2 | EStateFP | 0.7078 | 0.7044 | 0.7278 | 0.6811 | 0.7322 | 0.7433 | 0.7211 | 0.7233 | 0.7289 |
| 3 | ExtendedFP | 0.7789 | 0.7322 | 0.7511 | 0.7355 | 0.7556 | 0.7778 | 0.7333 | 0.7689 | 0.7933 |
| 4 | FP | 0.7133 | 0.6878 | 0.7500 | 0.7111 | 0.7478 | 0.7444 | 0.7056 | 0.7422 | 0.7811 |
| 5 | GraphOnlyFP | 0.7067 | 0.6689 | 0.7267 | 0.7056 | 0.7211 | 0.7345 | 0.7089 | 0.7178 | 0.7151 |
| 6 | KRFP | 0.7500 | 0.7344 | 0.7522 | 0.7211 | 0.7578 | 0.7811 | 0.7622 | 0.7611 | 0.7789 |
| 7 | MaccsFP | 0.7300 | 0.7045 | 0.7389 | 0.7256 | 0.7578 | 0.7722 | 0.7456 | 0.7589 | 0.7722 |
| 8 | nAP2DFP | 0.6933 | 0.6822 | 0.7144 | 0.6889 | 0.7078 | 0.7044 | 0.7055 | 0.7033 | 0.7033 |
| 9 | nKRFP | 0.7522 | 0.7056 | 0.7589 | 0.7356 | 0.7544 | 0.7733 | 0.7567 | 0.7545 | 0.7578 |
| 10 | nSubstructureFP | 0.7111 | 0.7033 | 0.7644 | 0.7111 | 0.7378 | 0.77 | 0.7355 | 0.7289 | 0.7633 |
| 11 | PubchemFP | 0.7278 | 0.6956 | 0.7522 | 0.7100 | 0.7389 | 0.75 | 0.7167 | 0.7322 | 0.7589 |
| 12 | SubstructureFP | 0.7300 | 0.7267 | 0.7500 | 0.7378 | 0.7244 | 0.7911 | 0.7700 | 0.7189 | 0.7622 |
| | Times in Top 5 | 2 | 0 | 9 | 2 | 10 | 11 | 6 | 9 | 11 |
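The last row of the table counts, for each base classifier, how many of the twelve fingerprints it ranks among the five most accurate classifiers. The sketch below recomputes that row from the listed accuracies. It assumes that ties are broken by column order, which is what Python's stable `sorted` does. The choice matters only for nAP2DFP, where LightGBM and CatBoost both score 0.7033. The classifier labels are conventional names for the table's columns, not identifiers taken from the authors' code.

```python
from collections import Counter

CLASSIFIERS = ["LR", "SVM", "GBDT", "AdaBoost", "XGBoost",
               "RF", "ExtraTrees", "LightGBM", "CatBoost"]

# Accuracy of each base classifier per fingerprint, copied from the table above.
ACCURACY = {
    "AP2DFP":          [0.7222, 0.6978, 0.7322, 0.7233, 0.7222, 0.7067, 0.6944, 0.6911, 0.7278],
    "EStateFP":        [0.7078, 0.7044, 0.7278, 0.6811, 0.7322, 0.7433, 0.7211, 0.7233, 0.7289],
    "ExtendedFP":      [0.7789, 0.7322, 0.7511, 0.7355, 0.7556, 0.7778, 0.7333, 0.7689, 0.7933],
    "FP":              [0.7133, 0.6878, 0.7500, 0.7111, 0.7478, 0.7444, 0.7056, 0.7422, 0.7811],
    "GraphOnlyFP":     [0.7067, 0.6689, 0.7267, 0.7056, 0.7211, 0.7345, 0.7089, 0.7178, 0.7151],
    "KRFP":            [0.7500, 0.7344, 0.7522, 0.7211, 0.7578, 0.7811, 0.7622, 0.7611, 0.7789],
    "MaccsFP":         [0.7300, 0.7045, 0.7389, 0.7256, 0.7578, 0.7722, 0.7456, 0.7589, 0.7722],
    "nAP2DFP":         [0.6933, 0.6822, 0.7144, 0.6889, 0.7078, 0.7044, 0.7055, 0.7033, 0.7033],
    "nKRFP":           [0.7522, 0.7056, 0.7589, 0.7356, 0.7544, 0.7733, 0.7567, 0.7545, 0.7578],
    "nSubstructureFP": [0.7111, 0.7033, 0.7644, 0.7111, 0.7378, 0.7700, 0.7355, 0.7289, 0.7633],
    "PubchemFP":       [0.7278, 0.6956, 0.7522, 0.7100, 0.7389, 0.7500, 0.7167, 0.7322, 0.7589],
    "SubstructureFP":  [0.7300, 0.7267, 0.7500, 0.7378, 0.7244, 0.7911, 0.7700, 0.7189, 0.7622],
}


def top_k_counts(accuracy, classifiers, k=5):
    """Count how often each classifier is among the k most accurate for a fingerprint."""
    counts = Counter({name: 0 for name in classifiers})
    for row in accuracy.values():
        # sorted() is stable even with reverse=True, so tied scores keep column order
        ranked = sorted(range(len(classifiers)), key=lambda i: row[i], reverse=True)
        for i in ranked[:k]:
            counts[classifiers[i]] += 1
    return counts


if __name__ == "__main__":
    counts = top_k_counts(ACCURACY, CLASSIFIERS)
    print([counts[name] for name in CLASSIFIERS])
```

Under this tie-breaking rule the counts agree with the table's last row. GBDT, XGBoost, RF, LightGBM and CatBoost come out as the five most frequently top-ranked classifiers.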

| No | Fingerprint | Average Accuracy (GBDT, XGBoost, RF, LightGBM, CatBoost) |
|---|---|---|
| 3 | ExtendedFP | 0.7693 |
| 6 | KRFP | 0.7662 |
| 7 | MaccsFP | 0.7600 |
| 9 | nKRFP | 0.7598 |
| 4 | FP | 0.7531 |
| 10 | nSubstructureFP | 0.7529 |
| 12 | SubstructureFP | 0.7493 |
| 11 | PubchemFP | 0.7464 |
| 2 | EStateFP | 0.7311 |
| 5 | GraphOnlyFP | 0.7230 |
| 1 | AP2DFP | 0.7160 |
| 8 | nAP2DFP | 0.7067 |
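The ranking above appears to average each fingerprint's accuracy over GBDT, XGBoost, RF, LightGBM and CatBoost. Recomputing those means from the per-classifier table gives the listed values and order to within rounding. The sketch below does this. The input values are copied from that table, and the function name is illustrative only.

```python
from statistics import mean

# Accuracies of GBDT, XGBoost, RF, LightGBM and CatBoost (in that order),
# copied from the per-classifier accuracy table.
TOP5_ACCURACY = {
    "AP2DFP":          [0.7322, 0.7222, 0.7067, 0.6911, 0.7278],
    "EStateFP":        [0.7278, 0.7322, 0.7433, 0.7233, 0.7289],
    "ExtendedFP":      [0.7511, 0.7556, 0.7778, 0.7689, 0.7933],
    "FP":              [0.7500, 0.7478, 0.7444, 0.7422, 0.7811],
    "GraphOnlyFP":     [0.7267, 0.7211, 0.7345, 0.7178, 0.7151],
    "KRFP":            [0.7522, 0.7578, 0.7811, 0.7611, 0.7789],
    "MaccsFP":         [0.7389, 0.7578, 0.7722, 0.7589, 0.7722],
    "nAP2DFP":         [0.7144, 0.7078, 0.7044, 0.7033, 0.7033],
    "nKRFP":           [0.7589, 0.7544, 0.7733, 0.7545, 0.7578],
    "nSubstructureFP": [0.7644, 0.7378, 0.7700, 0.7289, 0.7633],
    "PubchemFP":       [0.7522, 0.7389, 0.7500, 0.7322, 0.7589],
    "SubstructureFP":  [0.7500, 0.7244, 0.7911, 0.7189, 0.7622],
}


def rank_fingerprints(table):
    """Return (fingerprint, mean accuracy) pairs sorted from best to worst."""
    averages = {fp: mean(scores) for fp, scores in table.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for fp, avg in rank_fingerprints(TOP5_ACCURACY):
        print(f"{fp:<16}{avg:.4f}")
```

Averaging only the strongest classifiers keeps weak models such as SVM from dragging down a fingerprint's score. The resulting ranking therefore reflects how well each fingerprint serves the classifiers that are likely to enter an ensemble.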

| Fingerprint | LR | SVM | GBDT | AdaBoost | XGBoost | RF | ExtraTrees | LightGBM | CatBoost |
|---|---|---|---|---|---|---|---|---|---|
| AP2DFP | 0.7000 | 0.6600 | 0.6200 | 0.5800 | 0.6800 | 0.6640 | 0.6480 | 0.6800 | 0.6360 |
| EStateFP | 0.6600 | 0.6800 | 0.7000 | 0.6800 | 0.7000 | 0.6880 | 0.7200 | 0.7000 | 0.7160 |
| ExtendedFP | 0.7800 | 0.7400 | 0.7000 | 0.7600 | 0.7400 | 0.7480 | 0.7360 | 0.6600 | 0.7800 |
| FP | 0.6600 | 0.7000 | 0.7440 | 0.6600 | 0.7000 | 0.7240 | 0.6760 | 0.7400 | 0.7320 |
| GraphOnlyFP | 0.6000 | 0.6000 | 0.6720 | 0.6400 | 0.7200 | 0.6840 | 0.6720 | 0.7200 | 0.6960 |
| KRFP | 0.7200 | 0.6400 | 0.7240 | 0.6600 | 0.7000 | 0.7840 | 0.7600 | 0.7400 | 0.7520 |
| MaccsFP | 0.7600 | 0.7200 | 0.7200 | 0.7000 | 0.7200 | 0.7520 | 0.7040 | 0.7200 | 0.7360 |
| nAP2DFP | 0.6600 | 0.6200 | 0.6360 | 0.6000 | 0.6600 | 0.7040 | 0.6600 | 0.6400 | 0.6880 |
| nKRFP | 0.6600 | 0.6200 | 0.7160 | 0.7400 | 0.7200 | 0.7520 | 0.7480 | 0.7000 | 0.7320 |
| nSubstructureFP | 0.7200 | 0.6400 | 0.6400 | 0.5400 | 0.6400 | 0.5920 | 0.6280 | 0.6200 | 0.6200 |
| PubchemFP | 0.7200 | 0.7600 | 0.7040 | 0.6200 | 0.7400 | 0.7760 | 0.7360 | 0.6600 | 0.7480 |
| SubstructureFP | 0.7400 | 0.7600 | 0.7200 | 0.7200 | 0.7000 | 0.7360 | 0.7280 | 0.6800 | 0.7440 |

| Model Name | No. of Compounds | Test Method | Q (%) | SE (%) | SP (%) | AUC (%) |
|---|---|---|---|---|---|---|
| Bayesian [12] | 295 | 10-fold CV×100 | 58.5 | 52.8 | 65.5 | 62.0 |
| Decision Forest [9] | 197 | 10-fold CV×2000 | 69.7 | 57.8 | 77.9 | – |
| Naive Bayesian [16] | 420 | Test set | 72.6 | 72.5 | 72.7 | – |
| Our Method | 450 | 5-fold CV×1000 | 77.25 | 64.38 | 85.83 | 75.10 |
| | | Test set | 81.67 | 64.55 | 96.15 | 80.35 |

| Model Name | No. of Compounds | Test Method | Q (%) | SE (%) | SP (%) | AUC (%) | MCC (%) |
|---|---|---|---|---|---|---|---|
| Decision Forest [14] | 451 | 5-fold CV | 72.9 | 62.8 | 79.8 | – | 51.4 |
| Our Method | 450 | 5-fold CV | 76.9 | 62.2 | 87.0 | 74.6 | 43.2 |
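The comparison tables report Q, SE, SP, AUC and MCC. The sketch below computes these metrics from binary labels (1 = DILI-positive), predicted classes and predicted scores. It assumes the usual definitions in this literature:

- Q is overall accuracy.
- SE = TP/(TP+FN).
- SP = TN/(TN+FP).
- MCC is the Matthews correlation coefficient.

AUC is computed through its rank interpretation: the probability that a randomly chosen positive scores higher than a randomly chosen negative, with ties counted as one half. The labels and scores in the usage example are hypothetical.

```python
import math


def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN) for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def classification_metrics(y_true, y_pred):
    """Q (accuracy), SE (sensitivity), SP (specificity) and MCC as fractions."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    q = (tp + tn) / (tp + tn + fp + fn)
    se = tp / (tp + fn) if tp + fn else 0.0
    sp = tn / (tn + fp) if tn + fp else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"Q": q, "SE": se, "SP": sp, "MCC": mcc}


def roc_auc(y_true, scores):
    """AUC as P(score of a random positive > score of a random negative); ties count 1/2."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Hypothetical labels, hard predictions and scores for eight compounds
    y_true = [1, 1, 1, 0, 0, 0, 0, 0]
    y_pred = [1, 1, 0, 0, 0, 0, 0, 1]
    scores = [0.9, 0.8, 0.4, 0.3, 0.2, 0.1, 0.35, 0.7]
    print(classification_metrics(y_true, y_pred), roc_auc(y_true, scores))
```

Multiplying the fractions by 100 gives the percentage form used in the tables. The pairwise AUC loop is quadratic in the number of compounds. That cost is negligible for datasets of a few hundred drugs.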

| Fingerprint Type | Abbreviation | Pattern Type | Size (bits) |
|---|---|---|---|
| CDK | FP | Hash fingerprints | 1024 |
| CDK Extended | ExtendedFP | Hash fingerprints | 1024 |
| CDK GraphOnly | GraphOnlyFP | Hash fingerprints | 1024 |
| EState | EStateFP | Structural features | 79 |
| MACCS | MaccsFP | Structural features | 166 |
| PubChem | PubchemFP | Structural features | 881 |
| Substructure | SubstructureFP | Structural features | 307 |
| Substructure Count | nSubstructureFP | Structural features count | 307 |
| Klekota-Roth | KRFP | Structural features | 4860 |
| Klekota-Roth Count | nKRFP | Structural features count | 4860 |
| 2D Atom Pairs | AP2DFP | Structural features | 780 |
| 2D Atom Pairs Count | nAP2DFP | Structural features count | 780 |
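Each count fingerprint in this table has the same size as its binary counterpart: Substructure, Klekota-Roth and 2D Atom Pairs. This suggests that each pair shares one pattern list. A count fingerprint records how many times each pattern occurs in a molecule, while the binary form records only whether it occurs. Assuming that relationship, the binary vector is the count vector thresholded at zero, as in the minimal sketch below. The function name and example counts are hypothetical.

```python
def to_binary(counts):
    """Collapse a count fingerprint into a presence/absence bit vector."""
    # Any pattern seen at least once becomes 1; absent patterns stay 0
    return [1 if c > 0 else 0 for c in counts]


if __name__ == "__main__":
    # Hypothetical counts for the first five patterns of a count fingerprint
    print(to_binary([0, 3, 1, 0, 2]))
```

The binary form discards multiplicity, for example how many halogen atoms or aromatic rings a drug carries. This may explain why the count and binary versions of the same fingerprint can yield different accuracies in the tables above.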

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Xiao, Q.; Chen, P.; Wang, B. In Silico Prediction of Drug-Induced Liver Injury Based on Ensemble Classifier Method. Int. J. Mol. Sci. 2019, 20, 4106. https://doi.org/10.3390/ijms20174106
