Abstract

Chalcogenide glasses (ChGs) are a class of amorphous materials presenting remarkable mechanical, optical, and electrical properties, making them promising candidates for advanced photonic and optoelectronic applications. With the increasing integration of artificial intelligence in modern materials design, we are able to systematically select, prepare, and optimize appropriate compositions for desired applications in a manner that was unachievable before. This study employs various machine learning models to reliably predict the refractive index at 20 °C using a small dataset of 541 samples extracted from the SciGlass database. The input for the algorithms consists of a selected set of physico-chemical features computed for the chemical composition of each entry. Additionally, these algorithms served as inner models for an ensemble linear regression estimator that achieved a superior $R^2$ value of 0.8985. SHAP feature analysis of the second-best model, CatBoostRegressor ($R^2$ = 0.8920), revealed the importance of elemental density, atomic weight, ground state atomic gap, and fraction of p valence electrons in tuning the value of the refractive index of a chalcogenide compound.

1. Introduction

Inorganic vitreous compounds that contain no oxygen and include sulfur, selenium, or tellurium are known as chalcogenide glasses (ChGs). Their peculiar structure, characterized by the predominance of covalent and van der Waals bonds between chalcogens and the constituting elements, gives them unique mechanical, optical, and electrical properties. Sulfur and selenium naturally tend to form concatenated structures, either octaatomic molecules or long -S-S-/-Se-Se- randomly tangled chains that interact through dispersion forces [1]. In amorphous Te, the chains are much shorter, and some atoms exhibit 1-fold and 3-fold coordination [2]. The existence of additional elements within the chalcogenide matrix generally increases the average coordination number in the material, forming bridges between chains and creating interlaced 2D and 3D disordered architectures. Specifically, halogens terminate chains; group V elements (such as P, As, and Sb) are 3-fold coordinated, extending the glass network in 2D; and group IV elements (such as Si and Ge) introduce tetrahedral structures, resulting in a reticulated 3D glass structure. ChGs present low-frequency vibrations, enabling them to transmit light with wavelengths up to 20 μm (for telluride glasses). Large refractive indices and nonlinear optical phenomena are characteristic of this class of materials. Today, ChG lenses and waveguides are primary components in thermal imaging devices and optical sensors [1].

The refractive index ($n$) is a fundamental bulk optical property in the modern glass industry, and its value must be known with precision. It is defined as the ratio between the speed of light in vacuum and its speed in the material. This property depends on the wavelength of light and the characteristics of the material, which vary with temperature. In practice, the refractive index reported for a material is typically measured at one of two closely spaced wavelengths, the yellow emission line of sodium (589.3 nm) or that of helium (587.6 nm), with only minor differences between the resulting values [3]. However, since most ChGs are opaque or very poor transmitters for visible radiation, measurements of their refractive index are performed at the wavelengths of interest, typically between 1 μm and 12 μm [1].

Certain physical parameters of the constituents of a material influence the value of $n$. For example, in ionic glasses (such as oxidic glasses, fluoride glasses, and borate and phosphate glasses), the polarizability and electronic density of the constituent anions directly determine the refractive index [3]. To infer significant relationships between the physical and chemical parameters and a material property, modern machine learning approaches are preferable to empirical methods. This has recently been demonstrated for the refractive index in a dataset of general inorganic compounds as well [4]. Machine learning, a subdomain of artificial intelligence, employs algorithms capable of identifying patterns in data and making predictions about variables. Supervised learning techniques, in particular, rely on input data to predict a target variable [5].

For ChGs, the refractive index was previously studied among other mechanical, electrical, and thermal properties. Mastelini et al. [6] collected data from the SciGlass database, while Singla et al. [7] incorporated the INTERGLAD database. Their coefficients of determination ($R^2$) at testing were 0.87 [6] and 0.916 [7], respectively. These studies employed common machine learning algorithms on datasets where the features represented the chemical composition in atomic percents and included a Shapley additive explanations (SHAP) feature interpretation to reveal their interactions and contributions to the property’s value.

The novelty of our study is based upon the feature representation of the data points, which we collected from SciGlass [8] with our criteria. This approach allows for a more fundamental understanding of the relationship between the refractive index and intrinsic atomic properties. Furthermore, our representation is more general, in the sense that our models can predict compositions containing elements outside the training data, provided that their atomic properties are documented.

2. Results and Discussion

2.1. Performance of the Models

Table 1 presents the three performance metrics for training, cross-validation, and testing for each optimized model. Among all models, CATR achieves the best performance across all datasets, while ADR yields the least satisfactory results on unseen data. The significant improvement in performance for ADR compared to its default version is due to a two-step optimization process: the hyperparameters of the inner decision tree model were tuned first, followed by the hyperparameters of the ensemble adaptive boosting model. With the exception of ADR, all models achieved an $R^2$ of at least 0.850 on the test data, which demonstrates both reliability and the benefit of optimization.

Table 1.

Performance of the models after optimization. The highest performance values and the best-performing model are highlighted in bold.

To further improve the test scores, we combined optimized models using a linear regression meta-model (LR) [9]. The predicted refractive index from the optimized models served as input for training LR, forming what we refer to as the “base set”. Ideally, predictions should be made on completely unseen data, but due to the limited size of the cross-validation set (87 entries), we included samples from the training set, where the prediction error ≥ x% of the real value, with x ∈ {5, 6, 7, 8, 9, 10}. Among the different model combinations and x values tested, the best performance was achieved when using ETR, CATR, RFR, and ADR predictions with x = 8 on a base set of 92 entries (5 from the train set) (Table 2).
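The construction of the base set described above can be sketched as follows. This is only a minimal illustration under stated assumptions: the function name `build_base_set` and the toy numbers are hypothetical, and we assume that the "prediction error" is the absolute deviation of a single model's prediction relative to the measured value. The fitting of the LR meta-model itself is not shown.

```python
def build_base_set(val_rows, train_rows, x_percent):
    """Return validation rows plus the 'hard' training rows.

    Each row is a (measured_n, predicted_n) pair. A training row is kept
    when its absolute prediction error is at least x_percent of the
    measured value (assumed reading of the selection rule).
    """
    hard_rows = [
        (measured, predicted)
        for measured, predicted in train_rows
        if abs(predicted - measured) >= (x_percent / 100.0) * abs(measured)
    ]
    return list(val_rows) + hard_rows


# Toy data (hypothetical values, not taken from the dataset).
val_rows = [(2.30, 2.28), (2.60, 2.66), (2.90, 2.81)]
train_rows = [(2.50, 2.75), (2.40, 2.45), (3.00, 2.70), (2.20, 2.21)]

base_set = build_base_set(val_rows, train_rows, x_percent=8)
print(len(base_set), "entries in the base set")
```

With a larger threshold x, fewer training entries qualify, so the base set stays closer to purely unseen data at the cost of a smaller sample.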

Table 2.

Performance of the LR meta-model depending on its base set.

2.2. Prediction of the Refractive Index of Experimental Samples

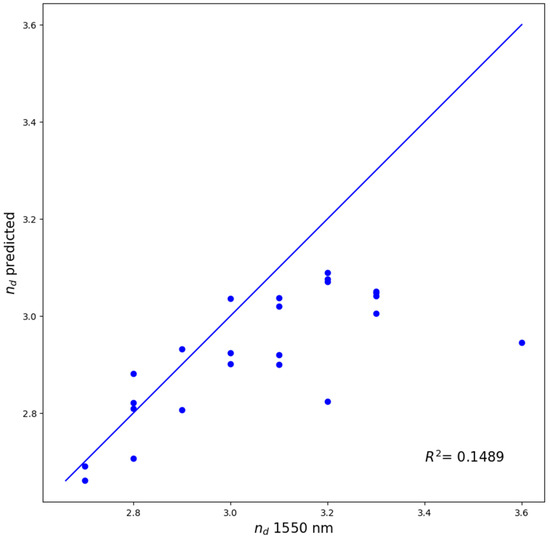

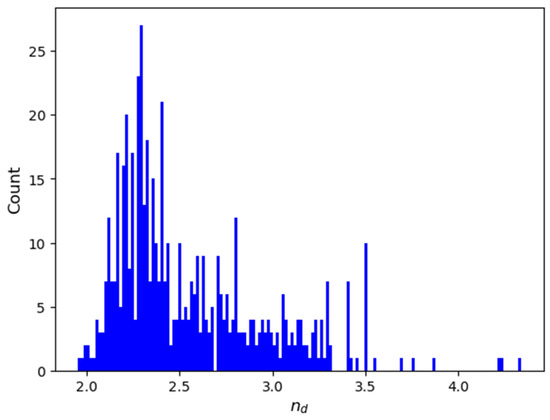

To further assess the practical accuracy of our machine learning model, we compared the predictions from CATR, the best-performing interpretable model, to experimentally measured refractive indices for 24 ChGs in the Si-Ge-Te system. Although Si, Ge, and Te are all represented in our dataset, compositions consisting exclusively of these elements are absent from it, except for a single binary composition (Si20Te80, nd = 3.2). The samples were thin films prepared via magnetron co-sputtering, with their structural and optical properties characterized in a previous study [10]. The resulting coefficient of determination ($R^2$ = 0.1489) is low. As illustrated in Figure 1, while eleven data points closely approach the experimental values, the remaining thirteen points, corresponding to the samples with a measured refractive index greater than 3.0, are significantly underestimated by our model. Several factors are likely to contribute to this discrepancy, most notably the sparse distribution of high refractive index data within our training dataset (as seen in Figure 4). Additionally, since most entries documented in the SciGlass database are bulk glasses, structural differences arising from the sputtering process used for thin film deposition may further influence the refractive index values. The chemical composition and experimentally measured refractive indices at 1550 nm for these samples are provided in Table A1 of Appendix A.

Figure 1.

Predictions of the tuned CATR model versus measured values of the refractive index of 24 experimental Si-Ge-Te samples.

2.3. SHAP Feature Importance and Interpretation

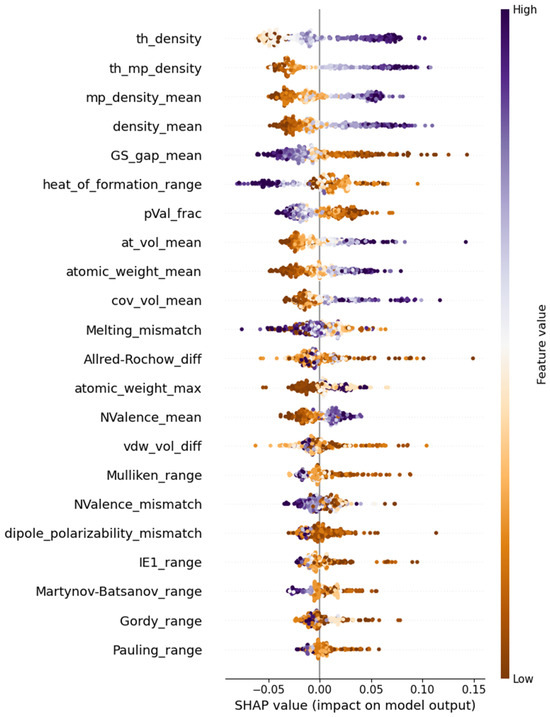

With the most reliable model for predicting the refractive index, we proceeded to analyze the physical interpretation of this optical property using the SHapley Additive exPlanations (SHAP) Python package version 0.41.0 [11]. SHAP provides visualization tools to assess the feature impact on the target variable. The Shapley value, derived from game theory, measures the average contribution of a given parameter to the target variable across all possible prediction scenarios [12]. Since the refractive index is dimensionless, so are its Shapley values. Positive values indicate an increase in $n$ due to a feature, while negative values signify a decrease. Figure 2 illustrates the ranked importance of the 22 features in the optimized CatBoostRegressor. Each data point from the training set is represented as a dot color coded according to the corresponding feature magnitude. The plot reveals trends in the impact of extreme feature values, with clear separation in the top ranked features, indicating their strong influence on the refractive index.
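To make the definition of the Shapley value concrete, the sketch below computes exact Shapley values for a three-feature toy function by averaging marginal contributions over all feature orderings. It is an illustration of the game-theoretic definition only: `toy_model`, its coefficients, and the single reference point `baseline` are hypothetical, and the SHAP package uses a far more efficient tree-specific algorithm with a background dataset rather than this brute-force enumeration.

```python
from itertools import permutations


def shapley_values(model, x, baseline):
    """Exact Shapley values of `model` at `x` relative to `baseline`.

    Features are switched from their baseline value to their actual value
    one at a time, in every possible order; each feature's marginal
    contributions are then averaged over all orders.
    """
    n_features = len(x)
    totals = [0.0] * n_features
    orders = list(permutations(range(n_features)))
    for order in orders:
        current = list(baseline)
        previous_output = model(current)
        for j in order:
            current[j] = x[j]
            new_output = model(current)
            totals[j] += new_output - previous_output
            previous_output = new_output
    return [total / len(orders) for total in totals]


def toy_model(features):
    """Hypothetical stand-in for a trained regressor (not the CATR model)."""
    density, gap, p_fraction = features
    return 1.5 + 0.3 * density - 0.2 * gap - 0.5 * p_fraction + 0.05 * density * gap


x = [5.0, 1.0, 0.6]          # sample to explain (made-up feature values)
baseline = [4.0, 2.0, 0.7]   # reference point (made-up)
phi = shapley_values(toy_model, x, baseline)
print(phi)
```

The values sum to the difference between the model output at the sample and at the reference point, which is the additivity property that allows a beeswarm plot to be read as a decomposition of each prediction.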

Figure 2.

SHAP beeswarm plot of the 22 features in the optimized CATR model.

The top four features influencing the value of $n$ are related to the elemental densities of a composition. Theoretical density refers to a weight-percentage-modified harmonic mean of the elemental densities (see the first formula in Table A2). According to the SHAP plot in Figure 2, these four features have a clear impact on the refractive index. The larger the density of the constituent elements, the higher the value of the optical property. Additionally, the presence of heavier elements (having larger atomic volumes and weights) appears to increase $n$. The range of the heat of formation, together with two electronic features, the mean of the ground state gap and the fraction of p valence electrons, exerts a negative effect on the target. However, the average number of valence electrons influences the refractive index positively. This suggests that materials with a higher mean ground state gap and a larger fraction of p valence electrons exhibit less optical interaction, while an increased number of valence electrons contributes to stronger electronic transitions, leading to a higher refractive index.

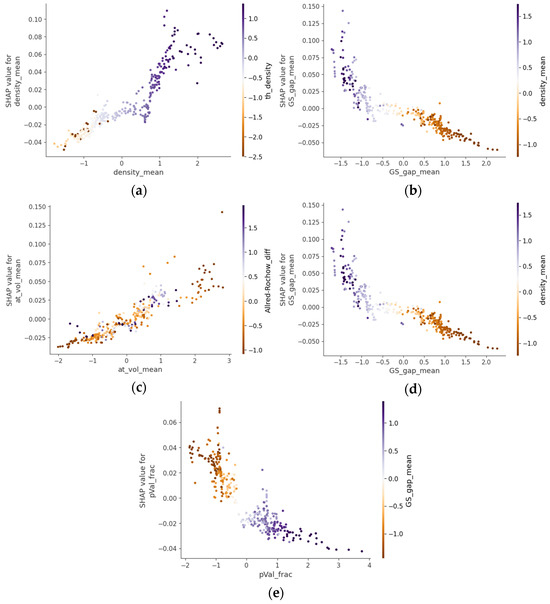

Figure 3 presents selected SHAP dependence plots from the CatBoostRegressor analysis, emphasizing the structural and electronic characteristics most influential for the refractive index in chalcogenide glasses. The values of the features lie on the x-axis, and the Shapley values are on the y-axis. On the right side of the plots, an interacting feature is represented with the color code for its magnitude. Figure 3a demonstrates that increasing the mean elemental density significantly enhances the refractive index, aligning with the Lorentz–Lorenz relation $(n^2 - 1)/(n^2 + 2) = (4\pi/3) N_A R_m \rho$, where $\rho$ is the density, $R_m$ is the molecular polarizability, and $N_A$ is the Avogadro number [13]. Experimental data from the Ge-Sb-Se and Ge-Sb-S-Se-Te systems showed that compositions with heavier elements have elevated refractive indices due to increased density [14,15]. This correlation was further validated in Ge-As-Se and Ge-Sb-Se glasses, where the density and the refractive index both increased with higher As and Sb contents [16,17].
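The density trend can be made tangible by inverting the relation for $n$: writing $L$ for the right-hand side, one obtains $n = \sqrt{(1 + 2L)/(1 - L)}$, which grows monotonically with $L$. The sketch below is a worked illustration only. It assumes that $L$ scales linearly with density at fixed polarizability, and the reference glass used for calibration ($n$ = 2.4 at 4.4 g/cm³) is a made-up example, not a value from the dataset.

```python
import math


def lorentz_lorenz_ratio(n):
    """Left-hand side of the relation: (n^2 - 1) / (n^2 + 2)."""
    return (n ** 2 - 1) / (n ** 2 + 2)


def index_from_ratio(ratio):
    """Invert the relation: n = sqrt((1 + 2L) / (1 - L)), valid for 0 <= L < 1."""
    if not 0.0 <= ratio < 1.0:
        raise ValueError("ratio must lie in [0, 1)")
    return math.sqrt((1 + 2 * ratio) / (1 - ratio))


# Hypothetical reference glass used to calibrate the lumped constant.
reference_n = 2.4
reference_density = 4.4  # g/cm^3, illustrative
lumped_constant = lorentz_lorenz_ratio(reference_n) / reference_density

# Assumption: polarizability stays fixed while density changes.
for density in (4.0, 4.4, 4.8):
    n = index_from_ratio(lumped_constant * density)
    print(f"density = {density:.1f} g/cm^3 -> n = {n:.3f}")
```

In real glasses the polarizability changes with composition as well, so this only isolates the contribution of density.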

Figure 3.

SHAP dependence plots highlighting the structural and electronic features most influential for the refractive index in chalcogenide glasses. The panels depict the relationships of the refractive index SHAP values with the (a) mean elemental density, (b) mean ground state electronic gap, (c) mean atomic volume, (d) mean number of valence electrons, and (e) fraction of p-valence electrons.

Figure 3b reveals a pronounced inverse correlation between the mean ground state electronic gap and refractive index, consistent with the Moss relation $n^4 E_g = K$, where $E_g$ is the bandgap energy and $K$ is a constant [18,19]. Tanaka experimentally verified that narrower bandgaps facilitate electronic transitions at lower energies, enhancing polarizability and the refractive index [20]. Furthermore, optical bandgap measurements in Ge-Sb-S glasses showed that narrower bandgaps corresponded to higher nonlinear refractive indices [21,22], while the THz-IR refractive index correlation reported for As-S and As-Se systems [23] further supports this relationship across different spectral regions.
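As a quick numerical illustration of the Moss relation, the sketch below solves it for $n$ at a few gap values. The constant of 95 eV is the value commonly quoted for this relation and is used here as an assumption; the gap values are arbitrary examples. Note also that the feature discussed above is the mean elemental ground state gap, which is not the same quantity as the optical bandgap of the glass, so this is a qualitative analogy rather than a prediction.

```python
def moss_index(gap_ev, k_ev=95.0):
    """Refractive index from the Moss relation n^4 * Eg = K.

    k_ev = 95 eV is a commonly quoted constant (assumed here).
    """
    if gap_ev <= 0:
        raise ValueError("gap must be positive")
    return (k_ev / gap_ev) ** 0.25


# Arbitrary example gaps in eV.
for gap in (1.0, 1.5, 2.0, 2.5):
    print(f"Eg = {gap:.1f} eV -> n = {moss_index(gap):.3f}")
```

Because of the fourth root, halving the gap raises the index by only about 19%, which is why the relation produces a smooth, gradual trend.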

Figure 3c highlights the direct relationship between the mean atomic volume and refractive index. Borisova established that substituting selenium with larger tellurium atoms in chalcogenide glasses increases the refractive index due to enhanced electronic polarizability [24]. This correlation was further substantiated by Petit et al., who demonstrated that glass compositions with larger mean atomic volumes consistently exhibit higher refractive indices due to the more expansive and easily polarizable electron clouds [25].

Figure 3d confirms that a higher mean number of valence electrons strongly correlates with increased refractive indices. This relationship aligns with studies by Tichy and Ticha, who established empirical correlations between the average coordination number and optical properties in chalcogenide glasses [26]. Kumar et al. provided additional experimental evidence in Ge-Se-In systems, demonstrating that compositions with higher average valence electron counts consistently exhibit elevated refractive indices [27].

Finally, Figure 3e illustrates that increasing the fraction of p-valence electrons negatively impacts the refractive index. While less extensively investigated, this relationship is supported by fundamental quantum mechanical principles regarding orbital characteristics. As established by Pauling and later applied to optical materials by Phillips, p-orbitals exhibit greater directionality and spatial localization compared to s-orbitals or d-orbitals, resulting in reduced polarizability [28,29]. Specifically for chalcogenide glasses, Aniya and Shinkawa developed theoretical models that account for the differential contributions of various orbital types to electronic polarizability, and ultimately, the refractive index [30].

The ranking of features emphasizes that, for ChGs, the mass distribution within the structure, both at room temperature and the melting point, has a greater impact on the refractive index than the electrical parameters do. This aligns well with the structural contrast between ChGs and ionic glasses, where the polarizability of anions and cations primarily decreases or increases the velocity of light in the material [3]. It should be noted that the SHAP feature interpretation remains dependent on the model, so its reliability depends on the accuracy and robustness of the model itself.

3. Methods

3.1. Data Acquisition

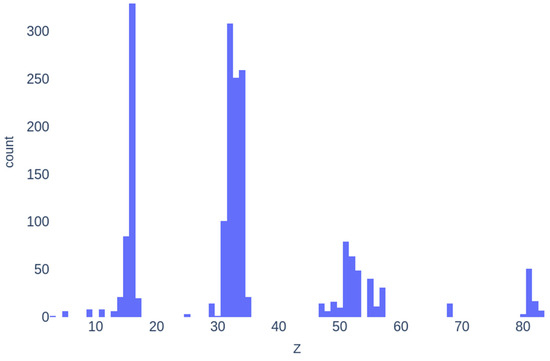

Chalcogenide compositions and their refractive indices were extracted from SciGlass [8], the largest free and open database of glass compositions and properties, compiled from the literature up to 2019. The dataset of this study consists of composition-property samples obtained after applying specific criteria, constraints, and corrections to the raw data. The selection criteria for chemical compositions were as follows: the composition must not contain oxides and must have a non-zero amount of chalcogen. No other elements were excluded from consideration to maximize the number of samples. Since the raw data describe the entries as mixtures of compounds and elements with their respective molar percentages, we converted these into the corresponding elements and their atomic percentages. Entries where the total atomic percentage deviated from 100% by more than 0.05% were discarded. For compositions within this tolerance, the largest atomic percentage was adjusted by adding or subtracting the difference, accordingly. Duplicate entries were then removed. In cases where duplicated compositions were assigned distinct $n$ values, extreme values (i.e., beyond the 0.05% and 99.95% percentiles) were discarded, and the median value was assigned as the $n$ for that composition. This approach aligns with the data cleaning methodology of Mastelini et al. [6]. At this stage, extreme values across the entire distribution were also eliminated, each represented by a single composition. Finally, compositions containing Dy, Gd, Pr, Tm, and Yb were excluded due to missing values in the fundamental elemental attributes used for feature generation. The final dataset comprises 541 compositions spanning 32 elements, as follows: Li, S, Ge, Cd, B, Al, Si, Ga, Br, La, Er, F, Mn, Tl, Bi, Na, Zn, Pb, P, Te, As, Sb, Se, Cl, Ag, Cs, In, I, Ba, Cu, Hg, and Sn. The distribution of refractive indices is visualized in Figure 4, and descriptive statistics are presented in Table 3.
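The conversion and cleaning steps above can be sketched roughly as follows. All function names and example numbers are hypothetical, compound stoichiometries are passed in by hand instead of being parsed from formulas, and the percentile-based trimming of extreme values is omitted for brevity.

```python
from collections import defaultdict
from statistics import median


def to_atomic_percent(mixture):
    """Convert [(stoichiometry_dict, mol_percent), ...] to atomic percentages."""
    counts = defaultdict(float)
    for stoichiometry, mol_percent in mixture:
        for element, atoms in stoichiometry.items():
            counts[element] += atoms * mol_percent
    total = sum(counts.values())
    return {element: 100.0 * c / total for element, c in counts.items()}


def fix_total(atomic_percent, tolerance=0.05):
    """Discard (return None) if the sum is off by more than the tolerance;
    otherwise absorb the small difference into the largest component."""
    difference = 100.0 - sum(atomic_percent.values())
    if abs(difference) > tolerance:
        return None
    fixed = dict(atomic_percent)
    largest = max(fixed, key=fixed.get)
    fixed[largest] += difference
    return fixed


def merge_duplicates(records):
    """Assign the median n to each distinct composition."""
    groups = defaultdict(list)
    for composition, n in records:
        key = tuple(sorted((el, round(pct, 2)) for el, pct in composition.items()))
        groups[key].append(n)
    return {key: median(values) for key, values in groups.items()}


# 40 mol% As2S3 + 60 mol% GeS2 (illustrative mixture).
converted = to_atomic_percent([({"As": 2, "S": 3}, 40), ({"Ge": 1, "S": 2}, 60)])
print(converted)

print(fix_total({"Ge": 20.0, "Se": 79.97}))  # within tolerance, Se adjusted
print(fix_total({"Ge": 20.0, "Se": 79.0}))   # outside tolerance, discarded

merged = merge_duplicates([
    ({"Ge": 20.0, "Se": 80.0}, 2.40),
    ({"Se": 80.0, "Ge": 20.0}, 2.50),
    ({"Ge": 20.0, "Se": 80.0}, 2.44),
])
print(merged)
```

Sorting the element entries before building the key makes the duplicate check independent of the order in which elements are listed in a raw record.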

Figure 4.

Histogram of the $n$ distribution after data extraction.

Table 3.

Descriptive statistics of the $n$ values in the dataset.

The frequency of elemental representation in the dataset compositions is shown in Figure 5. S is the most abundant element, appearing in more than 320 samples. Ge is the second most represented, with 308 entries, followed by Se and As. Zn and Li appear only once. Consequently, machine learning models are expected to generalize better for compositions containing elements that are more frequently represented in the dataset.

Figure 5.

Histogram of atomic number distribution in the dataset.

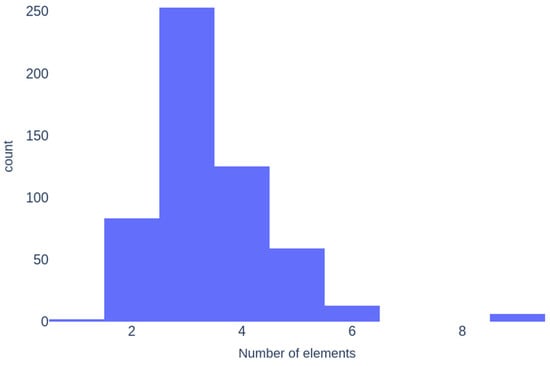

Figure 6 illustrates that ternary glasses are the most common in the dataset, followed by quaternary and binary glasses. Six samples contain nine elements, while two are monoelemental (S and Se glasses).

Figure 6.

Histogram of the number of elements in compositions.

3.2. Features and Feature Selection

This study examines the relationship between fundamental atomic properties and the refractive index. Consequently, we generated an initial set of 379 computational physico-chemical features. These features were derived from 44 elemental properties collected from the Mendeleev Python package [31], as well as from other publications on the prediction of metallic glasses [32,33,34,35,36,37], and on the theoretical calculations of the atomic characteristics [38,39,40,41,42,43]. The collected properties encompass a wide range of topological, thermodynamic, and electronic properties of the elements. Examples include the first ionization energy, density at room temperature, Mulliken electronegativity, Pauling electronegativity, electrophilicity, ground state gap, melting temperature, density at the melting temperature, number of s/p/d/f valence electrons, atomic/covalent/van der Waals volume, dipole polarizability, and coordination number. The complete list of properties can be found in Appendix A. For a composition containing the elements $E_1, E_2, E_3, \ldots, E_N$ with atomic percentages $c_1, c_2, c_3, \ldots, c_N$, a vector of the corresponding values $(p_1, p_2, p_3, \ldots, p_N)$ is assigned for each elemental property. Features are constructed by applying mathematical functions to these vectors or, in more complex cases, to several property vectors at once. The formulas for all these functions, some of which are very complex, are detailed in another study [43]. Table A2 in Appendix A includes the formulas necessary to understand the most important features discussed in Section 2.3.
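A few of the simpler feature types can be sketched as plain functions of the composition and property vectors. The exact formulas used in the study are those of Table A2 and ref. [43]; the forms below are assumptions: a mean weighted by atomic fraction, a range as max minus min, and the theoretical density as a harmonic mean weighted by mass fraction. The elemental values for Ge and Se are rounded, illustrative numbers.

```python
def weighted_mean(atomic_fractions, values):
    """Mean of an elemental property weighted by atomic fraction (assumed form)."""
    return sum(c * v for c, v in zip(atomic_fractions, values))


def value_range(values):
    """Range of an elemental property across the composition."""
    return max(values) - min(values)


def theoretical_density(atomic_fractions, atomic_weights, densities):
    """Harmonic mean of elemental densities weighted by mass fraction
    (assumed reading of the 'weight-percentage-modified harmonic mean')."""
    masses = [c * a for c, a in zip(atomic_fractions, atomic_weights)]
    total_mass = sum(masses)
    mass_fractions = [m / total_mass for m in masses]
    return 1.0 / sum(w / d for w, d in zip(mass_fractions, densities))


# Ge20Se80 with rounded, illustrative elemental data.
fractions = [0.20, 0.80]          # atomic fractions of Ge, Se
atomic_weights = [72.63, 78.97]   # g/mol
densities = [5.32, 4.81]          # g/cm^3

density_mean = weighted_mean(fractions, densities)
th_density = theoretical_density(fractions, atomic_weights, densities)
density_range = value_range(densities)
print(density_mean, th_density, density_range)
```

Because such features depend only on tabulated elemental properties, they can be evaluated for a composition containing an element absent from the training data, which is the generality argued for in the Introduction.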

The dataset comprises 541 collected samples divided as follows: 345 (64%) for training, 87 (16%) for cross-validation, and 109 (20%) for testing. A relatively large percentage was allocated to testing to ensure model reliability. Clearly, the 379 candidate features exceed the appropriate number of predictors for only 345 training entries. Feature standardization was applied in the first preprocessing step. The raw values were normalized based on their distribution (the mean and standard deviation) within the training set, as defined by Equation (1):

$$z = \frac{x - \mu}{\sigma} \qquad (1)$$

where $x$ is a raw feature value, $\mu$ is the mean value across the 345 training entries, and $\sigma$ is the standard deviation. After transformation, the standardized features in the training set have a mean of 0 and a standard deviation of 1. The first step for feature selection involved the computation of the mutual information regression score (MIR) [44]. MIR is a non-negative number that quantifies in bits the dependency between two variables (X and Y) by calculating the difference between the entropy of X and the conditional entropy of X given Y [45]. The 25, 30, 33, and 35 features with the highest MIR values were evaluated across default models using six-fold repeated training with seven-fold cross-validation. In each iteration, the training set was randomly partitioned into seven subsets of approximately equal size, with one subset designated for validation and the other six used for training. Repetition mitigates sampling bias in the splits. The model’s reliability was assessed using the coefficient of determination, $R^2$ (see Equation (2)). The sets of 30 and 33 features produced the best results across the eight models evaluated. To favor dimensionality reduction, we selected the 30-feature set. Table 4 presents a comparison of the performance metrics for each case with the values rounded to three decimal places. Model names and abbreviations are detailed in Section 3.3 and in the Abbreviations Section.
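The standardization step can be sketched as below. The key point it illustrates is that the mean and standard deviation are estimated on the training entries only and then reused unchanged for validation data. The function names and numbers are hypothetical, and the population standard deviation is assumed.

```python
from statistics import fmean, pstdev


def fit_standardizer(train_column):
    """Estimate the mean and (population) standard deviation on training data."""
    return fmean(train_column), pstdev(train_column)


def standardize(column, mean, std):
    """Apply z = (x - mean) / std using statistics estimated elsewhere."""
    return [(x - mean) / std for x in column]


# Toy feature column (made-up values).
train_column = [4.0, 5.0, 6.0, 5.0]
validation_column = [5.5, 3.5]

mean, std = fit_standardizer(train_column)
train_z = standardize(train_column, mean, std)
validation_z = standardize(validation_column, mean, std)

print(train_z)
print(validation_z)
```

Reusing the training statistics keeps information from the validation and test entries out of the preprocessing, so their standardized values need not have zero mean or unit variance.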

Table 4.

Performance of the models during training with cross-validation for different feature sets. The highest performance values obtained for each model are highlighted in bold.

The second and final filter for feature selection was recursive elimination (RFS), which uses model-based feature importance scores. This method iteratively removes the least important features at each training step until optimal performance is achieved [46].

Table 5 compares the $R^2$ values for training, cross-validation, and testing across the eight models on the 30-feature set selected via MIR, as well as the refined feature sets obtained through RFS. With the exception of one model, both training and validation performance improved after RFS. The HGBR model lacks an RFS step because it does not provide a feature importance method. The final number of features selected for each model is also included.

Table 5.

Performance of models with and without RFS. Arrows (↗/↘) and equal signs (=) indicate performance improvements, declines, or no change, respectively, upon applying the additional RFS feature-selection step.

3.3. Machine Learning Workflow

In this study, we employ eight ensemble models based on regression trees from the sklearn, lightgbm, catboost, and xgboost Python packages. These models include random forest (RFR) [47], gradient boosting (GBR) [48], adaptive boosting (ADR) [49], extremely randomized trees (ETR) [50], hist gradient boosting (HGBR) [51], light gradient boosting machine (LGBMR) [52], categorical boosting (CATR) [53], and extreme gradient boosting (XGBR) [54].

A regression tree is a supervised learning algorithm consisting of a series of if-else decisions that yield a continuous numerical output. It is typically represented as an upside-down tree, starting with a root node, followed by internal nodes, and ending with leaf nodes. At each node, the dataset is split based on the best threshold value of a feature to minimize the variance of the target in each resulting node. The tree depth increases until a stopping criterion is met, such as the minimum number of instances in the leaf nodes [55]. Equation (3) mathematically describes the prediction of a regression tree [55,56]:

$$\hat{y}(\mathbf{x}_i) = \sum_{k} c_k \, \mathbb{1}(\mathbf{x}_i \in R_k) \qquad (3)$$

where $\mathbf{x}_i$ represents the set of predictors for the $i$-th instance, $k$ is the number of the node in the tree, and $c_k$ and $R_k$ are the parameters determined during training.
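The splitting rule and the piecewise-constant prediction can be illustrated with the smallest possible tree, a single split on one feature. This is a bare sketch and not the implementation used by any of the packages: the function names and data are hypothetical, and the split is chosen by minimizing the summed squared error of the two resulting nodes, which is equivalent to minimizing their size-weighted variance.

```python
def sum_squared_error(values):
    """Squared deviations of the values from their mean."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_split(xs, ys):
    """Return (threshold, left_value, right_value) for the best single split."""
    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate identical feature values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        cost = sum_squared_error(left) + sum_squared_error(right)
        if best is None or cost < best[0]:
            threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
            best = (cost, threshold, sum(left) / len(left), sum(right) / len(right))
    return best[1:]


def predict(tree, x):
    """If-else decision: each leaf returns the mean target of its region."""
    threshold, left_value, right_value = tree
    return left_value if x <= threshold else right_value


# Toy data: one feature (e.g., a density-like quantity) and a target.
xs = [4.0, 4.2, 4.4, 5.6, 5.8, 6.0]
ys = [2.20, 2.30, 2.25, 2.90, 3.00, 2.95]

tree = best_split(xs, ys)
print(tree, predict(tree, 4.1), predict(tree, 5.9))
```

A full regression tree applies the same search recursively to each resulting node until a stopping criterion, such as a minimum number of instances per leaf, is reached.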

Regression trees are suitable for capturing complex nonlinear interactions between features, but they tend to overfit because their structure heavily depends on the dataset. Consequently, to enhance generalization, large groups of trees are aggregated into ensembles. We employ two types of ensembles as follows: bagging ensembles, which predict the mean value of their inner regression trees, and boosting ensembles, where each inner tree is trained to correct the errors of its predecessors [55,57].

The random forest regressor (RFR) and extra trees regressor (ETR) are similar algorithms that aggregate predictions from multiple base trees. In the RFR, each tree is trained on a bootstrap sample (sampling with replacement) of the training data, whereas all trees in the ETR receive the same input data. Another difference is how features are split at the nodes, as follows: the RFR selects the optimal threshold value, while the ETR chooses it randomly. Due to the added randomness, the ETR computes faster [58]. Both models are widely used for machine learning tasks due to their strong predictive performance [57,58].

Adaptive boosting (ADR) consists of shallow trees that are sequentially trained on the same input data, while adjusting the weights in the cost function to prioritize examples that were previously estimated more poorly [49]. Gradient boosting (GBR) uses gradient descent to optimize a differentiable loss function while training the trees in sequence. GBR is the simplest model of this approach [55,59]. The histogram-based gradient boosting model (HGBR) determines optimal split points based on feature histograms rather than continuous sorted feature values, reducing computational costs [59]. Light gradient boosting machine (LightGBM/LGBMR), categorical boosting (CatBoost/CATR), and extreme gradient boosting (XGBoost/XGBR) are highly efficient models capable of handling large and complex datasets. They primarily differ in how they process data types, their objective and regularization functions, and their tree growth strategies. LightGBM grows trees leaf-wise, leading to deeper and narrower structures, whereas XGBoost and CatBoost expand trees level-wise, creating wider structures. CatBoost uses ordered boosting, where trees are trained on subsets of the data while calculating costs on different data points to mitigate overfitting [60,61,62]. XGBoost provides extensive regularization options [54], and CatBoost utilizes symmetric trees, ensuring that all the nodes at a given level use the same feature–split pair, further reducing overfitting [60].
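The sequential idea behind gradient boosting can be sketched with one-split trees (stumps) and a squared-error loss, for which the negative gradient is simply the residual. This is a didactic sketch under those assumptions, with hypothetical names and data; the library models add regularization, subsampling, deeper trees, and many other refinements.

```python
def fit_stump(xs, residuals):
    """Fit a one-split regression tree to the current residuals."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    best = None
    for cut in range(1, len(order)):
        left, right = order[:cut], order[cut:]
        if xs[left[-1]] == xs[right[0]]:
            continue
        left_mean = sum(residuals[i] for i in left) / len(left)
        right_mean = sum(residuals[i] for i in right) / len(right)
        cost = (sum((residuals[i] - left_mean) ** 2 for i in left)
                + sum((residuals[i] - right_mean) ** 2 for i in right))
        if best is None or cost < best[0]:
            best = (cost, (xs[left[-1]] + xs[right[0]]) / 2, left_mean, right_mean)
    return best[1:]


def stump_predict(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def fit_boosting(xs, ys, n_rounds=20, learning_rate=0.3):
    """Start from the mean, then let each stump correct the remaining errors."""
    base = sum(ys) / len(ys)
    predictions = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * stump_predict(stump, x)
                       for p, x in zip(predictions, xs)]
    return base, learning_rate, stumps


def boosting_predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump_predict(s, x) for s in stumps)


def training_mse(predict_fn, xs, ys):
    return sum((y - predict_fn(x)) ** 2 for x, y in zip(xs, ys)) / len(ys)


# Toy data (made-up values).
xs = [4.0, 4.4, 4.8, 5.2, 5.6, 6.0]
ys = [2.20, 2.30, 2.45, 2.60, 2.80, 3.00]

model = fit_boosting(xs, ys)
baseline_mse = training_mse(lambda x: sum(ys) / len(ys), xs, ys)
boosted_mse = training_mse(lambda x: boosting_predict(model, x), xs, ys)
print(baseline_mse, boosted_mse)
```

The learning rate shrinks each correction, so more rounds are needed but single trees have less influence, which is one of the hyperparameter trade-offs addressed during tuning.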

For feature selection, we initially used the default configurations of these models. After selecting the features (a specific set for each model), we fine-tuned the hyperparameters using seven-fold cross-validation, maximizing $R^2$ as the objective function with the Optuna Python package [63]. Model performance was assessed using the coefficient of determination, mean absolute error (MAE), and mean squared error (MSE), as defined in Equations (4) and (5). Lower MAE and MSE values indicate better predictive accuracy. Section 2.1 discusses the performance improvements in detail.
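The three metrics can be written out explicitly using their standard definitions, which we assume match the ones used in the study. The sample values below are made up for illustration.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def mean_absolute_error(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up measured and predicted refractive indices.
y_true = [2.0, 2.5, 3.0, 3.5]
y_pred = [2.1, 2.4, 3.2, 3.3]

r2 = r2_score(y_true, y_pred)
mae = mean_absolute_error(y_true, y_pred)
mse = mean_squared_error(y_true, y_pred)
print(r2, mae, mse)
```

Since $R^2$ compares the squared errors with the spread of the measured values, a systematic underestimation over part of the range, as seen for the Si-Ge-Te films in Section 2.2, can lower it sharply even when many individual predictions are close.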

4. Conclusions

In this study, we focused on achieving reliable predictions of the refractive index of chalcogenide glasses using common machine learning algorithms.

Our unique dataset comprises 541 examples extracted and processed from the SciGlass database, covering 32 elements from the periodic table, including halogens and transition metals. The expanded set of 379 corresponding candidate features, gathered from the literature on elemental physico-chemical attributes, identifies each entry with a vector of mathematical functions based on the chemical composition and the atomic parameters. This dataset can also serve other supervised learning models (e.g., neural networks) either for training or retraining within transfer learning, particularly for models trained to predict the refractive index of other types of glasses. Additionally, unsupervised clustering models may be employed to identify meaningful patterns and categories of compositions within our data.

The optimized CatBoostRegressor and the LinearRegression stacking learner achieve high test scores, $R^2$ = 0.8920 and $R^2$ = 0.8985, respectively, comparable with the existing results in the literature. An experimental verification of the CATR model’s predictive capability using compositions from the Si-Ge-Te ternary system, not included in our original dataset, provided further insight into the reliability of our predictions. The model maintained accurate predictions for refractive index values in the range of approximately 2.1–3.0, but it significantly underestimated values above this range, primarily due to the insufficient number of high refractive index samples within the training dataset. The SHAP feature interpretation of the CatBoostRegressor revealed the significant influence of elemental density, atomic ground state gap, and p valence electrons in a composition on the refractive index. These findings are consistent with previous studies into the factors governing refractive index behavior in inorganic materials.

This research further demonstrates the applicability of machine learning techniques for glass design and prediction. However, the limited number of compositions may restrict the generalization power of the algorithms in experiments, necessitating the collection of more data by the chalcogenide glass community.

Author Contributions

Conceptualization, A.V. and M.-I.B.; methodology, A.V. and M.-I.B.; validation, M.-I.B.; formal analysis, M.-I.B.; investigation, M.-I.B.; resources, A.V.; data curation, M.-I.B.; writing—original draft preparation, M.-I.B.; writing—review and editing, A.V. and M.-I.B.; visualization, M.-I.B.; supervision, A.V.; project administration, A.V.; funding acquisition, A.V. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by a grant of the Ministry of Research, Innovation and Digitization, CNCS-UEFISCDI, project number PN-IV-P1-PCE-2023-1785 (60 PCE/2025), within PNCDI IV and the Core Program of the National Institute of Materials Physics, granted by the Romanian Ministry of Research, Innovation and Digitization through Project PC3-PN23080303.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon reasonable request from the corresponding author.

Acknowledgments

The authors are very grateful to the reviewers and editors for their valuable suggestions, which have helped improve the paper substantially. M.-I.B. also appreciates the advice of, and discussions with, George Alexandru Nemnes (University of Bucharest) about the MIR technique.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| ChG | Chalcogenide Glass |
| RFS | Recursive Feature Selector |
| MAE | Mean Absolute Error |
| MSE | Mean Squared Error |
| RFR | Random Forest Regressor |
| GBR | Gradient Boosting Regressor |
| ADR | Adaptive Boosting (AdaBoost) Regressor |
| ETR | Extremely Randomized Trees (ExtraTrees) Regressor |
| HGBR | Histogram-based Gradient (HistGradient) Boosting Regressor |
| LGBMR | Light Gradient Boosting Machine (LightGBM) Regressor |
| CATR | Categorical Boosting (CatBoost) Regressor |
| XGBR | Extreme Gradient Boosting (XGBoost) Regressor |

Appendix A

The list of elemental properties used for feature construction is as follows: density at 295 K, dipole polarizability, fusion heat, thermal conductivity, covalent radius, heat of formation, van der Waals radius, atomic weight, atomic radius, Mendeleev number, molar heat capacity, first ionization energy, and electronegativities (Allred–Rochow, Cottrell–Sutton, Ghosh, Gordy, Martynov–Batsanov, Mulliken, Nagle, Pauling, Sanderson). Additional properties include the atomic volume; covalent volume; van der Waals volume; density at the melting point; molar volume at the melting point; electrophilicity; coordination number; total number of valence electrons; number of s, p, d, and f valence electrons; periodic table row and column (group); melting temperature; ground state gap; ground state magnetic moment; number of vacant valence electrons; number of vacant s, p, d, and f valence electrons; and space group number.

The notations and meaning of the 22 CATR features are as follows: th_density = theoretical value of the density, th_mp_density = theoretical value of the melting point density, mp_density_mean = the mean of the elemental melting point densities, density_mean = mean of elemental densities, GS_gap_mean = mean of elemental ground state gaps, heat_of_formation_range = range of elemental heats of formation, pVal_frac = fraction of p valence electrons, at_vol_mean = average of the atomic volumes, atomic_weight_mean = average of atomic weights, cov_vol_mean = average of covalent volumes, Melting_mismatch = mismatch of elemental melting temperatures, Allred–Rochow_diff = difference in elemental Allred–Rochow electronegativities, atomic_weight_max = maximum atomic weight in the composition, NValence_mean = average of total number of valence electrons, vdw_vol_diff = difference in van der Waals volumes, Mulliken_range = range of elemental Mulliken electronegativities, NValence_mismatch = mismatch of total number of valence electrons, dipole_polarizability_mismatch = mismatch of dipole polarizabilities, IE1_range = range of first ionization energies, Martynov–Batsanov_range = range of Martynov–Batsanov electronegativities, Gordy_range = range of Gordy electronegativities, and Pauling_range = range of Pauling electronegativities.

Table A1.

Atomic compositions and corresponding experimental refractive indices (measured at 1550 nm) of the 24 Si–Ge–Te chalcogenide glass samples analyzed in this study. Experimental data were obtained from Reference [10].

| Si% | Ge% | Te% | n at 1550 nm |
|---|---|---|---|
| 22.5 | 62.5 | 15.0 | 3.2 |
| 36.6 | 47.9 | 15.5 | 2.8 |
| 45.6 | 36.8 | 17.6 | 2.7 |
| 21.8 | 53.8 | 24.4 | 3.1 |
| 30.6 | 43.0 | 26.4 | 2.9 |
| 39.7 | 33.8 | 26.5 | 2.7 |
| 15.0 | 56.1 | 28.9 | 3.6 |
| 23.4 | 46.7 | 29.9 | 3.0 |
| 32.6 | 34.8 | 32.6 | 2.8 |
| 22.2 | 39.5 | 38.3 | 3.1 |
| 14.8 | 45.9 | 39.3 | 3.3 |
| 32.4 | 28.2 | 39.4 | 2.8 |
| 27.5 | 32.1 | 40.4 | 2.8 |
| 20.9 | 37.6 | 41.5 | 3.0 |
| 24.0 | 33.3 | 42.7 | 2.9 |
| 12.6 | 32.4 | 55.0 | 3.3 |
| 20.3 | 23.4 | 56.3 | 3.1 |
| 8.8 | 33.1 | 58.1 | 3.2 |
| 14.9 | 26.3 | 58.8 | 3.2 |
| 14.4 | 24.2 | 61.4 | 3.1 |
| 17.5 | 19.7 | 62.8 | 3.0 |
| 8.1 | 23.3 | 68.6 | 3.2 |
| 11.8 | 19.5 | 68.7 | 3.3 |
| 14.2 | 16.0 | 69.8 | 3.3 |

Table A2.

Functions applied to atomic attributes for feature construction.

| Function | Formula | Prefix/Suffix |
|---|---|---|
| Theoretical value | Defined using the weight percentage and the atomic weight of each element | th_ |
| Mean | | _mean |
| Range | | _range |
| Occupation fraction | Defined using the total number of valence electrons of each element | _frac |
| Mismatch | | _mismatch |
| Difference | | _diff |
| Maximum | | _max |
| Minimum | | _min |
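To illustrate how the suffixes in Table A2 map onto computed features, the following minimal Python sketch derives the `_mean`, `_range`, `_max`, and `_min` statistics of one elemental property for a single composition. It is a sketch under stated assumptions, not the implementation used in this study: the helper name `composition_stats` is hypothetical, and the mean is assumed to be weighted by atomic fraction, which the table does not confirm. The theoretical value, occupation fraction, mismatch, and difference functions are left out because their formulas are not reproduced here.

```python
from typing import Dict

# Standard atomic weights (g/mol) of the three elements appearing in Table A1.
ATOMIC_WEIGHT = {"Si": 28.085, "Ge": 72.630, "Te": 127.60}


def composition_stats(composition: Dict[str, float],
                      prop: Dict[str, float],
                      name: str) -> Dict[str, float]:
    """Return the _mean, _range, _max and _min features for one property.

    `composition` maps element symbols to atomic percentages; the values are
    normalized to fractions, so any positive scale is accepted.
    ASSUMPTION: the mean is weighted by atomic fraction.
    """
    total = sum(composition.values())
    if total <= 0:
        raise ValueError("composition must contain positive amounts")
    # Keep only the elements that are actually present.
    fractions = {el: amt / total for el, amt in composition.items() if amt > 0}
    values = [prop[el] for el in fractions]
    mean = sum(frac * prop[el] for el, frac in fractions.items())
    return {
        f"{name}_mean": mean,
        f"{name}_range": max(values) - min(values),
        f"{name}_max": max(values),
        f"{name}_min": min(values),
    }


# First composition listed in Table A1 (at.%): Si 22.5, Ge 62.5, Te 15.0.
features = composition_stats({"Si": 22.5, "Ge": 62.5, "Te": 15.0},
                             ATOMIC_WEIGHT, "atomic_weight")
for key, value in features.items():
    print(f"{key}: {value:.3f}")
```

The returned keys follow the naming pattern of the CATR features listed above (for example, `atomic_weight_mean` and `atomic_weight_max`), so the same helper could be applied to any other elemental property supplied as a dictionary.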

References

1. Zhang, X.-H.; Adam, J.-L.; Bureau, B. Chalcogenide Glasses. In Springer Handbook of Glass; Musgraves, J.D., Hu, J., Calvez, L., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 525–552. ISBN 9783319937267.
2. Ikemoto, H.; Miyanaga, T. Local Structure of Amorphous Tellurium Studied by EXAFS. J. Synchrotron Rad. 2014, 21, 409–412.
3. Shelby, J.E. Introduction to Glass Science and Technology, 2nd ed.; RSC Advancing the Chemical Sciences; Royal Society of Chemistry: Cambridge, UK, 2005; pp. 203–205. ISBN 9780854046393.
4. Einabadi, E.; Mashkoori, M. Predicting Refractive Index of Inorganic Compounds Using Machine Learning. Sci. Rep. 2024, 14, 24204.
5. Liu, H.; Fu, Z.; Yang, K.; Xu, X.; Bauchy, M. Machine Learning for Glass Science and Engineering: A Review. J. Non-Cryst. Solids X 2019, 4, 100036.
6. Mastelini, S.M.; Cassar, D.R.; Alcobaça, E.; Botari, T.; de Carvalho, A.C.P.L.F.; Zanotto, E.D. Machine Learning Unveils Composition-Property Relationships in Chalcogenide Glasses. Acta Mater. 2022, 240, 118302.
7. Singla, S.; Mannan, S.; Zaki, M.; Krishnan, N.M.A. Accelerated Design of Chalcogenide Glasses through Interpretable Machine Learning for Composition–Property Relationships. J. Phys. Mater. 2023, 6, 024003.
8. SciGlass/README.Md at Master. Epam/SciGlass. Available online: https://github.com/epam/SciGlass/blob/master/README.md (accessed on 3 June 2024).
9. LinearRegression. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LinearRegression.html (accessed on 15 February 2025).
10. Mihai, C.; Sava, F.; Simandan, I.D.; Galca, A.C.; Burducea, I.; Becherescu, N.; Velea, A. Structural and Optical Properties of Amorphous Si–Ge–Te Thin Films Prepared by Combinatorial Sputtering. Sci. Rep. 2021, 11, 11755.
11. Welcome to the SHAP Documentation—SHAP Latest Documentation. Available online: https://shap.readthedocs.io/en/latest/ (accessed on 1 March 2025).
12. Krishnan, N.M.A.; Kodamana, H.; Bhattoo, R. Interpretable Machine Learning. In Machine Learning for Materials Discovery; Springer International Publishing: Cham, Switzerland, 2024; pp. 159–171. ISBN 9783031446214.
13. Kakiuchida, H.; Sekiya, E.H.; Shimodaira, N.; Saito, K.; Ikushima, A.J. Refractive Index and Density Changes in Silica Glass by Halogen Doping. J. Non-Cryst. Solids 2007, 353, 568–572.
14. Wang, T.; Wei, W.H.; Shen, X.; Wang, R.P.; Davies, B.L.; Jackson, I. Elastic Transition Thresholds in Ge–As(Sb)–Se Glasses. J. Phys. D Appl. Phys. 2013, 46, 165302.
15. Dory, J.-B.; Castro-Chavarria, C.; Verdy, A.; Jager, J.-B.; Bernard, M.; Sabbione, C.; Tessaire, M.; Fédéli, J.-M.; Coillet, A.; Cluzel, B.; et al. Ge–Sb–S–Se–Te Amorphous Chalcogenide Thin Films towards on-Chip Nonlinear Photonic Devices. Sci. Rep. 2020, 10, 11894.
16. Wang, Y.; Qi, S.; Yang, Z.; Wang, R.; Yang, A.; Lucas, P. Composition Dependences of Refractive Index and Thermo-Optic Coefficient in Ge-As-Se Chalcogenide Glasses. J. Non-Cryst. Solids 2017, 459, 88–93.
17. Wen-Hou, W.; Liang, F.; Zhi-Yong, Y.; Xiang, S. Effect of Sb on Structure and Physical Properties of GeSbxSe7−x Chalcogenide Glasses. J. Inorg. Mater. 2014, 29, 1218.
18. Moss, T.S. A Relationship between the Refractive Index and the Infra-Red Threshold of Sensitivity for Photoconductors. Proc. Phys. Soc. B 1950, 63, 167–176.
19. Ticha, H.; Tichy, L. Remark on the Correlation between the Refractive Index and the Optical Band Gap in Some Crystalline Solids. Mater. Chem. Phys. 2023, 293, 126949.
20. Tanaka, K.; Shimakawa, K. Amorphous Chalcogenide Semiconductors and Related Materials; Springer: New York, NY, USA, 2011; ISBN 9781441995094.
21. Choi, J.W.; Han, Z.; Sohn, B.-U.; Chen, G.F.R.; Smith, C.; Kimerling, L.C.; Richardson, K.A.; Agarwal, A.M.; Tan, D.T.H. Nonlinear Characterization of GeSbS Chalcogenide Glass Waveguides. Sci. Rep. 2016, 6, 39234.
22. Petit, L.; Carlie, N.; Humeau, A.; Boudebs, G.; Jain, H.; Miller, A.C.; Richardson, K. Correlation between the Nonlinear Refractive Index and Structure of Germanium-Based Chalcogenide Glasses. Mater. Res. Bull. 2007, 42, 2107–2116.
23. Tostanoski, N.J.; Heilweil, E.J.; Wachtel, P.F.; Musgraves, J.D.; Sundaram, S.K. Structure-Terahertz Property Relationship and Femtosecond Laser Irradiation Effects in Chalcogenide Glasses. J. Non-Cryst. Solids 2023, 600, 122020.
24. Borisova, Z.U. Glassy Semiconductors; Springer: Boston, MA, USA, 1981; ISBN 9781475708530.
25. Petit, L.; Carlie, N.; Adamietz, F.; Couzi, M.; Rodriguez, V.; Richardson, K.C. Correlation between Physical, Optical and Structural Properties of Sulfide Glasses in the System Ge–Sb–S. Mater. Chem. Phys. 2006, 97, 64–70.
26. Ticha, H.; Tichy, L. Semiempirical relation between non-linear susceptibility (refractive index), linear refractive index and optical gap and its application to amorphous chalcogenides. J. Optoelectron. Adv. Mater. 2002, 4, 381–386.
27. Kumar, P.; Tripathi, S.K.; Sharma, I. Effect of Bi Addition on the Physical and Optical Properties of Ge20Te74−xSb6Bix (x = 2, 4, 6, 8, 10) Thin Films Deposited via Thermal Evaporation. J. Alloys Compd. 2018, 755, 108–113.
28. Pauling, L. The Nature of the Chemical Bond, 2nd ed.; Cornell University Press: Ithaca, NY, USA, 1939.
29. Phillips, J.C. Excitonic Instabilities and Bond Theory of III-VI Sandwich Semiconductors. Phys. Rev. 1969, 188, 1225–1228.
30. Aniya, M.; Shinkawa, T. A Model for the Fragility of Metallic Glass Forming Liquids. Mater. Trans. 2007, 48, 1793–1796.
31. Data—Mendeleev 0.20.1 Documentation. Available online: https://mendeleev.readthedocs.io/en/stable/data.html (accessed on 1 August 2023).
32. Ward, L.; Agrawal, A.; Choudhary, A.; Wolverton, C. A General-Purpose Machine Learning Framework for Predicting Properties of Inorganic Materials. npj Comput. Mater. 2016, 2, 16028.
33. Singh, A.K.; Kumar, N.; Dwivedi, A.; Subramaniam, A. A Geometrical Parameter for the Formation of Disordered Solid Solutions in Multi-Component Alloys. Intermetallics 2014, 53, 112–119.
34. Wang, Z.; Huang, Y.; Yang, Y.; Wang, J.; Liu, C.T. Atomic-Size Effect and Solid Solubility of Multicomponent Alloys. Scr. Mater. 2015, 94, 28–31.
35. Bharath, K.; Chelvane, J.A.; Kumawat, M.K.; Nandy, T.K.; Majumdar, B. Theoretical Prediction and Experimental Evaluation of Glass Forming Ability, Density and Equilibrium Point of Ta Based Bulk Metallic Glass Alloys. J. Non-Cryst. Solids 2019, 512, 174–183.
36. Inoue, A. Stabilization of Metallic Supercooled Liquid and Bulk Amorphous Alloys. Acta Mater. 2000, 48, 279–306.
37. Fang, S.; Xiao, X.; Xia, L.; Li, W.; Dong, Y. Relationship between the Widths of Supercooled Liquid Regions and Bond Parameters of Mg-Based Bulk Metallic Glasses. J. Non-Cryst. Solids 2003, 321, 120–125.
38. Mansoori, G.A.; Carnahan, N.F.; Starling, K.E.; Leland, T.W. Equilibrium Thermodynamic Properties of the Mixture of Hard Spheres. J. Chem. Phys. 1971, 54, 1523–1525.
39. Takeuchi, A.; Inoue, A. Calculations of Mixing Enthalpy and Mismatch Entropy for Ternary Amorphous Alloys. Mater. Trans. JIM 2000, 41, 1372–1378.
40. Haynes, W.M. (Ed.) CRC Handbook of Chemistry and Physics: A Ready-Reference Book of Chemical and Physical Data, 97th ed.; CRC Press: Boca Raton, FL, USA; London, UK; New York, NY, USA, 2017; ISBN 9781498754293.
41. Linstrom, P. NIST Chemistry WebBook, NIST Standard Reference Database 69; The National Institute of Standards and Technology: Gaithersburg, MD, USA, 1997.
42. Tandon, H.; Chakraborty, T.; Suhag, V. A New Scale of the Electrophilicity Index Invoking the Force Concept and Its Application in Computing the Internuclear Bond Distance. J. Struct. Chem. 2019, 60, 1725–1734.
43. Belciu, M.-I.; Velea, A. Prediction of glass formation ability in chalcogenides using machine learning algorithms. Adv. Sci. 2025; submitted.
44. Mutual_info_regression. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.feature_selection.mutual_info_regression.html (accessed on 15 May 2024).
45. Kraskov, A.; Stögbauer, H.; Grassberger, P. Estimating Mutual Information. Phys. Rev. E 2004, 69, 066138.
46. RFE. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.feature_selection.RFE.html (accessed on 15 November 2024).
47. RandomForestRegressor. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestRegressor.html (accessed on 15 November 2024).
48. GradientBoostingRegressor. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.GradientBoostingRegressor.html (accessed on 15 November 2024).
49. AdaBoostRegressor. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.AdaBoostRegressor.html (accessed on 15 November 2024).
50. ExtraTreesRegressor. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.ExtraTreesRegressor.html (accessed on 15 November 2024).
51. HistGradientBoostingRegressor. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.HistGradientBoostingRegressor.html (accessed on 15 November 2024).
52. Lightgbm.LGBMRegressor—LightGBM 4.6.0.99 Documentation. Available online: https://lightgbm.readthedocs.io/en/latest/pythonapi/lightgbm.LGBMRegressor.html (accessed on 15 November 2024).
53. CatBoostRegressor. Available online: https://catboost.ai/docs/en/concepts/python-reference_catboostregressor (accessed on 15 November 2024).
54. XGBoost Python Package—Xgboost 3.0.0 Documentation. Available online: https://xgboost.readthedocs.io/en/stable/python/index.html (accessed on 15 November 2024).
55. Krishnan, N.M.A.; Kodamana, H.; Bhattoo, R. Non-Parametric Methods for Regression. In Machine Learning for Materials Discovery; Springer International Publishing: Cham, Switzerland, 2024; pp. 85–112. ISBN 9783031446214.
56. Molnar, C. Interpretable Machine Learning: A Guide for Making Black Box Models Explainable; Leanpub: Victoria, BC, Canada, 2020; ISBN 9780244768522.
57. Géron, A. Hands-on Machine Learning with Scikit-Learn, Keras, and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems, 3rd ed.; Data Science/Machine Learning; O’Reilly: Sebastopol, CA, USA, 2023; ISBN 9781098125974.
58. Geurts, P.; Ernst, D.; Wehenkel, L. Extremely Randomized Trees. Mach. Learn. 2006, 63, 3–42.
59. Comparing Random Forests and Histogram Gradient Boosting Models. Available online: https://scikit-learn.org/stable/auto_examples/ensemble/plot_forest_hist_grad_boosting_comparison.html (accessed on 1 March 2025).
60. Gradient Boosting Variants—Sklearn vs. XGBoost vs. LightGBM vs. CatBoost. Available online: https://datamapu.com/posts/classical_ml/gradient_boosting_variants/ (accessed on 1 March 2025).
61. Wade, C.J. Hands-on Gradient Boosting with XGBoost and Scikit-Learn: Perform Accessible Machine Learning and Extreme Gradient Boosting with Python; Packt: Birmingham, UK, 2020; ISBN 9781839218354.
62. van Wyk, A. Machine Learning with LightGBM and Python: A Practitioner’s Guide to Developing Production-Ready Machine Learning Systems, 1st ed.; Packt Publishing: Birmingham, UK, 2023; ISBN 9781800564749.
63. Optuna—A Hyperparameter Optimization Framework. Available online: https://optuna.org/ (accessed on 1 February 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).