Abstract

Chemical synthesis is a state-of-the-art practice that is generally based on the chemical intuition or experience of researchers. An upgraded paradigm that incorporates automation technology and machine learning (ML) algorithms has recently been merged into almost every subdiscipline of chemical science, from material discovery to catalyst/reaction design to synthetic route planning, often in the form of unmanned systems. The ML algorithms and their application scenarios in unmanned systems for chemical synthesis are presented. Prospects for strengthening the connection between reaction pathway exploration and existing automatic reaction platforms, together with solutions for improving automation through information extraction, robots, computer vision, and intelligent scheduling, are proposed.

1. Introduction

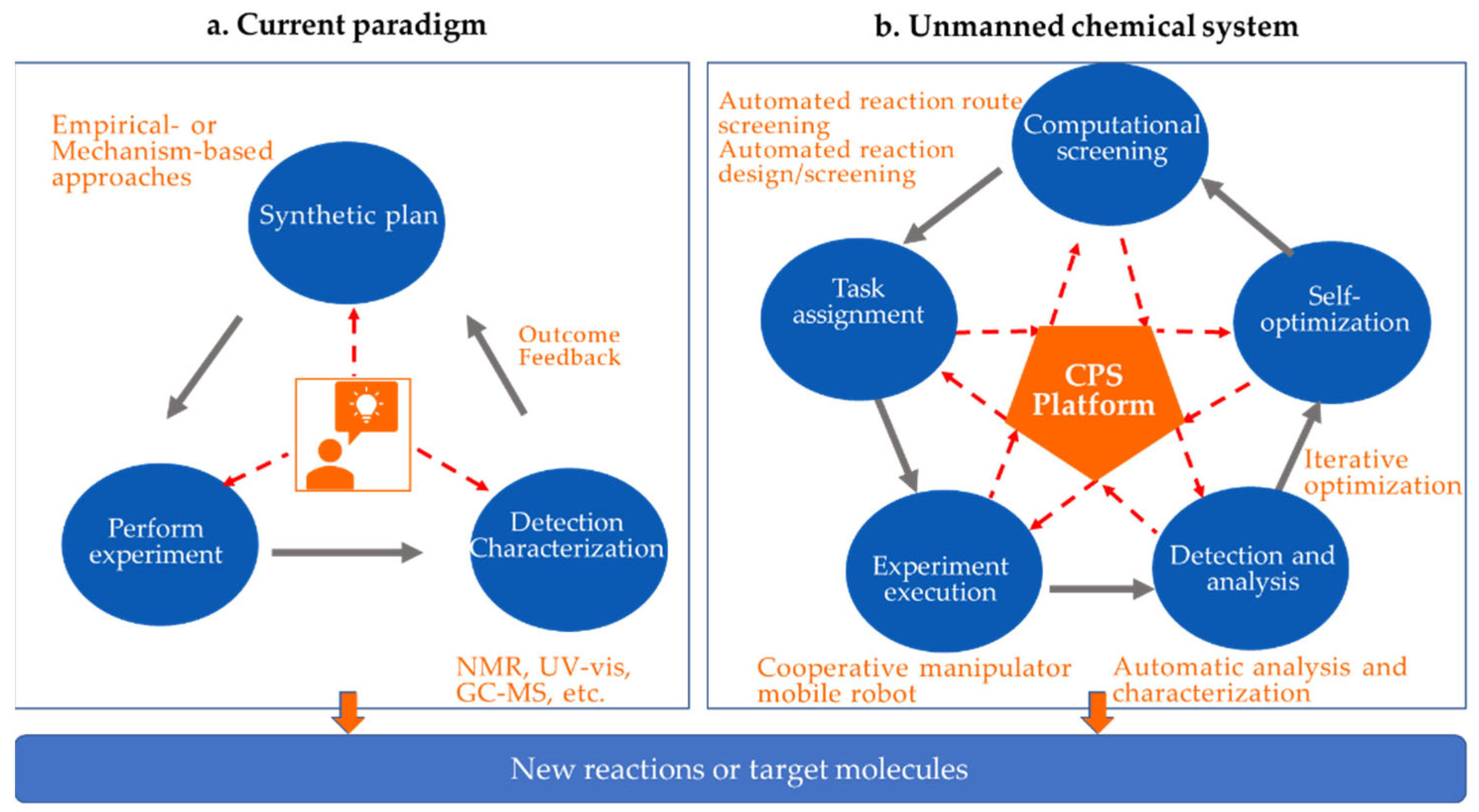

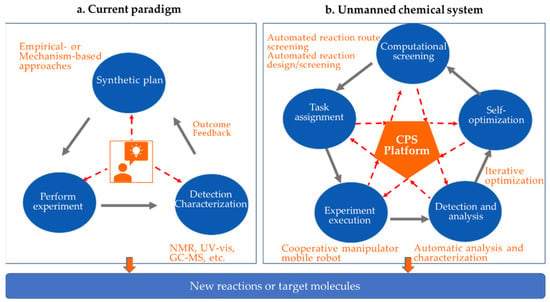

As the core of chemical science, synthetic chemistry refers to the creation of new molecular structures (or new substances) with specific functions via one or a series of physical or chemical operations in combination with certain characterization and analysis techniques. It is closely related to energy, the environment, and human health. However, creation of a target molecule often requires professional researchers and highly intensive experimental attempts. This process includes: (1) generating a synthesis plan based on personal chemical intuition from mechanistic understanding or experience; (2) executing synthetic experiments; and (3) analyzing the reaction outcome (e.g., characterization of the structure and properties of the product, yield, and selectivity) (Figure 1a). In this process, high-dimensional parameter spaces (e.g., reactant, catalyst, and solvent) need to be considered, which requires experimenters to explore several combinations of conditions. This research paradigm not only leads to high labor and material costs but also physically exhausts researchers through long working hours and repetitive work, which might further cause reproducibility and experimental safety problems. It is our dream to build a smart unmanned system that consists of virtual screening, task assignment, automatic experimentation (synthesis and characterization), data feedback, and self-optimization. Such a closed-loop system is driven by machine learning (Figure 1b) through a cyber-physical system (CPS) platform.

Figure 1.

Schematic comparison of chemical synthesis paradigms. (a) The current paradigm; (b) reaction discovery or molecule synthesis with unmanned chemical systems integrated by the CPS (cyber-physical system) platform.

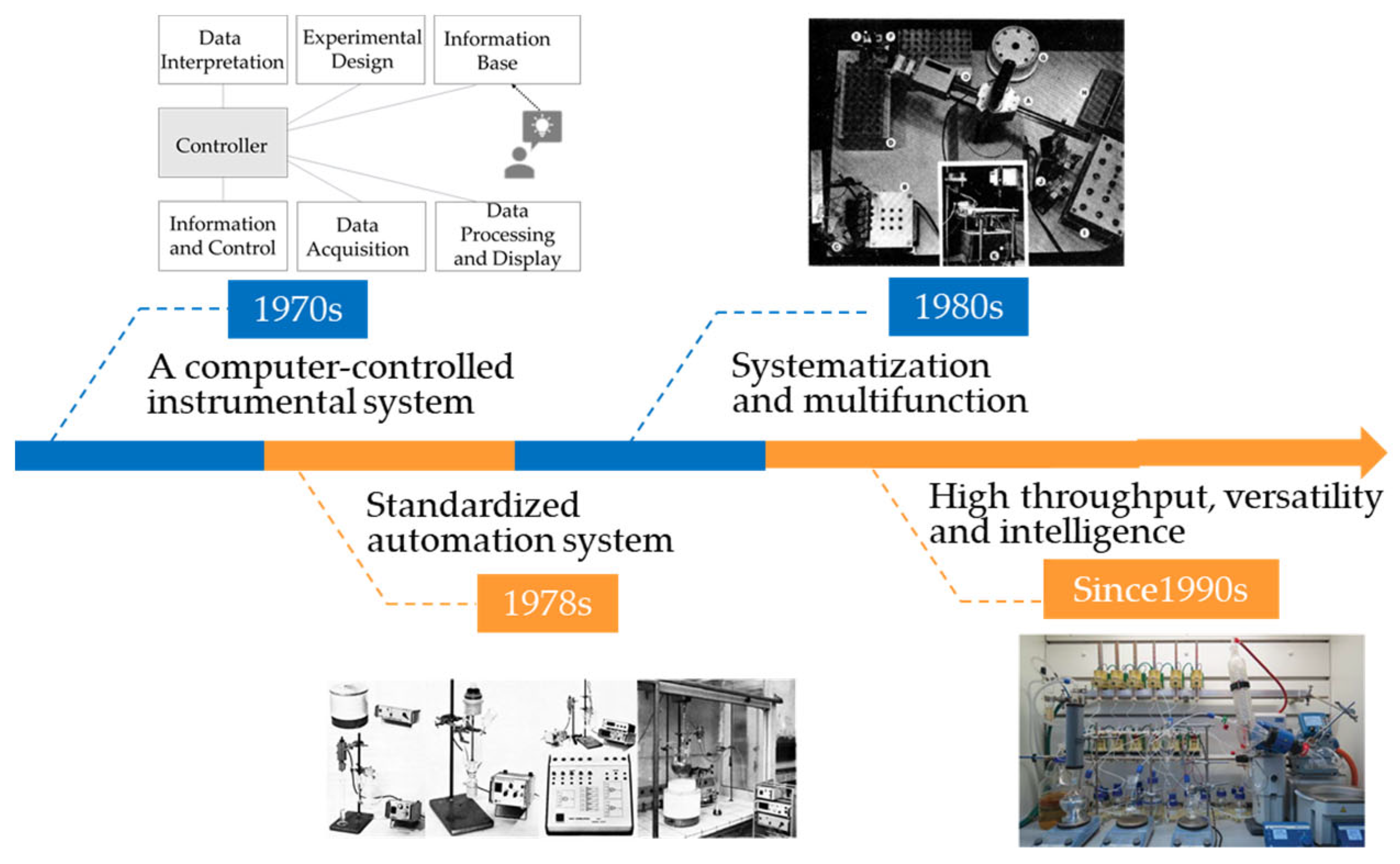

To liberate experimenters from these routine tasks, many endeavors have been made to promote the automation and intelligence of automatic chemical systems. The first automated chemical experiment system appeared around the 1970s (Figure 2). Computers were introduced to control delivery of chemicals; e.g., Deming et al. developed a computer-program-controlled automatic synthesis system that dispenses chemicals by controlling an injection pump and realizes automatic synthesis and characterization of specific products in combination with a UV–vis spectrophotometer [1]. Later, Legard and Foucard developed a general automatic toolkit, Logilap, which can select and match different components according to the requirements of the experiment [2]. With the development of science and technology, the degree of automation of synthetic laboratories has increased rapidly, so that they can deal with many different types of chemicals and reactions. Frisbee et al. designed an automatic experimental system with multiple reactors and mechanical arms that carried out experiments in parallel. The system could not only perform synthetic operations, such as quenching, extraction, and filtration, but was also equipped with an automatic cleaning machine [3], which further improved the degree of automation.

Figure 2.

Development of automated chemical synthesis platforms. Picture for the 1970s adapted with permission from Ref. [1]. Copyright 1971, American Chemical Society. Picture for 1978 adapted with permission from Ref. [2]. Copyright 1978, American Chemical Society. Picture for the 1980s adapted with permission from Ref. [3]. Copyright 1984, American Chemical Society. Picture for the period since the 1990s adapted with permission from Ref. [4]. Copyright 2018, The American Association for the Advancement of Science.

With rapid development of the automation laboratory, development of autonomous discovery systems with the ability to think independently is arousing widespread interest [4]. At this time, machine learning has stepped onto the stage in the field of chemistry. Here, applications of machine learning in the field of unmanned chemical systems are introduced, with a focus on the prospect of smart reaction design and synthesis systems.

2. Applications of Machine Learning to Unmanned Chemical Systems

2.1. Categories of Machine Learning Models

By building mathematical models, machine learning (ML) can learn related tasks from data. Recently, ML has been widely used in medicine, chemistry, computer vision, and many other fields [5,6,7]. Machine learning is a branch of artificial intelligence that learns from a training set. As the amount of training data increases, the performance of an ML model gradually improves, and the model can then predict the output for related problems. The model, the strategy, and the algorithm are described as the three elements of ML. The model describes the problem to be solved by mapping the relationship between input space and output space; examples include linear models, non-linear models, and regression models. The strategy is the learning criterion used to select the optimal model; e.g., regression can use mean square error (MSE) as the learning strategy. Here, the loss function is introduced, which measures the error between the predicted and true results. The algorithm is the specific calculation method by which the model learns, i.e., the method that solves the optimization problem. Common optimization algorithms include gradient descent, Newton’s method, and quasi-Newton methods. Then, to adjust the model and prevent overfitting, the optimal hyperparameters of the model are selected by using the validation set, and the generalization ability of the model is evaluated by using the test set.
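The three elements and the role of the validation and test sets can be illustrated with a minimal sketch. The synthetic data (y = 3x + 1 with small noise), the candidate learning rates, and all function names are assumptions made for illustration only; they are not taken from any cited work.

```python
import random

def predict(w, b, x):
    """Model: a linear map from input space to output space."""
    return w * x + b

def mse(w, b, data):
    """Strategy: mean square error between predicted and true outputs."""
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)

def fit(train, lr, epochs=200):
    """Algorithm: batch gradient descent on the MSE loss."""
    w, b = 0.0, 0.0
    n = len(train)
    for _ in range(epochs):
        grad_w = sum(2 * (predict(w, b, x) - y) * x for x, y in train) / n
        grad_b = sum(2 * (predict(w, b, x) - y) for x, y in train) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

rng = random.Random(0)
# Synthetic data (assumed): y = 3x + 1 plus small Gaussian noise.
xs = [rng.uniform(-1.0, 1.0) for _ in range(90)]
data = [(x, 3.0 * x + 1.0 + rng.gauss(0.0, 0.05)) for x in xs]
train, val, test = data[:60], data[60:75], data[75:]

# Hyperparameter (learning rate) selection on the validation set.
candidates = [0.001, 0.01, 0.1]
best_lr = min(candidates, key=lambda lr: mse(*fit(train, lr), val))

# Generalization is reported on the held-out test set only.
w, b = fit(train, best_lr)
test_error = mse(w, b, test)
```

The test set is touched once, after the learning rate has been fixed, so `test_error` is not biased by the hyperparameter search.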

In general, the field of machine learning can be divided into three categories: supervised learning, unsupervised learning, and reinforcement learning (Table 1).

Supervised learning [8], which requires training with labeled input and output data, aims to learn or approximate a mapping function that best describes the association patterns and relationships between input features and output values. Supervised learning methods can be further divided into two subdomains: classification, if the labeled outputs are discrete variables, and regression, if the labeled outputs are continuous variables. Once this function is well-trained and converged, it can be utilized to predict the output value for unseen input data. In supervised learning, some representative algorithms are support vector machine [9], naive Bayes [10], hidden Markov model [11], and Bayesian networks [12].

In contrast, unsupervised learning aims to infer the inherent structure of training data without any available labels [13]. According to how the data are processed and analyzed, unsupervised learning methods can be further divided into two categories: clustering, which classifies input data with similar features or properties into the same group; and dimensionality reduction, which reduces the dimension of input data into a relatively smaller set of features with minimum loss of information and performance. As for unsupervised learning, the representative methods are K-means and X-means [14], Gaussian mixture model, and Dirichlet process mixture model [15].
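As a hedged illustration of clustering, the following sketch implements plain K-means on a handful of made-up 2D points. The data, the function name `kmeans`, and the fixed iteration count are assumptions for illustration; X-means and mixture models are not shown.

```python
import random

def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans(points, k, iters=20, seed=0):
    """Plain K-means: alternate nearest-center assignment and center update."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)          # initialize from the data
    clusters = [[] for _ in range(k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: squared_distance(p, centers[j]))
            clusters[nearest].append(p)
        centers = [
            tuple(sum(col) / len(cluster) for col in zip(*cluster)) if cluster else centers[j]
            for j, cluster in enumerate(clusters)
        ]
    return centers, clusters

# Two well-separated groups of unlabeled points (made-up data).
points = [(0.0, 0.1), (0.2, 0.0), (-0.1, -0.2), (0.1, 0.2),
          (5.0, 5.1), (5.2, 4.9), (4.9, 5.0), (5.1, 4.8)]
centers, clusters = kmeans(points, k=2)
```

No labels are supplied at any point; the grouping emerges only from feature similarity.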

Reinforcement learning [16] aims to allow an autonomous active agent to learn its optimal behavior policy to complete a certain task by maximizing cumulative reward in a trial-and-error manner while interacting with an initially unknown environment. Classical algorithms, such as dynamic programming [17], Monte Carlo methods [18], and temporal-difference learning [19], have witnessed great success in Markov decision processes (MDPs) with discrete state and action space. Recently, with development and partnership of deep learning, deep reinforcement learning (DRL) is capable of addressing high-dimensional problems ranging from video games [20,21] and board games [22] to robotic control tasks [23,24].
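The trial-and-error loop can be sketched with tabular Q-learning, a temporal-difference method, on a toy five-state chain with a discrete state and action space. The environment, the hyperparameters, and all names are hypothetical and chosen only to keep the example small.

```python
import random

N_STATES, GOAL = 5, 4      # states 0..4; the only reward is at state 4
ACTIONS = (-1, +1)         # move left / move right

def step(state, action):
    """Toy environment: deterministic chain with a terminal goal state."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL

def q_learning(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            if rng.random() < epsilon:               # explore
                a = rng.randrange(2)
            else:                                    # exploit, random tie-break
                best = max(q[s])
                a = rng.choice([i for i in range(2) if q[s][i] == best])
            s2, r, done = step(s, ACTIONS[a])
            # Temporal-difference target: reward plus discounted best next value.
            target = r + (0.0 if done else gamma * max(q[s2]))
            q[s][a] += alpha * (target - q[s][a])
            s = s2
    return q

q_table = q_learning()
greedy_policy = [ACTIONS[max(range(2), key=lambda i: q_table[s][i])] for s in range(GOAL)]
```

After training, the greedy policy moves right in every non-terminal state, which is the behavior that maximizes the cumulative discounted reward in this toy task.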

Table 1.

Categories of machine learning models and related applications in chemical synthesis.

| Categories | Methods | Applications | Ref. |
|---|---|---|---|
| Supervised learning | Support vector machine, Multivariate linear regression (MLR), Decision trees, etc. | Reactivity prediction, Chemical reaction classification, Autonomous research system, etc. | [25,26,27,28,29,30,31,32,33,34,35] |
| Unsupervised learning | K-means and X-means, Gaussian mixture model, Dirichlet process mixture model | Information extraction, Molecular simulation | [36,37] |
| Reinforcement learning | Temporal difference, Q-learning, Deterministic policy gradient, etc. | Robotic control, Synthetic route planning, etc. | [23,24,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68] |
| Advanced learning | Deep learning, etc. | Natural language processing, Property prediction, Catalyst design, etc. | [69,70,71] |

In addition, numerous machine learning algorithms have been proposed in the past few decades. Here, we briefly introduce some advanced learning methods that may be either promising or often used in unmanned chemical robotic systems (Table 1) and that have been widely used in ML communities in combination with the aforementioned three typical ML methods. For example, deep learning has grown to be one of the most popular research trends in the field of machine learning. Owing to their deep architectures, deep learning methods can learn hierarchical representations end-to-end and achieve state-of-the-art performance in capturing the statistical patterns of input data [72]. Hence, they have attracted extensive attention from both the academic community and industry, especially in the fields of computer vision and natural language processing [73,74,75]. In the field of chemistry, a multilevel attention graph convolution neural network, called DeepMoleNet, has recently been applied to predict energies of hydroxyapatite nanoparticles (HANPs), quantum chemistry properties of organic molecules, and reaction energies of the nitrogen reduction reaction process in metal-zeolites [69,70,71].

Representation learning aims to extract and organize discriminative information from high-dimensional input features [76]. To be specific, representation learning aims at obtaining a reasonably sized learning representation that captures several high-dimensional input features to significantly improve computational efficiency and statistical efficiency [77].

Transfer learning is proposed to extract knowledge from source tasks that have been met before and apply the learned knowledge to an unseen target task, enabling domains, tasks, and data distribution to be different [78,79]. Recently, transfer learning has been successfully applied to many real-world applications, such as constructing informative priors, cross-domain text classification, and large-scale document classification [80,81,82].

Distributed learning aims to scale up learning algorithms to use massive data to learn within a reasonable time by allocating the training process among several workstations [83]. Different from the traditional learning framework that gathers data into a single workstation for central learning, distributed learning allows the learning process to be carried out in a distributed manner for managing a large amount of data [84].

Active learning attempts to deal with the situation where the labeled data are sparse and hard to obtain in real-world applications, aiming to select the most critical instances to complete labeling and training [85]. It has been verified that active learning can achieve higher classification accuracy using as few labeled data as possible via query strategies than traditional learning methods [85].
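A query strategy can be sketched with uncertainty sampling on a toy one-dimensional problem. The hidden labeling rule, the pool, the threshold classifier, and the budget of eight queries are all assumptions for illustration; real chemical applications would use richer models and features.

```python
import random

pool = [i / 100 for i in range(101)]

def oracle(x):
    """Hidden labeling rule (assumed); each call stands for one costly experiment."""
    return int(x >= 0.63)

def boundary(labeled):
    """Threshold classifier: midpoint between the closest opposite labels."""
    lo = max((x for x, y in labeled.items() if y == 0), default=0.0)
    hi = min((x for x, y in labeled.items() if y == 1), default=1.0)
    return (lo + hi) / 2

def accuracy(labeled):
    t = boundary(labeled)
    return sum(int(x >= t) == oracle(x) for x in pool) / len(pool)

def run(budget, strategy, seed=0):
    rng = random.Random(seed)
    labeled = {0.0: oracle(0.0), 1.0: oracle(1.0)}   # two seed labels
    for _ in range(budget):
        unlabeled = [x for x in pool if x not in labeled]
        if strategy == "uncertainty":
            # Query the instance closest to the current decision boundary.
            t = boundary(labeled)
            x = min(unlabeled, key=lambda u: abs(u - t))
        else:
            x = rng.choice(unlabeled)                # passive baseline
        labeled[x] = oracle(x)
    return accuracy(labeled)

active_acc = run(8, "uncertainty")
random_acc = run(8, "random")
```

With the same label budget, the uncertainty-driven learner homes in on the boundary, whereas the random baseline depends on where its labels happen to fall.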

2.2. Chemical Automation

Automation has emerged as a highly efficient strategy to conduct routine operations in chemistry, and recent advances in robotics and computing capability have greatly facilitated development of chemical automation. In material science, many functional materials or drugs are discovered serendipitously. However, due to the vastness of chemical space (estimated to be larger than 10^60), material discovery remains a great challenge [86]. The combination of ML and chemistry would narrow the gap between the practically infinite chemical space and finite synthetic capacity. The ML methods commonly used in chemistry include multivariate linear regression (MLR), decision trees, random forests (RF), gradient tree boosting, k-nearest neighbors, support vector machines (SVM), and deep learning.

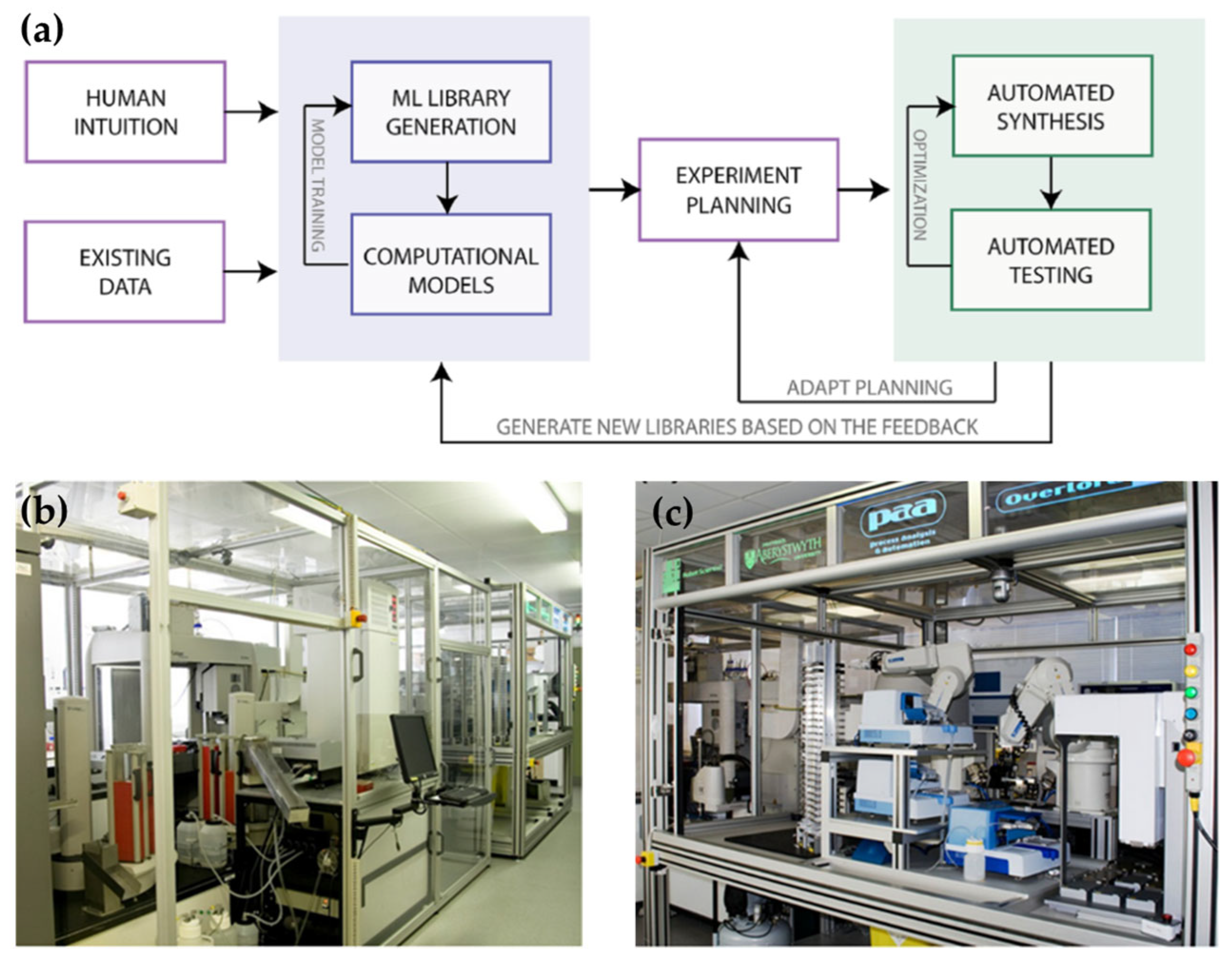

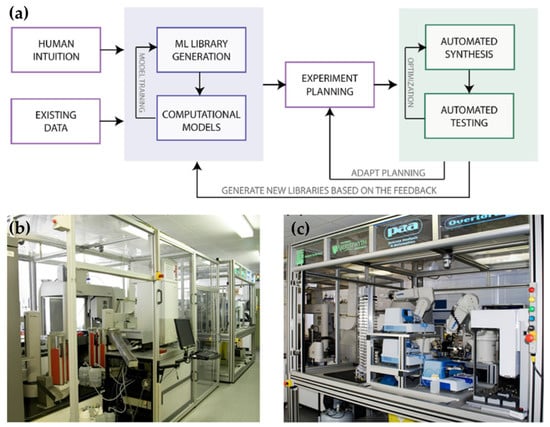

Introducing ML into unmanned chemical systems yields an autonomous discovery system, as shown in Figure 3 [25]. The general workflow of an autonomous discovery system includes generating a hypothesis, testing it, and adjusting it according to the feedback from automated experiments (Figure 3a). The emergence of autonomous discovery systems marks a new leap in chemical automation. The first autonomous discovery systems, such as Adam and Eve, were used in biomedicine, as shown in Figure 3b,c. Adam has sophisticated software and hardware that can also support microbiological experiments, and it can be fully automated to discover new scientific knowledge. Adam used decision trees and random forests to study the function of genes and enzymes [26]. Compared to Adam, Eve focuses on drug screening, and it is designed to be flexible enough to perform different bio-activity analyses [27].

Figure 3.

Unmanned chemical system. (a) Schematic illustration of an autonomous discovery system; (b) Adam’s laboratory robotic system; (c) Eve’s laboratory robotic system. Figure 3a is reprinted with permission from Ref. [25]. Copyright 2019, American Chemical Society. Figure 3b,c is adapted from Ref. [27] and used under CC BY 2.0.

For chemical synthesis, Steiner et al. developed a system called the Chemputer, which consists of a reaction flask, a jacketed filtration setup capable of being heated or cooled, an automated liquid–liquid separation module, and a solvent evaporation module. This equipment is capable of accomplishing four key stages of a synthetic process: reaction, processing, separation, and purification [4]. To further improve the degree of automation, Fitzpatrick et al. designed the LeyLab system, which can complete automatic synthesis and autonomously optimize the reaction parameters [28]. To improve the efficiency of design, Nikolaev et al. built an autonomous research system (ARES) that utilizes autonomous robotics, artificial intelligence, data sciences, and high-throughput and in situ techniques; ARES uses the random forest algorithm [29]. Further, Granda et al. proposed an organic synthesis robot that uses the SVM machine learning algorithm to perform chemical reactions and analyses and to predict the reactivity of possible reagent combinations. This approach allows the platform to run experiments without prior knowledge. The system was shown to predict the reactivity of 1000 combinations with greater than 80% accuracy after considering the results of slightly over 10% of the dataset [30].

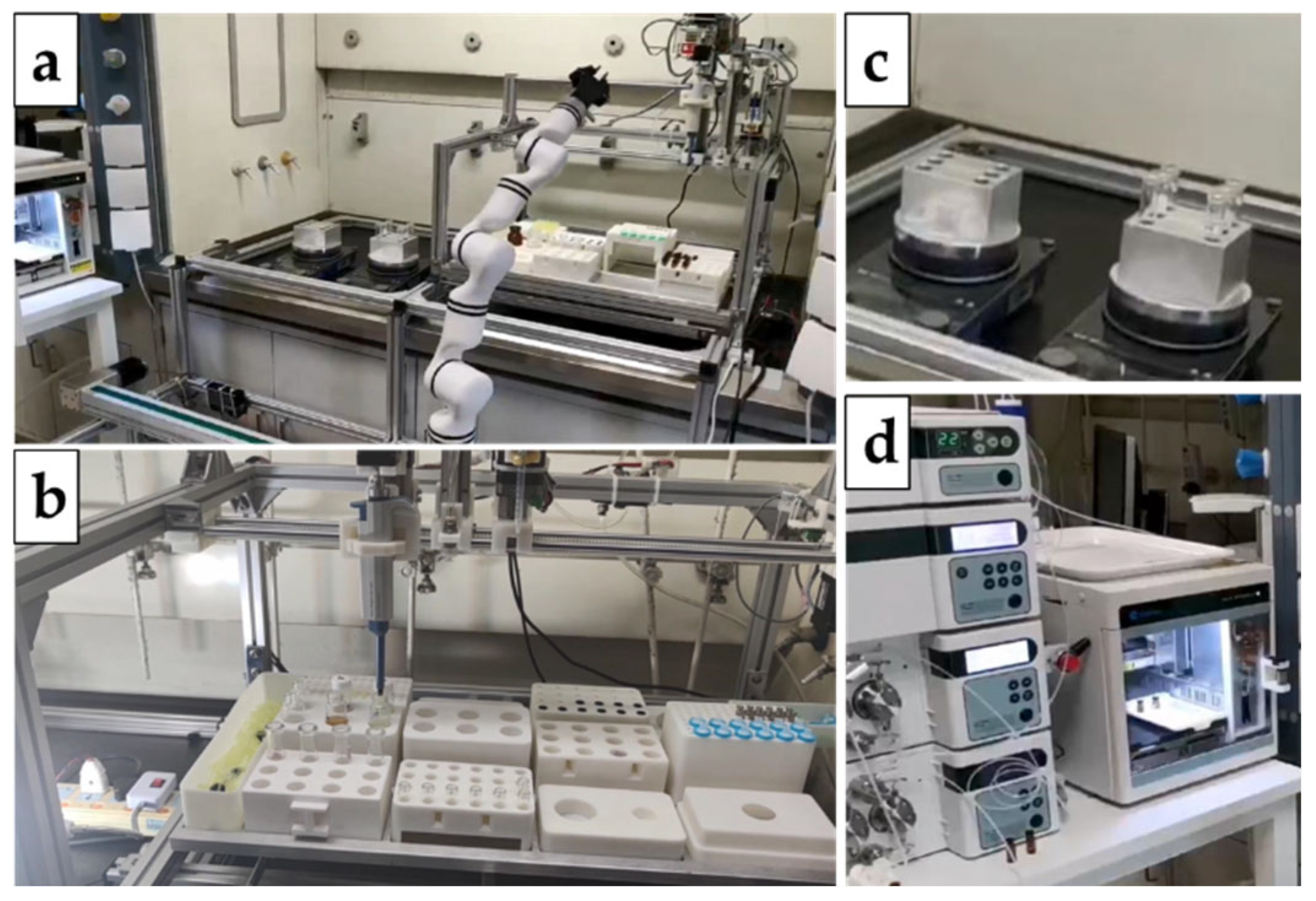

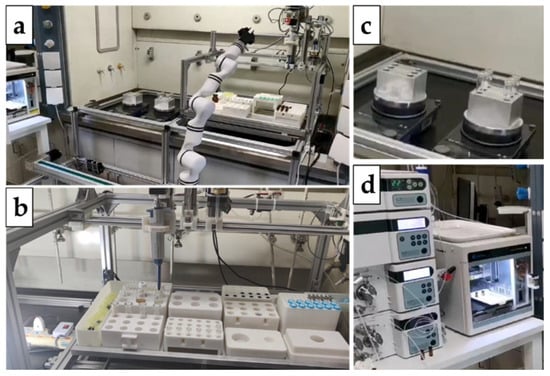

3. Implementation and Applications of Unmanned Chemical Systems

The goal is to design an intelligent, unmanned automatic chemical reaction machine with high precision and efficiency. Holistic solutions for machine learning algorithms have also been developed to drive the whole system to work autonomously and intelligently. As shown in Figure 4, the designed system consists of two cooperative robots, i.e., a wheeled mobile robot with a manipulator arm for material handling and a robotic arm on a guided rail for chemical manipulation, together with a reagent station and a reaction stage with a magnetic stirring reactor. The capabilities of this platform were demonstrated by its application to a condensation reaction of 2,4-dinitrophenylhydrazine with formaldehyde and to automatic catalyst evaluation of a heterogeneous aza Diels–Alder reaction, suggesting that the designed machine is applicable to both homogeneous and heterogeneous chemical reactions [87]. The goodness of fit in three robotic operation sets (R² = 0.99857, SD = 0.00174) shows that the repeatability of robotic operation is better than that of manual operation (R² = 0.98667, SD = 0.00443).
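For readers unfamiliar with the goodness-of-fit metric quoted above, the following sketch computes the coefficient of determination R² for an ordinary least-squares line. The two data series are invented for illustration and are not the measurements reported in Ref. [87]; the quantity being fitted there is not specified in this section.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares slope and intercept for one predictor."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def r_squared(xs, ys):
    """R^2 = 1 - SS_res / SS_tot for the least-squares line."""
    slope, intercept = linear_fit(xs, ys)
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot

# Invented example series: a tightly repeatable run and a noisier one.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
robot_like = [2.01, 3.99, 6.02, 7.98, 10.00]
manual_like = [2.2, 3.7, 6.3, 7.6, 10.3]

r2_robot_like = r_squared(xs, robot_like)
r2_manual_like = r_squared(xs, manual_like)
```

A value closer to 1 means that less of the variance is left unexplained by the fitted line, which is how the comparison between robotic and manual operation is read.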

Figure 4.

Illustration of an unmanned chemical system, where (a) is the robotic arm, (b) is the pipetting station, (c) is the reacting region, (d) is the characterization module (e.g., HPLC).

We introduce the potential solution from the chemical task allocation to the completion of the experimental task, which is divided into five stages or functionalities, i.e., information extraction, control of robots, computer vision, intelligent scheduling, and computational-based virtual screening.

3.1. Information Extraction

To expand the capabilities of unmanned laboratory systems, we try to make the machines automatically execute the synthetic task following the procedures that are described in patents or literature. To achieve this purpose, the relevant information describing synthetic procedures should be extracted and translated into machine-understandable language. Thus, we need to design automated conversion from unstructured experimental procedures into structured ones: a series of reaction steps with associated properties. Extracting information from text is a problem of text-mining in the field of natural language processing (NLP). Traditional rule-based methods may work if the information is described in a fixed format. However, the description of a synthetic procedure for chemical reactions is generally unstandardized, and, thus, it is unlikely to define rules covering every possible way. Therefore, development of machine-learning models learning from data instead of rules would be more robust to noise in text [88].

Keyword extraction is a branch of text mining that is mainly divided into two categories, i.e., unsupervised keyword extraction and supervised keyword extraction. Unsupervised keyword extraction does not require manual labeling. It selects candidate words from the text, scores them, and keeps the ones with high scores as keywords; the scoring strategies include keyword extraction based on statistical features, keyword extraction based on the lexicographic model, and keyword extraction based on the topic model. The key to the supervised keyword extraction method is to train a classifier, which classifies the candidate words extracted from the article and labels the selected candidates as keywords. To avoid the high labor costs of supervised methods, unsupervised keyword extraction is the mainstream approach. Deep learning is a specialized branch of machine learning, and one of its major strengths is its potential to extract higher-level features from the raw input data [89]. Further, sequence-to-sequence models are the most common models used in text mining nowadays, including the recurrent neural network (RNN), the long short-term memory (LSTM) network, and models based on the transformer [90] architecture, e.g., bidirectional encoder representations from transformers (BERT) [91].
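Unsupervised keyword extraction based on statistical features can be sketched with TF-IDF scoring. The three procedure-like sentences, the stop-word list, and the function names are made up for illustration; they do not come from the cited works.

```python
import math
import re
from collections import Counter

STOPWORDS = {"the", "a", "of", "to", "and", "was", "at", "for", "in", "with", "then"}

def tokenize(text):
    """Lowercase, keep alphabetic tokens, and drop stop words."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]

def tfidf_keywords(docs, index, top_k=3):
    """Score candidate words of docs[index] by term frequency x inverse document frequency."""
    tokenized = [tokenize(d) for d in docs]
    doc_freq = Counter(w for tokens in tokenized for w in set(tokens))
    term_freq = Counter(tokenized[index])
    total = len(tokenized[index])
    scores = {
        w: (count / total) * math.log(len(docs) / doc_freq[w])
        for w, count in term_freq.items()
    }
    # Highest score first; alphabetical order breaks ties deterministically.
    return sorted(scores, key=lambda w: (-scores[w], w))[:top_k]

docs = [
    "The aldehyde was added to the hydrazine solution and the hydrazine mixture was stirred at room temperature.",
    "The mixture was filtered and the solid was washed with ethanol.",
    "The catalyst was added to the solution and the mixture was heated.",
]
keywords = tfidf_keywords(docs, index=0)
```

Words that appear in every document, such as "mixture" here, receive a score of zero, whereas words that are frequent in one document and rare elsewhere rise to the top, all without any manual labels.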

We currently adopt the BERT model to address our issues, which uses a self-attention mechanism to allow access to all words in the text simultaneously for considering contextual information. The BERT model training process includes two stages: pre-training on unlabeled data and additional training on labeled data for a specific application problem [92]. That is, a classification layer can be added to the pre-trained model, and all the parameters are jointly fine-tuned on a downstream task.

Based on this model, we further divide our task into two subtasks: named entity recognition (NER) and relation extraction (RE). First, we extract all the entities, including the reaction-step, chemicals, solvent, reaction time, temperature, etc., by fine-tuning the pre-trained BERT model. Subsequently, we estimate the relations between pairs of these entities, e.g., time or temperature is the parameter of the specific reaction-step and quantity is the parameter of a chemical or solvent. In this way, we can finally obtain all the sequential steps with associated properties and the relations between them. According to these entities and relations, we map the corresponding reaction-steps to the operations that the machines in our unmanned chemical laboratory can perform to build a complete workflow. In this way, machines can automatically execute chemical reactions following the reported procedures, provided that all the necessary conditions and chemicals are available.
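The final mapping step can be sketched as follows, assuming that NER and RE have already produced entities and parameter relations for the sentence "Add 5 mL of ethanol, then stir for 30 min at 60 °C". The data classes, the entity kinds, and the `STEP_TO_OPERATION` table are hypothetical names introduced for illustration; the actual schema of the laboratory software is not described in this section.

```python
from dataclasses import dataclass, field

@dataclass
class Entity:
    uid: int
    kind: str      # e.g. "STEP", "SOLVENT", "QUANTITY", "TIME", "TEMPERATURE"
    text: str

@dataclass
class Operation:
    name: str
    params: dict = field(default_factory=dict)

# Hypothetical table linking reaction-step verbs to executable machine operations.
STEP_TO_OPERATION = {"add": "dispense", "stir": "stir", "heat": "heat", "filter": "filter"}

def collect_params(entity_id, children):
    """Gather parameters attached to an entity, following nested relations."""
    params = {}
    for child in children.get(entity_id, []):
        key = child.kind.lower()
        params[key] = child.text
        for sub_key, value in collect_params(child.uid, children).items():
            params[f"{key}_{sub_key}"] = value
    return params

def build_workflow(entities, relations):
    """relations: (head_id, tail_id) pairs meaning 'tail is a parameter of head'."""
    by_id = {e.uid: e for e in entities}
    children = {}
    for head, tail in relations:
        children.setdefault(head, []).append(by_id[tail])
    # Steps are emitted in text order, which preserves the sequence of the procedure.
    return [
        Operation(STEP_TO_OPERATION[e.text], collect_params(e.uid, children))
        for e in entities if e.kind == "STEP"
    ]

entities = [
    Entity(0, "STEP", "add"), Entity(1, "QUANTITY", "5 mL"), Entity(2, "SOLVENT", "ethanol"),
    Entity(3, "STEP", "stir"), Entity(4, "TIME", "30 min"), Entity(5, "TEMPERATURE", "60 °C"),
]
relations = [(0, 2), (2, 1), (3, 4), (3, 5)]
workflow = build_workflow(entities, relations)
```

In this sketch a step verb that is missing from the table raises a `KeyError`, which mirrors the condition that a procedure is only executable when every step maps to an available operation.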

3.2. Robotic Control for Unmanned Chemical Systems

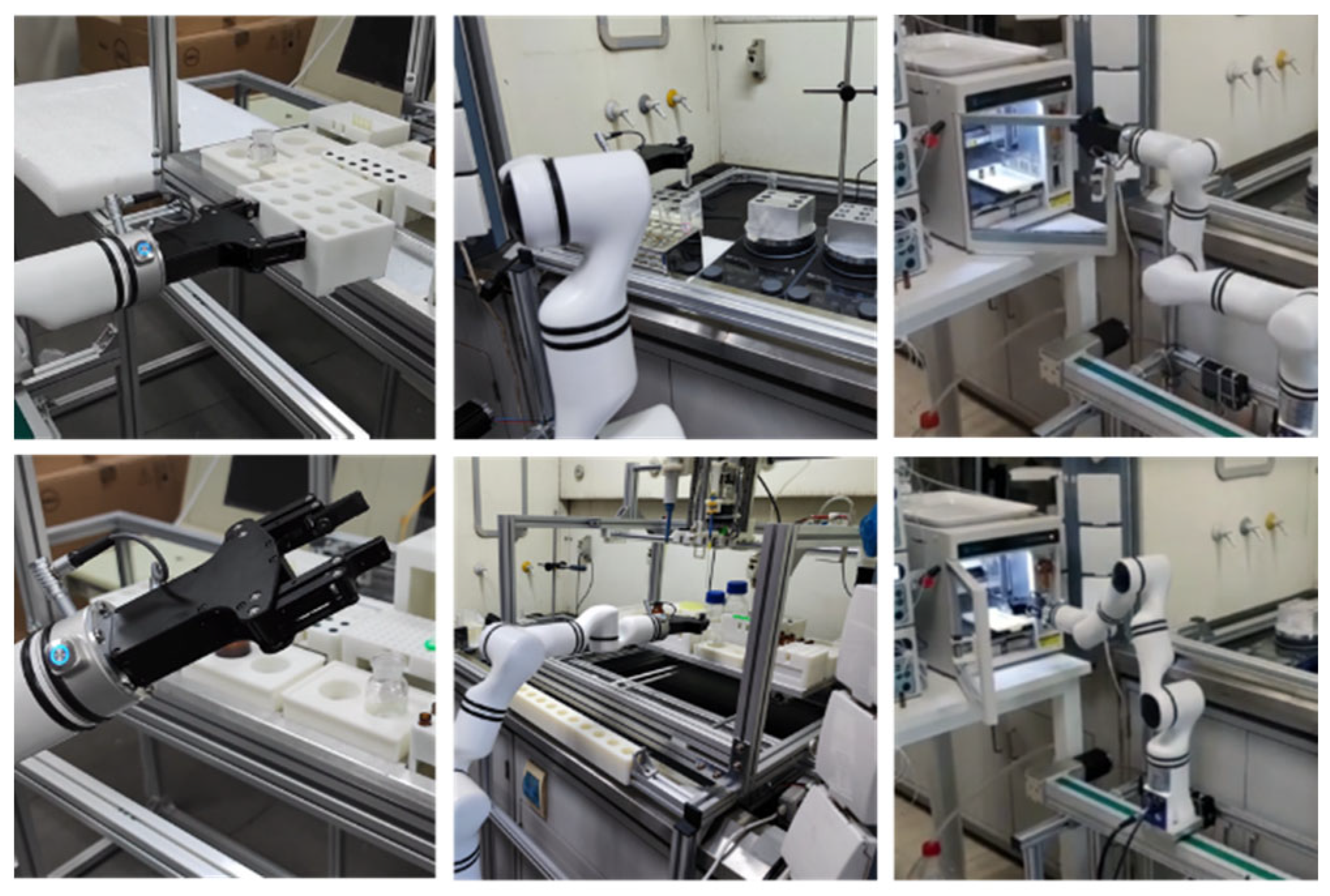

Control of Robotic Arm. The robotic arm is considered to be the most critical component in carrying out elaborate operations in unmanned chemical systems [93]. Typical operations of robotic arms include grasping chemical reaction vessels or sample vials and manipulating other instruments. These operations have been realized in one of our independently developed unmanned chemical laboratories, as shown in Figure 5.

Figure 5.

The robotic arm in our unmanned chemical laboratory carries out elaborate operations, such as grasping and transferring reaction tubes or sample vials and manipulating other instruments.

In the specific scenario of an unmanned chemical laboratory, the robotic arm is required to move the end-effector towards multiple target positions for further operations at minimum cost. To empower the robotic arm with the intelligence to generalize its manipulation abilities to these tasks, one may use the reinforcement learning (RL) [16] framework to learn the optimal behavior policy in a trial-and-error manner. In recent years, researchers have made a series of meaningful attempts to apply reinforcement learning and deep learning algorithms to robot arm planning. Amarjyot et al. [38] comprehensively analyzed and compared the task performance of reinforcement learning algorithms in robot arm grasping. Finn et al. [39] went directly from image input to targeted grasping using multiple long short-term memory networks. Song et al. [40] achieved a lower training burden and more efficient path planning through a carefully constructed value function. Devin et al. [41] used the attention mechanism to let the robot arm predict the trajectories of external moving objects, thus improving obstacle avoidance in dynamic scenes. Tuomas et al. [42] used the soft Q-learning algorithm to control a series of complex manipulations, such as building a log tower, and demonstrated the advantages of the maximum entropy strategy in continuous control of the manipulator through systematic comparative experiments.

However, the reward sparsity in goal-conditioned tasks of robotic arm control can cause traditional RL algorithms to fail. For example, in a typical robot grasping scene, the target is an object randomly placed in the workspace. In such a scenario, it is difficult to define the reward function: if the distance between the end-effector and the target is simply applied, the goal of avoiding obstacles cannot be expressed. Moreover, computing an accurate distance presupposes a clear model of the environment, obstacles, and targets, in which case applying reinforcement learning is no longer meaningful. If, instead, a simple 0–1 variable is used as the reward function, most episodes bring no change in reward, and the learning agent will not receive any reward signal until it eventually achieves the desired goal, resulting in extremely low learning efficiency [43].

Many endeavors have been made to improve the learning efficiency of reinforcement learning algorithms in the typical continuous control problem of motion planning for robotic arms, and a series of practical examples have been deployed on real robotic arms [44,45,46,47], such as the deep deterministic policy gradient proposed by Lillicrap et al. [44] and the soft actor-critic proposed by Haarnoja et al. [45]. Outstanding achievements have been made in improving the accuracy of state and environment evaluation. The applicability of these algorithms to robot control has also been widely verified on real machines across a variety of complex tasks, such as pose reaching, handling, grasping, and solving a Rubik’s Cube [48].

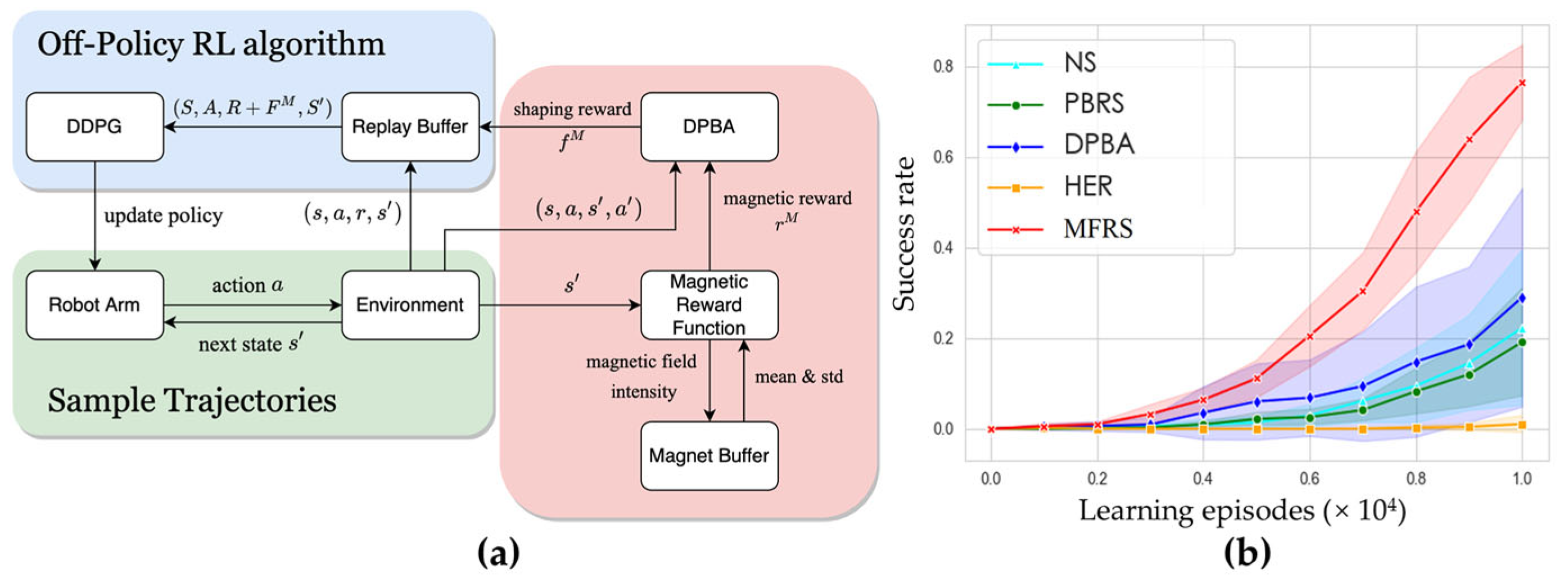

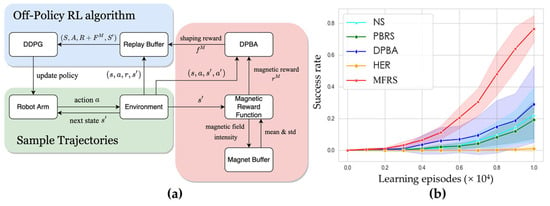

In our unmanned chemical laboratory system, to address the sample inefficiency caused by sparse rewards, we proposed a magnetic-field-based reward shaping (MFRS) method for goal-conditioned RL tasks with multiple targets and obstacles. Reward shaping [49,50,51,52] is an effective method to incorporate human domain knowledge into the process of policy learning while maintaining optimal policy invariance. Existing methods generally use a distance metric to calculate the shaping reward and may fail to carry sufficient information about the learning task. As shown in Figure 6a, we utilize the properties of permanent magnets to establish the reward function in the field of the target and obstacles and concurrently learn a secondary potential function to convert the magnetic reward function into a form that satisfies optimal policy invariance. With the nonlinear and anisotropic distribution of the magnetic field intensity, the MFRS method is capable of providing a more informative and explicit optimization landscape than distance-based reward-shaping approaches. In Figure 6a, S is a batch of states, A is a batch of actions, S’ is a batch of next states, and R is the corresponding original rewards received from the environment; the corresponding shaping rewards are derived from DPBA based on magnetic rewards, and deep deterministic policy gradient (DDPG) [53] is a model-free actor–critic algorithm that can operate over continuous state and action spaces. Experimental results in simulated and real-world tasks both demonstrate effective robot manipulation ability and improved sample efficiency. Figure 6b illustrates the success rate in each learning episode for various methods in simulation environments, where we evaluate MFRS in comparison to four baseline methods.
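To make the contrast between a sparse reward and a shaped reward concrete, the sketch below implements the distance-based potential-based reward shaping baseline (PBRS), in which the shaping term is F = γΦ(s') − Φ(s). It is not the MFRS method itself: the magnetic-field potential is not specified in this section, and the discount factor, tolerance, and positions are assumed values.

```python
import math

GAMMA = 0.99   # discount factor (assumed value)

def sparse_reward(pos, goal, tol=0.05):
    """Original 0-1 reward: nonzero only when the end-effector reaches the goal."""
    return 1.0 if math.dist(pos, goal) <= tol else 0.0

def potential(pos, goal):
    """Distance-based potential: higher (less negative) closer to the goal."""
    return -math.dist(pos, goal)

def shaped_reward(pos, next_pos, goal):
    """Original reward plus the potential-based shaping term F(s, s')."""
    shaping = GAMMA * potential(next_pos, goal) - potential(pos, goal)
    return sparse_reward(next_pos, goal) + shaping

goal = (1.0, 0.0, 0.0)
start = (0.0, 0.0, 0.0)
toward = (0.1, 0.0, 0.0)     # a small step towards the goal
away = (-0.1, 0.0, 0.0)      # a small step away from the goal

sparse_toward = sparse_reward(toward, goal)
sparse_away = sparse_reward(away, goal)
shaped_toward = shaped_reward(start, toward, goal)
shaped_away = shaped_reward(start, away, goal)
```

Both steps receive the same zero sparse reward, whereas the shaped reward already distinguishes progress from regress far away from the goal. A purely distance-based potential carries no information about obstacles, which is the limitation noted above for existing methods.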

Figure 6.

A reinforcement learning method using magnetic-field-based reward shaping (MFRS) for robotic arm control. (a) Overview of MFRS for goal-conditioned RL tasks with multiple targets and obstacles. (b) Success rate per learning episode of MFRS with comparison to baselines trained in simulation environments. NS: training the policy with the original reward given by the environment without shaping. PBRS [49]: potential-based reward shaping, which adds a calculated shaping reward to the original reward, where the potential function is built on Euclidean distance. DPBA [52]: dynamic potential-based advice, which can transform any given reward function into the form of potential-based reward shaping to ensure the optimal policy invariance property. HER [54]: hindsight experience replay, which relabels the desired goals in the replay buffer with the achieved goals in the same trajectories. We adopt the default “final” strategy in HER that chooses the additional goals corresponding to the final state of the environment.

Control of Mobile Robots. Deep reinforcement learning (DRL) has great potential in complex decision-making and control problems, including robotic manipulation, legged locomotion, robotic navigation, and multi-agent games [55,56,57,58]. In the field of mobile robots, researchers use DRL to train robots to complete different tasks in the absence of accurate physical models. Currently, the commonly used DRL-based training methods include end-to-end learning from scratch with a given task reward and imitation learning with reference trajectory clips. Hwangbo et al. [24] successfully used the trust region policy optimization (TRPO) algorithm [59] to train a quadruped robot to complete different tasks, such as following high-level body velocity commands, running faster than ever before, and recovering from falling. Tsounis et al. [60] divided the motion of the quadruped robot into gait planning and gait control and used the proximal policy optimization (PPO) algorithm and model-based motion planning to train the quadruped robot to walk in an unstructured simulation environment. Fu et al. [61] used the PPO algorithm to train a hexapod robot to move in an uneven plum-blossom pile environment and used simplified kinematic and dynamic constraints to ensure rationality of the motion trajectory. The trained policy was verified in both simulation and real environments. Haarnoja et al. [62] proposed the soft actor-critic (SAC) algorithm based on the maximum entropy reinforcement learning paradigm and trained an under-actuated 8-DOF quadruped robot end-to-end to walk in the real world in about 2 h. Different from traditional reinforcement learning, imitation learning provides a reference trajectory to imitate, which makes the behavior of the agent more natural and uses prior trajectory information to accelerate the whole training process. Peng et al. [63] combined trajectory imitation with task objectives and used the paradigm of imitation learning to train legged robots to learn highly dynamic behavior from a wide range of example motion clips, and the trained policy can respond robustly to external disturbances. Using the paradigm of generative adversarial networks (GAN), generative adversarial imitation learning (GAIL) teaches agents to imitate target trajectory distributions. It solves the problems that traditional imitation learning can only imitate a single motion trajectory and suffers from accumulated trajectory errors. Peng et al. [64] proposed the adversarial motion priors (AMP) algorithm based on the paradigm of GAIL, which enables agents to learn different behavior styles by using unstructured motion clips. However, current DRL algorithms have low sample efficiency, which makes it difficult to complete training tasks directly in the real world. In fact, training requires many trial-and-error processes in a simulation environment, which can be faster, safer, and more informative. How to transfer the trained policy from simulation to reality to enhance the application value of DRL is a key issue, known as Sim2Real transfer, which has also attracted the attention of many researchers [65].

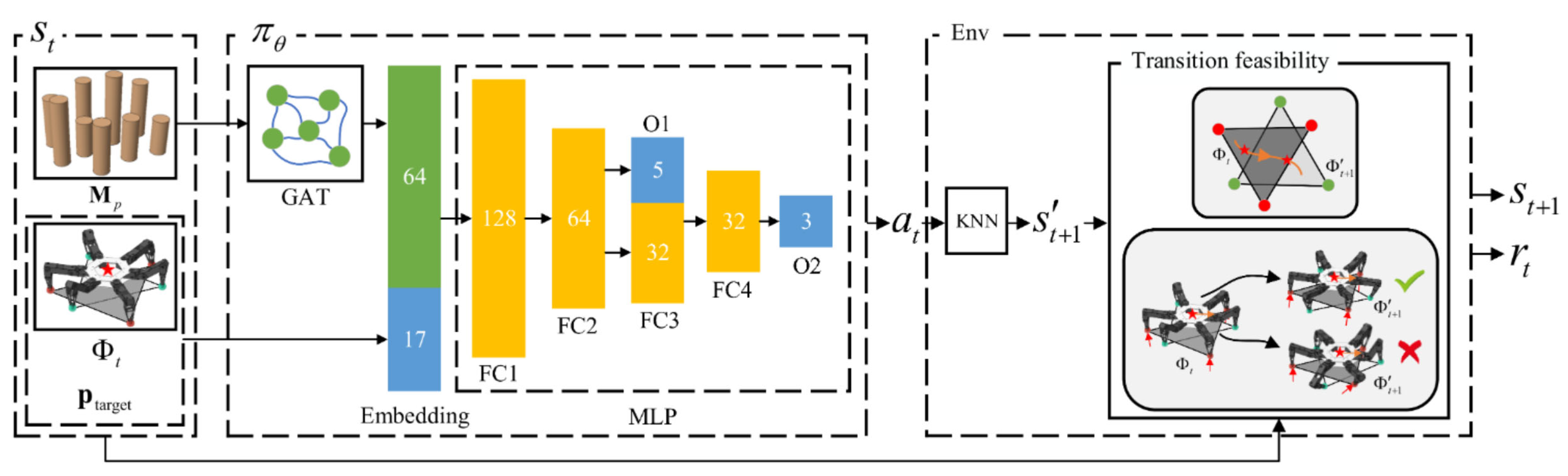

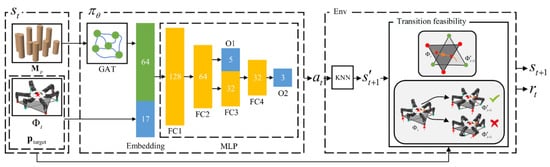

Compared with wheeled robots, legged robots can traverse challenging environments by planning sequences of landing points and therefore have broad application prospects [66,67]; however, this capability also makes their control more challenging. Hence, it is critical to solve the legged motion planning problem, and effective control methods for legged robots are also of great value for wheeled robots and robotic arms. For example, we propose a DRL-based multi-contact motion planning method for hexapod robots moving on randomly generated uneven plum-blossom piles, as shown in Figure 7. We design a policy network that maps the current state of the hexapod robot to an action; the state comprises the proprioceptive information of the robot, the center coordinate of the target area, and the coordinates of all the plum-blossom piles. We feed the coordinates of all randomly distributed plum-blossom piles in the environment into a graph attention network (GAT) [94]. The output of the GAT is concatenated with the remaining part of the state and subsequently input into a multilayer perceptron (MLP), where FC denotes a fully connected layer and O the output layer. According to the network output, the target footholds are found by the K-nearest neighbors (KNN) algorithm. The motion of the hexapod robot is formulated as a discrete-time finite Markov decision process (MDP) with a specific reward function. The designed DRL algorithm is used to generate the center of mass (COM) and feet trajectories of the hexapod robot. The inputs of the policy network include the robot’s exteroceptive measurements, the coordinate of the target area, and the environmental features extracted by the graph network. To judge whether a transition between two adjacent states is feasible, we propose a transition feasibility model based on the simplified single rigid body dynamic model and the trajectory optimization technique, and the corresponding reward is then determined. The trained policy is evaluated in different plum-blossom pile environments, and both the simulation and experimental results on physical systems demonstrate the feasibility and efficiency of the DRL-based multi-contact motion planning method.
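The KNN foothold lookup mentioned above can be illustrated with a minimal sketch. It assumes the policy output has already been converted into a desired 2D foot position; the function name `nearest_footholds` and the pile coordinates are hypothetical and are not taken from the original method.

```python
import math

def nearest_footholds(desired, piles, k=1):
    """Return the k pile centres closest (Euclidean distance) to a desired foot position."""
    return sorted(piles, key=lambda p: math.dist(p, desired))[:k]

# Hypothetical pile centres (metres) and a desired foot position derived from the policy output.
piles = [(0.0, 0.0), (0.3, 0.1), (0.6, 0.0), (0.3, 0.5)]
print(nearest_footholds((0.35, 0.15), piles, k=2))
```

A brute-force sort is sufficient for a few dozen piles; a spatial index would only matter for much larger environments.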

Figure 7.

Overview of the DRL-based multi-contact motion planning method for hexapod robots.

3.3. Computer Vision for Unmanned Chemical Systems

3.3.1. Computer Vision for Robotic Manipulation

Real-time perception of the environment plays an important role in the control of robotic manipulation, and its quality depends on the performance of the sensors. Compared with mechanical pressure sensors [95], laser distance sensors [96], and millimeter-wave radar [97], camera-based vision solutions for flexible planning of robotic arms [98] are arguably the most appropriate choice for the chemical laboratory in terms of both accuracy and cost.

Robotic hand-eye systems in unmanned chemistry labs fall into two categories [99,100,101]. The first is position-based visual servoing (PBVS), which proceeds in two stages: vision-based positioning followed by path planning for the robotic arm. The second adopts an ‘end-to-end’ idea: instead of the interpretable but complicated pipeline of “visually locating the target and obstacles, checking each joint of the robot arm for collisions, and solving for a feasible plan of the robot arm”, it directly uses the image taken by the camera as the input variable for robot arm planning. This approach is called image-based visual servoing (IBVS).

In real-world applications, position-based visual servoing often also uses image information to determine the pose of the target, and image-based visual servoing often includes algorithms, such as collision detection, that rely on position data. Such a mixture is referred to separately as hybrid-based visual servoing (HBVS) [102].

Position-based visual servoing places strict requirements on the consistency between model and reality. To address this, Sharifi et al. proposed a new iterative adaptive extended Kalman filter algorithm that estimates the position and pose of an object in real time and improves estimation accuracy in the presence of certain model errors and noise [103]. Wang et al. proposed a data-driven dynamic model that links distance judgment with manipulator planning and achieved a more stable control effect [104]. For relatively fixed scenes, such as unmanned chemical laboratories and industrial assembly lines, adding target marks can greatly enhance the efficiency of object recognition. A typical target mark consists of an array of easily recognizable graphic elements, such as squares, circles, and lines.

In image-based visual servoing, visual information is used to extract the features of the observed object in image space; the error is formed by comparing them with the desired image features, and a control law based on this error drives the robot or camera motion. Because control relies only on the current image, the control information is limited to the “current field of view”, which may result in control failure for large motions [105].
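As an illustration of how such an error-based control law can look, the sketch below implements the textbook proportional IBVS law, v = −λ L⁺ e, for a single point feature. It is a simplified example under stated assumptions, not the controller of any cited work: the feature is given in normalized image coordinates, its depth `Z` is assumed known, and only the translational camera velocity is commanded. The function names are hypothetical.

```python
def interaction_matrix_translation(x, y, Z):
    """Translational part (2x3) of the classical point-feature interaction matrix."""
    return [[-1.0 / Z, 0.0, x / Z],
            [0.0, -1.0 / Z, y / Z]]

def ibvs_velocity(s, s_star, Z, gain=0.5):
    """Camera velocity (vx, vy, vz) from v = -gain * pinv(L) * e, with e = s - s_star."""
    x, y = s
    e = (x - s_star[0], y - s_star[1])
    L = interaction_matrix_translation(x, y, Z)
    # L has full row rank, so pinv(L) = L^T (L L^T)^-1; L L^T is a symmetric 2x2 matrix.
    a = sum(L[0][k] * L[0][k] for k in range(3))
    b = sum(L[0][k] * L[1][k] for k in range(3))
    d = sum(L[1][k] * L[1][k] for k in range(3))
    det = a * d - b * b
    w0 = (d * e[0] - b * e[1]) / det
    w1 = (-b * e[0] + a * e[1]) / det
    return [-gain * (L[0][k] * w0 + L[1][k] * w1) for k in range(3)]

# Feature currently at (0.1, -0.2), desired at the image centre, assumed depth 1.5 m.
print(ibvs_velocity((0.1, -0.2), (0.0, 0.0), Z=1.5))
```

With this law the predicted feature error decays exponentially at rate `gain`. Because only the current image enters the computation, a target that leaves the field of view yields no usable error, which is the limitation noted above.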

The primary requirement of traditional IBVS is to extract image features so that the target state of the robotic arm can be compared with its current state. Commonly used machine vision feature extraction algorithms for this purpose include SIFT [106], DAISY [107], SURF [108], and ORB [109]. Many recent works use convolutional neural networks to handle the entire process from feature extraction to control scheme generation, avoiding the errors that arise in hand-crafted feature extraction and comparison. Saxena et al. trained FlowNet on synthetic images to predict the current pose of the camera and completed the visual servo operation by comparing it with the target pose [110]. Bateux et al. trained a VGG network to directly predict the relative positional error between two images [111]. Neuberger et al. first used a pose estimation network for initial pose tracking and then a visual feature extraction algorithm for fine-tuning, taking full advantage of the high steady-state accuracy of IBVS [112].

3.3.2. Object Detection

In unmanned chemical experimental systems, visual recognition involves target identification and detection, and detection is the prerequisite for robotic manipulation tasks. However, because chemical laboratory environments are complex and diverse, a wide variety of chemical instruments and equipment may become targets for the detection system. The distance and relative position between each target and the robot differ, and the size of a target in the image varies greatly. In addition, images taken in the laboratory are affected by lighting conditions and by mutual occlusion of targets, which makes computer-vision-based object detection highly challenging.

At present, there are two types of object detection methods: traditional image processing and deep learning methods. Researchers have been focusing on deep learning and convolutional neural networks (CNN) since the AlexNet [113] algorithm achieved first place in the ImageNet competition in 2012. The mainstream deep-learning-based object detection methods fall into three categories: one-stage, two-stage, and anchor-free object detection. One-stage object detection is represented by the YOLO and SSD series of algorithms: an image fed into the convolutional neural network directly yields a rectangular box identifying each detected target, the target category, and the probability assigned by the algorithm. Two-stage object detection (for example, R-CNN [114]) is more complex, more expensive to train, and slower at inference but offers higher recognition accuracy than one-stage object detection. Anchor-free object detection detects the target directly without preset anchor boxes and can currently be divided into key-point-based and center-point-based detection methods, among which CornerNet [115], CenterNet [116], and FCOS [117] achieve good results.

There have been many attempts at target identification and detection in unmanned chemical environments. Eppel et al. evaluated the correspondence of real liquid surface contours to recognize liquid surfaces and liquid levels in various transparent containers, with a miss rate of less than 1% for gas–liquid surfaces [118]. In 2016, Eppel et al. proposed a graph-cut algorithm that identifies, with high accuracy, the boundaries of liquid, solid, granular, and powder materials in various glass containers in chemical laboratories [119]. In 2018, Solana-Altabella et al. developed two different computer-vision-based analytical chemistry methods to quantify iron in commercial pharmaceutical formulations with less than 2% error [120]. In 2020, Eppel et al. proposed a machine learning approach and provided a new dataset, Vector-LabPics, for identifying materials and vessels in chemical laboratory images [121]. In the same year, Zepel et al. developed a generalizable and inexpensive computer-vision-based system for liquid-level monitoring and control [122]. In 2021, Kosenkov proposed a new technique for automated titration experiments using computer vision [123].

Although the current computer-vision-based recognition and detection technology has helped to improve the efficiency of chemical experiments, most of the existing unmanned chemical experiment systems are designed with multiple dedicated chemical automation devices, which results in high cost and poor versatility of unmanned chemical experiment systems. It is still a great challenge to develop efficient and practical computer-vision-based object recognition and detection systems for automatic unmanned chemical laboratory systems.

3.4. Intelligent Scheduling for Unmanned Systems

Designing an automation system framework that supports remote operation by users (e.g., planning experimental workflows and executing and monitoring experiments) is another important way to improve the degree of automation. Meanwhile, to allocate experimental resources reasonably among several tasks, improve equipment utilization, and reduce the total task time, the system requires intelligent scheduling to optimize the process as a whole.

Scheduling is defined as the allocation over time of resources, including the machines and materials used by several tasks, so that one or more objective functions of the whole system are optimized. As one of the key problems in the field of intelligent manufacturing, the scheduling problem has been widely studied in enterprise management, production management, and scientific research. It is characterized by diversity of objectives, uncertainty of the environment, and complexity of solution. Regarding diversity, the general aim of production scheduling combines a scheduling plan with evaluation indices, and different manufacturing environments lead to different scheduling objectives. At the same time, several indices must be considered together, such as the lowest cost and the highest equipment utilization rate, so the scheduling design should avoid conflicts between indices. Regarding uncertainty, production scheduling is subject to many random factors, e.g., equipment failure and communication failure. The real environment may not be consistent with the plan, which requires dynamic scheduling that adapts to changes in the working environment. Regarding complexity, the diversity of objectives and the uncertainty together make the production scheduling problem hard to solve.

During the development of scheduling, several solutions have emerged, e.g., genetic algorithms for the job-shop problem, adaptive simulated annealing for the flow-shop scheduling problem, genetic algorithms for changing structural space, and neural networks for dynamic scheduling problems. Generally, production scheduling methods can be divided into two groups. The first is classical scheduling theory, which is based on operations research and solves a constrained optimization model. The second is intelligent scheduling, which is based on artificial intelligence technology. Because the real environment is dynamic, it is hard for classical scheduling models to adapt to the complexity of scheduling problems. In contrast, intelligent equipment built on artificial intelligence technology, which integrates self-perception, self-learning, data analysis, decision generation, and production adjustment, can be well suited to production practice.

For chemistry automation, it is necessary to coordinate various types of instruments to complete the experiment, avoid resource conflicts, and reduce the execution time of the experiment. To solve the lab task scheduling problem, Cabrera et al. used a simulated annealing algorithm for dynamic scheduling of experimental tasks [124]. Alexander et al. used an ant colony algorithm to solve task scheduling in a mineral laboratory and compared it with an exact method to verify its effectiveness [125]. Li et al. described sample detection in a medical laboratory as a parallel-batch online scheduling problem and solved it with the objective of minimizing the maximum weighted flow time [126]. Takeshi et al. formulated the lab automation scheduling problem with time constraints between operations as a mixed-integer programming problem and proposed a scheduling method based on the branch-and-bound algorithm [127]. Shan et al. used an improved genetic algorithm to solve a biological laboratory scheduling problem. Wu et al. defined the task assignment problem of metrological detection in an unmanned metrological laboratory as an open-shop scheduling problem and proposed a discrete gray wolf optimization algorithm based on fitness and distance evaluation criteria to solve it.
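To make the metaheuristic idea concrete, the following is a minimal simulated annealing sketch for a deliberately simplified version of the problem: independent tasks are assigned to identical instruments so as to minimize the makespan. It is not the formulation of any cited work; precedence and time-window constraints are ignored, and the task durations, cooling schedule, and function names are assumptions made for illustration.

```python
import math
import random

def makespan(assignment, durations, n_instruments):
    """Finish time of the busiest instrument for a task -> instrument assignment."""
    loads = [0.0] * n_instruments
    for task, inst in enumerate(assignment):
        loads[inst] += durations[task]
    return max(loads)

def anneal(durations, n_instruments, steps=2000, t0=10.0, cooling=0.995, seed=0):
    """Simulated annealing over assignments; returns the best assignment seen and its makespan."""
    rng = random.Random(seed)
    current = [rng.randrange(n_instruments) for _ in durations]
    cur_cost = makespan(current, durations, n_instruments)
    best, best_cost = current[:], cur_cost
    temp = t0
    for _ in range(steps):
        # Neighbour move: reassign one randomly chosen task.
        cand = current[:]
        cand[rng.randrange(len(cand))] = rng.randrange(n_instruments)
        cost = makespan(cand, durations, n_instruments)
        # Always accept improvements; accept worse moves with Boltzmann probability.
        if cost <= cur_cost or rng.random() < math.exp((cur_cost - cost) / temp):
            current, cur_cost = cand, cost
            if cost < best_cost:
                best, best_cost = cand[:], cost
        temp *= cooling
    return best, best_cost

durations = [4, 3, 3, 2, 2, 2]  # hypothetical task durations (minutes)
best, cost = anneal(durations, n_instruments=2)
print(best, cost)
```

Accepting occasional worse moves at high temperature is what lets the search escape poor local assignments; a realistic laboratory scheduler would add the constraints omitted here to the cost function or the move generator.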

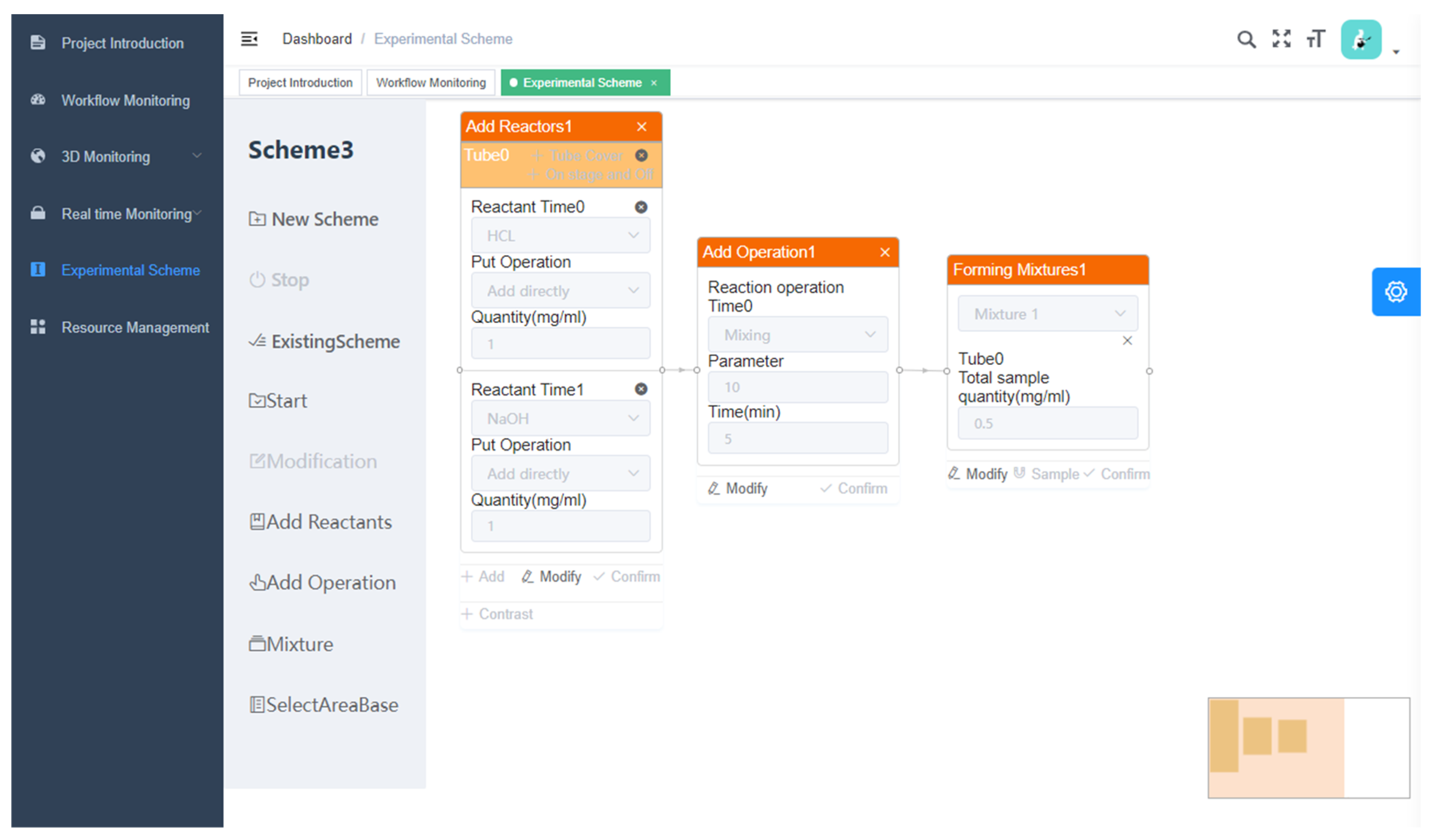

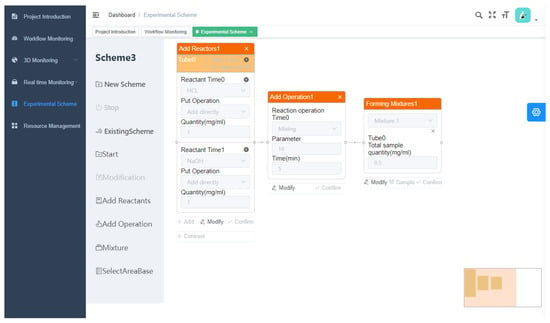

We also designed a framework for an automated chemical synthesis system, which implements experiment scheme formulation, experiment flow monitoring, experiment equipment management, and a user interaction interface. Unifying the software and hardware interfaces and communication protocols makes integration of experimental equipment more efficient and satisfies the diverse experimental requirements of users. When designing an experimental scheme, the user can specify the required experimental process directly in the web interface. The web page of the unmanned chemical system is shown in Figure 8.

Figure 8.

Illustration of the web page of the unmanned chemical system. The leftmost menu is the system administration section. The central section of the diagram shows the core content of the experimental design of an unmanned chemical system. First, we can drag ‘Add Reactants’, ‘Add Operation’, and ‘Mixture’ from the left menu to the empty configuration area on the right and fill in the required parameters. Then, we can click ‘Start’ to start the unmanned chemical system and the chemical experiment.

To improve the experimental efficiency of the system and achieve the best match between resources and tasks, the computer must jointly account for the experimental equipment, materials, and requirements so that the system can obtain an optimal scheduling scheme. The scheduling algorithm needs to consider the use of materials and tubes, the reaction conditions and time required by different experimental processes, and the coordination of the mechanical arm, liquid transfer station, product detection station, and other devices to reduce the idle time of the equipment. In intelligent scheduling, optimization of the schedule can be regarded as a reinforcement learning problem, in which the search for the best policy serves as the search for the optimal solution. Zhang et al. proposed a reinforcement learning method to minimize the mean weighted tardiness in unrelated parallel machine scheduling [128]. Gyoung et al. proposed genetic reinforcement learning to solve the scheduling problem as an RL problem [129]. Jamal et al. considered random job arrival and machine failure and adopted a Q-factor reinforcement learning algorithm to improve dynamic job shop scheduling [130].
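The view of scheduling as a reinforcement learning problem can be sketched with tabular Q-learning on a toy instance. The example below is an illustrative assumption, not a method from the cited works: three hypothetical jobs run on one instrument, the state is the set of remaining jobs, an action chooses the next job, and the reward is the negative weighted completion time of that job.

```python
import random
from collections import defaultdict

# Hypothetical jobs: name -> (processing time, weight).
JOBS = {"A": (3, 1), "B": (1, 3), "C": (2, 2)}

def step(remaining, job):
    """Run `job` next; the reward is minus its weighted completion time."""
    elapsed = sum(p for name, (p, _) in JOBS.items() if name not in remaining)
    p, w = JOBS[job]
    return remaining - {job}, -w * (elapsed + p)

def train(episodes=2000, alpha=0.5, gamma=1.0, epsilon=0.3, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = defaultdict(float)
    for _ in range(episodes):
        state = frozenset(JOBS)
        while state:
            actions = sorted(state)
            if rng.random() < epsilon:
                action = rng.choice(actions)
            else:
                action = max(actions, key=lambda a: q[(state, a)])
            nxt, reward = step(state, action)
            # Q-learning target: immediate reward plus best value of the next state.
            target = reward + gamma * max((q[(nxt, a)] for a in nxt), default=0.0)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
    return q

def greedy_order(q):
    """Job sequence obtained by following the learned greedy policy."""
    state, order = frozenset(JOBS), []
    while state:
        action = max(sorted(state), key=lambda a: q[(state, a)])
        order.append(action)
        state = state - {action}
    return order

print(greedy_order(train()))
```

On this instance the learned order coincides with the weighted-shortest-processing-time rule, which is known to be optimal for total weighted completion time on a single machine. Realistic laboratory scheduling has far larger state spaces, which is why function approximation or hybrid methods are typically used.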

3.5. Augmentation of Automatic Chemical Systems with Computation-Based Screening

Although automatic chemical synthesis systems can free experimenters from manual work and make datasets on the order of thousands of experiments accessible, high-dimensional parameter spaces still make chemical research with high-throughput experiments cost-prohibitive. For instance, maximizing the reaction yield of a target product often requires exploring hundreds or thousands of possible reaction condition combinations (e.g., catalysts, ligands, substrates, additives, etc.), which leads to high time and material costs for random high-throughput experiments with automated synthesis. Thus, it is highly desirable to introduce other tools to narrow the scope and parameters of automated experiments. With advances in computer science, computational methods, and software, a diverse array of high-throughput or automated computational toolkits is now available. We believe that integrating the automatic reaction machine with computation-based virtual screening will not only augment the intelligence of automatic chemical systems but also make the exploration process more time- and resource-efficient. Recently, Jiang and co-workers developed a comprehensive artificial intelligence chemistry laboratory. The platform incorporates a machine reading module, a mobile robot module, and a computational brain module and can target different chemical tasks [131]. An investigation of dye-sensitized photocatalytic H2 production, with average deviation bars of 5.5% for the H2 production rate and 8.3% for the RhB degradation efficiency, demonstrates the high accuracy and repeatability of the platform. Here, we take chemical synthesis as an example to illustrate augmentation of automatic chemical systems with computation-based simulation from three aspects: quantum-mechanical-based automated reaction design, purely data-driven reactivity prediction models, and retrosynthetic route planning.

In past decades, quantum-mechanical (QM) calculations have achieved great success in the discovery of both new mechanisms and new reactions [132,133,134]. Although data-driven approaches tend to be more efficient in predicting the reactivity of chemical reactions, QM-based reaction design remains indispensable because it not only models chemical reactivity accurately but also provides rich mechanistic insight. More importantly, several automated reaction pathway search methods have been developed, such as MD/CD, Q2MM, and LASP [135,136,137,138,139]. Because they require little or no human intervention, these automated computational workflows offer powerful, low-labor tools for the design of new reactions and catalysts. Further, in combination with machine learning techniques, accurate and efficient automated reactivity prediction tools could enable rapid prediction of chemical reaction mechanisms as well as high-throughput virtual screening [140]. Candidate reactions would then be discovered and recommended to automatic chemical systems, which carry out the experimental verification. Such a paradigm will reduce the number of trial-and-error experiments required for new reaction discovery.

In addition to QM-based reactivity prediction, fast strategies based on purely data-driven ML methods would be highly useful for improving the material efficiency of automated chemical reaction systems. Recently, machine learning (ML) methodologies have been shown to be useful in predicting reactivity, regioselectivity, and stereoselectivity [31,32,33,34,35]. For example, Doyle et al. reported the performance of a random forest model in predicting the reaction yield of a Buchwald–Hartwig amination reaction [31]. A dataset of 4608 reactions generated through high-throughput experiments (HTE) on four-parameter combinations (including twenty-three isoxazole additives, fifteen aryl and heteroaryl halides, four palladium catalysts, and three bases) was used for modeling. The final model predicted the yield with a root mean square error (RMSE) of 11.3% and an R2 of 0.83 on a series of out-of-sample additives. Coupling this approach with experiments might help one explore the reactivity or optimal conditions of new substrates. A machine-learning-guided workflow for improving the efficiency of automated experimentation generally consists of seven steps: (1) data collection from automated experiments or literature reports; (2) feature selection and building of a reactivity/selectivity prediction model; (3) virtual screening on a large reaction space; (4) candidate reaction recommendation; (5) verification through automated synthesis; (6) feedback and iterative retraining of the model for performance improvement; (7) discovery of new reactions. In comparison with QM-based screening, the purely data-driven ML approach has a far lower computational cost, and we therefore believe that introducing an ML-assisted reaction discovery approach into the automated chemical synthesis system will significantly improve efficiency.
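The numbered workflow above can be sketched as a small closed loop. Everything in this example is a stand-in chosen for brevity: `run_experiment` is a hypothetical analytic function replacing a real automated synthesis run, the surrogate is a k-nearest-neighbour average rather than the random forest used in [31], and the candidate grid of temperature and reagent equivalents is invented for illustration.

```python
import itertools
import random

def run_experiment(cond):
    """Hypothetical stand-in for an automated synthesis run; returns a yield (%)."""
    temp, equiv = cond
    return max(0.0, 90.0 - 0.02 * (temp - 80) ** 2 - 15.0 * (equiv - 1.5) ** 2)

def predict(data, cond, k=3):
    """k-nearest-neighbour surrogate: mean yield of the k closest tested conditions."""
    def dist(a, b):
        # Temperature is rescaled so that both variables have comparable ranges.
        return ((a[0] - b[0]) / 100.0) ** 2 + (a[1] - b[1]) ** 2
    nearest = sorted(data, key=lambda item: dist(item[0], cond))[:k]
    return sum(y for _, y in nearest) / len(nearest)

def closed_loop(candidates, n_init=5, rounds=10, seed=0):
    rng = random.Random(seed)
    # Step 1: initial data collection.
    data = [(c, run_experiment(c)) for c in rng.sample(candidates, n_init)]
    for _ in range(rounds):
        tested = {c for c, _ in data}
        untested = [c for c in candidates if c not in tested]
        # Steps 2-4: model, virtual screening, and recommendation of the top candidate.
        best = max(untested, key=lambda c: predict(data, c))
        # Steps 5-6: experimental verification and feedback (the lazy k-NN model
        # is "retrained" simply by appending the new data point).
        data.append((best, run_experiment(best)))
    return data

candidates = list(itertools.product(range(40, 121, 10), [1.0, 1.5, 2.0, 2.5]))
data = closed_loop(candidates)
print(max(data, key=lambda item: item[1]))
```

This loop is purely exploitative; practical systems usually add an exploration term (for example, an uncertainty estimate) so that the model does not keep sampling around an early local optimum.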

The aforementioned reactivity prediction strategies mainly focus on the development of new reactions or on process optimization. Another important aspect is how to efficiently construct target functional molecules (e.g., natural products, pharmaceuticals, agrochemicals, and fine chemicals) with the automated chemical synthesis machine. Computer-assisted retrosynthesis is a valuable tool for planning the synthetic route to a desired structure, and ML has achieved great success in this field [68,141,142,143]. For instance, Segler, Waller, and co-workers combined Monte Carlo tree search (MCTS) with deep neural networks learned from published chemical knowledge: a policy network guides the search, and a filter network preselects the most promising retrosynthetic steps [68]. The approach was trained on 12.4 million reactions from the Reaxys database, and the results showed that the model was 30 times faster than the known computer-aided search method based on extracted rules and hand-designed heuristics. Compared with the 87.5% accuracy of the benchmark, the accuracy of this strategy reached 95%. With a designed synthetic route, automated chemical systems can execute the synthesis task to obtain the target molecules. For example, based on a continuous flow reaction system, Jamison and Jensen et al. developed an open-source kit, ASKCOS, for computer-aided synthesis planning (CASP) [141], which was used to plan the molecular synthesis route and design the operating conditions according to prior reaction information (e.g., the residence time of each part of the continuous flow reaction and the amount and concentration of reactants and products). The synthetic information was then refined into a chemical “recipe”, which was sent to the robot platform to automatically assemble the hardware and carry out the synthetic operations to build the molecules.

4. Conclusions

This paper first reviewed unmanned chemical synthesis systems and provided a basic introduction to machine learning. Second, we discussed the problems of current unmanned chemistry systems. Finally, we proposed prospects for strengthening the connection between reaction pathway exploration and existing automatic reaction platforms, together with solutions for improving automation by combining machine learning algorithms with information extraction, robot control, computer vision, and intelligent scheduling. Beyond these aspects, machine learning models have great power and potential in other areas of chemistry, such as property prediction, material design, molecular simulation, and chemistry grammar extraction [144,145,146,147,148]. In the future, the merging of data science and machine learning algorithms with automation techniques will advance unmanned chemical synthesis systems toward higher intelligence.

Author Contributions

Conceptualization, J.M. and C.C; methodology, B.X.; software, X.G. and J.Z.; validation, G.W. (Gaobo Wang) and Y.Z.; formal analysis, G.W. (Guoqiang Wang), Y.Z. and X.C.; writing—original draft preparation, X.W. and G.W. (Guoqiang Wang); writing—review and editing, X.W., G.W. (Guoqiang Wang), J.M. and C.C.; supervision, C.C and J.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China (Grant Number 2019YFC0408303 to J.M.), National Natural Science Foundation of China (Grant Numbers 62073160 to C.C., 22033004 to J.M., 2273035 to G.Q.W.).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Deming, S.N.; Pardue, H.L. Automated instrumental system for fundamental characterization of chemical reactions. Anal. Chem. 1971, 43, 192–200.

- Legrand, M.; Foucard, A. Automation on the laboratory bench. J. Chem. Educ. 1978, 55, 767.

- Frisbee, A.R.; Nantz, M.H.; Kramer, G.W.; Fuchs, P.L. Laboratory automation. 1: Syntheses via vinyl sulfones. 14. Robotic orchestration of organic reactions: Yield optimization via an automated system with operator-specified reaction sequences. J. Am. Chem. Soc. 1984, 106, 7143–7145.

- Steiner, S.; Wolf, J.; Glatzel, S.; Andreou, A.; Granda, J.M.; Keenan, G.; Hinkley, T.; Aragon-Camarasa, G.; Kitson, P.J.; Angelone, D.; et al. Organic Synthesis in a Modular Robotic System Driven by a Chemical Programming Language. Science 2019, 363, eaav2211.

- Mohammed, M.; Khan, M.B.; Bashier, E.B.M. Machine Learning: Algorithms and Applications; CRC Press: Boca Raton, FL, USA, 2016.

- Das, K.; Behera, R.N. A survey on machine learning: Concept, algorithms and applications. IJIRCCE 2017, 5, 1301–1309.

- Mahesh, B. Machine learning algorithms - A review. Int. J. Sci. Res. IJSR 2020, 9, 381–386.

- Bishop, C.M.; Nasrabadi, N.M. Pattern Recognition and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2006; Volume 4.

- Zheng, J.; Shen, F.; Fan, H.; Zhao, J. An online incremental learning support vector machine for large-scale data. Neural Comput. Appl. 2013, 22, 1023–1035.

- Mitchell, T.; Buchanan, B.; DeJong, G.; Dietterich, T.; Rosenbloom, P.; Waibel, A. Machine learning. Annu. Rev. Comput. Sci. 1990, 4, 417–433.

- Ghosh, C.; Cordeiro, C.; Agrawal, D.P.; Rao, M.B. Markov chain existence and hidden Markov models in spectrum sensing. In Proceedings of the 2009 IEEE International Conference on Pervasive Computing and Communications, Galveston, TX, USA, 9–13 March 2009; pp. 1–6.

- Yue, K.; Fang, Q.; Wang, X.; Li, J.; Liu, W. A parallel and incremental approach for data-intensive learning of Bayesian networks. IEEE Trans. Cybern. 2015, 45, 2890–2904.

- Hinton, G.; Sejnowski, T.J. Unsupervised Learning: Foundations of Neural Computation; MIT Press: Cambridge, MA, USA, 1999.

- Safatly, L.; Bkassiny, M.; Al-Husseini, M.; El-Hajj, A. Cognitive radio transceivers: RF, spectrum sensing, and learning algorithms review. Int. J. Antenn. Propag. 2014, 2014, 548473.

- Bkassiny, M.; Jayaweera, S.K.; Li, Y. Multidimensional Dirichlet process-based non-parametric signal classification for autonomous self-learning cognitive radios. IEEE Trans. Wirel. Commun. 2013, 12, 5413–5423.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction, 2nd ed.; MIT Press: Cambridge, MA, USA, 2018.

- Bellman, R. Dynamic programming. Science 1966, 153, 34–37.

- Tesauro, G.; Galperin, G. On-line policy improvement using Monte-Carlo search. In Proceedings of the Advances in Neural Information Processing Systems, Denver, CO, USA, 2–5 December 1996; Volume 9.

- Watkins, C.J.; Dayan, P. Q-learning. Mach. Learn. 1992, 8, 279–292.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

- Vinyals, O.; Babuschkin, I.; Czarnecki, W.M.; Mathieu, M.; Dudzik, A.; Chung, J.; Choi, D.H.; Powell, R.; Ewalds, T.; Georgiev, P.; et al. Grandmaster level in StarCraft II using multi-agent reinforcement learning. Nature 2019, 575, 350–354.

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489.

- Duan, Y.; Chen, X.; Houthooft, R.; Schulman, J.; Abbeel, P. Benchmarking deep reinforcement learning for continuous control. PMLR 2016, 48, 1329–1338.

- Hwangbo, J.; Lee, J.; Dosovitskiy, A.; Bellicoso, D.; Tsounis, V.; Koltun, V.; Hutter, M. Learning agile and dynamic motor skills for legged robots. Sci. Robot. 2019, 4, eaau5872.

- Dimitrov, T.; Kreisbeck, C.; Becker, J.S.; Aspuru-Guzik, A.; Saikin, S.K. Autonomous molecular design: Then and now. ACS Appl. Mater. Interfaces 2019, 11, 24825–24836.

- King, R.D.; Rowland, J.; Aubrey, W.; Liakata, M.; Markham, M.; Soldatova, L.N.; Whelan, K.E.; Clare, A.; Young, M.; Sparkes, A.; et al. The robot scientist Adam. Computer 2009, 42, 46–54.

- Sparkes, A.; Aubrey, W.; Byrne, E.; Clare, A.; Khan, M.N.; Liakata, M.; Markham, M.; Rowland, J.; Soldatova, L.N.; Whelan, K.E.; et al. Towards robot scientists for autonomous scientific discovery. Autom. Exp. 2010, 2, 1–11.

- Fitzpatrick, D.E.; Battilocchio, C.; Ley, S.V. A novel internet-based reaction monitoring, control and autonomous self-optimization platform for chemical synthesis. Org. Process Res. Dev. 2016, 20, 386–394.

- Nikolaev, P.; Hooper, D.; Webber, F.; Rao, R.; Decker, K.; Krein, M.; Poleski, J.; Barto, R.; Maruyama, B. Autonomy in materials research: A case study in carbon nanotube growth. NPJ Comput. Mater. 2016, 2, 16031.

- Granda, J.M.; Donina, L.; Dragone, V.; Long, D.L.; Cronin, L. Controlling an organic synthesis robot with machine learning to search for new reactivity. Nature 2018, 559, 377–381.

- Ahneman, D.T.; Estrada, J.G.; Lin, S.; Dreher, S.D.; Doyle, A.G. Predicting reaction performance in C–N cross-coupling using machine learning. Science 2018, 360, 186–190.

- Sandfort, F.; Strieth-Kalthoff, F.; Kühnemund, M.; Beecks, C.; Glorius, F. A Structure-Based Platform for Predicting Chemical Reactivity. Chem 2020, 6, 1379–1390.

- Zahrt, A.F.; Henle, J.J.; Rose, B.T.; Wang, Y.; Darrow, W.T.; Denmark, S.E. Prediction of Higher Selectivity Catalysts by Computer Driven Workflow and Machine Learning. Science 2019, 363, eaau5631.

- Li, X.; Zhang, S.-Q.; Xu, L.-C.; Hong, X. Predicting Regioselectivity in Radical C-H Functionalization of Heterocycles through Machine Learning. Angew. Chem. Int. Ed. 2020, 59, 13253–13259.

- Zhu, Q.; Gu, Y.; Liang, X.; Wang, X.; Ma, J. A Machine Learning Model To Predict CO2 Reduction Reactivity and Products Transferred from Metal-Zeolites. ACS Catal. 2022, 12, 12336–12348.

- Schwaller, P.; Hoover, B.; Reymond, J.-L.; Strobelt, H.; Laino, T. Extraction of organic chemistry grammar from unsupervised learning of chemical reactions. Sci. Adv. 2021, 7, eabe4166.

- Glielmo, A.; Husic, B.E.; Rodriguez, A.R.; Clementi, C.; Noé, F.; Laio, A. Unsupervised Learning Methods for Molecular Simulation Data. Chem. Rev. 2021, 121, 9722–9758.

- Amarjyoti, S. Deep reinforcement learning for robotic manipulation-the state of the art. arXiv 2017, arXiv:1701.08878.

- Finn, C.; Levine, S. Deep visual foresight for planning robot motion. In Proceedings of the IEEE International Conference on Robotics and Automation, Singapore, 29 May–3 June 2017; pp. 2786–2793.

- Song, S.; Zeng, A.; Lee, J.; Funkhouser, T. Grasping in the wild: Learning 6-dof closed-loop grasping from low-cost demonstrations. IEEE Robot. Autom. Lett. 2020, 5, 4978–4985.

- Devin, C.; Abbeel, P.; Darrell, T.; Levine, S. Deep object-centric representations for generalizable robot learning. In Proceedings of the IEEE International Conference on Robotics and Automation, Brisbane, Australia, 21–25 May 2018; pp. 7111–7118.

- Haarnoja, T.; Pong, V.; Zhou, A.; Dalal, M.; Abbeel, P.; Levine, S. Composable deep reinforcement learning for robotic manipulation. In Proceedings of the IEEE International Conference on Robotics and Automation, Brisbane, Australia, 21–25 May 2018; pp. 6244–6251.

- Schaul, T.; Horgan, D.; Gregor, K.; Silver, D. Universal value function approximators. PMLR 2015, 37, 1312–1320.

- Lillicrap, T.; Hunt, J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. In Proceedings of the International Conference on Learning Representations, San Juan, Puerto Rico, 2–4 May 2016; pp. 1–14.

- Haarnoja, T.; Zhou, A.; Abbeel, P.; Levine, S. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 1861–1870.

- Fujimoto, S.; van Hoof, H.; Meger, D. Addressing function approximation error in actor-critic methods. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 1587–1596.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. In Proceedings of the Conference and Workshop on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 1–12.

- Breyer, M.; Furrer, F.; Novkovic, T.; Siegwart, R. Comparing task simplifications to learn closed-loop object picking using deep reinforcement learning. IEEE Robot. Autom. Lett. 2019, 4, 1549–1556.

- Ng, A.Y.; Harada, D.; Russell, S. Policy invariance under reward transformations: Theory and application to reward shaping. Proc. Int. Conf. Mach. Learn. 1999, 99, 278–287. [Google Scholar]

- Wiewiora, E.; Cottrell, G.W.; Elkan, C. Principled methods for advising reinforcement learning agents. In Proceedings of the 20th International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2003; pp. 792–799. [Google Scholar]

- Devlin, S.M.; Kudenko, D. Dynamic potential-based reward shaping. In Proceedings of the 11th International Conference on Autonomous Agents and Multiagent Systems, Valencia, Spain, 4–8 June 2012; pp. 433–440. [Google Scholar]

- Harutyunyan, A.; Devlin, S.; Vrancx, P.; Nowé, A. Expressing arbitrary reward functions as potential-based advice. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; Volume 29. [Google Scholar]

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. arXiv 2015, arXiv:1509.02971. [Google Scholar]

- Andrychowicz, M.; Wolski, F.; Ray, A.; Schneider, J.; Fong, R.; Welinder, P.; McGrew, B.; Tobin, J.; Abbeel, P.; Zaremba, W. Hindsight experience replay. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Liu, R.; Nageotte, F.; Zanne, P.; Mathelin, M.; Dresp-Langley, B. Deep reinforcement learning for the control of robotic manipulation: A focused mini-review. Robotics 2021, 10, 22. [Google Scholar] [CrossRef]

- Yue, J. Learning Locomotion For Legged Robots Based on Reinforcement Learning: A Survey. In Proceedings of the 2020 International Conference on Electrical Engineering and Control Technologies (CEECT), Melbourne, Australia, 10–13 December 2020; pp. 1–7. [Google Scholar]

- Zeng, F.; Wang, C.; Ge, S.S. A survey on visual navigation for artificial agents with deep reinforcement learning. IEEE Access 2020, 8, 135426–135442. [Google Scholar] [CrossRef]

- Gronauer, S.; Diepold, K. Multi-agent deep reinforcement learning: A survey. Artif. Intell. Rev. 2022, 55, 895–943. [Google Scholar] [CrossRef]

- Schulman, J.; Levine, S.; Jordan, M.; Abbeel, P. Trust region policy optimization. International conference on machine learning. PMLR 2015, 37, 1889–1897. [Google Scholar]

- Tsounis, V.; Alge, M.; Lee, J.; Farshidian, F.; Hutter, M. Deepgait: Planning and control of quadrupedal gaits using deep reinforcement learning. IEEE Robot. Autom. Lett. 2020, 5, 3699–3706. [Google Scholar] [CrossRef]

- Fu, H.; Tang, K.; Li, P.; Zhang, W.; Wang, X.; Deng, G.; Wang, T.; Chen, C. Deep Reinforcement Learning for Multi-contact Motion Planning of Hexapod Robots. In Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence (IJCAI-21), Montreal, QC, Canada, 19–27 August 2021; pp. 2381–2388. [Google Scholar] [CrossRef]

- Haarnoja, T.; Zhou, A.; Hartikainen, K.; Tucker, G.; Ha, S.; Tan, T.; Kumar, V.; Zhu, H.; Gupta, A.; Abbeel, P.; et al. Soft actor-critic algorithms and applications. arXiv 2018, arXiv:1812.05905. [Google Scholar]

- Peng, X.B.; Abbeel, P.; Levine, S.; Panne, M. Deepmimic: Example-guided deep reinforcement learning of physics-based character skills. ACM Trans. Graph. TOG 2018, 37, 1–14. [Google Scholar] [CrossRef]

- Peng, X.B.; Ma, Z.; Abbeel, P.; Levine, S. Amp: Adversarial motion priors for stylized physics-based character control. ACM Trans. Graph. TOG 2021, 40, 1–20. [Google Scholar] [CrossRef]

- Zhao, W.; Queralta, J.P.; Westerlund, T. Sim-to-real transfer in deep reinforcement learning for robotics: A survey. In Proceedings of the 2020 IEEE Symposium Series on Computational Intelligence (SSCI), Canberra, Australia, 1–4 December 2020; pp. 737–744. [Google Scholar]

- Belter, D.; Łabęcki, P.; Skrzypczyński, P. Adaptive motion planning for autonomous rough terrain traversal with a walking robot. J. Field Robot. 2016, 33, 337–370. [Google Scholar] [CrossRef]

- Lee, J.; Hwangbo, J.; Wellhausen, L.; Koltun, V.; Hutter, M. Learning quadrupedal locomotion over challenging terrain. Sci. Robot. 2020, 5, eabc5986. [Google Scholar] [CrossRef]

- Segler, M.H.S.; Preuss, M.; Waller, M.P. Planning chemical syntheses with deep neural networks and symbolic AI. Nature 2018, 555, 604–610. [Google Scholar] [CrossRef]

- Liu, Z.; Shi, Y.; Chen, H.; Qin, T.; Zhou, X.; Huo, J.; Dong, H.; Yang, X.; Zhu, X.; Chen, X.; et al. Machine learning on properties of multiscale multisource hydroxyapatite nanoparticles datasets with different morphologies and sizes. NPJ Comput. Mater. 2021, 7, 142. [Google Scholar] [CrossRef]

- Liu, Z.; Lin, L.; Jia, Q.; Cheng, Z.; Jiang, Y.; Guo, Y.; Ma, J. Transferable Multilevel Attention Neural Network for Accurate Prediction of Quantum Chemistry Properties via Multitask Learning. J. Chem. Inf. Model. 2021, 61, 1066–1082. [Google Scholar] [CrossRef]

- Gu, Y.; Zhu, Q.; Liu, Z.; Fu, C.; Wu, J.; Zhu, Q.; Jia, Q.; Ma, J. Nitrogen Reduction Reaction Energy and Pathway in Metal-zeolites: Deep Learning and Explainable Machine Learning with Local Acidity and Hydrogen Bonding Features. J. Mater. Chem. A 2022, 10, 14976–14988. [Google Scholar] [CrossRef]

- Yu, D.; Deng, L. Deep learning and its applications to signal and information processing [exploratory dsp]. IEEE Signal Process. Mag. 2010, 28, 145–154. [Google Scholar] [CrossRef]

- Dahl, G.E.; Yu, D.; Deng, L.; Acero, A. Context-dependent pretrained deep neural networks for large-vocabulary speech recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2011, 20, 30–42. [Google Scholar] [CrossRef]

- Hinton, G.; Deng, L.; Yu, D.; Dahl, G.E.; Mohamed, A.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Sainath, T.N.; et al. Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Process. Mag. 2012, 29, 82–97. [Google Scholar] [CrossRef]

- Cires, D.C.; Meier, U.; Gambardella, L.M.; Schmidhuber, J. Deep, big, simple neural nets for handwritten digit recognition. Neural Comput. 2010, 22, 3207–3220. [Google Scholar] [CrossRef]

- Bengio, Y.; Courville, A.; Vincent, P. Representation learning: A review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1798–1828. [Google Scholar] [CrossRef]

- Huang, F.; Yates, A. Biased representation learning for domain adaptation. In Proceedings of the 2012 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning, Jeju Island, Republic of Korea, 12–14 July 2012; pp. 1313–1323. [Google Scholar]

- Xiang, E.W.; Cao, B.; Hu, D.H.; Yang, Q. Bridging domains using worldwide knowledge for transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 770–783. [Google Scholar] [CrossRef]

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 9. [Google Scholar] [CrossRef]

- Ling, X.; Dai, W.; Xue, G.-R.; Yang, Q.; Yu, Y. Spectral domain-transfer learning. In Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Las Vegas, NV, USA, 24–27 August 2008; pp. 488–496. [Google Scholar]

- Raina, R.; Ng, A.Y.; Koller, D. Constructing informative priors using transfer learning. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 713–720. [Google Scholar]

- Zhang, J. Deep transfer learning via restricted boltzmann machine for document classification. In Proceedings of the 2011 10th International Conference on Machine Learning and Applications and Workshops, Honolulu, HI, USA, 18–21 December 2011; pp. 323–326. [Google Scholar]

- Peteiro-Barral, D.; Guijarro-Berdiñas, B. A survey of methods for distributed machine learning. Prog. Artif. Intell. 2013, 2, 1–11. [Google Scholar] [CrossRef]

- Zheng, H.; Kulkarni, S.R.; Poor, H.V. Attribute-distributed learning: Models, limits, and algorithms. IEEE Trans. Signal Process. 2010, 59, 386–398. [Google Scholar] [CrossRef]

- Fu, Y.; Li, B.; Zhu, X.; Zhang, C. Active learning without knowing individual instance labels: A pairwise label homogeneity query approach. IEEE Trans. Knowl. Data Eng. 2013, 26, 808–822. [Google Scholar] [CrossRef]

- Pyzer-Knapp, E.O.; Suh, C.; Gómez-Bombarelli, R.; Aguilera-Iparraguirre, J.; Aspuru-Guzik, A. What is high-throughput virtual screening? A perspective from organic materials discovery. What Is High-throughput Virtual Screening? A Perspective from Organic Materials Discovery. Annu. Rev. Mater. Sci. 2015, 45, 195–216. [Google Scholar] [CrossRef]

- Wang, G.; Xin, B.; Wang, G.; Zhang, Y.; Lu, Y.; Guo, L.; Li, S.; Chen, C.; Cheng, X.; Ma, J. Fidelity of Robotic Chemical Operations of Homogenous and Heterogeneous Reactions. ChemRxiv 2021. [Google Scholar] [CrossRef]

- Vaucher, A.C.; Zipoli, F.; Geluykens, J.; Nair, V.H.; Schwaller, P.; Laino, T. Automated extraction of chemical synthesis actions from experimental procedures. Nat. Commun. 2020, 11, 3601. [Google Scholar] [CrossRef]

- Choudhary, K.; DeCost, B.; Chen, C.; Jain, A.; Tavazza, F.; Cohn, R.; Park, C.W.; Choudhary, A.; Agrawal, A.; Billinge, S.J.L.; et al. Recent advances and applications of deep learning methods in materials science. NPJ Comput. Mater. 2020, 8, 59. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805. [Google Scholar]

- Koroteev, M.V. BERT: A review of applications in natural language processing and understanding. arXiv 2021, arXiv:2103.11943. [Google Scholar]

- Burger, B.; Maffettone, P.M.; Gusev, V.V.; Aitchison, C.M.; Bai, Y.; Wang, X.; Li, X.; Alston, B.M.; Li, B.; Clowes, R.; et al. A mobile robotic chemist. Nature 2020, 583, 237–241. [Google Scholar] [CrossRef]

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903. [Google Scholar]

- Almassri, A.M.; Wan Hasan, W.; Ahmad, S.A.; Ishak, A.J.; Ghazali, A.; Talib, D.; Wada, C. Pressure sensor: State of the art, design, and application for robotic hand. J. Sens. 2015, 2015, 846487. [Google Scholar] [CrossRef]

- Kumar, S.; Savur, C.; Sahin, F. Dynamic awareness of an industrial robotic arm using time-of-flight laser-ranging sensors. In Proceedings of the 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; pp. 2850–2857. [Google Scholar]

- Lang, S.A.; Demming, M.; Jaeschke, T.; Noujeim, K.M.; Konynenberg, A.; Pohl, N. 3D SAR imaging for dry wall inspection using an 80 GHz FMCW radar with 25 GHz bandwidth. In Proceedings of the 2015 IEEE MTT-S International Microwave Symposium, Phoenix, AZ, USA, 17–22 May 2015; pp. 1–4. [Google Scholar]

- Salehi, I.; Rotithor, G.; Saltus, R.; Dani, A.P. Constrained image-based visual servoing using barrier functions. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 14254–14260. [Google Scholar]

- Chaumette, F.; Hutchinson, S. Visual servo control. i. basic approaches. IEEE Robot. Autom. Mag. 2006, 13, 82–90. [Google Scholar] [CrossRef]