Category Name Expansion and an Enhanced Multimodal Fusion Framework for Few-Shot Learning

Abstract

1. Introduction

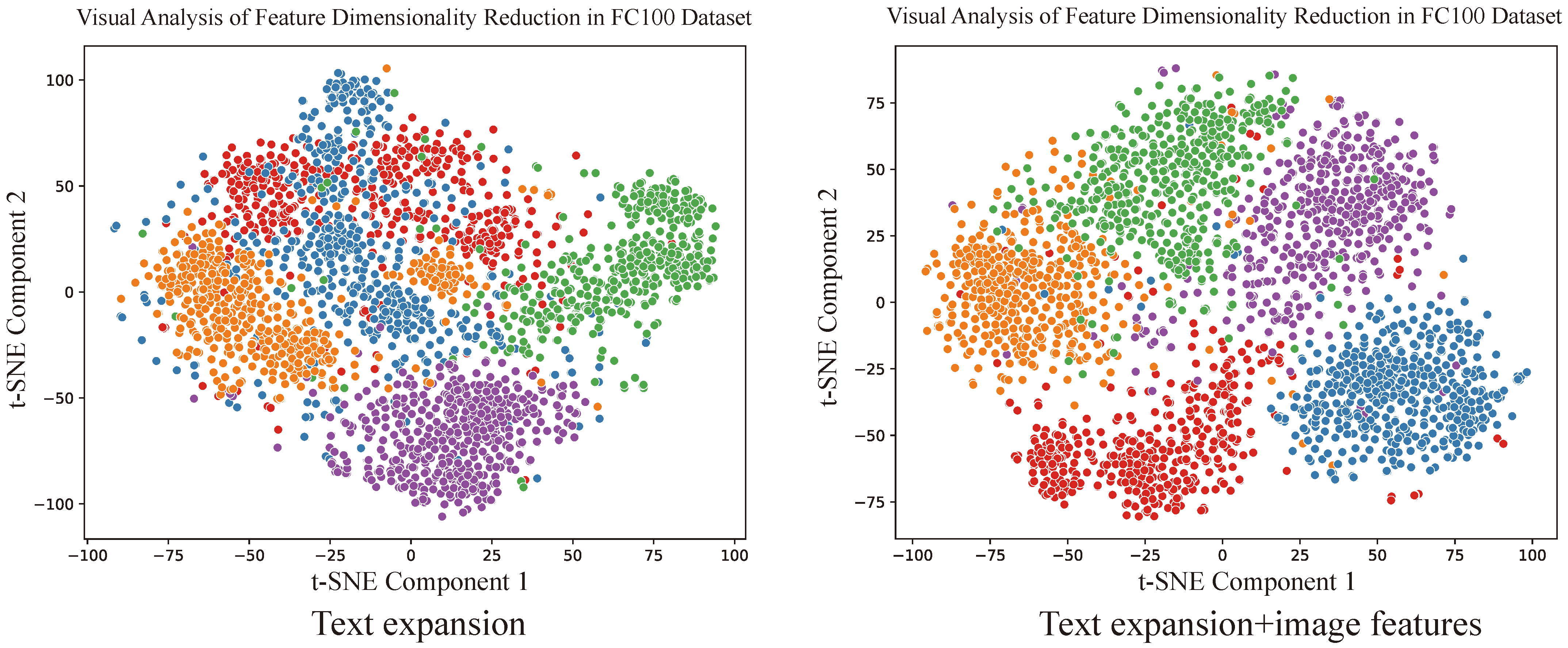

- Combining category name expansion with image feature augmentation: Building on conventional category name expansion, we supplement the expanded textual description of each category with image features extracted from its sample images. Grounding the description in visual evidence makes the semantic representation of each category more precise and detailed, which improves multimodal few-shot learning performance.
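The first contribution can be illustrated with a minimal sketch. This section does not give the fusion formula, so the code below assumes that CLIP-style encoders have already produced one embedding for the expanded category description and one embedding per support image. It then blends the two using a hypothetical mixing weight `alpha`. All function names are illustrative, and the code is not the authors' implementation, which learns the fusion rather than using a fixed weight.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]


def l2_normalize(v: Sequence[float]) -> Vector:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def mean_vector(vectors: Sequence[Sequence[float]]) -> Vector:
    """Element-wise mean of equally sized vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def augmented_class_embedding(
    text_emb: Sequence[float],
    support_image_embs: Sequence[Sequence[float]],
    alpha: float = 0.5,  # hypothetical mixing weight, not a value from the paper
) -> Vector:
    """Blend an expanded-name text embedding with the mean support-image embedding."""
    text_part = l2_normalize(text_emb)
    image_part = l2_normalize(mean_vector(support_image_embs))
    mixed = [alpha * t + (1.0 - alpha) * v for t, v in zip(text_part, image_part)]
    return l2_normalize(mixed)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors."""
    return sum(x * y for x, y in zip(l2_normalize(a), l2_normalize(b)))


def classify(query: Sequence[float], class_embs: Dict[str, Vector]) -> str:
    """Assign the query to the class whose augmented embedding is most similar."""
    scores = {name: cosine(query, emb) for name, emb in class_embs.items()}
    return max(scores, key=scores.get)


if __name__ == "__main__":
    classes = {
        "cat": augmented_class_embedding([1.0, 0.0, 0.0], [[1.0, 0.1, 0.0]]),
        "dog": augmented_class_embedding([0.0, 0.0, 1.0], [[0.0, 0.1, 1.0]]),
    }
    print(classify([0.9, 0.2, 0.0], classes))  # cat
```

Setting `alpha = 1.0` reduces the sketch to a purely text-based class embedding. The weight therefore gives a simple way to compare text-only and image-augmented prototypes.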

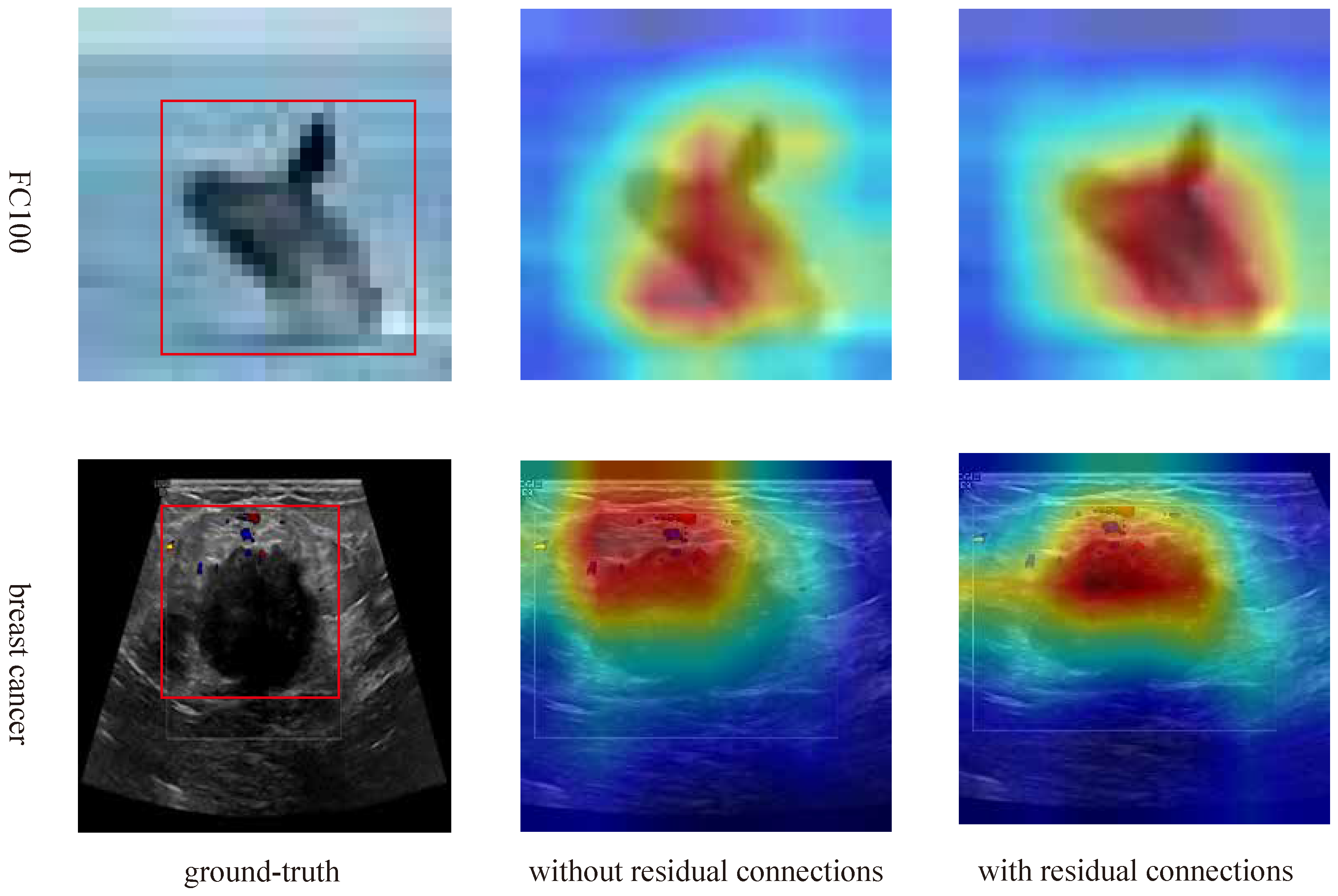

- Improved visual and textual feature fusion: At every fusion layer, we introduce a cross-modal residual connection. This connection ensures that each layer's output retains the original information while the visual and textual features are progressively aligned.

- Multi-task validation: We evaluate the proposed method on several few-shot learning tasks. These cover the general-domain benchmarks CIFAR-FS and FC100, as well as two medical settings that pair images with text (MRI + Text and Pathology + Text). In every dataset and shot setting we report, our approach outperforms the compared few-shot learning methods, including the text-aided baselines SP-CLIP and SemFew. This suggests that its benefits carry over across domains and tasks.
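The cross-modal residual connection described in the second contribution can be sketched as follows. The layer equations are not reproduced in this section, so the sketch implements one plausible reading. At each layer, text tokens attend to image tokens and image tokens attend to text tokens, and the attended update is added back to that layer's input, which carries the original features forward. The code uses plain Python so that it is self-contained. It assumes single-head scaled dot-product attention with equal feature dimensions for both modalities. It also omits the learned query/key/value projections, normalization, and feed-forward sublayers that a real model would use. The function names are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]  # rows are tokens, columns are feature dimensions


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax_rows(m: Matrix) -> Matrix:
    out = []
    for row in m:
        peak = max(row)  # subtract the row max for numerical stability
        exps = [math.exp(x - peak) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def cross_attention(queries: Matrix, context: Matrix) -> Matrix:
    """Single-head scaled dot-product attention of one modality over the other."""
    scale = 1.0 / math.sqrt(len(queries[0]))
    scores = [[s * scale for s in row] for row in matmul(queries, transpose(context))]
    return matmul(softmax_rows(scores), context)


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def fusion_layer(text: Matrix, image: Matrix) -> Tuple[Matrix, Matrix]:
    """Each modality attends to the other; the residual path adds the layer input
    back so the original features survive in the output."""
    new_text = add(text, cross_attention(text, image))
    new_image = add(image, cross_attention(image, text))  # uses the pre-update text
    return new_text, new_image


def multi_layer_fusion(text: Matrix, image: Matrix, num_layers: int = 3):
    """Stack several fusion layers."""
    for _ in range(num_layers):
        text, image = fusion_layer(text, image)
    return text, image
```

Because every layer computes `input + update`, removing the `add` calls turns the sketch into a plain cross-attention stack. That is the kind of contrast the residual-connection ablation in Section 4.4.3 probes.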

2. Related Works

2.1. Few-Shot Learning

2.2. Multimodal Few-Shot Learning

3. Method

3.1. Problem Description

3.2. Overall Architecture of the Model

3.2.1. Multi-Level Fusion Module for Name Expansion and Image Feature Augmentation

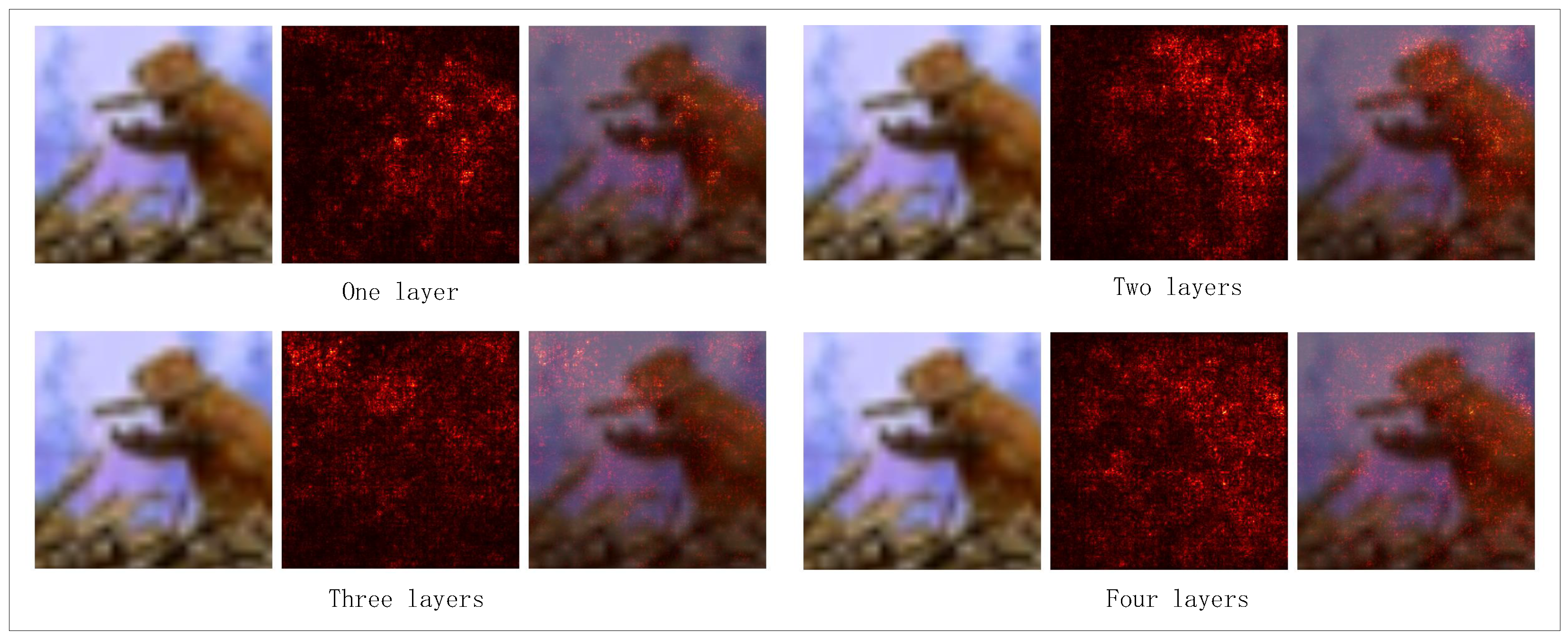

3.2.2. Multi-Stage Fusion Module

3.2.3. Model Training Process

3.2.4. Model Testing Phase

4. Experiments

4.1. Dataset Settings

4.2. Implementation Details

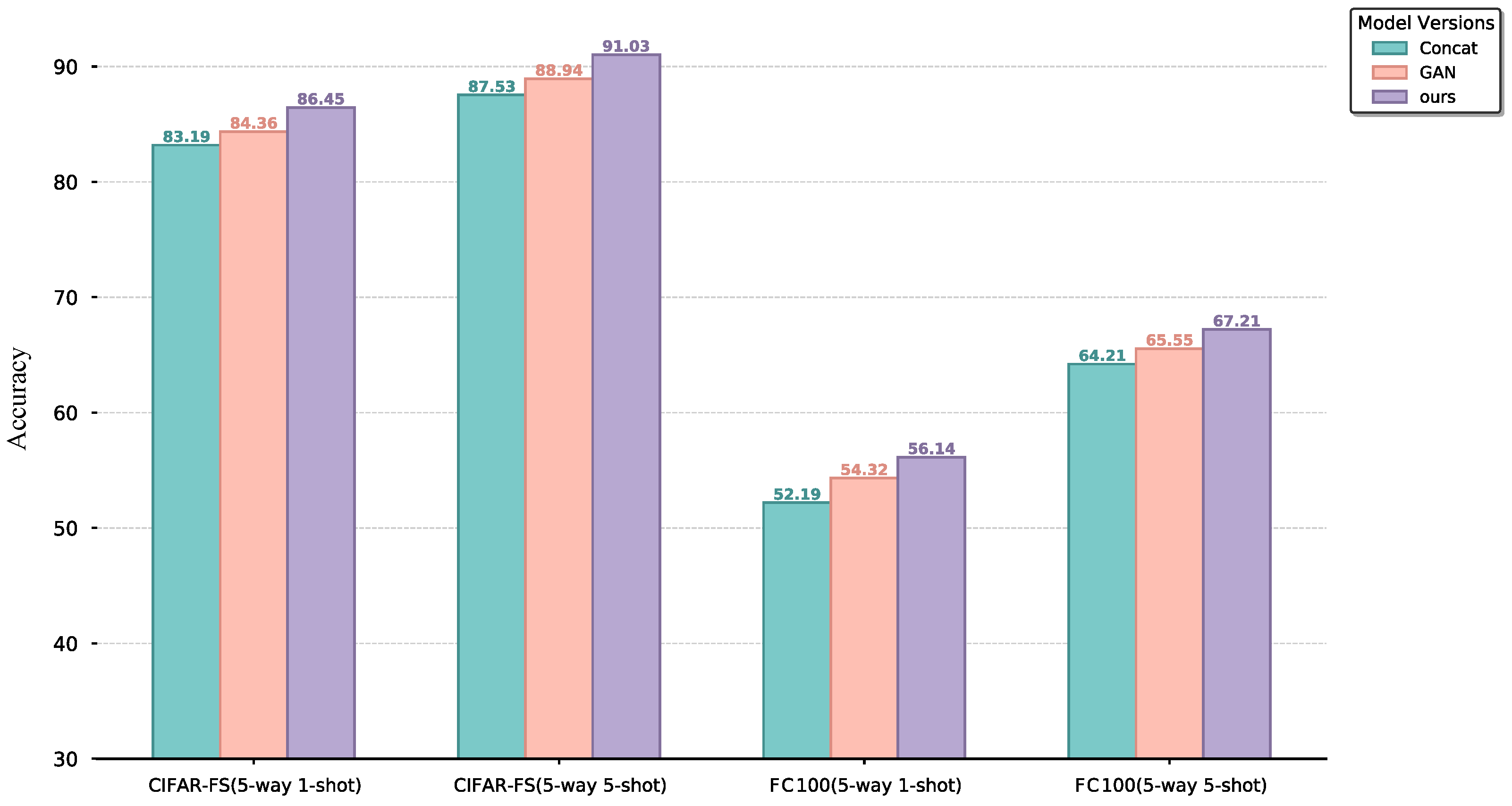

4.3. Comparison Experiments

4.4. Ablation Study

4.4.1. Effectiveness of Image Feature Augmentation

4.4.2. Modeling the Distribution of Learnable Category Embeddings

4.4.3. Cross-Modal Hierarchical Residual Connections

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, H.; Mai, H.; Gong, Y.; Deng, Z.H. Towards well-generalizing meta-learning via adversarial task augmentation. Artif. Intell. 2023, 317, 103875.

- Zeng, Z.; Xiong, D. Unsupervised and few-shot parsing from pretrained language models. Artif. Intell. 2022, 305, 103665.

- Wang, Y.; Wu, L.; Li, J.; Liang, X.; Zhang, M. Are the BERT family zero-shot learners? A study on their potential and limitations. Artif. Intell. 2023, 322, 103953.

- Pachetti, E.; Colantonio, S. A systematic review of few-shot learning in medical imaging. Artif. Intell. Med. 2024, 156, 102949.

- Jia, J.; Feng, X.; Yu, H. Few-shot classification via efficient meta-learning with hybrid optimization. Eng. Appl. Artif. Intell. 2024, 127, 107296.

- Xin, Z.; Chen, S.; Wu, T.; Shao, Y.; Ding, W.; You, X. Few-shot object detection: Research advances and challenges. Inf. Fusion 2024, 107, 102307.

- Liu, F.; Zhang, T.; Dai, W.; Cai, W.; Zhou, X.; Chen, D. Few-shot Adaptation of Multi-modal Foundation Models: A Survey. Artif. Intell. Rev. 2024, 57, 268.

- Finn, C.; Abbeel, P.; Levine, S. Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 1126–1135.

- Elsken, T.; Staffler, B.; Metzen, J.H.; Hutter, F. Meta-Learning of Neural Architectures for Few-Shot Learning. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 12362–12372.

- Ravi, S.; Larochelle, H. Optimization as a Model for Few-Shot Learning. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- Andrychowicz, M.; Denil, M.; Gómez, S.; Hoffman, M.W.; Pfau, D.; Schaul, T.; Shillingford, B.; de Freitas, N. Learning to learn by gradient descent by gradient descent. In Proceedings of the Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2016; Volume 29.

- Santoro, A.; Bartunov, S.; Botvinick, M.; Wierstra, D.; Lillicrap, T. Meta-learning with memory-augmented neural networks. In Proceedings of the 33rd International Conference on International Conference on Machine Learning, ICML’16, New York, NY, USA, 19–24 June 2016; Volume 48, pp. 1842–1850.

- Alhussan, A.A.; Abdelhamid, A.A.; Towfek, S.K.; Ibrahim, A.; Abualigah, L.; Khodadadi, N.; Khafaga, D.S.; Al-Otaibi, S.; Ahmed, A.E. Classification of Breast Cancer Using Transfer Learning and Advanced Al-Biruni Earth Radius Optimization. Biomimetics 2023, 8, 270.

- Gidaris, S.; Bursuc, A.; Komodakis, N.; Perez, P.P.; Cord, M. Boosting Few-Shot Visual Learning with Self-Supervision. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8058–8067.

- Hiller, M.; Ma, R.; Harandi, M.; Drummond, T. Rethinking Generalization in Few-Shot Classification. Adv. Neural Inf. Process. Syst. 2022, 35, 3582–3595.

- Mangla, P.; Singh, M.; Sinha, A.; Kumari, N.; Balasubramanian, V.; Krishnamurthy, B. Charting the Right Manifold: Manifold Mixup for Few-shot Learning. In Proceedings of the 33rd Conference on Neural Information Processing Systems (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019.

- Tian, Y.; Wang, Y.; Krishnan, D.; Tenenbaum, J.B.; Isola, P. Rethinking Few-Shot Image Classification: A Good Embedding Is All You Need? In Computer Vision—ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 266–282.

- Koch, G.R. Siamese Neural Networks for One-Shot Image Recognition. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015.

- Snell, J.; Swersky, K.; Zemel, R. Prototypical networks for few-shot learning. In Proceedings of the 31st International Conference on Neural Information Processing Systems, NIPS’17, Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 4080–4090.

- Zhang, C.; Cai, Y.; Lin, G.; Shen, C. DeepEMD: Differentiable Earth Mover’s Distance for Few-Shot Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 5632–5648.

- Doersch, C.; Gupta, A.; Zisserman, A. CrossTransformers: Spatially-aware few-shot transfer. arXiv 2021, arXiv:2007.11498.

- Bateni, P.; Goyal, R.; Masrani, V.; Wood, F.; Sigal, L. Improved Few-Shot Visual Classification. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 14481–14490.

- Zhang, H.; Xu, J.; Jiang, S.; He, Z. Simple Semantic-Aided Few-Shot Learning. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 28588–28597.

- Vuorio, R.; Sun, S.H.; Hu, H.; Lim, J.J. Multimodal model-agnostic meta-learning via task-aware modulation. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Number 1. Curran Associates Inc.: Red Hook, NY, USA, 2019; pp. 1–12.

- Tang, Y.; Lin, Z.; Wang, Q.; Zhu, P.; Hu, Q. AMU-Tuning: Effective Logit Bias for CLIP-based Few-shot Learning. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 23323–23333.

- Hou, R.; Chang, H.; Ma, B.; Shan, S.; Chen, X. Cross attention network for few-shot classification. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Number 360. Curran Associates Inc.: Red Hook, NY, USA, 2019; pp. 4003–4014.

- Bertinetto, L.; Henriques, J.F.; Torr, P.H.; Vedaldi, A. Meta-learning with differentiable closed-form solvers. arXiv 2018, arXiv:1805.08136.

- Oreshkin, B.; Rodriguez, P.; Lacoste, A. TADAM: Task dependent adaptive metric for improved few-shot learning. Adv. Neural Inf. Process. Syst. 2018, 31, 721–731.

- Xiao, F.; Pedrycz, W. Negation of the Quantum Mass Function for Multisource Quantum Information Fusion with its Application to Pattern Classification. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 2054–2070.

- Xiao, F.; Cao, Z.; Lin, C.T. A Complex Weighted Discounting Multisource Information Fusion with its Application in Pattern Classification. IEEE Trans. Knowl. Data Eng. 2023, 35, 7609–7623.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 8748–8763.

- Oreshkin, B.N.; Rodriguez, P.; Lacoste, A. TADAM: Task dependent adaptive metric for improved few-shot learning. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, NIPS’18, Montréal, QC, Canada, 3–8 December 2018; Curran Associates Inc.: Red Hook, NY, USA, 2018; pp. 719–729.

- Lee, K.; Maji, S.; Ravichandran, A.; Soatto, S. Meta-Learning with Differentiable Convex Optimization. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 10649–10657.

- Kim, J.; Kim, H.; Kim, G. Model-Agnostic Boundary-Adversarial Sampling for Test-Time Generalization in Few-Shot Learning. In Proceedings of the Computer Vision—ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part I. Springer: Cham, Switzerland, 2020; pp. 599–617.

- Dong, B.; Zhou, P.; Yan, S.; Zuo, W. Self-Promoted Supervision for Few-Shot Transformer. In Computer Vision—ECCV 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer Nature: Cham, Switzerland, 2022; pp. 329–347.

- Sun, S.; Gao, H. Meta-AdaM: An Meta-Learned Adaptive Optimizer with Momentum for Few-Shot Learning. Adv. Neural Inf. Process. Syst. 2023, 36, 65441–65455.

- Chen, W.; Si, C.; Zhang, Z.; Wang, L.; Wang, Z.; Tan, T. Notice of Removal: Semantic Prompt for Few-Shot Image Recognition. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 23581–23591.

| Method | CIFAR-FS 5-Way 1-Shot | CIFAR-FS 5-Way 5-Shot | FC100 5-Way 1-Shot | FC100 5-Way 5-Shot |
|---|---|---|---|---|
| ProtoNet | 72.20 ± 0.70 | 83.50 ± 0.50 | 41.54 ± 0.76 | 57.08 ± 0.76 |
| TADAM | - | - | 40.10 ± 0.40 | 56.10 ± 0.40 |
| MetaOptNet | 72.80 ± 0.70 | 84.30 ± 0.50 | 47.20 ± 0.60 | 55.50 ± 0.60 |
| MABAS | 73.51 ± 0.92 | 85.65 ± 0.65 | 42.31 ± 0.75 | 58.16 ± 0.78 |
| RFS | 71.50 ± 0.80 | 86.00 ± 0.50 | 42.60 ± 0.70 | 59.10 ± 0.60 |
| SUN | 78.37 ± 0.46 | 88.84 ± 0.32 | - | - |
| FewTURE | 77.76 ± 0.81 | 88.90 ± 0.59 | 47.68 ± 0.78 | 63.81 ± 0.75 |
| Meta-AdaM | - | - | 41.12 ± 0.49 | 56.14 ± 0.49 |
| SP-CLIP | 82.18 ± 0.40 | 88.24 ± 0.32 | 48.53 ± 0.38 | 61.55 ± 0.41 |
| SemFew | 84.34 ± 0.67 | 89.11 ± 0.54 | 54.27 ± 0.77 | 65.02 ± 0.72 |
| Ours | 86.45 ± 0.56 | 91.03 ± 0.61 | 56.14 ± 0.76 | 67.21 ± 0.75 |
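The "5-Way 1-Shot" and "5-Way 5-Shot" columns in the table above follow the standard episodic protocol. Each test episode draws 5 unseen classes with 1 or 5 labeled support images per class, and the model then classifies held-out query images from the same 5 classes. The tables do not state how the ± values were computed. Much of the few-shot literature reports 95% confidence intervals over test episodes, and the sketch below assumes that convention. The query count per class and the function names are illustrative assumptions, not settings taken from the paper.

```python
import math
import random
import statistics
from typing import Dict, Hashable, List, Sequence, Tuple

Example = Tuple[Hashable, int]  # (sample, episode-local label in 0..n_way-1)


def sample_episode(
    data: Dict[str, Sequence[Hashable]],
    n_way: int,
    k_shot: int,
    n_query: int,
    rng: random.Random,
) -> Tuple[List[Example], List[Example]]:
    """Draw one N-way K-shot episode with disjoint support and query sets."""
    classes = rng.sample(sorted(data), n_way)
    support: List[Example] = []
    query: List[Example] = []
    for label, cls in enumerate(classes):
        items = rng.sample(list(data[cls]), k_shot + n_query)  # without replacement
        support += [(x, label) for x in items[:k_shot]]
        query += [(x, label) for x in items[k_shot:]]
    return support, query


def mean_and_ci95(accuracies: Sequence[float]) -> Tuple[float, float]:
    """Mean accuracy and 95% confidence half-width (normal approximation)."""
    mean = statistics.fmean(accuracies)
    half_width = 1.96 * statistics.stdev(accuracies) / math.sqrt(len(accuracies))
    return mean, half_width


if __name__ == "__main__":
    rng = random.Random(0)
    toy = {f"class_{i}": list(range(i * 100, i * 100 + 20)) for i in range(10)}
    support, query = sample_episode(toy, n_way=5, k_shot=1, n_query=15, rng=rng)
    print(len(support), len(query))  # 5 75
    mean, hw = mean_and_ci95([0.8, 0.9, 1.0, 0.7])
    print(f"{100 * mean:.2f} ± {100 * hw:.2f}")
```

In practice, per-episode accuracies would come from running the classifier on each episode's query set. The half-width shrinks with the square root of the number of episodes, which is why papers in this area typically evaluate on hundreds or thousands of episodes.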

| Method | MRI + Text 5-Way 1-Shot | MRI + Text 5-Way 5-Shot | Pathology + Text 5-Way 1-Shot | Pathology + Text 5-Way 5-Shot |
|---|---|---|---|---|
| ProtoNet | 52.43 ± 0.56 | 57.12 ± 0.64 | 48.32 ± 0.61 | 54.77 ± 0.58 |
| MetaOptNet | 53.12 ± 0.61 | 59.05 ± 0.70 | 49.89 ± 0.67 | 56.23 ± 0.62 |
| MABAS | 54.08 ± 0.63 | 60.21 ± 0.72 | 50.16 ± 0.66 | 57.34 ± 0.68 |
| RFS | 55.00 ± 0.62 | 60.55 ± 0.73 | 51.03 ± 0.69 | 58.10 ± 0.65 |
| SUN | 54.70 ± 0.65 | 59.85 ± 0.71 | 50.78 ± 0.70 | 57.98 ± 0.64 |
| FewTURE | 55.56 ± 0.64 | 61.24 ± 0.69 | 51.21 ± 0.68 | 58.40 ± 0.66 |
| SemFew | 55.12 ± 0.63 | 60.90 ± 0.72 | 50.50 ± 0.67 | 57.89 ± 0.69 |
| Ours | 56.87 ± 0.54 | 62.53 ± 0.66 | 52.49 ± 0.72 | 59.56 ± 0.71 |
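The short script below makes the size of the improvement in the medical settings explicit. It takes the mean accuracies from the table above (dropping the ± terms) and computes, for each column, the margin of our method over the strongest competing method. This is arithmetic on the reported numbers only, not an additional experiment, and the helper name is illustrative.

```python
from typing import Dict, List, Tuple

COLUMNS = ["MRI 1-shot", "MRI 5-shot", "Pathology 1-shot", "Pathology 5-shot"]
BASELINES: Dict[str, List[float]] = {
    "ProtoNet": [52.43, 57.12, 48.32, 54.77],
    "MetaOptNet": [53.12, 59.05, 49.89, 56.23],
    "MABAS": [54.08, 60.21, 50.16, 57.34],
    "RFS": [55.00, 60.55, 51.03, 58.10],
    "SUN": [54.70, 59.85, 50.78, 57.98],
    "FewTURE": [55.56, 61.24, 51.21, 58.40],
    "SemFew": [55.12, 60.90, 50.50, 57.89],
}
OURS = [56.87, 62.53, 52.49, 59.56]


def margins_over_best(
    baselines: Dict[str, List[float]], ours: List[float]
) -> List[Tuple[str, float]]:
    """For each column, return the strongest baseline and our margin over it."""
    results = []
    for i in range(len(ours)):
        best = max(baselines, key=lambda name: baselines[name][i])
        results.append((best, round(ours[i] - baselines[best][i], 2)))
    return results


if __name__ == "__main__":
    for col, (best, margin) in zip(COLUMNS, margins_over_best(BASELINES, OURS)):
        print(f"{col}: +{margin:.2f} over {best}")
```

All four margins lie between 1.16 and 1.31 points, and in every column they are measured against FewTURE, the strongest baseline on both medical datasets.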

| Method | CIFAR-FS 5-Way 1-Shot | CIFAR-FS 5-Way 5-Shot | FC100 5-Way 1-Shot | FC100 5-Way 5-Shot | Breast Cancer (MRI + Text) 5-Way 1-Shot | Breast Cancer (MRI + Text) 5-Way 5-Shot |
|---|---|---|---|---|---|---|
| Without image | 83.16 ± 0.58 | 89.50 ± 0.59 | 53.21 ± 0.75 | 64.95 ± 0.77 | 53.87 ± 0.52 | 60.87 ± 0.65 |
| With image | 86.45 ± 0.56 | 91.03 ± 0.61 | 56.14 ± 0.76 | 67.21 ± 0.75 | 56.87 ± 0.54 | 62.53 ± 0.66 |
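The image-feature-augmentation ablation above can be summarized with two quantities computed from the reported means. The first is the absolute gain in accuracy points. The second is the relative error reduction, meaning the share of the remaining error (100 minus accuracy) that the change removes. The second metric is added here for illustration and is not reported by the authors.

```python
from typing import List

SETTINGS = [
    "CIFAR-FS 1-shot", "CIFAR-FS 5-shot",
    "FC100 1-shot", "FC100 5-shot",
    "MRI + Text 1-shot", "MRI + Text 5-shot",
]
WITHOUT_IMAGE = [83.16, 89.50, 53.21, 64.95, 53.87, 60.87]
WITH_IMAGE = [86.45, 91.03, 56.14, 67.21, 56.87, 62.53]


def absolute_gains(before: List[float], after: List[float]) -> List[float]:
    """Accuracy gain in percentage points, rounded to the table's precision."""
    return [round(a - b, 2) for b, a in zip(before, after)]


def relative_error_reductions(before: List[float], after: List[float]) -> List[float]:
    """Share of the remaining error (100 - accuracy) removed by the change."""
    return [(a - b) / (100.0 - b) for b, a in zip(before, after)]


if __name__ == "__main__":
    gains = absolute_gains(WITHOUT_IMAGE, WITH_IMAGE)
    reductions = relative_error_reductions(WITHOUT_IMAGE, WITH_IMAGE)
    for name, g, r in zip(SETTINGS, gains, reductions):
        print(f"{name}: +{g:.2f} points, {100 * r:.1f}% of the error removed")
```

The absolute gains range from 1.53 to 3.29 points. On all three datasets, the gain is larger in the 1-shot setting than in the 5-shot setting, which fits the intuition that visual evidence helps most when labeled examples are scarcest.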

| Noise Type | CIFAR-FS Baseline-Text | CIFAR-FS Baseline-Image | ΔAcc |
|---|---|---|---|
| No Noise | 84.12 ± 0.58 | 86.45 ± 0.56 | +2.33 |
| Random Replacement (20%) | 81.20 ± 0.72 | 83.75 ± 0.65 | +2.55 |
| Truncation (50%) | 82.10 ± 0.70 | 84.20 ± 0.68 | +2.10 |
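The ΔAcc column compares the two variants under the same noise condition. A complementary view is how much each variant loses relative to its own noise-free accuracy. The sketch below computes both from the reported means. The variable and function names are illustrative.

```python
from typing import List

CONDITIONS = ["No Noise", "Random Replacement (20%)", "Truncation (50%)"]
TEXT_ONLY = [84.12, 81.20, 82.10]   # Baseline-Text column
WITH_IMAGE = [86.45, 83.75, 84.20]  # Baseline-Image column


def drops_from_clean(accs: List[float]) -> List[float]:
    """Accuracy lost under each noise type relative to the noise-free row."""
    clean = accs[0]
    return [round(clean - a, 2) for a in accs[1:]]


def delta_acc(text_only: List[float], with_image: List[float]) -> List[float]:
    """Recompute the table's ΔAcc column (image minus text, same condition)."""
    return [round(i - t, 2) for t, i in zip(text_only, with_image)]


if __name__ == "__main__":
    print(drops_from_clean(TEXT_ONLY))       # [2.92, 2.02]
    print(drops_from_clean(WITH_IMAGE))      # [2.7, 2.25]
    print(delta_acc(TEXT_ONLY, WITH_IMAGE))  # [2.33, 2.55, 2.1]
```

Both variants lose a similar amount under noise, roughly 2.0 to 2.9 points. The image-augmented variant loses slightly less under random replacement (2.70 vs. 2.92) and slightly more under truncation (2.25 vs. 2.02), so its advantage over the text-only baseline persists under both noise types.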

| Method | CIFAR-FS 5-Way 1-Shot | CIFAR-FS 5-Way 5-Shot | FC100 5-Way 1-Shot | FC100 5-Way 5-Shot |
|---|---|---|---|---|
| Without residual connections | 84.23 ± 0.57 | 88.50 ± 0.60 | 53.95 ± 0.77 | 64.44 ± 0.74 |
| With residual connections | 86.45 ± 0.56 | 91.03 ± 0.61 | 56.14 ± 0.76 | 67.21 ± 0.75 |
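One way to judge whether the gaps in the residual-connection ablation above exceed evaluation noise is a two-sample z statistic. The sketch assumes that each ± is a 95% confidence half-width from an independent evaluation, so that the standard error is the half-width divided by 1.96. The table does not confirm this convention, and the function names are illustrative.

```python
import math

# (mean, ± half-width) pairs from the table: with vs. without residual connections
ROWS = {
    "CIFAR-FS 1-shot": ((86.45, 0.56), (84.23, 0.57)),
    "CIFAR-FS 5-shot": ((91.03, 0.61), (88.50, 0.60)),
    "FC100 1-shot": ((56.14, 0.76), (53.95, 0.77)),
    "FC100 5-shot": ((67.21, 0.75), (64.44, 0.74)),
}


def z_from_ci95(mean_a: float, hw_a: float, mean_b: float, hw_b: float) -> float:
    """z statistic for mean_a - mean_b, treating each ± as a 95% CI half-width
    from an independent evaluation (an assumption; the table does not say)."""
    se_a, se_b = hw_a / 1.96, hw_b / 1.96
    return (mean_a - mean_b) / math.sqrt(se_a ** 2 + se_b ** 2)


def two_sided_p(z: float) -> float:
    """Two-sided p-value under a standard normal null."""
    return math.erfc(abs(z) / math.sqrt(2.0))


if __name__ == "__main__":
    for name, ((m_a, h_a), (m_b, h_b)) in ROWS.items():
        z = z_from_ci95(m_a, h_a, m_b, h_b)
        print(f"{name}: z = {z:.2f}, p = {two_sided_p(z):.1e}")
```

Under these assumptions every z is well above 1.96; the smallest, for FC100 1-shot, is about 4.0. If both variants were evaluated on the same episodes, a paired test on per-episode differences would be the more appropriate choice. If the ± values are standard deviations rather than confidence half-widths, this calculation does not apply.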

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, T.; Lyu, L.; Xie, X.; Wei, N.; Geng, Y.; Shu, M. Category Name Expansion and an Enhanced Multimodal Fusion Framework for Few-Shot Learning. Entropy 2025, 27, 991. https://doi.org/10.3390/e27090991

Gao T, Lyu L, Xie X, Wei N, Geng Y, Shu M. Category Name Expansion and an Enhanced Multimodal Fusion Framework for Few-Shot Learning. Entropy. 2025; 27(9):991. https://doi.org/10.3390/e27090991

Chicago/Turabian Style: Gao, Tianlei, Lei Lyu, Xiaoyun Xie, Nuo Wei, Yushui Geng, and Minglei Shu. 2025. "Category Name Expansion and an Enhanced Multimodal Fusion Framework for Few-Shot Learning" Entropy 27, no. 9: 991. https://doi.org/10.3390/e27090991

APA Style: Gao, T., Lyu, L., Xie, X., Wei, N., Geng, Y., & Shu, M. (2025). Category Name Expansion and an Enhanced Multimodal Fusion Framework for Few-Shot Learning. Entropy, 27(9), 991. https://doi.org/10.3390/e27090991