1. Introduction

The information explosion on the Internet has made search engines vital for knowledge access. While early search systems relied on basic keyword matching, advances in big data and artificial intelligence have raised user expectations toward deep query understanding and precise results. This evolution is further accelerated by the rise of retrieval-augmented generation (RAG) systems, where the quality of the initial retrieval phase critically determines the quality of the generated output [1,2].

The core goal of a search engine is to return the results most relevant to the user’s query from massive data, quickly and accurately. The effectiveness of a search engine hinges on its two-stage architecture of retrieval and ranking. The retrieval stage screens out a set of potentially relevant candidates from a large collection of documents, while the ranking stage evaluates the relevance of these candidates in detail. Retrieval effectiveness is crucial to the overall search engine because it determines how many truly relevant documents the system can cover: if key documents are omitted during retrieval, even a powerful ranking algorithm cannot recover them, which seriously degrades overall performance. Therefore, improving retrieval effectiveness is a fundamental challenge in optimizing search engine effectiveness.

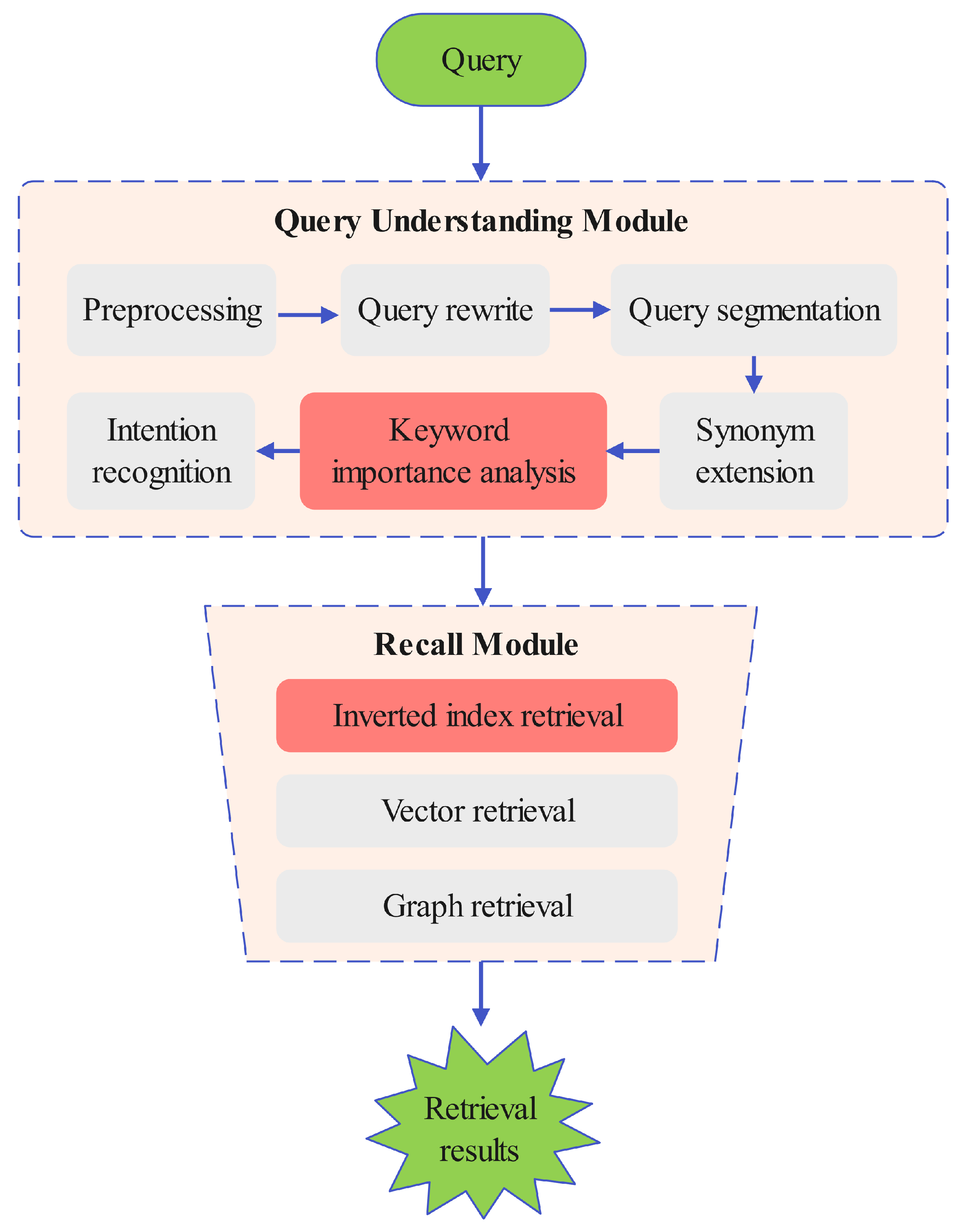

The main challenges in enhancing search retrieval effectiveness stem from two modules: the query understanding module and the recall module. The query understanding module encompasses tasks such as query preprocessing, query rewriting, query segmentation, synonym expansion, keyword importance analysis, and intention recognition. Among these, accurately analyzing the importance of query keywords is crucial for understanding user intent, as it enables the search engine to retrieve highly relevant content from massive information sources; it is therefore of great significance for overall retrieval performance. Traditional methods for keyword importance analysis, such as TF-IDF [3], BM25 [4], and BM25F [5], rely primarily on statistical features. Although these approaches consider term frequency and distribution, they ignore semantic relationships between keywords, which limits their ability to handle the semantic complexity of natural language queries. As a result, they often fall short in assessing keyword importance accurately in real-world knowledge search engines. Learned embedding models, such as Word2Vec [6], GloVe [7], and Qwen3 Embedding [8], can capture rich semantic relationships and similarities between words. These models have been successfully applied to the importance analysis of query keywords and have contributed to improved retrieval effectiveness. However, these solutions still suffer from several limitations in our practical application: (i) their complex network structures result in long inference times, which degrades search performance; (ii) they require a large amount of training data; and (iii) they demand significant computational resources for both training and inference. A more recent context-aware term-weighting approach [9] leverages a large pre-trained language model and the complete context of the query or document to dynamically assign an importance weight to each term. While it achieves superior effectiveness by capturing deeper contextual semantics, its model structure is far more complex, leading to even greater online latency. To overcome these layered challenges, ranging from the statistical shortcomings of traditional methods to the semantic but inefficient nature of neural approaches, we propose a new query keyword importance analysis method called semantic entropy-driven keyword importance analysis (SE-KIA). Our approach is designed to bridge this gap: it captures meaningful semantic relationships without relying on computationally intensive deep neural networks during inference. By leveraging lightweight semantic entropy measures derived from domain-specific query logs and corpus co-occurrence statistics, SE-KIA enables the search engine to estimate keyword importance dynamically in a computationally efficient, explainable, and scalable manner. This makes it particularly suitable for vertical domain search engines, where both semantic understanding and operational efficiency are critical.

In modern search engines, the recall module primarily relies on inverted index retrieval, vector retrieval, and graph retrieval. Vector retrieval uses deep learning models to transform various types of data, including texts and images, into vector representations [10,11]. By measuring the similarity between vectors, it captures semantic relationships and supports complex content matching. Graph retrieval operates on constructed knowledge graphs or user behavior graphs. It mines latent associations through the relationships between nodes and edges, enabling the discovery of in-depth, relationship-based information associations [12,13]. Inverted index retrieval is a classic and fundamental search method whose index structure underpins rapid information retrieval. The core concept of the inverted index is to map each term in the document collection to the documents that contain it [14,15]. This reverse mapping from terms to documents significantly improves retrieval efficiency, and its advantages are particularly prominent for large-scale text data. In this paper, we focus on optimizing inverted index retrieval and propose a hybrid recall strategy that integrates multi-stage and logical combination methods (HRS-MSLC), with the goal of enhancing the relevance of retrieval results.

In short, we focus on the retrieval stage of an inverted-index-based search engine, aiming to improve retrieval effectiveness and thereby the effectiveness of the entire search engine. First, in the query understanding module, we propose a new keyword importance analysis method driven by semantic entropy, enabling the search engine to understand user intent more accurately. Then, in the recall module, we introduce a hybrid recall strategy that integrates multi-stage and logical combination recall, making the search engine’s retrieval results more relevant to the query.

In summary, the contributions of this paper are as follows:

We propose a keyword importance analysis method driven by semantic entropy (SE-KIA) to achieve term weighting. Grounded in the theory of semantic entropy, it uses the user information in search query logs and combines it with the search engine’s corpus, which provides a comprehensive context for understanding keyword importance in the search engine’s domain. By integrating these three elements, SE-KIA enables the search engine to adjust the weights of query keywords dynamically. As a result, it significantly enhances the search engine’s ability to recognize user intent accurately, making the search results more relevant to users’ information needs.

We propose a hybrid recall strategy with multi-stage recall and logical combination recall (HRS-MSLC). Using multi-queue recall, we perform recall separately with the keywords obtained from multi-granularity word segmentation of the query, while simultaneously considering the “AND” and “OR” logical relationships between the keywords. This strategy aims to overcome a limitation of traditional recall methods, which often struggle to balance retrieving a sufficient number of relevant documents against keeping the recalled results highly relevant.

We conduct an in-depth analysis of the effect of SE-KIA and HRS-MSLC in our vertical domain search engine. Hit Rate@1 improves from 85.6% to 92.9% (an increase of 7.3 percentage points), and Hit Rate@3 improves from 87.5% to 94.1% (an increase of 6.6 percentage points). Meanwhile, two problems, recalled documents matching only a single keyword and incorrect keyword importance judgments, have been effectively resolved, demonstrating that the two methods effectively improve search performance.
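Hit Rate@k is not defined in this section. The sketch below assumes the common definition (the fraction of queries for which at least one relevant document appears among the top k results) and uses hypothetical toy data; it also shows that the reported gains are absolute differences, i.e., percentage points.

```python
from typing import Sequence, Set


def hit_rate_at_k(ranked: Sequence[Sequence[str]], relevant: Sequence[Set[str]], k: int) -> float:
    """Fraction of queries with at least one relevant document among the top-k results."""
    if len(ranked) != len(relevant) or not ranked:
        raise ValueError("need at least one query, with one relevance set per ranking")
    hits = sum(1 for docs, rel in zip(ranked, relevant) if any(d in rel for d in docs[:k]))
    return hits / len(ranked)


# Hypothetical toy data: three queries with ranked results and relevance judgments.
ranked = [["d1", "d2", "d3"], ["d4", "d5", "d6"], ["d7", "d8", "d9"]]
relevant = [{"d1"}, {"d6"}, {"d0"}]
print(hit_rate_at_k(ranked, relevant, 1))  # 0.3333333333333333
print(hit_rate_at_k(ranked, relevant, 3))  # 0.6666666666666666

# The reported improvements are differences in percentage points.
print(round(92.9 - 85.6, 1), round(94.1 - 87.5, 1))  # 7.3 6.6
```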

The rest of this paper is organized as follows. Section 2 reviews related work. Section 3 gives an overview of the retrieval stage in a search engine. Section 4 introduces our keyword importance analysis algorithm, SE-KIA. Section 5 introduces our recall strategy, HRS-MSLC. Section 6 presents the experimental results. Finally, Section 7 concludes the paper.

3. Retrieval Stage in Search Engine

A search engine is typically divided into a retrieval stage and a ranking stage. The retrieval stage mainly aims to efficiently identify a candidate set relevant to the user’s query from a vast collection of documents or data. It is like a “coarse-screening” process, with the goal of finding all potentially relevant information as comprehensively as possible to lay the foundation for subsequent precise ranking and filtering. The ranking stage, on the other hand, precisely ranks the recalled candidate documents. By comprehensively considering factors such as the relevance between the documents and the query, the quality of the documents, and user preferences, it calculates the score of each document, ranks the documents that best meet the user’s needs at the top, and presents them to the user.
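The division of labor between the two stages can be illustrated with a minimal sketch. The term-overlap retriever, the scoring weights, and the `quality` field are hypothetical simplifications; the point is only that ranking reorders the candidates it is given and cannot bring back a document the retrieval stage dropped.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Doc:
    text: str
    quality: float  # hypothetical static quality signal in [0, 1]


def retrieve(query: str, docs: Dict[str, Doc], max_candidates: int) -> List[str]:
    """Retrieval stage (coarse screening): keep documents sharing at least one query term."""
    terms = set(query.lower().split())
    hits = [doc_id for doc_id, doc in docs.items() if terms & set(doc.text.lower().split())]
    return hits[:max_candidates]


def rank(query: str, candidates: List[str], docs: Dict[str, Doc]) -> List[str]:
    """Ranking stage: reorder only the retrieved candidates by a finer score."""
    terms = set(query.lower().split())

    def score(doc_id: str) -> float:
        coverage = len(terms & set(docs[doc_id].text.lower().split())) / len(terms)
        return 0.8 * coverage + 0.2 * docs[doc_id].quality  # hypothetical weighting

    return sorted(candidates, key=score, reverse=True)


docs = {
    "d1": Doc("5g timeslot ratio configuration", 0.5),
    "d2": Doc("lte handover guide", 0.9),
    "d3": Doc("nr timeslot ratio 7:3", 0.6),
}
query = "nr timeslot ratio"
print(rank(query, retrieve(query, docs, max_candidates=10), docs))  # ['d3', 'd1']
# With a tighter candidate budget, d3 is never retrieved, so ranking cannot recover it.
print(rank(query, retrieve(query, docs, max_candidates=1), docs))  # ['d1']
```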

In this paper, we focus on the retrieval stage, which is crucial to the entire search engine. Our goal is to improve the retrieval effectiveness of this stage by reducing the omission of valuable information during initial screening. In this way, the performance of the entire search engine can be enhanced and the users’ search experience significantly improved. The retrieval stage architecture of a search engine is shown in Figure 1; it primarily consists of two core modules: the query understanding module and the recall module.

3.1. Query Understanding Module

The query understanding module is responsible for analyzing the query content input by the user and deeply interpreting the user’s search intention. The query understanding module performs complex processing on the user’s query through the following key components:

Query preprocessing: It normalizes queries to facilitate analysis by the downstream components, for example by removing useless symbols, converting letter case uniformly, and truncating extremely long queries.

Query rewrite: It optimizes user queries through two key techniques: query correction, which fixes spelling and syntax errors [29,30], and query completion, which predicts and completes partial queries for better intent matching [31].

Query segmentation: It divides the user query into meaningful words or phrases, which helps the search engine accurately understand the key points of user queries [32]. After word segmentation, the query terms can be precisely matched with the words in the knowledge base index, allowing for the rapid location of relevant documents or information.

Synonym expansion: Users express the same need in different ways. Through synonym expansion, words that are synonymous or nearly synonymous with the query terms can be included in the retrieval scope. In addition, in some domains, the words users choose may not be the ones that best express their needs [33]. Synonym expansion helps uncover users’ true underlying intentions.

Keyword importance analysis: By analyzing the importance of query keywords to achieve precise term weighting, the search engine can quickly grasp the core of the query and produce better retrieval output [9]. This is key to an in-depth understanding of users’ query intentions and is of great significance for improving the accuracy of search recall.

Intention recognition: Through the accurate identification of users’ intentions, the search engine can provide results that meet expectations, thereby enhancing user satisfaction [34].
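Of the components listed above, query preprocessing is the most mechanical, so it is the one sketched here. The rules (which symbols count as "useless", keeping ":" for ratios such as "7:3", and a 64-character limit) are hypothetical choices for illustration, not the rules used by the engine described in this paper.

```python
import re

MAX_QUERY_CHARS = 64  # hypothetical truncation limit


def preprocess(query: str, max_chars: int = MAX_QUERY_CHARS) -> str:
    """Normalize a raw query before segmentation (illustrative rules only)."""
    # Replace symbols with spaces, keeping word characters, whitespace, and ':' (as in "7:3").
    cleaned = re.sub(r"[^\w\s:]", " ", query)
    # Uniform case conversion.
    cleaned = cleaned.lower()
    # Collapse runs of whitespace into single spaces.
    cleaned = " ".join(cleaned.split())
    # Truncate extremely long queries.
    return cleaned[:max_chars].rstrip()


print(preprocess("NR  7:3 Timeslot-Ratio??"))  # nr 7:3 timeslot ratio
```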

Among these components, keyword importance analysis plays a crucial role. In this paper, we focus on this component and propose a new method for analyzing the importance of keywords driven by semantic entropy.

3.2. Recall Module

In the recall module, technologies such as inverted index retrieval, vector retrieval, and graph retrieval are widely used in modern retrieval systems.

Inverted index retrieval: The inverted index is the most classic and crucial retrieval technology in search engines, enabling efficient retrieval of massive amounts of data. Its core idea is to construct a reverse index from terms to the documents containing these terms in the corpus. First, the document set in the corpus undergoes preprocessing, and a dictionary of all unique terms is built. Then, each term corresponds to an inverted list that records the IDs of the documents containing the term, along with additional information such as term frequency and position. When users input a query, the search engine segments it into keywords, retrieves relevant document IDs using the inverted index, and computes the final results through set operations. Currently, there are multiple open-source search engines, such as Solr [35] and Elasticsearch [36], which have built-in functions for efficiently constructing and querying inverted indexes.
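The construction and lookup steps just described can be sketched in a few lines. This toy index (whitespace tokenization, positional postings, AND as set intersection and OR as set union) only illustrates the data structure; it is not how Solr or Elasticsearch implement their indexes internally.

```python
from collections import defaultdict
from typing import Dict, List, Set


def build_inverted_index(docs: Dict[str, str]) -> Dict[str, Dict[str, List[int]]]:
    """Map each term to {doc_id: [positions]}; term frequency is the length of the position list."""
    index: Dict[str, Dict[str, List[int]]] = defaultdict(dict)
    for doc_id, text in docs.items():
        for pos, term in enumerate(text.lower().split()):
            index[term].setdefault(doc_id, []).append(pos)
    return dict(index)


def search(index: Dict[str, Dict[str, List[int]]], keywords: List[str], mode: str = "AND") -> Set[str]:
    """Look up each keyword's posting list and combine the document-ID sets."""
    postings = [set(index.get(k, {})) for k in keywords]
    if not postings:
        return set()
    if mode == "AND":
        return set.intersection(*postings)
    if mode == "OR":
        return set.union(*postings)
    raise ValueError(f"unsupported mode: {mode}")


docs = {
    "d1": "5g enables smart fisheries",
    "d2": "5g wireless technology evolution 5g",
    "d3": "smart fisheries sensors",
}
index = build_inverted_index(docs)
print(index["5g"])  # {'d1': [0], 'd2': [0, 4]}
print(sorted(search(index, ["5g", "fisheries"], mode="AND")))  # ['d1']
print(sorted(search(index, ["5g", "fisheries"], mode="OR")))  # ['d1', 'd2', 'd3']
```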

Vector retrieval: A typical vector retrieval system consists of two stages: offline processing and online retrieval. In the offline stage, documents are encoded into embeddings using pretrained models and then indexed with an approximate nearest neighbor (ANN) [37] algorithm for efficient similarity search. During online retrieval, queries are vectorized and matched against the index, and the top N results are returned by similarity ranking.
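The online matching step can be sketched as an exact top-N search by cosine similarity. This brute-force scan is what an ANN index approximates at scale; the 3-dimensional vectors are hypothetical stand-ins for embeddings from a pretrained encoder, which is not modeled here.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def top_n(query_vec: Sequence[float], index: Dict[str, Sequence[float]], n: int) -> List[Tuple[str, float]]:
    """Exact (brute-force) nearest-neighbor search; an ANN index approximates this linear scan."""
    scored = ((doc_id, cosine(query_vec, vec)) for doc_id, vec in index.items())
    return heapq.nlargest(n, scored, key=lambda item: item[1])


# Hypothetical 3-dimensional embeddings produced offline by some encoder.
index = {"d1": [1.0, 0.0, 0.0], "d2": [0.7, 0.7, 0.0], "d3": [0.0, 0.0, 1.0]}
query_vec = [1.0, 0.1, 0.0]  # hypothetical online query embedding
print([doc_id for doc_id, _ in top_n(query_vec, index, n=2)])  # ['d1', 'd2']
```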

Graph retrieval: Graph retrieval is an information retrieval technique based on the graph data structure, with documents and entities as nodes and their relationships as edges. When a user initiates a retrieval request, the input keywords are converted into query conditions. The system starts from the nodes related to the query and traverses the graph along its edges. It then ranks the reached nodes with a scoring algorithm that considers factors such as relevance and importance, and presents the results that best meet the user’s needs, thereby achieving efficient and accurate information retrieval.
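The traverse-then-score pattern can be sketched as a breadth-first expansion from query-related seed nodes. The hop limit, the decay-based score, and the toy graph are hypothetical; real systems use their own ranking algorithms over much richer graphs.

```python
from collections import deque
from typing import Dict, List, Tuple


def graph_retrieve(graph: Dict[str, List[str]], seeds: List[str],
                   max_hops: int = 2, decay: float = 0.5) -> List[Tuple[str, float]]:
    """Breadth-first traversal from query-related seed nodes.

    Hypothetical scoring: a node reached at hop h from a seed gains decay**h,
    summed over seeds, so nodes close to several seeds rank higher.
    """
    scores: Dict[str, float] = {}
    for seed in seeds:
        seen = {seed}
        frontier = deque([(seed, 0)])
        while frontier:
            node, hops = frontier.popleft()
            if node not in seeds:
                scores[node] = scores.get(node, 0.0) + decay ** hops
            if hops == max_hops:
                continue
            for neighbor in graph.get(node, []):
                if neighbor not in seen:
                    seen.add(neighbor)
                    frontier.append((neighbor, hops + 1))
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


graph = {
    "5g": ["doc_a", "doc_b"],
    "fisheries": ["doc_a", "doc_c"],
}
print(graph_retrieve(graph, seeds=["5g", "fisheries"]))
# [('doc_a', 1.0), ('doc_b', 0.5), ('doc_c', 0.5)]
```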

In this paper, we focus on the optimization of inverted index retrieval and propose a hybrid recall strategy that integrates multi-stage recall and logical combination recall.

4. SE-KIA: Semantic Entropy-Driven Keyword Importance Analysis

In this section, we propose a keyword importance analysis method that achieves term weighting in the query understanding module of a search engine. We operate a search engine in the telecommunications vertical that contains a large number of knowledge documents and aims to help users obtain knowledge efficiently. Previously, our search engine analyzed the importance of query keywords by assigning each term a static weight based solely on part-of-speech analysis. Such a simple method assigns term weights inaccurately. Furthermore, a single global keyword importance table was used, so the weight of each keyword remained constant across all queries, which prevented keyword weights from being adjusted to the query context. Dynamic weighting is important in search engines because the same keyword can have different importance in different queries. For example, for the query “nr 7:3 timeslot ratio”, the user clearly intends to find knowledge about a 7:3 timeslot ratio. However, because the core term “7:3” is tagged as a numeral and numerals receive a very low static weight, the top-ranked results only contained the keyword “timeslot ratio” rather than both “7:3” and “timeslot ratio”, resulting in inaccurate search results.

To address this issue, we explored multiple solutions. Initially, we attempted syntactic analysis but found that standard syntactic parsing typically assumes a sentence has only one root word, whereas search queries often contain multiple root words. Moreover, our analysis of search engine logs showed that 85% of user queries were keyword-based rather than complete sentences. Because these fragmented queries generally violate fundamental syntactic structures, conventional parsing methods are inapplicable. Subsequently, we investigated using standard NLP models, such as BERT [38] and BiLSTM-CRF [39], to quantify keyword importance in queries. However, this approach also encountered multiple difficulties. First, data annotation is very difficult, as annotators cannot directly assess the importance of each keyword and must search the corpus to determine it. Second, the classification task lacked clear boundaries, resulting in unsatisfactory model performance during experimental validation.

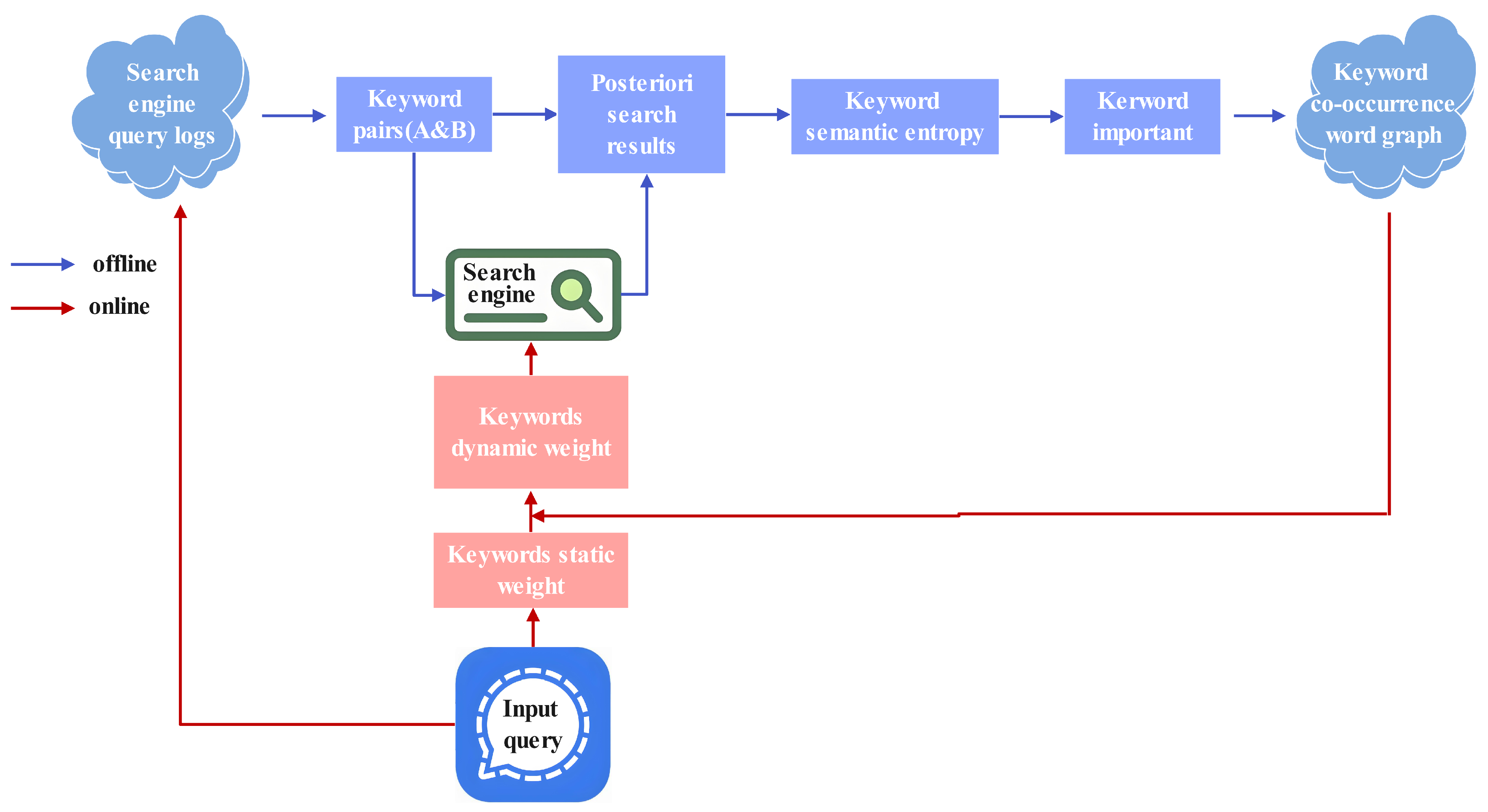

Nevertheless, these attempts revealed a key insight: the importance of query keywords is closely related to the corpus distribution. This suggests that leveraging the existing corpus to determine keyword importance automatically would be more effective. Therefore, we propose a semantic entropy-driven keyword importance analysis (SE-KIA) method, which associates search query logs, the search engine corpus, and semantic entropy. First, our method analyzes search query logs to identify user behavior patterns and extract common domain-specific keyword pairs. Next, the posterior results of joint and separate searches of the query keywords are obtained from the corpus. The corpus is used because, as a vital component of the retrieval system, it encompasses the extensive text data indexed and retrieved by the search engine, thereby offering a comprehensive context for understanding the meaning and significance of words within the search engine’s domain. Then, semantic entropy is applied to determine the relative importance of the keywords in each pair. Finally, a keyword co-occurrence graph is constructed to weight query keywords dynamically online, improving both the search engine’s understanding of user intent and its retrieval effectiveness. The SE-KIA method consists of offline and online components, whose detailed implementations are presented in Algorithms 1 and 2, respectively.

4.1. Semantic Entropy Theoretical Analysis

Consider a keyword pair (A, B). As illustrated in Figure 2, the red circle represents the posterior search results $Y$ when A and B are queried jointly; the green circle represents the posterior search results $X_A$ when only A is queried; and the blue circle represents the posterior search results $X_B$ when only B is queried. The overlap of the red and green circles represents the intersection of $Y$ and $X_A$:

$$I_A = Y \cap X_A \tag{1}$$

The overlap of the red and blue circles represents the intersection of $Y$ and $X_B$:

$$I_B = Y \cap X_B \tag{2}$$

Algorithm 1 SE-KIA (offline component)

- Input: query logs $L$, search corpus $D$, co-occurrence threshold $\theta$, and a search method Search(keywords, $D$) that returns an ordered list of documents ranked by relevance
- Output: keyword co-occurrence word graph $G$
- Step 1: Data extraction
  - Parse $L$ to extract all queries containing exactly two keywords
  - Filter out pairs with frequency $< \theta$, obtaining set $P$
- Step 2: Posterior search result retrieval
  - for each $(A, B) \in P$ do
    - $Y \leftarrow$ Search($A \wedge B$, $D$)
    - $X_A \leftarrow$ Search($A$, $D$)
    - $X_B \leftarrow$ Search($B$, $D$)
  - end for
- Step 3: Semantic entropy calculation
  - for each $(A, B) \in P$ do
    - Compute intersections: $Y \cap X_A = \{a_1, \ldots, a_n\}$ and $Y \cap X_B = \{b_1, \ldots, b_m\}$
    - Calculate occurrence counts: $c_A(a_i) \leftarrow$ number of documents in $X_A$ corresponding to $a_i$; $c_B(b_j) \leftarrow$ number of documents in $X_B$ corresponding to $b_j$
    - Compute associated probabilities $p_A(a_i)$ and $p_B(b_j)$ by Equations (3) and (4)
    - Compute semantic entropies $H_A$ and $H_B$ by Equations (5) and (6)
  - end for
- Step 4: Relative importance determination
  - for each $(A, B) \in P$ do
    - if $H_A < H_B$ then
      - $r(A, B) \leftarrow A$
    - else if $H_A > H_B$ then
      - $r(A, B) \leftarrow B$
    - else
      - $r(A, B) \leftarrow$ tie
    - end if
  - end for
- Step 5: Graph construction
  - Initialize $G = (V, E)$ where $V \leftarrow \emptyset$, $E \leftarrow \emptyset$
  - for each $(A, B) \in P$ do
    - $V \leftarrow V \cup \{A, B\}$
    - $E \leftarrow E \cup \{(A, B, r(A, B))\}$
  - end for
- return $G$
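Algorithm 1 can be sketched end to end in Python. Several parts are assumptions: the toy corpus and AND-semantics `toy_search`, pairs being stored order-normalized, and especially `surrogate_entropy`, which is a hypothetical stand-in for Equations (5) and (6), not the paper's formula. It uses $p(a) = c(a)/|X|$ over $Y \cap X$ and returns $-\log$ of their total mass, chosen only so that larger overlap yields lower entropy, as Step 3 in Section 4.2 describes.

```python
import math
from collections import Counter
from typing import Callable, Dict, List, Optional, Tuple

Pair = Tuple[str, str]
SearchFn = Callable[[List[str]], List[str]]  # keywords -> ranked list of document titles


def extract_pairs(query_log: List[List[str]], theta: int) -> List[Pair]:
    """Step 1: frequent two-keyword queries (pair order is normalized)."""
    counts = Counter(tuple(sorted(q)) for q in query_log if len(q) == 2)
    return [pair for pair, freq in counts.items() if freq >= theta]


def surrogate_entropy(y: List[str], x: List[str]) -> float:
    """HYPOTHETICAL stand-in for Eqs. (5)/(6), not the paper's formula.

    Uses p(a) = c(a) / |X| for each element a in Y ∩ X (our reading of Eqs. (3)/(4))
    and returns -log of their total mass, so more overlap gives lower entropy.
    """
    if not x:
        return math.inf
    counts = Counter(x)  # c(a): documents in X sharing element (title) a
    mass = sum(counts[a] / len(x) for a in set(y) & set(counts))
    return -math.log(mass) if mass > 0 else math.inf


def build_cooccurrence_graph(query_log: List[List[str]], search: SearchFn,
                             theta: int) -> Dict[Pair, Optional[str]]:
    graph: Dict[Pair, Optional[str]] = {}
    for a, b in extract_pairs(query_log, theta):
        y, x_a, x_b = search([a, b]), search([a]), search([b])  # Step 2
        h_a, h_b = surrogate_entropy(y, x_a), surrogate_entropy(y, x_b)  # Step 3
        # Step 4: the keyword with lower semantic entropy is more important (None = tie).
        graph[(a, b)] = a if h_a < h_b else b if h_b < h_a else None
    return graph  # Step 5: each edge (a, b) is labeled with the more important keyword


# Hypothetical corpus of (title, keyword set); two documents share a title (two versions).
CORPUS = [
    ("NR 7:3 timeslot ratio guide", {"7:3", "timeslot ratio"}),
    ("NR 7:3 timeslot ratio guide", {"7:3", "timeslot ratio"}),
    ("Timeslot ratio overview", {"timeslot ratio"}),
    ("Timeslot ratio in LTE TDD", {"timeslot ratio"}),
    ("Timeslot ratio planning", {"timeslot ratio"}),
    ("7:3 split in the NR frame", {"7:3"}),
]


def toy_search(keywords: List[str]) -> List[str]:
    """AND semantics: titles of documents containing every keyword."""
    return [title for title, kws in CORPUS if all(k in kws for k in keywords)]


log = [["7:3", "timeslot ratio"]] * 3 + [["timeslot ratio", "7:3"], ["lte"]]
print(build_cooccurrence_graph(log, toy_search, theta=2))
# {('7:3', 'timeslot ratio'): '7:3'}
```

With this toy data, "7:3" retrieves a result set that overlaps more strongly with the joint results than "timeslot ratio" does, so it receives the lower surrogate entropy and is labeled the more important keyword.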

Algorithm 2 SE-KIA (online component)

- Input: user query $q$, keyword co-occurrence word graph $G$, weight factor $\delta$, POS weight table $W_{\mathrm{pos}}$
- Output: keyword weights $\{w_i\}$
- Step 1: Static weight assignment
  - for each keyword $k_i \in q$ do
    - Retrieve part-of-speech tag $t_i$
    - Assign static weight: $w_i \leftarrow W_{\mathrm{pos}}(t_i)$
  - end for
- Step 2: Dynamic weight adjustment
  - Generate candidate pairs: $P_q \leftarrow \{(k_i, k_j) \mid i < j\}$
  - for each pair $(k_i, k_j) \in P_q$ do
    - if $(k_i, k_j)$ exists in $G$ then
      - Retrieve precomputed relative importance $r(k_i, k_j)$
      - if $r(k_i, k_j) = k_i$ and $w_i < w_j$ then
        - $w_i \leftarrow w_j + \delta$
      - else if $r(k_i, k_j) = k_j$ and $w_j < w_i$ then
        - $w_j \leftarrow w_i + \delta$
      - end if
    - end if
  - end for
- Step 3: New query logging
  - Insert $q$ into the log database $L$
  - Periodically (e.g., daily): extract new pairs $P_{\mathrm{new}}$ from $L$ with frequency $\geq \theta$
  - Update $G$ with $P_{\mathrm{new}}$
- return $\{w_i\}$
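A minimal Python sketch of Algorithm 2 follows. The POS tags, the POS weight table, the default weight for unknown tags, and the graph entry are hypothetical; pairs are looked up order-normalized, matching the offline sketch's convention rather than anything stated in the paper. Like the pseudocode, the loop compares the current (possibly already adjusted) weights.

```python
from itertools import combinations
from typing import Dict, List, Optional, Tuple

Pair = Tuple[str, str]


def dynamic_weights(keywords: List[str], pos_tags: Dict[str, str], pos_table: Dict[str, float],
                    graph: Dict[Pair, Optional[str]], delta: float,
                    query_log: List[List[str]], default_weight: float = 0.1) -> Dict[str, float]:
    # Step 1: static weight from the POS weight table (hypothetical default for unknown tags).
    weights = {k: pos_table.get(pos_tags.get(k, ""), default_weight) for k in keywords}
    # Step 2: for each candidate pair found in G, lift the more important but lighter keyword.
    for a, b in combinations(keywords, 2):
        winner = graph.get(tuple(sorted((a, b))))  # pairs are stored order-normalized
        if winner == a and weights[a] < weights[b]:
            weights[a] = weights[b] + delta  # Eq. (7)
        elif winner == b and weights[b] < weights[a]:
            weights[b] = weights[a] + delta  # Eq. (8)
    # Step 3: log the query; a periodic offline job would rebuild G from the log.
    query_log.append(list(keywords))
    return weights


graph = {("7:3", "timeslot ratio"): "7:3"}  # e.g., output of the offline component
pos_tags = {"nr": "noun", "7:3": "num", "timeslot ratio": "noun"}  # hypothetical tagger output
pos_table = {"noun": 0.5, "num": 0.125}  # hypothetical POS weight table
log: List[List[str]] = []
print(dynamic_weights(["nr", "7:3", "timeslot ratio"], pos_tags, pos_table, graph, 0.25, log))
# {'nr': 0.5, '7:3': 0.75, 'timeslot ratio': 0.5}
```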

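Here, we define the associated probabilities. Let $Y$, $X_A$, and $X_B$ denote the joint, A-only, and B-only posterior search results, respectively. Let $Y \cap X_A = \{a_1, a_2, \ldots, a_n\}$, where $n$ is the number of elements in the intersection of $Y$ and $X_A$, and let $Y \cap X_B = \{b_1, b_2, \ldots, b_m\}$, where $m$ is the number of elements in the intersection of $Y$ and $X_B$.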

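For the sets $Y$ and $X_A$, we define the probability that element $a_i$ occurs in set $X_A$ as

$$p_A(a_i) = \frac{c_A(a_i)}{|X_A|}, \quad i = 1, \ldots, n \tag{3}$$

where $c_A(a_i)$ is the number of occurrences of element $a_i$ in set $X_A$ and $|X_A|$ is the number of documents in $X_A$. Since multiple distinct documents in the corpus may share the same title or correspond to the same semantic topic (e.g., different versions of an article), $c_A(a_i)$ counts all such documents collectively. Similarly, for the sets $Y$ and $X_B$, we define the probability that element $b_j$ occurs in set $X_B$ as

$$p_B(b_j) = \frac{c_B(b_j)}{|X_B|}, \quad j = 1, \ldots, m \tag{4}$$

where $c_B(b_j)$ is the number of occurrences of element $b_j$ in set $X_B$.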

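Now, we construct the similarity measure based on semantic entropy. The similarity between sets $X_A$ and $Y$ is given by Equation (5), which computes the semantic entropy $H_A$ from the probabilities $p_A(a_1), \ldots, p_A(a_n)$ defined in Equation (3). The similarity between sets $X_B$ and $Y$ is given by Equation (6), which computes the semantic entropy $H_B$ from the probabilities $p_B(b_1), \ldots, p_B(b_m)$ defined in Equation (4).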

We then take $H_A$ as the semantic entropy of keyword A and $H_B$ as the semantic entropy of keyword B. In this way, we can measure the relative importance of keywords A and B by their semantic entropy: the smaller the semantic entropy, the higher the relative importance of the keyword.

Based on this, when analyzing keyword importance in the search engine, we can dynamically adjust keyword weights according to their relative importance. Specifically, when a keyword in a pair is deemed more important but has a lower weight than its counterpart, its weight is adjusted to the sum of the counterpart’s static weight and an additional weight factor $\delta$. This mechanism enhances the keyword’s contribution to the overall query. For example, if A has higher importance but a lower weight than B, its weight is increased as follows:

$$w_A' = w_B + \delta \tag{7}$$

Similarly, if B has higher importance but a lower weight than A, its weight is increased as follows:

$$w_B' = w_A + \delta \tag{8}$$

where $w_A$ and $w_B$ are the static weights of A and B, and $w_A'$ and $w_B'$ are the adjusted weights.

In this way, we can dynamically adjust the weights of keywords according to the context information in the query. This makes the assignment of keyword weights more accurate and improves search retrieval effectiveness.

4.2. SE-KIA Architecture

As illustrated in Figure 3, the SE-KIA architecture comprises online and offline components.

The offline component constructs a keyword co-occurrence graph from search engine query logs, as described in Algorithm 1. Its steps are as follows:

Step 1: Data extraction: Search engine data, including user query logs and click-through behaviors, provides rich and valuable information. Especially for vertical search engines, mining user data helps us understand users’ usage habits and preferences regarding different keywords, enabling more accurate keyword importance analysis and intent understanding. To construct meaningful keyword pairs, we first extract a large volume of user query logs from our search engine database. Through statistical analysis of these logs, we make two key observations: (i) Queries composed of exactly two keywords account for a significant proportion of daily search traffic (approximately 68% in our dataset), indicating their practical relevance. (ii) For such two-keyword queries frequently submitted by users, the paired keywords tend to have strong semantic associations. They co-occur not only in user queries but also in the corpus with a significantly higher frequency than random keyword pairs. These observations motivate us to focus on frequent two-keyword queries, as they naturally form semantically coherent keyword pairs that align with real user search behaviors. In addition, longer queries (with three or more keywords) introduce higher combinatorial complexity and are often less frequent, leading to sparse and noisy data. Focusing on the most frequent two-keyword queries provides a robust and manageable set of keyword pairs for SE-KIA. Thus, we filter the query logs to retain only frequent queries consisting of exactly two keywords, using them as the basis for constructing our keyword pairs.

Step 2: Posterior search result retrieval: For each keyword pair (A, B), we first retrieve the joint posterior search results $Y$ by querying both keywords together against the search corpus. We then obtain the individual posterior search results $X_A$ and $X_B$ by querying each keyword separately.

Step 3: Semantic entropy calculation: The semantic entropy of each keyword is computed from the posterior search results. The semantic entropy of sets $Y$ and $X_A$ is computed by Equation (5), whereas that of sets $Y$ and $X_B$ is computed by Equation (6). A higher overlap in search results between sets $Y$ and $X_A$, or between sets $Y$ and $X_B$, indicates lower semantic entropy (i.e., greater semantic certainty).

Step 4: Relative importance determination: We determine the relative importance of keywords through semantic entropy analysis. Within each keyword pair, the keyword with lower semantic entropy is assigned higher relative importance.

Step 5: Graph construction: After extracting all keyword pairs and their relative importance, we construct a keyword co-occurrence word graph. This graph explicitly captures the importance relationships between co-occurring keywords in a query, enabling the search engine to dynamically adjust keyword weights during online query processing.

The online component performs dynamic keyword weighting using the co-occurrence word graph during online query execution, as described in Algorithm 2. Its steps are as follows:

Step 1: Static weight assignment: When a user inputs a query, the search engine first performs query preprocessing, query rewriting, query segmentation, and synonym expansion. SE-KIA then assigns each keyword a static initial weight based on its part of speech, so words with different parts of speech receive different static weights. For example, in our vertical search engine, business-specific nouns identified through expert feedback receive a higher static weight.

Step 2: Dynamic weight adjustment: First, we generate all possible keyword pairs for each query. For example, given a query $q = (k_1, k_2, \ldots, k_N)$, we construct the candidate pairs $\{(k_i, k_j) \mid 1 \le i < j \le N\}$. Then, for each pair $(k_i, k_j)$, we check whether it exists in the keyword co-occurrence graph and, if so, retrieve its precomputed relative importance. For the more important keyword in each pair, we dynamically increase its weight by Equation (7) or Equation (8), thereby increasing its importance in the overall query. If a keyword appears in multiple co-occurring pairs and consistently has higher relative importance, indicating its critical role in the query, we take the maximum static weight among all its paired keywords and add the accumulated weight factors to obtain its adjusted weight. In this way, dynamic weight adjustment based on semantic entropy is realized, making the recall results more accurate.
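The multi-pair rule admits more than one reading; the sketch below implements one of them as an assumption, not as the paper's code. A keyword is lifted only in pairs where it is more important but statically lighter, and its new weight is the largest static weight among those counterparts plus $\delta$ times the number of such pairs. All weights and graph entries are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Pair = Tuple[str, str]


def multi_pair_adjust(static: Dict[str, float], graph: Dict[Pair, Optional[str]],
                      delta: float) -> Dict[str, float]:
    """One possible reading of the multi-pair rule (an assumption, not the paper's code).

    For each keyword that is the more important member of one or more pairs in which it
    has the lower static weight, its new weight is the maximum static weight among those
    counterparts plus delta times the number of such pairs.
    """
    keywords = list(static)
    lifted: Dict[str, List[str]] = {}
    for i, a in enumerate(keywords):
        for b in keywords[i + 1:]:
            winner = graph.get(tuple(sorted((a, b))))
            if winner is None:
                continue
            loser = b if winner == a else a
            if static[winner] < static[loser]:
                lifted.setdefault(winner, []).append(loser)
    adjusted = dict(static)
    for keyword, counterparts in lifted.items():
        adjusted[keyword] = max(static[c] for c in counterparts) + delta * len(counterparts)
    return adjusted


static = {"nr": 0.25, "7:3": 0.125, "timeslot ratio": 0.5}  # hypothetical static weights
graph = {("7:3", "nr"): "7:3", ("7:3", "timeslot ratio"): "7:3"}  # hypothetical graph entries
print(multi_pair_adjust(static, graph, delta=0.25))
# {'nr': 0.25, '7:3': 1.0, 'timeslot ratio': 0.5}
```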

Step 3: New query logging: In our search engine, new user queries are logged into the log database in real time. This enables the regular extraction of novel keyword pairs from query logs, which are then incorporated into the keyword co-occurrence graph. Therefore, the coverage of the graph continues to expand to include more keyword pairs.

5. HRS-MSLC: Hybrid Recall Strategy with Multi-Stage and Logical Combination Recall

In this section, we present a hybrid recall strategy for the recall module that integrates multi-stage and logical combination recall. Several open-source search engines, such as Solr and Elasticsearch, can be used to build an inverted index search engine efficiently. Our vertical search engine employs Solr for its robust phrase query and Boolean query capabilities and integrates our hybrid recall strategy, significantly enhancing retrieval accuracy.

5.1. Multi-Stage Recall Strategy

The query segmentation component in a search engine usually adopts multi-granularity word segmentation, aiming to analyze the user’s intention comprehensively and ensure a sufficient number of retrieved results. First, coarse-grained segmentation yields phrases or words that capture the complete semantics, match the user’s precise expression, and improve retrieval relevance. Then, fine-grained segmentation may further split phrases into words, or words into subwords, to cover more information, enrich the retrieved results, and ensure a sufficient number of retrieved items. Let a query $q$ be segmented into the following multi-granularity terms:

$$T(q) = P(q) \cup S(q), \quad P(q) = \{p_1, \ldots, p_u\}, \quad S(q) = \{s_1, \ldots, s_v\}$$

where $P(q)$ contains the coarse-grained terms (phrases and words) and $S(q)$ contains the fine-grained terms (subwords). The traditional single-stage scoring function for document $d$ is

$$\mathrm{score}(d) = \sum_{p \in P(q)} w_p \, f(p, d) + \sum_{s \in S(q)} w_s \, f(s, d)$$

where $f(\cdot, d)$ is a generalized relevance scoring function, with $f(p, d)$ and $f(s, d)$ assessing the semantic relevance of term $p$ and term $s$ in document $d$, respectively, and $w_p$ and $w_s$ are term weights. In other words, phrases, words, and subwords are all used simultaneously for retrieval. However, this leads to a critical issue: high-frequency subwords may dominate the results, suppressing higher-precision phrase and word matches and thus compromising retrieval accuracy. For example, given the query “bts3203”, the segmentation component further decomposes it into the subwords “bts” and “3203”. The search engine then uses all three terms (the original query and its subwords) concurrently to recall related documents. This creates a scoring imbalance: documents containing only the high-frequency subword “bts” receive inflated relevance scores and dominate the top results, whereas documents containing the exact term “bts3203”, although more semantically relevant, are deprioritized or even squeezed out of the recall results because of their lower scores, ultimately degrading retrieval precision.

Therefore, we propose a multi-stage recall strategy that replaces the original single-stage approach, which combined phrases, words, and subwords, with a two-stage recall approach. Specifically, suppose we need to retrieve the $K$ most relevant documents:

Stage 1: Phrase-priority retrieval: Keywords derived from coarse-grained word segmentation are used for initial retrieval.

Stage 2: Subword-controlled expansion (applied when Stage 1 yields insufficient results): Keywords derived from fine-grained word segmentation are used for supplementary retrieval.

To prevent the supplementary stage from over-representing subword matches, which are inherently more numerous but potentially less meaningful, we introduce a damping factor $\lambda$. This factor controls the contribution of the subword-based retrieval score to the final ranked list.

Final Results:

where

is a fusion threshold. This hierarchical approach enhances retrieval effectiveness while mitigating the issue where subword matches disproportionately dominate coarse-grained term results.
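A minimal sketch of the two-stage recall, assuming per-stage scores are already computed, that the damping factor multiplies the stage-2 (subword) score, that the fusion threshold is applied to that damped score, and that stage-1 results always precede stage-2 supplements. The default values of `damping` and `threshold` are hypothetical, not values reported for the system.

```python
def two_stage_recall(
    stage1: dict[str, float],  # doc_id -> coarse-grained (phrase/word) score
    stage2: dict[str, float],  # doc_id -> raw fine-grained (subword) score
    k: int,
    damping: float = 0.3,      # hypothetical damping factor, 0 < damping < 1
    threshold: float = 0.5,    # hypothetical fusion threshold
) -> list[str]:
    """Return up to k doc ids: stage-1 hits first, then damped stage-2 supplements."""
    primary = sorted(stage1, key=stage1.get, reverse=True)
    # Damp subword scores and consider only documents stage 1 did not already return.
    damped = {d: damping * s for d, s in stage2.items() if d not in stage1}
    supplement = sorted(
        (d for d, s in damped.items() if s >= threshold),
        key=damped.get,
        reverse=True,
    )
    return (primary + supplement)[:k]


stage1 = {"doc_exact": 2.0}
stage2 = {"doc_exact": 1.0, "doc_bts_a": 3.0, "doc_bts_b": 1.0}
print(two_stage_recall(stage1, stage2, k=3))  # ['doc_exact', 'doc_bts_a']
```

Here `doc_bts_b` is dropped because its damped score (0.3) falls below the threshold, while `doc_bts_a` (0.9) is admitted only after the exact match.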

In our implementation, we employ a multi-queue recall approach. The system processes both recall stages concurrently through separate queues, which are merged after completion. The primary queue (stage 1) results take precedence, while the secondary queue (stage 2) serves as a supplement when the initial recall yields insufficient results. This architecture reduces the time complexity from to , which reduces the time required for search retrieval. This improvement in time efficiency is achieved at the cost of minimal space overhead, with the space complexity increasing from to for storing document IDs and titles. This represents a highly favorable trade-off in search engine design, where we leverage readily available memory to achieve lower retrieval time while simultaneously securing a substantial improvement in retrieval effectiveness.
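The concurrent multi-queue execution can be sketched with Python’s standard `concurrent.futures` module. The inverted index `INDEX` and the `toy_search` function are hypothetical stand-ins for the real search backend; only the structure (submit both queues, wait for both, then merge with primary precedence) reflects the description above.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

SearchFn = Callable[[list[str]], list[str]]


def run_recall_queues(
    coarse_terms: list[str],
    fine_terms: list[str],
    search: SearchFn,
) -> tuple[list[str], list[str]]:
    """Issue the stage-1 and stage-2 recall queries concurrently and wait for both."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        primary = pool.submit(search, coarse_terms)
        secondary = pool.submit(search, fine_terms)
        return primary.result(), secondary.result()


# Hypothetical inverted index standing in for the real search backend.
INDEX = {"bts3203": ["d1", "d2"], "bts": ["d3", "d1", "d4"], "3203": ["d2", "d5"]}


def toy_search(terms: list[str]) -> list[str]:
    """Return documents matching any term, in posting-list order, without repeats."""
    hits: list[str] = []
    for term in terms:
        for doc_id in INDEX.get(term, []):
            if doc_id not in hits:
                hits.append(doc_id)
    return hits


primary, secondary = run_recall_queues(["bts3203"], ["bts", "3203"], toy_search)
# Primary results keep precedence; secondary hits only fill in what is missing.
merged = primary + [d for d in secondary if d not in primary]
print(merged)  # ['d1', 'd2', 'd3', 'd4', 'd5']
```

Threads fit this case because each queue mostly waits on I/O to the search backend; CPU-bound scoring written in pure Python would not run in parallel under the GIL.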

5.2. Logical Combination Recall Strategy

In the traditional search recall process, only the “OR” relation among query keywords is used for retrieval. This approach has two significant limitations. First, documents in which a single query term occurs frequently often receive inflated relevance scores and are ranked high despite their potentially low actual relevance to the query. Second, more relevant documents that contain multiple query terms may be ranked lower because of their lower scores. This mismatch between retrieval scoring and true document relevance significantly compromises search quality. For instance, when searching for “5G enables smart fisheries”, the top-ranked result might be a document such as “5G Wireless Technology Evolution White Paper”. Although it is completely irrelevant to smart fisheries applications, it receives a high score solely because it contains numerous occurrences of terms such as “5G”. This illustrates how term-frequency bias can lead to fundamentally mismatched results.

To address these limitations, we propose a logical combination recall strategy that combines both “OR” and “AND” relations between query terms. Recognizing the inherent complexity of real-world search queries, our approach balances two modes: (1) precision-focused retrieval, which applies strict “AND” logic to retrieve documents containing all specified keywords; this is particularly valuable for targeted queries requiring exact matches, as it ensures that only highly relevant results are returned; and (2) recall-oriented retrieval, which employs “OR” logic to capture documents containing any query term, ensuring comprehensive coverage of potentially relevant results. This dual-mode strategy achieves a favorable trade-off between precision and recall.
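The precision/recall contrast between the two relations shows up clearly on toy data. This sketch treats each document as a set of keywords and assumes the segmenter keeps “5G”, “smart”, and “fisheries” as the query keywords; the documents are hypothetical.

```python
def or_match(query_terms: set[str], docs: dict[str, set[str]]) -> set[str]:
    """Recall-oriented: documents containing ANY query term."""
    return {name for name, terms in docs.items() if query_terms & terms}


def and_match(query_terms: set[str], docs: dict[str, set[str]]) -> set[str]:
    """Precision-oriented: documents containing ALL query terms."""
    return {name for name, terms in docs.items() if query_terms <= terms}


query = {"5G", "smart", "fisheries"}
docs = {
    "5G white paper": {"5G", "wireless", "technology", "evolution"},
    "5G smart fisheries case": {"5G", "smart", "fisheries", "aquaculture"},
    "smart farming report": {"smart", "farming", "sensors"},
}

print(sorted(or_match(query, docs)))
# ['5G smart fisheries case', '5G white paper', 'smart farming report']
print(sorted(and_match(query, docs)))
# ['5G smart fisheries case']
```

“OR” recalls all three documents, including the off-topic white paper, while “AND” keeps only the fisheries case; neither alone gives both coverage and precision.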

The processing pipeline of our logical combination recall strategy consists of the following steps:

Selective “AND” constraints: All query keywords are sorted by weight, and the 5 highest-weighted keywords are selected; when there are fewer than 5 keywords in total, the top 80% of the highest-weighted keywords (rounded up) are selected instead. These selected keywords form mandatory “AND” conditions.

Comprehensive “OR” coverage: All query keywords (including the AND-selected ones) participate in “OR” matching.

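Combined logical operation: The final recall condition expression for the Solr search engine is as follows:

$$(k_1 \ \mathrm{AND}\ k_2 \ \mathrm{AND}\ \cdots \ \mathrm{AND}\ k_m)\ \mathrm{OR}\ (k_1 \ \mathrm{OR}\ k_2 \ \mathrm{OR}\ \cdots \ \mathrm{OR}\ k_n),$$

where $k_1, \dots, k_n$ are the query keywords sorted by weight in descending order and $k_1, \dots, k_m$ ($m \le n$) are the keywords selected for the “AND” constraints.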

This expression balances two goals: (1) Precise recall first: the “AND” operation strictly matches the important keywords, so documents containing all selected keywords are prioritized in recall. (2) Wide coverage guarantee: the “OR” operation maintains broad result coverage, since documents containing any of the keywords can still be recalled. Therefore, the search engine prioritizes documents containing multiple query keywords, mitigating the problem of retrieved documents that contain only a single query keyword and making the recall results more accurate and more relevant to the user’s query.
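The keyword-selection rule and the combined expression can be sketched as a function that emits a query string in the Lucene/Solr standard boolean syntax (`AND`, `OR`, parentheses). This is an illustration under assumptions: the keyword weights are hypothetical, field names and escaping of Lucene special characters are omitted, and the exact string sent to Solr by the system is not given in the text.

```python
import math


def select_and_keywords(
    weighted: dict[str, float], top_n: int = 5, ratio: float = 0.8
) -> list[str]:
    """Top-5 keywords by weight; if fewer than 5 exist, the top 80% rounded up."""
    ranked = sorted(weighted, key=weighted.get, reverse=True)
    if len(ranked) < top_n:
        return ranked[: math.ceil(ratio * len(ranked))]
    return ranked[:top_n]


def build_recall_query(weighted: dict[str, float]) -> str:
    """(k1 AND ... AND km) OR (k1 OR ... OR kn) in Lucene/Solr boolean syntax."""
    and_part = " AND ".join(select_and_keywords(weighted))
    or_part = " OR ".join(sorted(weighted, key=weighted.get, reverse=True))
    return f"({and_part}) OR ({or_part})"


weights = {"5G": 0.9, "enables": 0.2, "smart": 0.6, "fisheries": 0.8}
print(build_recall_query(weights))
# (5G AND fisheries AND smart AND enables) OR (5G OR fisheries OR smart OR enables)
```

Because of the rounding up, $\lceil 0.8n \rceil = n$ for every $n \le 4$, so under this rule a query with fewer than five keywords places all of them in the “AND” clause. Under Lucene’s default boolean scoring, which sums the scores of matching optional clauses, a document satisfying both clauses accumulates both contributions, which is how the “AND” part can lift multi-keyword matches without shrinking the “OR” coverage.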

5.3. Hybrid Recall Strategy

To systematically integrate the advantages of both the multi-stage and the logical combination recall approaches, we propose a hybrid recall strategy with multi-stage and logical combination (HRS-MSLC). The architecture of HRS-MSLC is shown in Figure 4, and its processing steps are as follows:

Word segmentation: The domain-specific segmentation component analyzes each query using multi-granularity tokenization, generating both coarse- and fine-grained keyword sets.

Parallel retrieval: The recall module employs two concurrent retrieval queues: queue1 uses coarse-grained keywords, and queue2 processes fine-grained keywords. Within both queues, we incorporate the logical combination strategy, combining “AND” and “OR” relations between the keywords.

Multi-queue fusion: The system merges results from the two queues through a prioritized fusion strategy: (1) Each queue’s results are first ranked by their recall scores. (2) Queue1 results take precedence in the final output. (3) Queue2 results serve as a supplement when queue1 returns insufficient matches. (4) Duplicate results are removed so that each document appears only once.
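The four fusion rules can be written as a small merge function. This is a sketch under assumptions, not the system’s implementation: each queue is taken to return `(doc_id, recall score)` pairs, scores are compared only within a queue, and the result size `k` is a hypothetical parameter.

```python
def fuse_queues(
    queue1: list[tuple[str, float]],  # (doc_id, recall score) from coarse-grained keywords
    queue2: list[tuple[str, float]],  # (doc_id, recall score) from fine-grained keywords
    k: int,
) -> list[str]:
    """Prioritized fusion: rank each queue, queue1 first, queue2 fills the gap, no duplicates."""
    ranked1 = sorted(queue1, key=lambda hit: hit[1], reverse=True)  # rule (1)
    ranked2 = sorted(queue2, key=lambda hit: hit[1], reverse=True)  # rule (1)

    fused: list[str] = []
    seen: set[str] = set()
    for doc_id, _ in ranked1 + ranked2:  # rule (2): every queue1 hit is visited first
        if len(fused) == k:              # rule (3): queue2 hits only fill remaining slots
            break
        if doc_id not in seen:           # rule (4): skip documents already placed
            seen.add(doc_id)
            fused.append(doc_id)
    return fused


q1 = [("d2", 1.1), ("d1", 2.4)]
q2 = [("d1", 5.0), ("d3", 0.7), ("d4", 1.9)]
print(fuse_queues(q1, q2, k=3))  # ['d1', 'd2', 'd4']
```

Note that d1’s higher score in queue2 does not change its position: precedence is decided by queue, not by comparing scores across queues, which matches rule (2).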