1. Introduction

The (quantum) relative entropy is a central concept in quantum information theory, quantifying the distinguishability between quantum states and serving as a key tool in the analysis of information processing tasks.

One of its most important properties is the monotonicity under quantum channels (a quantum channel is a completely positive trace-preserving linear map; see later for a rigorous definition), which expresses the idea that state distinguishability cannot increase during the dynamical evolution of a quantum system that interacts with an environment. This property is also known as the data processing inequality (DPI).

The concept of relative entropy was first introduced by Umegaki in 1962 [1] in the setting of $\sigma$-finite von Neumann algebras, and it was later extended to arbitrary von Neumann algebras by Araki in 1976 [2] by means of Tomita–Takesaki modular theory.

The proof of the monotonicity of relative entropy is closely related to the proof of the strong subadditivity (SSA) of the von Neumann entropy. The latter serves as a measure of the mixedness of quantum states, and the validity of the SSA ensures that quantum uncertainty behaves consistently across composite systems, placing fundamental constraints on how information and correlations can be distributed among subsystems.

The first step toward proving SSA was made in 1968 by Lanford and Robinson [3], who established the subadditivity of the von Neumann entropy and conjectured its stronger form. In 1973, Lieb [4], building on earlier work by Wigner, Yanase, and Dyson, proved several key properties concerning the convexity and concavity of operator functions and trace functionals.

These results enabled Lieb and Ruskai to establish the full proof of the strong subadditivity of the von Neumann entropy for both finite and infinite-dimensional Hilbert spaces later that same year in their landmark paper [5]. In that work, they also derived, though without emphasizing it as such, the monotonicity of relative entropy under the partial trace operation, which constitutes a special case of quantum channels.

The first explicit and general proof of the monotonicity of relative entropy under the action of quantum channels was provided two years later by Lindblad in his seminal 1975 paper [6], thanks to the results established by Lieb and Ruskai in [5].

A further breakthrough was achieved by Uhlmann in 1977, who extended the property of monotonicity to a broader class of transformations: the adjoints of unital Schwarz maps [7].

The equivalence between SSA and the monotonicity of relative entropy was rigorously established by Petz in the 1980s [8,9]. Later, in [10], Petz proposed a new proof of monotonicity and posed the question of whether this property also holds for positive (but not necessarily completely positive) trace-preserving linear maps. This question remained open for several years until it was affirmatively resolved in 2017 by Müller-Hermes and Reeb in [11]. Other pertinent references related to the monotonicity of relative entropy are [12,13,14,15].

In this paper, we focus specifically on the demonstration strategies proposed by Petz and Uhlmann in [10] and [7], respectively, which we believe offer particularly interesting and useful complementary perspectives. Petz’s proof relies on operator-algebraic methods and an explicit representation of the relative entropy in terms of a suitable inner product, an idea inspired by the previously quoted work of Araki.

However, as we show, his original use of Jensen’s operator inequality contains a subtle flaw when applied in its contractive form. We point out how Petz himself and Nielsen corrected the problem in order to restore the validity of Petz’s original approach.

Uhlmann’s proof, on the other hand, is formulated in terms of interpolations of positive sesquilinear forms, a technique that naturally extends to non-invertible states and arbitrary quantum channels.

Despite their foundational importance, both Petz’s and Uhlmann’s proofs are often considered technically demanding and conceptually opaque. Petz’s approach, while elegant, involves intricate manipulations of operator inequalities that can obscure the overall structure of the argument. Uhlmann’s method introduces a formalism that is unfamiliar to many working in quantum information theory and is rarely presented in full detail in the literature.

We aim to clarify and systematize both strategies by reformulating them in a unified, finite-dimensional, operator-theoretic framework, thus making both proofs more accessible to a wider audience.

This paper is structured as follows: In Section 2, we recall the necessary preliminaries on operator convexity, partial trace, and quantum channels. In Section 3, we analyze Petz’s proof, highlight its limitations, and discuss its correction. In Section 4, we develop Uhlmann’s approach in detail and derive the monotonicity of relative entropy in the general setting.

2. Mathematical Preliminaries

In this section, we recall the basic definitions and results needed in the rest of the paper.

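Given a finite-dimensional Hilbert state space $\mathcal{H}$ over the field $\mathbb{F}=\mathbb{R}$ or $\mathbb{C}$, $\mathcal{B}(\mathcal{H})$ indicates the $*$-algebra of linear (bounded) operators $A:\mathcal{H}\to\mathcal{H}$.

We recall that $A\in\mathcal{B}(\mathcal{H})$ is as follows:

Positive semi-definite, written as $A\ge 0$, if $\langle x,Ax\rangle\ge 0$ for all $x\in\mathcal{H}$;

Positive definite, written as $A>0$, if $\langle x,Ax\rangle>0$ for all $x\in\mathcal{H}\setminus\{0\}$;

Hermitian if $A^\dagger=A$, where $A^\dagger$ is the adjoint operator of A, defined by the formula $\langle A^\dagger x,y\rangle=\langle x,Ay\rangle$ for all $x,y\in\mathcal{H}$.

If $\mathbb{F}=\mathbb{C}$, then a positive semi-definite, or positive definite, operator is automatically Hermitian, but this is not the case if $\mathbb{F}=\mathbb{R}$.

Suppose now that $\mathcal{H}$ is the state space of a quantum system. The density operator (also called the density matrix) $\rho_s$ associated with a given state s of the system is positive semi-definite, Hermitian, and such that $\mathrm{Tr}(\rho_s)=1$. Hence, $\rho_s$ has eigenvalues $\lambda_k\in[0,1]$, which sum up to 1.

As is well-known, if s is a pure state, then $\rho_s$ is a rank-one orthogonal projector and thus not invertible whenever $\dim\mathcal{H}\ge 2$.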

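$\mathcal{B}(\mathcal{H})$ itself becomes an $\mathbb{F}$-Hilbert space when it is endowed with the Hilbert–Schmidt operator inner product
$$\langle A,B\rangle_{HS}:=\mathrm{Tr}(A^\dagger B).$$
The subset of $\mathcal{B}(\mathcal{H})$ given by Hermitian operators on $\mathcal{H}$, indicated with $\mathcal{B}_H(\mathcal{H})$, is a real Hilbert space with respect to the inner product inherited from $\mathcal{B}(\mathcal{H})$, and it is also a partially ordered set with respect to the Löwner ordering, defined as follows: for all $A,B\in\mathcal{B}_H(\mathcal{H})$,
$$A\le B\iff B-A\ge 0.$$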

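Given a function $f:I\subseteq\mathbb{R}\to\mathbb{R}$ and $A\in\mathcal{B}_H(\mathcal{H})$ with spectral decomposition $A=UDU^\dagger$, with U being unitary and D being diagonal, with entries given by the eigenvalues $\lambda_1,\dots,\lambda_n$ of A, all supposed to belong to I, we write as usual
$$f(A):=U f(D)\,U^\dagger,$$
where $f(D)$ is diagonal, with non-trivial entries given by $f(\lambda_1),\dots,f(\lambda_n)$.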
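The functional calculus just recalled can be sketched numerically as follows. This is a minimal illustration, assuming NumPy is available; the helper name `apply_function` and the test matrix are ours and are introduced only for this example.

```python
import numpy as np

def apply_function(f, A):
    """Return f(A) = U f(D) U^dagger for a Hermitian matrix A."""
    eigenvalues, U = np.linalg.eigh(A)        # A = U diag(eigenvalues) U^dagger
    return (U * f(eigenvalues)) @ U.conj().T  # scale column k of U by f(lambda_k)

A = np.array([[2.0, 1.0], [1.0, 2.0]])        # Hermitian, eigenvalues 1 and 3
sqrt_A = apply_function(np.sqrt, A)           # the spectrum lies in I = (0, +inf)
log_A = apply_function(np.log, A)
```

Here `sqrt_A @ sqrt_A` reproduces `A` up to rounding, and the trace of `log_A` equals the logarithm of the determinant of `A`.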

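If, for every finite-dimensional $\mathcal{H}$ and every couple of operators $A,B\in\mathcal{B}_H(\mathcal{H})$ with spectra contained in I, we have
$$A\le B\implies f(A)\le f(B),$$
then f is said to be operator monotone on I. Instead, if we have
$$f(tA+(1-t)B)\le t\,f(A)+(1-t)\,f(B)\quad\text{for all }t\in[0,1],$$
then f is said to be operator convex on I. If the last inequality is reversed, f is said to be operator concave on I.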
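Operator monotonicity is strictly stronger than ordinary monotonicity. The following sketch, assuming NumPy and using two $2\times 2$ matrices chosen by us for illustration, shows that $x\mapsto x^2$ fails to preserve the Löwner ordering on a pair $A\le B$, whereas $x\mapsto\sqrt{x}$ preserves it on the same pair.

```python
import numpy as np

def apply_function(f, A):
    """f(A) via the spectral decomposition of a Hermitian matrix A."""
    w, U = np.linalg.eigh(A)
    return (U * f(w)) @ U.conj().T

def is_psd(M, tol=1e-10):
    """True if the Hermitian part of M has no eigenvalue below -tol."""
    return np.linalg.eigvalsh((M + M.conj().T) / 2).min() >= -tol

A = np.array([[2.0, 1.0], [1.0, 2.0]])
B = np.array([[3.0, 1.0], [1.0, 2.0]])   # B - A = diag(1, 0) >= 0, hence A <= B

order_holds = is_psd(B - A)
square_preserves = is_psd(B @ B - A @ A)   # B^2 - A^2 has determinant -1
sqrt_preserves = is_psd(apply_function(np.sqrt, B) - apply_function(np.sqrt, A))
```

The check on a single pair of matrices can only disprove operator monotonicity; it does not prove it for the square root, which is guaranteed instead by the Löwner–Heinz inequality.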

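By Löwner’s theorem (see, e.g., [16] chapter V.4), a function $f:I\to\mathbb{R}$ is operator monotone if and only if it has an analytic continuation that maps the upper half-plane $\{z\in\mathbb{C}:\operatorname{Im}z>0\}$ into itself. Notable examples of operator monotone functions on $(0,+\infty)$ are $x\mapsto\log x$ and $x\mapsto -1/x$.

If $f:I\to\mathbb{R}$ is a continuous and operator monotone function on I, then, for any $x_0\in I$, the function $g:I\to\mathbb{R}$ given by
$$g(x)=\int_{x_0}^{x}f(t)\,dt$$
is operator convex on I; see again [16]. So, since $x\mapsto -1/x$ is operator monotone on $(0,+\infty)$, $x\mapsto-\log x$ is operator convex on $(0,+\infty)$, which implies that $x\mapsto\log x$ is operator concave on $(0,+\infty)$.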

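As is well-known, a convex function $f:I\to\mathbb{R}$ satisfies the Jensen inequality
$$f\Big(\sum_{k=1}^{n}p_k x_k\Big)\le\sum_{k=1}^{n}p_k f(x_k)$$
for all $x_1,\dots,x_n\in I$ and non-negative weights $p_1,\dots,p_n$ such that $\sum_{k=1}^{n}p_k=1$.

The Jensen inequality can be generalized to operator convex functions, as stated in the following celebrated theorem, proven by Hansen and Pedersen [17].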

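Theorem 1 (Jensen’s operator inequality). For a continuous function $f:I\to\mathbb{R}$, the following conditions are equivalent:

- (i) f is operator convex on I.

- (ii) For each natural number $n$, the following inequality holds:
$$f\Big(\sum_{k=1}^{n}A_k^\dagger X_k A_k\Big)\le\sum_{k=1}^{n}A_k^\dagger f(X_k)A_k \tag{8}$$
for every n-tuple $(X_1,\dots,X_n)$ of bounded Hermitian operators on an arbitrary Hilbert space $\mathcal{H}$, with spectra contained in I, and every n-tuple $(A_1,\dots,A_n)$ of operators on $\mathcal{H}$ satisfying $\sum_{k=1}^{n}A_k^\dagger A_k=\mathbb{1}$.

- (iii) $f(V^\dagger XV)\le V^\dagger f(X)V$ for every isometry $V$ from an arbitrary Hilbert space $\mathcal{K}$ on an arbitrary Hilbert space $\mathcal{H}$ and every Hermitian operator X on $\mathcal{H}$ with spectrum contained in I.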

An immediate consequence of this theorem is the following corollary, also known as Jensen’s contractive operator inequality.

Corollary 1 (Contractive version). Let $f:I\to\mathbb{R}$ be a continuous function, and suppose that $0\in I$. Then, f is operator convex on I and $f(0)\le 0$ if and only if for some, hence, for every, $n\ge 1$, inequality (8) is valid for every n-tuple $(X_1,\dots,X_n)$ of bounded Hermitian operators on an arbitrary Hilbert space $\mathcal{H}$, with spectra contained in I, and every n-tuple $(A_1,\dots,A_n)$ of operators on $\mathcal{H}$ satisfying $\sum_{k=1}^{n}A_k^\dagger A_k\le\mathbb{1}$.

The reason for the adjective ‘contractive’ can be easily understood by setting $n=1$; in this case, it follows that f is operator convex on an interval I containing 0 with $f(0)\le 0$ if and only if
$$f(A^\dagger XA)\le A^\dagger f(X)A \tag{9}$$
for every bounded Hermitian operator X with spectrum in I and every operator A such that $A^\dagger A\le\mathbb{1}$, which means that A is a contraction, i.e., $\|Ax\|\le\|x\|$ for all $x\in\mathcal{H}$ or, equivalently, $\|A\|\le 1$.
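As a sanity check of the contractive inequality, one can take $f(x)=x^2$, which is operator convex on $\mathbb{R}$ and satisfies $f(0)=0$. The sketch below, assuming NumPy, draws a random Hermitian $X$ and a random contraction $A$ (both hypothetical test data) and verifies that $A^\dagger X^2A-(A^\dagger XA)^2$ is positive semi-definite.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 4

G = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
X = (G + G.conj().T) / 2                      # random Hermitian operator

A = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
A = 0.9 * A / np.linalg.norm(A, 2)            # spectral norm 0.9, so A is a contraction

lhs = (A.conj().T @ X @ A) @ (A.conj().T @ X @ A)   # f(A^dagger X A) with f(x) = x^2
rhs = A.conj().T @ (X @ X) @ A                      # A^dagger f(X) A
gap = rhs - lhs                                     # equals A^dagger X (1 - A A^dagger) X A
min_eigenvalue = np.linalg.eigvalsh((gap + gap.conj().T) / 2).min()
```

The smallest eigenvalue of the gap is non-negative up to rounding, in agreement with the identity noted in the last comment.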

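Let us now consider two interacting quantum systems a and b with Hilbert state spaces $\mathcal{H}_a$ and $\mathcal{H}_b$, respectively. The associated composite quantum system has Hilbert state space $\mathcal{H}_{ab}=\mathcal{H}_a\otimes\mathcal{H}_b$.

In the following, we indicate with $A$, $B$, and $C$ generic operators of $\mathcal{B}(\mathcal{H}_a)$, $\mathcal{B}(\mathcal{H}_b)$, and $\mathcal{B}(\mathcal{H}_{ab})$, respectively.

The partial trace over subsystem b is a ‘superoperator’, i.e., a linear map $\mathrm{Tr}_b:\mathcal{B}(\mathcal{H}_{ab})\to\mathcal{B}(\mathcal{H}_a)$, defined as the linear extension to the whole $\mathcal{B}(\mathcal{H}_{ab})$ of the map
$$A\otimes B\mapsto\mathrm{Tr}(B)\,A.$$
It satisfies the following property:
$$\mathrm{Tr}\big(\mathrm{Tr}_b(C)\,A\big)=\mathrm{Tr}\big(C\,(A\otimes\mathbb{1}_b)\big). \tag{11}$$
See, e.g., [18], page 100.

The partial trace is as follows:

Trace-preserving: $\mathrm{Tr}(\mathrm{Tr}_b(C))=\mathrm{Tr}(C)$;

Positive: if $C\ge 0$, then $\mathrm{Tr}_b(C)\ge 0$.

As a consequence, $\mathrm{Tr}_b$ maps states of $\mathcal{H}_{ab}$ into states of $\mathcal{H}_a$.

Moreover, $\mathrm{Tr}_b$ is completely positive; i.e., $\mathrm{Tr}_b\otimes\mathrm{id}_{\mathcal{B}(\mathcal{K})}$ is a positive linear map for all Hilbert spaces $\mathcal{K}$. A trace-preserving and completely positive (or ‘CPTP’) linear map is called a channel.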
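The partial trace and the properties listed above can be illustrated with a short numerical sketch. It assumes NumPy and the usual Kronecker-product ordering of the basis of the composite space; the function name `partial_trace_b` and the random test operators are ours.

```python
import numpy as np

def partial_trace_b(C, dim_a, dim_b):
    """Trace out subsystem b from an operator C acting on H_a (x) H_b."""
    C4 = C.reshape(dim_a, dim_b, dim_a, dim_b)   # indices (i, k, j, l) of C[(i,k),(j,l)]
    return np.einsum('ikjk->ij', C4)             # sum over the b-indices with k = l

rng = np.random.default_rng(1)
da, db = 2, 3
A = rng.normal(size=(da, da))
B = rng.normal(size=(db, db))
C = rng.normal(size=(da * db, da * db))

# Defining relation on product operators: Tr_b(A (x) B) = Tr(B) A
product_ok = np.allclose(partial_trace_b(np.kron(A, B), da, db), np.trace(B) * A)

# Trace preservation: Tr(Tr_b(C)) = Tr(C)
trace_ok = np.isclose(np.trace(partial_trace_b(C, da, db)), np.trace(C))

# Duality property: Tr(Tr_b(C) A) = Tr(C (A (x) 1_b))
duality_ok = np.isclose(np.trace(partial_trace_b(C, da, db) @ A),
                        np.trace(C @ np.kron(A, np.eye(db))))
```

The third check is the numerical counterpart of the duality between the partial trace and the map $A\mapsto A\otimes\mathbb{1}_b$.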

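The partial trace is actually one of the three main elements of every channel. In fact, thanks to the Stinespring theorem (see [19]), given any channel $\Phi:\mathcal{B}(\mathcal{H}_a)\to\mathcal{B}(\mathcal{H}_a)$, there exist an operator $\tau\in\mathcal{B}(\mathcal{H}_b)$, which is a density operator on an auxiliary Hilbert space $\mathcal{H}_b$, and a unitary operator U on $\mathcal{H}_a\otimes\mathcal{H}_b$ such that
$$\Phi(\rho)=\mathrm{Tr}_b\big(U(\rho\otimes\tau)U^\dagger\big).$$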

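By the Riesz representation theorem, we can define the adjoint of the partial trace $\mathrm{Tr}_b$ as the only operator $\mathrm{Tr}_b^\dagger:\mathcal{B}(\mathcal{H}_a)\to\mathcal{B}(\mathcal{H}_{ab})$ satisfying
$$\langle\mathrm{Tr}_b^\dagger(A),C\rangle_{HS}=\langle A,\mathrm{Tr}_b(C)\rangle_{HS}\quad\text{for all }A\in\mathcal{B}(\mathcal{H}_a),\ C\in\mathcal{B}(\mathcal{H}_{ab}).$$
Writing the inner products explicitly and using property (11), we find
$$\mathrm{Tr}\big(\mathrm{Tr}_b^\dagger(A)^\dagger\,C\big)=\mathrm{Tr}\big(A^\dagger\,\mathrm{Tr}_b(C)\big)=\mathrm{Tr}\big((A\otimes\mathbb{1}_b)^\dagger\,C\big),$$
which allows us to write the explicit action of $\mathrm{Tr}_b^\dagger$ as
$$\mathrm{Tr}_b^\dagger(A)=A\otimes\mathbb{1}_b.$$
This formula implies that $\mathrm{Tr}_b^\dagger(A)\ge 0$ whenever $A\ge 0$ and that $\mathrm{Tr}_b^\dagger$ is unital; i.e., it maps the identity of its domain to the identity of its range:
$$\mathrm{Tr}_b^\dagger(\mathbb{1}_a)=\mathbb{1}_a\otimes\mathbb{1}_b=\mathbb{1}_{ab}.$$
Being a completely positive unital transformation, $\mathrm{Tr}_b^\dagger$ is a so-called Schwarz map (see [20], Corollary 2.8); i.e., it satisfies the following inequality:
$$\mathrm{Tr}_b^\dagger(A^\dagger A)\ge\mathrm{Tr}_b^\dagger(A)^\dagger\,\mathrm{Tr}_b^\dagger(A). \tag{17}$$
This property is shared by the adjoint of any channel $\Phi$.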

Relative Entropy in Quantum Information Theory

We devote a separate subsection to relative entropy, as its proper treatment entails the detailed development of several technical aspects.

This is essential for addressing certain subtleties that are often overlooked in the literature yet play a crucial role in the rigorous analysis of relative entropy.

Given any finite-dimensional Hilbert space and any density operator with eigenvalues , we indicate with its spectrum and with the subset of composed only of strictly positive eigenvalues.

It will be convenient to have the following index sets at hand: and .

The

support of

, denoted with

, is the subset of

defined as follows:

where

is the eigenspace relative to the eigenvalue

.

From this definition and the spectral theorem for Hermitian operators, it immediately follows that

This implies that

, so the positive definite operator

has a trivial kernel; hence, it is invertible.

In particular, if is positive definite, then is invertible everywhere, and its image and support coincide with the entire . On the other hand, non-invertible density operators, for instance, those corresponding to pure states, have support strictly included in .

The deviation from purity, or mixedness, of $\rho$ can be measured by its von Neumann entropy, defined as follows:
$$S(\rho):=-\mathrm{Tr}(\rho\log\rho).$$
The condition $S(\rho)=0$ is satisfied if and only if $\rho$ is pure. In the literature, the precise definition of the logarithm of a matrix may vary according to the specific aims and context in which the matrix logarithm is employed.

For the purposes of this paper, the key property that the logarithm of a density operator

, or, more generally, of a Hermitian operator, must satisfy is

. For this reason, we adopt a definition of log via functional calculus in the extended real numbers with the following conventions:

of course justified by the limits

as

,

as

, and

as

.

Using the spectral theorem, if

is decomposed as

where

denotes the orthogonal projector onto the eigenspace

,

, and

is the orthogonal projector on

, then, using the previous conventions, we have

and so

Since the projectors satisfy the orthogonality relation

and by the convention

, which accounts for the zero eigenvalue, we obtain

where

is the multiplicity of the eigenvalue

, in the literature, it is actually more common to write just

with the understanding that each eigenvalue is repeated according to its multiplicity.
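The eigenvalue formula for the von Neumann entropy, together with the convention $0\log 0=0$, can be sketched as follows. The code assumes NumPy; the function name and the tolerance `tol`, used to decide which eigenvalues are treated as zero, are choices of ours.

```python
import numpy as np

def von_neumann_entropy(rho, tol=1e-12):
    """S(rho) = -sum_k lambda_k log(lambda_k), eigenvalues repeated with multiplicity."""
    eigenvalues = np.linalg.eigvalsh(rho)
    positive = eigenvalues[eigenvalues > tol]   # convention 0 log 0 = 0: drop zero eigenvalues
    return float(-np.sum(positive * np.log(positive)))

pure_state = np.array([[1.0, 0.0], [0.0, 0.0]])   # rank-one projector
maximally_mixed = np.eye(2) / 2

entropy_pure = von_neumann_entropy(pure_state)
entropy_mixed = von_neumann_entropy(maximally_mixed)
```

For the pure state the entropy vanishes, while for the maximally mixed state on a two-dimensional space it equals $\log 2$.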

The von Neumann entropy does not, by itself, tell us how one state differs from another. To capture the distinguishability of two states, the concept of relative entropy (sometimes called the quantum Kullback–Leibler divergence) must be introduced.

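The definition of the relative entropy between two density operators $\rho$ and $\sigma$ is subjected to a condition on the compatibility of their supports, precisely the following:
$$S(\rho\|\sigma):=\begin{cases}\mathrm{Tr}\big(\rho(\log\rho-\log\sigma)\big) & \text{if }\mathrm{supp}(\rho)\subseteq\mathrm{supp}(\sigma),\\ +\infty & \text{otherwise},\end{cases} \tag{27}$$
which is known as the ‘support-based definition’ of relative entropy.

In order to understand why the condition $\mathrm{supp}(\rho)\subseteq\mathrm{supp}(\sigma)$ is both necessary and sufficient for $S(\rho\|\sigma)$ to be well-defined, we first note that
$$\mathrm{Tr}\big(\rho(\log\rho-\log\sigma)\big)=\mathrm{Tr}(\rho\log\rho)-\mathrm{Tr}(\rho\log\sigma), \tag{28}$$
so, the first term of Formula (28) is minus the von Neumann entropy, which is always finite. In order to analyze the second term, let us consider, alongside Equation (22), the analogous spectral decomposition of $\sigma$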

so that

where

is the orthogonal projector on the eigenspace relative to the positive eigenvalue

of

, and

is the projector on

. Then,

where the contribution of

does not appear due to the convention

.

The first term is always finite, since only strictly positive eigenvalues appear. Instead, the behavior of the second term depends on the value of

. To compute it, let us consider any eigenbasis

of

so that

for all

and use the fact that orthogonal projectors are Hermitian and idempotent to write

The second term in Equation (

31) diverges to

if and only if

, i.e., when there exists at least one

such that

has a non-trivial projection onto

, i.e.,

is non-null, which is equivalent to saying that

. Therefore,

which justifies the definition given in (

27).

Instead, if

, then

; additionally, using again the convention

, the second term in Equation (

31) vanishes, and we remain just with the first term, which can be written in an alternative form. Consider now the orthonormal eigenbases

,

of

and

, respectively; then, we have the following well-known formula (see, e.g., [

21]):

which shows that the factor

codifies the transition probability between the pure states represented by the unit vectors

and

.

In summary, when

, the relative entropy between

and

can be written explicitly as follows:

Two cases are particularly relevant in practical contexts:

If $\rho,\sigma>0$, then their supports coincide with the entire Hilbert space $\mathcal{H}$, and so their relative entropy is the finite real number given by Equation (35);

Instead, if $\rho>0$ but $\sigma$ is not, then their relative entropy is infinite. This happens, for instance, when $\rho$ is a full-rank mixed state and $\sigma$ is a pure state.
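The support-based definition can be turned into a small numerical routine. The sketch below assumes NumPy and uses the eigenbasis expression $\sum_i\lambda_i\log\lambda_i-\sum_{i,j}\lambda_i\,|\langle e_i,f_j\rangle|^2\log\mu_j$ restricted to strictly positive eigenvalues; the function name and the tolerance `tol` are ours.

```python
import numpy as np

def relative_entropy(rho, sigma, tol=1e-12):
    """Support-based relative entropy S(rho || sigma) between density matrices."""
    lam, E = np.linalg.eigh(rho)       # columns of E: eigenvectors e_i of rho
    mu, F = np.linalg.eigh(sigma)      # columns of F: eigenvectors f_j of sigma
    overlap = np.abs(E.conj().T @ F) ** 2          # |<e_i, f_j>|^2
    pos_rho, pos_sigma = lam > tol, mu > tol
    # supp(rho) is not contained in supp(sigma) as soon as some e_i with
    # lambda_i > 0 has a non-trivial component in ker(sigma)
    if np.any(overlap[np.ix_(pos_rho, ~pos_sigma)] > tol):
        return np.inf
    first = np.sum(lam[pos_rho] * np.log(lam[pos_rho]))
    weights = lam[pos_rho][:, None] * overlap[np.ix_(pos_rho, pos_sigma)]
    second = np.sum(weights * np.log(mu[pos_sigma])[None, :])
    return float(first - second)

mixed = np.eye(2) / 2                        # full-rank mixed state
pure = np.array([[1.0, 0.0], [0.0, 0.0]])    # pure state

s_mixed_pure = relative_entropy(mixed, pure)   # support condition violated
s_pure_mixed = relative_entropy(pure, mixed)   # support condition satisfied
```

With a full-rank first argument and a pure second argument the routine returns $+\infty$, matching the second case above, whereas exchanging the arguments gives the finite value $\log 2$.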

An equivalent and useful definition of the von Neumann and relative entropy appears in the literature (see, e.g., [22]). Rather than relying on the support of the density operators, this alternative definition is based on a regularization procedure.

Specifically, given a state

on

, one defines the regularized operator

with

.

is a positive definite Hermitian operator, and the spectral decompositions of

and

are

We have

so the von Neumann entropy of

is well-defined via the limit

Similarly, setting

, with

, the relative entropy between

and

can be defined as

known as the ‘

regularized definition’ of relative entropy.

Let us verify that the regularized and support-based definitions of relative entropy coincide. Equation (

40) splits into two terms; the first equals minus the regularized definition of the von Neumann entropy, which is finite by Equation (

39), so the only issue to address concerns the second term of Equation (

40).

For that, we write the spectral decomposition of

as follows:

Repeating the same computations performed in the case of the support-based definition of

, we obtain

If

, then

when

and the last term in Equation (

42) is absent, so the limit converges to the correct value.

Instead, if

, then

when

; consequently, the second term in Equation (

42) diverges to

, thus matching the behavior of the support-based definition.
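The agreement between the two definitions can be observed numerically. The sketch below assumes NumPy and, as an assumption of ours, the regularization $\rho_\varepsilon=\rho+\varepsilon\mathbb{1}$, $\sigma_\varepsilon=\sigma+\varepsilon\mathbb{1}$ with the same parameter $\varepsilon$ for both states and the quantity $\mathrm{Tr}\big(\rho(\log\rho_\varepsilon-\log\sigma_\varepsilon)\big)$; other equivalent regularizations are possible.

```python
import numpy as np

def log_hermitian(A):
    """Matrix logarithm of a positive definite Hermitian matrix."""
    w, U = np.linalg.eigh(A)
    return (U * np.log(w)) @ U.conj().T

def regularized_relative_entropy(rho, sigma, eps):
    """Tr(rho (log(rho + eps 1) - log(sigma + eps 1))) for eps > 0."""
    identity = np.eye(rho.shape[0])
    difference = log_hermitian(rho + eps * identity) - log_hermitian(sigma + eps * identity)
    return float(np.trace(rho @ difference).real)

# supp(rho) contained in supp(sigma): the support-based value is log 2
rho_in = np.diag([1.0, 0.0, 0.0])
sigma_in = np.diag([0.5, 0.5, 0.0])
converging = [regularized_relative_entropy(rho_in, sigma_in, e) for e in (1e-2, 1e-6, 1e-10)]

# supp(rho) not contained in supp(sigma): the values grow like -(1/2) log(eps)
rho_out = np.diag([0.5, 0.5, 0.0])
sigma_out = np.diag([1.0, 0.0, 0.0])
diverging = [regularized_relative_entropy(rho_out, sigma_out, e) for e in (1e-3, 1e-6, 1e-9)]
```

In the first case the values approach $\log 2$ as $\varepsilon\to 0^+$; in the second they increase without bound, mirroring the value $+\infty$ of the support-based definition.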

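The relative entropy has several important properties (see, e.g., [23]):

Klein’s inequality: $S(\rho\|\sigma)\ge 0$ for all $\rho,\sigma$, and $S(\rho\|\sigma)=0$ if and only if $\rho=\sigma$. This property explains why the relative entropy, despite lacking symmetry in its arguments, is taken to be a measure of the distinguishability of states in quantum theories.

Invariance under unitary conjugation: $S(U\rho U^\dagger\|U\sigma U^\dagger)=S(\rho\|\sigma)$ for all unitary operators U acting on the same Hilbert space as $\rho$ and $\sigma$.

Additivity w.r.t. tensor product: $S(\rho_1\otimes\rho_2\|\sigma_1\otimes\sigma_2)=S(\rho_1\|\sigma_1)+S(\rho_2\|\sigma_2)$ for all density operators $\rho_1,\rho_2,\sigma_1,\sigma_2$.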

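The monotonicity of S under partial trace is represented by the inequality
$$S(\mathrm{Tr}_b\,\rho\,\|\,\mathrm{Tr}_b\,\sigma)\le S(\rho\|\sigma),$$
which, together with Stinespring’s theorem and the three previously mentioned properties of S, allows one to prove that quantum distinguishability does not increase under the action of a generic channel $\Phi$:
$$S(\Phi(\rho)\|\Phi(\sigma))\le S(\rho\|\sigma), \tag{44}$$
a formula also known as the data processing inequality (DPI). In fact, writing $\Phi(\rho)=\mathrm{Tr}_b\big(U(\rho\otimes\tau)U^\dagger\big)$ as in Stinespring’s theorem, we have
$$\begin{aligned}S(\Phi(\rho)\|\Phi(\sigma))&=S\big(\mathrm{Tr}_b(U(\rho\otimes\tau)U^\dagger)\,\big\|\,\mathrm{Tr}_b(U(\sigma\otimes\tau)U^\dagger)\big)\\&\le S\big(U(\rho\otimes\tau)U^\dagger\,\big\|\,U(\sigma\otimes\tau)U^\dagger\big)\\&=S(\rho\otimes\tau\|\sigma\otimes\tau)=S(\rho\|\sigma)+S(\tau\|\tau)=S(\rho\|\sigma).\end{aligned}$$

Inequality (44) explains why, in the quantum information literature, a channel is often referred to as a coarse-graining procedure, a term borrowed from statistical mechanics.

This terminology reflects the idea that information about different quantum states is lost through the action of the channel, as previously distinguishable states may become indistinguishable after the transformation.

While the first three properties of S mentioned above are relatively straightforward to prove, its monotonicity under partial trace is considerably more subtle. In the next two sections, we provide a detailed analysis of the proofs originally proposed by Petz and Uhlmann.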
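Before turning to the proofs, the monotonicity under partial trace can be probed numerically on random full-rank states. The following sketch assumes NumPy; the sampling of the density matrices, the dimensions, and all function names are illustrative choices of ours, and a finite number of random trials is of course not a proof.

```python
import numpy as np

def random_density(dim, rng):
    """Random positive definite density matrix (full rank with probability 1)."""
    G = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    rho = G @ G.conj().T
    return rho / np.trace(rho).real

def log_hermitian(A):
    w, U = np.linalg.eigh(A)
    return (U * np.log(w)) @ U.conj().T

def relative_entropy_pd(rho, sigma):
    """Tr(rho (log rho - log sigma)) for positive definite rho and sigma."""
    return float(np.trace(rho @ (log_hermitian(rho) - log_hermitian(sigma))).real)

def partial_trace_b(C, dim_a, dim_b):
    return np.einsum('ikjk->ij', C.reshape(dim_a, dim_b, dim_a, dim_b))

rng = np.random.default_rng(2)
da, db = 2, 3
gaps = []
for _ in range(20):
    rho, sigma = random_density(da * db, rng), random_density(da * db, rng)
    full = relative_entropy_pd(rho, sigma)
    reduced = relative_entropy_pd(partial_trace_b(rho, da, db),
                                  partial_trace_b(sigma, da, db))
    gaps.append(full - reduced)   # expected to be non-negative
```

Every entry of `gaps` is non-negative up to rounding, as the inequality predicts.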

3. Petz’s Proof of the Monotonicity of the Relative Entropy Under Partial Trace

In this section, we examine the strategy proposed by Petz in [10] for proving the monotonicity of relative entropy under the partial trace operation, which is based on a clever reformulation of the expression of the relative entropy as a suitable inner product inspired by an analogous construction by Araki [2].

We will show that Petz’s proof is flawed due to an incorrect application of the contractive version of Jensen’s operator inequality. We will explain how this issue can be circumvented, thus restoring the validity of Petz’s overall approach. Furthermore, we will show how to extend it to also incorporate non-invertible density operators.

The notation that will be used throughout this section is detailed below:

Given the finite-dimensional Hilbert spaces and , we define , with inner product induced by those of and ;

Operators of will be denoted as , and operators of will be denoted by ;

are two positive definite (invertible) density operators (actually, for the following analysis, only has to be invertible; however, as we noted with the support-based definition of the relative entropy, if , then its support is the entire , and so, for our analysis to be meaningful, we also have to demand ): .

In order to rewrite the relative entropy as an inner product, we must introduce some suitable superoperators.

Precisely, for fixed

,

, consider the Hermitian superoperators

given by the right and left operator multiplications and the relative modular operator, respectively, i.e.,

Clearly,

,

, and

All these superoperators are Hermitian; in fact,

and similarly for

. Regarding

, using Equation (

47), we have

If we consider the specific case in which

and

, then we obtain

Since

and

, applying formula (

50) to

gives

This last identity, the cyclic property of the trace, and the property

imply that

which is the ‘inner product reformulation’ of the relative entropy between the positive definite states

and

that we were searching for.
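The inner product reformulation can be checked numerically. The sketch below assumes NumPy and, as a convention of ours, the relative modular operator $\Delta(A)=\sigma A\rho^{-1}$, so that $S(\rho\|\sigma)=-\langle\rho^{1/2},\log(\Delta)\,\rho^{1/2}\rangle_{HS}$; operators are flattened row by row, in which case the map $A\mapsto XAY$ is represented by the matrix $X\otimes Y^{T}$.

```python
import numpy as np

def apply_function(f, A):
    w, U = np.linalg.eigh(A)
    return (U * f(w)) @ U.conj().T

def random_density(dim, rng):
    G = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    rho = G @ G.conj().T
    return rho / np.trace(rho).real

rng = np.random.default_rng(4)
d = 3
rho, sigma = random_density(d, rng), random_density(d, rng)

# Matrix of Delta: A -> sigma A rho^{-1} acting on row-major flattened operators.
# It is Hermitian and positive definite w.r.t. the Hilbert-Schmidt inner product.
Delta = np.kron(sigma, np.linalg.inv(rho).T)
log_Delta = apply_function(np.log, Delta)

v = apply_function(np.sqrt, rho).reshape(-1)          # rho^{1/2} as a vector
inner_product_form = -np.vdot(v, log_Delta @ v).real  # -<rho^{1/2}, log(Delta) rho^{1/2}>

direct_form = np.trace(rho @ (apply_function(np.log, rho)
                              - apply_function(np.log, sigma))).real
```

The two numbers `inner_product_form` and `direct_form` coincide up to rounding.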

We can obtain an analogous formula for the relative entropy of the partial traces of

and

. To this end, if we fix

,

, then we can define the Hermitian superoperators

as follows:

By carrying out computations analogous to those in Equation (

52) but this time using the superoperators

, we obtain

Due to the minus sign in front of the inner products appearing in Equations (

52) and (

54), we have that

the monotonicity of the relative entropy under partial trace is equivalent to

These inner products are defined on two different Hilbert spaces,

and

; in order to perform a meaningful comparison and prove the inequality, Petz introduced a superoperator

through the explicit formula

which serves as a bridge between the reduced state

and the full state

. While, as we are going to show, this definition is computationally effective, it may at first appear somewhat ad hoc. Actually, a seemingly more natural choice for

would have been

for two reasons: first, as for

,

is a map between

and

, and, second, it satisfies the Schwarz inequality (

17), which, in the following, will play a crucial role in the proof of inequality (

55).

It turns out that is tightly related to the adjoint of the partial trace , not w.r.t. the original inner products of and , but w.r.t. suitably weighted inner products that naturally emerge from the previous structural analysis of the relative entropy in terms of superoperators.

In fact, the reformulations of the relative entropy obtained in Equations (

52) and (

54) involve inner products weighted by

and

, respectively. This observation suggests that the correct notion of adjoint to consider for

is the one defined relative to the inner products (the positive definiteness of these inner products is guaranteed by the fact that

)

We have

So, the adjoint of

w.r.t. the weighted inner products defined above is the operator

, which, for all

, satisfies

and, therefore,

; i.e.,

From this fact, we obtain that the explicit action of

on any

is

and this implies immediately that

transforms

into

:

Repeatedly using the cyclic property of the trace and Schwarz’s inequality (

17) satisfied by

, we can prove that

is a contraction

Note now that

Using the equality just proven, we can show that

; in fact,

where we again used Schwarz’s inequality, and, to write the last equality, we applied Equation (

64).

Since

is an operator monotone function, the inequality

implies

and so

Petz’s strategy to make the relative entropy

appear on the right-hand side of the previous inequality consists of using the fact that

is a contraction to apply the contractive Jensen operator inequality (

9) with

. In this way, due to (

62), we would get

and so the proof of the monotonicity of

S w.r.t. the partial trace

would be achieved.

For this argument to be valid, is required to be operator convex, which is true, to be well-defined at and to satisfy .

Petz circumvented the lack of definition of

in

by using the following integral identity:

Since the integral over

coincides with that over

, we may restrict our attention to strictly positive values of

. If we denote by

the identity operator on

, we have

moreover,

thus,

It follows that

and, analogously,

For all

, the function

given by

is well-defined for

, and it is operator convex and operator monotone (decreasing); see [

16] chapter V.1. Thanks to this last property, we have

However,

for all

, so

the contractive Jensen operator inequality cannot be used to write

which would lead to the proof of the monotonicity of the relative entropy with computations analogous to those shown in Formula (

68).

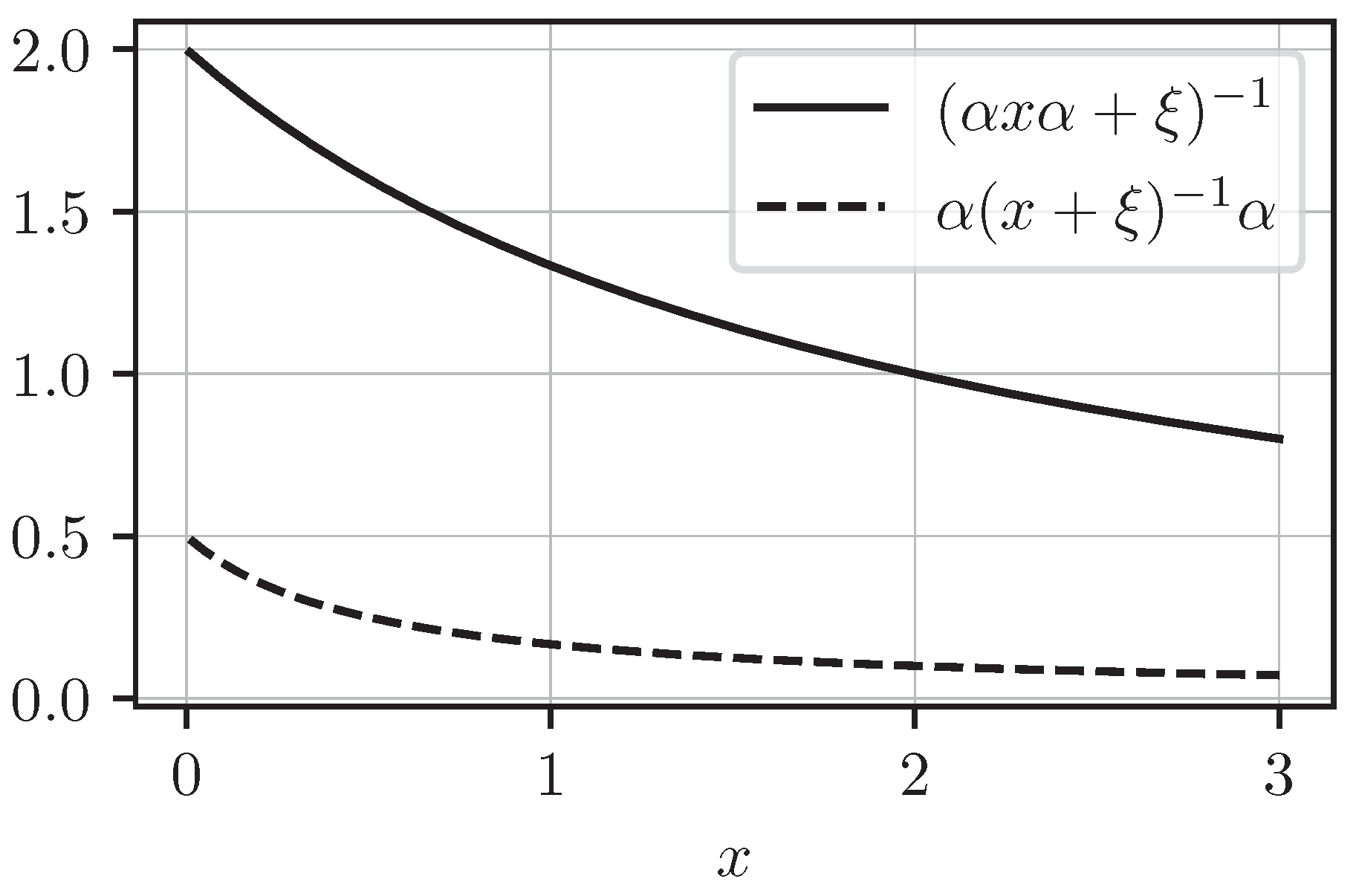

A simple counterexample illustrating the failure of inequality (

76) arises in the scalar case, where a contraction reduces to a multiplication by a real coefficient

and satisfies

. The inequality

is

false for all

, as shown in

Figure 1.

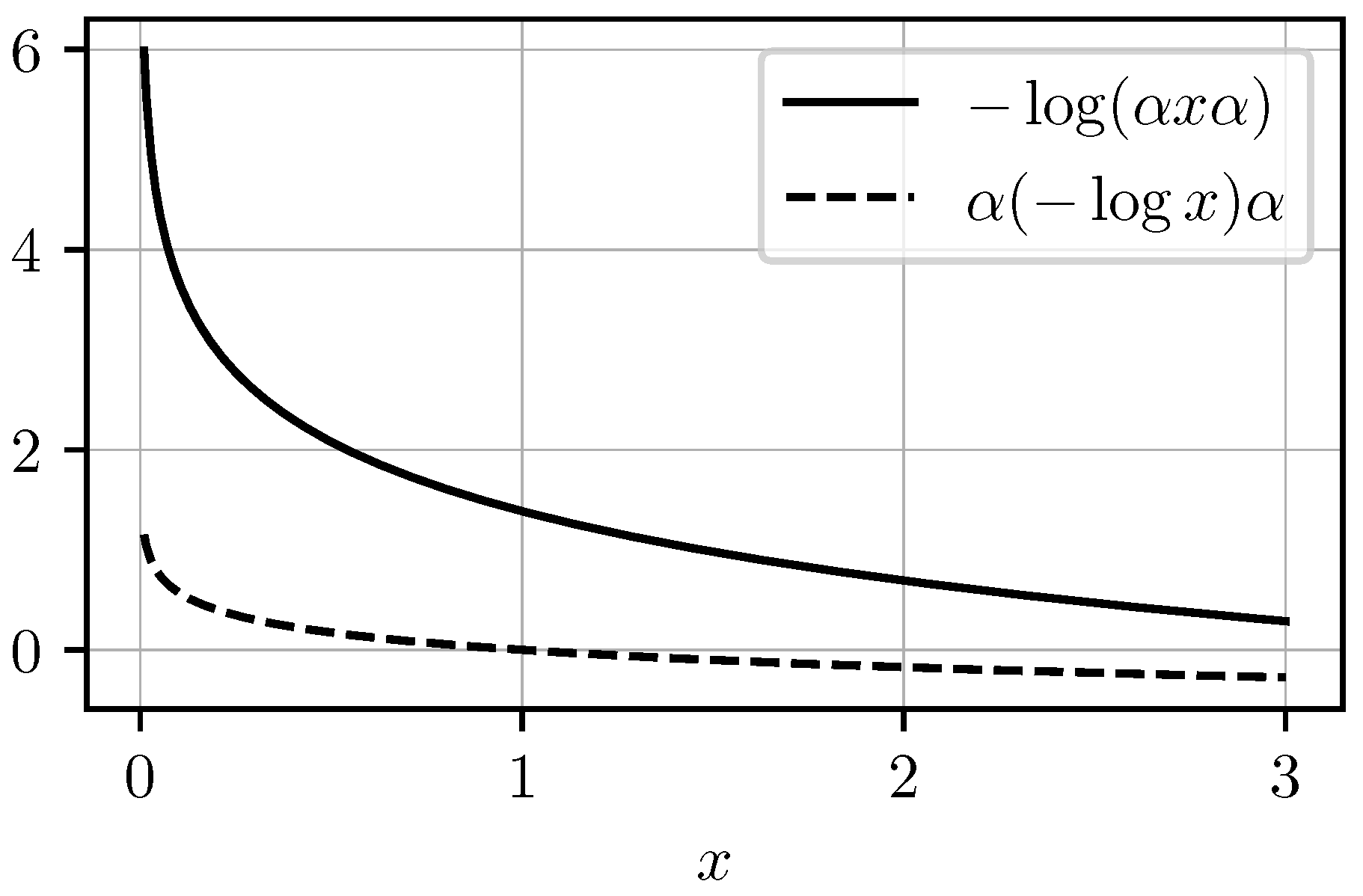

Note that the inequality

is also false for a generic contraction

V, as we show in

Figure 2 with a counterexample in the scalar case.

To summarize, showing that is a contraction does not permit the use of the contractive version of Jensen’s operator inequality to prove the monotonicity of the relative entropy.
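The scalar counterexamples can be reproduced in a few lines. The sketch assumes NumPy and our reading of the two inequalities in the scalar case, namely $(t+a^2x)^{-1}\le a^2(t+x)^{-1}$ and $-\log(a^2x)\le-a^2\log x$ for a real contraction $a$; the values of $a$, $t$, and the grid of $x$ are illustrative.

```python
import numpy as np

a = 0.5                             # scalar contraction, |a| <= 1
t = 1.0
x = np.linspace(0.1, 5.0, 50)       # strictly positive 'operators' in the scalar case

# Resolvent-type function f_t(x) = 1 / (t + x): compare f_t(a x a) with a f_t(x) a
lhs_resolvent = 1.0 / (t + a**2 * x)
rhs_resolvent = a**2 / (t + x)
resolvent_holds = lhs_resolvent <= rhs_resolvent

# Function -log: compare -log(a x a) with -a log(x) a
lhs_log = -np.log(a**2 * x)
rhs_log = -a**2 * np.log(x)
log_holds = lhs_log <= rhs_log
```

On this grid both boolean arrays contain only `False`. For the resolvent-type function the failure occurs for every $x>0$ and $t>0$ when $|a|<1$, since the inequality would require $t+x\le a^2t+a^4x$.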

3.1. Correction of Petz’s Strategy to Prove the Relative Entropy Monotonicity

There is a simple correction of Petz’s strategy that restores the validity of his approach to prove the monotonicity of the relative entropy in a rigorous way. The correction was provided by Petz himself, together with Nielsen, in [24]. The same line of reasoning can also be found in [25,26].

The key idea of the correction lies in recognizing that the operator $V$ is not merely a contraction but an isometry; i.e., $V^\dagger V$ is the identity operator on the domain of $V$. In fact, using Equations (11) and (56), we have

This allows us to apply point (iii) of Theorem 1 with

,

and

, which ensures that the inequality

holds true, confirming the validity of the computations in (

68), thereby establishing the monotonicity of the relative entropy.
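The isometry property can be verified numerically. The sketch below assumes NumPy and, as our reading of Petz's construction, the map $V(A)=(A\rho_a^{-1/2}\otimes\mathbb{1}_b)\,\rho^{1/2}$ with $\rho_a=\mathrm{Tr}_b\,\rho$, which sends $X\rho_a^{1/2}$ to $(X\otimes\mathbb{1}_b)\rho^{1/2}$; the names `petz_V` and `hs_inner` are hypothetical helpers.

```python
import numpy as np

def apply_function(f, A):
    w, U = np.linalg.eigh(A)
    return (U * f(w)) @ U.conj().T

def partial_trace_b(C, dim_a, dim_b):
    return np.einsum('ikjk->ij', C.reshape(dim_a, dim_b, dim_a, dim_b))

def petz_V(A, rho, dim_a, dim_b):
    """V(A) = (A rho_a^{-1/2} (x) 1_b) rho^{1/2}, with rho_a = Tr_b(rho)."""
    rho_a = partial_trace_b(rho, dim_a, dim_b)
    inv_sqrt_rho_a = apply_function(lambda w: w ** -0.5, rho_a)
    return np.kron(A @ inv_sqrt_rho_a, np.eye(dim_b)) @ apply_function(np.sqrt, rho)

def hs_inner(A, B):
    """Hilbert-Schmidt inner product Tr(A^dagger B)."""
    return np.trace(A.conj().T @ B)

rng = np.random.default_rng(3)
da, db = 2, 3
G = rng.normal(size=(da * db, da * db)) + 1j * rng.normal(size=(da * db, da * db))
rho = G @ G.conj().T
rho = rho / np.trace(rho).real                      # positive definite state on H_a (x) H_b

A = rng.normal(size=(da, da)) + 1j * rng.normal(size=(da, da))
B = rng.normal(size=(da, da)) + 1j * rng.normal(size=(da, da))

lhs = hs_inner(petz_V(A, rho, da, db), petz_V(B, rho, da, db))   # <V A, V B>
rhs = hs_inner(A, B)                                             # <A, B>
```

The equality of `lhs` and `rhs` for arbitrary `A` and `B` is exactly the statement $V^\dagger V=\mathrm{id}$; the same helper also maps $\rho_a^{1/2}$ to $\rho^{1/2}$.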

3.2. Extension of Petz’s Proof to Non-Invertible Density Operators

The corrected version of Petz’s strategy for proving the monotonicity of relative entropy can be extended to include non-invertible density operators. To this end, the interplay between the support-based and the regularized definitions of relative entropy given in (27) and (40), respectively, will prove to be useful.

First of all, note that, if , then , and the monotonicity statement is trivially true.

We therefore restrict our attention to the case

, which ensures that the relative entropy is finite. Within this setting, we assume that

is non-invertible, while

may or may not be invertible. This covers all cases not addressed by Petz’s original analysis in [10].

To establish the monotonicity of relative entropy in this broader context, we must first ensure that , i.e., that . Observe that it is not necessary to check this condition when , since, in that case, and are also positive definite, and, thus, their supports coincide with .

We need a preliminary lemma regarding the kernel of positive semi-definite operators.

Lemma 1. Let and be Hermitian positive semi-definite operators on a finite-dimensional Hilbert space . Then, Proof. Proving the inclusion is trivial: if we have , then , so .

To show the inclusion

, we first note that, thanks to the positive semi-definiteness of

and

, for all

, we have

with equality to 0 if and only if

, i.e., if and only if

because the eigenvalues of both

and

are non-negative.

Hence, if , then , so, due to the previous considerations, , and, therefore, . □

Proposition 1. For all density operators such that , it holds that Proof. Using the notation of

Section 2, consider again the spectral decompositions of

and

:

where

and

are the orthogonal projectors onto the eigenspaces corresponding to the positive eigenvalues

of

and

of

, respectively. Then, the following operator sums yield the orthogonal projectors onto

and

, respectively:

Using the linearity and positivity of the partial trace and the fact that multiplying a positive semi-definite operator by a strictly positive scalar does not change its support, we have

The same argument applies to

and

so that

Moreover, according to a standard result about orthogonal projectors, the inclusion

is equivalent to the fact that the operator

is an orthogonal projector on

. By the linearity of the partial trace, we can write

and, moreover, using the fact that

is a positive map and Lemma 1, we have

having used a standard property of the orthogonal complement of the intersection of two vector subspaces. Therefore,

as claimed. □

While the support-based definition of relative entropy is useful to prove Equation (82), in order to prove the monotonicity of relative entropy for a non-invertible density operator $\rho$, the regularized definition (40) turns out to be more adequate.

Since both and for any arbitrarily small , the modular operator is well-defined. Similarly, the modular operator is also well-defined.

By applying the same steps used in the corrected version of Petz’s proof and using Equation (

52), we obtain that, for any

,

Notice that we can deal with

instead of

even if they do not have unit trace because this property is used in Petz’s original (and flawed) argument only in Equation (

71), which does not play any role in the corrected version outlined in

Section 3.1.

By continuity, taking the limit

on both sides of the previous inequality leads to

which completes the proof for non-invertible

and general

.

As a final remark, we note that, in [10], Petz claimed that his proof applies not only to quantum channels but also to adjoints of unital Schwarz maps. However, that claim relies on the flawed argument that we have pointed out. The validity of Petz’s proof can be restored by proving that $V$ is an isometry, a property that holds if we are dealing with the partial trace but not with the adjoint of a general unital Schwarz map.

4. Uhlmann’s Proof of the Monotonicity of the Relative Entropy Under Partial Trace

The proof offered by Uhlmann in [7] (see also [27] for a review) was more general than that offered by Petz because it also naturally encompassed the case of non-invertible density operators. However, thanks to the correction and generalization of Petz’s proof outlined in the previous section, we can now state that the two procedures have the same generality.

Uhlmann’s proof is based on the concept of interpolations of positive sesquilinear forms, which we recall in the following subsection. Consistently with the analysis developed so far, we will consider only the case of finite-dimensional vector spaces.

4.1. Interpolations of Positive Sesquilinear Forms

Let V be a vector space of finite dimension d over the field or , and let be the set of sesquilinear forms over V, assumed to be linear in the second variable and conjugate-linear in the first. The results of this subsection also encompass the case of bilinear forms.

We say that is positive if , and we denote the space of positive sesquilinear forms over V by .

We can endow with a Löwner-like partial ordering: given , we say that if .

Fixing a basis of

V, for any form

, there exists a unique Hermitian operator

such that, for all

, written in coordinates as

, one has

It can be easily proven that, for a positive form

, the kernel of

coincides with the

isotropic cone

and they are both equal to the kernel of the positive semi-definite operator

T.

Let

now be a Hilbert space with inner product

,

be a linear surjective map, and

. Then,

is said to be

represented by, indicated with

, if

Two representations of positive sesquilinear forms

and

are said to be

compatible if

. As we shall see shortly, compatibility is a key concept in constructing a functional calculus for sesquilinear forms.

The following theorem shows that compatible representations exist, and its constructive proof provides a (non-unique) way to build them.

Theorem 2. Let . Then, there exist representations and (with the same mapping h) such that .

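Proof. Let $N$ be the kernel of the form $\alpha + \beta$. By fixing a basis of $V$, we can associate $\alpha$ and $\beta$ with two Hermitian and positive semi-definite operators $T_\alpha, T_\beta$ via Equation (92). It follows that

$$N = \ker(\alpha + \beta) = \ker(T_\alpha + T_\beta) = \ker T_\alpha \cap \ker T_\beta, \tag{96}$$

where the last equality is provided by Lemma 1.

Now, by setting $\mathcal{K} := V/N$, we can define the following inner product:

$$\langle [x], [y] \rangle := \alpha(x,y) + \beta(x,y), \qquad [x], [y] \in \mathcal{K}.$$

Note that this inner product is well-defined thanks to the equalities (96): both $\alpha$ and $\beta$ vanish whenever one of their arguments belongs to $N$, so the right-hand side does not depend on the chosen representatives, and $\langle [x], [x] \rangle = 0$ only if $x \in N$.

By taking $h$ as the (surjective) quotient map $h: V \to \mathcal{K}$, $h(x) := [x]$, we can define the following positive sesquilinear forms on $\mathcal{K}$:

$$\tilde{\alpha}([x],[y]) := \alpha(x,y), \qquad \tilde{\beta}([x],[y]) := \beta(x,y),$$

for all $[x], [y] \in \mathcal{K}$.

Thanks to the Riesz representation theorem, there exists a unique pair of positive Hermitian operators $A, B$ on $\mathcal{K}$ such that

$$\tilde{\alpha}(u,v) = \langle u, A v \rangle, \qquad \tilde{\beta}(u,v) = \langle u, B v \rangle.$$

It follows that, for all $u, v \in \mathcal{K}$,

$$\langle u, (A + B) v \rangle = \tilde{\alpha}(u,v) + \tilde{\beta}(u,v) = \langle u, v \rangle,$$

and, hence, $A + B = I$, which implies that $A$ and $B$ commute. Since $\alpha(x,y) = \tilde{\alpha}(h(x), h(y)) = \langle h(x), A\, h(y) \rangle$, and analogously for $\beta$, the pairs $(h, A)$ and $(h, B)$ are the desired compatible representations. □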
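The construction in the proof can be tried numerically. The following is a minimal sketch, not the authors' implementation, under two simplifying assumptions that are not in the text: $V = \mathbb{R}^2$, and the matrix of $\alpha + \beta$ is positive definite, so that the kernel $N$ is trivial and the quotient $V/N$ is $V$ itself. In this case the map $h$ can be realized as the square root of the matrix of $\alpha + \beta$, which turns the inner product $\alpha + \beta$ into the standard one. The helper names (`sqrt_spd`, `compatible_representation`) and the sample matrices are hypothetical.

```python
import math

def matmul(P, Q):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(P[i][k] * Q[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def sqrt_spd(M):
    """Closed-form square root of a 2x2 symmetric positive definite matrix."""
    s = math.sqrt(M[0][0] * M[1][1] - M[0][1] * M[1][0])   # sqrt(det M)
    tau = math.sqrt(M[0][0] + M[1][1] + 2.0 * s)           # trace of sqrt(M)
    return [[(M[0][0] + s) / tau, M[0][1] / tau],
            [M[1][0] / tau, (M[1][1] + s) / tau]]

def inverse(M):
    """Inverse of an invertible 2x2 matrix."""
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    return [[M[1][1] / det, -M[0][1] / det],
            [-M[1][0] / det, M[0][0] / det]]

def compatible_representation(T_alpha, T_beta):
    """Return (h, A, B) with T_alpha = h A h, T_beta = h B h and A + B = I.

    Assumption: T_alpha + T_beta is positive definite (trivial kernel N).
    """
    S = [[T_alpha[i][j] + T_beta[i][j] for j in range(2)] for i in range(2)]
    h = sqrt_spd(S)          # symmetric, so <h x, A h y> = x^T (h A h) y
    h_inv = inverse(h)
    A = matmul(matmul(h_inv, T_alpha), h_inv)
    B = matmul(matmul(h_inv, T_beta), h_inv)
    return h, A, B

# Hypothetical sample forms, given by their matrices in a fixed basis.
T_alpha = [[2.0, 1.0], [1.0, 1.0]]
T_beta = [[1.0, 0.0], [0.0, 3.0]]
h, A, B = compatible_representation(T_alpha, T_beta)
```

With these outputs, `A` and `B` sum to the identity, hence commute, and `h A h`, `h B h` reproduce the matrices of the two forms, which is the content of the representation condition in coordinates.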

Consider now $\mathbb{R}_+ := [0, +\infty)$, and let $J$ be the set of homogeneous (of degree 1), measurable, and locally bounded functions $f: \mathbb{R}_+ \times \mathbb{R}_+ \to \mathbb{R}$. It is possible to develop the concept of a function of positive sesquilinear forms thanks to the following theorem, whose rather lengthy proof can be found in [28].

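Theorem 3. Let $V$ be a vector space, $\alpha, \beta \in \mathcal{F}_+(V)$, and let $\alpha \sim (h, A)$ and $\beta \sim (h, B)$ be two compatible representations of $\alpha$ and $\beta$. Then, for any $f \in J$, the function $\gamma: V \times V \to \mathbb{C}$,

$$\gamma(x,y) := \langle h(x), f(A,B)\, h(y) \rangle, \tag{103}$$

is a well-defined sesquilinear form on $V$; i.e., $\gamma$ is independent of the choice of representations, and, for any given $f$, $\gamma$ depends only on $\alpha$ and $\beta$.

Note that, on the right-hand side of (103), $f(A,B)$ is intended as an operator function, which is well-defined since $A$ and $B$ commute, and so they can be simultaneously diagonalized.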

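Combining Theorems 2 and 3, every $f \in J$ can be extended to a function of positive sesquilinear forms. Keeping the same symbol for simplicity, we can define $f: \mathcal{F}_+(V) \times \mathcal{F}_+(V) \to \mathcal{F}(V)$ as follows: given $\alpha, \beta \in \mathcal{F}_+(V)$ and any compatible representations $\alpha \sim (h, A)$ and $\beta \sim (h, B)$, the sesquilinear form $f(\alpha, \beta)$ is represented as

$$f(\alpha,\beta)(x,y) := \langle h(x), f(A,B)\, h(y) \rangle, \qquad x, y \in V.$$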

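The elements of $J$ used to define the concept of the interpolation of positive sesquilinear forms are the positive functions $f_t(a,b) := a^{1-t} b^{t}$, where $t \in [0,1]$. Given $\alpha, \beta \in \mathcal{F}_+(V)$, we call interpolation from $\alpha$ to $\beta$ the positive sesquilinear form

$$\gamma_t(\alpha,\beta) := f_t(\alpha,\beta), \qquad t \in [0,1],$$

which means that, for any compatible representations $\alpha \sim (h, A)$ and $\beta \sim (h, B)$,

$$\gamma_t(\alpha,\beta)(x,y) = \langle h(x), A^{1-t} B^{t}\, h(y) \rangle, \qquad x, y \in V.$$

The interpolation is said to go from $\alpha$ to $\beta$ because, clearly, $\gamma_0(\alpha,\beta) = \alpha$ and $\gamma_1(\alpha,\beta) = \beta$. Moreover, the interpolation of two interpolations is another interpolation. In fact, given $s, t_1, t_2 \in [0,1]$, we have

$$\gamma_s\big(\gamma_{t_1}(\alpha,\beta), \gamma_{t_2}(\alpha,\beta)\big) = \gamma_t(\alpha,\beta), \tag{108}$$

with $t = (1-s)\, t_1 + s\, t_2$.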
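The composition rule can be checked directly on the operators of a compatible representation. As a sketch, assume for simplicity that $A$ and $B$ are commuting and invertible, and write $\gamma_t$ for the interpolation with parameter $t$, represented by $A^{1-t} B^{t}$ (the parameter names $s, t_1, t_2$ are chosen here for illustration). The two interpolations at $t_1$ and $t_2$ are represented by commuting operators, so their own interpolation at $s$ is represented by

$$\begin{aligned} \big(A^{1-t_1} B^{t_1}\big)^{1-s} \big(A^{1-t_2} B^{t_2}\big)^{s} &= A^{(1-s)(1-t_1) + s(1-t_2)}\, B^{(1-s) t_1 + s t_2} \\ &= A^{1-t} B^{t}, \qquad t = (1-s)\, t_1 + s\, t_2. \end{aligned}$$

For instance, $s = 1/2$, $t_1 = 0$, $t_2 = 1/2$ gives $t = 1/4$: the interpolation at $t = 1/4$ is the midpoint interpolation between the form at $t = 0$ and the form at $t = 1/2$.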

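The interpolation of $\alpha, \beta \in \mathcal{F}_+(V)$ computed at the value $t = 1/2$ corresponds to a particularly important positive sesquilinear form, indicated with $\sqrt{\alpha\beta}$ and called the geometric mean of $\alpha$ and $\beta$. Clearly, for any compatible representations $\alpha \sim (h, A)$ and $\beta \sim (h, B)$, we have

$$\sqrt{\alpha\beta}\,(x,y) = \langle h(x), A^{1/2} B^{1/2}\, h(y) \rangle, \qquad x, y \in V.$$

An important property of the geometric mean of $\alpha$ and $\beta$ will emerge in connection with the following concept: a positive sesquilinear form $\gamma \in \mathcal{F}_+(V)$ is said to be dominated by $\alpha, \beta$ if

$$|\gamma(x,y)|^2 \le \alpha(x,x)\, \beta(y,y) \qquad \text{for all } x, y \in V.$$

The following theorem establishes that $\sqrt{\alpha\beta}$ is the ‘maximal’ positive sesquilinear form dominated by $\alpha$ and $\beta$.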

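Theorem 4. Let $V$ be a vector space, and let $\alpha, \beta \in \mathcal{F}_+(V)$; then, $\sqrt{\alpha\beta}$ is dominated by $\alpha, \beta$. Moreover, interpreting $\mathcal{F}_+(V)$ as a partially ordered set, let $\mathcal{D}(\alpha,\beta)$ be the subset of positive sesquilinear forms dominated by $\alpha, \beta$; then, $\sqrt{\alpha\beta} = \max \mathcal{D}(\alpha,\beta)$, i.e., $r \le \sqrt{\alpha\beta}$ for all $r \in \mathcal{D}(\alpha,\beta)$.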

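Proof. The first statement is simply an application of the Cauchy–Schwarz inequality. For all $x, y \in V$ and for any compatible representations $\alpha \sim (h, A)$ and $\beta \sim (h, B)$, we have

$$|\sqrt{\alpha\beta}\,(x,y)|^2 = |\langle h(x), A^{1/2} B^{1/2} h(y) \rangle|^2 = |\langle A^{1/2} h(x), B^{1/2} h(y) \rangle|^2,$$

having used the fact that $A$ and $B$, and so their square roots, are Hermitian operators. Thus,

$$|\sqrt{\alpha\beta}\,(x,y)|^2 \le \langle A^{1/2} h(x), A^{1/2} h(x) \rangle\, \langle B^{1/2} h(y), B^{1/2} h(y) \rangle = \alpha(x,x)\, \beta(y,y),$$

so $\sqrt{\alpha\beta}$ is dominated by $\alpha$ and $\beta$.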

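To prove the second statement, consider a form $r \in \mathcal{F}_+(V)$ dominated by $\alpha, \beta$, and let $\alpha \sim (h, A)$ and $\beta \sim (h, B)$ be compatible representations on a Hilbert space $\mathcal{K}$. By applying the constructive proof of Theorem 2 to $r$, we can find a positive operator $C$ on $\mathcal{K}$ such that $r \sim (h, C)$; however, the representation $r \sim (h, C)$ will not, in general, be compatible with that of $\alpha$ and $\beta$. Since $r$ is dominated by $\alpha$ and $\beta$, we have

$$|\langle h(x), C h(y) \rangle|^2 \le \langle h(x), A h(x) \rangle\, \langle h(y), B h(y) \rangle \qquad \text{for all } x, y \in V,$$

or, thanks to the fact that $h$ is surjective on $\mathcal{K}$,

$$|\langle u, C v \rangle|^2 \le \langle u, A u \rangle\, \langle v, B v \rangle \qquad \text{for all } u, v \in \mathcal{K}. \tag{115}$$

Now, the second statement of the theorem, i.e., $\sqrt{\alpha\beta} = \max \mathcal{D}(\alpha,\beta)$, means that

$$r(x,x) \le \sqrt{\alpha\beta}\,(x,x)$$

for all $x \in V$ and all $r \in \mathcal{D}(\alpha,\beta)$, which is equivalent to

$$\langle u, C u \rangle \le \langle u, A^{1/2} B^{1/2} u \rangle$$

for all $u \in \mathcal{K}$ and all $C$ satisfying inequality (115).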

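It follows that the second statement of the theorem will be proven if we manage to show that $C \le A^{1/2} B^{1/2}$. In order to obtain this result, a regularization procedure applied to the operators $A, B$ will be helpful: since $A \ge 0$ and $B \ge 0$, for all $\varepsilon > 0$, $A_\varepsilon := A + \varepsilon I$, $B_\varepsilon := B + \varepsilon I$, and their square roots $A_\varepsilon^{1/2}$, $B_\varepsilon^{1/2}$ are Hermitian, positive, and invertible. Moreover, it is clear that $A \le A_\varepsilon$, $B \le B_\varepsilon$, hence

$$\langle u, A u \rangle \le \langle u, A_\varepsilon u \rangle, \qquad \langle v, B v \rangle \le \langle v, B_\varepsilon v \rangle \qquad \text{for all } u, v \in \mathcal{K}. \tag{116}$$

Finally, $A_\varepsilon$ and $B_\varepsilon$ decrease monotonically w.r.t. the Löwner ordering as $\varepsilon$ decreases, and $A_\varepsilon \to A$ and $B_\varepsilon \to B$ as $\varepsilon \to 0^+$. For all $u, v \in \mathcal{K}$, there exist unique vectors $u', v' \in \mathcal{K}$ such that

$$u = A_\varepsilon^{-1/2} u', \qquad v = B_\varepsilon^{-1/2} v';$$

then inequality (115) becomes

$$|\langle A_\varepsilon^{-1/2} u', C B_\varepsilon^{-1/2} v' \rangle|^2 \le \langle A_\varepsilon^{-1/2} u', A A_\varepsilon^{-1/2} u' \rangle\, \langle B_\varepsilon^{-1/2} v', B B_\varepsilon^{-1/2} v' \rangle,$$

i.e.,

$$|\langle u', A_\varepsilon^{-1/2} C B_\varepsilon^{-1/2} v' \rangle|^2 \le \langle u', u' \rangle\, \langle v', v' \rangle,$$

having used the inequalities written in (116).

The last inequality states that the operator $X_\varepsilon := A_\varepsilon^{-1/2} C B_\varepsilon^{-1/2}$ has operator norm at most 1. Since $A_\varepsilon$ and $B_\varepsilon$ commute, setting $G_\varepsilon := A_\varepsilon^{1/2} B_\varepsilon^{1/2}$ and $D_\varepsilon := A_\varepsilon^{1/4} B_\varepsilon^{-1/4}$, we have $G_\varepsilon^{-1/2} C\, G_\varepsilon^{-1/2} = D_\varepsilon X_\varepsilon D_\varepsilon^{-1}$. By taking into account that $G_\varepsilon^{-1/2} C\, G_\varepsilon^{-1/2}$ is a positive operator similar to $X_\varepsilon$, its largest eigenvalue cannot exceed the spectral radius of $X_\varepsilon$, which is bounded by the norm of $X_\varepsilon$, so we can write

$$\langle w, G_\varepsilon^{-1/2} C\, G_\varepsilon^{-1/2} w \rangle \le \langle w, w \rangle \qquad \text{for all } w \in \mathcal{K},$$

which implies that $G_\varepsilon^{-1/2} C\, G_\varepsilon^{-1/2} \le I$, thus $C \le G_\varepsilon = A_\varepsilon^{1/2} B_\varepsilon^{1/2}$. By taking the limit $\varepsilon \to 0^+$, we get $C \le A^{1/2} B^{1/2}$, and so the second statement of the theorem is also proven. □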
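The two statements of the theorem can be illustrated in the simplest situation. The sketch below assumes, beyond what the text requires, that the three operators $A$, $B$, $C$ are real and diagonal in the same basis, so that each is stored as the list of its diagonal entries; in this commuting case a diagonal $C \ge 0$ is dominated exactly when $c_i^2 \le a_i b_i$ for every $i$. The function names and sample values are hypothetical, and the random sampling is only a sanity check, not a proof.

```python
import math
import random

def sandwich(u, diag, v):
    """<u, D v> for a real diagonal operator D given by its diagonal entries."""
    return sum(ui * di * vi for ui, di, vi in zip(u, diag, v))

def geometric_mean(a, b):
    """Diagonal of A^(1/2) B^(1/2) for commuting diagonal A and B."""
    return [math.sqrt(ai * bi) for ai, bi in zip(a, b)]

def is_dominated(c, a, b, trials=500, seed=0, tol=1e-12):
    """Test |<u, C v>|^2 <= <u, A u> <v, B v> on basis and random vectors."""
    n = len(a)
    rng = random.Random(seed)
    basis = [[1.0 if i == k else 0.0 for i in range(n)] for k in range(n)]
    pairs = [(e, e) for e in basis]
    for _ in range(trials):
        u = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        pairs.append((u, v))
    for u, v in pairs:
        lhs = sandwich(u, c, v) ** 2
        rhs = sandwich(u, a, u) * sandwich(v, b, v)
        if lhs > rhs + tol:
            return False
    return True

# Hypothetical sample operators; A has a non-trivial kernel.
a = [2.0, 0.5, 0.0]
b = [0.5, 3.0, 1.0]
g = geometric_mean(a, b)             # diagonal of the geometric mean
smaller = [0.5 * gi for gi in g]     # below the geometric mean
larger = [1.1 * gi for gi in g]      # above the geometric mean
```

Here `is_dominated(g, a, b)` and `is_dominated(smaller, a, b)` hold, while `is_dominated(larger, a, b)` fails already on a basis vector, in line with the maximality of the geometric mean among dominated forms.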

The property of the geometric mean just proven allows us to extend the ordering relation between two positive sesquilinear forms to their interpolations, in the sense specified by the following theorem.

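Theorem 5. Let $V$ be a vector space, and let $\alpha, \beta, \alpha', \beta' \in \mathcal{F}_+(V)$ such that $\alpha \le \alpha'$ and $\beta \le \beta'$; then,

$$\gamma_t(\alpha,\beta) \le \gamma_t(\alpha',\beta') \qquad \text{for all } t \in [0,1],$$

where $\gamma_t$ denotes the interpolation with parameter $t$.

Proof. The statement is clearly satisfied for $t = 0$ and $t = 1$. Setting $t = 1/2$, thanks to the first part of Theorem 4, we get

$$|\sqrt{\alpha\beta}\,(x,y)|^2 \le \alpha(x,x)\, \beta(y,y) \le \alpha'(x,x)\, \beta'(y,y) \qquad \text{for all } x, y \in V,$$

where the second inequality follows from the hypotheses of this theorem. This means that $\sqrt{\alpha\beta}$ is dominated by $\alpha', \beta'$, and so the extremality of the geometric mean implies that

$$\sqrt{\alpha\beta} \le \sqrt{\alpha'\beta'}.$$

If we now use Equation (108) with $s = 1/2$ and $t_1 = 0$, $t_2 = 1/2$, we get

$$\gamma_{1/4}(\alpha,\beta) = \sqrt{\alpha\, \gamma_{1/2}(\alpha,\beta)}. \tag{123}$$

By repeating the previous argument, this time using Equation (123), we show that $\gamma_{1/4}(\alpha,\beta) \le \gamma_{1/4}(\alpha',\beta')$.

By iterating this procedure, we can prove the statement of the theorem for any $t$ of the type $t = k/2^n$, with $n \in \mathbb{N}$, $k \in \{0, 1, \dots, 2^n\}$, which is a dense subset of $[0,1]$.

Finally, the functions $t \mapsto \gamma_t(\alpha,\beta)(x,x)$ and $t \mapsto \gamma_t(\alpha',\beta')(x,x)$ are continuous for every fixed $x \in V$; hence, the theorem holds for all $t \in [0,1]$. □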

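In a similar way, we can prove another important result. Let $\phi: U \to V$ be a linear map between vector spaces, and let $\alpha, \beta \in \mathcal{F}_+(V)$. Then, $\phi$ allows us to pull back these sesquilinear forms on $U$ as follows:

$$\alpha^{\phi}(x,y) := \alpha(\phi(x), \phi(y)), \qquad \beta^{\phi}(x,y) := \beta(\phi(x), \phi(y)), \qquad x, y \in U.$$

The following theorem shows that the pull-back of an interpolation of the forms in $\mathcal{F}_+(V)$ is always ‘smaller’ than the interpolation of their pull-backs, with respect to the partial ordering of $\mathcal{F}_+(U)$.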

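Theorem 6. Let $U, V$ be vector spaces, $\phi: U \to V$ be a linear map, and $\alpha, \beta \in \mathcal{F}_+(V)$; then, denoting by $\gamma_t$ the interpolation with parameter $t$ and by the superscript $\phi$ the pull-back along $\phi$,

$$\gamma_t(\alpha,\beta)^{\phi} \le \gamma_t(\alpha^{\phi}, \beta^{\phi}) \qquad \text{for all } t \in [0,1]. \tag{126}$$

Proof. The argument that we use is quite similar to the one appearing in the previous proof. The statement is true for $t = 0$ and $t = 1$ because, in these cases, we have $\gamma_0(\alpha,\beta)^{\phi} = \alpha^{\phi} = \gamma_0(\alpha^{\phi}, \beta^{\phi})$ and $\gamma_1(\alpha,\beta)^{\phi} = \beta^{\phi} = \gamma_1(\alpha^{\phi}, \beta^{\phi})$, respectively.

Let us now consider $t = 1/2$, which gives rise to the geometric mean, so

$$\gamma_{1/2}(\alpha,\beta)^{\phi} = \big(\sqrt{\alpha\beta}\big)^{\phi}.$$

Since $\sqrt{\alpha\beta}$ is dominated by $\alpha, \beta$, we can write, for all $x, y \in U$,

$$\big|\big(\sqrt{\alpha\beta}\big)^{\phi}(x,y)\big|^2 = \big|\sqrt{\alpha\beta}\,(\phi(x), \phi(y))\big|^2 \le \alpha(\phi(x), \phi(x))\, \beta(\phi(y), \phi(y)) = \alpha^{\phi}(x,x)\, \beta^{\phi}(y,y),$$

which shows that $\big(\sqrt{\alpha\beta}\big)^{\phi}$ is dominated by $\alpha^{\phi}$, $\beta^{\phi}$. Thus, by the extremal property of the geometric mean, the statement of the theorem holds for $t = 1/2$.

By iterating this reasoning as done in the proof of the previous theorem, the validity of inequality (126) can be generalized to all $t \in [0,1]$. □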

4.2. Definition of the Relative Entropy in Terms of Interpolations of Forms

In this subsection, we apply the results previously established to reformulate the relative entropy in a manner that will facilitate the proof of its monotonicity under partial trace, which is to be presented in the next subsection.

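Adopting notations analogous to those introduced at the beginning of Section 3, we identify the vector space $V$, on which the forms of interest for us will be defined, with $\mathcal{B}(\mathcal{H})$. As we know, this is a Hilbert space w.r.t. the Hilbert–Schmidt inner product $\langle \cdot, \cdot \rangle$, $\langle X, Y \rangle := \mathrm{Tr}(X^\dagger Y)$, which is a positive definite sesquilinear form.

Given two density operators $\rho, \sigma$ on $\mathcal{H}$, we can define the following positive sesquilinear forms $\alpha, \beta \in \mathcal{F}_+(\mathcal{B}(\mathcal{H}))$:

$$\alpha(X,Y) := \langle X, L_\rho Y \rangle = \mathrm{Tr}(X^\dagger \rho\, Y), \tag{129}$$

$$\beta(X,Y) := \langle X, R_\sigma Y \rangle = \mathrm{Tr}(X^\dagger Y \sigma), \tag{130}$$

where the operators $L_\rho$ and $R_\sigma$ (left multiplication by $\rho$ and right multiplication by $\sigma$) are defined as in Equation (46). We immediately recognize the two representations

$$\alpha \sim (\mathrm{id}, L_\rho), \qquad \beta \sim (\mathrm{id}, R_\sigma),$$

where $\mathrm{id}$ is the identity map on $\mathcal{B}(\mathcal{H})$. These representations are compatible because, thanks to Equation (47), $L_\rho R_\sigma = R_\sigma L_\rho$.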

We can now define the relative entropy positive sesquilinear form between the two density operators $\rho, \sigma$, indicated with $S(\alpha,\beta)$, as the rate of change of the interpolation $\gamma_t(\alpha,\beta)$ with respect to $t$ at $t = 0$:

$$S(\alpha,\beta)(X,X) := -\liminf_{t \to 0^+} \frac{\gamma_t(\alpha,\beta)(X,X) - \alpha(X,X)}{t}, \qquad X \in \mathcal{B}(\mathcal{H}). \tag{132}$$

Remark 1. The use of lim inf instead of an ordinary limit is motivated by the following two arguments:

1. When ρ and σ are not invertible, the interpolation function $t \mapsto \gamma_t(\alpha,\beta)(X,X)$ may fail to be differentiable, or even continuous, at $t = 0$, so the ordinary limit of the difference quotient at $t = 0$ need not exist as a finite number. However, lim inf always exists (possibly infinite), thereby ensuring that the entropy form is always well-defined.

2. The function $t \mapsto \gamma_t(\alpha,\beta)(X,X)$ is convex in t on $(0,1]$: since the operators representing $\alpha$ and $\beta$ commute, it is a combination with non-negative coefficients of scalar functions of the type $t \mapsto a^{1-t} b^{t}$, which are convex. For convex functions, one-sided derivatives are the natural objects, and the derivative from the right at $t = 0$ is expressed through the lower right Dini derivative, i.e., the lim inf of the difference quotient. Thus, the use of lim inf aligns with standard practice in convex analysis.

The relative entropy between states can be recovered as follows.

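Theorem 7. For all density operators $\rho, \sigma$, with $\alpha$ and $\beta$ the forms defined in Equations (129) and (130) and $S(\alpha,\beta)$ the relative entropy form of Equation (132), we have

$$S(\rho\|\sigma) = S(\alpha,\beta)(I,I). \tag{133}$$

Proof. Let us first consider the case of invertible density operators $\rho$ and $\sigma$. Then,

$$S(\alpha,\beta)(I,I) = -\lim_{t \to 0^+} \frac{\mathrm{Tr}(\rho^{1-t} \sigma^{t}) - \mathrm{Tr}(\rho)}{t} = -\frac{d}{dt} \mathrm{Tr}(\rho^{1-t} \sigma^{t}) \Big|_{t=0} = \mathrm{Tr}(\rho \log \rho) - \mathrm{Tr}(\rho \log \sigma) = S(\rho\|\sigma). \tag{134}$$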

In the case of

and

, which are not invertible, we can use the regularized version of relative entropy. We have seen an equivalent definition of the relative entropy in (

40), and, thus, it is natural to consider

Now, we have that both

are positive definite, and, thus,

,

are well-defined. By repeating the steps in (

134), we get that

We obtain the same definition of relative entropy as in (

27). □
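The derivative formula behind the theorem can be checked numerically in a special case. The sketch below assumes that $\rho$ and $\sigma$ commute and are invertible, so that they are described by their eigenvalue lists `p` and `q` in a common eigenbasis; then the interpolation evaluated at the identity is $\sum_k p_k^{1-t} q_k^{t}$ and the relative entropy is the classical Kullback–Leibler divergence. The function names, the step size `t`, and the sample spectra are hypothetical choices for illustration.

```python
import math

def interpolation_at_identity(p, q, t):
    """Tr(rho^(1-t) sigma^t) for commuting states with eigenvalues p and q."""
    return sum(pk ** (1.0 - t) * qk ** t for pk, qk in zip(p, q))

def relative_entropy(p, q):
    """Tr(rho (log rho - log sigma)) for commuting, invertible states."""
    return sum(pk * (math.log(pk) - math.log(qk)) for pk, qk in zip(p, q))

def entropy_form_estimate(p, q, t=1e-6):
    """Minus the forward difference quotient of the interpolation at t = 0."""
    return -(interpolation_at_identity(p, q, t) - sum(p)) / t

# Hypothetical spectra of two commuting full-rank states.
p = [0.5, 0.3, 0.2]
q = [0.2, 0.5, 0.3]
estimate = entropy_form_estimate(p, q)
exact = relative_entropy(p, q)
```

For small `t`, `estimate` agrees with `exact` up to an error of the order of `t`, as expected from a first-order difference quotient.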

4.3. Proof of the Monotonicity of the Relative Entropy Under Partial Trace

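Thanks to formula (133), the monotonicity of the relative entropy under the partial trace $\mathrm{Tr}_2: \mathcal{B}(\mathcal{H}_1 \otimes \mathcal{H}_2) \to \mathcal{B}(\mathcal{H}_1)$ will be proven if we show that

$$S(\alpha_1,\beta_1)(I_1,I_1) \le S(\alpha_{12},\beta_{12})(I_{12},I_{12}),$$

where $\alpha_{12}, \beta_{12}$ are the forms (129) and (130) associated with two density operators $\rho_{12}, \sigma_{12}$ on $\mathcal{H}_1 \otimes \mathcal{H}_2$, and $\alpha_1, \beta_1$ are those associated with the reduced states $\rho_1 := \mathrm{Tr}_2\, \rho_{12}$, $\sigma_1 := \mathrm{Tr}_2\, \sigma_{12}$.

As in Petz’s proof, the adjoint of the partial trace plays a central, though conceptually distinct, role in establishing the monotonicity of relative entropy.

Specifically, in Uhlmann’s approach, $\mathrm{Tr}_2^*$ acts as a pull-back map; i.e., we define $\phi := \mathrm{Tr}_2^*: \mathcal{B}(\mathcal{H}_1) \to \mathcal{B}(\mathcal{H}_1 \otimes \mathcal{H}_2)$, $\phi(X) = X \otimes I_2$, and use it to pull back the positive sesquilinear forms $\alpha_{12}$ and $\beta_{12}$ introduced in Equations (129) and (130), respectively, writing $\alpha_{12}^{\phi}(X,Y) := \alpha_{12}(\phi(X), \phi(Y))$ and similarly for $\beta_{12}$. Thanks to Theorem 6, the interpolation $\gamma_t$ satisfies

$$\gamma_t(\alpha_{12},\beta_{12})^{\phi} \le \gamma_t(\alpha_{12}^{\phi}, \beta_{12}^{\phi}), \tag{139}$$

i.e.,

$$\gamma_t(\alpha_{12},\beta_{12})(\phi(X), \phi(X)) \le \gamma_t(\alpha_{12}^{\phi}, \beta_{12}^{\phi})(X,X)$$

for all $X \in \mathcal{B}(\mathcal{H}_1)$. Now, we can use the fact that $\mathrm{Tr}_2$ preserves density operators and that $\mathrm{Tr}_2^*$ is a Schwarz map to write

$$\beta_{12}^{\phi}(X,X) = \mathrm{Tr}\big(\sigma_{12}\, \phi(X)^\dagger \phi(X)\big) \le \mathrm{Tr}\big(\sigma_{12}\, \phi(X^\dagger X)\big) = \mathrm{Tr}(\sigma_1 X^\dagger X) = \beta_1(X,X).$$

Replacing $X$ with $X^\dagger$ and $\sigma$ with $\rho$, we find

$$\alpha_{12}^{\phi}(X,X) = \mathrm{Tr}\big(\rho_{12}\, \phi(X) \phi(X)^\dagger\big) \le \mathrm{Tr}\big(\rho_{12}\, \phi(X X^\dagger)\big) = \mathrm{Tr}(\rho_1 X X^\dagger) = \alpha_1(X,X).$$

Thus, we have proven that $\alpha_{12}^{\phi} \le \alpha_1$ and $\beta_{12}^{\phi} \le \beta_1$, and so Theorem 5 implies that, for all $t \in [0,1]$, it holds that

$$\gamma_t(\alpha_{12}^{\phi}, \beta_{12}^{\phi}) \le \gamma_t(\alpha_1, \beta_1).$$

This result and inequality (139) imply

$$\gamma_t(\alpha_{12},\beta_{12})(\phi(X), \phi(X)) \le \gamma_t(\alpha_1,\beta_1)(X,X)$$

for all $X \in \mathcal{B}(\mathcal{H}_1)$. By considering in particular $X = I_1$ and recalling that $\mathrm{Tr}_2^*$ is a unital map, i.e., $\mathrm{Tr}_2^*(I_1) = I_{12}$, we obtain

$$\gamma_t(\alpha_{12},\beta_{12})(I_{12}, I_{12}) \le \gamma_t(\alpha_1,\beta_1)(I_1, I_1). \tag{144}$$

Now, since $\mathrm{Tr}_2$ is trace-preserving, we have

$$\alpha_{12}(I_{12}, I_{12}) = \mathrm{Tr}(\rho_{12}) = \mathrm{Tr}(\rho_1) = \alpha_1(I_1, I_1)$$

and, thus, we can rewrite inequality (144) as follows:

$$\frac{\gamma_t(\alpha_{12},\beta_{12})(I_{12}, I_{12}) - \alpha_{12}(I_{12}, I_{12})}{t} \le \frac{\gamma_t(\alpha_1,\beta_1)(I_1, I_1) - \alpha_1(I_1, I_1)}{t}, \qquad t \in (0,1].$$

Thanks to Equations (133) and (132), this last inequality implies the monotonicity of the relative entropy under partial trace: taking the lim inf for $t \to 0^+$ and changing sign gives $S(\rho_1\|\sigma_1) \le S(\rho_{12}\|\sigma_{12})$.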
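The chain of inequalities above can be sanity-checked in the commuting special case. The sketch below assumes, as a simplification not made in the text, that the two bipartite states are diagonal in a common product basis, so that they reduce to joint probability tables and the partial trace reduces to marginalization over the second index. In that setting, inequality (144) reads $\sum_{ij} p_{ij}^{1-t} q_{ij}^{t} \le \sum_i p_i^{1-t} q_i^{t}$. The names and the sample tables are hypothetical.

```python
import math

def marginal(joint):
    """Partial trace over the second system for a diagonal (classical) state."""
    return [sum(row) for row in joint]

def flatten(joint):
    """List all entries of a joint probability table."""
    return [x for row in joint for x in row]

def overlap(p, q, t):
    """sum_k p_k^(1-t) q_k^t, the interpolation evaluated at the identity."""
    return sum(pk ** (1.0 - t) * qk ** t for pk, qk in zip(p, q))

def relative_entropy(p, q):
    """Classical relative entropy for strictly positive distributions."""
    return sum(pk * math.log(pk / qk) for pk, qk in zip(p, q))

# Hypothetical joint distributions playing the role of the bipartite states.
p12 = [[0.30, 0.10], [0.15, 0.45]]
q12 = [[0.20, 0.25], [0.35, 0.20]]
p1, q1 = marginal(p12), marginal(q12)

ts = [k / 20.0 for k in range(21)]
inequality_144_holds = all(
    overlap(flatten(p12), flatten(q12), t) <= overlap(p1, q1, t) + 1e-12
    for t in ts
)
entropy_joint = relative_entropy(flatten(p12), flatten(q12))
entropy_reduced = relative_entropy(p1, q1)
```

On this example the interpolation inequality holds on the whole grid of values of `t`, and `entropy_reduced` does not exceed `entropy_joint`, as the data processing inequality predicts.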

5. Conclusions

We revisited the monotonicity of relative entropy under the action of quantum channels by focusing on two important proofs: those by Petz and Uhlmann. While both approaches are foundational, their complexity has often hindered their pedagogical dissemination.

Our aim was to clarify and reconstruct these strategies within a finite-dimensional operator framework. In particular, we pointed out a subtle flaw in Petz’s original argument, whose validity was nonetheless restored by Petz and Nielsen soon after, and we showed how to rigorously extend this approach to incorporate non-invertible density operators.

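It is also worth noting that our explicit construction of the isometric operator defined in Equation (60) sheds new light on its structural role within the broader context of quantum information theory. In particular, this operator can be seen as an essential component of the Petz recovery map, originally introduced in [9] in the setting of von Neumann algebras. Given a quantum channel $\mathcal{N}$ and a fixed full-rank state $\sigma$, the Petz recovery map associated with $\mathcal{N}$ and $\sigma$ is defined by

$$\mathcal{P}_{\sigma,\mathcal{N}}(X) := \sigma^{1/2}\, \mathcal{N}^*\big(\mathcal{N}(\sigma)^{-1/2}\, X\, \mathcal{N}(\sigma)^{-1/2}\big)\, \sigma^{1/2}$$

or, equivalently, in terms of superoperators, with $L_A(X) := AX$ and $R_A(X) := XA$ denoting left and right multiplication,

$$\mathcal{P}_{\sigma,\mathcal{N}} = L_{\sigma^{1/2}} R_{\sigma^{1/2}} \circ \mathcal{N}^* \circ L_{\mathcal{N}(\sigma)^{-1/2}} R_{\mathcal{N}(\sigma)^{-1/2}}.$$

When $\mathcal{N} = \mathrm{Tr}_2$, with $\sigma = \sigma_{12}$ and $\sigma_1 := \mathrm{Tr}_2\, \sigma_{12}$, this reduces to

$$\mathcal{P}_{\sigma_{12},\mathrm{Tr}_2}(X) = \sigma_{12}^{1/2} \big( (\sigma_1^{-1/2} X \sigma_1^{-1/2}) \otimes I_2 \big)\, \sigma_{12}^{1/2}$$

or

$$\mathcal{P}_{\sigma_{12},\mathrm{Tr}_2} = L_{\sigma_{12}^{1/2}} R_{\sigma_{12}^{1/2}} \circ \mathrm{Tr}_2^* \circ L_{\sigma_1^{-1/2}} R_{\sigma_1^{-1/2}}.$$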

So, just as the Petz recovery map characterizes the reversibility of quantum channels and identifies conditions for saturation of the monotonicity inequality, the operator

explicitly captures the mechanism by which relative entropy is contracted under partial trace.
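To make the recovery mechanism concrete, one can look at the commuting special case, which is an assumption introduced here for illustration. If the reference state is diagonal in a product basis, with joint probabilities $q_{ij}$ and marginal $q_i$, and the input is diagonal as well, then the Petz recovery map for the partial trace acts as $x_i \mapsto q_{ij}\, x_i / q_i$, i.e., as Bayesian inversion of the marginalization. The following minimal sketch uses hypothetical names and sample data.

```python
def marginal(joint):
    """Partial trace over the second system for a diagonal (classical) state."""
    return [sum(row) for row in joint]

def petz_recovery_classical(x, q12):
    """Commuting case of the Petz map for the partial trace.

    Maps a distribution x on the first system to the joint table with
    entries q12[i][j] * x[i] / q1[i], where q1 is the marginal of q12.
    Assumes q1 has no zero entries (full-rank reference state).
    """
    q1 = marginal(q12)
    return [[q12[i][j] * x[i] / q1[i] for j in range(len(q12[i]))]
            for i in range(len(q12))]

# Hypothetical reference state and input distribution.
q12 = [[0.20, 0.25], [0.35, 0.20]]
recovered_reference = petz_recovery_classical(marginal(q12), q12)
x = [0.7, 0.3]
recovered_x = petz_recovery_classical(x, q12)
```

In this sketch the reference state is recovered exactly from its marginal, and the total weight of any input is preserved, which mirrors the trace-preserving property of the recovery map.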

In recent developments, particularly in the work of Fawzi and Renner [

29], the Petz recovery map plays a central role in quantitative refinements of the data processing inequality. Specifically, for states

and

and a channel

, the inequality

bounds the loss of distinguishability in terms of the fidelity

F between the original state

and its recovered approximation via

.

The explicit identification of offers a concrete realization of this recovery mechanism, reinforcing its interpretive clarity and suggesting further applications in entropy inequalities and recoverability conditions.