Time-Varying Autoregressive Models: A Novel Approach Using Physics-Informed Neural Networks

Abstract

1. Introduction

- We propose a novel PINN-based time-varying autoregressive modeling framework, which integrates the time-varying constraints with neural network-based function approximation to capture complex, non-stationary, and dynamic temporal dependencies.

- We conduct comprehensive empirical evaluations using both synthetic datasets, designed to simulate smooth and abrupt regime shifts across different lag orders, and a real-world time series dataset, to systematically assess the performance and generalizability of all modeling frameworks. The comparison uses multiple evaluation metrics, such as root mean squared error and trajectory reconstruction fidelity.

- We conduct a comprehensive comparative analysis of the PINN-based framework, focusing on its practical effectiveness in capturing dynamic temporal dependencies under non-stationary conditions. Specifically, we evaluate and discuss its strengths and limitations against GAM- and OKS-based methods and the stationary VAR model across several technical dimensions, including predictive accuracy in high-frequency regimes, robustness to structural breaks, and interpretability of time-varying coefficients.

- We release an open-source implementation of our PINN-based framework, developed using TensorFlow 2.18 for neural network training and SciPy for optimization and numerical solvers. The code is modular and extensible, supporting multiple configurations of lag structure, activation functions, training schedules (e.g., Adam, L-BFGS), and physical loss weighting schemes. Detailed examples and documentation are included to support reproducibility and ease of adoption.

2. Literature Review

3. Proposed Methods for Time-Varying Autoregressive Modeling

3.1. Generalized Additive-Based Method

3.2. Kernel Smoothing-Based Method

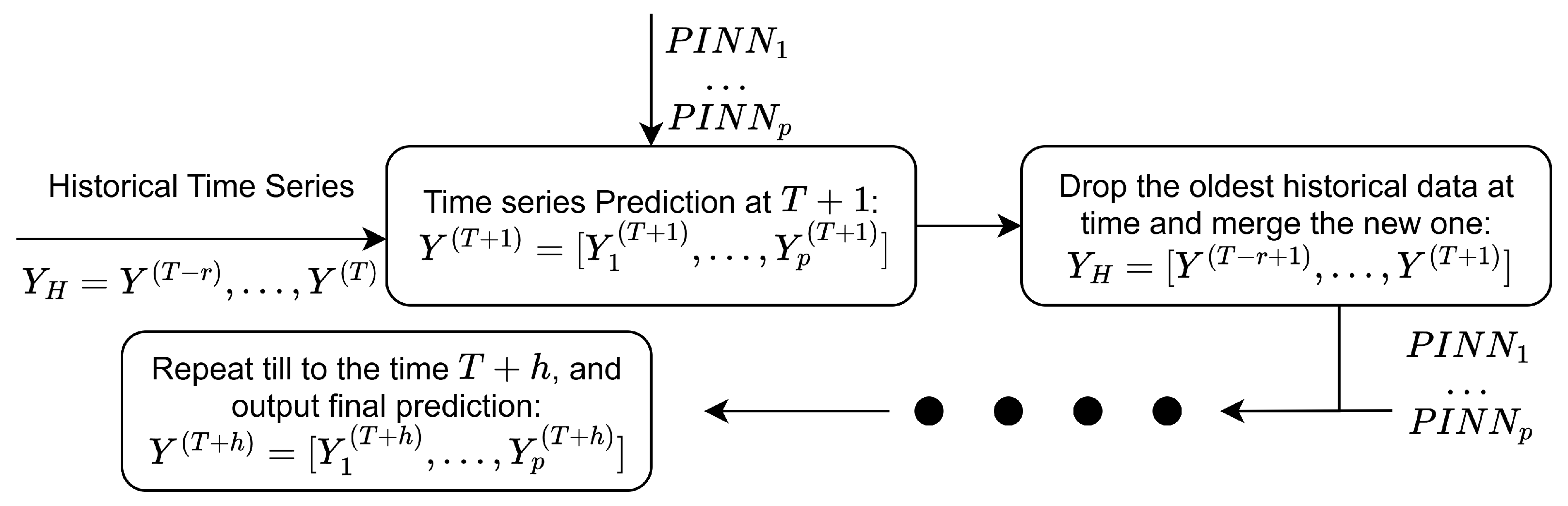

3.3. The Physics-Informed Neural Network-Based Method

- Define Physical Constraints: Formulate the mathematical model of the physical system, including the governing PDEs (or ODEs) and the associated initial and boundary conditions, which together define the structure of the solution space and guide the learning process.

- Initialize the Neural Network: Construct a neural network with randomly initialized weights and biases, where the network takes spatial or temporal variables as input and outputs the solution approximation.

- Construct the Loss Function: Define a composite loss function that penalizes deviations from the specified physical constraints, typically expressed as a sum of terms for the governing equations and the initial and boundary conditions, where each term measures the discrepancy between the network’s output and the corresponding physical condition.

- Train the Network: Optimize the network parameters by minimizing the total loss function, typically using gradient-based optimization algorithms, such as L-BFGS-B and the conjugate gradient method.

- Prediction and Evaluation: After training, the neural network can be considered as a mesh-free, continuous surrogate for the solution, allowing for efficient evaluation across the domain by inputting the independent variables.
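The five steps above can be illustrated with a minimal sketch. It is not the released implementation: the toy problem (the ODE u'(t) = -u(t) with u(0) = 1), the one-hidden-layer tanh network, the number of hidden units, and the collocation grid are all assumptions chosen for brevity. Only the use of L-BFGS-B as the optimizer follows the text. The derivative of the network with respect to its input is written out analytically so that the example needs only NumPy and SciPy.

```python
import numpy as np
from scipy.optimize import minimize

# Step 1: physical constraints (assumed toy problem): u'(t) = -u(t), u(0) = 1.
H = 8                                  # hidden units (hypothetical choice)
t = np.linspace(0.0, 1.0, 25)          # collocation points on [0, 1]

def unpack(theta):
    """Split the flat parameter vector into weights and biases."""
    return theta[:H], theta[H:2 * H], theta[2 * H:3 * H], theta[3 * H]

def network(theta, t):
    """Return u(t) and du/dt for a one-hidden-layer tanh network."""
    w1, b1, w2, b2 = unpack(theta)
    z = np.tanh(np.outer(t, w1) + b1)          # hidden activations, shape (len(t), H)
    u = z @ w2 + b2                            # network output
    du = ((1.0 - z ** 2) * w1) @ w2            # analytic derivative with respect to t
    return u, du

# Step 3: composite loss = governing-equation residual + initial-condition residual.
def loss(theta):
    u, du = network(theta, t)
    ode_term = np.mean((du + u) ** 2)
    u0, _ = network(theta, np.array([0.0]))
    ic_term = (u0[0] - 1.0) ** 2
    return ode_term + ic_term

# Step 2: random initialization of weights and biases.
rng = np.random.default_rng(0)
theta0 = rng.normal(scale=0.5, size=3 * H + 1)
initial_loss = loss(theta0)

# Step 4: train with L-BFGS-B (gradients are approximated by finite differences here).
result = minimize(loss, theta0, method="L-BFGS-B")
final_loss = result.fun

# Step 5: the trained network is a continuous surrogate; evaluate it anywhere in the domain.
u_hat, _ = network(result.x, np.array([0.25, 0.5, 0.75]))
print("loss before/after training:", initial_loss, final_loss)
print("surrogate at t = 0.25, 0.5, 0.75:", u_hat)
```

In a deep-learning framework the input derivative would normally come from automatic differentiation rather than a hand-written formula; the closed form is used here only because the network is small.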

4. Simulation

4.1. Simulation Preparation

- Scenario 1 (TV-AR): Consider a univariate time series with a lag order of , and an initial value sampled uniformly at random. The additive noise is modeled as Gaussian with zero mean and variance , scaled by a noise weight factor . The time-varying autoregressive coefficient is initialized with a random scalar , and evolves over time according to the update rule: where the time-varying term is defined as , which induces a smooth, sigmoid-like transition in the coefficient matrix entries over time. Additionally, the intercept vector is defined as , ensuring that the system’s dynamics evolve continuously over a short time window, with gradual shifts between high and low coefficient values.

- Scenario 2 (TV-AR): This scenario follows the setting of TV-AR Scenario 1 but introduces quadratic changes in the time-varying coefficients instead of sigmoid-like transitions. Specifically, the temporal perturbation term is defined as , enabling evaluation of the model’s performance under non-stationary quadratic regimes.

- Scenario 3 (TV-VAR): Consider a multivariate time series with dimensionality , lag order , and an initial value sampled uniformly at random. The additive noise is modeled as Gaussian with zero mean and covariance matrix , and scaled by the noise weight . The time-varying coefficient matrix is initialized as a random matrix , normalized row-wise to form a row-stochastic matrix, and updated using the following functions: where the time-varying perturbation term is defined as , mirroring the structure used in Scenario 1 but with a smaller intercept vector specified as , enabling gradual regime shifts over time.

- Scenario 4 (TV-VAR): This scenario follows the setting of TV-VAR Scenario 3 but replaces the sigmoid-like time-varying component with a quadratic structure, similar to the one in Scenario 2.
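The general shape of these four scenarios can be sketched as follows. This is an illustration under stated assumptions, not the simulation code used for the reported results: the lag order (fixed at 1), the series length, the noise weight, the exact sigmoid and quadratic profiles, and the scaling constants 0.1 and 0.8 are all hypothetical, and the intercept is omitted. What is taken from the text is the structure: a random initial value, a random base matrix normalized to be row-stochastic, Gaussian noise scaled by a noise weight, and a coefficient that varies over time along either a sigmoid-like or a quadratic path. With dimension 1 the function reduces to a univariate TV-AR(1) process.

```python
import numpy as np

def sigmoid_path(T):
    """Smooth, sigmoid-like profile rising from near 0 to near 1 (assumed form)."""
    s = np.arange(T) / (T - 1)
    return 1.0 / (1.0 + np.exp(-10.0 * (s - 0.5)))

def quadratic_path(T):
    """Quadratic profile: 1 at both ends, 0 at the midpoint (assumed form)."""
    s = np.arange(T) / (T - 1)
    return 4.0 * (s - 0.5) ** 2

def simulate_tv_var(T=200, d=2, path=sigmoid_path, noise_weight=0.1, seed=0):
    """Simulate a lag-1 time-varying (vector) autoregressive series.

    Returns the series y with shape (T, d) and the coefficient
    matrices with shape (T, d, d).
    """
    rng = np.random.default_rng(seed)
    base = rng.uniform(size=(d, d))
    base /= base.sum(axis=1, keepdims=True)      # row-wise normalization: row-stochastic
    # Scaling a row-stochastic matrix by a factor in [0.1, 0.9] keeps its
    # spectral radius below 1, so the simulated series does not explode.
    scale = 0.1 + 0.8 * path(T)                  # hypothetical constants
    coeffs = scale[:, None, None] * base         # time-varying coefficient matrices
    y = np.empty((T, d))
    y[0] = rng.uniform(size=d)                   # initial value sampled uniformly at random
    for t in range(1, T):
        noise = noise_weight * rng.normal(size=d)   # zero-mean Gaussian noise, scaled
        y[t] = coeffs[t] @ y[t - 1] + noise
    return y, coeffs

# Sigmoid-like multivariate case and quadratic univariate case.
y_var, A_var = simulate_tv_var(T=100, d=3, path=sigmoid_path)
y_ar, A_ar = simulate_tv_var(T=50, d=1, path=quadratic_path)
print(y_var.shape, A_var.shape, y_ar.shape)
```

Passing the profile as a function keeps the sigmoid-like and quadratic cases on the same code path, so the two differ only in how the coefficients move over time.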

4.2. Simulation Results

4.3. Discussion

5. Real-World Applications

5.1. Data Preparation

5.2. Results

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method | H | MAE | RSV | Method | H | MAE | RSV |
|---|---|---|---|---|---|---|---|
| PINN (1) | 1 | | | PINN (1) | 2 | | |
| PINN (2) | 1 | | | PINN (2) | 2 | | |
| PINN (3) | 1 | | | PINN (3) | 2 | | |
| GAM (1) | 1 | | | GAM (1) | 2 | | |
| GAM (2) | 1 | | | GAM (2) | 2 | | |
| GAM (3) | 1 | | | GAM (3) | 2 | | |
| OKS (1) | 1 | | | OKS (1) | 2 | | |
| OKS (2) | 1 | | | OKS (2) | 2 | | |
| OKS (3) | 1 | | | OKS (3) | 2 | | |
| AR (1) | 1 | | | AR (1) | 2 | | |
| AR (2) | 1 | | | AR (2) | 2 | | |
| AR (3) | 1 | | | AR (3) | 2 | | |

| Method | H | MAE | RSV | Method | H | MAE | RSV |
|---|---|---|---|---|---|---|---|
| PINN (1) | 1 | | | PINN (1) | 2 | | |
| PINN (2) | 1 | | | PINN (2) | 2 | | |
| PINN (3) | 1 | | | PINN (3) | 2 | | |
| GAM (1) | 1 | | | GAM (1) | 2 | | |
| GAM (2) | 1 | | | GAM (2) | 2 | | |
| GAM (3) | 1 | | | GAM (3) | 2 | | |
| OKS (1) | 1 | | | OKS (1) | 2 | | |
| OKS (2) | 1 | | | OKS (2) | 2 | | |
| OKS (3) | 1 | | | OKS (3) | 2 | | |
| AR (1) | 1 | | | AR (1) | 2 | | |
| AR (2) | 1 | | | AR (2) | 2 | | |
| AR (3) | 1 | | | AR (3) | 2 | | |

| Method | H | MAE | RSV | Method | H | MAE | RSV |
|---|---|---|---|---|---|---|---|
| PINN (1) | 1 | | | PINN (1) | 2 | | |
| PINN (2) | 1 | | | PINN (2) | 2 | | |
| PINN (3) | 1 | | | PINN (3) | 2 | | |
| GAM (1) | 1 | | | GAM (1) | 2 | | |
| GAM (2) | 1 | | | GAM (2) | 2 | | |
| GAM (3) | 1 | | | GAM (3) | 2 | | |
| OKS (1) | 1 | | | OKS (1) | 2 | | |
| OKS (2) | 1 | | | OKS (2) | 2 | | |
| OKS (3) | 1 | | | OKS (3) | 2 | | |
| VAR (1) | 1 | | | VAR (1) | 2 | | |
| VAR (2) | 1 | | | VAR (2) | 2 | | |
| VAR (3) | 1 | | | VAR (3) | 2 | | |

| Method | H | MAE | RSV | Method | H | MAE | RSV |
|---|---|---|---|---|---|---|---|
| PINN (1) | 1 | | | PINN (1) | 2 | | |
| PINN (2) | 1 | | | PINN (2) | 2 | | |
| PINN (3) | 1 | | | PINN (3) | 2 | | |
| GAM (1) | 1 | | | GAM (1) | 2 | | |
| GAM (2) | 1 | | | GAM (2) | 2 | | |
| GAM (3) | 1 | | | GAM (3) | 2 | | |
| OKS (1) | 1 | | | OKS (1) | 2 | | |
| OKS (2) | 1 | | | OKS (2) | 2 | | |
| OKS (3) | 1 | | | OKS (3) | 2 | | |
| VAR (1) | 1 | | | VAR (1) | 2 | | |
| VAR (2) | 1 | | | VAR (2) | 2 | | |
| VAR (3) | 1 | | | VAR (3) | 2 | | |

| Method | Lag | Seconds | Lag | Seconds | Lag | Seconds |
|---|---|---|---|---|---|---|
| AR | 1 | 0.35 | 2 | 0.48 | 3 | 0.66 |
| GAM | 1 | 3.51 | 2 | 4.56 | 3 | 8.78 |
| OKS | 1 | 10.10 | 2 | 17.06 | 3 | 22.76 |
| PINN | 1 | 68.11 | 2 | 68.04 | 3 | 68.63 |
| VAR | 1 | 0.77 | 2 | 0.33 | 3 | 0.21 |
| GAM | 1 | 7.88 | 2 | 10.68 | 3 | 12.83 |
| OKS | 1 | 56.66 | 2 | 48.66 | 3 | 37.10 |
| PINN | 1 | 136.92 | 2 | 130.05 | 3 | 130.86 |

| Method | Location | AE (UR, r = 1) | AE (DOD, r = 1) | AE (UR, r = 2) | AE (DOD, r = 2) |
|---|---|---|---|---|---|
| PINN | DC | | | | |
| GAM | DC | | | | |
| OKS | DC | | | | |
| VAR | DC | | | | |
| PINN | MD | | | | |
| GAM | MD | | | | |
| OKS | MD | | | | |
| VAR | MD | | | | |
| PINN | VA | | | | |
| GAM | VA | | | | |
| OKS | VA | | | | |
| VAR | VA | | | | |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jia, Z.; Zhang, C. Time-Varying Autoregressive Models: A Novel Approach Using Physics-Informed Neural Networks. Entropy 2025, 27, 934. https://doi.org/10.3390/e27090934
