1. Introduction

The stringent demands imposed by next-generation communication systems underscore the importance of short error-correcting codes (ECCs). The rapid development of artificial intelligence (AI) and 6G communication systems has imposed unprecedented demands on ultra-low latency and ultra-high reliability, particularly in mission-critical applications such as autonomous vehicles, the industrial IoT, and real-time edge computing [

1]. In such scenarios, short ECCs are often preferred due to their ultra-low decoding latency and significantly low hardware complexity achieved by minimizing memory requirements compared to long codes [

2]. Moreover, with superior channel adaptability and deep AI-decoder integration, short codes emerge as a sustainable solution for stringent demands of 6G communication systems. However, short codes typically suffer from weak error correction capabilities, necessitating advanced decoding techniques to achieve near-optimal performance without excessive computational overhead [

3,

4,

5,

6].

Since their rediscovery, low-density parity-check (LDPC) codes have received a lot of research interest due to their remarkable error correction capabilities. It can be shown that long LDPC codes can achieve near Shannon-limit error correction performances under belief propagation (BP)-based iterative algorithms [

7,

8,

9,

10,

11]. On the other hand, the LDPC coding scheme designed for 6G communication systems should support a short code length (about one hundred bits) in order to meet the ultra-low latency requirement. Unfortunately, there is a considerable gap between the BP decoding performance and the corresponding theoretical bound for short LDPC codes, see, e.g., [

12,

13]. Some research works [

14,

15,

16] have designed methods for decoding short LDPC codes using the idea of the ordered statistic decoding (OSD) algorithm, which can be applied to any linear code with a given generator matrix. However, the OSD algorithm is not suitable for LDPC codes compared with other algebraic codes (e.g., BCH codes), since these codes are not represented by their generator matrices in general. As a consequence, designing effective decoding algorithms for short LDPC codes is still a challenging problem.

A cyclic redundancy check (CRC) code [

17] is an error detection code widely used in communication and storage systems to verify data integrity. In modern communication systems, an LDPC code is commonly concatenated with a CRC code for error correction [

18,

19,

20], which is called an LDPC-CRC concatenated code in this paper. In such a concatenation coding scheme, the information sequence is pre-coded by a CRC code and then is encoded by an LDPC code to obtain a transmitted codeword. Traditionally, the aim of such a scheme is to reduce the undetectable error rate of the system. Interestingly enough, it has been shown that the concatenation scheme can also facilitate the design of an effective decoding algorithm to improve the overall performance. To date, several research works have considered enhanced decoding methods for LDPC-CRC concatenated codes [

21,

22,

23]. For instance, the work in [

21] introduces an innovative multi-round BP decoding strategy with impulsive perturbation, which can effectively overcome the effects of trapping sets that often limit the performance of short codes. Furthermore, the work in [

22] demonstrates an enhanced decoding approach by integrating the CRC into the OSD process. Meanwhile, the authors of [

23] provide valuable insights into the performance–complexity trade-offs between regular and irregular codes under a CRC-aided hybrid decoding framework. This motivates a new decoding paradigm: instead of treating CRC merely as a post-decoding check, we can leverage it as an integral component of the decoding process.

In recent years, guessing random additive noise decoding (GRAND), a novel universal decoding approach proposed by Duffy et al. [

24], has received a lot of attention. GRAND performs the guessing process by ranking all the possible noise sequences in descending order of their likelihoods and removing them sequentially from the received sequence. The process terminates if a codeword is obtained or if the maximum guessing number is reached. It can be shown that GRAND can achieve near optimal performance with reasonable decoding complexity, especially for short linear codes. To date, various extensions of GRAND have been provided, see, e.g., [

25,

26,

27,

28,

29,

30]. Among them, the ordered reliability bits GRAND (ORBGRAND) algorithm [

26] is one of the most promising versions of GRAND since it introduces an effective method for scheduling the noise sequences.

In this paper, we propose an efficient decoding scheme for LDPC-CRC concatenated codes. Our scheme is motivated by the following observation: For most LDPC codes, the performance gain of BP-based iterative decoding is reduced as the maximum number of iterations increases. As an example, it has been shown that the performance gap of LDPC codes constructed from finite geometries is within 0.2 dB when the maximum number of iterations is set as 20 and 100, respectively; see, e.g., [

31] (Figure 10.2). This observation allows us to design a complexity-aware decoding scheme that dynamically adapts decoding efforts, particularly in combination with CRC-aided strategies. Concretely speaking, we provide a decoding approach that contains two stages. In the first stage, we use the traditional iterative algorithm (e.g., the min-sum algorithm) with a relative small maximum number of iterations. The decoding process terminates if an LDPC codeword is obtained in this stage. Otherwise, we perform the decoding of the CRC code in the second stage. To be specific, we apply the GRAND algorithm to the CRC code in the concatenation scheme and obtain a list of information sequences that satisfy the CRC check. Afterwards, we encode each information sequence into an LDPC codeword and choose the most likely codeword as the decoding output. The simulation results indicate that the proposed decoding approach can outperform the traditional iterative algorithm with a large maximum number of iterations. The analysis results suggest that the average complexity of the proposed algorithm is relatively low. To our knowledge, this represents the first successful integration of GRAND with LDPC-CRC concatenation structures.

The rest of this paper is organized as follows.

Section 2 presents the background knowledge. We provide the proposed decoding scheme for LDPC-CRC concatenated codes in

Section 3.

Section 4 presents the simulation results. Finally,

Section 5 concludes this paper.

2. Background Knowledge

In this section, we present essential background knowledge related to this work. We first introduce the fundamentals of LDPC codes and their iterative decoding algorithms. Then, we provide an overview of CRC codes and the GRAND algorithm. All the codes considered in this work are binary codes.

2.1. LDPC Codes and Iterative Decoding

An

LDPC code

is a linear code specified by a sparse parity-check matrix

of size

. (Note that

may have redundant rows, so we have

, where

is the rank of

over the binary finite field.) For convenience, we associate

with a bipartite graph (called the Tanner graph [

32]) that has

m check nodes and

n variable nodes corresponding to the

m rows and

n columns of

, respectively. The

i-th variable node

is connected to the

j-th check node

if and only if entry

in

is 1. Define

Traditionally, an LDPC code can be decoded by BP-based iterative algorithms. The decoding process can be viewed as message passing and updating on the edges of the Tanner graph that describes the code. Here, we describe the min-sum algorithm (MSA), one of the most prevalent BP-based iterative algorithms.

Suppose

is the log-likelihood ratio (LLR) of the

i-th bit, which can be calculated by

where

is the channel output sequence.

For the

k-th iteration, let

and

be the messages from

to

and from

to

, respectively. The MSA is performed as follows [

33].

Set

and the maximum number of iterations,

. For each

such that

, set

For

and each

,

For

and each

,

Create the hard-decision vector

such that

If holds or , stop the iteration process and output as the decoding result. Otherwise, set and go to Step 1.

2.2. CRC Codes and GRAND

A CRC code is a shortened cyclic code that is defined by a generator polynomial .

Assume that an information sequence

of length

k is transmitted. The CRC encoding procedure can be described as

In this manner, we obtain a binary linear code with length and dimension k.

The encoding procedure of a CRC code can also be performed under the following systematic manner

where

, the CRC polynomial of the information sequence, is given by

The systematic encoding of a CRC code can be efficiently implemented using a shift register; see, e.g., [

17] for details.

Compared with other linear codes, CRC codes have the advantage that the information length can be flexibly adjusted.

Suppose a codeword,

, of a binary linear code,

, with length

n is transmitted. Let the hard-decision sequence from the channel be

The GRAND algorithm operates by sequentially guessing noise sequences

introduced by the channel to recover the transmitted codeword. In other words, the decoder tries noises

such that

GRAND implements a maximum-likelihood (ML) decoding rule by prioritizing noise patterns based on their a priori likelihoods. Under the common assumption that the noise sequence has the lowest Hamming weight, the ML decoding is expressed as

In the classical GRAND scheme, candidate noise patterns are tested in ascending order of Hamming weights, i.e., noise patterns

with small

are examined first, focusing decoding efforts on the most probable error patterns.

2.3. ORBGRAND

Ordered reliability bits guessing random additive noise decoding (ORBGRAND) refines the original GRAND framework by incorporating ordered bitwise reliability into the prioritization of candidate noise patterns. Instead of exhaustively testing noise sequences based solely on their combinatorial simplicity, ORBGRAND utilizes probabilistic ranking derived from soft reliability metrics, typically based on LLR values magnitudes, to guide decoding. This ordered strategy enables the decoder to explore more probable error configuration patterns first, which can significantly improve the decoding accuracy.

Let the received soft-decision channel output

be

The hard-decision sequence

is

where

.

The bitwise reliability values,

, are defined by LLR magnitudes

where

.

A permutation,

, is introduced such that

Let

be a candidate error pattern defined over the reliability-sorted domain. To reflect the likelihood of each pattern, a logistic weight is assigned as

The decoder proceeds by enumerating all patterns

such that

for increasing values of

, i.e.,

To implement the decoding process, ORBGRAND explores error patterns in ascending order of logistic weight. For each weight level

, candidate patterns

are generated and evaluated sequentially. Each error pattern is then mapped back to the original bit order using the inverse permutation

, and the decoded candidate is formed as

3. Proposed Two-Stage Decoding Scheme

3.1. Motivation

Traditionally, an LDPC code is decoded with BP-based algorithms that are performed in an iterative manner. It is hoped that the performance becomes better as the number of iterations increases. However, the known results indicate that the performance gain of BP-based iterative decoding is reduced as the maximum number of iterations increases for most LDPC codes with short to moderate lengths [

31]. Moreover, as the signal-to-noise ratio (SNR) increases, the BP-based iterative decoding falls short of the optimal decoding. It is known that certain harmful structures, called trapping sets [

34,

35], in an LDPC matrix degrade the performance of BP-based iterative decoding. The identification of such structures and the performance prediction using the structures have received a lot of interest. For details, the reader can refer to [

36,

37,

38,

39].

On the other hand, an LDPC code is commonly concatenated with a CRC code in modern communication systems. For such an LDPC-CRC concatenated code, the information sequence is pre-coded by a CRC code and then is encoded by an LDPC code to obtain a codeword. Traditionally, we perform LDPC decoding at first and then perform CRC check if the decoding is successful. The CRC check can detect residual errors after LDPC decoding and reduce the undetectable error rate of LDPC decoding. (An LDPC decoding process is said to be successful if a codeword is obtained. However, the obtained codeword may not be the transmitted codeword. In this case, an undetectable error of LDPC decoding occurs.)This is especially helpful when the LDPC code has short to moderate lengths. Recently, it has been shown that CRC codes can be efficiently decoded by GRAND algorithms when these codes are used for error correction [

20]. This motivates us to design a two-stage decoding algorithm to compensate for the above-mentioned problem in BP-based iterative decoding.

3.2. Algorithm Design

As mentioned in the previous subsection, the proposed algorithm contains two stages. The traditional BP-based iterative algorithm is used to decode the LDPC code. The maximum number of iterations of LDPC decoding is set as a relatively small number, which is due to the aforementioned fact that performance improvement is limited when the number is increased to a certain value.

If the decoding is successful, i.e., an LDPC codeword is obtained, we terminate the decoding process and perform a CRC check. Otherwise, we consider the decoding of CRC codes as error correction codes. We apply the GRAND algorithm to the CRC code in the concatenation scheme and obtain a list of CRC codewords that can satisfy the CRC check. Finally, we encode each obtained CRC codeword to an LDPC codeword and choose the most likely codeword as the decoding output. If no CRC codeword is found, our decoding approach fails. In this case, we use the LDPC decoding result in the first stage as the decoding output.

In order to achieve desirable performances, the size of listed CRC codewords should be reasonably large. This is equivalent to setting a relatively large number of queries in the GRAND algorithm, which inevitably increases GRAND’s complexity. However, it is worth mentioning that the GRAND algorithm is performed only when LDPC decoding in the first-stage decoding fails. This indicates that the total complexity of the proposed two-stage decoding scheme is reasonable, especially when the SNR is moderate. The complexity analysis of the proposed decoding scheme is provided in the subsequent subsection.

Overall, the flowchart of the proposed decoding approach is provided in

Figure 1.

3.3. Complexity Analysis

In order to measure the complexity of the proposed two-stage decoding scheme, we use the number of operations required for decoding a received vector. This number can be derived based on the algorithm description in the previous subsection as well as the related references. For ease of analysis, we assume that the LDPC code is a -regular code described by an parity-check matrix in the following. The results can be generalized to irregular LDPC codes in a straightforward manner.

3.3.1. BP Decoding Complexity

Suppose the MSA is used for BP decoding an LDPC code. Let

I be the average number of iterations. According to [

40], we need

real additions,

real comparisons, and

binary additions (XORs) in one iteration. Then, BP decoding complexity can be estimated as

3.3.2. GRAND Complexity

Suppose the CRC code is specified by a parity-check matrix of size . The complexity of GRAND consists of two parts:

Initial sorting requires real comparisons to obtain the bit reliability order.

Error pattern testing requires binary operations. For an error pattern of Hamming weight t, at most , binary operations are needed. Hence, the complexity of error pattern testing is upper bounded by , where M is the number of tested error patterns, and is the average Hamming weight of tested error patterns.

It is worth mentioning that the actual number of binary operations can be reduced if an appropriate scheduling of error patterns is used [

41].

3.3.3. LDPC Encoding Complexity

Richardson and Urbanke [

42] pioneered the first efficient encoding algorithm for random LDPC codes. Their analysis suggested that gap parameter

g scales as

for random codes but remains sufficiently small in practice. Consequently, the online encoding complexity

reduces to

(see [

42] for details). For quasi-cyclic (QC) LDPC codes, the encoding complexity,

, attains

through shift register implementations [

43,

44].

3.3.4. Proposed Scheme Complexity

The complexity of the proposed two-stage decoding scheme, denoted by

, can be estimated as

where

is the probability of the second stage of decoding. Since the second stage of decoding is invoked only when the first stage of BP decoding does not find any valid codeword, parameter

is upper bounded by the block error rate (BLER) of LDPC decoding in the first stage. (Note that LDPC decoding errors contain both detectable errors and undetectable errors. If an undetectable error occurs, the second stage of decoding is not invoked.)

In typical channel conditions, the BLER of LDPC decoding is below . (For example, as shown in the next section, the BLER of a random (3,15)-regular LDPC code at 5 dB is about 0.05, where the MSA is used and the maximum number of iterations is set to 15). This indicates that most decoding tasks can be resolved in the first stage of decoding, and only a small fraction requires the second stage of decoding. Hence, the extra decoding complexity introduced by the proposed scheme is relatively low.

4. Simulation Results

In this section, we evaluate the performance of the proposed decoding scheme. For comparison purposes, the existing perturbation decoding scheme [

21] for LDPC-CRC concatenated codes is also evaluated. We assume binary phase-shift keying (BPSK) modulation over the additive white Gaussian noise (AWGN) channel in our simulation. A regular [110,88] random LDPC code and a regular [169,132] QC-LDPC code are used. Each code is concatenated with a CRC-16 code, and the resulting schemes are denoted as

and

, respectively. The parity-check matrix of the LDPC code in

is constructed based on the progressive-edge growth method [

45], and the parity-check matrix of the LDPC code in

is an array-based QC matrix [

46]. The parameters of the two LDPC-CRC concatenated codes are listed in

Table 1.

The decoding schemes evaluated include the following:

MSA15-GRAND: The proposed two-stage decoding scheme, where the maximum number of iterations in the standard MSA is set to 15. Parameter specifies the maximum number of error patterns tested (i.e., ) in the GRAND algorithm.

MSA15-NOISE: A two-stage decoding scheme where the standard MSA, limited to 15 iterations, is followed by noise-perturbed decoding, whose maximum number of decoding attempts is set to 30. The noise variance is optimized at a given SNR. For details, the reader can refer to [

21].

MSA15: The standard MSA with a maximum of 15 iterations.

MSA50: The standard MSA with a maximum of 50 iterations.

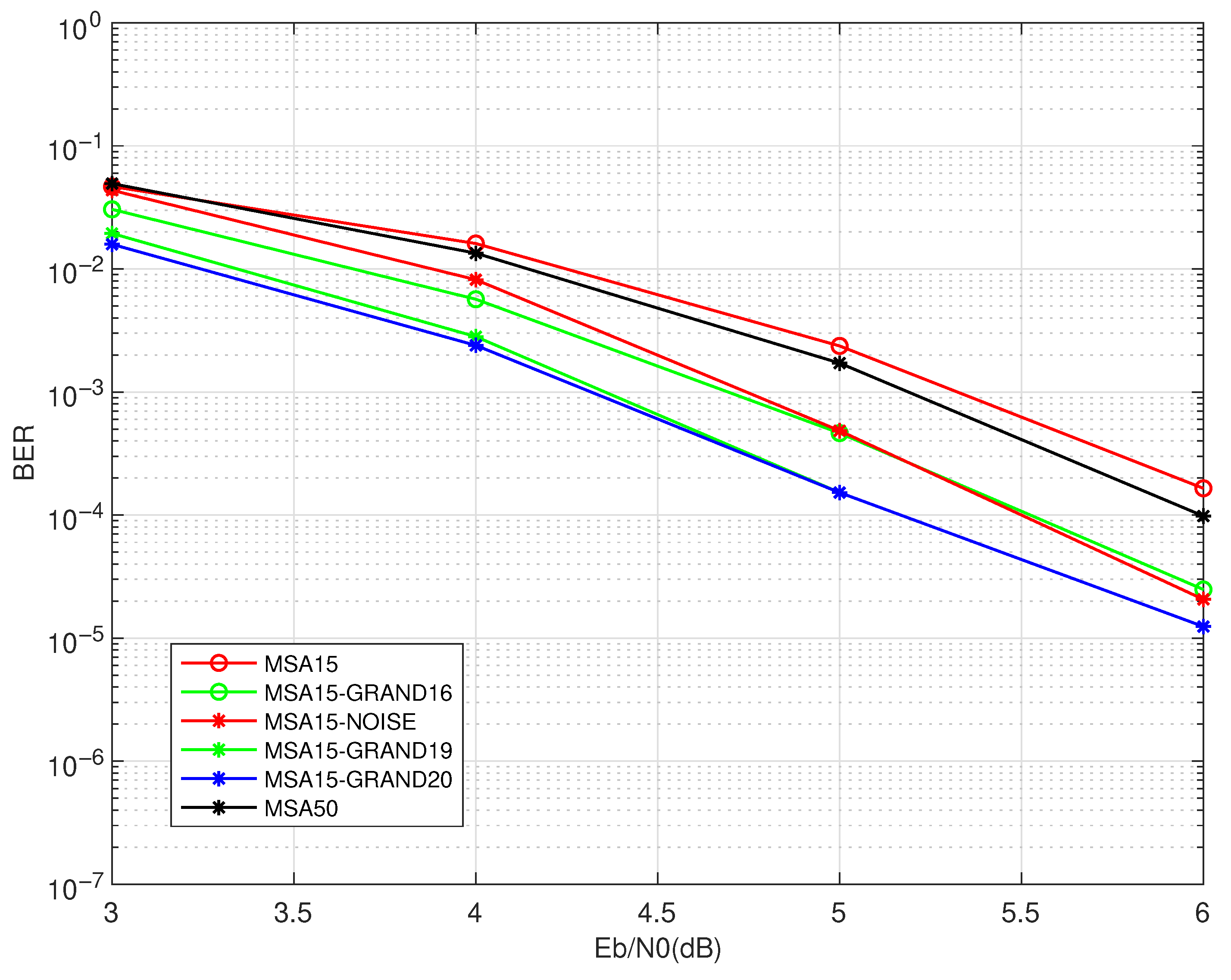

As shown in

Figure 2, increasing the maximum number of iterations in the MSA from 15 to 50 yields only a marginal improvement in the BER performance for

. This observation suggests that merely increasing the maximum number of iterations does not necessarily lead to significant performance.

In contrast to the limited improvement observed with increased MSA iterations, the proposed two-stage decoding schemes (MSA15-GRAND ) significantly outperform both MSA15 and MSA50 within the simulated SNR range. As increases from 16 to 20, the BER performance improves accordingly, demonstrating a controllable trade-off between the decoding complexity and performance. Notably, MSA15-GRAND20 achieves a better performance than MSA50, despite using a lower number of MSA iterations, confirming the effectiveness of the GRAND-enhanced post-processing stage.

Meanwhile, scheme MSA15-NOISE also outperforms MSA15, although the gain is less significant compared to the proposed two-stage decoding schemes (MSA15-GRAND ). This suggests that while noise-perturbed decoding can offer performance improvements, its potential is limited compared to the systematic test pattern exploration adopted by GRAND.

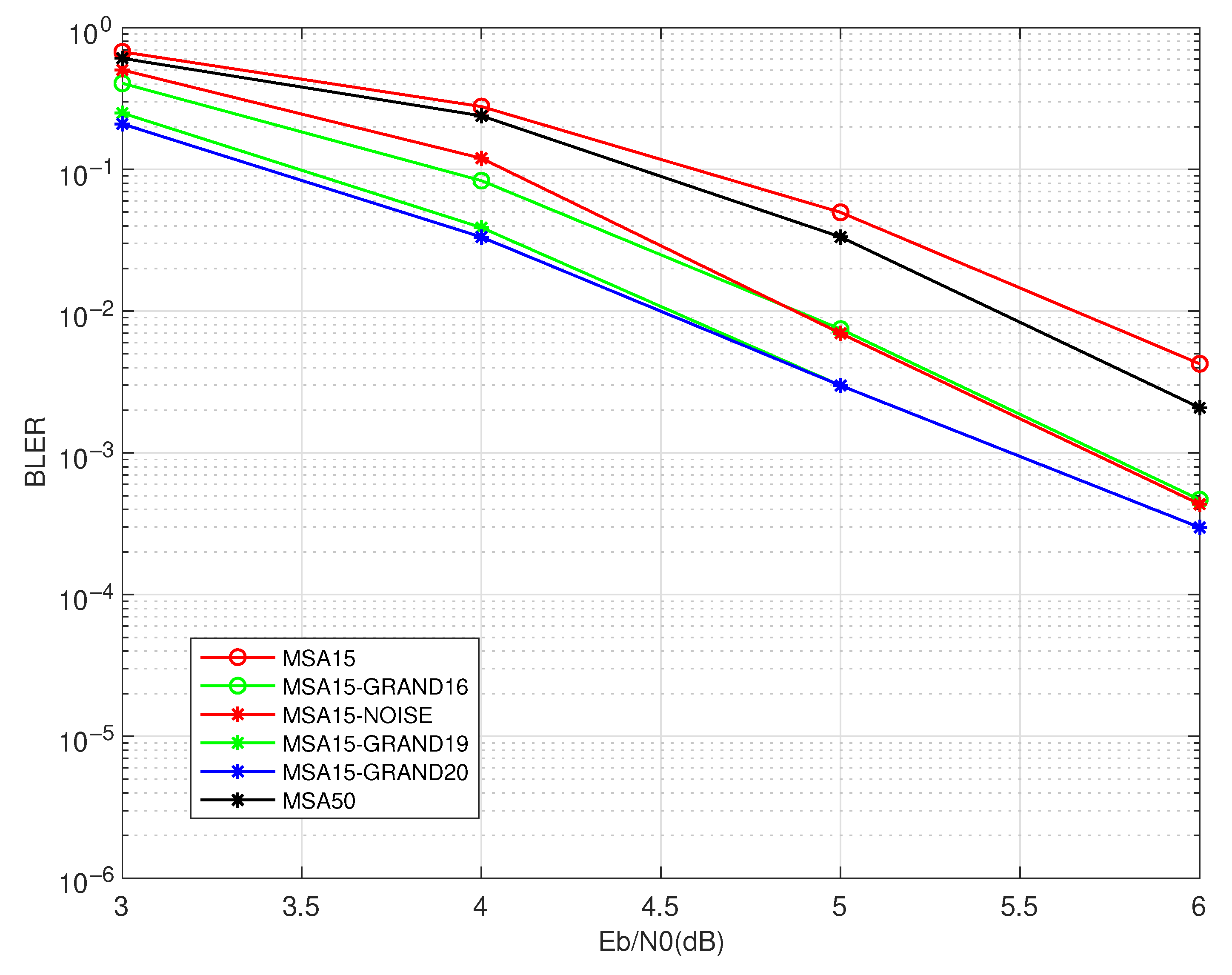

Figure 3 illustrates the BLER performance of various decoding schemes for

, reflecting decoding reliability at the block level. While the overall trend resembles that of the BER performance in

Figure 2, the impact of different decoding strategies on complete codeword recovery is more pronounced. In particular, the proposed MSA15-GRAND

schemes demonstrate significantly stronger block-level error correction, with MSA15-GRAND20 achieving up to an order-of-magnitude lower BLER compared to MSA50. This reinforces the suitability of GRAND-assisted decoding in systems requiring stringent block-level reliability. Although scheme MSA15-NOISE also reduces the BLER relative to the baseline, its improvements are less consistent, especially in the high-SNR regime.

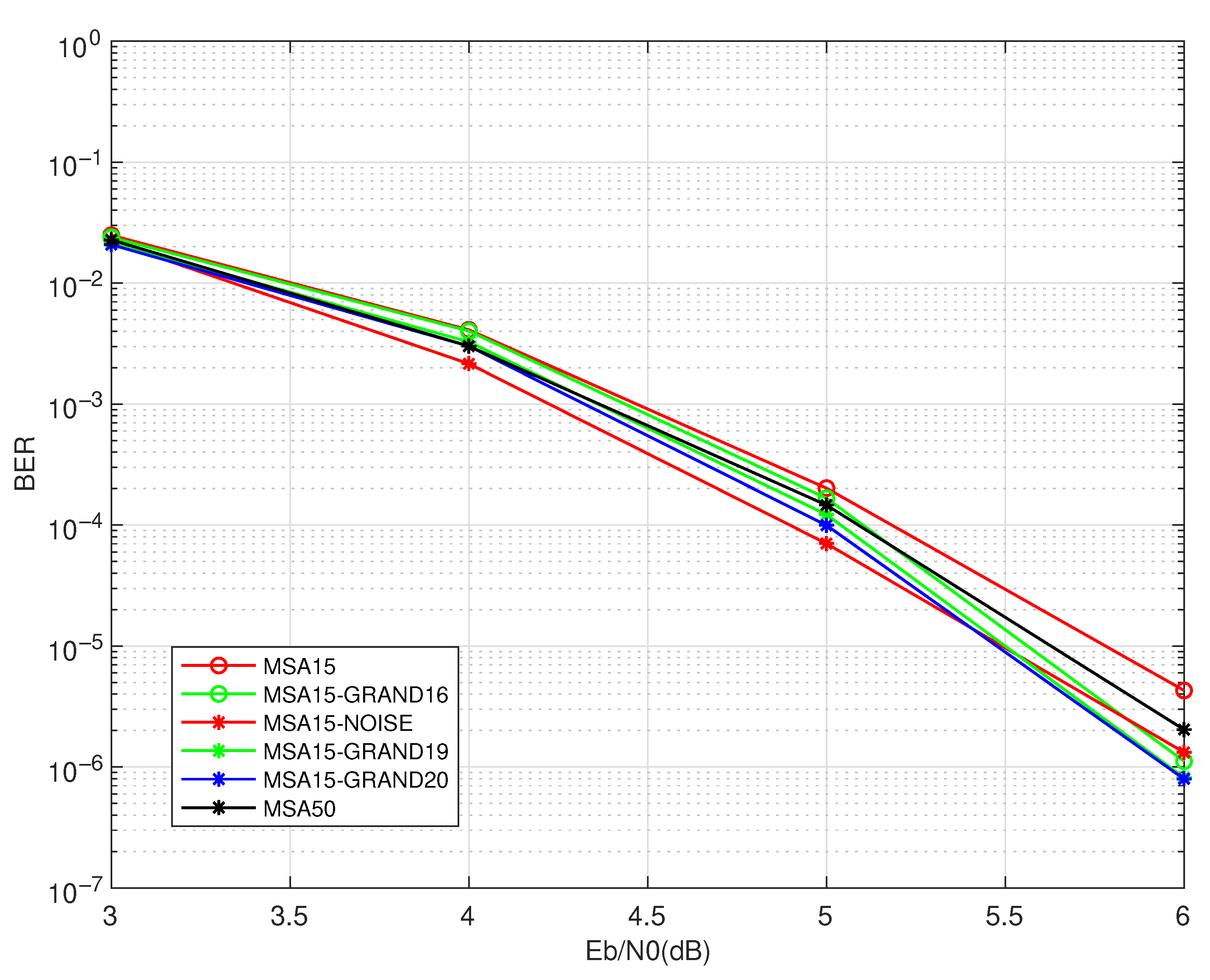

Figure 4 and

Figure 5 illustrate the BER performance and the BLER performance of various decoding schemes applied to

. Compared to the results observed in

, all decoding schemes achieve a substantially better error performance within the simulated SNR range, highlighting the advantages of structured code construction.

In

Figure 4, the proposed schemes MSA15-GRAND

continue to provide the best overall BER performance, particularly in the medium-to-high SNR region. Although the relative gains over MSA50 are smaller than those for

, the improvement remains consistent and measurable. Interestingly, MSA15-NOISE shows competitive behavior in the low-SNR regime (i.e., SNR <

dB), temporarily outperforming all other schemes. This indicates that in highly noisy conditions, stochastic perturbations may offer early decoding benefits. However, as the SNR increases, its performance quickly saturates and is eventually surpassed by the proposed scheme.

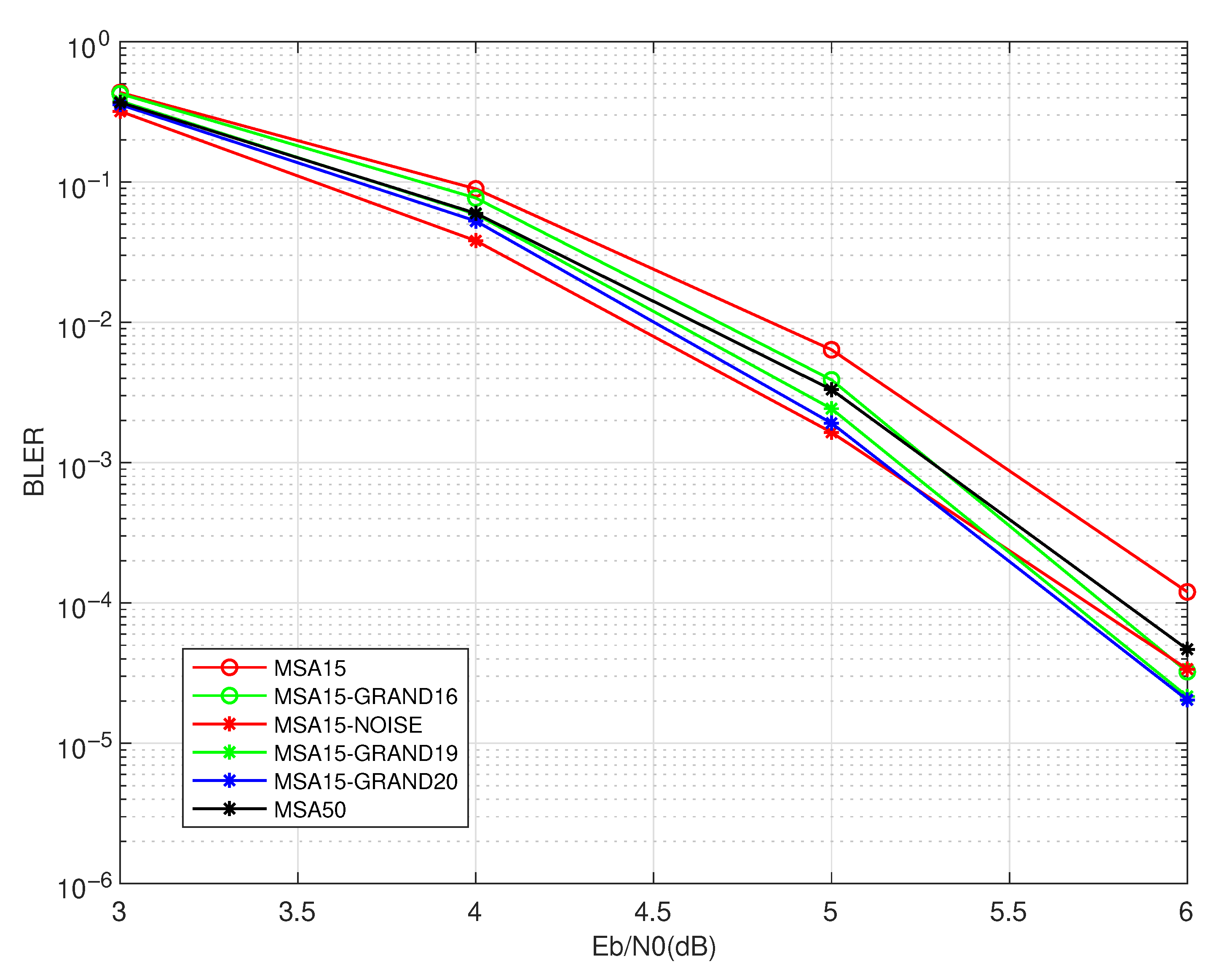

Figure 5 presents the BLER performance of

. The structured nature of

again leads to a significantly reduced BLER compared to

. The scheme MSA15-GRAND20 achieves the lowest BLER across most of the SNR range, confirming its effectiveness at the block level. The gain over MSA50, while modest, remains stable. Meanwhile, MSA15-NOISE maintains an edge in the low-SNR region but fails to preserve that advantage at the high-SNR region, suggesting a lack of robustness and generality.

Overall, the results for validate the general applicability of the proposed decoding scheme and demonstrate its advantages for QC-LDPC codes. Despite the narrow low-SNR advantage of MSA15-NOISE, schemes MSA15-GRAND consistently deliver the best performance in practical operating regimes, offering an effective trade-off between complexity and reliability.